Personal Information Classification on Aggregated Android Application’s Permissions

Abstract

1. Introduction

1.1. Problem Statement and Motivation

1.2. Key Consideration and Contribution

1.3. Roadmap

2. Related Work

2.1. Personal Information and Privacy

2.2. Application Permission System

2.3. Android Application Permission Associated Privacy Risk

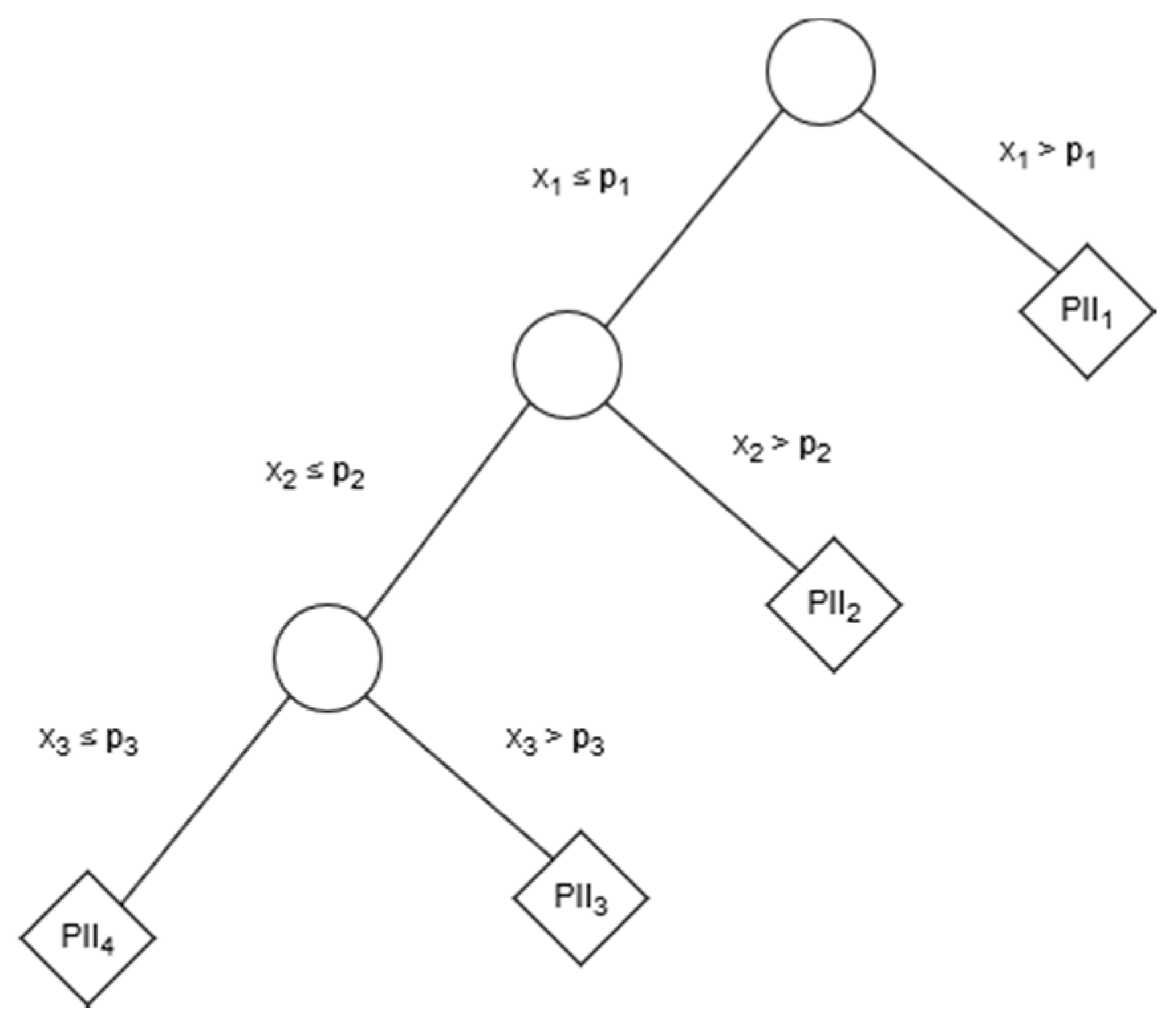

3. Risk Modeling

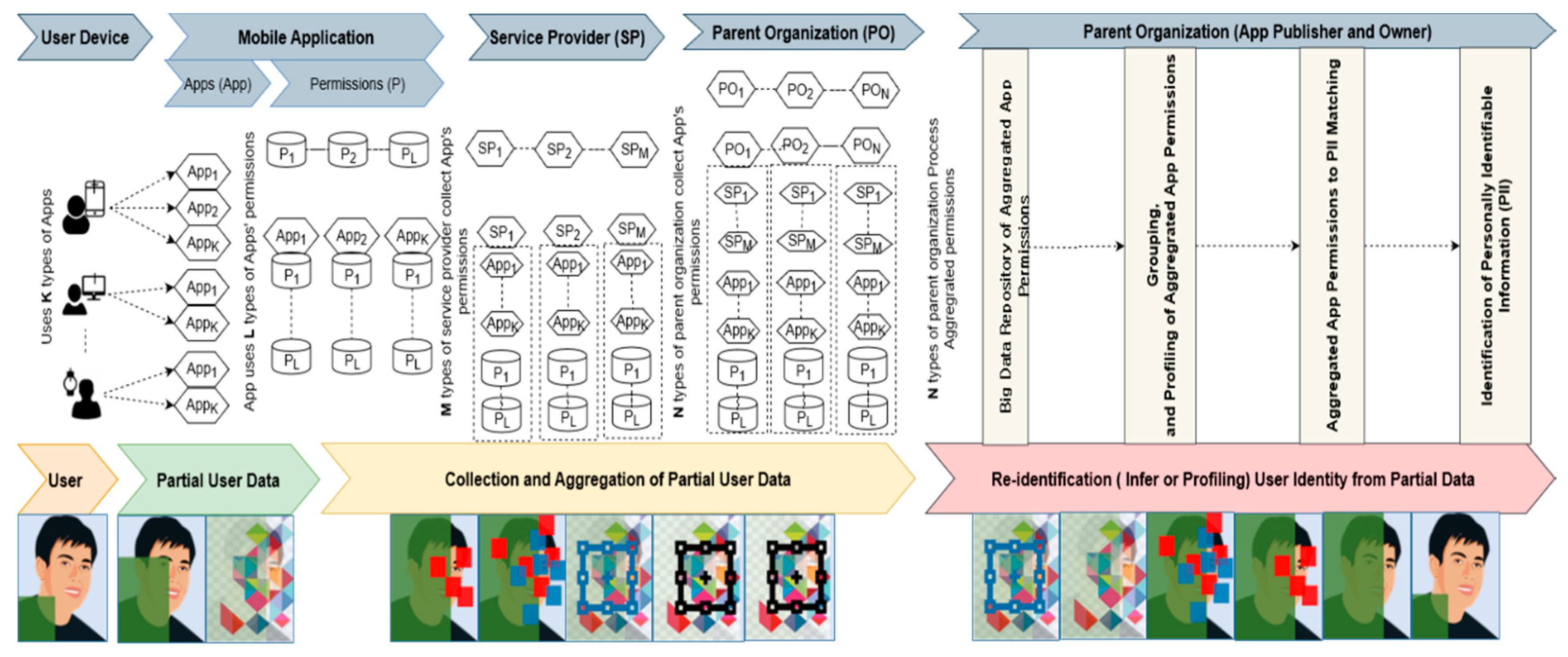

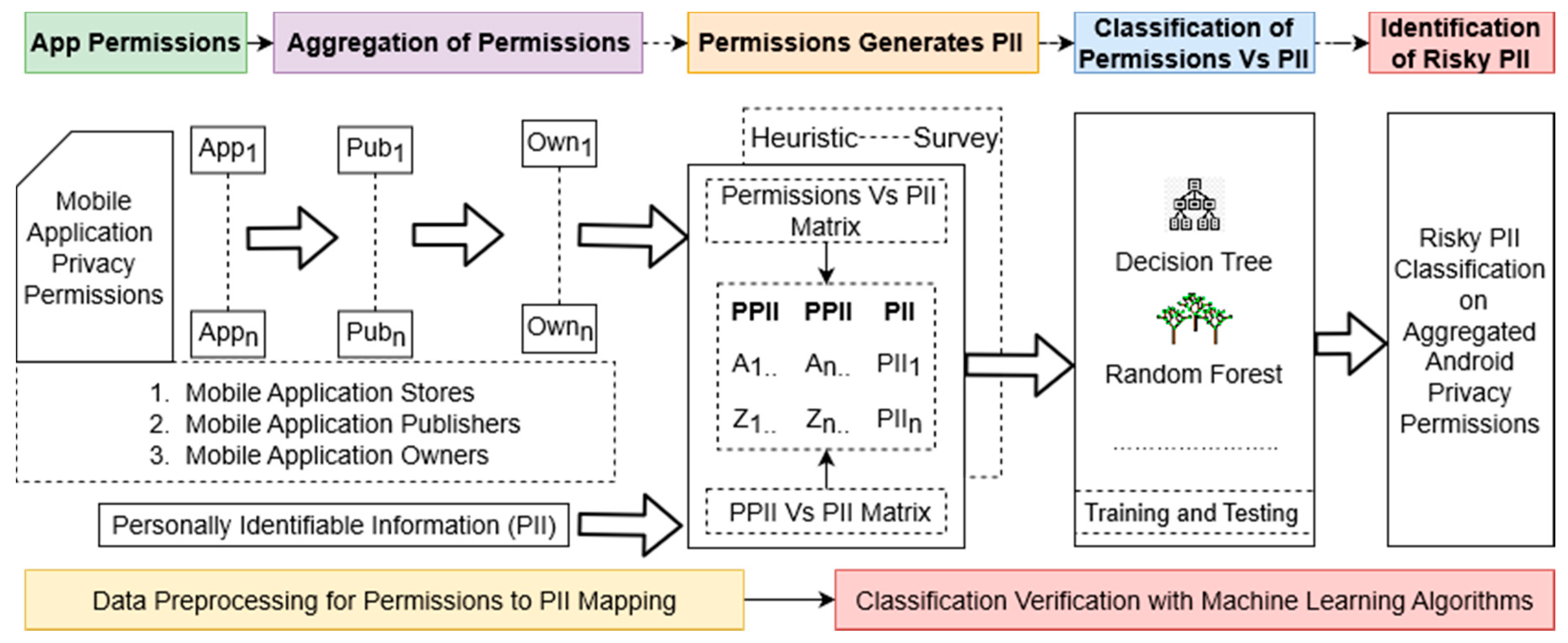

- Application (app) permissions: This study considered the Android app permissions that sense sensitive information. An app provides its services in exchange for those permissions.

- Android permission aggregation: This study considered the accumulation of the available Android permissions by apps, associated app publishers and the ultimate app owners.

- Permission generates PII: This study listed PII from several established sources. It blended a heuristic approach with a real-life survey to link the app’s permissions to specific PII.

- Classification of PII on aggregated permissions: After a specific permission set was coupled to each PII, the PII was classified. This study used several machine learning approaches to verify the classification.

- Identification of risky PII: The study identified the classified PII as a risky PII set.

The risk model considered the likelihood of permission (partial information) flowing from an Android app to its publisher and ultimate owner. Many Android apps are published by multiple subsidiaries. As most of those subsidiaries (publishers) are owned by very few companies, the chance of permission aggregation is high. Building on these facts, this study proposed a risk model to explore a set of risky PII classes. Since obtaining a specific PII requires a precise set of Android permissions, a classification of PII on Android permissions is needed. In short, the risk model identified the PII most easily exposed by user profiling based on Android permission aggregation.
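The central idea of this risk model, that a PII class becomes obtainable only once a precise permission set has been aggregated, can be illustrated as a subset test. This is a minimal sketch under stated assumptions rather than the authors' implementation: the mapping `PII_REQUIREMENTS` and the function `exposed_pii` are hypothetical, and the requirement sets are only loosely modeled on the case rows of the classification table at the end of this article.

```python
# Minimal sketch: a PII class counts as exposed to an owner once the owner's
# aggregated permissions contain every permission the class requires.
# The requirement sets are hypothetical, not the study's actual mapping.
from typing import Dict, FrozenSet, List, Set

# Hypothetical PII class -> required dangerous permissions (illustration only).
PII_REQUIREMENTS: Dict[str, FrozenSet[str]] = {
    "biometric_id": frozenset({"camera", "record_audio", "body_sensors"}),
    "location": frozenset({"access_fine_location", "access_coarse_location"}),
    "contact_number": frozenset({"call_phone", "send_sms", "read_call_log"}),
}


def exposed_pii(aggregated: Set[str]) -> List[str]:
    """Return the PII classes whose required permissions are all in `aggregated`."""
    return sorted(
        pii
        for pii, required in PII_REQUIREMENTS.items()
        if required <= aggregated  # subset test: every required permission is present
    )


# Two apps published under the same (hypothetical) owner.
app_a = {"camera", "record_audio", "access_fine_location"}
app_b = {"body_sensors", "access_coarse_location"}
print(exposed_pii(app_a), exposed_pii(app_b))  # [] []
print(exposed_pii(app_a | app_b))              # ['biometric_id', 'location']
```

Neither app alone exposes a PII class, but the union held by their common owner exposes two, which is the aggregation effect the risk model targets.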

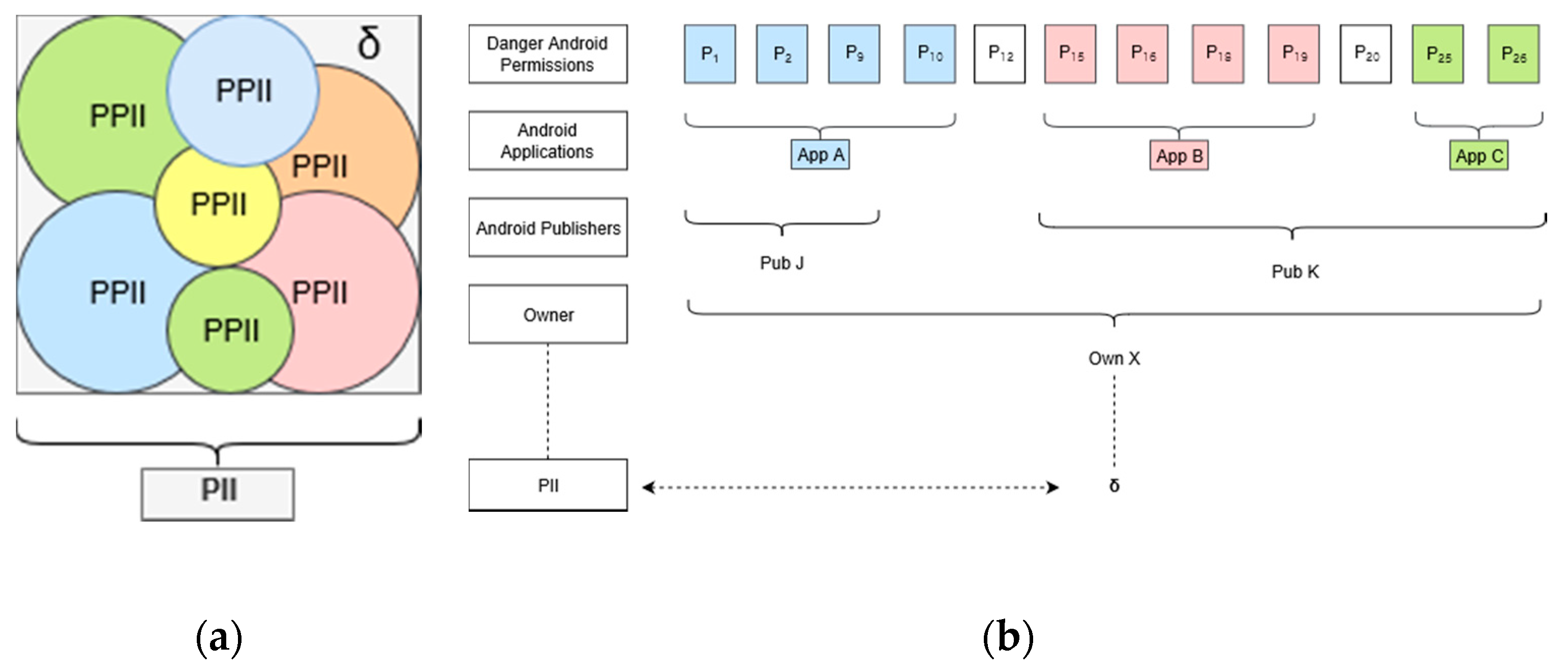

3.1. Android Permission Scope Model

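- Firstly, the apps $\{A_1, A_2, \ldots, A_{N_{APP}}\}$ obtain four types of user consent (permission protection levels): normal, dangerous, signature and system [79]. Only the 26 dangerous permissions $\{P_{n1}, P_{n2}, \ldots, P_{n26}\}$ are used by the apps to collect sensitive personal data.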

- Secondly, the publishers $\{W_1, W_2, \ldots, W_{N_{PUB}}\}$ publish and own the apps $\{A_1, A_2, \ldots, A_{N_{APP}}\}$ available on the Google Play Store [57]. As each publisher controls its apps, it can easily accumulate partial data through the underlying permissions $\{P_{n1}, P_{n2}, \ldots, P_{n26}\}$ of those apps.

- Finally, each owner in $\{K_1, K_2, \ldots, K_{N_{OWN}}\}$ owns several app-publishing companies or sub-organizations. Selling personal information within the same owner, and even to different owners, is a well-established practice [12,36,80]. Therefore, the owners can directly or indirectly collect personal data from the publishers $\{W_1, W_2, \ldots, W_{N_{PUB}}\}$ and the apps $\{A_1, A_2, \ldots, A_{N_{APP}}\}$ through the associated permissions $\{P_{n1}, P_{n2}, \ldots, P_{n26}\}$.
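The three levels of this scope model can be sketched as nested set unions: each publisher accumulates the dangerous permissions of its apps, and each owner accumulates those of its publishers. The app, publisher and owner names and the permission sets below are hypothetical placeholders, and the sketch assumes that every permission is fully shared upward, which is the simplest reading of the model rather than a claim about real data flows.

```python
# Illustrative three-level aggregation: apps -> publishers -> owners.
# All names and permission sets are hypothetical; full upward sharing is assumed.
from typing import Dict, List, Set

app_permissions: Dict[str, Set[str]] = {
    "chat_app": {"read_contacts", "record_audio", "camera"},
    "map_app": {"access_fine_location", "access_coarse_location"},
    "game_app": {"read_phone_state", "write_external_storage"},
}
publisher_apps: Dict[str, List[str]] = {
    "publisher_1": ["chat_app", "map_app"],
    "publisher_2": ["game_app"],
}
owner_publishers: Dict[str, List[str]] = {
    "owner_1": ["publisher_1", "publisher_2"],
}


def aggregate(children: List[str], child_perms: Dict[str, Set[str]]) -> Set[str]:
    """Union the permission sets of all children (apps or publishers)."""
    result: Set[str] = set()
    for child in children:
        result |= child_perms.get(child, set())  # unknown children contribute nothing
    return result


# Level 2: each publisher accumulates its apps' permissions.
publisher_permissions = {
    pub: aggregate(apps, app_permissions) for pub, apps in publisher_apps.items()
}
# Level 3: each owner accumulates its publishers' permissions.
owner_permissions = {
    own: aggregate(pubs, publisher_permissions) for own, pubs in owner_publishers.items()
}

print(len(publisher_permissions["publisher_1"]))  # 5
print(len(owner_permissions["owner_1"]))          # 7
```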

3.2. The Personal Information Scope Model

3.3. Android Permission Triggered User Profiling

3.3.1. Connection Between Android Permission and PPII

3.3.2. Association of Android Permission and PPII

The heuristic method for Android application permission and PII mapping:

Survey for Android application permission and PII mapping:

- Contact Number: Contact number includes access to landline, fax, mobile, workplace and family members’ phone numbers, etc.

- Biometric ID: Biometric ID includes access to iris, retina, blood, genetic and fingerprint data, etc.

- Address: Address includes access to current, home, work, educational institution and frequently visited addresses, etc.

- Social Graph: Social graph includes access to information about close friends, neighbors, cousins, family members, club members, etc.

- Human Behavior: Human behavior includes access to sensitive personal attitudes, hobbies, buying interests, brand preferences, shopping habits, etc.

- Unique ID: Unique ID includes access to any internet identity, password, membership ID, verification code, etc.

- Email: Email includes access to the email domain, email service provider, email address, email-based service preferences, email-associated photos, etc.

- Location: Finally, location includes access to the exact location, fine location, job location, recently visited places, family location, workplace, current position, location-based habit data, etc.

4. Case Studies

4.1. Experimental Environment

4.1.1. Case Study Selection Criteria

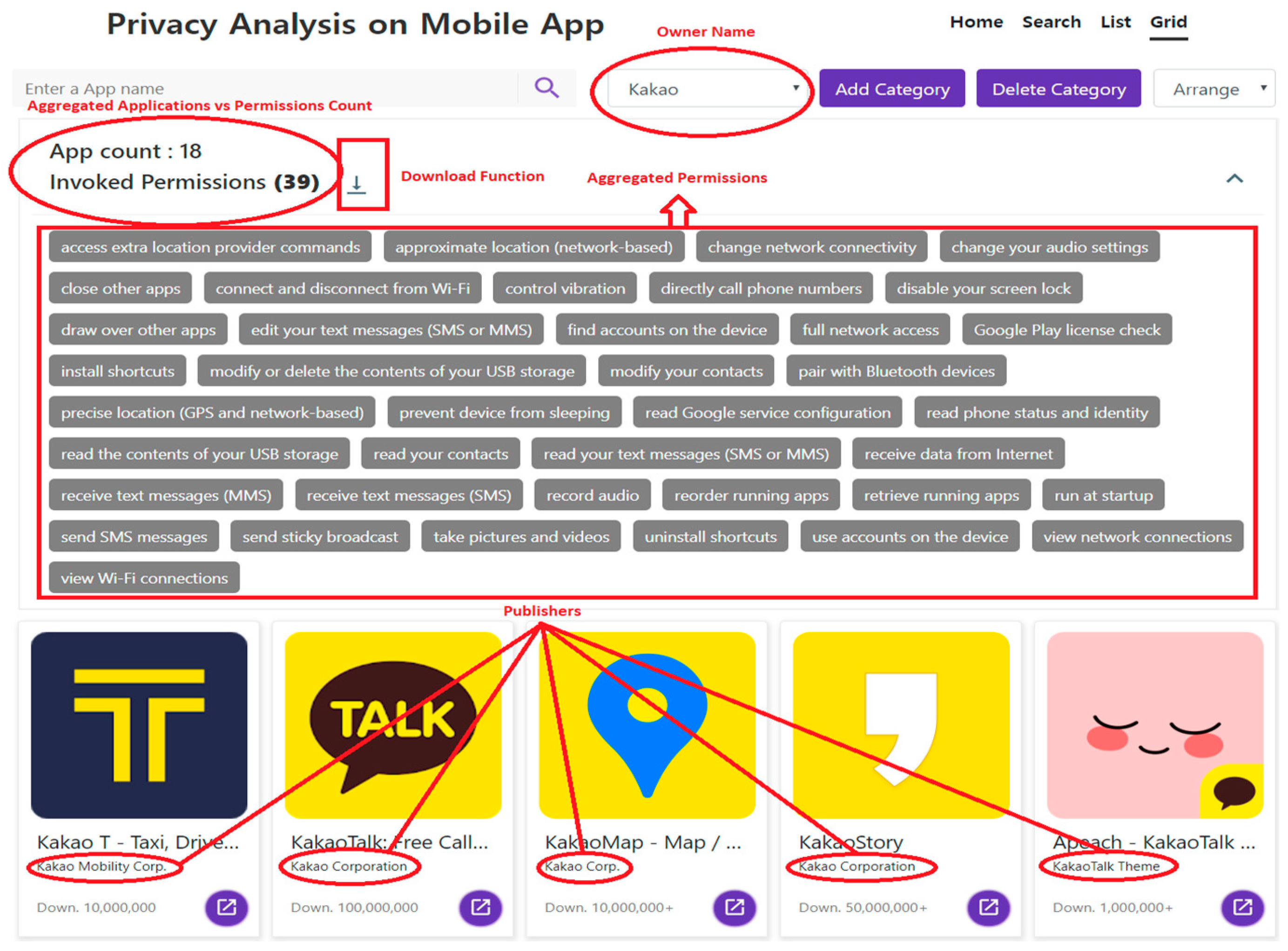

- Application brand popularity in the local market: Kakao and Naver are both top application brands in South Korea. Of the top 10 popular apps, three are from Kakao and two are from Naver [88].

- The similarity in service: The availability of diverse apps from both companies, such as chatting, map, dictionary, translator and social apps, is another reason for selecting Kakao and Naver.

- Availability of multiple publishers: Both Kakao and Naver publish apps via several publishers.

4.1.2. Android Privacy Permission Analyzing Web Application

- A Node.js-based web application server (WAS): The application gathered data from the Google Play Store [57] using an open API. An asynchronous input/output (I/O) service mechanism was implemented in Node.js to provide non-blocking operation. As a result, the application could operate independently of the response success rate of the API.

- A complete database: The database included 18,500 mobile applications with 160,000 dangerous Android permissions. For each mobile application, it stored related information such as the title, publisher, number of downloads and rating, together with the application's permission list. Our web application used the Google Play Store [57] API to integrate the applications on the Play Store with our database. In addition, any application not yet in our database could be added immediately for future use.

- The web-based service portal: The last part was the service portal, which provided functions such as searching, listing and grouping data by owning company. The portal also allowed users to download an Excel file containing the Android permission set and the associated app owner’s identity.
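The three components described above can be sketched together. The original server was written in Node.js; the sketch below shows the same ideas in Python, using `asyncio` for concurrent, non-blocking requests in which a failed call is skipped rather than fatal, and an in-memory SQLite database for lookup-or-add storage and grouping by owning company. Everything named here is hypothetical: `fetch_app_details` only simulates a store API call and contacts no real endpoint, and the schema, package names and owner labels are placeholders rather than the study's actual design. The Excel export is not shown.

```python
# Hypothetical pipeline sketch: concurrent, failure-tolerant fetching (asyncio),
# lookup-or-add storage (in-memory SQLite), and grouping by owning company.
import asyncio
import sqlite3
from typing import Dict, List

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE app (package TEXT PRIMARY KEY, title TEXT, publisher TEXT,
                      owner TEXT, downloads INTEGER, rating REAL);
    CREATE TABLE app_permission (package TEXT REFERENCES app(package),
                                 permission TEXT, PRIMARY KEY (package, permission));
    """
)


async def fetch_app_details(package: str) -> Dict[str, object]:
    """Hypothetical stand-in for a store API call; packages starting with 'bad' fail."""
    await asyncio.sleep(0.01)  # simulated network I/O; other requests keep running
    if package.startswith("bad"):
        raise ConnectionError(f"API request failed for {package}")
    return {"title": package.split(".")[-1], "publisher": "publisher_1",
            "owner": "owner_x", "downloads": 1000, "rating": 4.5,
            "permissions": ["camera", "record_audio"]}


def is_known(package: str) -> bool:
    """True if the app is already stored in the database."""
    row = conn.execute("SELECT 1 FROM app WHERE package = ?", (package,)).fetchone()
    return row is not None


def store(package: str, info: Dict[str, object]) -> None:
    """Insert one app and its permission list."""
    conn.execute("INSERT INTO app VALUES (?, ?, ?, ?, ?, ?)",
                 (package, info["title"], info["publisher"], info["owner"],
                  info["downloads"], info["rating"]))
    conn.executemany("INSERT INTO app_permission VALUES (?, ?)",
                     [(package, p) for p in info["permissions"]])
    conn.commit()


async def add_missing(packages: List[str]) -> List[str]:
    """Fetch only unknown apps, concurrently; a failed request is skipped, not fatal."""
    missing = [p for p in packages if not is_known(p)]
    results = await asyncio.gather(*(fetch_app_details(p) for p in missing),
                                   return_exceptions=True)
    added = []
    for package, result in zip(missing, results):
        if not isinstance(result, Exception):
            store(package, result)
            added.append(package)
    return added


def permissions_by_owner() -> Dict[str, int]:
    """Count distinct dangerous permissions per owning company."""
    rows = conn.execute(
        """SELECT a.owner, COUNT(DISTINCT ap.permission)
           FROM app a JOIN app_permission ap ON a.package = ap.package
           GROUP BY a.owner ORDER BY a.owner"""
    )
    return dict(rows.fetchall())


added = asyncio.run(add_missing(["com.example.chat", "bad.app", "com.example.map"]))
print(added)                   # ['com.example.chat', 'com.example.map']
print(permissions_by_owner())  # {'owner_x': 2}
```

Because `asyncio.gather(..., return_exceptions=True)` returns exceptions as values instead of raising them, one failing request does not abort the batch, which mirrors the independence from the API's response success rate described above.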

4.1.3. Android Privacy Permission Collection

4.1.4. Description of the Evaluation Tool

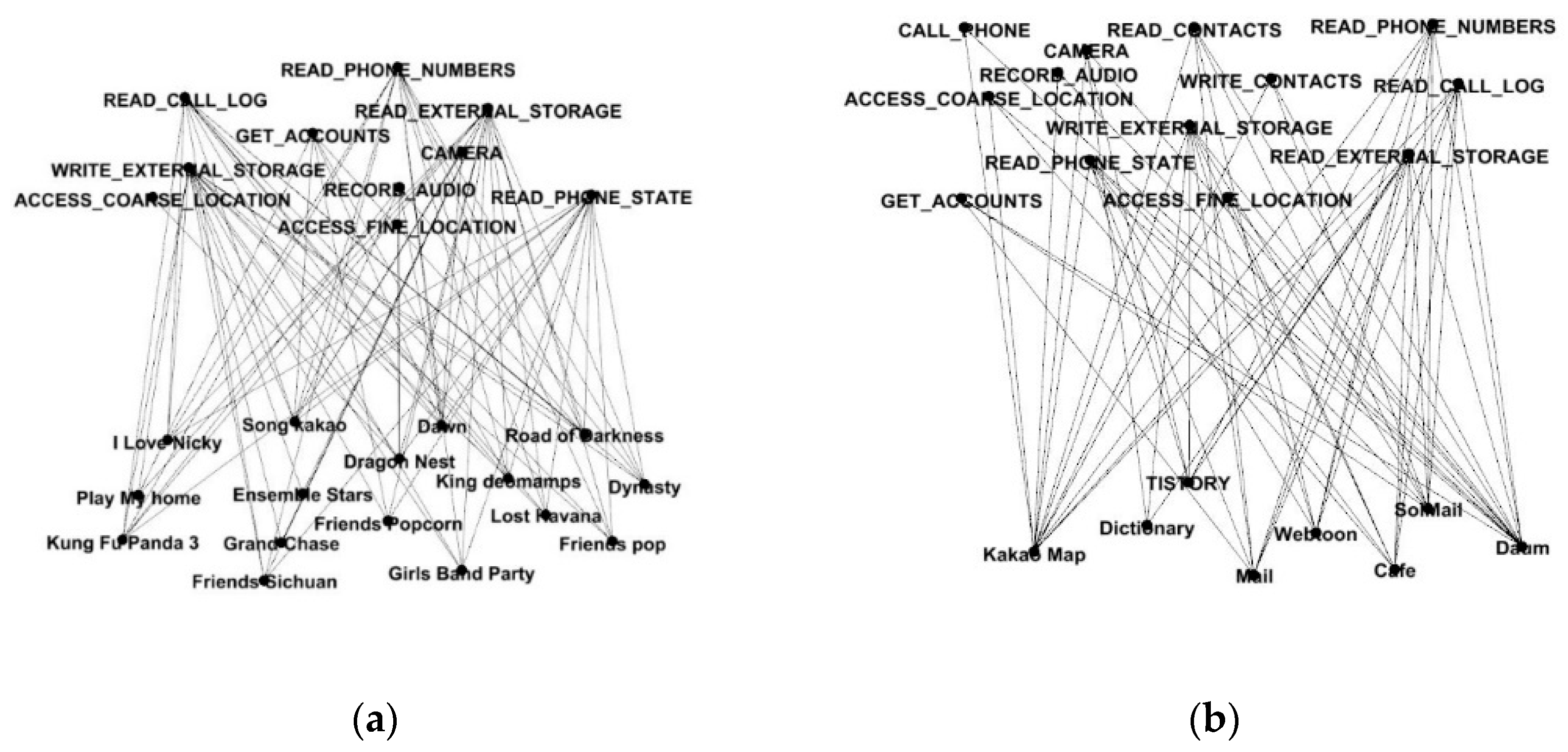

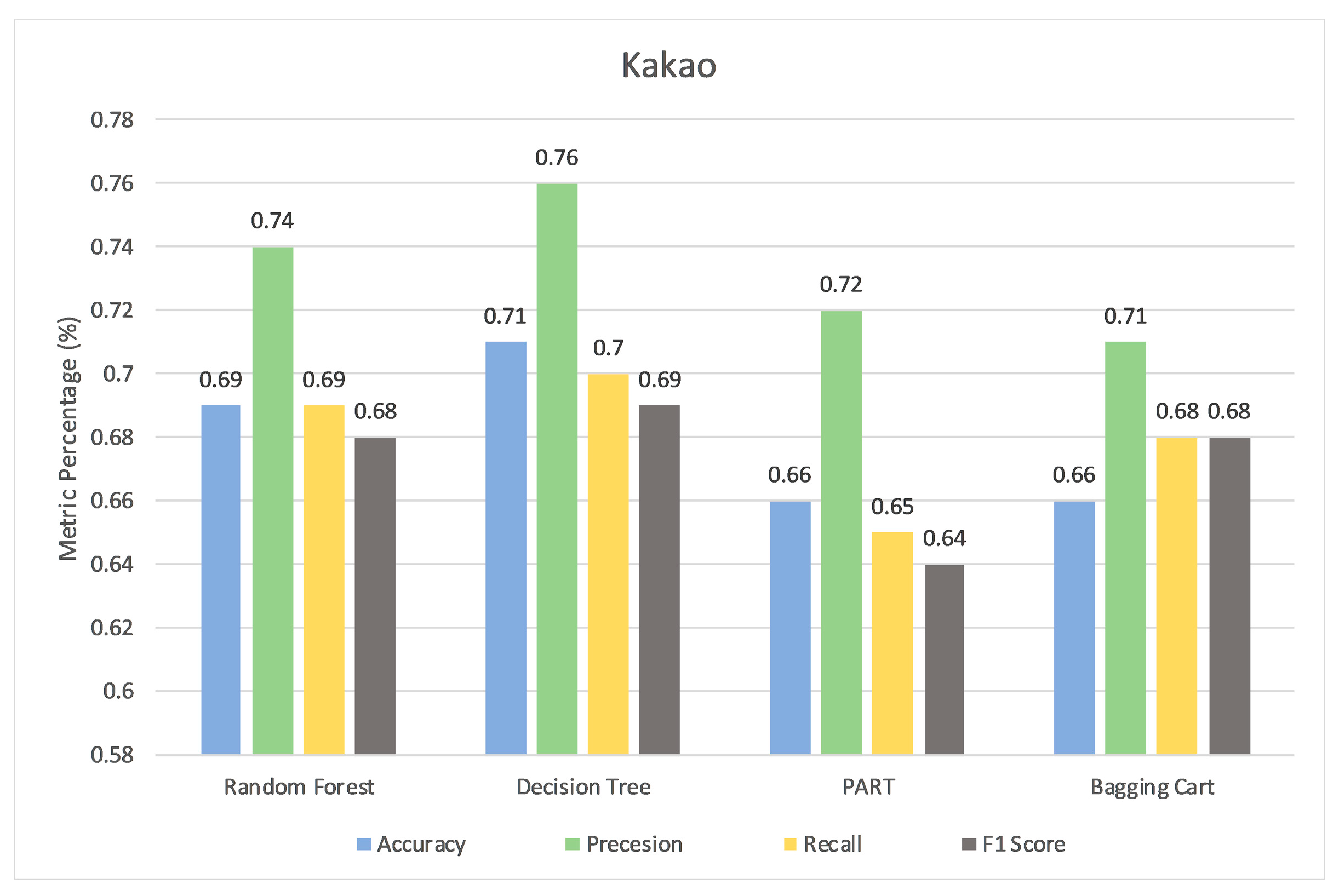

4.2. Case Study of Kakao

4.2.1. Description of the Dataset

4.2.2. Key Insight from Experimental Evaluation

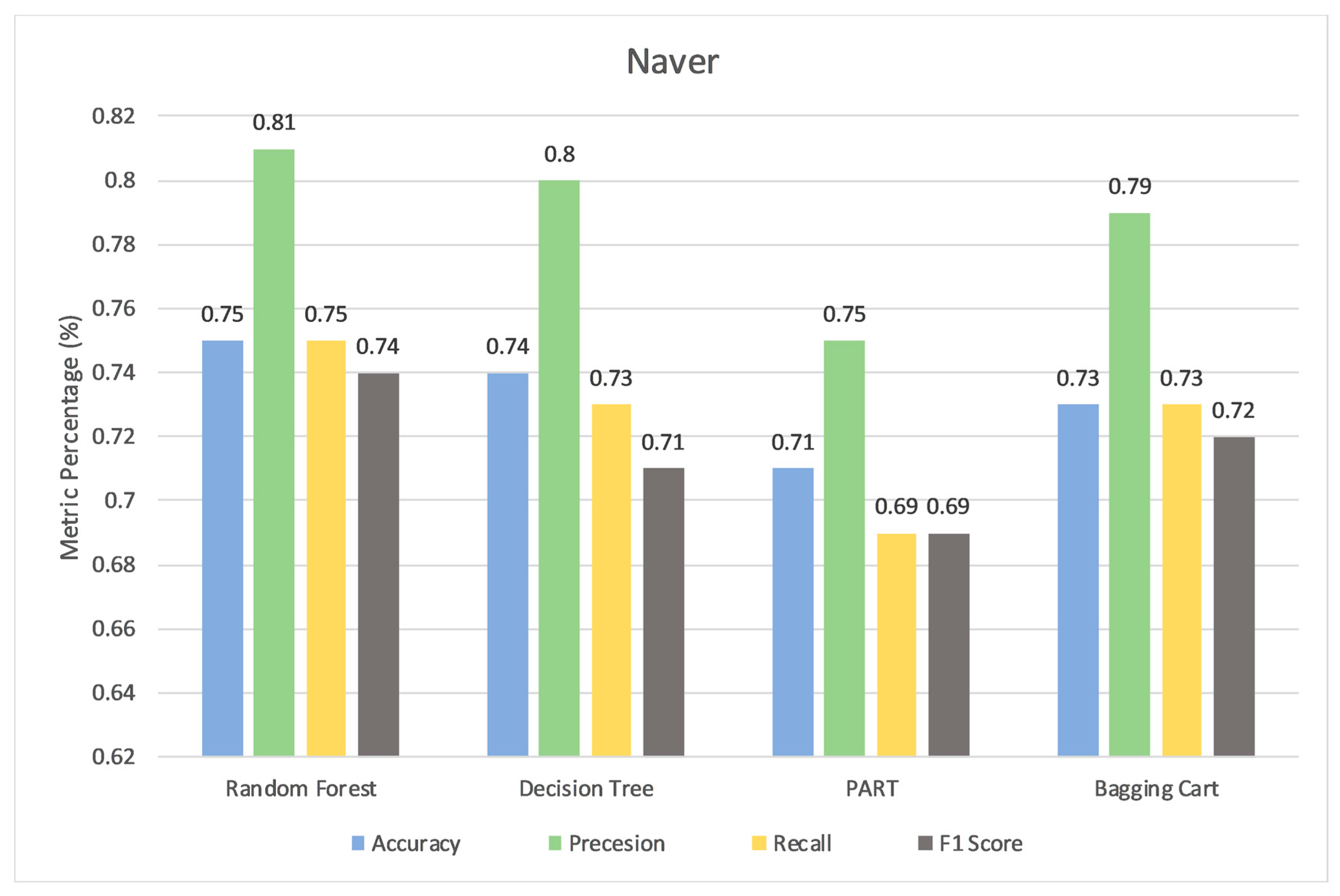

4.3. Case Study of Naver

4.3.1. Description of the Dataset

4.3.2. Key Insight from Experimental Evaluation

5. Discussions

5.1. Key Learning From the Study

- In terms of access severity, Android permissions were of two types: one-time access and continuous access. PII such as ‘biometric IDs’ and ‘contact numbers’ were directly exposed through one-time access to ‘USE_FINGERPRINT’ and ‘CALL_PHONE’. In contrast, ‘social graph’ and ‘human behavior’ required continuous access to ‘READ_CALL_LOG’, ‘READ_EXTERNAL_STORAGE’, ‘READ_CALENDAR’, etc.

- The PII exposure risk depended on two sub-factors: the quantitative and qualitative attributes of the Android permissions. For example, PII such as ‘location’ (location graph) became more accurate with repeated use of the ‘FINE_LOCATION’ and ‘COARSE_LOCATION’ permissions. That is, the more often specific Android permissions are used, the more the PII is exposed. In contrast, the ‘BODY_SENSORS’ permission continuously sensed the surroundings, mainly the human body. However, some body sensors might collect high-quality attributes while others might not. Thus, the user profiling risk (PII exposure rate) also depended on the quality of the data gathered through permissions.

- The PII revealing risk did not depend on a single Android app (permission). As publishers and owners could aggregate permissions from different apps, awareness and research focused on a single Android application (permission) are no longer sufficient. Because of the risk of owner-side permission accumulation, risk modeling studies must evaluate permissions from a multiple-app perspective.

5.2. Probabilistic Analysis of the Risk Model

5.3. Recommendations

- Along with assessing an application’s privacy features, users should note the identity of its publisher and owner. The study recommended using applications from different publishers and owners.

- The study also recommended that users track the total flow of dangerous Android permissions to any specific application publisher or owner. For example, users should carefully monitor which sensitive PII is about to be revealed to a specific publisher or owner.

- Most existing studies classified personal data leaks from Android permissions by considering only first-level (application-level) information. However, this study recommended that researchers also consider second-level (publisher) and third-level (owner) permission aggregation; otherwise, the overall research outcomes and objectives might be missed.
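The user-side tracking recommended here could start from a simple per-owner tally: the share of the 26 dangerous permissions that a user has granted across all installed apps of each owner. This is a hypothetical sketch; the installed-app list and owner labels are made up, and it assumes that every permission granted to an app reaches that app's owner.

```python
# Hypothetical user-side tracker: share of the 26 dangerous permissions per owner.
from collections import defaultdict
from typing import Dict, List, Set, Tuple

TOTAL_DANGEROUS = 26  # number of dangerous permissions considered in this study

# (owner, permissions granted to one installed app); values are made up.
installed: List[Tuple[str, Set[str]]] = [
    ("owner_x", {"camera", "record_audio", "read_contacts"}),
    ("owner_x", {"access_fine_location", "camera"}),
    ("owner_y", {"read_sms"}),
]


def owner_exposure(apps: List[Tuple[str, Set[str]]]) -> Dict[str, float]:
    """Percentage of the dangerous permissions each owner can aggregate."""
    per_owner: Dict[str, Set[str]] = defaultdict(set)
    for owner, perms in apps:
        per_owner[owner] |= perms  # a permission granted to several apps counts once
    return {o: round(100 * len(p) / TOTAL_DANGEROUS, 1) for o, p in per_owner.items()}


print(owner_exposure(installed))  # {'owner_x': 15.4, 'owner_y': 3.8}
```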

5.4. Limitations

5.5. Future Consideration

- Considering the diverse data sharing scope among stakeholders: The data sharing scope among stakeholders (owners, publishers and applications) varies. In the future, the study plans to consider distinct data sharing likelihoods from the application to the publisher (α), from the publisher to the owner (β) and from owner to owner (γ). Inter-company relationships and the data-storage architecture determine the data sharing options, so the permission data acquisition rate would vary with these factors.

- The weighting of individual Android permissions: Not all Android permissions carry equal information that can form PII. The study plans to introduce a data value weight (ω1, ω2, …, ωN) for each dangerous Android permission based on its contribution to PII formation. The matrices mapping dangerous Android permissions to PII formation will then be redefined based on these weights.

- Redesigning the analyzing app: Finally, the study plans to improve the web app ‘Privacy analysis on mobile App’ (http://52.79.237.144:3000/) [45] based on the aforesaid factors. The web application will be reshaped so that users can track the percentage of their overall personal data flowing through Android permissions towards a specific owner.

- In the future, a larger dataset covering larger application-owning enterprises, such as Alphabet, Facebook, eBay and Amazon, would be considered.
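The planned sharing likelihoods (α, β, γ) and permission weights (ω) can be combined in a small illustrative model. Assuming, purely for illustration, that sharing events are independent and that the same α, β and γ apply to every app, a permission granted to $k$ apps reaches a target owner with probability $1-(1-\alpha\beta\gamma)^{k}$ (with $\gamma = 1$ for the apps' own owner), and the expected weighted share of information reaching that owner is $\sum_i \omega_i\,[1-(1-\alpha\beta\gamma)^{k_i}] / \sum_i \omega_i$. This formula, the function names and all numeric values are assumptions, not results from the study.

```python
# Hypothetical combination of the planned sharing likelihoods (alpha, beta, gamma)
# and permission weights (omega). Independence between apps is an assumption.
from typing import Dict


def reach_probability(k_apps: int, alpha: float, beta: float, gamma: float = 1.0) -> float:
    """P(at least one of k apps passes a permission's data to the target owner).

    Each app's data reaches its own owner with probability alpha * beta; passing it
    to a different owner multiplies that by gamma (gamma=1.0 for the own owner).
    """
    per_app = alpha * beta * gamma
    return 1.0 - (1.0 - per_app) ** k_apps


def weighted_exposure(apps_per_permission: Dict[str, int], weights: Dict[str, float],
                      alpha: float, beta: float, gamma: float = 1.0) -> float:
    """Expected share (0..1) of total permission weight that reaches the owner.

    Every permission in apps_per_permission must have an entry in weights.
    """
    total = sum(weights.values())
    reached = sum(
        weights[p] * reach_probability(k, alpha, beta, gamma)
        for p, k in apps_per_permission.items()
    )
    return reached / total


weights = {"access_fine_location": 3.0, "read_contacts": 2.0, "camera": 1.0}
apps_per_permission = {"access_fine_location": 2, "read_contacts": 1, "camera": 0}
print(round(reach_probability(2, alpha=0.5, beta=0.8), 2))                  # 0.64
print(round(weighted_exposure(apps_per_permission, weights, 0.5, 0.8), 3))  # 0.453
```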

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Chong, I.; Ge, H.; Li, N.; Proctor, R.W. Influence of privacy priming and security framing on mobile app selection. Comput. Secur. 2018, 78, 143–154.

- Onik, M.M.H.; Ahmed, M. Blockchain in the Era of Industry 4.0. In Data Analytics: Concepts, Techniques, and Applications; Ahmed, M., Pathan, A.-S.K., Eds.; CRC Press: Boca Raton, FL, USA, 2018; pp. 259–298. ISBN 9781138500815.

- Ahmed, E.; Yaqoob, I.; Hashem, I.A.T.; Shuja, J.; Imran, M.; Guizani, N.; Bakhsh, S.T. Recent Advances and Challenges in Mobile Big Data. IEEE Commun. Mag. 2018, 56, 102–108.

- Cadwalladr, C.; Graham-Harrison, E. Revealed: 50 Million Facebook Profiles Harvested for Cambridge Analytica in Major Data Breach. Available online: https://www.theguardian.com/news/2018/mar/17/cambridge-analytica-facebook-influence-us-election (accessed on 12 February 2019).

- Volodzko, D. Marriott Breach. Available online: https://www.forbes.com/sites/davidvolodzko/2018/12/04/marriott-breach-exposes-far-more-than-just-data/#19e9b70f6297 (accessed on 12 February 2019).

- Kenthapadi, K.; Mironov, I.; Thakurta, A. Privacy-preserving Data Mining in Industry. In Proceedings of the 2019 World Wide Web Conference, Taipei, Taiwan, 20–25 April 2019; pp. 1308–1310.

- He, Y.; Yang, X.; Hu, B.; Wang, W. Dynamic privacy leakage analysis of Android third-party libraries. J. Inf. Secur. Appl. 2019, 46, 259–270.

- Jha, A.K.; Lee, W.J. An empirical study of collaborative model and its security risk in Android. J. Syst. Softw. 2018, 137, 550–562.

- Yu, L.; Luo, X.; Qian, C.; Wang, S.; Leung, H.K.N. Enhancing the Description-to-Behavior Fidelity in Android Apps with Privacy Policy. IEEE Trans. Softw. Eng. 2018, 44, 834–854.

- Ito, K.; Hasegawa, H.; Yamaguchi, Y.; Shimada, H.Y. Detecting Privacy Information Abuse by Android Apps from API Call Logs. In Proceedings of the 2018 International Workshop on Security, Miyagi, Japan, 3–5 September 2018; pp. 143–157.

- Islam, M.R. Numeric rating of Apps on Google Play Store by sentiment analysis on user reviews. In Proceedings of the 2014 International Conference on Electrical Engineering and Information & Communication Technology; IEEE: Piscataway, NJ, USA, 2014; pp. 1–4.

- Hatamian, M.; Momen, N.; Fritsch, L.; Rannenberg, K. A Multilateral Privacy Impact Analysis Method for Android Apps. In Annual Privacy Forum; Springer: Cham, Switzerland, 2019; pp. 87–106.

- Azfar, A.; Choo, K.-K.R.; Liu, L. An Android Communication App Forensic Taxonomy. J. Forensic Sci. 2016, 61, 1337–1350.

- Azfar, A.; Choo, K.-K.R.; Liu, L. Forensic Taxonomy of Android Social Apps. J. Forensic Sci. 2017, 62, 435–456.

- Mehrnezhad, M.; Toreini, E. What Is This Sensor and Does This App Need Access to It? Informatics 2019, 6, 7.

- Gu, J.; Huang, R.; Jiang, L.; Qiao, G.; Du, X.; Guizani, M. A Fog Computing Solution for Context-Based Privacy Leakage Detection for Android Healthcare Devices. Sensors 2019, 19, 1184.

- Moore, S.R.; Ge, H.; Li, N.; Proctor, R.W. Cybersecurity for Android Applications: Permissions in Android 5 and 6. Int. J. Hum. Comput. Interact. 2019, 35, 630–640.

- Mcilroy, S.; Shang, W.; Ali, N.; Hassan, A.E. User reviews of top mobile apps in Apple and Google app stores. Commun. ACM 2017, 60, 62–67.

- Wang, X.; Wang, W.; He, Y.; Liu, J.; Han, Z.; Zhang, X. Characterizing android apps’ behavior for effective detection of malapps at large scale. Futur. Gener. Comput. Syst. 2017, 75, 30–45.

- Kumar, R.; Zhang, X.; Khan, R.U.; Sharif, A. Research on Data Mining of Permission-Induced Risk for Android IoT Devices. Appl. Sci. 2019, 9, 277.

- Kim, J.; Jung, I. Efficient Protection of Android Applications through User Authentication Using Peripheral Devices. Sustainability 2018, 10, 1290.

- Liu, X.; Du, X.; Zhang, X.; Zhu, Q.; Wang, H.; Guizani, M. Adversarial Samples on Android Malware Detection Systems for IoT Systems. Sensors 2019, 19, 974.

- Doğru, İ.; KİRAZ, Ö. Web-based android malicious software detection and classification system. Appl. Sci. 2018, 8, 1622.

- Duffie, D.; Malamud, S.; Manso, G. The relative contributions of private information sharing and public information releases to information aggregation. J. Econ. Theory 2010, 145, 1574–1601.

- Richter, H.; Slowinski, P.R. The Data Sharing Economy: On the Emergence of New Intermediaries. IIC-Int. Rev. Intellect. Prop. Compet. Law 2019, 50, 4–29.

- Venkatadri, G.; Lucherini, E.; Sapiezynski, P.; Mislove, A. Investigating sources of PII used in Facebook’s targeted advertising. Proc. Priv. Enhancing Technol. 2019, 2019, 227–244.

- Huckvale, K.; Torous, J.; Larsen, M.E. Assessment of the data sharing and privacy practices of smartphone apps for depression and smoking cessation. JAMA Netw. Open 2019, 2, 192542.

- Shilton, K.; Greene, D. Linking Platforms, Practices, and Developer Ethics: Levers for Privacy Discourse in Mobile Application Development. J. Bus. Ethics 2019, 155, 131–146.

- Facebook, Instagram, WhatsApp Go down Simultaneously. Available online: https://www.businesstoday.in/technology/internet/facebook-instagram-whatsapp-go-down-simultaneously/story/327610.html (accessed on 6 August 2019).

- Facebook is Sharing Users’ WhatsApp and Instagram Data to Catch Terrorists | The Independent. Available online: https://www.independent.co.uk/life-style/gadgets-and-tech/news/facebook-policy-share-users-data-across-whatsapp-instagram-tackle-terrorists-social-media-app-isis-a7797201.html (accessed on 29 July 2019).

- Rangole, W.F.H.K. Large-Scale Authorization Data Collection and Aggregation. US Patents 16/056,322, 7 March 2019.

- Boehm, M.; Evfimievski, A.; Reinwald, B. Efficient data-parallel cumulative aggregates for large-scale machine learning. In BTW 2019; Grust, T., Naumann, F., Böhm, A., Lehner, W., Härder, T., Rahm, E., Heuer, A., Klettke, M., Meyer, H., Eds.; Gesellschaft für Informatik: Bonn, Germany, 2019; pp. 267–286.

- Bakalash, R.; Shaked, G.; Caspi, J. Enterprise-Wide Data-Warehouse with Integrated Data Aggregation Eng. U.S. Patent 7,315,849, 1 January 2008.

- Rabl, T.; Gómez-Villamor, S.; Sadoghi, M.; Muntés-Mulero, V.; Jacobsen, H.A.; Mankovskii, S. Solving big data challenges for enterprise application performance management. Proc. VLDB Endow. 2012, 5, 1724–1735.

- Fritsch, L.; Momen, N. Derived Partial Identities Generated from App Permissions. In Open Identity Summit 2017; Fritsch, L., Roßnagel, H., Hühnlein, D., Eds.; Gesellschaft für Informatik: Bonn, Germany, 2017; pp. 117–130.

- Jinhong, Y.; Chul-Soo, K.I.M.; ONIK, M.M.H. Aggregated Risk Modelling of Personal Data Privacy in Internet of Things. In Proceedings of the 2019 21st International Conference on Advanced Communication Technology (ICACT); IEEE: Piscataway, NJ, USA, 2019; pp. 425–430.

- Onik, M.M.H.; Al-Zaben, N.; Yang, J.; Lee, N.-Y.; Kim, C.-S. Risk Identification of Personally Identifiable Information from Collective Mobile App Data. In Proceedings of the International Conference on Computing, Electronics & Communications Engineering 2018 (iCCECE ’18); IEEE: Southend, UK, 2018; pp. 71–76.

- Liang, S.; Du, X. Permission-combination-based scheme for android mobile malware detection. In Proceedings of the 2014 IEEE International Conference on Communications (ICC); IEEE: Piscataway, NJ, USA, 2014; pp. 2301–2306.

- Shuba, A.; Bakopoulou, E.; Mehrabadi, M.A.; Le, H.; Choffnes, D.; Markopoulou, A. AntShield: On-Device Detection of Personal Information Exposure. arXiv 2018, arXiv:1803.01261.

- Minen, M.T.; Stieglitz, E.J.; Sciortino, R.; Torous, J. Privacy Issues in Smartphone Applications: An Analysis of Headache/Migraine Applications. Headache J. Head Face Pain 2018, 58, 1014–1027.

- Hosseini, M.; Qin, X.; Wang, X.; Niu, J. Extracting Information Types from Android Layout Code Using Sequence to Sequence Learning. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 767–770.

- Gündüç, S.; Eryiğit, R. Role of new ideas in the mobile phone market share. Int. J. Model. Simul. Sci. Comput. 2018, 9, 1850018.

- Kakao. Available online: https://www.kakaocorp.com/ (accessed on 6 April 2019).

- Naver. Available online: https://www.naver.com/ (accessed on 6 April 2019).

- Privacy Analysis on Mobile App. Available online: http://52.79.237.144:3000/ (accessed on 11 May 2019).

- Posey, C.; Raja, U.; Crossler, R.E.; Burns, A.J. Taking stock of organisations’ protection of privacy: Categorising and assessing threats to personally identifiable information in the USA. Eur. J. Inf. Syst. 2017, 26, 585–604.

- Voss, W.G. European union data privacy law reform: General data protection regulation, privacy shield, and the right to delisting. Bus. Lawyer 2017, 72, 221–223.

- McCallister, E. Guide to Protecting the Confidentiality of Personally Identifiable Information; Diane Publishing: Collingdale, PA, USA, 2010; ISBN 1437934889.

- Pfitzmann, A.; Hansen, M. Anonymity, unlinkability, undetectability, unobservability, pseudonymity, and identity management-a consolidated proposal for terminology. Version v0 2008, 31, 15.

- Kaur, G.; Agrawal, S. Differential Privacy Framework: Impact of Quasi-identifiers on Anonymization. In Proceedings of the 2nd International Conference on Communication, Computing and Networking, Chandigarh, India, 29–30 March 2018; pp. 35–42.

- Soh, C.; Njilla, L.; Kwiat, K.; Kamhoua, C.A. Learning quasi-identifiers for privacy-preserving exchanges: A rough set theory approach. Granul. Comput. 2018, 3, 1–14.

- Murphy, R.S. Property rights in personal information: An economic defense of privacy. In Privacy; Routledge: London, UK, 2017; pp. 43–79.

- NIST PII. Available online: https://csrc.nist.gov/glossary/term/personally-identifiable-information (accessed on 12 February 2019).

- Butler, D.A.; Rodrick, S. Australian Media Law; Thomson Reuters (Professional) Australia Limited: Pyrmont, NSW, Australia, 2015; ISBN 9780455234403.

- Porter, C.C. De-identified data and third party data mining: The risk of re-identification of personal information. Shidler JL Com. Tech. 2008, 5, 1.

- Momen, N.; Pulls, T.; Fritsch, L.; Lindskog, S. How Much Privilege Does an App Need? Investigating Resource Usage of Android Apps (Short Paper). In Proceedings of the 2017 15th Annual Conference on Privacy, Security and Trust (PST); IEEE: Piscataway, NJ, USA, 2017; pp. 268–2685.

- Android Apps on Google Play. Available online: https://play.google.com/store/apps (accessed on 6 April 2019).

- iTunes - Apple (IN). Available online: https://www.apple.com/kr/itunes/ (accessed on 1 July 2019).

- Google Developers Android Dangerous Permissions. Available online: https://developer.android.com/guide/topics/permissions/overview (accessed on 12 December 2018).

- Sharma, U.; Bansal, D. A Study of Android Application Execution Trends and Their Privacy Threats to a User with Respect to Data Leaks and Compromise. In Advanced Computational and Communication Paradigms; Springer: Singapore, 2018; pp. 675–682. ISBN 978-981-10-8237-5.

- Baalous, R.; Poet, R. How Dangerous Permissions are Described in Android Apps’ Privacy Policies? In Proceedings of the 11th International Conference on Security of Information and Networks, Glasgow, UK, 9–11 September 2018; pp. 26–27.

- Sivan, N.; Bitton, R.; Shabtai, A. Analysis of Location Data Leakage in the Internet Traffic of Android-based Mobile Devices. arXiv 2018, arXiv:1812.04829.

- Kim, K.; Kim, T.; Lee, S.; Kim, S.; Kim, H. When Harry Met Tinder: Security Analysis of Dating Apps on Android. In Proceedings of the 2018 Nordic Conference on Secure IT Systems, Oslo, Norway, 28–30 November 2018; pp. 454–467.

- Onik, M.M.H.; Al-Zaben, N.; Hoo, H.P.; Kim, C.-S. A Novel Approach for Network Attack Classification based on Sequential Questions. Ann. Emerg. Technol. Comput. 2018, 2, 1–14.

- Chiluka, N.; Singh, A.K.; Eswarawaka, R. Privacy and Security Issues Due to Permissions Glut in Android System. In Proceedings of the 2018 International Conference on Inventive Research in Computing Applications (ICIRCA), Coimbatore, India, 11–12 July 2018; pp. 406–411.

- Jadon, P.; Mishra, D.K. Security and Privacy Issues in Big Data: A Review. In Emerging Trends in Expert Applications and Security; Springer: Singapore, 2019; pp. 659–665. ISBN 978-981-13-2285-3.

- Jain, V.; Laxmi, V.; Gaur, M.; On, M.M. APPLADroid: Automaton Based Inter-app Privacy Leak Analysis for Android. In Proceedings of the 2019 International Conference on Security & Privacy, Prague, Czech Republic, 23–25 February 2019; pp. 219–233.

- Sharma, K.; Gupta, B.B. Towards Privacy Risk Analysis in Android Applications Using Machine Learning Approaches. Int. J. E Serv. Mob. Appl. 2019, 11, 1–21.

- Song, D.H.; Son, C.Y. Mismanagement of personally identifiable information and the reaction of interested parties to safeguarding privacy in South Korea. Inf. Res. 2017, 22, 1–16.

- Sadeghi, A.; Bagheri, H.; Garcia, J.; Malek, S. A taxonomy and qualitative comparison of program analysis techniques for security assessment of android software. IEEE Trans. Softw. Eng. 2017, 43, 492–530.

- Li, J.; Sun, L.; Yan, Q.; Li, Z.; Srisa-an, W.; Ye, H. Significant Permission Identification for Machine Learning Based Android Malware Detection. IEEE Trans. Ind. Inform. 2018, 14, 3216–3225.

- Arora, A.; Peddoju, S.K.; Chouhan, V.; Chaudhary, A. Poster: Hybrid Android Malware Detection by Combining Supervised and Unsupervised Learning. In Proceedings of the 24th Annual International Conference on Mobile Computing and Networking, New Delhi, India, 29 October–2 November 2018; pp. 798–800.

- Shuba, A.; Bakopoulou, E.; Markopoulou, A. Privacy Leak Classification on Mobile Devices. In Proceedings of the IEEE Workshop on Signal Processing Advances in Wireless Communications, SPAWC, Kalamata, Greece, 25–28 June 2018.

- Razaghpanah, A.; Nithyanand, R.; Vallina-Rodriguez, N.; Sundaresan, S.; Allman, M.; Kreibich, C.; Gill, P. Apps, Trackers, Privacy, and Regulators: A Global Study of the Mobile Tracking Ecosystem. In Proceedings of the 2018 Network and Distributed System Security Symposium, San Diego, CA, USA, 18–21 February 2018.

- Ren, J.; Rao, A.; Lindorfer, M.; Legout, A.; Choffnes, D. Recon: Revealing and controlling pii leaks in mobile network traffic. In Proceedings of the 14th Annual International Conference on Mobile Systems, Applications, and Services, Singapore, 25–30 June 2016; pp. 361–374.

- Daly, A. The legality of deep packet inspection. Int. J. Commun. Law Policy 2011, 14, 1–12.

- Cohen, I.G.; Mello, M.M. HIPAA and protecting health information in the 21st Century. JAMA 2018, 320, 231–232.

- Onik, M.M.H.; Kim, C.S.; Yang, J. Personal Data Privacy Challenges of the Fourth Industrial Revolution. In Proceedings of the 2019 21st International Conference on Advanced Communication Technology (ICACT), PyeongChang, Korea, 17–20 February 2019; pp. 635–638.

- Permissions Overview. Android Developers. Available online: https://developer.android.com/guide/topics/permissions/overview (accessed on 5 April 2019).

- Galloway, S. The Four: The Hidden DNA of Amazon, Apple, Facebook and Google; Bantam Press: London, UK, 2017; ISBN 978-0525501220.

- The Data Brokers Quietly Buying and Selling Your Personal Information. Available online: https://www.fastcompany.com/90310803/here-are-the-data-brokers-quietly-buying-and-selling-your-personal-information (accessed on 11 July 2019).

- Liccardi, I.; Pato, J.; Weitzner, D.; Abelson, H.; De Roure, D. No technical understanding required: Helping users make informed choices about access to their personal data. In Proceedings of the 11th International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services, Houston, TX, USA, 12–14 November 2014; pp. 140–150.

- Kumar, S.; Shanker, R. Context Aware Dynamic Permission Model: A Retrospect of Privacy and Security in Android System. In Proceedings of the 2018 International Conference on Intelligent Circuits and Systems, Hsinchu, Taiwan, 18–20 March 2018; pp. 324–329.

- Todorov, A.; Chaiken, S.; Henderson, M.D. The heuristic-systematic model of social information processing. In The Persuasion Handbook: Developments in Theory and Practice; SAGE Publications Sage UK: London, UK, 2002; pp. 195–211.

- Creswell, J.; Poth, C. Qualitative Inquiry and Research Design: Choosing among Five Approaches; Sage Publications Sage CA: Thousand Oaks, CA, USA, 2017.

- Kakaotalk: Number of Monthly Active Users Worldwide 2019 | Statista. Available online: https://www.statista.com/statistics/278846/kakaotalk-monthly-active-users-mau/ (accessed on 6 August 2019).

- YouTube Threatens Naver in Korean Internet Search Market - 비즈니스코리아 - BusinessKorea. Available online: http://www.businesskorea.co.kr/news/articleView.html?idxno=30000 (accessed on 6 August 2019).

- Top Grossing Apps and Download Statistics Google Play | App Annie. Available online: https://www.appannie.com/en/apps/google-play/top/south-korea/overall/ (accessed on 30 July 2019).

- Markov, Z.; Russell, I. An introduction to the WEKA data mining system. ACM SIGCSE Bull. 2006, 38, 367–368.

- Liaw, A.; Wiener, M. Classification and regression by randomForest. R news 2002, 2, 18–22.

- Safavian, S.R.; Landgrebe, D. A survey of decision tree classifier methodology. IEEE Trans. Syst. Man. Cybern. 1991, 21, 660–674.

- Tsoumakas, G.; Katakis, I. Multi-label classification: An overview. Int. J. Data Warehous. Min. 2007, 3, 1–13.

- Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140.

- Powers, D.M. Evaluation: From precision, recall and F-measure to ROC, informedness, markedness and correlation. J. Mach. Learn. Technol. 2011, 2, 37–63.

- Boström, H. Estimating Class Probabilities in Random Forests. In Proceedings of the 2007 Sixth International Conference on Machine Learning and Applications, ICMLA 2007, Cancun, Mexico, 13–15 December 2007; pp. 211–216.

- Dankowski, T.; Ziegler, A. Calibrating random forests for probability estimation. Stat. Med. 2016, 35, 3949–3960.

- Wei, L.; Luo, W.; Weng, J.; Zhong, Y.; Zhang, X.; Yan, Z. Machine learning-based malicious application detection of android. IEEE Access 2017, 5, 25591–25601.

| PII | PPII |
|---|---|
| Full name and home address, biological identities, contact number, full email address, national identification number, passport number, social security number, taxpayer number, patient identification data, iris data, fingerprints, credit card info, digital identity | Partial birthdate, part of a name, state or city, job status, web cookie, few digits of SSN, employment info, medical info, blood pressure data, education status, financial info, religion, supported team, postal code, music choice, IP address, place of birth, political view, race |

| Permission Group (10) | Individual Dangerous Permissions (26) |
|---|---|
| Calendar | Read calendar and write calendar |
| Call log | Read call log, write call log and process outgoing calls |
| Camera | Camera |
| Contacts | Read contacts, write contacts and get accounts |
| Location | Access fine location and access coarse location |
| Microphone | Record audio |
| Phone | Read phone state, read phone numbers, call phone, answer phone calls, add voicemail and use session initiation protocol (SIP) |
| Sensor | Body sensors |
| SMS | Send SMS, receive SMS, read SMS, receive WAP push and receive MMS |
| Storage | Read external storage and write external storage |

| Case | Dangerous Android Permissions (1st to 26th) Owned by an Owner Company | | | | Generated PII |
|---|---|---|---|---|---|
| Set 1 | 5th | 10th | 15th | 23rd | α |
| Set 2 | 1st | 15th | 9th | 26th | β |
| Set 3 | 3rd | 4th | 9th | 17th | δ |
| Set 4 | 5th | 6th | 23rd | 26th | γ |

| No. | Dangerous Android Permission | Detail Description |
|---|---|---|
| 1 | read_calendar | Read and write user information from the calendar |
| 2 | write_calendar | |
| 3 | camera | Access camera and capture image and video |
| 4 | read_contacts | Access contacts and profiles |
| 5 | write_contacts | |
| 6 | get_accounts | |
| 7 | access_fine_location | Access location; the network provider supports both coarse and fine location, whereas the GPS provider requires fine location |
| 8 | access_coarse_location | |
| 9 | record_audio | Access microphone |
| 10 | read_phone_state | Access related telephony features, such as accessing the phone number, answering calls and tracking phone status |
| 11 | read_phone_numbers | |
| 12 | call_phone | |
| 13 | answer_phone_calls | |
| 14 | read_call_log | Access related telephony features, such as reading and writing the call log |
| 15 | write_call_log | |
| 16 | process_outgoing_calls | |
| 17 | add_voicemail | |
| 18 | use_sip | Session initiation protocol |
| 19 | body_sensors | Access the body or environment sensor |
| 20 | send_sms | Access the message body and send messages |
| 21 | receive_sms | |
| 22 | read_sms | |
| 23 | receive_wap_push | |
| 24 | receive_mms | |
| 25 | read_external_storage | Access to external storage |
| 26 | write_external_storage | |

| Contact Number | Biometric ID | Address | Social Graph | Human Behavior | Unique ID | Location |

| Case | Dangerous Android Permissions (1 to 26) Owned by a Company | | | | PII Class |
|---|---|---|---|---|---|
| 1 | camera | record_audio | body_sensors | use_fingerprint | biometric_id |
| 2 | read_ext_storage | read_calendar | access_network | access_coarse_location | location |
| 3 | call_phone | send_sms | get_account | read_call_log | contact number |
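Before rows like these can be passed to the machine learning classifiers used in this study, each permission set has to be turned into features. One common choice, assumed here because the article does not describe its preprocessing, is a binary vector over the 26 dangerous permissions, ordered as in the numbered dangerous-permission table of this article. The rows above also contain names that are not in that 26-permission table (`use_fingerprint`, `access_network`) or that are abbreviated (`read_ext_storage`, `get_account`), so the hypothetical `encode` function reports such names separately instead of silently dropping them.

```python
# Sketch: encode a permission set as a 26-element 0/1 feature vector, ordered as
# in the dangerous-permission table. The encoding itself is an assumption.
from typing import List, Set, Tuple

DANGEROUS_PERMISSIONS: Tuple[str, ...] = (
    "read_calendar", "write_calendar", "camera", "read_contacts", "write_contacts",
    "get_accounts", "access_fine_location", "access_coarse_location", "record_audio",
    "read_phone_state", "read_phone_numbers", "call_phone", "answer_phone_calls",
    "read_call_log", "write_call_log", "process_outgoing_calls", "add_voicemail",
    "use_sip", "body_sensors", "send_sms", "receive_sms", "read_sms",
    "receive_wap_push", "receive_mms", "read_external_storage", "write_external_storage",
)
INDEX = {name: i for i, name in enumerate(DANGEROUS_PERMISSIONS)}


def encode(permissions: Set[str]) -> Tuple[List[int], Set[str]]:
    """Return the 0/1 feature vector and any names outside the 26-permission list."""
    vector = [0] * len(DANGEROUS_PERMISSIONS)
    unknown: Set[str] = set()
    for name in permissions:
        if name in INDEX:
            vector[INDEX[name]] = 1
        else:
            unknown.add(name)  # non-dangerous or abbreviated names are reported, not encoded
    return vector, unknown


# Case 1 from the table above.
vec, extra = encode({"camera", "record_audio", "body_sensors", "use_fingerprint"})
print(sum(vec), sorted(extra))  # 3 ['use_fingerprint']
```

How the study actually handled such out-of-list names is not stated; mapping abbreviations such as `read_ext_storage` to their full names before encoding would be one reasonable extension.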

| Owners | Publishers | Number of Apps | Possible Permission Set (Rows) | Considered PPII (Dangerous Android Permissions) | Considered PII Class |
|---|---|---|---|---|---|
| Kakao [43] | Kakao corporation | 20 | 326 | 26 | 8 |
| | Kakao mobility | 8 | | | |
| | Kakao game corp | 16 | | | |
| | Kakao theme | 11 | | | |
| | Daum corporation | 8 | | | |

| Owners | Publishers | Number of Apps | Possible Permission Set (Rows) | Considered PPII (Dangerous Android Permissions) | Considered PII Class |
|---|---|---|---|---|---|
| Naver [44] | Naver corporation | 36 | 394 | 26 | 8 |
| | Line corporation | 19 | | | |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Onik, M.M.H.; Kim, C.-S.; Lee, N.-Y.; Yang, J. Personal Information Classification on Aggregated Android Application’s Permissions. Appl. Sci. 2019, 9, 3997. https://doi.org/10.3390/app9193997
