Abstract

This study proposes a double-track method for the classification of fruit varieties for application in retail sales. The method uses two nine-layer Convolutional Neural Networks (CNNs) with the same architecture, but different weight matrices. The first network classifies fruits according to images of fruits with a background, and the second network classifies based on images with the ROI (Region Of Interest, a single fruit). The results are aggregated with the proposed values of weights (importance). Consequently, the method returns the predicted class membership with the Certainty Factor (CF). The use of the certainty factor associated with prediction results from the original images and cropped ROIs is the main contribution of this paper. It has been shown that CFs indicate the correctness of the classification result and represent a more reliable measure compared to the probabilities on the CNN outputs. The method is tested with a dataset containing images of six apple varieties. The overall image classification accuracy for this testing dataset is excellent (99.78%). In conclusion, the proposed method is highly successful at recognizing unambiguous, ambiguous, and uncertain classifications, and it can be used in vision-based sales systems under uncertain conditions and in unplanned situations.

1. Introduction

Recognizing different kinds of fruits and vegetables is perhaps the most difficult task in supermarkets and fruit shops []. Retail sales systems based on bar code identification require the seller (cashier) to enter the unique code of the given fruit or vegetable because they are individually sold by weight. This procedure often leads to mistakes because the seller must correctly recognize every type of vegetable and fruit, a significant challenge even for highly-trained employees. A partial solution to this problem is the introduction of an inventory with photos and codes. Unfortunately, this requires the cashier to browse the catalog during check-out, extending the time of the transaction. In the case of self-service sales, the species (types) and varieties of fruits must be specified by the buyer. Unsurprisingly, this can often result in the misidentification of fruits by buyers (e.g., Conference pear instead of Bartlett pear). Letting buyers indicate the product themselves invites both honest and deliberate mistakes (purposeful indication of a less expensive species/variety of fruit/vegetable), which can lead to business losses. The likelihood of an incorrect assessment increases when different fresh products are mixed up.

One potential solution to this challenge is the automatic recognition of fruits and vegetables. The notion of recognition (identification, classification) can be understood in different ways: as the recognition of a fruit (distinguishing a fruit from another object, e.g., a leaf, a background), recognizing the species of a fruit (e.g., an apple from a pear), and recognizing a variety of a given species of fruit (e.g., Golden Delicious apples from Gloster apples). In the case of retail systems, the last two applications have special significance. The concept of fruit classification best reflects the essence of the issue discussed in the article as a way of automatically determining the right species and variety of fruits. Classification of fruits and vegetables is a relatively complex problem owing to the huge number of varieties []. Considerable differences in appearance exist within species and varieties, including irregular shapes, colors, and textures. Furthermore, images range widely in lighting conditions, distance, and angle of the camera, all of which result in distorted images. Another problem is the partial or full occlusion of the object. These constraints have led to the lack of multi-class automated fruit and vegetable classification systems [] in real-life applications.

An examination of the literature suggests that the effectiveness of fruit and vegetable classification using various machine learning methods [] (support vector machine, k-nearest neighbor, decision trees, neural networks), especially recent advancements in deep learning, is high []. However, the construction of online fruit and vegetable classification systems in retail sales is challenged by the required model learning time and promptness in receiving the classification result, as well as the accuracy of the model prediction. In the case of complex, multi-layered models of deep neural networks, the learning and inference time can be significant. Therefore, in the analyzed application, the most preferred models are those that provide a solution relatively quickly and with high classification accuracy. However, even with high classification rates, it is not guaranteed that the tested method will recognize the given fruit (vegetable) objects in the image in all cases. The system is used by a person who may unwittingly place a hand or another object in the frame, which in turn may result in erroneous classifications. There are also unplanned situations, such as the accidental mixing of fresh products, fruit placement in unusual packaging, different lighting conditions, or spider webs on the lens. Such situations may also cause uncertainty in the model results.

The primary aim of this study is to propose a method for fruit classification that, in addition to the classification result, reports the certainty factor of that result. In the case of a low value of the certainty factor, it informs the user of the most suitable species (varieties) of products. Since the biggest challenge is recognizing the variety of a given species of fruit (vegetable), this work focuses on the classification of several varieties of one species of a popular fruit as a case study: the apple.

The remainder of the paper is organized into the following sections. Section 2 provides a review of the fruit and vegetable classification techniques used in retail sales systems. The problem statement and the research methodologies, together with the proposed method of fruit classification using the certainty factor, are outlined in Section 3, which also reports the results of the research and the discussion. Section 4 provides a conclusion for the study.

2. Related Work

The VeggieVision system is one of the first classifiers of fruit and vegetable products []. It recognizes the product based on color and texture from color images according to a nearest-neighbor classifier. This system reports the most likely products to the user, one of which has a high probability of being the correct one. The accuracy of the system is modest compared to currently achieved results: it exceeds 95%, but only when the top four answers are counted.

The authors of [] also used the nearest-neighbor classifier for fruit classification, but focused on the depth channel of RGBD (Red, Green, Blue, Depth) images. The use of hierarchical multi-feature classification and hybrid features made it possible to obtain better results for system accuracy among species of fruits, as well as their variety.

The authors in [] presented a vision-based online fruit and vegetable inspection system with detection and weighing measurement. As a preliminary proposal, the authors used an algorithm leveraging data on hue and morphology to identify bananas and apples. A fruit classifier based on additional extraction of color chromaticity was presented in []. A number of other studies [] indicated that fruit recognition can also be performed by other classification methods such as fuzzy support vector machine, linear regression classifier, twin support vector machine, sparse autoencoder, classification tree, and logistic regression.

The proposal to use neural networks as the fruit classifier was presented among others by Zhang et al. [], who used a feedforward neural network. The authors first removed the image background with the split-and-merge algorithm, then the color, texture, and shape information was extracted to compose feature data. The authors analyzed numerous learning algorithms, and the FSCABC algorithm (Fitness-Scaled Chaotic Artificial Bee Colony algorithm) was reported to have the best classification accuracy (89.1%). Other applications of neural networks for fruit classification can be found in [,].

In recent years, a number of articles have shown considerable modeling success with deep learning applications for image recognition. In [], the authors applied deep learning with the Convolutional Neural Network (CNN) to vegetable object recognition with the results of learning rate being 99.14% and the recognition rate being 97.58%. In [], the authors evaluated two CNN architectures (Inception and MobileNet) as classifiers of 10 different kinds of fruits or vegetables. They reported that MobileNet propagated images significantly faster with almost the same accuracy (top three accuracy of 97%). However, there were difficulties in predicting clementines and kiwis. This may be due to the choice of training and testing images, which were partly captured with a video camera attached to the proposed retail market system and partly extracted from ImageNet.

The article [] presented a comparative study between Bag Of Features (BOF), Conventional Convolutional Neural Network (CNN), and AlexNet for fruit recognition. The results indicated that all three techniques had excellent recognition accuracy, but the CNN technique was the fastest at presenting a recognition prediction. In turn, in the article [], two deep neural networks were proposed and tested on simple and more demanding datasets, with very good results for fruit classification accuracy on both datasets. Many numerical experiments for training various architectures of CNN to detect fruits were presented in []. A 13-layer CNN was proposed for a similar purpose in [].

The literature review reported above was used to inform the use of computer vision techniques in an automated sales stand or self-checkout. The literature indicates that machine learning methods (especially CNN methods) perform well at classification of fruits and vegetables in the case of pre-prepared datasets. However, pre-trained (tuned) methods are dependent on the data, and the availability of large collections of images of fruits and vegetables is limited []. Given the discussion on the use of CNN methods in automated sales stands or self-checkout, it appears necessary to become independent of a single result in order to increase the certainty of the obtained class and achieve a more effective use of computer vision.

For this purpose, we combine several methods: a CNN method for the fruit classification from a whole image, a YOLO (You Only Look Once) V3 method [] for the fruit detection from a whole image, and then, a CNN method for the fruit classification from images with a single object (apple). This double-track approach to the fruit classification allows determining the Certainty Factor (CF) of the results, the use of which is the main novelty of this paper.

The problem of fruit detection is also widely analyzed in the literature, especially in the detection of fruits in orchards [] and damage detection []. The YOLO V3 model [], the Faster R-CNN model [], and their modifications are the state-of-the-art fruit detection approaches [,]. The use of object detection and recognition techniques for multi-class fruit classification was presented in []. This approach is also effective, but does not calculate an objective certainty factor for the results that is independent of a single classification method.

3. Application of the Proposed Method to the Fruit Variety Classification

3.1. Problem Statement

The traditional grocery store has been evolving in recent decades into a supermarket and discount store concept, carrying all the goods shoppers often desire. These stores offer a very large number of products, both processed and partially processed, as well as fresh produce such as fruits and vegetables. Fresh produce is typically sold per piece or by weight. As discussed earlier, the sale of produce may be burdensome for cashiers, because they must remember (or search for) the identification code of each item. In the case of self-service checkouts, the sale of fruits and vegetables depends on the identification of the product's species and variety by buyers. Thus, the sales process in current use leads to longer customer service time, often causing errors (payments for the wrong products) and business losses.

The published literature suggests that machine vision systems and machine learning methods allow for the construction of systems for automatic fruit and vegetable classification. In particular, deep learning methods have high classification accuracy for both training and testing images, mainly in the case of recognizing species of fruits and vegetables. Recognizing varieties of fruits and vegetables is more difficult due to highly similar color, structure, and shapes in the same class. In fact, the image of the identified object may differ from the learned pattern, resulting in classification errors.

The primary problem addressed in this study is the following: Is it possible to build a machine vision system that can quickly classify the variety of fruits and vegetables, provide the certainty factor of the result, and, in the case of uncertainty, report the set of the most probable classes?

To tackle this question, a double-track method for fruit variety classification is proposed. It combines image classification applied to images with a background and object detection, which extracts single fruit objects that are then classified as well. Comparing the classification results for the different objects of the same image, with a weight assigned to each result, allows the calculation of the Certainty Factor (CF) of the proposed classification result.

3.2. Research Method

3.2.1. CNN for Fruit Classification

In the proposed fruit classification method, the inference procedure based on CNN is used several times for classification of one variety of fruit. Therefore, the CNN architecture should be as simple as possible, with the goal of handling the task of classification with the highest possible prediction accuracy. By advancing previous research [,], we present a simplified CNN architecture in Table 1. This CNN model has been tested for the variety classification of apples.

Table 1.

An architecture of the CNN model for fruit classification.

Here, we propose a deep neural network model architecture with 9 layers of neurons. The first layer is an input layer that contains 150 × 150 × 3 neurons (an RGB image of 150 × 150 × 3 values, resized from the original image of 320 × 258 × 3 pixels). The next 4 layers constitute two convolution and pooling stages that use receptive fields (convolutional kernels) of size 3 × 3 with no stride and no padding. The convolution layers give 32 and 64 feature maps, respectively. The convolution layers use the nonlinear ReLU (Rectified Linear Unit) activation function, defined as follows []:

$$f(x) = \max(0, x)$$

This function turns negative activations into zero, which makes the activations sparse and results in faster learning. To reduce dimensionality and simultaneously capture the features contained in the binned sub-regions, the max pooling strategy [] is used in the 3rd and 5th layers. The convolutional and max-pooling layers extract features from the image. Then, in order to classify the fruits, the fully-connected layers are applied to the output of the preceding dropout layer. Dropout is applied to each element within the feature maps (with a 50% chance of setting inputs to zero), thus randomly dropping units (along with their connections) from the neural network during training and helping prevent overfitting by adding noise to its hidden units [].
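The two operations described above can be illustrated on a toy feature map. The following is a minimal sketch in plain Python, not the Keras layers used in the study; the 2 × 2 pooling window and the 4 × 4 input values are assumptions made for illustration only.

```python
def relu(x):
    """Rectified Linear Unit: negative inputs become zero."""
    return max(0.0, x)


def max_pool_2x2(feature_map):
    """Non-overlapping 2 x 2 max pooling over a 2-D list (even height and width assumed)."""
    pooled = []
    for r in range(0, len(feature_map) - 1, 2):
        row = []
        for c in range(0, len(feature_map[r]) - 1, 2):
            window = (
                feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1],
            )
            row.append(max(window))
        pooled.append(row)
    return pooled


# Hypothetical 4 x 4 pre-activation feature map.
pre_activation = [
    [-1.0, 2.0, 0.5, -3.0],
    [4.0, -2.0, -0.5, 1.0],
    [0.0, -1.0, -2.0, -4.0],
    [3.0, 1.5, -1.0, 2.5],
]
activated = [[relu(v) for v in row] for row in pre_activation]
pooled = max_pool_2x2(activated)
print(pooled)  # [[4.0, 1.0], [3.0, 2.5]]
```

Each 2 × 2 window is reduced to its largest activation, so the spatial size is halved while the strongest response in each sub-region is kept.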

The 8th layer provides 64 fully-connected neurons with ReLU activation. The last layer, the final classifier, has 6 softmax neurons, which correspond to the six varieties of apples.
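To make the layer description easier to follow, the sketch below traces the tensor shape through a nine-layer stack of this kind and counts the trainable parameters. Several details are assumptions, since Table 1 is not reproduced here: a stride of 1 for the convolutions, 2 × 2 pooling windows, and a flattening step as the 6th layer. The function names are hypothetical.

```python
def conv_output(size, kernel=3, stride=1, padding=0):
    """Spatial size after a convolution (stride 1 and no padding assumed)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_shapes(input_size=150, channels=3, filters=(32, 64),
                 dense_units=64, classes=6, pool=2):
    """Return (layer name, output shape) pairs and the trainable parameter count."""
    shapes = [("input", (input_size, input_size, channels))]
    size, depth, params = input_size, channels, 0
    for n_filters in filters:
        size = conv_output(size)
        params += (3 * 3 * depth + 1) * n_filters  # kernel weights plus one bias per filter
        depth = n_filters
        shapes.append(("conv 3x3 + ReLU", (size, size, depth)))
        size //= pool
        shapes.append(("max pool", (size, size, depth)))
    flat = size * size * depth
    shapes.append(("flatten", (flat,)))       # assumed 6th layer
    shapes.append(("dropout 0.5", (flat,)))   # no trainable parameters
    params += (flat + 1) * dense_units
    shapes.append(("dense + ReLU", (dense_units,)))
    params += (dense_units + 1) * classes
    shapes.append(("dense + softmax", (classes,)))
    return shapes, params


shapes, params = trace_shapes()
for index, (name, shape) in enumerate(shapes, start=1):
    print(index, name, shape)
print("trainable parameters:", params)
```

Under these assumptions, almost all parameters sit in the first fully-connected layer, which is why keeping the convolutional part shallow does not by itself make the model small.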

To train the CNN model (optimize its weights and biases), the Adam (Adaptive moment estimation) algorithm [] was employed with cross entropy as the loss function. The Adam algorithm is a computationally-efficient extension of the stochastic gradient descent method.
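As a reminder of what the loss measures, the following sketch computes the softmax output and the cross-entropy loss for a single six-class example. The logit values are hypothetical, and the Adam update itself is not shown.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to one."""
    shift = max(logits)  # subtracting the maximum improves numerical stability
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probabilities, true_index):
    """Negative log-probability assigned to the true class."""
    return -math.log(probabilities[true_index])


# Hypothetical outputs of the last layer for one image, varieties A to F.
logits = [2.0, 0.5, -1.0, 0.0, 0.3, -0.7]
probabilities = softmax(logits)
loss_if_a = cross_entropy(probabilities, 0)  # true variety is A (the top score)
loss_if_c = cross_entropy(probabilities, 2)  # true variety is C (the lowest score)
print(round(loss_if_a, 4), round(loss_if_c, 4))
```

The loss is small when the network assigns a high probability to the true variety and grows as that probability falls, which is the quantity the optimizer minimizes.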

The presented architecture of the CNN model was tested for fruit classification in three different ways. First, the network was trained (and validated) with images containing all apple objects (original images). Second, the network was trained (and validated) with images of a single apple object (called the image with the apple, or ROI, the Region Of Interest). Finally, the network was trained (and validated) with both types of training data. In all cases, different network weights were obtained for the same CNN model. All trained CNN models were tested using the same testing data.

3.2.2. You Only Look Once for Fruit Detection

The YOLO V3 [] architecture was used to generate the apple ROIs from the original images of []. The YOLO (You Only Look Once) family of models is a series of end-to-end deep learning models designed for fast object detection. Version 3 used in this research has 53 convolutional layers. The main difference from the previous version of this architecture is that it makes detections at three different scales, thus making it suitable for smaller objects. Object features are extracted from these scales in a manner similar to a feature pyramid network.

In the first step, YOLO divides the input image into an S × S grid, where S depends on the scale. For each cell, it predicts only one object using bounding boxes. The network predicts an objectness score for each bounding box using logistic regression. The score parameter was used to filter out weak predictions. The resulting prediction is a box described by its top left and bottom right corners.

The original dataset consisted of folders for each fruit class, such as apple, banana, etc. Within each class folder, the images were further divided by variety. Apples in the images were located on a silver shiny plate that generated many false predictions. We used the weights pre-trained on the COCO dataset, which contains 80 classes, one of which is the apple class. The COCO apple class covers many different apple varieties, a desirable attribute in this case. We could therefore run the object detection using YOLO and keep just the apple class. To maximize predictive performance, we first set the minimum score parameter to 0.8. The predictions were good, but many apples were not detected. For this reason, we set the minimum score to 0.3, which allowed almost all objects to be included regardless of the variety.

Model predictions were saved as separate files named according to the source sample to allow for later verification. Generated predictions could be used for ground truthing during the training process.
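The filtering and cropping steps described in this section can be sketched as follows. This is an illustration only: the detection records are hypothetical and do not come from a real YOLO run, and the helper names `filter_detections` and `crop_roi` are not part of any library.

```python
def filter_detections(detections, wanted_label="apple", min_score=0.3):
    """Keep detections of the wanted class whose score reaches the threshold."""
    return [
        det for det in detections
        if det["label"] == wanted_label and det["score"] >= min_score
    ]


def crop_roi(image, box):
    """Cut a box (x1, y1, x2, y2: top left and bottom right corners) out of a row-major image."""
    x1, y1, x2, y2 = box
    return [row[x1:x2] for row in image[y1:y2]]


# Hypothetical detector output for one original image.
detections = [
    {"label": "apple", "score": 0.93, "box": (10, 20, 110, 125)},
    {"label": "apple", "score": 0.55, "box": (120, 30, 215, 130)},
    {"label": "apple", "score": 0.34, "box": (200, 140, 300, 240)},
    {"label": "bowl", "score": 0.88, "box": (0, 0, 320, 258)},
    {"label": "apple", "score": 0.12, "box": (5, 200, 40, 250)},
]

strict = filter_detections(detections, min_score=0.8)
relaxed = filter_detections(detections, min_score=0.3)

# Dummy image with 258 rows and 320 columns; each pixel stores its own (x, y) position.
image = [[(x, y) for x in range(320)] for y in range(258)]
rois = [crop_roi(image, det["box"]) for det in relaxed]
print(len(strict), len(relaxed))  # 1 3
```

In this toy example the lower threshold recovers two additional apples that the strict threshold misses, while detections of other classes are discarded at either setting.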

3.2.3. Proposed Fruit Classification Method Using the Certainty Factor

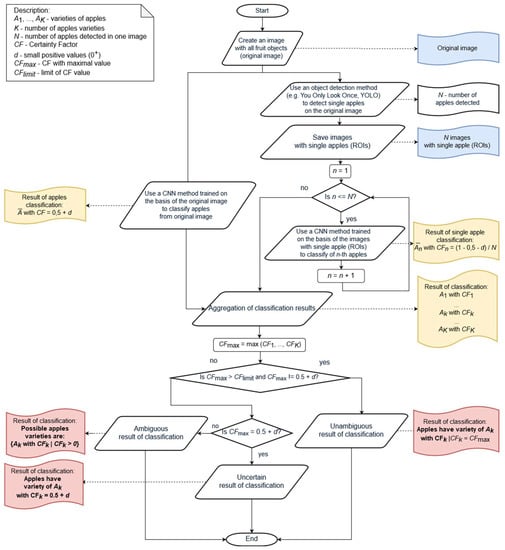

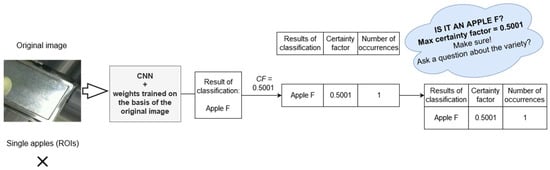

This study proposes a fruit classification method for a retail sales system. The method uses a machine vision system together with machine learning methods (shown in Figure 1). The first stage of the method involves creating an image with all fruit objects. The image includes one or many fruits (intended to be of one variety and species) with the background, and it is called the original image.

Figure 1.

Proposed fruit classification method on an example of apple variety classification.

In order to be sure of the obtained fruit classification result, the proposed method has two separate pathways for fruit classification.

The first pathway was to identify the fruit variety based on the entire original image. For this purpose, the previously described nine-layer CNN was used, which was trained based on the original images. The result of classification was determined with a certainty factor $CF_0$ with the following value:

$$CF_0 = 0.5 + d$$

where $d$ is a small positive value (here, $d = 0.0001$). In order not to introduce errors in the interpretation of the results, it is recommended that the $d$ value be less than $0.5/(o + 1)$, where $o$ is the maximum number of objects (apples) in one image in the dataset.

Studies have shown slightly higher accuracy for CNN trained with original images than with ROI objects. Therefore, the small positive value for the certainty factor gave slightly more importance to the result obtained in the first pathway of the fruit classification algorithm compared to the second pathway described below.

The second pathway of fruit classification consisted of recognizing the fruit variety based on single fruits from the original image. The first step was to use the object detection method to identify single apple objects in the amount of $N$ ($N \ge 0$). The recorded objects of single apples were images with ROIs.

The You Only Look Once (YOLO) method was used to extract images of individual objects from the original images. The fruit variety classification was done based on each nth ROI image ($n = 1, \ldots, N$). For this purpose, the previously described nine-layer CNN was used. It was trained with the ROI images. Each result of the classification (each CNN inference) was provided with the appropriate value of certainty factor $CF_n$:

$$CF_n = \frac{0.5 - d}{N}$$

where $N$ is the number of objects (apples) detected in the original image and $d$ is a small positive value (in the research, $d = 0.0001$).

The results obtained from both pathways were grouped, and the factor $CF_k$ for each kth variety was calculated as follows:

$$CF_k = \sum_{n \in I_k} CF_n$$

where $CF_k$ is a certainty factor for the kth variety of fruit (in the research, the variety of apples) and $I_k \subseteq \{0, 1, \ldots, N\}$ is the set of images (the original image, $n = 0$, and the ROI images, $n = 1, \ldots, N$) classified as the kth variety.

The indirect result of the classification can be determined in the form of the following model:

$$\{(v_k, CF_k) : k = 1, \ldots, K\}$$

where $v_k$ is the kth variety and $K$ is the number of fruit varieties that were detected.

Based on:

$$CF_{max} = \max_{k = 1, \ldots, K} CF_k$$

the final result of the classification was provided. If the value of $CF_{max}$ was higher than the limit value of the certainty factor ($CF_{limit}$) and was not equal to $0.5 + d$, then an unambiguous classification was obtained:

Fruits (for example, apples) are variety of $v_k$, where $CF_k = CF_{max}$.

If the value of $CF_{max}$ did not exceed the limit value of the certainty factor ($CF_{limit}$) and was not equal to $0.5 + d$, then an ambiguous classification was obtained. The user can only be informed about the set of possible classification results:

Possible varieties of fruits (apples) are $v_1, \ldots, v_K$.

If the value of $CF_{max}$ equaled $0.5 + d$, then uncertain classification was the result:

Fruits (for example, apples) are variety of $v_k$, where $CF_k = CF_{max}$,

where $d$ is a small positive value ($d = 0.0001$). This result was obtained using only one pathway of classification, which may give rise to uncertainty about the model results.
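The aggregation and the three-way decision described in this section can be sketched as below. The sketch rests on assumptions that should be checked against the original equations: the original image contributes 0.5 + d, each of the N ROI classifications contributes (0.5 − d)/N, d = 0.0001, and the limit value is 0.7501 as reported in Section 3.4.2. The function names are hypothetical, and the CNN predictions are passed in as plain labels.

```python
import math
from collections import defaultdict

D = 0.0001         # assumed small positive value d
CF_LIMIT = 0.7501  # limit value reported in Section 3.4.2


def certainty_factors(original_variety, roi_varieties, d=D):
    """Sum the weights of all classifications that voted for each variety."""
    cf = defaultdict(float)
    cf[original_variety] += 0.5 + d                      # first pathway (original image)
    for variety in roi_varieties:                        # second pathway (ROI images)
        cf[variety] += (0.5 - d) / len(roi_varieties)
    return dict(cf)


def classify(original_variety, roi_varieties, d=D, cf_limit=CF_LIMIT):
    """Return (classification type, candidate varieties, maximal certainty factor)."""
    cf = certainty_factors(original_variety, roi_varieties, d)
    ranked = sorted(cf, key=cf.get, reverse=True)
    cf_max = cf[ranked[0]]
    if math.isclose(cf_max, 0.5 + d):   # only the original image supports the result
        return "uncertain", ranked[:1], cf_max
    if cf_max > cf_limit:
        return "unambiguous", ranked[:1], cf_max
    return "ambiguous", ranked, cf_max


print(classify("A", ["A", "A", "A"]))  # all classifications agree: unambiguous
print(classify("A", ["A", "B"]))       # half of the ROIs disagree: ambiguous
print(classify("C", []))               # no ROI detected: uncertain
```

With these weights, a certainty factor of one is reached only when the original image and every ROI agree, and the limit of 0.7501 is exceeded only when the original image and more than half of the ROIs point to the same variety.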

3.3. Datasets

We employed six different apple varieties (named A–F), and the number of images for each class is provided in Table 2. The images (320 × 258 × 3 pixels) of apples came from the datasets presented in []. The images were obtained using an HD Logitech web camera with five-megapixel snapshots and show varying numbers of apples placed in the shop scenery. Various poses and different lighting conditions (i.e., in fluorescent, natural light, with or without sunshine) were preserved.

Table 2.

Number of images in the variety class of apples.

More information on the analyzed dataset was reported in [,]. For simplicity, the images of fruits were taken without being placed in plastic bags.

The data were divided into three sets (training data, validation data, testing data) in the ratio of 70% (4311 original images), 15% (924 original images), and 15% (926 original images), respectively. The recognition algorithm was used to identify a single apple in the original image. Each apple object was saved as a separate image (named as image with apple, or ROI image). In addition, all apples in each original image were identified and recorded. The detailed structure of the analyzed dataset is presented in Table 3.
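A split of this kind can be sketched as follows. This is an illustration under assumptions: the file names are placeholders, the random seed is arbitrary, and a simple shuffle is used, so the resulting counts are close to, but not necessarily identical with, those reported above.

```python
import random


def split_dataset(items, train_share=0.70, validation_share=0.15, seed=42):
    """Shuffle once, then cut into training, validation, and testing parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_share)
    n_validation = int(len(shuffled) * validation_share)
    training = shuffled[:n_train]
    validation = shuffled[n_train:n_train + n_validation]
    testing = shuffled[n_train + n_validation:]  # the remainder, about 15%
    return training, validation, testing


# Placeholder names standing in for the 6161 original images (4311 + 924 + 926).
files = [f"original_{i:04d}.jpg" for i in range(4311 + 924 + 926)]
training, validation, testing = split_dataset(files)
print(len(training), len(validation), len(testing))
```

Fixing the seed keeps the three subsets identical between runs, which matters when several networks are compared on the same testing data.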

Table 3.

Dataset structure considered in the research.

All tests and analyses were carried out in Python (v. 3.6.3) with Keras as a high-level neural network API running on top of TensorFlow.

3.4. Results and Discussion

3.4.1. Original versus Region of Interest Images

The CNN model architecture (presented in Section 3.2.1) was trained with various training and validation images (with only original images, only ROI images, and both). Our goal was to determine the image types necessary to estimate appropriate values of weights in the CNN model to classify the varieties of the fruit correctly. Despite different training and validation files, the model was tested using the same testing dataset. The test results are given in Table 4.

Table 4.

Test results of CNNs trained with various training and validation datasets.

According to the accuracy values presented in Table 4, the CNN should be trained only with the image types that it will later have to recognize. Training the network with additional images of different scales (many objects or one object) did not improve the accuracy of the classification. The results may also indicate that the size of the ROI relative to the image is highly significant.

It can also be concluded that the best classification performance was achieved by the proposed CNN trained and tested with the original images; this model produced only two incorrect classifications (accuracy: 99.78%). However, it should be noted that the number of testing images in this case was much smaller (about 35%) than in the case of testing with ROI images. The misclassifications referring to these cases are described in Table 5. Unfortunately, despite the high values of probabilities that samples belonged to the varieties obtained, the results were incorrect. Thus, the CNN output was not considered a fully reliable classifier, although its performance was close to perfect. As a result, additional methods were explored.

Table 5.

Incorrect classification of original images for the CNN trained with original images.

The proposed CNN, which was trained and validated with ROI images, demonstrated slightly less accuracy (97.56%). This accuracy was characteristic for the network, which was trained and validated with the same type of images.

3.4.2. Proposed Fruit Classification Method Using the Certainty Factor

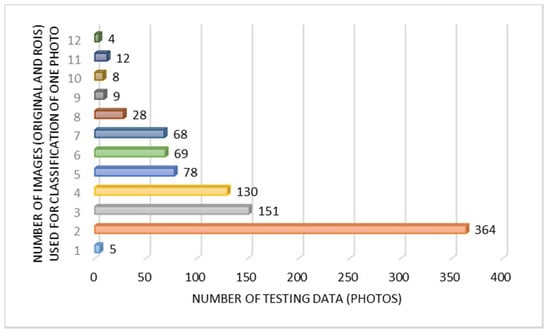

The proposed fruit classification method was tested for the same dataset with six different apple varieties. In this method, one photo classification was associated with classifications of a few images (one original image and ROI images). The amount of testing data according to the number of images for one photo classification is presented in Figure 2. It is evident that most cases (364 photos) concerned the classification of an apple based on one original image and one ROI image (this was a photo with one apple or a photo on which the YOLO method detected only one apple object). In the dataset, there were photos for which the YOLO method failed to identify any fruit (five photos). The YOLO method detected the most fruits (as many as 11) in four cases.

Figure 2.

The number of testing data related to the number of classifications for one photo.

As a result, the proposed method gave the recognized fruit varieties together with their certainty factors (CFs). Because the classification was performed based on two CNNs with different weights and many different fruit objects, it can be assumed that the approach was relatively objective, and the certainty factors can be reliable factors validating the correctness of the classification result. Consequently, the maximal value of CF ($CF_{max}$) may indicate the result of classification (i.e., a fruit variety with $CF_{max}$ can be the correct class). In a situation where $CF_{max}$ equals one, the given classification result can be treated as certain (this occurred in 875 of all 926 cases, i.e., 94.49%). In 97.95% of cases, $CF_{max}$ exceeded 0.7501, and the variety of fruit with $CF_{max}$ was then the correct variety. Only two misclassifications were detected for varieties with $CF_{max}$ (Table 6). Therefore, in the large majority of cases (99.78%), the fruit variety with $CF_{max}$ was the correct variety. All results of correct and incorrect classifications together with the values of the maximal value of CF are presented in Table 7. The actual classification related to predicted varieties of apples is shown in Table 8.

Table 6.

Incorrect classification based on the $CF_{max}$ value in the proposed method.

Table 7.

Results of apple variety classification using the proposed method.

Table 8.

Results of apple variety classification related to actual apple varieties.

According to the model results, it was possible to identify the limit value of $CF_{max}$ ($CF_{limit}$) for which the variety of fruit with $CF_{max} \le CF_{limit}$ can be treated as an ambiguous classification. In the analyzed case, $CF_{limit}$ can be equal to 0.7501. We had 19 original images for which the results of classification had $CF_{max} \le CF_{limit}$, including five original images with $CF_{max}$ equal to the certainty factor of the original image alone (ROIs were not detected). Thus, the analyzed classification cases can be divided into three types as follows:

- an unambiguous classification (where $CF_{max} > CF_{limit}$, 97.95% of cases)

- an ambiguous classification (where $CF_{max} \le CF_{limit}$ and classification based on the original image and at least one ROI image, 1.51% of cases)

- an uncertain classification (where $CF_{max} \le CF_{limit}$ and classification based only on the original image, 0.54% of cases).
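The shares listed above follow directly from the counts given in the text (926 testing photos, 19 of them at or below the limit value, five of those without any detected ROI). The short check below only restates this arithmetic; the dictionary keys are illustrative labels.

```python
total = 926             # testing photos
at_or_below_limit = 19  # photos whose maximal CF did not exceed the limit value
without_roi = 5         # photos for which no ROI was detected

counts = {
    "unambiguous": total - at_or_below_limit,
    "ambiguous": at_or_below_limit - without_roi,
    "uncertain": without_roi,
}
shares = {kind: round(100 * count / total, 2) for kind, count in counts.items()}
print(counts)
print(shares)  # {'unambiguous': 97.95, 'ambiguous': 1.51, 'uncertain': 0.54}
```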

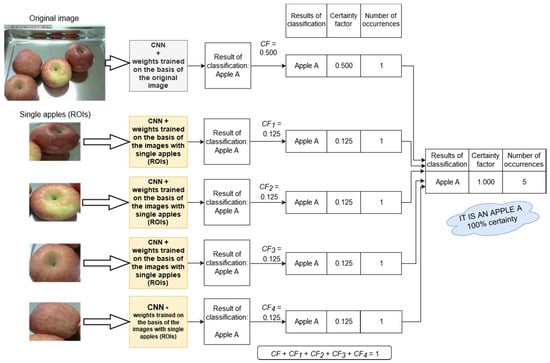

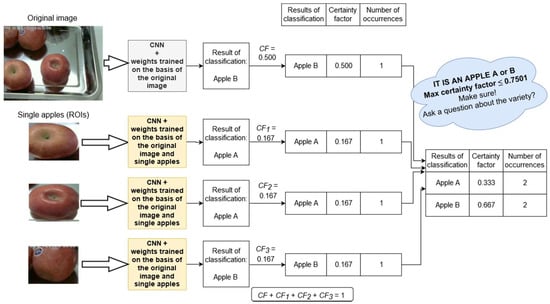

To illustrate the above situations in detail, Figure 3, Figure 4 and Figure 5 display examples of apple variety classification using the proposed method.

Figure 3.

An example of classification using the proposed method: unambiguous classification (based on the original image and ROI images).

Figure 4.

An example of classification using the proposed method: ambiguous classification (based on the original image and ROI images).

Figure 5.

An example of classification using the proposed system: uncertain classification (based only on the original image).

To complete the analysis, the execution time (prediction time) of the proposed method is presented in Table 9. As can be seen, the execution time depended on the number of objects detected in the original image.

Table 9.

Execution time analysis for the proposed method.

3.4.3. Comparison of the Results

The research was focused on the synergy of two approaches, the object detection method (in our case, YOLO V3) and the classifier of the full frame and ROIs. Therefore, the comparisons can relate to each method separately or the whole proposed method, which calculated the CFs of object classes.

First, a comparison of YOLO V3’s performance in relation to other tested methods is presented in Table 10. The YOLO accuracy did not directly influence the result of the system’s end inference. The YOLO V3 method affected the relation between the size of the object in the image and the image size itself in the training set and testing set, which in turn affected the accuracy of the fruit identification method using ROIs (in our case, CNN). In addition, YOLO accuracy also affected the number of classified objects (number of ROIs), which in turn affected the accuracy of the certainty factor.

Table 10.

Comparison of object detection methods.

In the research, all the methods were tested with the same testing data, which consisted of 926 files with multiple objects. As can be seen, YOLO V3 generated the highest number of apple class detections, which could be used as the learning ROI images for the classification network. The best average processing times were obtained using the MobileNetV2 + SSDLite and SSD Inception v2 configurations, but the number of detections was much lower compared to other architectures.

The second method used in our approach was the nine-layer CNN model. When we compared the proposed CNN with the CNN built based on [], we had a slightly higher overall accuracy rate (99.78% for the proposed CNN, 99.53% for the CNN built on the basis of []). In both research works, the same training, validation, and testing data with original images were analyzed. In the learning process of both models, augmentation methods were used. This improved the ability of the models to generalize. In particular, RGB normalization, horizontal flips, image zoom (0.2), and image shear (0.2) were used. In addition, we used a simpler CNN architecture that contained one fewer convolution pooling layer; therefore, the average processing time for one image was slightly lower (98.89 ms for the proposed CNN; 125.79 ms for the CNN built based on []).

The accuracy of the proposed method can be compared to that of others by treating the fruit variety with the maximum value of CF as the final classification. In Table 11, we compare the proposed method with the CNN built based on []. In both studies, the same training, validation, and testing data were analyzed. We found that the proposed method had a slightly higher accuracy rate.

Table 11.

Comparison of the two methods based on CNN.

We also compared the proposed method with the method based only on the nine-layer CNN (Table 1) learned and tested with the original images. In this case, the same incorrect classifications occurred (see Table 5 and Table 8), reflected in an accuracy of 99.78% (two wrong classifications out of 926). An important distinction is that in the proposed method, a value of CF that is not higher than CFlimit can indicate an incorrect classification by the vision system. This allows the user to be informed about several possible fruit varieties, from which they can select the correct one. In our study, only 14 cases out of 926 resulted in an ambiguous classification (the question addressed to the user: Which of the presented varieties is correct?), and five cases resulted in an uncertain classification (the user should ensure that the proposed classification result is correct). For the testing dataset, the proposed method did not provide incorrect answers and, thus, did not mislead the user. Taken together, these results indicate that, when ambiguous and uncertain cases are referred to the user, the proposed method reached 100% accuracy on this testing dataset.
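The three kinds of outcome described above can be illustrated with a simple thresholding sketch. The rule below is a hypothetical simplification, not the exact definition used in this paper: it assumes a single limit value `cf_limit`, treats a result as uncertain when no CF exceeds the limit, and as ambiguous when more than one CF exceeds it. The function name, class names, and CF values are invented for the example.

```python
from typing import Dict, List, Tuple


def interpret_cf(cf_by_class: Dict[str, float], cf_limit: float) -> Tuple[str, List[str]]:
    """Return (outcome, candidate varieties) under an assumed thresholding rule."""
    # Rank varieties from the highest to the lowest certainty factor.
    ranked = sorted(cf_by_class.items(), key=lambda item: item[1], reverse=True)
    above = [name for name, cf in ranked if cf > cf_limit]
    if not above:
        # No CF exceeds the limit: propose the best guess, but ask for confirmation.
        return "uncertain", [ranked[0][0]]
    if len(above) > 1:
        # Several varieties exceed the limit: ask the user to choose among them.
        return "ambiguous", above
    return "unambiguous", above


# Illustrative CF values for three hypothetical varieties.
print(interpret_cf({"variety_a": 0.92, "variety_b": 0.05, "variety_c": 0.03}, 0.5))
print(interpret_cf({"variety_a": 0.70, "variety_b": 0.60, "variety_c": 0.10}, 0.5))
print(interpret_cf({"variety_a": 0.40, "variety_b": 0.30, "variety_c": 0.30}, 0.5))
```

Under this assumed rule, only the unambiguous outcome is accepted without user interaction; the other two outcomes correspond to the questions addressed to the user in the text.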

4. Conclusions

This study proposed a double-track method for fruit variety classification in a retail sales system. The method used two nine-layer CNNs with the same architecture and different weight matrices. The first network classified fruits based on images of fruits with a background, and the second one based on images with the ROI (a single fruit). The results were aggregated with the proposed values of weights (importance). Consequently, the method returned the predicted class/classes (fruit variety/varieties) together with their Certainty Factor (CF). The presented method combined the detection and classification methods and determined the certainty factor associated with the prediction results from the original images and cropped ROIs, which was the main contribution of this paper. The CFs had the advantage of indicating the correctness of the classification result, making them a more reliable measure than the probabilities on the CNN outputs. This suggests that the proposed vision-based method can be used in the uncertain conditions and unplanned situations commonly encountered in sales systems (such as the accidental mixture of fresh products, placement of another object in the frame, unusual packaging of fruit, different lighting conditions, etc.). The test using 926 images of six apple varieties indicated that the classification accuracy for this method (based on the maximal value of CF) was excellent (99.78%). In addition, the method was 100% successful at recognizing unambiguous, ambiguous, and uncertain classifications.

It is important to recognize that the proposed method also has limitations. First, the method performs the classification process several times (for the whole image and for each detected object), which could lengthen the time needed to obtain the result. However, the uncomplicated structure of the CNN and the suitability of the YOLO V3 method for real-time processing [] imply that the method can still be used in online sales systems. Second, the use of two different types of training images complicates the learning process of the system. Therefore, the learning process, together with the determination of CFlimit values in the proposed method, is recommended for further research.

In addition, a future direction is to test the method using a larger dataset containing a greater number of fruit and vegetable varieties of different species. It is also preferable to build a fruit and vegetable dataset with more demanding images, which will ultimately be the true test of the system. An interesting research direction will be testing the system with a dataset containing images of fruits and vegetables wrapped in a transparent plastic bag, a situation that may increase the uncertainty of the obtained result.

Author Contributions

Conceptualization, R.K. and M.P.; formal analysis, R.K.; investigation, R.K. and M.P.; methodology, R.K.; software, R.K. and M.P.; supervision, R.K.; validation, R.K. and M.P.; visualization, R.K.; writing, original draft, R.K. and M.P.; writing, review and editing, R.K. and M.P.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hussain, I.; He, Q.; Chen, Z. Automatic Fruit Recognition Based on DCNN for Commercial Source Trace System. Int. J. Comput. Sci. Appl. 2018, 8.

- Hameed, K.; Chai, D.; Rassau, A. A comprehensive review of fruit and vegetable classification techniques. Image Vis. Comput. 2018, 80, 24–44.

- Srivalli, D.S.; Geetha, A. Fruits, Vegetable and Plants Category Recognition Systems Using Convolutional Neural Networks: A Review. Int. J. Sci. Res. Comput. Sci. Eng. Inf. Technol. 2019, 5, 452–462.

- Bolle, R.M.; Connell, J.H.; Haas, N.; Mohan, R.; Taubin, G. Veggievision: A produce recognition system. In Proceedings of the Third IEEE Workshop on Applications of Computer Vision, WACV’96, Sarasota, FL, USA, 2–4 December 1996; pp. 244–251.

- Rachmawati, E.; Supriana, I.; Khodra, M.L. Toward a new approach in fruit recognition using hybrid RGBD features and fruit hierarchy property. In Proceedings of the 2017 4th International Conference on Electrical Engineering, Computer Science and Informatics (EECSI), Yogyakarta, Indonesia, 19–21 September 2017; pp. 1–6.

- Zhou, H.; Chen, X.; Wang, X.; Wang, L. Design of fruits and vegetables online inspection system based on vision. J. Phys. Conf. Ser. 2018, 1074, 012160.

- Garcia, F.; Cervantes, J.; Lopez, A.; Alvarado, M. Fruit Classification by Extracting Color Chromaticity, Shape and Texture Features: Towards an Application for Supermarkets. IEEE Lat. Am. Trans. 2016, 14, 3434–3443.

- Yang, M.-M.; Kichida, R. A study on classification of fruit type and fruit disease, Advances in Engineering Research (AER). In Proceedings of the 13rd Annual International Conference on Electronics, Electrical Engineering and Information Science (EEEIS 2017), Guangzhou, Guangdong, China, 8–10 September 2017; pp. 496–500.

- Zhang, Y.-D.; Wang, S.; Ji, G.; Phillips, P. Fruit classification using computer vision and feedforward neural network. J. Food Eng. 2014, 143, 167–177.

- Wang, S.; Zhang, Y.; Ji, G.; Yang, J.; Wu, J.; Wei, L. Fruit Classification by Wavelet-Entropy and Feedforward Neural Network Trained by Fitness-Scaled Chaotic ABC and Biogeography-Based Optimization. Entropy 2015, 17, 5711–5728.

- Zhang, Y.; Phillips, P.; Wang, S.; Ji, G.; Yang, J.; Wu, J. Fruit classification by biogeography-based optimization and feedforward neural network. Expert Syst. 2016, 33, 239–253.

- Sakai, Y.; Oda, T.; Ikeda, M.; Barolli, L. A Vegetable Category Recognition System Using Deep Neural Network. In Proceedings of the 2016 10th International Conference on Innovative Mobile and Internet Services in Ubiquitous Computing (IMIS), Fukuoka, Japan, 6–8 July 2016; pp. 189–192.

- Femling, F.; Olsson, A.; Alonso-Fernandez, F. Fruit and Vegetable Identification Using Machine Learning for Retail Applications. In Proceedings of the 14th International Conference on Signal Image Technology and Internet Based Systems, SITIS 2018, Gran Canaria, Spain, 26–29 November 2018; pp. 9–15.

- Hamid, N.N.A.A.; Rabiatul, A.R.; Zaidah, I. Comparing bags of features, conventional convolutional neural network and AlexNet for fruit recognition. Indones. J. Electr. Eng. Comput. Sci. 2019, 14, 333–339.

- Hossain, M.S.; Al-Hammadi, M.; Muhammad, G. Automatic Fruit Classification Using Deep Learning for Industrial Applications. IEEE Trans. Ind. Inform. 2019, 15, 1027–1034.

- Muresan, H.; Oltean, M. Fruit recognition from images using deep learning. Acta Univ. Sapientiae 2018, 10, 26–42.

- Zhang, Y.-D.; Dong, Z.; Chen, X.; Jia, W.; Du, S.; Muhammad, K.; Wang, S.-H. Image based fruit category classification by 13-layer deep convolutional neural network and data augmentation. Multimed. Tools Appl. 2017, 78, 3613–3632.

- Redmon, J.; Farhadi, A. Yolo V3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Tian, Y.; Yang, G.; Wang, Z.; Wang, H.; Li, E.; Liang, Z. Apple detection during different growth stages in orchards using the improved YOLO-V3 model. Comput. Electron. Agric. 2019, 157, 417–426.

- Tian, Y.; Yang, G.; Wang, Z.; Wang, H.; Li, E.; Liang, Z. Detection of Apple Lesions in Orchards Based on Deep Learning Methods of CycleGAN and YOLOV3-Dense. J. Sens. 2019, 2019.

- Ren, S.; He, K.; Girshick, R.B.; Sun, J. Faster RCNN: Towards Real-Time Object Detection with Region Proposal Networks. arXiv 2016, arXiv:1506.01497v3.

- Khan, R.; Debnath, R. Multi Class Fruit Classification Using Efficient Object Detection and Recognition Techniques. Int. J. Image Graph. Signal Process. 2019, 8, 1–18.

- Hussain, I.; Wu, W.L.; Hua, H.Q.; Hussain, N. Intra-Class Recognition of Fruits Using DCNN for Commercial Trace Back-System. In Proceedings of the International Conference on Multimedia Systems and Signal Processing (ICMSSP May 2019), Guangzhou, China, 10–12 May 2019.

- Jarrett, K.; Kavukcuoglu, K.; Ranzato, M.; LeCun, Y. What is the best multi-stage architecture for object recognition? In Proceedings of the International Conference on Computer Vision (ICCV’09), Kyoto, Japan, 29 September–2 October 2009.

- Qian, R.; Yue, Y.; Coenen, F.; Zhang, B. Traffic sign recognition with convolutional neural network based on max pooling positions. In Proceedings of the 2th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery (ICNC-FSKD), Changsha, China, 13–15 August 2016; pp. 578–582.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

- Hussain, I.; He, Q.; Chen, Z.; Xie, W. Fruit Recognition dataset. Zenodo 2018.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object detection via region based fully convolutional networks. arXiv 2016, arXiv:1605.06409.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted Residuals and Linear Bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).