1. Introduction

With the rapid progress of science and technology, living standards are steadily improving and the service robot market is booming, as shown by the spread of sweeping robots and restaurant service robots. However, there are few service robots for sports, and tennis service robots in particular are still at a rudimentary stage of development. Tennis is a worldwide sport with many fans, but the balls scattered on the court are difficult to deal with, both during training and during matches; picking them up is time-consuming and laborious. Based on these factors, we researched and designed an intelligent tennis robot to meet the needs of tennis players. See details in [1].

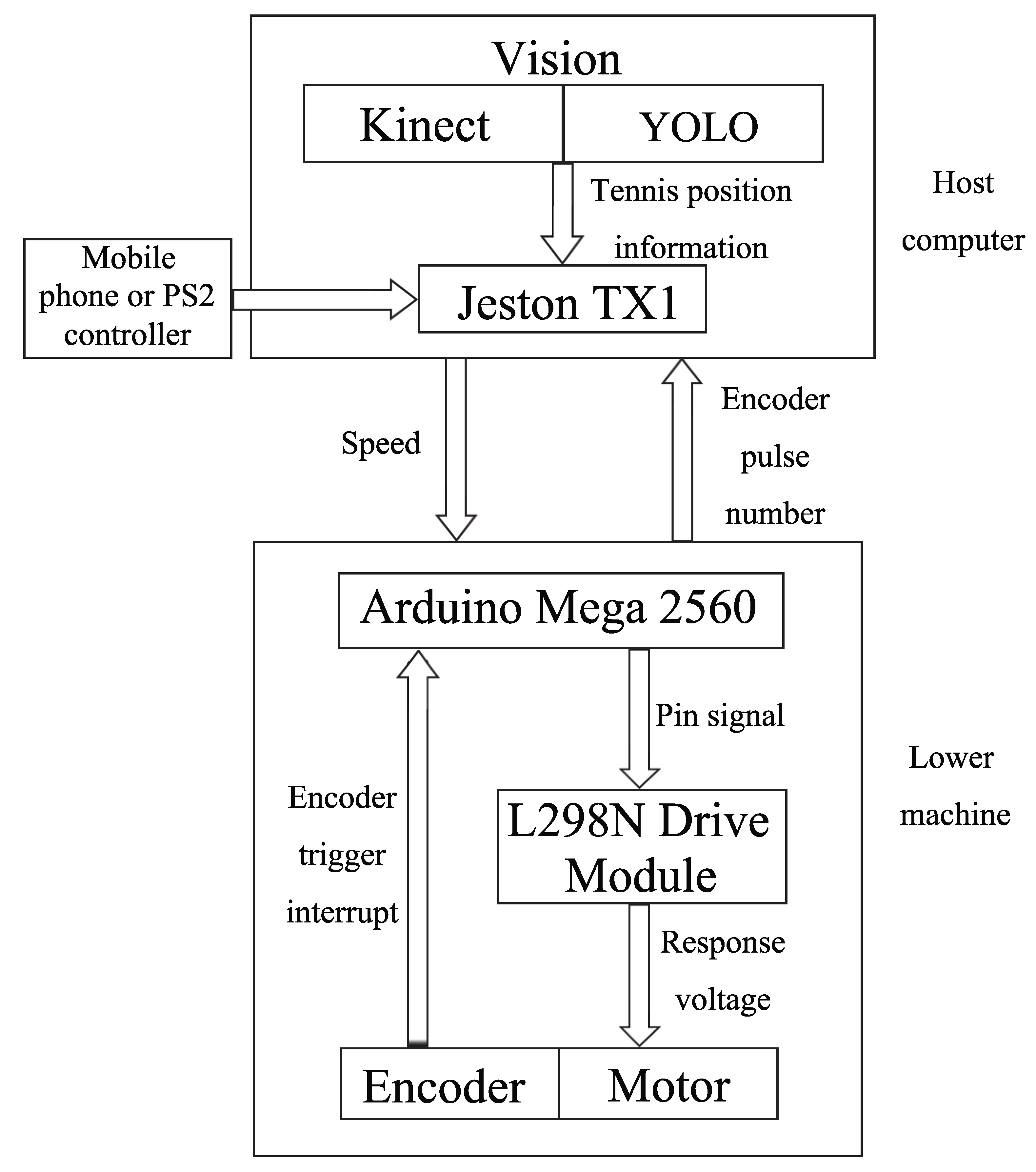

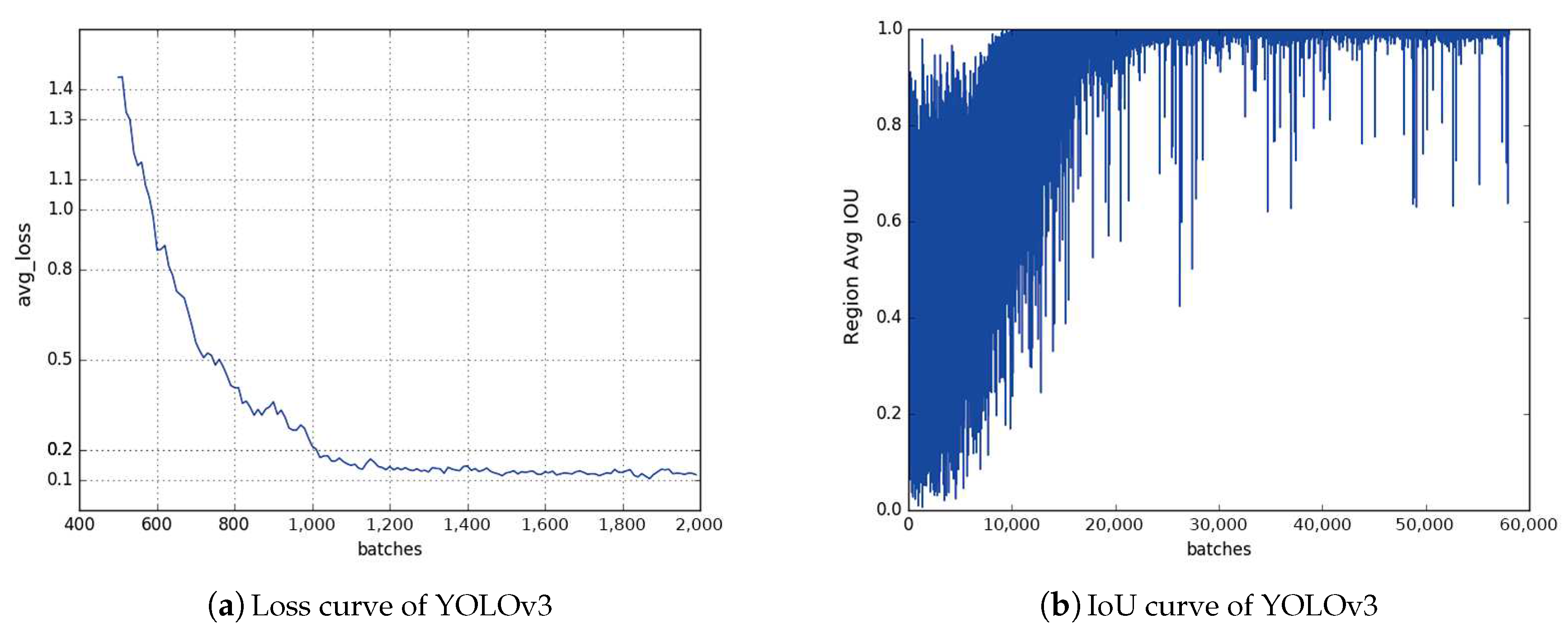

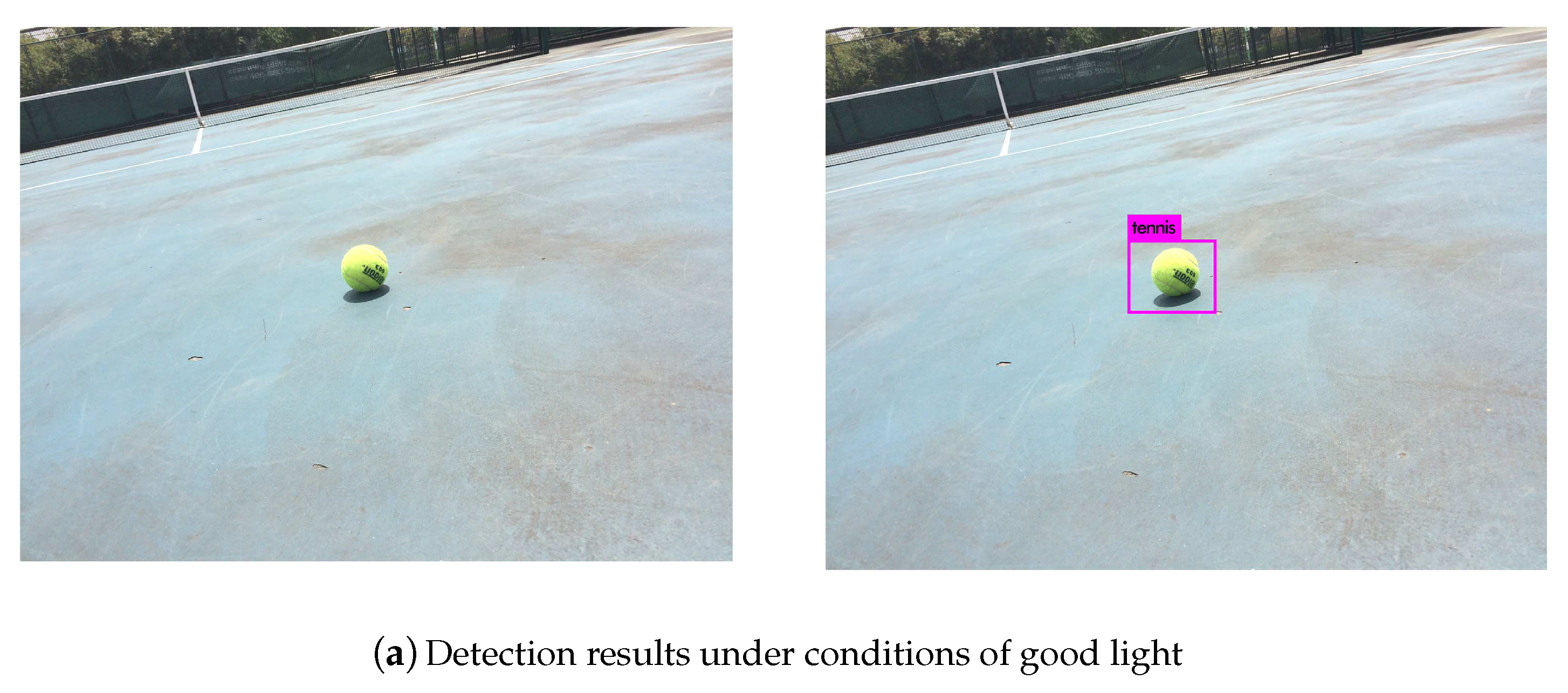

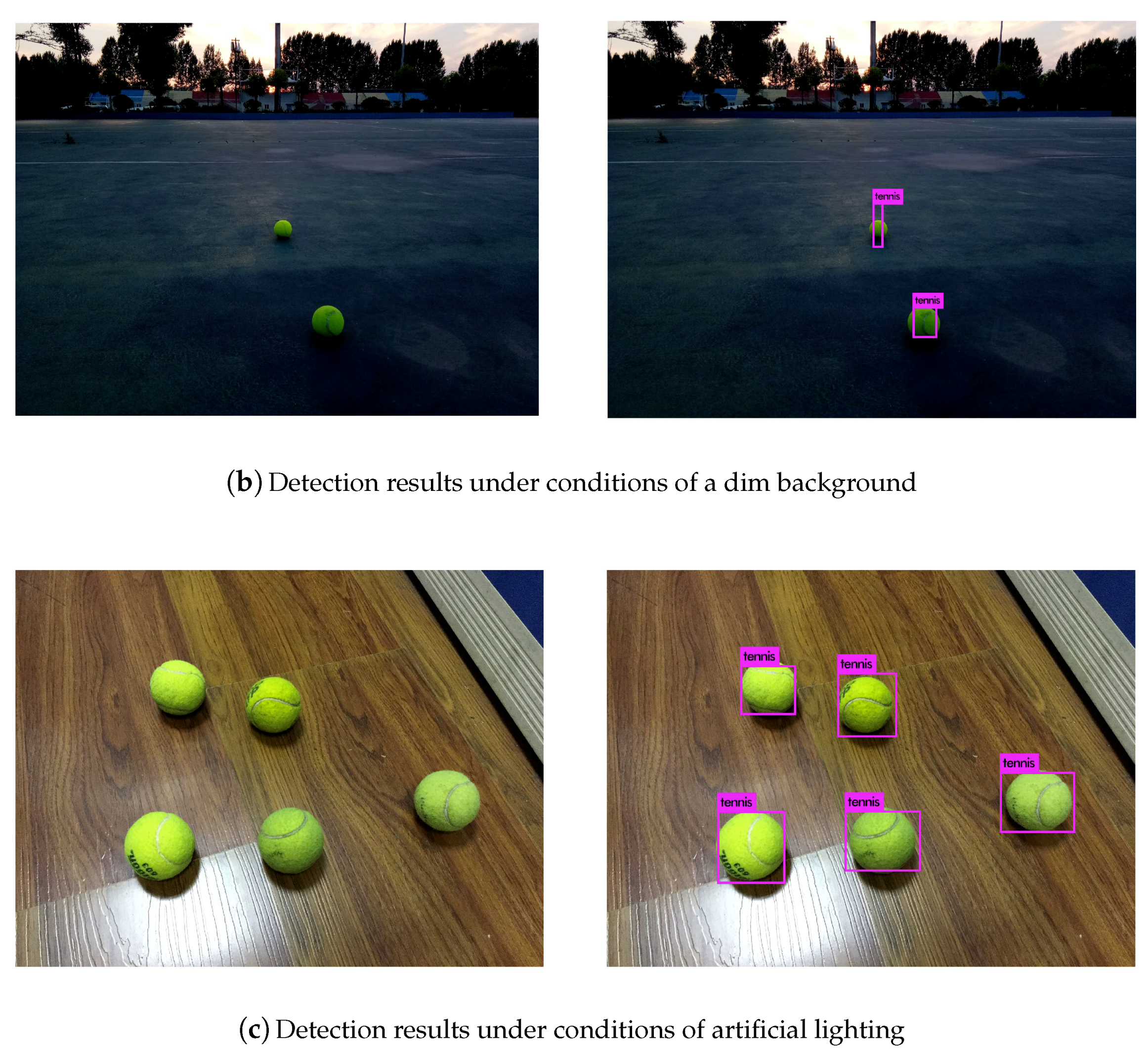

We applied the You Only Look Once (YOLOv3) detection algorithm to design a tennis robot. The structural block diagram of our tennis robot is shown in Figure 1. The tennis robot can be divided into two parts: motion control and machine vision. In the motion control part, we used an Arduino Mega 2560 as the control board of the slave computer; it drives the DC motors through an L298N driver and measures the mileage of the two wheels with encoders, which forms a closed-loop control system. The main function of the slave computer is to receive speed commands from the host computer, move accordingly, and feed the result back to the host. The host computer has two tasks: proportional-integral-derivative (PID) control of the slave computer’s motion and processing of the visual information obtained by the Kinect. The robot identifies objects (here, tennis balls) with YOLO and then calculates the position of each target. We used a laptop or a Jetson TX1 as the host computer. Communication between the host computer and the slave computer is established through the robot operating system (ROS).

Deep neural networks detect objects very well and are widely used in the field of target detection. However, many problems arise when deploying a neural network on embedded devices, such as the large number of layers and the huge number of model parameters. Moreover, obtaining good recognition results usually requires long training on large amounts of data. YOLOv3 extracts features with the Darknet-53 network: the volume of convolution calculations is large, the network is deep and complex, and the number of weights is very large. These properties make it ill-suited to mobile robots. For our usage scenario, we want a network model that is lighter and more agile and that can be deployed on mobile devices and embedded development boards. In addition, a deep neural network usually needs many iterations over a large data set to recognize objects well, whereas our research only needs to recognize tennis balls. Since the images of tennis balls downloaded from the Internet are usually unsatisfactory, we have to take pictures manually when preparing the data sets, which is time-consuming and labor-intensive. Therefore, we want to train the network model with only a small number of iterations on small data sets while still meeting the basic identification requirements.

In our application scenarios, there are usually multiple tennis balls in the picture, and these balls are very likely to overlap. In addition, without a GPU, YOLOv3 takes about 12 s to process one picture, which is too slow for a robot. We therefore wanted a detection algorithm that is both accurate and fast.

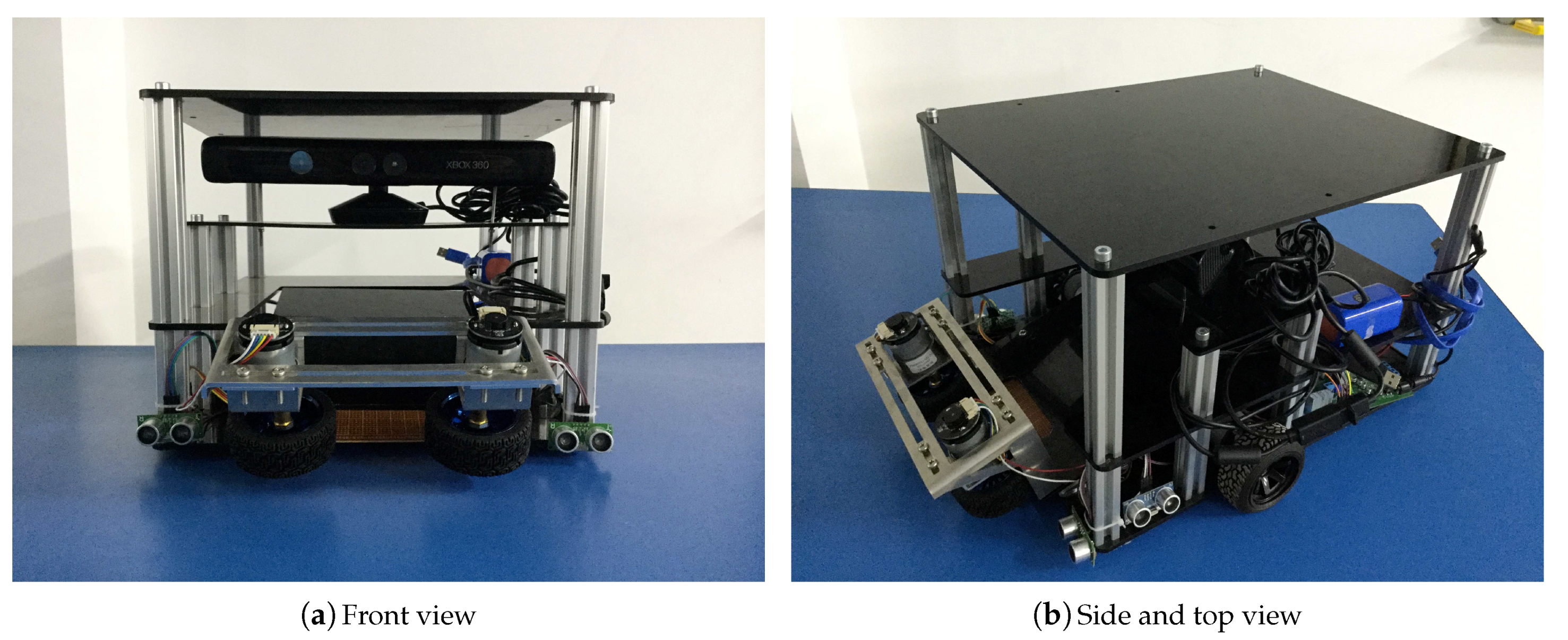

Based on the above factors, we made the following improvements to the YOLOv3 model. Our intelligent tennis robot is shown in Figure 2.

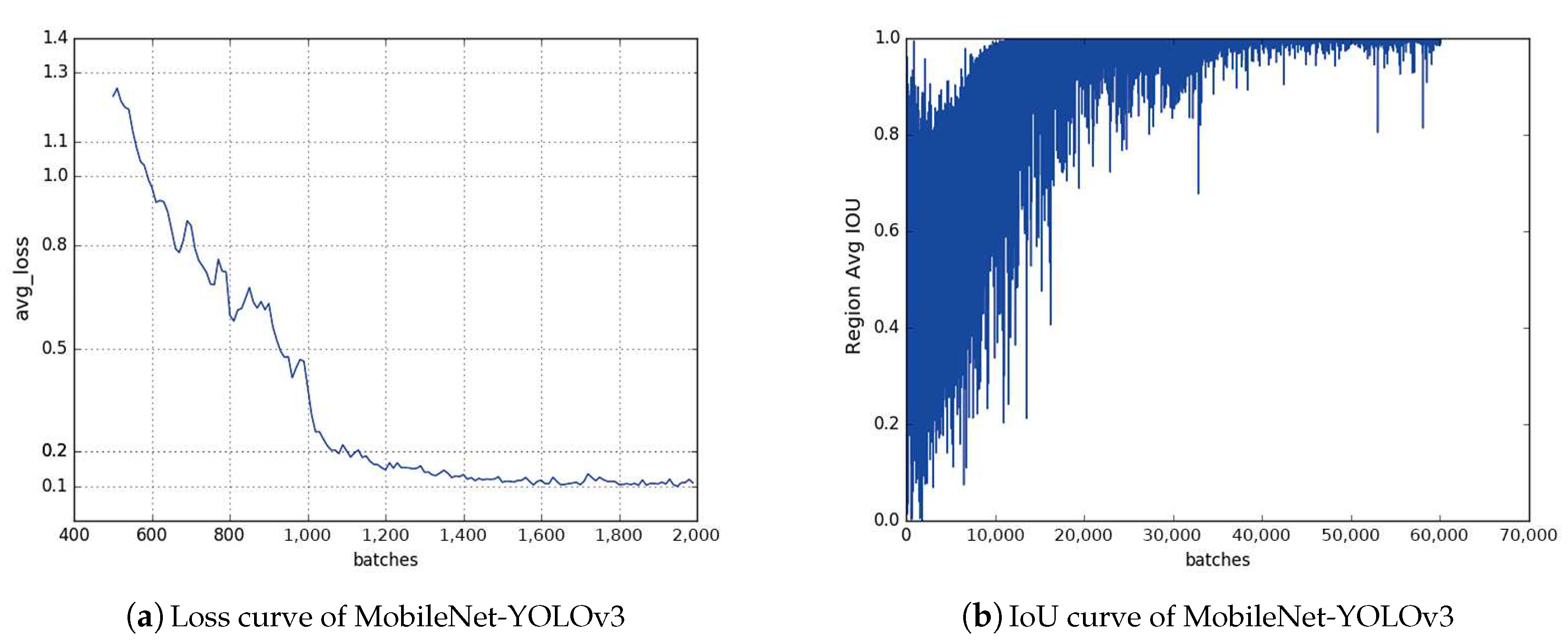

We replaced the original Darknet-53 with the lightweight network MobileNetv1. We optimized the speed by reducing the number of convolution calculations, model depth, and weights.

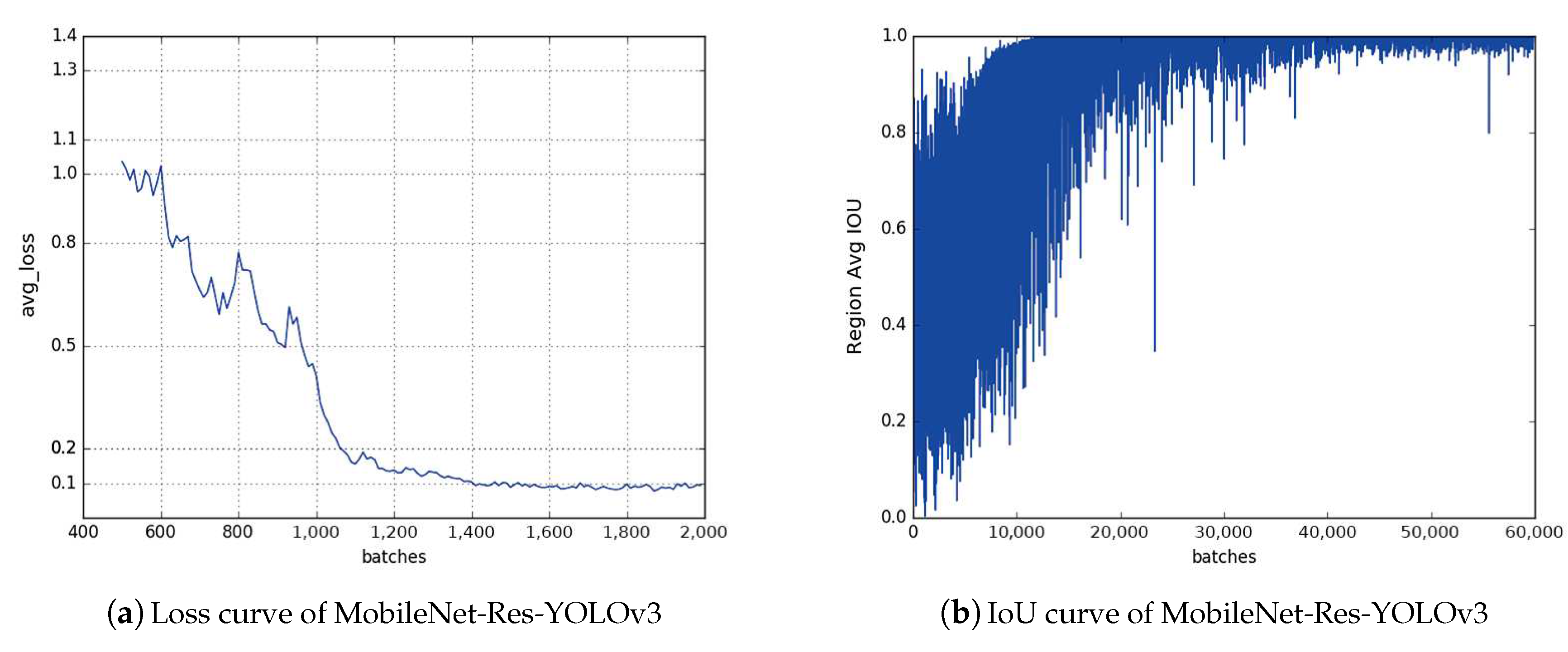

We designed the residual block which can be added to MobileNetv1. For the detection effect, we referred to the idea of the residual block in the DenseNet network and designed a residual block without increasing the network’s burden and depth. We added it to the network structure of MobileNetv1 and then got a good detection effect.

Considering that tennis balls may be scattered everywhere on the tennis court, if our robot can first find the most concentrated place of tennis balls and then identify and pick up tennis balls there, it will save a lot of time and improve efficiency. We drew on the idea of the maximum clique problem (MCP) to solve this problem.

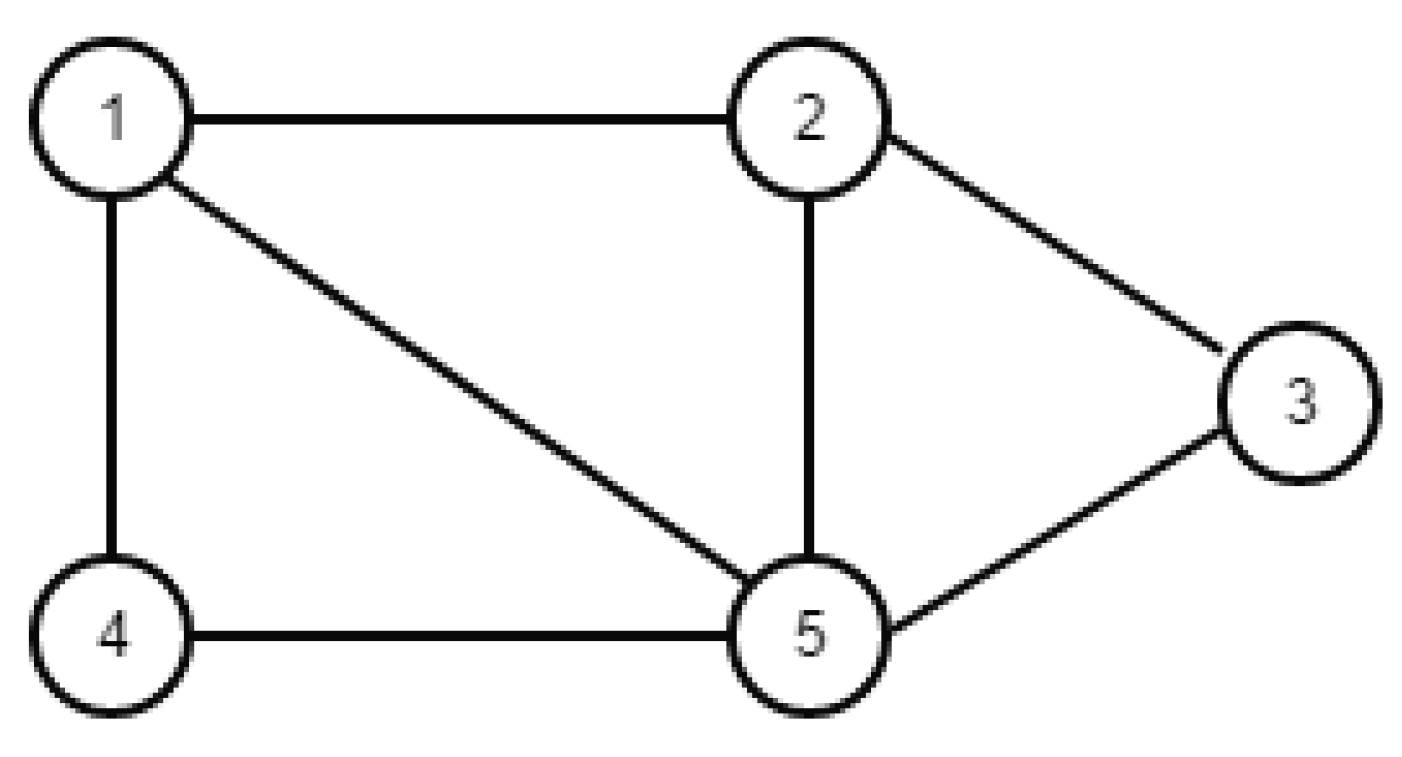

The MCP is a classical combinatorial optimization problem in graph theory; it is equivalent to the maximum independent set problem on the complement graph. It is an important problem in practice and is widely used in market analysis, scheme selection, signal transmission, computer vision, fault diagnosis, and other fields. The problem can be formally defined as follows. Let $G = (V, E)$ be an undirected graph, where $V$ is a non-empty set called the vertex set and $E$ is a set of unordered pairs of elements of $V$, called the edge set. In an undirected graph, an unordered pair of vertices is commonly written with parentheses “()”. If $U \subseteq V$ and, for any two vertices $u$ and $v$ belonging to $U$, we have $(u, v) \in E$, then $U$ is a complete subgraph of $G$. A complete subgraph $U$ of $G$ is called a clique of $G$, and the maximum clique of $G$ is its largest complete subgraph.

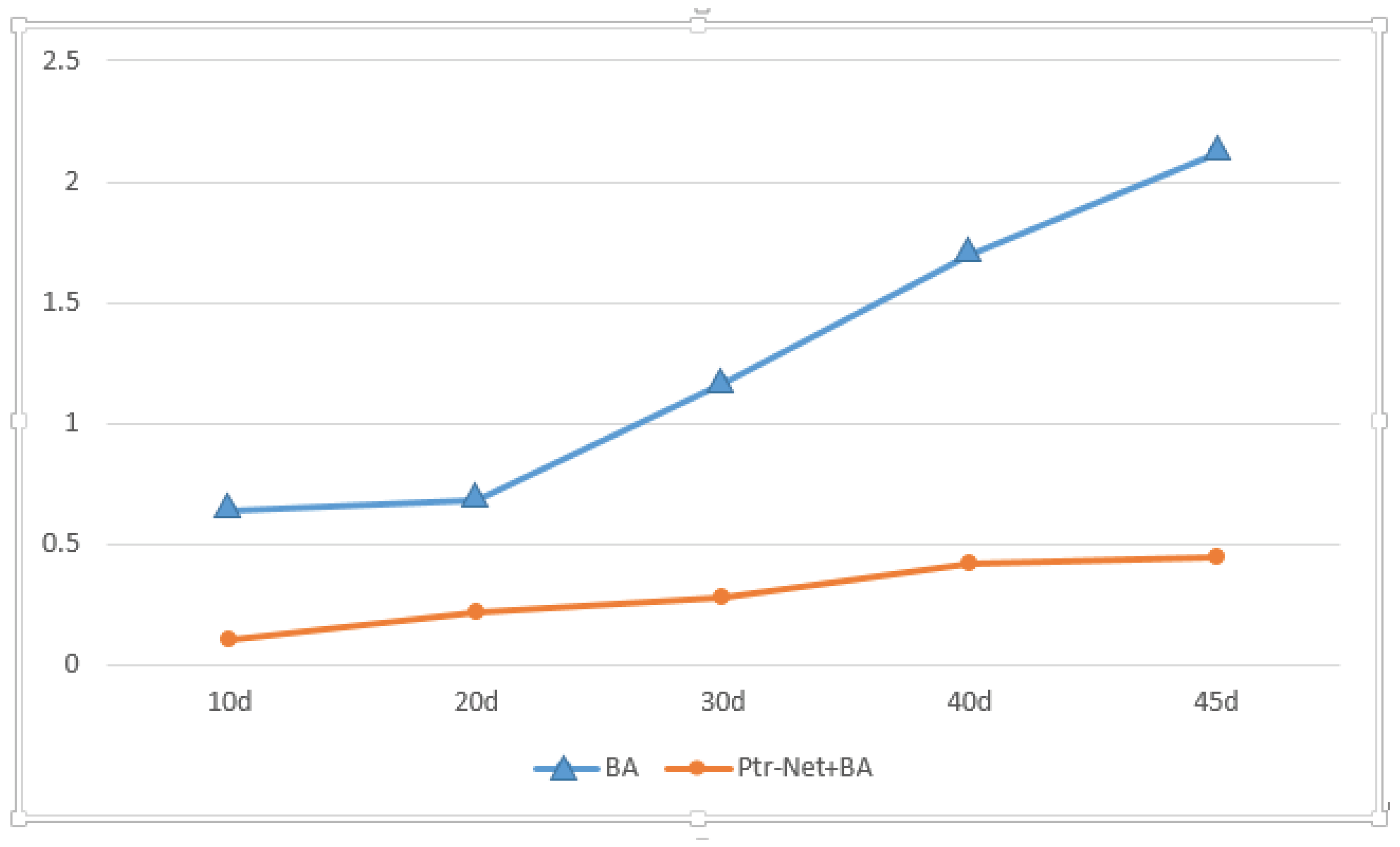

In this paper, we regard each tennis ball scattered on the ground as a point, obtain the position coordinates of the balls with the depth camera, and calculate the distances between them. If the distance between two balls is less than the average of all pairwise distances, the two balls are considered connected, so the problem of picking up tennis balls efficiently can be modeled as the maximum clique problem. Within the TensorFlow framework, we combine the pointer network (Ptr-Net) with the backtracking algorithm (BA) to solve the MCP. After training the pointer network, we use the backtracking algorithm to post-process the network’s predictions, which not only reduces the dimensions of the original problem but also greatly improves the accuracy of the output. In this way, we can find the gathering point of the tennis balls and pre-process the motion path for the tennis robot.
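The graph construction described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function name `build_ball_graph`, the use of NumPy, and the sample coordinates are assumptions, and at least two balls are assumed to be present.

```python
import numpy as np

def build_ball_graph(positions):
    """Return (adjacency matrix, threshold) for ball positions of shape (n, 3).

    Two balls are connected when their distance is below the mean of all
    pairwise distances (hypothetical helper; assumes n >= 2).
    """
    pts = np.asarray(positions, dtype=float)
    n = len(pts)
    # Pairwise Euclidean distances between all balls.
    diff = pts[:, None, :] - pts[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    # Average over distinct pairs only (upper triangle without the diagonal).
    upper = np.triu_indices(n, k=1)
    threshold = dist[upper].mean()
    adj = (dist < threshold).astype(int)
    np.fill_diagonal(adj, 0)  # no self-loops
    return adj, threshold

# Illustrative coordinates in metres: three balls close together, one far away.
balls = [(0.0, 0.0, 1.0), (0.1, 0.0, 1.0), (0.0, 0.1, 1.0), (3.0, 3.0, 4.0)]
adjacency, mean_distance = build_ball_graph(balls)
print(adjacency)
```

The resulting adjacency matrix is the input on which a maximum clique is sought; the three nearby balls form a clique, while the distant ball is isolated.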

The chapters of this paper are arranged as follows. Section 1 introduces the background and significance of this study and briefly introduces the function and principles of our tennis robot. Section 2 briefly introduces YOLO, MobileNet, and ResNet and explains how to combine them to realize tennis ball detection. Section 3 introduces the solution of the MCP based on Ptr-Net and BA and explains how to apply it to the path preprocessing of the tennis robot. Section 4 introduces how the data sets were made and compares the training and testing results of different models. It also introduces the training process and results of solving the MCP with Ptr-Net and BA. Finally, Section 5 summarizes the work of this paper.

2. Tennis Ball Detection with Improved YOLOv3 Algorithm

With the development of computer vision, a large number of excellent research results have been achieved in the field of object recognition. These results not only have certain theoretical significance, but also have extraordinary practical value, which can provide us with great convenience. In particular, various detection algorithms based on deep learning have replaced the traditional object recognition methods.

Conventional object recognition methods include the histogram of oriented gradients (HOG) [2] for detecting pedestrians in video, various animal and vehicle detection methods for still images, and face recognition using Haar features [3]. These traditional methods have low recognition rates, usually take more time to complete the entire recognition process, and generalize poorly. New requirements, such as more diverse recognition objects, more varied viewing angles, and more complex backgrounds, have brought further challenges to object recognition research. Deep learning studies how to automatically extract multi-layer feature representations from data; it uses a series of nonlinear transformations to extract features from raw data in a data-driven manner, avoiding the drawbacks of manual feature extraction. The convolutional neural network (CNN) [4] has been widely and successfully applied to image classification tasks, making it the gold standard for image classification.

Methods based on the convolutional neural network can be divided into two categories: one is based on region proposals and the other is based on regression. The region proposal-based approach is represented by the R-CNN series: R-CNN [5], SPP-Net [6], fast R-CNN [7], and faster R-CNN [8]. Although various improvements have been made, this approach still adopts the step-by-step detection strategy of first extracting candidate boxes and then classifying them. Faster R-CNN only reaches seven frames per second (f/s), which is far from real-time requirements. YOLO is a regression-based detection algorithm [9]. It was the first to use regression to directly predict the bounding box coordinates and class of an object from an image, and its detection speed reaches 45 f/s. However, because the candidate boxes have a single scale and aspect ratio, the gain in speed comes at the cost of accuracy. Redmon J successively proposed the YOLOv2 [10] and YOLOv3 [11] detection algorithms, of which YOLOv3 detects better. It uses a deep residual network to extract image features and performs multi-scale prediction. On the COCO data set, its mAP reaches 57.9%. In the target detection field, YOLOv3 maintains this accuracy with a detection time of 51 ms, achieving better detection results.

2.1. Brief Introduction to YOLO

The main idea of YOLO is to use the whole picture as the input of the network and to directly regress, in the output layer, the position of each bounding box and the category to which it belongs. YOLOv3 is the latest version of YOLO and has many important improvements. For basic image feature extraction, YOLOv3 adopts a network structure called Darknet-53 (containing 53 convolutional layers) instead of Darknet-19. It draws on the practices of the residual network and sets up shortcut connections between some layers. In addition, the final activation function is changed from softmax to sigmoid, and the number of anchor boxes is changed from five to three per detection scale.

YOLO divides the image into grid cells. Each grid cell predicts $B$ bounding boxes, and each bounding box predicts a confidence value in addition to its position. This confidence reflects both how likely it is that the predicted box contains an object and how accurately the box is located. The value is calculated as follows:

$$\mathrm{Confidence} = \Pr(\mathrm{Object}) \times \mathrm{IoU}_{\mathrm{pred}}^{\mathrm{truth}} \tag{1}$$

If an object falls in a grid cell, the first term is taken as 1; otherwise, it is given a value of 0. The second term is the IoU between the predicted bounding box and the ground truth.

In the test, the class probability predicted by each grid cell is multiplied by the confidence predicted by each bounding box to obtain the class-specific confidence score for each bounding box:

$$\Pr(\mathrm{Class}_i \mid \mathrm{Object}) \times \Pr(\mathrm{Object}) \times \mathrm{IoU}_{\mathrm{pred}}^{\mathrm{truth}} = \Pr(\mathrm{Class}_i) \times \mathrm{IoU}_{\mathrm{pred}}^{\mathrm{truth}} \tag{2}$$

As shown in Equation (2), the product of the class probability predicted by each grid cell and the confidence of each bounding box is the probability that the box belongs to a certain category. After the class-specific confidence score of each box is obtained, a threshold is applied: boxes with low scores are dropped, and non-maximum suppression is performed on the remaining boxes to get the final detection results.
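The scoring, thresholding, and non-maximum suppression steps described above can be sketched for the single-class case (tennis balls only). This is an illustrative sketch, not the Darknet implementation: the function names, the box format `(x1, y1, x2, y2)`, and the threshold values are assumptions, and boxes are assumed to have positive area.

```python
import numpy as np

def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    x1, y1 = max(a[0], b[0]), max(a[1], b[1])
    x2, y2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, x2 - x1) * max(0.0, y2 - y1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)

def filter_boxes(boxes, box_conf, class_prob, score_thr=0.5, iou_thr=0.45):
    """Return (kept indices, scores) after score thresholding and greedy NMS."""
    # Class-specific confidence score: class probability times box confidence.
    scores = np.asarray(box_conf, dtype=float) * np.asarray(class_prob, dtype=float)
    # Visit boxes from highest to lowest score, dropping low-scoring ones.
    order = [int(i) for i in np.argsort(-scores) if scores[i] >= score_thr]
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with a kept box.
        if all(iou(boxes[i], boxes[j]) < iou_thr for j in keep):
            keep.append(i)
    return keep, scores

# Hypothetical detections: two overlapping boxes, one separate, one weak.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30), (40, 40, 50, 50)]
kept, scores = filter_boxes(boxes, [0.9, 0.8, 0.7, 0.3], [0.9, 0.9, 0.9, 0.9])
print(kept)
```

With these inputs, the weak box falls below the score threshold and the second box is suppressed by the first, with which it overlaps heavily.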

YOLOv3 applies a residual skip connection to solve the vanishing gradient problem of deep networks and makes use of an up-sampling and concatenation method that preserves fine-grained features for small object detection.

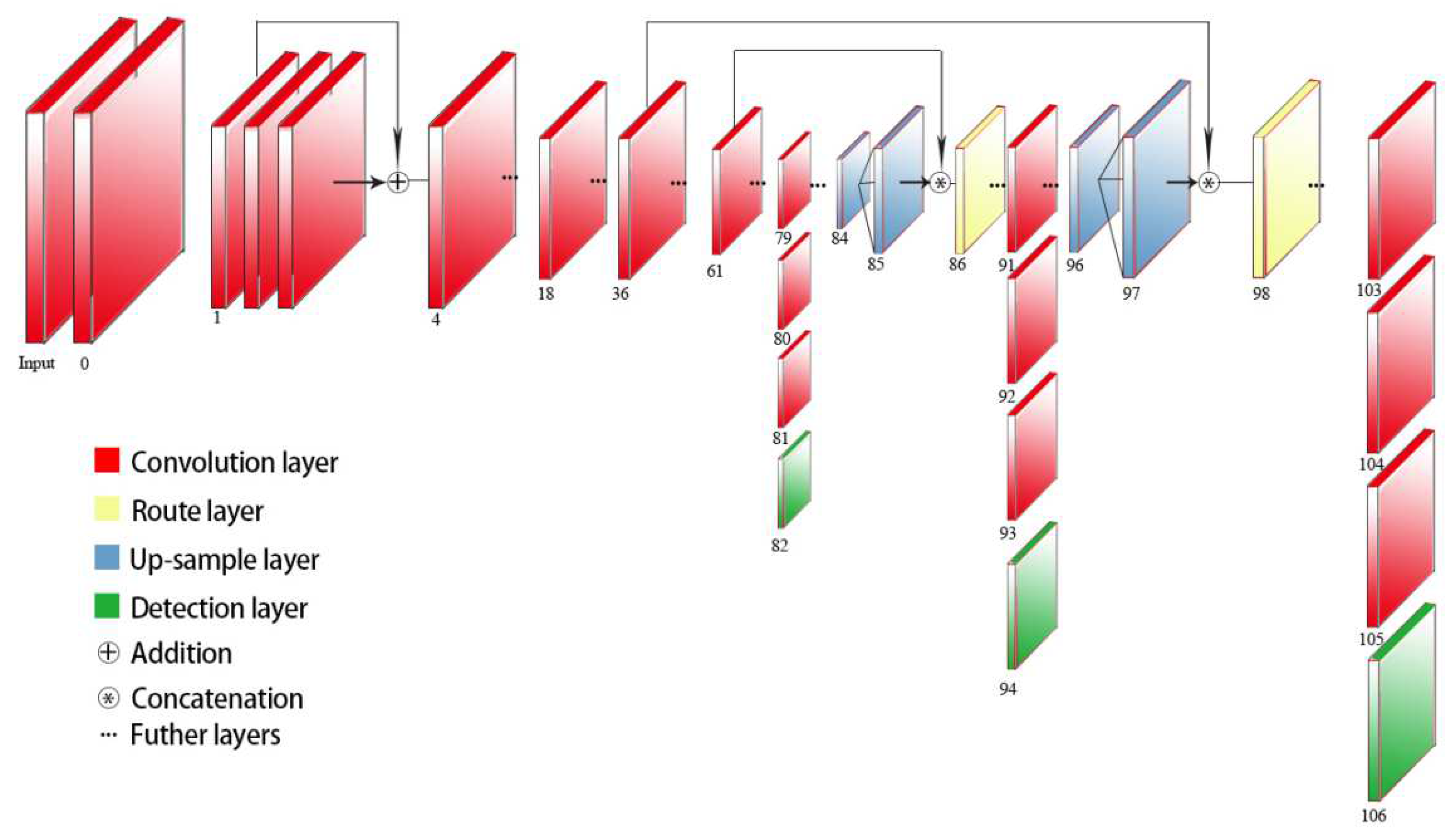

YOLOv2 uses a pass-through layer to capture fine-grained features. In YOLOv3, however, object detection is performed on feature maps at three different scales. We can see from Figure 3 that, after the 79th layer, the detection result for the first scale is obtained through several further convolutional layers. The feature map used here for detection is down-sampled 32 times relative to the input image. For example, if the input is 416 × 416, the feature map here is 13 × 13. Since the down-sampling factor is high, the receptive field of the feature map is relatively large, so it is suitable for detecting relatively large objects in the image. To achieve finer-grained detection, the feature map of the 79th layer is up-sampled and then concatenated with the feature map of the 61st layer, giving the finer-grained feature map of the 91st layer. After several more convolutional layers, this yields a feature map that is down-sampled 16 times relative to the input image. It has a medium-scale receptive field and is suitable for detecting medium-scale objects. Then, the 91st layer feature map is again up-sampled and concatenated with the 36th layer feature map. Finally, a feature map down-sampled eight times relative to the input image is obtained. It has the smallest receptive field and is suitable for detecting small objects. YOLOv3 retains the k-means clustering used in YOLOv2, and nine a priori boxes are clustered to detect objects of different sizes. In addition, the classifier for predicted objects is changed from softmax to logistic, which supports multiple labels for one object [11].
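The relation between the input size, the down-sampling factors, and the three detection grids can be checked with a few lines of arithmetic. The helper names below are illustrative; the count of three prior boxes per scale follows from the nine clustered boxes being shared among the three scales.

```python
def detection_grids(input_size=416, strides=(32, 16, 8)):
    """Side length of the detection feature map at each down-sampling factor."""
    return [input_size // s for s in strides]

def num_predicted_boxes(input_size=416, anchors_per_scale=3):
    """Total number of boxes predicted over the three scales."""
    return sum(g * g * anchors_per_scale for g in detection_grids(input_size))

print(detection_grids())      # [13, 26, 52]
print(num_predicted_boxes())  # 10647
```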

2.2. Configuration of the Improved Network Structure

To address the high computational cost and low efficiency of standard convolution, the standard convolution operations in the YOLOv3 model are replaced with depth-wise separable convolutions. We use MobileNetv1 instead of Darknet-53 as the feature extractor of YOLOv3. The three detection branches attached after it then predict the object probability, confidence, and category for each cell at three resolutions, which remain 13 × 13, 26 × 26, and 52 × 52.

However, if we train a plain network without such shortcuts or jump connections using a standard optimization algorithm, such as gradient descent or another popular optimizer, we find that as the network deepens the training error first decreases and then increases. In theory, a deeper network should train at least as well as a shallower one. In practice, without residual connections, a deeper network is harder for the optimizer to train, and the training error grows with depth. So, while simplifying the network, we still add shortcuts. When the dimensions of the input and output do not match, the residual network can realize the shortcut mapping through the following two alternatives:

Add channels directly by zero padding.

Multiply by a matrix W to project the input into a new space. This is implemented by a 1 × 1 convolution, whose number of filters directly sets the number of output channels.
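The two alternatives can be sketched in NumPy for a feature map of shape (height, width, channels). This is only an illustration: the function names are hypothetical, the spatial size is assumed unchanged (stride one), and the zero-padding variant assumes the output has at least as many channels as the input.

```python
import numpy as np

def shortcut_zero_pad(x, out_channels):
    """Alternative 1: add channels by zero padding (no extra parameters)."""
    h, w, c = x.shape
    padded = np.zeros((h, w, out_channels), dtype=x.dtype)
    padded[..., :c] = x  # original channels are copied unchanged
    return padded

def shortcut_projection(x, weight):
    """Alternative 2: 1 x 1 convolution, a per-pixel linear map over channels.

    weight has shape (c_in, c_out); c_out is the number of 1 x 1 filters.
    """
    return x @ weight

rng = np.random.default_rng(0)
x = rng.normal(size=(13, 13, 256))
w = rng.normal(size=(256, 512))
print(shortcut_zero_pad(x, 512).shape, shortcut_projection(x, w).shape)
```

Zero padding is parameter-free, whereas the projection adds `c_in × c_out` weights, which is one reason to prefer shortcuts between layers whose dimensions already match.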

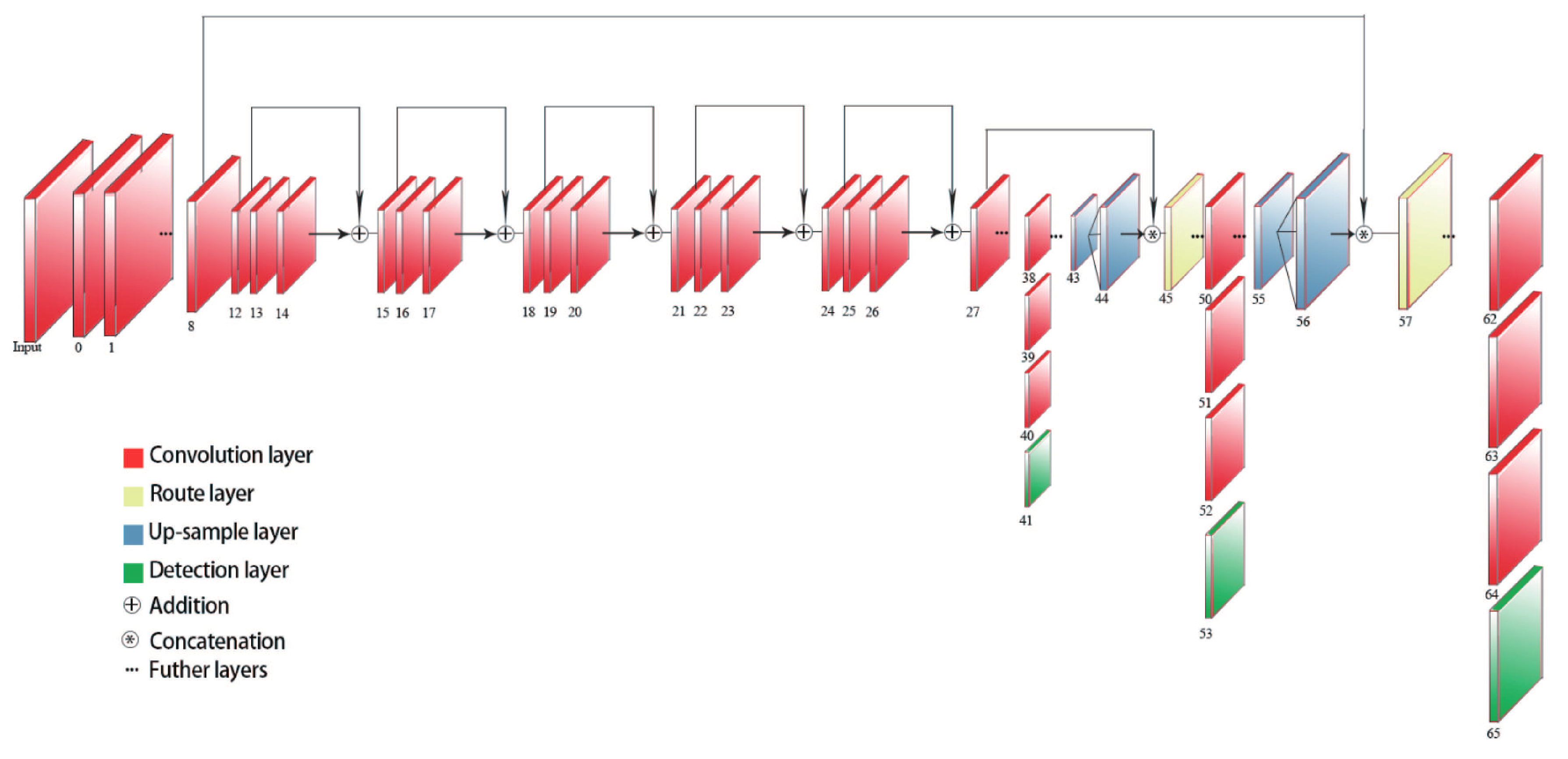

In order not to increase the network burden, we only add shortcuts between layers whose dimensions match. In the network structure of MobileNetv1, convolution layers with stride two and stride one alternate, whereas layers 14–23 all have stride one; we therefore add five jump connections between the 14th layer and the 23rd layer to form a new residual block. The final network structure is shown in Figure 4.

The 8th and 98th layers of the original network are route layers. The reason for this design is that the deep layer network has a good expressive effect. Compared with the deep layer network, the shallow layer network has a better performance. Based on this idea, this paper adjusts the parameters of the original network and retains the route layer.

The main contributions of our improvements are depth-wise separable convolution and residual block. Their principles are given in the following text.

2.2.1. Depth-Wise Separable Convolution

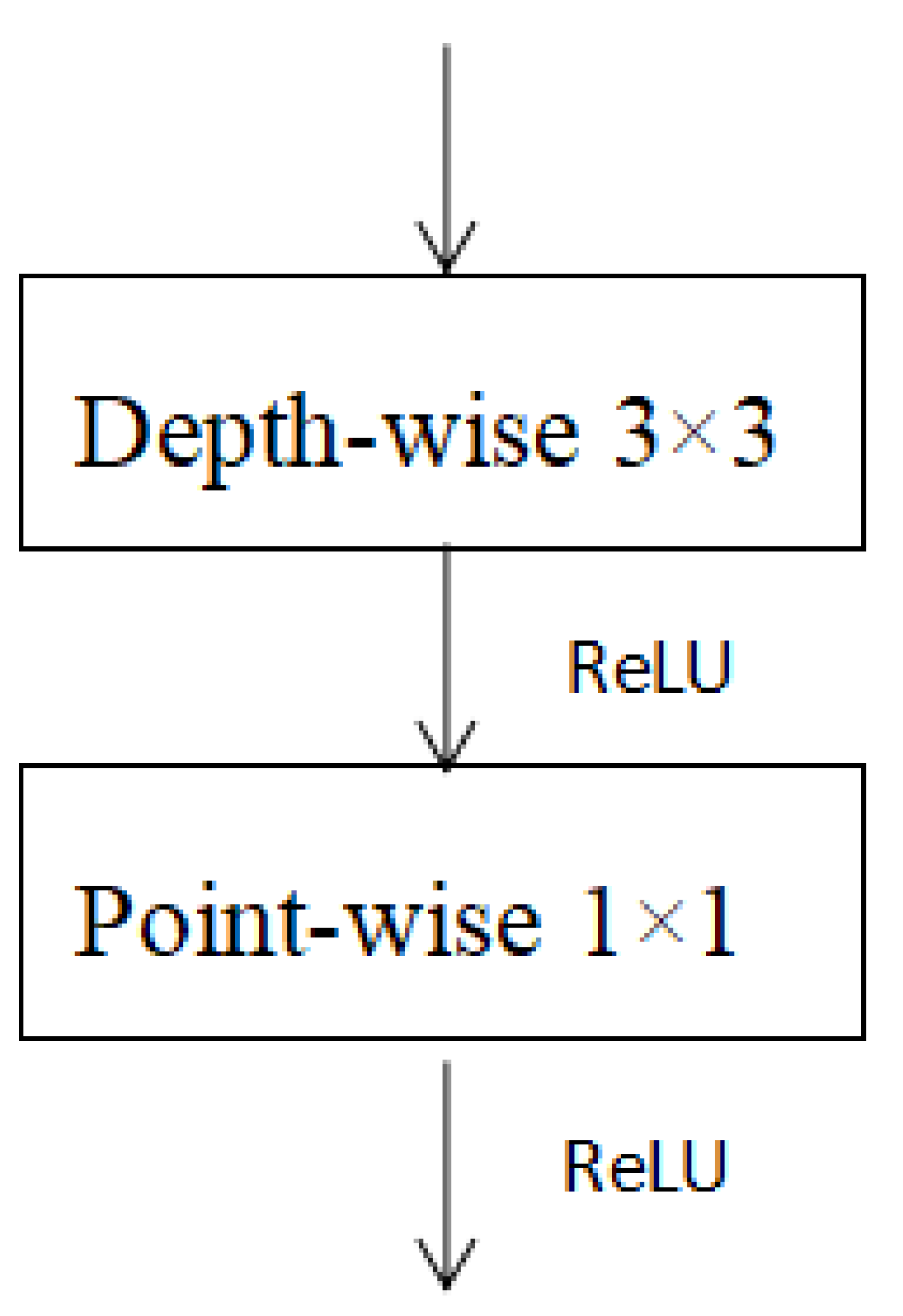

MobileNet is a network designed to reduce model size and speed up inference. Depth-wise (DW) convolution and point-wise (PW) convolution are used to extract features. Together, these two operations are called depth-wise separable convolution, as shown in Figure 5. The benefit is that this can, in theory, reduce the time complexity and space complexity of the convolutional layer. It can be seen from Equation (3) that, since the size $K$ of the convolution kernel is usually much smaller than the number of output channels $N$, the computational complexity of the standard convolution is approximately $K^2$ times that of the combination of DW and PW convolution [12].

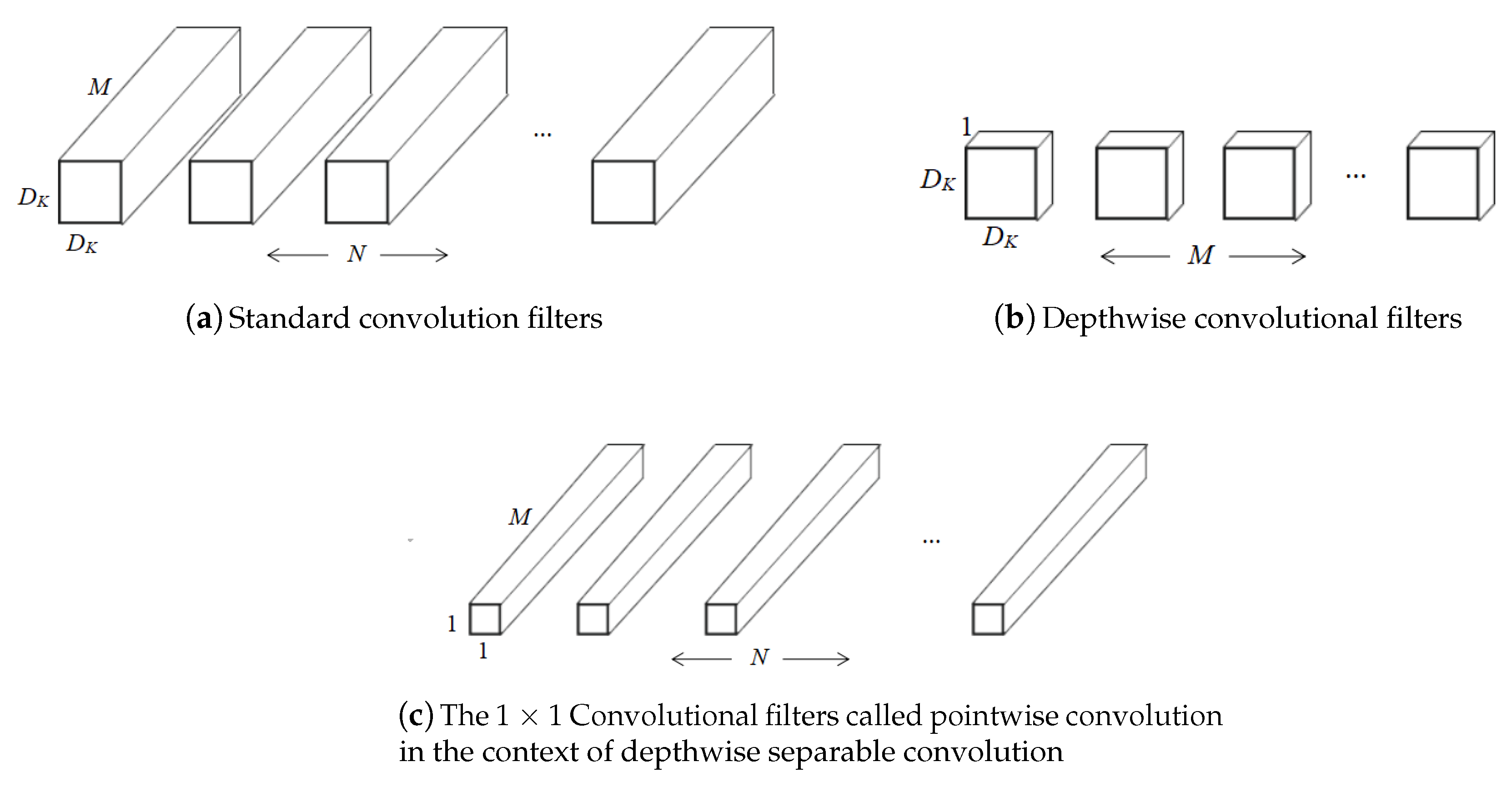

The main idea is to decompose the traditional convolution into a depth-wise convolution followed by a 1 × 1 (point-wise) convolution. In the depth-wise convolution, each channel of the input feature map has its own convolution kernel, so each channel of the output depends only on the corresponding input channel. This operation significantly reduces the size of the model. As shown in Figure 6, for the original convolutional layer, the input feature map has $M$ channels, $D_F \times D_F$ is the size of the output feature map, the number of output channels is $N$, and there are $N$ convolution kernels of size $K \times K$. Each kernel is convolved with every input feature map, and the cost of convolving one feature map depends mainly on the size of the generated feature map, because every output pixel requires one convolution operation. When the output is $D_F \times D_F$, each feature map therefore undergoes $D_F \times D_F$ convolution operations. The amount of computation per operation depends on the size of the kernel: each operation multiplies and sums over the kernel window, so it costs $K \times K$. The calculation required for one convolution kernel is thus $D_F \times D_F \times K \times K \times M$. With $N$ convolution kernels, the total calculation amount is $D_F \times D_F \times K \times K \times M \times N$. For MobileNet’s depth-wise convolution, when the input is a feature map of $M$ channels, each channel is processed by its own $K \times K$ convolution. The output feature map size is $D_F \times D_F$, and the operation amount is $D_F \times D_F \times K \times K \times M$. The number of convolution kernels equals the number of input channels, and there is no superimposition between channels, just a one-to-one correspondence. In the traditional convolution, by contrast, the $M$ feature maps are superimposed on each other, and all input feature maps are convolved by $N$ convolution kernels [13].
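The operation counts above can be compared numerically. The sketch below counts multiplications for a standard convolution and for a depth-wise convolution followed by a 1 × 1 point-wise convolution; the point-wise cost (feature map size × input channels × output channels) and the layer sizes in the example are assumptions chosen for illustration. Under these counts the ratio works out to $1/N + 1/K^2$, which is close to $1/K^2$ when the number of output channels is large.

```python
def standard_conv_cost(d_f, k, m, n):
    """Multiplications for a standard convolution.

    d_f: output feature map side, k: kernel side,
    m: input channels, n: output channels.
    """
    return d_f * d_f * k * k * m * n

def separable_conv_cost(d_f, k, m, n):
    """Multiplications for depth-wise plus point-wise convolution."""
    depthwise = d_f * d_f * k * k * m  # one k x k kernel per input channel
    pointwise = d_f * d_f * m * n      # 1 x 1 convolution mixing the channels
    return depthwise + pointwise

# Hypothetical layer: 13 x 13 output, 3 x 3 kernels, 512 -> 1024 channels.
d_f, k, m, n = 13, 3, 512, 1024
ratio = separable_conv_cost(d_f, k, m, n) / standard_conv_cost(d_f, k, m, n)
print(ratio, 1 / n + 1 / k**2)
```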

2.2.2. ResNet

The original network structure of YOLOv3 draws on the idea of the residual network. Darknet-53 spans layer 0 to layer 74 and includes 53 convolution layers and 22 res layers, which come from ResNet. As a network becomes deeper, parameters are generally initialized close to 0. When the parameters of the shallow layers are updated during training, gradients can easily vanish or explode, so the shallow parameters cannot be updated properly. This degradation is not caused by over-fitting but by redundant network layers that fail to learn an identity mapping.

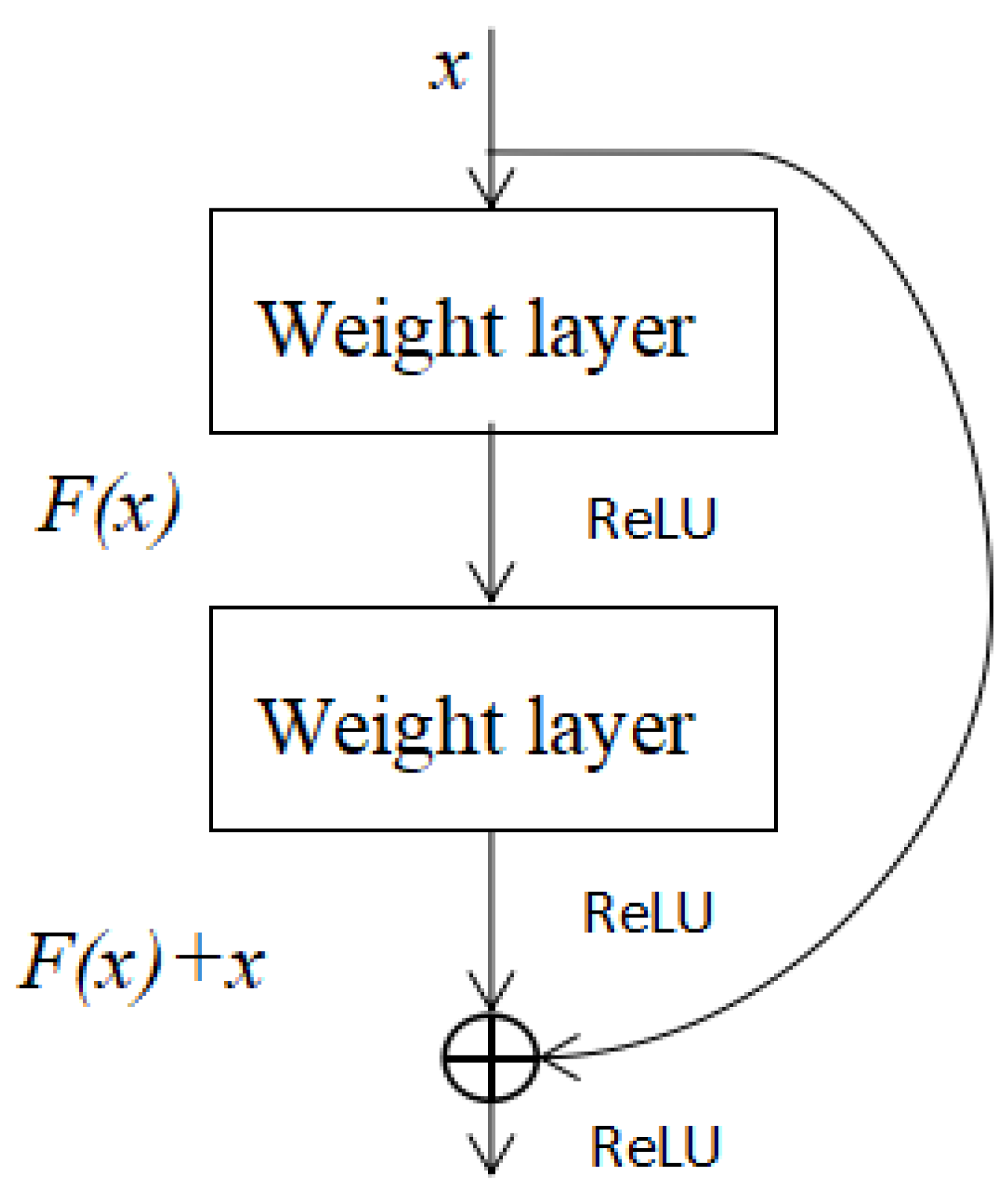

ResNet was proposed by He K, Zhang X, and colleagues in 2015. It starts from the following idea: if there is an optimal network depth for a task, a deeper network designed for that task contains redundant layers. We would like these redundant layers to realize an identity mapping, so that the input and output of each such layer are identical. Which layers act as identity layers is determined by the network itself during training. As shown in Figure 7, $x$ is the input to the residual block, and $F(x)$, also known as the residual, is the output of the block’s weight layers. Figure 7 shows that, in the residual network, after the linear transformation of the second layer and before its activation, the input value $x$ is added to $F(x)$; the sum $F(x) + x$ is then activated and output. The path that carries $x$ directly to this addition is called a shortcut connection. In general, ResNet changes the layer-by-layer training of deep neural networks into stage-by-stage training [14]. The deep neural network is divided into several sub-segments, each of which contains a relatively small number of layers, and the shortcut connections make each segment learn a residual. Each segment learns a part of the total difference (total loss), and together they reach a smaller overall loss. This keeps the propagation of the gradient under control and avoids the vanishing or exploding gradients that hinder training [15].
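A two-layer residual block of this kind can be sketched with fully connected layers in NumPy. The layer type, the ReLU activation, and the function names are assumptions made for brevity; the structure (add the input before the final activation) is the point of the example.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def residual_block(x, w1, b1, w2, b2):
    """Return activation(F(x) + x) for a two-layer block."""
    hidden = relu(x @ w1 + b1)  # first layer: linear transformation + activation
    f_x = hidden @ w2 + b2      # second layer: linear transformation only, F(x)
    return relu(f_x + x)        # shortcut: add the input, then activate

# If the second layer's weights are zero, F(x) = 0 and the block reduces to
# relu(x), i.e. the identity for non-negative inputs.
x = np.array([[0.5, 1.0, 2.0]])
w1 = np.ones((3, 3))
zeros = np.zeros((3, 3))
print(residual_block(x, w1, np.zeros(3), zeros, np.zeros(3)))
```

This illustrates why a redundant block can easily behave as an identity layer: it only has to drive its residual toward zero.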

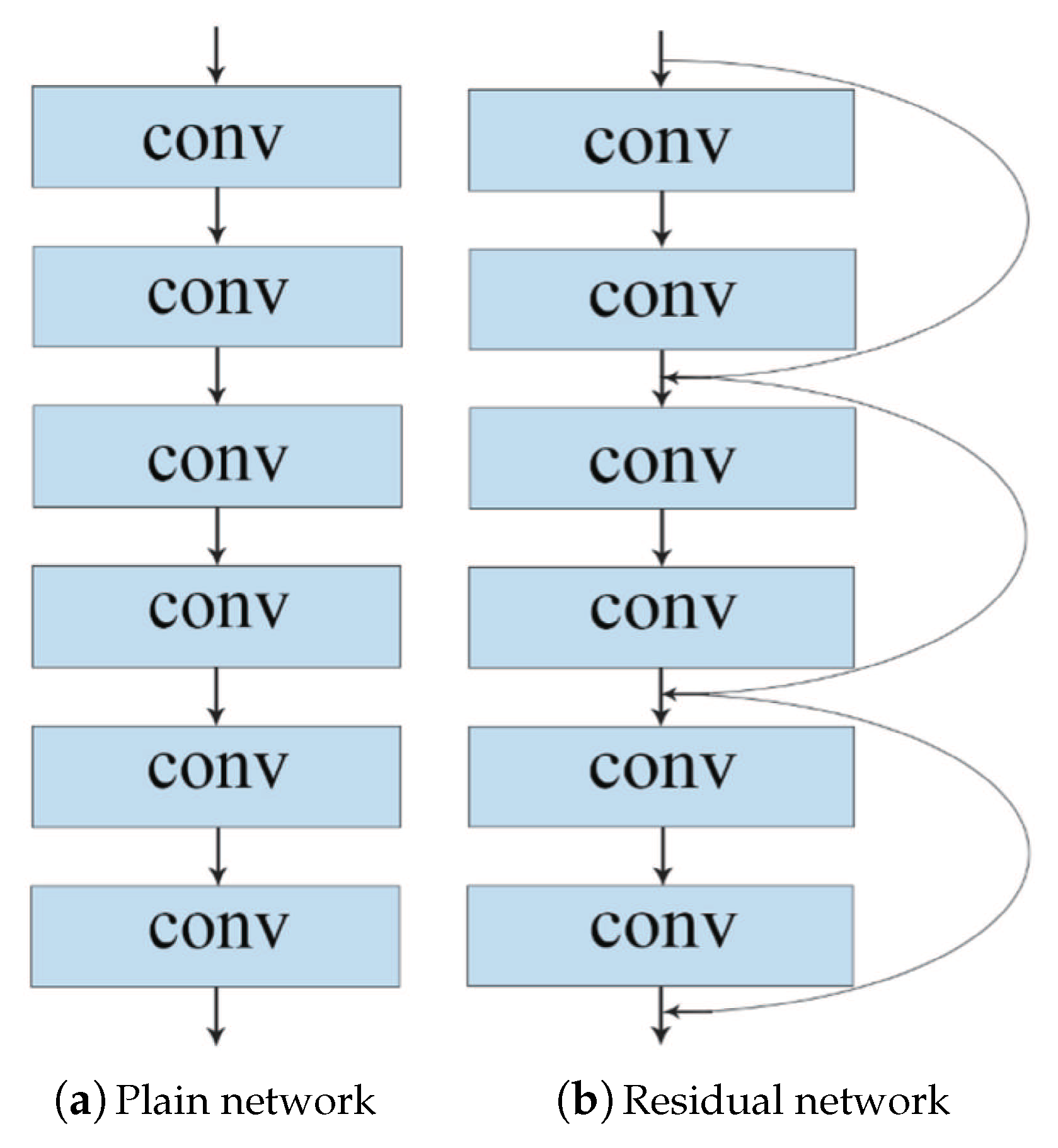

The architectures of the plain network and the residual network are shown in Figure 8. Unlike the plain network, ResNet adds jump connections, with a shortcut every two layers, to form residual blocks.

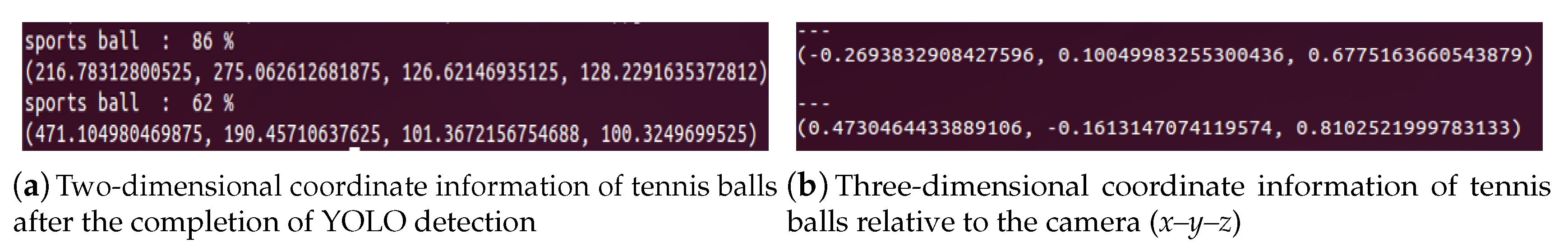

3. Path Preprocessing of the Tennis Robot

After the detection of tennis balls, the improved YOLOv3 algorithm prints labels, confidence, coordinates, and other information on the terminal. The coordinates include $x$, $y$, $w$, and $h$: $x$ and $y$ are the coordinates of the central point of the object, and $w$ and $h$ are its width and height, as shown in Figure 9a. The coordinates of each detected object are then combined with the Kinect data to extract depth information. The Kinect acquires both a color image and a depth image, and the depth image data can be converted into the actual depth of each pixel. According to the imaging principle of the Kinect camera, we can finally obtain the real-world $x$, $y$, and $z$ coordinates of every tennis ball, as shown in Figure 9b, and calculate the distances between them. Then, we can use the MCP method for path preprocessing.
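One common way to turn a detected box centre and its depth into camera-frame coordinates is the pinhole camera model. The sketch below assumes that model; the function name and the intrinsic parameters (`fx`, `fy`, `cx`, `cy`) are placeholders that would have to come from calibrating the actual camera, and the source does not state which conversion the authors used.

```python
import numpy as np

def pixel_to_camera(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth in metres to (x, y, z) in metres."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return np.array([x, y, depth_m])

# Placeholder intrinsics for a 640 x 480 depth image (assumed, not measured).
FX, FY, CX, CY = 525.0, 525.0, 319.5, 239.5

# Centres (u, v) of two detected balls and their depths in metres (made up).
ball_a = pixel_to_camera(400.0, 300.0, 2.0, FX, FY, CX, CY)
ball_b = pixel_to_camera(250.0, 310.0, 2.5, FX, FY, CX, CY)
print(np.linalg.norm(ball_a - ball_b))  # distance between the two balls
```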

3.1. Solving the Maximum Clique Problem by the Pointer Network and Backtracking Algorithm

In 1957, Harary and Ross first proposed a deterministic algorithm for solving the maximum clique problem. Since then, researchers have proposed a variety of deterministic algorithms to solve the MCP. However, as the complexity of the problem grows, for example with more vertices and higher edge density, deterministic algorithms can no longer solve this NP-hard problem efficiently.

In the late 1980s, researchers began to use heuristic algorithms, such as the sequential greedy heuristic algorithm, the genetic algorithm, and neural network algorithms, to solve the maximum clique problem and achieved satisfactory results in terms of both running time and solution quality. The only drawback is that the global optimum is not always found; sometimes, only a near-optimal solution is obtained [16].

The main method used in this paper is to combine the backtracking algorithm with the pointer network.

The backtracking algorithm is also known as the “general problem solving method”. It can systematically search for all solutions or any one solution of a problem. It is a systematic search algorithm with jumps. Starting from the root node, it traverses the solution space tree depth-first and searches for solutions that satisfy the constraints. A process called “pruning” is used to select nodes. When the search reaches a node in the tree, it first checks whether the partial solution corresponding to that node violates the constraints or exceeds the bound of the objective function, that is, whether the subtree rooted at the node can still contain a solution to the problem. If it cannot, the search skips that subtree and backtracks; otherwise, it continues into the subtree according to the depth-first strategy.

Pointer networks, referred to as Ptr-Nets, are variants of the attention model and the sequence-to-sequence (Seq2seq) model. Instead of converting one sequence into another, they produce a series of pointers to the elements of the input sequence. The most basic usage is to sort the elements of variable-length sequences or collections. Pointer networks have been widely used to solve combinatorial optimization problems and have achieved good results [17]. For example, Vinyals O solved the traveling salesman problem (TSP) in [18] by using a pointer network, and Gu S S solved the knapsack problem in [19]. These papers show that pointer networks are effective for solving such problems. As a result, we applied them to solve the maximum clique problem in this paper [20].

After training the pointer network, the maximum clique solution given by the network can be obtained. However, the prediction accuracy of the network is not satisfactory: sometimes the output is not itself a clique, although a clique is contained among the predicted nodes. Therefore, we further apply BA to the prediction results. In this way, we can not only reduce the dimensions of the original problem but also improve the accuracy of the output results.

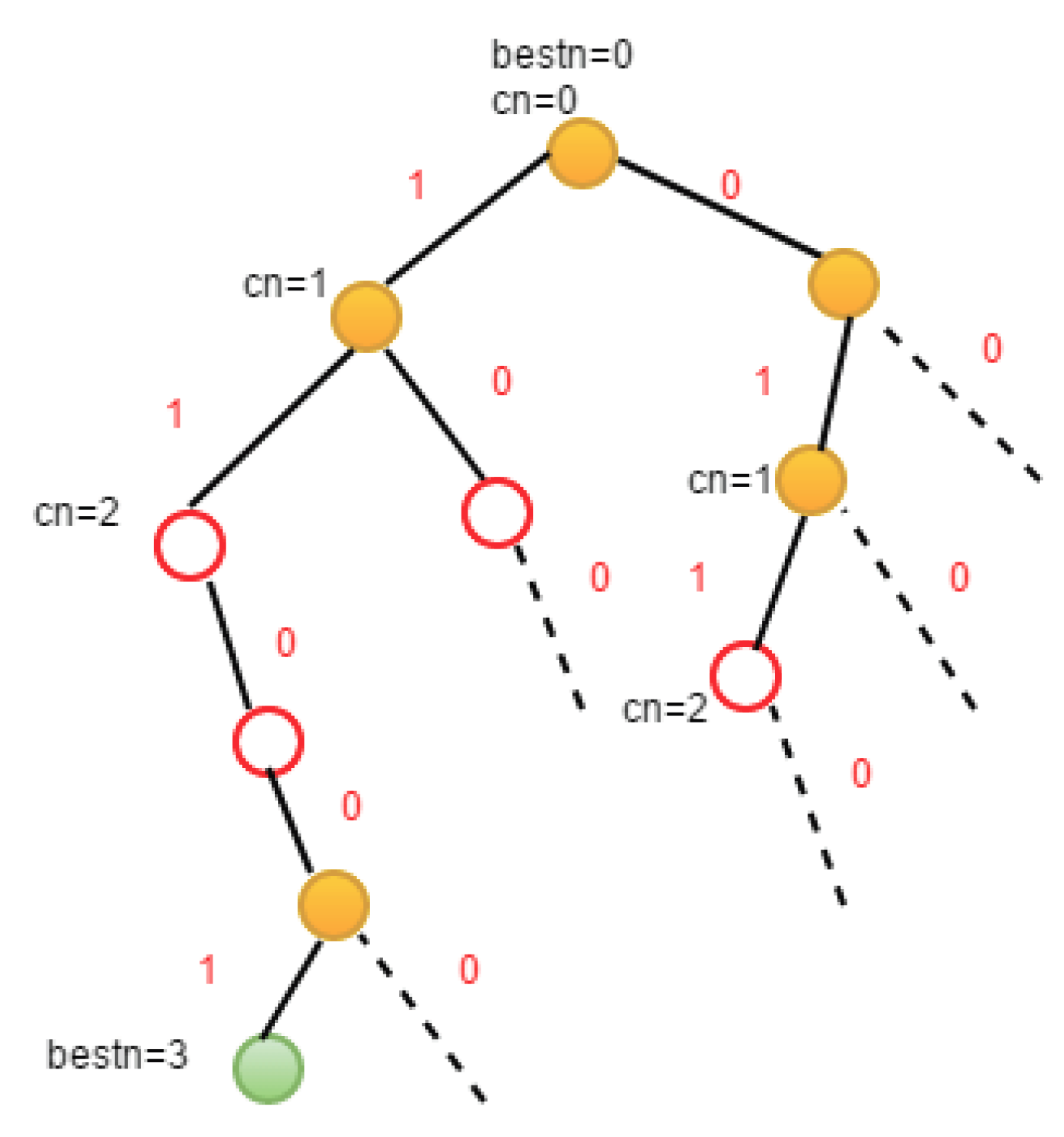

3.1.1. Backtracking Algorithm

The basic steps of the Backtracking Algorithm for solving problems are as follows:

Define the solution space of the problem.

Determine the structure of the solution space that is easy to search.

Search for the solution space by the depth-first method, and remove invalid searches with the Pruning function.

Given an undirected graph G, solving the MCP can be viewed as selecting a subset of the vertex set V of G, so a subset tree can be used to represent the solution space of the problem. Suppose the current extension node Z is located at level i of the solution space tree. Before entering the left subtree, it is necessary to make sure that vertex i is connected by an edge to every vertex in the selected vertex set. Before entering the right subtree, it is necessary to make sure that there are enough remaining vertices for the algorithm to possibly find a larger clique in the right subtree.

The graph $G$ is represented by its adjacency matrix, $n$ is the number of vertices of $G$, $cn$ stores the number of vertices in the current clique, and $bestn$ stores the number of vertices in the largest clique found so far. Also, $cn + n - i$ is the upper bound function used before entering the right subtree. When $cn + n - i < bestn$, a larger clique cannot be found in the right subtree, and the right child of $Z$ can be cut off by the pruning function [21].
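The subset-tree search with its two checks can be sketched as a short recursive function. This is a minimal illustration under stated assumptions rather than the authors' code: vertices are numbered from 0, the graph is a symmetric 0/1 adjacency matrix, and the names `max_clique`, `current`, and `best` are hypothetical. With 0-based indexing, `n - i - 1` vertices remain after vertex `i`, which plays the role of the upper bound for the right subtree.

```python
def max_clique(adj):
    """Return the vertices of one maximum clique of the graph `adj`."""
    n = len(adj)
    best = []     # largest clique found so far
    current = []  # clique being built along the current path

    def backtrack(i):
        nonlocal best
        if i == n:  # leaf of the subset tree
            if len(current) > len(best):
                best = current.copy()
            return
        # Left subtree: take vertex i only if it is adjacent to every
        # vertex already selected.
        if all(adj[i][j] for j in current):
            current.append(i)
            backtrack(i + 1)
            current.pop()
        # Right subtree: skip vertex i only if the remaining vertices
        # could still produce a clique larger than the best one.
        if len(current) + (n - i - 1) > len(best):
            backtrack(i + 1)

    backtrack(0)
    return best

# Small example graph with edges 0-1, 0-2, 1-2, 2-3, 3-4.
graph = [
    [0, 1, 1, 0, 0],
    [1, 0, 1, 0, 0],
    [1, 1, 0, 1, 0],
    [0, 0, 1, 0, 1],
    [0, 0, 0, 1, 0],
]
print(max_clique(graph))  # [0, 1, 2]
```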

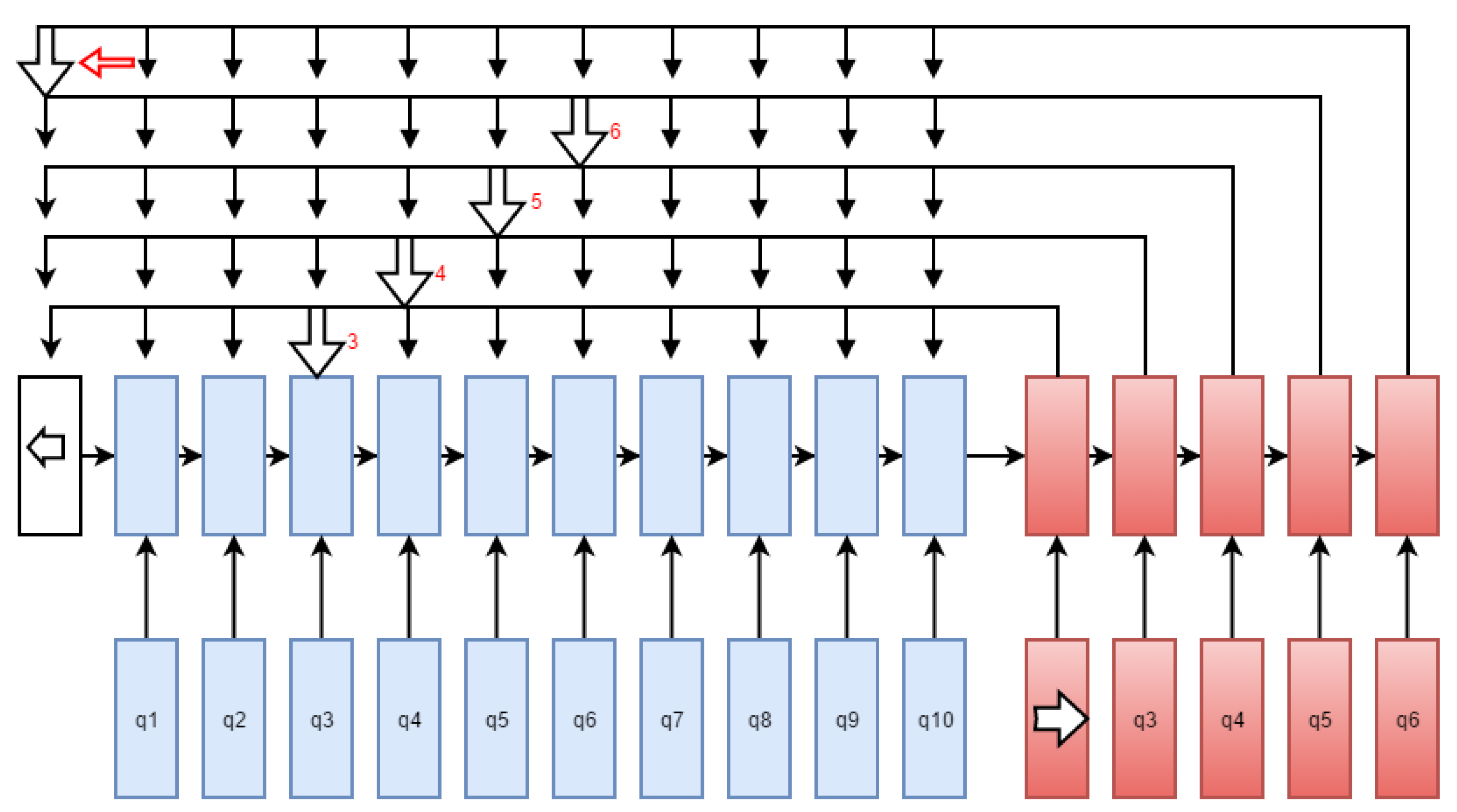

3.1.2. Architecture of Pointer Networks

Pointer networks are also Seq2seq models. They modify the attention mechanism to handle the case in which the output depends heavily on the input, which the standard Seq2seq model handles poorly. Here, “output heavily dependent on input” simply means that the elements of the output sequence are selected from the input, so for different input sequences, the length of the output sequence depends on the length of the input.

As shown in Figure 10, the mechanism that selects a point is called a pointer: attention is converted into a pointer that selects elements of the original input sequence [22]. The ordinary attention mechanism learns weights over the encoder’s hidden states and then predicts the next output from the weighted states. Ptr-Net instead applies softmax to the attention scores directly and points to the most likely element of the input sequence as the output.
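One decoding step of such a pointer can be sketched in NumPy using the additive scoring form from the original pointer network paper, $u_j = v^\top \tanh(W_1 e_j + W_2 d)$ followed by softmax over the input positions. The shapes, the random parameters, and the function name are assumptions for illustration; in a trained model the parameters are learned, and states are stored here as row vectors.

```python
import numpy as np

def pointer_distribution(enc_states, dec_state, w1, w2, v):
    """Softmax over input positions for one decoder step.

    enc_states: (n, h) encoder states, dec_state: (h,) decoder state,
    w1, w2: (h, h) projection matrices, v: (h,) scoring vector.
    """
    scores = np.tanh(enc_states @ w1 + dec_state @ w2) @ v  # shape (n,)
    scores = scores - scores.max()  # shift for numerical stability
    weights = np.exp(scores)
    return weights / weights.sum()

rng = np.random.default_rng(0)
n_inputs, hidden = 5, 8
probs = pointer_distribution(
    rng.normal(size=(n_inputs, hidden)),
    rng.normal(size=hidden),
    rng.normal(size=(hidden, hidden)),
    rng.normal(size=(hidden, hidden)),
    rng.normal(size=hidden),
)
pointed_index = int(np.argmax(probs))  # input element selected at this step
print(probs, pointed_index)
```

Because the distribution is defined over input positions rather than a fixed vocabulary, the same parameters work for inputs of any length.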

In the training data set, each line is one sample; the input is separated from the output by the word “output”, and the input is a graph represented by a matrix. In the pointer network, the model first defines four inputs: the encoder input and its length, and the prediction sequence and the decoder length. Ptr-Net then converts the input into an embedding whose size equals the number of hidden units in the long short-term memory (LSTM) network. This is done by first expanding the dimensions of the input and then calling a 2D convolution function. After this processing, the input shape becomes [batch, max_enc_seq_length, hidden] [23]. According to the number of LSTM layers in the configuration, we build the encoder and feed the processed input into it to get the output and the final state of the encoder [24]. After that, we add a start output to the front of the output, and this added start output also serves as the initial input to the encoder, as shown in Figure 12.

Unlike in Seq2seq, the decoder input of the Ptr-Net is not the embedding of the target sequence but the encoder output at the position indicated by the value of the target sequence. Similarly, a multi-layer LSTM network is built in the Ptr-Net decoder, where we feed only one value per batch at each decoder step and then loop through the entire decoding process. In the Ptr-Net, two arrays are defined to hold the output sequence and the softmax values for each output.

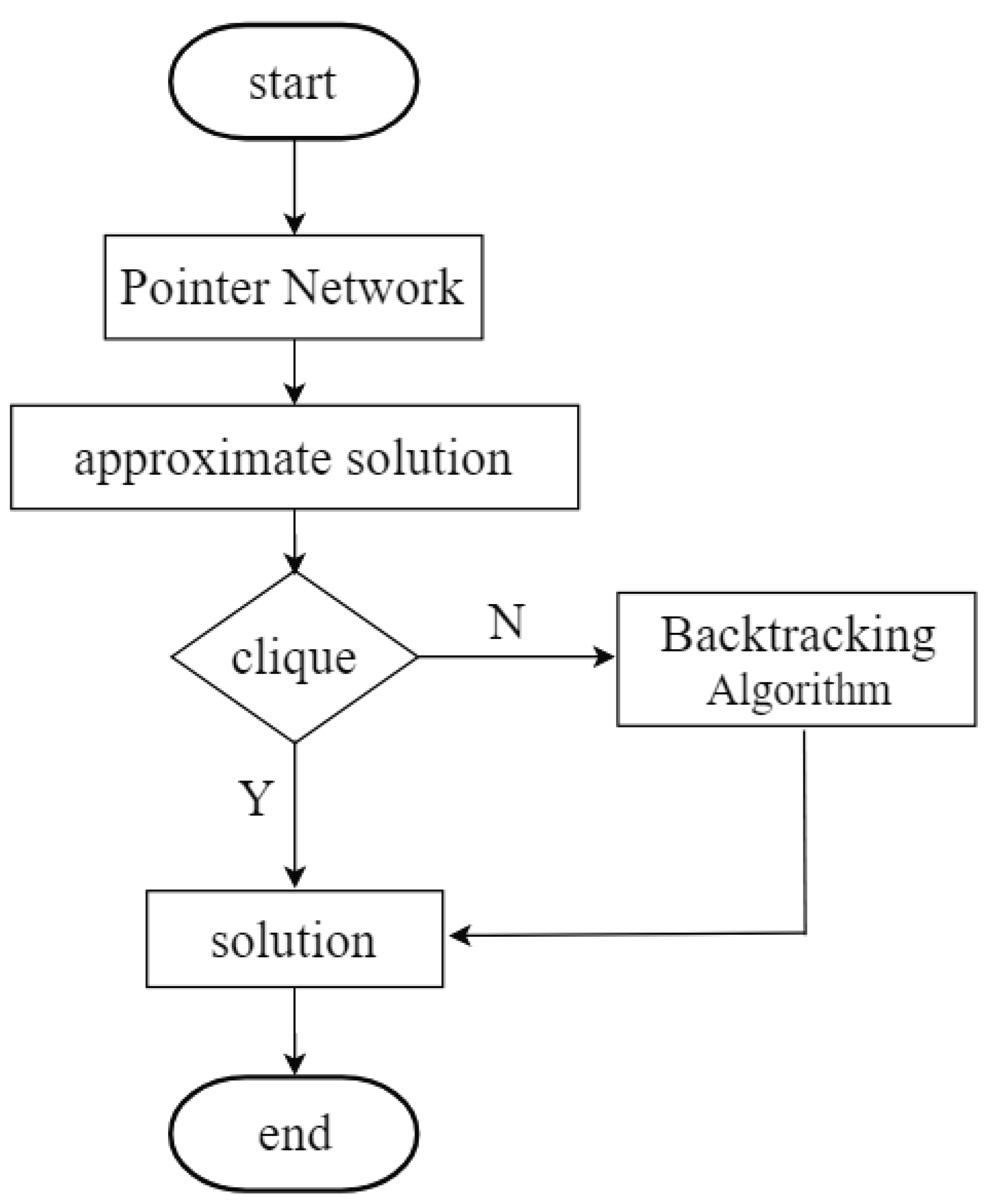

3.2. The Combination of Backtracking Algorithm and Pointer Networks

At first, we trained the Ptr-Net alone to solve the MCP and found that its accuracy was not satisfactory. After comparing the answers predicted by the Ptr-Net with the optimal solutions, we found that the difference between them is not great: the output of the Ptr-Net very likely contains the maximum clique.

So, we use a method that combines the Ptr-Net with BA. After the Ptr-Net predicts an answer, the result is checked. If the output is the optimal solution of the MCP, it is output directly. If not, BA is used to search for the maximum clique among the predicted points. The flow chart is shown in Figure 13.
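The combined procedure can be sketched as follows. Since the optimal answer is unknown at inference time, this sketch checks whether the predicted vertices already form a clique and only otherwise runs a small backtracking search restricted to those vertices; that check, the function names, and the example prediction are assumptions rather than details taken from the flow chart.

```python
from itertools import combinations

def is_clique(adj, nodes):
    """True if every pair of the given vertices is connected."""
    return all(adj[u][v] for u, v in combinations(nodes, 2))

def refine_prediction(adj, predicted):
    """Return a maximum clique among the vertices predicted by the network."""
    nodes = sorted(set(predicted))
    if is_clique(adj, nodes):
        return nodes  # prediction is already a clique; use it directly
    best = []

    def backtrack(start, current):
        nonlocal best
        if len(current) > len(best):
            best = current.copy()
        for k in range(start, len(nodes)):
            # Prune: even taking every remaining vertex cannot beat `best`.
            if len(current) + (len(nodes) - k) <= len(best):
                break
            candidate = nodes[k]
            if all(adj[candidate][u] for u in current):
                current.append(candidate)
                backtrack(k + 1, current)
                current.pop()

    backtrack(0, [])
    return best

# Graph with edges 0-1, 0-2, 1-2, 2-3, 3-4 and a hypothetical network output.
graph = [
    [0, 1, 1, 0, 0],
    [1, 0, 1, 0, 0],
    [1, 1, 0, 1, 0],
    [0, 0, 1, 0, 1],
    [0, 0, 0, 1, 0],
]
print(refine_prediction(graph, [0, 1, 2, 3]))  # [0, 1, 2]
```

Searching only among the predicted vertices is what reduces the dimension of the problem: the backtracking step explores subsets of the prediction rather than subsets of the whole vertex set.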