Information Extraction from Electronic Medical Records Using Multitask Recurrent Neural Network with Contextual Word Embedding

Abstract

1. Introduction

2. Materials and Methods

2.1. Related Work on Clinical Named Entity Recognition

2.2. ELMo Contextual Word Embedding

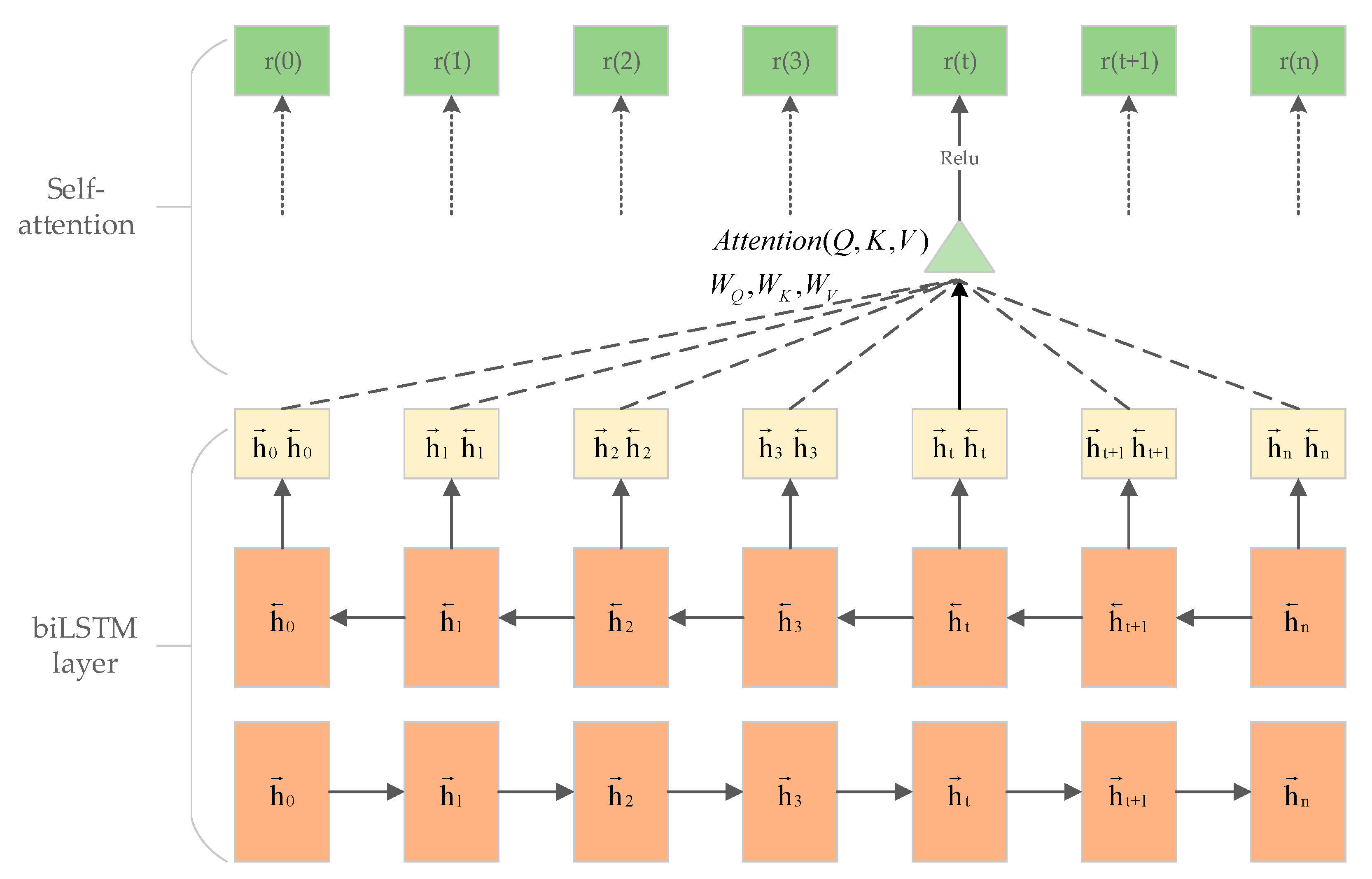

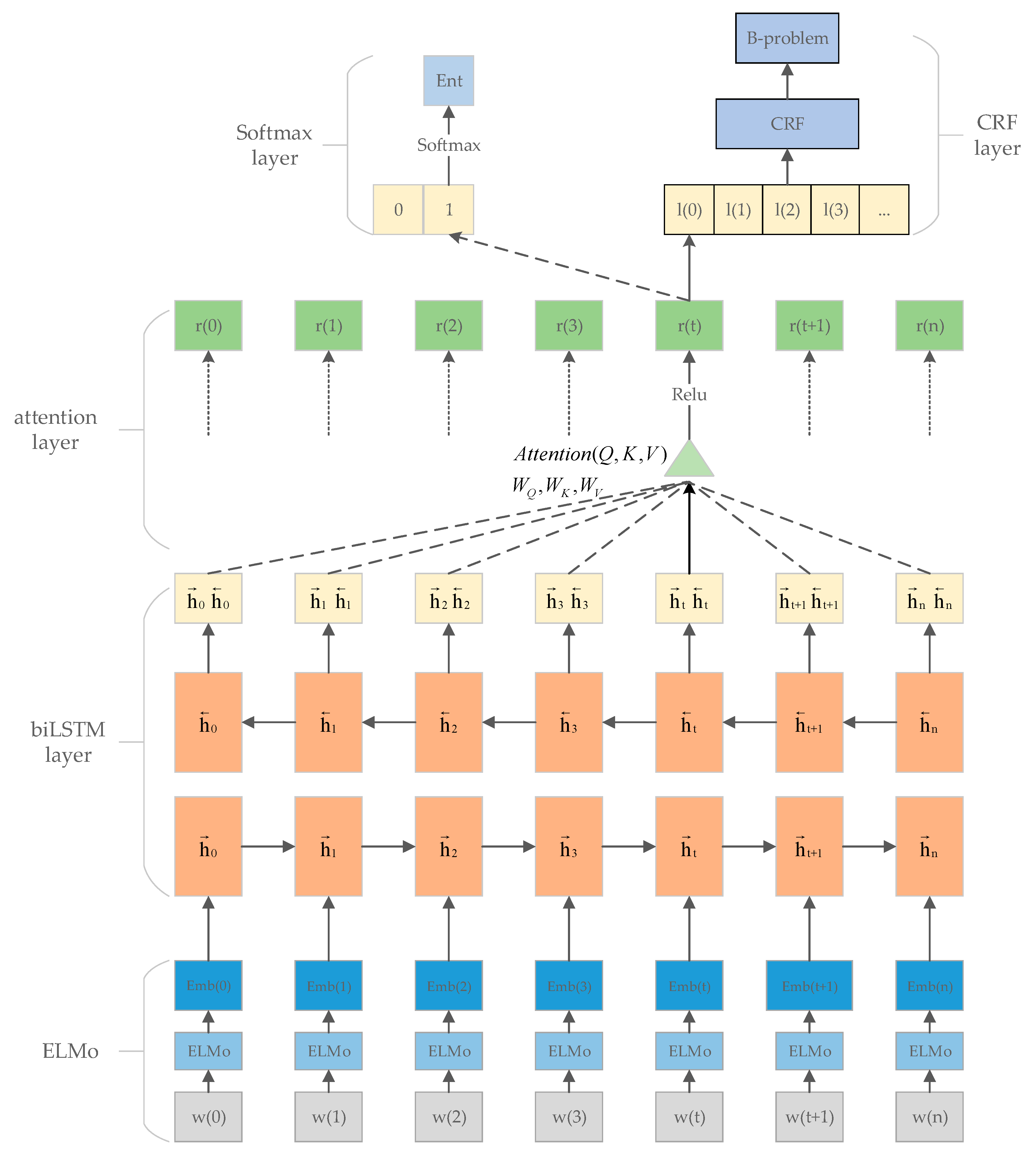

2.3. The Att-biLSTM Model

2.4. Multitask Mechanism

3. Results

3.1. Dataset

3.2. Experimental Setting

3.3. Evaluation Metrics

3.4. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

1. Meystre, S.M.; Savova, G.K.; Kipper-Schuler, K.C.; Hurdle, J.F. Extracting information from textual documents in the electronic health record: A review of recent research. Yearb. Med. Inform. 2008, 17, 128–144.

2. Friedman, C.; Alderson, P.O.; Austin, J.H.; Cimino, J.J.; Johnson, S.B. A general natural-language text processor for clinical radiology. J. Am. Med. Inform. Assoc. 1994, 1, 161–174.

3. Aronson, A.R.; Lang, F.-M. An overview of MetaMap: Historical perspective and recent advances. J. Am. Med. Inform. Assoc. 2010, 17, 229–236.

4. Savova, G.K.; Masanz, J.J.; Ogren, P.V.; Zheng, J.; Sohn, S.; Kipper-Schuler, K.C.; Chute, C.G. Mayo clinical Text Analysis and Knowledge Extraction System (cTAKES): Architecture, component evaluation and applications. J. Am. Med. Inform. Assoc. 2010, 17, 507–513.

5. Denny, J.C.; Irani, P.R.; Wehbe, F.H.; Smithers, J.D.; Spickard, A., III. The KnowledgeMap project: Development of a concept-based medical school curriculum database. In Proceedings of the AMIA Annual Symposium Proceedings, Washington, DC, USA, 8–12 November 2003; pp. 195–199.

6. Liu, S.; Tang, B.; Chen, Q.; Wang, X. Drug name recognition: Approaches and resources. Information 2015, 6, 790–810.

7. Chalapathy, R.; Borzeshi, E.Z.; Piccardi, M. Bidirectional LSTM–CRF for clinical concept extraction. arXiv 2016, arXiv:1611.08373.

8. Uzuner, Ö.; South, B.R.; Shen, S.; DuVall, S.L. 2010 i2b2/VA challenge on concepts, assertions, and relations in clinical text. J. Am. Med. Inform. Assoc. 2011, 18, 552–556.

9. Pradhan, S.; Elhadad, N.; Chapman, W.; Manandhar, S.; Savova, G. SemEval-2014 task 7: Analysis of clinical text. In Proceedings of the 8th International Workshop on Semantic Evaluation (SemEval 2014), Dublin, Ireland, 23–24 August 2014; pp. 54–62.

10. Boag, W.; Wacome, K.; Naumann, T.; Rumshisky, A. CliNER: A lightweight tool for clinical named entity recognition. In Proceedings of the AMIA Joint Summits on Clinical Research Informatics, San Francisco, CA, USA, 23–25 March 2015.

11. Wang, Y.; Patrick, J. Cascading classifiers for named entity recognition in clinical notes. In Proceedings of the Workshop on Biomedical Information Extraction, Association for Computational Linguistics, Borovets, Bulgaria, 14–16 September 2009; pp. 42–49.

12. DeBruijn, B.; Cherry, C.; Kiritchenko, S.; Martin, J.; Zhu, X. Machine-learned solutions for three stages of clinical information extraction: The state of the art at i2b2 2010. J. Am. Med. Inform. Assoc. 2011, 18, 557–562.

13. Lafferty, J.; McCallum, A.; Pereira, F.C. Conditional random fields: Probabilistic models for segmenting and labeling sequence data. In Proceedings of the 18th International Conference on Machine Learning (ICML 2001), Williamstown, MA, USA, 28 June–1 July 2001; pp. 282–289.

14. Wu, Y.; Jiang, M.; Xu, J.; Zhi, D.; Xu, H. Clinical named entity recognition using deep learning models. In Proceedings of the AMIA Annual Symposium Proceedings, Washington, DC, USA, 4–8 November 2017; pp. 1812–1819.

15. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

16. Unanue, I.J.; Borzeshi, E.Z.; Piccardi, M. Recurrent neural networks with specialized word embeddings for health-domain named-entity recognition. J. Biomed. Inform. 2017, 76, 102–109.

17. Luo, L.; Yang, Z.; Yang, P.; Zhang, Y.; Wang, L.; Lin, H.; Wang, J. An attention-based BiLSTM–CRF approach to document-level chemical named entity recognition. Bioinformatics 2017, 34, 1381–1388.

18. Zeng, D.; Sun, C.; Lin, L.; Liu, B. LSTM–CRF for drug-named entity recognition. Entropy 2017, 19, 283.

19. Xu, G.; Wang, C.; He, X. Improving clinical named entity recognition with global neural attention. In Proceedings of the Asia-Pacific Web (APWeb) and Web-Age Information Management (WAIM) Joint International Conference on Web and Big Data, Macau, China, 23–25 July 2018; pp. 264–279.

20. Peters, M.E.; Neumann, M.; Iyyer, M.; Gardner, M.; Clark, C.; Lee, K.; Zettlemoyer, L. Deep contextualized word representations. arXiv 2018, arXiv:1802.05365.

21. Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

22. Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient estimation of word representations in vector space. arXiv 2013, arXiv:1301.3781.

23. Pennington, J.; Socher, R.; Manning, C. GloVe: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

24. Zhu, H.; Paschalidis, I.C.; Tahmasebi, A. Clinical Concept Extraction with Contextual Word Embedding. arXiv 2018, arXiv:1810.10566.

25. Collobert, R.; Weston, J. A unified architecture for natural language processing: Deep neural networks with multitask learning. In Proceedings of the 25th International Conference on Machine Learning (ICML 2008), Helsinki, Finland, 5–9 July 2008; pp. 160–167.

26. Aguilar, G.; Maharjan, S.; López-Monroy, A.P.; Solorio, T. A Multi-task Approach for Named Entity Recognition in Social Media Data. In Proceedings of the Third Workshop on Noisy User-generated Text of Association for Computational Linguistics, Copenhagen, Denmark, 7 September 2017; pp. 148–153.

27. Savova, G.K.; Fan, J.; Ye, Z.; Murphy, S.P.; Zheng, J.; Chute, C.G.; Kullo, I.J. Discovering peripheral arterial disease cases from radiology notes using natural language processing. In Proceedings of the AMIA Annual Symposium Proceedings, Washington, DC, USA, 13–17 November 2010; pp. 722–726.

28. Bedmar, I.S.; Martínez, P.; Herrero Zazo, M. SemEval-2013 task 9: Extraction of drug-drug interactions from biomedical texts (DDIExtraction 2013). In Proceedings of the Second Joint Conference on Lexical and Computational Semantics (*SEM), Volume 2: Seventh International Workshop on Semantic Evaluation (SemEval 2013), Atlanta, GA, USA, 13–14 June 2013; pp. 341–350.

29. Wang, Y.; Wang, L.; Rastegar-Mojarad, M.; Moon, S.; Shen, F.; Afzal, N.; Liu, S.; Zeng, Y.; Mehrabi, S.; Sohn, S. Clinical information extraction applications: A literature review. J. Biomed. Inform. 2018, 77, 34–49.

30. Hebbring, S.J. The challenges, advantages and future of phenome-wide association studies. Immunology 2014, 141, 157–165.

31. Law, V.; Knox, C.; Djoumbou, Y.; Jewison, T.; Guo, A.C.; Liu, Y.; Maciejewski, A.; Arndt, D.; Wilson, M.; Neveu, V. DrugBank 4.0: Shedding new light on drug metabolism. Nucleic Acids Res. 2013, 42, D1091–D1097.

32. Roberts, K.; Rink, B.; Harabagiu, S.M.; Scheuermann, R.H.; Toomay, S.; Browning, T.; Bosler, T.; Peshock, R. A machine learning approach for identifying anatomical locations of actionable findings in radiology reports. In Proceedings of the AMIA Annual Symposium Proceedings, Chicago, IL, USA, 3–7 November 2012; pp. 779–788.

33. Sarker, A.; Gonzalez, G. Portable automatic text classification for adverse drug reaction detection via multi-corpus training. J. Biomed. Inform. 2015, 53, 196–207.

34. Rochefort, C.M.; Buckeridge, D.L.; Forster, A.J. Accuracy of using automated methods for detecting adverse events from electronic health record data: A research protocol. Implement. Sci. 2015, 10, 5.

35. Deleger, L.; Brodzinski, H.; Zhai, H.; Li, Q.; Lingren, T.; Kirkendall, E.S.; Alessandrini, E.; Solti, I. Developing and evaluating an automated appendicitis risk stratification algorithm for pediatric patients in the emergency department. J. Am. Med. Inform. Assoc. 2013, 20, e212–e220.

36. Collobert, R.; Weston, J.; Bottou, L.; Karlen, M.; Kavukcuoglu, K.; Kuksa, P. Natural language processing (almost) from scratch. J. Mach. Learn. Res. 2011, 12, 2493–2537.

37. Huang, Z.; Xu, W.; Yu, K. Bidirectional LSTM–CRF models for sequence tagging. arXiv 2015, arXiv:1508.01991.

38. Lample, G.; Ballesteros, M.; Subramanian, S.; Kawakami, K.; Dyer, C. Neural architectures for named entity recognition. arXiv 2016, arXiv:1603.01360.

39. Xu, K.; Zhou, Z.; Hao, T.; Liu, W. A bidirectional LSTM and conditional random fields approach to medical named entity recognition. In Proceedings of the International Conference on Advanced Intelligent Systems and Informatics 2015 (AISI 2015), Beni Suef, Egypt, 28–30 November 2015; pp. 355–365.

40. Habibi, M.; Weber, L.; Neves, M.; Wiegandt, D.L.; Leser, U. Deep learning with word embeddings improves biomedical named entity recognition. Bioinformatics 2017, 33, i37–i48.

41. Liu, Z.; Yang, M.; Wang, X.; Chen, Q.; Tang, B.; Wang, Z.; Xu, H. Entity recognition from clinical texts via recurrent neural network. BMC Med. Inform. Decis. Mak. 2017, 17, 67.

42. Si, Y.; Wang, J.; Xu, H.; Roberts, K. Enhancing Clinical Concept Extraction with Contextual Embedding. arXiv 2019, arXiv:1902.08691.

43. Jin, Q.; Liu, J.; Lu, X. Deep Contextualized Biomedical Abbreviation Expansion. arXiv 2019, arXiv:1906.03360.

44. Jin, Q.; Dhingra, B.; Cohen, W.W.; Lu, X. Probing biomedical embeddings from language models. arXiv 2019, arXiv:1904.02181.

45. Johnson, A.E.; Pollard, T.J.; Shen, L.; Lehman, L.H.; Feng, M.; Ghassemi, M.; Moody, B.; Szolovits, P.; Celi, L.A.; Mark, R.G. MIMIC-III, a freely accessible critical care database. Sci. Data 2016, 3, 160035.

46. Mikolov, T.; Karafiát, M.; Burget, L.; Černocký, J.; Khudanpur, S. Recurrent neural network based language model. In Proceedings of the 17th Annual Conference of the International Speech Communication Association, Makuhari, Chiba, Japan, 26–30 September 2010; pp. 1045–1048.

47. Pascanu, R.; Mikolov, T.; Bengio, Y. On the difficulty of training recurrent neural networks. In Proceedings of the 30th International Conference on Machine Learning (ICML 2013), Atlanta, GA, USA, 16–21 June 2013; pp. 1310–1318.

48. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

49. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

50. Bergstra, J.; Bengio, Y. Random search for hyper-parameter optimization. J. Mach. Learn. Res. 2012, 13, 281–305.

51. I2B2. Evaluation Methods and Procedures for 2010 i2b2/VA Challenge. Available online: https://www.i2b2.org/NLP/Relations/assets/Evaluation%20methods%20for%202010%20Challenge.pdf (accessed on 25 May 2019).

52. Boag, W.; Sergeeva, E.; Kulshreshtha, S.; Szolovits, P.; Rumshisky, A.; Naumann, T. CliNER 2.0: Accessible and Accurate Clinical Concept Extraction. arXiv 2018, arXiv:1803.02245.

53. Kohavi, R. A study of cross-validation and bootstrap for accuracy estimation and model selection. In Proceedings of the 1995 International Joint Conference on AI, Montreal, QC, Canada, 20–25 August 1995; pp. 1137–1145.

| Dataset | Records | Sentences | Tokens | Tokens per Sentence |
|---|---|---|---|---|
| Training set | 170 | 16,315 | 149,666 | 9.17 |
| Testing set | 256 | 27,626 | 267,758 | 9.69 |
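The last column of the dataset table can be recomputed from the two columns before it. The sketch below is only a sanity check on the reported figures; the function name `tokens_per_sentence` is a hypothetical helper, and the counts are copied from the table.

```python
def tokens_per_sentence(tokens: int, sentences: int) -> float:
    """Average sentence length in tokens, rounded to two decimals."""
    return round(tokens / sentences, 2)


# (sentences, tokens) copied from the dataset table.
dataset = {
    "Training set": (16_315, 149_666),
    "Testing set": (27_626, 267_758),
}

for name, (sentences, tokens) in dataset.items():
    print(f"{name}: {tokens_per_sentence(tokens, sentences)} tokens per sentence")
```

Both splits average fewer than ten tokens per sentence, so the sequences the tagger sees are short.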

| Hyperparameter | Value |
|---|---|
| Dimension of Embeddings from Language Models (ELMo) | 1024 |
| Bidirectional Long Short-term Memory (biLSTM) hidden size | 256 |
| Number of biLSTM layers | 2 |
| Number of attention heads | 2 |
| Dropout rate | 0.5 |
| Learning rate | 0.001 |
| Batch size | 64 |
| Epochs | 100 |
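For anyone reproducing the setup, the hyperparameters in the table could be gathered into a single configuration object. The following is a minimal sketch, not the authors' code: the class name `TrainingConfig`, its field names, and the `steps_per_epoch` helper are hypothetical, and the helper assumes that every training sentence is used once per epoch and that a batch holds 64 sentences.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainingConfig:
    """Hyperparameter values copied from the table; field names are illustrative."""
    elmo_dim: int = 1024
    bilstm_hidden_size: int = 256
    bilstm_layers: int = 2
    attention_heads: int = 2
    dropout: float = 0.5
    learning_rate: float = 0.001
    batch_size: int = 64
    epochs: int = 100

    def steps_per_epoch(self, num_sentences: int) -> int:
        """Mini-batches needed to cover num_sentences once (last batch may be partial)."""
        return -(-num_sentences // self.batch_size)  # ceiling division


config = TrainingConfig()
print(config)
# Assumption: all 16,315 training sentences are seen in each epoch.
print(config.steps_per_epoch(16_315))
```

Freezing the dataclass keeps a run's settings immutable once constructed, which makes it harder to change a value by accident between experiments.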

| Solution | F1 | Precision | Recall |
|---|---|---|---|
| GloVe-biLSTM–CRF [7] | 83.88 | 84.36 | 83.41 |
| Clinical Named Entity Recognition system (CliNER) 2.0 [52] | 83.8 | 84.0 | 83.6 |
| Att-biLSTM–CRF + Transfer [19] | 85.71 | 86.27 | 85.15 |
| ELMo (General) + BiLSTM–CRF (Single) [24] | 82.54 ± 0.14 | 83.26 ± 0.25 | 81.84 ± 0.22 |
| Word2vec + multitask-Att-biLSTM–CRF | 78.70 | 79.98 | 77.47 |
| ELMo (General) + multitask-Att-biLSTM–CRF | 83.00 | 82.91 | 83.09 |
| ELMo (Clinical) + BiLSTM–CRF (Single) [24] | 86.84 ± 0.16 | 87.44 ± 0.27 | 86.25 ± 0.26 |
| Our model (Single) | 87.53 ± 0.11 | 87.75 ± 0.18 | 87.32 ± 0.26 |
| ELMo (Clinical) + BiLSTM–CRF (Ensemble) [24] | 88.60 | 89.34 | 87.87 |
| Our model (Ensemble) | 88.78 | 89.11 | 88.46 |
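The three metric columns are linked if F1 is read as the usual harmonic mean of precision and recall. That is the standard definition, but the table itself does not spell it out, so treat it as an assumption here. The sketch below recomputes F1 for a few rows copied from the table; `f1_score` is a hypothetical helper, not a function from any evaluation toolkit.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, on the same scale as the inputs."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the results table.
rows = {
    "GloVe-biLSTM-CRF [7]": (84.36, 83.41, 83.88),
    "Att-biLSTM-CRF + Transfer [19]": (86.27, 85.15, 85.71),
    "Our model (Ensemble)": (89.11, 88.46, 88.78),
}

for name, (precision, recall, reported) in rows.items():
    computed = f1_score(precision, recall)
    print(f"{name}: computed F1 {computed:.2f}, reported F1 {reported:.2f}")
```

Rows reported as mean ± deviation over several runs are left out, because the harmonic mean of averaged precision and recall need not equal the averaged F1.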

| Entity Type | F1 | Precision | Recall |
|---|---|---|---|
| Medical Test | 88.37 | 88.61 | 88.13 |
| Problem | 89.03 | 89.40 | 88.66 |
| Treatment | 88.96 | 89.34 | 88.58 |
| SD | 0.21 | 0.25 | 0.16 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, J.; Liu, Y.; Qian, M.; Guan, C.; Yuan, X. Information Extraction from Electronic Medical Records Using Multitask Recurrent Neural Network with Contextual Word Embedding. Appl. Sci. 2019, 9, 3658. https://doi.org/10.3390/app9183658
