Development of Machine Learning for Asthmatic and Healthy Voluntary Cough Sounds: A Proof of Concept Study

Abstract

1. Introduction

2. Materials and Methods

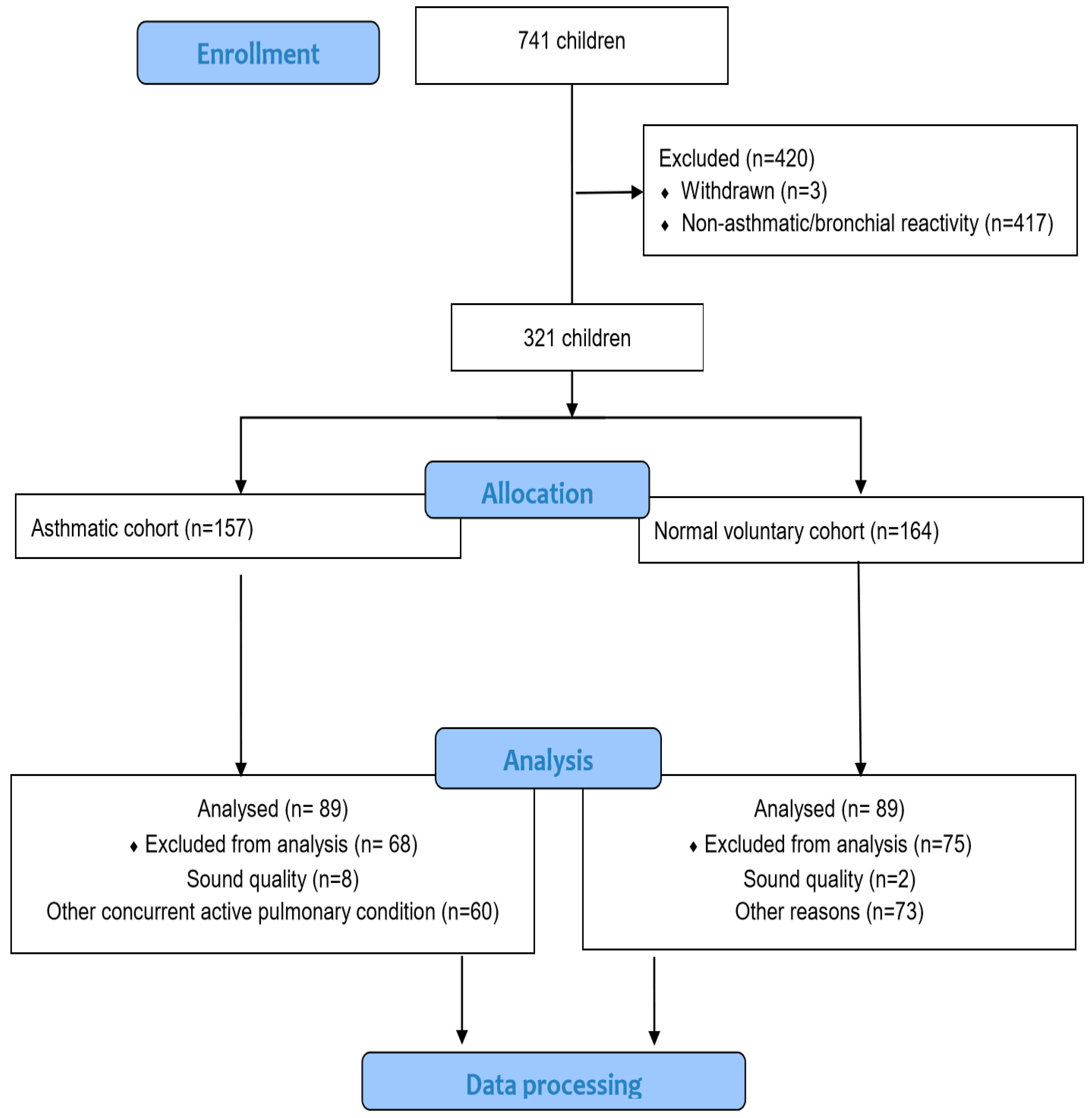

2.1. Study Design and Study Population

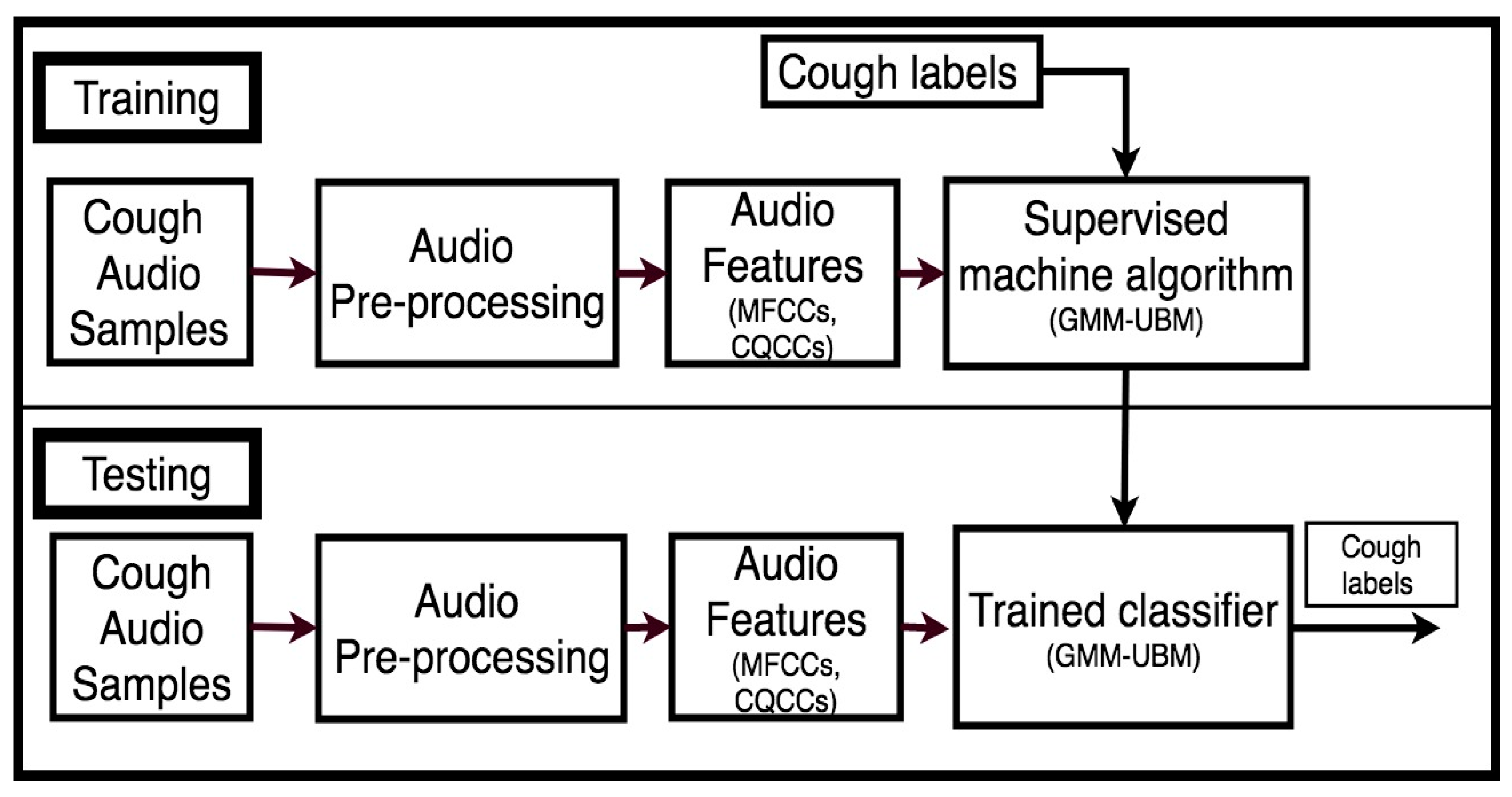

2.2. Cough Data Processing

2.2.1. Audio Pre-Processing

2.2.2. Audio Feature Extraction

2.2.3. Classification Modeling of Cough Sound

2.2.4. Train-Test Data Split-Up

2.2.5. Performance of the Classification Model

Classification Accuracy of the Classification Model

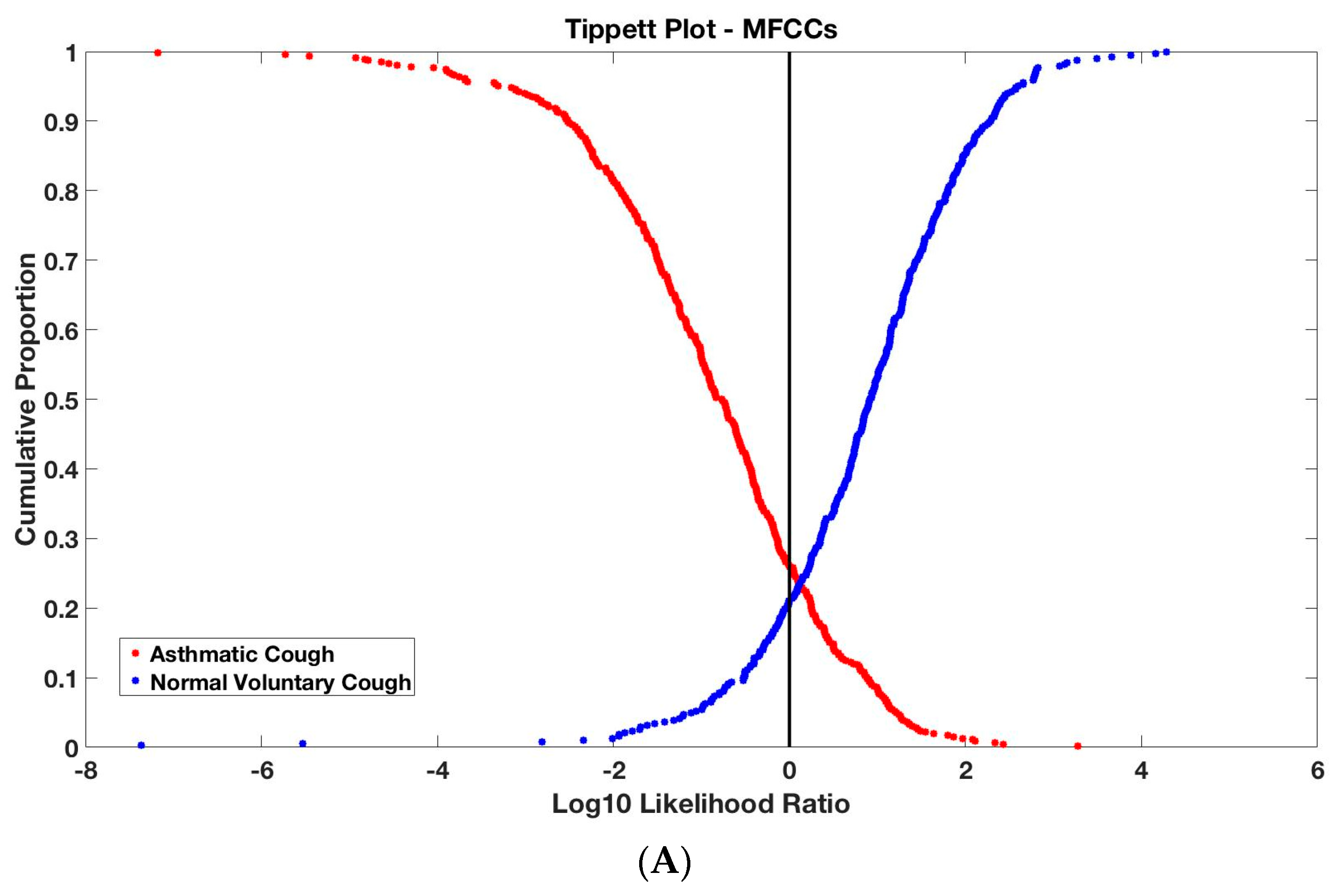

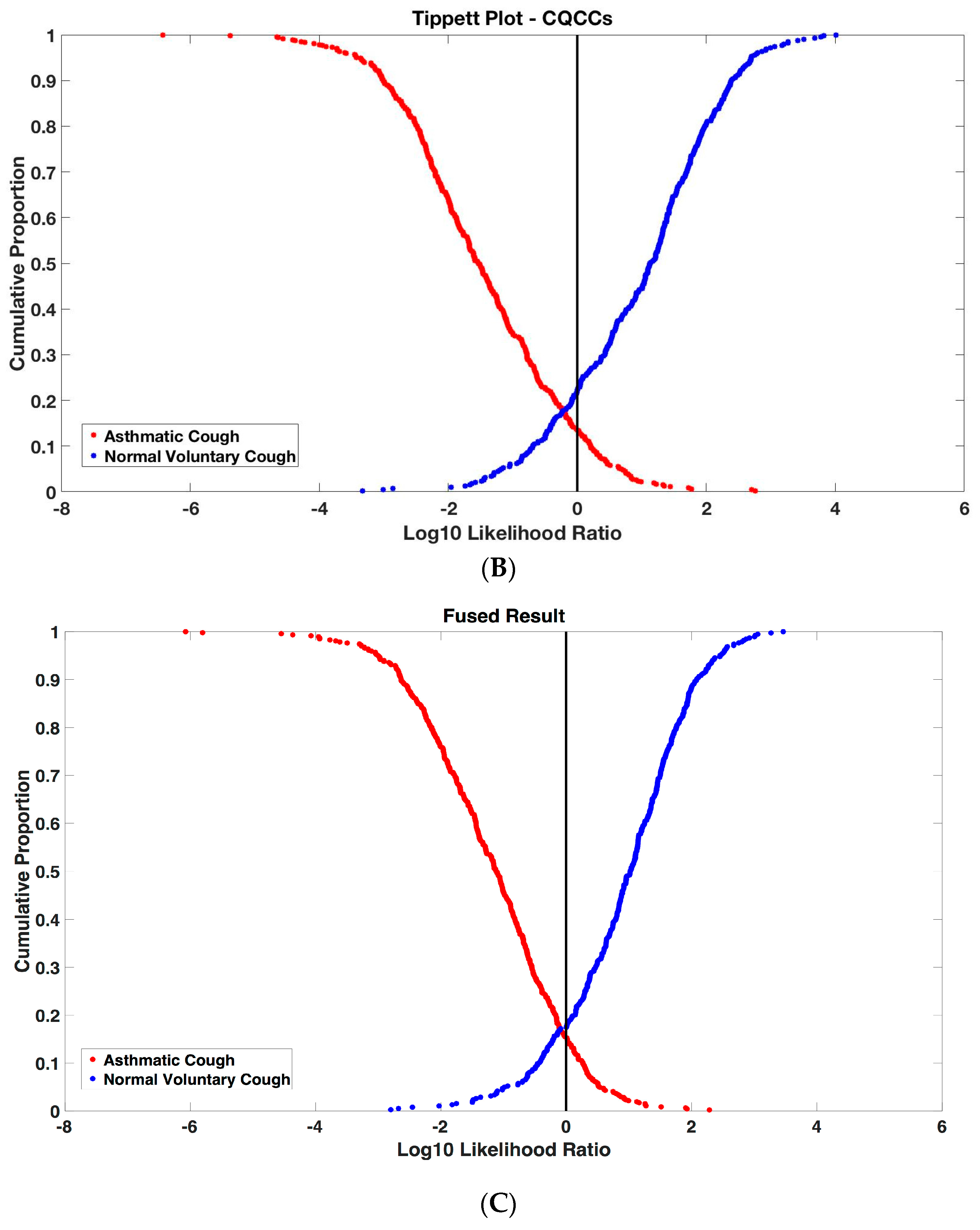

Tippett Plots

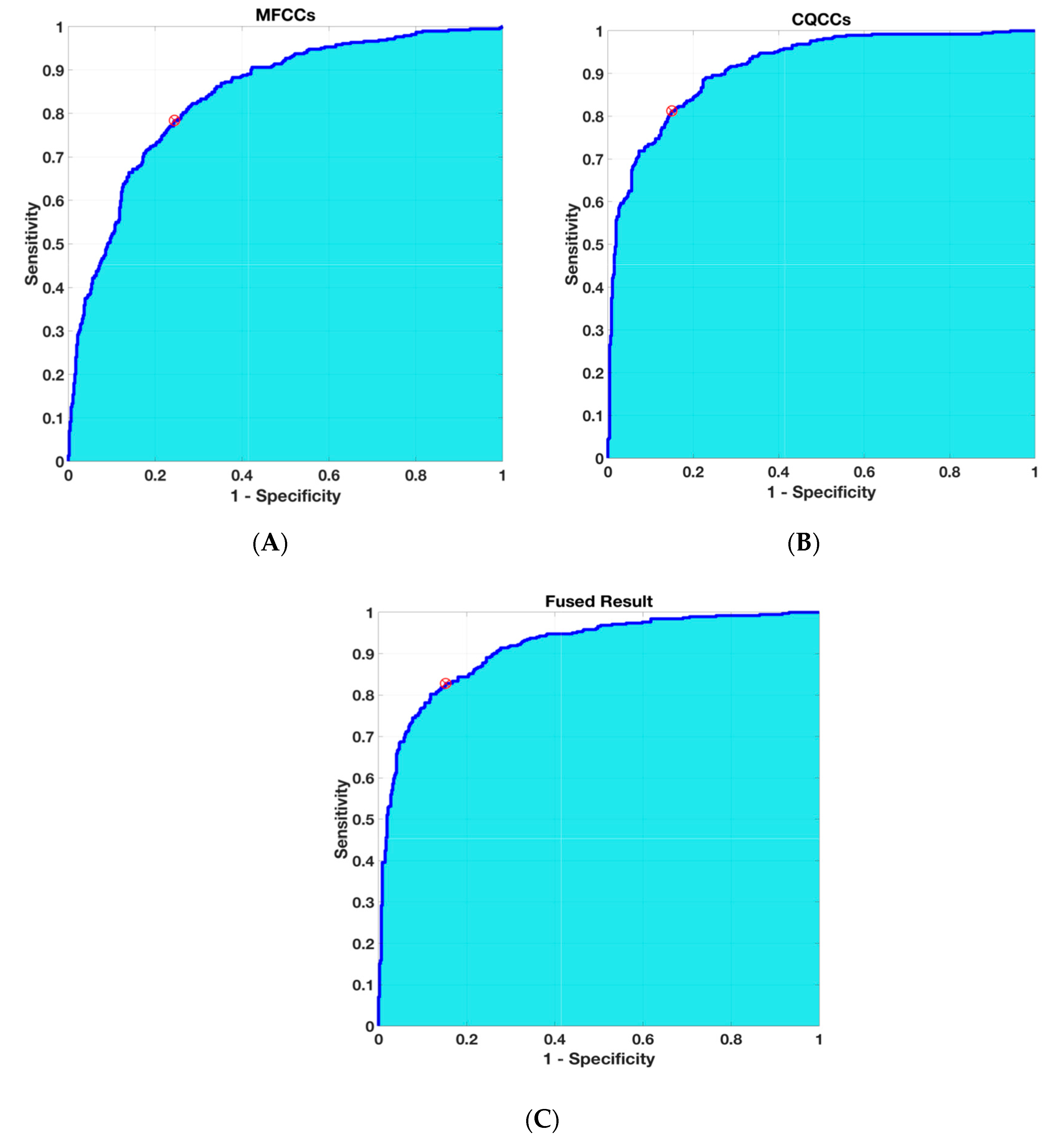
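A Tippett plot summarizes likelihood-ratio output. For every threshold on the log-likelihood ratio (LLR), it shows the proportion of trials whose LLR is at or above that threshold, with one curve for each true class. The sketch below computes those curves in plain Python. It is an illustration only, not the study's code, and it makes three assumptions: the classifier emits one natural-log LLR per cough, positive values favour the asthmatic class, and the helper name `tippett_curve` and all score values are hypothetical.

```python
def tippett_curve(llrs, thresholds):
    """Return, for each threshold, the fraction of LLRs at or above it."""
    n = len(llrs)
    return [sum(1 for x in llrs if x >= t) / n for t in thresholds]


# Hypothetical LLRs (natural log; positive values favour "asthmatic cough").
asthmatic_llrs = [2.3, 1.1, 0.4, -0.6, 3.0, 1.8]
normal_llrs = [-1.9, -0.8, 0.2, -2.5, -1.2, -0.1]

# Thresholds from -3.0 to 3.0 in steps of 0.5 (index 6 is 0.0).
thresholds = [k / 2 for k in range(-6, 7)]

asthmatic_curve = tippett_curve(asthmatic_llrs, thresholds)
normal_curve = tippett_curve(normal_llrs, thresholds)

print("LLR   P(asthmatic >= t)  P(normal >= t)")
for t, a, b in zip(thresholds, asthmatic_curve, normal_curve):
    print(f"{t:5.1f}  {a:17.2f}  {b:14.2f}")

# At t = 0 (a likelihood ratio of 1), the asthmatic curve gives the share of
# asthmatic coughs whose evidence points the correct way. One minus the normal
# curve gives the same share for normal coughs (those with LLR < 0).
```

To produce the plot, draw the two returned lists against `thresholds` as step curves. Conventions vary: some authors draw the other-class curve as the proportion below the threshold, so check which convention a given figure uses before comparing it with these curves. This section does not say whether the per-class Tippett accuracies reported later were read off at LLR = 0.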

Receiver Operating Characteristics (ROC)

2.2.6. Statistical Analysis

3. Results

4. Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Demographic Characteristics | Normal–Voluntary Cohort (N = 89) | Asthmatic Cohort (N = 89) | p-Value |
|---|---|---|---|
| Age (months), mean (SD) | 108.86 (34.59) | 102.09 (36.22) | 0.734 * |
| Sex (Male:Female) | 80:9 | 60:29 | <0.001 |
| Race (Malay:Chinese:Indian:Others) | 43:38:6:2 | 44:24:14:7 | 0.012 |
| Weight (kg), mean (SD) | 33.74 (15.39) | 32.39 (15.43) | 0.457 ^ |
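The sex-ratio comparison in the demographic table can be checked by hand. This section does not state which test was used, so the sketch below assumes a Pearson chi-square test of independence on the 2×2 table of male and female counts. With one degree of freedom, the upper-tail probability of the statistic can be written with the complementary error function, so no statistics package is needed. The function name `chi_square_2x2` is illustrative.

```python
import math


def chi_square_2x2(a, b, c, d, yates=False):
    """Pearson chi-square test of independence for the 2x2 table [[a, b], [c, d]].

    Returns (statistic, p_value); the statistic has 1 degree of freedom.
    If yates is True, the Yates continuity correction is applied.
    """
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    observed = ((a, b), (c, d))

    statistic = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            diff = abs(observed[i][j] - expected)
            if yates:
                diff = max(diff - 0.5, 0.0)
            statistic += diff ** 2 / expected

    # With 1 degree of freedom, chi2 = Z**2, so P(chi2 > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Rows: normal-voluntary cohort, asthmatic cohort; columns: male, female.
for use_yates in (False, True):
    stat, p = chi_square_2x2(80, 9, 60, 29, yates=use_yates)
    print(f"Yates={use_yates}: chi2 = {stat:.2f}, p = {p:.2e}")
```

For the counts 80:9 and 60:29, the statistic is about 13.4 and the p-value is well below 0.001, with or without the Yates correction. A p-value computed this way is never exactly zero, which is why such results are normally reported as p < 0.001.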

Asthmatic Cohort, N = 89

| Characteristic | Training Group (N = 65) | Testing Group (N = 24) | p-Value |
|---|---|---|---|
| Demographic Characteristics | | | |
| Age (months), mean (SD) | 104.75 (37.97) | 94.92 (30.57) | 0.091 * |
| Sex (Male:Female) | 41:24 | 19:5 | 0.205 |
| Race (Malay:Chinese:Indian:Others) | 33:17:11:4 | 11:7:3:3 | 0.738 |
| Weight (kg), mean (SD) | 32.29 (15.17) | 32.65 (16.47) | 0.963 ^ |
| Duration of cough at presentation (days), mean (SD) | 3.54 (3.96) | 4.86 (4.91) | 0.364 ^ |
| Past Medical History, N | | | 0.476 * |
| None | 13 | 3 | |
| Asthma | 43 | 19 | |
| Allergic rhinitis | 9 | 6 | |
| Recurrent wheeze | 7 | 1 | |
| Clinical Parameters at Presentation of Cough | | | |
| Shortness of breath (Yes:No) | 37:23 | 15:7 | 0.231 |
| Tmax (°C), mean (SD) | 37.26 (0.48) | 37.03 (0.50) | 0.016 ^ |
| Respiratory rate (/min), mean (SD) | 27.57 (5.59) | 28.92 (6.82) | 0.400 ^ |
| Heart rate (/min), mean (SD) | 117.38 (20.90) | 112.08 (17.55) | 0.273 * |
| Auscultation at Presentation of Cough, N | | | 0.189 |
| No added sounds | 16 | 4 | |
| Rhonchi/wheeze | 44 | 14 | |
| Crepitation | 2 | 1 | |
| Rhonchi/wheeze and crepitation | 3 | 5 | |
| Chest Radiography Performed, N | | | 0.600 |
| Not done | 52 | 18 | |
| Done, negative finding | 6 | 4 | |
| Done, positive finding | 7 | 2 | |

Normal–Voluntary Cohort, N = 89

| Characteristic | Training Group (N = 71) | Testing Group (N = 42) | p-Value |
|---|---|---|---|
| Demographic Characteristics | | | |
| Age (months), mean (SD) | 108.81 (32.60) | 107.61 (35.47) | 0.857 * |
| Sex (Male:Female) | 63:8 | 39:3 | 0.744 |
| Race (Malay:Chinese:Indian:Others) | 34:31:4:2 | 19:20:2:1 | 0.980 |
| Weight (kg), mean (SD) | 32.76 (13.89) | 34.97 (16.62) | 0.680 ^ |
| Past Medical History, N | | | 0.734 * |
| None | 52 | 32 | |
| Asthma | 6 | 2 | |
| Allergic rhinitis | 10 | 7 | |
| Recurrent wheeze | 0 | 0 | |
| Mild cold or recovered from URTI | 6 | 2 | |

| Audio Features | Tippett Accuracy: Normal–Voluntary Cough (%) | Tippett Accuracy: Asthma/Bronchial Reactivity Cough (%) | Tippett Accuracy: Overall Mean (%) | ROC: Sensitivity (%) | ROC: Specificity (%) | ROC: AROC (95% CI) |
|---|---|---|---|---|---|---|
| MFCCs | 78.91 | 74.03 | 76.24 | 78.39 | 75.54 | 0.84 (0.82–0.87) |
| CQCCs | 78.12 | 86.48 | 82.71 | 81.25 | 84.98 | 0.92 (0.90–0.93) |
| Fused Result | 82.55 | 84.76 | 83.76 | 82.81 | 84.76 | 0.91 (0.89–0.93) |
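The sketch below shows how the sensitivity, specificity and area under the ROC curve (AROC) columns relate to per-cough classifier scores. It is a minimal illustration, not the study's code, and it rests on several assumptions. Each test cough receives one score (for instance an LLR) in which larger values favour asthmatic cough, and asthmatic cough is treated as the positive class. The 95% confidence interval comes from a percentile bootstrap; the study may have used a different interval method. The function names, the decision threshold of 0 and the toy scores are all hypothetical.

```python
import random


def sensitivity_specificity(pos_scores, neg_scores, threshold):
    """Percent of positives scored >= threshold and of negatives scored below it."""
    tp = sum(1 for s in pos_scores if s >= threshold)
    tn = sum(1 for s in neg_scores if s < threshold)
    return 100.0 * tp / len(pos_scores), 100.0 * tn / len(neg_scores)


def area_under_roc(pos_scores, neg_scores):
    """AROC as the probability that a random positive outscores a random negative.

    This equals the Mann-Whitney U statistic divided by n_pos * n_neg;
    ties count as one half.
    """
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


def bootstrap_auc_ci(pos_scores, neg_scores, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for AROC, resampling each class separately."""
    rng = random.Random(seed)
    aucs = []
    for _ in range(n_boot):
        pos = [rng.choice(pos_scores) for _ in pos_scores]
        neg = [rng.choice(neg_scores) for _ in neg_scores]
        aucs.append(area_under_roc(pos, neg))
    aucs.sort()
    lower = aucs[int(alpha / 2 * n_boot)]
    upper = aucs[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


# Hypothetical scores: positives are asthmatic coughs, negatives normal-voluntary coughs.
asthmatic = [2.1, 1.4, 0.3, -0.2]
normal = [-1.5, -0.7, 0.5, -2.0]

sens, spec = sensitivity_specificity(asthmatic, normal, threshold=0.0)
auc = area_under_roc(asthmatic, normal)
low, high = bootstrap_auc_ci(asthmatic, normal)
print(f"Sensitivity {sens:.2f}%, specificity {spec:.2f}%, AROC {auc:.2f} ({low:.2f}-{high:.2f})")
```

Sensitivity and specificity depend on the chosen decision threshold, whereas AROC summarizes performance over all thresholds.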

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hee, H.I.; Balamurali, B.; Karunakaran, A.; Herremans, D.; Teoh, O.H.; Lee, K.P.; Teng, S.S.; Lui, S.; Chen, J.M. Development of Machine Learning for Asthmatic and Healthy Voluntary Cough Sounds: A Proof of Concept Study. Appl. Sci. 2019, 9, 2833. https://doi.org/10.3390/app9142833
