TOSCA-Based and Federation-Aware Cloud Orchestration for Kubernetes Container Platform

Abstract

1. Introduction

2. Containerized Service Orchestration Technology

2.1. Docker and Kubernetes

- Kubernetes pod: this is the basic building block of Kubernetes, containing one or more Docker containers.

- Kubernetes node: this represents a VM (Virtual Machine) or physical machine where the Kubernetes pods are run.

- Kubernetes cluster: this consists of a set of worker nodes that cooperate to run applications as a single unit. Its master node coordinates all activities within the cluster.

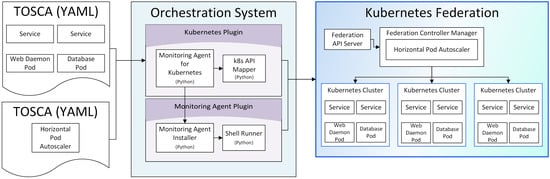

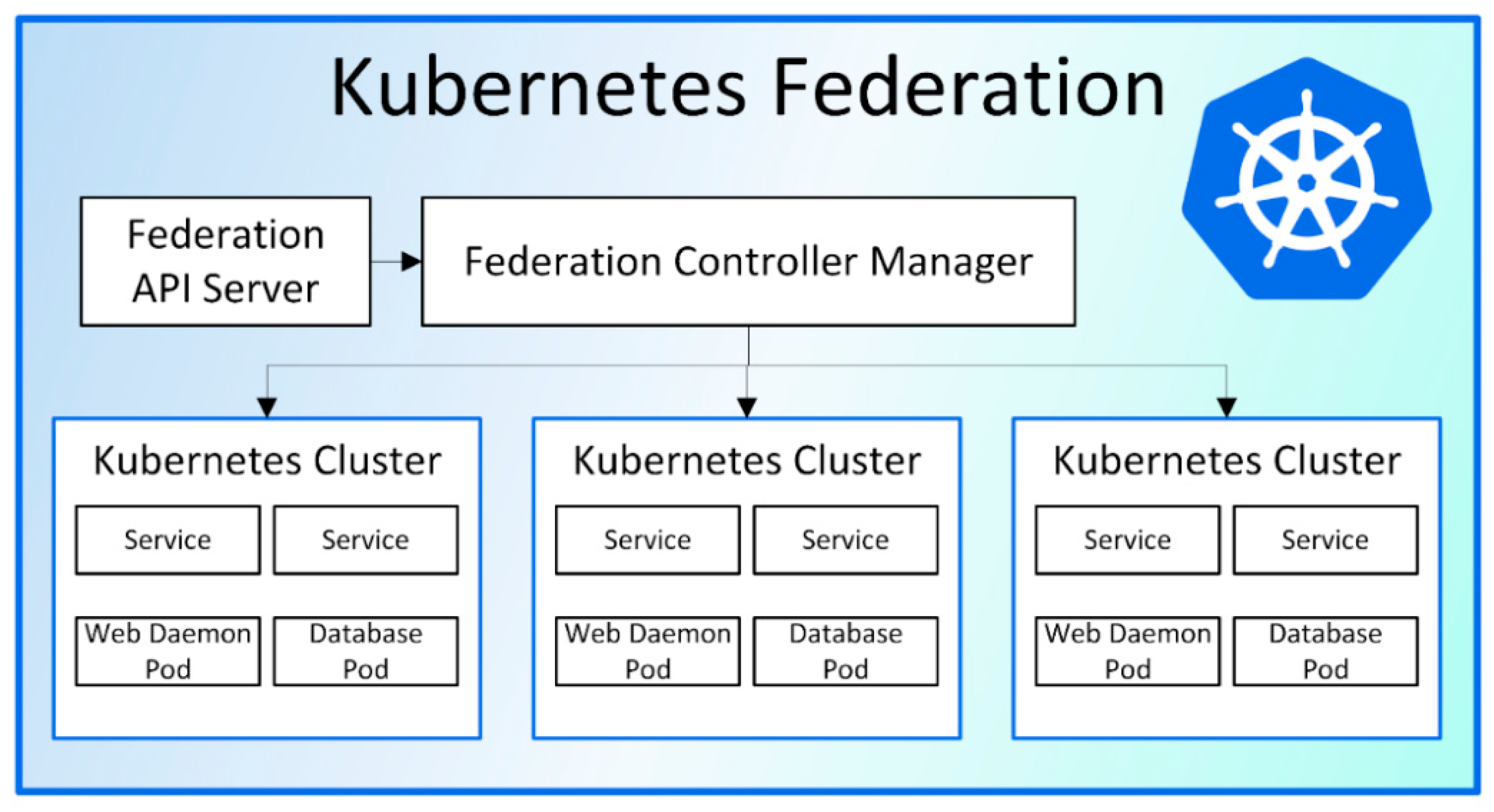

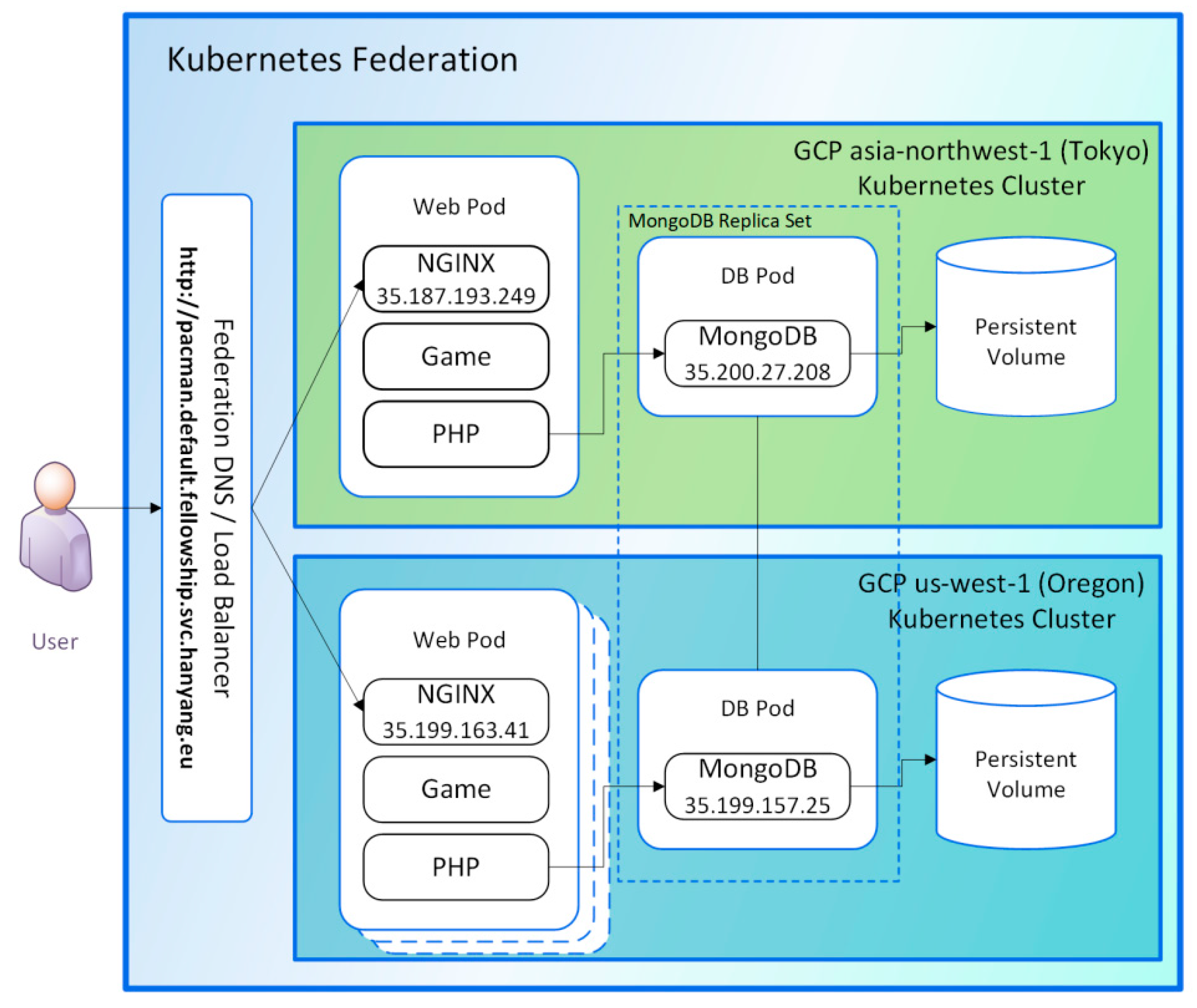

- Kubernetes Federation: this is a cluster of clusters, i.e., a backbone cluster that combines multiple Kubernetes clusters. For example, when one Kubernetes cluster is running on Google Cloud Platform in Tokyo, Japan, and another is running in Oregon, U.S., a Kubernetes federation can be configured so that if the Oregon platform fails, the Tokyo cluster takes over the share of the faulty platform, thereby increasing the resiliency of the service. Figure 1 shows an example of the Kubernetes Federation architecture. Kubernetes provides a flexible, loosely coupled mechanism for service delivery. The master is responsible for exposing the API, scheduling the deployments, and overall cluster management. The federation application program interface (API) server interacts with the member clusters through the federation controller manager.
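The Tokyo/Oregon takeover scenario can be illustrated with a small toy model. This is only a sketch of the observable behavior, not of how the federation controller manager is implemented; the `Cluster` class, the `distribute_replicas` function, and the even-split policy are assumptions made for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Cluster:
    """A member cluster of a hypothetical federation (illustrative only)."""
    name: str
    healthy: bool = True


def distribute_replicas(total: int, clusters: list[Cluster]) -> dict[str, int]:
    """Spread `total` replicas as evenly as possible over the healthy clusters."""
    healthy = [c for c in clusters if c.healthy]
    if not healthy:
        raise RuntimeError("no healthy cluster left in the federation")
    base, extra = divmod(total, len(healthy))
    # The first `extra` healthy clusters each take one additional replica.
    return {c.name: base + (1 if i < extra else 0) for i, c in enumerate(healthy)}


tokyo = Cluster("tokyo")
oregon = Cluster("oregon")

before = distribute_replicas(6, [tokyo, oregon])
oregon.healthy = False  # simulate a failure of the Oregon platform
after = distribute_replicas(6, [tokyo, oregon])

print(before)  # {'tokyo': 3, 'oregon': 3}
print(after)   # {'tokyo': 6}
```

After the simulated failure, the Tokyo cluster carries the full replica count, which is the resiliency property the federation is meant to provide.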

2.2. Orchestration Tools Supporting Topology and Orchestration Specification for Cloud Applications (TOSCA)

- The node template defines the components of the cloud-based applications. The node type is specified to express the characteristics and the available functions of the service component.

- The relationship template defines the relations between the components. The relationship type is specified to express the relationship characteristics between the components.
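The split between node templates and relationship templates can be sketched as a small in-memory model. The dictionary layout below is a simplification and not the TOSCA grammar itself, and the type names (`example.nodes.*`, `example.relationships.HostedOn`) are hypothetical. The sketch checks that every relationship points at defined nodes and derives a deployment order from the relationships.

```python
from __future__ import annotations

from graphlib import TopologicalSorter

# Simplified, TOSCA-like topology. All type names are hypothetical.
topology = {
    "node_templates": {
        "web_pod": {"type": "example.nodes.KubernetesPod"},
        "k8s_cluster": {"type": "example.nodes.KubernetesCluster"},
    },
    "relationship_templates": [
        {
            "type": "example.relationships.HostedOn",
            "source": "web_pod",
            "target": "k8s_cluster",
        },
    ],
}


def dangling_relationships(template: dict) -> list[dict]:
    """Return relationships whose source or target is not a defined node."""
    nodes = template["node_templates"]
    return [
        rel
        for rel in template["relationship_templates"]
        if rel["source"] not in nodes or rel["target"] not in nodes
    ]


def deployment_order(template: dict) -> list[str]:
    """Order nodes so that each target is deployed before its sources."""
    graph: dict[str, set[str]] = {name: set() for name in template["node_templates"]}
    for rel in template["relationship_templates"]:
        # The source depends on the target, so the target is its predecessor.
        graph[rel["source"]].add(rel["target"])
    return list(TopologicalSorter(graph).static_order())


print(dangling_relationships(topology))  # []
print(deployment_order(topology))        # ['k8s_cluster', 'web_pod']
```

Keeping relationships separate from nodes is what lets an orchestrator reason about ordering and dependencies without inspecting the components themselves.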

3. Federation Frameworks of Containerized Services

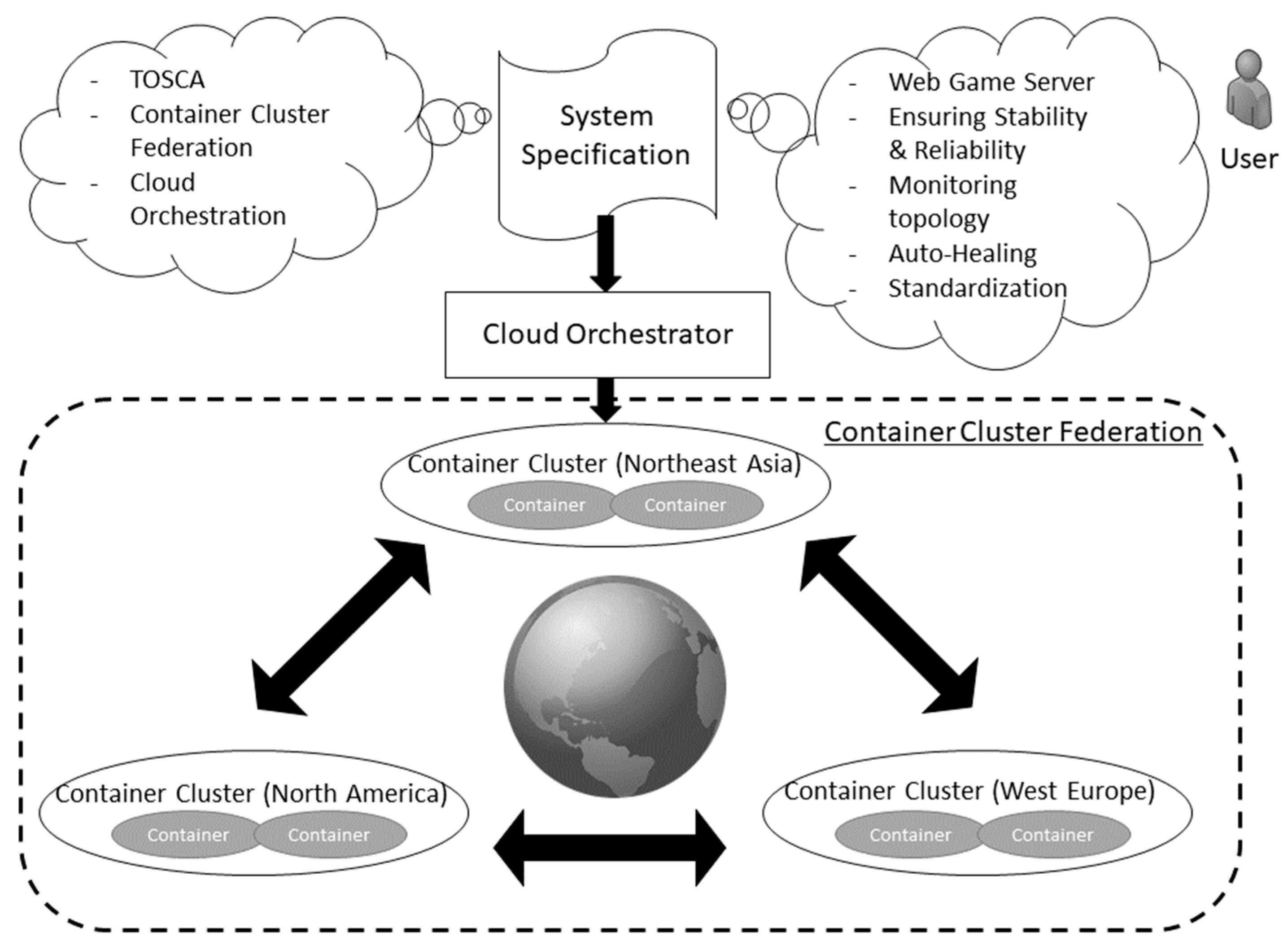

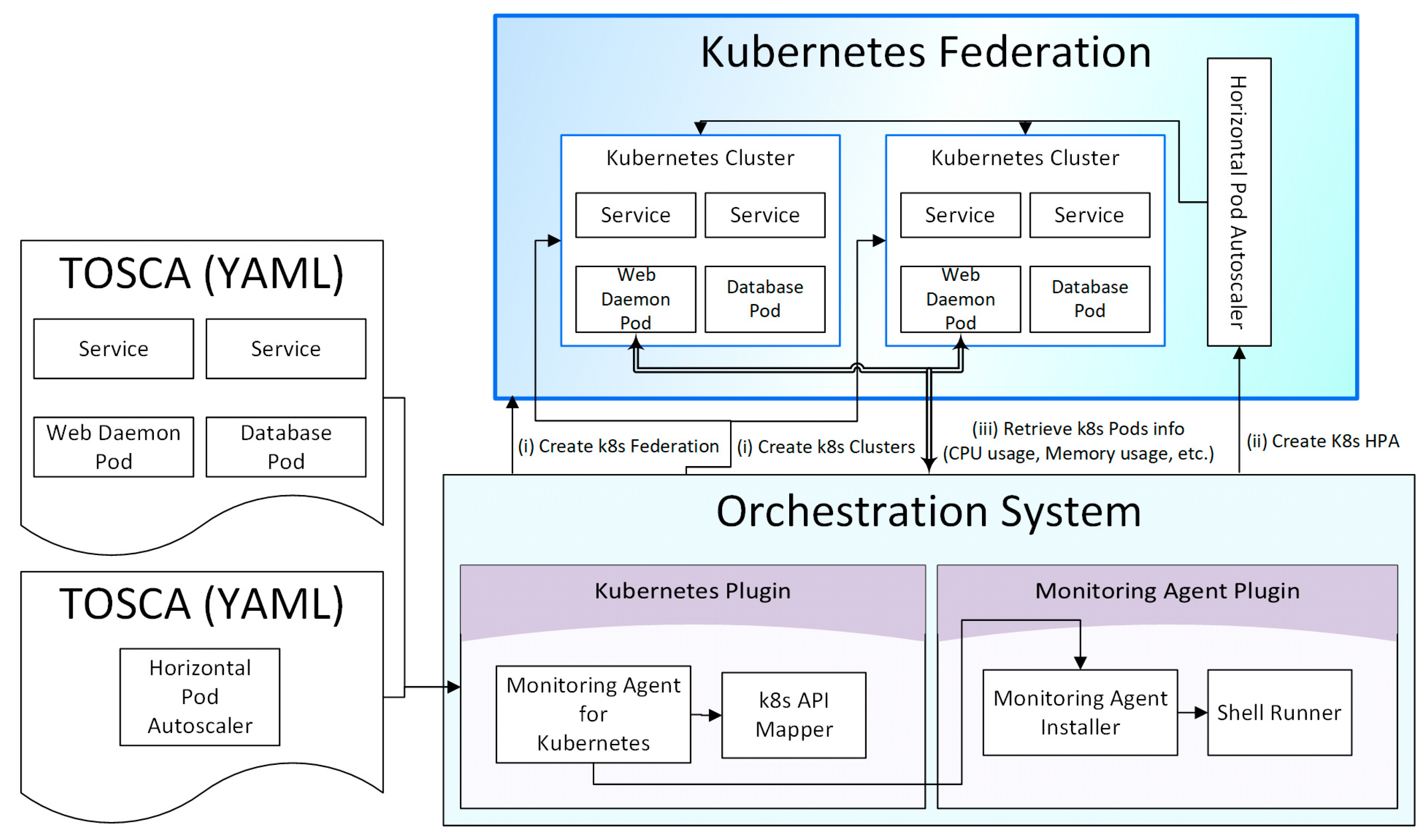

3.1. Overall Architecture

- Automation of the distribution and federation of container clusters by defining the Kubernetes cluster federation in the TOSCA description of the application, receiving the information of the Kubernetes clusters, and executing “join Kubernetes federation”.

- Automation of the service status management by defining Kubernetes horizontal pod auto-scaler (HPA) information in the TOSCA description of the cloud application and enabling its operation in the cloud orchestration tool.

- Enabling the identification and monitoring of the entire service topology of the application by allowing the cloud orchestration tool to access the information of the Kubernetes components.

- (i) Defining a Kubernetes federation according to the TOSCA standard makes it easy to communicate Kubernetes cluster information and join the clusters to the federation. Input TOSCA descriptions contain new federation and cluster components, backed by the corresponding federation and cluster types that we introduced in the Kubernetes plugin. To associate a cluster with its intended federation, the Kubernetes plugin module first connects to the federation and then executes the “kubefed join” command to make the cluster join the federation.

- (ii) The system also supports Kubernetes HPA, which auto-scales the number of pods across the entire Kubernetes federation. The HPA component type is defined to be associated with the K8s API Mapper so that AutoscalingV1Api requests can be sent to the Kubernetes Federation. V1HorizontalPodAutoscaler and V1DeleteOptions input payloads are transported for HPA creation and deletion, respectively.

- (iii) Furthermore, the monitoring agent allows the system to monitor the status of the Kubernetes components. A monitoring agent installed on each pod allows direct monitoring access to individual pods. A Shell Runner module is introduced into our system architecture to support the installation of the Diamond monitoring program on the pod via kubectl shell command execution.
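To make the HPA item more concrete, the sketch below builds an `autoscaling/v1` HorizontalPodAutoscaler manifest as a plain dictionary, mirroring the fields that a V1HorizontalPodAutoscaler payload carries, and applies the replica formula documented for Kubernetes HPA: the ceiling of current replicas times the ratio of current to target metric, clamped to the configured bounds. No cluster is contacted. The helper names, the example values, and the `apps/v1` Deployment target are assumptions, and the tolerance band that the real controller applies is omitted.

```python
import math


def hpa_manifest(name, target_deployment, min_replicas, max_replicas, target_cpu_percent):
    """Build an autoscaling/v1 HorizontalPodAutoscaler manifest as a plain dict."""
    return {
        "apiVersion": "autoscaling/v1",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": name},
        "spec": {
            # Assumption: the scaled workload is an apps/v1 Deployment.
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": target_deployment,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "targetCPUUtilizationPercentage": target_cpu_percent,
        },
    }


def desired_replicas(current_replicas, current_cpu_percent, spec):
    """ceil(current * currentMetric / targetMetric), clamped to [min, max]."""
    raw = math.ceil(
        current_replicas * current_cpu_percent / spec["targetCPUUtilizationPercentage"]
    )
    return max(spec["minReplicas"], min(spec["maxReplicas"], raw))


hpa = hpa_manifest("web-hpa", "web", min_replicas=2, max_replicas=10, target_cpu_percent=50)

print(desired_replicas(4, 90, hpa["spec"]))   # 8  (4 * 90 / 50 = 7.2, rounded up)
print(desired_replicas(4, 200, hpa["spec"]))  # 10 (16 capped at maxReplicas)
print(desired_replicas(4, 10, hpa["spec"]))   # 2  (1 raised to minReplicas)
```

Declaring these same fields in the TOSCA description is what allows the orchestration tool to create and delete the auto-scaler without manual intervention.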

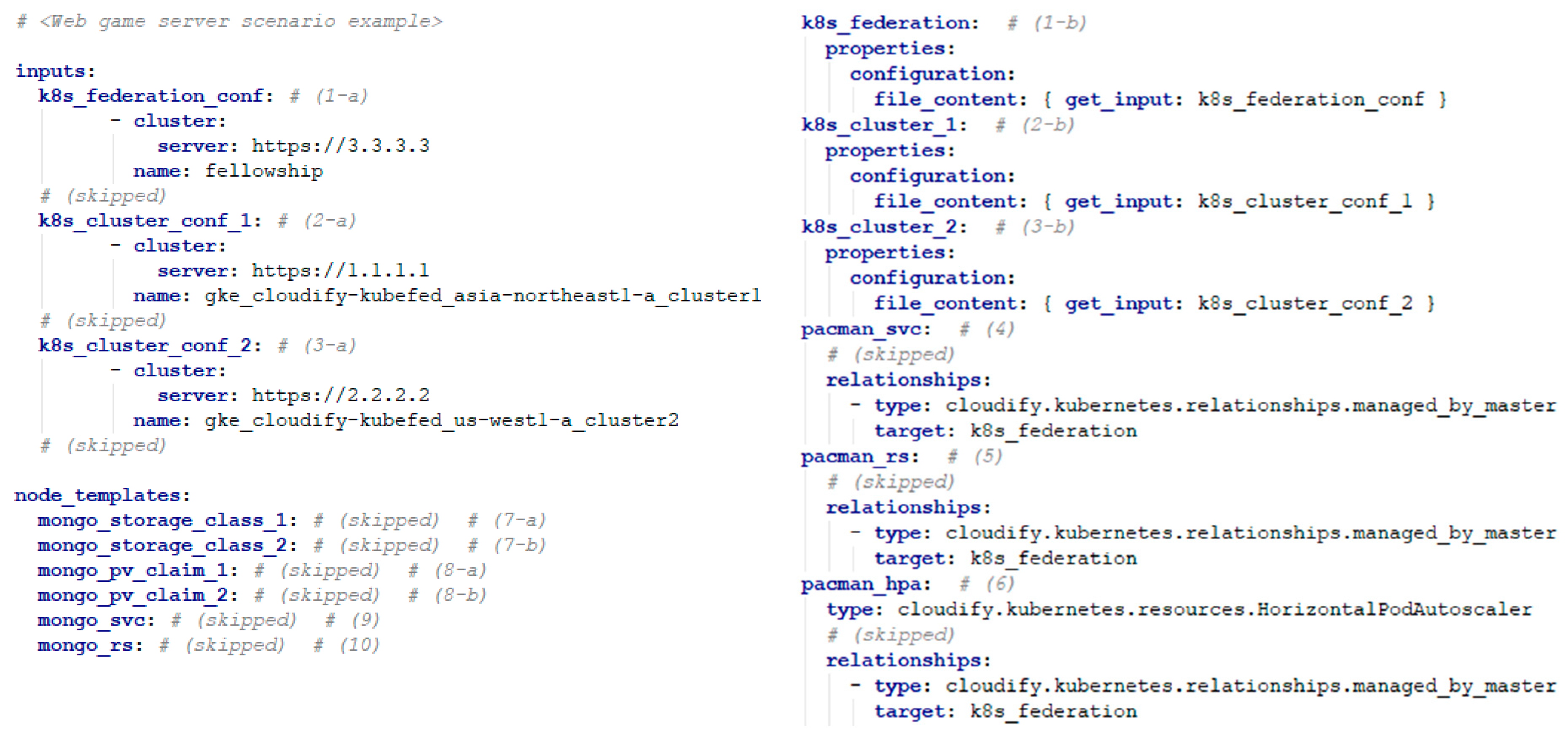

3.2. Implementation of Kubernetes Federation Cluster Configuration

- The Kubernetes cluster template is implemented as a component containing the Kubernetes cluster information that exists in the cloud provider’s service when creating the topology of applications.

- The Kubernetes Federation template is implemented as a component using the information of the Kubernetes cluster with the Kubernetes Federation control plane installed.

- The Kubernetes cluster template and the Kubernetes Federation template are defined to permit a master/slave configuration (“managed_by_master”).
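The way the two templates could drive the join step can be sketched as follows. The dictionaries are hypothetical stand-ins for the cluster and federation templates, and treating `managed_by_master` as a simple key is a simplification, since the exact form it takes in the plugin is not specified here. The sketch assumes the join is performed with the Federation v1 `kubefed join` command and only builds the command lines; nothing is executed.

```python
from __future__ import annotations

import shlex

# Hypothetical, simplified stand-ins for the federation and cluster templates.
federation = {"name": "fed", "host_cluster_context": "tokyo-ctx"}
clusters = [
    {"name": "tokyo", "context": "tokyo-ctx", "managed_by_master": "fed"},
    {"name": "oregon", "context": "oregon-ctx", "managed_by_master": "fed"},
    {"name": "standalone", "context": "lab-ctx", "managed_by_master": None},
]


def join_commands(federation: dict, clusters: list[dict]) -> list[list[str]]:
    """Build one `kubefed join` argument list per cluster managed by the federation."""
    commands = []
    for cluster in clusters:
        if cluster.get("managed_by_master") != federation["name"]:
            continue  # not associated with this federation
        commands.append([
            "kubefed", "join", cluster["name"],
            f"--context={federation['name']}",
            f"--host-cluster-context={federation['host_cluster_context']}",
            f"--cluster-context={cluster['context']}",
        ])
    return commands


cmds = join_commands(federation, clusters)
for cmd in cmds:
    print(shlex.join(cmd))
```

Building argument lists rather than shell strings avoids quoting problems if a runner later passes them to `subprocess.run`.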

3.3. Definition of Horizontal Pod Autoscaler (HPA) Components

3.4. Monitoring the Information of Kubernetes Components

4. Evaluation

4.1. Environment Setup for Development and Performance Verification

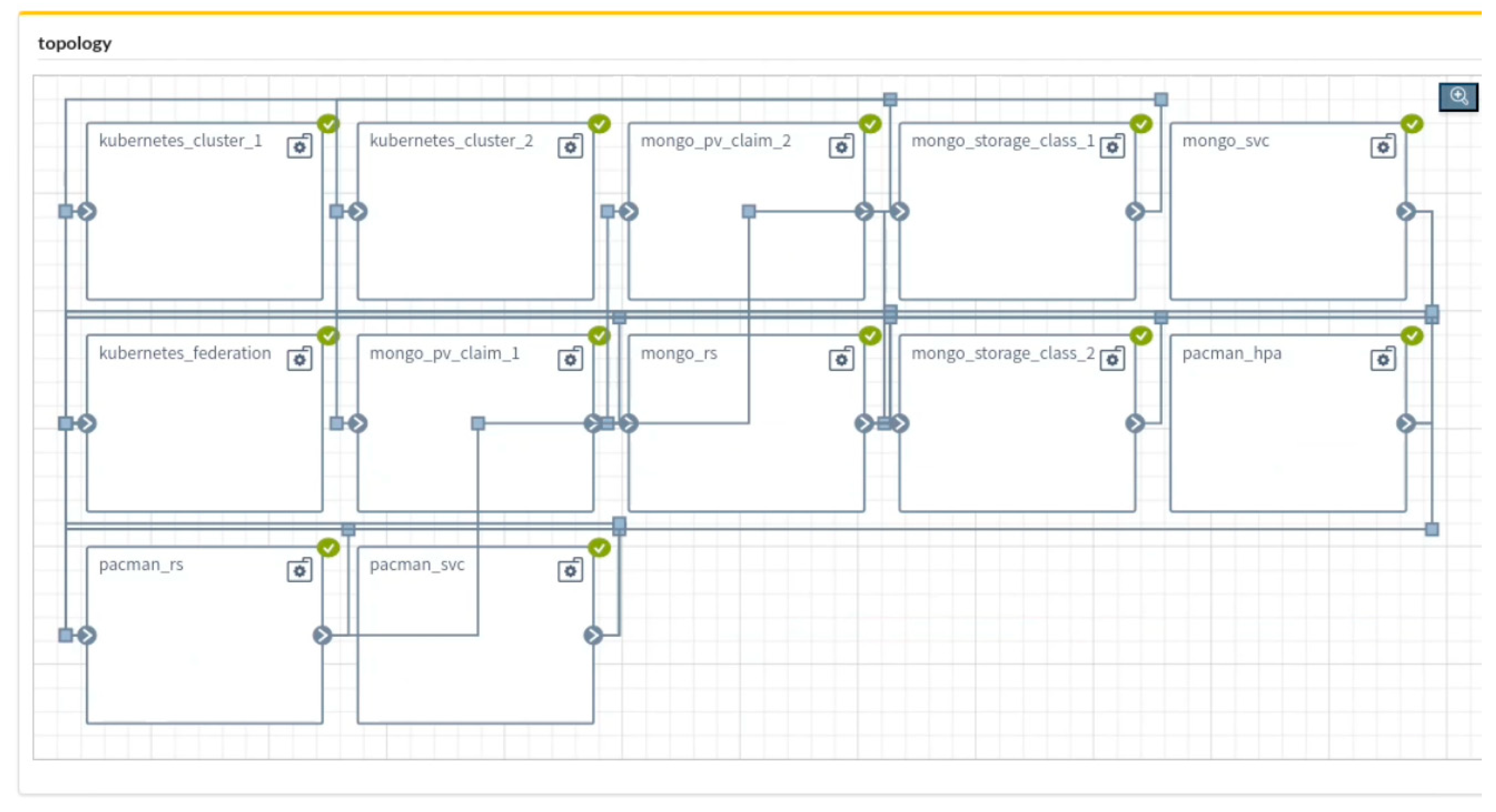

4.2. Kubernetes Federation by TOSCA

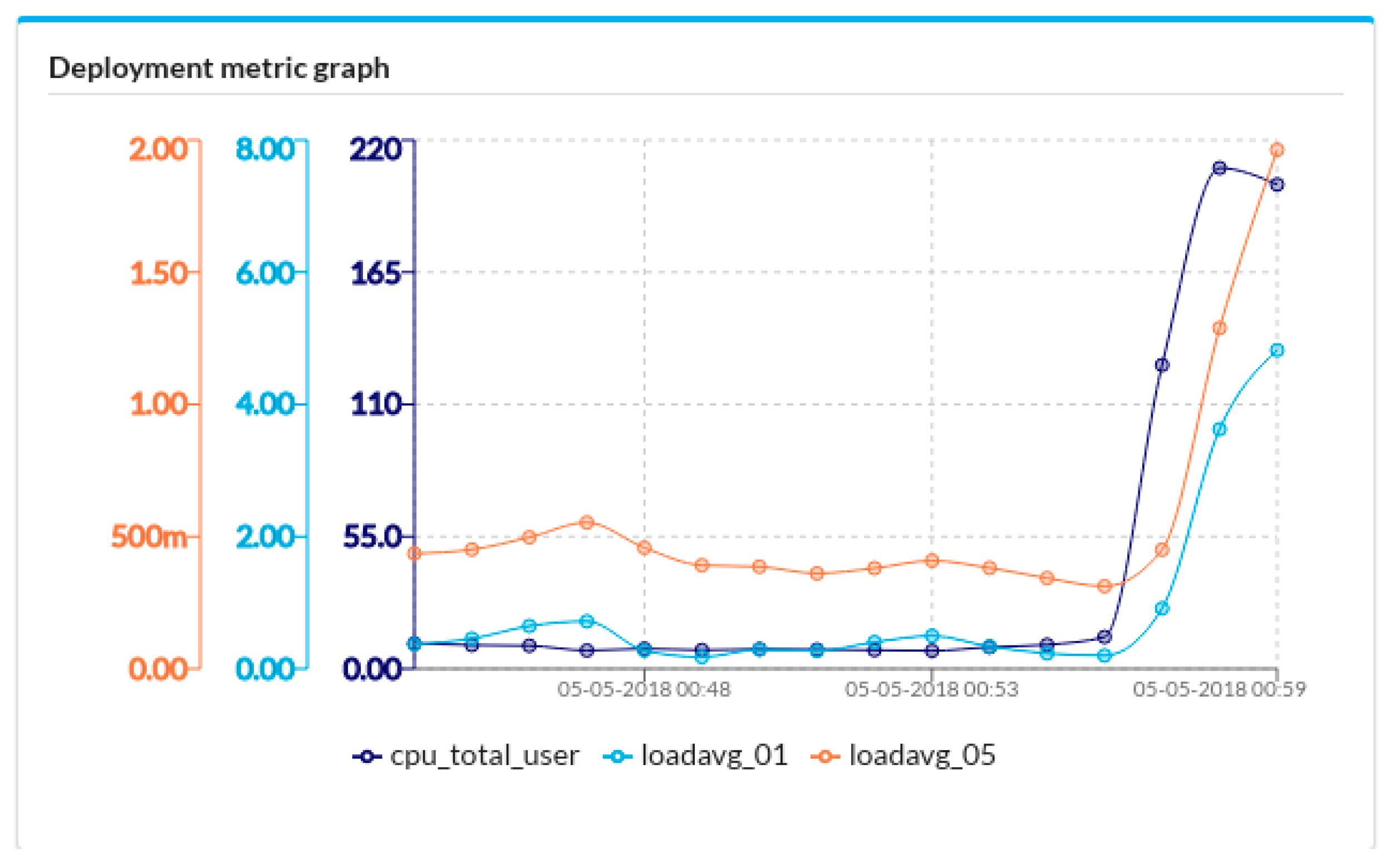

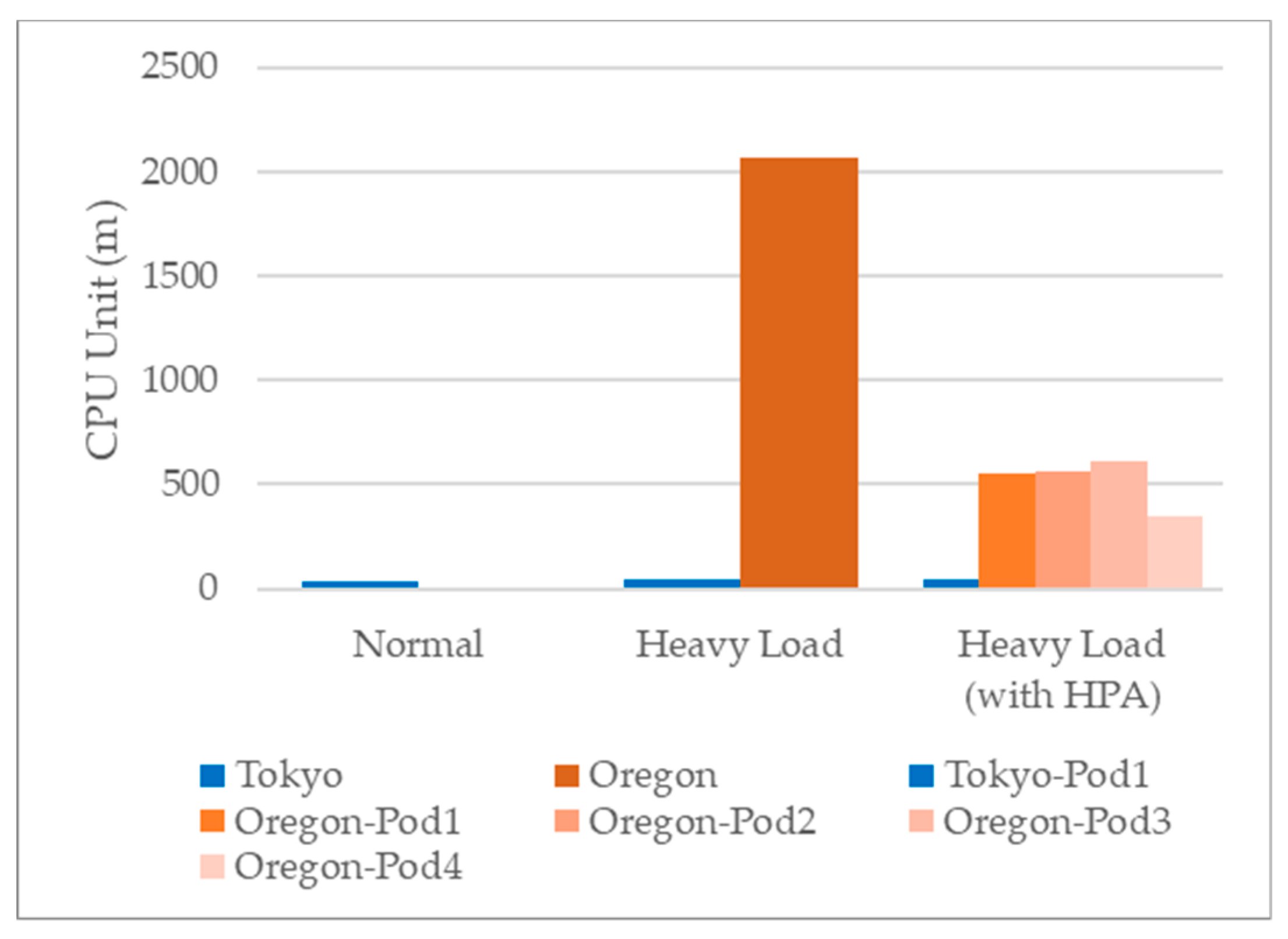

4.3. Federated Auto-Scaling by TOSCA

5. Related Research

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, D.; Muhammad, H.; Kim, E.; Helal, S.; Lee, C. TOSCA-Based and Federation-Aware Cloud Orchestration for Kubernetes Container Platform. Appl. Sci. 2019, 9, 191. https://doi.org/10.3390/app9010191