Multiple Network Fusion with Low-Rank Representation for Image-Based Age Estimation

Abstract

Featured Application


1. Introduction

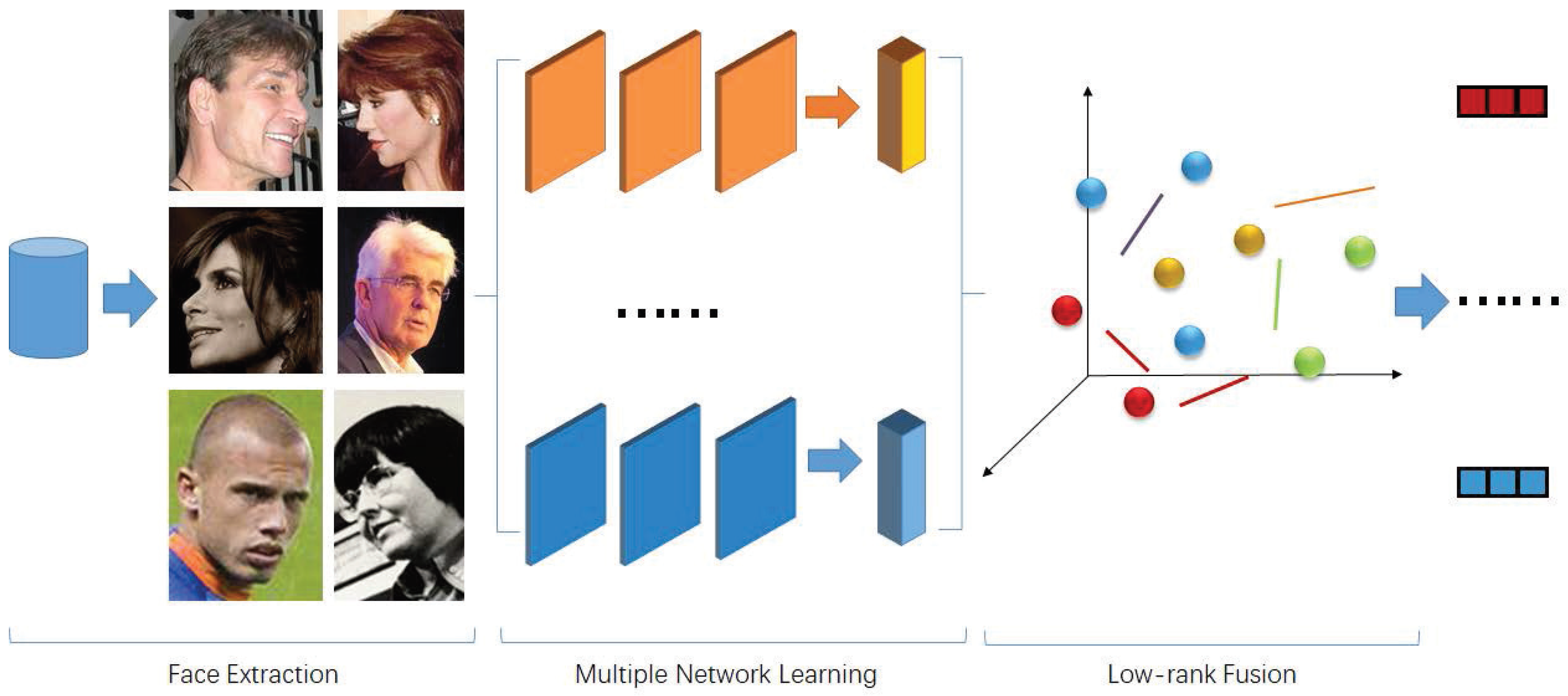

- The first and key contribution is a novel framework that estimates age from a single image by fusing multiple deep neural networks. The framework is flexible because the hidden representation of each network is computed independently. As a result, different types of neural networks, different network structures, and different features can be used within it.

- The second contribution is multiple-network fusion with low-rank learning. Low-rank representation is naturally sparse. In addition, the different networks extract different types of features, and their distributions can be observed clearly. To improve on traditional low-rank learning, we introduce a hypergraph manifold, so that samples are represented in a unified low-rank space in which the fusion is performed.

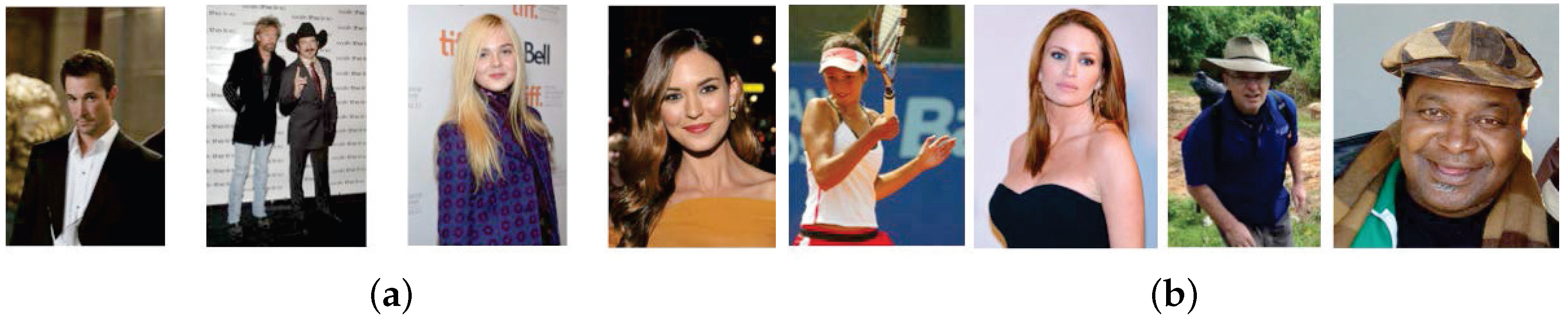

- The third contribution is that the performance of the proposed method is verified on datasets from the Internet Movie Database (IMDB) and Wikipedia (WIKI). These datasets are challenging because the images were collected in natural scenarios and not all of the faces are frontal. The performance on these datasets indicates that the proposed MNF-LRR is suitable for practical and complicated applications.
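Low-rank learning, as used in the second contribution, is commonly made tractable by replacing the rank with the nuclear norm $\lVert Z\rVert_*$ (the sum of singular values). As an illustrative building block only, and not the authors' hypergraph-regularized formulation, the sketch below implements singular value thresholding, the proximal operator of $\tau\lVert Z\rVert_*$ that many low-rank solvers apply at each iteration. It shrinks every singular value by $\tau$ and discards those that reach zero. The function name, the threshold `tau`, and the synthetic data are assumptions.

```python
import numpy as np


def singular_value_threshold(Z: np.ndarray, tau: float) -> np.ndarray:
    """Proximal operator of tau * ||Z||_* (nuclear norm).

    Every singular value is reduced by tau and clipped at zero, so small
    (noise-level) directions vanish and the result has lower rank.
    """
    U, s, Vt = np.linalg.svd(Z, full_matrices=False)
    s_shrunk = np.maximum(s - tau, 0.0)
    return (U * s_shrunk) @ Vt  # scale column j of U by s_shrunk[j]


# Hypothetical example: a rank-2 matrix corrupted by small noise.
rng = np.random.default_rng(0)
clean = rng.standard_normal((50, 2)) @ rng.standard_normal((2, 40))  # rank 2
noisy = clean + 0.01 * rng.standard_normal((50, 40))                 # full rank
Z = singular_value_threshold(noisy, tau=1.0)
print(np.linalg.svd(Z, compute_uv=False)[:4])  # only the first two stay clearly non-zero
```

The threshold trades reconstruction fidelity against rank: a larger `tau` removes more directions.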

2. Multiple Network Learning with Low-Rank Representation

2.1. Overview of the Proposed Method

2.2. Definitions

2.3. Multiple Network Learning

- Autoencoders (AE). Autoencoders learn hidden representations in an unsupervised manner. Denoising autoencoders (DAEs) are commonly used to solve Equation (2). In a DAE, each input $x_i$ is corrupted by randomly removing (zeroing) some of its features, which converts $x_i$ into $\tilde{x}_i$, and $W$ denotes the transform matrix that reconstructs $x_i$ from $\tilde{x}_i$. The squared reconstruction loss over $n$ training samples can then be defined as

  $$\frac{1}{2n}\sum_{i=1}^{n}\left\lVert x_i - W\tilde{x}_i\right\rVert^2 \tag{3}$$

  The solution to Equation (3) depends on which features of each input happen to be corrupted. To lower this variance, Marginalized Denoising Autoencoders (MDA) [27] pass over the training set $m$ times, each time with a different random corruption. The overall squared loss then becomes

  $$\frac{1}{2mn}\sum_{j=1}^{m}\sum_{i=1}^{n}\left\lVert x_i - W\tilde{x}_{i,j}\right\rVert^2 \tag{4}$$

  where $\tilde{x}_{i,j}$ represents the $j$th corrupted version of $x_i$ and $m$ is the number of epochs. To write the features in matrix form, $X = [x_1, \dots, x_n]$ is denoted as the data matrix, its $m$-times repeated version is denoted by $\bar{X} = [X, \dots, X]$, and the corrupted version of $\bar{X}$ is denoted by $\tilde{X}$. Equation (4) then reduces to

  $$\mathcal{L}(W) = \frac{1}{2mn}\operatorname{tr}\left[\left(\bar{X} - W\tilde{X}\right)^{\top}\left(\bar{X} - W\tilde{X}\right)\right] \tag{5}$$

  Equation (5) is a convex problem, so its global optimum can be computed by setting its partial derivative with respect to $W$ to zero:

  $$\frac{\partial \mathcal{L}}{\partial W} = \frac{1}{mn}\left(W\tilde{X}\tilde{X}^{\top} - \bar{X}\tilde{X}^{\top}\right) = 0$$

  Setting $Q = \tilde{X}\tilde{X}^{\top}$ and $P = \bar{X}\tilde{X}^{\top}$, the closed form of the optimal $W$ is

  $$W = PQ^{-1}$$

- Convolutional Neural Networks (CNNs). CNNs are constructed by alternately stacking convolutional layers and spatial pooling layers. Convolutional layers are key to CNNs, since they generate feature maps with linear convolutional filters. The feature maps are then passed through nonlinear functions called activation functions, such as the rectifier, sigmoid, and tanh. Taking Rectified Linear Units (ReLUs) as an example, the feature maps can be computed by

  $$f_{i,j,k} = \max\left(w_k^{\top}x_{i,j},\, 0\right)$$

  where $(i, j)$ is the pixel index in the feature map, $x_{i,j}$ is the input patch centered at location $(i, j)$, and $k$ indexes the channels of the feature map. In a computational network, the activation function of a neuron defines the output of that neuron given an input or set of inputs. In a deep neural network, the input is projected step by step to higher-level hidden representations through a sequence of non-linear mappings:

  $$\hat{y} = F(x) = f_l\left(\cdots f_2\left(f_1(x)\right)\right)$$

  where $l$ is the number of layers, $f_t$ is the mapping of the $t$th layer, and $F$ is the mapping function from the input $x$ to the estimated output $\hat{y}$. The weight matrix $W$, which contains the mapping parameters, is optimized with back-propagation. In each iteration, $W$ is updated by $\Delta W$, defined by

  $$\Delta W = -\eta\,\frac{\partial E}{\partial W}$$

  where $\eta$ is the learning rate and the error $E$ is defined as

  $$E = \frac{1}{2}\left\lVert y - \hat{y}\right\rVert^2$$

  In this way, the model is trained to minimize the difference between the ground truth $y$ and the estimated output $\hat{y}$. The back-propagation update can be modeled by

  $$W \leftarrow W + \Delta W = W - \eta\,\frac{\partial E}{\partial W}$$

- Recursive Neural Networks (RNNs). RNNs apply the same set of weights recursively over a structured input. By traversing the given structure in topological order, they produce a structured output or a scalar prediction. Unlike in CNNs, child nodes in RNNs are combined into their parents with a weight matrix that is shared across the whole network, followed by a non-linearity such as the activation functions mentioned above. Taking tanh as an example, if $c_1$ and $c_2$ are $n$-dimensional features of two child nodes, their parent is also an $n$-dimensional feature, computed by

  $$p_{1,2} = \tanh\left(W\left[c_1; c_2\right]\right)$$

  where $W$ is a learned $n \times 2n$ weight matrix, usually trained with stochastic gradient descent (SGD). The gradients are calculated using back-propagation through structure (BPTS), a variant of the back-propagation through time (BPTT) used for recurrent neural networks.
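The closed-form solution of the MDA objective, obtained by setting the derivative of Equation (5) to zero, is $W = \bar{X}\tilde{X}^{\top}(\tilde{X}\tilde{X}^{\top})^{-1}$. The sketch below computes it with NumPy for $m$ explicitly corrupted copies of the data. The function name, the zero-masking corruption with probability `p`, and the small ridge term that keeps $\tilde{X}\tilde{X}^{\top}$ invertible are assumptions, not details taken from the paper.

```python
import numpy as np


def mda_closed_form(X: np.ndarray, p: float, m: int,
                    ridge: float = 1e-5, seed: int = 0) -> np.ndarray:
    """Return W = P Q^{-1} minimising Equation (5).

    X     : d x n data matrix, one column per sample
    p     : probability of zeroing each feature (corruption level)
    m     : number of corrupted copies ("epochs")
    ridge : small diagonal term added to Q (assumption, for invertibility)
    """
    rng = np.random.default_rng(seed)
    X_bar = np.tile(X, (1, m))                  # [X, ..., X], d x (m*n)
    keep = rng.random(X_bar.shape) >= p         # keep each entry with prob. 1 - p
    X_tilde = X_bar * keep                      # corrupted copies
    Q = X_tilde @ X_tilde.T + ridge * np.eye(X.shape[0])
    P = X_bar @ X_tilde.T
    # W Q = P  <=>  Q W^T = P^T, because Q is symmetric
    return np.linalg.solve(Q, P.T).T


# Hypothetical usage with the 0.3 corruption level listed in the network table.
rng = np.random.default_rng(1)
X = rng.standard_normal((20, 500))
W = mda_closed_form(X, p=0.3, m=5)
hidden = np.tanh(W @ X)  # mSDA-style nonlinearity; an assumption here
print(W.shape, hidden.shape)
```

In marginalized, stacked autoencoders, each layer's output is typically passed through a nonlinearity such as tanh before being fed to the next layer. How the five layers were stacked in this work is not specified in this section.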
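The ReLU feature map $f_{i,j,k} = \max(w_k^{\top}x_{i,j}, 0)$ and the update $\Delta W = -\eta\,\partial E/\partial W$ described for CNNs can be illustrated with NumPy. This is a minimal sketch under simplifying assumptions: a single-channel image, no bias, padding, stride or pooling, and, for the update step, a single linear layer $\hat{y} = Wx$ with squared error $E = \frac{1}{2}\lVert y - \hat{y}\rVert^2$ standing in for a full network. The function names are hypothetical.

```python
import numpy as np


def relu_feature_maps(image: np.ndarray, filters: np.ndarray) -> np.ndarray:
    """Compute f[i, j, k] = max(w_k^T x_{i,j}, 0) for a single-channel image.

    image   : (height, width) array
    filters : (K, h, w) array holding the linear filters w_k
    returns : (height - h + 1, width - w + 1, K) array of ReLU feature maps
    """
    num_filters, h, w = filters.shape
    height, width = image.shape
    out = np.zeros((height - h + 1, width - w + 1, num_filters))
    for i in range(height - h + 1):
        for j in range(width - w + 1):
            patch = image[i:i + h, j:j + w]  # x_{i,j}, anchored at (i, j)
            responses = np.tensordot(filters, patch, axes=([1, 2], [0, 1]))  # w_k^T x_{i,j}
            out[i, j] = np.maximum(responses, 0.0)  # ReLU
    return out


def gradient_step(W: np.ndarray, x: np.ndarray, y: np.ndarray, lr: float) -> np.ndarray:
    """One update W + dW for a linear layer y_hat = W x.

    With E = 0.5 * ||y - y_hat||^2, dE/dW = -(y - y_hat) x^T and dW = -lr * dE/dW.
    """
    y_hat = W @ x
    grad = -np.outer(y - y_hat, x)  # dE/dW
    return W - lr * grad            # W + dW


img = np.arange(16.0).reshape(4, 4)
filters = np.stack([np.ones((2, 2)), -np.ones((2, 2))])  # a "sum" filter and its negation
maps = relu_feature_maps(img, filters)
print(maps.shape)  # (3, 3, 2)
```

Real CNN frameworks vectorize this loop and compute $\partial E/\partial W$ for every layer with the chain rule, but each filter response and each update has the same form as above.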
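The recursive composition $p = \tanh(W[c_1; c_2])$ used by RNNs can be sketched as a post-order traversal of a binary tree, so that children are always computed before their parents (topological order). Leaves are assumed to be $n$-dimensional NumPy vectors and internal nodes are `(left, right)` pairs. The tree encoding and the function name are illustrative assumptions, and training of $W$ (SGD with BPTS) is not shown.

```python
from typing import Tuple, Union

import numpy as np

# A tree is either a leaf vector of shape (n,) or a (left, right) pair of subtrees.
Tree = Union[np.ndarray, Tuple["Tree", "Tree"]]


def compose(tree: Tree, W: np.ndarray) -> np.ndarray:
    """Return the n-dimensional representation of `tree`.

    Each internal node gets p = tanh(W [c1; c2]), with the same n x 2n
    matrix W shared by every node in the structure.
    """
    if isinstance(tree, np.ndarray):
        return tree                      # leaf: its own feature vector
    left, right = tree
    c1 = compose(left, W)                # children first ...
    c2 = compose(right, W)
    return np.tanh(W @ np.concatenate([c1, c2]))  # ... then the parent


rng = np.random.default_rng(0)
n = 4
W = 0.5 * rng.standard_normal((n, 2 * n))  # shared weight matrix
leaves = [rng.standard_normal(n) for _ in range(3)]
root = compose(((leaves[0], leaves[1]), leaves[2]), W)
print(root.shape)  # (4,)
```

Because the parent has the same dimension as its children, the same `W` can be reused at every level of the tree.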

2.4. Fusion with Low-Rank Representation

3. Implementation of Age Estimation

4. Experimental Evaluation

4.1. Settings and Datasets

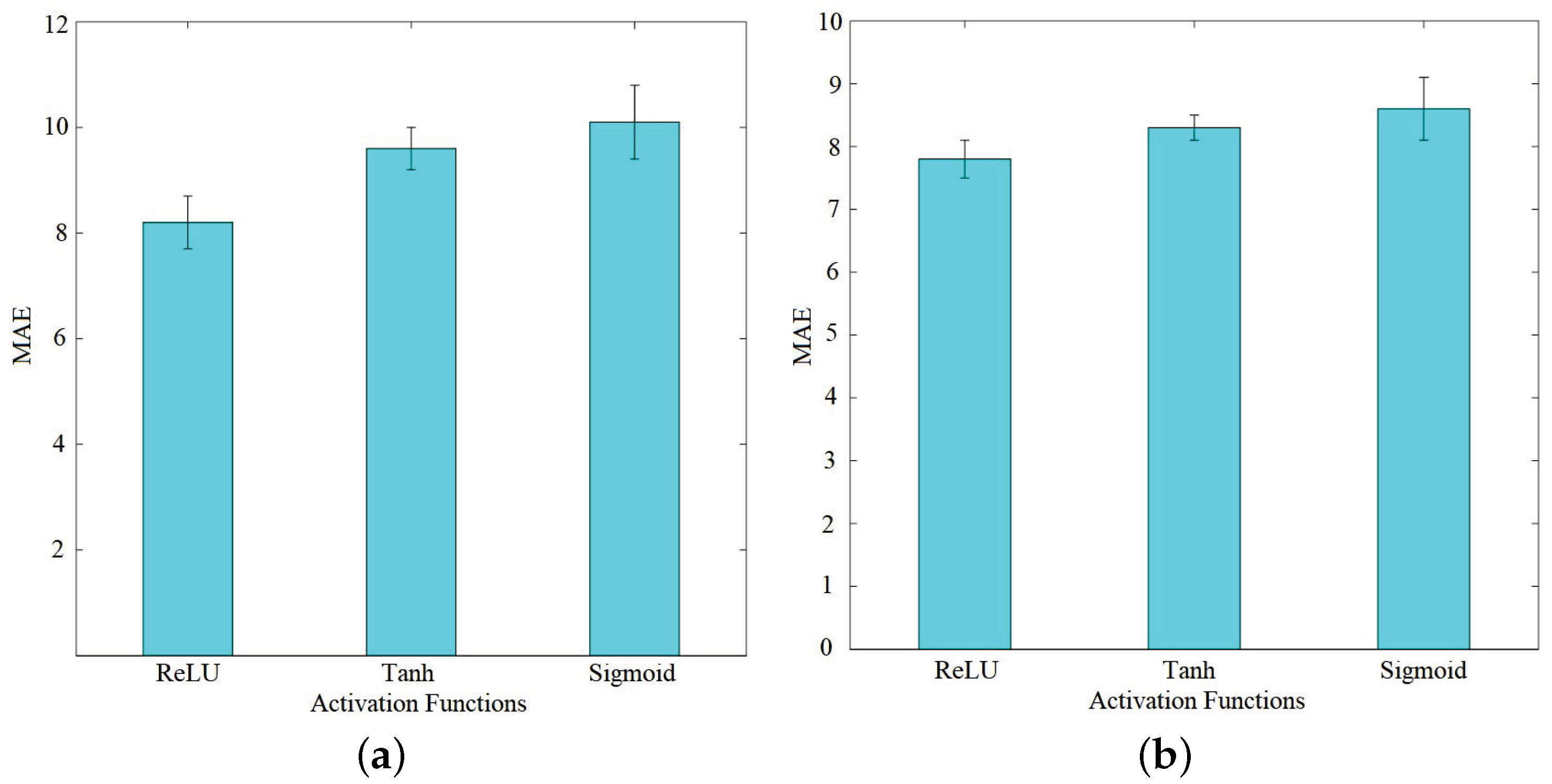

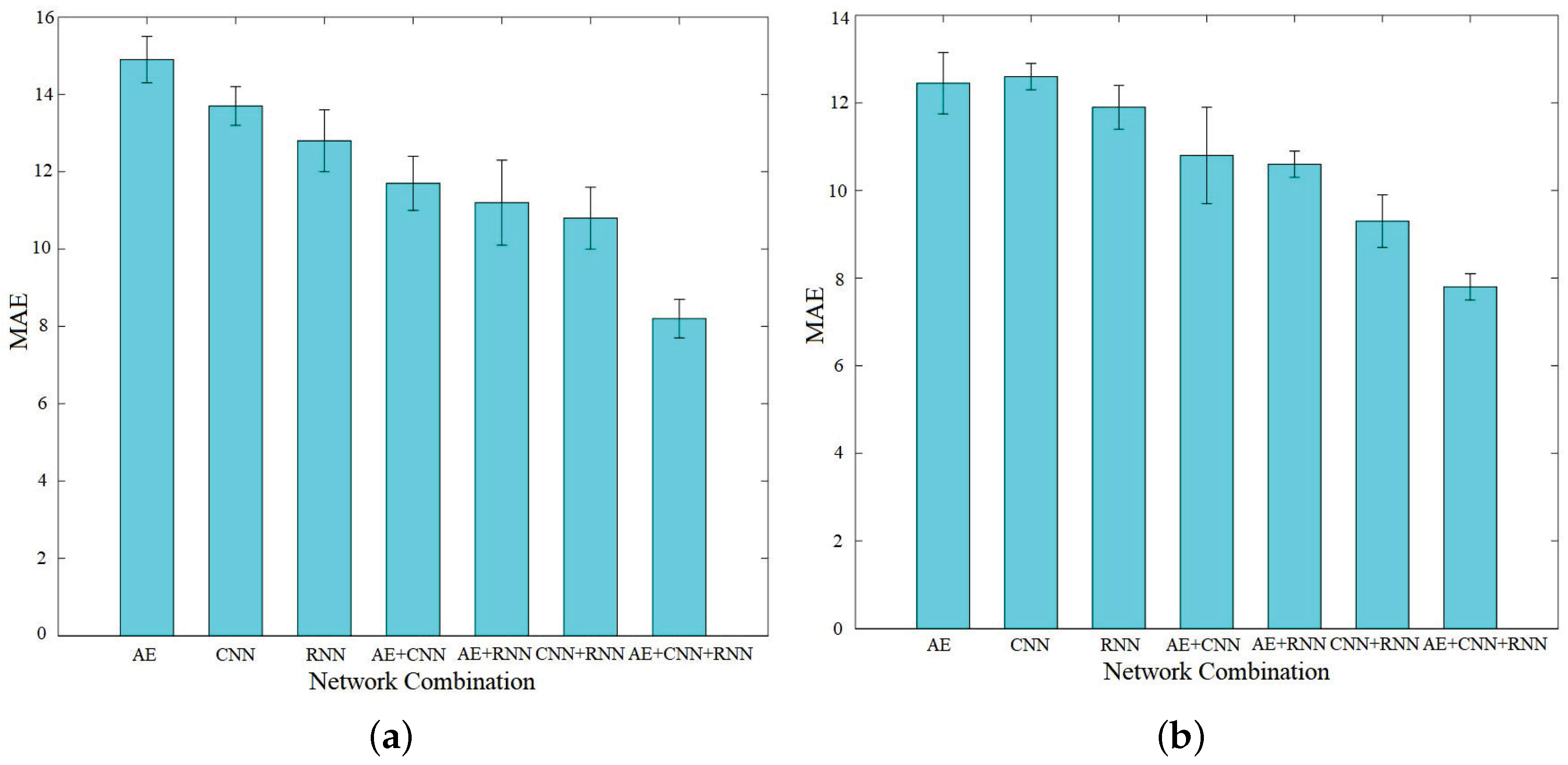

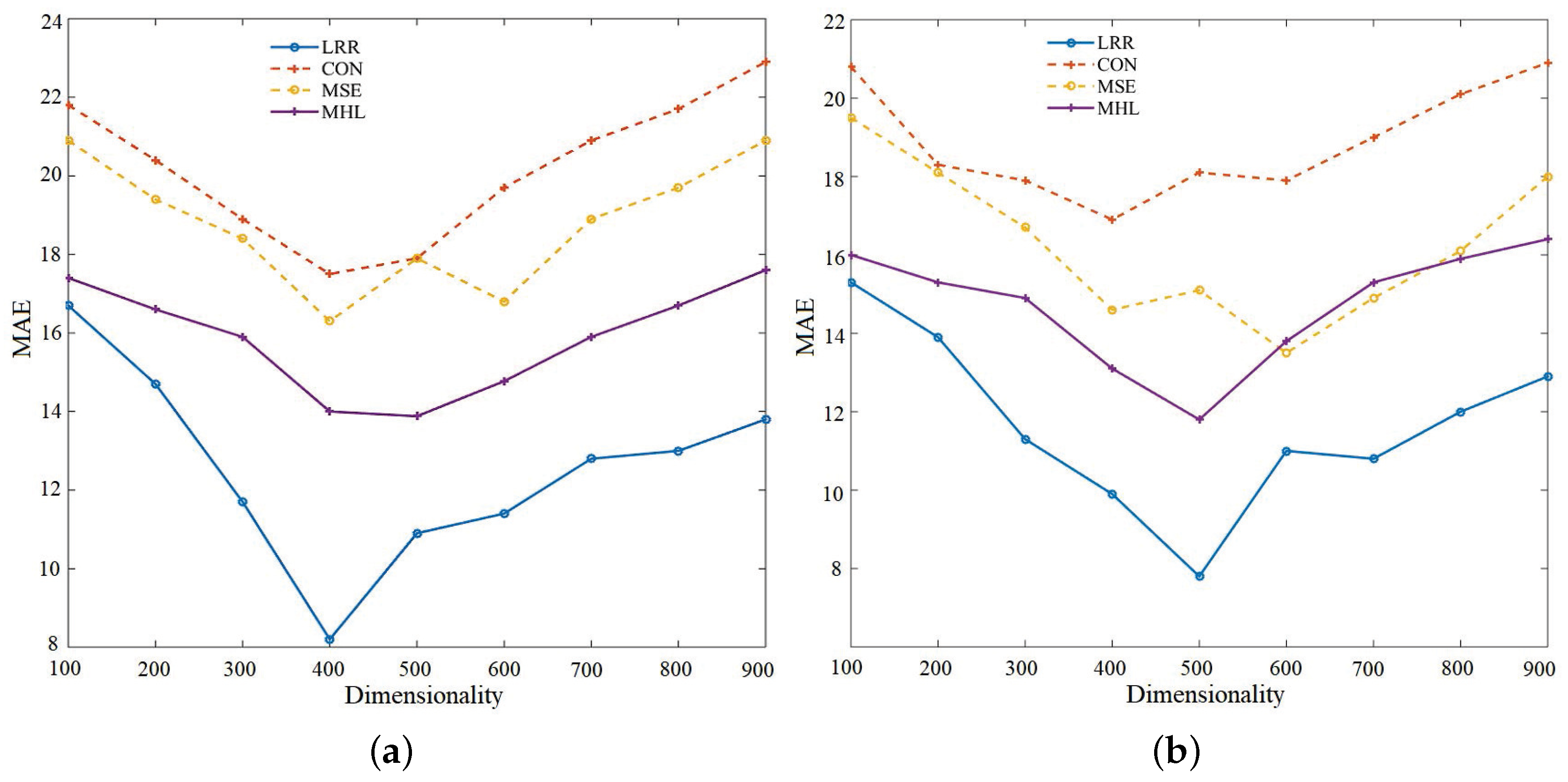

4.2. Optimization of Settings

4.3. Comparison of Multi-Modal Fusion Methods

- Low-Rank Representation (LRR): The proposed method using multiple neural network fusion and low-rank representation.

- Concatenating Different Features (CON): Features from different modalities are simply concatenated into long feature vectors, and Principal Component Analysis (PCA) [31] is then used for dimensionality reduction.

- Multiview Spectral Embedding (MSE) [32]: This method computes a low-dimensional embedding in which the distribution of each modality is sufficiently smooth. The complementary properties of the different modalities are then explored to obtain a fused representation.

- Multi-View Hypergraph Learning (MHL) [33]: This method combines hypergraph learning with the patch alignment framework [34]. A multi-view hypergraph Laplacian matrix is constructed, and the fused features are computed by solving the standard eigen-decomposition of this matrix.
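The CON baseline can be sketched in a few lines. This is an illustrative version, assuming each network's features arrive as an $n \times d_v$ matrix with one row per sample and that PCA is computed from the SVD of the centered, concatenated matrix. The names `concat_pca` and `k` are hypothetical.

```python
from typing import List

import numpy as np


def concat_pca(features: List[np.ndarray], k: int) -> np.ndarray:
    """Concatenate per-network features, then keep the top-k principal components.

    features : list of (n x d_v) arrays, one per network or modality
    returns  : (n x k) array of principal-component scores
    """
    Z = np.hstack(features)                  # n x (d_1 + ... + d_V)
    Z = Z - Z.mean(axis=0)                   # PCA requires centered columns
    _, _, Vt = np.linalg.svd(Z, full_matrices=False)
    return Z @ Vt[:k].T                      # project onto the top-k principal axes


rng = np.random.default_rng(0)
cnn_feats, ae_feats = rng.standard_normal((100, 64)), rng.standard_normal((100, 32))
fused = concat_pca([cnn_feats, ae_feats], k=10)
print(fused.shape)  # (100, 10)
```

Because CON treats the concatenated vector as a single view, modalities with more dimensions or larger variance dominate the leading components, which is one reason more structured fusion methods are compared against it.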

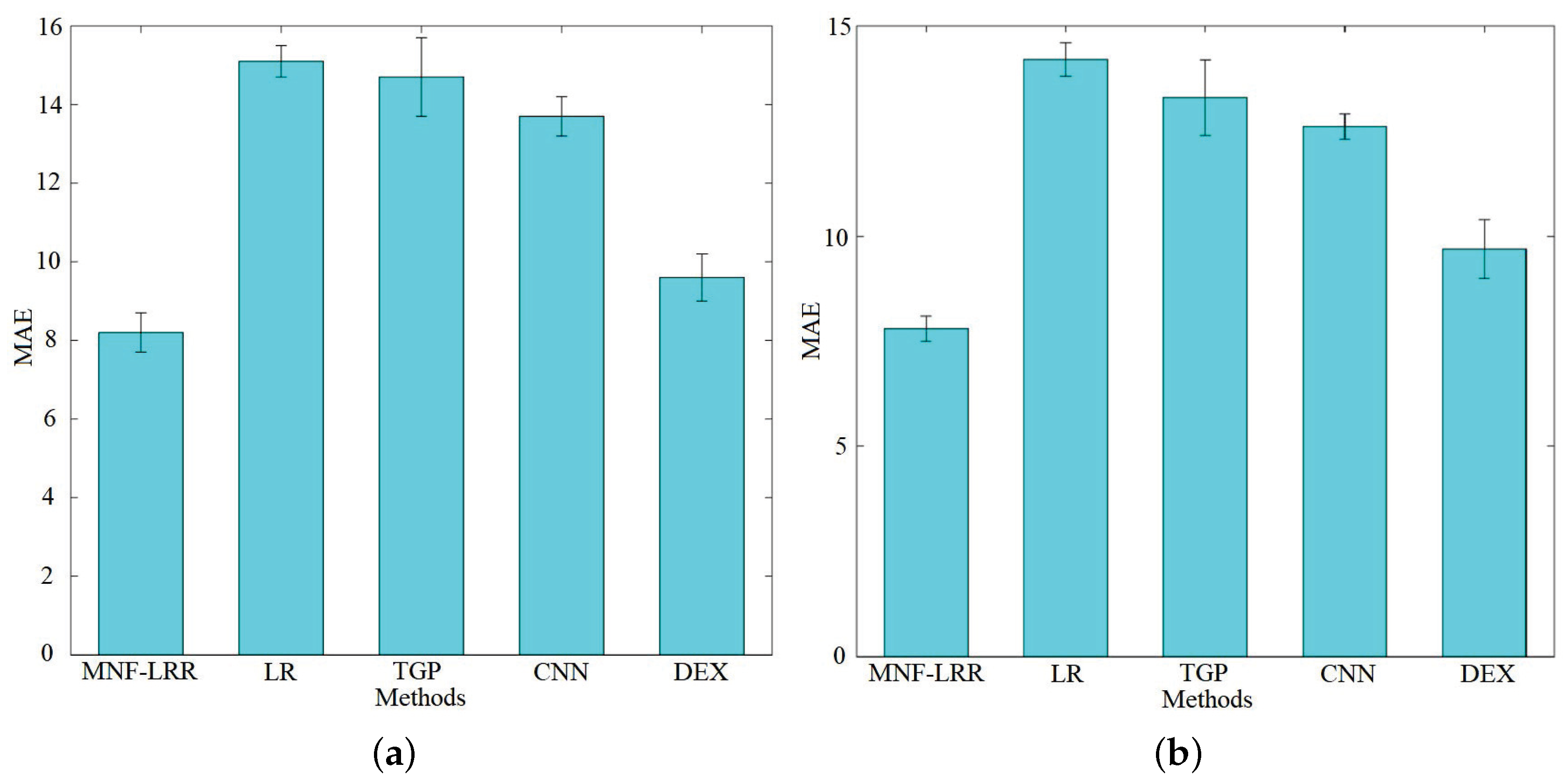
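To make the hypergraph Laplacian concrete, the sketch below builds the normalized hypergraph Laplacian of Zhou et al. [28], $\Delta = I - D_v^{-1/2} H W_e D_e^{-1} H^{\top} D_v^{-1/2}$, for each view and takes eigenvectors of the averaged Laplacian. The k-nearest-neighbour hyperedges, the unit hyperedge weights $W_e$, and the equal-weight averaging of views are assumptions for illustration. MHL [33] combines the views through the patch alignment framework, which is not reproduced here.

```python
from typing import List

import numpy as np


def knn_incidence(X: np.ndarray, k: int) -> np.ndarray:
    """n x n incidence matrix H; hyperedge j joins sample j and its k nearest neighbours."""
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)  # squared distances
    H = np.zeros((X.shape[0], X.shape[0]))
    for j in range(X.shape[0]):
        # k+1 closest samples (sample j itself comes first when samples are distinct)
        H[np.argsort(d2[j])[: k + 1], j] = 1.0
    return H


def hypergraph_laplacian(H: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Delta = I - Dv^{-1/2} H diag(w) De^{-1} H^T Dv^{-1/2}."""
    dv = H @ w                     # vertex degrees d(v) = sum_e w(e) h(v, e)
    de = H.sum(axis=0)             # hyperedge degrees delta(e) = |e|
    Dv_isqrt = np.diag(1.0 / np.sqrt(dv))
    theta = Dv_isqrt @ H @ np.diag(w / de) @ H.T @ Dv_isqrt
    return np.eye(H.shape[0]) - theta


def multiview_embedding(views: List[np.ndarray], k_nn: int, dim: int) -> np.ndarray:
    laplacians = [hypergraph_laplacian(knn_incidence(X, k_nn), np.ones(X.shape[0]))
                  for X in views]
    L = sum(laplacians) / len(views)   # equal view weights (assumption)
    _, vecs = np.linalg.eigh(L)        # eigenvalues in ascending order
    return vecs[:, :dim]               # eigenvectors of the dim smallest eigenvalues


rng = np.random.default_rng(0)
views = [rng.standard_normal((30, 16)), rng.standard_normal((30, 8))]  # two hypothetical feature views
print(multiview_embedding(views, k_nn=4, dim=5).shape)  # (30, 5)
```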

4.4. Comparison of Different Methods for Age Estimation

- Multiple Network Fusion with Low-Rank Representation (MNF-LRR): The proposed method using multiple neural network fusion and low-rank representation.

- Linear Regression (LR) [17]: This method estimates ages directly by linear regression on the feature vectors of facial images. In this paper, HOG [35] descriptors were used as the image features. Ridge regression (RR-LR) and relevance vector machine regression (RVM-LR) were both implemented by the authors, and their results were similar. We used RVM-LR and set in the experimental comparison.

- Twin Gaussian Processes (TGPs) [18]: This method places Gaussian process priors on both covariates and responses. The two Gaussian processes are then modeled as normal distributions over finite index sets of training and testing examples, and the outputs are estimated by minimizing the Kullback–Leibler (K-L) divergence between them. The authors provided several implementations of TGP, including Twin Gaussian Processes with K Nearest Neighbors (TGPKNN), Weighted K-Nearest Neighbor Regression (WKNNRegressor), Gaussian Process Regression (GPR), Hilbert-Schmidt Independence Criterion with K Nearest Neighbors (HSICKNN), and Kernel Target Alignment with K Nearest Neighbors (KTAKNN). We found that TGPKNN outperformed the other variants. Therefore, we used HOG image features with TGPKNN as the regressor.

- Convolutional Neural Networks (CNNs) [36]: This method uses a simple convolutional network architecture composed of three convolutional layers and two fully connected layers. The authors provided a Caffe model for age classification together with its deploy prototxt file.

- Deep Expectation (DEX) [30]: The authors treated age estimation as a deep-learning classification problem followed by an expected-value refinement with softmax. The keys to DEX for age regression are deep models trained on a large amount of data, a robust face alignment process, and the softmax-based expected value formulation.

- The performance of general mapping-learning methods such as LR and TGP is not satisfactory. They are fast and use traditional features such as HOG, but the mapping relationship they define is oversimplified.

- Methods based on neural networks, such as CNNs and DEX, achieve stable performance. Neural networks provide descriptive features but require a large amount of training data. In addition, previous neural-network-based methods have not considered multiple features.

- The proposed MNF-LRR outperformed the state-of-the-art methods. It makes use of multiple features from different network types and fuses them in a reasonable way.
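Ridge regression (RR-LR), which was reported to perform similarly to RVM-LR in the LR baseline, has a simple closed form. The sketch below assumes the image features (for example, HOG descriptors) are already extracted into an $n \times d$ matrix. The regularization weight `lam`, the unpenalized bias column, and the function names are illustrative assumptions.

```python
import numpy as np


def fit_ridge(F: np.ndarray, ages: np.ndarray, lam: float) -> np.ndarray:
    """Solve w = (A^T A + lam * I)^{-1} A^T y, where A = [F, 1] adds a bias column."""
    A = np.hstack([F, np.ones((F.shape[0], 1))])
    reg = lam * np.eye(A.shape[1])
    reg[-1, -1] = 0.0                          # do not penalise the bias term
    return np.linalg.solve(A.T @ A + reg, A.T @ ages)


def predict(F: np.ndarray, w: np.ndarray) -> np.ndarray:
    return np.hstack([F, np.ones((F.shape[0], 1))]) @ w


rng = np.random.default_rng(0)
feats = rng.standard_normal((300, 36))                  # stand-in for HOG features
ages = 30 + feats @ rng.standard_normal(36) + rng.standard_normal(300)
w = fit_ridge(feats, ages, lam=1.0)
print(np.abs(predict(feats, w) - ages).mean())          # training mean absolute error
```

The single linear map from features to age is exactly the oversimplified mapping relationship noted above.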
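The softmax expected-value refinement described for DEX replaces the arg-max class with the expectation $\sum_{i} y_i o_i$, where $o_i$ is the softmax probability of age class $y_i$. A minimal sketch, assuming 101 age classes covering ages 0 to 100 (as in the DEX paper) and raw network outputs (logits) as input:

```python
import numpy as np

AGES = np.arange(101)  # age classes y_i = 0, 1, ..., 100


def dex_expected_age(logits: np.ndarray) -> np.ndarray:
    """Softmax over the last axis, then the expected age sum_i y_i * o_i."""
    z = logits - logits.max(axis=-1, keepdims=True)   # numerically stable softmax
    exp_z = np.exp(z)
    probs = exp_z / exp_z.sum(axis=-1, keepdims=True)  # o_i
    return probs @ AGES


batch = np.random.default_rng(0).standard_normal((4, 101))  # hypothetical logits for 4 faces
print(dex_expected_age(batch))  # one real-valued age estimate per face
```

Taking the expectation rather than the most probable class yields a continuous estimate and smooths over neighbouring age classes.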

5. Discussion

- We compared different activation functions and different combinations of neural networks to determine the optimal network configuration.

- We compared different feature fusion methods to demonstrate the effectiveness of low-rank learning.

- We compared the proposed method with state-of-the-art age estimation methods to demonstrate its overall improvement.

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Chen, B.C.; Chen, C.S.; Hsu, W.H. Cross-Age Reference Coding for Age-Invariant Face Recognition and Retrieval. LNCS 2014, 8694, 768–783.

- Eidinger, E.; Enbar, R.; Hassner, T. Age and Gender Estimation of Unfiltered Faces. IEEE Trans. Inf. Forensics Secur. 2014, 9, 2170–2179.

- Hu, H.; Otto, C.; Jain, A.K. Age estimation from face images: Human vs. machine performance. In Proceedings of the International Conference on Biometrics, Madrid, Spain, 4–7 June 2013; pp. 1–8.

- Cootes, T.F.; Edwards, G.J.; Taylor, C.J. Active appearance models. In Proceedings of the European Conference on Computer Vision, Freiburg, Germany, 2–6 June 1998; pp. 484–498.

- Fu, Y.; Huang, T.S. Human Age Estimation With Regression on Discriminative Aging Manifold. IEEE Trans. Multimed. 2008, 10, 578–584.

- Guo, G.; Fu, Y.; Dyer, C.R.; Huang, T.S. Image-Based Human Age Estimation by Manifold Learning and Locally Adjusted Robust Regression. IEEE Trans. Image Process. 2008, 17, 1178–1188.

- Ahonen, T.; Hadid, A.; Pietikainen, M. Face Description with Local Binary Patterns: Application to Face Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 2037–2041.

- Guo, G.; Mu, G.; Fu, Y.; Huang, T.S. Human age estimation using bio-inspired features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 112–119.

- Fu, Y.; Guo, G.; Huang, T.S. Age Synthesis and Estimation via Faces: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1955–1976.

- He, R.; Zheng, W.S.; Tan, T.; Sun, Z. Half-Quadratic-Based Iterative Minimization for Robust Sparse Representation. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 261–275.

- Gupta, K.; Majumdar, A. Imposing Class-Wise Feature Similarity in Stacked Autoencoders by Nuclear Norm Regularization. Neural Process. Lett. 2018, 48, 615–629.

- Kim, J.; Bukhari, W.; Lee, M. Feature Analysis of Unsupervised Learning for Multi-task Classification Using Convolutional Neural Network. Neural Process. Lett. 2018, 47, 783–797.

- Liu, H.; Lu, J.; Feng, J.; Zhou, J. Group-Aware Deep Feature Learning For Facial Age Estimation. Pattern Recognit. 2016, 66, 82–94.

- Yu, J.; Yang, X.; Gao, F.; Tao, D. Deep Multimodal Distance Metric Learning Using Click Constraints for Image Ranking. IEEE Trans. Cybern. 2017, 47, 4014–4024.

- Yu, J.; Kuang, Z.; Zhang, B.; Zhang, W.; Lin, D.; Fan, J. Leveraging Content Sensitiveness and User Trustworthiness to Recommend Fine-Grained Privacy Settings for Social Image Sharing. IEEE Trans. Inf. Forensics Secur. 2018, 13, 1317–1332.

- Kwon, Y.H.; da Vitoria Lobo, N. Age classification from facial images. Comput. Vis. Image Underst. 1999, 74, 1–21.

- Agarwal, A.; Triggs, B. Recovering 3D human pose from monocular images. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 44–58.

- Bo, L.; Sminchisescu, C. Twin Gaussian Processes for Structured Prediction; Kluwer Academic Publishers: Alphen aan den Rijn, The Netherlands, 2010; pp. 28–52.

- Tian, Q.; Xue, H.; Qiao, L. Human Age Estimation by Considering both the Ordinality and Similarity of Ages. Neural Process. Lett. 2015, 43, 1–17.

- Liu, X.; Li, S.; Kan, M.; Zhang, J.; Wu, S.; Liu, W.; Han, H.; Shan, S.; Chen, X. AgeNet: Deeply Learned Regressor and Classifier for Robust Apparent Age Estimation. In Proceedings of the IEEE International Conference on Computer Vision Workshop, Santiago, Chile, 7–13 December 2015; pp. 258–266.

- Kuang, Z.; Huang, C.; Zhang, W. Deeply Learned Rich Coding for Cross-Dataset Facial Age Estimation. In Proceedings of the IEEE International Conference on Computer Vision Workshop, Santiago, Chile, 7–13 December 2015; pp. 338–343.

- Yang, X.; Gao, B.B.; Xing, C.; Huo, Z.W. Deep Label Distribution Learning for Apparent Age Estimation. In Proceedings of the IEEE International Conference on Computer Vision Workshop, Santiago, Chile, 7–13 December 2015; pp. 344–350.

- Ranjan, R.; Zhou, S.; Chen, J.C.; Kumar, A.; Alavi, A.; Patel, V.M.; Chellappa, R. Unconstrained Age Estimation with Deep Convolutional Neural Networks. In Proceedings of the IEEE International Conference on Computer Vision Workshop, Santiago, Chile, 7–13 December 2015; pp. 351–359.

- Wang, X.; Guo, R.; Kambhamettu, C. Deeply-Learned Feature for Age Estimation. In Proceedings of the Applications of Computer Vision, Waikoloa, HI, USA, 5–9 January 2015; pp. 534–541.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

- Bengio, Y. Learning Deep Architectures for AI; Foundations and Trends in Machine Learning Series; Now Publishers Inc.: Breda, The Netherlands, 2009; Volume 2, pp. 1–127.

- Chen, M.; Weinberger, K.Q.; Sha, F.; Bengio, Y. Marginalized Denoising Auto-encoders for Nonlinear Representations. In Proceedings of the IEEE International Conference on Machine Learning, Beijing, China, 3–6 December 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 1476–1484.

- Zhou, D.; Huang, J.; Scholkopf, B. Learning with Hypergraphs: Clustering, Classification, and Embedding. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2007; Volume 19, pp. 1601–1608.

- Palm, R.B. Prediction as a Candidate for Learning Deep Hierarchical Models of Data. Master’s Thesis, Technical University of Denmark, Lyngby, Denmark, 2012.

- Rothe, R.; Timofte, R.; Van Gool, L. Deep Expectation of Real and Apparent Age from a Single Image without Facial Landmarks. Int. J. Comput. Vis. 2016, 126, 144–157.

- Hotelling, H. Analysis of a complex of statistical variables into principal components. Br. J. Educ. Psychol. 1933, 24, 417–520.

- Xia, T.; Tao, D.; Mei, T.; Zhang, Y. Multiview Spectral Embedding. IEEE Trans. Syst. Man Cybern. Part B 2010, 40, 1438–1446.

- Hong, C.; Yu, J.; Li, J.; Chen, X. Multi-view hypergraph learning by patch alignment framework. Neurocomputing 2013, 118, 79–86.

- Zhang, T.; Tao, D.; Li, X.; Yang, J. Patch Alignment for Dimensionality Reduction. IEEE Trans. Knowl. Data Eng. 2009, 21, 1299–1313.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; IEEE Press: Piscataway, NJ, USA, 2005; pp. 886–893.

- Levi, G.; Hassner, T. Age and gender classification using convolutional neural networks. In Proceedings of the Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; pp. 34–42.

| Network | Structure |
|---|---|
| Autoencoders | Stacked denoising autoencoders with a corruption level of 0.3 and 5 layers |
| CNN | CNN with 3 convolutional layers and 2 fully connected layers |
| RNN | RNN with 3 layers |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hong, C.; Zeng, Z.; Wang, X.; Zhuang, W. Multiple Network Fusion with Low-Rank Representation for Image-Based Age Estimation. Appl. Sci. 2018, 8, 1601. https://doi.org/10.3390/app8091601
