Abstract

Novelty detection is a classification problem that aims to identify abnormal patterns, and it is an important task in applications such as fraud detection, fault diagnosis and disease detection. When no labels indicate which data are normal and which are abnormal, labeling requires expensive domain and professional knowledge, so an unsupervised novelty detection approach is used instead. Moreover, novelty detection on high-dimensional data is a major challenge, and previous research suggests approaches based on principal component analysis (PCA) and autoencoders to reduce dimensionality. In this paper, we propose deep autoencoders with density based clustering (DAE-DBC). This approach calculates compressed data and an error threshold from a deep autoencoder model and passes the results to a density-based clustering algorithm. Points that do not belong to any group are not automatically considered novelties; instead, each group is defined as a novelty group depending on the ratio of its points that exceed the error threshold. We conducted experiments in which components are substituted, to show that the components of the proposed method are more effective together. In the experiments, the DAE-DBC approach was more effective: its average area under the curve (AUC) was 13.5 percent higher than that of state-of-the-art algorithms and of the other versions of the proposed method that we tested.

1. Introduction

An abnormal pattern that is not compatible with most of the data in a dataset is called a novelty, outlier, or anomaly [1]. Novelties can arise for several reasons, such as data from different classes, natural variation, and errors in data measurement or collection [2]. Although a novelty may be caused by a mistake, it sometimes reflects a new, unidentified process [3]; therefore, detecting novelties can reveal important information in a variety of application domains such as internet traffic detection [4,5], medical diagnosis [6], fraud detection [7], traffic flow forecasting [8] and patient monitoring [9,10].

There are three basic ways to detect novelty, depending on the availability of data labels [1]. If the data are labeled as normal or novelty, a supervised approach can be used as a traditional classification task. In this case, the training data consist of both normal and novelty data, and a model is built to predict whether unseen data are normal or novelty. However, novelty detection faces a class imbalance problem because novelties are rare compared with normal data [11]. Also, obtaining accurate labels for abnormal data becomes a complex problem as data size and dimensionality grow. The second way is a semi-supervised method, which uses only normal data to build a classification model; if the training data contain abnormal data, the model may find it difficult to detect them. Moreover, in practice most data are unlabeled, and in this case the third way, an unsupervised method, is used.

In recent years, research on unsupervised novelty detection has suggested One-class Support Vector Machine (OC-SVM) based, clustering based and reconstruction error based methods, as well as combinations of these methods.

OC-SVM separates normal and novelty data by projecting the input features into a high-dimensional feature space using the kernel trick. In other words, it finds the decision boundary that separates the data points from the origin with the largest margin, and the points that fall on the origin's side of the boundary are considered novelties. However, SVM is memory- and time-intensive in practice, and its complexity grows quadratically with the number of records [12].
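To make the unsupervised use of OC-SVM concrete, the sketch below trains scikit-learn's `OneClassSVM` with an RBF kernel on all points, including the unlabeled novelties. The synthetic data and the `nu` and `gamma` settings are illustrative assumptions, not the configuration used in this study.

```python
import numpy as np
from sklearn.svm import OneClassSVM

rng = np.random.default_rng(0)
# Synthetic, unlabeled data (an assumption): 200 points around the origin
# plus 5 distant points that play the role of novelties.
X = np.vstack([rng.normal(0.0, 1.0, size=(200, 2)),
               rng.normal(8.0, 0.5, size=(5, 2))])

# Unsupervised mode: every point, novelties included, is used for training.
# nu is an upper bound on the fraction of training points left outside.
ocsvm = OneClassSVM(kernel="rbf", nu=0.05, gamma="scale")
pred = ocsvm.fit_predict(X)          # +1 inside the boundary, -1 outside
scores = ocsvm.decision_function(X)  # negative values fall outside the boundary
print("points flagged as novelty:", int((pred == -1).sum()))
```

Fitting requires the full kernel matrix over all training points, which is where the memory and quadratic time costs mentioned above come from.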

Cluster analysis divides data into groups that are meaningful, useful, or both [2]. In clustering based novelty detection, there are several ways to identify novelties: clusters that lie far away from other clusters, clusters that are smaller or sparser than the others, or points that do not belong to any group can be considered abnormal. However, the performance of cluster-based methods depends strongly on the clustering algorithm used, and distance-based clustering of high-dimensional data poses special challenges for data mining algorithms [13].

To address this issue, caused by the curse of dimensionality, reconstruction error based and hybrid approaches are widely used. PCA is a technique used to reduce dimensionality. Peter J. Rousseeuw and Mia Hubert introduced the diagnosis of anomalies from each data point's principal component score and its orthogonal distance [14]. Heiko Hoffmann introduced kernel PCA (KPCA) for outlier detection, which uses a kernel function to map the input space into a higher-dimensional space before performing PCA [15]. In a hybrid approach, dimensionality reduction is performed first, and clustering or another classification algorithm is then applied in the latent low-dimensional space. In recent publications, unsupervised novelty detection using deep learning has been shown to be highly effective [3,12,16,17,18]. These methods use an autoencoder, an artificial neural network, to extract features and reduce dimensionality, and then feed the result into a novelty identification method such as a Gaussian mixture model, a cumulative distribution function or a clustering algorithm.
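Hoffmann's criterion [15] measures reconstruction error in the kernel feature space rather than in the input space. The NumPy sketch below is one way to compute it, assuming a Gaussian kernel whose width `sigma` and number of retained components `q` are hand-picked illustrative values (not values from [15] or from this paper).

```python
import numpy as np


def rbf_kernel(A, B, sigma):
    """Gaussian kernel k(a, b) = exp(-||a - b||^2 / (2 sigma^2))."""
    sq = (A ** 2).sum(1)[:, None] + (B ** 2).sum(1)[None, :] - 2 * A @ B.T
    return np.exp(-np.maximum(sq, 0.0) / (2 * sigma ** 2))


def kpca_reconstruction_error(X_train, X_test, q=5, sigma=1.0):
    """Squared feature-space distance between the centered phi(x) and its
    projection onto the top-q kernel principal components."""
    n = X_train.shape[0]
    K = rbf_kernel(X_train, X_train, sigma)
    one = np.full((n, n), 1.0 / n)
    Kc = K - one @ K - K @ one + one @ K @ one    # centered kernel matrix
    eigval, eigvec = np.linalg.eigh(Kc)           # ascending eigenvalues
    eigval, eigvec = eigval[::-1][:q], eigvec[:, ::-1][:, :q]
    alphas = eigvec / np.sqrt(eigval)             # unit-norm components in feature space

    Kt = rbf_kernel(X_test, X_train, sigma)       # shape (m, n)
    Kt_c = Kt - K.mean(0)[None, :] - Kt.mean(1, keepdims=True) + K.mean()
    proj = Kt_c @ alphas                          # coordinates on the q components
    # ||phi(x) - mean||^2 = k(x, x) - 2 mean_i k(x, x_i) + mean_ij k(x_i, x_j),
    # with k(x, x) = 1 for the Gaussian kernel.
    norm_sq = 1.0 - 2 * Kt.mean(1) + K.mean()
    return norm_sq - (proj ** 2).sum(1)


rng = np.random.default_rng(0)
X_train = rng.normal(size=(200, 2))                # assumed "normal" data
X_test = np.vstack([X_train[:50], [[6.0, 6.0]]])   # last row is far away
err = kpca_reconstruction_error(X_train, X_test, q=5, sigma=1.0)
```

Building and eigendecomposing the n × n kernel matrix is what makes KPCA memory-hungry on large datasets.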

In this paper, we propose the DAE-DBC method, which aims to increase the accuracy of unsupervised novelty detection. First, a deep autoencoder model is used to extract a low-dimensional representation from the high-dimensional input space. The autoencoder consists of two symmetrical deep neural networks, an encoder and a decoder, trained with backpropagation with the target values set equal to the inputs. It attempts to copy its input to its output, and anomalies are harder to reconstruct than normal samples [16]. In DAE-DBC, the low-dimensional representation of the data consists of the output of the autoencoder's bottleneck hidden layer and the reconstruction error of each point. From the reconstruction error, we estimate an initial outlier threshold and use it to separate normal from abnormal data; at this step, however, the data cannot be divided clearly. The autoencoder model is therefore retrained using only the data judged normal, and the low-dimensional representation and the optimal outlier threshold are recalculated with the retrained model. The low-dimensional data from the retrained model are grouped with the density-based spatial clustering of applications with noise (DBSCAN) algorithm. The optimal value of the DBSCAN eps parameter (the maximum neighborhood radius) is estimated by the DMDBSCAN algorithm [19]. Novelties are then identified with the optimal outlier threshold from the retrained model: if most of the instances in a group exceed the threshold, all instances in that cluster are considered abnormal and labeled as novelties. We tested DAE-DBC on several public benchmark datasets, where it achieved a higher AUC score than state-of-the-art techniques.

We propose an unsupervised novelty detection method for high-dimensional data based on a deep autoencoder and density-based clustering. The main contributions of the proposed method are as follows:

- We derive a new DAE-DBC method for unsupervised novelty detection that is not domain specific.

- The number of clusters is not limited, and each cluster is labeled as a novelty depending on the percentage of its objects that exceed the error threshold. In other words, novelty is identified from the reconstruction error threshold, without considering whether a cluster is sparse, distant or small. Therefore, even large clusters can be novelties.

- Our extensive experiments show that DAE-DBC outperforms other state-of-the-art unsupervised anomaly detection methods.

This article consists of five sections. Section 2 provides a detailed survey of related work on unsupervised anomaly detection. Section 3 explains our proposed method. Section 4 presents the experimental datasets, the compared methods, the evaluation metric, and the experimental results and comparison. Finally, Section 5 concludes the paper.

2. Related Work

In this section, we review unsupervised novelty detection methods related to the proposed method. Recent research suggests unsupervised techniques such as OC-SVM, one-class neural networks (OC-NN), clustering based methods and reconstruction error based methods.

Novelties make up a relatively small percentage of the total data, so a class imbalance problem can arise from the skewed ratio of normal to novelty samples. In this case, traditional classification methods are not well suited to discovering novelty. OC-SVM is a semi-supervised approach that builds a prediction model from a normal dataset [20,21,22,23]. It is an extension of SVM to unlabeled data, first introduced by Schölkopf et al. [23], and its use in unsupervised mode is increasing because most data are unlabeled in practice. In this mode, all data are considered normal and used to train the OC-SVM. The disadvantage of this method is that if the training data contain novelties, the model does not work well, because the decision boundary (the normal boundary) created by OC-SVM shifts toward the outliers.

Mennatallah et al. proposed two enhanced OC-SVMs, the Robust OC-SVM and the eta-SVM, to eliminate these deficiencies [20]. Robust OC-SVM focuses on the slack variables of points that are far from the centroid; these points are dropped from the minimization objective because they would otherwise distort the decision boundary. Sarah M. Erfani et al. proposed a hybrid model for unsupervised novelty detection, named DBN-1SVM, that combines a deep belief network for feature extraction with OC-SVM; it addresses the curse of dimensionality by using the deep belief network to reduce dimensionality [12].

Another popular family of novelty detection methods is based on principal component analysis (PCA). PCA is a dimension reduction technique in which the direction with the largest projected variance is called the first principal component, the orthogonal direction that captures the second largest projected variance is called the second principal component, and so on [24]. Although PCA is mostly used for dimensionality reduction, it is also used to detect novelty. Peter J. Rousseeuw et al. proposed diagnosing outliers from each data point's principal component score and its orthogonal distance; regular observations have both a small orthogonal distance and a small PCA score [14]. Heiko Hoffmann proposed kernel PCA, a non-linear extension of PCA, for novelty detection. In kernel PCA, a kernel function maps the input space into a higher-dimensional space before PCA is performed; Hoffmann's method uses a Gaussian kernel function and the reconstruction error in feature space [15]. PCA based on the covariance matrix is sensitive to outliers, so Roland Kwitt et al. proposed a Robust PCA based novelty detection approach that uses the correlation matrix instead of the covariance matrix to calculate the principal component scores [25].
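The two diagnostics of [14] can be sketched in NumPy as follows. This is a simplified illustration under stated assumptions: it uses classical (non-robust) PCA, and the `use_correlation` switch only mimics the idea of [25] by standardizing columns before PCA; it is not the robust estimator of either paper.

```python
import numpy as np


def pca_distances(X, k=1, use_correlation=False):
    """Score distance and orthogonal distance of each row of X with respect
    to the top-k principal components. With use_correlation=True each column
    is standardized first, so the components come from the correlation matrix."""
    Xc = X - X.mean(axis=0)
    if use_correlation:
        Xc = Xc / X.std(axis=0)
    eigval, eigvec = np.linalg.eigh(np.cov(Xc, rowvar=False))
    top = np.argsort(eigval)[::-1][:k]
    P, lam = eigvec[:, top], eigval[top]
    T = Xc @ P                                          # principal component scores
    score_dist = np.sqrt((T ** 2 / lam).sum(axis=1))    # distance within the PC subspace
    orth_dist = np.linalg.norm(Xc - T @ P.T, axis=1)    # distance to the PC subspace
    return score_dist, orth_dist


rng = np.random.default_rng(1)
x = rng.normal(size=200)
X = np.column_stack([x, 2 * x + rng.normal(scale=0.1, size=200)])
X = np.vstack([X, [[1.0, -2.0]]])        # index 200: far from the main axis
sd, od = pca_distances(X, k=1)
sd_r, od_r = pca_distances(X, k=1, use_correlation=True)
print(int(np.argmax(od)), int(np.argmax(od_r)))
```

The appended point has an ordinary score along the main axis but a large orthogonal distance, which is exactly the case the score distance alone would miss.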

Recent studies suggest hybrid techniques that combine a deep autoencoder with other methods for novelty detection. A deep autoencoder is a neural network that is trained to copy its input to its output. Yu-Dong Zhang et al. proposed a seven-layer deep neural network for voxelwise detection of cerebral microbleeds, using a sparse autoencoder with 4 hidden layers for dimensionality reduction and a softmax layer for classification [26]. Wenjuan Jia et al. proposed a multi-class classification approach for image datasets based on a deep stacked autoencoder: dimensionality is first reduced by the deep stacked autoencoder, and a softmax layer is then used as the classifier [27]. Yan Xia et al. proposed a reconstruction based outlier detection method with two steps, discriminative labeling and reconstruction learning [28]. They showed that when a dataset is reconstructed from low-dimensional representations, inliers and outliers can be well separated by their reconstruction error. Hyunsoo Kim et al. proposed an unsupervised land classification system that uses a quaternion autoencoder for feature extraction and a self-organizing map (SOM) for classification without human-predefined categories [18]. Bo Zong et al. introduced DAGMM, a technique that combines a deep autoencoder model with a Gaussian mixture model. DAGMM identifies novelties by feeding the outputs of the deep autoencoder, the low-dimensional features and the reconstruction errors, into the Gaussian mixture model [16].

3. Methodology

In this section, we describe how to detect novelties in unlabeled data using the reconstruction error of a deep learning autoencoder (AE).

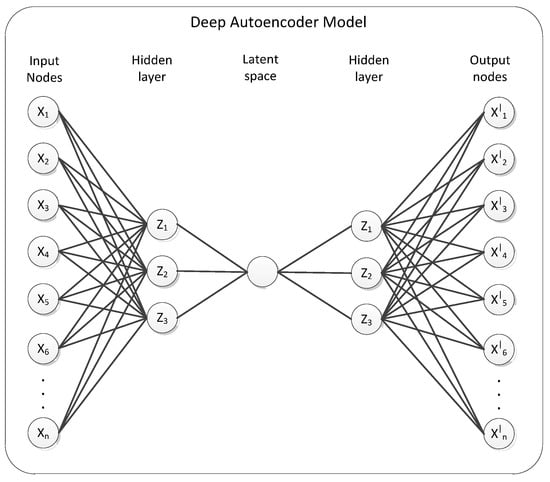

The proposed approach has two basic functions: dimension reduction and identification of novelty. The general architecture of the proposed method is illustrated in Figure 1:

Figure 1.

General architecture of the proposed method.

3.1. Deep Autoencoders for Dimensionality Reduction

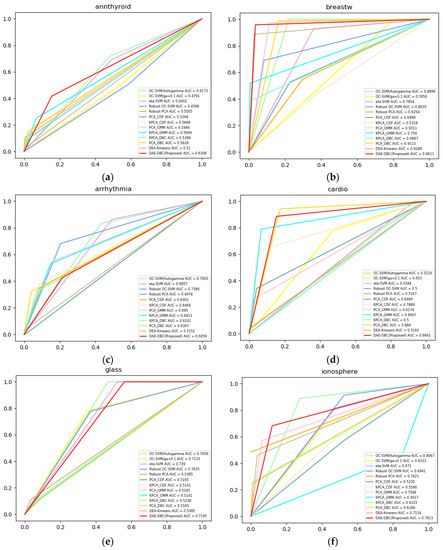

An autoencoder is a kind of ANN [28]: an unsupervised algorithm that learns to copy its input (x1 … xn) to its output (y1 … yn) as closely as possible (yi ≈ xi) by reducing the gap between inputs and outputs [29]. As visualized in Figure 2, the input and output layers of an AE have the same number of neurons, and its hidden layer holds a compressed, learned feature representation. Structurally, an AE is an ordinary neural network with at least one hidden layer; it differs in purpose, because a standard NN predicts a target output for a specific input, whereas an AE aims to reconstruct its input. During learning, an AE compresses the input x to a low dimension, reconstructs an output y from that low-dimensional code, calculates the difference between the input and the reconstructed values, and updates its weights to reduce the difference. For novelty data, the reconstruction error is high because such points cannot be reconstructed well from the low-dimensional projection.
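Stated compactly in our own notation (an illustrative formalization, not notation taken from the cited works), with encoder f, decoder g, input x, code z and reconstruction y, the quantities described above are:

```latex
\begin{aligned}
z &= f(x), \qquad y = g(z), \qquad x,\, y \in \mathbb{R}^{n},\\
e(x) &= \lVert x - y \rVert_2^{2} = \sum_{j=1}^{n} (x_j - y_j)^{2},\\
\mathcal{L} &= \frac{1}{N n} \sum_{i=1}^{N} \bigl\lVert x^{(i)} - y^{(i)} \bigr\rVert_2^{2}.
\end{aligned}
```

Training minimizes the mean squared error L over the N training points; after training, a large e(x) marks a point the network cannot reconstruct well. In DAE-DBC the code z is a single number, because the bottleneck layer has one node.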

Figure 2.

Deep autoencoder model.

In this work, the compressed representation of the data provided by the deep AE contains two features: (1) the reduced-dimensional representation and (2) the reconstruction error between the input and the features reconstructed by the learned deep autoencoder model. As shown in Figure 1, two AE models are used to estimate the novelty threshold and the compressed representation of the data. The initial novelty threshold is calculated from the first AE model. We then use this threshold to keep only the data close to normal, that is, the points whose reconstruction error is below the threshold, and train the second AE model on these data. To estimate the optimal threshold from the histogram of the reconstruction errors, we use the Otsu method [30], a commonly used thresholding technique. The Otsu method divides the values into two classes and selects the threshold that maximizes the between-class variance. Because the second model is trained on data close to normal, it yields a better-suited error threshold for separating novelty from normal values. We calculate the final compressed data, composed of the reconstruction error and the reduced-dimensional representation, by feeding all inputs to the second AE model again. Algorithm 1 shows, step by step, dimensionality reduction, the first of the two basic functions of the proposed method:
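A minimal NumPy sketch of the Otsu step applied to reconstruction errors follows; the 256-bin histogram is our assumption, since the bin count is not stated, and the synthetic errors are only for illustration.

```python
import numpy as np


def otsu_threshold(values, bins=256):
    """Return the cut that maximizes the between-class variance
    w0 * w1 * (mu0 - mu1)^2 of a 1-D histogram (Otsu's method)."""
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    c0 = np.cumsum(hist)                  # points at or below each candidate cut
    c1 = hist.sum() - c0                  # points above it
    s0 = np.cumsum(hist * centers)
    s1 = s0[-1] - s0
    mu0 = s0 / np.maximum(c0, 1)          # class means (guarded against 0/0)
    mu1 = s1 / np.maximum(c1, 1)
    # c0 * c1 is proportional to w0 * w1, so the argmax is unchanged.
    between = np.where((c0 > 0) & (c1 > 0), c0 * c1 * (mu0 - mu1) ** 2, 0.0)
    return edges[1 + np.argmax(between)]  # upper edge of the best split bin


rng = np.random.default_rng(0)
errors = np.concatenate([rng.normal(1.0, 0.1, 500),   # well-reconstructed points
                         rng.normal(5.0, 0.3, 50)])   # poorly reconstructed points
threshold = otsu_threshold(errors)
```

With two well-separated modes, the returned threshold falls in the gap between them, so points above it are the candidate novelties.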

| Algorithm 1: Dimensionality reduction. |
|---|
| 1: Input: Set of points X = {x_0, …, x_(N−1)} |
| 2: Output: Z |
| 3: inputLayer ← Input(n_nodes = n) |
| 4: encoderLayer1 ← Dense(n_nodes = n/2, activation = sigmoid)(inputLayer) |
| 5: encoderLayer2 ← Dense(n_nodes = 1, activation = sigmoid)(encoderLayer1) |
| 6: decoderLayer1 ← Dense(n_nodes = n/2, activation = tanh)(encoderLayer2) |
| 7: decoderLayer2 ← Dense(n_nodes = n, activation = tanh)(decoderLayer1) |
| 8: AEModel ← Model(inputLayer, decoderLayer2) |
| 9: AEModel.fit(X, X) |
| 10: X̂ ← AEModel.predict(X) |
| 11: reconstructionError ← [] |
| 12: for i = 0 to N − 1 do |
| 13: reconstructionError_i ← ‖x_i − x̂_i‖² |
| 14: end for |
| 15: threshold ← Otsu(reconstructionError) |
| 16: X[reconstructionError] ← reconstructionError  # auxiliary column, not used for training |
| 17: norms ← rows of X (original features only) where X[reconstructionError] < threshold |
| 18: AEModel.fit(norms, norms) |
| 19: X̂ ← AEModel.predict(X) |
| 20: E ← output of encoderLayer2 for all points of X |
| 21: reconstructionError ← [] |
| 22: for i = 0 to N − 1 do |
| 23: reconstructionError_i ← ‖x_i − x̂_i‖² |
| 24: end for |
| 25: finalThreshold ← Otsu(reconstructionError) |
| 26: Z ← [] |
| 27: Z[encoded] ← E |
| 28: Z[error] ← reconstructionError |
| 29: return Z |
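For readers who want to run the steps of Algorithm 1, here is a minimal sketch under stated assumptions; it is not the authors' Keras code. It substitutes scikit-learn's `MLPRegressor` as the autoencoder, which forces a single activation (tanh) on every hidden layer and a linear output layer instead of the sigmoid encoder and tanh decoder; it trains a fresh second model on the points below the initial threshold; and it returns `final_threshold` alongside `Z` because Algorithm 2 consumes it. The optimizer settings follow those reported in Section 4.4 (Adam, learning rate 0.001, batch size 2, 50 epochs); all helper names are hypothetical.

```python
import warnings

import numpy as np
from sklearn.exceptions import ConvergenceWarning
from sklearn.neural_network import MLPRegressor


def otsu_threshold(values, bins=256):
    """Otsu's method on 1-D values: the cut maximizing between-class variance."""
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    c0 = np.cumsum(hist)                  # counts at or below each cut
    c1 = hist.sum() - c0                  # counts above each cut
    s0 = np.cumsum(hist * centers)
    s1 = s0[-1] - s0
    mu0 = s0 / np.maximum(c0, 1)
    mu1 = s1 / np.maximum(c1, 1)
    between = np.where((c0 > 0) & (c1 > 0), c0 * c1 * (mu0 - mu1) ** 2, 0.0)
    return edges[1 + np.argmax(between)]


def build_autoencoder(n_features, seed=0):
    """Hypothetical stand-in for the Keras model: n -> n/2 -> 1 -> n/2 -> n."""
    half = max(n_features // 2, 1)
    return MLPRegressor(hidden_layer_sizes=(half, 1, half), activation="tanh",
                        solver="adam", learning_rate_init=1e-3,
                        batch_size=2, max_iter=50, random_state=seed)


def encode(model, X):
    """Forward pass through the first two layers: the 1-node bottleneck."""
    h = X
    for W, b in zip(model.coefs_[:2], model.intercepts_[:2]):
        h = np.tanh(h @ W + b)
    return h.ravel()


def squared_errors(model, X):
    """Per-point reconstruction error ||x_i - x_hat_i||^2."""
    return ((X - model.predict(X)) ** 2).sum(axis=1)


def dimensionality_reduction(X):
    with warnings.catch_warnings():
        warnings.simplefilter("ignore", ConvergenceWarning)
        # Stage 1: train on all data and estimate the initial threshold.
        ae = build_autoencoder(X.shape[1]).fit(X, X)
        errors = squared_errors(ae, X)
        threshold = otsu_threshold(errors)
        # Stage 2: train a second model only on points below that threshold.
        norms = X[errors < threshold]
        ae = build_autoencoder(X.shape[1]).fit(norms, norms)
    errors = squared_errors(ae, X)
    final_threshold = otsu_threshold(errors)
    Z = np.column_stack([encode(ae, X), errors])   # columns: [encoded, error]
    return Z, final_threshold
```

Calling `Z, final_threshold = dimensionality_reduction(X)` on an (N, n) array returns the N × 2 matrix that the clustering step takes as input.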

The dimensionality of the benchmark datasets varies. For a dataset of dimension n, our AE model reduces the dimension from n to n/2 and from n/2 to 1, and then reconstructs the input by reverting from 1 to n/2 and from n/2 to n. Combining the output of the bottleneck hidden layer, which has only one node, with the reconstruction error of each point gives new two-dimensional data, which are used as the input for the subsequent novelty detection steps. The computational complexity of Algorithm 1 is as follows:

- Training the autoencoder model with backpropagation: O(epochs × number of training examples × number of weights)

- Prediction: O(n)

- Calculating the reconstruction errors from the decoded output and the input: O(n)

- Calculating the novelty threshold: O(n)
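The training-cost bullet can be made concrete by counting the parameters of the n → n/2 → 1 → n/2 → n network. A small sketch, assuming integer halving for odd n and counting biases together with weights (both our assumptions):

```python
def layer_sizes(n):
    """Layer widths of the n -> n/2 -> 1 -> n/2 -> n autoencoder.
    Integer halving (n // 2) is an assumption for odd n."""
    half = max(n // 2, 1)
    return [n, half, 1, half, n]


def n_weights(sizes):
    """Weights plus biases of consecutive fully connected layers."""
    return sum(a * b + b for a, b in zip(sizes[:-1], sizes[1:]))


def backprop_cost(n_features, n_samples, epochs=50):
    """epochs * training examples * number of weights, up to a constant factor."""
    return epochs * n_samples * n_weights(layer_sizes(n_features))


print(layer_sizes(6), n_weights(layer_sizes(6)))   # [6, 3, 1, 3, 6] 55
```

For example, with n = 274 (the Arrhythmia dataset) the network has 75,899 weights and biases, so the per-example cost grows roughly with n².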

3.2. Density Based Clustering for Novelty Detection

Density-based clustering locates regions of high density that are separated from one another by regions of low density [2]. DBSCAN [31] is a basic, simple and effective density-based clustering algorithm that can find arbitrarily shaped clusters. The user specifies two parameters: eps, the maximum radius of the neighborhood, and minPts, the minimum number of points required within the eps-neighborhood of a point.

To set the eps parameter automatically, we use the K-dist plot method [31], which computes the distance from each point to its K-th nearest neighbor. The main idea is that a point contained in a cluster has a K-dist value smaller than the size of the cluster, whereas a point outside any cluster has a high K-dist value. We therefore calculate the K-dist value of every point, sort the values in ascending order, and choose the optimal eps at the first point of maximum change.
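A brute-force NumPy sketch of this eps selection follows. It assumes that "the first point of maximum change" means the largest jump between consecutive sorted K-dist values, and it uses K = 3 as an illustrative choice; both are our interpretations rather than details given in the text.

```python
import numpy as np


def kdist_eps(Z, k=3):
    """Pick DBSCAN's eps from the sorted K-dist curve.

    Assumption: eps is the K-dist value just before the largest jump
    between consecutive sorted values."""
    diff = Z[:, None, :] - Z[None, :, :]
    d = np.sqrt((diff ** 2).sum(-1))            # pairwise Euclidean distances
    kdist = np.sort(d, axis=1)[:, k]             # column 0 is the point itself
    kdist.sort()                                 # ascending K-dist curve
    return kdist[np.argmax(np.diff(kdist))]      # value before the largest jump


rng = np.random.default_rng(0)
Z = np.vstack([rng.normal(0, 0.1, (50, 2)), [[5.0, 5.0]]])
eps = kdist_eps(Z, k=3)
```

The pairwise distance matrix needs O(n²) memory, so for large datasets a tree-based neighbor search would be the practical choice.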

When the data are grouped by the DBSCAN algorithm, some points do not become part of any cluster. In the proposed method, we treat the data points that do not belong to any cluster as one whole cluster. After the extracted low-dimensional (2D) feature space has been clustered, we determine which clusters are novelties. Because DBSCAN cannot provide a degree of novelty score [32], we use the final error threshold obtained from the second AE model for novelty detection: if the majority of instances in a cluster exceed the threshold, the whole cluster is considered a novelty. The computational complexity of Algorithm 2 is as follows:

- Calculating nearest neighbors: O(dn³), where d is the dimension and n is the number of samples

- DBSCAN clustering: O(n²)

- Identifying novelty: O(n)
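The cluster-level decision rule can be sketched in NumPy as below. The 0.5 ratio follows the majority rule stated above (Algorithm 2 marks a cluster when at least half of its members exceed the threshold); the function and argument names are hypothetical.

```python
import numpy as np


def label_novel_clusters(cluster_labels, errors, final_threshold, ratio=0.5):
    """Mark every point of a cluster as novelty (1) when at least `ratio` of
    its members have a reconstruction error above final_threshold.
    DBSCAN noise (label -1) is treated as one additional cluster."""
    cluster_labels = np.asarray(cluster_labels)
    errors = np.asarray(errors)
    decision = np.zeros(len(cluster_labels), dtype=int)
    for c in np.unique(cluster_labels):            # includes -1 if present
        members = cluster_labels == c
        exceed = (errors[members] > final_threshold).sum()
        if exceed >= ratio * members.sum():
            decision[members] = 1
    return decision


decision = label_novel_clusters([0, 0, 0, 1, 1, -1, -1, -1],
                                [0.1, 0.2, 0.9, 0.8, 0.95, 0.9, 0.1, 0.7], 0.5)
print(decision.tolist())   # [0, 0, 0, 1, 1, 1, 1, 1]
```

Note how the rule works at cluster level: the point with error 0.9 in cluster 0 stays normal, while the noise point with error 0.1 is labeled a novelty because most of the noise group exceeds the threshold.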

Algorithm 2 shows how to discover novelties step by step:

| Algorithm 2: Identify novelty. |
|---|
| 1: Input: set of points Z, set of points X, final_threshold |
| 2: Output: X with a novelty decision for each point |
| 3: nbrs ← NearestNeighbors(n_neighbors = 3).fit(Z) |
| 4: distance, _ ← nbrs.kneighbors(Z) |
| 5: kdist ← last column of distance, sorted in ascending order |
| 6: eps ← first extreme value (maximum change) of kdist |
| 7: cluster ← DBSCAN(eps).fit(Z) |
| 8: cluster_ids ← unique(cluster.labels_)  # noise label −1 counts as one cluster |
| 9: X[labels] ← cluster.labels_ |
| 10: dict_error ← {} |
| 11: for each i in cluster_ids do |
| 12: bool_values ← (X[labels] == i) |
| 13: cluster_i_set ← Z[bool_values] |
| 14: n_instance_in_cluster_i ← len(cluster_i_set) |
| 15: error_count ← 0 |
| 16: for j = 0 to n_instance_in_cluster_i − 1 do |
| 17: if cluster_i_set[j][error] > final_threshold then |
| 18: error_count ← error_count + 1 |
| 19: end if |
| 20: end for |
| 21: dict_error[i] ← error_count |
| 22: end for |
| 23: for each i in cluster_ids do |
| 24: cluster_i ← X[X[labels] == i] |
| 25: n_of_instance ← len(cluster_i) |
| 26: if dict_error[i] ≥ 0.5 × n_of_instance then |
| 27: X[decision] ← 1 for all rows in cluster_i  # novelty |
| 28: else |
| 29: X[decision] ← 0 for all rows in cluster_i  # normal |
| 30: end if |
| 31: end for |
| 32: return X |

Algorithm 3 summarizes the general architecture of the proposed DAE-DBC; its two components, dimensionality reduction and novelty identification, are detailed in Algorithms 1 and 2, respectively:

| Algorithm 3: DAE-DBC algorithm. |
|---|
| 1: Input: Set of points X |
| 2: Output: Y  # novelty decision for each point |
| 3: Z ← Dimensionality reduction(X) |
| 4: Y ← Identify novelty(Z, X) |
| 5: return Y |

High-dimensional data can cause problems for data mining and analysis [24]; this is known as the curse of dimensionality, a problem introduced by R. Bellman [33]. To avoid it, the proposed method clusters a low-dimensional representation of the input rather than the input data directly.

An advantage of the proposed method is that it calculates the error threshold in two stages, which makes novelty detection more precise. First, the autoencoder model is trained on all the data, and the initial threshold is estimated from its reconstruction error. Because this model is trained on all the data, including both novelties and normal points, using its threshold directly may degrade novelty detection. Therefore, the estimated threshold is used to keep only the data close to normal, and the autoencoder model is retrained on these data. Since the second model is trained on data containing fewer novelties than the first, it is used to estimate the final error threshold and to reduce dimensionality. Finally, clusters are formed in the final low-dimensional space; if most of the instances in a cluster are abnormal, the cluster is considered a novelty and all of its members are labeled abnormal.

4. Experimental Study

In this section, we compare the results of our DAE-DBC method with OC-SVM, eta-SVM, Robust-SVM [20], PCA with a Gaussian mixture model (GMM), PCA with a cumulative distribution function (CDF), kernel PCA with GMM, kernel PCA with CDF, Robust PCA [25], and versions of the proposed method in which some components are replaced by PCA, kernel PCA or K-means clustering.

4.1. Datasets

Our outlier benchmark datasets are from the Outlier Detection Datasets (ODDS) website [34]. The original versions of these benchmark datasets, with ground truth, are classification datasets from the UCI machine learning repository. Table 1 describes the benchmark datasets and the class labels that are treated as novelties in this experiment.

Table 1.

Description of datasets.

4.2. Methods Compared

We compare DAE-DBC, a deep autoencoder reconstruction error based method, with the following state-of-the-art unsupervised methods.

- OC-SVM. OC-SVM detects novelties by building a prediction model from normal data only [23]. Eta-SVM and Robust SVM, two enhanced OC-SVMs, were introduced by Mennatallah et al. [20], who provide an implementation as an extension of RapidMiner. We use this extension, configured with an RBF kernel function, for all three algorithms.

- PCA based methods. PCA is commonly used for outlier detection; it calculates an outlier score from the principal component scores and the reconstruction error, i.e., the orthogonal distance between each data point and its projection onto the principal components. A Gaussian mixture model or a cumulative distribution function is then used to make the novelty decision from the outlier score [35,36]. We compare the PCA-based methods PCA-GMM, PCA-CDF, Kernel PCA-GMM, Kernel PCA-CDF and Robust PCA [25].
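One common way to turn an outlier score into a novelty decision with a GMM is sketched below using scikit-learn's `GaussianMixture`. The assumption that the component with the larger mean is the novelty component is ours, and the procedures in [35,36] may differ in detail.

```python
import numpy as np
from sklearn.mixture import GaussianMixture


def gmm_decision(scores, seed=0):
    """Fit a two-component 1-D Gaussian mixture to outlier scores and flag
    points assigned to the component with the larger mean (assumed novelty)."""
    s = np.asarray(scores, dtype=float).reshape(-1, 1)
    gmm = GaussianMixture(n_components=2, random_state=seed).fit(s)
    novelty_component = int(np.argmax(gmm.means_.ravel()))
    return (gmm.predict(s) == novelty_component).astype(int)


rng = np.random.default_rng(0)
scores = np.concatenate([rng.normal(1.0, 0.2, 300),   # typical outlier scores
                         rng.normal(6.0, 0.5, 20)])   # unusually high scores
decision = gmm_decision(scores)
```

A CDF-based variant would instead fit a single distribution to the scores and flag points whose cumulative probability exceeds a chosen cut-off.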

We also present variants of the proposed method with modified components, to show that the components of DAE-DBC are more effective together. Specifically, we tested the proposed approach with K-means, with PCA and with KPCA.

- DAE-DBC (Proposed method). The deep autoencoder has 5 layers: {input layer (n neurons) → encoding layer (n/2 neurons) → encoding layer (1 neuron) → decoding layer (n/2 neurons) → decoding layer (n neurons)}, where n is the dimension of the input data. The bottleneck hidden layer, which holds the compressed representation of the original input, has one neuron, and the other hidden layers have about half as many neurons as the input layer. The sigmoid activation function is used for the encoding layers and the tanh activation function for the decoding layers. The reconstruction error and compressed representation are grouped by the density-based DBSCAN clustering algorithm, and the novelty threshold decides whether each group is a novelty.

- DAE-Kmeans. The proposed method with the K-means clustering algorithm in place of density-based clustering. K-means requires the number of clusters k, so we use silhouette analysis, a measure of clustering validity, to find the optimal k [37].

- PCA-DBC, KPCA-DBC. Because the proposed method is based on reconstruction error, these variants use PCA and KPCA in place of the AE to calculate the reconstruction error and the compressed representation.
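The choice of k in the DAE-Kmeans variant can be sketched with scikit-learn as follows; the candidate range 2 to 7 and the synthetic data are our assumptions.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.metrics import silhouette_score


def kmeans_best_k(Z, k_range=range(2, 8), seed=0):
    """Choose k by maximizing the mean silhouette coefficient."""
    best_k, best_score, best_labels = None, -1.0, None
    for k in k_range:
        labels = KMeans(n_clusters=k, n_init=10, random_state=seed).fit_predict(Z)
        score = silhouette_score(Z, labels)
        if score > best_score:
            best_k, best_score, best_labels = k, score, labels
    return best_k, best_labels


rng = np.random.default_rng(0)
centers = np.array([[0.0, 0.0], [5.0, 0.0], [0.0, 5.0]])
Z = np.vstack([c + rng.normal(0, 0.3, (50, 2)) for c in centers])
k, labels = kmeans_best_k(Z)
```

Unlike DBSCAN, every point is forced into some cluster here, so there is no separate group of unclustered points for the threshold rule to judge.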

4.3. Evaluation Metrics

The ground-truth labels are used to measure the performance of all the methods in the experiment. The receiver operating characteristic (ROC) curve and the area under it (AUC) are used to measure the accuracy of all tested methods. AUC summarizes accuracy in a single number, and a higher AUC indicates a better result.
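For reference, the AUC equals the probability that a randomly chosen novelty receives a higher score than a randomly chosen normal point. A minimal NumPy sketch of that definition (an illustration, not the evaluation code used in this study):

```python
import numpy as np


def auc_score(y_true, y_score):
    """AUC as P(score of a random novelty > score of a random normal point),
    with ties counted as one half."""
    y_true = np.asarray(y_true).astype(bool)
    y_score = np.asarray(y_score, dtype=float)
    pos, neg = y_score[y_true], y_score[~y_true]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))


print(auc_score([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))   # 0.75
print(auc_score([0, 0, 1, 1], [0, 1, 1, 1]))            # 0.75
```

When a method outputs hard 0/1 decisions, such as those of Algorithm 2, the ROC curve has a single interior point and the AUC reduces to (TPR + TNR) / 2; in the second call TPR = 1 and TNR = 0.5.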

4.4. Experimental Result

The experiments were carried out on a ThinkPad W510 with a first-generation Intel Core i7 processor and 8 GB of RAM. First, we compared Algorithm 1 and Algorithm 3 on 20 benchmark datasets. Applying Algorithm 1 yields two-dimensional features, consisting of the reduced dimension and the reconstruction error, as well as finalThreshold, which represents the novelty threshold. If Algorithm 1 is used without Algorithm 2, each point that exceeds finalThreshold is defined as a novelty. Using Algorithms 1 and 2 together instead groups the points first and labels a whole group as a novelty when its points exceed finalThreshold. Table 2 shows that the AUC score of novelty detection increases when Algorithms 1 and 2 are used together (Algorithm 3) instead of Algorithm 1 alone.

Table 2.

AUC score comparison between Algorithm 1 and Algorithm 3. The highest values of AUC scores are marked in bold.

Second, we compared the proposed algorithms with state-of-the-art algorithms on a total of 20 benchmark datasets. For OC-SVM, eta-SVM, Robust SVM and Robust PCA, we used the RapidMiner extensions proposed by Mennatallah et al.; the other algorithms were implemented in Python with Keras, a high-level neural network API written in Python and capable of running on top of TensorFlow. The deep autoencoder model was configured identically for the DAE-DBC and DAE-Kmeans algorithms. It was trained with the Adam algorithm [38] at a learning rate of 0.001 to minimize the mean squared error, with a batch size of 2 for 50 epochs.

In the implementation of Algorithm 1, the original CSV data file is loaded into a DataFrame from the pandas Python package; a DataFrame is a two-dimensional labeled data structure with columns of potentially different types. After the first autoencoder model is built, the decoded outputs and the reconstruction errors are stored in one-dimensional arrays. The data whose reconstruction error is below the initial threshold are stored in a new DataFrame. The second autoencoder model is trained on these data, and the final compressed data, comprising the reconstruction errors and the low-dimensional representation from the second AE model, are written to a CSV file. In the implementation of Algorithm 2, the compressed data file is loaded into a DataFrame. The cluster labels and the distances between points in the compressed representation are stored in one-dimensional arrays, and the number of novelties in each cluster is stored in a dictionary. The final novelty labels are appended to the original CSV data file.

The experimental results suggest that DAE-DBC can be used on data of various dimensionalities, since it achieved a higher average AUC than the other methods. A total of 13 methods were tested on 20 benchmark datasets; the highest AUC scores are marked in bold in Table 3. Ranked by average AUC score, DAE-DBC is first (0.7829), PCA-DBC second (0.6924) and OC-SVM third (0.6865). DAE-DBC shows an AUC score below 0.8 on the Vertebral, Optdigits, Letter, Arrhythmia and Annthyroid datasets; compared with the datasets on which it scores highly, these datasets show high variance between the mean values of some features. During testing, KPCA-CDF, KPCA-GMM and KPCA-DBC sometimes ran into memory errors, depending on the number of samples, whereas our proposed DAE-DBC algorithm was tested successfully on all of the data.

Table 3.

AUC score of compared methods on benchmark datasets. The highest values of AUC scores are marked in bold.

Table 4 shows the computation time of the algorithms used in our experimental study; all values are in seconds. On small datasets, the SVM based (OC-SVM, eta-SVM and Robust-SVM) and PCA based (Robust-PCA, PCA-CDF, KPCA-CDF, PCA-GMM, KPCA-GMM and KPCA-DBC) algorithms run faster than the autoencoder based (DAE-Kmeans and DAE-DBC) algorithms. However, our proposed algorithm achieves higher accuracy than the other algorithms, and on large datasets such as Shuttle it runs faster than the SVM based methods. In conclusion, our proposed algorithm is more accurate than the other algorithms, and its computation time is constant across all datasets.

Table 4.

Comparison of computation time of algorithms.

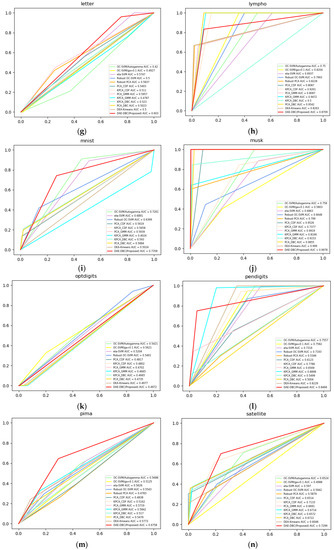

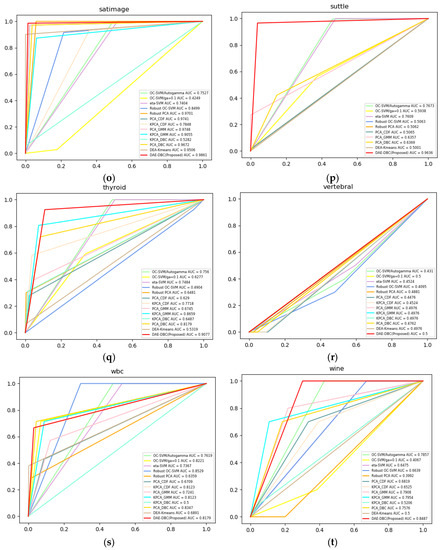

Figure 3 shows the ROC curves of the 13 compared methods on the 20 benchmark datasets from ODDS; the x-axis is the false positive rate and the y-axis is the true positive rate. DAE-DBC achieves a higher AUC score than the other methods on 9 of the 20 datasets.

Figure 3.

ROC curves of compared methods. (a) Annthyroid, 6 dimensions, novelty ratio 0.08; (b) BreastW, 9 dimensions, novelty ratio 0.53; (c) Arrhythmia, 274 dimensions, novelty ratio 0.17; (d) Cardio, 21 dimensions, novelty ratio 0.1; (e) Glass, 9 dimensions, novelty ratio 0.04; (f) Ionosphere, 33 dimensions, novelty ratio 0.56; (g) Letter, 32 dimensions, novelty ratio 0.06; (h) Lympho, 18 dimensions, novelty ratio; (i) Mnist, 100 dimensions, novelty ratio 0.1; (j) Musk, 72 dimensions, novelty ratio; (k) Optdigits, 64 dimensions, novelty ratio 0.03; (l) Pendigits, 16 dimensions, novelty ratio 0.02; (m) Pima, 8 dimensions, novelty ratio 0.5; (n) Satellite, 36 dimensions, novelty ratio 0.46; (o) Satimage, 36 dimensions, novelty ratio 0.01; (p) Shuttle, 9 dimensions, novelty ratio 0.07; (q) Thyroid, 6 dimensions, novelty ratio 0.02; (r) Vertebral, 6 dimensions, novelty ratio 0.14; (s) Wbc, 30 dimensions, novelty ratio 0.05; (t) Wine, 13 dimensions, novelty ratio 0.08.

To demonstrate the effectiveness of the components together, we tested several variants in which components are replaced by different algorithms. DAE-DBC uses an AE to reduce dimensionality and obtain the reconstruction error; the PCA-DBC and KPCA-DBC variants replace the AE with PCA and kernel PCA, respectively, and the DAE-Kmeans variant replaces density-based clustering with the K-means algorithm. DAE-DBC works better than these variants and than the state-of-the-art methods proposed in other studies.

5. Conclusions

In this paper, we have proposed the DAE-DBC approach for unsupervised novelty detection, which combines a deep autoencoder for low-dimensional representation with density-based clustering for novelty estimation. The approach can classify unlabeled datasets with different ratios of normal to novel data by calculating optimal parameters for the error threshold and the clustering algorithm. Our experiments demonstrated that the components of the proposed approach give more effective results when used together. On 20 public benchmark datasets, DAE-DBC achieved an average AUC 13.5 percent higher than that of 9 state-of-the-art algorithms. We therefore recommend this approach for unsupervised novelty detection on high-dimensional data.
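The conclusion refers to calculating the error threshold without labels. Otsu's threshold selection method, which appears in the reference list, is one standard way to split a one-dimensional quantity such as reconstruction error into a low and a high class. The Python sketch below applies Otsu's between-class-variance criterion directly to the sorted error values (equivalent to a histogram with one bin per distinct value). It is an illustration under that assumption, not a transcription of the DAE-DBC implementation, and `otsu_threshold` is a hypothetical name.

```python
from typing import List


def otsu_threshold(values: List[float]) -> float:
    """Otsu-style threshold for 1-D data.

    Chooses the split of the sorted values that maximizes the between-class
    variance w0 * w1 * (mu0 - mu1) ** 2 and returns the midpoint between the
    two values at that split. Values greater than the threshold form the
    high-error class.
    """
    xs = sorted(values)
    n = len(xs)
    if n < 2 or xs[0] == xs[-1]:
        raise ValueError("need at least two distinct values")

    total = sum(xs)
    best_var, best_t = -1.0, xs[0]
    prefix = 0.0
    for i in range(1, n):
        prefix += xs[i - 1]  # sum of the i smallest values (low class)
        if xs[i] == xs[i - 1]:
            continue  # no valid split between equal values
        w0, w1 = i / n, (n - i) / n
        mu0 = prefix / i
        mu1 = (total - prefix) / (n - i)
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_var = var_between
            best_t = (xs[i - 1] + xs[i]) / 2.0
    return best_t


if __name__ == "__main__":
    errors = [0.1, 0.12, 0.09, 0.11, 0.95, 1.05, 1.0]
    t = otsu_threshold(errors)  # about 0.535, between 0.12 and 0.95
    high = [e for e in errors if e > t]  # the three large errors
    print(t, high)
```

Working on sorted values avoids choosing a bin width, at the cost of an O(n log n) sort; for very large error arrays a fixed-width histogram, as in Otsu's original formulation, is the usual alternative.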

Author Contributions

T.A. and B.J. conceived and designed the experiments; T.A. performed the experiments; T.A. and B.J. analyzed the data; T.A. and B.J. wrote the paper; K.H.R. supervised, checked, gave comments and approved this work.

Funding

This research was supported by Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Science, ICT & Future Planning (No. 2017R1A2B4010826).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chandola, V.; Banerjee, A.; Kumar, V. Anomaly Detection: A Survey. ACM Comput. Surv. 2009, 41, 1–58.

- Tan, P.N.; Steinbach, M.; Kumar, V. Anomaly Detection. In Introduction to Data Mining; Goldstein, M., Harutunian, K., Smith, K., Eds.; Pearson Education Inc.: Boston, MA, USA, 2006; pp. 651–680. ISBN 0-321-32136-7.

- Chalapathy, R.; Menon, A.K.; Chawla, S. Anomaly Detection using One-Class Neural Networks. arXiv, 2018; arXiv:1802.06360.

- Pascoal, C.; Oliveira, M.R.; Valadas, R.; Filzmoser, P.; Salvador, P.; Pacheco, A. Robust Feature Selection and Robust PCA for Internet Traffic Anomaly Detection. In Proceedings of the IEEE INFOCOM, Orlando, FL, USA, 25–30 March 2012.

- Kim, D.P.; Yi, G.M.; Lee, D.G.; Ryu, K.H. Time-Variant Outlier Detection Method on Geosensor Networks. In Proceedings of the International Symposium on Remote Sensing, Daejeon, Korea, 29–31 October 2008.

- Lyon, A.; Minchole, A.; Martínez, J.P.; Laguna, P.; Rodriguez, B. Computational techniques for ECG analysis and interpretation in light of their contribution to medical advances. J. R. Soc. Interface 2018, 15.

- Anandakrishnan, A.; Kumar, S.; Statnikov, A.; Faruquie, T.; Xu, D. Anomaly Detection in Finance: Editors’ Introduction. In Proceedings of Machine Learning Research, Halifax, NS, Canada, 14 August 2017.

- Jin, C.H.; Park, H.W.; Wang, L.; Pok, G.; Ryu, K.H. Short-term Traffic Flow Forecasting using Unsupervised and Supervised Learning Techniques. In Proceedings of the 6th International Conference FITAT and 3rd International Symposium ISPM, Cheongju, Korea, 25–27 September 2013.

- Hauskrecht, M.; Batal, I.; Valko, M.; Visweswaran, S.; Cooper, C.F.; Clermont, G. Outlier detection for patient monitoring and alerting. J. Biomed. Inform. 2013, 46, 47–55.

- Cho, Y.S.; Moon, S.C.; Ryu, K.S.; Ryu, K.H. A Study on Clinical and Healthcare Recommending Service based on Cardiovascular Disease Pattern Analysis. Int. J. Biosci. Biotechnol. 2016, 8, 287–294.

- Chawla, N.V.; Japkowicz, N.; Kotcz, A. Editorial: Special Issue on Learning from Imbalanced Data Sets. SIGKDD Explor. Newsl. 2004, 6, 1–6.

- Erfani, S.M.; Rajasegarar, S.; Karunasekera, S.; Leckie, C. High-dimensional and large-scale anomaly detection using a linear one-class SVM with deep learning. Pattern Recognit. 2016, 58, 121–134.

- Zimek, A.; Schubert, E.; Kriegel, H.P. A survey on unsupervised outlier detection in high-dimensional numerical data. Stat. Anal. Data Min. 2012, 5, 363–387.

- Rousseeuw, P.J.; Hubert, M. Anomaly Detection by Robust Statistics. WIREs Data Min. Knowl. Discov. 2018, 8, 1–30.

- Hoffmann, H. Kernel PCA for novelty detection. Pattern Recognit. 2007, 40, 863–874.

- Zong, B.; Song, Q.; Min, M.R.; Cheng, W.; Lumezanu, C.; Cho, D.; Chen, H. Deep autoencoding gaussian mixture model for unsupervised anomaly detection. In Proceedings of the 6th International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Protopapadakis, E.; Voulodimos, A.; Doulamis, A.; Doulamis, N.; Dres, D.; Bimpas, M. Stacked Autoencoders for Outlier Detection in Over-the-Horizon Radar Signals. Comput. Intell. Neurosci. 2017, 2017.

- Kim, H.; Hirose, A. Unsupervised Fine Land Classification Using Quaternion Autoencoder-Based Polarization Feature Extraction and Self-Organizing Mapping. IEEE Trans. Geosci. Remote Sens. 2018, 56, 1839–1851.

- Elbatta, M.T.; Ashour, W.M. A Dynamic Method for Discovering Density Varied Clusters. Int. J. Signal Process. Image Process. Pattern Recognit. 2013, 6, 123–134.

- Amer, M.; Goldstein, M.; Abdennadher, S. Enhancing One-class Support Vector Machines for Unsupervised Anomaly Detection. In Proceedings of the ACM SIGKDD Workshop on Outlier Detection and Description, Chicago, IL, USA, 11 August 2013.

- Ghafoori, Z.; Erfani, S.M.; Rajasegarar, S.; Bezdek, J.C.; Karunasekera, S.; Leckie, C. Efficient Unsupervised Parameter Estimation for One-Class Support Vector Machines. IEEE Trans. Neural Netw. Learn. Syst. 2018, 1–14.

- Yin, S.; Zhu, X.; Jing, C. Fault Detection Based on a Robust One Class Support Vector Machine. Neurocomputing 2014, 145, 263–268.

- Schölkopf, B.; Williamson, R.; Smola, A.; Shawe-Taylor, J.; Platt, J. Support Vector Method for Novelty Detection. In Proceedings of the 12th International Conference on Neural Information Processing Systems, Cambridge, MA, USA, 29 November–4 December 1999.

- Zaki, M.J.; Meira, W., Jr. Data Mining and Analysis: Fundamental Concepts and Algorithms; Cambridge University Press: New York, NY, USA, 2014; ISBN 978-0-521-76633-3.

- Kwitt, R.; Hofmann, U. Robust Methods for Unsupervised PCA-based Anomaly Detection. In Proceedings of the IEEE/IST Workshop on “Monitoring, Attack Detection and Mitigation”, Tuebingen, Germany, 28–29 September 2006.

- Zhang, Y.D.; Zhang, Y.; Hou, X.X.; Chen, H.; Wang, S.H. Seven-Layer Deep Neural Network Based on Sparse Autoencoder for Voxelwise Detection of Cerebral Microbleed. Multimedia Tools Appl. 2018, 77, 10521–10538.

- Jia, W.; Muhammad, K.; Wang, S.H.; Zhang, Y.D. Five-Category Classification of Pathological Brain Images Based on Deep Stacked Sparse Autoencoder. Multimedia Tools Appl. 2017, 77, 1–20.

- Liou, C.; Cheng, W.; Liou, J.; Liou, D. Autoencoder for Words. Neurocomputing 2014, 139, 84–96.

- Guo, Y.; Liu, Y.; Oerlemans, A.; Lao, S.; Wu, S.; Lew, M.S. Deep Learning for Visual Understanding: A review. Neurocomputing 2016, 187, 27–48.

- Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Ester, M.; Kriegel, H.P.; Sander, J.; Xu, X. A Density-Based Algorithm for Discovering Clusters in Large Spatial Databases with Noise. KDD-96 Proc. 1996, 96, 226–231.

- Hodge, V.; Austin, J. A Survey of Outlier Detection Methodologies. Artif. Intell. Rev. 2004, 22, 85–126.

- Bellman, R.E. Adaptive Control Processes: A Guided Tour; Princeton University Press: Princeton, NJ, USA, 1961; ISBN 9781400874668.

- Rayana, S. ODDS. Stony Brook University, Department of Computer Sciences, 2016. Available online: http://odds.cs.stonybrook.edu/ (accessed on 30 July 2018).

- Yu, J. Fault Detection Using Principal Components-Based Gaussian Mixture Model for Semiconductor Manufacturing Processes. IEEE Trans. Semicond. Manuf. 2011, 24, 432–444.

- Kim, J.; Grauman, K. Observe locally, infer globally: A space-time MRF for detecting abnormal activities with incremental updates. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009.

- Rousseeuw, P.J. Silhouettes: A Graphical Aid to the Interpretation and Validation of Cluster Analysis. J. Comput. Appl. Math. 1987, 20, 53–65.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).