Accelerated DEVS Simulation Using Collaborative Computation on Multi-Cores and GPUs for Fire-Spreading IoT Sensing Applications

Abstract

1. Introduction

2. Related Works

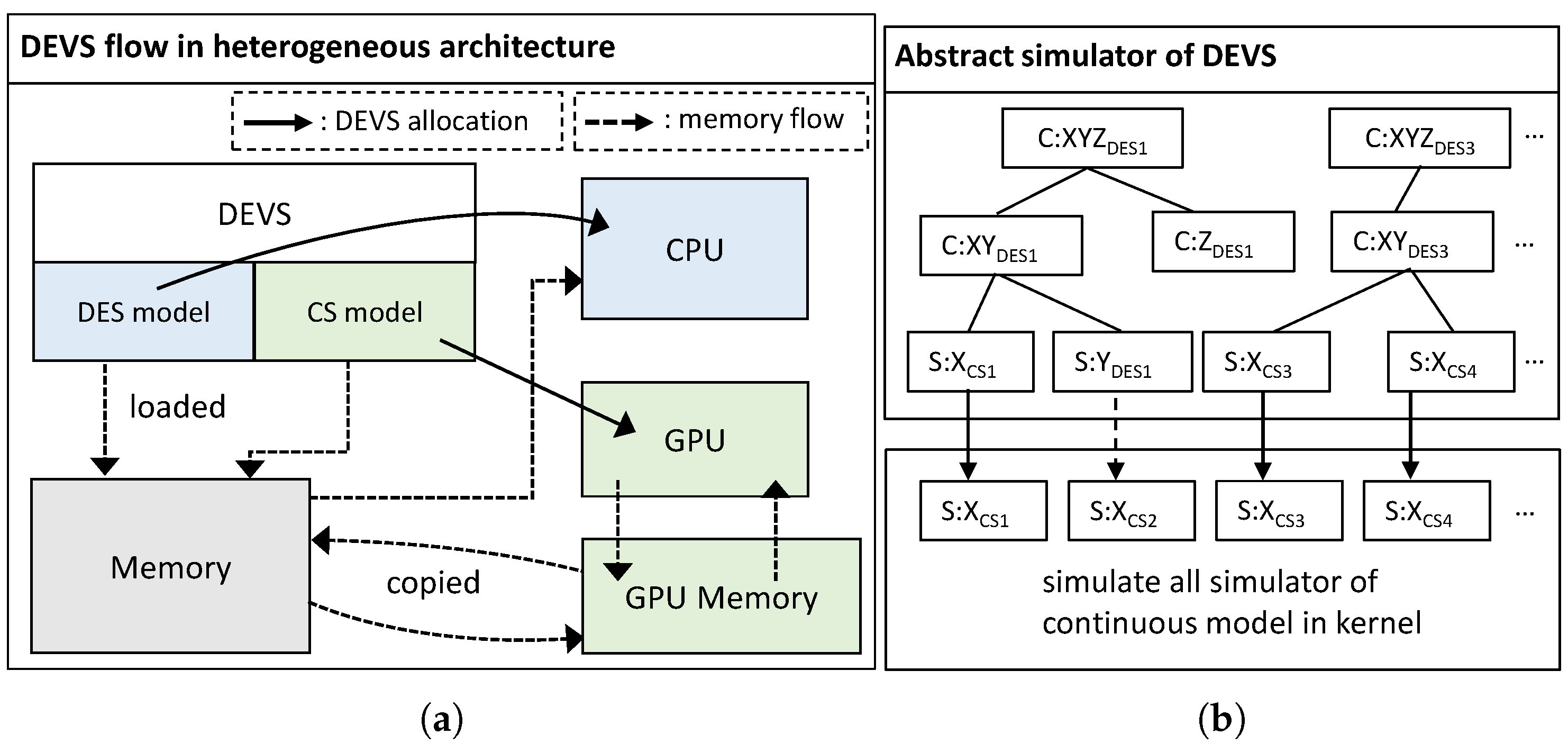

3. Background

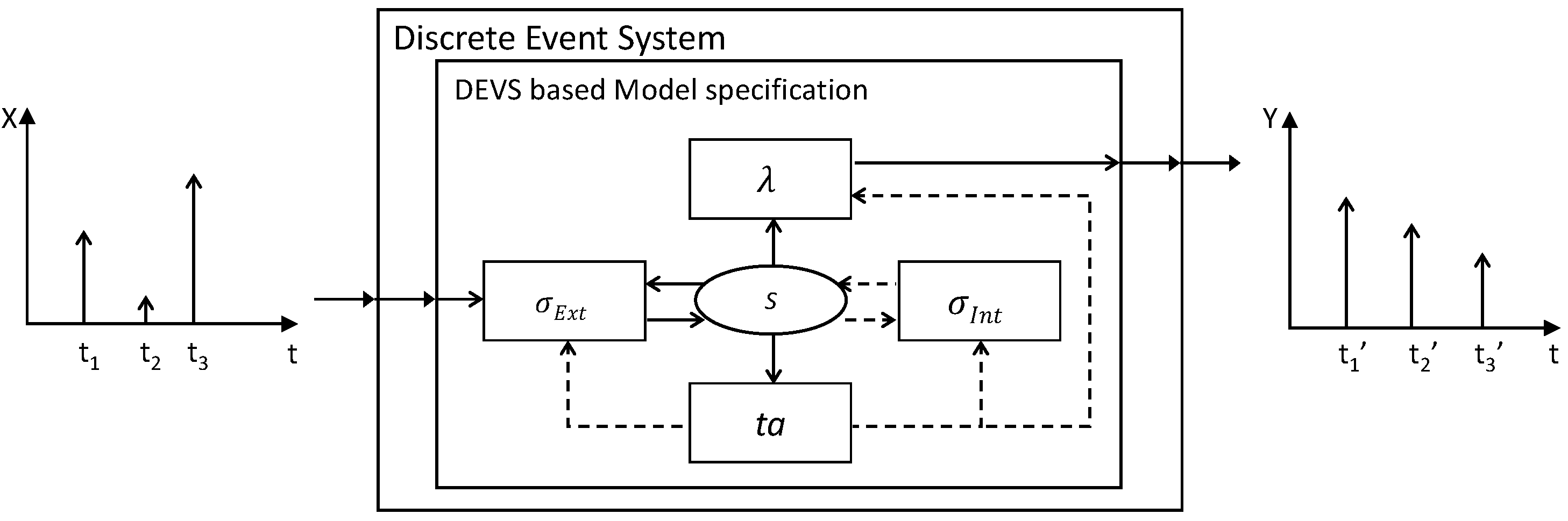

3.1. Discrete Event System

3.2. Continuous System

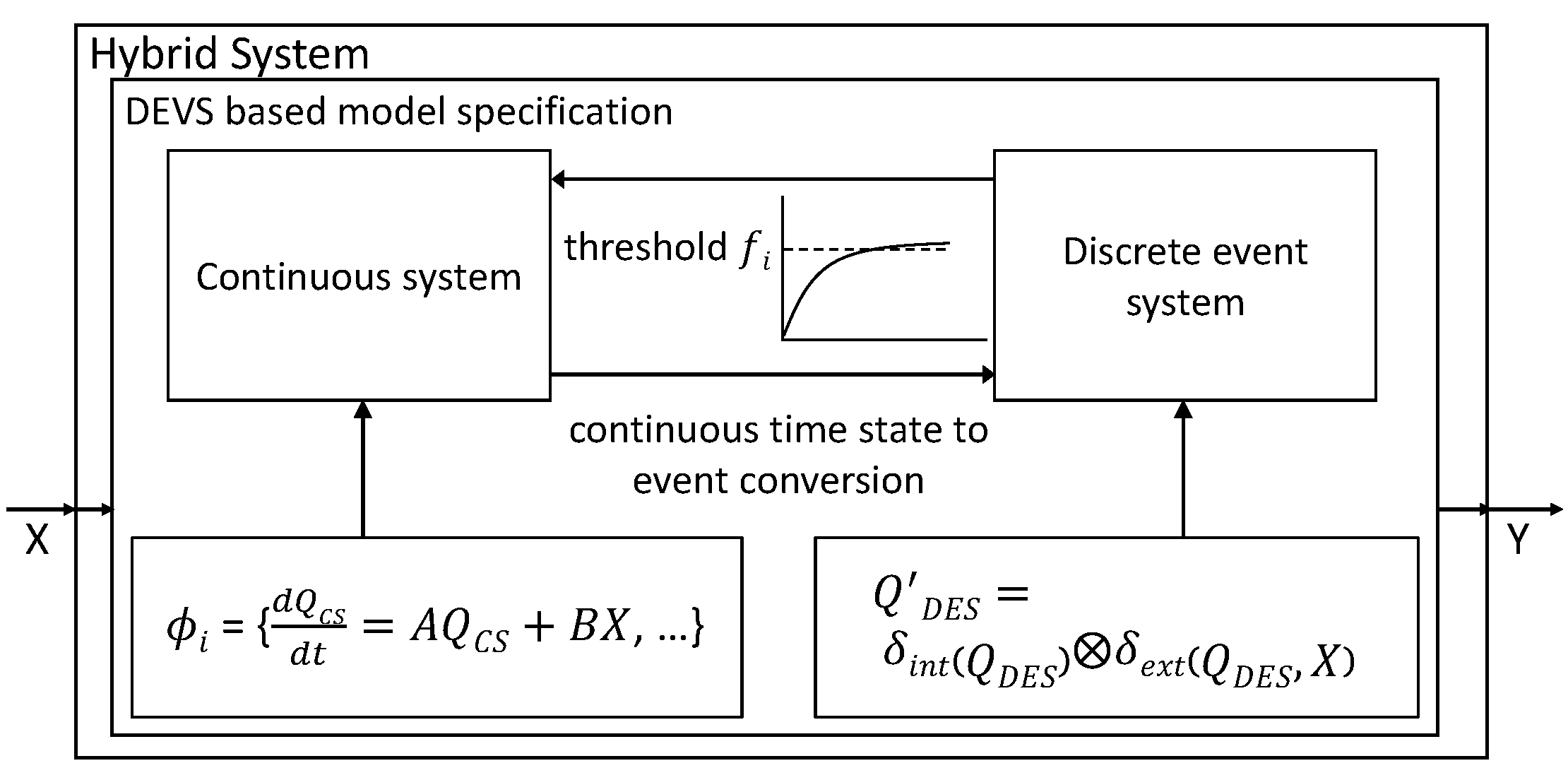

3.3. Hybrid System

3.4. CUDA Multi-Process Service

4. Proposed Architecture

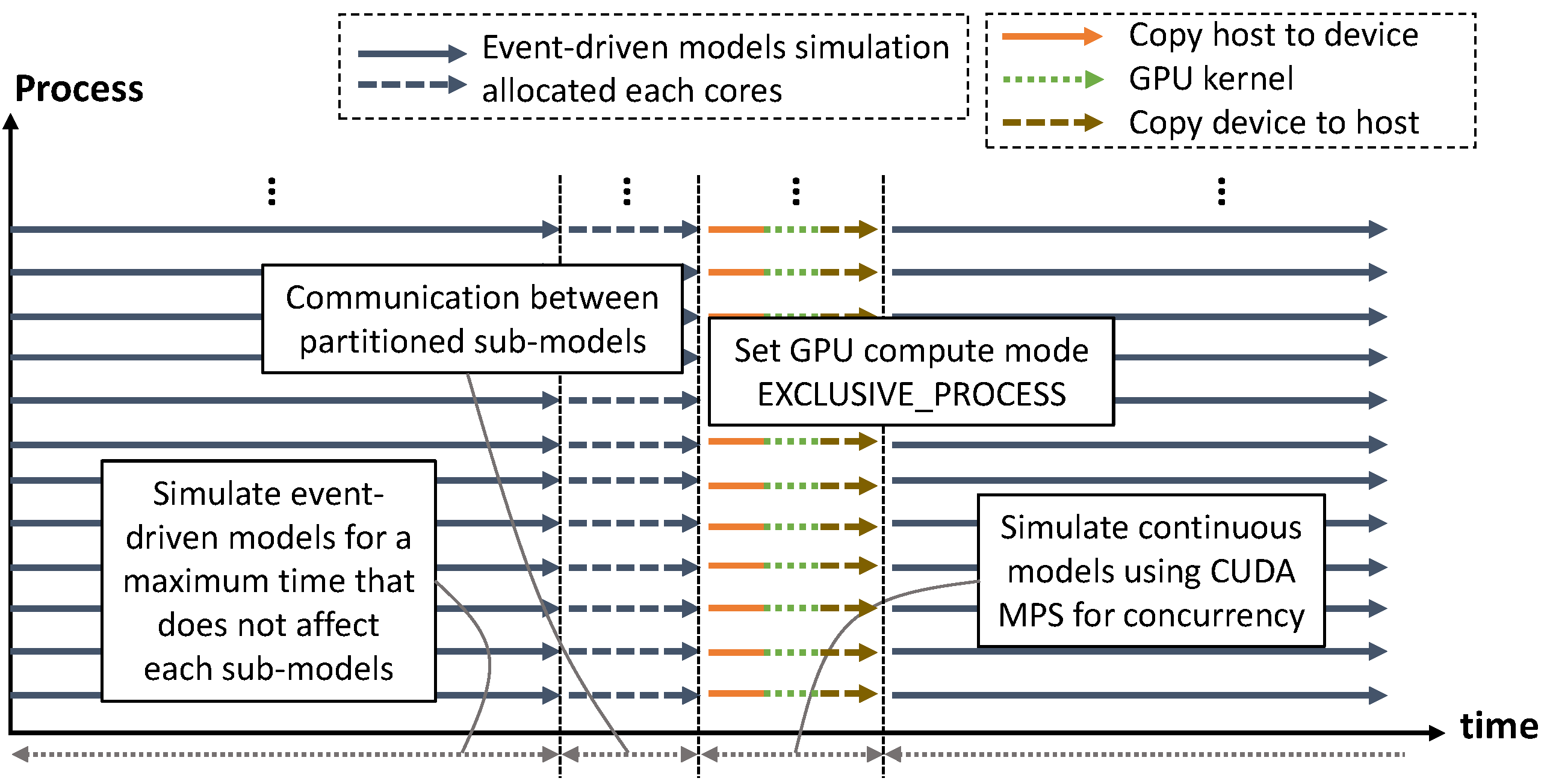

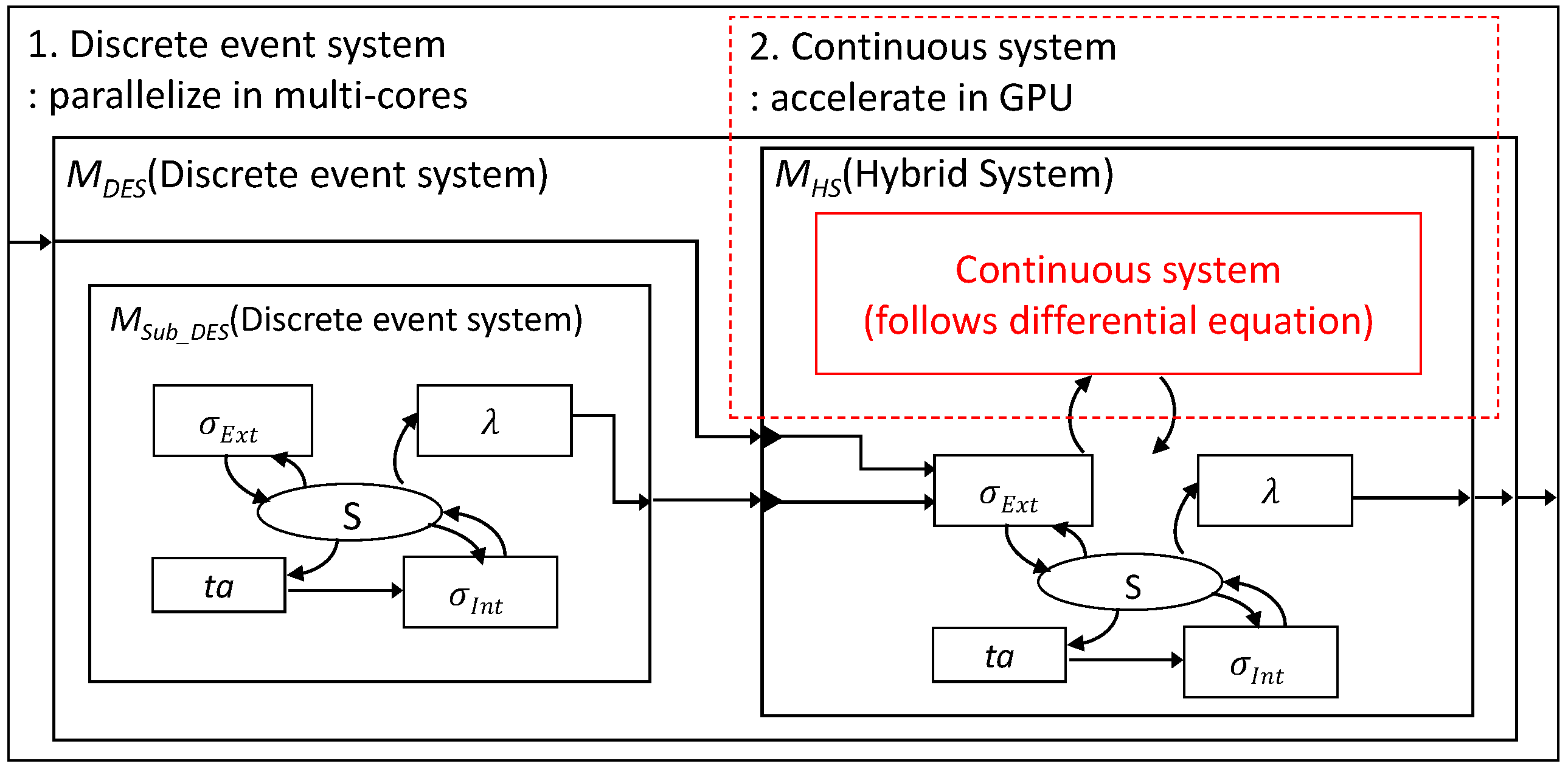

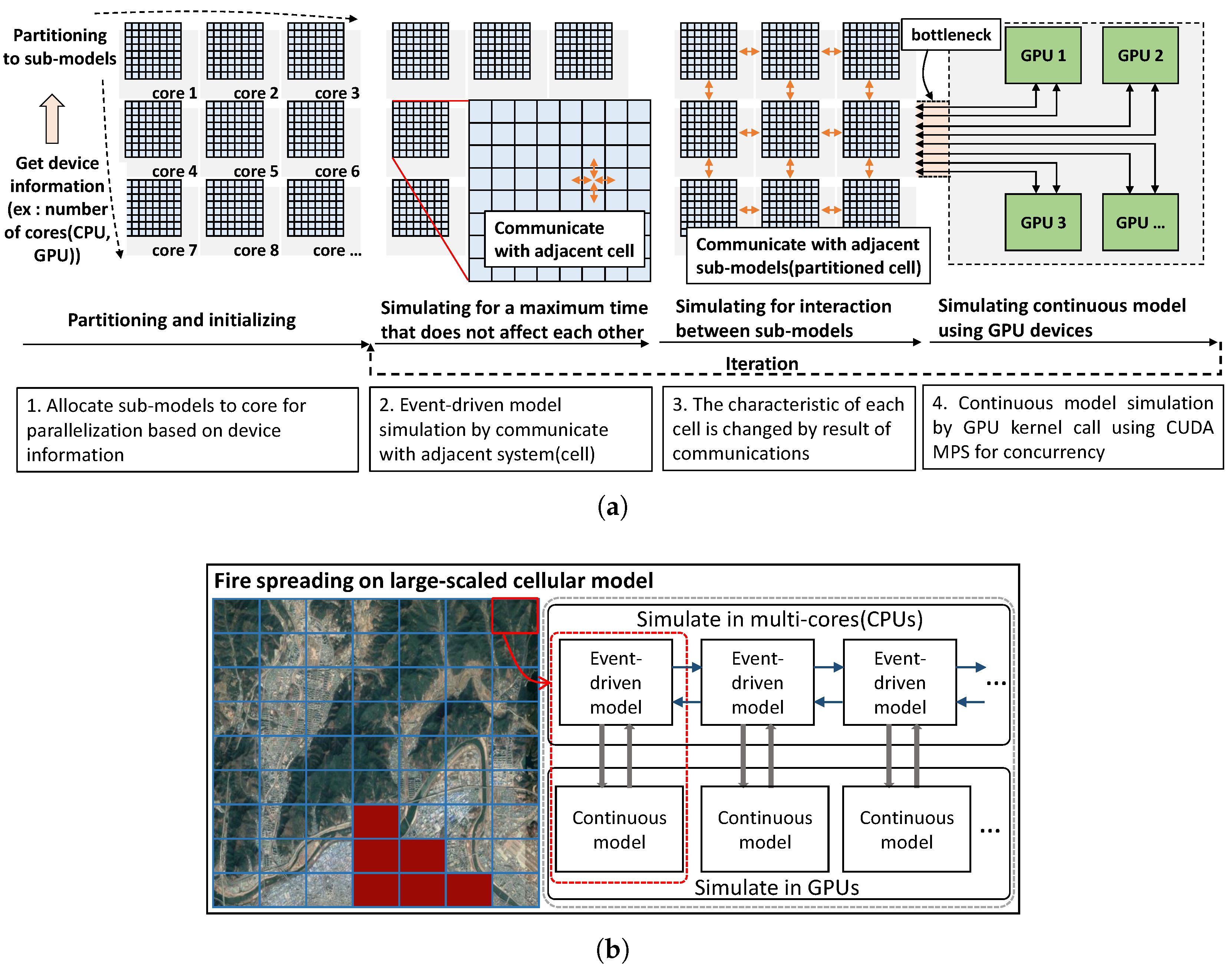

Multi-Core and GPU Tightly Coupled Simulation

| Algorithm 1 Proposed DEVS simulation cycle |
|---|
| for number of K cells |
| for discrete-event simulation |
| coordinator.send() |
| simulator.reply() |
| coordinator.compare() |
| coordinator.send() |
| simulator.check() |
| if imminent |
| simulator.computes() |
| end if |
| simulator.send() |
| simulator.computes() |
| end for |
| for continuous-time simulation by GPU kernel |
| for i = 1 to K, i is calculated by thread, block index |
| simulator.compute() |
| end for |
| end for |
| end for |
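
The cycle in Algorithm 1 can be illustrated with a small CPU-only sketch. It is not the authors' implementation: the class `CellSimulator`, the functions `discrete_phase` and `continuous_phase`, the fixed event `period`, and the constant `rate` are all hypothetical names chosen for illustration. The discrete-event phase mirrors the send/reply/compare/check steps, and the continuous phase emulates a GPU kernel launch by deriving the cell index `i` from a block index and a thread index, as is conventional in CUDA (`i = block * block_dim + thread`). Output messages between simulators are omitted for brevity.

```python
import math
from dataclasses import dataclass


@dataclass
class CellSimulator:
    """Hypothetical per-cell simulator with one discrete and one continuous part."""
    next_event_time: float  # time of the next scheduled internal event
    value: float = 0.0      # continuous state (for example, a temperature)
    fired: int = 0          # number of discrete transitions taken so far

    def reply(self) -> float:
        # simulator.reply(): report the next event time to the coordinator
        return self.next_event_time

    def is_imminent(self, t_min: float) -> bool:
        # simulator.check(): imminent if scheduled at the global minimum time
        return self.next_event_time == t_min

    def discrete_compute(self, period: float) -> None:
        # Assumed internal transition: count the event and reschedule
        self.fired += 1
        self.next_event_time += period

    def continuous_compute(self, dt: float, rate: float) -> None:
        # Assumed continuous model dv/dt = rate, integrated with explicit Euler
        self.value += rate * dt


def discrete_phase(cells, period):
    """Coordinator side of the discrete-event phase; returns the event time."""
    times = [c.reply() for c in cells]  # coordinator.send() / simulator.reply()
    t_min = min(times)                  # coordinator.compare()
    for c in cells:                     # coordinator.send() / simulator.check()
        if c.is_imminent(t_min):
            c.discrete_compute(period)
    return t_min


def continuous_phase(cells, dt, rate, block_dim=4):
    """CPU emulation of a one-dimensional kernel launch over K cells."""
    k = len(cells)
    grid_dim = math.ceil(k / block_dim)
    for block in range(grid_dim):
        for thread in range(block_dim):
            i = block * block_dim + thread  # index from block and thread ids
            if i < k:                       # guard threads past the last cell
                cells[i].continuous_compute(dt, rate)


if __name__ == "__main__":
    cells = [CellSimulator(next_event_time=float(1 + n % 3)) for n in range(10)]
    t = discrete_phase(cells, period=3.0)
    continuous_phase(cells, dt=0.1, rate=2.0)
    print(t, sum(c.fired for c in cells))
```

On a real GPU the two nested loops in `continuous_phase` disappear: each thread evaluates the index expression once and updates a single cell, which is why the bounds guard is needed when K is not a multiple of the block size.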

5. Implementation

5.1. Simulation in Multi-Cores and GPUs

5.2. Fire Spreading Based on a Large-Scale Cellular Model

- is the set of inputs where represents vector value of neighbor cells;

- the set of outputs;

- is the set of DES model states;

- ,

- ,

- ,

- set of which used in internal transition;

- is the set of threshold sensors;

- the set of outputs which represent continuous values;

- is the set of differential equations.
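
To make the roles of these components concrete, the following is a minimal sketch of one hybrid cell, assuming a single continuous variable and a single threshold. Everything in it is hypothetical and chosen only for illustration: the class name `HybridCell`, the phase names, the ignition level, the coupling constant, and the differential equation itself (relaxation toward the mean neighbor temperature) are not taken from the model above. The sketch shows the general pattern: the neighbor vector is the input, a differential equation advances the continuous output, and a threshold sensor turns a level crossing into a discrete state change.

```python
from dataclasses import dataclass


@dataclass
class HybridCell:
    """Hypothetical hybrid cell: discrete phase plus one continuous output."""
    phase: str = "unburned"     # discrete state (assumed phase names)
    temperature: float = 300.0  # continuous output in kelvin (assumed unit)
    ignition: float = 573.0     # threshold sensor level (assumed value)

    def derivative(self, neighbor_temps, coupling=0.05):
        # Assumed ODE: dT/dt = coupling * (mean neighbor temperature - T)
        mean = sum(neighbor_temps) / len(neighbor_temps)
        return coupling * (mean - self.temperature)

    def step(self, neighbor_temps, dt):
        """Advance the continuous part by dt; return True on a threshold crossing."""
        before = self.temperature
        self.temperature += dt * self.derivative(neighbor_temps)  # explicit Euler
        crossed = before < self.ignition <= self.temperature      # upward crossing
        if crossed and self.phase == "unburned":
            self.phase = "burning"  # state event drives the discrete transition
        return crossed


if __name__ == "__main__":
    cell = HybridCell(temperature=570.0)
    print(cell.step([700.0] * 4, dt=1.0), cell.phase)
```

In a full simulator the returned crossing flag would be delivered to the discrete-event part as an event rather than applied in place; it is handled inline here to keep the sketch short.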

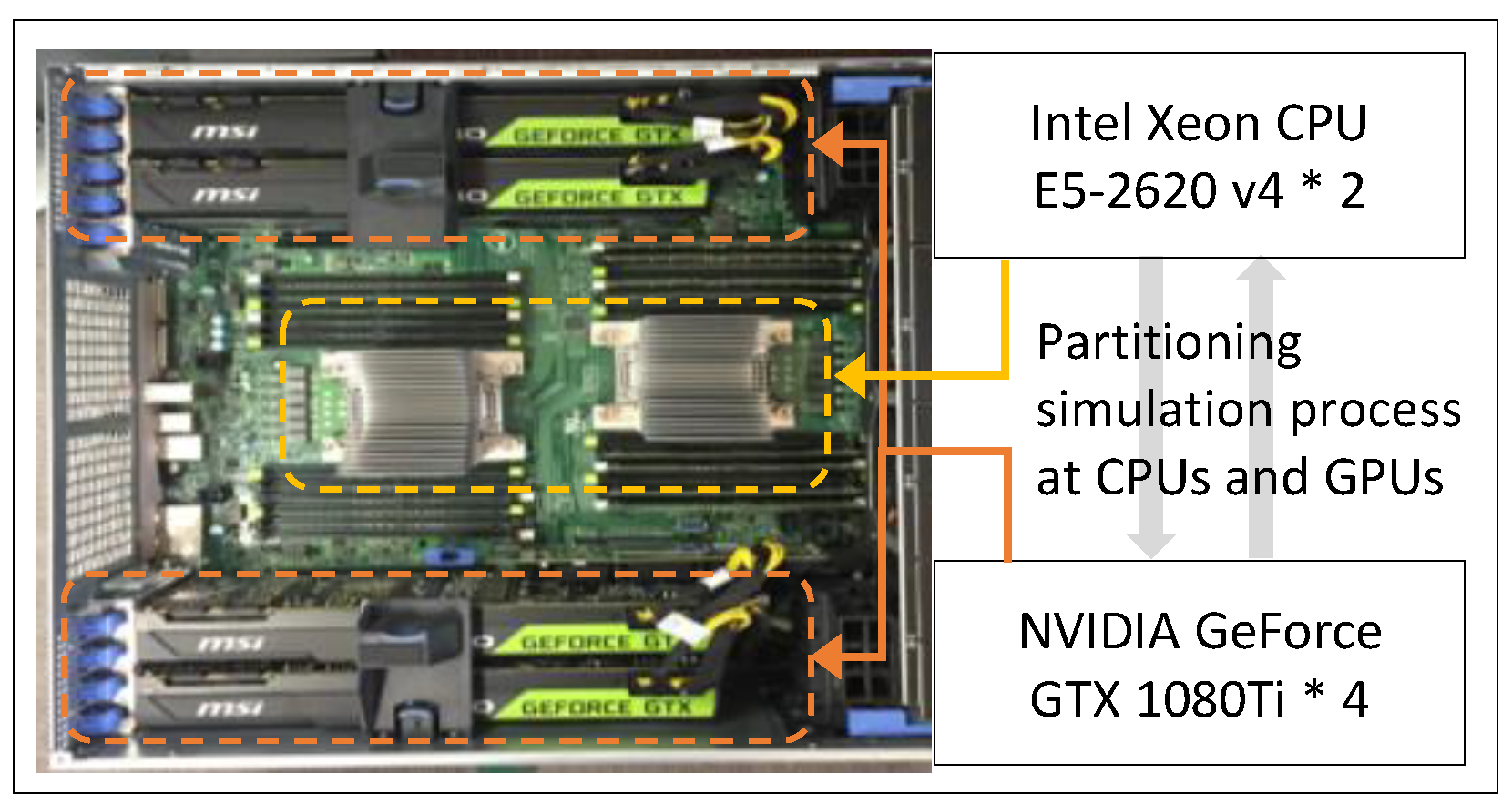

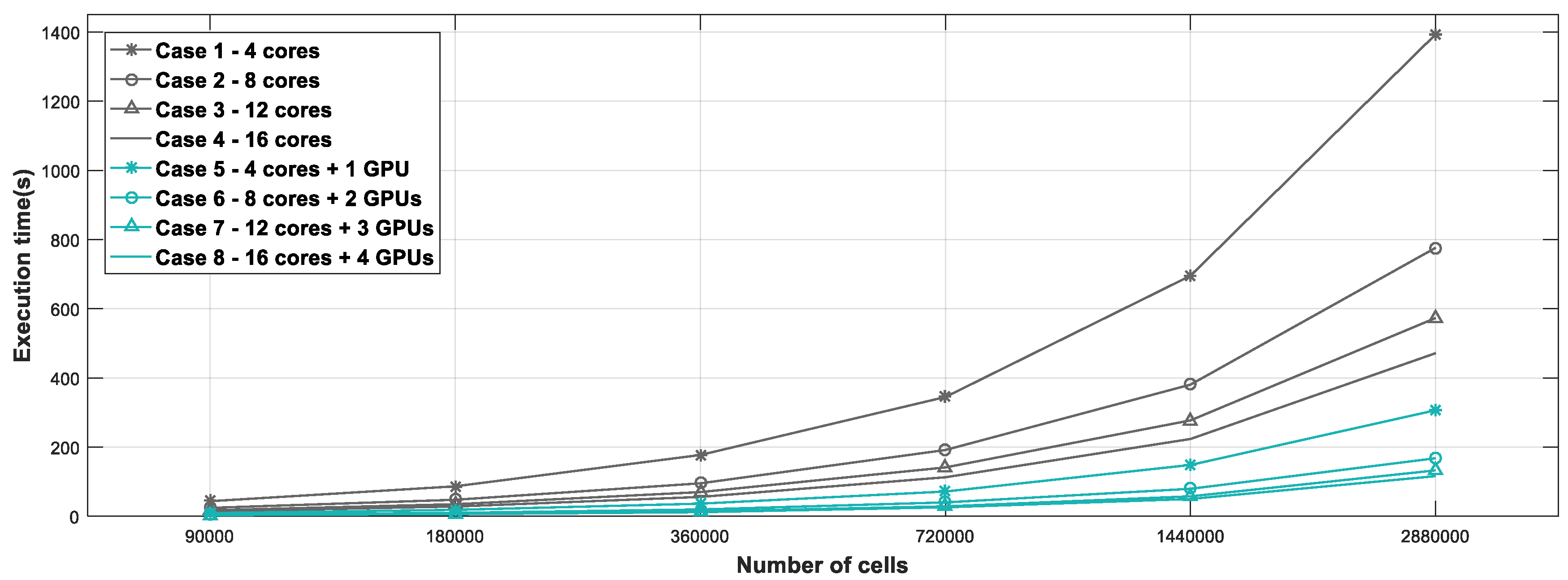

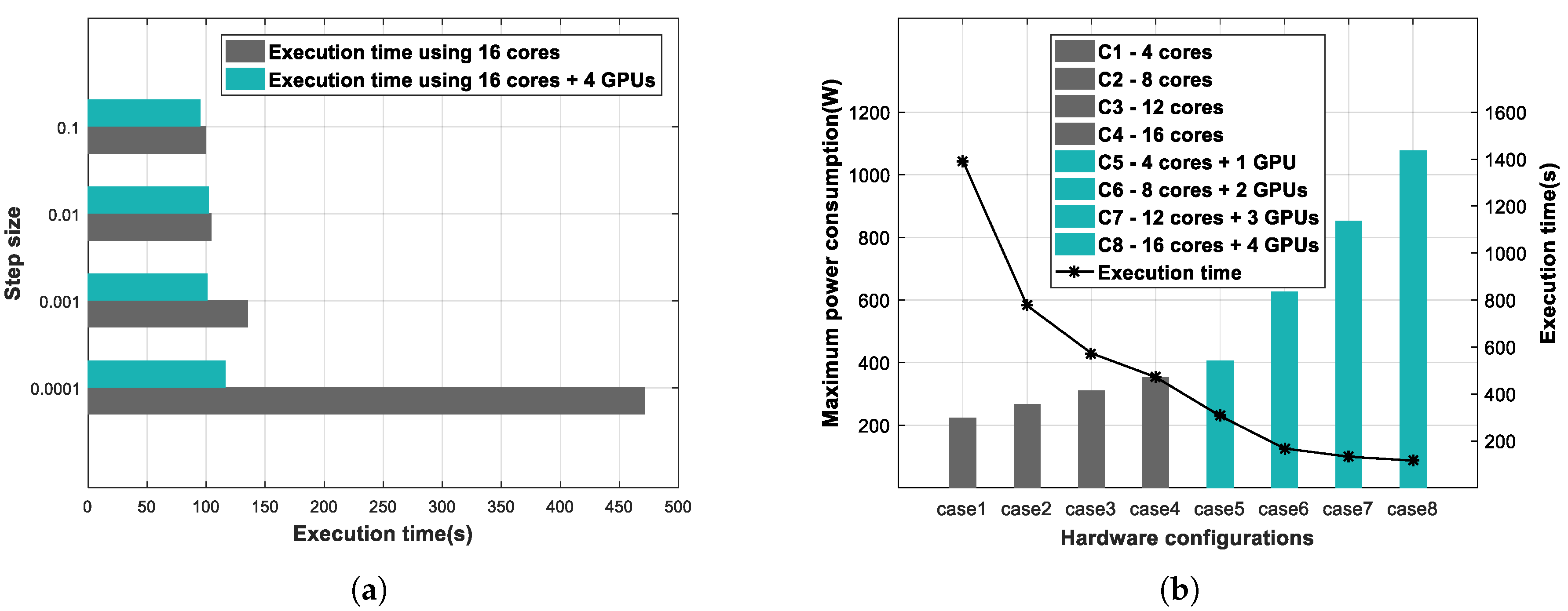

6. Experimental Results

7. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DEVS | Discrete Event System Specification |
| IoT | Internet of Things |
| DES | Discrete Event System |
| CS | Continuous System |
| CUDA | Compute Unified Device Architecture |
| CUDA MPS | CUDA Multi-Process Service |


| Devices | Model | Cores |
|---|---|---|
| CPU | Intel Xeon CPU E5-2620 v4 × 2 | 16 |
| GPU | NVIDIA GeForce GTX 1080Ti × 4 | 14,336 |
| Memory | 16 GB × 8 | - |

| Case | Devices |
|---|---|
| 1 | 4 CPU cores |
| 2 | 8 CPU cores |
| 3 | 12 CPU cores |
| 4 | 16 CPU cores |
| 5 | 4 CPU cores, 3584 GPU cores |
| 6 | 8 CPU cores, 7168 GPU cores |
| 7 | 12 CPU cores, 10,752 GPU cores |
| 8 | 16 CPU cores, 14,336 GPU cores |

| Hardware Configuration | Without the CUDA MPS | With the CUDA MPS | Reduction Rate |
|---|---|---|---|
| 16 cores + 1 GPU | 2.206 s | 1.227 s | 44.369% |
| 16 cores + 2 GPUs | 1.289 s | 0.704 s | 45.339% |
| 16 cores + 3 GPUs | 0.951 s | 0.565 s | 40.571% |
| 16 cores + 4 GPUs | 0.666 s | 0.537 s | 19.354% |
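
The reduction rate in the table above appears to be the relative decrease in execution time, (T_without − T_with) / T_without. That formula is an assumption inferred from the column name, and the function name `reduction_rate` below is hypothetical. The sketch recomputes the rate from the rounded times in the table; the results agree with the listed percentages to within about 0.05 percentage points, which would be consistent with the listed values having been computed from unrounded measurements.

```python
def reduction_rate(t_without: float, t_with: float) -> float:
    """Relative execution-time reduction in percent (assumed definition)."""
    return 100.0 * (t_without - t_with) / t_without


# Times in seconds, copied from the table: GPUs -> (without MPS, with MPS)
TIMINGS = {
    1: (2.206, 1.227),
    2: (1.289, 0.704),
    3: (0.951, 0.565),
    4: (0.666, 0.537),
}

if __name__ == "__main__":
    for gpus, (t_without, t_with) in TIMINGS.items():
        rate = reduction_rate(t_without, t_with)
        print(f"16 cores + {gpus} GPU(s): {rate:.3f}%")
```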

| Hardware Configuration | Memory Usage in GPU |
|---|---|
| 4 cores + 1 GPU | 686 MB |
| 8 cores + 2 GPUs | 1308 MB |
| 12 cores + 3 GPUs | 1938 MB |
| 16 cores + 4 GPUs | 2552 MB |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, S.; Cho, J.; Park, D. Accelerated DEVS Simulation Using Collaborative Computation on Multi-Cores and GPUs for Fire-Spreading IoT Sensing Applications. Appl. Sci. 2018, 8, 1466. https://doi.org/10.3390/app8091466
