Partial Block Scheme and Adaptive Update Model for Kernelized Correlation Filters-Based Object Tracking

Abstract

1. Introduction

2. Related Works

Discriminative Correlation Filter

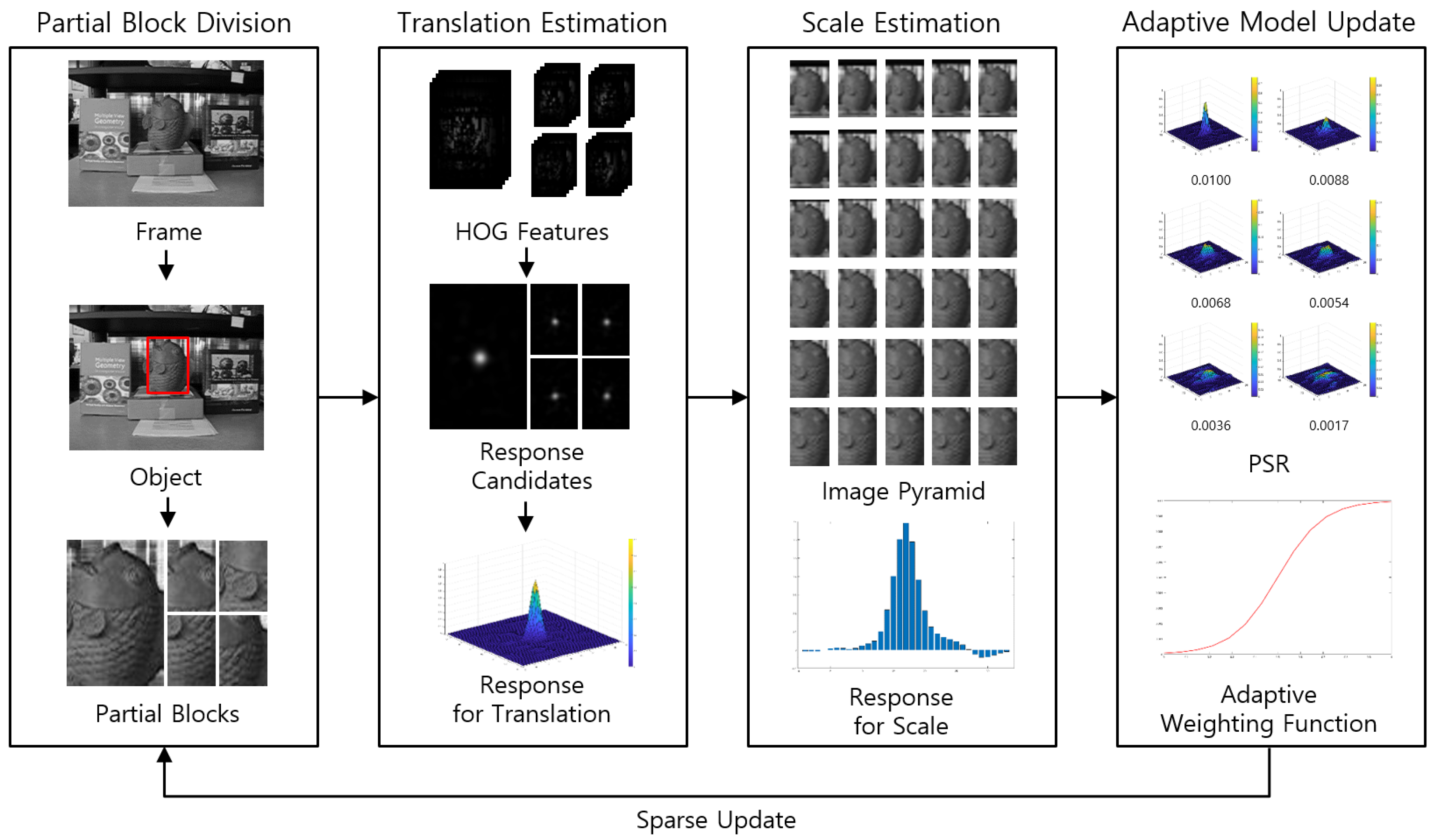

3. Proposed Method

- Partial block separation: partial blocks are extracted from the whole block that covers the object. A parameter controls the size and position of each partial block.

- Translation estimation: a kernelized correlation filter computes a response map for every block, and the translation response map is then selected from these candidates.

- Scale estimation: the object scale is estimated in scale space, which yields the scale factor.

- Adaptive model update: the model is updated with an adaptive learning rate that accounts for the reliability of the responses.

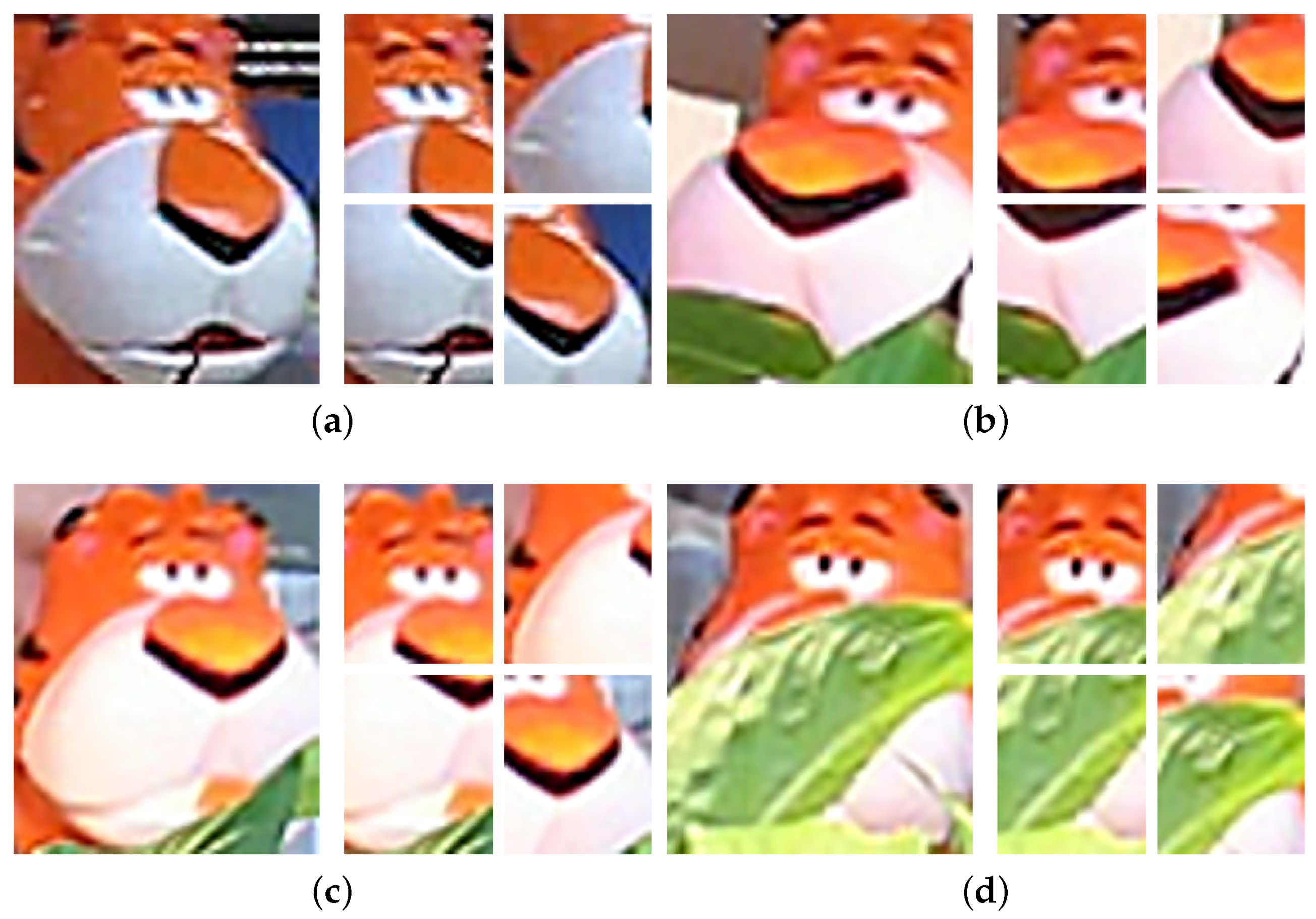

3.1. Partial Block Scheme

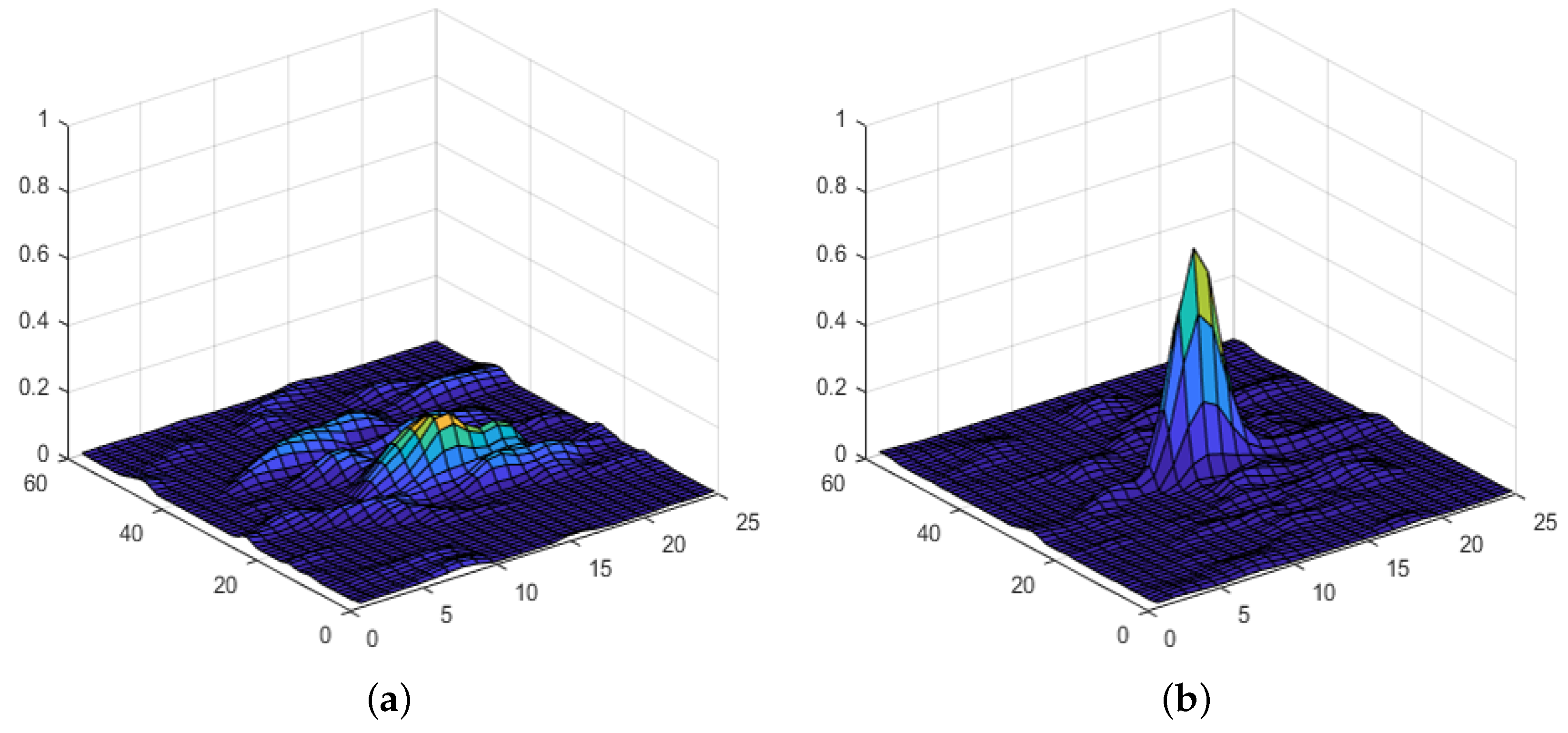
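
The overview in Section 3 states that partial blocks are separated from the whole block and that a parameter adjusts their size and position. The sketch below illustrates one possible layout under assumptions that are not taken from the paper: four partial blocks (top, bottom, left, right) and a single hypothetical parameter `ratio` giving the fraction of the whole block that each partial block covers. The names `Box` and `partial_blocks` are likewise illustrative.

```python
from typing import List, NamedTuple


class Box(NamedTuple):
    """Axis-aligned box: top-left corner (x, y), width w, height h."""
    x: float
    y: float
    w: float
    h: float


def partial_blocks(whole: Box, ratio: float = 0.5) -> List[Box]:
    """Split `whole` into four partial blocks (top, bottom, left, right).

    `ratio` is a hypothetical parameter: the fraction of the whole block's
    height (top/bottom) or width (left/right) that each partial block keeps.
    Changing it changes both the size and the position of the blocks.
    """
    if not 0.0 < ratio <= 1.0:
        raise ValueError("ratio must lie in (0, 1]")
    ph = whole.h * ratio  # height of the top and bottom blocks
    pw = whole.w * ratio  # width of the left and right blocks
    return [
        Box(whole.x, whole.y, whole.w, ph),                  # top
        Box(whole.x, whole.y + whole.h - ph, whole.w, ph),   # bottom
        Box(whole.x, whole.y, pw, whole.h),                  # left
        Box(whole.x + whole.w - pw, whole.y, pw, whole.h),   # right
    ]


blocks = partial_blocks(Box(10, 20, 40, 80), ratio=0.5)
```

With `ratio=0.5` the four blocks tile the object in halves; larger values make neighbouring blocks overlap, which is one way a single parameter could trade block size against coverage.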

3.2. Translation Estimation
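
The overview in Section 3 describes translation estimation as computing a kernelized correlation filter response for every block and then selecting one response map. As a rough illustration, the NumPy sketch below follows the standard single-channel Gaussian-kernel KCF formulation of Henriques et al. [21], not necessarily the exact variant used in this paper. The selection rule in `select_block` (highest response peak) is an assumption, as are the parameter values and all function names; feature extraction and cosine windowing are omitted.

```python
import numpy as np


def gaussian_label(shape, sigma):
    """Gaussian regression target whose peak is wrapped to index (0, 0)."""
    h, w = shape
    ys = np.arange(h)
    xs = np.arange(w)
    ys = np.minimum(ys, h - ys)  # cyclic distance to row 0
    xs = np.minimum(xs, w - xs)  # cyclic distance to column 0
    return np.exp(-0.5 * (ys[:, None] ** 2 + xs[None, :] ** 2) / sigma ** 2)


def gaussian_correlation(x, z, sigma):
    """Gaussian kernel between z and every cyclic shift of x (via FFT)."""
    cross = np.real(np.fft.ifft2(np.conj(np.fft.fft2(x)) * np.fft.fft2(z)))
    dist = (np.sum(x ** 2) + np.sum(z ** 2) - 2.0 * cross) / x.size
    return np.exp(-np.maximum(dist, 0.0) / sigma ** 2)


def train(x, y, sigma=0.5, lam=1e-4):
    """Kernel ridge regression in the Fourier domain; returns alpha_hat."""
    k = gaussian_correlation(x, x, sigma)
    return np.fft.fft2(y) / (np.fft.fft2(k) + lam)


def detect(alpha_f, x, z, sigma=0.5):
    """Response map of the learned filter (template x) on a new patch z."""
    k = gaussian_correlation(x, z, sigma)
    return np.real(np.fft.ifft2(alpha_f * np.fft.fft2(k)))


def peak_shift(response):
    """Signed (dy, dx) of the response peak, unwrapping cyclic indices."""
    h, w = response.shape
    r, c = np.unravel_index(np.argmax(response), response.shape)
    dy = r - h if r > h // 2 else r
    dx = c - w if c > w // 2 else c
    return int(dy), int(dx)


def select_block(responses):
    """Assumed rule: pick the block whose response map has the highest peak."""
    return int(np.argmax([r.max() for r in responses]))


# Toy check: a patch shifted cyclically by (3, -5) should be located there.
rng = np.random.default_rng(0)
template = rng.standard_normal((32, 32))
shifted = np.roll(template, (3, -5), axis=(0, 1))
alpha_f = train(template, gaussian_label(template.shape, sigma=2.0))
shift = peak_shift(detect(alpha_f, template, shifted))
```

In a part-based tracker each block would hold its own `template` and `alpha_f`, and the shift taken from the selected block would move the whole bounding box.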

3.3. Scale Estimation
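
The overview in Section 3 describes scale estimation as searching a scale space and computing a scale factor. The sketch below shows that idea in its simplest form, assuming a geometric scale pyramid in the style of DSST [22]. The defaults of 33 scales with a step of 1.02 are the commonly used DSST settings, not values confirmed by this paper, and the per-scale `scores` are assumed to come from some scale filter that is not shown.

```python
def scale_factors(num_scales=33, step=1.02):
    """Geometric scale pyramid: step**n for n = -(S-1)/2 ... (S-1)/2."""
    if num_scales % 2 == 0:
        raise ValueError("num_scales must be odd so that factor 1.0 is included")
    half = (num_scales - 1) // 2
    return [step ** n for n in range(-half, half + 1)]


def estimate_scale(current_scale, scores, factors):
    """Pick the factor with the highest score and apply it to the scale."""
    if len(scores) != len(factors):
        raise ValueError("one score per scale factor is required")
    best = max(range(len(scores)), key=scores.__getitem__)
    return current_scale * factors[best], factors[best]


factors = scale_factors(num_scales=5, step=1.1)
# Hypothetical scores, one per candidate scale; the fourth candidate wins.
new_scale, factor = estimate_scale(2.0, [0.1, 0.2, 0.3, 0.9, 0.4], factors)
```

Because the factors are relative to the current size, the estimated scale accumulates multiplicatively from frame to frame.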

3.4. Adaptive Update Model

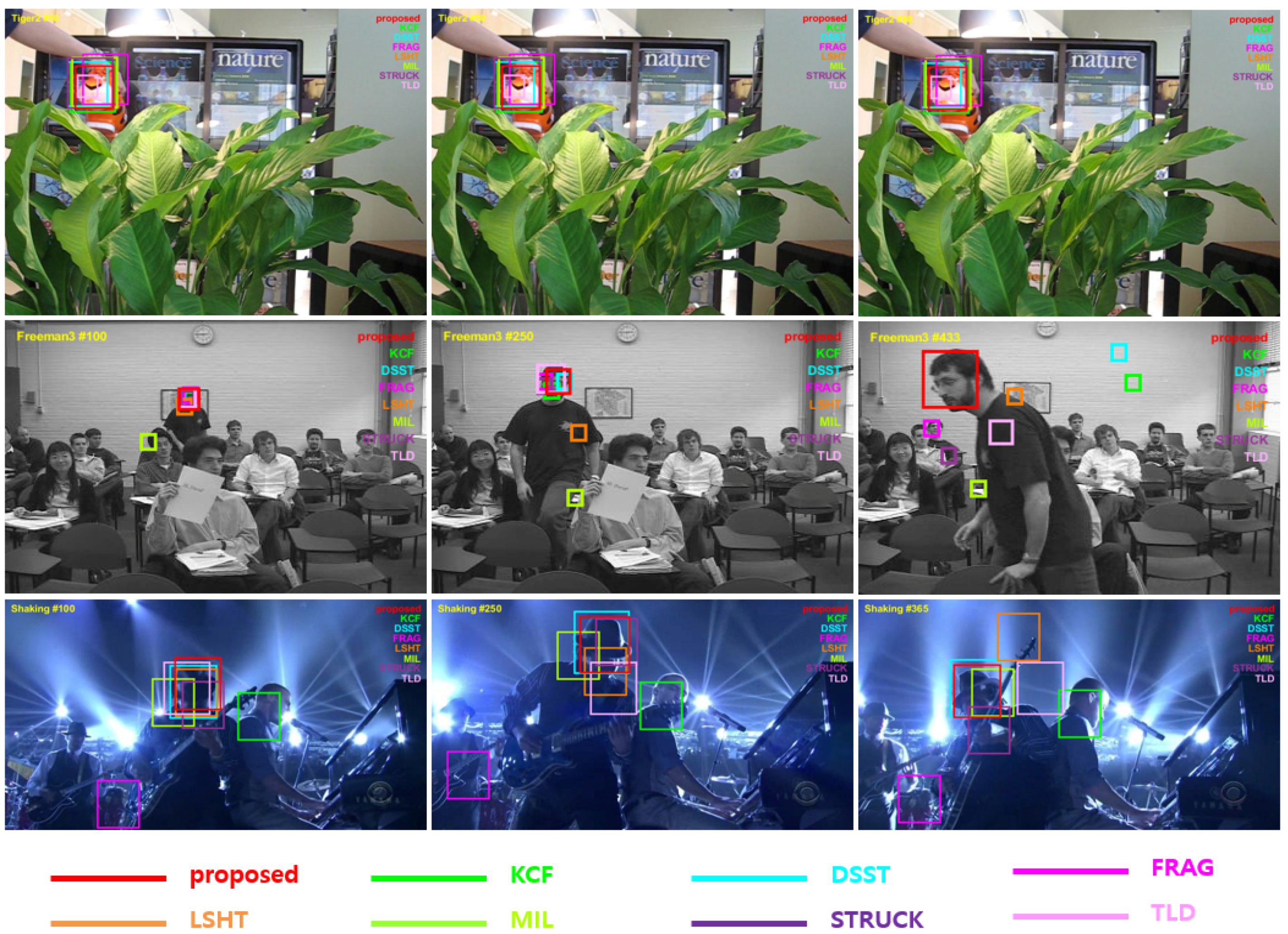
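
The overview in Section 3 describes the model update as using an adaptive learning rate that considers the reliability of the responses. A minimal sketch of that idea follows. It assumes that reliability is measured by the peak-to-sidelobe ratio (PSR) of the response map, as in MOSSE [19], and that the learning rate is scaled linearly between two PSR thresholds. The PSR choice, the thresholds `psr_low` and `psr_high`, the base rate of 0.02, and the function names are all illustrative assumptions rather than the paper's definitions.

```python
import numpy as np


def peak_to_sidelobe_ratio(response, exclude=5):
    """(peak - mean(sidelobe)) / std(sidelobe), ignoring a window at the peak."""
    r0, c0 = np.unravel_index(np.argmax(response), response.shape)
    mask = np.ones(response.shape, dtype=bool)
    mask[max(r0 - exclude, 0):r0 + exclude + 1,
         max(c0 - exclude, 0):c0 + exclude + 1] = False
    sidelobe = response[mask]
    return float((response[r0, c0] - sidelobe.mean()) / (sidelobe.std() + 1e-12))


def adaptive_rate(psr, base_rate=0.02, psr_low=5.0, psr_high=20.0):
    """Scale the learning rate from 0 (unreliable) to base_rate (reliable)."""
    weight = (psr - psr_low) / (psr_high - psr_low)
    return base_rate * min(max(weight, 0.0), 1.0)


def update_model(model, new_model, rate):
    """Linear interpolation between the old model and the new estimate."""
    return (1.0 - rate) * model + rate * new_model


# Toy response map: low-amplitude noise with one sharp, reliable peak.
rng = np.random.default_rng(0)
response = 0.01 * rng.standard_normal((32, 32))
response[10, 12] = 1.0
rate = adaptive_rate(peak_to_sidelobe_ratio(response))
```

A rate of zero freezes the model, which is the behaviour one would want when the response suggests occlusion or drift.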

4. Experiments

4.1. Parameters and Experimental Setup

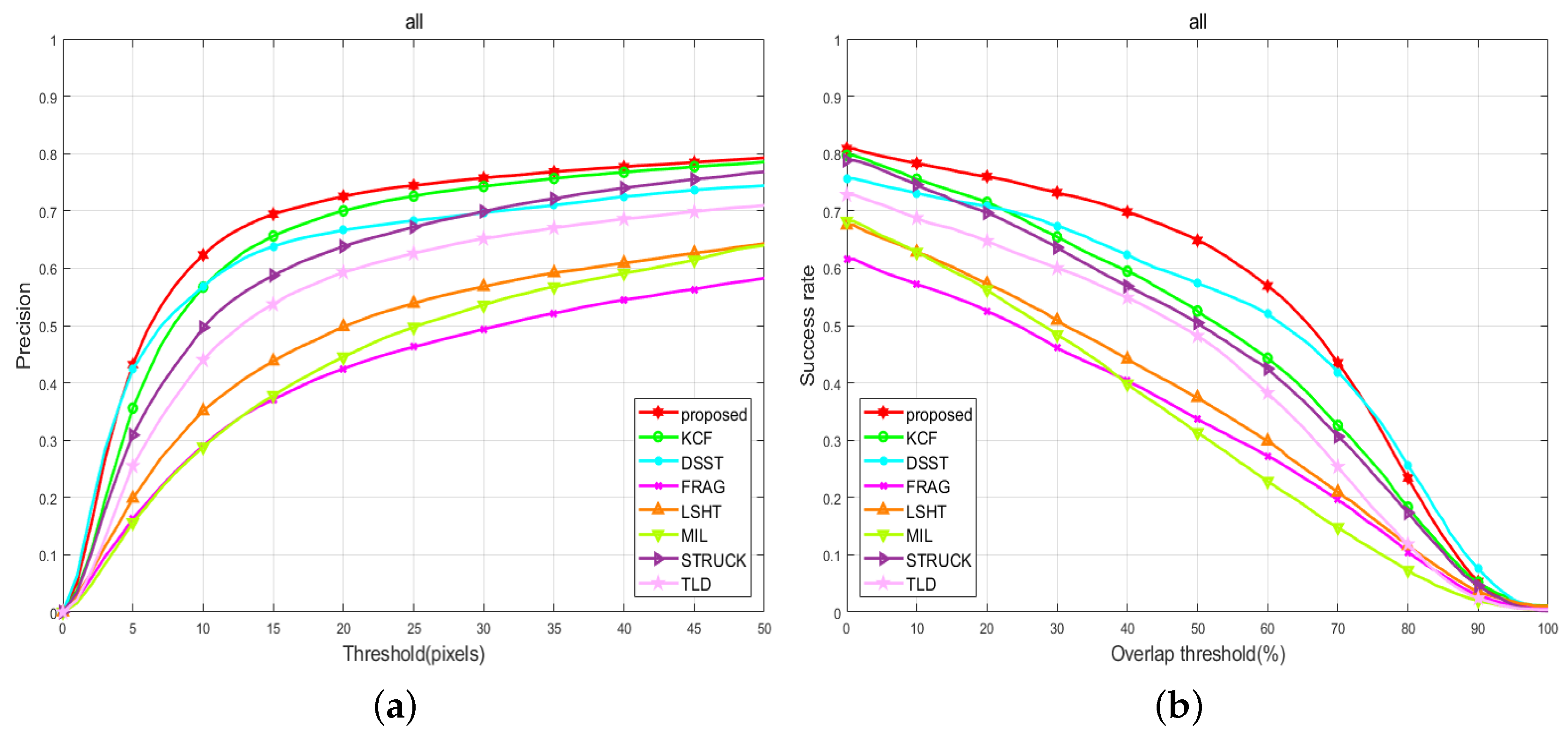

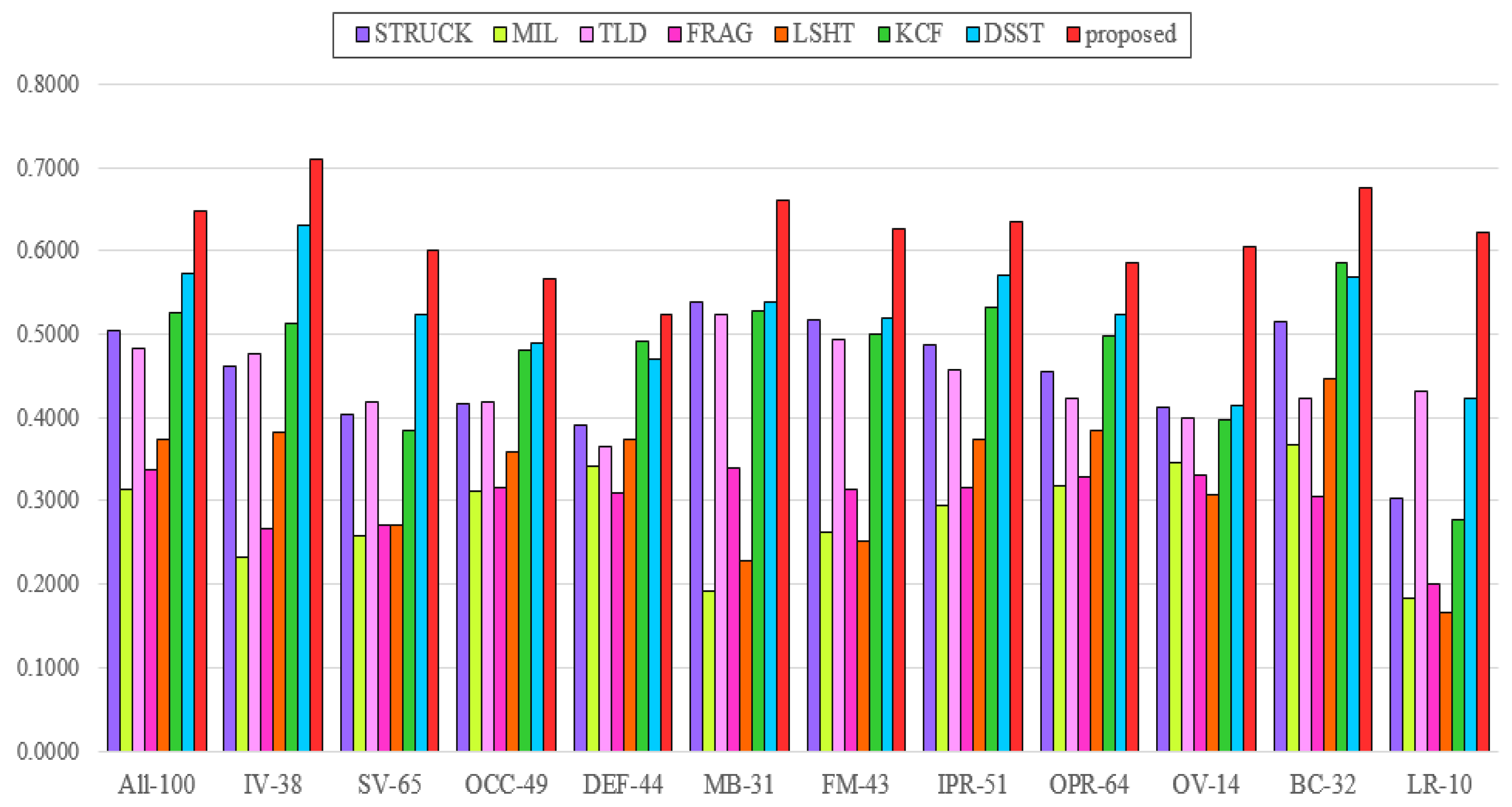

4.2. Quantitative Evaluation

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Avidan, S. Ensemble tracking. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) 2007, 29, 261–271.

2. Grabner, H.; Grabner, M.; Bischof, H. Real-time tracking via on-line boosting. In Proceedings of the British Machine Vision Conference (BMVC), Edinburgh, UK, 4–7 September 2006; Volume 1.

3. Saffari, A.; Leistner, C.; Santner, J.; Godec, M.; Bischof, H. On-line random forests. In Proceedings of the 3rd IEEE ICCV Workshop on On-line Computer Vision, Kyoto, Japan, 27 September–4 October 2009.

4. Avidan, S. Support vector tracking. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) 2004, 26, 1064–1072.

5. Hare, S.; Saffari, A.; Torr, P. Struck: Structured output tracking with kernels. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Barcelona, Spain, 6–13 November 2011.

6. Babenko, B.; Yang, M.-H.; Belongie, S. Robust object tracking with online multiple instance learning. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) 2011, 33, 1619–1632.

7. Zhang, K.; Song, H. Real-time visual tracking via online weighted multiple instance learning. Pattern Recognit. 2013, 46, 397–411.

8. Zhang, K.; Zhang, L.; Yang, M.-H. Real-time compressive tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Florence, Italy, 7–13 October 2012.

9. Kalal, Z.; Mikolajczyk, K.; Matas, J. Tracking-learning-detection. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) 2012, 34, 1409–1422.

10. Collins, R.T.; Liu, Y.; Leordeanu, M. Online selection of discriminative tracking features. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) 2005, 27, 1631–1643.

11. Ross, D.A.; Lim, J.; Lin, R.-S.; Yang, M.-H. Incremental learning for robust visual tracking. Int. J. Comput. Vis. (IJCV) 2007, 77, 125–141.

12. Wang, D.; Lu, H. Visual tracking via probability continuous outlier model. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 3478–3485.

13. Kwon, J.; Lee, K.M. Visual tracking decomposition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010; pp. 1269–1276.

14. Mei, X.; Ling, H. Robust visual tracking using L1 minimization. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Kyoto, Japan, 27 September–4 October 2009; pp. 1436–1443.

15. Adam, A.; Rivlin, E.; Shimshoni, I. Robust fragments-based tracking using the integral histogram. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), New York, NY, USA, 17–22 June 2006.

16. Zhang, T.; Bibi, A.; Ghanem, B. In defense of sparse tracking: Circulant sparse tracker. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3880–3888.

17. Zhang, T.; Ghanem, B.; Liu, S.; Ahuja, N. Robust visual tracking via multi-task sparse learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–24 June 2012; pp. 2042–2049.

18. Zhang, T.; Ghanem, B.; Liu, S.; Ahuja, N. Low-rank sparse learning for robust visual tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Florence, Italy, 7–13 October 2012; pp. 470–484.

19. Bolme, D.S.; Beveridge, J.R.; Draper, B.A.; Lui, Y.M. Visual object tracking using adaptive correlation filters. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010; pp. 2544–2550.

20. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. Exploiting the circulant structure of tracking-by-detection with kernels. In Proceedings of the European Conference on Computer Vision (ECCV), Florence, Italy, 7–13 October 2012.

21. Henriques, J.F.; Caseiro, R.; Martins, P.; Batista, J. High-speed tracking with kernelized correlation filters. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) 2015, 37, 583–596.

22. Danelljan, M.; Hager, G.; Khan, F.; Felsberg, M. Accurate scale estimation for robust visual tracking. In Proceedings of the British Machine Vision Conference (BMVC), Nottingham, UK, 1–5 September 2014.

23. Danelljan, M.; Khan, F.S.; Felsberg, M.; van de Weijer, J. Adaptive color attributes for real-time visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014.

24. Shu, G.; Dehghan, A.; Oreifej, O.; Hand, E.; Shah, M. Part-based multiple-person tracking with partial occlusion handling. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–24 June 2012; pp. 1815–1821.

25. Akin, O.; Mikolajczyk, K. Online Learning and Detection with Part-based Circulant Structure. In Proceedings of the IEEE International Conference on Pattern Recognition (ICPR), Stockholm, Sweden, 24 August 2014; pp. 4229–4233.

26. Yao, R.; Xia, S.; Shen, F.; Zhou, Y.; Niu, Q. Exploiting Spatial Structure from Parts for Adaptive Kernelized Correlation Filter Tracker. IEEE Signal Process. Lett. 2016, 23, 658–662.

27. Zhang, T.; Jia, K.; Xu, C.; Ma, Y.; Ahuja, N. Partial occlusion handling for visual tracking via robust part matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 23–28.

28. Bertinetto, L.; Valmadre, J.; Golodetz, S.; Miksik, O.; Torr, P.H.S. Staple: Complementary Learners for Real-Time Tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1401–1409.

29. Danelljan, M.; Hager, G.; Khan, F.S.; Felsberg, M. Learning spatially regularized correlation filters for visual tracking. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 11–18 December 2015; pp. 58–66.

30. Li, Y.; Zhu, J. A scale adaptive kernel correlation filter tracker with feature integration. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 254–265.

31. Ruan, Y.; Wei, Z. Real-Time Visual Tracking through Fusion Features. Sensors 2016, 16, 949.

32. Grabner, H.; Leistner, C.; Bischof, H. Semi-supervised on-line boosting for robust tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Marseille, France, 12–18 October 2008.

33. Zhong, W.; Lu, H.; Yang, M.-H. Robust object tracking via sparsity-based collaborative model. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–24 June 2012.

34. Jia, X.; Lu, H.; Yang, M.-H. Visual tracking via adaptive structural local sparse appearance model. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–24 June 2012.

35. Sevilla-Lara, L.; Learned-Miller, E.G. Distribution fields for tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–24 June 2012.

36. Oron, S.; Bar-Hillel, A.; Levi, D.; Avidan, S. Locally orderless tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–24 June 2012.

37. He, S.; Yang, Q.; Lau, R.; Wang, J.; Yang, M.-H. Visual tracking via locality sensitive histograms. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013.

38. Schölkopf, B.; Smola, A. Learning with Kernels: Support Vector Machines, Regularization, Optimization, and Beyond; The MIT Press: Cambridge, MA, USA, 2002.

39. Wu, Y.; Lim, J.; Yang, M.-H. Online object tracking: A benchmark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Portland, OR, USA, 23–28 June 2013.

40. Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.A.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI) 2010, 32, 1627–1645.

| Trackers | CLE | Precision | Success Rate | AUC |
|---|---|---|---|---|
| STRUCK [5] | 47.09 | 0.6381 | 0.5046 | 0.4454 |
| MIL [6] | 71.96 | 0.4450 | 0.3132 | 0.3162 |
| TLD [9] | 60.14 | 0.5930 | 0.4819 | 0.4071 |
| FRAG [15] | 80.65 | 0.4245 | 0.3368 | 0.3182 |
| LSHT [37] | 68.24 | 0.4979 | 0.3742 | 0.3493 |
| KCF [21] | 44.88 | 0.7002 | 0.5252 | 0.4613 |
| DSST [22] | 56.47 | 0.6664 | 0.5738 | 0.4923 |
| Proposed | 43.50 | 0.7253 | 0.6485 | 0.5280 |
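
The table reports CLE, precision, success rate, and AUC without restating how they are computed. The sketch below shows how such metrics are commonly defined in the online tracking benchmark [39]: center location error in pixels, precision as the fraction of frames with an error of at most 20 pixels, success rate as the fraction of frames whose overlap exceeds 0.5, and AUC as the mean of the success curve over overlap thresholds from 0 to 1. That the paper uses exactly these thresholds is an assumption, and the function names and the toy boxes are illustrative only.

```python
import math


def center_error(pred, gt):
    """Euclidean distance between box centers; boxes are (x, y, w, h)."""
    px, py = pred[0] + pred[2] / 2.0, pred[1] + pred[3] / 2.0
    gx, gy = gt[0] + gt[2] / 2.0, gt[1] + gt[3] / 2.0
    return math.hypot(px - gx, py - gy)


def overlap(pred, gt):
    """Intersection over union of two (x, y, w, h) boxes."""
    iw = max(0.0, min(pred[0] + pred[2], gt[0] + gt[2]) - max(pred[0], gt[0]))
    ih = max(0.0, min(pred[1] + pred[3], gt[1] + gt[3]) - max(pred[1], gt[1]))
    inter = iw * ih
    union = pred[2] * pred[3] + gt[2] * gt[3] - inter
    return inter / union if union > 0 else 0.0


def evaluate(preds, gts, dist_thresh=20.0, iou_thresh=0.5, steps=21):
    """Per-sequence CLE, precision, success rate, and success-plot AUC."""
    errors = [center_error(p, g) for p, g in zip(preds, gts)]
    ious = [overlap(p, g) for p, g in zip(preds, gts)]
    n = len(errors)
    thresholds = [i / (steps - 1) for i in range(steps)]
    curve = [sum(v > t for v in ious) / n for t in thresholds]
    return {
        "cle": sum(errors) / n,
        "precision": sum(e <= dist_thresh for e in errors) / n,
        "success_rate": sum(v > iou_thresh for v in ious) / n,
        "auc": sum(curve) / len(curve),
    }


# Two toy frames: one perfect prediction and one that misses by 30 pixels.
ground_truth = [(0, 0, 10, 10), (0, 0, 10, 10)]
predictions = [(0, 0, 10, 10), (30, 0, 10, 10)]
metrics = evaluate(predictions, ground_truth)
```

Lower is better for CLE, while higher is better for the other three columns.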

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jeong, S.; Paik, J. Partial Block Scheme and Adaptive Update Model for Kernelized Correlation Filters-Based Object Tracking. Appl. Sci. 2018, 8, 1349. https://doi.org/10.3390/app8081349
