1. Introduction

In recent years, researchers have been increasingly interested in systems for human activity monitoring based on machine learning techniques. In particular, the scientific community is paying close attention to the definition and experimentation of techniques that use inertial signals captured by wearable devices to automatically recognize activities of daily living (ADLs) [1] and/or promptly detect falls [2,3]. These techniques have proven to be effective in many application domains, such as physical activity recognition and the estimation of energy expenditure [4,5], the monitoring of Parkinson’s disease progression [6], and the early detection of dementia [7].

Very recently, these techniques have been embedded in smartphone applications that rely on data acquired by the sensors the smartphones host [8]. For instance, the applications by Sposaro et al. [9], Tsinganos et al. [10], Pierleoni et al. [11], and Casilari et al. [12] promptly detect falls; the application by Reyes-Ortiz et al. [13] classifies ADLs; and the application by Rasheed [14] both detects falls and classifies ADLs.

The success of these techniques depends on the effectiveness of the machine-learning-based algorithm employed, which is hampered by at least two factors: (i) the lack of an adequate number of public datasets on which to evaluate its effectiveness; and (ii) the lack of tools for recording labeled datasets and thus increasing the number of available datasets.

Despite the strong interest in publicly available datasets containing labeled inertial data, their number is still limited. Casilari et al. [15] and Micucci et al. [16] analyze the state of the art and provide a nearly complete list of the datasets currently available, which includes, for example, UniMiB SHAR [16], MobiAct [17], and UMAFall [18]. For this reason, many researchers evaluate their techniques on ad hoc datasets that are rarely made publicly available [19,20,21]. This practice makes it difficult to objectively compare newly proposed techniques and implementations, because there is no common source of data [16,21,22,23].

Almost none of the applications used to collect data are made publicly available [24]. To the authors’ knowledge, the only exception is the one used in the Gravity project, which, however, records falls only [25]. Thus, we searched well-established digital marketplaces, such as Android’s Google Play Store [26], Apple’s App Store [27], and Microsoft’s Windows Phone App Store [28], for applications that record inertial data. We focused on these marketplaces because we were interested in smartphones and smartwatches; we therefore disregarded all applications, such as the one presented in [29], that target wearable devices not equipped with a mobile operating system. In the marketplaces we explored, we found some applications for recording, but all of them had limitations, for example, in the kind of sensors used as the data source (e.g., G-Sensor Logger [30]), the lack of support for labeling (e.g., Sensor Data Logger [31]), and the possibility of recording only one type of activity per recording session (e.g., Sensor Log [32]).

In this article, we present UniMiB AAL (Acquisition and Labeling), an Android suite that supports the acquisition of signals from sensors and their labeling. The suite is available for download at http://www.sal.disco.unimib.it/technologies/unimib-aal/ [33]. It can manage the sensors hosted by both an Android smartphone and, if present, a paired Wear OS smartwatch. The suite consists of two applications:

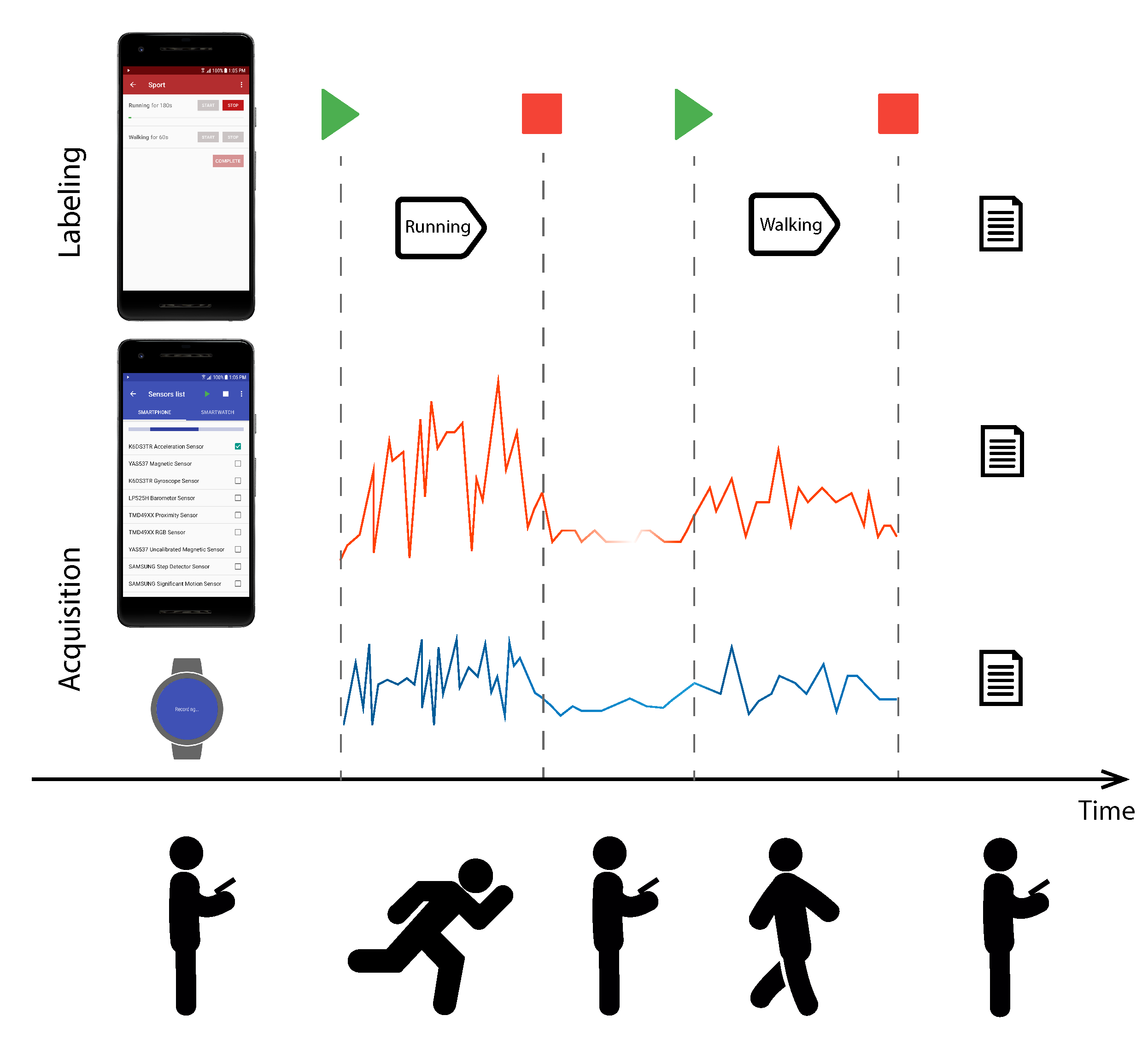

acquisition, which collects signals from the available sensors while a human subject is performing activities, and

labeling, which allows the user to specify which type of activity he/she is carrying out, such as walking, running, or sitting.

The two applications are designed to run on two different smartphones; thus, the user can specify each activity performed, along with its beginning and end times, on one smartphone without having to handle the other smartphone, which is acquiring the data. This solution yields signals and labels that are as synchronous as possible with the activity actually carried out by the subject. Indeed, the smartphone that records signals is not moved from its position when the subject marks the beginning and the end of each activity on the labeling smartphone. Regardless of the hosting device, the suite discovers the available sensors and all their settings. The types of activities that the user is requested to perform are easily configurable, as their definitions are stored in external files. Moreover, UniMiB AAL also collects information about the sensor types and sampling rates, which can be used to perform any normalization required to standardize the data with respect to data acquired with different devices. Finally, in addition to its intended use, the suite can also be used as a tool to label data from wearable devices that are not directly connected with smartphones and that provide data from different kinds of sensors, including health-related ones. Because these devices are widely used, this could yield a potentially large amount of labeled data. This can be achieved by placing the device (smartphone or smartwatch) on which the acquisition application is running next to the wearable device. The data acquired by the two devices are then analyzed to identify time series that refer to the same activity, and the labels captured by the labeling application are assigned to the wearable device data on the basis of these analyses.
If the wearable device can be synchronized with a reference time (e.g., through the Network Time Protocol (NTP) [34]), only the labeling application is required, because the labels can be assigned directly to the acquired data by analyzing the timestamps alone.
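When the wearable device cannot be synchronized with a reference time, the text above states that the two recordings are analyzed to find the time series that refer to the same activity, without specifying how. One common way to do this, sketched here as an assumption and not as the authors' method, is to slide one signal against the other and keep the lag with the highest cross-correlation. The sketch assumes that both signals (e.g., acceleration magnitudes) have already been resampled to the same rate; `LagEstimator` and `estimateLag` are hypothetical names.

```java
/**
 * Hypothetical helper (not part of UniMiB AAL): estimates the delay, in samples,
 * between two signals that record the same activity on two devices.
 * Both signals are assumed to be sampled at the same, uniform rate.
 */
final class LagEstimator {

    private LagEstimator() {}

    /**
     * Returns the lag k in [-maxLag, maxLag] that maximizes the mean product
     * (a[i] - mean(a)) * (b[i + k] - mean(b)) over the overlapping samples.
     * A positive result means that events appear k samples later in b than in a.
     */
    static int estimateLag(double[] a, double[] b, int maxLag) {
        if (a.length == 0 || b.length == 0 || maxLag < 0) {
            throw new IllegalArgumentException("non-empty signals and maxLag >= 0 required");
        }
        double meanA = mean(a);
        double meanB = mean(b);
        int bestLag = 0;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (int k = -maxLag; k <= maxLag; k++) {
            double sum = 0.0;
            int overlap = 0;
            for (int i = 0; i < a.length; i++) {
                int j = i + k;
                if (j < 0 || j >= b.length) {
                    continue; // b has no sample aligned with a[i] at this lag
                }
                sum += (a[i] - meanA) * (b[j] - meanB);
                overlap++;
            }
            // Averaging over the overlap keeps scores comparable across lags.
            if (overlap > 0 && sum / overlap > bestScore) {
                bestScore = sum / overlap;
                bestLag = k;
            }
        }
        return bestLag;
    }

    private static double mean(double[] x) {
        double s = 0.0;
        for (double v : x) {
            s += v;
        }
        return s / x.length;
    }
}
```

Multiplying the returned lag by the sampling period gives the time offset to apply to the wearable device's timestamps before the labels are transferred; `maxLag` should cover the largest clock offset expected between the two devices.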

The article is organized as follows.

Section 2 discusses differences between some existing applications and the UniMiB AAL suite;

Section 3 describes the method that guided the design and implementation of the suite;

Section 4 describes the applications that are included in the UniMiB AAL suite;

Section 5 describes how signals and labels are stored and managed;

Section 6 provides information about the usability tests we performed; finally,

Section 7 sketches the conclusions.

2. Related Work

This section discusses some of the available mobile applications for data acquisition and labeling. The search was performed on Google Scholar and Google Play Store on July 15, 2018, considering applications available from 2015 to the end of 2017 and using the following keywords: “sensor recording”, “sensor log”, “activity recognition”, and “sensor labeling activity”. For Google Scholar, we also added the keyword “Android”.

We looked for applications that are able to collect and label signals from the sensors embedded in a smartphone (and in a possibly paired wearable device), that let the user select the sensors to be used during recording, and that may support the user by showing the sequence of activities he/she has to execute to comply with the design of a data collection campaign.

Thus, to be considered, the applications should have at least the following characteristics, which make them usable and complete: (i) compliance with the human–computer interaction (HCI) guidelines [35], to ensure that the application is easy to use and is used correctly by the subjects; (ii) the possibility to record signals from a wearable device, in order to collect more signals or signals from different positions; (iii) a selectable pool of sensors to be used for recording, in order to choose those most suitable for the current acquisition campaign; (iv) in the case of acquisition campaigns with a predefined sequence of activities that the subjects have to perform, support that proposes to the user, step by step, the activity he/she must carry out, relieving the user from having to remember the protocol (i.e., the sequence of activities) of the acquisition campaign; and finally, (v) labeling and acquisition executed on different devices, to obtain signals and labels that are as synchronous as possible with the activity actually carried out by the subject. Executing the two activities on different devices means that the user does not have to move the acquiring device from its position when he/she marks the beginning and end of each activity on the labeling device.

We did not consider applications that do not store the acquired signals, as these are of no use for our purposes: we considered only applications that make the acquired data available for offline processing in the experimentation of activity recognition techniques. However, we did not exclude applications that only record signals, that is, applications without a built-in labeling feature.

At the end of the search, we found only seven applications that met these requirements.

Table 1 summarizes their characteristics. We include the UniMiB AAL suite in the table in order to compare it with the applications we found.

Table 1 includes the following information:

App: The name of the application and its reference (i.e., the URL (Uniform Resource Locator) used to download the application or a reference to the article presenting the application).

Google Play Store: The availability of the application on Google Play Store.

Source code: The availability of the source code of the application.

Multi-devices (Wear OS): Support for recording sensors mounted on external devices such as smartwatches equipped with Wear OS.

Multi-sensors: The possibility of recording data from sensors other than the inertial ones.

Sensor selection: The possibility to choose which sensors to use to record data.

Labeling: The possibility to apply labels to the recorded data.

Multi-devices (Acquisition and Labeling - Acq. & Lab.): The possibility to run the acquisition and the labeling activities on different devices.

List of activities: The possibility of supporting the user with the list of activities he/she has to perform (i.e., the protocol defined by the acquisition campaign if defined).

Material Design Guidelines: Indicates whether the application follows the HCI guidelines in order to meet usability requirements. In particular, because we considered Android applications only, we checked whether the application followed the Material Design Guidelines [36], which are a set of usability guidelines for the Android environment.

All columns contain Yes, No, or a dash, indicating respectively that the corresponding characteristic is present, not present, or unknown.

Some of the applications provided a very limited set of features. For example, G-Sensor Logger [30] collects accelerations only, and Sensor Sense [40] acquires signals from only one sensor at a time, chosen by the user. On the other hand, there were also more advanced applications, such as Sensor Data Logger [31], which allows signals to be recorded from more than one sensor at a time (chosen by the user) and can be paired with a Wear OS device.

Apart from UniMiB AAL, only four applications allowed labeling of the acquired data: Sensor Data Collector [39], Activity Recognition Training [37], ExtraSensory [38], and Sensor Log [32].

Sensor Data Collector and Activity Recognition Training are both available on Google Play Store.

Sensor Data Collector allows the user to specify the activity he/she is carrying out and his/her environment (e.g., home or street). The application saves the data in an SQLite database, which is stored in a non-public folder of the device.

Activity Recognition Training is an application designed to generate datasets that can be used for activity recognition. The application allows the user to specify the beginning and the end of each activity. The data are saved in a single text file stored in a public folder of the device. Each row in the file is a pair: the sensor type and the corresponding acquired signal.

ExtraSensory and Sensor Log are not available on Google Play Store, although the latter was present in the store until at least January 2018.

ExtraSensory is an open-source project, and the source code of both the server side and the Android application is available. The application was designed to build the ExtraSensory Dataset [38] and was then released to ease further data collection. The application combines unsupervised labeling (exploiting classifiers trained on the ExtraSensory Dataset) with labels self-reported by the user, both before the actions and as a post-activity inquiry.

Sensor Log was developed to ease the process of collecting and labeling sensory data from smartphones. The recorded signals are stored in an SQLite database and can be exported in CSV (Comma-Separated Values) format.

All four applications supporting labeling, in addition to acquiring signals from inertial sensors, also allow data to be recorded from other built-in sensors, such as temperature and pressure sensors. Of these four applications, only ExtraSensory and Sensor Data Collector allow signals to be recorded from the sensors of both the smartphone and a possibly paired Wear OS smartwatch; only Sensor Data Collector and Sensor Log allow the user to select the sensors to be used for acquisition.

However, even though all four of these applications allow signals to be labeled, they fail to satisfy some of the characteristics listed at the beginning of this section, which UniMiB AAL, on the contrary, fulfils. These characteristics are detailed in the following:

Multi-Devices (Acq. & Lab.): The acquisition and labeling of the data happen on the same device. In the case of Sensor Log, Sensor Data Collector, and Activity Recognition Training, labeling happens during acquisition, thus introducing some incorrect labels into the dataset, as explained in Section 3.1. ExtraSensory relies on its own oracle to label data, which may not be accurate, and improves its accuracy by asking the user about the performed activities. While this gives better knowledge of the acquired data, there are inherent synchronization issues between the offline labeling and the data.

Activities List: None of the four applications supports a predefined list of activities (i.e., a protocol) to ease data acquisition. Each time the user wishes to perform an activity, he/she has to choose it from the list of configured activities (or give feedback about it afterwards, in ExtraSensory); moreover, each activity is performed and recorded separately, so the transitions between activities are missing from the acquired dataset, which may decrease recognition performance once online recognition takes place.

Material Design Guidelines: An end-user application that follows the HCI guidelines is more likely to be used; conversely, one that does not will frustrate users, leading them to stop using it [41]. Furthermore, depending on the marketplace, users are familiar with specific guidelines (e.g., Material Design [36] for Android, and Human Interface Guidelines [42] for iOS). Therefore, developers should ensure consistency with these guidelines and not burden the user with new ways of interacting for common features.

Despite this, none of the four applications follows the Material Design Guidelines, hence usability is not guaranteed. For example, Sensor Data Collector does not provide floating action buttons [43] (which represent the primary action of a screen) to control the application with a single action; it forces the user to leave the main screen and move to a settings panel to start the recording, and it fails to provide proper feedback to the user. In contrast, Sensor Sense follows the Material Design principles, for example, by providing a floating action button to start the recording and by using cards [44] (which contain content and actions about a single subject) to present a list of elements.

3. Method

The aim of our work is to provide the scientific community with a system capable of recording data from smartphone sensors (and from a possibly paired smartwatch) that can easily be used to evaluate and train machine learning techniques. The evaluation and training of these techniques require datasets containing signals recorded by sensors while subjects carry out activities such as walking, sitting, or running. The subjects range from children to the elderly, with little or considerable familiarity with computer technologies. Signals can range from inertial sensor data (e.g., accelerometers, gyroscopes, and compasses) to any kind of physiological or environmental sensor data (e.g., pressure, temperature, and heart rate). In addition, these signals may come from sensors hosted in smartphones and/or smartwatches whose characteristics (e.g., precision, acquisition rate, and value ranges) differ depending on their manufacturer. Such signals must be labeled with the true activities carried out by the subjects, who can either autonomously choose which activity to carry out and for how long, or follow a protocol (i.e., a fixed sequence of activities and their durations) defined by the researchers.

Thus, building such datasets requires systems that are easy to use, even by inexperienced users, and easily configurable. To this aim, simple system configurations (e.g., a list of smartphone and smartwatch positions) should be possible without the applications having to be recompiled and redeployed to the device. The data acquisition component must be designed so that it does not depend on the physical characteristics of specific sensors. This yields a portable application that automatically adapts to the actual execution context. The data labeling should be as consistent as possible with the activity the user is performing. Finally, the data and their labels should be accompanied by additional information to be used in the evaluation of the techniques, such as the sensor type, position, and acquisition frequency.

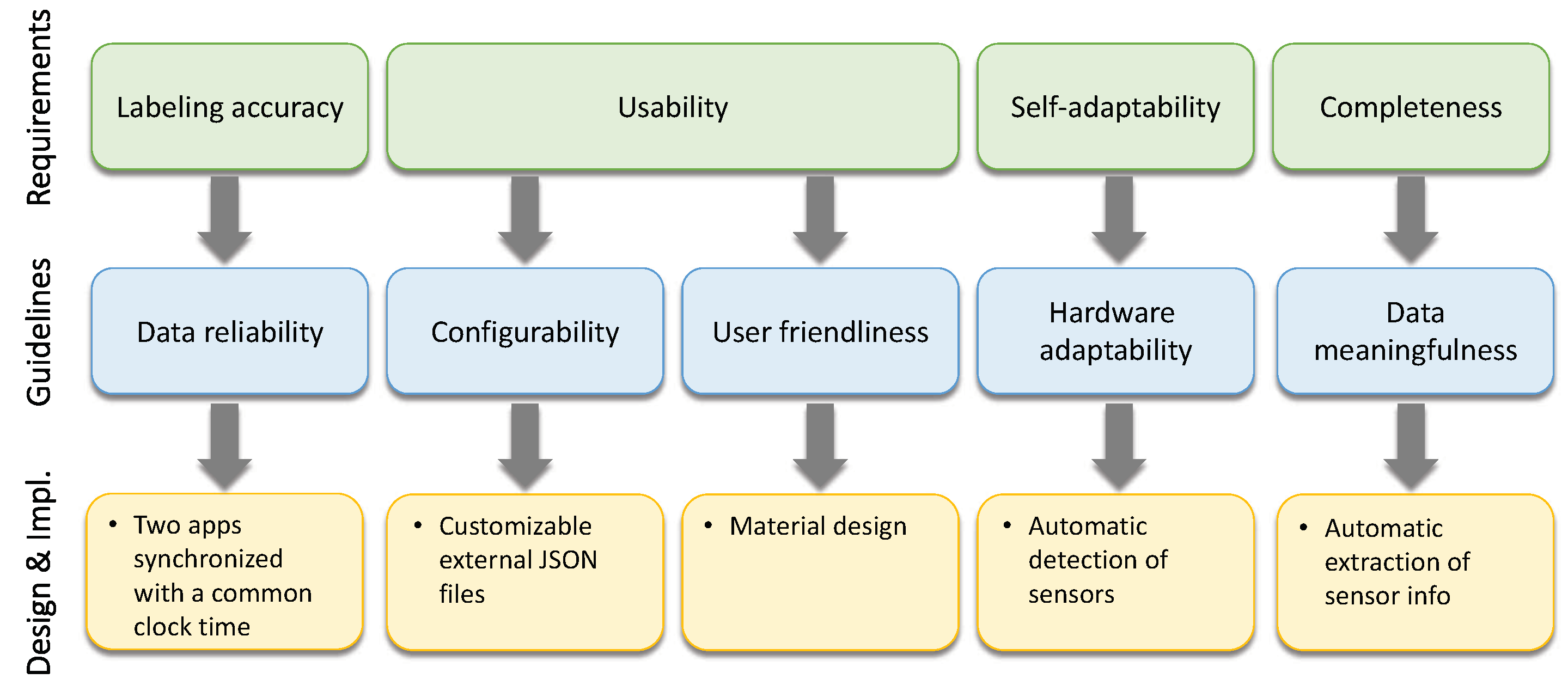

An acquisition and labeling system must therefore address the following requirements:

labeling accuracy: the data must be labeled according to the corresponding performed activity;

usability: the system must be easy to use, to avoid mistakes, and easy to configure without the help of a programmer;

self-adaptability: the system must be able to be used with different smartphones, smartwatches, and sensors;

completeness: the system must acquire and provide additional information to improve the usefulness of the dataset in experiments.

Such requirements result in the following guidelines for the design of the system:

data reliability,

configurability,

user friendliness,

hardware adaptability, and

data meaningfulness.

Figure 1 sketches the identified requirements, the corresponding guidelines, and the design and implementation choices that fulfil them. The following subsections detail the guidelines and the identified design and implementation solutions.

3.1. Data Reliability

The two main functionalities that the system must provide are data acquisition and support for data labeling. Data acquisition includes interfacing with the sensors embedded in the smartphone, managing the connection with the paired smartwatch, and storing the data acquired by the sensors. Data labeling includes the user interface for setting the beginning and end times of a given activity performed by a human subject.

These two functionalities could be implemented in a single application running on a smartphone. Using only one application would result in the following usage scenario: the user specifies, through the application’s user interface, the activity he/she will perform, starts the recording, and puts the smartphone in the designated place, for example, in his/her trouser pocket. When the user completes the activity, he/she takes the smartphone out of the pocket and stops the recording. This usage scenario has a significant drawback: the data recorded from when the user starts the recording to when he/she puts the smartphone in the pocket and, vice versa, the data recorded from when he/she takes the smartphone out of the pocket to when he/she stops the recording are incorrectly labeled, because they are labeled as belonging to the activity performed. To overcome this problem, we applied a separation of concerns, assigning the responsibility for data acquisition and data labeling to two different software components, each implemented as a separate application. The idea is that these two applications run on two different smartphones. In this way, the user uses the acquisition application to start recording from the sensors. Once the recording has started, the user places the smartphone in the designated location, for example, his/her trouser pocket. Then, the user uses the labeling application running on a second smartphone to specify, from time to time, the activity being performed.

Two key aspects need to be considered to ensure that recording and labeling are properly implemented: first, the two smartphones hosting the acquisition and labeling applications should be synchronized to a common clock; second, the data recorded by the sensors and the activities carried out by the subjects should be associated with a unique subject identifier.

Clock synchronization between the two applications allows acquired data to be easily matched with the beginning and end time labels of the corresponding activity. For the implementation, we decided to use the currentTimeMillis() [45] method of the System class provided by the Java SDK (Java Software Development Kit), which returns the current time in milliseconds. For the two smartphones to be correctly synchronized, their wall-clock times must be set by the same NTP server, so that the wall-clock times coincide and the currentTimeMillis() method returns consistent values on the two devices.
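The requirement that both wall clocks be set by the same NTP server can be illustrated with the standard NTP offset and delay computation from RFC 5905. This is background on what the devices' NTP clients do, not code from UniMiB AAL, which simply reads System.currentTimeMillis(); the class name `NtpClockOffset` is hypothetical and all timestamps are in milliseconds.

```java
/** Hypothetical illustration of the NTP on-wire offset and delay calculation (RFC 5905). */
final class NtpClockOffset {

    private NtpClockOffset() {}

    /**
     * t1: client transmit time (client clock), t2: server receive time (server clock),
     * t3: server transmit time (server clock), t4: client receive time (client clock).
     * Returns the estimated offset of the server clock relative to the client clock, in ms.
     */
    static double offsetMillis(long t1, long t2, long t3, long t4) {
        return ((t2 - t1) + (t3 - t4)) / 2.0;
    }

    /** Round-trip network delay in ms, excluding the time spent inside the server. */
    static long roundTripDelayMillis(long t1, long t2, long t3, long t4) {
        return (t4 - t1) - (t3 - t2);
    }
}
```

For example, with t1 = 1000, t2 = 1060, t3 = 1062, and t4 = 1030, the server clock is estimated to be 46 ms ahead of the client and the round-trip delay is 28 ms. The error of each offset estimate is bounded by half the round-trip delay, so two phones synchronized against the same server agree to within the sum of their two bounds.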

Concerning the identifiers, users are required to enter their unique identifier each time they start a new acquisition and labeling session. Identifiers, along with timestamps, are then used to store recordings, as described in Section 5.

The two applications can also run on the same smartphone, but this use case would cause incorrect labels to be assigned to the recorded data, as in the single-application scenario described above.
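Because both applications timestamp events with currentTimeMillis(), matching acquired samples to labels reduces to an interval lookup. The sketch below is an illustration under stated assumptions, not the suite's actual post-processing: it assumes that each label is a non-overlapping [begin, end) interval in milliseconds, and `TimestampLabeler`, `LabelInterval`, and `assignLabels` are hypothetical names. Samples that fall outside every interval, such as those recorded while the phone is being put into the pocket, stay unlabeled.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Hypothetical post-processing helper (not UniMiB AAL code). */
final class TimestampLabeler {

    /** An activity label between beginMillis (inclusive) and endMillis (exclusive). */
    static final class LabelInterval {
        final long beginMillis;
        final long endMillis;
        final String activity;

        LabelInterval(long beginMillis, long endMillis, String activity) {
            if (endMillis <= beginMillis) {
                throw new IllegalArgumentException("end must follow begin");
            }
            this.beginMillis = beginMillis;
            this.endMillis = endMillis;
            this.activity = activity;
        }
    }

    private TimestampLabeler() {}

    /**
     * Returns one label per sample timestamp: the activity whose interval contains it,
     * or null if the sample lies outside every interval. Intervals must not overlap.
     */
    static String[] assignLabels(long[] sampleTimestamps, List<LabelInterval> intervals) {
        List<LabelInterval> sorted = new ArrayList<>(intervals);
        sorted.sort(Comparator.comparingLong((LabelInterval iv) -> iv.beginMillis));
        String[] labels = new String[sampleTimestamps.length];
        for (int s = 0; s < sampleTimestamps.length; s++) {
            long t = sampleTimestamps[s];
            // Binary search for the last interval whose begin is <= t.
            int lo = 0;
            int hi = sorted.size() - 1;
            int candidate = -1;
            while (lo <= hi) {
                int mid = (lo + hi) >>> 1;
                if (sorted.get(mid).beginMillis <= t) {
                    candidate = mid;
                    lo = mid + 1;
                } else {
                    hi = mid - 1;
                }
            }
            if (candidate >= 0 && t < sorted.get(candidate).endMillis) {
                labels[s] = sorted.get(candidate).activity;
            }
        }
        return labels;
    }
}
```

For instance, with walking labeled over [1000, 2000) and running over [2000, 3000), a sample at 2000 ms is labeled running and a sample at 3100 ms is left unlabeled (null). Half-open intervals ensure that a sample on a boundary is never assigned to two consecutive activities.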

3.2. Configurability

The application should be easy to configure for people who are not mobile application developers. This facilitates its use by researchers mainly interested in developing and experimenting with new machine learning techniques for human activity monitoring.

When performing an activity or a set of activities, the subject has to specify both the kind of activity he/she will execute and where the smartphone and/or smartwatch will be placed during its execution. Information about the position of the recording device (e.g., trouser pocket, wrist, or waist) is exploited in the machine learning literature to study the robustness of recognition methods to changes in device position [46].

The type of activity and the device position are chosen from predefined lists rather than typed in by hand. This simplifies the user’s interaction with the application and, more importantly, allows the list of activities permitted during the recording session to be predefined. Thus, we have defined two sets of lists: one set contains the lists related to the positions, and the other contains the lists related to the activities.

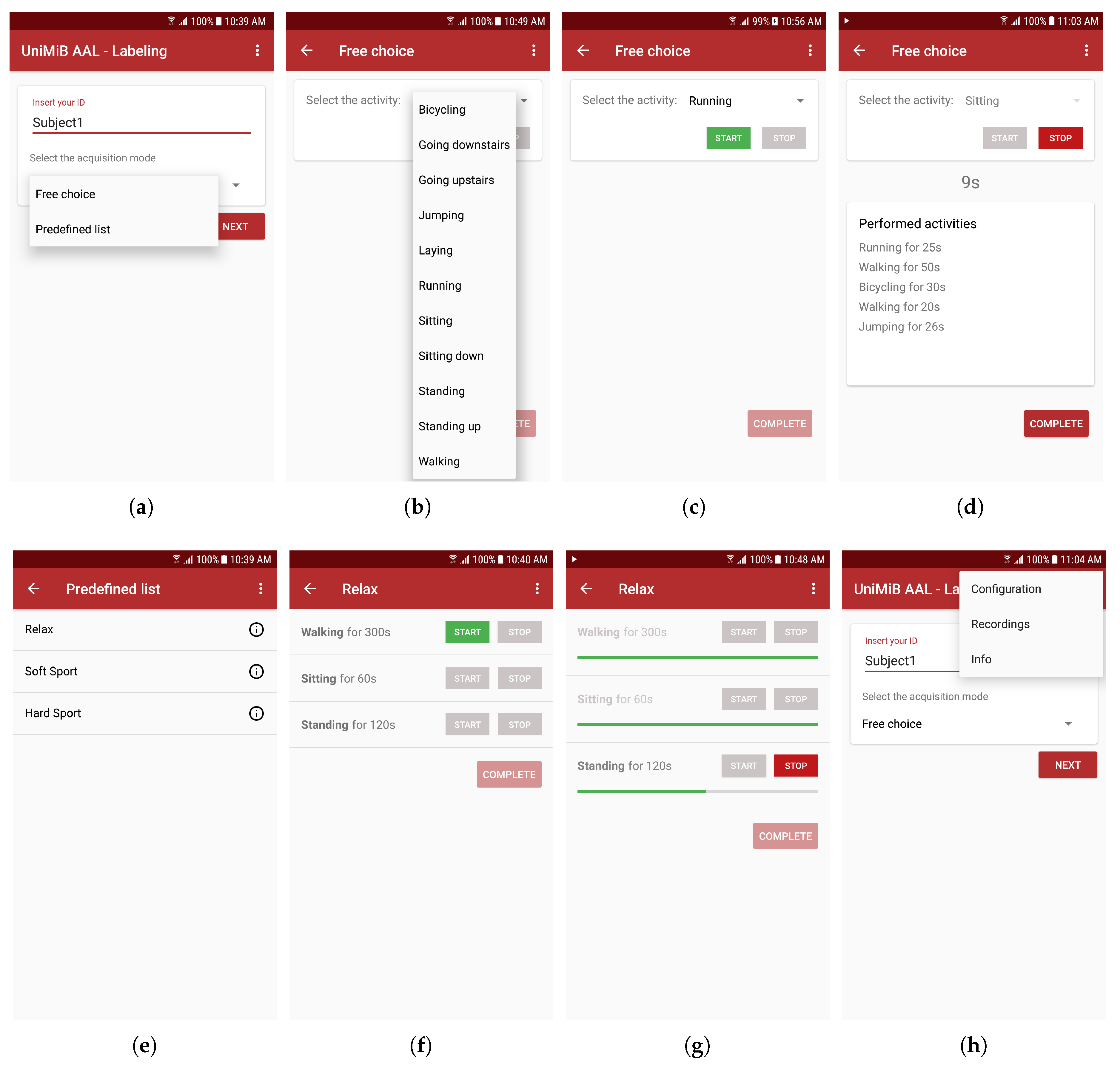

The set related to positions includes two lists: one list contains the positions where the smartphone can be placed, and the other contains the positions where the smartwatch can be placed.

The set related to activities also contains two lists: one containing a simple list of activities (e.g., walking, running, and sitting), and the other containing a list of predefined protocols. A protocol is an ordered sequence of activities and their durations, for example, running for 5 min, then jumping for 1 min, then standing for 2 min, and so on. Indeed, we identified two distinct ways in which the subject could carry out the activities: by selecting the activity to be performed from a list (i.e., a free choice among the list of activities) or by following a protocol (i.e., a predefined list of activities and durations). The former lets the subject choose which activities to carry out and for how long. On the contrary, the latter does not allow the subject to change the order in which the activities have to be carried out or their duration.
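Since a protocol is an ordered sequence of activities and durations, the planned start and end of each step follow from accumulating the durations. The sketch below computes this schedule for the example protocol above; it is illustrative only (the paper does not say how the labeling application tracks protocol steps), and `ProtocolSchedule`, `Step`, `ScheduledStep`, and `schedule` are hypothetical names.

```java
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of a protocol and of its planned timeline (not UniMiB AAL code). */
final class ProtocolSchedule {

    /** One protocol step: an activity and how long it must be performed. */
    static final class Step {
        final String activity;
        final Duration duration;

        Step(String activity, Duration duration) {
            if (duration.isNegative() || duration.isZero()) {
                throw new IllegalArgumentException("duration must be positive");
            }
            this.activity = activity;
            this.duration = duration;
        }
    }

    /** A step placed on the timeline, as offsets from the start of the session. */
    static final class ScheduledStep {
        final String activity;
        final Duration start;
        final Duration end;

        ScheduledStep(String activity, Duration start, Duration end) {
            this.activity = activity;
            this.start = start;
            this.end = end;
        }

        @Override
        public String toString() {
            return activity + " [" + start + ", " + end + ")";
        }
    }

    private ProtocolSchedule() {}

    /** Accumulates step durations in protocol order; the subject cannot reorder steps. */
    static List<ScheduledStep> schedule(List<Step> protocol) {
        List<ScheduledStep> timeline = new ArrayList<>();
        Duration cursor = Duration.ZERO;
        for (Step step : protocol) {
            Duration end = cursor.plus(step.duration);
            timeline.add(new ScheduledStep(step.activity, cursor, end));
            cursor = end;
        }
        return timeline;
    }
}
```

For the protocol running 5 min, jumping 1 min, standing 2 min, the schedule is running [PT0S, PT5M), jumping [PT5M, PT6M), and standing [PT6M, PT8M), in the ISO-8601 notation printed by java.time.Duration. In free-choice mode, by contrast, no such schedule exists and each interval is defined only when the subject marks it.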

The four configuration lists are turned into four corresponding JSON (JavaScript Object Notation) files: the smartphone positions’ list, the smartwatch positions’ list, the list of activities for the free choice mode, and the list of protocols in the predefined list mode.

Listings 1 and 2 show examples for the smartphone and smartwatch positions’ lists, respectively.

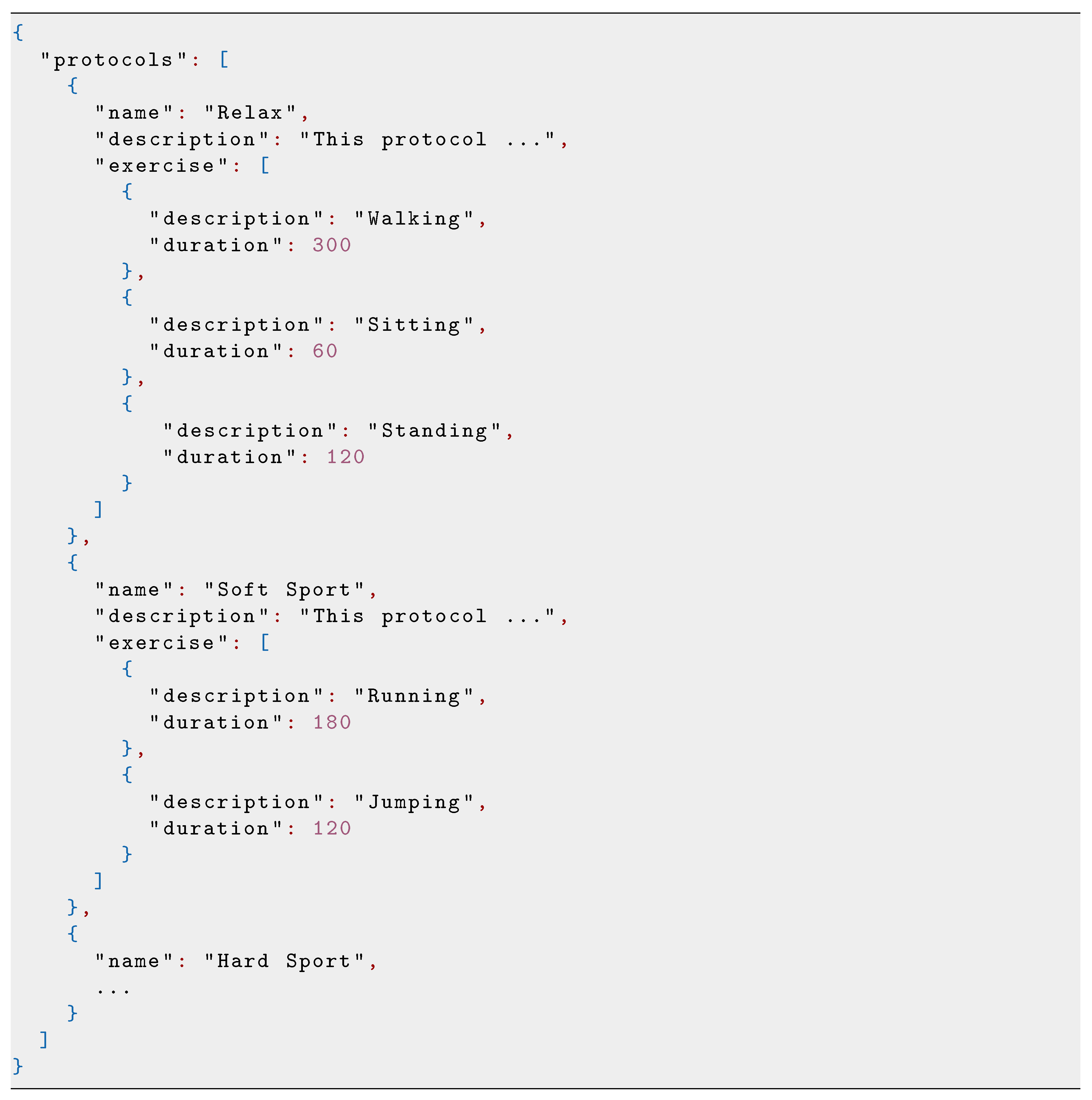

Listing 3 shows an example list related to the free choice of activities, while Listing 4 shows an example list containing three protocols.

Because the positions are related to the acquisition devices, we decided to assign the functionality regarding the choice of positions to the acquisition application. Because activities are used to correctly label signals, we decided to assign the functionality regarding the choice of the activity or the protocol to the labeling application. It follows that the JSON files related to positions are located on the smartphone running the acquisition application, while the JSON files related to the activities are located on the smartphone running the labeling application.

Listing 1.

Example of the configuration file that includes the list of the smartphone positions.

Listing 2.

Example of the configuration file that includes the list of smartwatch positions.

Listing 3.

Example of the file that includes the list of activities from which the user makes their selection.

To avoid having to recompile the application every time these files are modified, both the acquisition and labeling applications keep their JSON files in a public folder of the smartphone called configuration. Because these files are in public storage, they can easily be accessed either by connecting the smartphone to a computer or directly from the mobile device. This allows positions, activities, or protocols to be changed, added, or removed as needed. Every change to these four files is automatically reflected in the acquisition and labeling applications, so there is no need to either rebuild the applications or reinstall them.

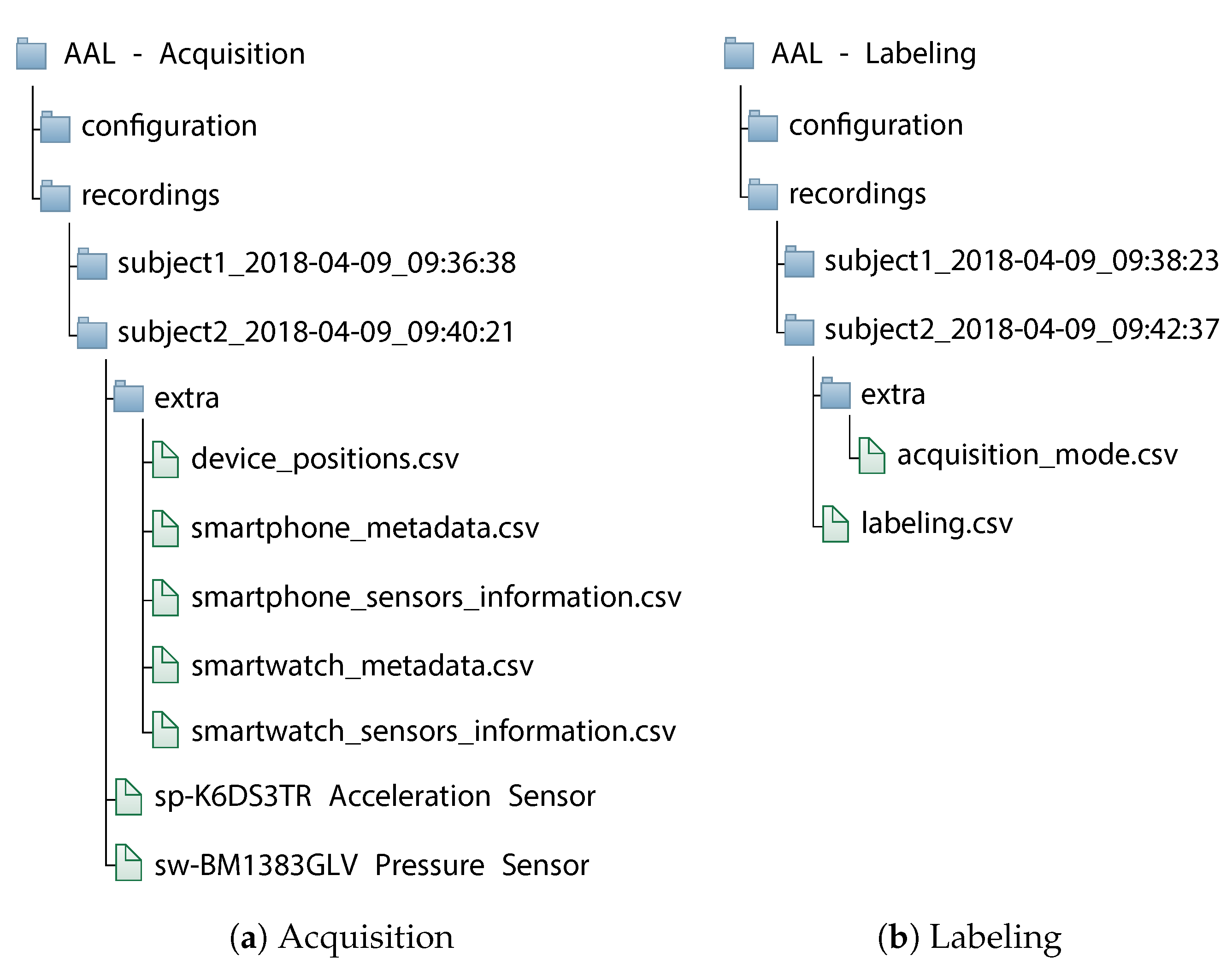

The configuration folder of the acquisition application is placed in the AAL - Acquisition folder. The left side of Figure 2 shows the folder hierarchy and the two JSON files for the device positions. The configuration folder of the labeling application is located in the AAL - Labeling folder. The right side of Figure 2 shows the folder hierarchy and the two JSON files for the activities.

Listing 4.

Example of the file that includes the list of protocols from which the user makes a selection.

3.3. User Friendliness

The application must have a pleasant, intuitive, and clean user interface in order to promote its use and to minimize errors in its use.

To fulfil this aim, we followed two directions. On the one hand, we analyzed the layouts of other applications with similar functionalities; in this way, we were able to identify the positive and negative aspects of each application from our point of view. On the other hand, we followed the guidelines defined in the Material Design [36] specification, which is how Google recommends that Android applications be developed. In addition to recommending the patterns to follow to create a well-designed user interface, the Material Design Guidelines also give advice based on accessibility studies.

Taking into consideration the positive aspects of the analyzed applications and the indications provided by the Material Design Guidelines, we obtained a user interface that provides a good user experience and is consistent with the operating system and other Android applications. As a result, the subject is not disoriented when using either application and can concentrate solely on carrying out the activities and recording the data.

Section 4 provides some screenshots of both of the applications to show their adherence to the Material Design Guidelines.

3.4. Hardware Adaptability

Ensuring hardware adaptability requires that the acquisition application should not embed a list of predefined sensors to interface with but should be able to discover those that are available in the target smartphone and in the paired smartwatch. If available in the smartwatch, the automatically populated list should include not only classical inertial sensors (e.g., accelerometer, gyroscope, and compass), but also different sensors for the monitoring of, for example, the ambient temperature, ambient level of humidity, heart rate, and others.

To achieve this goal, we used the Android API. In particular, we exploited the SensorManager class, which provides the getSensorList(int type) [47] method, through which it is possible to obtain a list of the sensors available on a device. In this way, the acquisition application adapts to the smartphone on which it is executed and lets the user select all or some of the available sensors. Moreover, if the smartphone is paired with a smartwatch, the application can also adapt to the smartwatch, making it possible to select the sensors available on the smartwatch itself and thus increasing the information associated with the activities carried out by the subject.

This provides great flexibility in the choice of the data types that can be recorded. Moreover, the subject can restrict the selection to the sensors hosted by the smartphone only or by the smartwatch only.

3.5. Data Meaningfulness

Signals acquired by the sensors can be used without any additional information. However, the knowledge of information about the devices (smartphone and smartwatch) and the sensors used to acquire the signals may help in improving the data processing.

Concerning the devices, additional information may include, for example, the version of the operating system, the manufacturer, and the model of the device. Concerning the sensors, the manufacturer and the model can be very useful to search for the technical datasheet of the sensor. In addition, operational information of the sensors, such as the resolution, the delay, and the acquisition rate, can be very useful for processing purposes.

As for hardware adaptability, to achieve this goal we used the Android API (Application Programming Interface). In particular, we exploited the Build class [48], which exposes a set of constants whose values are initialized according to the device on which the application is running. Such constants include, for example, the manufacturer, the brand, the release version, and much more information about the device. In this way, the application can automatically retrieve this information each time a new acquisition is started.

For the sensors, we exploited the Sensor class [49], which represents a sensor. Each instance is initialized with the specific characteristics of the represented sensor, which can be accessed through dedicated methods. For example, the method getMaximumRange() returns the maximum range of the sensor in the sensor’s unit, and the method getMinDelay() returns the minimum delay allowed between two events in microseconds.

As for the Build class, each Sensor instance is automatically initialized according to the sensor it represents. Thus, the application can automatically retrieve the sensors’ information each time a new acquisition is started, as the corresponding Sensor instances are initialized accordingly.

5. The Recordings

This section details how the recordings generated by the two applications are organized and how they can be accessed. As introduced in Section 3.2, both applications have a main folder in the public storage of the smartphone: AAL - Acquisition for the application that acquires the signals from the sensors, and AAL - Labeling for the application that labels these data. Because these files are in public storage, a user can access them easily, either by connecting the smartphone to a computer or directly from the mobile device.

As shown in Figure 6, both the AAL - Acquisition folder and the AAL - Labeling folder contain two subfolders: configuration and recordings. The configuration folder is discussed in Section 3.2 and contains the files that a user can change in order to adapt the applications to the researchers’ needs, while the recordings folder contains the files with the recorded signals and labels.

Each time a subject starts a recording session, the acquisition application creates a new folder whose name is obtained by concatenating the id (identifier) of the subject with the date and time at which the recording begins. For example, the folder subject2_2018-04-09_09:40:21 in Figure 6a specifies that the recordings are related to a subject with ID “subject2” who started a session on April 9, 2018 at 09:40:21. Each folder related to an acquisition session performed by a subject contains as many CSV files as the number of sensors selected for the recordings, each containing the acquired signals. The name of each file starts with a string that specifies whether the sensor belongs to the smartphone (sp) or to the smartwatch (sw), followed by the name of the sensor as provided by the Android API. The files containing signals from smartwatch sensors are transferred to the smartphone via Bluetooth. For example, the file sp-K6DS3TR Acceleration Sensor in Figure 6a contains the acceleration signals acquired by the sensor identified as K6DS3TR hosted in the smartphone.
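The naming scheme above can be sketched in plain Java as follows. This is a minimal sketch under stated assumptions, not the applications’ code: the class RecordingNames and its methods are hypothetical, the timestamp pattern yyyy-MM-dd_HH:mm:ss is inferred from the folder names in Figure 6, and the hyphen after sp/sw is taken from the file name example.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

/** Hypothetical helper reproducing the naming pattern shown in Figure 6. */
final class RecordingNames {
    // Pattern inferred from folder names such as "subject2_2018-04-09_09:40:21".
    private static final DateTimeFormatter STAMP =
            DateTimeFormatter.ofPattern("yyyy-MM-dd_HH:mm:ss");

    private RecordingNames() {}

    /** Session folder: subject ID, then the date and start time of the recording. */
    static String sessionFolder(String subjectId, LocalDateTime start) {
        return subjectId + "_" + start.format(STAMP);
    }

    /** Signal file: "sp" (smartphone) or "sw" (smartwatch), then the Android sensor name. */
    static String signalFile(boolean onSmartwatch, String androidSensorName) {
        return (onSmartwatch ? "sw" : "sp") + "-" + androidSensorName;
    }
}
```

For example, sessionFolder("subject2", LocalDateTime.of(2018, 4, 9, 9, 40, 21)) yields subject2_2018-04-09_09:40:21, and signalFile(false, "K6DS3TR Acceleration Sensor") yields sp-K6DS3TR Acceleration Sensor.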

Similarly, the recordings folder of the labeling application contains the data related to the activities performed while the application runs. Each time a subject labels the first activity of a session, the labeling application creates a new folder following the same naming pattern as the acquisition application. For example, the folder subject2_2018-04-09_09:42:37 in Figure 6b specifies that the labels are related to a subject with ID “subject2” who started a labeling session on April 9, 2018 at 09:42:37. Each folder related to a labeling session contains a CSV file named labeling that contains the labels of the activities that the subject has performed (see Figure 6b).

The subfolders of the recordings folder of the acquisition application can be associated with the corresponding subfolders of the recordings folder of the labeling application thanks to the names assigned to them. That is, given a recordings subfolder of the acquisition application, the associated subfolder of the labeling application is the one that has the same subject identifier and the time closest to that of the acquisition subfolder. For example, the subfolder subject2_2018-04-09_09:40:21 created by the acquisition application and the subfolder subject2_2018-04-09_09:42:37 created by the labeling application can be associated because they have the same identifier and because the time of the labeling subfolder is the closest to that of the acquisition subfolder.
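The association rule can be expressed as a short routine. The sketch below is hypothetical (class and method names are ours) and assumes that every folder name ends with the 20-character suffix _yyyy-MM-dd_HH:mm:ss; parsing from the end keeps subject IDs that themselves contain underscores intact. It picks the labeling folder with the same subject ID and the smallest absolute time difference, which is one literal reading of “closest”.

```java
import java.time.Duration;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.List;
import java.util.Optional;

/** Hypothetical sketch of the folder-association rule described in the text. */
final class SessionMatcher {
    private static final DateTimeFormatter STAMP =
            DateTimeFormatter.ofPattern("yyyy-MM-dd_HH:mm:ss");
    // "_" + "yyyy-MM-dd" + "_" + "HH:mm:ss" = 1 + 10 + 1 + 8 characters.
    private static final int SUFFIX_LENGTH = 20;

    private SessionMatcher() {}

    /** Subject ID: everything before the date/time suffix. */
    static String subjectOf(String folder) {
        return folder.substring(0, folder.length() - SUFFIX_LENGTH);
    }

    /** Start time: the last 19 characters, without the leading underscore. */
    static LocalDateTime startOf(String folder) {
        return LocalDateTime.parse(folder.substring(folder.length() - SUFFIX_LENGTH + 1), STAMP);
    }

    /** Labeling folder with the same subject ID and the closest start time, if any. */
    static Optional<String> match(String acquisitionFolder, List<String> labelingFolders) {
        String subject = subjectOf(acquisitionFolder);
        LocalDateTime start = startOf(acquisitionFolder);
        String best = null;
        long bestGapSeconds = Long.MAX_VALUE;
        for (String candidate : labelingFolders) {
            if (!subjectOf(candidate).equals(subject)) {
                continue; // different subject: never associated
            }
            long gap = Math.abs(Duration.between(start, startOf(candidate)).getSeconds());
            if (gap < bestGapSeconds) {
                bestGapSeconds = gap;
                best = candidate;
            }
        }
        return Optional.ofNullable(best);
    }
}
```

A stricter variant could consider only labeling folders whose time follows the acquisition time, as in the example above; the text does not say which variant the applications use.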

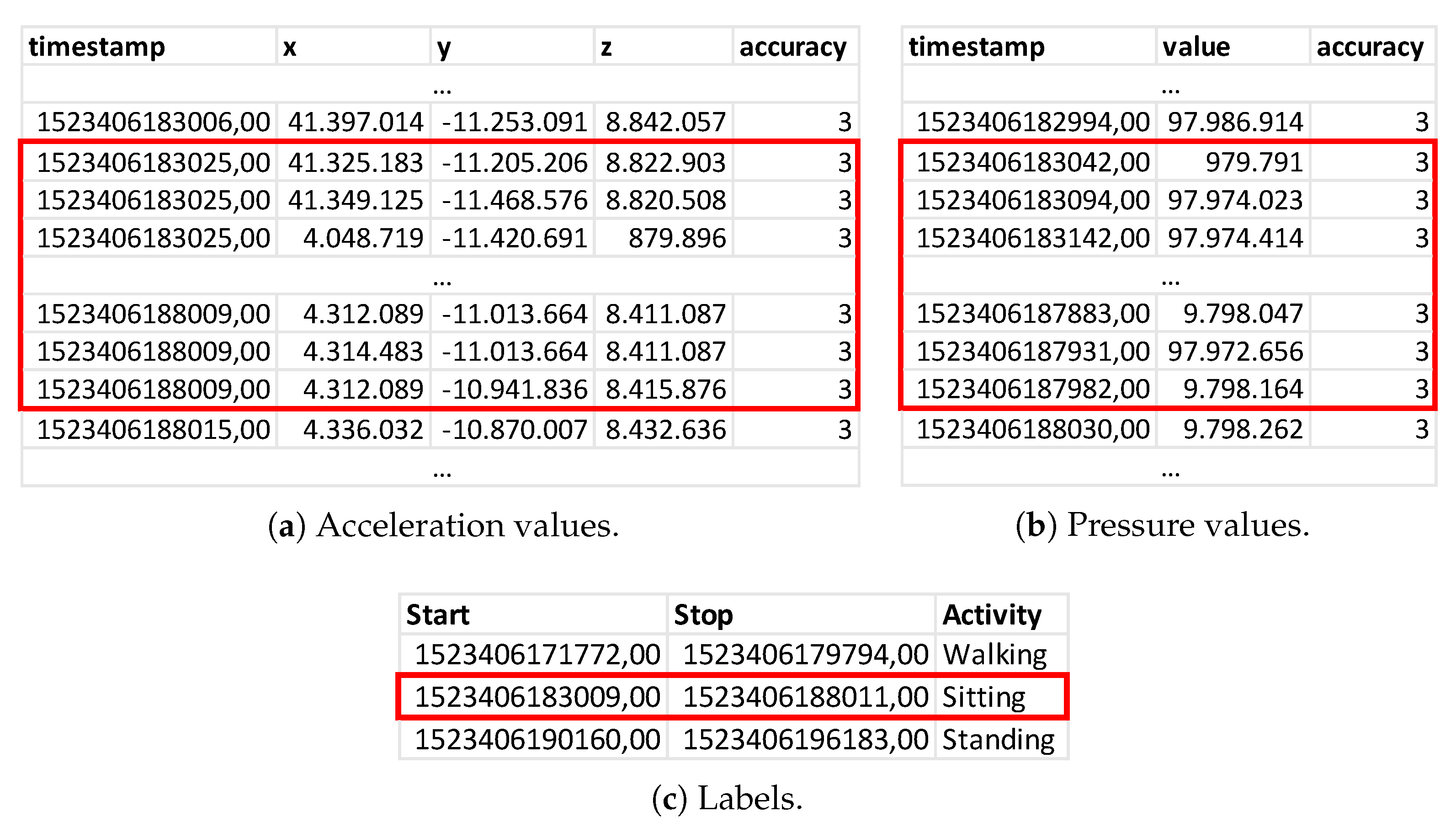

Figure 7a,b show, respectively, fragments of the sp-K6DS3TR Acceleration Sensor and sw-BM1383GLV Pressure Sensor files stored in the subject2_2018-04-09_09:40:21 folder. The first column indicates the acquisition time in milliseconds, the following columns contain the acquired values (x, y, and z for the acceleration sensor and the pressure value for the pressure sensor), and the last column specifies the accuracy of the values as returned by the Android API. In particular, the accuracy can assume one of five predefined values: −1 when the values returned by the sensor cannot be trusted because the sensor had no contact with what it was measuring (e.g., the heart rate monitor is not in contact with the user), 0 when the values returned by the sensor cannot be trusted because calibration is needed or the environment does not allow readings (e.g., the humidity in the environment is too high), 1 when the accuracy is low, 2 when the accuracy is medium, and 3 when the accuracy is maximum [47].
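A row of a signal file can be decoded with a sketch like the following. The numeric accuracy codes mirror Android’s SensorManager constants (SENSOR_STATUS_NO_CONTACT = −1, SENSOR_STATUS_UNRELIABLE = 0, SENSOR_STATUS_ACCURACY_LOW, MEDIUM, and HIGH = 1, 2, and 3); the comma separator and the names Accuracy and SignalRow are assumptions, since the exact file layout is shown only in Figure 7.

```java
/** Accuracy levels; the codes mirror Android's SensorManager.SENSOR_STATUS_* constants. */
enum Accuracy {
    NO_CONTACT(-1), UNRELIABLE(0), LOW(1), MEDIUM(2), HIGH(3);

    final int code;

    Accuracy(int code) {
        this.code = code;
    }

    static Accuracy fromCode(int code) {
        for (Accuracy a : values()) {
            if (a.code == code) {
                return a;
            }
        }
        throw new IllegalArgumentException("Unknown accuracy code: " + code);
    }

    /** NO_CONTACT and UNRELIABLE readings should not be trusted. */
    boolean trustworthy() {
        return code >= LOW.code;
    }
}

/** Hypothetical model of one row: time (ms), one or more values, accuracy (last column). */
final class SignalRow {
    final long timeMs;
    final double[] values;
    final Accuracy accuracy;

    SignalRow(long timeMs, double[] values, Accuracy accuracy) {
        this.timeMs = timeMs;
        this.values = values;
        this.accuracy = accuracy;
    }

    /** Parses a row, assuming comma-separated columns (check against the actual files). */
    static SignalRow parse(String line) {
        String[] cols = line.trim().split(",");
        if (cols.length < 3) {
            throw new IllegalArgumentException("Expected time, value(s), accuracy: " + line);
        }
        long time = Long.parseLong(cols[0].trim());
        double[] values = new double[cols.length - 2];
        for (int i = 1; i < cols.length - 1; i++) {
            values[i - 1] = Double.parseDouble(cols[i].trim());
        }
        Accuracy acc = Accuracy.fromCode(Integer.parseInt(cols[cols.length - 1].trim()));
        return new SignalRow(time, values, acc);
    }
}
```

The same parser handles the three-value acceleration rows and the single-value pressure rows, because only the first and last columns have a fixed meaning.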

Figure 7c shows the content of the paired labeling file (contained in the subject2_2018-04-09_09:42:37 folder). The first and second columns specify, respectively, the beginning and the end of the activity, whose label is given in the third column. The red bounding boxes in Figure 7a,b indicate the signals labeled with “sitting”, as their timestamps fall within the interval of that activity.
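Matching samples to labels, as the red boxes do visually, amounts to an interval lookup. In this hypothetical sketch the labeling rows are assumed to be comma separated, with begin and end times in the same millisecond time base as the signal files; since acquisition and labeling may run on two different smartphones, this only holds if the clocks of the two devices agree.

```java
import java.util.List;
import java.util.Optional;

/** Hypothetical sketch: attaches activity labels to signal samples by timestamp. */
final class Labeler {
    /** One row of the labeling file: begin time, end time, activity label. */
    static final class Interval {
        final long begin;
        final long end;
        final String label;

        Interval(long begin, long end, String label) {
            this.begin = begin;
            this.end = end;
            this.label = label;
        }
    }

    private Labeler() {}

    /** Parses "begin,end,label"; the limit of 3 keeps any commas inside the label. */
    static Interval parse(String line) {
        String[] cols = line.trim().split(",", 3);
        return new Interval(Long.parseLong(cols[0].trim()), Long.parseLong(cols[1].trim()), cols[2].trim());
    }

    /** Label of the first activity whose [begin, end] interval contains the sample time. */
    static Optional<String> labelAt(long sampleTimeMs, List<Interval> intervals) {
        for (Interval it : intervals) {
            if (sampleTimeMs >= it.begin && sampleTimeMs <= it.end) {
                return Optional.of(it.label);
            }
        }
        return Optional.empty(); // sample falls between labeled activities
    }
}
```

Samples that fall between two labeled activities get no label, which keeps transitions out of the training data rather than assigning them to a neighboring activity.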

At each recording session, both the acquisition and the labeling application store in their

extra folder additional information that can be useful for data processing (see

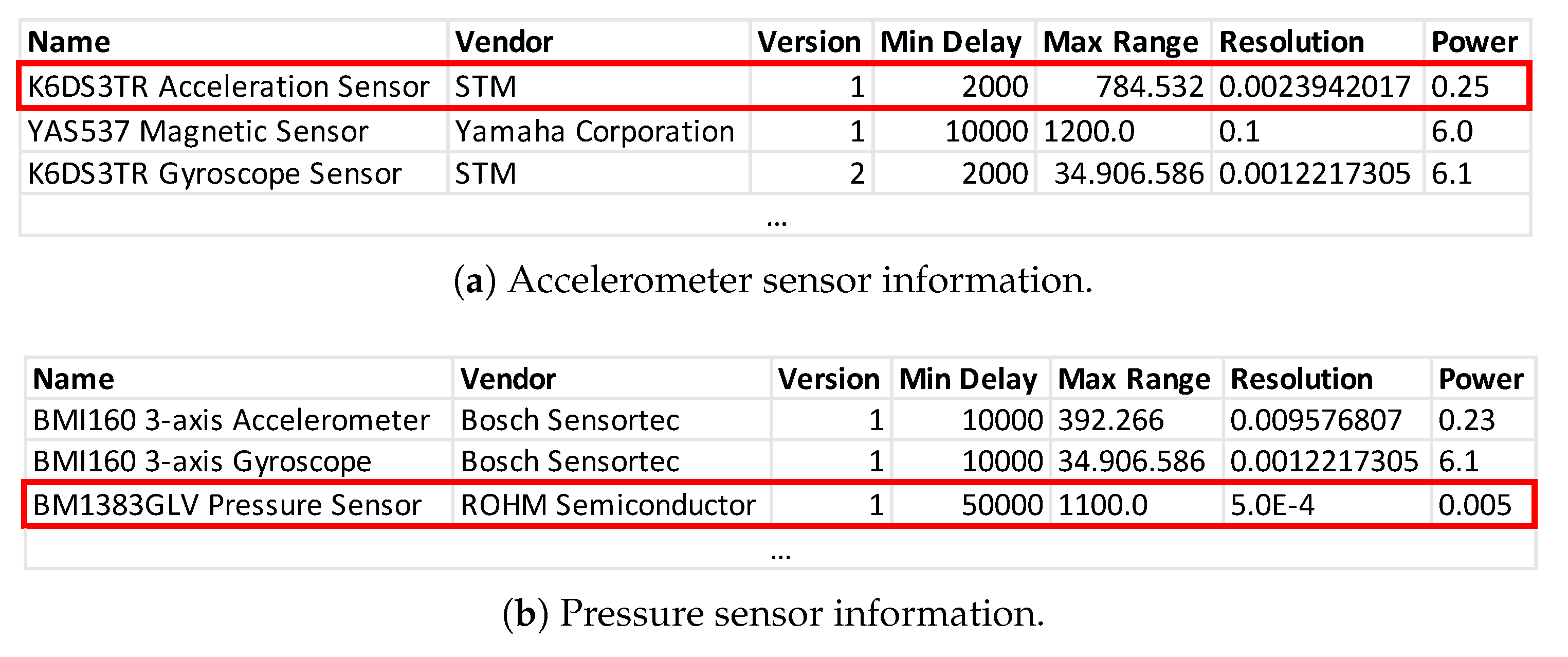

Figure 6). The acquisition application includes the following CSV files:

device_positions contains the positions of the devices indicated by the subject.

smartphone_metadata and smartwatch_metadata respectively contain information about the smartphone and the smartwatch used to perform the recordings, such as the manufacturer, the model, and the Android version. This information is automatically retrieved from the smartphone and the smartwatch by the applications.

smartphone_sensors_information and smartwatch_sensors_information respectively contain information about the sensors of the smartphone and the smartwatch that have been used to record the signals. Besides the name of the sensor, its vendor, and other information that allows the sensor’s detailed specifications to be determined, the files include data such as the minimum delay (i.e., the minimum time interval, in microseconds, allowed between two events), the maximum range (i.e., the maximum range of the sensor in the sensor’s unit), and the resolution of the sensor in the sensor’s unit.
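The minimum delay stored in these files can be turned into the highest sampling rate the sensor supports, rate = 1,000,000 / minDelay Hz. A small sketch follows; the class name is hypothetical, while treating a minimum delay of 0 as an on-change sensor (no fixed rate) follows the documentation of Android’s Sensor.getMinDelay().

```java
import java.util.OptionalDouble;

/** Hypothetical helper interpreting the minimum-delay column of the *_sensors_information files. */
final class SensorRates {
    private SensorRates() {}

    /**
     * Highest sampling rate in Hz implied by a minimum delay in microseconds.
     * Android reports 0 for on-change sensors, which have no fixed rate, so any
     * non-positive delay yields an empty result.
     */
    static OptionalDouble maxRateHz(int minDelayMicros) {
        if (minDelayMicros <= 0) {
            return OptionalDouble.empty();
        }
        return OptionalDouble.of(1_000_000.0 / minDelayMicros);
    }
}
```

For instance, a minimum delay of 5000 µs corresponds to at most 200 Hz, which bounds the sampling rate that can be requested for that sensor.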

Figure 8 shows the content of these files for the recording session sketched in Figure 7. The red bounding boxes highlight the sensors that generated the signals in Figure 7a,b.

The labeling application includes the acquisition_mode CSV file, which specifies the acquisition mode (i.e., free choice or predefined list) selected by the subject. If the predefined list mode has been selected, the labeling application also records the name of the selected protocol.

7. Conclusions

The lack of available applications to acquire and label signals acquired from sensors and the small number of public datasets containing labeled signals force researchers to develop their own applications whenever they need to experiment with a new activity recognition technique based on machine learning algorithms.

In this article, we present UniMiB AAL, an application suite for Android-based devices that eases the acquisition of signals from sensors and their labeling. The suite is not yet available on the Google Play Store, but it can be downloaded from the following address: http://www.sal.disco.unimib.it/technologies/unimib-aal/ [33]. The suite is composed of two applications (acquisition and labeling) designed to be executed on two different smartphones. This may reduce the number of erroneously labeled signals, as the smartphone acquiring the signals is never moved from its position during the labeling task. Moreover, assigning acquisition and labeling to two separate, dedicated applications is also an advantage when an operator assists the subject during the recording activities. In this scenario, the second smartphone allows the operator to handle the labeling while the subject only has to perform the experiment. Indeed, our empirical experience when collecting the UniMiB SHAR [16] dataset suggested that subjects tend to be less precise and make more errors than the designers of the experiments. Although the configuration that exploits two smartphones helps in obtaining correctly labeled signals, the two applications can also be run on a single smartphone. This can be useful whenever the subject is unable to manage two smartphones.

Both applications feature a simple and clear interface and can acquire data from a variety of sensors. The acquisition application automatically discovers the sensors hosted by the devices so that it can adapt to the actual running environment. Moreover, the configuration information, including the list of activities and the positions of the devices, is stored in externally accessible files. This allows researchers to personalize the configurations according to their needs without having to recompile the applications.

In order to validate the usability, we conducted a number of tests involving 10 users equally distributed in terms of age and gender. The tests showed that a very short time was needed to understand how to use the applications and start using them.

The application scenarios of the proposed suite are numerous and mostly focus on human monitoring (see Section 2). The idea of implementing a suite for recording and labeling signals from smartphone and smartwatch sensors arose from the difficulties we encountered in acquiring a dataset of accelerometer signals related to human activities and falls [16]. This dataset, like others in the state of the art, is used to develop machine learning algorithms that are then able to predict, for example, whether a person is running or walking. To obtain robust machine learning algorithms, a huge amount of data is needed and, more importantly, the data need to be correctly labeled. Labeling data manually would be tedious and error-prone. One possible application scenario of the proposed suite is the following: a researcher needs to acquire signals from the accelerometer and the gyroscope of a smartphone in order to record signals related to n activities (walking, running, etc.) performed by a group of m people. Each activity is performed following a given storyboard: walking for 5 min, running for 3 min, and so on. Each person performing the activities keeps the smartphone that records the data in his/her pocket and holds the smartphone used for labeling in his/her hands. Using the labeling application running on the latter smartphone, the person can easily label the activities that he/she is performing.

Future developments will include a simplification of the process of selecting sensors when both the smartphone and the smartwatch have been selected, as suggested by the tests. We plan to replace the current tab layout with a simple activity that includes two buttons: one button shows a popup from which the subject can select the smartphone’s sensors; the other shows a popup from which the subject can select the smartwatch’s sensors. Moreover, we plan to add to both applications functionality that will allow researchers/users to define the content of the configuration files without having to edit files in JSON format.

Finally, we will explore the possibility of integrating voice recognition as a way to ease starting, stopping, and labeling activities. This improvement will relieve the user of the burden of manually signaling the beginning of each activity in the predefined list operation mode and of manually selecting each activity and signaling its beginning and end in the free choice operation mode.