Line Matching Based on Viewpoint-Invariance for Stereo Wide-Baseline Aerial Images

Abstract

1. Introduction

2. Image Rectification Using a Perspective Transformation Model

2.1. The Oblique Cameras Structure and the Rotation Matrix Acquisition

2.2. Perspective Transformation Matrix’s Solution and Image Correction

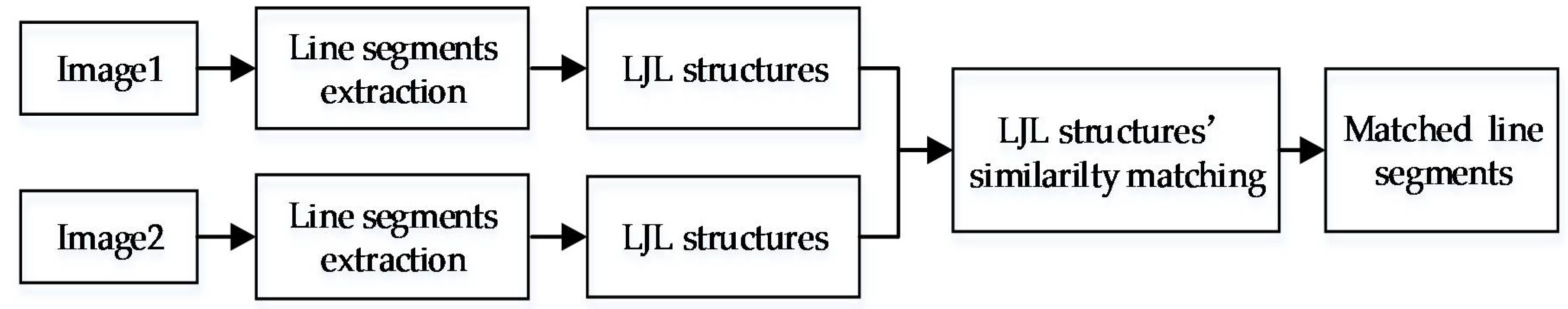

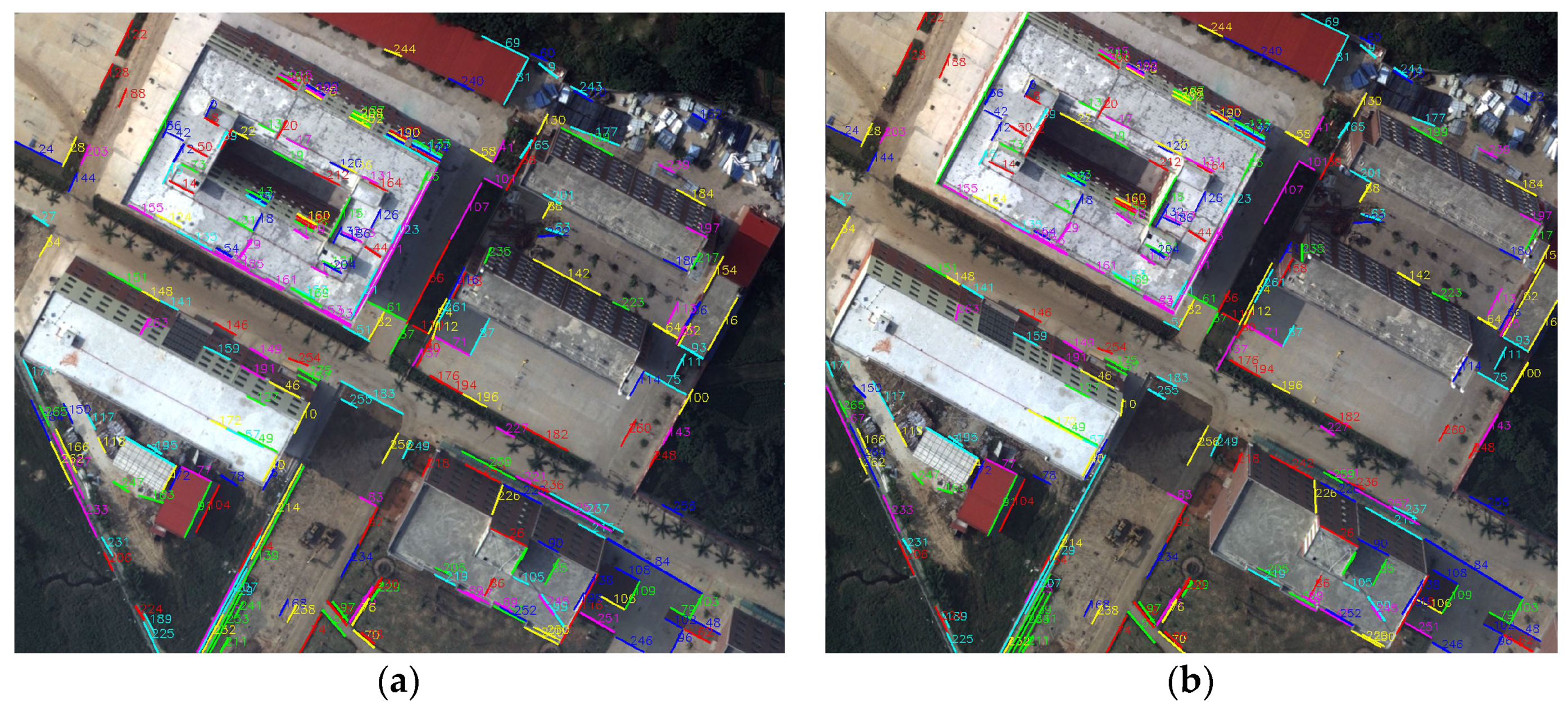

3. Line Matching for Image Pair on the Rectified Image Space

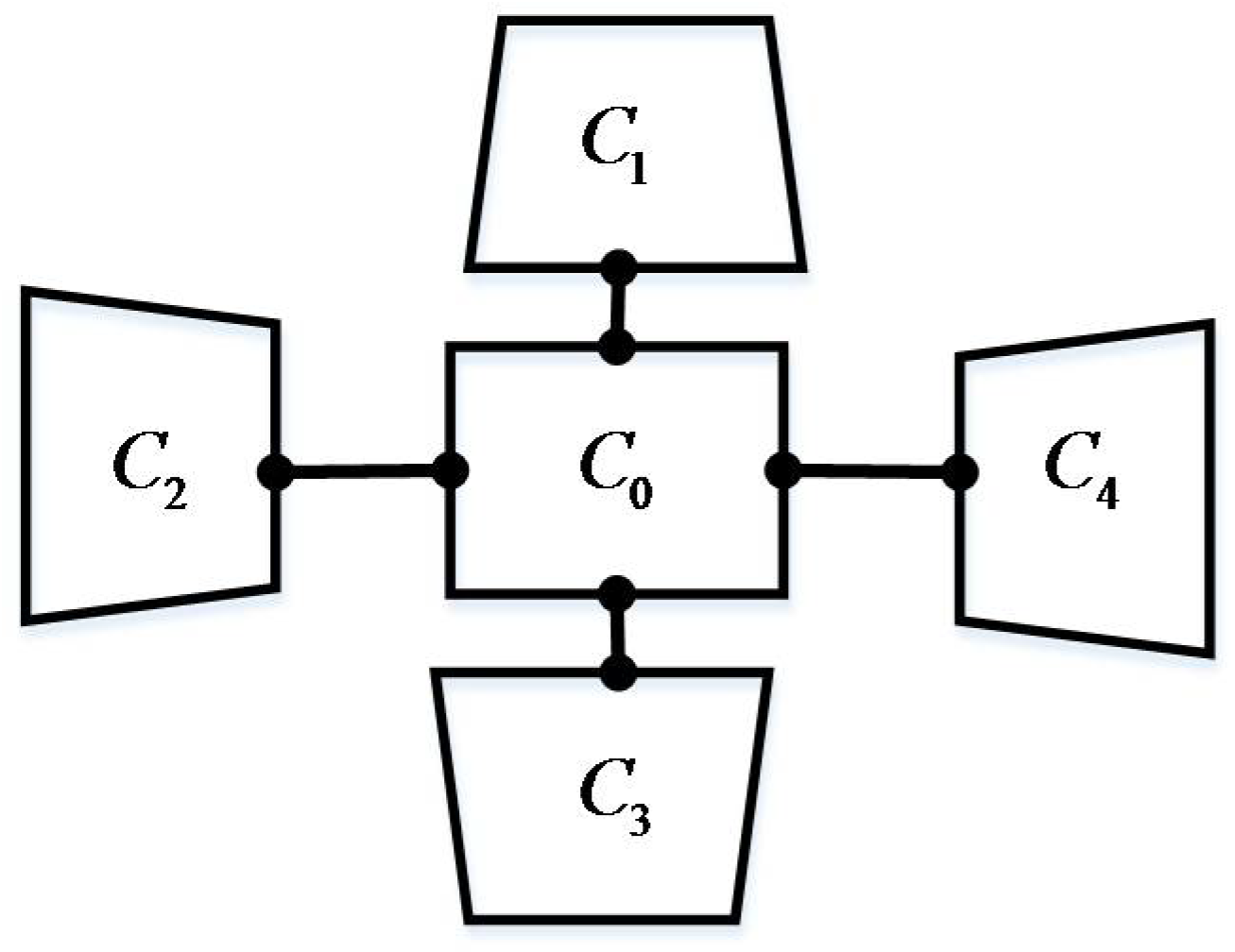

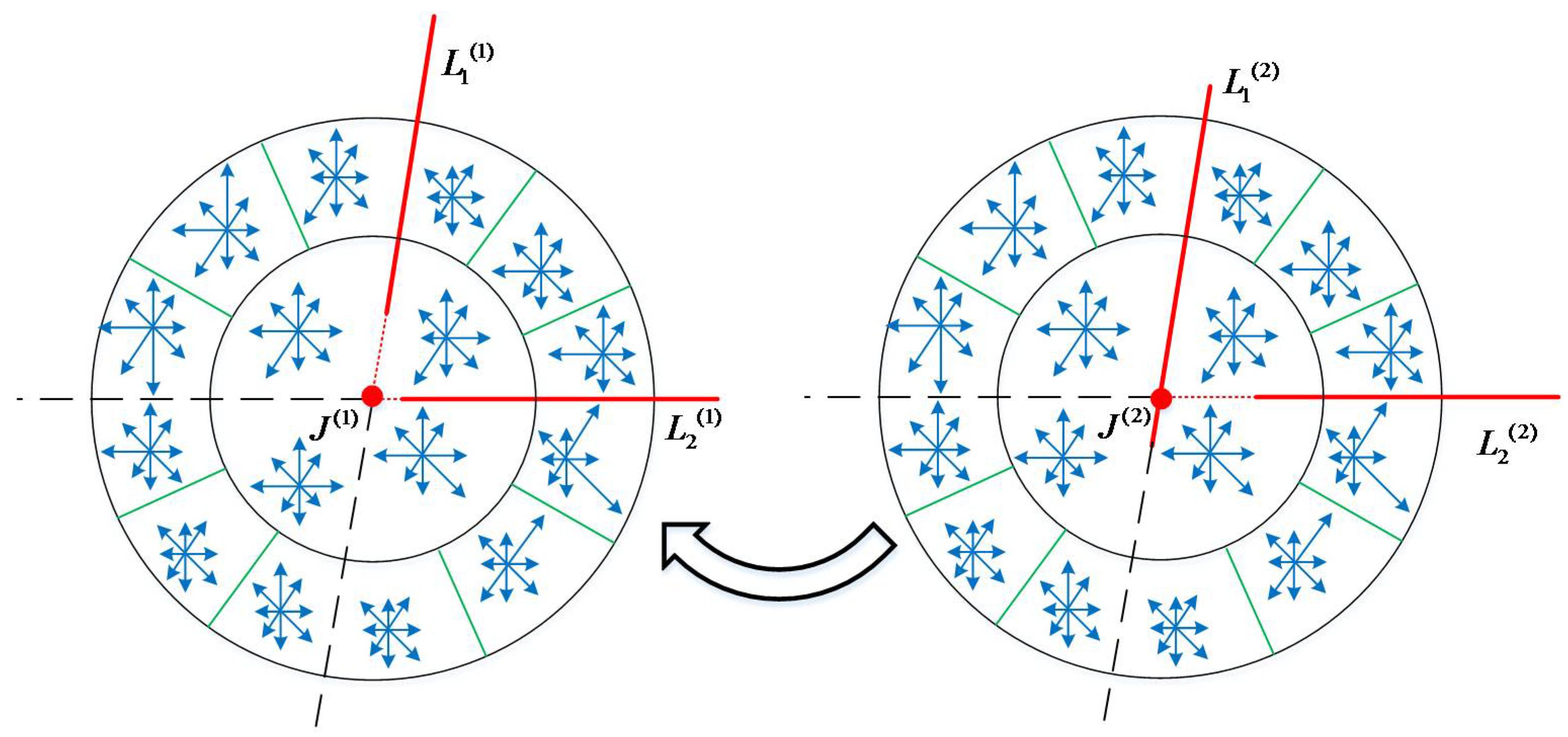

3.1. LJL Descriptor and Similarity Matching

3.2. Back-Projection of Matched Line Segments

4. Experiment and Analysis

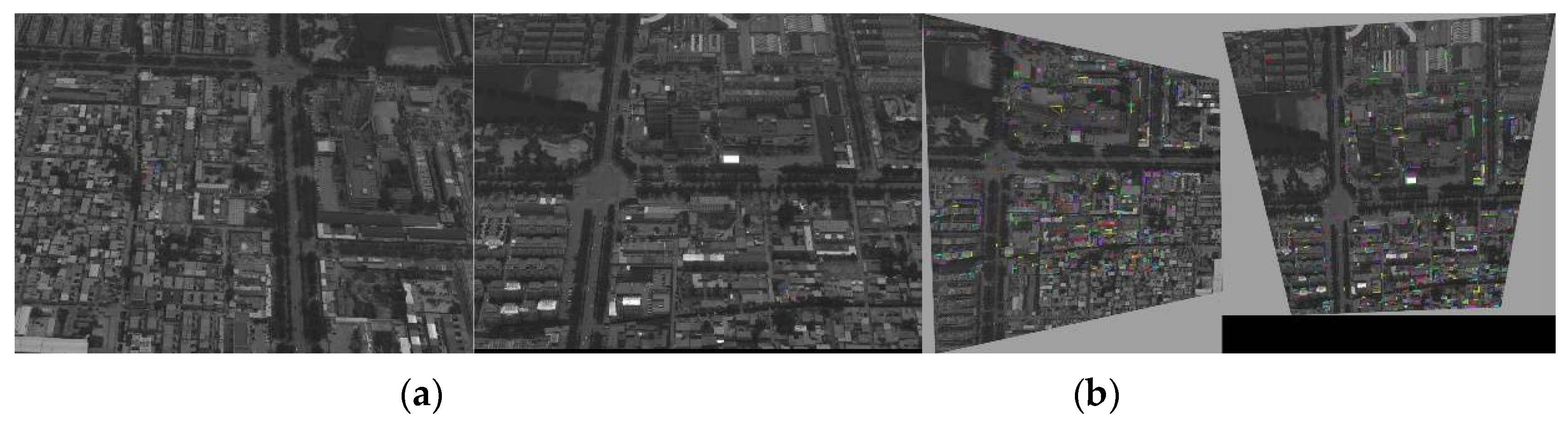

- (1)

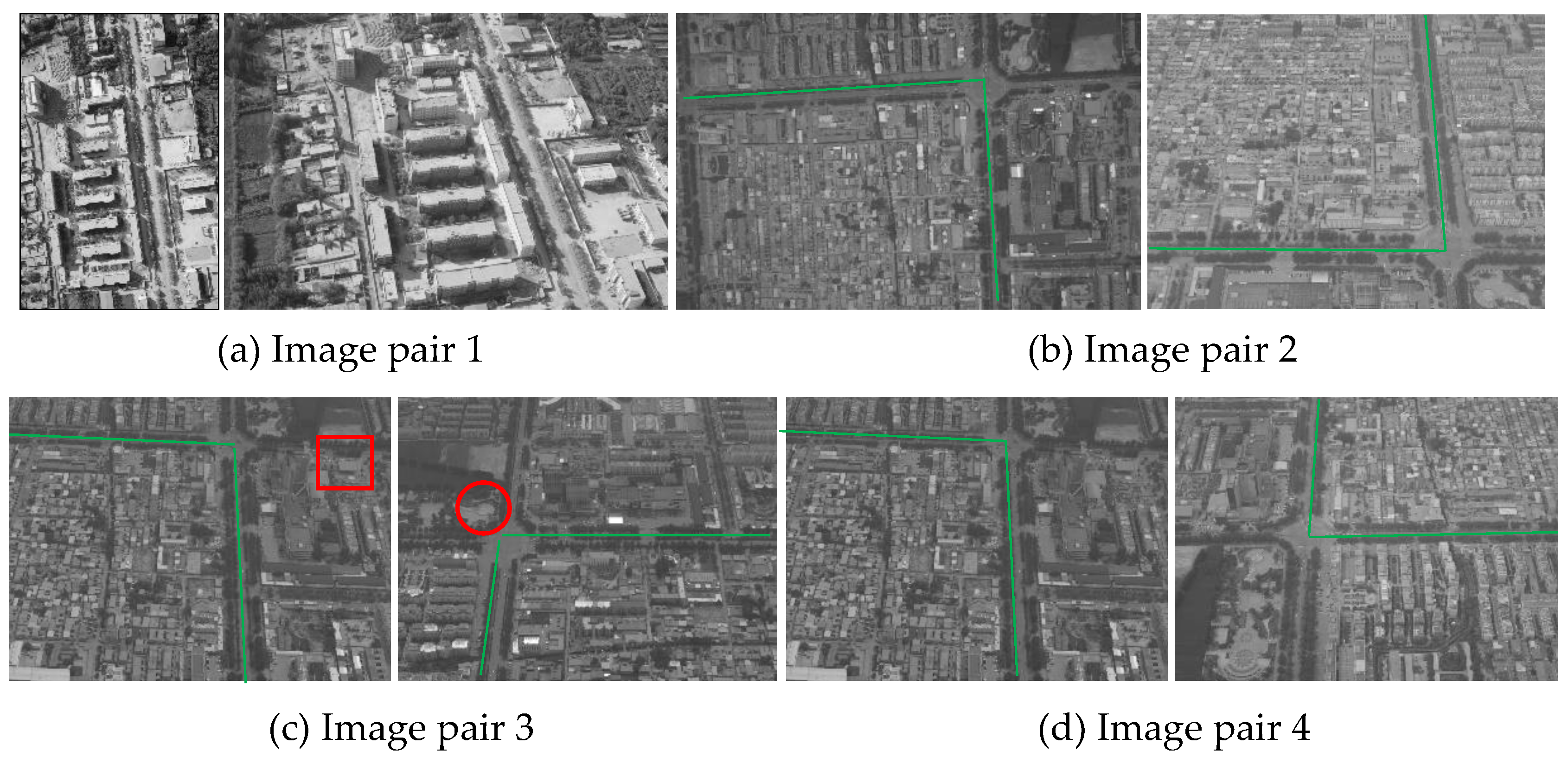

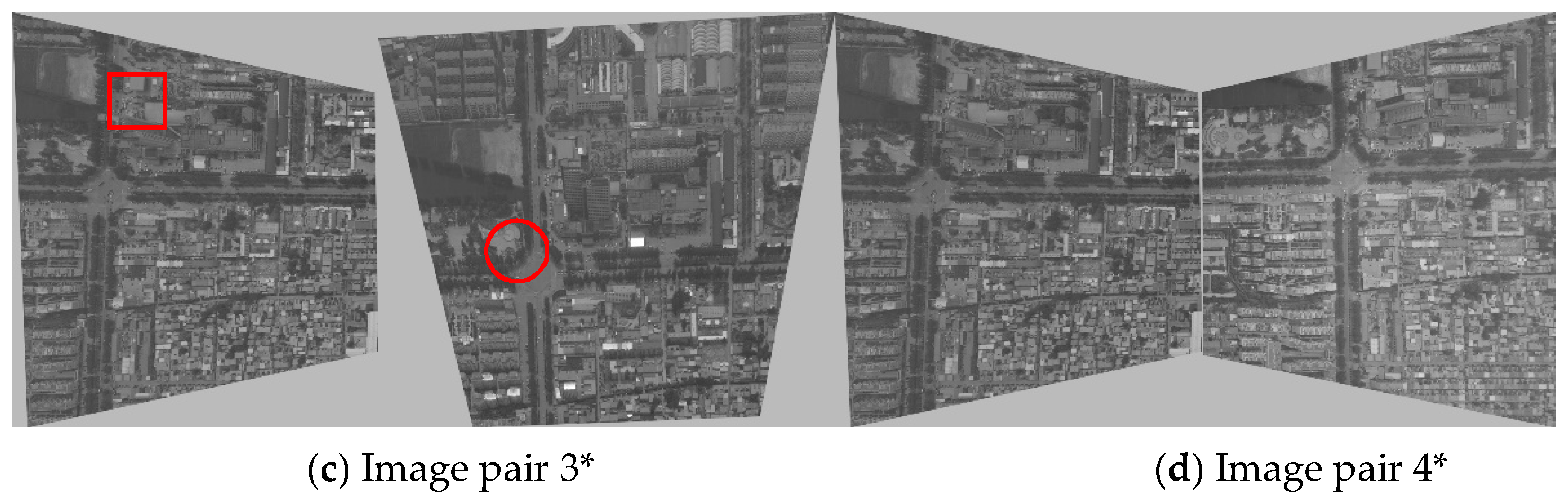

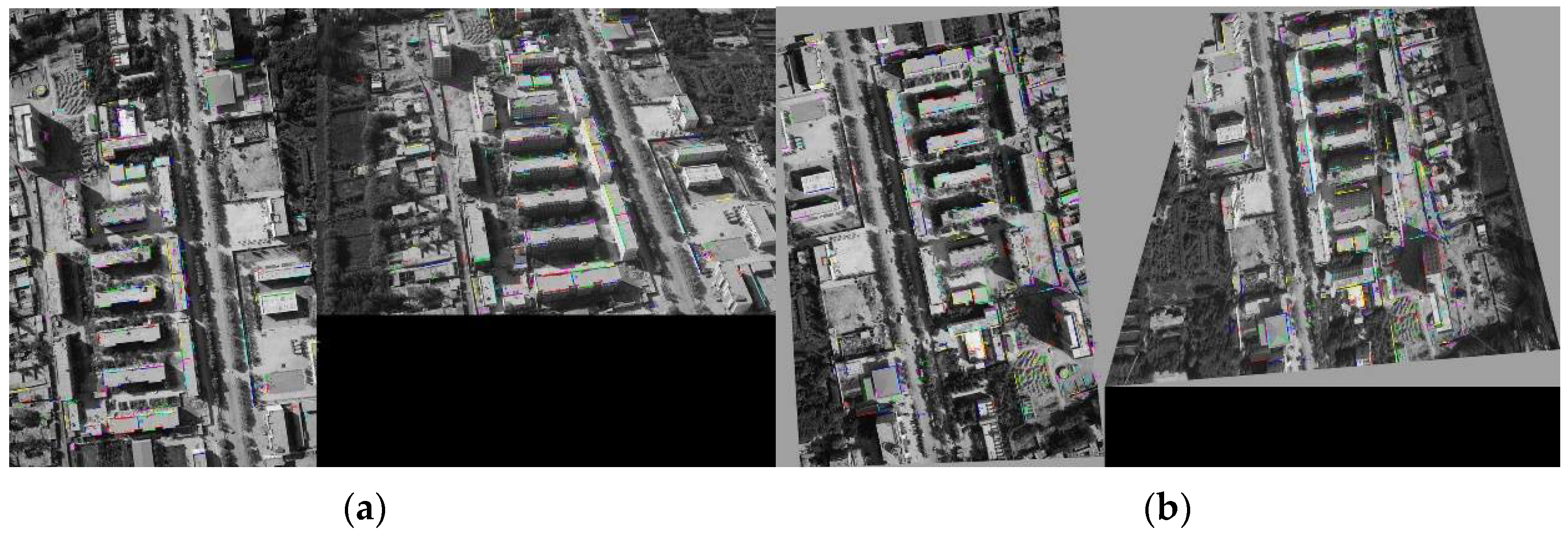

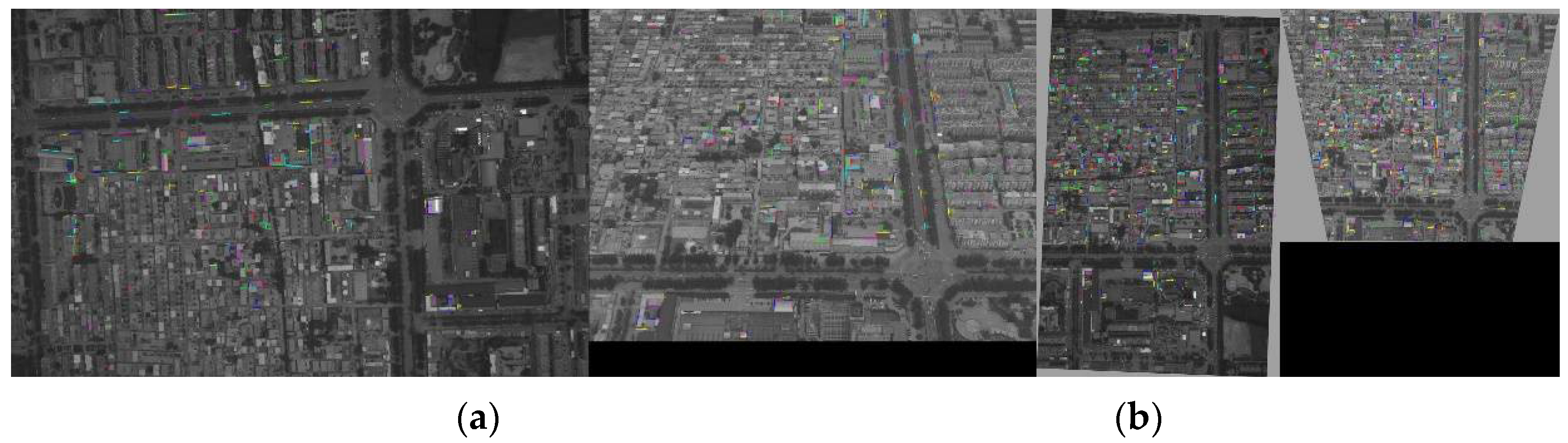

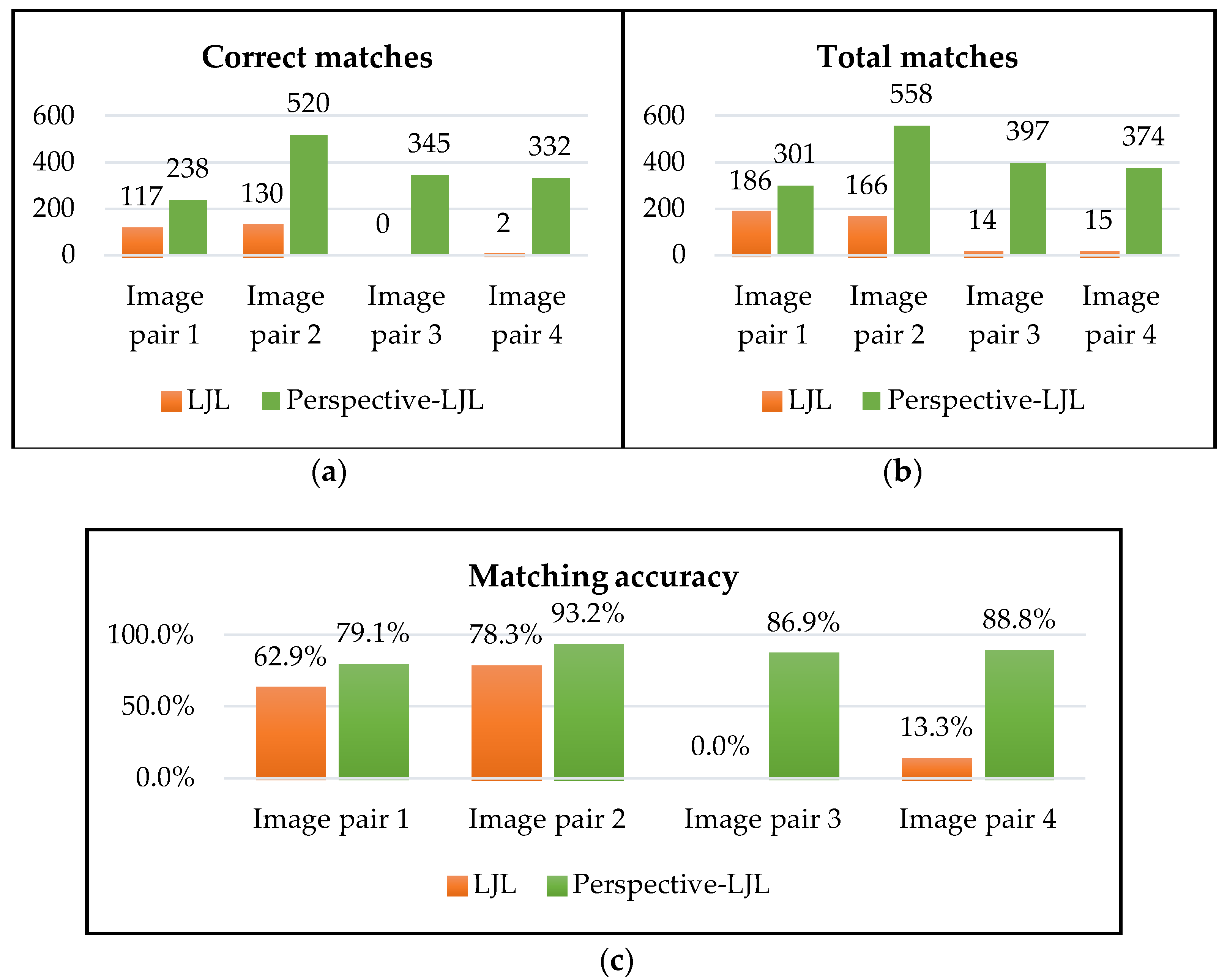

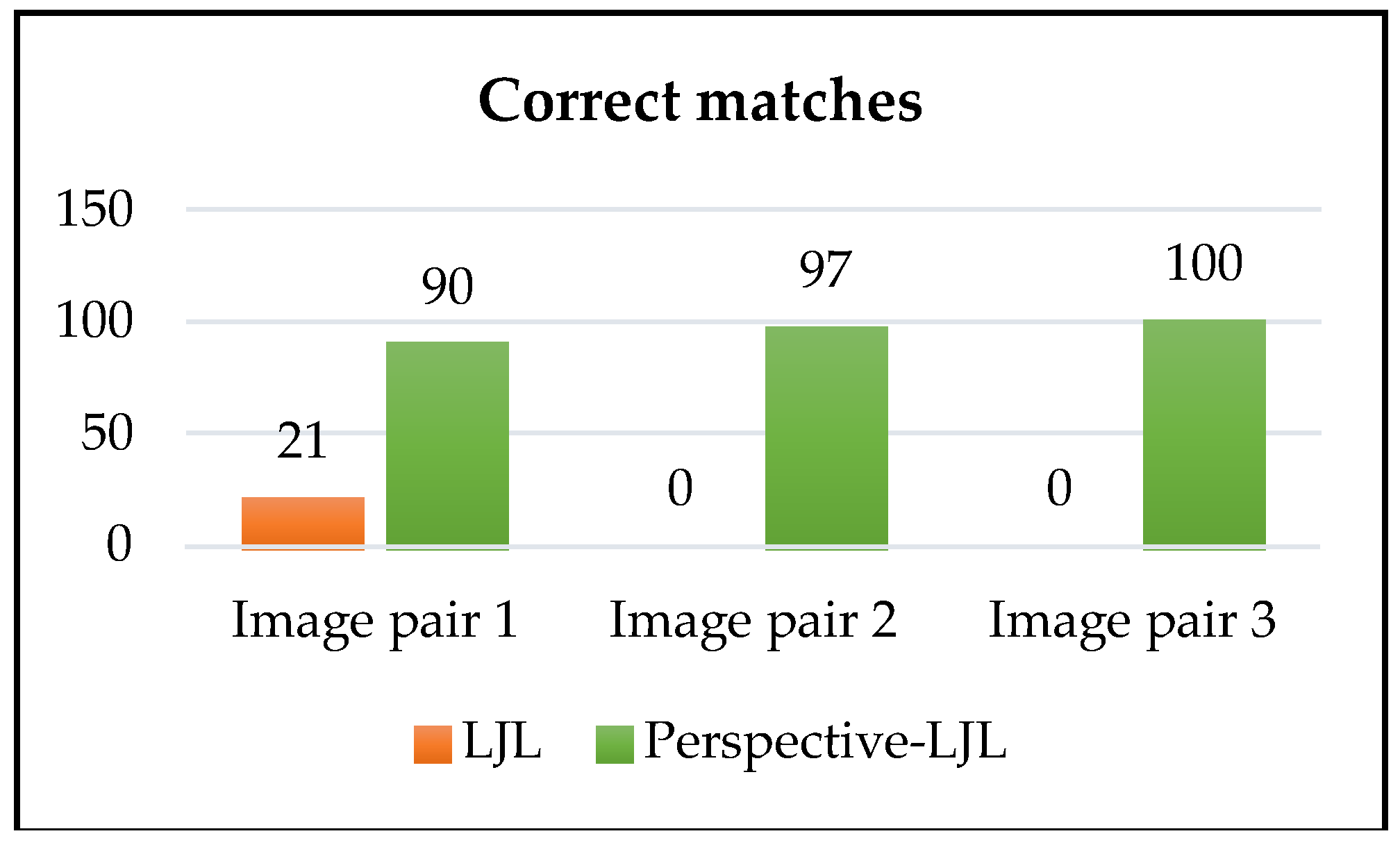

- There is a large gap between the matching results of the four oblique image pairs with respect to the matching results of conventional images (Table 1). The matching accuracy rate is far less than 97%. There are thousands of lines extracted from the two pairs of down-looking and back-looking images; however, only a small proportion of lines are matched. The number of matched line segments is 186 and 166, whereas the accuracy rate is only 62.9% and 79.1%, respectively. Especially in the last two pairs of side-looking images, the baselines are wider and the difference in viewing angles is larger; therefore, the matched line segments are fewer in number, and the accuracy rate is 0% and 13.3%. The data fully reflects the matching difficulty of images with wide baselines and large viewing angles.
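Each accuracy rate is the ratio of correct to total matched line segments. The following minimal sketch recomputes the plain-LJL rates from the (correct, total) counts listed in the matching-results tables; the function name `accuracy_percent` and the dictionary layout are illustrative assumptions, not part of the authors' implementation.

```python
def accuracy_percent(correct, total):
    """Return the matching accuracy as a percentage, rounded to one decimal."""
    if total == 0:
        return 0.0
    return round(100.0 * correct / total, 1)


# (correct, total) matched line segments for plain LJL, copied from the tables.
ljl_counts = {
    "conventional pair": (258, 266),
    "image pair 1": (117, 186),
    "image pair 2": (130, 166),
    "image pair 3": (0, 14),
    "image pair 4": (2, 15),
}

ljl_accuracy = {
    name: accuracy_percent(correct, total)
    for name, (correct, total) in ljl_counts.items()
}

for name, acc in ljl_accuracy.items():
    print(f"{name}: {acc}%")
```

Recomputing the rates this way is a quick consistency check between the counts in the tables and the percentages quoted in the text.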

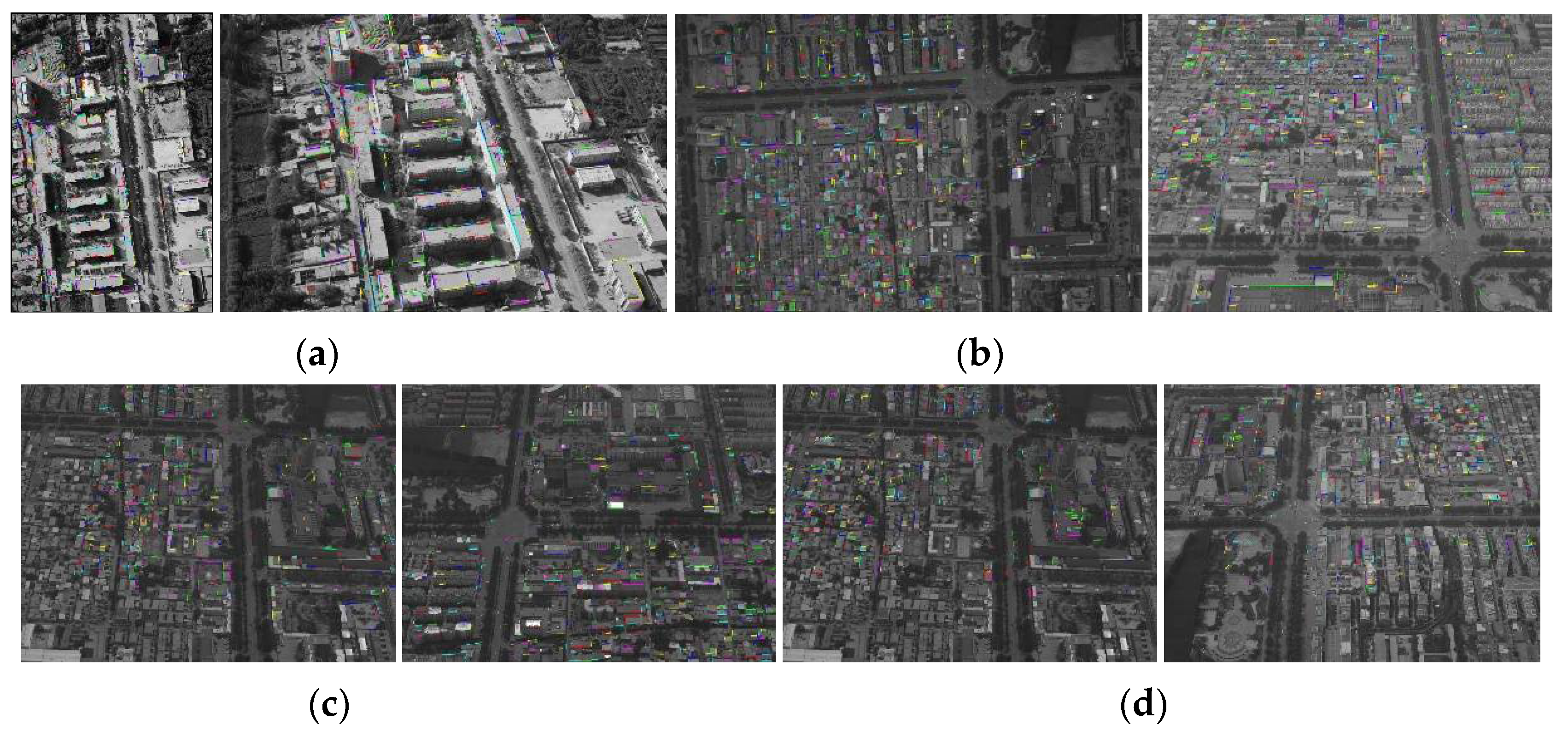

- (2)

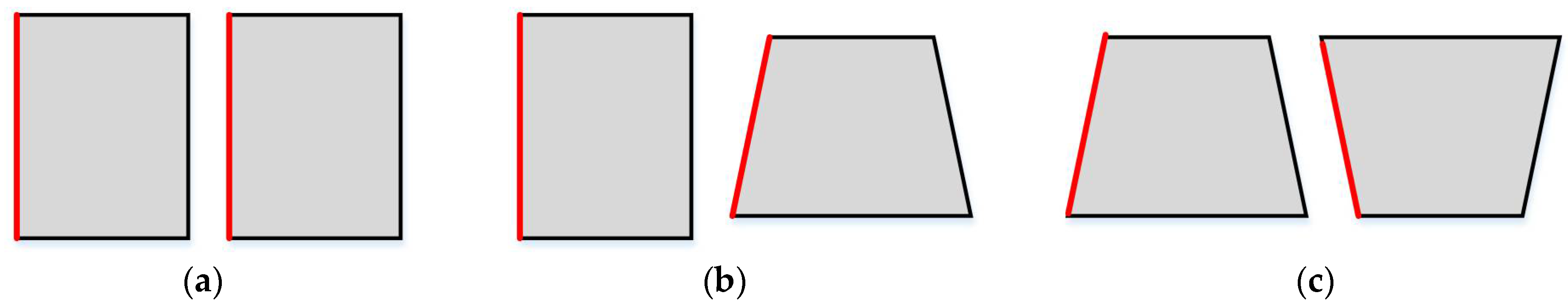

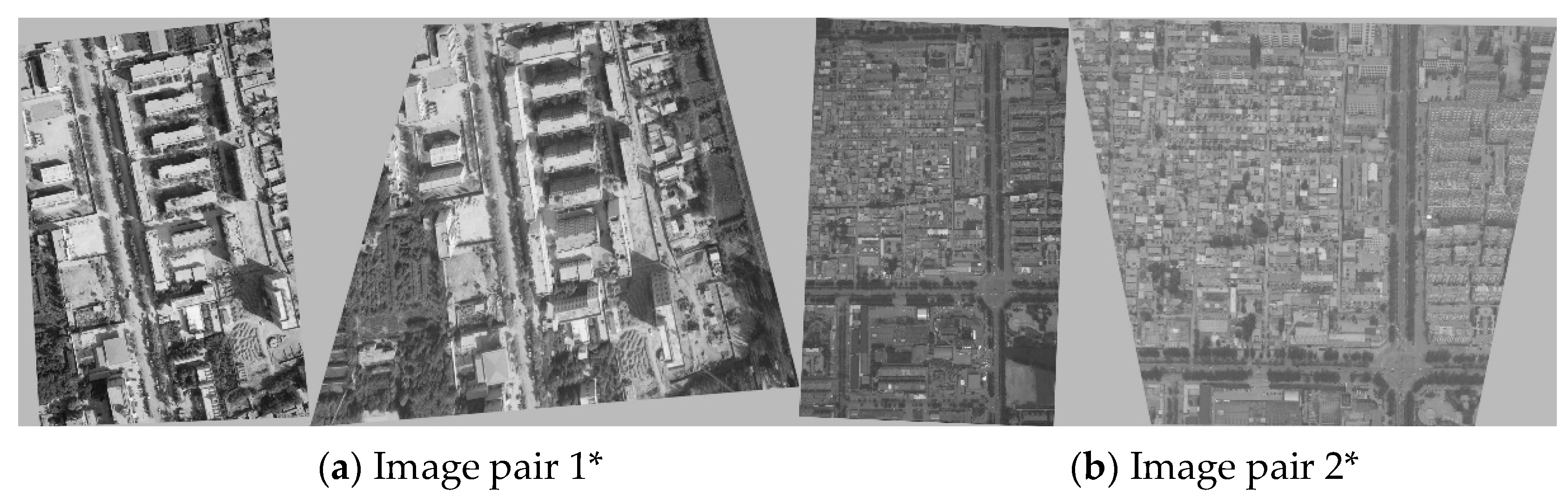

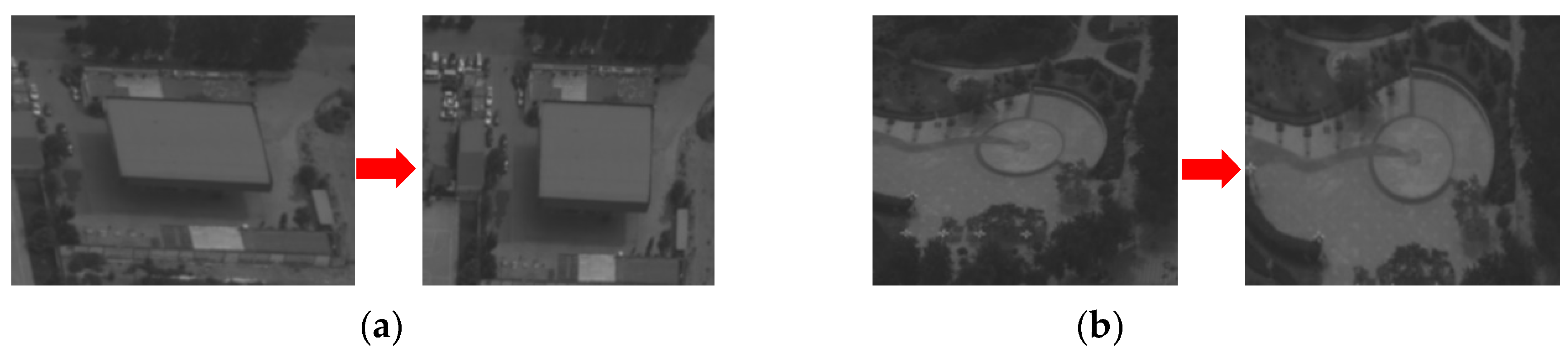

- The number of line segments extracted from rectified images is less than that of original images. Because rectified images are resampled and their quality is lower than that of original images, the extraction effect will be worse. At the same time, their effective trapezoidal contents are smaller than the original images’ rectangular contents. For a line extraction algorithm such as LSD, the line segments smaller than a certain length threshold is not used (the threshold value in this algorithm is set to 20 pixels), so some line segments that are compressed to be shorter are discarded.

- (3) The number of LJL structures grows with the number of extracted line segments: the more line segments are extracted, the more LJL structures can be constructed and the higher the chance of obtaining matched line segments. Although the rectified images generally yield fewer line segments and LJL structures than the original images, the total number, correct number, and accuracy rate of the final matched line segments are much higher. The original images offer many LJL structures as match candidates, but serious distortions make the descriptors of corresponding structures differ considerably. The resulting large distances between descriptor vectors make matching harder and raise the mismatch rate.

- (4)

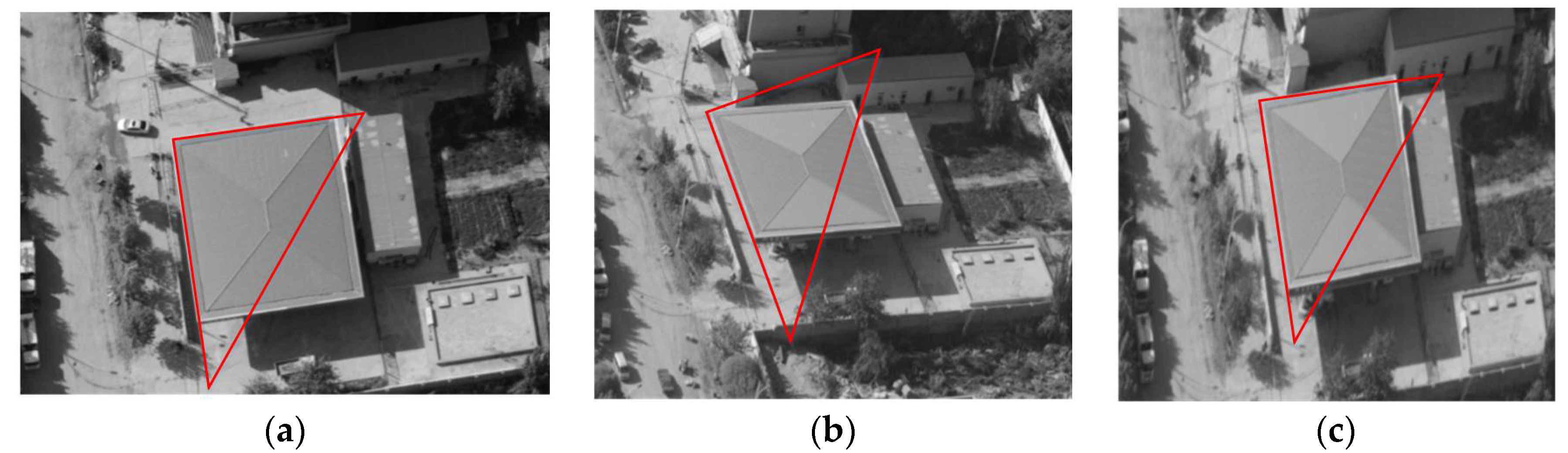

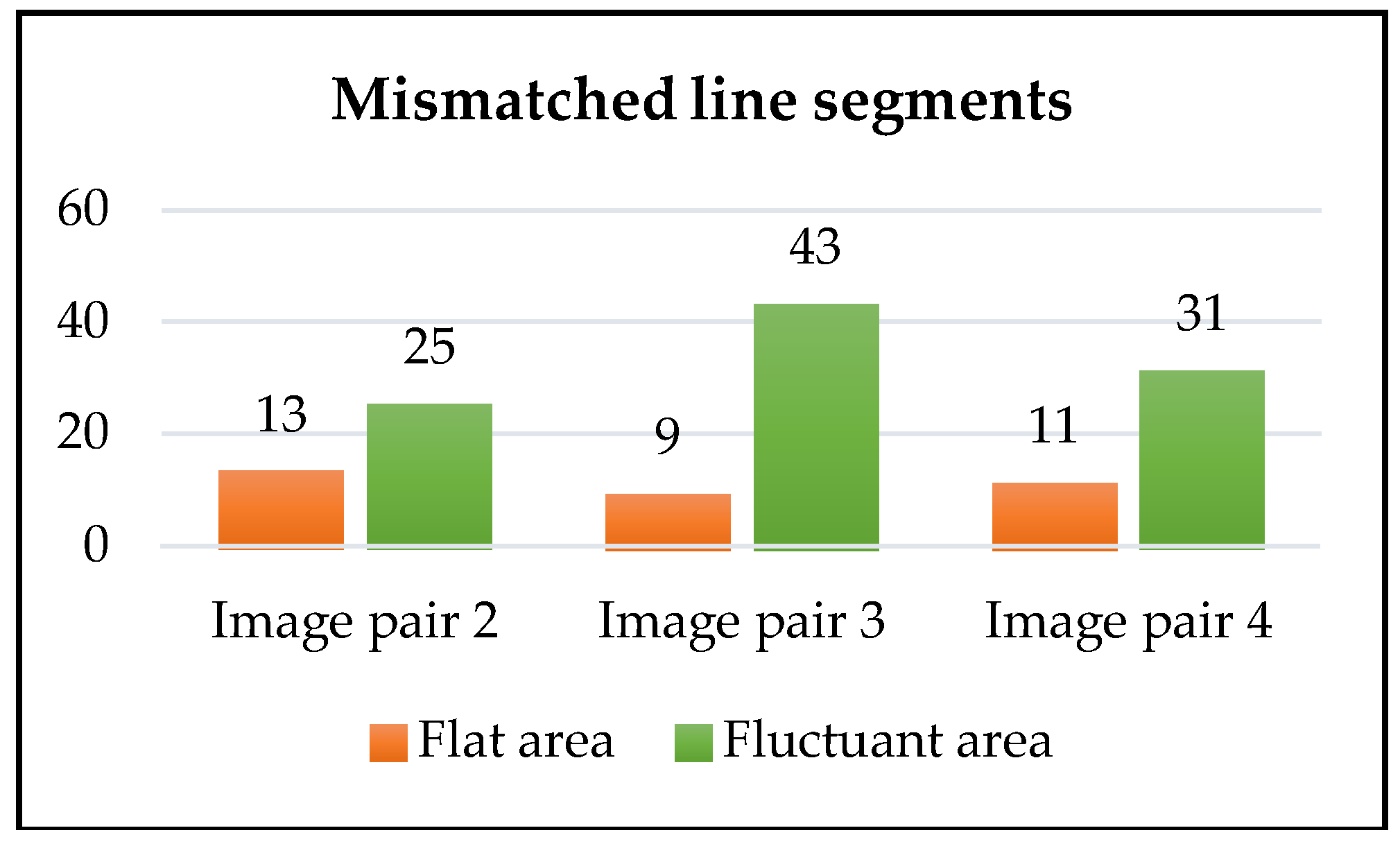

- Comparing results of the first and second image pairs, it is found that both of these image pairs are down-looking and back-looking; however, for the first image pair, the correct matching rate is 62.9% under LJL processing, and 79.1% under Perspective–LJL; this is lower than the rate of 78.3% and 93.2% for the second image pair. Meanwhile, for the increase in the number of matched lines (both total number and the correct number), the effect of the second image pair is significantly better than that of the first image pair. In other words, the matching effect of the first image pair and the improving effect of Perspective–LJL on the first image pair is weaker than those observed in the second image pair. By observing the buildings’ distribution and topography in these two surveying areas, it can be seen that the elevation difference of buildings in the first image pair is large. There are many spatial planes with obvious differences, and occlusions are more serious. In the second image pair, most buildings are flatly distributed, and there are uniform deformations. It is appropriate to use a plane to fit the surfaces of these buildings, and the occlusions are not obvious. When the perspective transformation model is used to rectify buildings to a new spatial plane, the deformations can be well rectified.

- (5) Compared with the first and second image pairs, the Perspective–LJL method has a more pronounced effect on the extra-wide-baseline images of the third and fourth pairs, where the number of correct matches rises from 0 and 2 to 345 and 332, respectively. This supports the effectiveness of the proposed method.

- (6) With the Perspective–LJL method, the matching accuracy rates improve to 79.1%, 93.2%, 86.9%, and 88.8% for the four image pairs, respectively; however, a gap remains to the 97% accuracy achieved on the short-baseline images. This indicates that the perspective transformation model eliminates only part of the perspective effects and cannot completely solve the problems caused by large viewing angles.
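The observations above involve three geometric steps: warping into the rectified image space, discarding segments shorter than the 20-pixel threshold, and back-projecting matched segments to the original image. The sketch below illustrates them with a plain 3×3 perspective matrix. The matrix `H`, the sample segments, and all function names are hypothetical. In particular, `H` is not derived from POS rotation data as in the paper, and the paper extracts segments directly on the rectified image, so warping endpoints here only illustrates how rectification can compress a segment below the threshold.

```python
import math

MIN_LENGTH_PX = 20.0  # length threshold quoted in the text


def apply_homography(H, point):
    """Map a pixel through a 3x3 perspective matrix using homogeneous coordinates."""
    x, y = point
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return (u / w, v / w)


def invert_3x3(H):
    """Invert a 3x3 matrix with the adjugate (cofactor) formula."""
    (a, b, c), (d, e, f), (g, h, i) = H
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("matrix is singular")
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


def warp_segment(H, segment):
    """Warp both endpoints of a line segment."""
    return (apply_homography(H, segment[0]), apply_homography(H, segment[1]))


def segment_length(segment):
    (x1, y1), (x2, y2) = segment
    return math.hypot(x2 - x1, y2 - y1)


# Hypothetical perspective matrix: the scale shrinks as the row coordinate y grows.
H = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.001, 1.0],
]

# Hypothetical segments in the original image: 50 px and 30 px long.
original_segments = [
    ((100.0, 100.0), (150.0, 100.0)),
    ((100.0, 1000.0), (130.0, 1000.0)),
]

# Step 1: rectify. Step 2: drop segments compressed below the threshold.
rectified = [warp_segment(H, s) for s in original_segments]
kept = [s for s in rectified if segment_length(s) >= MIN_LENGTH_PX]

# Step 3: back-project the surviving segments with the inverse matrix.
H_inv = invert_3x3(H)
back_projected = [warp_segment(H_inv, s) for s in kept]

for seg in back_projected:
    print(seg)
```

In this toy setup the second segment is 30 pixels long in the original image but shrinks to 15 pixels after warping, so it falls below the threshold and is dropped. The first segment survives and is recovered at its original endpoints by the inverse matrix.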

5. Discussion

5.1. The Effect of Building Distribution on Line Matching

5.2. Time Cost Analysis of the Proposed Method

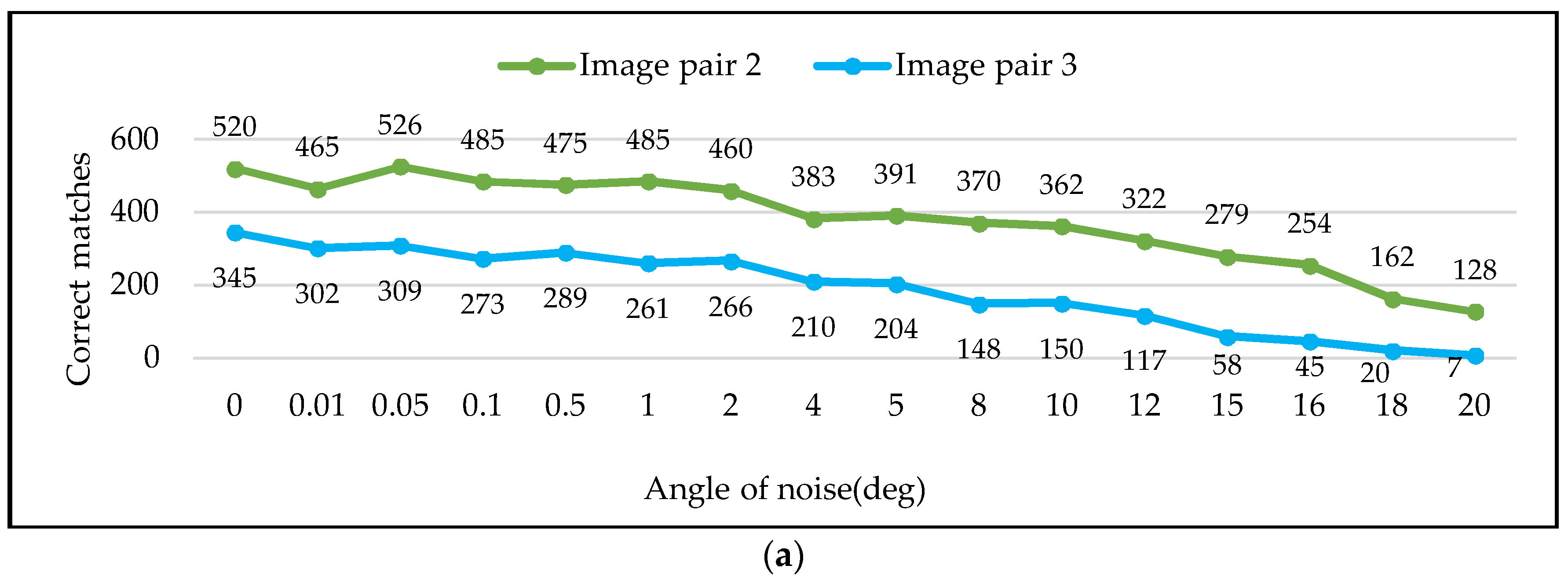

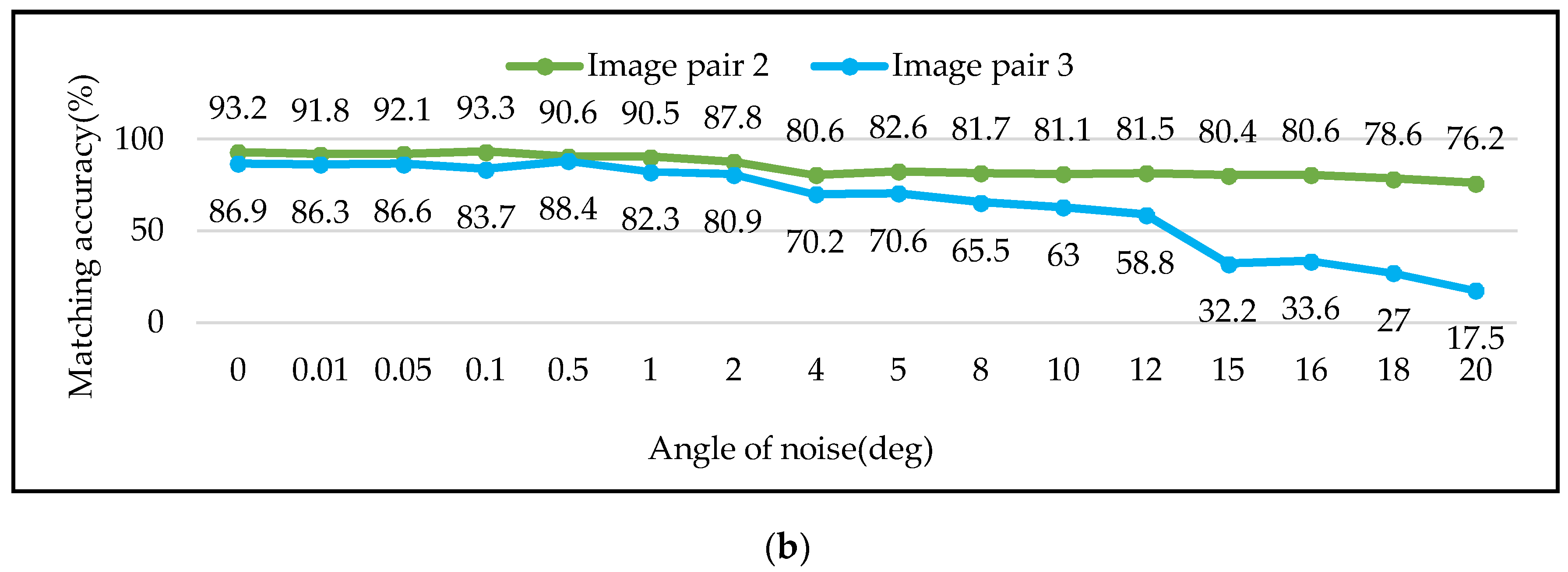

5.3. Matching Outcomes Affected by Different POS Accuracy

5.4. Applicability of Perspective Transformation Model to Other Line Matching Methods

6. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest


| Conventional Images | Extracted Line Segments | Line-Juncture-Line (LJL) Structures | Matched Line Segments (Correct/Total/Accuracy) | Time (s) |
|---|---|---|---|---|
| Image pair | 465, 499 | 375, 366 | 258/266/97.0% | 4.3 |

| Data | View | Resolution (pixels) | Pixel Size (µm) | Focal Length (mm) |
|---|---|---|---|---|
| Image pair 1 | Down-looking | 1326 × 1988 | 4.52 | 35.0 |
| | Back-looking | 1988 × 1326 | 4.52 | 50.0 |
| Image pair 2 | Down-looking | 2817 × 1795 | 6.8 | 47.2 |
| | Back-looking | 2184 × 1620 | 6.8 | 80.2 |
| Image pair 3 | Left-looking | 2622 × 1944 | 6.8 | 80.1 |
| | Forward-looking | 2571 × 1916 | 6.8 | 80.1 |
| Image pair 4 | Left-looking | 2622 × 1944 | 6.8 | 80.1 |
| | Right-looking | 2613 × 1933 | 6.8 | 80.1 |

| Data | Method | Extracted Line Segments | Constructed LJL Structures | Matched Line Segments (Correct/Total/Accuracy) |
|---|---|---|---|---|
| Image pair 1 | LJL | 1459, 1459 | 1323, 1251 | 117/186/62.9% |
| | Perspective–LJL | 1369, 1369 | 1274, 1121 | 238/301/79.1% |
| Image pair 2 | LJL | 2795, 2310 | 2800, 1912 | 130/166/78.3% |
| | Perspective–LJL | 2640, 2143 | 2636, 2025 | 520/558/93.2% |
| Image pair 3 | LJL | 2825, 2570 | 3221, 2046 | 0/14/0% |
| | Perspective–LJL | 2461, 2138 | 2877, 1760 | 345/397/86.9% |
| Image pair 4 | LJL | 2825, 2879 | 3221, 3196 | 2/15/13.3% |
| | Perspective–LJL | 2461, 2779 | 2877, 3038 | 332/374/88.8% |

| Time Cost (s) | Image Pair 1 | Image Pair 2 | Image Pair 3 | Image Pair 4 |
|---|---|---|---|---|
| LJL | 12.3 | 18.0 | 20.5 | 24.8 |
| Perspective–LJL | 12.4 | 19.5 | 19.8 | 24.6 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wang, Q.; Zhao, H.; Zhang, Z.; Cui, X.; Ullah, S.; Sun, S.; Liu, F. Line Matching Based on Viewpoint-Invariance for Stereo Wide-Baseline Aerial Images. Appl. Sci. 2018, 8, 938. https://doi.org/10.3390/app8060938
