Smart Grid Testing Management Platform (SGTMP)

Abstract

1. Introduction

2. Background

2.1. Smart Grid (SG)

2.2. Smart Grid Testing Requirements

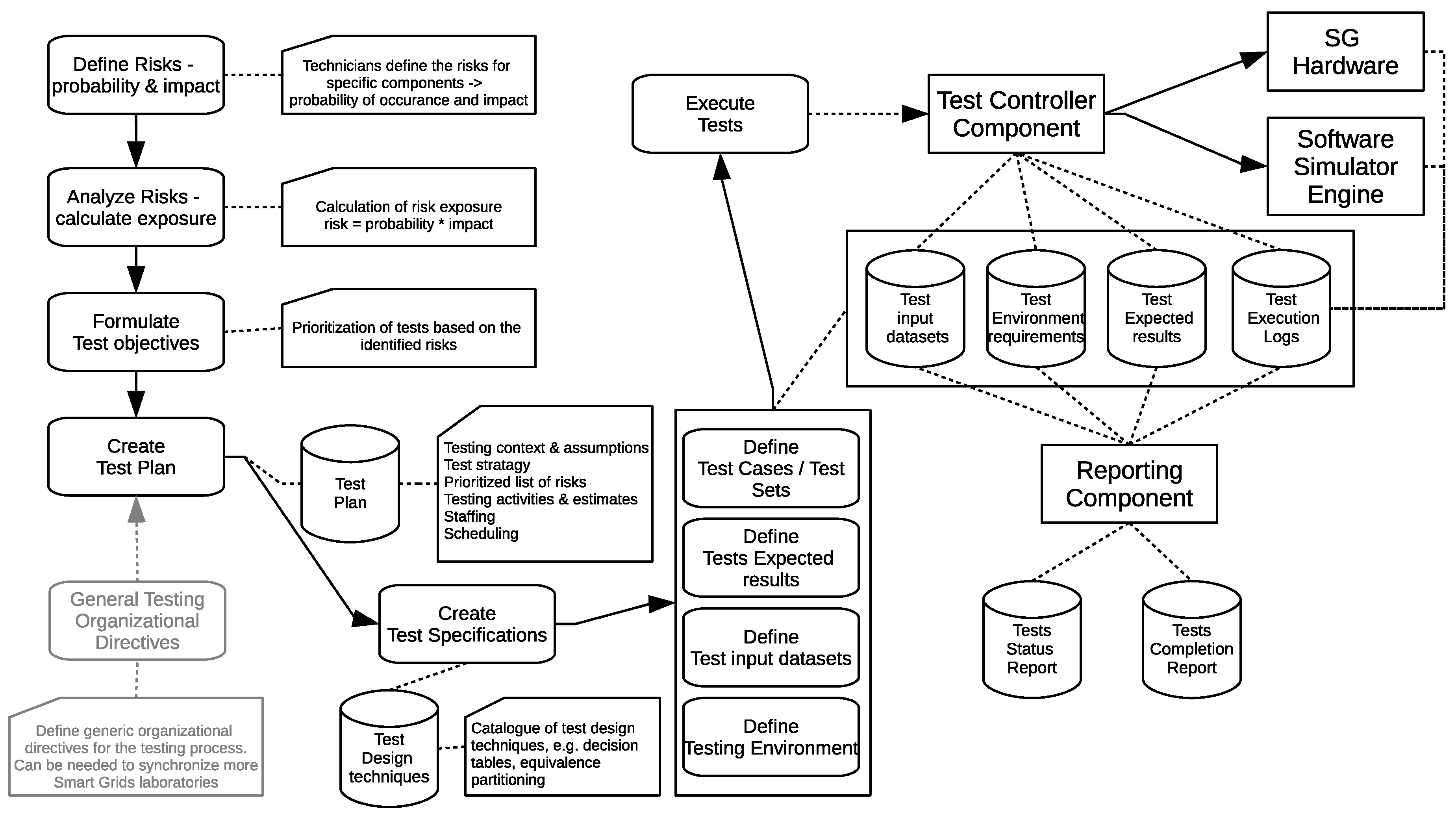

2.3. The ISO/IEC/IEEE 29119 Testing Process

2.4. SG Simulations and Co-Simulations

2.5. Related Works

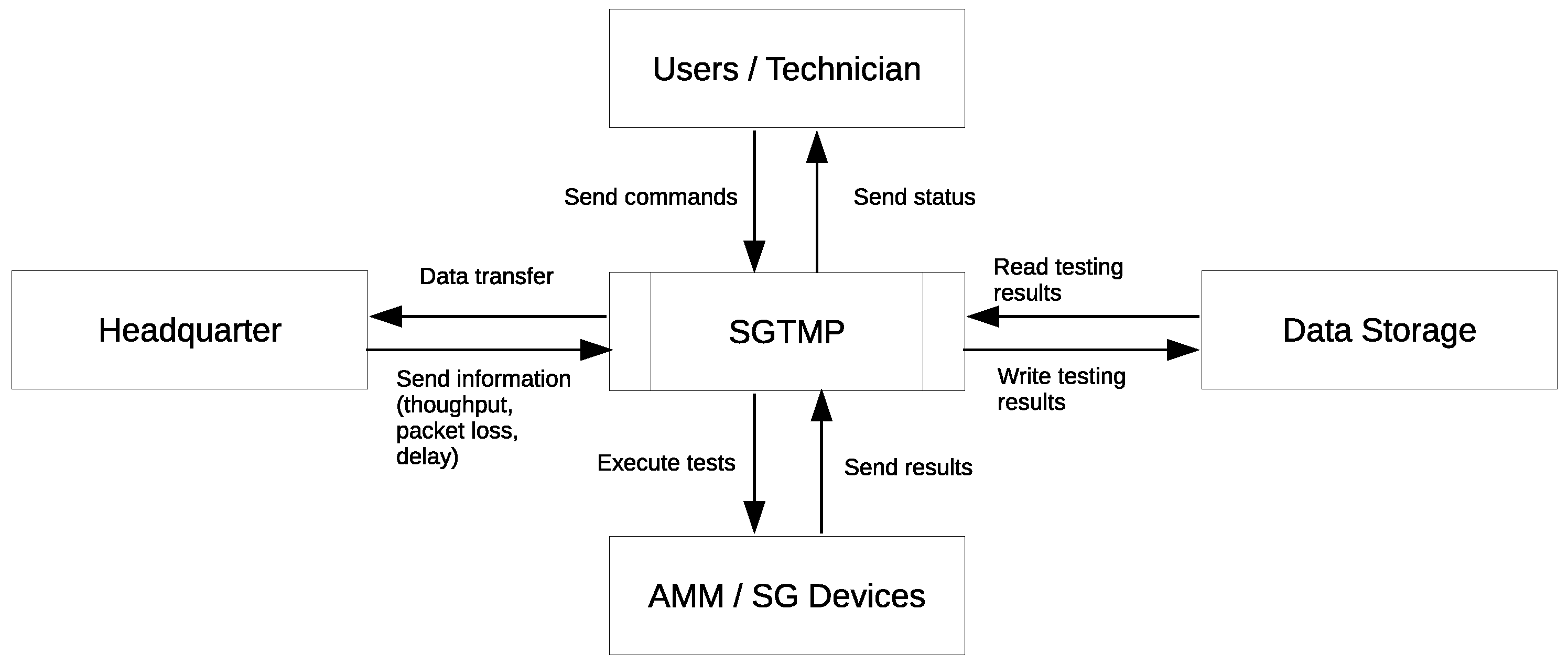

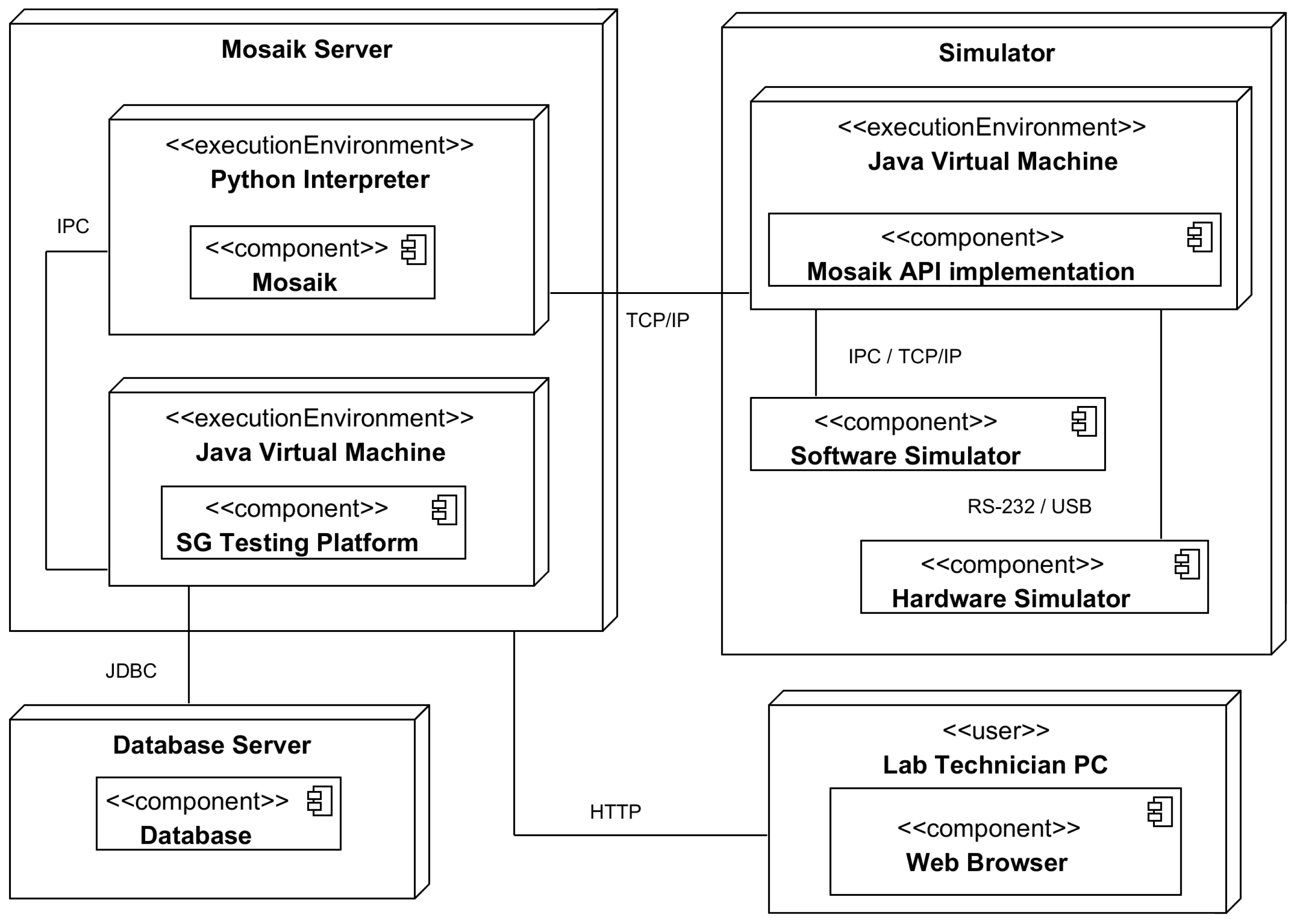

3. Implementing SGTMP

3.1. Assumptions

- A test can involve a single component or several interacting components, each of which can be either a real hardware device or a software simulator. In all cases, SGTMP allows the different simulators to interact, provided that the necessary interfacing code has been implemented (see Section 3.6). One aim of SGTMP is to make this process as simple as possible; however, it cannot be fully automated without sacrificing flexibility.

- The main expectation is that SGTMP can be used to simulate various scenarios to improve decision-making (e.g., analyzing results from thousands of smart meters running concurrently), mainly for devices of Types A, B, and C (see Section 2.2). SGTMP was not designed as a platform for testing cyber-attacks (intruder devices, Type D, Section 2.2), as this would require different design constraints. Nevertheless, other network communication-based simulators (e.g., OMNeT++ [46,47]) could potentially be integrated into SGTMP, although such integration was not among its original design goals.
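The first assumption is that real devices and software simulators can be mixed once interfacing code exists for each. The minimal Python sketch below illustrates that idea. It assumes every component, real or simulated, sits behind one common step-based interface, loosely in the spirit of step-driven co-simulation frameworks such as mosaik. All names (`GridComponent`, `SimulatedPanel`, `HardwareMeter`, `run_test`) are hypothetical and do not reproduce SGTMP's actual interfacing code. The lambda passed as `read_device` is a stand-in for a real device driver.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict, List


class GridComponent(ABC):
    """Hypothetical common interface: a real device or a software simulator
    is driven through the same step() call, so tests can mix both."""

    def __init__(self, name: str) -> None:
        self.name = name

    @abstractmethod
    def step(self, time: int, inputs: Dict[str, float]) -> Dict[str, float]:
        """Advance to `time`, consume `inputs`, and return the outputs."""


class SimulatedPanel(GridComponent):
    """Software simulator: replays a fixed production profile (in W)."""

    def __init__(self, name: str, profile: List[float]) -> None:
        super().__init__(name)
        self.profile = profile

    def step(self, time: int, inputs: Dict[str, float]) -> Dict[str, float]:
        return {"power_w": self.profile[time % len(self.profile)]}


class HardwareMeter(GridComponent):
    """Interfacing code for a real smart meter; `read_device` stands in for
    the device-specific driver (e.g., a serial or network read)."""

    def __init__(self, name: str, read_device: Callable[[], float]) -> None:
        super().__init__(name)
        self.read_device = read_device

    def step(self, time: int, inputs: Dict[str, float]) -> Dict[str, float]:
        consumption = self.read_device()
        # Net exchange with the grid: positive = drawn, negative = fed back.
        return {"net_w": consumption - inputs.get("power_w", 0.0)}


def run_test(source: GridComponent, meter: GridComponent,
             steps: int) -> List[Dict[str, float]]:
    """Couple two components by passing the source's outputs to the meter."""
    results = []
    for t in range(steps):
        results.append(meter.step(t, source.step(t, {})))
    return results


panel = SimulatedPanel("pv-1", [0.0, 500.0, 1200.0])
meter = HardwareMeter("sm-1", read_device=lambda: 800.0)  # fake device reading
print(run_test(panel, meter, 3))
# [{'net_w': 800.0}, {'net_w': 300.0}, {'net_w': -400.0}]
```

Because the test loop only depends on `step()`, the simulated panel could later be swapped for a proxy to a physical panel without changing `run_test`. That is the kind of decoupling the interfacing-code precondition is meant to enable.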

3.2. Context

3.3. Platform Architecture

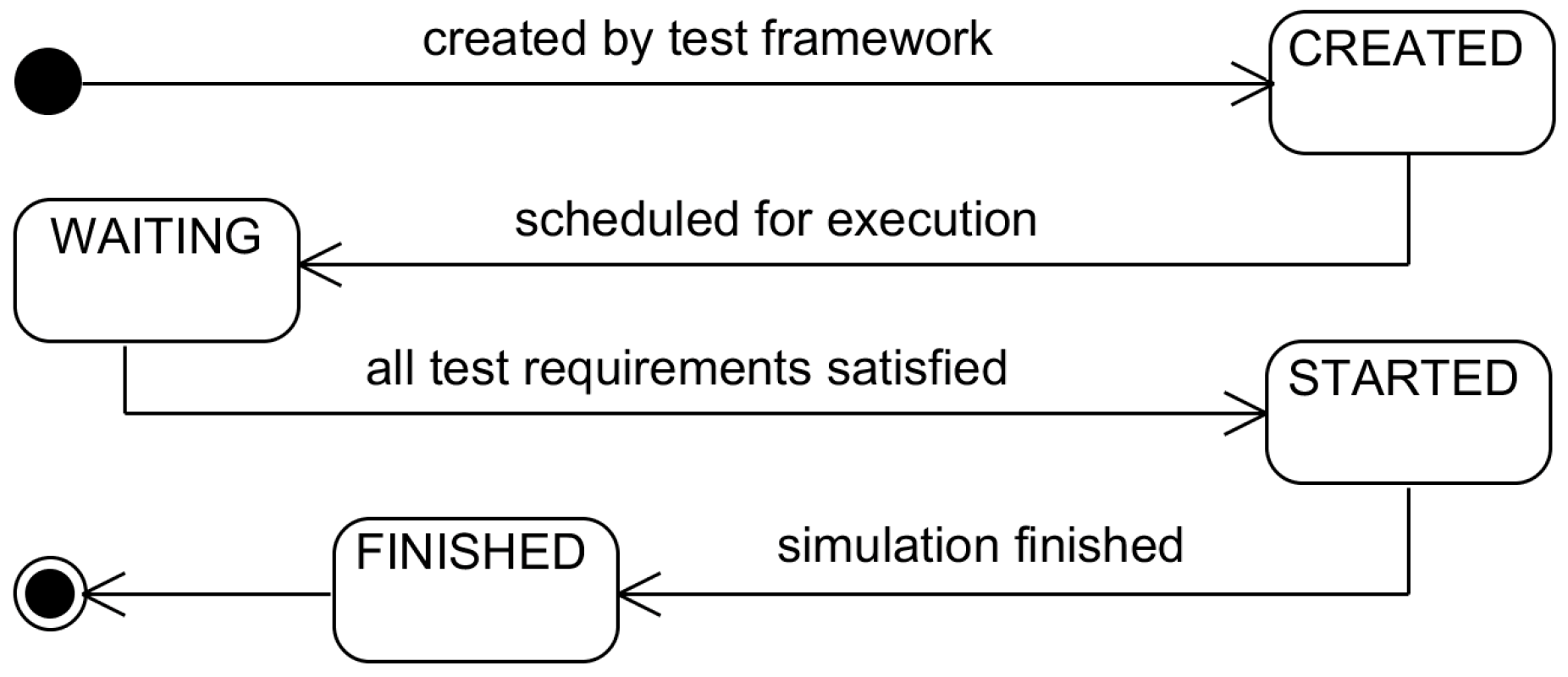

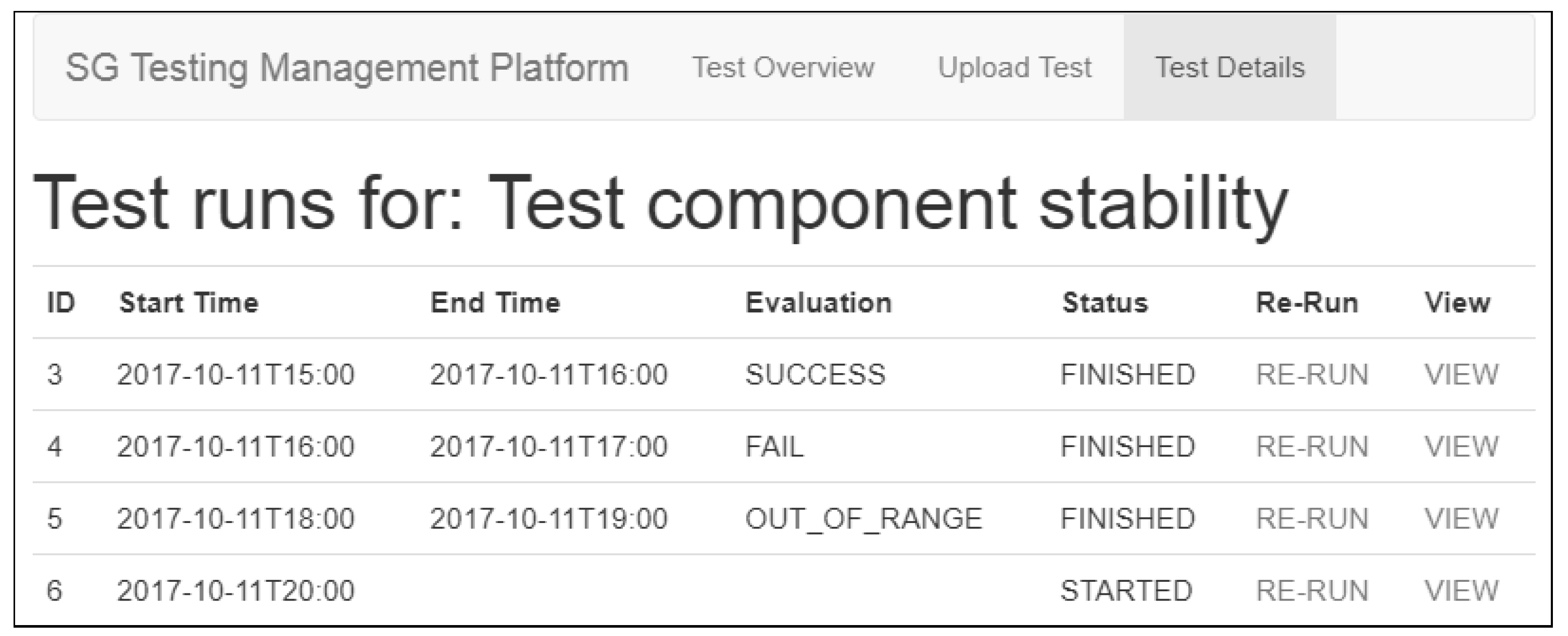

3.4. Test Execution and Result Processing

3.4.1. The Test Executor

3.4.2. The Test Run Scheduler

- Maximize test runs: This strategy tries to maximize the number of test runs executed in parallel by searching for the best combination of simulator utilization and number of test runs. If a solution is found, the largest possible number of test runs is executed at once, at the cost of increased selection time. Note, however, that the extensiveness of the test results also depends on how the test cases were generated [49].

- Maximize simulator usage: This strategy complements the “Maximize test runs” strategy. Here, the number of simulators (resources) used per test is maximized. The rationale is that tests involving more simulators may be more important (e.g., integration tests as opposed to single-simulator tests).

- OS scheduling-based: This strategy applies well-known operating system (OS) scheduling algorithms to select the test runs to execute. The simulation requirements of each test run and the simulators currently in use can be taken into account to ensure fairness and optimize resource usage. All test runs queued for execution are considered.

- Heuristics-based: This strategy prioritizes test runs using metrics other than those described above, such as risk or business importance. One such metric is the Feature priority: test runs for the highest-priority features are selected first, and a secondary selection strategy then picks a test run whose required resources are all currently available. Other metrics, such as maximizing the diagnostic information available per test [50], can also be considered.
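The four selection strategies can be contrasted with a small Python sketch. For illustration only, it assumes that each queued test run declares the set of simulators it needs and that a simulator serves one test run at a time. All names (`QueuedRun`, `maximize_test_runs`, `fifo`, and so on) are hypothetical and do not reproduce SGTMP's scheduler. First-come, first-served stands in for the OS scheduling family, and the Feature priority stands in for the heuristic metric.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import Callable, FrozenSet, Iterator, List, Sequence, Tuple


@dataclass(frozen=True)
class QueuedRun:
    name: str
    simulators: FrozenSet[str]  # simulators the run needs, each used exclusively
    priority: int = 0           # e.g., Feature priority (higher = more important)


Batch = Tuple[QueuedRun, ...]


def feasible(batch: Sequence[QueuedRun], available: FrozenSet[str]) -> bool:
    """True if every run's simulators are available and no two runs share one."""
    used = set()
    for run in batch:
        if not run.simulators <= available or used & run.simulators:
            return False
        used |= run.simulators
    return True


def candidate_batches(queue: Sequence[QueuedRun],
                      available: FrozenSet[str]) -> Iterator[Batch]:
    """Enumerate every feasible subset of the queue (exponential in its length)."""
    for k in range(len(queue), 0, -1):
        for batch in combinations(queue, k):
            if feasible(batch, available):
                yield batch


def sims_used(batch: Batch) -> int:
    return sum(len(run.simulators) for run in batch)


def maximize_test_runs(queue, available) -> Batch:
    # Primary goal: most runs in parallel; tie-break on simulator utilization.
    return max(candidate_batches(queue, available),
               key=lambda b: (len(b), sims_used(b)), default=())


def maximize_simulator_usage(queue, available) -> Batch:
    # Primary goal: most simulators in use; tie-break on number of runs.
    return max(candidate_batches(queue, available),
               key=lambda b: (sims_used(b), len(b)), default=())


def fifo(queue, available) -> Batch:
    """OS-style first-come, first-served: admit runs in queue order while they fit."""
    batch: List[QueuedRun] = []
    for run in queue:
        if feasible(batch + [run], available):
            batch.append(run)
    return tuple(batch)


def priority_based(queue, available,
                   secondary: Callable[..., Batch] = fifo) -> Batch:
    """Heuristic: order by Feature priority, then let a secondary strategy pick
    runs whose simulators are all currently available."""
    ordered = sorted(queue, key=lambda run: -run.priority)  # stable for ties
    return secondary(ordered, available)
```

With a queue of three single-simulator runs plus one integration run that needs all of them, `maximize_test_runs` selects the three small runs, while `maximize_simulator_usage` selects the integration run. The exhaustive search in `candidate_batches` makes the selection-time cost of the first strategy concrete: it grows exponentially with queue length, so a practical scheduler would likely bound or approximate it.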

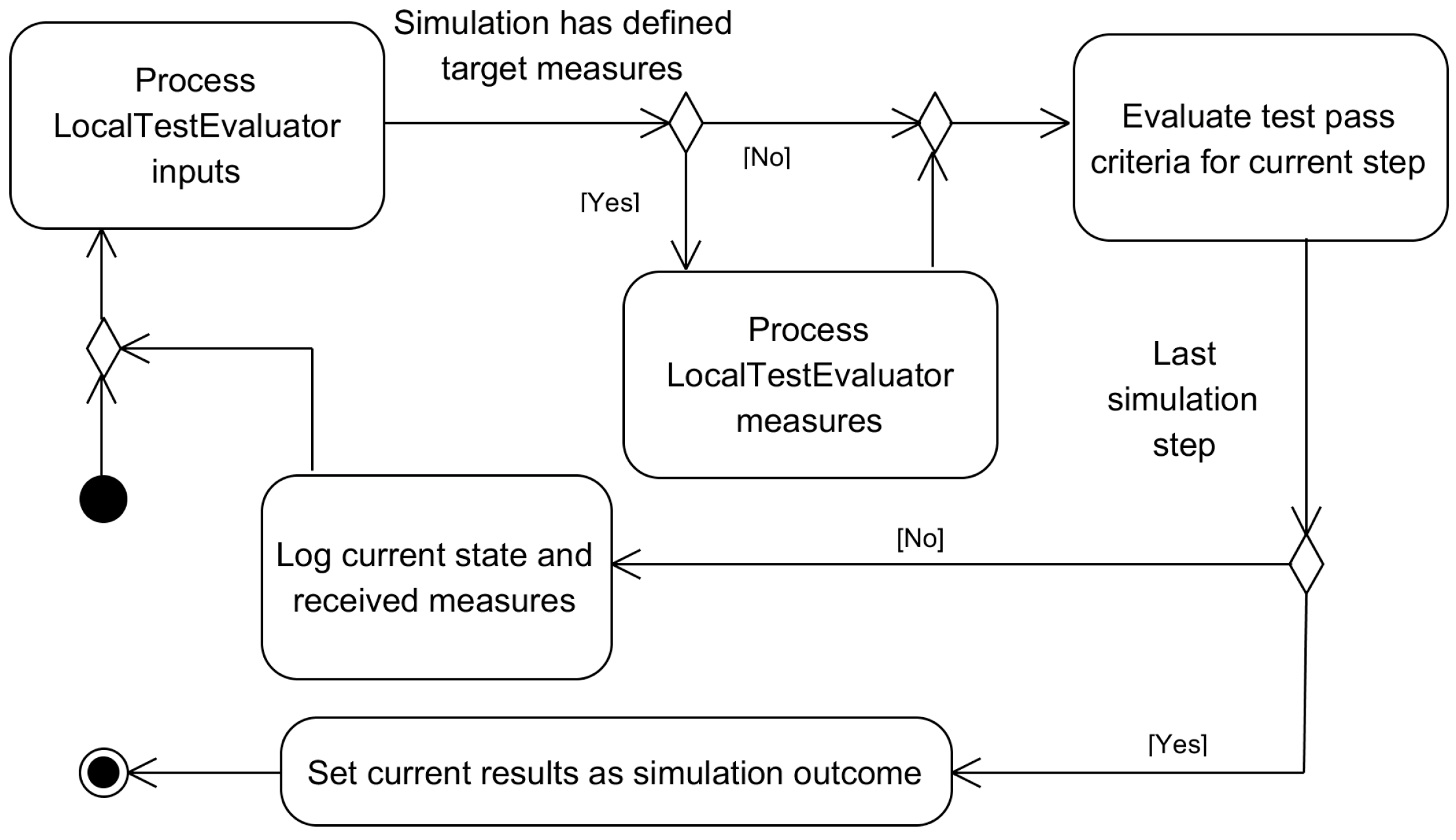

3.4.3. Local Test Evaluators

3.4.4. Global Test Evaluators

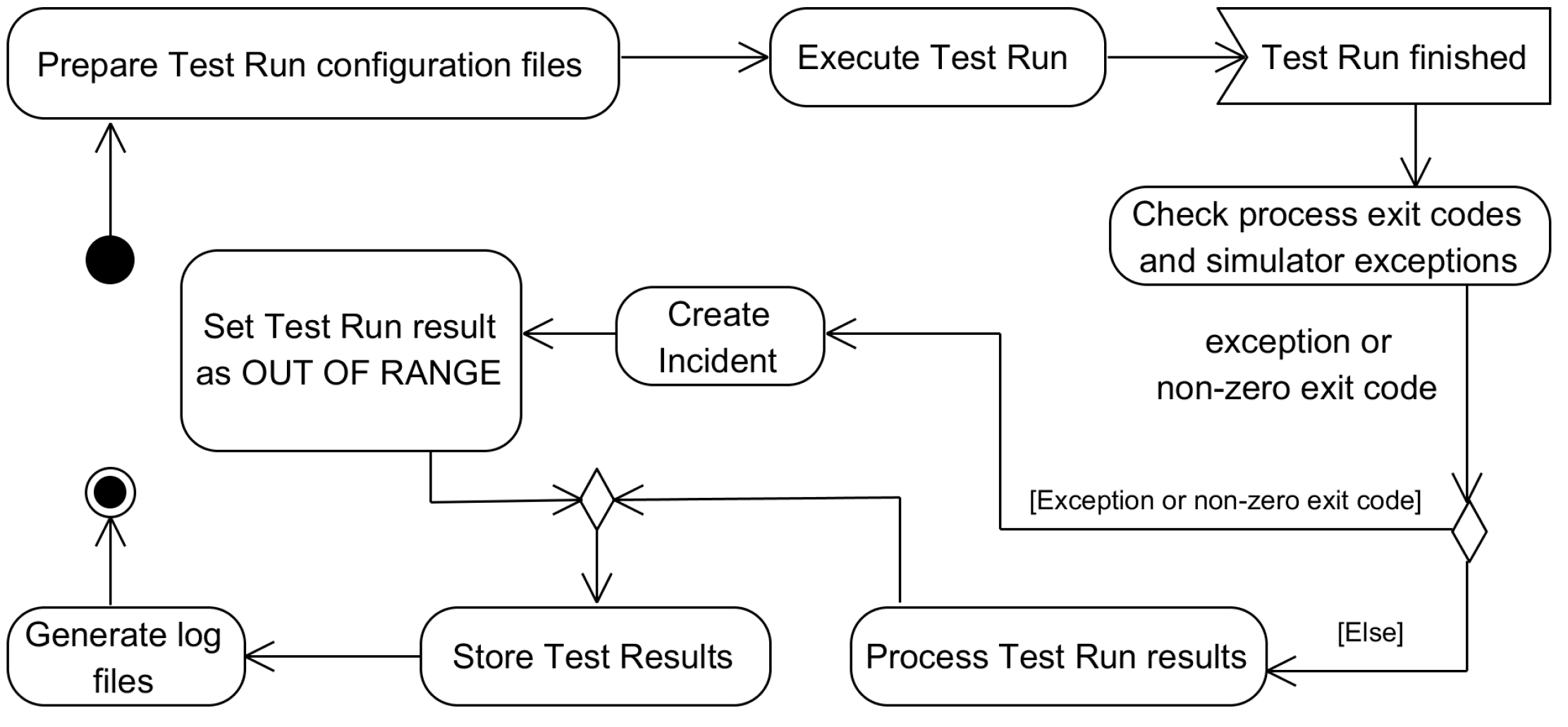

3.4.5. Test Result Processing

3.4.6. Boundary Value Testing

3.4.7. Logging

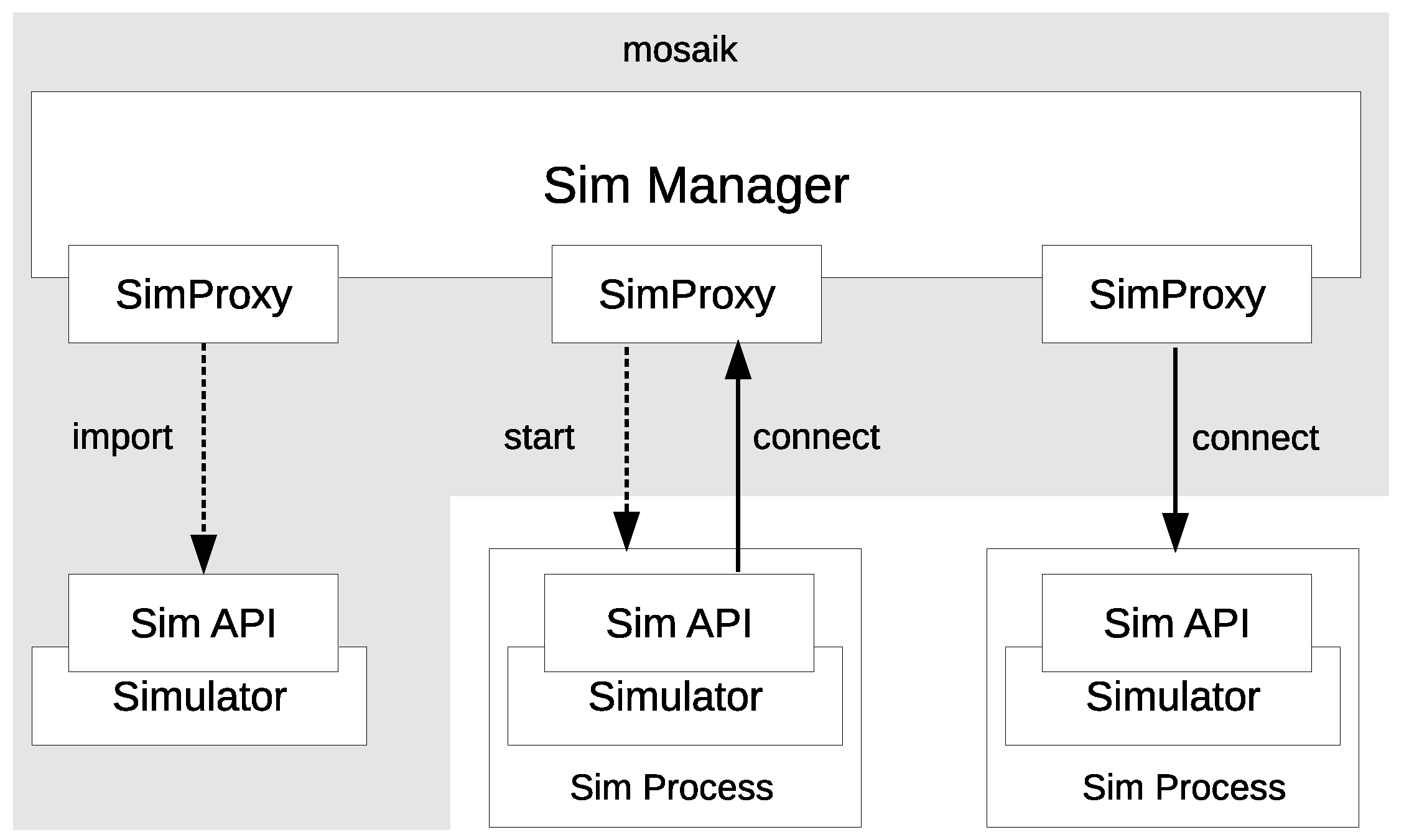

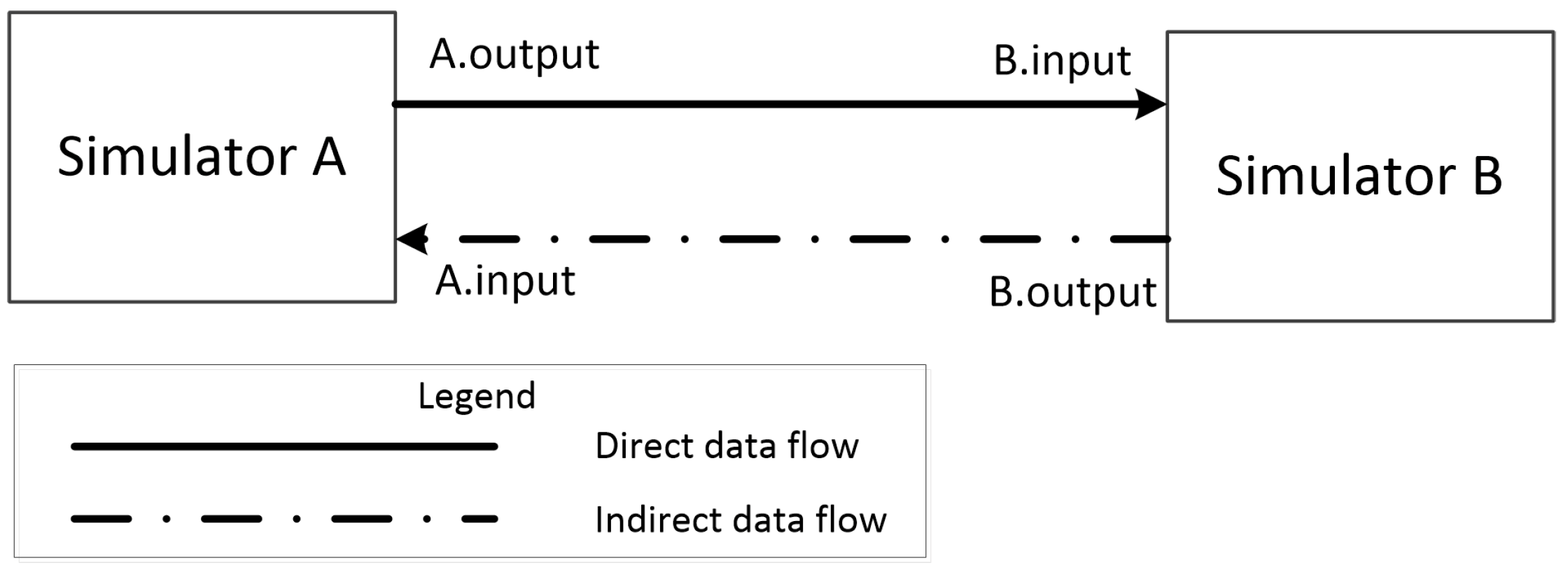

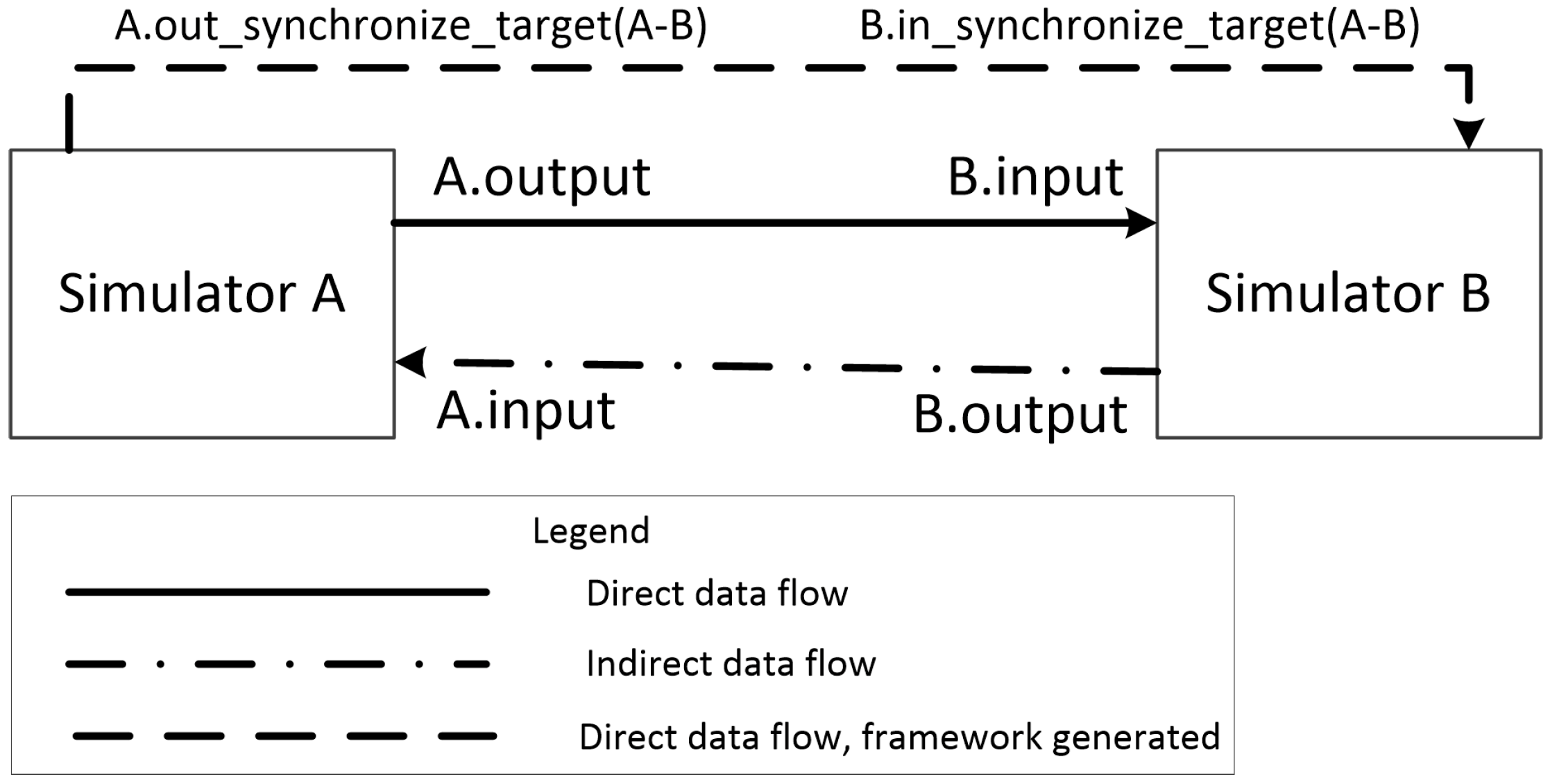

3.5. The Mosaik Co-Simulation Framework

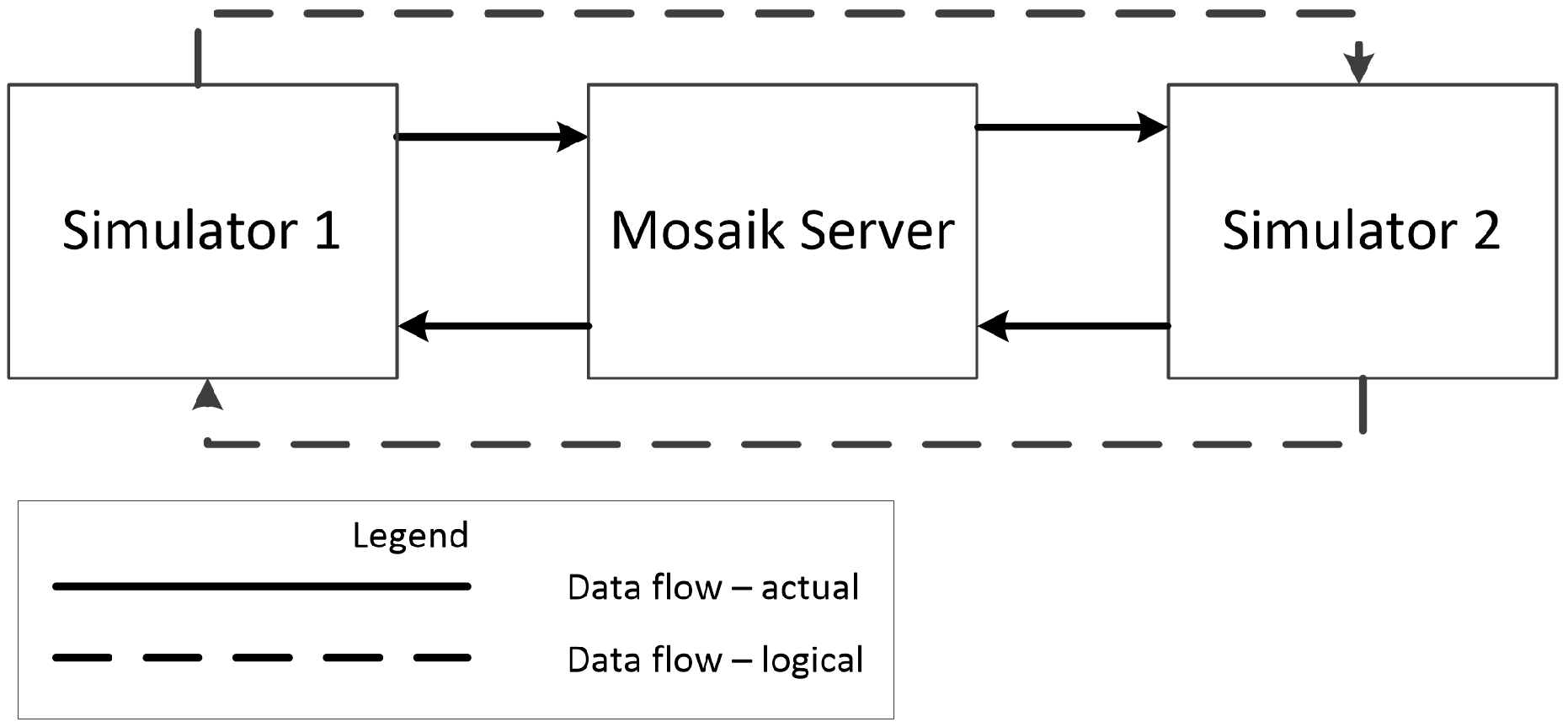

3.6. Java–Mosaik Interface

3.7. Configuration Generation

3.8. Enhanced Simulator Connection Support

3.9. Platform API

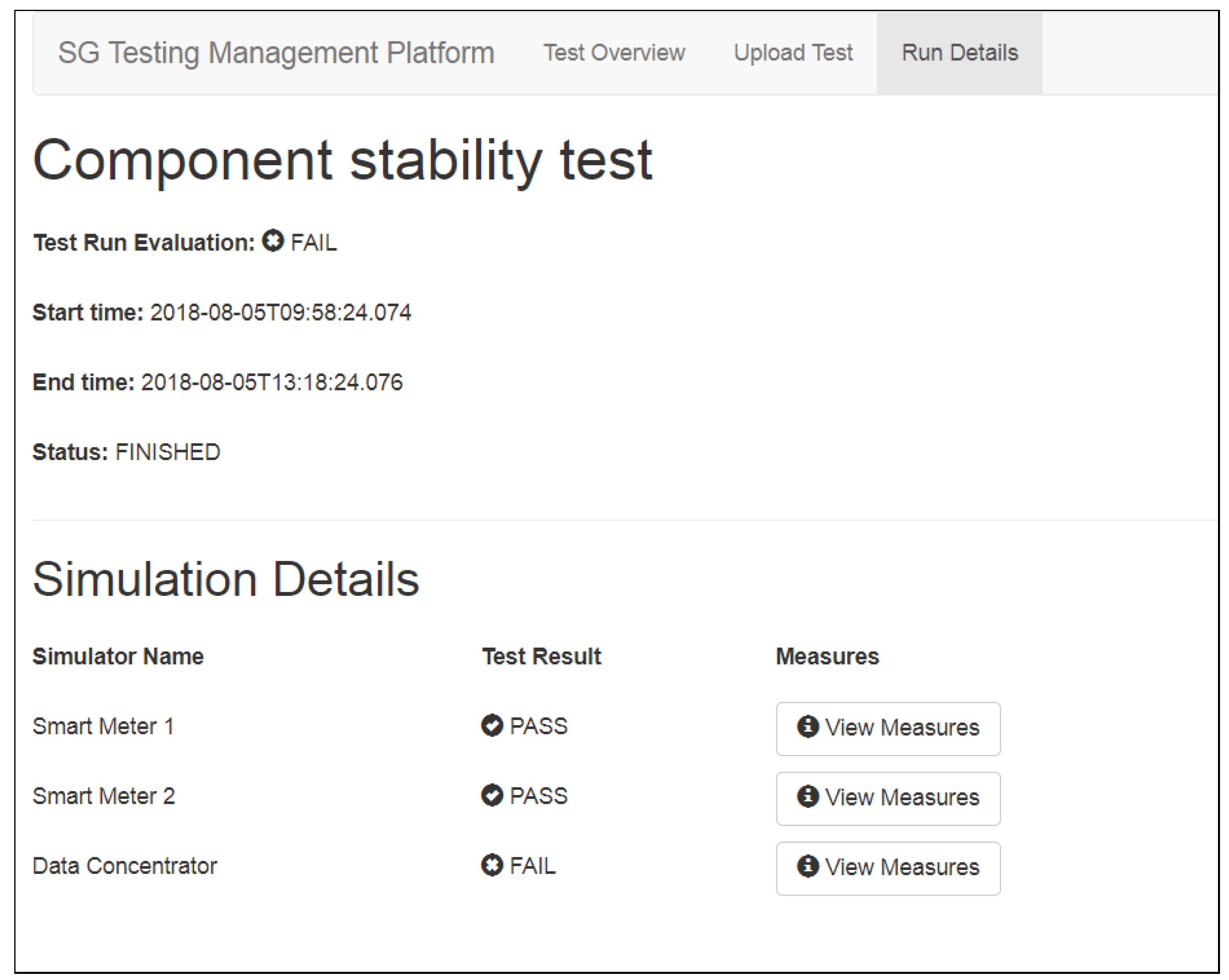

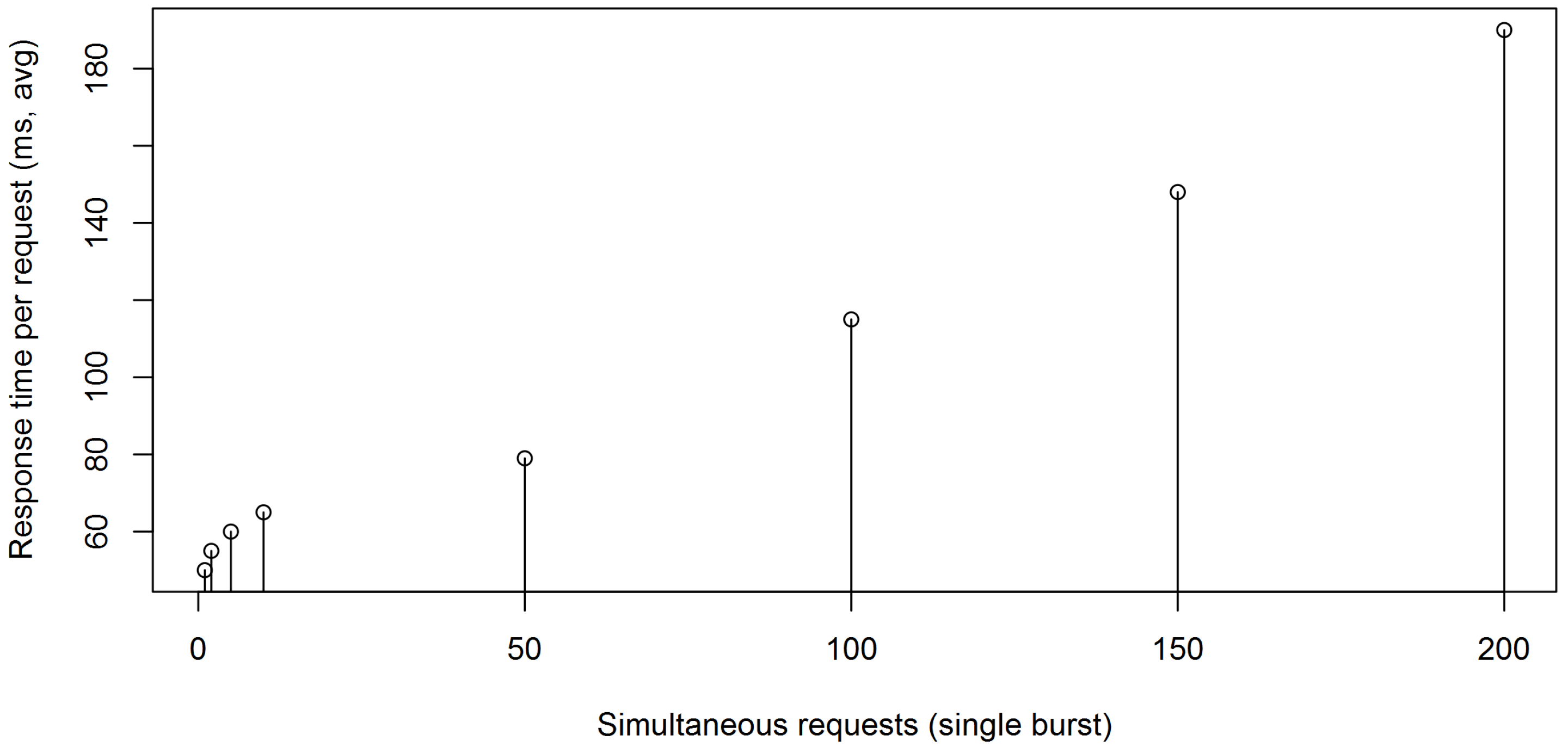

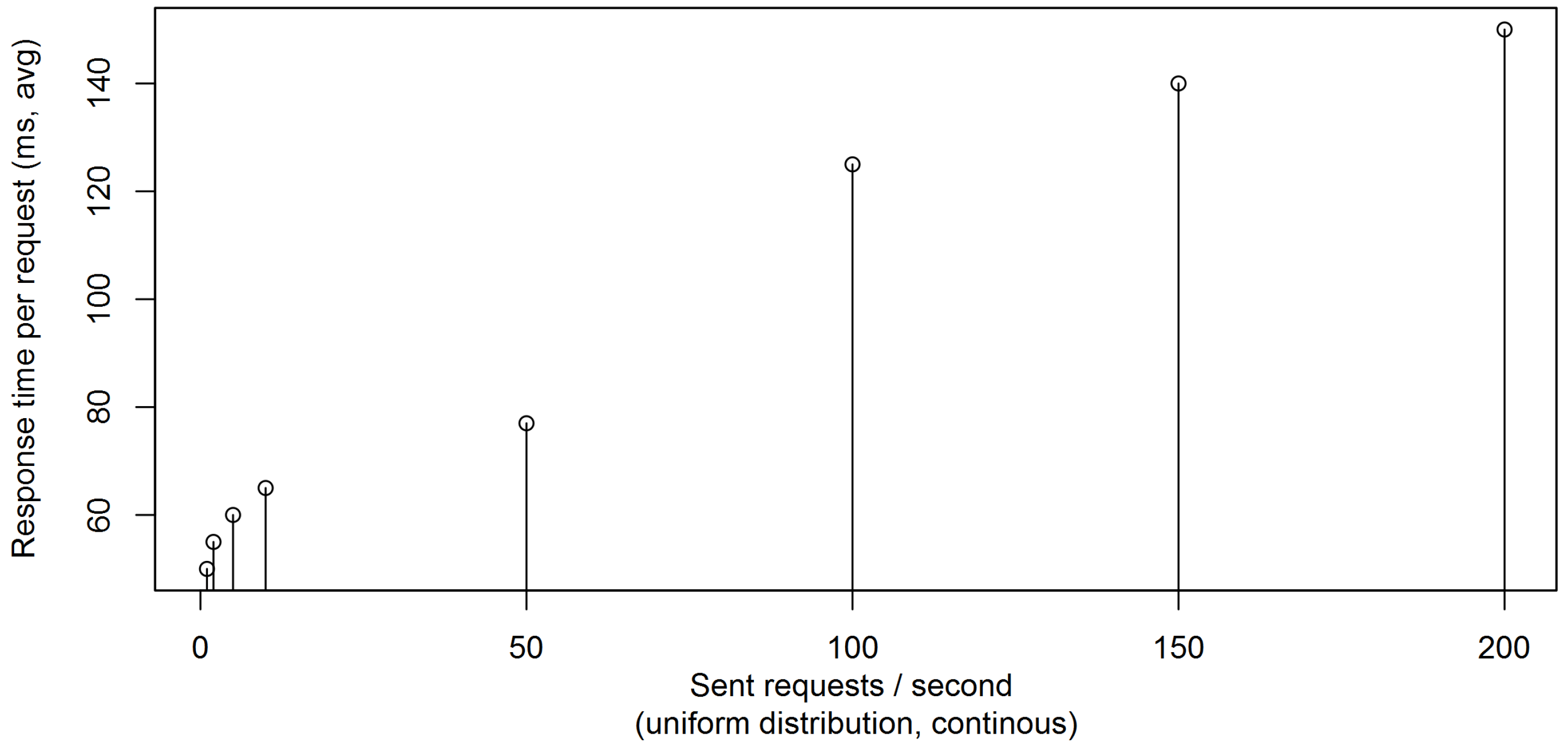

4. SGTMP Deployment Scenario—SG Component Stress Testing

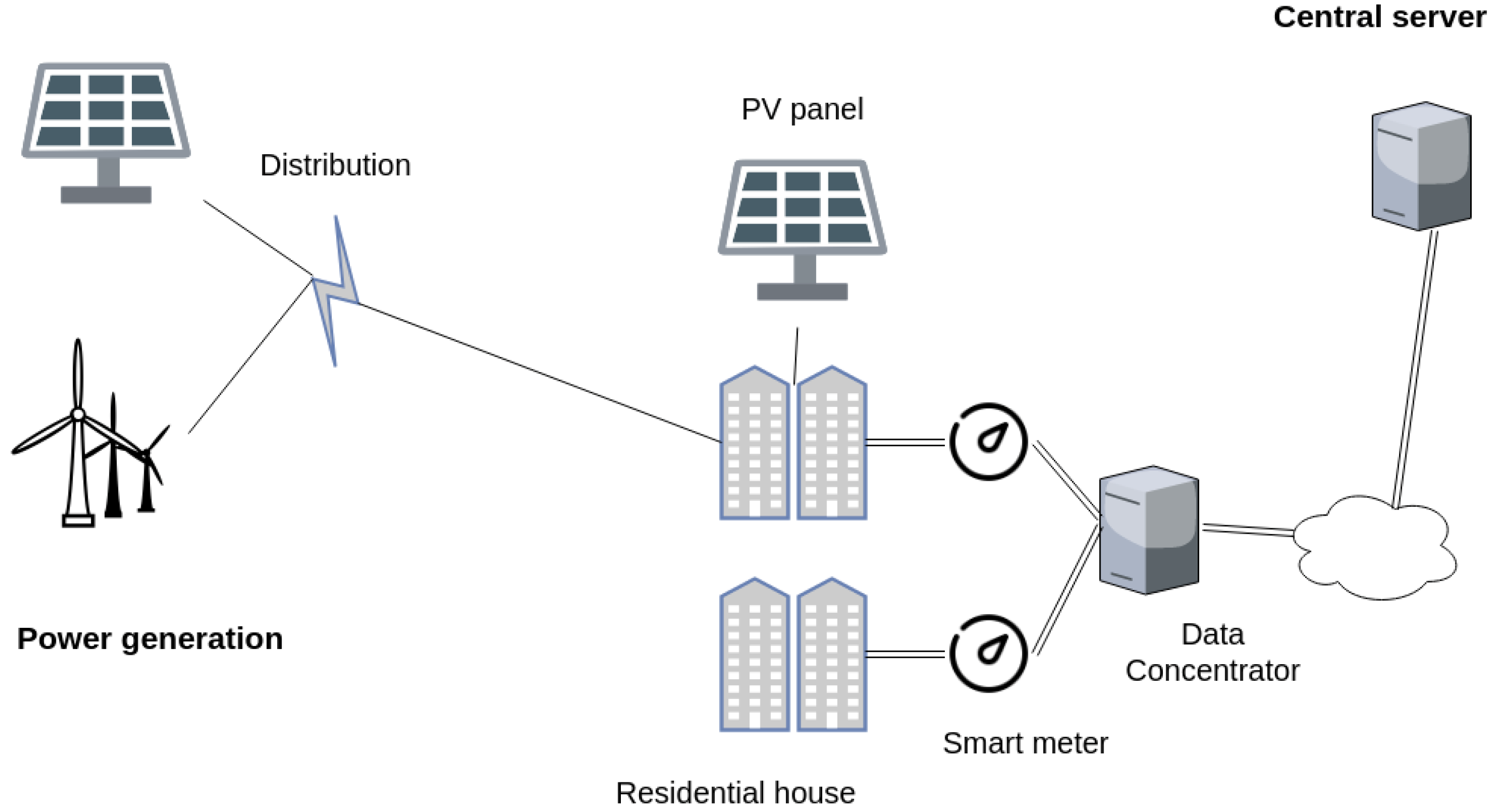

4.1. SG Topology

- Several energy sources generating electricity for the SG system. These can include photovoltaic panels, wind turbines, or power plants using non-renewable energy sources. Each of these energy sources can be deployed at full scale or virtualized, providing only a simulated energy production profile to other parts of the system. For example, this whole layer can be emulated with low-cost hardware devices such as Arduino boards, as described in our previous work, Schvarcbacher and Rossi [22], which allows a quick set-up for educational purposes.

- Electric distribution lines that collect the generated energy and transmit it to other parts of the tested network. Depending on the system setup, transmission losses can be simulated or observed at this stage.

- Several houses in one or more neighborhoods connected to the electric distribution lines. Each home must have an SM; some can optionally have photovoltaic panels mounted on their roofs. The energy generated by these panels can be used inside the house or sold back to the grid.

- Smart meter data concentrators (SMDCs) attached to the endpoints in each neighborhood. Their number depends on the specific data collection requirements of the energy distributor.

- The SG main server, which collects data from the SMDCs, runs aggregate analytics on them, and responds appropriately.
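The data path of this topology runs from the SMs through the SMDCs to the main server, and it can be sketched in Python. The sketch rests on assumed simplifications: energy sources and distribution lines (including losses) are not modelled, and each reading is a single kWh value per metering interval. All names (`House`, `Concentrator`, `main_server`) are hypothetical and are not taken from the SGTMP scenario.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class House:
    meter_id: str                   # each house has a smart meter (SM)
    consumption_kwh: float          # consumption in one metering interval
    pv_kwh: Optional[float] = None  # optional rooftop photovoltaic production

    def net_kwh(self) -> float:
        """Energy drawn from the grid; a negative value is sold back."""
        return self.consumption_kwh - (self.pv_kwh or 0.0)


@dataclass
class Concentrator:
    """Smart meter data concentrator (SMDC) for one neighborhood."""
    name: str
    houses: List[House] = field(default_factory=list)

    def collect(self) -> Dict[str, float]:
        """Gather the latest net reading from every SM in the neighborhood."""
        return {h.meter_id: h.net_kwh() for h in self.houses}


def main_server(concentrators: List[Concentrator]) -> Dict[str, float]:
    """Aggregate SMDC data into per-neighborhood and grid-wide net demand."""
    report = {c.name: sum(c.collect().values()) for c in concentrators}
    report["total"] = sum(report.values())
    return report


north = Concentrator("north", [House("sm-1", 4.0), House("sm-2", 3.0, pv_kwh=5.0)])
south = Concentrator("south", [House("sm-3", 6.0)])
print(main_server([north, south]))  # {'north': 2.0, 'south': 6.0, 'total': 8.0}
```

Scaling the number of `House` objects per `Concentrator` into the thousands gives a rough, purely in-memory analogue of the stress-testing setting. In SGTMP, these components would typically be separate simulators or hardware devices coordinated through the co-simulation framework.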

4.2. Test Execution

4.3. Simulation Results Overview

4.4. Other SGTMP Usage Scenarios

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| SG | Smart Grid |
| SGTMP | Smart Grid Testing Management Platform |
| SM | Smart Meter |
| SMDC | Smart Meter Data Concentrator |
| AMM | Advanced Metering Management |
| HIL | Hardware-In-the-Loop |
| REST | REpresentational State Transfer |
| HTTP | HyperText Transfer Protocol |
| GUI | Graphical User Interface |
| API | Application Programming Interface |
| IPC | Inter-Process Communication |

References

1. Farhangi, H. The path of the smart grid. IEEE Power Energy Mag. 2010, 8, 18–28.

2. Lopez, J.; Rubio, J.E.; Alcaraz, C. A Resilient Architecture for the Smart Grid. IEEE Trans. Ind. Inform. 2018, 14, 3745–3753.

3. Fang, X.; Misra, S.; Xue, G.; Yang, D. Smart grid—The new and improved power grid: A survey. IEEE Commun. Surv. Tutor. 2012, 14, 944–980.

4. Chren, S.; Rossi, B.; Pitner, T. Smart grids deployments within EU projects: The role of smart meters. In Proceedings of the 2016 IEEE Smart Cities Symposium Prague (SCSP), Prague, Czech Republic, 26–27 May 2016; pp. 1–5.

5. Yan, Y.; Qian, Y.; Sharif, H.; Tipper, D. A Survey on Smart Grid Communication Infrastructures: Motivations, Requirements and Challenges. IEEE Commun. Surv. Tutor. 2012, 15, 5–20.

6. Young, M. Software Testing and Analysis: Process, Principles, and Techniques; John Wiley & Sons: Hoboken, NJ, USA, 2008.

7. Možucha, J.; Rossi, B. Is Mutation Testing Ready to Be Adopted Industry-Wide? In Proceedings of the International Conference on Product-Focused Software Process Improvement, Trondheim, Norway, 22–24 November 2016; Springer: Berlin, Germany, 2016; pp. 217–232.

8. Rossi, B.; Russo, B.; Succi, G. Modelling failures occurrences of open source software with reliability growth. In Proceedings of the IFIP International Conference on Open Source Systems, Notre Dame, IN, USA, 30 May–2 June 2010; Springer: Berlin, Germany, 2010; pp. 268–280.

9. CEN-CENELEC-ETSI, Smart Grid Coordination Group. Available online: https://ec.europa.eu/energy/sites/ener/files/documents/xpert_group1_reference_architecture.pdf (accessed on 16 September 2018).

10. Chren, S.; Rossi, B.; Bühnova, B.; Pitner, T. Reliability data for smart grids: Where the real data can be found. In Proceedings of the 2018 Smart City Symposium Prague (SCSP), Prague, Czech Republic, 24–25 May 2018.

11. Palensky, P.; Widl, E.; Elsheikh, A. Simulating cyber-physical energy systems: Challenges, tools and methods. IEEE Trans. Syst. Man Cybern. Syst. 2014, 44, 318–326.

12. Khaitan, S.K.; McCalley, J.D. Design techniques and applications of cyberphysical systems: A survey. IEEE Syst. J. 2015, 9, 350–365.

13. Vogt, M.; Marten, F.; Braun, M. A survey and statistical analysis of smart grid co-simulations. Appl. Energy 2018, 222, 67–78.

14. Song, W.Z.; De, D.; Tan, S.; Das, S.K.; Tong, L. A wireless smart grid testbed in lab. IEEE Wirel. Commun. 2012, 19, 58–64.

15. Anderson, D.; Zhao, C.; Hauser, C.; Venkatasubramanian, V.; Bakken, D.; Bose, A. Intelligent Design Real-Time Simulation for Smart Grid Control and Communications Design. IEEE Power Energy Mag. 2012, 10, 49–57.

16. Tan, S.; Song, W.Z.; Dong, Q.; Tong, L. Score: Smart-grid common open research emulator. In Proceedings of the 2012 IEEE Third International Conference on Smart Grid Communications (SmartGridComm), Tainan, Taiwan, 5–8 November 2012; pp. 282–287.

17. Annor-Asante, M.; Pranggono, B. Development of smart grid testbed with low-cost hardware and software for cybersecurity research and education. Wirel. Pers. Commun. 2018, 101, 1357–1377.

18. Chassin, D.P.; Schneider, K.; Gerkensmeyer, C. GridLAB-D: An open-source power systems modeling and simulation environment. In Proceedings of the Transmission and Distribution Conference and Exposition, Chicago, IL, USA, 21–24 April 2008; pp. 1–5.

19. Steinbrink, C.; Schlögl, F.; Babazadeh, D.; Lehnhoff, S.; Rohjans, S.; Narayan, A. Future perspectives of co-simulation in the smart grid domain. In Proceedings of the 2018 IEEE International Energy Conference (ENERGYCON), Limassol, Cyprus, 3–7 June 2018.

20. Büscher, M.; Claassen, A.; Kube, M.; Lehnhoff, S.; Piech, K.; Rohjans, S.; Scherfke, S.; Steinbrink, C.; Velasquez, J.; Tempez, F.; et al. Integrated Smart Grid simulations for generic automation architectures with RT-LAB and mosaik. In Proceedings of the 2014 IEEE International Conference on Smart Grid Communications (SmartGridComm), Venice, Italy, 3–6 November 2014; pp. 194–199.

21. Schloegl, F.; Rohjans, S.; Lehnhoff, S.; Velasquez, J.; Steinbrink, C.; Palensky, P. Towards a classification scheme for co-simulation approaches in energy systems. In Proceedings of the 2015 International Symposium on Smart Electric Distribution Systems and Technologies (EDST), Vienna, Austria, 8–11 September 2015; pp. 516–521.

22. Schvarcbacher, M.; Rossi, B. Smart Grids Co-Simulations with Low-Cost Hardware. In Proceedings of the 2017 43rd Euromicro Conference on Software Engineering and Advanced Applications (SEAA), Vienna, Austria, 30 August–1 September 2017; pp. 252–255.

23. Rossi, B.; Chren, S.; Buhnova, B.; Pitner, T. Anomaly detection in smart grid data: An experience report. In Proceedings of the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Budapest, Hungary, 9–12 October 2016; pp. 2313–2318.

24. Liu, X.; Golab, L.; Golab, W.M.; Ilyas, I.F. Benchmarking Smart Meter Data Analytics. In Proceedings of the 18th International Conference on Extending Database Technology, EDBT 2015, Brussels, Belgium, 23–27 March 2015; pp. 385–396.

25. Hebner, R. Nanogrids, Microgrids, and Big Data: The Future of the Power Grid. Available online: https://spectrum.ieee.org/energy/renewables/nanogrids-microgrids-and-big-data-the-future-of-the-power-grid (accessed on 8 August 2018).

26. Kok, K.; Karnouskos, S.; Ringelstein, J.; Dimeas, A.; Weidlich, A.; Warmer, C.; Drenkard, S.; Hatziargyriou, N.; Lioliou, V. Field-testing smart houses for a smart grid. In Proceedings of the 21st International Conference and Exhibition on Electricity Distribution (CIRED 2011), Frankfurt, Germany, 6–9 June 2011.

27. Karnouskos, S.; Holanda, T.N.D. Simulation of a Smart Grid City with Software Agents. In Proceedings of the 2009 Third UKSim European Symposium on Computer Modeling and Simulation, Athens, Greece, 25–27 November 2009; pp. 424–429.

28. Wang, Z.; Scaglione, A.; Thomas, R.J. Generating Statistically Correct Random Topologies for Testing Smart Grid Communication and Control Networks. IEEE Trans. Smart Grid 2010, 1, 28–39.

29. Hahn, A.; Ashok, A.; Sridhar, S.; Govindarasu, M. Cyber-Physical Security Testbeds: Architecture, Application, and Evaluation for Smart Grid. IEEE Trans. Smart Grid 2013, 4, 847–855.

30. Pipattanasomporn, M.; Feroze, H.; Rahman, S. Multi-agent systems in a distributed smart grid: Design and implementation. In Proceedings of the Power Systems Conference and Exposition, Seattle, WA, USA, 15–18 March 2009; pp. 1–8.

31. Oliveira, P.; Pinto, T.; Morais, H.; Vale, Z. MASGriP—A multi-agent smart grid simulation platform. In Proceedings of the Power and Energy Society General Meeting, San Diego, CA, USA, 22–26 July 2012; pp. 1–8.

32. Afzal, W.; Alone, S.; Glocksien, K.; Torkar, R. Software test process improvement approaches: A systematic literature review and an industrial case study. J. Syst. Softw. 2016, 111, 1–33.

33. ISO/IEC JTC1/SC7. ISO/IEC/IEEE 29119 Software Testing Standard. Available online: https://www.iso.org/standard/45142.html (accessed on 12 September 2018).

34. Garcia, C.; Dávila, A.; Pessoa, M. Test Process Models: Systematic Literature Review. In Communications in Computer and Information Science; Springer: Cham, Switzerland, 2014; pp. 84–93.

35. Garousi, V.; Felderer, M.; Hacaloğlu, T. Software test maturity assessment and test process improvement: A multivocal literature review. Inf. Softw. Technol. 2017, 85, 16–42.

36. Garousi, V.; Felderer, M.; Hacaloğlu, T. What we know about software test maturity and test process improvement. IEEE Softw. 2017, 35, 84–92.

37. Swinkels, R. A Comparison of TMM and Other Test Process Improvement Models; Technical Report; Frits Philips Institute, Technische Universiteit: Eindhoven, The Netherlands, 2000; Volume 51.

38. Farooq, A.; Dumke, R.R. Evaluation Approaches in Software Testing; Technical Report; Otto von Guericke University of Magdeburg: Magdeburg, Germany, 2008.

39. Farooq, A. An Evaluation Framework for Software Test Processes. Ph.D. Thesis, Otto von Guericke University of Magdeburg, Magdeburg, Germany, 2009.

40. Abdou, T.; Grogono, P.; Kamthan, P. Managing Corrective Actions to Closure in Open Source Software Test Process. In Proceedings of the 25th International Conference on Software Engineering and Knowledge Engineering, Boston, MA, USA, 27–29 June 2013.

41. Felderer, M.; Ramler, R. Integrating risk-based testing in industrial test processes. Softw. Qual. J. 2014, 22, 543–575.

42. Gomes, C.; Thule, C.; Broman, D.; Larsen, P.G.; Vangheluwe, H. Co-simulation: State of the art. arXiv, 2017; arXiv:1702.00686.

43. Bian, D.; Kuzlu, M.; Pipattanasomporn, M.; Rahman, S.; Wu, Y. Real-time co-simulation platform using OPAL-RT and OPNET for analyzing smart grid performance. In Proceedings of the Power & Energy Society General Meeting, Denver, CO, USA, 26–30 July 2015; pp. 1–5.

44. Li, W.; Ferdowsi, M.; Stevic, M.; Monti, A.; Ponci, F. Cosimulation for smart grid communications. IEEE Trans. Ind. Inform. 2014, 10, 2374–2384.

45. Li, W.; Zhang, X. Simulation of the smart grid communications: Challenges, techniques, and future trends. Comput. Electr. Eng. 2014, 40, 270–288.

46. Varga, A.; Hornig, R. An overview of the OMNeT++ simulation environment. In Proceedings of the 1st International Conference on Simulation Tools and Techniques for Communications, Networks and Systems & Workshops, Marseille, France, 3–7 March 2008; p. 60.

47. Dede, J.; Kuladinithi, K.; Förster, A.; Nannen, O.; Lehnhoff, S. OMNeT++ and mosaik: Enabling simulation of Smart Grid communications. arXiv, 2015; arXiv:1509.03067.

48. Ramler, R.; Biffl, S.; Grünbacher, P. Value-based management of software testing. In Value-Based Software Engineering; Springer: Berlin, Germany, 2006; pp. 225–244.

49. Chan, E.Y.K.; Chan, W.K.; Poon, P.L.; Yu, Y.T. An empirical evaluation of several test-a-few strategies for testing particular conditions. Softw. Pract. Exp. 2012, 42, 967–994.

50. Gonzalez-Sanchez, A.; Piel, É.; Abreu, R.; Gross, H.G.; van Gemund, A.J. Prioritizing tests for software fault diagnosis. Softw. Pract. Exp. 2011, 41, 1105–1129.

51. Hrabovská, K. Supporting a Smart Grids Laboratory: Testing Management for Cyber-Physical Systems. Master’s Thesis, Masaryk University, Brno, Czech Republic, 2017.

52. Scherfke, S.; Nannen, O.; El-Ama, A. The Simulator Manager—Mosaik 2.3.1 Documentation. Available online: https://mosaik.readthedocs.io/en/latest/simmanager.html (accessed on 12 September 2018).

53. Mets, K.; Ojea, J.A.; Develder, C. Combining Power and Communication Network Simulation for Cost-Effective Smart Grid Analysis. IEEE Commun. Surv. Tutor. 2014, 16, 1771–1796.

54. Nägele, T.; Hooman, J. Rapid Construction of Co-simulations of Cyber-Physical Systems in HLA using a DSL. In Proceedings of the 2017 43rd Euromicro Conference on Software Engineering and Advanced Applications (SEAA), Vienna, Austria, 30 August–1 September 2017; pp. 247–251.

55. Nägele, T.; Hooman, J.; Broenink, T.; Broenink, J. CoHLA: Design space exploration and co-simulation made easy. In Proceedings of the 2018 IEEE Industrial Cyber-Physical Systems (ICPS), St. Petersburg, Russia, 15–18 May 2018; pp. 225–231.

| Reference | Criteria | Support Requirements |
|---|---|---|
| Kok et al. [26] | Power flow | Real (1:1, scaled); simulated |
| Kok et al. [26] | Data flows | Power grid only; information grid only; combined |
| Kok et al. [26], Karnouskos and Holanda [27] | Interaction capture | RT capture & monitoring; large data volume; simulation playback |
| Karnouskos and Holanda [27], Wang et al. [28] | Topological changes | Before test; at simulation start; during runtime, multiple changes |
| Karnouskos and Holanda [27] | Multi-agent systems | One entity; breakdown into components |
| Karnouskos and Holanda [27] | Simulator integration | Well-defined API; extensibility |
| Karnouskos and Holanda [27], Hahn et al. [29] | Entity classification | Power producer/consumer/transporter; state reporter; network intruder; SCADA |
| Hahn et al. [29] | Network requirements | Network analysis; packet injection; exposure to simulated intruders |
| Wang et al. [28] | Topology generation | Automatic; determine if model generalizes; model future SG deployments |
| Wang et al. [28] | Testing platform | Support different SG topologies |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Schvarcbacher, M.; Hrabovská, K.; Rossi, B.; Pitner, T. Smart Grid Testing Management Platform (SGTMP). Appl. Sci. 2018, 8, 2278. https://doi.org/10.3390/app8112278
