Correlation Plenoptic Imaging: An Overview

Abstract

1. Introduction

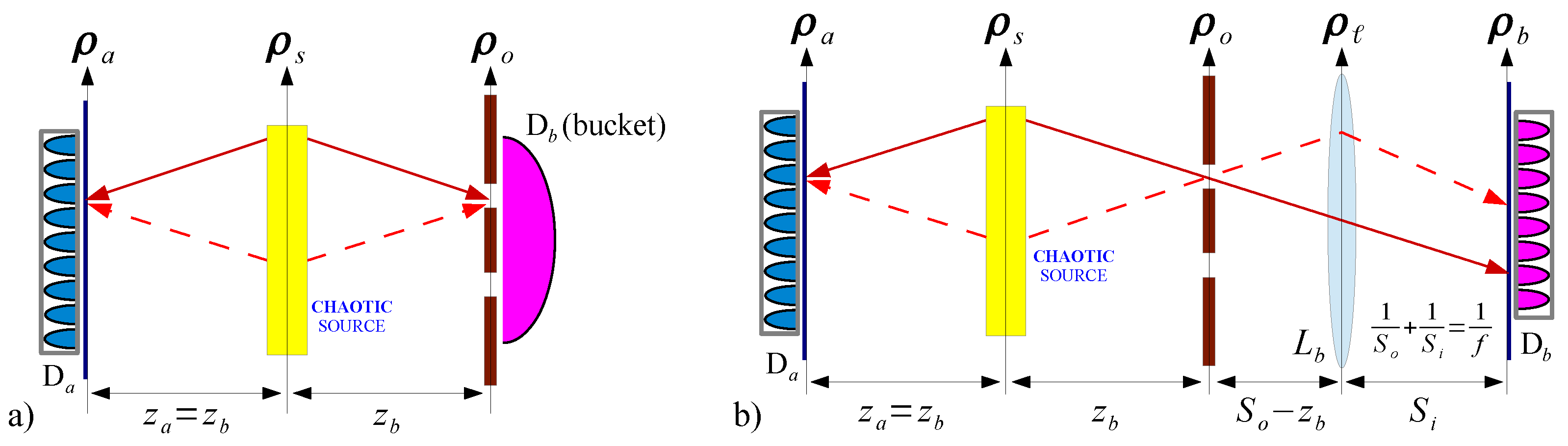

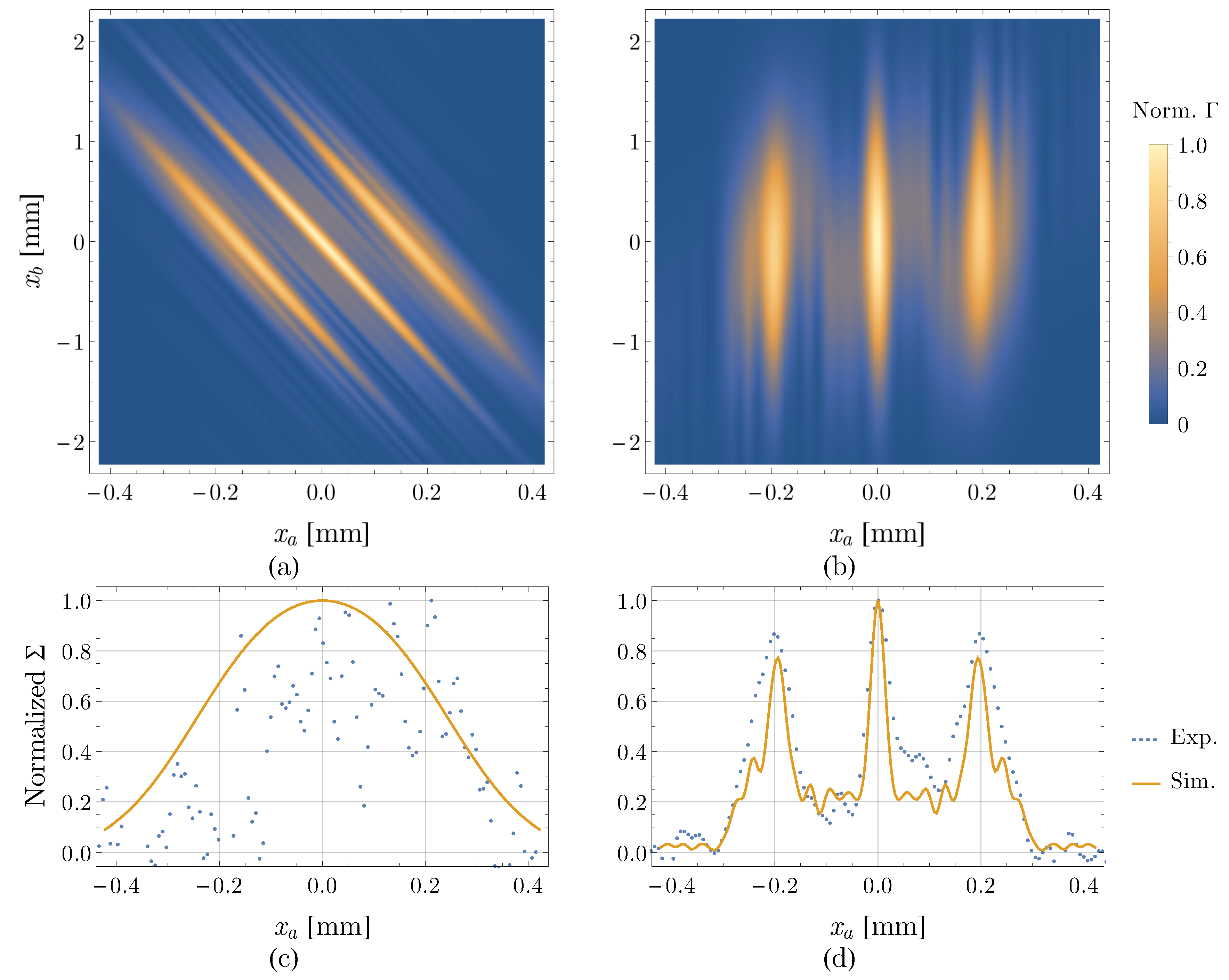

2. Correlation Plenoptic Imaging with Chaotic Light—First Scheme

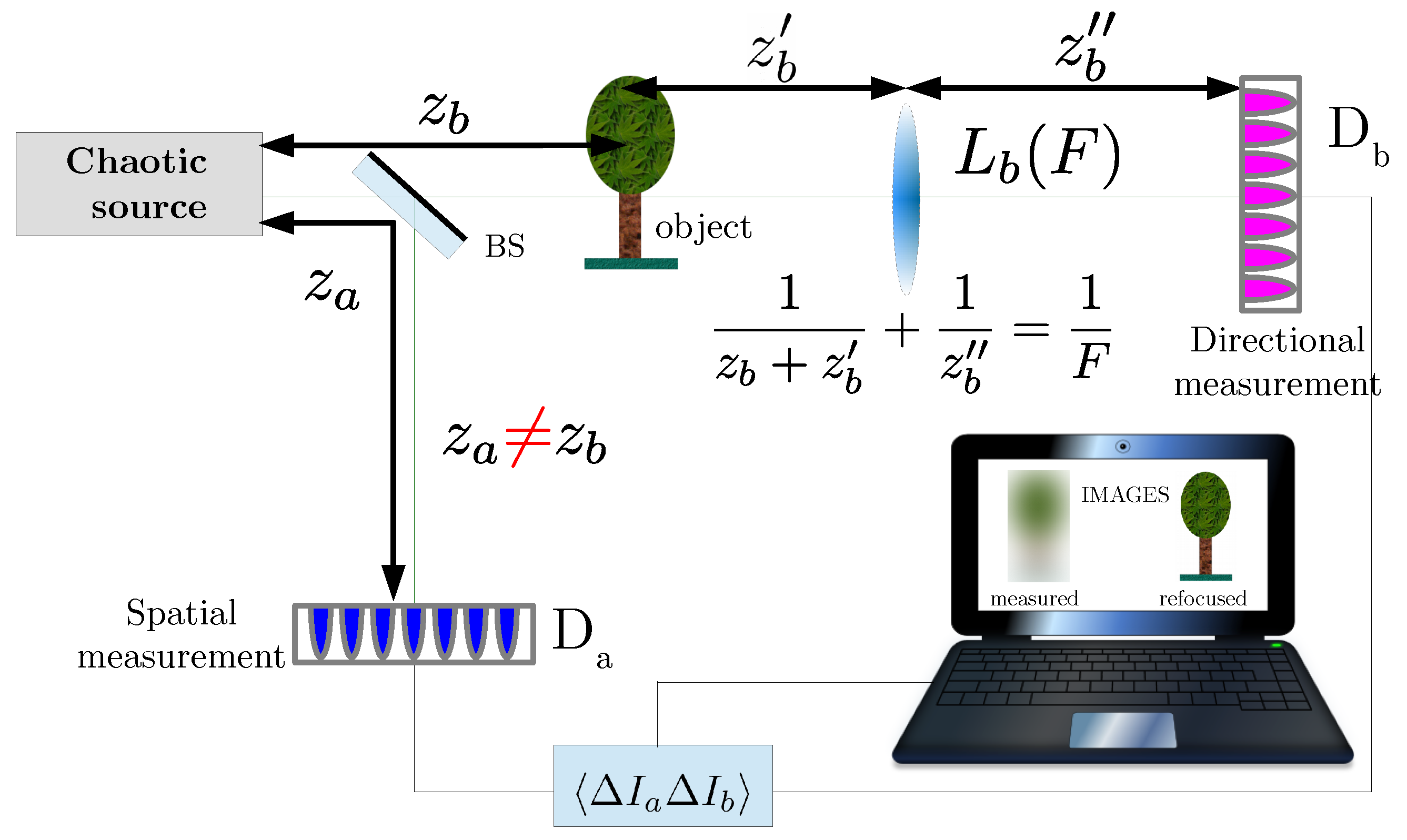

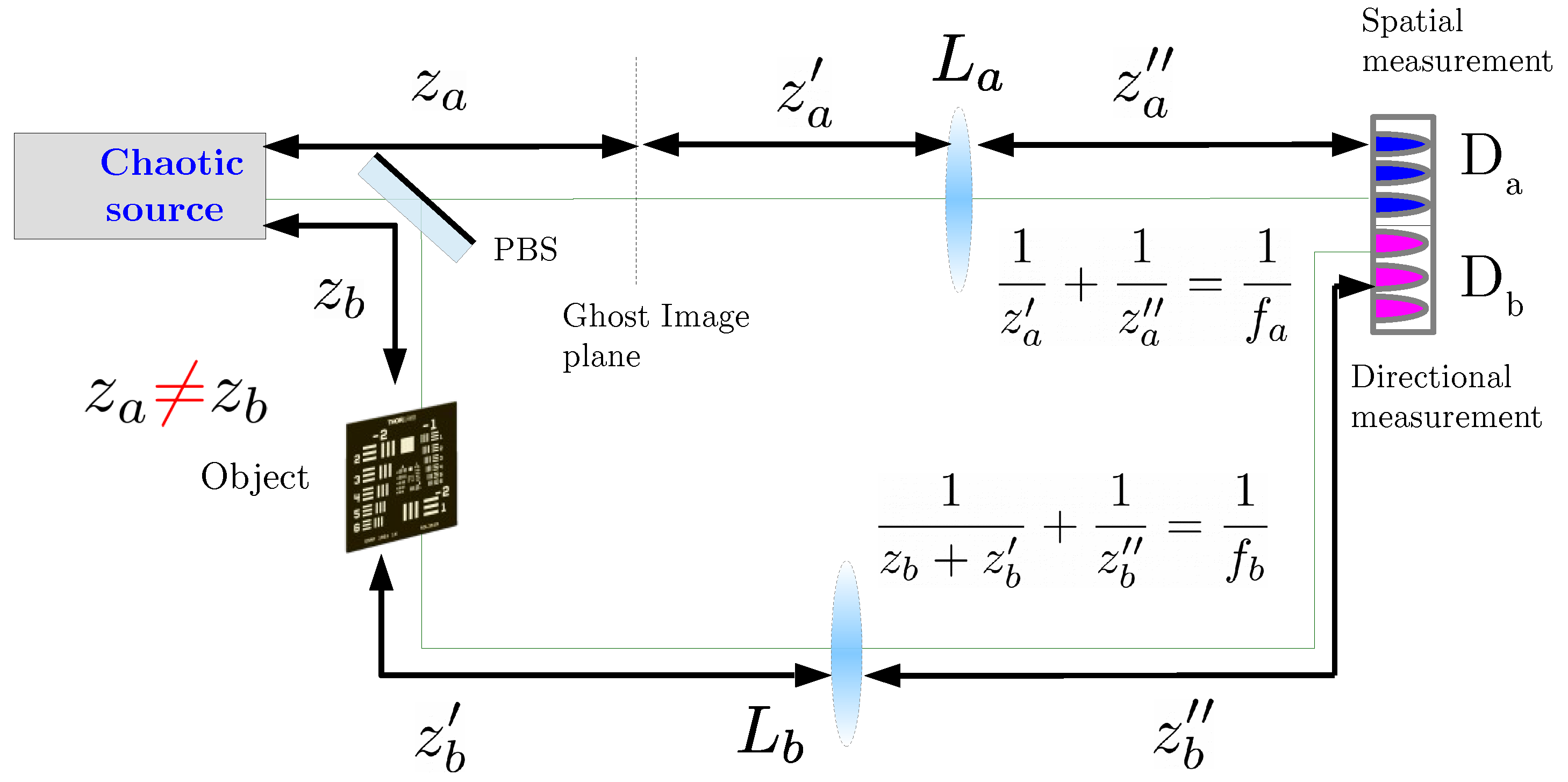

2.1. Correlation Functions in CPI

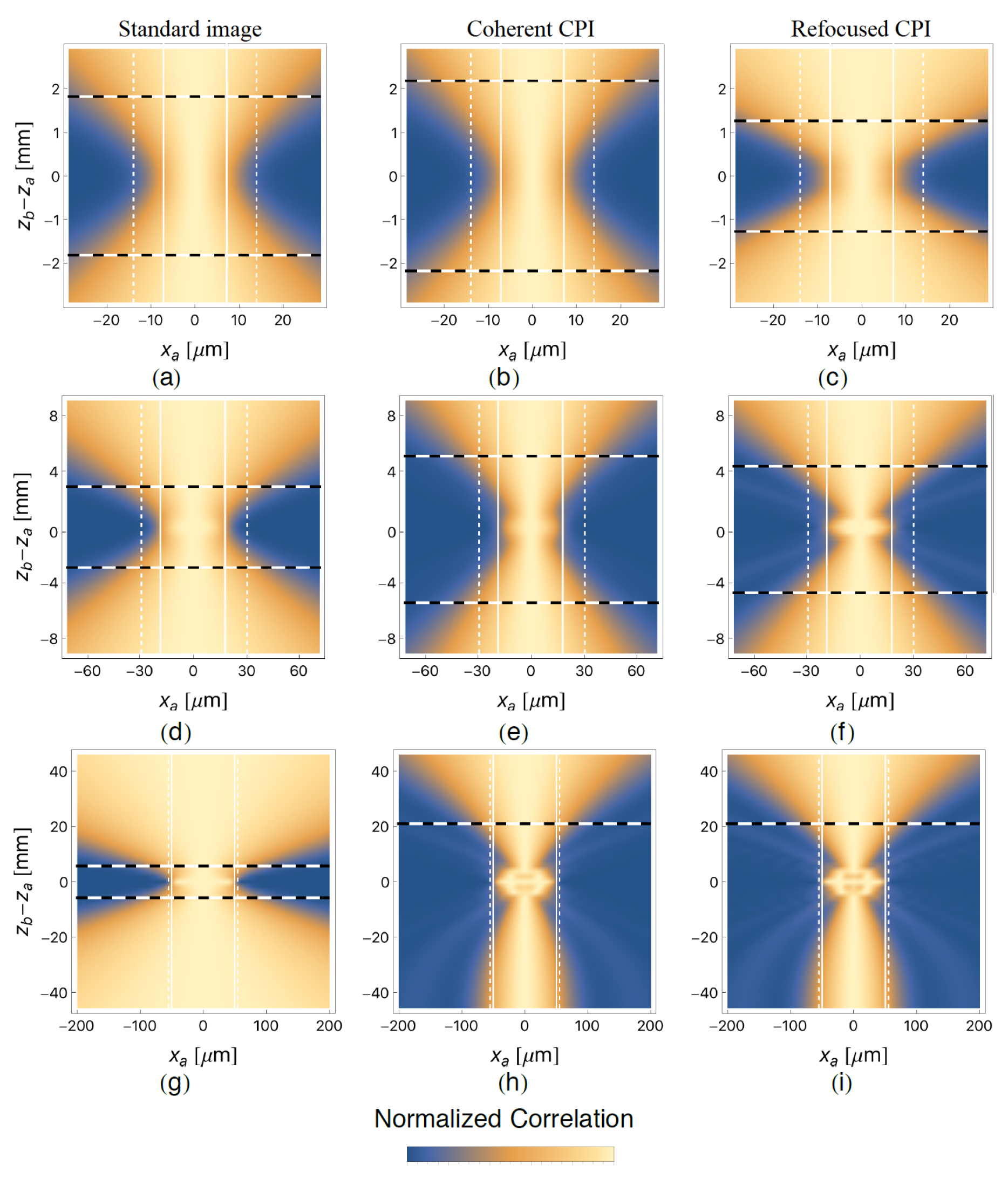

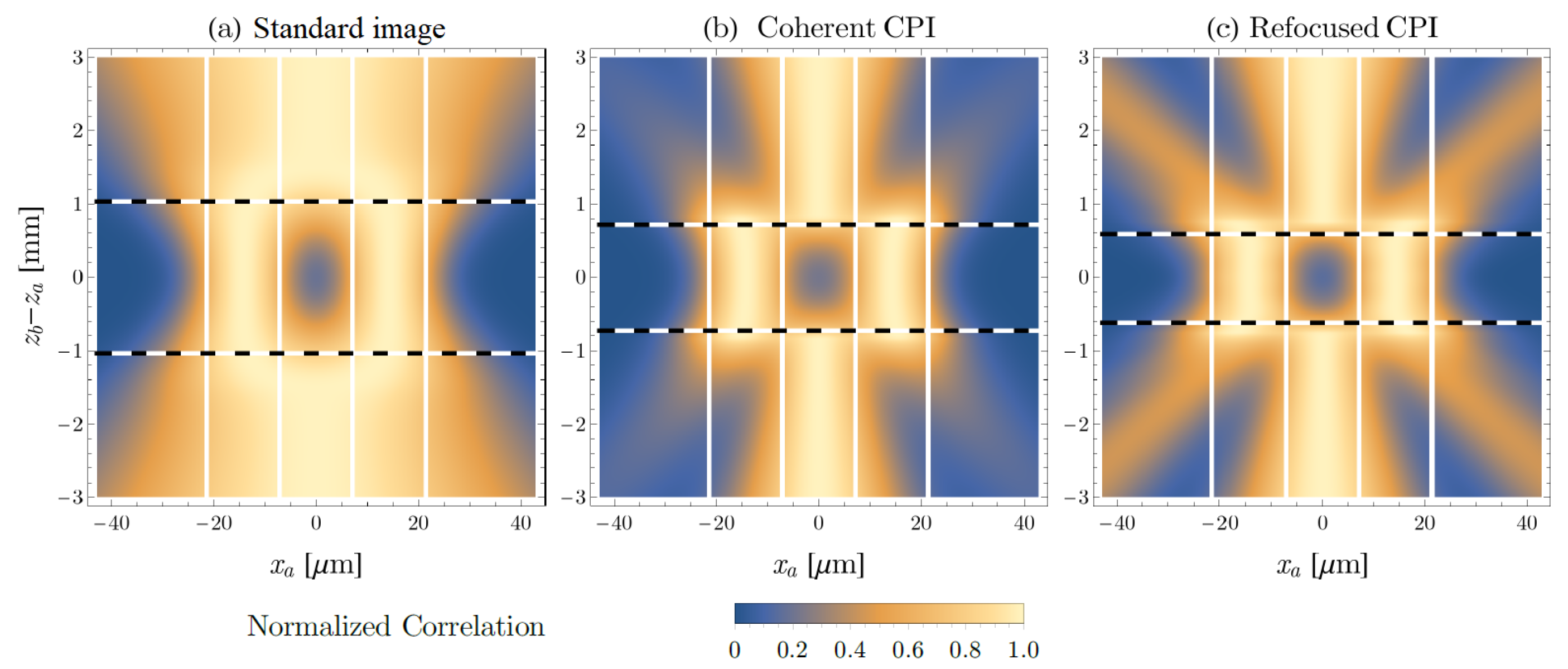

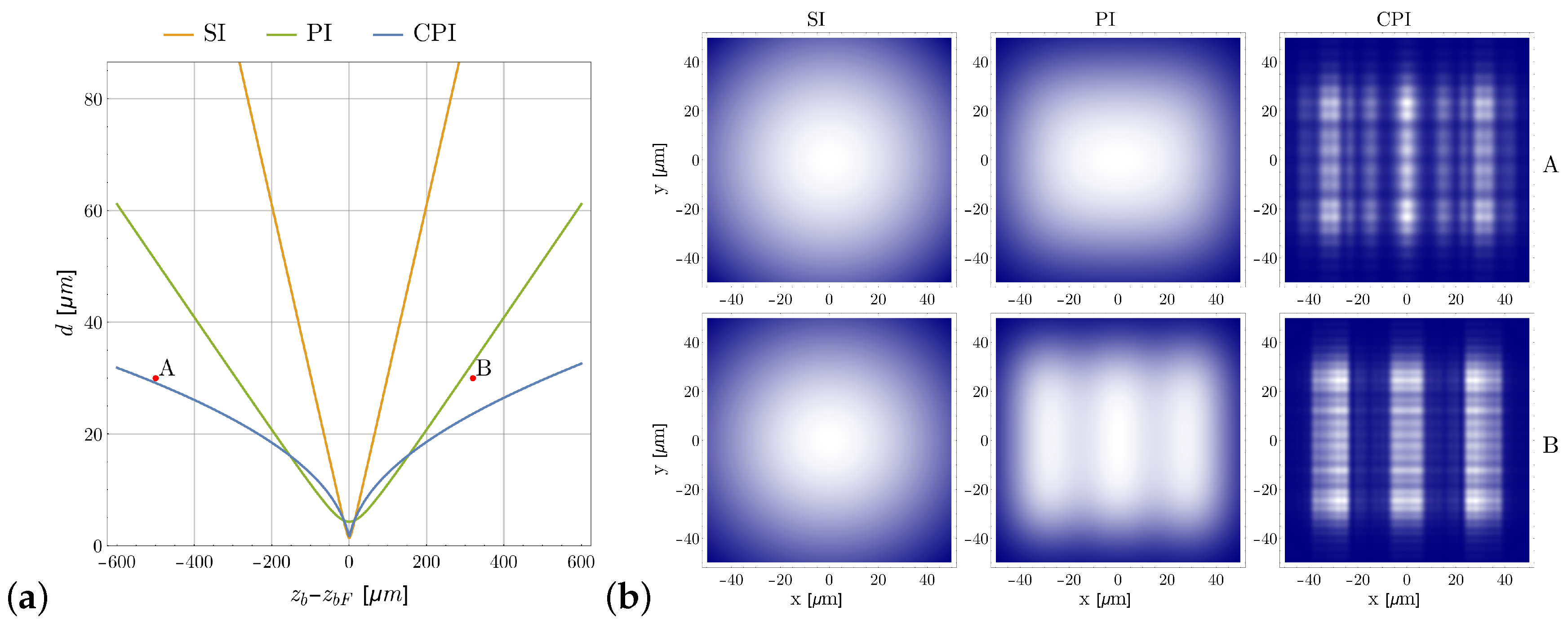

2.2. Point-Spread Function and Plenoptic Properties

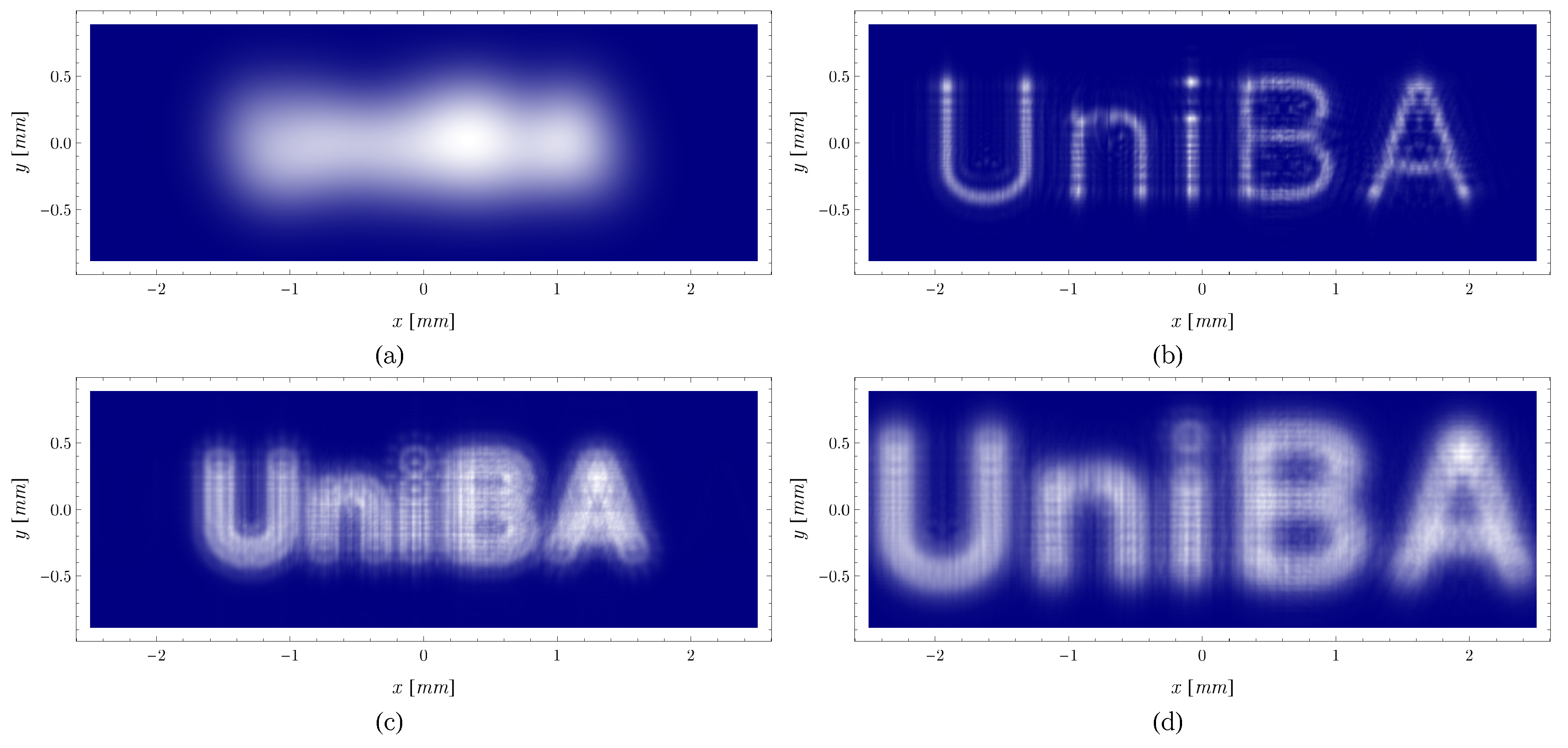

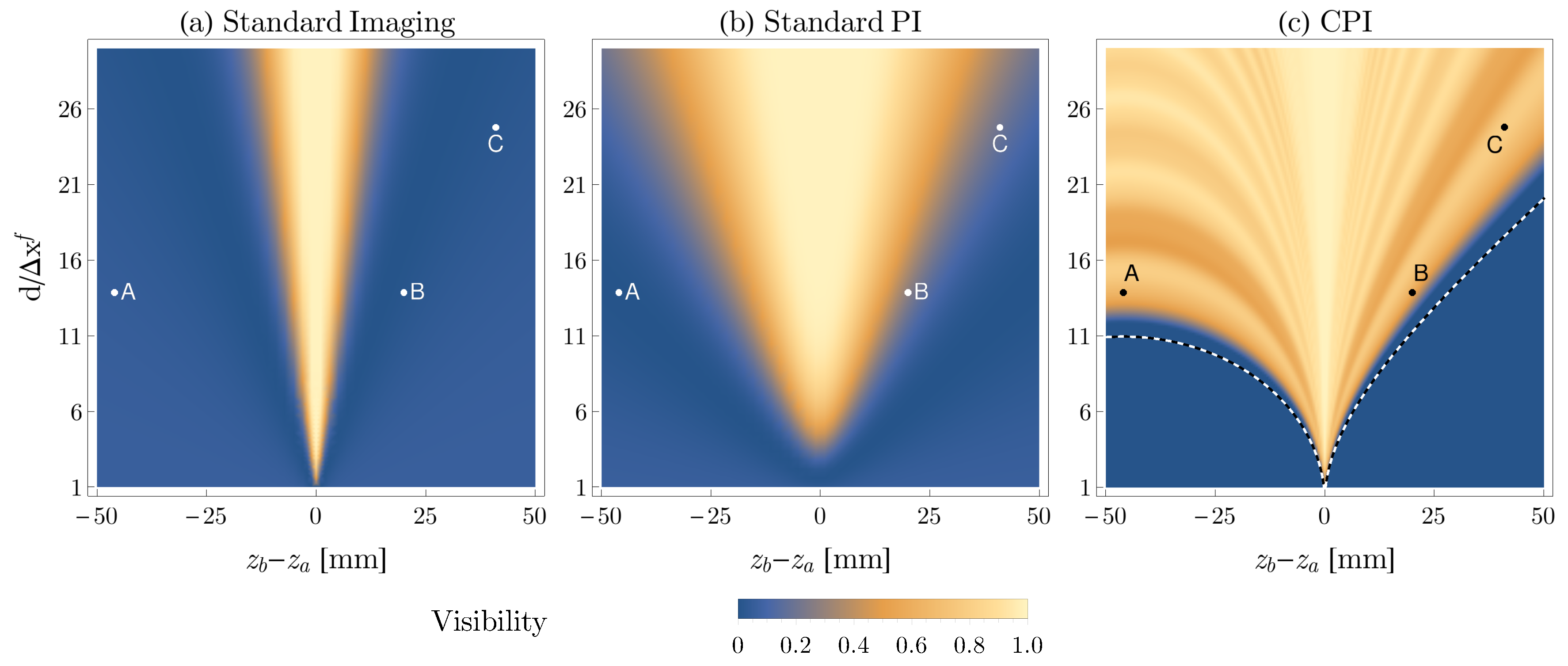

2.3. Depth-of-Field Improvement

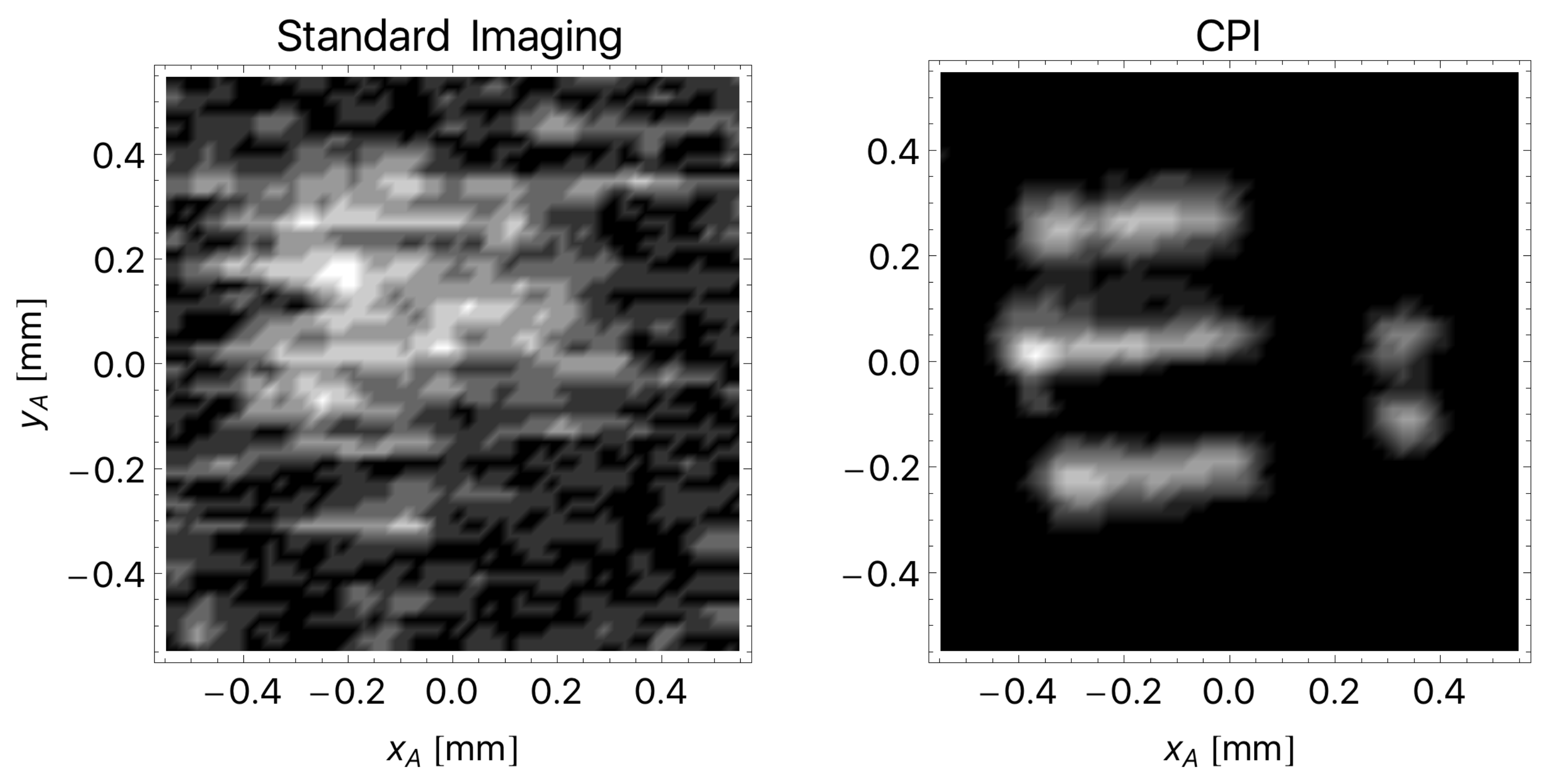

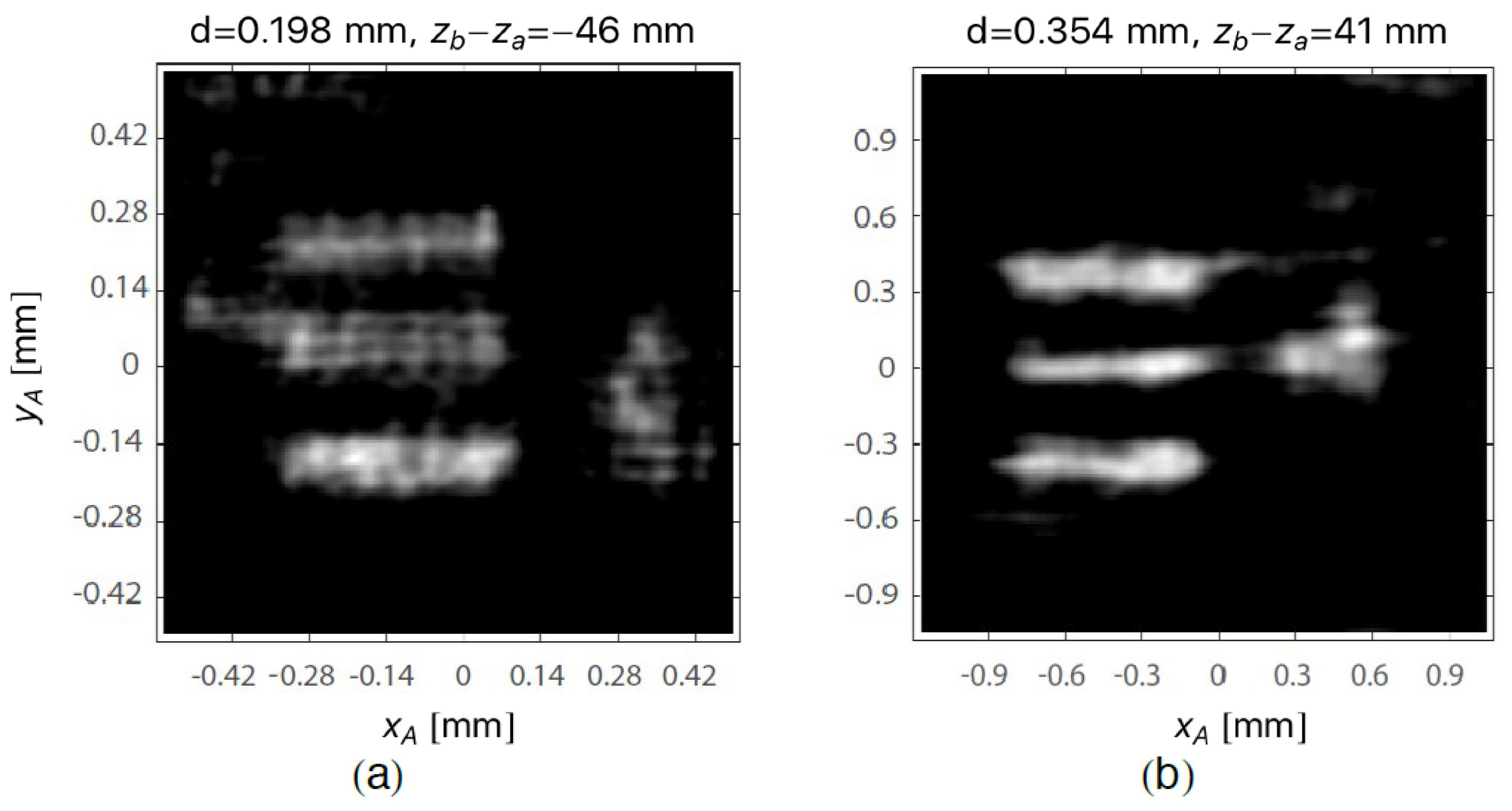

3. Experimental Demonstration of CPI

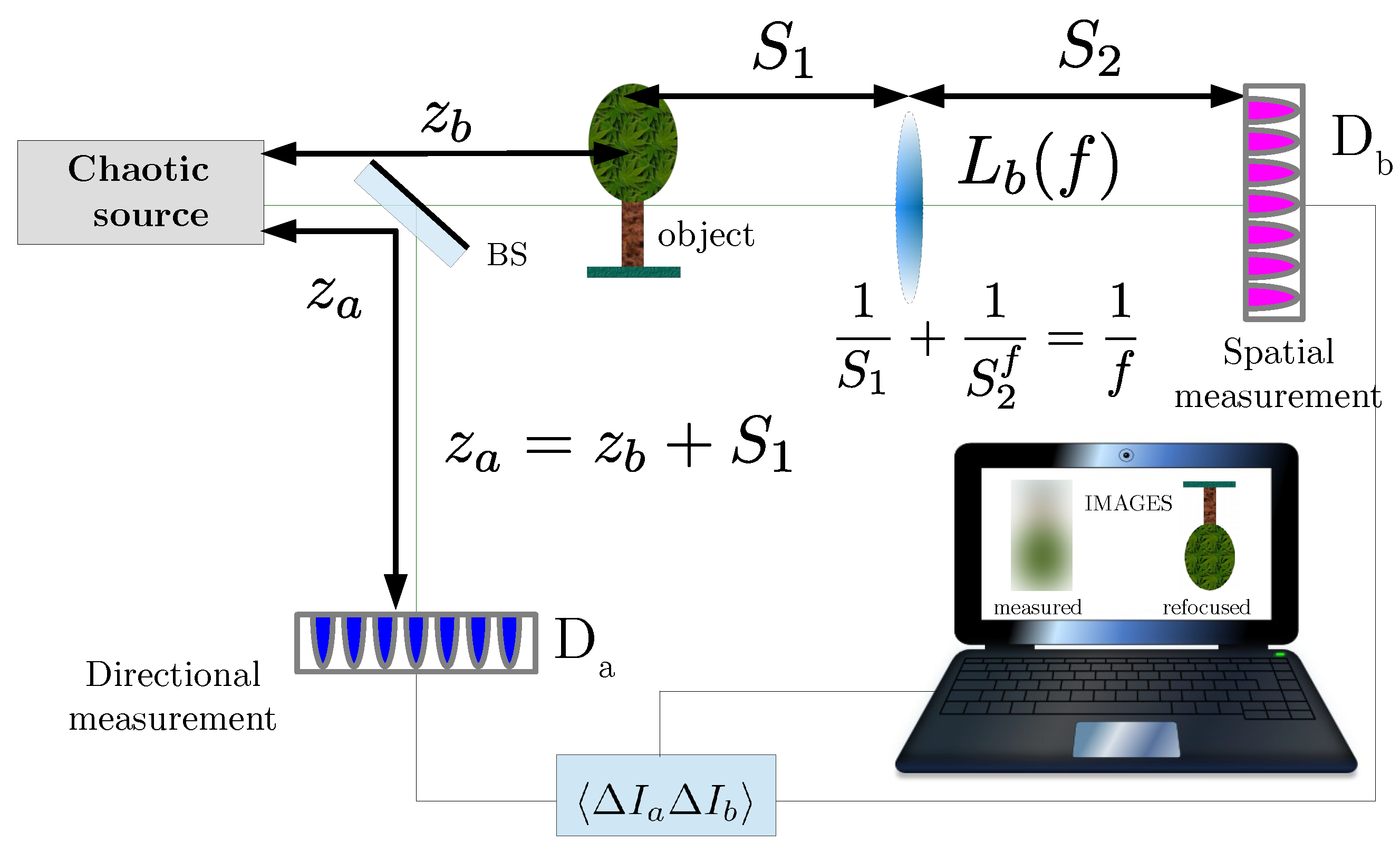

4. A Different Architecture of CPI with Chaotic Light

Comparison between the Two Schemes

5. CPI with Entangled Photons

5.1. Plenoptic Properties of the Correlation Function

5.2. Depth-of-Field Improvement

6. Conclusions

7. Patents

- Device and process for the plenoptic capture of images, request n. 102016000027106 of 15 March 2016 to the Italian Patent Office (approved); extension request n. EP17160543.9 of 13 March 2017 to the European Patent Office (pending); inventors: Milena D’Angelo, Augusto Garuccio, Francesco V. Pepe, Teresa Macchia, Ornella Vaccarelli.

- Device and process for the contemporary capture of standard and plenoptic images, request n. PCT/IB2017/055842 of 26 September 2017 to the International Searching Authority (pending); inventors: Milena D’Angelo, Augusto Garuccio, Francesco V. Pepe, Ornella Vaccarelli.

- Device and process for the acquisition of microscopic plenoptic images with turbulence mitigation, request n. 102018000007857 of 3 August 2018 to the Italian Patent Office (pending); inventors: Milena D’Angelo, Francesco Di Lena, Augusto Garuccio, Francesco V. Pepe, Alessio Scagliola.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| PI | plenoptic imaging |
| DOF | depth of field |
| NA | numerical aperture |
| CPI | correlation plenoptic imaging |
| SPDC | spontaneous parametric down-conversion |
| SNR | signal-to-noise ratio |
| GI | ghost imaging |
| BS | beam splitter |
| PSF | point-spread function |
| SI | standard imaging |
| FWHM | full width at half-maximum |

| Symbol | Meaning |
| --- | --- |
| | Transverse correlation function |
| A | Aperture function of the object |
| | Transverse intensity profile of the chaotic light source |
| | Incoherent image function |
| P | Lens pupil function |
| | Transverse amplitude profile of the laser pump in SPDC |

Appendix A. Theoretical Background of CPI with Chaotic Light

Appendix B. Theoretical Background of CPI with Entangled Photons

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Di Lena, F.; Pepe, F.V.; Garuccio, A.; D’Angelo, M. Correlation Plenoptic Imaging: An Overview. Appl. Sci. 2018, 8, 1958. https://doi.org/10.3390/app8101958
