Temporal Modeling on Multi-Temporal-Scale Spatiotemporal Atoms for Action Recognition

Abstract

1. Introduction

- Single temporal scale. A typical action contains characteristic spatiotemporal information at different temporal scales. However, existing CNN-based action recognition methods extract deep information at a single temporal scale: a single frame, a fixed-length stack of frames, or a fixed-length clip. For example, the spatial and temporal streams of the two-stream model [7] learn from a single RGB frame and from 10 stacked optical flow frames, respectively, while C3D [12] and Res3D [13] learn spatiotemporal information from clips of 16 and 8 frames, respectively.

- Unordered modeling. An action can be viewed as a temporal evolution of spatiotemporal information. However, some CNN-based action recognition methods ignore this evolution. C3D and Res3D split the video into clips and merge the clip features by averaging and L2-normalization. Yu et al. [17] fused frame-wise CNN spatial features into a video-wise spatiotemporal feature via stratified pooling.

- Equal weight. For a given action, different frames or clips carry information of different importance. Nevertheless, most CNN-based methods weight every frame or clip equally.

- Broken information. Some CNN-based action recognition methods extract deep information from sparsely split clips, such as the non-overlapping 8-frame clips in Res3D [13] or the 10 stacked frames in the temporal stream of the two-stream model [7]. Such sparse splitting can break key spatiotemporal information that spans two consecutive clips.

- We propose a method of temporal modeling on multi-temporal-scale atoms for action recognition.

- We extend video summarization techniques to mine action atoms in the deep spatiotemporal space.
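The clip-splitting and unordered-aggregation limitations listed above can be illustrated with a minimal sketch. The helper names `split_clips` and `average_l2`, the 32-frame video length, and the stride of 1 for dense splitting are assumptions made for illustration; only the clip lengths (4, 8, and 16 frames) and the averaging plus L2-normalization step come from the text.

```python
import numpy as np

def split_clips(num_frames, clip_len, stride):
    """Return (start, end) frame ranges for clips; end is exclusive."""
    return [(s, s + clip_len)
            for s in range(0, num_frames - clip_len + 1, stride)]

def average_l2(clip_features):
    """Unordered aggregation: average clip features, then L2-normalize."""
    mean = np.mean(clip_features, axis=0)
    norm = np.linalg.norm(mean)
    return mean / norm if norm > 0 else mean

NUM_FRAMES = 32  # hypothetical video length

# Sparse splitting: non-overlapping 8-frame clips. Frames 7 and 8 land in
# different clips, so motion spanning that boundary is never seen together.
sparse = split_clips(NUM_FRAMES, clip_len=8, stride=8)

# Dense splitting: overlapping clips (stride 1 is an assumed value).
dense = split_clips(NUM_FRAMES, clip_len=8, stride=1)

# Multi-temporal-scale splitting at the 4-, 8-, and 16-frame scales.
multi_scale = {n: split_clips(NUM_FRAMES, n, 1) for n in (4, 8, 16)}

# Averaging discards clip order: reversing the clips gives the same vector.
feats = np.arange(12.0).reshape(4, 3)  # 4 clips, 3-dim toy features
same = np.allclose(average_l2(feats), average_l2(feats[::-1]))
```

In this sketch `same` evaluates to `True`, which is the point of the "unordered modeling" limitation: the aggregated feature cannot distinguish a clip sequence from its reversal.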

2. Related Works

3. Temporal Modeling of Multi-Temporal-Scale Spatiotemporal Atoms

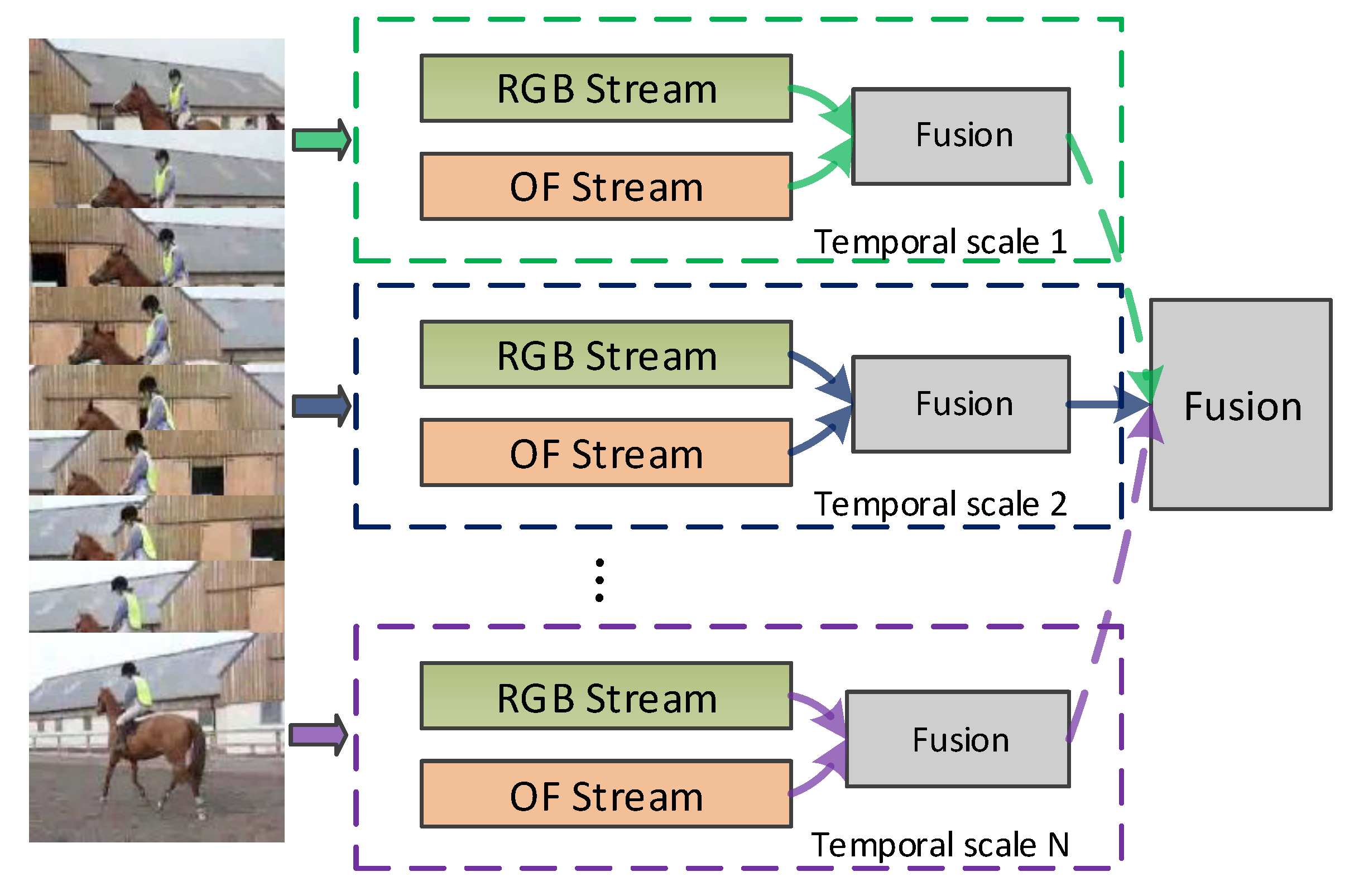

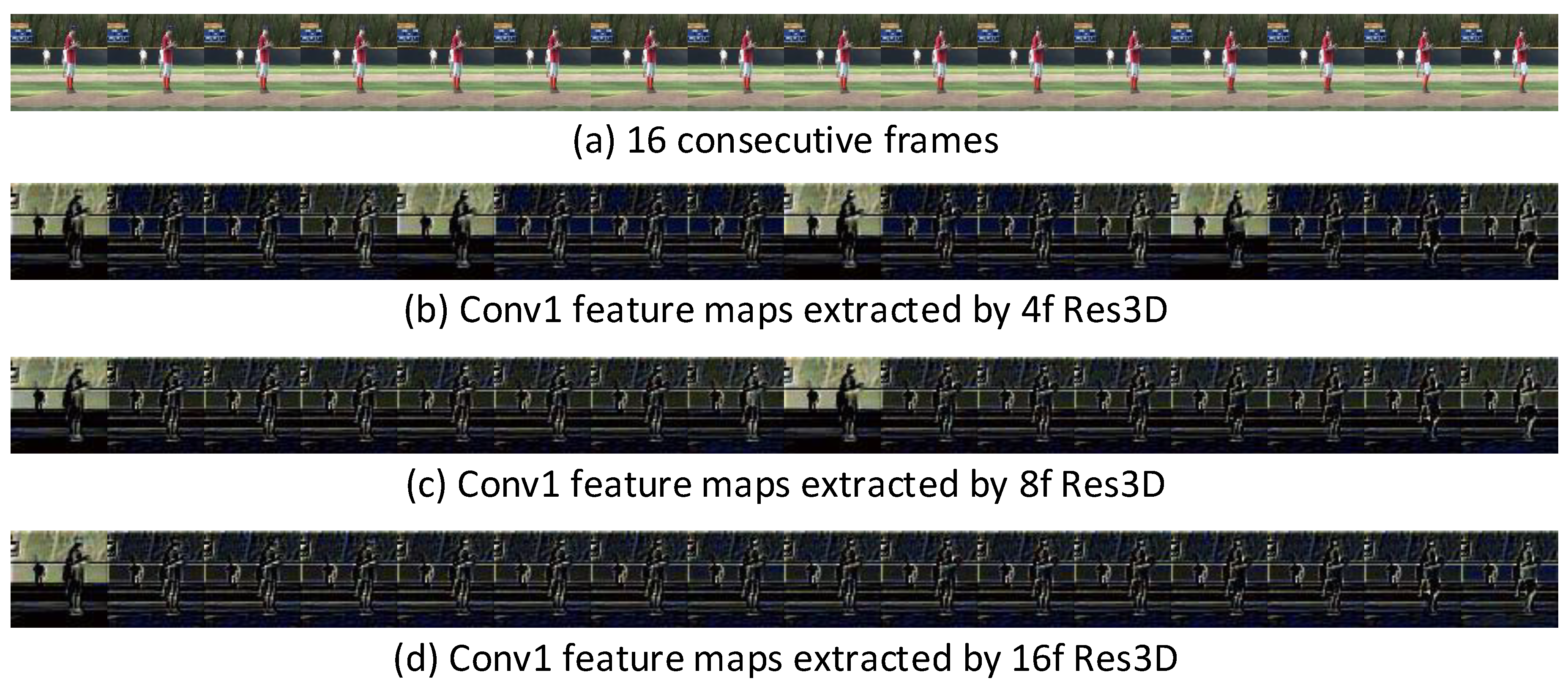

3.1. Multi-Temporal-Scale Res3D Architecture

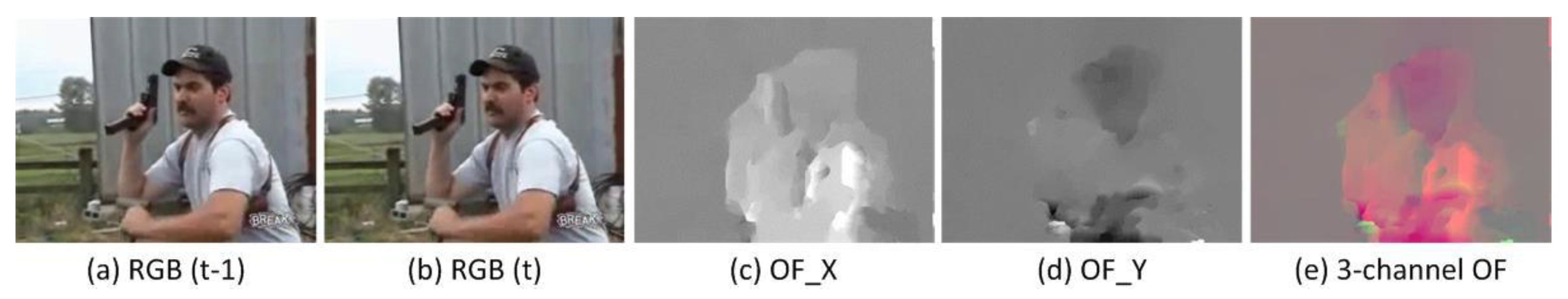

3.2. Knowledge Transfer to Optical Flow

3.3. Unsupervised Action Atom Mining

3.4. Temporal Evolution Modeling on Atoms

3.5. Fusion Operations

- Spatiotemporal information fusion (early fusion): The deep spatiotemporal information of the RGB and optical flow (OF) streams is fused, and action atoms are then mined from the fused information.

- Atom fusion (middle fusion): The action atoms mined from the deep spatiotemporal information of the RGB and OF streams are fused, and the fused atoms are then fed into the LSTM.

- Prediction fusion (late fusion): In each of the RGB and OF streams, an LSTM is run on the mined atoms, and the softmax predictions of the two streams are then fused.
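The three fusion points can be contrasted in a short sketch that differs only in where the two streams are combined. Everything concrete here is an assumption for illustration: feature concatenation as the fusion operator, averaging of softmax outputs for late fusion, and the stand-ins `toy_mine_atoms` (uniform sampling) and `make_toy_model` (a random linear classifier) that replace the atom-mining step and the LSTM, neither of which is specified in this section.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

def fuse(a, b):
    """Assumed fusion operator: concatenate along the feature axis."""
    return np.concatenate([a, b], axis=-1)

def toy_mine_atoms(feats, num_atoms=4):
    """Stand-in for atom mining: pick evenly spaced clips (hypothetical)."""
    idx = np.linspace(0, len(feats) - 1, num_atoms).astype(int)
    return feats[idx]

def make_toy_model(in_dim, num_classes, seed):
    """Stand-in for the LSTM classifier: random linear layer + softmax."""
    w = np.random.default_rng(seed).normal(size=(in_dim, num_classes))
    return lambda atoms: softmax(atoms.sum(axis=0) @ w)

def early_fusion(rgb, of, mine, model):
    # Fuse clip features first, then mine atoms from the fused features.
    return model(mine(fuse(rgb, of)))

def middle_fusion(rgb, of, mine, model):
    # Mine atoms per stream, fuse the atoms, then run one temporal model.
    return model(fuse(mine(rgb), mine(of)))

def late_fusion(rgb, of, mine, rgb_model, of_model):
    # Run a temporal model per stream, then fuse the softmax predictions.
    return (rgb_model(mine(rgb)) + of_model(mine(of))) / 2

rng = np.random.default_rng(0)
rgb_feats = rng.normal(size=(10, 8))  # 10 clips, 8-dim toy features
of_feats = rng.normal(size=(10, 8))
NUM_CLASSES = 5

p_early = early_fusion(rgb_feats, of_feats, toy_mine_atoms,
                       make_toy_model(16, NUM_CLASSES, seed=1))
p_middle = middle_fusion(rgb_feats, of_feats, toy_mine_atoms,
                         make_toy_model(16, NUM_CLASSES, seed=2))
p_late = late_fusion(rgb_feats, of_feats, toy_mine_atoms,
                     make_toy_model(8, NUM_CLASSES, seed=3),
                     make_toy_model(8, NUM_CLASSES, seed=4))
```

Note that concatenating atoms in the middle-fusion sketch presumes both streams yield the same number of atoms in corresponding order, which is a simplification of this example rather than a property stated in the text.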

4. Evaluation

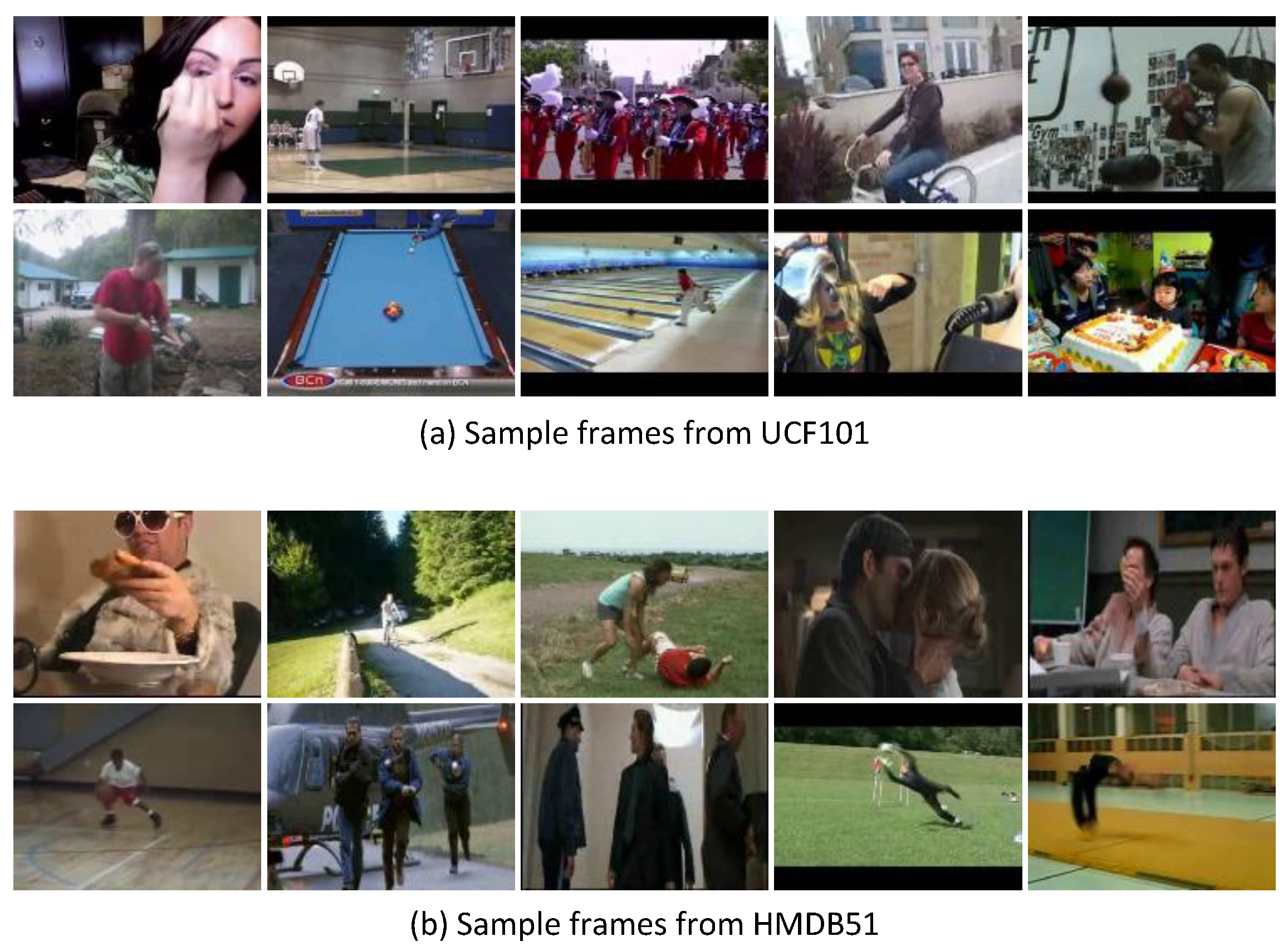

4.1. Datasets

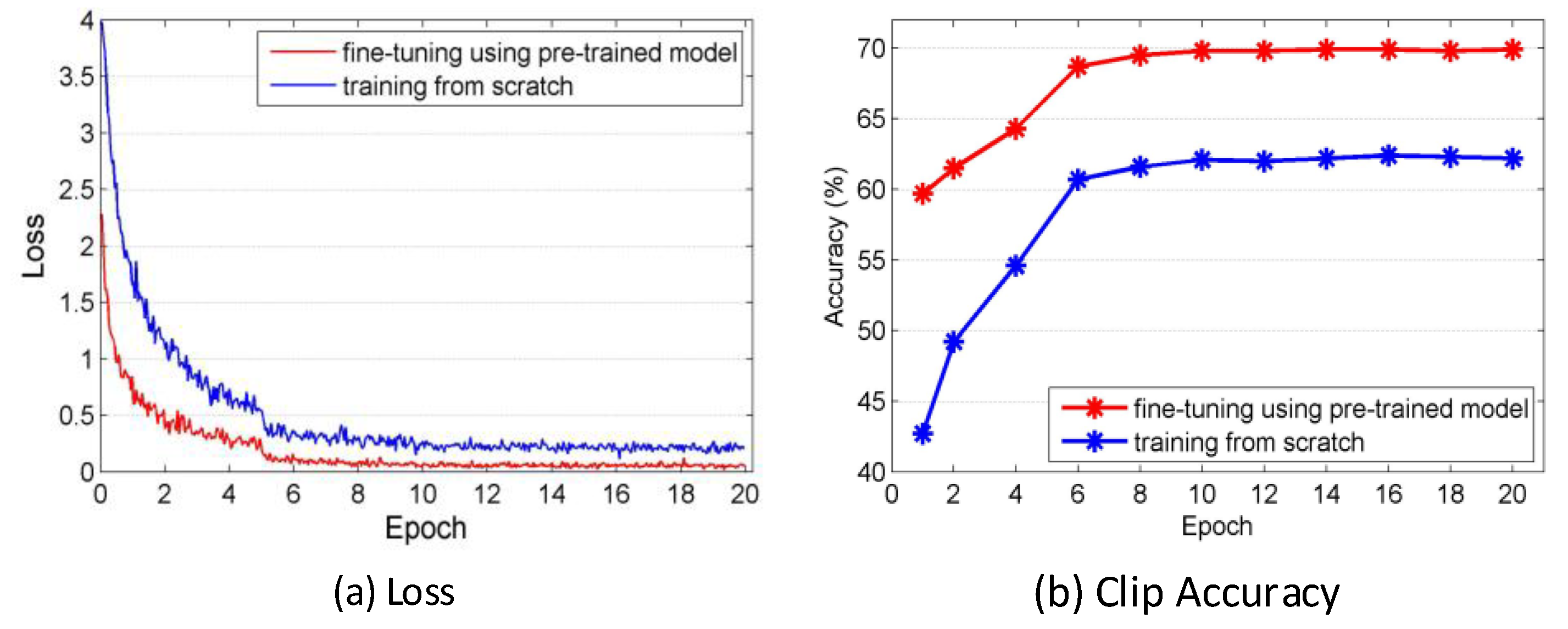

4.2. Implementation Details

4.3. Performance Evaluation

4.4. Qualitative Run-Time Analysis

4.5. Comparison with State-of-the-Art Methods

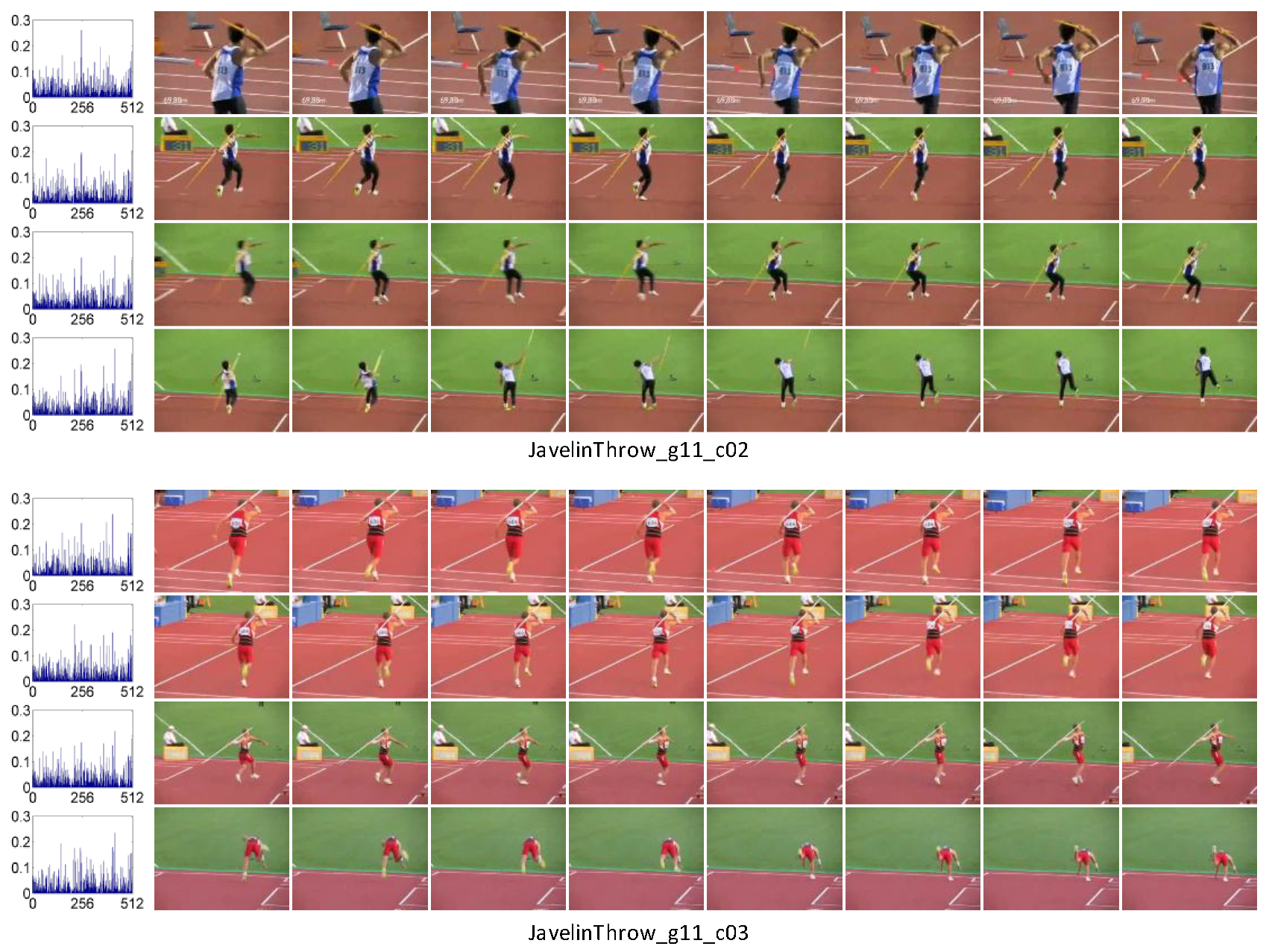

4.6. Model Visualization

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

2. Krizhevsky, A.; Sutskever, I.; Hinton, G. Imagenet classification with deep convolutional neural networks. In Proceedings of the Annual Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

3. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

4. Farabet, C.; Couprie, C.; LeCun, Y. Learning hierarchical features for scene labeling. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1915–1929.

5. Shelhamer, E.; Long, J.; Darrell, T. Fully convolutional networks for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

6. Karpathy, A.; Toderici, G.; Shetty, S.; Leung, T.; Sukthankar, R.; Li, F. Large-scale video classification with convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1725–1732.

7. Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 568–576.

8. Yang, M.; Ji, S.; Xu, W.; Wang, J. Detecting human actions in surveillance videos. In Proceedings of the TREC Video Retrieval Evaluation Workshop, Fira, Greece, 8–10 July 2009.

9. Ji, S.; Xu, W.; Yang, M.; Yu, K. 3D convolutional neural networks for human action recognition. In Proceedings of the International Conference on Machine Learning, Haifa, Israel, 21–24 June 2010; pp. 495–502.

10. Baccouche, M.; Mamalet, F.; Wolf, C.; Garcia, C.; Baskurt, A. Sequential deep learning for human action recognition. In Proceedings of the International Conference on Human Behavior Understanding, Amsterdam, The Netherlands, 16 November 2011; pp. 29–39.

11. Ji, S.; Xu, W.; Yang, M.; Yu, K. 3D convolutional neural networks for human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 221–231.

12. Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3D convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4489–4497.

13. Tran, D.; Ray, J.; Shou, Z.; Chang, S.F.; Paluri, M. ConvNet architecture search for spatiotemporal feature learning. arXiv, 2017; arXiv:1708.05038.

14. Feichtenhofer, C.; Pinz, A.; Zisserman, A. Convolutional two-stream network fusion for video action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1933–1941.

15. Zhang, B.; Wang, L.; Wang, Z.; Qiao, Y.; Wang, H. Real-time action recognition with enhanced motion vector CNNs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2718–2726.

16. Gao, Z.; Hua, G.; Zhang, D.; Jojic, N.; Wang, L. ER3: A unified framework for event retrieval, recognition and recounting. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

17. Yu, S.; Cheng, Y.; Su, S. Stratified pooling based deep convolutional neural networks for human action recognition. Multimedia Tools Appl. 2017, 76, 13367–13382.

18. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

19. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

20. Varol, G.; Laptev, I.; Schmid, C. Long-term temporal convolutions for action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 1510–1517.

21. Donahue, J.; Hendricks, L.; Guadarrama, S.; Rohrbach, M.; Venugopalan, S.; Darrell, T.; Saenko, K. Long-term recurrent convolutional networks for visual recognition and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2625–2634.

22. Donahue, J.; Hendricks, L.; Rohrbach, M.; Venugopalan, S.; Guadarrama, S.; Saenko, K.; Darrell, T. Long-term recurrent convolutional networks for visual recognition and description. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 677–691.

23. Ng, J.; Hausknecht, M.; Vijayanarasimhan, S.; Vinyals, O.; Monga, R.; Toderici, G. Beyond short snippets: Deep networks for video classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4694–4702.

24. Srivastava, N.; Mansimov, E.; Salakhutdinov, R. Unsupervised learning of video representations using LSTMs. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015.

25. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the International Conference on Learning Representation, Banff, AB, Canada, 14–16 April 2014.

26. Oliver, N.; Rosario, B.; Pentland, A. A Bayesian computer vision system for modeling human interactions. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 255–272.

27. Wang, Y.; Mori, G. Hidden part models for human action recognition: Probabilistic versus max margin. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 1310–1323.

28. Laxton, B.; Lim, J.; Kriegman, D. Leveraging temporal, contextual and ordering constraints for recognizing complex activities in video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007.

29. Zeiler, M.; Fergus, R. Visualizing and understanding convolutional networks. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 818–833.

30. Chatfield, K.; Simonyan, K.; Vedaldi, A.; Zisserman, A. Return of the devil in the details: Delving deep into convolutional nets. In Proceedings of the British Machine Vision Conference, Nottingham, UK, 1–5 September 2014.

31. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

32. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

33. Soomro, K.; Zamir, A.; Shah, M. UCF101: A Dataset of 101 Human Actions Classes from Videos in the Wild; Technical Report; University of Central Florida: Orlando, FL, USA, 2012.

34. Kuehne, H.; Jhuang, H.; Garrote, E.; Poggio, T.; Serre, T. HMDB: A large video database for human motion recognition. In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011.

35. Wang, L.; Xiong, Y.; Wang, Z.; Qiao, Y. Towards good practices for very deep two-stream convnets. arXiv, 2015; arXiv:1507.02159.

36. Liu, D.; Hua, G.; Chen, T. A hierarchical visual model for video object summarization. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 2178–2190.

37. Gong, B.; Chao, W.L.; Grauman, K.; Sha, F. Diverse sequential subset selection for supervised video summarization. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014.

38. Laganiere, R.; Bacco, R.; Hocevar, A.; Lambert, P.; Pais, G.; Ionescu, B.E. Video summarization from spatio-temporal features. In Proceedings of the ACM TRECVid Video Summarization Workshop, Vancouver, BC, Canada, 31 October 2008.

39. Lu, Z.; Grauman, K. Story-driven summarization for egocentric video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013.

40. Qiu, Q.; Jiang, Z.; Chellappa, R. Sparse Dictionary-based Attributes for Action Recognition and Summarization. arXiv, 2013; arXiv:1308.0290.

41. Rasmussen, C.; Williams, C. Gaussian Processes for Machine Learning; The MIT Press: Cambridge, MA, USA, 2006.

42. Graves, A.; Mohamed, A.; Hinton, G. Speech recognition with deep recurrent neural networks. In Proceedings of the International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 March 2013; pp. 6645–6649.

43. Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 3104–3112.

44. Baccouche, M.; Mamalet, F.; Wolf, C.; Garcia, C.; Baskurt, A. Action classification in soccer videos with long short-term memory recurrent neural networks. In Proceedings of the International Conference on Artificial Neural Networks, Thessaloniki, Greece, 15–18 September 2010; pp. 154–159.

45. Olah, C. Understanding LSTM Networks. Available online: http://colah.github.io/posts/2015-08-Understanding-LSTMs/ (accessed on 17 September 2018).

46. Zach, C.; Pock, T.; Bischof, H. A duality based approach for realtime TV-L1 optical flow. In Proceedings of the DAGM Symposium on Pattern Recognition, Heidelberg, Germany, 12–14 September 2007; pp. 214–223.

47. Wang, H.; Schmid, C. Action recognition with improved trajectories. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 3551–3558.

48. Peng, X.; Wang, L.; Wang, W.; Qiao, Y. Bag of visual words and fusion methods for action recognition. Comput. Vis. Image Underst. 2016, 150, 109–125.

49. Wang, L.; Qiao, Y.; Tang, X. Action recognition with trajectory-pooled deep-convolutional descriptors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4305–4314.

50. Fernando, B.; Anderson, P.; Hutter, M.; Gould, S. Discriminative hierarchical rank pooling for activity recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1924–1932.

51. Kar, A.; Rai, N.; Sikka, K.; Sharma, G. AdaScan: Adaptive scan pooling in deep convolutional neural networks for human action recognition in videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

52. Wang, X.; Farhadi, A.; Gupta, A. Actions transformations. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2658–2667.

53. Zhao, S.; Liu, Y.; Han, Y.; Hong, R. Pooling the convolutional layers in deep ConvNets for video action recognition. IEEE Trans. Circuits Syst. Video Technol. 2017, 28, 1839–1849.

54. Park, E.; Han, X.; Berg, T.; Berg, A. Combining multiple sources of knowledge in deep CNNs for action recognition. In Proceedings of the IEEE Winter Conference on Applications of Computer Vision, Lake Placid, NY, USA, 7–9 March 2016; pp. 177–186.

55. Li, Y.; Li, W.; Mahadevan, V.; Vasconcelos, N. VLAD3: Encoding dynamics of deep features for action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1951–1960.

56. Qiu, Z.; Yao, T.; Mei, T. Learning spatio-temporal representation with pseudo-3D residual networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5534–5542.

57. Wu, Z.; Wang, X.; Jiang, Y.; Ye, H.; Xue, X. Modeling spatial-temporal clues in a hybrid deep learning framework for video classification. In Proceedings of the ACM Multimedia Conference, Seoul, Korea, 26–30 October 2018.

58. Wu, Z.; Jiang, Y.; Ye, H.; Wang, X.; Xue, X.; Wang, J. Fusing Multi-Stream Deep Networks for Video Classification. arXiv, 2015; arXiv:1509.06086.

59. van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

| Layers | Building Blocks | Shape of the Input | | | | | | |
|---|---|---|---|---|---|---|---|---|
| | | Res3D-8 (8f Res3D) | Res3D-4D | Res3D-4B3 (4f Res3D) | Res3D-4B4 | Res3D-16D | Res3D-16S | Res3D-16B2 (16f Res3D) |
| Conv1 | 3 × 7 × 7, 64 | 3 × 8 × 112 × 112 | 3 × 4 × 112 × 112 | 3 × 4 × 112 × 112 | 3 × 4 × 112 × 112 | 3 × 16 × 112 × 112 | 3 × 8 × 112 × 112 | 3 × 16 × 112 × 112 |
| Conv2_x | | 64 × 8 × 56 × 56 | 64 × 4 × 56 × 56 | 64 × 4 × 56 × 56 | 64 × 4 × 56 × 56 | 64 × 16 × 56 × 56 | 64 × 8 × 56 × 56 | 64 × 16 × 56 × 56 |
| Conv3_x | | 64 × 8 × 56 × 56 | 64 × 4 × 56 × 56 | 64 × 4 × 56 × 56 | 64 × 4 × 56 × 56 | 64 × 16 × 56 × 56 | 64 × 8 × 56 × 56 | 64 × 8 × 56 × 56 |
| Conv4_x | | 128 × 4 × 28 × 28 | 128 × 2 × 28 × 28 | 128 × 4 × 28 × 28 | 128 × 2 × 28 × 28 | 128 × 8 × 28 × 28 | 128 × 4 × 28 × 28 | 128 × 4 × 28 × 28 |
| Conv5_x | | 256 × 2 × 14 × 14 | 256 × 1 × 14 × 14 | 256 × 2 × 14 × 14 | 256 × 2 × 14 × 14 | 256 × 4 × 14 × 14 | 256 × 2 × 14 × 14 | 256 × 2 × 14 × 14 |
| FC | InnerProduct, Softmax | 512 × 1 × 7 × 7 | 512 × 1 × 7 × 7 | 512 × 1 × 7 × 7 | 512 × 1 × 7 × 7 | 512 × 2 × 7 × 7 | 512 × 1 × 7 × 7 | 512 × 1 × 7 × 7 |
| Clip Accuracy | -- | 82.5% | 75.2% | 80.4% | 79.1% | No Functional | 84.4% | 84.5% |
| Video Accuracy (Res5b) | -- | 87.6% | 81.3% | 85.9% | 84.6% | No Functional | 88.3% | 88.7% |
| Video Accuracy (Pool5) | -- | 87.1% | 81.1% | 85.3% | 84.2% | No Functional | 87.8% | 88.1% |

| Features Selection | Video Accuracy (Pool5) |
|---|---|
| Sparse split | 87.1% |
| Dense split | 87.3% |
| 16/32 atoms mining | 86.7%/86.9% |
| 16/32 random selection | 86.3%/86.4% |

| Methods | Video Accuracy |
|---|---|
| the max_num step | 20.4% |
| the num step | 87.2% |
| Summation of the first num steps | 87.9% |

| Methods | Video Accuracy |
|---|---|
| Single Stream | |
| RGB stream | 87.9% |
| OF stream | 84.7% |
| Two Streams | |
| Early fusion | 92.2% |
| Middle fusion | 91.5% |
| Late fusion | 92.4% |

| Methods | Video Accuracy |
|---|---|
| Single-temporal-scale | |
| 4f temporal scale | 92.1% |
| 8f temporal scale | 92.4% |
| 16f temporal scale | 92.2% |
| Multi-temporal-scale | |
| Our method | 92.9% |

| | Sparse Clips | Atoms |
|---|---|---|
| Unordered Modeling | 87.1% | 86.9% |
| Temporal Modeling | -- | 87.9% |

| Unordered Modeling | Our Temporal Modeling |
|---|---|
| Feature extraction: 8f Res3D: 2.99 ms/frame | Feature extraction: 4f Res3D: 3.96 ms/frame; 8f Res3D: 2.99 ms/frame; 16f Res3D: 1.91 ms/frame |
| Classification: 25088-dimensional feature: 367.44 ms | Atom mining: 0–32 clips: 0 ms (no atom mining needed); 33 clips: 101.21 ms; 64 clips: 282.62 ms; 96 clips: 836.72 ms; 128 clips: 1197.43 ms |
| | Temporal modeling (with classification): 0.46 ms |

| Methods | UCF101 | HMDB51 | CNN Architecture | Input of CNN |
|---|---|---|---|---|
| Handcrafted Representation Methods | | | | |
| iDT [47] | 85.9% | 57.2% | -- | -- |
| iDT+HSV [48] | 87.9% | 61.1% | -- | -- |
| Deep Learning Methods (2D CNN) | | | | |
| SlowFusion [6] | 65.4% | -- | AlexNet | RGB |
| TDD [49] | 90.3% | 63.2% | ZFNet | RGB + OF |
| Two-stream (Averaging/SVM) [7] | 86.9%/88.0% | 58.0%/59.4% | CNN-M | RGB + OF |
| Improved Two-stream [14] | 92.5% | 65.4% | VGG-16 | RGB + OF |
| RankPooling [50] | 91.9% | 65.4% | VGG-16 | RGB + OF |
| AdaScan [51] | 89.4% | 54.9% | VGG-16 | RGB + OF |
| Transformation [52] | 92.4% | 62.0% | VGG-16 | RGB + OF |
| Trajectory Pooling [53] | 92.1% | 65.6% | VGG-16, CNN-M | RGB + OF |
| Multi-Source [54] | 89.1% | 54.9% | VGG-19 | RGB + OF |
| ConvPooling [23] | 88.2% | -- | GoogLeNet | RGB + OF |
| Deep Learning Methods (3D CNN) | | | | |
| C3D [12] | 85.2% | -- | C3D | RGB |
| VLAD3 [55] | 90.5% | -- | C3D | RGB |
| LTC [20] | 91.7% | 64.8% | LTC | RGB + OF |
| Res3D [13] | 85.8% | 54.9% | Res3D | RGB |
| P3D-ResNet [56] | 88.6% | -- | P3D-ResNet | RGB |
| Deep Learning Methods (2D CNN-RNN) | | | | |
| LRCN [22] | 82.3% | -- | ZFNet | RGB + OF |
| LSTM [23] | 88.6% | -- | GoogLeNet | RGB + OF |
| Hybrid Deep [57] | 91.3% | -- | VGG-19, CNN-M | RGB + OF |
| Unsupervised [24] | 84.3% | | VGG-16 | RGB + OF |
| Multi-stream [58] | 92.6% | | VGG-19, CNN-M | RGB + OF |
| Deep Learning Methods (3D CNN-RNN) | | | | |
| Our method | 92.9% | 66.7% | Res3D | RGB + OF |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Yao, G.; Lei, T.; Liu, X.; Jiang, P. Temporal Modeling on Multi-Temporal-Scale Spatiotemporal Atoms for Action Recognition. Appl. Sci. 2018, 8, 1835. https://doi.org/10.3390/app8101835