1. Introduction

With the rapid development of optical measurement technology and three-dimensional (3-D) imaging [1,2,3], point cloud data has received substantial attention as a special information format that contains complete 3-D spatial data. The application of the 3-D image information is widespread in the fields of 3-D reconstruction for medical applications [4], 3-D object recognition, reverse engineering of mechanical components [5], virtual reality, and many others such as image processing and machine vision [6,7].

There have been many efforts to achieve point cloud registration. The classic algorithm for this purpose is the iterative closest point algorithm [8], proposed by Besl and McKay. This algorithm can be applied efficiently to simple registration problems. However, if the initial positions of the two point clouds differ significantly, it easily falls into a local optimum, which increases the likelihood of inaccurate registration. To provide improved initial parameters, an initial registration must be performed before accurate registration, using algorithms such as the sampling consistency initial registration algorithm [9]. Due to the large size and complexity of point cloud data models, describing feature points is one of the most important and decisive steps in initial registration. Various methods have been developed to obtain feature information, such as local binary patterns (LBP) [10], local reference frame (LRF) [11], signatures of histogram of orientations (SHOT) [12], and point feature histograms (PFH) [13]. These feature operators can only provide a single description of feature information, with high feature dimensions and high computational complexity.

Other efforts have been made in terms of feature matching. Scale-invariant feature transform (SIFT) [14,15,16] utilizes difference of Gaussian (DoG) images to calculate key points. It describes local features of images and obtains the corresponding 3-D feature points through mapping relationships. It is reasonably stable under changes of view and affine transformation; however, its matching speed is the main limitation. The speeded-up robust features (SURF) algorithm can be used to extract the feature points of the image [17,18,19,20] and implement image matching according to the correlation. However, this algorithm relies too heavily on the gradient direction of the pixels in the local area, which yields unsatisfactory feature matching results. The intrinsic shape signature (ISS) algorithm has been proposed for feature extraction to complete the initial registration process [21,22]; however, its wide search range for feature point pairs and low computational efficiency are its limitations. A method for interpolating point cloud models using basis functions has been proposed for establishing local correlation to reduce computational complexity [23].

Traditional methods therefore have some limitations, such as the inability to comprehensively describe feature information and the slow matching of feature point pairs. These issues limit the accuracy and speed of 3-D point cloud registration and significantly impact its application in practical fields. Based on the traditional sampling consistency initial registration and iterative closest point accurate registration, a new point cloud registration algorithm is proposed herein. The proposed algorithm combines fast point feature histograms (FPFH) feature description with greedy projection triangulation. The FPFH feature descriptor describes feature information accurately and comprehensively, and greedy projection triangulation reflects the topological connection between data points and their neighbors, establishes local optimal correlation, narrows the search scope, and eliminates unnecessary matching. The combination addresses the slow speed and low accuracy of traditional point cloud registration, which leads to improvements in optical 3-D measurement technology. The effectiveness of the proposed algorithm is experimentally verified by performing point cloud registration on a target object.

The paper consists of four sections. In Section 2, the specifications of the point cloud registration algorithm are discussed. In Section 3, experiments and analysis performed using the point cloud library (PCL) are presented. Finally, the conclusions are presented in Section 4.

2. Point Cloud Registration Algorithm

Owing to the complexity of the target, complete information can only be obtained by scanning from multiple stations in different directions. The data scanned from each direction is expressed in its own coordinate system and must then be unified into a common coordinate system. Control points and target points are set in the scan area such that multiple control points or control targets with the same name appear on the maps of adjacent areas. The adjacent scan data is thus brought into the same coordinate system through the forced attachment of control points. The specific algorithm is as follows.

First, the FPFH of the point cloud is calculated, and local correlation is established using the greedy projection triangulation network to speed up the search for the closest feature value. Because the relative position between the two point cloud models is unknown, sample consensus initial alignment is used to obtain an approximate rotation translation matrix that realizes the initial transformation. The iterative closest point algorithm then takes this matrix as the initial value and refines it to obtain a more accurate matrix. The point cloud registration chart is shown in Figure 1.

2.1. Feature Information Description

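FPFH is a simplified form of the point feature histogram (PFH), a histogram of point features that reflects the local geometric features around a given sample point. All neighboring points in the $k$-neighborhood of the sample point $p_q$ are examined and a local $uvw$ coordinate system is defined as follows:

$$u = n_s, \qquad v = u \times \frac{p_t - p_s}{\lVert p_t - p_s \rVert_2}, \qquad w = u \times v$$

The relationship between pairs of points in the neighborhood is represented by the parameters ($\alpha$, $\phi$, $\theta$) and can be obtained as follows:

$$\alpha = v \cdot n_t, \qquad \phi = u \cdot \frac{p_t - p_s}{d}, \qquad \theta = \arctan\left(w \cdot n_t,\; u \cdot n_t\right), \qquad d = \lVert p_t - p_s \rVert_2$$

where $p_s$ and $p_t$ ($s \neq t$) denote the point pairs and $n_s$ and $n_t$ denote their corresponding normals in the sample point neighborhood.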

The feature values of all point pairs are then calculated and statistically integrated into the PFH of each sample point $p_q$. Next, the $k$-neighborhood of each point is determined to form a simplified point feature histogram (SPFH), which is then integrated into the final FPFH. Hence, each sample point is uniquely represented by the FPFH feature descriptor. The feature values of FPFH can be calculated using the following equation:

$$\mathrm{FPFH}(p_q) = \mathrm{SPFH}(p_q) + \frac{1}{k} \sum_{i=1}^{k} \frac{1}{\omega_i}\, \mathrm{SPFH}(p_i)$$

where $\omega_i$ denotes the distance between the sample point $p_q$ and the neighboring point $p_i$ in the known metric space.
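The pair features and the FPFH weighting described above can be illustrated with a minimal sketch. It assumes the standard PFH/FPFH formulation (features $\alpha$, $\phi$, $\theta$ and distance weights $\omega$), unit-length normals, and plain tuples for points. The function names `pair_features` and `fpfh_from_spfh` are hypothetical and are not taken from PCL or from the authors' implementation. The vector `v` is additionally normalized here so that the local frame is orthonormal, which is an implementation choice.

```python
import math

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def norm(a):
    return math.sqrt(dot(a, a))

def pair_features(p_s, n_s, p_t, n_t):
    """Return (alpha, phi, theta, d) for one point pair (unit normals assumed)."""
    delta = sub(p_t, p_s)
    d = norm(delta)
    if d == 0.0:
        raise ValueError("the two points coincide")
    u = n_s                                      # first axis: source normal
    v = cross(u, tuple(c / d for c in delta))    # second axis
    v_len = norm(v)
    if v_len == 0.0:
        raise ValueError("source normal is parallel to the connecting line")
    v = tuple(c / v_len for c in v)              # normalized (implementation choice)
    w = cross(u, v)                              # third axis
    alpha = dot(v, n_t)
    phi = dot(u, delta) / d
    theta = math.atan2(dot(w, n_t), dot(u, n_t))
    return alpha, phi, theta, d

def fpfh_from_spfh(spfh_q, neighbor_spfhs, distances):
    """Combine the SPFH of a point with the distance-weighted SPFHs of its k neighbors."""
    k = len(neighbor_spfhs)
    result = list(spfh_q)
    for hist, omega in zip(neighbor_spfhs, distances):
        for b, value in enumerate(hist):
            result[b] += value / (omega * k)
    return result

if __name__ == "__main__":
    print(pair_features((0, 0, 0), (0, 0, 1), (1, 0, 0), (0, 0, 1)))
    print(fpfh_from_spfh([1.0, 0.0], [[0.0, 2.0], [4.0, 0.0]], [1.0, 2.0]))
```

In a full descriptor, the three angular features of every pair would be binned into histograms before the weighting step; the binning is omitted here to keep the sketch short.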

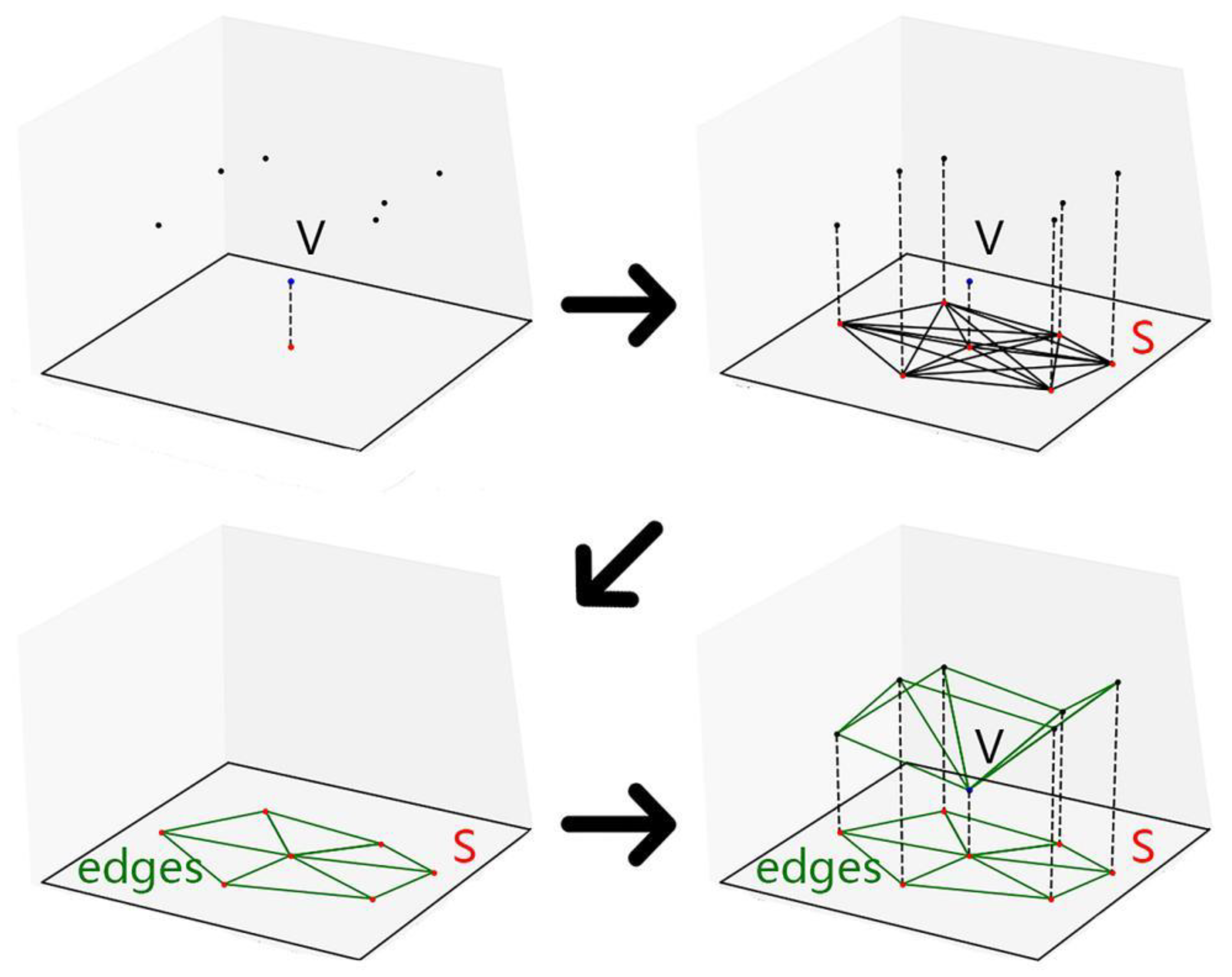

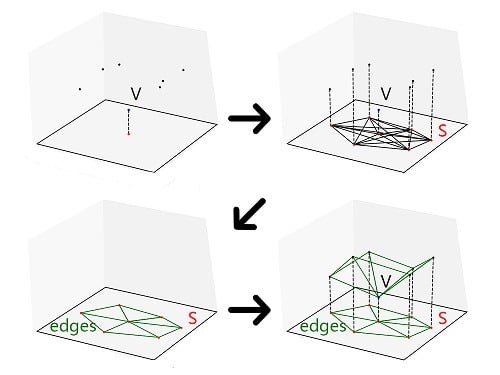

2.2. Greedy Projection Triangulation

Greedy projection triangulation bridges computer vision and computer graphics. It converts the scattered point cloud into an optimized spatial triangle mesh, thereby reflecting the topological connection relationship between data points and their neighboring points, and maintaining the global information of the point cloud data [24]. The established triangulation network reflects the topological structure of the target object that is represented by the scattered data-set. The triangulation process is shown in Figure 2. The specific steps are given as follows:

Step 1: A point and its normal vector exist on the surface of the three-dimensional object. The tangent plane perpendicular to the normal vector must first be determined.

Step 2: The point and its neighboring points are projected onto the tangent plane passing through the point, forming a projected point set. All edges between pairs of points in this set, N(N − 1)/2 for N points, are sorted in order of increasing length.

Step 3: The local projection method is used to add the shortest edge at each stage and remove the shortest edge from the memory. If the edge does not intersect any of the current triangulation edges, then it is added to the triangulation, otherwise, it is removed. When the memory is empty, the triangulation process ends.

Step 4: Triangulation is used to obtain the connection relationship of the points and return it to the three-dimensional space, which forms the space triangulation of the point and its nearby points.

Greedy projection triangulation can establish a reasonable data structure for a large number of scattered point clouds in the 3-D space. When positioning a point, the path is unique, and the tetrahedron can be located accurately and quickly, thereby narrowing the search range and eliminating unnecessary matching. This fundamentally improves the overall efficiency of matching feature points.
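The edge-selection rule in Steps 2 and 3 can be sketched in a few lines. This is a simplified illustration, not PCL's greedy projection triangulation: it assumes the points have already been projected onto the tangent plane and are given as 2-D coordinates, that no three points are collinear, and the function names are hypothetical.

```python
import math
from itertools import combinations

def orient(a, b, c):
    """Signed area test: > 0 if a, b, c turn counter-clockwise."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])

def properly_intersect(p1, p2, q1, q2):
    """True if segments p1p2 and q1q2 cross at an interior point of both."""
    d1 = orient(q1, q2, p1)
    d2 = orient(q1, q2, p2)
    d3 = orient(p1, p2, q1)
    d4 = orient(p1, p2, q2)
    return d1 * d2 < 0 and d3 * d4 < 0

def greedy_triangulation_edges(points):
    """Greedily accept the shortest remaining edge that crosses no accepted edge."""
    # Step 2: all pairwise candidate edges, sorted from shortest to longest.
    candidates = sorted(
        combinations(range(len(points)), 2),
        key=lambda e: math.dist(points[e[0]], points[e[1]]),
    )
    accepted = []
    # Step 3: take the shortest edge, keep it only if it intersects nothing so far.
    for i, j in candidates:
        crosses = any(
            properly_intersect(points[i], points[j], points[a], points[b])
            for a, b in accepted
        )
        if not crosses:
            accepted.append((i, j))
    return accepted

if __name__ == "__main__":
    rectangle = [(0.0, 0.0), (2.0, 0.0), (2.0, 1.0), (0.0, 1.0)]
    print(greedy_triangulation_edges(rectangle))
```

For the four corners of a rectangle, the four sides and one diagonal are accepted, and the second diagonal is rejected because it crosses the first. The quadratic number of candidate edges makes this sketch suitable only for small local neighborhoods, which matches the local projection described in the steps.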

2.3. Sample Consensus Initial Registration

The sample consensus initial alignment is used for initial registration. Given a source cloud $P$ and a target cloud $Q$, the specific steps are as follows:

Step 1: Based on the FPFH feature descriptor of each sample point, greedy projection triangulation is performed on the target point cloud to establish local correlation of the scattered point cloud data.

Step 2: A number of sampling points are selected in the source point cloud $P$. To ensure that the sampling points are representative, the distance between any two sampling points must be greater than the preset minimum distance threshold.

Step 3: The target point cloud $Q$ is searched for feature points whose feature values are close to those of the sample points in the source point cloud $P$. Because greedy projection triangulation has established a reasonable data structure for the target point cloud before feature matching is performed, the search directly locates the tetrahedron with a large correlation and looks for the corresponding point pairs within that local scope.

Step 4: The transformation matrix between the corresponding points is obtained. The performance of the registration is evaluated according to the total distance error function of the corresponding point transformation, $\sum_{i=1}^{n} H(l_i)$, which is expressed as follows:

$$H(l_i) = \begin{cases} \dfrac{1}{2}\, l_i^2, & \lVert l_i \rVert \le m_l \\[4pt] \dfrac{1}{2}\, m_l \left( 2 \lVert l_i \rVert - m_l \right), & \lVert l_i \rVert > m_l \end{cases}$$

in which $m_l$ is the specified value and $l_i$ is the distance difference after the corresponding point transformation. When the registration process is completed, the transformation with the smallest error among all the transformations is taken as the optimal transformation matrix for initial registration.
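The evaluation in Step 4 can be sketched as follows, assuming the error function is the Huber-type penalty commonly used with sample consensus initial alignment. The names `huber_penalty`, `total_error`, and `best_transform`, as well as the residual values in the usage example, are hypothetical and only serve as an illustration.

```python
def huber_penalty(l, m):
    """Quadratic for small residuals, linear beyond the threshold m."""
    a = abs(l)
    if a <= m:
        return 0.5 * l * l
    return 0.5 * m * (2.0 * a - m)

def total_error(residuals, m):
    """Sum of penalties over the distance differences of all corresponding pairs."""
    return sum(huber_penalty(l, m) for l in residuals)

def best_transform(candidates, m):
    """Pick the candidate (label, residuals) with the smallest total error."""
    return min(candidates, key=lambda c: total_error(c[1], m))

if __name__ == "__main__":
    # Made-up residuals for two candidate transformations, threshold m = 1.0.
    candidates = [("A", [0.5, 3.0]), ("B", [0.2, 0.4])]
    print(best_transform(candidates, 1.0)[0])  # prints "B"
```

Because the penalty grows only linearly beyond the threshold, a few badly matched pairs have a bounded influence on which candidate transformation is selected.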

2.4. Iterative Closest Point Accurate Registration

The initial transformation matrix is the key to improved matching for accurate registration. The optimized rotational translation matrix obtained by initial registration is used as the initial value for accurate registration, in which the iterative closest point algorithm produces a more accurate transformation relationship.

Based on the optimal rotation translation matrix obtained from the initial registration, the source point cloud $P$ is transformed into $P'$, which is used together with the target point cloud $Q$ as the initial set for accurate registration. For each point in the source point cloud, the nearest point in the target point cloud is determined to form an initial corresponding point pair, and the corresponding point pairs that do not satisfy the direction vector threshold are deleted. The rotation matrix $R$ and translation vector $T$ are then determined. Given that $R$ and $T$ have only six degrees of freedom while the number of points is huge, a series of new $R$ and $T$ are obtained by continuous optimization. The nearest neighbor point changes with the position of the relevant point after the transformation; therefore, the process returns to the nearest neighbor search and iterates. The objective function is constructed as follows:

$$f(R, T) = \frac{1}{N} \sum_{i=1}^{N} \left\lVert q_i - \left( R\, p_i + T \right) \right\rVert^2$$

where $p_i$ and $q_i$ denote the $N$ corresponding point pairs. When the change of the objective function is smaller than a certain value, the iterative termination condition is considered satisfied and accurate registration is complete.
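The iteration described above can be illustrated with a deliberately reduced sketch. It works in 2-D, where the least-squares rotation has a closed form, uses brute-force nearest neighbor search, and omits the rejection of pairs by the direction vector threshold. All function names and the tolerance values are assumptions; this is not the PCL implementation used in the paper.

```python
import math

def nearest(p, cloud):
    """Brute-force nearest neighbor of p in cloud."""
    return min(cloud, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)

def best_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping src[i] onto dst[i]."""
    n = len(src)
    cx_s = sum(p[0] for p in src) / n
    cy_s = sum(p[1] for p in src) / n
    cx_d = sum(q[0] for q in dst) / n
    cy_d = sum(q[1] for q in dst) / n
    s_dot = s_cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - cx_s, py - cy_s
        bx, by = qx - cx_d, qy - cy_d
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx
    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    t = (cx_d - (c * cx_s - s * cy_s), cy_d - (s * cx_s + c * cy_s))
    return theta, t

def apply_rigid(theta, t, cloud):
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + t[0], s * x + c * y + t[1]) for x, y in cloud]

def objective(src, dst):
    """Mean squared distance between corresponding points."""
    return sum((qx - px) ** 2 + (qy - py) ** 2
               for (px, py), (qx, qy) in zip(src, dst)) / len(src)

def icp_2d(source, target, tol=1e-10, max_iter=50):
    current = list(source)
    prev = float("inf")
    err = prev
    for _ in range(max_iter):
        matches = [nearest(p, target) for p in current]   # correspondence search
        theta, t = best_rigid_2d(current, matches)        # solve for R and T
        current = apply_rigid(theta, t, current)          # update the source cloud
        err = objective(current, matches)
        if abs(prev - err) < tol:                         # termination condition
            break
        prev = err
    return current, err

if __name__ == "__main__":
    target = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 6.0), (-3.0, 2.0)]
    source = apply_rigid(0.05, (0.1, -0.15), target)
    aligned, err = icp_2d(source, target)
    print(err)
```

The usage example starts from a small misalignment, which mirrors the role of the initial registration: nearest neighbor correspondences are only reliable when the two clouds are already roughly aligned.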

3. Experiment and Analysis

During the experiment, Kinect was used as a 3-D vision sensor to realize point cloud data acquisition. The original point cloud data that was collected was processed on the Geomagic Studio 12 (Geomagic Corporation, North Carolina, the United States) platform and the experiment was completed in Microsoft Visual C++ (Microsoft Corporation, Washington, the United States). The traditional algorithm takes two point clouds of the same object collected under different orientations and performs initial registration and fine registration without applying greedy projection triangulation. In the proposed algorithm, greedy projection triangulation is added to address the limitations in registration speed and accuracy, and the superiority of the proposed algorithm is analyzed by comparison with the traditional algorithm.

3.1. Point Cloud Registration Experiment for Simple Target Object

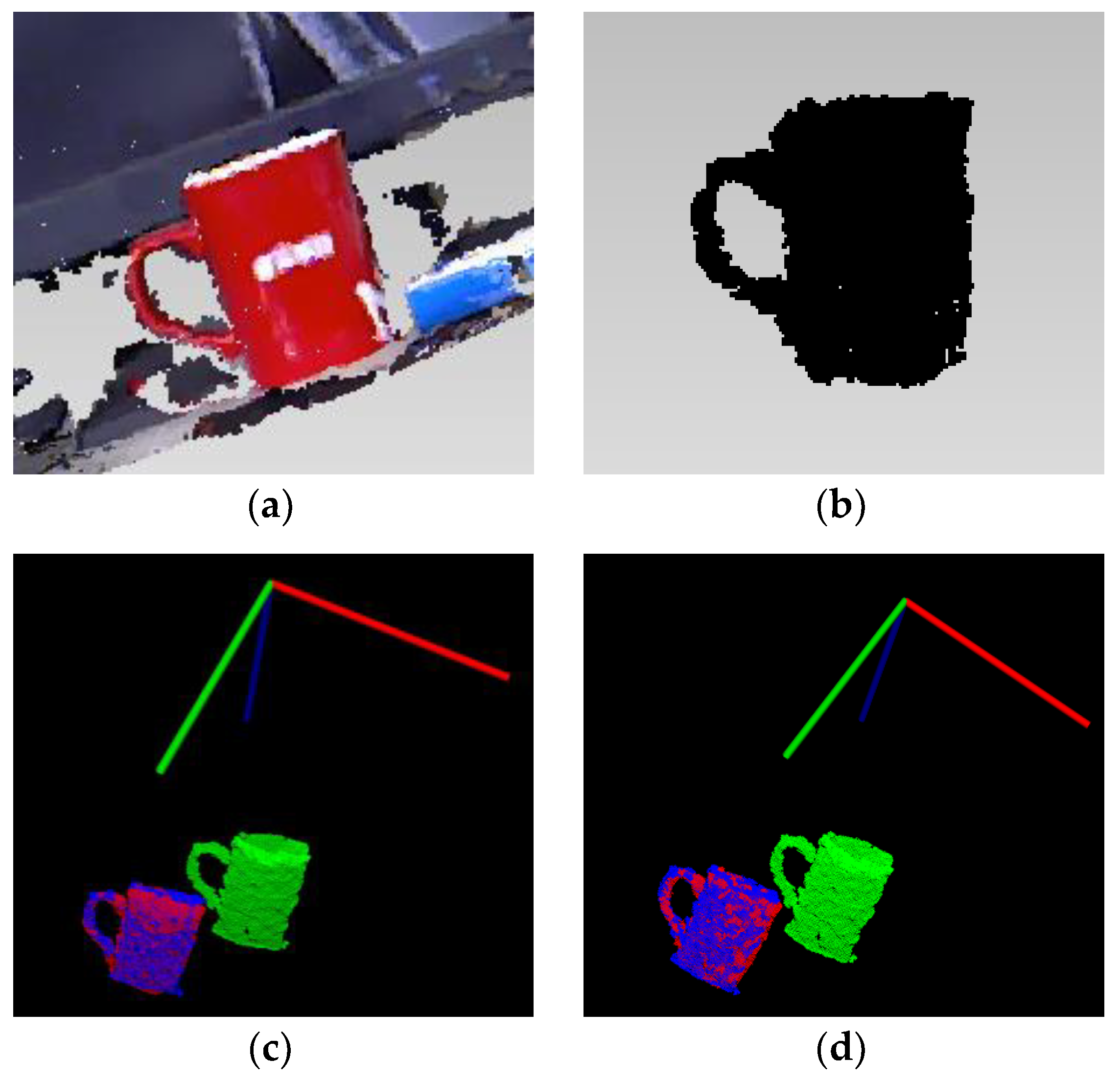

In this experiment, a cup is used as an example for registration. Figure 3a shows the original point cloud data and Figure 3b shows the cup point cloud data after removing the background. Figure 3c shows the registration result obtained by the traditional algorithm, while Figure 3d shows the registration result obtained using the registration algorithm proposed in this paper. The red regions in the point cloud represent the source point cloud data, while the green and the blue regions represent the target point cloud and the rotated point cloud data, respectively. Table 1 lists the experimental parameters. Table 2 lists the results of the target point cloud conversion obtained using the traditional point cloud registration algorithm and the results obtained using the proposed algorithm, which reflect the relative transformation relationship of the target object. Table 3 compares the registration times of different algorithms.

When analyzing the above experiments, the attitude of the source cloud is taken as the reference and the attitude of the target object is decomposed into three directions, namely X, Y, and Z. The rotation angles in the three directions and the matching error distance between the source cloud and the transformed point cloud are used as the evaluation indices. The rotation angle and the registration error distance in this experiment are shown in Table 4.

From Table 3, it can be observed that for the same point cloud sample with the same experimental parameters, the initial registration time using the traditional algorithm is 0.340 s. Because of the combination of FPFH feature description and greedy projection triangulation, the initial registration time of the proposed algorithm is reduced to 0.252 s. Table 4 shows a comparison of the two algorithms. The average error distance is 1.58 mm for the traditional algorithm and 1.49 mm for the proposed algorithm. As shown in Table 2, the two algorithms transform the point cloud with different transformation matrices, and the proposed algorithm yields the smaller average error distance.
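The figures quoted above can be turned into relative reductions with a short calculation. The helper name `relative_reduction` is hypothetical; the input values are the initial registration times (0.340 s and 0.252 s) and the average error distances (1.58 mm and 1.49 mm) reported for the cup experiment.

```python
def relative_reduction(baseline, improved):
    """Percentage by which `improved` is lower than `baseline`."""
    return (baseline - improved) / baseline * 100.0

# Initial registration time, traditional vs. proposed (seconds).
time_gain = relative_reduction(0.340, 0.252)
# Average error distance, traditional vs. proposed (millimetres).
error_gain = relative_reduction(1.58, 1.49)

print(f"time: {time_gain:.1f}%, error: {error_gain:.1f}%")
# prints "time: 25.9%, error: 5.7%"
```

For this simple target, the initial registration time therefore drops by roughly a quarter, while the average error distance drops by a few percent.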

3.2. Point Cloud Registration Experiment for Complex Target Object

In this experiment, the point cloud models of the same person in different orientations are collected and then registered using different algorithms. Figure 4a shows the results of 3-D reconstruction. Figure 4b,c show the registration results of the traditional algorithm and the proposed algorithm, respectively. As can be seen from the figure, the blue point cloud and the red point cloud overlap more closely in Figure 4c than in Figure 4b, which indicates that the proposed algorithm is more accurate than the traditional algorithm. Table 5 shows a comparison of the registration times of the different algorithms. Table 6 shows the obtained rotation angles and the registration error distances in this experiment. Eight groups of affine transformations are performed on the input point cloud data to verify the reliability of the algorithm.

Table 7 shows a comparison of the average registration error distance and the total registration time of the eight groups of experiments. The reduction in average registration error is between 27.3% and 50%, and the reduction in total registration time is about 1.1%. It can be seen that the average registration error distance of the proposed algorithm is smaller and its total registration time is shorter than those of the traditional algorithm, which verifies the reliability of the proposed algorithm.

Compared with the results obtained from the traditional algorithm, it is concluded that the proposed algorithm has higher registration accuracy and faster registration speed. Its advantages can be attributed to the following factors:

- (a) The FPFH feature descriptor describes feature information accurately and comprehensively and avoids errors in matching feature point pairs.

- (b) Greedy projection triangulation reflects the topological connection between data points and their neighbors, establishes local optimal correlation, narrows the search scope, and reduces unnecessary matching.

- (c) The combination of the FPFH feature description and the greedy projection triangulation can match similar point pairs accurately and quickly, which is the key to efficient registration.

4. Conclusions

Based on the traditional sample consensus initial alignment and iterative closest point algorithms, a new point cloud registration algorithm based on the combination of the FPFH feature description and the greedy projection triangulation was proposed herein. The 3-D point cloud data is used to improve the information regarding the two-dimensional image, and the data information is completely preserved. The FPFH comprehensively describes the local geometric feature information around the sample point. This reduces the complexity of feature extraction and improves the accuracy of feature description. Greedy projection triangulation solves the problem that the feature points have a wide search range during the registration process. Thus, the number of matching operations is reduced.

In the registration experiment for the target object, the registration speed increased by 1.1% and the registration accuracy improved by 27.3% to 50%. The results show that the optimized spatial triangular mesh established by greedy projection triangulation narrows the search range of feature points, which improves the registration speed and accuracy. The initial registration determines an approximate rotational translation relationship between the two point cloud models. Using it as the initial value, accurate registration is performed to obtain a more precise relative transformation relationship. The greedy projection triangulation optimizes the traditional registration algorithm, thereby making the registration process faster and more accurate.