Abstract

This paper presents a glove designed to assess the viability of communication between a deaf-blind user and his/her interlocutor through a vibrotactile device. This glove is part of the TactileCom system, where communication is bidirectional through a wireless link, so no contact is required between the interlocutors. Because messages are conveyed as whole concepts, responsiveness is higher than with letter-by-letter spelling. Learning a small set of concepts is simpler, and the set can be enlarged at the user’s convenience. The number of stimulated fingers, the keying frequencies and finger response were studied. Message identification rate was 97% for deaf-blind individuals and 81% for control subjects. Identification by single-finger stimulation was better than by multiple-finger stimulation. The interface proved suitable for communication with deaf-blind individuals and can also be used in other conditions, such as multilingual or noisy environments.

1. Introduction

Human-environment interaction is carried out by means of the five senses: sight, hearing, taste, smell, and touch. In some cases, these senses, understood as information channels, become overloaded, weakened, or even lost. This is an issue for individuals with deaf-blindness, because their principal information channels for interacting with their environment are noticeably weakened or, in many cases, lost. Therefore, these subjects need different channels to manage external information and a specific approach to communicate with other individuals and express themselves.

Communication is achieved through one of the unimpaired senses; in this case, touch. Haptic communication has been one of the most common strategies applied to improve deaf-blind individuals’ interaction with the environment []. The solutions include the Braille system [] and related devices such as the finger-Braille [,] or the body-Braille [], the Tadoma method [], the tactile sign language, the Malossi alphabet [], spectral displays [], tactile displays [], electro-tactile displays [], or the stimulation of the mechano-receptive systems of the skin, among others. In the case of residual sight, sign language or lipreading [] can be used. On the other hand, for some deaf individuals, acoustic nerve stimulating devices can be implanted when suitable. Commercial products for deaf-blind people adapted to the telephone are available, based on teletype writer (TTY) systems adapted with a telephone device for the deaf (TDD). Some examples are the PortaView 20 Plus TTY system from Krown manufacturing, the FSTTY (Freedom Scientific’s TTY) adapted to the deaf-blind users with the FaceToFace proprietary software, or the more recent Interpretype Deaf-Blind Communication System [,]. These systems rely on the Braille code by means of tactile displays or input devices.

In order to design a communication channel based on touch, it is necessary to know the basic physiology of the skin []. Skin is classified either as non-hairy (glabrous) or hairy. This classification is relevant for haptic stimulation because each type of skin possesses different sensory receptor systems and, thus, different perception mechanisms []. Also, it is important to consider that each mechano-receptive fibre plays a specific role in the perception of external stimuli [].

Although there are several methods [], tactile stimulation is usually based on either a moving coil or a direct current (DC) motor with an eccentric weight mounted on it []. Therefore, the stimuli generate a vibrotactile sensation that is related to the stimulation frequency and its amplitude []. The literature on vibrotactile methods describes two physiological systems, according to the fibre grouping: the Pacinian system and the non-Pacinian system. The Pacinian system involves a large receptive field that can be stimulated by higher frequencies (40–500 Hz). On the contrary, the non-Pacinian system has a small receptive field that can be stimulated by lower frequencies: 0.4–3 Hz and 3–40 Hz [,]. Vibration is highly suitable because the user tends to adapt rapidly to stationary touch stimuli [], in such a way that a repeated stimulus produces a sensation that remains in conscious awareness.

Tactile stimulation through vibration has been widely studied. These studies include the evaluation of different place and space effects on the abdomen [], torso [], upper leg [], arm [,], and palm and fingers []. In addition, other parameters were taken into account when evaluating vibrotactile sensitivity: age [], skin temperature [], menstrual cycle [], body fat [], contactor area [], several spatial parameters involved in the stimulation of mechano-receptors [,], or the influence of having yet another sense weakened or impaired, e.g., sight or hearing [].

Blind individuals are familiar with the Braille code, but deaf-blindness is often degenerative, as in those diagnosed with Usher’s syndrome, and visual loss becomes progressive. Hence, learning the Braille code may take too long, or the user may never feel comfortable with it, because of older age. On the other hand, communication by concepts is faster than letter-by-letter spelling, as in Braille. Moreover, learning a small set of concepts is simpler, and the set can always be progressively enlarged. This paper analyses the feasibility of communication by concepts for deaf-blind individuals through a vibrotactile system and introduces TactileCom, a substitution communication system based on an efferent human-machine interface (HMI) implemented as a glove. The term efferent refers to the direction of the HMI interaction: if the interface performs a stimulation function it is an efferent HMI, but if the interface performs an acquisition function it is an afferent HMI []. TactileCom delivers information to the user by means of vibrotactile stimulation. This information is coded in an abstract stimulus-meaning relationship [], similar to sign language, i.e., each stimulation pattern corresponds to a concept or idea. For instance, some information that can be relayed is “we are going to the hospital” or “you will be left alone.” This facilitates the learning of the code because deaf-blind individuals are often familiar with that kind of communication. The entire system was developed taking into account deaf-blind individuals’ preferences after discussion with potential users. Two community associations of deaf-blind individuals were consulted: ASOCYL (Association of Deaf-Blind People Castilla y León) and ASPAS (Association of Parents and Friends of the Deaf).

Although TactileCom is presented as a communication system for individuals with deaf-blindness, unimpaired subjects can also benefit from it. Its application in environments where verbal communication is difficult is straightforward, e.g., noisy workplaces where people from different countries collaborate, as in major infrastructure works or oil rigs, or for multimodal action-specific warnings []. The coded work instructions and the coordination between workers would be language independent. The wireless link to the HMI overcomes the noise limitations of the environment and even the lack of line of sight between sender and receiver.

Case Scenario

TactileCom is aimed at deaf-blind individuals, so it is specifically helpful when somebody wants to convey a message to a person with deaf-blindness. Figure 1 presents an overview of the scenario with all the parts involved. On one side (that of the deaf-blind user), the system is composed of the vibrotactile glove to receive messages and a small keyboard to send messages. On the other side, the unimpaired interlocutor sends and receives messages by means of a mobile computer, e.g., a tablet or smartphone, which implements a voice recognition system.

Figure 1.

Communication system: (i) smartphone as afferent human-machine interface (HMI) for the unimpaired interlocutor, (ii) stimulating glove as efferent HMI for the deaf-blind individual, and (iii) Bluetooth link.

In daily life, the interlocutor of a deaf-blind individual can send a message or idea by choosing one of the three selection modes included in the mobile application: an icon menu, typing, or voice recognition. The message is transmitted via the wireless link to the efferent HMI, i.e., the glove. The messages are coded in an abstract representation [] and delivered on the fingers by a stimulation pattern.

The codification strategy was suggested by potential users. The main principles behind the suggested representation are: (i) the users are already familiar with this kind of communication, and (ii) the users only need a small number of concepts to communicate. It was the latter that prompted the selection of the stimulation patterns or tactons [] analysed in the following sections. Hence, the simplest tactons were chosen as a starting point to facilitate the everyday use of the HMI. Additionally, due to the abstract meaning-stimulus relationship, this set of tactons scales better than those that use a more mnemonic meaning-stimulus relationship []. Also, this method is faster and easier to decode than those that only transmit characters.

The proposed system leads to an improvement in communication compared with the tactile sign language, which requires proximity between the two interlocutors. Moreover, the wireless link between the two interfaces represents a clear benefit in terms of portability.

2. Methods

The vibrotactile glove was developed for this research project to assess the feasibility of vibrotactile communication of concepts. This efferent HMI is aimed at improving the interaction of the deaf-blind user with both the environment and other people. The glove is part of the TactileCom system, which is explained in this section along with the assessment procedure. The assessment focuses on the efferent HMI, as it is the main and most innovative part of TactileCom.

2.1. Apparatus

The system allows the interaction between an unimpaired user A and a deaf-blind user B. User A does not need any previous knowledge of any adapted language, e.g., tactile sign language. The system design follows a methodology based on the involvement of all parties concerned []. This means that potential deaf-blind users, care-givers, and engineers collaborated closely in the discussion of trade-offs, achieving the implementation laid out here.

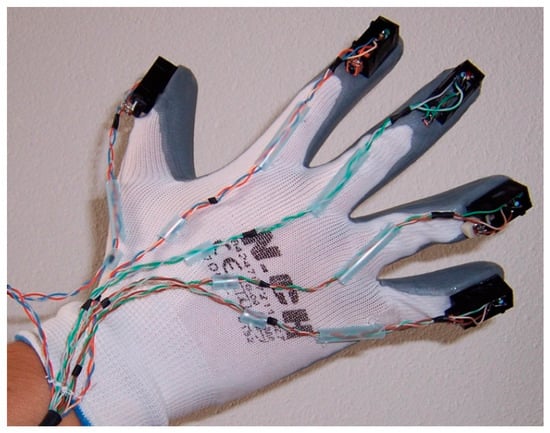

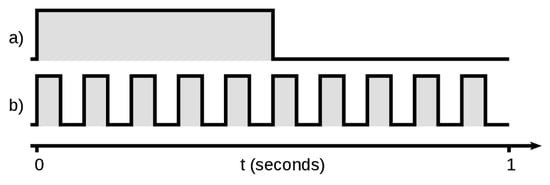

The HMI consists of a glove that stimulates the fingers with a predefined pattern, as shown in Figure 2. Each finger has a tactor attached, i.e., a DC motor with an eccentric weight mounted on it, so there are five tactors. The DC motor is a CEBEK C6070, of the type used as a vibrator in cellular phones. The motors are controlled by an Arduino Micro board through a set of drivers that deliver the required current. The Arduino board has a Bluetooth link to connect with the interlocutor. Tactor activation is coded with two on-off keying frequencies, 1 Hz and 10 Hz, that switch the tactors on and off, as shown in Figure 3. The transient time to reach full vibration is negligible at 10 Hz, and this starting transient is compensated by the stopping transient.

Figure 2.

Efferent HMI developed for the vibrotactile stimulation of the subject. It consists of a glove with one tactor per finger. Each tactor is a direct current (DC) motor with an eccentric weight placed on the dorsal side of the finger, i.e., on the fingernail area.

Figure 3.

Applied signals to the tactors: (a) the amplitude is an on-off keying at 1 Hz, and (b) the amplitude is on-off keyed at 10 Hz. These signals switch on the tactors during the high level intervals. Thus, the shading indicates that the tactor is vibrating.

Hence, a two-dimensional stimulus mapping is obtained, i.e., tactor activation (location) and tactor keying frequency (rhythm). This mapping is consistent with the amount of information that can be received, processed, and remembered taking into account the span of absolute judgment and the span of immediate memory []. As previous studies have demonstrated, users perform better with two-dimensions rather than with three or more [].

According to previous studies [,], sensitivity towards the location of a touch or toward the separation between touched locations is greater when the body site is more mobile. Finger stimulation in this case fulfils this principle, and this was the main reason for choosing the glove as the efferent HMI. Thus, the fingers are used to stimulate the subject. On the other hand, it has been shown that repeated stimulation of a specific part of the body can lead to an improvement of tactile discrimination performance for that part of the body []. This allows the device to meet the user’s wishes regarding the location of the HMI in another target body part. Hence, other possible HMI locations can be proposed, though proper training is required.

The tactor produces a stimulating burst generated by the 170 Hz tactor rotation frequency. This stimulating frequency avoids the rapid adaptation to stationary touch stimuli []. The ratio between the applied amplitude and the achieved stimulus should be taken into account, as it is related to the tactor rotation frequency. The obtained 170 Hz is close to the range of frequencies with the lowest perception threshold amplitudes and, at that frequency, there is a sensitivity peak at the fingers that reinforces the effect []. This makes the HMI vibrotactile stimuli remain in conscious awareness. Hence, this set of characteristics matches the requirements of the system.

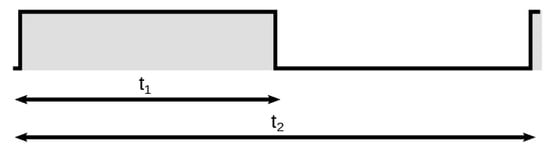

The rotation frequency described above is modulated by means of an on-off keying signal in order to achieve two keying frequencies, i.e., 1 Hz or 10 Hz, as shown in Figure 3. Thus, two possible stimuli are implemented: 170 Hz modulated at 1 Hz or 170 Hz modulated at 10 Hz. Two performance parameters should be taken into account: the time between the onset and the end of a burst, t1, and the time between the onsets of two consecutive bursts, t2 (Figure 4). As the burst duty cycle is 50%, t2 is twice t1. The 1 Hz and 10 Hz frequencies, in combination with the 50% duty cycle, meet the t1 and t2 timing constraints to achieve an optimum performance [].
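The relationship between keying frequency, duty cycle, t1 and t2 can be made concrete with a short Python sketch. It is only an illustration, not the glove firmware: the function names are hypothetical, and the envelope is assumed to start at its high level at t = 0, which the text does not state.

```python
def burst_timing(keying_hz, duty_cycle=0.5, carrier_hz=170.0):
    """Return (t1, t2, carrier_cycles) for an on-off keyed tactor.

    t2 is the onset-to-onset period, t1 the burst duration, and
    carrier_cycles the number of 170 Hz rotations inside one burst.
    """
    t2 = 1.0 / keying_hz
    t1 = duty_cycle * t2
    carrier_cycles = carrier_hz * t1
    return t1, t2, carrier_cycles


def tactor_is_on(t, keying_hz, duty_cycle=0.5):
    """True while the keying envelope is high at time t (seconds).

    Assumption: the envelope is high at the start of each period.
    """
    period = 1.0 / keying_hz
    phase = (t % period) / period
    return phase < duty_cycle


for hz in (1, 10):
    t1, t2, cycles = burst_timing(hz)
    print(f"{hz} Hz keying: t1 = {t1:.3f} s, t2 = {t2:.3f} s, "
          f"about {cycles:.1f} carrier cycles per burst")
```

With a 50% duty cycle, the 1 Hz keying gives t1 = 0.5 s and t2 = 1 s, and the 10 Hz keying gives t1 = 0.05 s and t2 = 0.1 s, so t2 is twice t1 in both cases.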

Figure 4.

Performance parameters t1 and t2, related to the keying frequency and the duty cycle; t1 is the time between the onset and the end of a burst, and t2 is the time between the onsets of two bursts.

At the other end of the communication channel, the afferent interface is based on a general purpose mobile phone or tablet. Contemporary mobile phones are like small computers, which allows for a broad set of applications. Therefore, an application that provides the user with a set of pieces of information, i.e., concepts or ideas, was developed, as shown in Figure 5. This set contains the pieces of information that somebody might want to convey to a deaf-blind subject. The unimpaired user can select these messages or ideas from an icon menu, by typing them or by voice recognition. Thus, the previous afferent interface based on a personal computer [] is substituted by a more portable one.

Figure 5.

Set of concepts to be selected on the mobile phone/tablet screen, e.g., OK, not OK, emergency, etc.

The channel between the vibrotactile HMI and the mobile phone is a Bluetooth link. Thus, only one mobile phone at a time can be linked to the efferent HMI that delivers the vibrotactile information to the deaf-blind subject.

2.2. Population

The experimental trials were performed on eighteen gender-balanced control subjects (CS): nine male and nine female. The CS were students or employees at the University of Valladolid, and their ages ranged from 18 to 58 years old. The experiments were non-invasive, performed with a glove with vibrators, and no physical or psychological risks were involved. The experiments were approved by the Research Ethics Committee of the university (CEI, Comité Ético de Investigación de la Universidad de Valladolid). The subjects were not familiar with psychophysical studies or with the use of this kind of vibrotactile device to get information from their environment. Seventeen of them were right-handed and only one was left-handed.

The study also included four deaf-blind subjects (DBS), three male and one female, aged 42 to 45 years, with Usher’s syndrome, which is the most frequent cause of deaf-blindness in developed countries []. Usher’s syndrome is an autosomal recessive disorder characterized by sensorineural hearing loss and progressive visual loss secondary to retinitis pigmentosa []. Three of the subjects were familiar with other haptic and vibrotactile devices because they had advanced deaf-blindness, whereas the other one was not familiar with these systems because he still had some residual sight. The deaf-blind population is fortunately not very large in Castilla y León (Spain). With a population of 2.5 million, there are 26 individuals affiliated with the deaf-blind associations and their availability is very limited. Therefore, our sample of four represents about 15% of the individuals with deaf-blindness in this area.

2.3. Procedure

Twenty stimulation patterns or tactons were implemented on the HMI for the assessment. These patterns include the stimulation of one or more fingers with any combination of the two possible keying frequencies, as shown in Table 1. The number of patterns can be easily increased according to the user’s needs, with a maximum of 242. All of these tactons have a one-second duration, as shown in Figure 3. In the experiment, each tacton is repeated four times in succession to achieve a four-second duration. The repetitions can be reduced as training improves the user’s performance, understood as correct identification of the perceived messages.
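The maximum of 242 patterns is consistent with each of the five tactors being either off, keyed at 1 Hz, or keyed at 10 Hz, with the all-off pattern excluded (3^5 − 1 = 242). The following Python sketch enumerates the patterns under that reading; the finger labels and the dictionary representation are illustrative assumptions, not the encoding used in the glove.

```python
from itertools import product

# Illustrative finger labels; the ordering is an assumption.
FINGERS = ("thumb", "index", "middle", "ring", "little")
OFF = 0  # tactor not activated


def all_tactons(keying_hz=(1, 10)):
    """Enumerate every pattern with at least one active tactor.

    Each finger is either OFF or keyed at one of the given frequencies.
    """
    states = (OFF,) + tuple(keying_hz)
    patterns = []
    for combo in product(states, repeat=len(FINGERS)):
        if any(state != OFF for state in combo):  # skip the all-off case
            patterns.append(dict(zip(FINGERS, combo)))
    return patterns


tactons = all_tactons()
single_finger = [p for p in tactons
                 if sum(state != OFF for state in p.values()) == 1]
print(len(tactons))        # 242
print(len(single_finger))  # 10
```

The second count, ten single-finger patterns, corresponds to the two keying frequencies applied to each of the five fingers.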

Table 1.

List of tactons implemented on the efferent HMI.

This pattern map was chosen after several meetings with the deaf-blind people and their caregivers. A basic set of messages was proposed for the daily activities; twenty messages was the number agreed upon. To relay these messages, the following design procedure was adopted: (i) stimuli in space—during the meetings, the hand was chosen because of its sensitivity and as a known instrument for them to communicate; (ii) stimuli in time—vibration frequency (170 Hz), adequate for a good perception, and on-off frequencies (1 or 10 Hz), that meet the timing constraints for optimum performance, as explained; and (iii) complexity of the stimuli—deaf-blind people and caregivers reported that the one-finger stimulus was the simplest, simultaneous stimulus of all the fingers is also very simple, and the next complexity level was the combinations of two and three fingers, to be studied in this work.

The same HMI glove was employed with all subjects. Due to the glove’s elastic material, it fitted most of the hands under test, although in some cases when the glove was not tight enough, an elastic bandage was also applied.

The table displays the location and rhythm of the stimulation patterns.

2.4. Initial Training

Before starting the actual assessment with the subjects in the experiment, both CS and DBS went through training to understand how the system worked. The training was both passive (i.e., explanation about the system operation) and active (i.e., wearing the HMI and making a demonstration to get the feeling of the two keying frequencies). There was also some visual feedback, as each glove has a light that blinks on the finger at the same pace as the tacton. The contents of the explanation were the same for both the CS and the DBS; however, the explanation was oral for the CS and then translated into tactile sign language for the DBS by a language assistant.

2.5. Trials with CS and DBS

After the initial training, the assessment began. The subjects were asked to guess the stimulation pattern, i.e., which tactors were turned on and at which keying frequencies. The subjects were told to guess the tacton as quickly and accurately as possible within three attempts. The vibrotactile glove is a communication system, so we expect a high success rate on the first attempt. However, as in oral communication, occasional repetitions are permitted to clarify the message. Hence, we allowed two more attempts in the procedure. For this task, eight patterns were randomly selected for the experiment, marked as * in Table 1. If the stimulation pattern was correctly identified, the next pattern was presented. Otherwise, if the four-second tacton was wrongly guessed, it was presented again, up to a total of three attempts. The repetition allowed the subject to overcome communication failures when the guessing speed was not a limiting factor. Hence, we counted a pattern as correctly guessed if any of the three attempts was correct. If the pattern was not accurately recognized in those three attempts, it was definitively marked as a wrong identification. Additionally, if the pattern was wrongly recognized by the subject under test, feedback was provided to the user as an aid. The feedback consisted of a new stimulation with the unrecognized pattern after the user was informed about the active tactors and their keying frequencies. This clue was selected because we detected that the main difficulty was guessing which fingers were involved. The subjects were informed in the same way as in the initial training.
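The three-attempt scoring rule can be summarised in a few lines of Python. This is a minimal sketch of the rule as described, not the software used in the trials: `respond` is a hypothetical callback standing in for the subject's answer, tactons are represented as arbitrary comparable values, and the feedback step is not modelled.

```python
MAX_ATTEMPTS = 3


def run_trial(tacton, respond, max_attempts=MAX_ATTEMPTS):
    """Present a tacton up to max_attempts times.

    Returns the 1-based attempt on which it was first identified,
    or None if it was never identified (a wrong identification).
    """
    for attempt in range(1, max_attempts + 1):
        if respond(tacton, attempt) == tacton:
            return attempt
    return None


def success_rate(outcomes):
    """Fraction of tactons identified within the allowed attempts."""
    return sum(outcome is not None for outcome in outcomes) / len(outcomes)


def hesitant_subject(tacton, attempt):
    # Hypothetical participant: wrong on the first attempt, right afterwards.
    return tacton if attempt >= 2 else None


outcomes = [
    run_trial(("index", 10), hesitant_subject),
    run_trial(("thumb", 1), lambda tacton, attempt: tacton),
    run_trial(("ring", 1), lambda tacton, attempt: None),
]
print(outcomes, success_rate(outcomes))
```

Keeping the attempt number, rather than a plain pass/fail flag, makes it possible to report first-attempt and overall success rates separately.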

These tests took place in a quiet and favourable environment (i.e., an isolated laboratory) in order to eliminate potentially distracting ambient sounds. The CS were blindfolded to avoid visual distractions. Their gloved right hand remained in the air without any physical contact with any surface or other objects. This avoided the bias that might have been produced in cases of contact with any other surface due to vibration propagation [].

2.6. Instruction Procedure for the DBS

After performing the assessment test, the DBS were actively instructed on all possible stimulations, as displayed in Table 1, and on the concepts or ideas associated with each stimulation. As DBS are familiar with concept relay, such as tactile sign language, this apprenticeship adds a new communication skill. This means that the subjects do not need to learn another code in order to understand concepts or expressions that they did not previously know. This is what happens with the Braille code: the user has to learn letters, words, and a grammar to communicate. We found that deaf-blind people are often not receptive to learning this new code. Additionally, it has been shown that this kind of abstract representation with messages scales better [] and makes communication faster and more customizable.

2.7. Extended Trials with the DBS

Afterward, the DBS were requested to guess the whole set of patterns shown in Table 1. The task procedure was repeated in the same manner, i.e., with three possible attempts, in the same environment and without any physical contact of the HMI hand with any other object.

3. Results

In this section, the performance of both groups is compared, starting with the trials after the initial training for understanding the system operation. The results achieved by the DBS in the extended trials are analysed according to the different stimulation parameters, i.e., the number of tactors, the keying frequencies or the fingers involved. The performance is analysed in terms of the success rate. For the statistical analysis, normality is assumed in the populations. A normality test was not considered because of the low number of subjects and the high number of ties achieved during the trials, i.e., repeated values in the sample. With these assumptions, the populations are compared with the t-test, the F-test or the ANOVA test when suitable. Finally, a discussion of the results is presented.

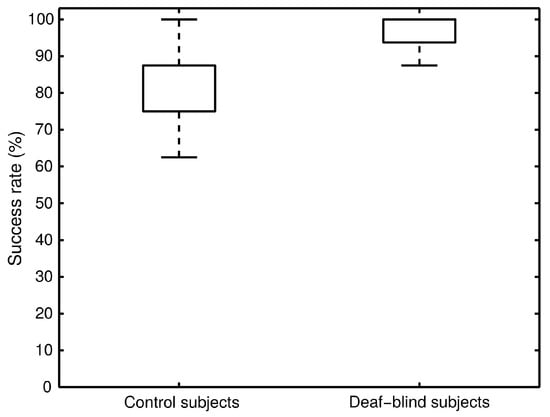

The results of the trials performed on both CS and DBS show a significant difference, as shown in Figure 6. The figure presents a boxplot with the overall success rate of the eight stimulation patterns under test. It takes into account the three recognition attempts for each pattern. Note that there is no median line inside the box because it coincides with the 25th percentile for the CS and with the 75th percentile for the DBS.

Figure 6.

Success rates of the performed tests on control subjects (CS) and deaf-blind subjects (DBS). The plot takes into account the three recognition attempts for each tacton. A better performance by the DBS is noticeable. The boxplots display the success rate for 18 CS and 4 DBS.

The performance was analysed with an unpaired t-test, which shows a significant difference between the CS and the DBS (p < 0.01). A large difference was observed in the mean success rates: 81% for CS and 97% for DBS. The t-test was applied to the two data sets assuming that they have the same variance, as the result of the F-test showed that this assumption was reasonable (F(17,3) = 3.399, p = 0.171).
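For readers who want to reproduce this kind of comparison, the sketch below computes the variance-ratio F statistic and the equal-variance (pooled) t statistic with the Python standard library. The function names are hypothetical and the two lists are toy numbers, not the study data, since the per-subject scores are not listed here. The p-values are omitted because they require the F and t distribution functions, which a statistics package would supply.

```python
from math import sqrt
from statistics import mean, variance


def variance_ratio(a, b):
    """F statistic var(a) / var(b) and its degrees of freedom."""
    return variance(a) / variance(b), (len(a) - 1, len(b) - 1)


def pooled_t(a, b):
    """Unpaired t statistic assuming equal variances, and its degrees of freedom."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    t = (mean(a) - mean(b)) / sqrt(pooled_var * (1 / na + 1 / nb))
    return t, na + nb - 2


# Toy samples for illustration only (NOT the success rates of the study).
group_a = [1.0, 2.0, 3.0, 4.0]
group_b = [2.0, 4.0, 6.0, 8.0]

f_stat, f_df = variance_ratio(group_a, group_b)
t_stat, t_df = pooled_t(group_a, group_b)
print(f"F{f_df} = {f_stat:.3f}")
print(f"t({t_df}) = {t_stat:.3f}")
```

With 18 CS and 4 DBS, the same functions give (17, 3) degrees of freedom for the F-test, matching the F(17,3) reported above, and 20 degrees of freedom for the t-test.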

After the comparative analysis between population samples, the extended trials performed on the DBS were analysed. Table 2 shows the raw scores achieved during these trials. Their tactile pattern recognition on the first attempt improved from 63% to 71% after the instruction. However, the overall average success rate over the three attempts remained at 97%, while the worst score improved from 87% to 90%, and the statistical mode was 100%. Thus, for the practical purposes of the HMI, the twenty possible patterns can be recognized with high effectiveness.

Table 2.

Raw scores achieved by the DBS in the extended trials.

Table 2 displays the four possible cases when guessing each stimulation pattern within the three attempts. Tactons are defined in Table 1. The influence of having either one or more active tactors at the same time is clearly reflected in the pattern recognition performance. This outcome is already apparent in the raw data, because the patterns applied to a single tactor show a success rate of 100%. In contrast, a lower success rate is achieved with those patterns that activate more than one tactor. The paired t-test applied to these experimental data (one active tactor vs. multiple active tactors) supports this impression (p = 0.04). In fact, it was noticed that the patterns that simultaneously stimulate the middle finger and the ring finger confuse the subjects, so further analysis should be done.

The two keying frequencies chosen, 1 Hz and 10 Hz, were used either in isolation or combined. As explained previously, the chosen values present great vibrotactile acuity on the skin. Moreover, the 170 Hz burst stimulus contributes to the performance because of the high vibrotactile sensitivity of the fingers at that frequency []. Thus, the results should be consistent with these statements. Indeed, the one-way ANOVA test (factor: frequencies) shows no significant difference in recognition among the isolated keying frequencies, 1 Hz and 10 Hz, and their combination (p = 0.730).

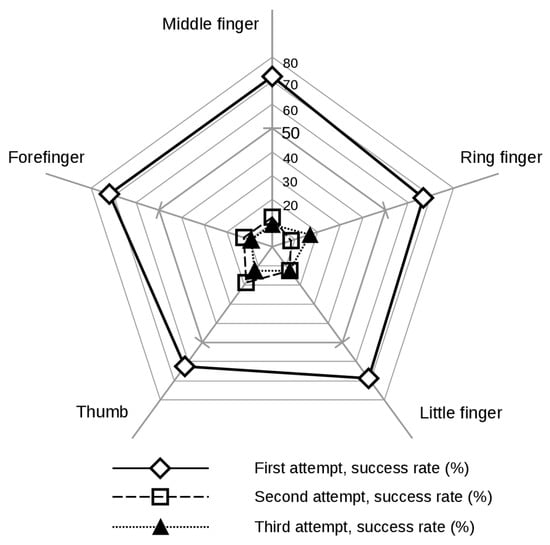

The patterns listed in Table 1 are applied evenly to all five fingers of the right hand. The one-way ANOVA test (factor: fingers) shows that all the fingers perform in the same way or, at least, that performance was qualitatively similar between fingers (p = 0.938). Figure 7 displays the success rate of each of the five fingers for each of the three recognition attempts.

Figure 7.

Finger success rate for each of the three recognition attempts carried out by the DBS. In the polar diagram, the similarity in performance for each of the fingers is noticeable.

Figure 7 includes data from all the stimulation patterns, i.e., the ones that are applied to a single tactor and those that activate more than one. A wrong guess in multiple-finger stimulation is counted in Figure 7 as an incorrect detection for all the stimulated fingers. The figure represents four disjoint events for each finger: guess on the first attempt, guess on the second attempt, guess on the third attempt, and no guess on any attempt. Therefore, the rates associated with these events sum to 100%. The success rate for each of the three attempts shows that all fingers perform in a similar way. Subjects guess the finger nearly 70% of the time on the first attempt; a second attempt is required about 15% of the time; and a third attempt is required about 10% of the time. These numbers are consistent with the overall success rate of 97%. Notice that, in these numbers, a guess on the first attempt excludes the other attempts, and a guess on the second attempt excludes the third one. The statistical analysis reinforces the fingers’ similar performance mentioned above (p = 0.999).
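The four disjoint events can be tallied as in the following Python sketch. The outcome list is illustrative only (it is not the recorded data) and the function name is hypothetical. Each outcome is the attempt on which the finger was guessed, or `None` if it was not guessed on any attempt.

```python
from collections import Counter


def attempt_rates(outcomes, max_attempts=3):
    """Rates of the disjoint events: guess on attempt 1..max_attempts, or miss."""
    counts = Counter(outcomes)
    n = len(outcomes)
    rates = {k: counts.get(k, 0) / n for k in range(1, max_attempts + 1)}
    rates["miss"] = counts.get(None, 0) / n
    return rates


# Illustrative outcomes, NOT the study data: 14 first-attempt guesses,
# 3 second-attempt guesses, 2 third-attempt guesses and 1 miss.
outcomes = [1] * 14 + [2] * 3 + [3] * 2 + [None]

rates = attempt_rates(outcomes)
overall = 1.0 - rates["miss"]
print(rates)
print(f"overall success rate: {overall:.2f}")
```

Because the events are mutually exclusive, the overall success rate is the sum of the three per-attempt rates, or equivalently one minus the miss rate.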

4. Discussion

In order to represent a wide range of the population, the CS were selected on a gender-balanced basis, with ages from 18 to 58 years old. The upper age was limited to avoid the decrease in tactile sensitivity that comes with age []. Judging by their mean age, the CS are younger adults, so a better sensitivity is expected from them. This makes the comparative test more challenging for the DBS.

Compared to the CS, the DBS had a better performance. This was not totally unexpected, as the scientific literature reports that people with some kind of sensory impairment often achieve better acuity through another sense (for example, touch) [,]. Therefore, future studies on vibrotactile recognition can be carried out by focusing on the performance and the learning of unimpaired subjects. This is because most DBS develop better touch sensitivity than unimpaired people, so it is convenient to establish the validity of the vibrotactile analyses with unimpaired individuals. This will allow us to increase the population sample, conduct face-to-face tests, and minimize communication issues. However, the tests also need to be performed on DBS because we cannot disregard their feedback, as they are the potential users. They should also participate in the system development sequence [].

Pattern recognition improves with the activation of a single tactor. Therefore, the codification of stimuli with a single tactor can be prioritized so as to associate them with the most important concepts or ideas. Thus, two keying frequencies applied to the five fingers give 10 stimuli combinations. This number of combinations is deemed to be good enough to communicate the most important everyday concepts for the interaction between DBS and their care-givers. This is a significant advance for those DBS with no knowledge of adapted language or for those who already handle one but do not require a large number of concepts.

The number of messages coded with a single tactor can be increased by adding a third keying frequency. This new frequency could be either (a) in the range from 1 Hz to 10 Hz that meets the aforementioned timing constraints [], such as 5 Hz, or (b) a 0 Hz keying, i.e., a 170 Hz stimulus burst with no interruption. These keying frequencies can be adapted to each user by choosing a custom set aimed at achieving the best performance. Hence, the new frequency expands the stimulation pattern set and maintains the two-dimensional mapping, i.e., tactor activation and tactor keying frequency. This expanded map fits within the span of absolute judgment and the span of immediate memory []. Thus, the inclusion of a new dimension that could decrease the users’ performance is avoided [].

When the stimulation pattern set is expanded by increasing the keying frequencies, those frequencies that meet the timing constraints improve recognition. The experiments show a similar detection performance for both 1 Hz and 10 Hz and their combination. However, the increase in the keying frequency can introduce the skin effect. This effect integrates specific spatio-temporal stimuli, so spatial and temporal masking can be produced []. Thus, timing constraints should be met in order to achieve a balanced recognition performance among the keying frequencies as well as a high success rate.

High recognition efficiency can be achieved by stimulating the fingers. According to classical studies, as explained in the Introduction, the fingers are among the most sensitive parts of the body. The developed system takes advantage of this sensitivity by means of vibrotactile stimulation of the five fingers of the right hand. This approach has a drawback: although the HMI glove allows the user to perform all or almost all regular daily tasks, it is not comfortable for continuous use. This disadvantage was reported by both CS and DBS. Changing the placement of the efferent HMI was considered; however, other locations on the body were not as suitable as the fingers. Moreover, the deaf-blind group reported that they did not want to look different from other people, so the HMI would need to be covered by their clothing. Following the literature guidelines, our research experience and the indications of potential users, our research group is already working on a vibrotactile belt. Even though there is a loss of sensitivity, spatial separability increases, so the belt achieves a high degree of recognition [], the stimuli can be organized to meet human cognitive restrictions [], and, so far, our preliminary tests show very satisfactory results.

The results obtained show that the system is also suitable for unimpaired individuals. Their success rate, although somewhat lower than that of the DBS, is high enough to consider the system efficient. This supports TactileCom as a communication alternative for noisy and/or multilingual working environments.

5. Conclusions

Communication of concepts through a vibrotactile device is feasible. Responsiveness is much higher with this device than with letter-by-letter communication. A bidirectional communication system named TactileCom was developed for this purpose. The system is aimed at helping the deaf-blind population. However, other communication applications are possible, e.g., in noisy or multilingual environments. The analysed HMI is a vibrotactile glove that was tested with deaf-blind and unimpaired subjects. The achieved recognition performance is high enough to consider it a valid system: 97% for the DBS and 81% for the CS. These results were achieved without previous training with the HMI. The ability to discriminate vibrotactile patterns was more acute in the deaf-blind individuals than in the unimpaired subjects.

A dedicated experiment focused on DBS was performed in a second stage. The success rate improved from 63% to 71% on the first attempt. These results show that adaptability to the HMI is fast and learning its functioning is easy. The response of the DBS was also analysed in terms of pattern complexity, the two keying frequencies, and the sensitivity of each finger. The outcome showed a 100% success rate when the stimulus was applied to a single tactor and a lower performance when multiple tactors were activated.

As the device is aimed primarily at aiding the deaf-blind population, the development procedure needs to take into account opinions and suggestions by these potential users. The subjects of the trials expressed their satisfaction with the general HMI operation and suggested improvements related to practical issues concerning everyday life activities and aesthetics. Their feedback led us to the design of new vibrotactile HMIs to wear under the clothing.

For the potential user, TactileCom is a substitution communication system that can codify concepts or ideas. This allows faster and more efficient communication than character-by-character coding. In addition, tactor usage is more efficient than in tactile displays used to present a figure. Finally, the person who communicates with the deaf-blind individual does not require previous knowledge of the coding language, as opposed to tactile sign language.

Acknowledgments

This research was partially supported by the Regional Ministry of Education from Castilla y León (Spain) and by the European Social Fund: ORDEN EDU/1867/2009.

Author Contributions

Albano Carrera and Alonso Alonso designed the system and the experiments. Albano Carrera implemented the system, performed the experiments, analysed the data and wrote the article. Alonso Alonso contributed to the tests with deaf-blind people. Ramón de la Rosa reviewed the paper and contributed to the writing and to the data analysis. Evaristo J. Abril contributed to the analysis of the experiments and the materials.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Reed, C.M.; Durlach, N.I.; Braida, L.D. Research on tactile communication of speech: A review. ASHA Monogr. 1982, 20, 1–23.

- Braille, L. Method of Writing Words, Music, and Plain Songs by Means of Dots, for Use by the Blind and Arranged for Them; Institut National des Jeunes Aveugles: Paris, France, 1829.

- Nicolau, H.; Guerreiro, J.; Guerreiro, T.; Carriço, L. UbiBraille. In Proceedings of the 15th International ACM SIGACCESS Conference on Computers and Accessibility—ASSETS ’13, Bellevue, WA, USA, 21–23 October 2013; ACM Press: New York, NY, USA, 2013; pp. 1–8.

- Amemiya, T.; Yamashita, J.; Hirota, K.; Hirose, M. Virtual Leading Blocks for the Deaf-Blind: A Real-Time Way-Finder by Verbal-Nonverbal Hybrid Interface and High-Density RFID Tag Space. In Proceedings of the IEEE Virtual Reality Conference VR 2004, Chicago, IL, USA, 27–31 March 2004; IEEE: New York, NY, USA, 2004; pp. 165–287.

- Ohtsuka, S.; Sasaki, N.; Hasegawa, S.; Harakawa, T. Body-Braille System for Disabled People. In Computers Helping People with Special Needs; Springer: Berlin, Germany, 2008; pp. 682–685.

- Reed, C.M. The implications of the Tadoma method of speechreading for spoken language processing. In Proceedings of the Fourth International Conference on Spoken Language Processing (ICSLP ’96), Philadelphia, PA, USA, 3–6 October 1996; IEEE: New York, NY, USA, 1996; pp. 1489–1492.

- Caporusso, N. A wearable Malossi alphabet interface for deafblind people. In Proceedings of the Working Conference on Advanced Visual Interfaces, Palermo, Italy, 28–30 May 2008; ACM Press: New York, NY, USA, 2008; pp. 445–448.

- Myles, K.; Binseel, M.S. The Tactile Modality: A Review of Tactile Sensitivity and Human Tactile Interfaces; Army Research Laboratory: Aberdeen, MD, USA, 2007; Available online: http://www.arl.army.mil/arlreports/2007/ARL-TR-4115.pdf (accessed on 3 March 2017).

- Kajimoto, H.; Kanno, Y.; Tachi, S. Forehead electro-tactile display for vision substitution. In Proceedings of the EuroHaptics 2006, Paris, France, 3–6 July 2006; pp. 75–79. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.497.8483&rep=rep1&type=pdf (accessed on 3 March 2017).

- Sherrick, C.E. Basic and applied research on tactile aids for deaf people: Progress and prospects. J. Acoust. Soc. Am. 1984, 75, 1325–1342.

- Taylor, A.; Booth, S.; Tindell, M. Deaf-Blind Communication Devices. Braille Monit. 2006, 49. Available online: https://nfb.org/Images/nfb/Publications/bm/bm06/bm0609/bm060913.htm (accessed on 3 March 2017).

- Deaf-Blind Solutions. Freedomscientific, St. Petersburg, FL, USA. Available online: http://www.freedomscientific.com/Products/Blindness/Interpretype (accessed on 3 March 2017).

- Cholewiak, R.W.; Collins, A. Vibrotactile pattern discrimination and communality at several body sites. Percept. Psychophys. 1995, 57, 724–737.

- Pasquero, J. Survey on Communication through Touch. Montreal, Canada, 2006. Available online: http://www.cim.mcgill.ca/~haptic/pub/JP-CIM-TR-06.pdf (accessed on 3 March 2017).

- Van Erp, J.B.F. Guidelines for the Use of Vibro-Tactile Displays in Human Computer Interaction. In Proceedings of the Eurohaptics, Edinburgh, UK, 8–10 July 2002; pp. 18–22. Available online: http://www.eurohaptics.vision.ee.ethz.ch/2002/vanerp.pdf (accessed on 3 March 2017).

- Azadi, M.; Jones, L. Evaluating Vibrotactile Dimensions for the Design of Tactons. IEEE Trans. Haptics 2014, 7, 14–23.

- Sherrick, C.E. The localization of low- and high-frequency vibrotactile stimuli. J. Acoust. Soc. Am. 1990, 88, 169–179.

- Nafe, J.P.; Wagoner, K.S. The Nature of Pressure Adaptation. J. Gen. Psychol. 1941, 25, 323–351.

- Cholewiak, R.W.; Brill, J.C.; Schwab, A. Vibrotactile localization on the abdomen: Effects of place and space. Percept. Psychophys. 2004, 66, 970–987.

- Van Erp, J.B.F. Vibrotactile Spatial Acuity on the Torso: Effects of Location and Timing Parameters. In Proceedings of the First Joint Eurohaptics Conference and Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems, Pisa, Italy, 18–20 March 2005; IEEE: New York, NY, USA, 2005; pp. 80–85.

- Wentink, E.C.; Mulder, A.; Rietman, J.S.; Veltink, P.H. Vibrotactile stimulation of the upper leg: Effects of location, stimulation method and habituation. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Boston, MA, USA, 30 August–3 September 2011; IEEE: New York, NY, USA, 2011; pp. 1668–1671.

- Cholewiak, R.W.; Collins, A. Vibrotactile localization on the arm: Effects of place, space, and age. Percept. Psychophys. 2003, 65, 1058–1077.

- Piateski, E.; Jones, L. Vibrotactile Pattern Recognition on the Arm and Torso. In Proceedings of the First Joint Eurohaptics Conference and Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems, Pisa, Italy, 18–20 March 2005; IEEE: New York, NY, USA, 2005; pp. 90–95.

- Verrillo, R.T. Age Related Changes in the Sensitivity to Vibration. J. Gerontol. 1980, 35, 185–193.

- Green, B.G. The effect of skin temperature on vibrotactile sensitivity. Percept. Psychophys. 1977, 21, 243–248.

- Gescheider, G.A.; Verrillo, R.T.; Mccann, J.T.; Aldrich, E.M. Effects of the menstrual cycle on vibrotactile sensitivity. Percept. Psychophys. 1984, 36, 586–592.

- Bikah, M.; Hallbeck, M.S.; Flowers, J.H. Supracutaneous vibrotactile perception threshold at various non-glabrous body loci. Ergonomics 2008, 51, 920–934.

- Verrillo, R.T. Effect of Contactor Area on the Vibrotactile Threshold. J. Acoust. Soc. Am. 1963, 35, 1962.

- Verrillo, R.T. Effect of spatial parameters on the vibrotactile threshold. J. Exp. Psychol. 1966, 71, 570.

- Sakurai, T.; Konyo, M.; Tadokoro, S. Enhancement of vibrotactile sensitivity: Effects of stationary boundary contacts. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; IEEE: New York, NY, USA, 2011; pp. 3494–3500.

- Barbacena, I.L.; Lima, A.C.O.; Barros, A.T.; Freire, R.C.S.; Pereira, J.R. Comparative Analysis of Tactile Sensitivity between Blind, Deaf and Unimpaired People. In Proceedings of the IEEE International Workshop on Medical Measurements and Applications, Ottawa, ON, Canada, 9–10 May 2008; IEEE: New York, NY, USA, 2008; pp. 19–24.

- Carrera, A. Innovaciones en Sistemas e Interfaces Humano-Máquina: Aplicación a las Tecnologías de Rehabilitación (Innovations in Systems and Human-Machine Interfaces: Application to Rehabilitation Technologies); ProQuest Dissertations Publishing: Ann Arbor, MI, USA, 2013; Available online: http://search.proquest.com/docview/1556107255?accountid=14778 (accessed on 3 March 2017).

- MacLean, K.E. Foundations of Transparency in Tactile Information Design. IEEE Trans. Haptics 2008, 1, 84–95.

- Schmuntzsch, U.; Sturm, C.; Roetting, M. The warning glove—Development and evaluation of a multimodal action-specific warning prototype. Appl. Ergon. 2014, 45, 1297–1305.

- Brewster, S.A.; Brown, L.M. Tactons: Structured Tactile Messages for Non-Visual Information Display. In Proceedings of the Fifth Australasian User Interface Conference, Dunedin, New Zealand, 18–22 January 2004; pp. 15–23. Available online: http://crpit.com/confpapers/CRPITV28Brewster.pdf (accessed on 3 March 2017).

- Carrera, A.; Alonso, A.; de la Rosa, R. Sistema de Sustitución Sensorial para Comunicación en Sordo-Ciegos Basado en Servicio Web (Sensory-substitution system for deaf-blind communication based on web service). In Proceedings of the V International Congress on Design, Research Networks and Technology for All (DRT4ALL), Madrid, Spain, 23–25 September 2013; Fundación ONCE. pp. 21–28. Available online: http://www.discapnet.es/Castellano/areastematicas/tecnologia/DRT4ALL/EN/DRT4ALL1013/Documents/Libro_actas_DRT4ALL_2013.pdf (accessed on 3 March 2017).

- Miller, G.A. The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychol. Rev. 1956, 63, 81–97.

- Brown, L.M.; Brewster, S.A.; Purchase, H.C. Multidimensional tactons for non-visual information presentation in mobile devices. In Proceedings of the 8th Conference on Human-Computer interaction with Mobile Devices and Services, New York, NY, USA, 12–15 September 2006; ACM Press: New York, NY, USA, 2006; p. 231.

- Vierordt, K. Dependence of the Development of the Skin’s Spatial Sense on the Flexibility of Parts of the Body. Available online: http://reader.digitale-sammlungen.de/de/fs1/object/display/bsb11037704_00007.html (accessed on 3 March 2017). (In German).

- Weber, E.H. The Sense of Touch; Academic Press: London, UK, 1978.

- Gallace, A.; Tan, H.Z.; Spence, C. The Body Surface as a Communication System: The State of the Art after 50 Years. Presence Teleoperators Virtual Environ. 2007, 16, 655–676.

- Wilska, A. On the Vibrational Sensitivity in Different Regions of the Body Surface. Acta Physiol. Scand. 1954, 31, 285–289.

- Otterstedde, C.R.; Spandau, U.; Blankenagel, A.; Kimberling, W.J.; Reisser, C. A New Clinical Classification for Usher’s Syndrome Based on a New Subtype of Usher’s Syndrome Type I. Laryngoscope 2001, 111, 84–86.

- Goldreich, D.; Kanics, I.M. Tactile acuity is enhanced in blindness. J. Neurosci. 2003, 23, 3439–3445.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).