Weighted Decision Aggregation for Dispersed Data in Unified and Diverse Coalitions

Abstract

1. Introduction

- A novel coalition-based approach to dispersed data classification, with unified and diverse coalition formation strategies and an analysis of their impact on accuracy.

- Integration of Pawlak’s conflict model to systematically identify compatible and diverse classifier groups.

- Evaluation of weighted vs. unweighted prediction aggregation, demonstrating how weighting schemes affect decision reliability.

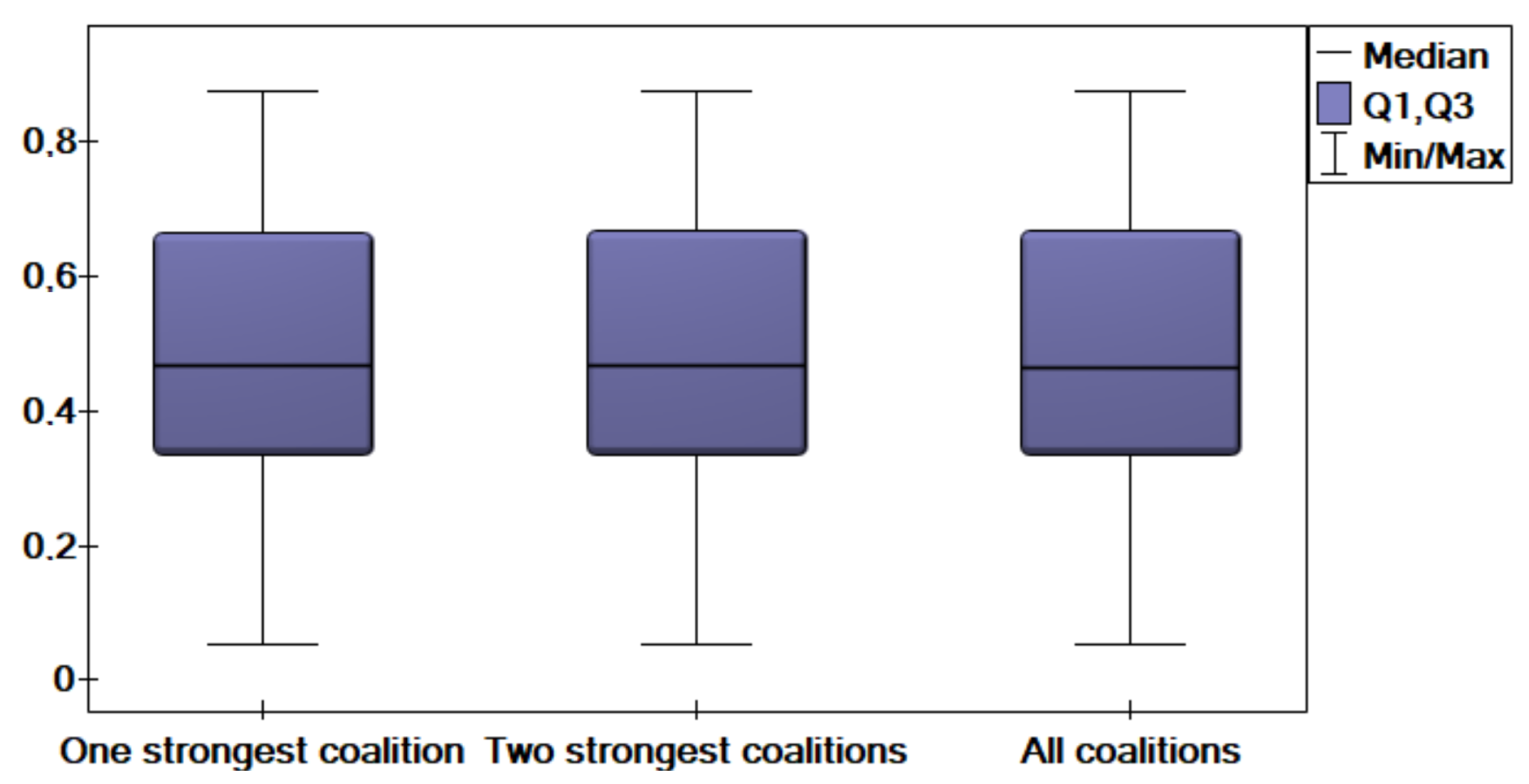

- Introduction and evaluation of three strategies for coalition-based classification: single strongest coalition, two strongest coalitions, and all coalitions.

2. Related Works

3. Research Methods and Approach

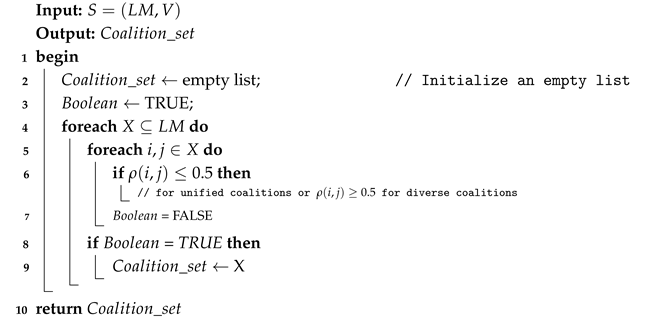

Algorithm 1: Creation of coalitions

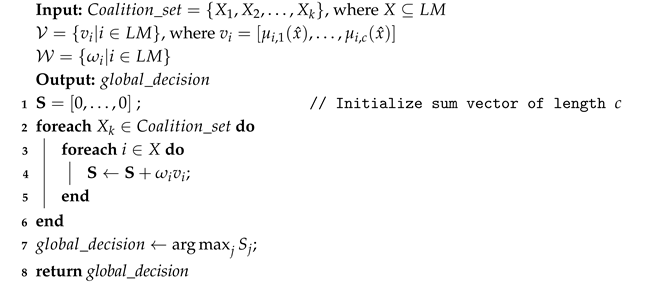

Algorithm 2: Weighted fusion of prediction vectors from selected coalitions

- without weights: aggregated vector , final decision ;

- with weights: aggregated vector , final decision .

However, when considering diverse coalitions, the results differ. For the single strongest coalition , we obtain

- without weights: aggregated vector , final decision ;

- with weights: aggregated vector , final decision .

For the two strongest coalitions and all coalitions, we get

- without weights: aggregated vector , final decision ;

- with weights: aggregated vector , final decision .

As the example shows, the coalition type, the decision-making strategy, and the use of weights can each change the final decision. The coalition structure and weighting strategy should therefore be chosen with the specific problem domain in mind to improve classification accuracy and decision reliability.
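The effect of weighting can be illustrated with a minimal sketch. It is not the paper's Algorithm 2: the function names `fuse` and `decide`, the three vote vectors, and the quality values are all hypothetical and were chosen only to show how a weighted sum can overturn an unweighted majority.

```python
from typing import List, Optional, Sequence


def fuse(vectors: Sequence[Sequence[float]],
         weights: Optional[Sequence[float]] = None) -> List[float]:
    """Sum prediction vectors class by class, scaling each by its weight."""
    if not vectors:
        raise ValueError("at least one prediction vector is required")
    if weights is None:
        weights = [1.0] * len(vectors)  # unweighted case: every vector counts once
    if len(weights) != len(vectors):
        raise ValueError("one weight per prediction vector is required")
    total = [0.0] * len(vectors[0])
    for vector, weight in zip(vectors, weights):
        for cls, support in enumerate(vector):
            total[cls] += weight * support
    return total


def decide(aggregated: Sequence[float]) -> List[int]:
    """Return the indices of all classes with maximal support (ties are kept)."""
    best = max(aggregated)
    return [cls for cls, support in enumerate(aggregated) if support == best]


# Hypothetical abstract-level votes of three local classifiers over three classes.
votes = [[1, 0, 0], [0, 1, 0], [0, 1, 0]]
# Hypothetical validation-set quality of each local model (assumed values).
quality = [0.9, 0.4, 0.4]

unweighted = fuse(votes)
weighted = fuse(votes, quality)
print("unweighted:", unweighted, "decision:", decide(unweighted))
print("weighted:  ", weighted, "decision:", decide(weighted))
```

With these made-up inputs, the unweighted sum favors class 1 (two votes against one), while the weighted sum favors class 0, because the single dissenting classifier carries more weight than the other two combined.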

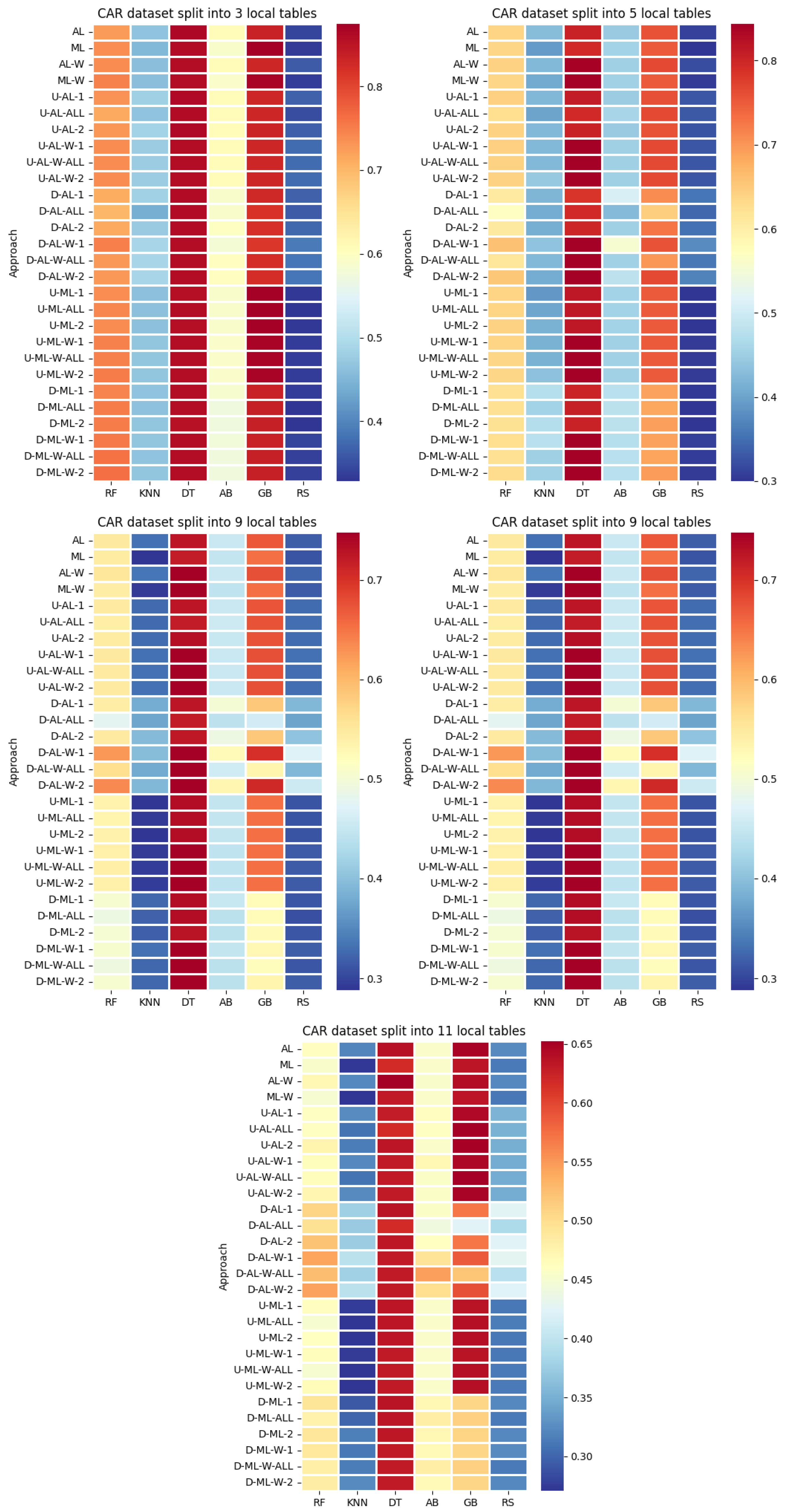

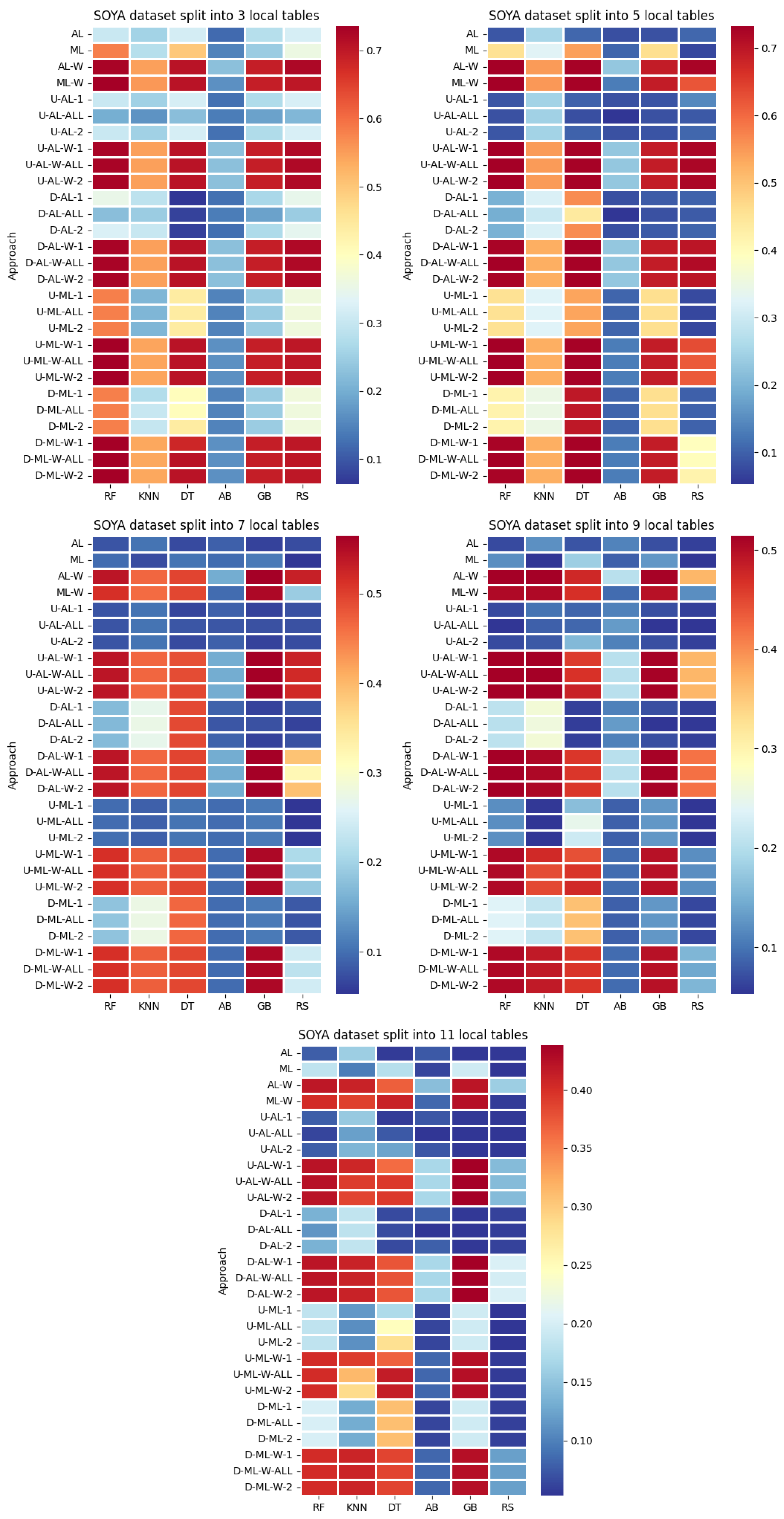

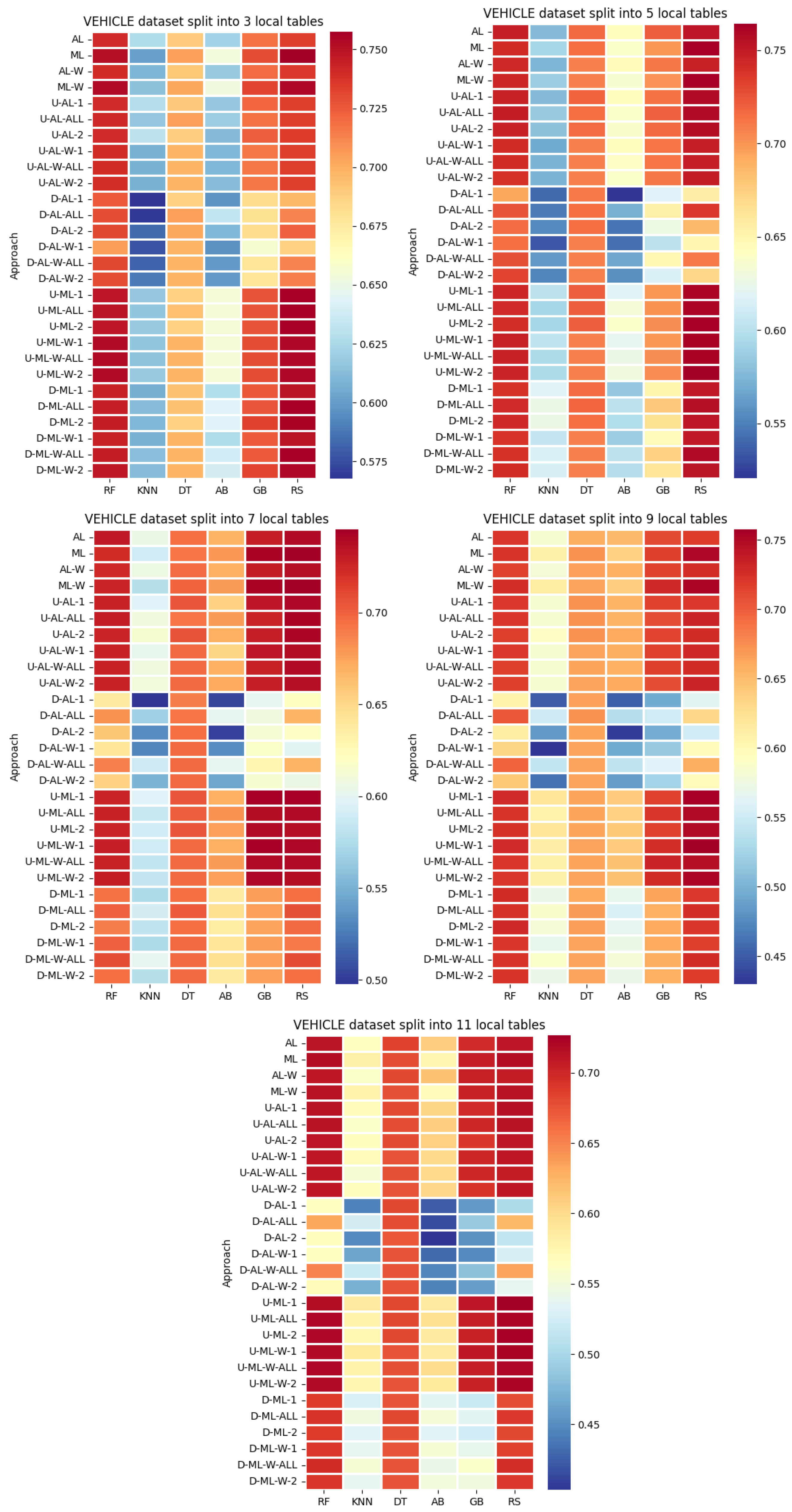

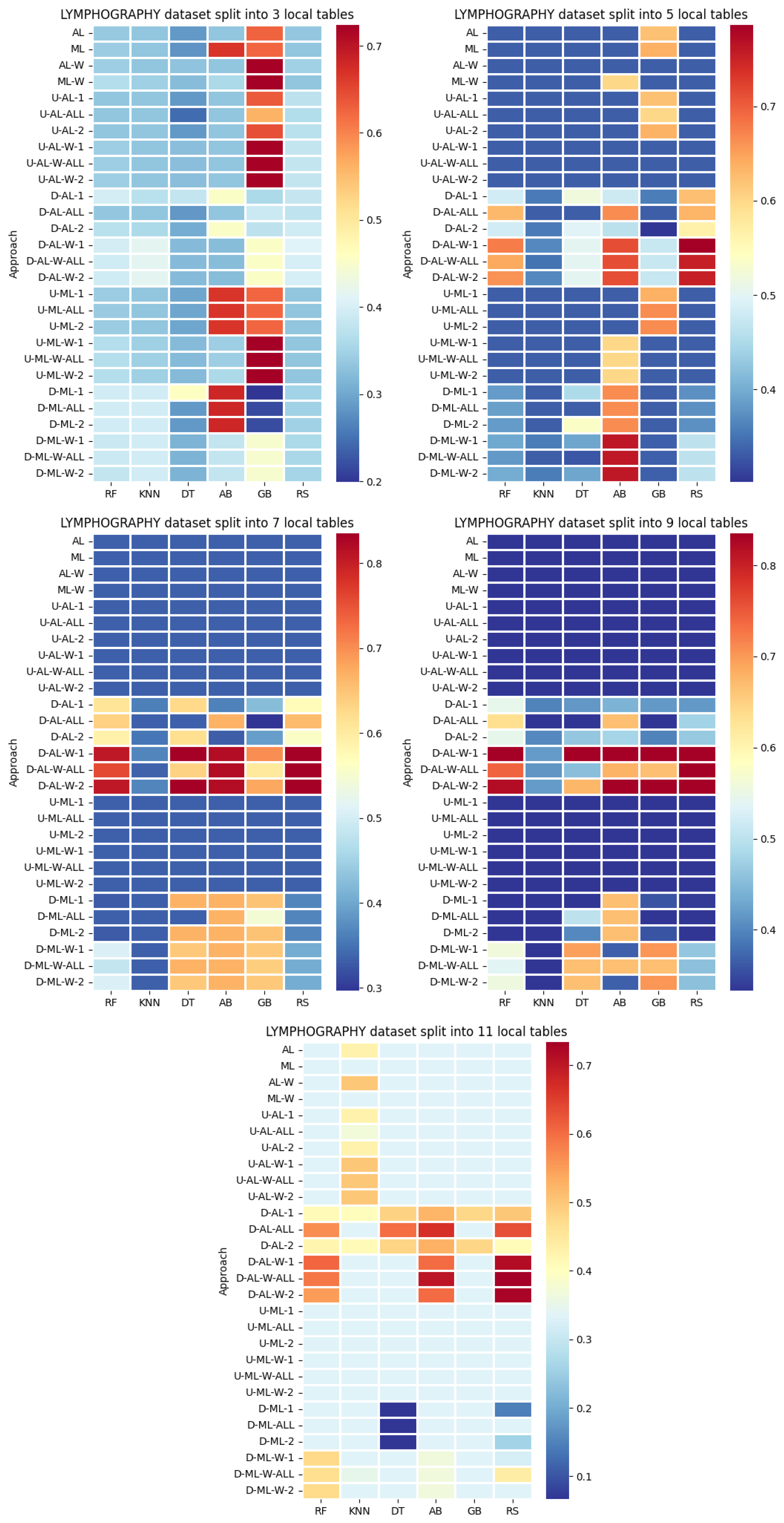

4. Datasets and Experimental Design

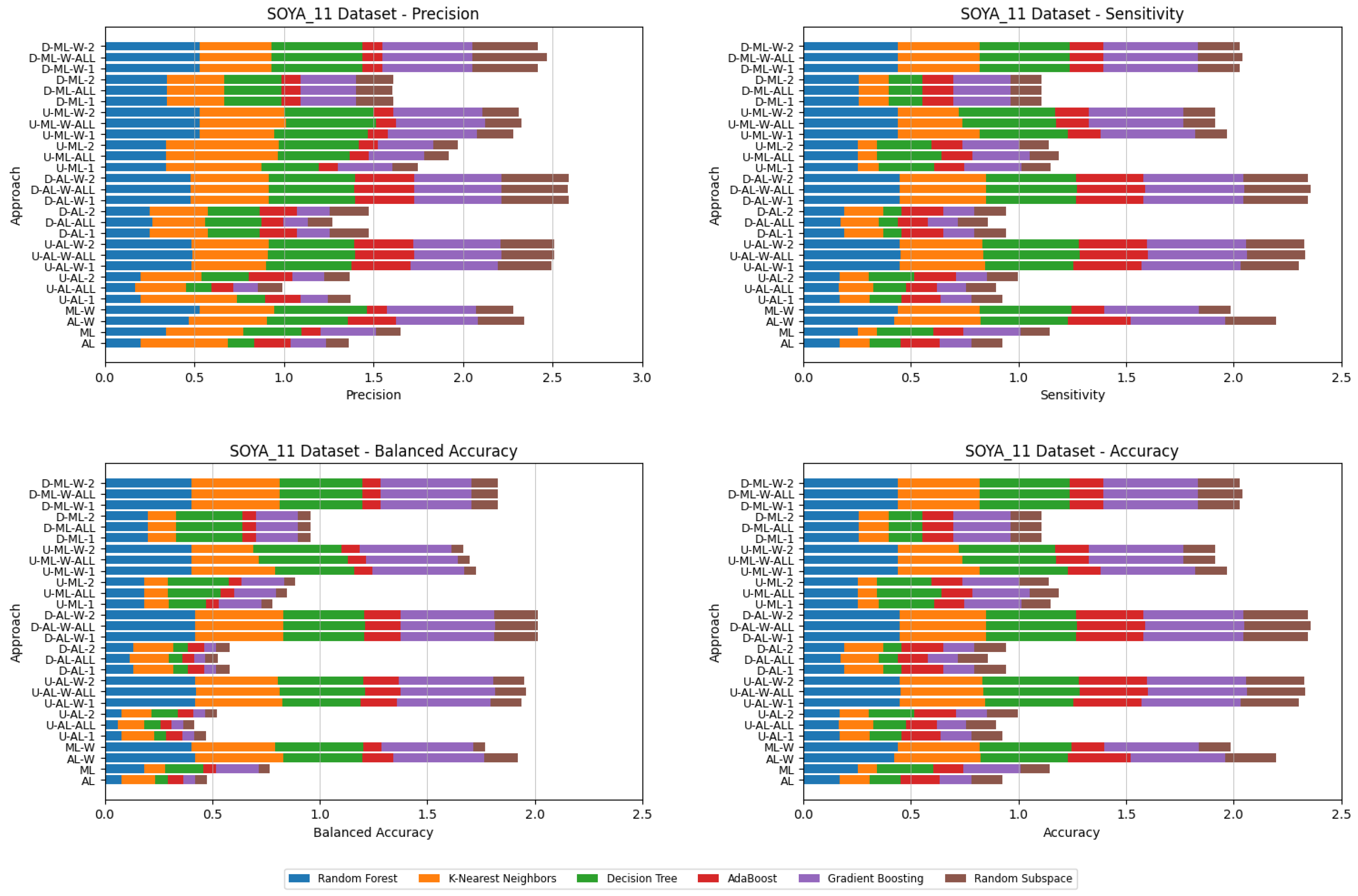

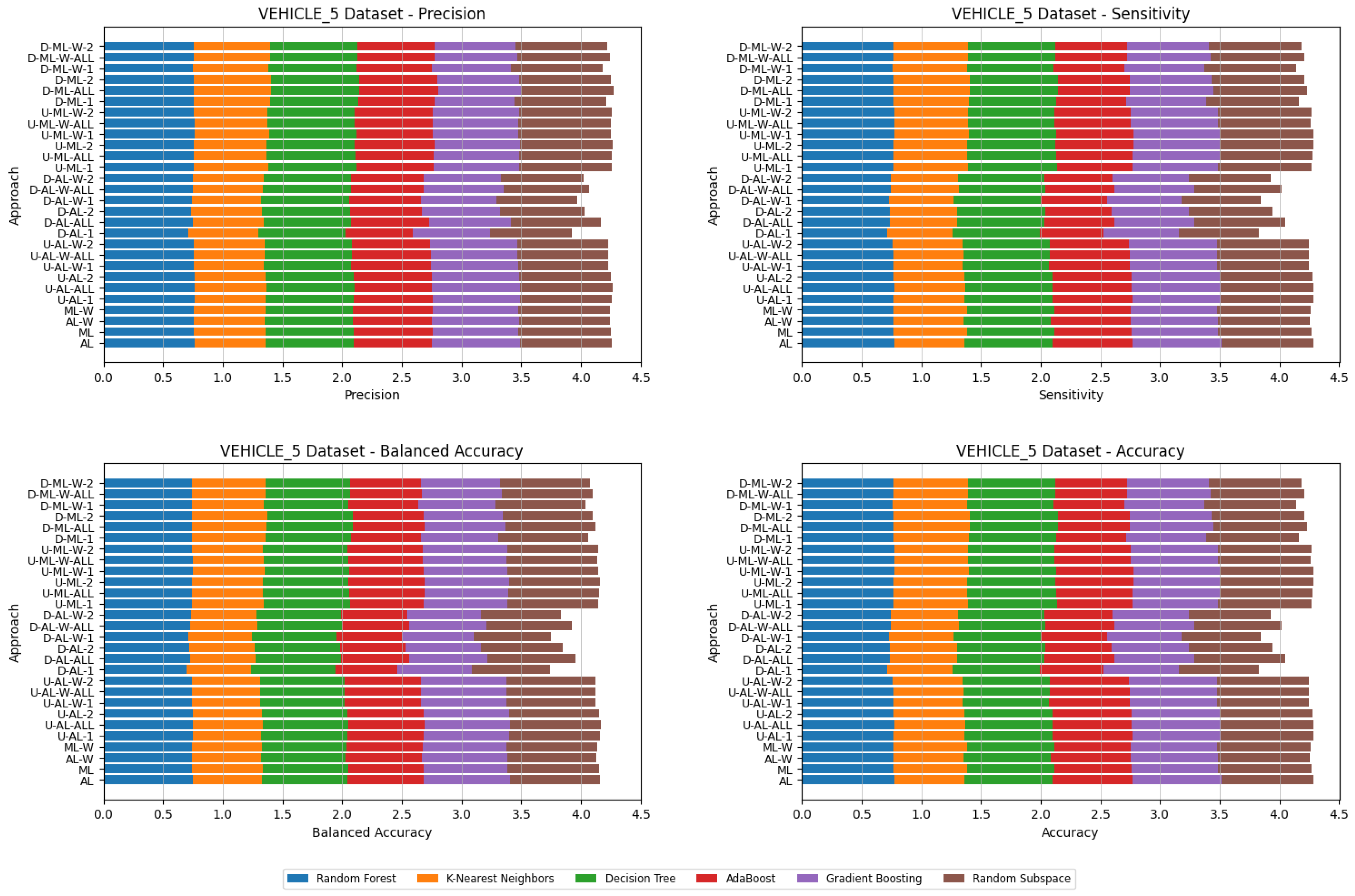

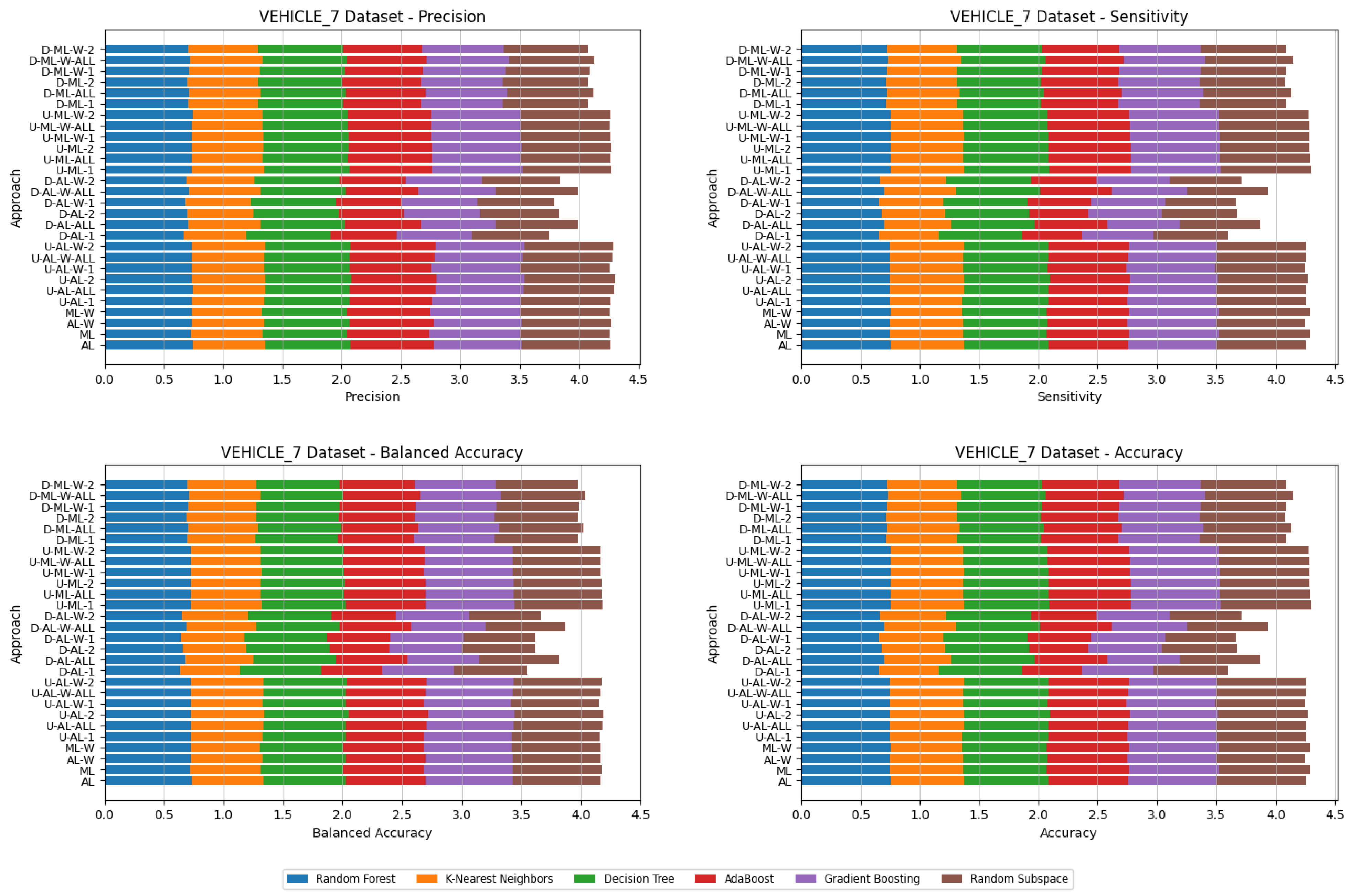

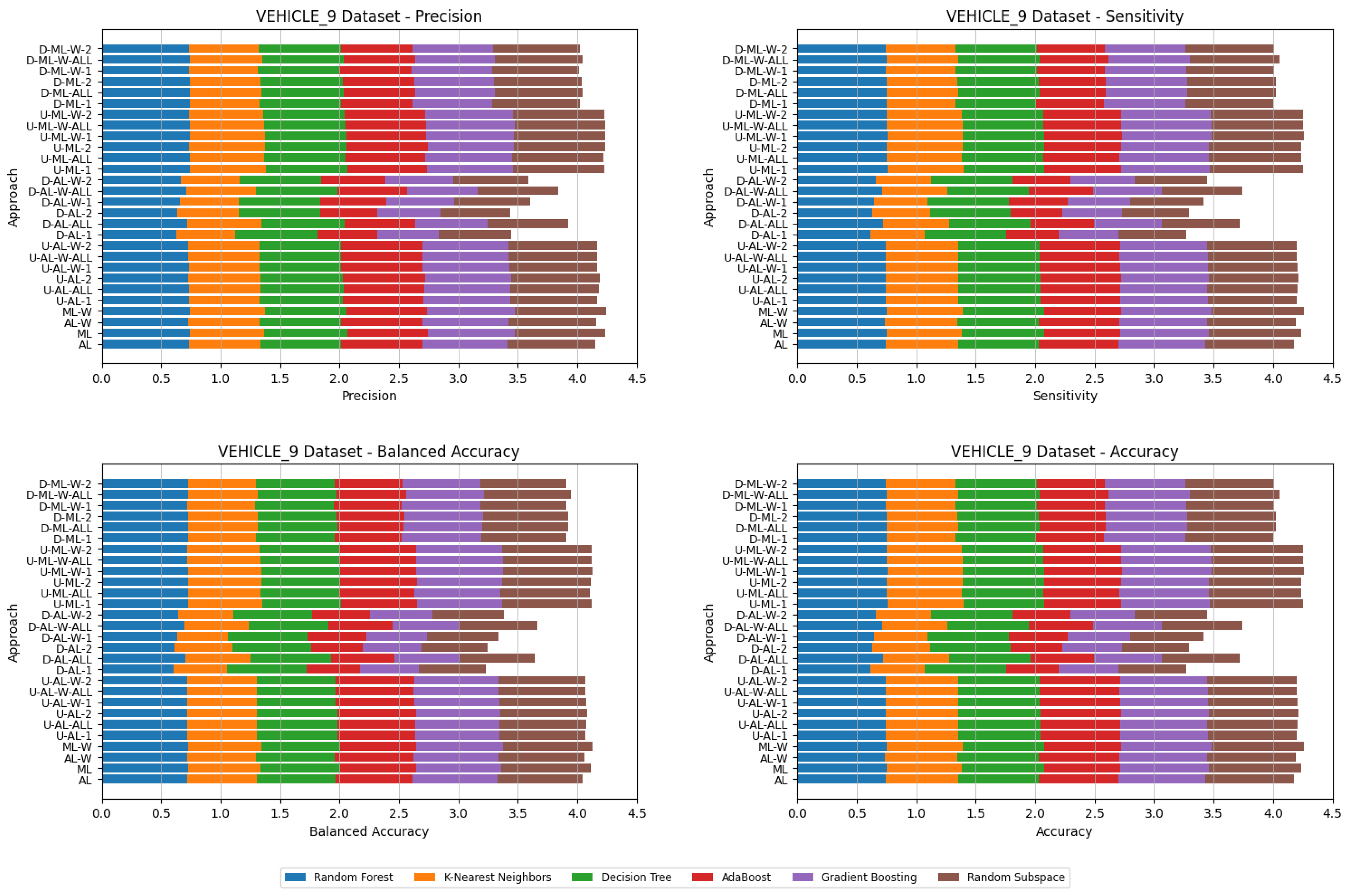

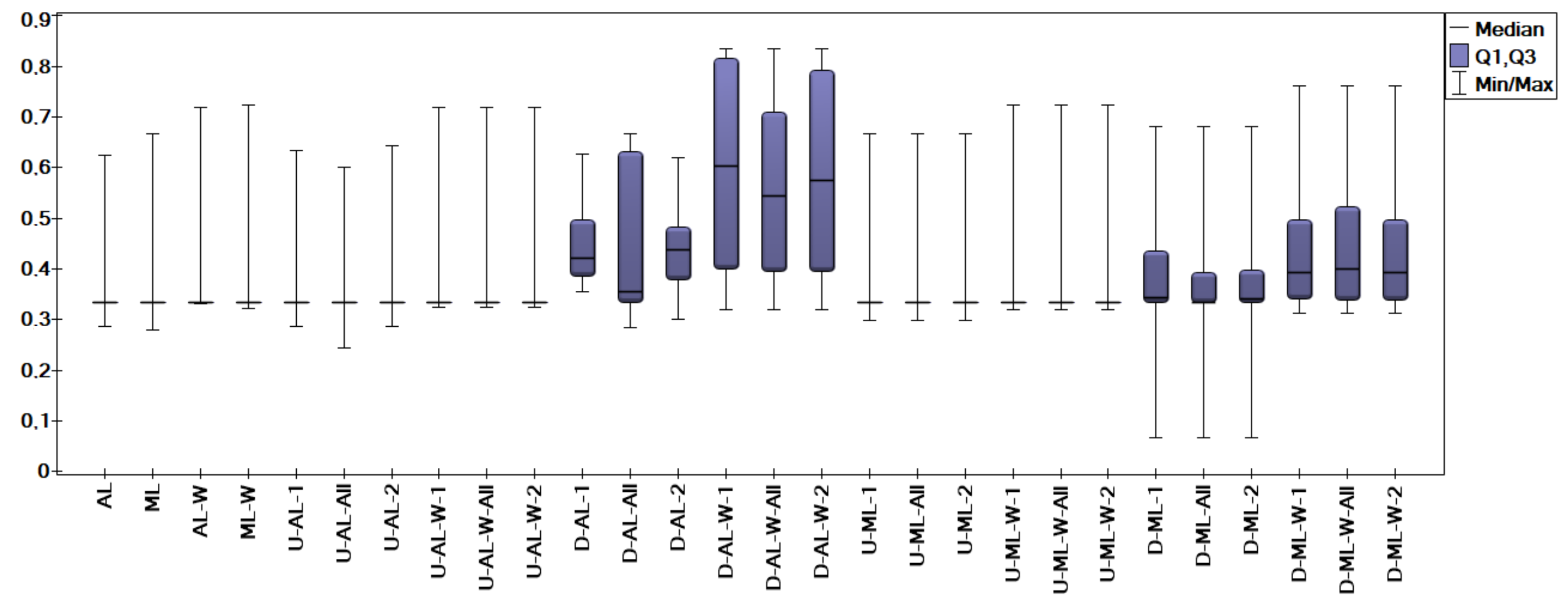

- AL—prediction vectors from the abstract level;

- ML—prediction vectors from the measurement level;

- W—means that the weights for the prediction vectors were used;

- U—means that unified coalitions were used;

- D—means that diverse coalitions were used;

- 1—means that the final decision is made by one strongest coalition;

- 2—means that the final decision is made by two strongest coalitions;

- All—means that the final decision is taken by all coalitions.

By systematically combining these options into all feasible and meaningful configurations, we obtained 4 baseline approaches without coalitions and 24 distinct approaches with coalitions and voting:

- AL: local classifiers generate predictions from the abstract level, and then the vectors are summed.

- AL-W: local classifiers generate predictions from the abstract level, and then the vectors are summed using weights proportional to the quality of the model evaluated on the validation set.

- U-AL-1: unified coalitions are created, local classifiers generate predictions from the abstract level, and, finally, the prediction vectors from only the one strongest coalition are summed.

- U-AL-All: unified coalitions are created, local classifiers generate predictions from the abstract level, and, finally, the prediction vectors from all coalitions are summed.

- U-AL-2: unified coalitions are created, local classifiers generate predictions from the abstract level, and, finally, the prediction vectors from only the two strongest coalitions are summed.

- U-AL-W-1: similar to U-AL-1 but, when summing prediction vectors, weights proportional to the quality of the local model are used.

- U-AL-W-All: similar to U-AL-All but, when summing prediction vectors, weights proportional to the quality of the local model are used.

- U-AL-W-2: similar to U-AL-2 but, when summing prediction vectors, weights proportional to the quality of the local model are used.

- D-AL-1: diverse coalitions are created, local classifiers generate predictions from the abstract level, and, finally, the prediction vectors from only the one strongest coalition are summed.

- D-AL-All: diverse coalitions are created, local classifiers generate predictions from the abstract level, and, finally, the prediction vectors from all coalitions are summed.

- D-AL-2: diverse coalitions are created, local classifiers generate predictions from the abstract level, and, finally, the prediction vectors from only the two strongest coalitions are summed.

- D-AL-W-1: similar to D-AL-1 but, when summing prediction vectors, weights proportional to the quality of the local model are used.

- D-AL-W-All: similar to D-AL-All but, when summing prediction vectors, weights proportional to the quality of the local model are used.

- D-AL-W-2: similar to D-AL-2 but, when summing prediction vectors, weights proportional to the quality of the local model are used.

All the above approaches were also used in combination with prediction vectors from the measurement level. Thus, we obtained approaches ML, ML-W, U-ML-1, U-ML-All, U-ML-2, U-ML-W-1, U-ML-W-All, U-ML-W-2, D-ML-1, D-ML-All, D-ML-2, D-ML-W-1, D-ML-W-All, and D-ML-W-2.
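The naming scheme is a Cartesian product of the options listed above, which can be checked with a short sketch. The helper name `approach_names` is hypothetical. The code only enumerates labels and confirms the counts of 4 baselines and 24 coalition-based approaches.

```python
from itertools import product

LEVELS = ("AL", "ML")            # abstract level, measurement level
COALITION_TYPES = ("U", "D")     # unified, diverse
WEIGHTING = ("", "W")            # unweighted, weighted
STRATEGIES = ("1", "2", "All")   # one strongest, two strongest, all coalitions


def approach_names():
    """Return (baseline labels, coalition-based labels) following the naming scheme."""
    baselines = [
        "-".join(part for part in (level, weight) if part)
        for level, weight in product(LEVELS, WEIGHTING)
    ]
    coalition_based = [
        "-".join(part for part in (kind, level, weight, strategy) if part)
        for kind, level, weight, strategy in product(
            COALITION_TYPES, LEVELS, WEIGHTING, STRATEGIES
        )
    ]
    return baselines, coalition_based


baselines, coalition_based = approach_names()
print(len(baselines), baselines)
print(len(coalition_based), coalition_based)
```

Empty parts are dropped before joining, so the unweighted variants come out as `AL` or `U-AL-1` rather than with a doubled hyphen.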

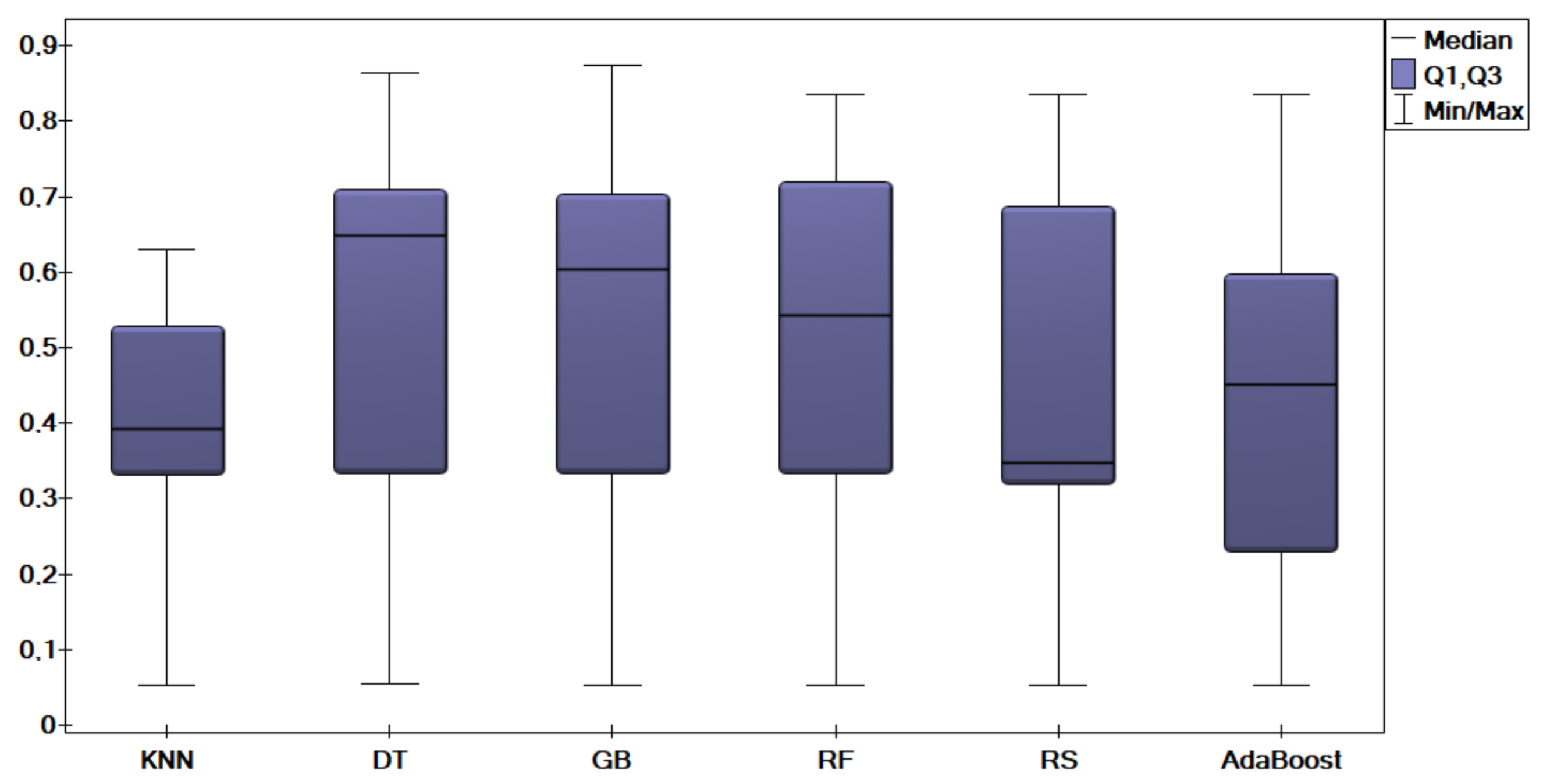

- k-Nearest Neighbors (KNN);

- Decision Tree (DT);

- Gradient Boosting (GB);

- Random Forest (RF);

- Random Subspace (RS);

- AdaBoost.

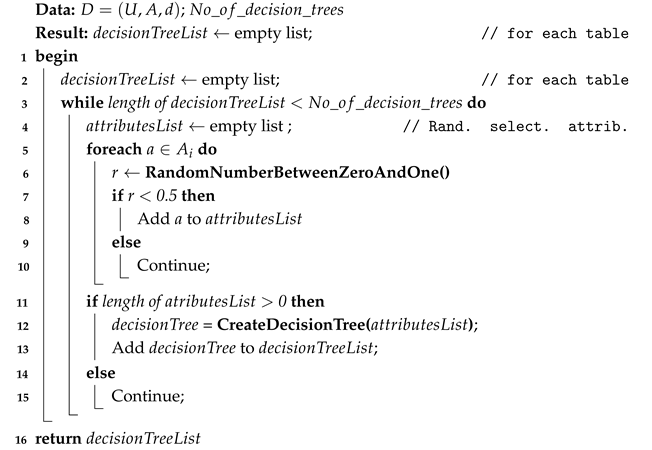

For Gradient Boosting, Random Forest, Random Subspace, and AdaBoost, the number of estimators was tested at 20, 50, 100, 200, and 500. For the k-Nearest Neighbors classifier, the parameter values 1, 2, 3, 4, and 5 were tested. The Decision Tree was built with the default settings of the sklearn library in Python 3.13.0. The stages of building the Random Subspace model from Decision Trees are presented in Algorithm 3. For each classifier, the tables report the best result achieved together with the parameter value that produced it. These results are given in Tables A1 through A21 in Appendix A. The values in the tables are average metrics calculated across all 10 evaluations. For each dataset, the best-performing score is highlighted in blue.
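The selection step described above (average each setting over repeated evaluations, then keep the best) can be sketched as follows. The function `best_setting` and all scores are hypothetical. For brevity, each setting has two made-up evaluation scores instead of the 10 evaluations used in the experiments.

```python
from statistics import mean
from typing import Dict, Sequence, Tuple

# Number of estimators tested for the ensemble classifiers, as stated in the text.
N_ESTIMATORS_GRID = (20, 50, 100, 200, 500)


def best_setting(scores_by_value: Dict[int, Sequence[float]]) -> Tuple[int, float]:
    """Return the parameter value with the highest mean score, and that mean."""
    averaged = {value: mean(scores) for value, scores in scores_by_value.items()}
    best_value = max(averaged, key=averaged.get)  # first maximum wins on ties
    return best_value, averaged[best_value]


# Hypothetical accuracies per setting (two evaluations each, not real results).
scores = {
    20: [0.5, 0.625],
    50: [0.75, 0.75],
    100: [0.625, 0.75],
    200: [0.5, 0.75],
    500: [0.625, 0.625],
}
assert set(scores) == set(N_ESTIMATORS_GRID)

value, score = best_setting(scores)
print(f"best n_estimators = {value}, mean accuracy = {score}")
```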

Algorithm 3: Creation of Random Subspace model

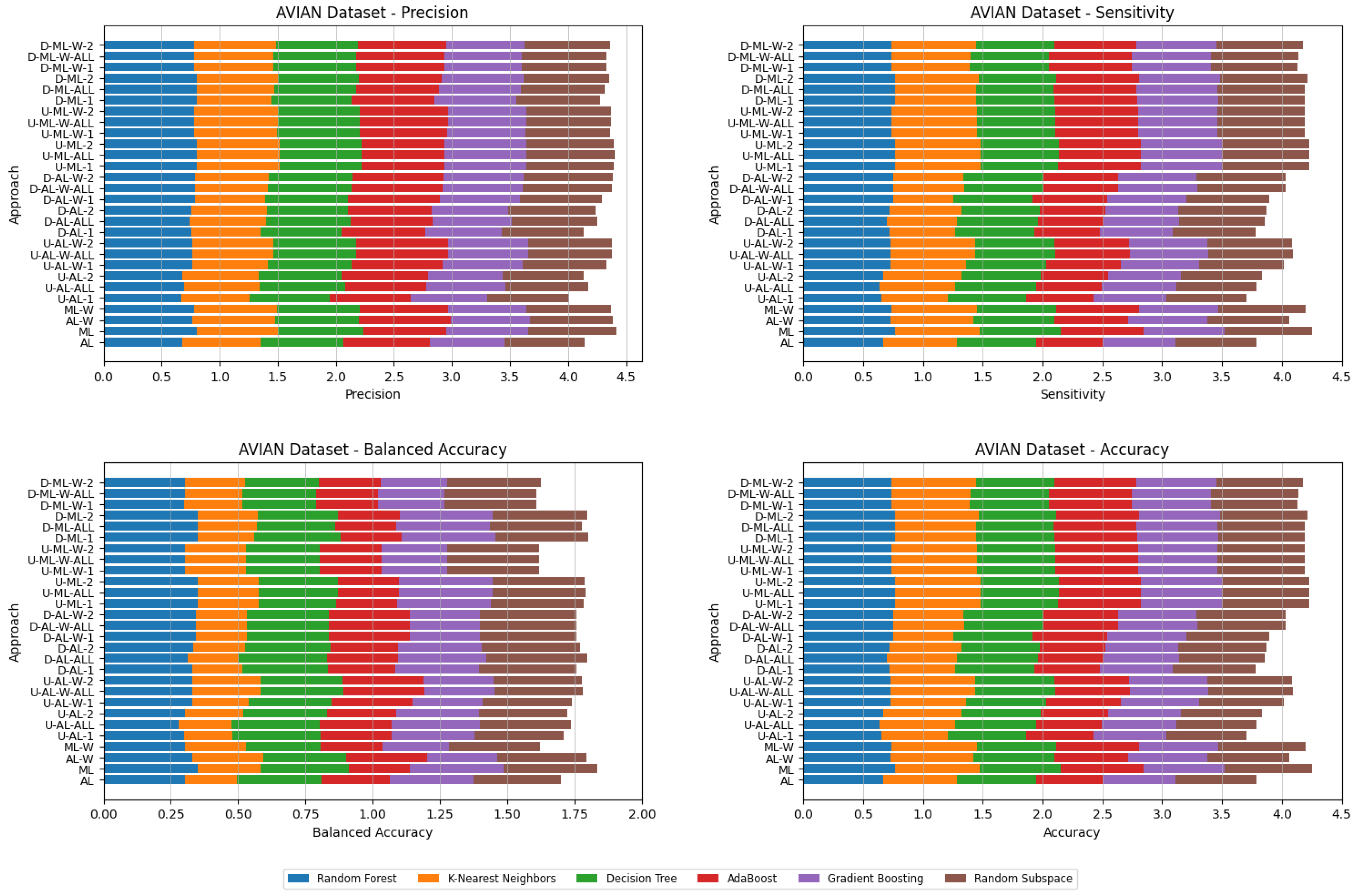

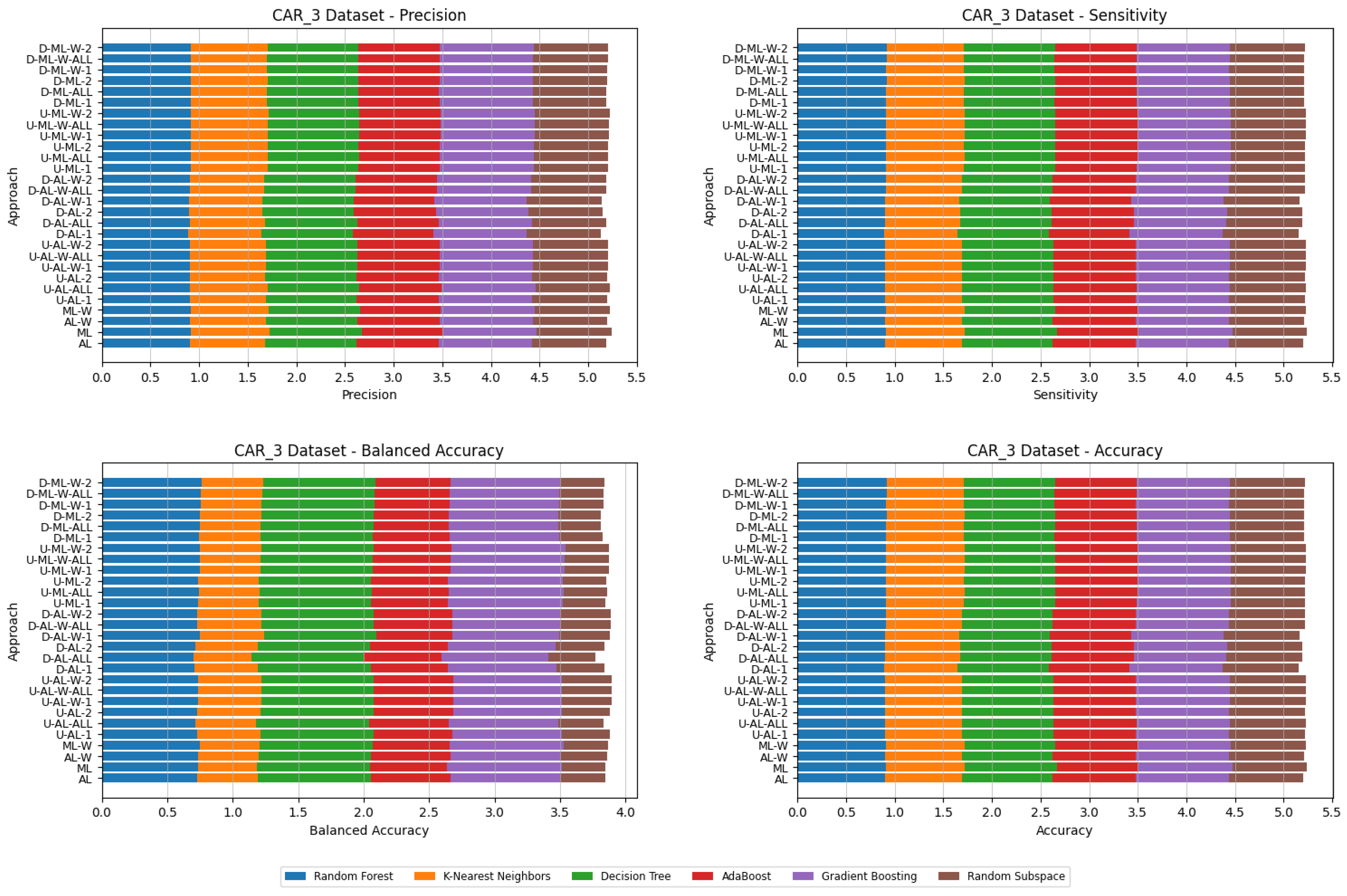

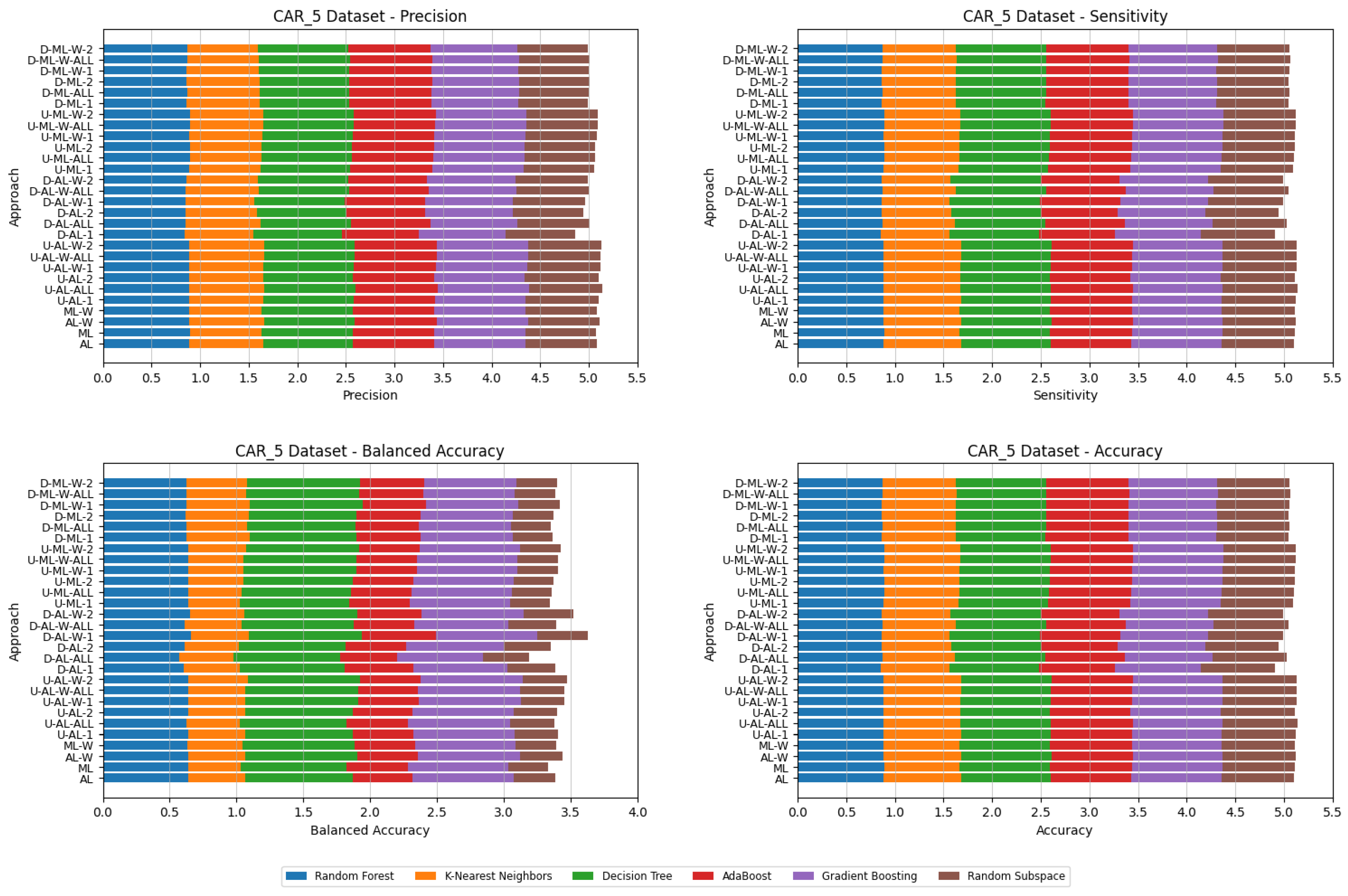

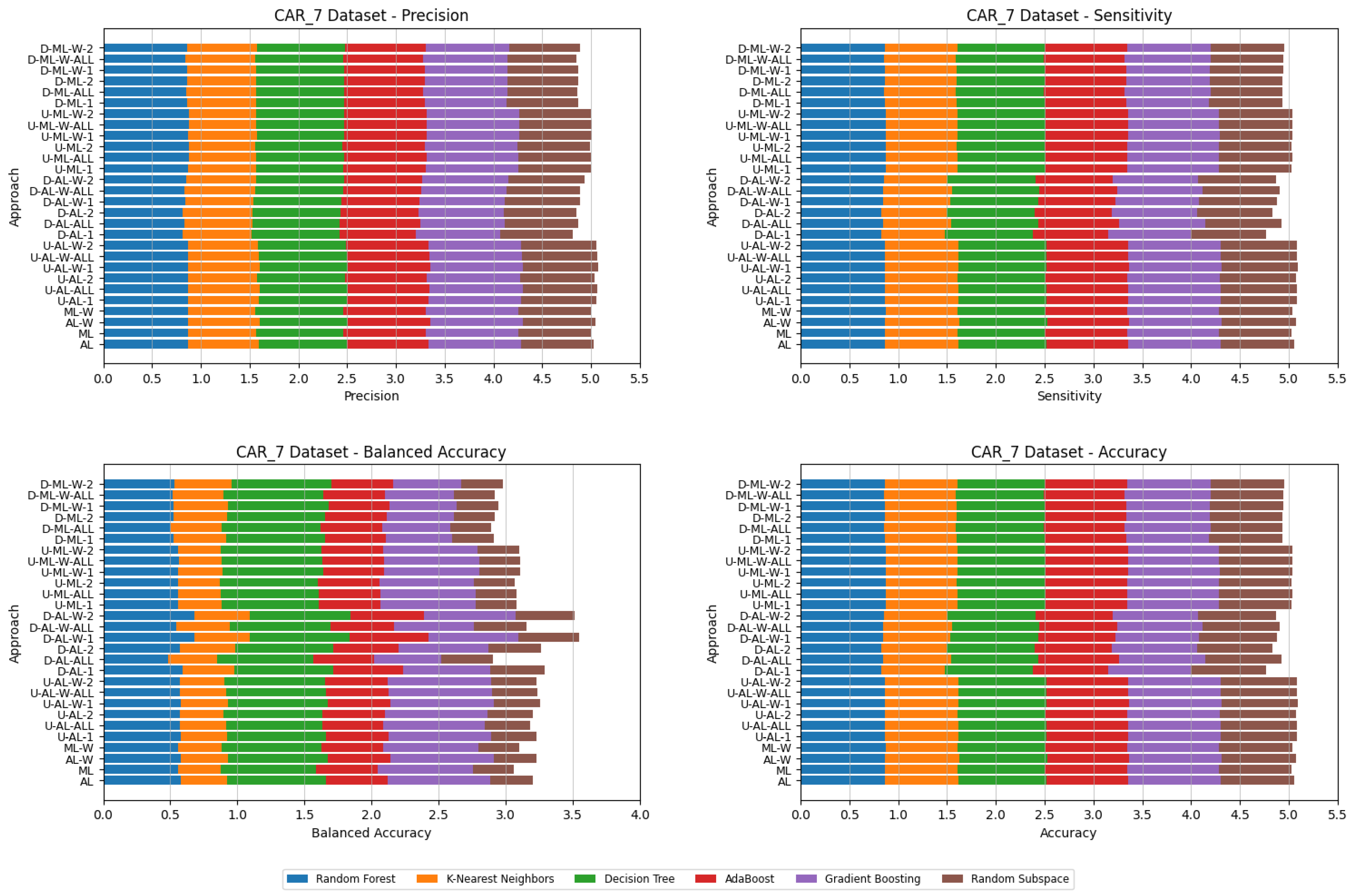

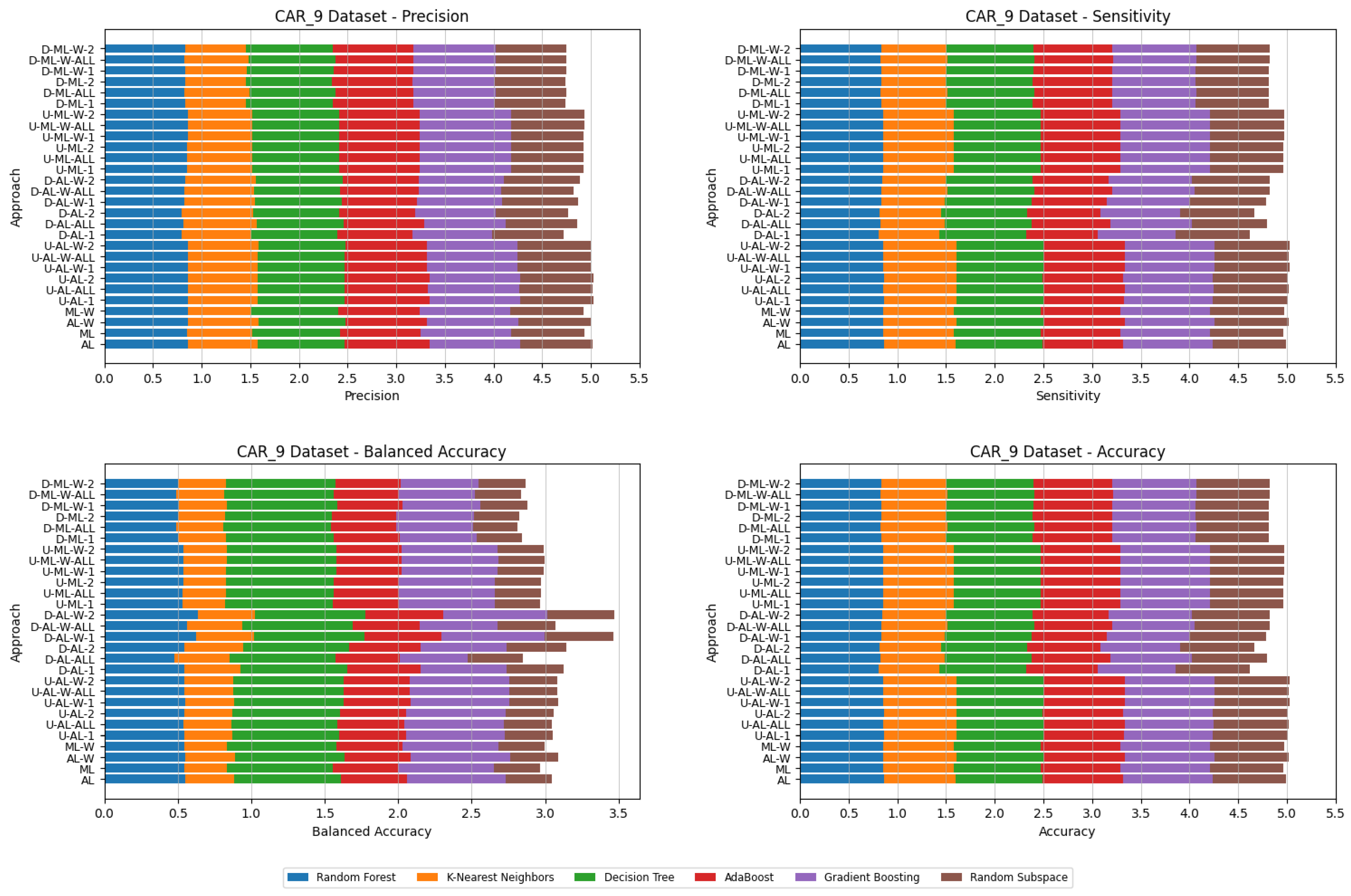

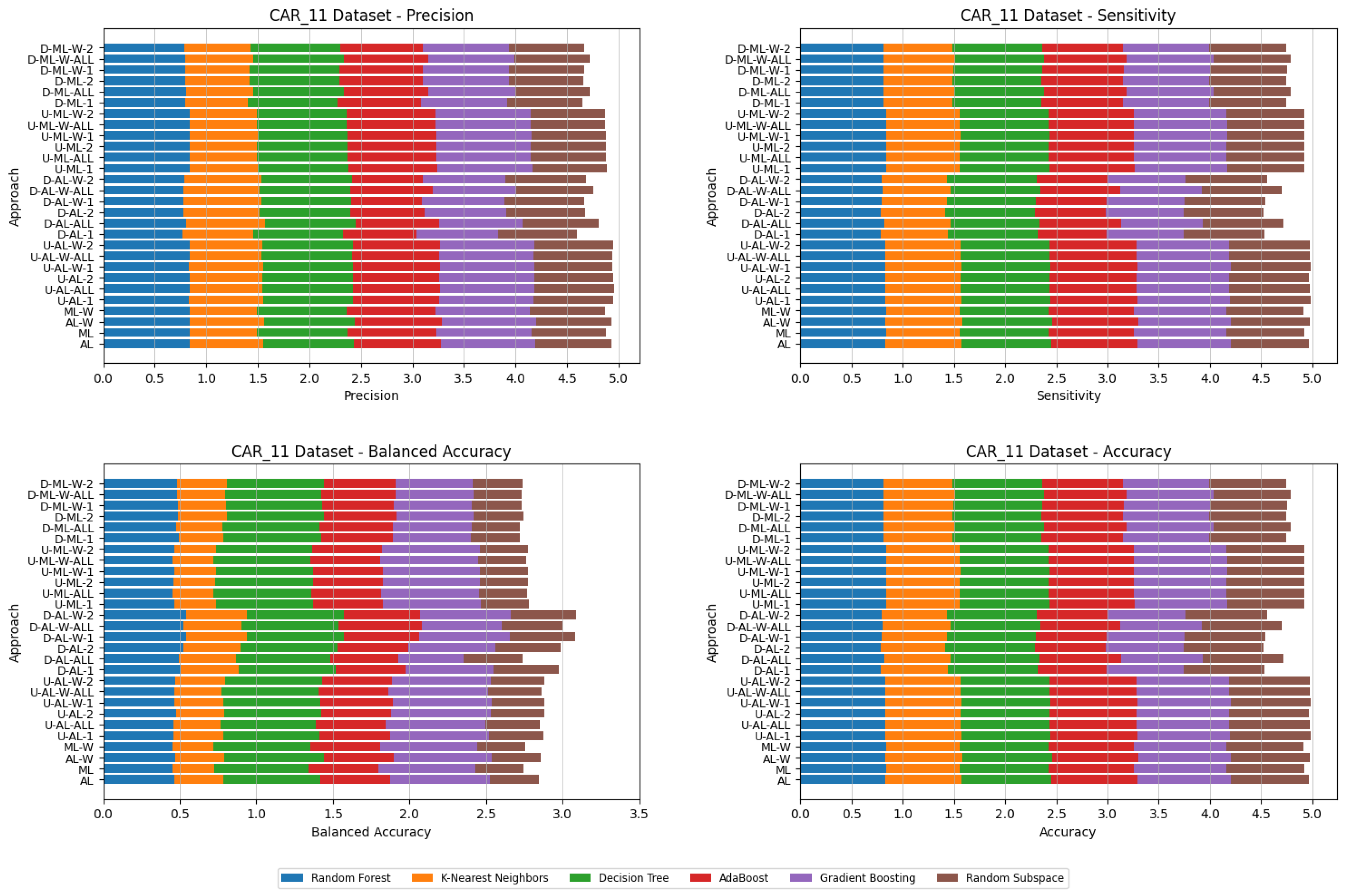

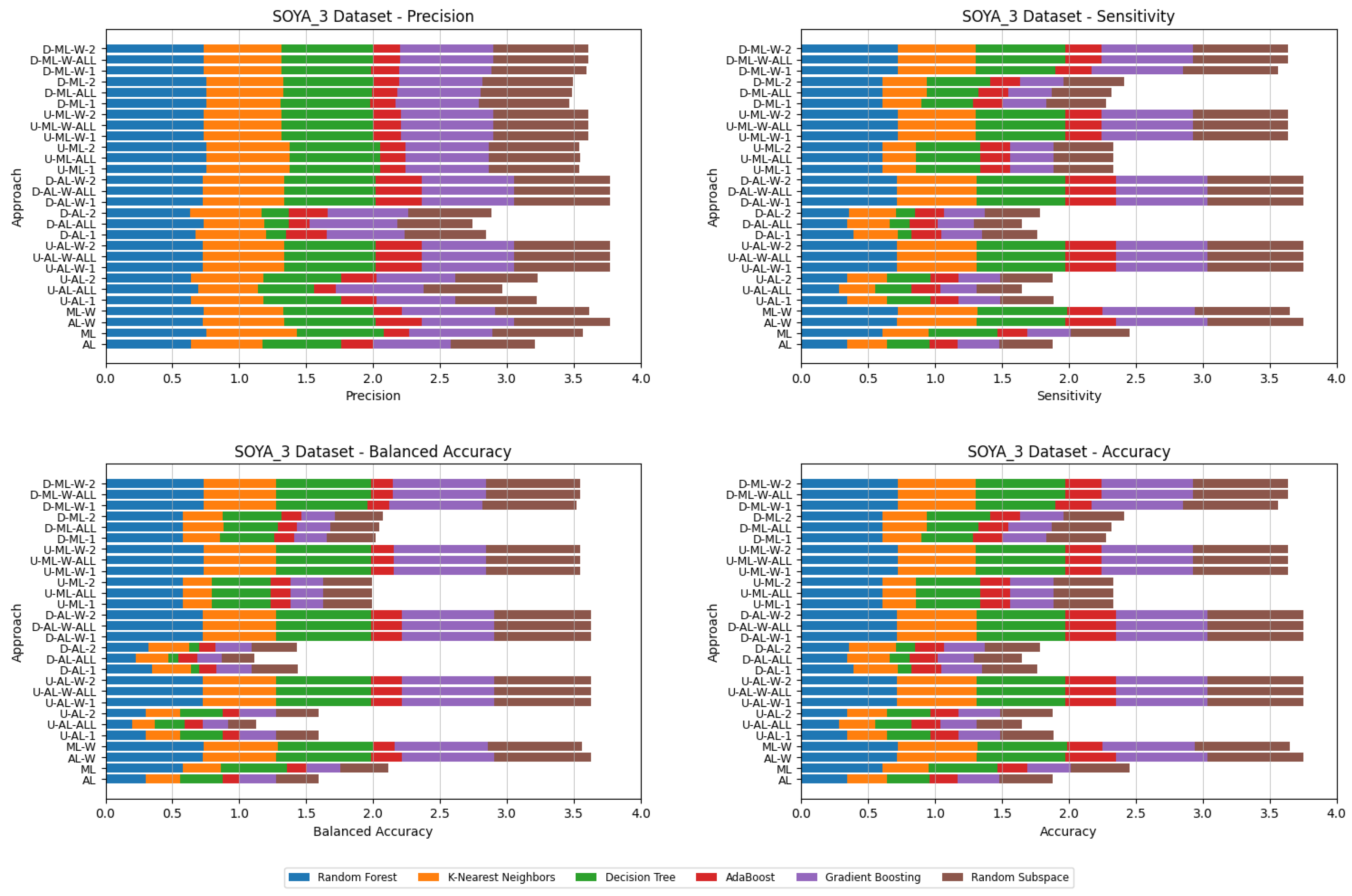

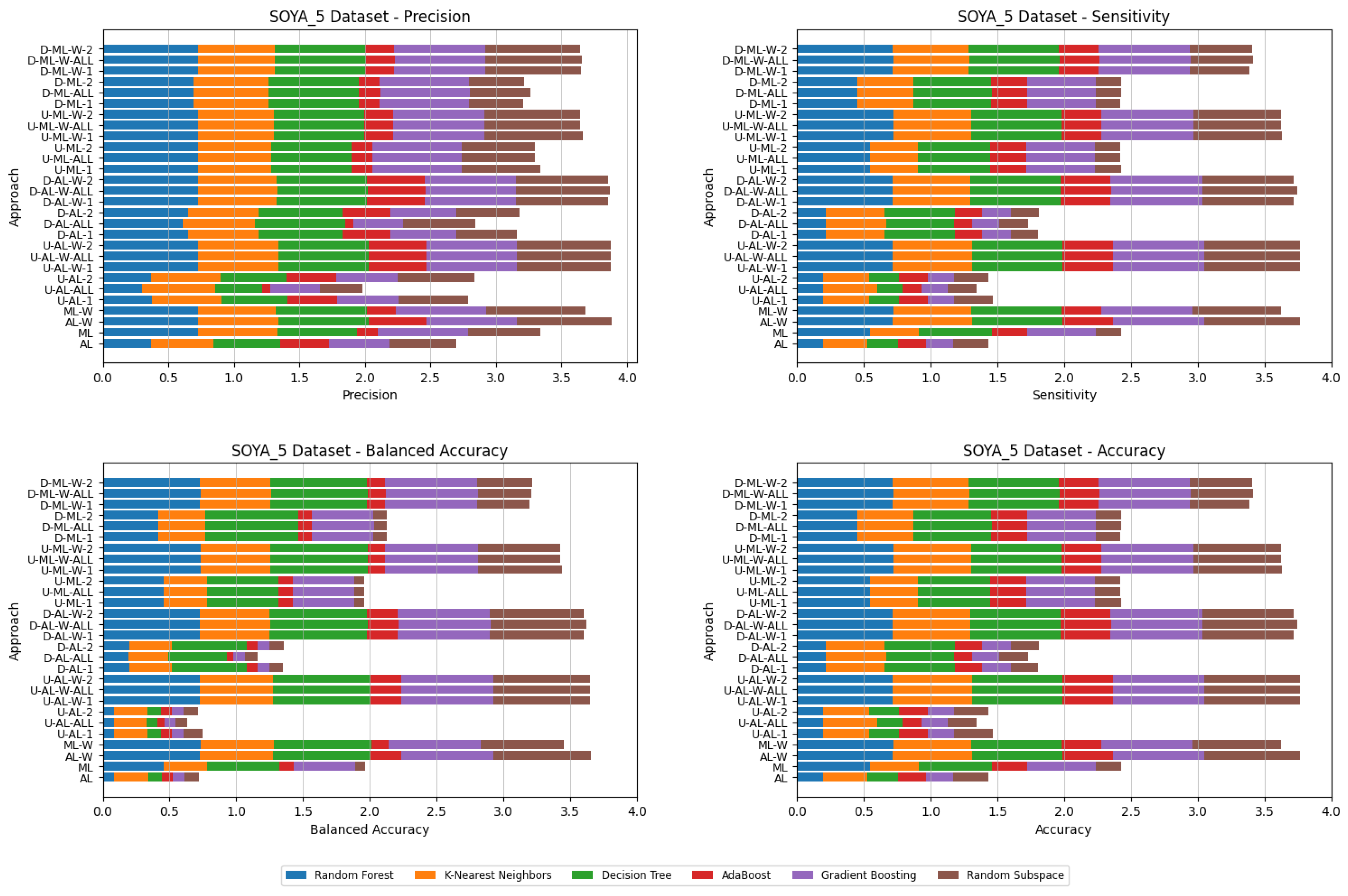

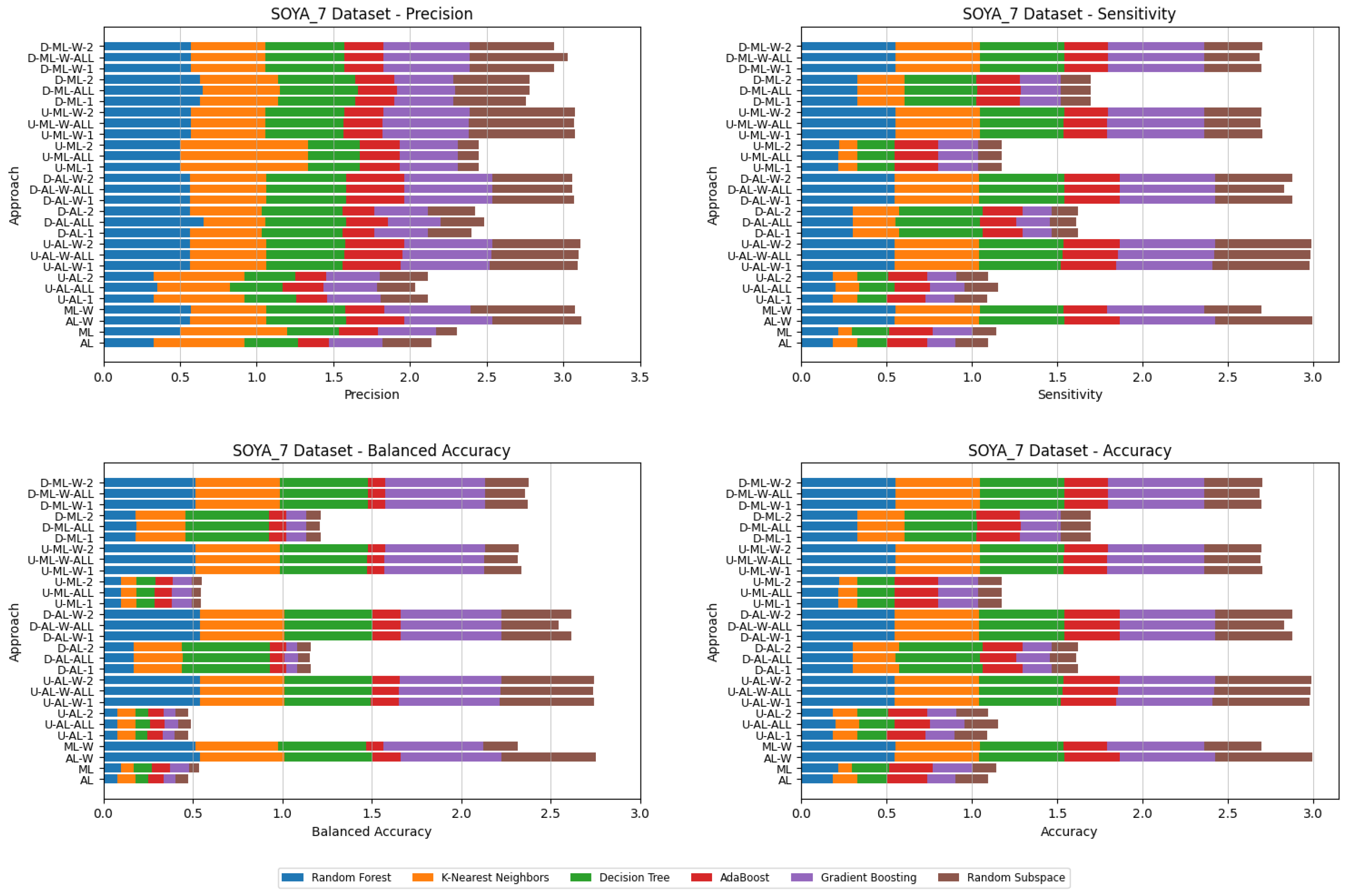

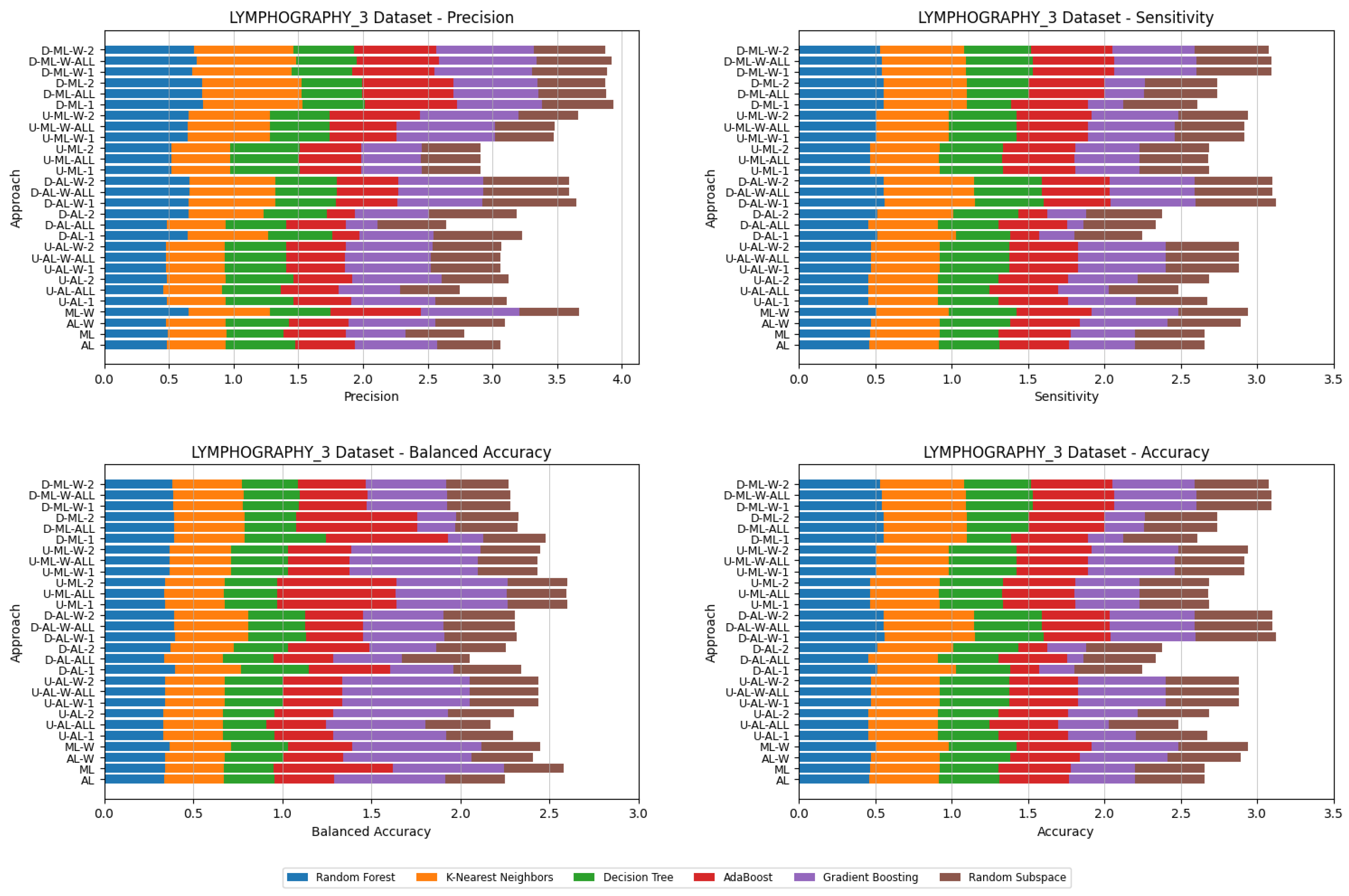

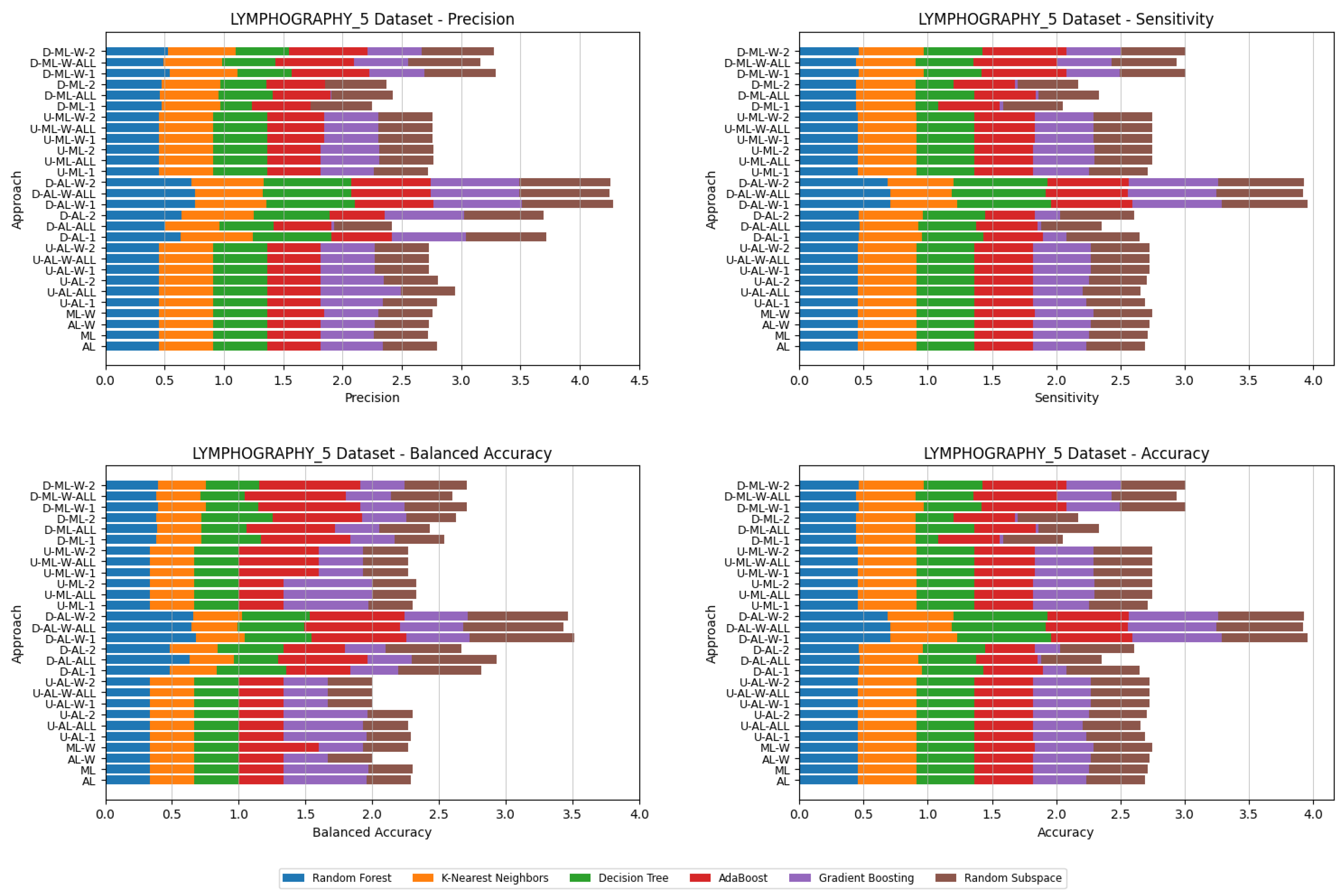

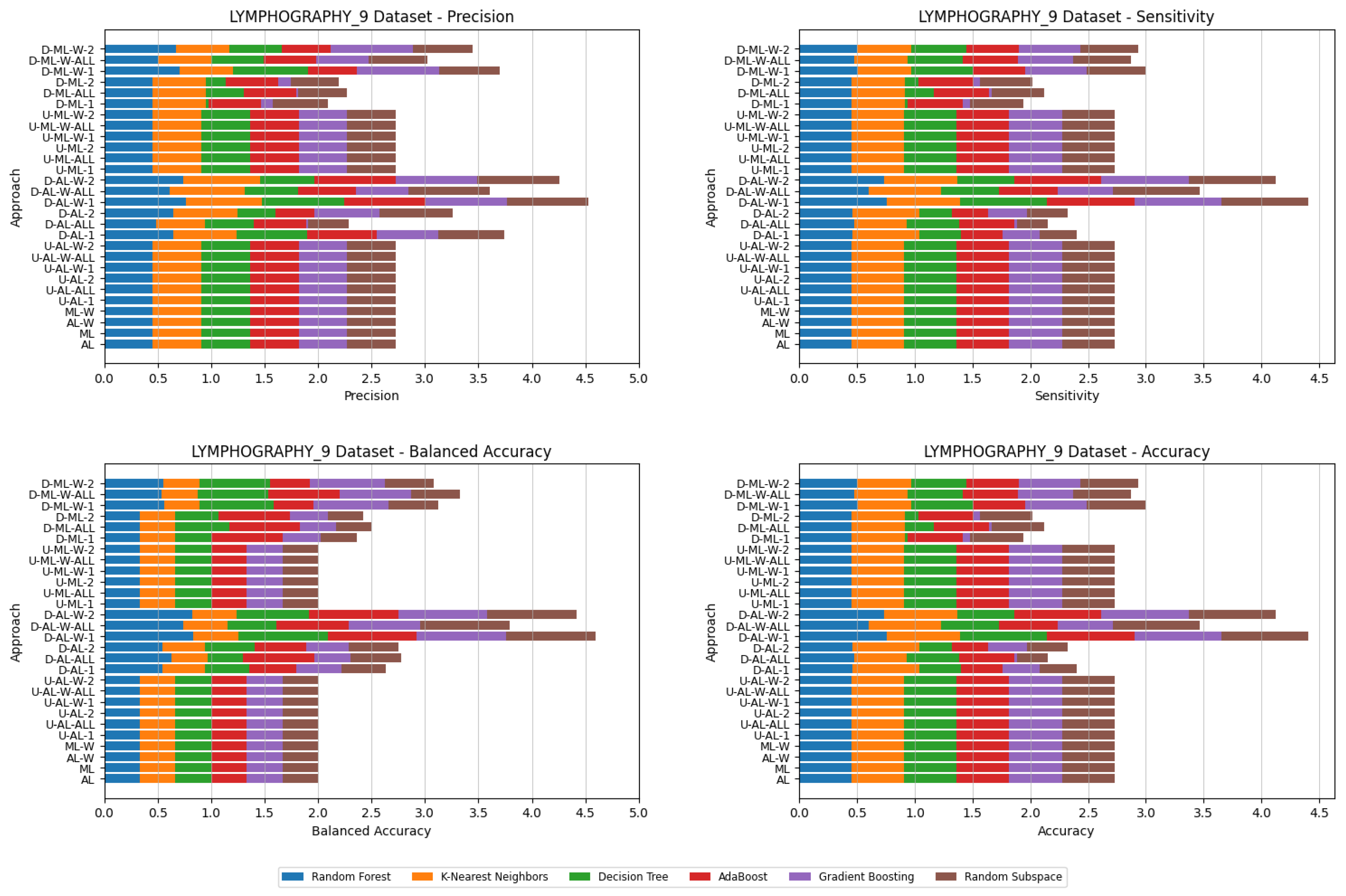

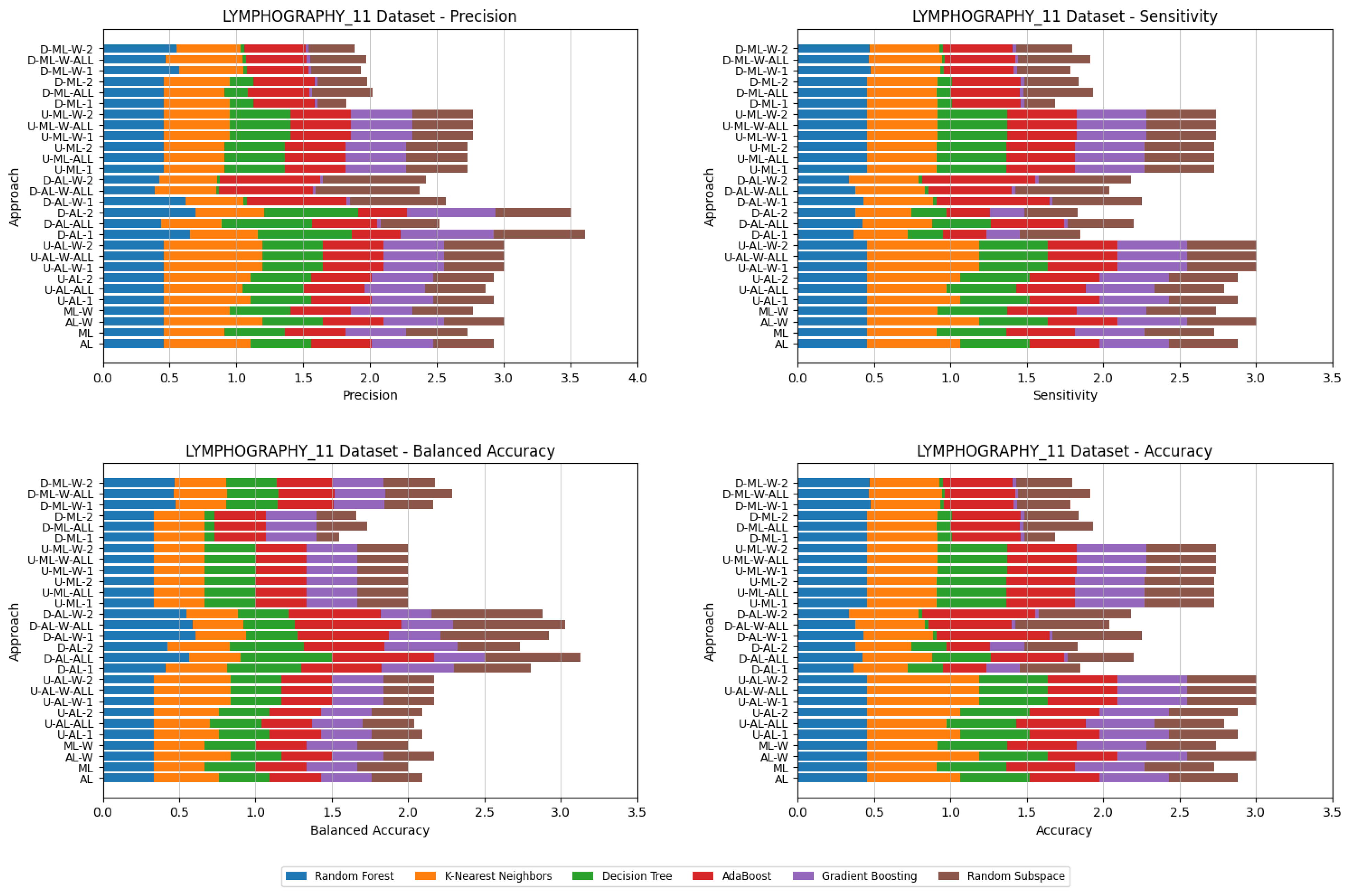

5. Experimental Results and Comparisons

6. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Detailed Experimental Results
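In the tables below, the Recall and Acc columns coincide in every row. That is what one would expect if recall is averaged with class-support weights, because support-weighted recall is algebraically equal to accuracy. The source does not state the averaging mode, so this reading is an assumption. The sketch below uses made-up labels to show the identity, and to show how balanced accuracy (BAcc, the unweighted mean of per-class recall) can be much lower on imbalanced data.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence, Tuple


def per_class_recall(
    y_true: Sequence[Hashable], y_pred: Sequence[Hashable]
) -> Tuple[Dict[Hashable, float], Counter]:
    """Return recall for every class present in y_true, plus class supports."""
    support = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {cls: hits[cls] / n for cls, n in support.items()}, support


def accuracy(y_true, y_pred) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def weighted_recall(y_true, y_pred) -> float:
    """Per-class recall averaged with weights proportional to class support."""
    recall, support = per_class_recall(y_true, y_pred)
    return sum(recall[cls] * support[cls] for cls in support) / len(y_true)


def balanced_accuracy(y_true, y_pred) -> float:
    """Unweighted mean of per-class recall."""
    recall, _ = per_class_recall(y_true, y_pred)
    return sum(recall.values()) / len(recall)


# Hypothetical imbalanced labels: six objects of class "a", two of class "b".
y_true = ["a"] * 6 + ["b"] * 2
y_pred = ["a"] * 6 + ["a", "b"]

print("Acc:   ", accuracy(y_true, y_pred))
print("Recall:", weighted_recall(y_true, y_pred))
print("BAcc:  ", balanced_accuracy(y_true, y_pred))
```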

| Method | KNN Prec. | KNN Recall | KNN BAcc | KNN Acc | DT Prec. | DT Recall | DT BAcc | DT Acc | GB Prec. | GB Recall | GB BAcc | GB Acc |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| AL | 0.673 | 0.62 | 0.194 | 0.62 | 0.712 | 0.66 | 0.312 | 0.66 | 0.646 | 0.609 | 0.31 | 0.609 |

| ML | 0.701 | 0.711 | 0.232 | 0.711 | 0.737 | 0.678 | 0.329 | 0.678 | 0.704 | 0.68 | 0.348 | 0.68 |

| AL-W | 0.716 | 0.696 | 0.263 | 0.696 | 0.719 | 0.671 | 0.308 | 0.671 | 0.688 | 0.66 | 0.26 | 0.66 |

| ML-W | 0.715 | 0.711 | 0.227 | 0.711 | 0.717 | 0.664 | 0.277 | 0.664 | 0.67 | 0.667 | 0.246 | 0.667 |

| U-AL-1 | 0.591 | 0.553 | 0.182 | 0.553 | 0.691 | 0.656 | 0.328 | 0.656 | 0.66 | 0.609 | 0.305 | 0.609 |

| U-AL-All | 0.65 | 0.629 | 0.197 | 0.629 | 0.732 | 0.678 | 0.329 | 0.678 | 0.683 | 0.629 | 0.328 | 0.629 |

| U-AL-2 | 0.659 | 0.66 | 0.217 | 0.66 | 0.712 | 0.66 | 0.312 | 0.66 | 0.646 | 0.609 | 0.31 | 0.609 |

| U-AL-W-1 | 0.655 | 0.631 | 0.212 | 0.631 | 0.717 | 0.671 | 0.307 | 0.671 | 0.688 | 0.66 | 0.26 | 0.66 |

| U-AL-W-All | 0.701 | 0.707 | 0.254 | 0.707 | 0.717 | 0.671 | 0.307 | 0.671 | 0.688 | 0.66 | 0.26 | 0.66 |

| U-AL-W-2 | 0.702 | 0.707 | 0.254 | 0.707 | 0.716 | 0.664 | 0.304 | 0.664 | 0.688 | 0.66 | 0.26 | 0.66 |

| D-AL-1 | 0.6 | 0.551 | 0.186 | 0.551 | 0.699 | 0.658 | 0.317 | 0.658 | 0.658 | 0.611 | 0.311 | 0.611 |

| D-AL-All | 0.657 | 0.584 | 0.189 | 0.584 | 0.732 | 0.678 | 0.329 | 0.678 | 0.683 | 0.629 | 0.328 | 0.629 |

| D-AL-2 | 0.65 | 0.596 | 0.195 | 0.596 | 0.699 | 0.658 | 0.317 | 0.658 | 0.658 | 0.611 | 0.311 | 0.611 |

| D-AL-W-1 | 0.602 | 0.502 | 0.191 | 0.502 | 0.718 | 0.667 | 0.304 | 0.667 | 0.688 | 0.66 | 0.26 | 0.66 |

| D-AL-W-All | 0.628 | 0.591 | 0.191 | 0.591 | 0.718 | 0.667 | 0.304 | 0.667 | 0.688 | 0.66 | 0.26 | 0.66 |

| D-AL-W-2 | 0.634 | 0.589 | 0.19 | 0.589 | 0.718 | 0.667 | 0.304 | 0.667 | 0.688 | 0.66 | 0.26 | 0.66 |

| U-ML-1 | 0.713 | 0.713 | 0.228 | 0.713 | 0.707 | 0.651 | 0.287 | 0.651 | 0.704 | 0.68 | 0.348 | 0.68 |

| U-ML-All | 0.717 | 0.713 | 0.228 | 0.713 | 0.704 | 0.653 | 0.292 | 0.653 | 0.704 | 0.68 | 0.348 | 0.68 |

| U-ML-2 | 0.717 | 0.713 | 0.228 | 0.713 | 0.704 | 0.653 | 0.292 | 0.653 | 0.704 | 0.68 | 0.348 | 0.68 |

| U-ML-W-1 | 0.714 | 0.713 | 0.228 | 0.713 | 0.713 | 0.656 | 0.273 | 0.656 | 0.67 | 0.667 | 0.246 | 0.667 |

| U-ML-W-All | 0.72 | 0.713 | 0.228 | 0.713 | 0.713 | 0.656 | 0.273 | 0.656 | 0.67 | 0.667 | 0.246 | 0.667 |

| U-ML-W-2 | 0.72 | 0.713 | 0.228 | 0.713 | 0.713 | 0.656 | 0.273 | 0.656 | 0.67 | 0.667 | 0.246 | 0.667 |

| D-ML-1 | 0.642 | 0.678 | 0.211 | 0.678 | 0.69 | 0.656 | 0.321 | 0.656 | 0.704 | 0.68 | 0.348 | 0.68 |

| D-ML-All | 0.669 | 0.678 | 0.219 | 0.678 | 0.7 | 0.649 | 0.291 | 0.649 | 0.704 | 0.68 | 0.348 | 0.68 |

| D-ML-2 | 0.694 | 0.698 | 0.224 | 0.698 | 0.701 | 0.651 | 0.298 | 0.651 | 0.704 | 0.68 | 0.348 | 0.68 |

| D-ML-W-1 | 0.681 | 0.656 | 0.214 | 0.656 | 0.714 | 0.66 | 0.275 | 0.66 | 0.67 | 0.667 | 0.246 | 0.667 |

| D-ML-W-All | 0.685 | 0.66 | 0.215 | 0.66 | 0.713 | 0.656 | 0.273 | 0.656 | 0.67 | 0.667 | 0.246 | 0.667 |

| D-ML-W-2 | 0.704 | 0.702 | 0.225 | 0.702 | 0.713 | 0.656 | 0.273 | 0.656 | 0.67 | 0.667 | 0.246 | 0.667 |

| Method | RF Prec. | RF Recall | RF BAcc | RF Acc | RS Prec. | RS Recall | RS BAcc | RS Acc | AdaBoost Prec. | AdaBoost Recall | AdaBoost BAcc | AdaBoost Acc |

| AL | 0.678 | 0.664 | 0.303 | 0.664 | 0.691 | 0.678 | 0.325 | 0.678 | 0.743 | 0.56 | 0.255 | 0.56 |

| ML | 0.803 | 0.767 | 0.35 | 0.767 | 0.756 | 0.729 | 0.348 | 0.729 | 0.714 | 0.689 | 0.227 | 0.689 |

| AL-W | 0.764 | 0.729 | 0.33 | 0.729 | 0.71 | 0.684 | 0.33 | 0.684 | 0.788 | 0.622 | 0.302 | 0.622 |

| ML-W | 0.777 | 0.74 | 0.301 | 0.74 | 0.732 | 0.724 | 0.34 | 0.724 | 0.758 | 0.689 | 0.23 | 0.689 |

| U-AL-1 | 0.667 | 0.656 | 0.298 | 0.656 | 0.706 | 0.673 | 0.332 | 0.673 | 0.698 | 0.558 | 0.264 | 0.558 |

| U-AL-All | 0.695 | 0.64 | 0.278 | 0.64 | 0.714 | 0.669 | 0.341 | 0.669 | 0.701 | 0.547 | 0.266 | 0.547 |

| U-AL-2 | 0.678 | 0.664 | 0.303 | 0.664 | 0.699 | 0.682 | 0.327 | 0.682 | 0.743 | 0.56 | 0.255 | 0.56 |

| U-AL-W-1 | 0.76 | 0.727 | 0.329 | 0.727 | 0.72 | 0.702 | 0.329 | 0.702 | 0.788 | 0.622 | 0.302 | 0.622 |

| U-AL-W-All | 0.76 | 0.727 | 0.329 | 0.727 | 0.72 | 0.702 | 0.329 | 0.702 | 0.788 | 0.622 | 0.302 | 0.622 |

| U-AL-W-2 | 0.76 | 0.727 | 0.329 | 0.727 | 0.72 | 0.702 | 0.329 | 0.702 | 0.788 | 0.622 | 0.302 | 0.622 |

| D-AL-1 | 0.754 | 0.72 | 0.331 | 0.72 | 0.706 | 0.693 | 0.363 | 0.693 | 0.717 | 0.547 | 0.249 | 0.547 |

| D-AL-All | 0.74 | 0.7 | 0.312 | 0.7 | 0.738 | 0.72 | 0.373 | 0.72 | 0.701 | 0.547 | 0.266 | 0.547 |

| D-AL-2 | 0.759 | 0.724 | 0.332 | 0.724 | 0.75 | 0.733 | 0.368 | 0.733 | 0.717 | 0.547 | 0.249 | 0.547 |

| D-AL-W-1 | 0.785 | 0.749 | 0.342 | 0.749 | 0.71 | 0.696 | 0.358 | 0.696 | 0.788 | 0.622 | 0.302 | 0.622 |

| D-AL-W-All | 0.79 | 0.751 | 0.343 | 0.751 | 0.768 | 0.744 | 0.358 | 0.744 | 0.788 | 0.622 | 0.302 | 0.622 |

| D-AL-W-2 | 0.79 | 0.751 | 0.343 | 0.751 | 0.768 | 0.744 | 0.358 | 0.744 | 0.788 | 0.622 | 0.302 | 0.622 |

| U-ML-1 | 0.803 | 0.767 | 0.35 | 0.767 | 0.753 | 0.729 | 0.345 | 0.729 | 0.714 | 0.689 | 0.227 | 0.689 |

| U-ML-All | 0.803 | 0.767 | 0.35 | 0.767 | 0.755 | 0.731 | 0.345 | 0.731 | 0.714 | 0.689 | 0.227 | 0.689 |

| U-ML-2 | 0.803 | 0.767 | 0.35 | 0.767 | 0.753 | 0.729 | 0.344 | 0.729 | 0.714 | 0.689 | 0.227 | 0.689 |

| U-ML-W-1 | 0.777 | 0.74 | 0.301 | 0.74 | 0.732 | 0.724 | 0.34 | 0.724 | 0.758 | 0.689 | 0.23 | 0.689 |

| U-ML-W-All | 0.777 | 0.74 | 0.301 | 0.74 | 0.732 | 0.724 | 0.34 | 0.724 | 0.758 | 0.689 | 0.23 | 0.689 |

| U-ML-W-2 | 0.777 | 0.74 | 0.301 | 0.74 | 0.732 | 0.724 | 0.34 | 0.724 | 0.758 | 0.689 | 0.23 | 0.689 |

| D-ML-1 | 0.803 | 0.767 | 0.35 | 0.767 | 0.723 | 0.724 | 0.343 | 0.724 | 0.714 | 0.689 | 0.227 | 0.689 |

| D-ML-All | 0.803 | 0.767 | 0.35 | 0.767 | 0.726 | 0.729 | 0.344 | 0.729 | 0.714 | 0.689 | 0.227 | 0.689 |

| D-ML-2 | 0.803 | 0.767 | 0.35 | 0.767 | 0.741 | 0.729 | 0.352 | 0.729 | 0.714 | 0.689 | 0.227 | 0.689 |

| D-ML-W-1 | 0.778 | 0.738 | 0.3 | 0.738 | 0.725 | 0.724 | 0.343 | 0.724 | 0.758 | 0.689 | 0.23 | 0.689 |

| D-ML-W-All | 0.777 | 0.74 | 0.301 | 0.74 | 0.726 | 0.729 | 0.344 | 0.729 | 0.758 | 0.689 | 0.23 | 0.689 |

| D-ML-W-2 | 0.777 | 0.74 | 0.301 | 0.74 | 0.736 | 0.727 | 0.351 | 0.727 | 0.758 | 0.689 | 0.23 | 0.689 |

| Method | KNN Prec. | KNN Recall | KNN BAcc | KNN Acc | DT Prec. | DT Recall | DT BAcc | DT Acc | GB Prec. | GB Recall | GB BAcc | GB Acc |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| AL | 0.778 | 0.784 | 0.466 | 0.784 | 0.939 | 0.937 | 0.864 | 0.937 | 0.963 | 0.962 | 0.836 | 0.962 |

| ML | 0.811 | 0.812 | 0.452 | 0.812 | 0.944 | 0.942 | 0.861 | 0.942 | 0.967 | 0.965 | 0.874 | 0.965 |

| AL-W | 0.784 | 0.785 | 0.459 | 0.785 | 0.936 | 0.935 | 0.859 | 0.935 | 0.962 | 0.961 | 0.831 | 0.961 |

| ML-W | 0.798 | 0.802 | 0.46 | 0.802 | 0.936 | 0.935 | 0.859 | 0.935 | 0.966 | 0.964 | 0.869 | 0.964 |

| U-AL-1 | 0.779 | 0.786 | 0.48 | 0.786 | 0.938 | 0.937 | 0.862 | 0.937 | 0.962 | 0.961 | 0.831 | 0.961 |

| U-AL-All | 0.803 | 0.792 | 0.467 | 0.792 | 0.944 | 0.942 | 0.861 | 0.942 | 0.962 | 0.961 | 0.831 | 0.961 |

| U-AL-2 | 0.778 | 0.787 | 0.485 | 0.787 | 0.938 | 0.937 | 0.862 | 0.937 | 0.962 | 0.962 | 0.833 | 0.962 |

| U-AL-W-1 | 0.784 | 0.788 | 0.477 | 0.788 | 0.936 | 0.935 | 0.859 | 0.935 | 0.962 | 0.961 | 0.831 | 0.961 |

| U-AL-W-All | 0.785 | 0.788 | 0.477 | 0.788 | 0.936 | 0.935 | 0.859 | 0.935 | 0.962 | 0.961 | 0.831 | 0.961 |

| U-AL-W-2 | 0.785 | 0.788 | 0.477 | 0.788 | 0.936 | 0.935 | 0.859 | 0.935 | 0.962 | 0.961 | 0.831 | 0.961 |

| D-AL-1 | 0.752 | 0.75 | 0.483 | 0.75 | 0.94 | 0.938 | 0.861 | 0.938 | 0.956 | 0.956 | 0.828 | 0.956 |

| D-AL-All | 0.78 | 0.772 | 0.44 | 0.772 | 0.944 | 0.942 | 0.861 | 0.942 | 0.958 | 0.957 | 0.818 | 0.957 |

| D-AL-2 | 0.76 | 0.778 | 0.475 | 0.778 | 0.938 | 0.937 | 0.86 | 0.937 | 0.956 | 0.956 | 0.819 | 0.956 |

| D-AL-W-1 | 0.754 | 0.759 | 0.489 | 0.759 | 0.936 | 0.935 | 0.859 | 0.935 | 0.952 | 0.952 | 0.812 | 0.952 |

| D-AL-W-All | 0.768 | 0.786 | 0.489 | 0.786 | 0.936 | 0.935 | 0.859 | 0.935 | 0.962 | 0.958 | 0.822 | 0.958 |

| D-AL-W-2 | 0.768 | 0.786 | 0.489 | 0.786 | 0.936 | 0.935 | 0.859 | 0.935 | 0.962 | 0.958 | 0.822 | 0.958 |

| U-ML-1 | 0.789 | 0.803 | 0.462 | 0.803 | 0.937 | 0.936 | 0.856 | 0.936 | 0.967 | 0.965 | 0.874 | 0.965 |

| U-ML-All | 0.789 | 0.803 | 0.462 | 0.803 | 0.937 | 0.936 | 0.859 | 0.936 | 0.967 | 0.965 | 0.874 | 0.965 |

| U-ML-2 | 0.789 | 0.803 | 0.462 | 0.803 | 0.937 | 0.936 | 0.856 | 0.936 | 0.967 | 0.965 | 0.874 | 0.965 |

| U-ML-W-1 | 0.795 | 0.803 | 0.467 | 0.803 | 0.936 | 0.935 | 0.859 | 0.935 | 0.966 | 0.964 | 0.869 | 0.964 |

| U-ML-W-All | 0.795 | 0.803 | 0.467 | 0.803 | 0.936 | 0.935 | 0.859 | 0.935 | 0.966 | 0.964 | 0.869 | 0.964 |

| U-ML-W-2 | 0.795 | 0.803 | 0.467 | 0.803 | 0.936 | 0.935 | 0.859 | 0.935 | 0.966 | 0.964 | 0.869 | 0.964 |

| D-ML-1 | 0.781 | 0.798 | 0.465 | 0.798 | 0.938 | 0.937 | 0.859 | 0.937 | 0.96 | 0.957 | 0.835 | 0.957 |

| D-ML-All | 0.78 | 0.798 | 0.464 | 0.798 | 0.938 | 0.937 | 0.859 | 0.937 | 0.962 | 0.959 | 0.837 | 0.959 |

| D-ML-2 | 0.784 | 0.801 | 0.468 | 0.801 | 0.937 | 0.936 | 0.854 | 0.936 | 0.962 | 0.959 | 0.837 | 0.959 |

| D-ML-W-1 | 0.784 | 0.796 | 0.467 | 0.796 | 0.936 | 0.935 | 0.859 | 0.935 | 0.96 | 0.957 | 0.835 | 0.957 |

| D-ML-W-All | 0.783 | 0.796 | 0.466 | 0.796 | 0.936 | 0.935 | 0.859 | 0.935 | 0.962 | 0.959 | 0.837 | 0.959 |

| D-ML-W-2 | 0.787 | 0.799 | 0.467 | 0.799 | 0.936 | 0.935 | 0.859 | 0.935 | 0.962 | 0.959 | 0.837 | 0.959 |

| Method | RF Prec. | RF Recall | RF BAcc | RF Acc | RS Prec. | RS Recall | RS BAcc | RS Acc | AdaBoost Prec. | AdaBoost Recall | AdaBoost BAcc | AdaBoost Acc |

| AL | 0.904 | 0.903 | 0.727 | 0.903 | 0.756 | 0.763 | 0.343 | 0.763 | 0.842 | 0.852 | 0.606 | 0.852 |

| ML | 0.916 | 0.912 | 0.736 | 0.912 | 0.766 | 0.768 | 0.333 | 0.768 | 0.834 | 0.843 | 0.59 | 0.843 |

| AL-W | 0.905 | 0.905 | 0.739 | 0.905 | 0.763 | 0.766 | 0.361 | 0.766 | 0.845 | 0.854 | 0.609 | 0.854 |

| ML-W | 0.917 | 0.913 | 0.747 | 0.913 | 0.768 | 0.768 | 0.337 | 0.768 | 0.836 | 0.845 | 0.591 | 0.845 |

| U-AL-1 | 0.905 | 0.904 | 0.732 | 0.904 | 0.772 | 0.78 | 0.369 | 0.78 | 0.842 | 0.852 | 0.606 | 0.852 |

| U-AL-All | 0.903 | 0.901 | 0.714 | 0.901 | 0.764 | 0.777 | 0.349 | 0.777 | 0.846 | 0.855 | 0.61 | 0.855 |

| U-AL-2 | 0.904 | 0.904 | 0.729 | 0.904 | 0.772 | 0.78 | 0.366 | 0.78 | 0.842 | 0.852 | 0.606 | 0.852 |

| U-AL-W-1 | 0.905 | 0.905 | 0.739 | 0.905 | 0.774 | 0.781 | 0.376 | 0.781 | 0.845 | 0.854 | 0.609 | 0.854 |

| U-AL-W-All | 0.905 | 0.905 | 0.739 | 0.905 | 0.774 | 0.781 | 0.376 | 0.781 | 0.845 | 0.854 | 0.609 | 0.854 |

| U-AL-W-2 | 0.905 | 0.905 | 0.739 | 0.905 | 0.774 | 0.781 | 0.376 | 0.781 | 0.845 | 0.854 | 0.609 | 0.854 |

| D-AL-1 | 0.892 | 0.896 | 0.71 | 0.896 | 0.765 | 0.778 | 0.368 | 0.778 | 0.825 | 0.832 | 0.589 | 0.832 |

| D-AL-All | 0.902 | 0.902 | 0.701 | 0.902 | 0.762 | 0.778 | 0.362 | 0.778 | 0.842 | 0.84 | 0.59 | 0.84 |

| D-AL-2 | 0.895 | 0.9 | 0.714 | 0.9 | 0.765 | 0.779 | 0.371 | 0.779 | 0.839 | 0.845 | 0.598 | 0.845 |

| D-AL-W-1 | 0.899 | 0.902 | 0.749 | 0.902 | 0.773 | 0.785 | 0.39 | 0.785 | 0.829 | 0.834 | 0.582 | 0.834 |

| D-AL-W-All | 0.904 | 0.907 | 0.729 | 0.907 | 0.77 | 0.782 | 0.384 | 0.782 | 0.842 | 0.85 | 0.603 | 0.85 |

| D-AL-W-2 | 0.904 | 0.907 | 0.729 | 0.907 | 0.771 | 0.782 | 0.384 | 0.782 | 0.842 | 0.85 | 0.603 | 0.85 |

| U-ML-1 | 0.914 | 0.911 | 0.736 | 0.911 | 0.766 | 0.764 | 0.331 | 0.764 | 0.834 | 0.843 | 0.59 | 0.843 |

| U-ML-All | 0.915 | 0.912 | 0.743 | 0.912 | 0.762 | 0.763 | 0.329 | 0.763 | 0.834 | 0.843 | 0.59 | 0.843 |

| U-ML-2 | 0.915 | 0.912 | 0.739 | 0.912 | 0.766 | 0.764 | 0.331 | 0.764 | 0.834 | 0.843 | 0.59 | 0.843 |

| U-ML-W-1 | 0.916 | 0.913 | 0.747 | 0.913 | 0.768 | 0.768 | 0.337 | 0.768 | 0.836 | 0.845 | 0.591 | 0.845 |

| U-ML-W-All | 0.916 | 0.913 | 0.747 | 0.913 | 0.768 | 0.768 | 0.337 | 0.768 | 0.836 | 0.845 | 0.591 | 0.845 |

| U-ML-W-2 | 0.918 | 0.914 | 0.752 | 0.914 | 0.768 | 0.768 | 0.337 | 0.768 | 0.836 | 0.845 | 0.591 | 0.845 |

| D-ML-1 | 0.914 | 0.912 | 0.744 | 0.912 | 0.76 | 0.765 | 0.335 | 0.765 | 0.837 | 0.841 | 0.587 | 0.841 |

| D-ML-All | 0.917 | 0.915 | 0.752 | 0.915 | 0.758 | 0.762 | 0.328 | 0.762 | 0.834 | 0.839 | 0.572 | 0.839 |

| D-ML-2 | 0.918 | 0.915 | 0.753 | 0.915 | 0.762 | 0.762 | 0.33 | 0.762 | 0.834 | 0.839 | 0.572 | 0.839 |

| D-ML-W-1 | 0.914 | 0.912 | 0.754 | 0.912 | 0.764 | 0.769 | 0.342 | 0.769 | 0.837 | 0.841 | 0.576 | 0.841 |

| D-ML-W-All | 0.918 | 0.915 | 0.76 | 0.915 | 0.766 | 0.768 | 0.339 | 0.768 | 0.835 | 0.84 | 0.574 | 0.84 |

| D-ML-W-2 | 0.918 | 0.916 | 0.762 | 0.916 | 0.767 | 0.767 | 0.338 | 0.767 | 0.835 | 0.84 | 0.574 | 0.84 |

| Method | KNN Prec. | KNN Recall | KNN BAcc | KNN Acc | DT Prec. | DT Recall | DT BAcc | DT Acc | GB Prec. | GB Recall | GB BAcc | GB Acc |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| AL | 0.765 | 0.795 | 0.428 | 0.795 | 0.924 | 0.923 | 0.804 | 0.923 | 0.933 | 0.925 | 0.758 | 0.925 |

| ML | 0.738 | 0.77 | 0.393 | 0.77 | 0.932 | 0.927 | 0.796 | 0.927 | 0.937 | 0.929 | 0.75 | 0.929 |

| AL-W | 0.772 | 0.795 | 0.424 | 0.795 | 0.931 | 0.929 | 0.841 | 0.929 | 0.937 | 0.928 | 0.765 | 0.928 |

| ML-W | 0.751 | 0.779 | 0.407 | 0.779 | 0.931 | 0.929 | 0.843 | 0.929 | 0.937 | 0.929 | 0.751 | 0.929 |

| U-AL-1 | 0.766 | 0.795 | 0.422 | 0.795 | 0.926 | 0.924 | 0.81 | 0.924 | 0.933 | 0.926 | 0.759 | 0.926 |

| U-AL-All | 0.776 | 0.793 | 0.401 | 0.793 | 0.932 | 0.927 | 0.796 | 0.927 | 0.939 | 0.93 | 0.765 | 0.93 |

| U-AL-2 | 0.766 | 0.792 | 0.424 | 0.792 | 0.924 | 0.923 | 0.804 | 0.923 | 0.933 | 0.925 | 0.758 | 0.925 |

| U-AL-W-1 | 0.77 | 0.796 | 0.425 | 0.796 | 0.931 | 0.929 | 0.843 | 0.929 | 0.937 | 0.928 | 0.765 | 0.928 |

| U-AL-W-All | 0.772 | 0.794 | 0.424 | 0.794 | 0.93 | 0.928 | 0.843 | 0.928 | 0.937 | 0.928 | 0.765 | 0.928 |

| U-AL-W-2 | 0.777 | 0.795 | 0.442 | 0.795 | 0.931 | 0.929 | 0.843 | 0.929 | 0.937 | 0.928 | 0.765 | 0.928 |

| D-AL-1 | 0.7 | 0.703 | 0.42 | 0.703 | 0.919 | 0.919 | 0.784 | 0.919 | 0.891 | 0.888 | 0.709 | 0.888 |

| D-AL-All | 0.764 | 0.741 | 0.409 | 0.741 | 0.932 | 0.927 | 0.796 | 0.927 | 0.895 | 0.901 | 0.644 | 0.901 |

| D-AL-2 | 0.736 | 0.715 | 0.41 | 0.715 | 0.925 | 0.923 | 0.799 | 0.923 | 0.901 | 0.898 | 0.729 | 0.898 |

| D-AL-W-1 | 0.708 | 0.702 | 0.436 | 0.702 | 0.931 | 0.929 | 0.843 | 0.929 | 0.906 | 0.904 | 0.758 | 0.904 |

| D-AL-W-All | 0.747 | 0.755 | 0.423 | 0.755 | 0.931 | 0.929 | 0.843 | 0.929 | 0.902 | 0.904 | 0.699 | 0.904 |

| D-AL-W-2 | 0.737 | 0.707 | 0.409 | 0.707 | 0.931 | 0.929 | 0.843 | 0.929 | 0.911 | 0.909 | 0.765 | 0.909 |

| U-ML-1 | 0.736 | 0.768 | 0.387 | 0.768 | 0.926 | 0.924 | 0.814 | 0.924 | 0.937 | 0.929 | 0.75 | 0.929 |

| U-ML-All | 0.736 | 0.77 | 0.402 | 0.77 | 0.926 | 0.924 | 0.814 | 0.924 | 0.937 | 0.929 | 0.751 | 0.929 |

| U-ML-2 | 0.739 | 0.772 | 0.414 | 0.772 | 0.926 | 0.924 | 0.814 | 0.924 | 0.937 | 0.929 | 0.751 | 0.929 |

| U-ML-W-1 | 0.754 | 0.78 | 0.415 | 0.78 | 0.931 | 0.929 | 0.843 | 0.929 | 0.937 | 0.929 | 0.751 | 0.929 |

| U-ML-W-All | 0.752 | 0.78 | 0.415 | 0.78 | 0.93 | 0.928 | 0.843 | 0.928 | 0.937 | 0.929 | 0.751 | 0.929 |

| U-ML-W-2 | 0.755 | 0.783 | 0.435 | 0.783 | 0.931 | 0.929 | 0.843 | 0.929 | 0.937 | 0.929 | 0.751 | 0.929 |

| D-ML-1 | 0.749 | 0.762 | 0.474 | 0.762 | 0.924 | 0.923 | 0.802 | 0.923 | 0.894 | 0.905 | 0.69 | 0.905 |

| D-ML-All | 0.743 | 0.76 | 0.456 | 0.76 | 0.925 | 0.923 | 0.807 | 0.923 | 0.896 | 0.909 | 0.686 | 0.909 |

| D-ML-2 | 0.75 | 0.765 | 0.473 | 0.765 | 0.925 | 0.923 | 0.805 | 0.923 | 0.895 | 0.905 | 0.692 | 0.905 |

| D-ML-W-1 | 0.742 | 0.761 | 0.476 | 0.761 | 0.931 | 0.929 | 0.843 | 0.929 | 0.893 | 0.903 | 0.688 | 0.903 |

| D-ML-W-All | 0.74 | 0.762 | 0.452 | 0.762 | 0.931 | 0.929 | 0.843 | 0.929 | 0.897 | 0.909 | 0.686 | 0.909 |

| D-ML-W-2 | 0.727 | 0.756 | 0.454 | 0.756 | 0.931 | 0.929 | 0.843 | 0.929 | 0.895 | 0.905 | 0.693 | 0.905 |

| Method | RF Prec. | RF Recall | RF BAcc | RF Acc | RS Prec. | RS Recall | RS BAcc | RS Acc | AdaBoost Prec. | AdaBoost Recall | AdaBoost BAcc | AdaBoost Acc |

| AL | 0.886 | 0.883 | 0.639 | 0.883 | 0.741 | 0.744 | 0.311 | 0.744 | 0.836 | 0.83 | 0.446 | 0.83 |

| ML | 0.897 | 0.893 | 0.637 | 0.893 | 0.724 | 0.745 | 0.301 | 0.745 | 0.843 | 0.846 | 0.456 | 0.846 |

| AL-W | 0.887 | 0.884 | 0.641 | 0.884 | 0.737 | 0.746 | 0.319 | 0.746 | 0.846 | 0.837 | 0.452 | 0.837 |

| ML-W | 0.886 | 0.883 | 0.635 | 0.883 | 0.734 | 0.749 | 0.305 | 0.749 | 0.841 | 0.844 | 0.454 | 0.844 |

| U-AL-1 | 0.886 | 0.883 | 0.642 | 0.883 | 0.754 | 0.763 | 0.328 | 0.763 | 0.837 | 0.833 | 0.448 | 0.833 |

| U-AL-All | 0.887 | 0.883 | 0.626 | 0.883 | 0.755 | 0.764 | 0.33 | 0.764 | 0.854 | 0.841 | 0.459 | 0.841 |

| U-AL-2 | 0.884 | 0.88 | 0.642 | 0.88 | 0.755 | 0.763 | 0.327 | 0.763 | 0.836 | 0.83 | 0.446 | 0.83 |

| U-AL-W-1 | 0.884 | 0.881 | 0.642 | 0.881 | 0.753 | 0.763 | 0.329 | 0.763 | 0.846 | 0.837 | 0.452 | 0.837 |

| U-AL-W-All | 0.887 | 0.884 | 0.641 | 0.884 | 0.753 | 0.763 | 0.329 | 0.763 | 0.846 | 0.837 | 0.452 | 0.837 |

| U-AL-W-2 | 0.887 | 0.884 | 0.641 | 0.884 | 0.755 | 0.763 | 0.329 | 0.763 | 0.846 | 0.837 | 0.452 | 0.837 |

| D-AL-1 | 0.844 | 0.855 | 0.607 | 0.855 | 0.718 | 0.754 | 0.356 | 0.754 | 0.791 | 0.785 | 0.51 | 0.785 |

| D-AL-All | 0.854 | 0.873 | 0.568 | 0.873 | 0.741 | 0.767 | 0.343 | 0.767 | 0.822 | 0.823 | 0.426 | 0.823 |

| D-AL-2 | 0.847 | 0.86 | 0.61 | 0.86 | 0.726 | 0.759 | 0.351 | 0.759 | 0.805 | 0.795 | 0.452 | 0.795 |

| D-AL-W-1 | 0.853 | 0.86 | 0.659 | 0.86 | 0.741 | 0.77 | 0.377 | 0.77 | 0.823 | 0.826 | 0.556 | 0.826 |

| D-AL-W-All | 0.854 | 0.87 | 0.614 | 0.87 | 0.748 | 0.77 | 0.358 | 0.77 | 0.819 | 0.822 | 0.453 | 0.822 |

| D-AL-W-2 | 0.858 | 0.865 | 0.653 | 0.865 | 0.746 | 0.775 | 0.368 | 0.775 | 0.807 | 0.81 | 0.482 | 0.81 |

| U-ML-1 | 0.886 | 0.883 | 0.639 | 0.883 | 0.725 | 0.744 | 0.3 | 0.744 | 0.843 | 0.846 | 0.456 | 0.846 |

| U-ML-All | 0.897 | 0.893 | 0.639 | 0.893 | 0.727 | 0.745 | 0.3 | 0.745 | 0.843 | 0.846 | 0.456 | 0.846 |

| U-ML-2 | 0.897 | 0.893 | 0.64 | 0.893 | 0.725 | 0.744 | 0.3 | 0.744 | 0.843 | 0.846 | 0.456 | 0.846 |

| U-ML-W-1 | 0.887 | 0.883 | 0.638 | 0.883 | 0.734 | 0.749 | 0.305 | 0.749 | 0.841 | 0.844 | 0.454 | 0.844 |

| U-ML-W-All | 0.897 | 0.893 | 0.637 | 0.893 | 0.733 | 0.749 | 0.305 | 0.749 | 0.841 | 0.844 | 0.454 | 0.844 |

| U-ML-W-2 | 0.897 | 0.893 | 0.637 | 0.893 | 0.733 | 0.749 | 0.305 | 0.749 | 0.841 | 0.844 | 0.454 | 0.844 |

| D-ML-1 | 0.861 | 0.863 | 0.623 | 0.863 | 0.718 | 0.745 | 0.303 | 0.745 | 0.847 | 0.85 | 0.477 | 0.85 |

| D-ML-All | 0.868 | 0.869 | 0.624 | 0.869 | 0.714 | 0.744 | 0.301 | 0.744 | 0.85 | 0.852 | 0.479 | 0.852 |

| D-ML-2 | 0.863 | 0.865 | 0.62 | 0.865 | 0.726 | 0.738 | 0.302 | 0.738 | 0.85 | 0.852 | 0.479 | 0.852 |

| D-ML-W-1 | 0.864 | 0.867 | 0.626 | 0.867 | 0.723 | 0.749 | 0.309 | 0.749 | 0.845 | 0.848 | 0.475 | 0.848 |

| D-ML-W-All | 0.869 | 0.87 | 0.623 | 0.87 | 0.725 | 0.748 | 0.306 | 0.748 | 0.848 | 0.85 | 0.477 | 0.85 |

| D-ML-W-2 | 0.867 | 0.869 | 0.628 | 0.869 | 0.722 | 0.748 | 0.306 | 0.748 | 0.848 | 0.851 | 0.478 | 0.851 |

| Method | KNN Prec. | KNN Recall | KNN BAcc | KNN Acc | DT Prec. | DT Recall | DT BAcc | DT Acc | GB Prec. | GB Recall | GB BAcc | GB Acc |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| AL | 0.723 | 0.746 | 0.341 | 0.746 | 0.902 | 0.902 | 0.737 | 0.902 | 0.955 | 0.949 | 0.766 | 0.949 |

| ML | 0.695 | 0.737 | 0.317 | 0.737 | 0.901 | 0.9 | 0.714 | 0.9 | 0.946 | 0.936 | 0.708 | 0.936 |

| AL-W | 0.736 | 0.755 | 0.35 | 0.755 | 0.901 | 0.902 | 0.748 | 0.902 | 0.955 | 0.949 | 0.771 | 0.949 |

| ML-W | 0.691 | 0.736 | 0.322 | 0.736 | 0.901 | 0.902 | 0.748 | 0.902 | 0.946 | 0.936 | 0.708 | 0.936 |

| U-AL-1 | 0.726 | 0.748 | 0.348 | 0.748 | 0.901 | 0.902 | 0.739 | 0.902 | 0.954 | 0.948 | 0.765 | 0.948 |

| U-AL-All | 0.731 | 0.75 | 0.348 | 0.75 | 0.901 | 0.9 | 0.714 | 0.9 | 0.955 | 0.948 | 0.755 | 0.948 |

| U-AL-2 | 0.709 | 0.74 | 0.326 | 0.74 | 0.902 | 0.903 | 0.738 | 0.903 | 0.955 | 0.949 | 0.766 | 0.949 |

| U-AL-W-1 | 0.733 | 0.754 | 0.347 | 0.754 | 0.901 | 0.902 | 0.748 | 0.902 | 0.955 | 0.949 | 0.771 | 0.949 |

| U-AL-W-All | 0.726 | 0.749 | 0.34 | 0.749 | 0.901 | 0.902 | 0.748 | 0.902 | 0.955 | 0.949 | 0.771 | 0.949 |

| U-AL-W-2 | 0.718 | 0.747 | 0.332 | 0.747 | 0.901 | 0.902 | 0.748 | 0.902 | 0.955 | 0.949 | 0.771 | 0.949 |

| D-AL-1 | 0.714 | 0.659 | 0.388 | 0.659 | 0.9 | 0.901 | 0.739 | 0.901 | 0.86 | 0.854 | 0.644 | 0.854 |

| D-AL-All | 0.693 | 0.695 | 0.368 | 0.695 | 0.901 | 0.9 | 0.714 | 0.9 | 0.869 | 0.885 | 0.504 | 0.885 |

| D-AL-2 | 0.722 | 0.668 | 0.412 | 0.668 | 0.9 | 0.901 | 0.73 | 0.901 | 0.88 | 0.872 | 0.669 | 0.872 |

| D-AL-W-1 | 0.694 | 0.685 | 0.413 | 0.685 | 0.901 | 0.902 | 0.748 | 0.902 | 0.875 | 0.859 | 0.668 | 0.859 |

| D-AL-W-All | 0.722 | 0.703 | 0.401 | 0.703 | 0.901 | 0.902 | 0.748 | 0.902 | 0.874 | 0.876 | 0.592 | 0.876 |

| D-AL-W-2 | 0.717 | 0.655 | 0.415 | 0.655 | 0.901 | 0.902 | 0.748 | 0.902 | 0.887 | 0.868 | 0.679 | 0.868 |

| U-ML-1 | 0.693 | 0.737 | 0.324 | 0.737 | 0.9 | 0.9 | 0.727 | 0.9 | 0.947 | 0.936 | 0.708 | 0.936 |

| U-ML-All | 0.688 | 0.735 | 0.319 | 0.735 | 0.9 | 0.9 | 0.727 | 0.9 | 0.946 | 0.936 | 0.708 | 0.936 |

| U-ML-2 | 0.679 | 0.731 | 0.315 | 0.731 | 0.9 | 0.9 | 0.727 | 0.9 | 0.946 | 0.936 | 0.708 | 0.936 |

| U-ML-W-1 | 0.701 | 0.741 | 0.328 | 0.741 | 0.901 | 0.902 | 0.748 | 0.902 | 0.947 | 0.936 | 0.708 | 0.936 |

| U-ML-W-All | 0.69 | 0.737 | 0.321 | 0.737 | 0.901 | 0.902 | 0.748 | 0.902 | 0.946 | 0.936 | 0.708 | 0.936 |

| U-ML-W-2 | 0.69 | 0.737 | 0.321 | 0.737 | 0.901 | 0.902 | 0.748 | 0.902 | 0.946 | 0.936 | 0.708 | 0.936 |

| D-ML-1 | 0.712 | 0.737 | 0.39 | 0.737 | 0.902 | 0.902 | 0.74 | 0.902 | 0.838 | 0.847 | 0.494 | 0.847 |

| D-ML-All | 0.71 | 0.737 | 0.376 | 0.737 | 0.901 | 0.902 | 0.74 | 0.902 | 0.862 | 0.876 | 0.511 | 0.876 |

| D-ML-2 | 0.713 | 0.738 | 0.397 | 0.738 | 0.902 | 0.902 | 0.735 | 0.902 | 0.847 | 0.856 | 0.504 | 0.856 |

| D-ML-W-1 | 0.708 | 0.74 | 0.403 | 0.74 | 0.901 | 0.902 | 0.748 | 0.902 | 0.845 | 0.853 | 0.501 | 0.853 |

| D-ML-W-All | 0.715 | 0.74 | 0.38 | 0.74 | 0.901 | 0.902 | 0.748 | 0.902 | 0.866 | 0.878 | 0.516 | 0.878 |

| D-ML-W-2 | 0.718 | 0.742 | 0.424 | 0.742 | 0.901 | 0.902 | 0.748 | 0.902 | 0.853 | 0.862 | 0.511 | 0.862 |

| Method | RF Prec. | RF Recall | RF BAcc | RF Acc | RS Prec. | RS Recall | RS BAcc | RS Acc | AdaBoost Prec. | AdaBoost Recall | AdaBoost BAcc | AdaBoost Acc |

| AL | 0.869 | 0.867 | 0.579 | 0.867 | 0.742 | 0.757 | 0.316 | 0.757 | 0.837 | 0.841 | 0.464 | 0.841 |

| ML | 0.868 | 0.867 | 0.556 | 0.867 | 0.742 | 0.749 | 0.306 | 0.749 | 0.846 | 0.844 | 0.459 | 0.844 |

| AL-W | 0.87 | 0.867 | 0.579 | 0.867 | 0.744 | 0.758 | 0.317 | 0.758 | 0.844 | 0.843 | 0.464 | 0.843 |

| ML-W | 0.87 | 0.868 | 0.56 | 0.868 | 0.741 | 0.75 | 0.307 | 0.75 | 0.846 | 0.844 | 0.459 | 0.844 |

| U-AL-1 | 0.869 | 0.866 | 0.576 | 0.866 | 0.766 | 0.777 | 0.341 | 0.777 | 0.836 | 0.84 | 0.461 | 0.84 |

| U-AL-All | 0.87 | 0.866 | 0.57 | 0.866 | 0.765 | 0.777 | 0.341 | 0.777 | 0.843 | 0.842 | 0.456 | 0.842 |

| U-AL-2 | 0.867 | 0.866 | 0.57 | 0.866 | 0.762 | 0.775 | 0.339 | 0.775 | 0.837 | 0.841 | 0.464 | 0.841 |

| U-AL-W-1 | 0.871 | 0.867 | 0.581 | 0.867 | 0.767 | 0.778 | 0.344 | 0.778 | 0.844 | 0.843 | 0.464 | 0.843 |

| U-AL-W-All | 0.87 | 0.866 | 0.574 | 0.866 | 0.765 | 0.777 | 0.341 | 0.777 | 0.844 | 0.843 | 0.464 | 0.843 |

| U-AL-W-2 | 0.868 | 0.866 | 0.574 | 0.866 | 0.765 | 0.777 | 0.341 | 0.777 | 0.844 | 0.843 | 0.464 | 0.843 |

| D-AL-1 | 0.81 | 0.822 | 0.59 | 0.822 | 0.745 | 0.772 | 0.407 | 0.772 | 0.783 | 0.766 | 0.522 | 0.766 |

| D-AL-All | 0.832 | 0.845 | 0.482 | 0.845 | 0.751 | 0.781 | 0.385 | 0.781 | 0.825 | 0.821 | 0.454 | 0.821 |

| D-AL-2 | 0.811 | 0.825 | 0.571 | 0.825 | 0.737 | 0.772 | 0.396 | 0.772 | 0.797 | 0.798 | 0.487 | 0.798 |

| D-AL-W-1 | 0.842 | 0.847 | 0.677 | 0.847 | 0.776 | 0.796 | 0.452 | 0.796 | 0.8 | 0.788 | 0.59 | 0.788 |

| D-AL-W-All | 0.833 | 0.844 | 0.543 | 0.844 | 0.752 | 0.782 | 0.396 | 0.782 | 0.806 | 0.799 | 0.475 | 0.799 |

| D-AL-W-2 | 0.847 | 0.852 | 0.678 | 0.852 | 0.782 | 0.802 | 0.439 | 0.802 | 0.801 | 0.793 | 0.552 | 0.793 |

| U-ML-1 | 0.87 | 0.868 | 0.557 | 0.868 | 0.739 | 0.748 | 0.305 | 0.748 | 0.846 | 0.844 | 0.459 | 0.844 |

| U-ML-All | 0.877 | 0.871 | 0.56 | 0.871 | 0.743 | 0.748 | 0.305 | 0.748 | 0.846 | 0.844 | 0.459 | 0.844 |

| U-ML-2 | 0.876 | 0.87 | 0.556 | 0.87 | 0.738 | 0.748 | 0.304 | 0.748 | 0.846 | 0.844 | 0.459 | 0.844 |

| U-ML-W-1 | 0.871 | 0.869 | 0.56 | 0.869 | 0.739 | 0.75 | 0.307 | 0.75 | 0.846 | 0.844 | 0.459 | 0.844 |

| U-ML-W-All | 0.878 | 0.872 | 0.564 | 0.872 | 0.741 | 0.75 | 0.306 | 0.75 | 0.846 | 0.844 | 0.459 | 0.844 |

| U-ML-W-2 | 0.876 | 0.87 | 0.555 | 0.87 | 0.739 | 0.75 | 0.306 | 0.75 | 0.846 | 0.844 | 0.459 | 0.844 |

| D-ML-1 | 0.858 | 0.859 | 0.524 | 0.859 | 0.732 | 0.75 | 0.309 | 0.75 | 0.823 | 0.838 | 0.455 | 0.838 |

| D-ML-All | 0.853 | 0.855 | 0.506 | 0.855 | 0.717 | 0.742 | 0.301 | 0.742 | 0.816 | 0.83 | 0.457 | 0.83 |

| D-ML-2 | 0.858 | 0.859 | 0.524 | 0.859 | 0.725 | 0.748 | 0.307 | 0.748 | 0.823 | 0.838 | 0.455 | 0.838 |

| D-ML-W-1 | 0.859 | 0.86 | 0.527 | 0.86 | 0.729 | 0.751 | 0.31 | 0.751 | 0.827 | 0.839 | 0.456 | 0.839 |

| D-ML-W-All | 0.841 | 0.85 | 0.515 | 0.85 | 0.715 | 0.745 | 0.303 | 0.745 | 0.816 | 0.83 | 0.457 | 0.83 |

| D-ML-W-2 | 0.859 | 0.861 | 0.53 | 0.861 | 0.724 | 0.75 | 0.309 | 0.75 | 0.827 | 0.839 | 0.456 | 0.839 |

| Method | K-Nearest Neighbors | | | | Decision Tree | | | | Gradient Boosting | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc |

| AL | 0.718 | 0.742 | 0.331 | 0.742 | 0.887 | 0.89 | 0.727 | 0.89 | 0.933 | 0.917 | 0.67 | 0.917 |

| ML | 0.67 | 0.723 | 0.289 | 0.723 | 0.894 | 0.893 | 0.72 | 0.893 | 0.935 | 0.919 | 0.654 | 0.919 |

| AL-W | 0.726 | 0.748 | 0.335 | 0.748 | 0.892 | 0.893 | 0.747 | 0.893 | 0.932 | 0.919 | 0.676 | 0.919 |

| ML-W | 0.659 | 0.72 | 0.292 | 0.72 | 0.892 | 0.893 | 0.747 | 0.893 | 0.935 | 0.919 | 0.654 | 0.919 |

| U-AL-1 | 0.719 | 0.748 | 0.327 | 0.748 | 0.888 | 0.891 | 0.726 | 0.891 | 0.932 | 0.917 | 0.673 | 0.917 |

| U-AL-All | 0.722 | 0.748 | 0.326 | 0.748 | 0.894 | 0.893 | 0.72 | 0.893 | 0.935 | 0.917 | 0.675 | 0.917 |

| U-AL-2 | 0.719 | 0.745 | 0.328 | 0.745 | 0.887 | 0.89 | 0.732 | 0.89 | 0.933 | 0.917 | 0.675 | 0.917 |

| U-AL-W-1 | 0.72 | 0.751 | 0.333 | 0.751 | 0.892 | 0.893 | 0.747 | 0.893 | 0.932 | 0.919 | 0.676 | 0.919 |

| U-AL-W-All | 0.723 | 0.748 | 0.331 | 0.748 | 0.892 | 0.893 | 0.747 | 0.893 | 0.932 | 0.919 | 0.676 | 0.919 |

| U-AL-W-2 | 0.725 | 0.75 | 0.332 | 0.75 | 0.892 | 0.893 | 0.747 | 0.893 | 0.932 | 0.919 | 0.676 | 0.919 |

| D-AL-1 | 0.717 | 0.625 | 0.381 | 0.625 | 0.886 | 0.889 | 0.726 | 0.889 | 0.817 | 0.8 | 0.584 | 0.8 |

| D-AL-All | 0.756 | 0.657 | 0.376 | 0.657 | 0.894 | 0.893 | 0.72 | 0.893 | 0.836 | 0.84 | 0.46 | 0.84 |

| D-AL-2 | 0.73 | 0.632 | 0.395 | 0.632 | 0.888 | 0.89 | 0.723 | 0.89 | 0.835 | 0.818 | 0.586 | 0.818 |

| D-AL-W-1 | 0.728 | 0.653 | 0.396 | 0.653 | 0.892 | 0.893 | 0.747 | 0.893 | 0.869 | 0.85 | 0.702 | 0.85 |

| D-AL-W-All | 0.716 | 0.683 | 0.378 | 0.683 | 0.892 | 0.893 | 0.747 | 0.893 | 0.843 | 0.844 | 0.533 | 0.844 |

| D-AL-W-2 | 0.725 | 0.654 | 0.392 | 0.654 | 0.892 | 0.893 | 0.747 | 0.893 | 0.877 | 0.859 | 0.708 | 0.859 |

| U-ML-1 | 0.67 | 0.723 | 0.288 | 0.723 | 0.889 | 0.891 | 0.733 | 0.891 | 0.935 | 0.919 | 0.654 | 0.919 |

| U-ML-All | 0.669 | 0.722 | 0.292 | 0.722 | 0.889 | 0.891 | 0.733 | 0.891 | 0.935 | 0.919 | 0.654 | 0.919 |

| U-ML-2 | 0.671 | 0.723 | 0.289 | 0.723 | 0.889 | 0.891 | 0.733 | 0.891 | 0.935 | 0.919 | 0.654 | 0.919 |

| U-ML-W-1 | 0.663 | 0.722 | 0.292 | 0.722 | 0.892 | 0.893 | 0.747 | 0.893 | 0.935 | 0.919 | 0.654 | 0.919 |

| U-ML-W-All | 0.666 | 0.722 | 0.293 | 0.722 | 0.892 | 0.893 | 0.747 | 0.893 | 0.935 | 0.919 | 0.654 | 0.919 |

| U-ML-W-2 | 0.666 | 0.722 | 0.293 | 0.722 | 0.892 | 0.893 | 0.747 | 0.893 | 0.935 | 0.919 | 0.654 | 0.919 |

| D-ML-1 | 0.63 | 0.669 | 0.325 | 0.669 | 0.889 | 0.891 | 0.733 | 0.891 | 0.839 | 0.855 | 0.521 | 0.855 |

| D-ML-All | 0.661 | 0.688 | 0.322 | 0.688 | 0.889 | 0.891 | 0.733 | 0.891 | 0.851 | 0.858 | 0.523 | 0.858 |

| D-ML-2 | 0.626 | 0.668 | 0.319 | 0.668 | 0.887 | 0.889 | 0.728 | 0.889 | 0.844 | 0.859 | 0.526 | 0.859 |

| D-ML-W-1 | 0.631 | 0.672 | 0.332 | 0.672 | 0.892 | 0.893 | 0.747 | 0.893 | 0.845 | 0.858 | 0.529 | 0.858 |

| D-ML-W-All | 0.655 | 0.688 | 0.324 | 0.688 | 0.892 | 0.893 | 0.747 | 0.893 | 0.848 | 0.859 | 0.52 | 0.859 |

| D-ML-W-2 | 0.629 | 0.672 | 0.325 | 0.672 | 0.892 | 0.893 | 0.747 | 0.893 | 0.85 | 0.862 | 0.533 | 0.862 |

| Method | Random Forest | | | | Random Subspace | | | | AdaBoost | | | |

| | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc |

| AL | 0.862 | 0.859 | 0.55 | 0.859 | 0.747 | 0.76 | 0.318 | 0.76 | 0.874 | 0.828 | 0.451 | 0.828 |

| ML | 0.855 | 0.856 | 0.545 | 0.856 | 0.749 | 0.754 | 0.311 | 0.754 | 0.83 | 0.821 | 0.446 | 0.821 |

| AL-W | 0.859 | 0.858 | 0.553 | 0.858 | 0.746 | 0.762 | 0.322 | 0.762 | 0.841 | 0.838 | 0.452 | 0.838 |

| ML-W | 0.856 | 0.857 | 0.543 | 0.857 | 0.753 | 0.758 | 0.316 | 0.758 | 0.829 | 0.821 | 0.444 | 0.821 |

| U-AL-1 | 0.862 | 0.859 | 0.547 | 0.859 | 0.751 | 0.767 | 0.329 | 0.767 | 0.874 | 0.828 | 0.452 | 0.828 |

| U-AL-All | 0.856 | 0.855 | 0.541 | 0.855 | 0.752 | 0.769 | 0.331 | 0.769 | 0.855 | 0.837 | 0.455 | 0.837 |

| U-AL-2 | 0.861 | 0.858 | 0.545 | 0.858 | 0.754 | 0.769 | 0.329 | 0.769 | 0.873 | 0.827 | 0.451 | 0.827 |

| U-AL-W-1 | 0.859 | 0.857 | 0.55 | 0.857 | 0.753 | 0.772 | 0.331 | 0.772 | 0.841 | 0.838 | 0.452 | 0.838 |

| U-AL-W-All | 0.857 | 0.855 | 0.547 | 0.855 | 0.752 | 0.77 | 0.33 | 0.77 | 0.841 | 0.838 | 0.452 | 0.838 |

| U-AL-W-2 | 0.857 | 0.856 | 0.547 | 0.856 | 0.752 | 0.77 | 0.33 | 0.77 | 0.841 | 0.838 | 0.452 | 0.838 |

| D-AL-1 | 0.793 | 0.807 | 0.544 | 0.807 | 0.733 | 0.758 | 0.391 | 0.758 | 0.771 | 0.736 | 0.5 | 0.736 |

| D-AL-All | 0.809 | 0.826 | 0.477 | 0.826 | 0.741 | 0.773 | 0.372 | 0.773 | 0.827 | 0.808 | 0.441 | 0.808 |

| D-AL-2 | 0.797 | 0.813 | 0.548 | 0.813 | 0.742 | 0.768 | 0.403 | 0.768 | 0.776 | 0.749 | 0.489 | 0.749 |

| D-AL-W-1 | 0.824 | 0.835 | 0.624 | 0.835 | 0.784 | 0.789 | 0.469 | 0.789 | 0.772 | 0.768 | 0.526 | 0.768 |

| D-AL-W-All | 0.818 | 0.832 | 0.564 | 0.832 | 0.746 | 0.774 | 0.391 | 0.774 | 0.809 | 0.8 | 0.457 | 0.8 |

| D-AL-W-2 | 0.833 | 0.844 | 0.635 | 0.844 | 0.783 | 0.796 | 0.456 | 0.796 | 0.779 | 0.778 | 0.53 | 0.778 |

| U-ML-1 | 0.854 | 0.855 | 0.535 | 0.855 | 0.748 | 0.754 | 0.311 | 0.754 | 0.83 | 0.821 | 0.446 | 0.821 |

| U-ML-All | 0.855 | 0.855 | 0.534 | 0.855 | 0.747 | 0.754 | 0.311 | 0.754 | 0.83 | 0.821 | 0.446 | 0.821 |

| U-ML-2 | 0.854 | 0.855 | 0.537 | 0.855 | 0.748 | 0.754 | 0.311 | 0.754 | 0.83 | 0.821 | 0.446 | 0.821 |

| U-ML-W-1 | 0.856 | 0.856 | 0.538 | 0.856 | 0.752 | 0.758 | 0.316 | 0.758 | 0.829 | 0.821 | 0.444 | 0.821 |

| U-ML-W-All | 0.856 | 0.857 | 0.541 | 0.857 | 0.752 | 0.758 | 0.316 | 0.758 | 0.829 | 0.821 | 0.444 | 0.821 |

| U-ML-W-2 | 0.856 | 0.856 | 0.538 | 0.856 | 0.752 | 0.758 | 0.316 | 0.758 | 0.829 | 0.821 | 0.444 | 0.821 |

| D-ML-1 | 0.829 | 0.831 | 0.504 | 0.831 | 0.728 | 0.749 | 0.312 | 0.749 | 0.824 | 0.818 | 0.45 | 0.818 |

| D-ML-All | 0.827 | 0.829 | 0.49 | 0.829 | 0.722 | 0.746 | 0.306 | 0.746 | 0.799 | 0.803 | 0.44 | 0.803 |

| D-ML-2 | 0.83 | 0.832 | 0.502 | 0.832 | 0.726 | 0.748 | 0.312 | 0.748 | 0.823 | 0.816 | 0.44 | 0.816 |

| D-ML-W-1 | 0.831 | 0.833 | 0.505 | 0.833 | 0.729 | 0.751 | 0.318 | 0.751 | 0.819 | 0.807 | 0.446 | 0.807 |

| D-ML-W-All | 0.826 | 0.83 | 0.491 | 0.83 | 0.733 | 0.751 | 0.311 | 0.751 | 0.799 | 0.803 | 0.44 | 0.803 |

| D-ML-W-2 | 0.831 | 0.833 | 0.504 | 0.833 | 0.727 | 0.75 | 0.318 | 0.75 | 0.821 | 0.813 | 0.439 | 0.813 |

| Method | K-Nearest Neighbors | | | | Decision Tree | | | | Gradient Boosting | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc |

| AL | 0.716 | 0.746 | 0.32 | 0.746 | 0.878 | 0.873 | 0.638 | 0.873 | 0.917 | 0.907 | 0.648 | 0.907 |

| ML | 0.656 | 0.719 | 0.273 | 0.719 | 0.878 | 0.87 | 0.617 | 0.87 | 0.918 | 0.908 | 0.635 | 0.908 |

| AL-W | 0.717 | 0.747 | 0.322 | 0.747 | 0.88 | 0.877 | 0.651 | 0.877 | 0.915 | 0.906 | 0.641 | 0.906 |

| ML-W | 0.65 | 0.718 | 0.271 | 0.718 | 0.872 | 0.871 | 0.631 | 0.871 | 0.918 | 0.908 | 0.635 | 0.908 |

| U-AL-1 | 0.72 | 0.748 | 0.324 | 0.748 | 0.876 | 0.87 | 0.63 | 0.87 | 0.916 | 0.907 | 0.644 | 0.907 |

| U-AL-All | 0.697 | 0.728 | 0.309 | 0.728 | 0.878 | 0.87 | 0.617 | 0.87 | 0.916 | 0.907 | 0.651 | 0.907 |

| U-AL-2 | 0.701 | 0.729 | 0.314 | 0.729 | 0.878 | 0.872 | 0.636 | 0.872 | 0.916 | 0.906 | 0.65 | 0.906 |

| U-AL-W-1 | 0.715 | 0.746 | 0.323 | 0.746 | 0.872 | 0.871 | 0.631 | 0.871 | 0.915 | 0.91 | 0.646 | 0.91 |

| U-AL-W-All | 0.697 | 0.728 | 0.309 | 0.728 | 0.872 | 0.87 | 0.631 | 0.87 | 0.915 | 0.908 | 0.652 | 0.908 |

| U-AL-W-2 | 0.705 | 0.73 | 0.323 | 0.73 | 0.872 | 0.871 | 0.631 | 0.871 | 0.913 | 0.906 | 0.649 | 0.906 |

| D-AL-1 | 0.688 | 0.66 | 0.377 | 0.66 | 0.871 | 0.871 | 0.636 | 0.871 | 0.792 | 0.757 | 0.57 | 0.757 |

| D-AL-All | 0.766 | 0.642 | 0.372 | 0.642 | 0.878 | 0.87 | 0.617 | 0.87 | 0.802 | 0.802 | 0.425 | 0.802 |

| D-AL-2 | 0.738 | 0.625 | 0.375 | 0.625 | 0.882 | 0.873 | 0.634 | 0.873 | 0.796 | 0.762 | 0.57 | 0.762 |

| D-AL-W-1 | 0.754 | 0.64 | 0.396 | 0.64 | 0.872 | 0.87 | 0.631 | 0.87 | 0.804 | 0.762 | 0.588 | 0.762 |

| D-AL-W-All | 0.739 | 0.67 | 0.379 | 0.67 | 0.872 | 0.87 | 0.631 | 0.87 | 0.809 | 0.79 | 0.521 | 0.79 |

| D-AL-W-2 | 0.754 | 0.64 | 0.396 | 0.64 | 0.872 | 0.87 | 0.631 | 0.87 | 0.806 | 0.766 | 0.594 | 0.766 |

| U-ML-1 | 0.664 | 0.721 | 0.276 | 0.721 | 0.878 | 0.872 | 0.636 | 0.872 | 0.918 | 0.909 | 0.637 | 0.909 |

| U-ML-All | 0.652 | 0.717 | 0.271 | 0.717 | 0.878 | 0.872 | 0.636 | 0.872 | 0.917 | 0.909 | 0.64 | 0.909 |

| U-ML-2 | 0.655 | 0.717 | 0.272 | 0.717 | 0.878 | 0.872 | 0.636 | 0.872 | 0.918 | 0.909 | 0.639 | 0.909 |

| U-ML-W-1 | 0.659 | 0.721 | 0.274 | 0.721 | 0.872 | 0.871 | 0.631 | 0.871 | 0.918 | 0.909 | 0.637 | 0.909 |

| U-ML-W-All | 0.652 | 0.718 | 0.271 | 0.718 | 0.872 | 0.87 | 0.631 | 0.87 | 0.917 | 0.909 | 0.64 | 0.909 |

| U-ML-W-2 | 0.653 | 0.717 | 0.271 | 0.717 | 0.872 | 0.871 | 0.631 | 0.871 | 0.918 | 0.909 | 0.64 | 0.909 |

| D-ML-1 | 0.607 | 0.671 | 0.292 | 0.671 | 0.874 | 0.872 | 0.637 | 0.872 | 0.837 | 0.843 | 0.507 | 0.843 |

| D-ML-All | 0.655 | 0.692 | 0.299 | 0.692 | 0.878 | 0.872 | 0.636 | 0.872 | 0.841 | 0.852 | 0.512 | 0.852 |

| D-ML-2 | 0.623 | 0.671 | 0.316 | 0.671 | 0.871 | 0.872 | 0.634 | 0.872 | 0.836 | 0.842 | 0.508 | 0.842 |

| D-ML-W-1 | 0.63 | 0.68 | 0.312 | 0.68 | 0.872 | 0.87 | 0.631 | 0.87 | 0.839 | 0.844 | 0.507 | 0.844 |

| D-ML-W-All | 0.66 | 0.694 | 0.315 | 0.694 | 0.872 | 0.87 | 0.631 | 0.87 | 0.84 | 0.85 | 0.511 | 0.85 |

| D-ML-W-2 | 0.635 | 0.677 | 0.324 | 0.677 | 0.872 | 0.87 | 0.631 | 0.87 | 0.837 | 0.843 | 0.507 | 0.843 |

| Method | Random Forest | | | | Random Subspace | | | | AdaBoost | | | |

| | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc |

| AL | 0.839 | 0.831 | 0.462 | 0.831 | 0.738 | 0.762 | 0.323 | 0.762 | 0.844 | 0.845 | 0.455 | 0.845 |

| ML | 0.838 | 0.838 | 0.452 | 0.838 | 0.727 | 0.756 | 0.314 | 0.756 | 0.864 | 0.828 | 0.455 | 0.828 |

| AL-W | 0.843 | 0.835 | 0.47 | 0.835 | 0.738 | 0.764 | 0.322 | 0.764 | 0.842 | 0.845 | 0.453 | 0.845 |

| ML-W | 0.837 | 0.837 | 0.451 | 0.837 | 0.726 | 0.755 | 0.312 | 0.755 | 0.863 | 0.827 | 0.454 | 0.827 |

| U-AL-1 | 0.83 | 0.827 | 0.459 | 0.827 | 0.769 | 0.786 | 0.353 | 0.786 | 0.836 | 0.844 | 0.461 | 0.844 |

| U-AL-All | 0.844 | 0.835 | 0.46 | 0.835 | 0.769 | 0.786 | 0.351 | 0.786 | 0.85 | 0.848 | 0.46 | 0.848 |

| U-AL-2 | 0.842 | 0.834 | 0.475 | 0.834 | 0.767 | 0.784 | 0.349 | 0.784 | 0.843 | 0.844 | 0.454 | 0.844 |

| U-AL-W-1 | 0.836 | 0.83 | 0.464 | 0.83 | 0.762 | 0.782 | 0.347 | 0.782 | 0.843 | 0.848 | 0.47 | 0.848 |

| U-AL-W-All | 0.841 | 0.833 | 0.463 | 0.833 | 0.766 | 0.783 | 0.348 | 0.783 | 0.847 | 0.85 | 0.457 | 0.85 |

| U-AL-W-2 | 0.843 | 0.835 | 0.473 | 0.835 | 0.765 | 0.783 | 0.348 | 0.783 | 0.847 | 0.85 | 0.457 | 0.85 |

| D-AL-1 | 0.766 | 0.785 | 0.508 | 0.785 | 0.763 | 0.786 | 0.427 | 0.786 | 0.718 | 0.675 | 0.456 | 0.675 |

| D-AL-All | 0.803 | 0.823 | 0.496 | 0.823 | 0.746 | 0.784 | 0.388 | 0.784 | 0.816 | 0.793 | 0.442 | 0.793 |

| D-AL-2 | 0.776 | 0.79 | 0.522 | 0.79 | 0.765 | 0.787 | 0.425 | 0.787 | 0.72 | 0.69 | 0.461 | 0.69 |

| D-AL-W-1 | 0.781 | 0.792 | 0.543 | 0.792 | 0.777 | 0.792 | 0.427 | 0.792 | 0.682 | 0.685 | 0.495 | 0.685 |

| D-AL-W-All | 0.783 | 0.8 | 0.526 | 0.8 | 0.745 | 0.78 | 0.395 | 0.78 | 0.807 | 0.787 | 0.546 | 0.787 |

| D-AL-W-2 | 0.786 | 0.795 | 0.543 | 0.795 | 0.779 | 0.792 | 0.423 | 0.792 | 0.689 | 0.692 | 0.499 | 0.692 |

| U-ML-1 | 0.838 | 0.84 | 0.462 | 0.84 | 0.725 | 0.755 | 0.312 | 0.755 | 0.864 | 0.828 | 0.455 | 0.828 |

| U-ML-All | 0.838 | 0.837 | 0.451 | 0.837 | 0.727 | 0.757 | 0.314 | 0.757 | 0.864 | 0.828 | 0.455 | 0.828 |

| U-ML-2 | 0.837 | 0.838 | 0.461 | 0.838 | 0.725 | 0.755 | 0.312 | 0.755 | 0.864 | 0.828 | 0.455 | 0.828 |

| U-ML-W-1 | 0.84 | 0.84 | 0.464 | 0.84 | 0.726 | 0.755 | 0.312 | 0.755 | 0.863 | 0.827 | 0.454 | 0.827 |

| U-ML-W-All | 0.842 | 0.84 | 0.451 | 0.84 | 0.727 | 0.755 | 0.313 | 0.755 | 0.863 | 0.827 | 0.454 | 0.827 |

| U-ML-W-2 | 0.839 | 0.839 | 0.465 | 0.839 | 0.727 | 0.755 | 0.313 | 0.755 | 0.863 | 0.827 | 0.454 | 0.827 |

| D-ML-1 | 0.795 | 0.813 | 0.493 | 0.813 | 0.725 | 0.753 | 0.322 | 0.753 | 0.81 | 0.794 | 0.471 | 0.794 |

| D-ML-All | 0.801 | 0.814 | 0.477 | 0.814 | 0.726 | 0.753 | 0.313 | 0.753 | 0.822 | 0.805 | 0.481 | 0.805 |

| D-ML-2 | 0.796 | 0.814 | 0.491 | 0.814 | 0.727 | 0.753 | 0.322 | 0.753 | 0.81 | 0.794 | 0.471 | 0.794 |

| D-ML-W-1 | 0.793 | 0.815 | 0.489 | 0.815 | 0.727 | 0.754 | 0.323 | 0.754 | 0.806 | 0.791 | 0.468 | 0.791 |

| D-ML-W-All | 0.8 | 0.814 | 0.48 | 0.814 | 0.727 | 0.753 | 0.314 | 0.753 | 0.822 | 0.805 | 0.481 | 0.805 |

| D-ML-W-2 | 0.791 | 0.813 | 0.483 | 0.813 | 0.726 | 0.753 | 0.322 | 0.753 | 0.806 | 0.791 | 0.468 | 0.791 |

| Method | K-Nearest Neighbors | | | | Decision Tree | | | | Gradient Boosting | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc |

| AL | 0.535 | 0.297 | 0.255 | 0.297 | 0.587 | 0.319 | 0.316 | 0.319 | 0.577 | 0.314 | 0.28 | 0.314 |

| ML | 0.674 | 0.348 | 0.281 | 0.348 | 0.647 | 0.51 | 0.497 | 0.51 | 0.623 | 0.324 | 0.245 | 0.324 |

| AL-W | 0.607 | 0.591 | 0.548 | 0.591 | 0.68 | 0.666 | 0.709 | 0.666 | 0.691 | 0.685 | 0.692 | 0.685 |

| ML-W | 0.595 | 0.593 | 0.555 | 0.593 | 0.68 | 0.666 | 0.709 | 0.666 | 0.694 | 0.687 | 0.693 | 0.687 |

| U-AL-1 | 0.544 | 0.297 | 0.254 | 0.297 | 0.581 | 0.322 | 0.319 | 0.322 | 0.589 | 0.31 | 0.273 | 0.31 |

| U-AL-All | 0.449 | 0.277 | 0.166 | 0.277 | 0.42 | 0.269 | 0.223 | 0.269 | 0.654 | 0.27 | 0.185 | 0.27 |

| U-AL-2 | 0.544 | 0.297 | 0.254 | 0.297 | 0.581 | 0.322 | 0.319 | 0.322 | 0.589 | 0.31 | 0.273 | 0.31 |

| U-AL-W-1 | 0.607 | 0.591 | 0.548 | 0.591 | 0.68 | 0.666 | 0.709 | 0.666 | 0.691 | 0.685 | 0.692 | 0.685 |

| U-AL-W-All | 0.607 | 0.591 | 0.548 | 0.591 | 0.68 | 0.666 | 0.709 | 0.666 | 0.691 | 0.685 | 0.692 | 0.685 |

| U-AL-W-2 | 0.607 | 0.591 | 0.548 | 0.591 | 0.68 | 0.666 | 0.709 | 0.666 | 0.691 | 0.685 | 0.692 | 0.685 |

| D-AL-1 | 0.531 | 0.331 | 0.289 | 0.331 | 0.146 | 0.102 | 0.063 | 0.102 | 0.586 | 0.307 | 0.262 | 0.307 |

| D-AL-All | 0.455 | 0.32 | 0.245 | 0.32 | 0.178 | 0.147 | 0.076 | 0.147 | 0.654 | 0.27 | 0.185 | 0.27 |

| D-AL-2 | 0.535 | 0.351 | 0.302 | 0.351 | 0.199 | 0.145 | 0.074 | 0.145 | 0.598 | 0.305 | 0.269 | 0.305 |

| D-AL-W-1 | 0.607 | 0.591 | 0.548 | 0.591 | 0.68 | 0.666 | 0.709 | 0.666 | 0.691 | 0.685 | 0.692 | 0.685 |

| D-AL-W-All | 0.607 | 0.591 | 0.548 | 0.591 | 0.68 | 0.666 | 0.709 | 0.666 | 0.691 | 0.685 | 0.692 | 0.685 |

| D-AL-W-2 | 0.607 | 0.591 | 0.548 | 0.591 | 0.68 | 0.666 | 0.709 | 0.666 | 0.691 | 0.685 | 0.692 | 0.685 |

| U-ML-1 | 0.621 | 0.252 | 0.212 | 0.252 | 0.675 | 0.477 | 0.439 | 0.477 | 0.623 | 0.324 | 0.245 | 0.324 |

| U-ML-All | 0.621 | 0.252 | 0.212 | 0.252 | 0.675 | 0.477 | 0.439 | 0.477 | 0.623 | 0.324 | 0.245 | 0.324 |

| U-ML-2 | 0.621 | 0.252 | 0.212 | 0.252 | 0.675 | 0.477 | 0.439 | 0.477 | 0.623 | 0.324 | 0.245 | 0.324 |

| U-ML-W-1 | 0.584 | 0.581 | 0.542 | 0.581 | 0.68 | 0.666 | 0.709 | 0.666 | 0.694 | 0.687 | 0.693 | 0.687 |

| U-ML-W-All | 0.584 | 0.581 | 0.542 | 0.581 | 0.68 | 0.666 | 0.709 | 0.666 | 0.694 | 0.687 | 0.693 | 0.687 |

| U-ML-W-2 | 0.584 | 0.581 | 0.542 | 0.581 | 0.68 | 0.666 | 0.709 | 0.666 | 0.694 | 0.687 | 0.693 | 0.687 |

| D-ML-1 | 0.552 | 0.291 | 0.275 | 0.291 | 0.668 | 0.385 | 0.403 | 0.385 | 0.623 | 0.324 | 0.245 | 0.324 |

| D-ML-All | 0.571 | 0.334 | 0.3 | 0.334 | 0.668 | 0.384 | 0.404 | 0.384 | 0.623 | 0.324 | 0.245 | 0.324 |

| D-ML-2 | 0.575 | 0.331 | 0.298 | 0.331 | 0.675 | 0.477 | 0.439 | 0.477 | 0.623 | 0.324 | 0.245 | 0.324 |

| D-ML-W-1 | 0.583 | 0.58 | 0.54 | 0.58 | 0.669 | 0.593 | 0.681 | 0.593 | 0.694 | 0.687 | 0.693 | 0.687 |

| D-ML-W-All | 0.583 | 0.58 | 0.54 | 0.58 | 0.68 | 0.666 | 0.709 | 0.666 | 0.694 | 0.687 | 0.693 | 0.687 |

| D-ML-W-2 | 0.583 | 0.58 | 0.54 | 0.58 | 0.68 | 0.666 | 0.709 | 0.666 | 0.694 | 0.687 | 0.693 | 0.687 |

| Method | Random Forest | | | | Random Subspace | | | | AdaBoost | | | |

| | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc |

| AL | 0.638 | 0.345 | 0.303 | 0.345 | 0.634 | 0.395 | 0.319 | 0.395 | 0.241 | 0.206 | 0.12 | 0.206 |

| ML | 0.756 | 0.606 | 0.581 | 0.606 | 0.676 | 0.445 | 0.358 | 0.445 | 0.189 | 0.224 | 0.148 | 0.224 |

| AL-W | 0.73 | 0.719 | 0.73 | 0.719 | 0.713 | 0.713 | 0.725 | 0.713 | 0.346 | 0.376 | 0.228 | 0.376 |

| ML-W | 0.734 | 0.726 | 0.736 | 0.726 | 0.71 | 0.708 | 0.704 | 0.708 | 0.207 | 0.27 | 0.165 | 0.27 |

| U-AL-1 | 0.638 | 0.345 | 0.303 | 0.345 | 0.608 | 0.398 | 0.321 | 0.398 | 0.262 | 0.215 | 0.126 | 0.215 |

| U-AL-All | 0.691 | 0.28 | 0.2 | 0.28 | 0.593 | 0.343 | 0.213 | 0.343 | 0.16 | 0.213 | 0.139 | 0.213 |

| U-AL-2 | 0.638 | 0.345 | 0.303 | 0.345 | 0.614 | 0.392 | 0.321 | 0.392 | 0.262 | 0.215 | 0.126 | 0.215 |

| U-AL-W-1 | 0.73 | 0.719 | 0.73 | 0.719 | 0.717 | 0.717 | 0.722 | 0.717 | 0.346 | 0.376 | 0.228 | 0.376 |

| U-AL-W-All | 0.73 | 0.719 | 0.73 | 0.719 | 0.717 | 0.717 | 0.722 | 0.717 | 0.346 | 0.376 | 0.228 | 0.376 |

| U-AL-W-2 | 0.73 | 0.719 | 0.73 | 0.719 | 0.717 | 0.717 | 0.722 | 0.717 | 0.346 | 0.376 | 0.228 | 0.376 |

| D-AL-1 | 0.67 | 0.392 | 0.35 | 0.392 | 0.609 | 0.412 | 0.348 | 0.412 | 0.304 | 0.221 | 0.128 | 0.221 |

| D-AL-All | 0.734 | 0.341 | 0.225 | 0.341 | 0.566 | 0.36 | 0.245 | 0.36 | 0.16 | 0.213 | 0.139 | 0.213 |

| D-AL-2 | 0.633 | 0.358 | 0.324 | 0.358 | 0.623 | 0.412 | 0.342 | 0.412 | 0.297 | 0.215 | 0.124 | 0.215 |

| D-AL-W-1 | 0.73 | 0.719 | 0.73 | 0.719 | 0.717 | 0.717 | 0.722 | 0.717 | 0.346 | 0.376 | 0.228 | 0.376 |

| D-AL-W-All | 0.73 | 0.719 | 0.73 | 0.719 | 0.717 | 0.717 | 0.722 | 0.717 | 0.346 | 0.376 | 0.228 | 0.376 |

| D-AL-W-2 | 0.73 | 0.719 | 0.73 | 0.719 | 0.717 | 0.717 | 0.722 | 0.717 | 0.346 | 0.376 | 0.228 | 0.376 |

| U-ML-1 | 0.756 | 0.606 | 0.581 | 0.606 | 0.678 | 0.447 | 0.364 | 0.447 | 0.189 | 0.224 | 0.148 | 0.224 |

| U-ML-All | 0.756 | 0.606 | 0.581 | 0.606 | 0.682 | 0.448 | 0.366 | 0.448 | 0.189 | 0.224 | 0.148 | 0.224 |

| U-ML-2 | 0.756 | 0.606 | 0.581 | 0.606 | 0.678 | 0.447 | 0.364 | 0.447 | 0.189 | 0.224 | 0.148 | 0.224 |

| U-ML-W-1 | 0.734 | 0.726 | 0.736 | 0.726 | 0.71 | 0.708 | 0.704 | 0.708 | 0.207 | 0.27 | 0.165 | 0.27 |

| U-ML-W-All | 0.734 | 0.726 | 0.736 | 0.726 | 0.71 | 0.708 | 0.704 | 0.708 | 0.207 | 0.27 | 0.165 | 0.27 |

| U-ML-W-2 | 0.734 | 0.726 | 0.736 | 0.726 | 0.71 | 0.708 | 0.704 | 0.708 | 0.207 | 0.27 | 0.165 | 0.27 |

| D-ML-1 | 0.756 | 0.606 | 0.581 | 0.606 | 0.679 | 0.448 | 0.367 | 0.448 | 0.189 | 0.224 | 0.148 | 0.224 |

| D-ML-All | 0.756 | 0.606 | 0.581 | 0.606 | 0.678 | 0.447 | 0.364 | 0.447 | 0.189 | 0.224 | 0.148 | 0.224 |

| D-ML-2 | 0.756 | 0.606 | 0.581 | 0.606 | 0.678 | 0.447 | 0.364 | 0.447 | 0.189 | 0.224 | 0.148 | 0.224 |

| D-ML-W-1 | 0.734 | 0.726 | 0.736 | 0.726 | 0.71 | 0.708 | 0.704 | 0.708 | 0.207 | 0.27 | 0.165 | 0.27 |

| D-ML-W-All | 0.734 | 0.726 | 0.736 | 0.726 | 0.71 | 0.708 | 0.704 | 0.708 | 0.207 | 0.27 | 0.165 | 0.27 |

| D-ML-W-2 | 0.734 | 0.726 | 0.736 | 0.726 | 0.71 | 0.708 | 0.704 | 0.708 | 0.207 | 0.27 | 0.165 | 0.27 |

| Method | K-Nearest Neighbors | | | | Decision Tree | | | | Gradient Boosting | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc |

| AL | 0.473 | 0.331 | 0.252 | 0.331 | 0.515 | 0.235 | 0.106 | 0.235 | 0.465 | 0.199 | 0.084 | 0.199 |

| ML | 0.61 | 0.366 | 0.326 | 0.366 | 0.607 | 0.547 | 0.543 | 0.547 | 0.683 | 0.514 | 0.461 | 0.514 |

| AL-W | 0.607 | 0.591 | 0.548 | 0.591 | 0.69 | 0.679 | 0.729 | 0.679 | 0.691 | 0.685 | 0.692 | 0.685 |

| ML-W | 0.594 | 0.58 | 0.549 | 0.58 | 0.69 | 0.679 | 0.729 | 0.679 | 0.693 | 0.686 | 0.692 | 0.686 |

| U-AL-1 | 0.532 | 0.348 | 0.248 | 0.348 | 0.505 | 0.225 | 0.1 | 0.225 | 0.47 | 0.2 | 0.086 | 0.2 |

| U-AL-All | 0.558 | 0.409 | 0.246 | 0.409 | 0.353 | 0.189 | 0.08 | 0.189 | 0.38 | 0.201 | 0.084 | 0.201 |

| U-AL-2 | 0.532 | 0.348 | 0.248 | 0.348 | 0.505 | 0.225 | 0.1 | 0.225 | 0.47 | 0.2 | 0.086 | 0.2 |

| U-AL-W-1 | 0.607 | 0.591 | 0.548 | 0.591 | 0.69 | 0.679 | 0.729 | 0.679 | 0.691 | 0.685 | 0.692 | 0.685 |

| U-AL-W-All | 0.607 | 0.591 | 0.548 | 0.591 | 0.69 | 0.679 | 0.729 | 0.679 | 0.691 | 0.685 | 0.692 | 0.685 |

| U-AL-W-2 | 0.607 | 0.591 | 0.548 | 0.591 | 0.69 | 0.679 | 0.729 | 0.679 | 0.691 | 0.685 | 0.692 | 0.685 |

| D-AL-1 | 0.536 | 0.442 | 0.316 | 0.442 | 0.645 | 0.526 | 0.562 | 0.526 | 0.497 | 0.216 | 0.094 | 0.216 |

| D-AL-All | 0.548 | 0.451 | 0.297 | 0.451 | 0.694 | 0.507 | 0.438 | 0.507 | 0.38 | 0.201 | 0.084 | 0.201 |

| D-AL-2 | 0.536 | 0.442 | 0.316 | 0.442 | 0.645 | 0.526 | 0.562 | 0.526 | 0.497 | 0.216 | 0.094 | 0.216 |

| D-AL-W-1 | 0.6 | 0.578 | 0.527 | 0.578 | 0.69 | 0.679 | 0.729 | 0.679 | 0.691 | 0.685 | 0.692 | 0.685 |

| D-AL-W-All | 0.6 | 0.578 | 0.527 | 0.578 | 0.69 | 0.679 | 0.729 | 0.679 | 0.691 | 0.685 | 0.692 | 0.685 |

| D-AL-W-2 | 0.6 | 0.578 | 0.527 | 0.578 | 0.69 | 0.679 | 0.729 | 0.679 | 0.691 | 0.685 | 0.692 | 0.685 |

| U-ML-1 | 0.561 | 0.356 | 0.326 | 0.356 | 0.611 | 0.547 | 0.538 | 0.547 | 0.683 | 0.514 | 0.461 | 0.514 |

| U-ML-All | 0.561 | 0.356 | 0.326 | 0.356 | 0.611 | 0.547 | 0.538 | 0.547 | 0.683 | 0.514 | 0.461 | 0.514 |

| U-ML-2 | 0.561 | 0.356 | 0.326 | 0.356 | 0.611 | 0.547 | 0.538 | 0.547 | 0.683 | 0.514 | 0.461 | 0.514 |

| U-ML-W-1 | 0.579 | 0.581 | 0.526 | 0.581 | 0.69 | 0.679 | 0.729 | 0.679 | 0.693 | 0.686 | 0.692 | 0.686 |

| U-ML-W-All | 0.579 | 0.581 | 0.526 | 0.581 | 0.69 | 0.679 | 0.729 | 0.679 | 0.693 | 0.686 | 0.692 | 0.686 |

| U-ML-W-2 | 0.579 | 0.581 | 0.526 | 0.581 | 0.69 | 0.679 | 0.729 | 0.679 | 0.693 | 0.686 | 0.692 | 0.686 |

| D-ML-1 | 0.572 | 0.421 | 0.347 | 0.421 | 0.69 | 0.585 | 0.696 | 0.585 | 0.683 | 0.514 | 0.461 | 0.514 |

| D-ML-All | 0.576 | 0.424 | 0.348 | 0.424 | 0.69 | 0.585 | 0.696 | 0.585 | 0.683 | 0.514 | 0.461 | 0.514 |

| D-ML-2 | 0.572 | 0.421 | 0.347 | 0.421 | 0.69 | 0.585 | 0.696 | 0.585 | 0.683 | 0.514 | 0.461 | 0.514 |

| D-ML-W-1 | 0.588 | 0.566 | 0.527 | 0.566 | 0.69 | 0.679 | 0.729 | 0.679 | 0.693 | 0.686 | 0.692 | 0.686 |

| D-ML-W-All | 0.588 | 0.566 | 0.527 | 0.566 | 0.69 | 0.679 | 0.729 | 0.679 | 0.693 | 0.686 | 0.692 | 0.686 |

| D-ML-W-2 | 0.588 | 0.566 | 0.527 | 0.566 | 0.69 | 0.679 | 0.729 | 0.679 | 0.693 | 0.686 | 0.692 | 0.686 |

| Method | Random Forest | | | | Random Subspace | | | | AdaBoost | | | |

| | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc |

| AL | 0.369 | 0.193 | 0.087 | 0.193 | 0.509 | 0.263 | 0.108 | 0.263 | 0.368 | 0.209 | 0.082 | 0.209 |

| ML | 0.723 | 0.546 | 0.455 | 0.546 | 0.555 | 0.189 | 0.072 | 0.189 | 0.16 | 0.266 | 0.105 | 0.266 |

| AL-W | 0.73 | 0.719 | 0.73 | 0.719 | 0.721 | 0.72 | 0.727 | 0.72 | 0.441 | 0.376 | 0.228 | 0.376 |

| ML-W | 0.727 | 0.722 | 0.731 | 0.722 | 0.757 | 0.66 | 0.621 | 0.66 | 0.22 | 0.296 | 0.13 | 0.296 |

| U-AL-1 | 0.373 | 0.193 | 0.087 | 0.193 | 0.529 | 0.291 | 0.145 | 0.291 | 0.379 | 0.211 | 0.083 | 0.211 |

| U-AL-All | 0.302 | 0.194 | 0.082 | 0.194 | 0.324 | 0.212 | 0.09 | 0.212 | 0.061 | 0.138 | 0.053 | 0.138 |

| U-AL-2 | 0.366 | 0.193 | 0.087 | 0.193 | 0.581 | 0.254 | 0.106 | 0.254 | 0.379 | 0.211 | 0.083 | 0.211 |

| U-AL-W-1 | 0.73 | 0.719 | 0.73 | 0.719 | 0.717 | 0.717 | 0.724 | 0.717 | 0.441 | 0.376 | 0.228 | 0.376 |

| U-AL-W-All | 0.73 | 0.719 | 0.73 | 0.719 | 0.717 | 0.717 | 0.724 | 0.717 | 0.441 | 0.376 | 0.228 | 0.376 |

| U-AL-W-2 | 0.73 | 0.719 | 0.73 | 0.719 | 0.717 | 0.717 | 0.724 | 0.717 | 0.441 | 0.376 | 0.228 | 0.376 |

| D-AL-1 | 0.651 | 0.214 | 0.199 | 0.214 | 0.464 | 0.205 | 0.101 | 0.205 | 0.365 | 0.206 | 0.08 | 0.206 |

| D-AL-All | 0.611 | 0.215 | 0.193 | 0.215 | 0.548 | 0.219 | 0.094 | 0.219 | 0.061 | 0.138 | 0.053 | 0.138 |

| D-AL-2 | 0.651 | 0.214 | 0.199 | 0.214 | 0.486 | 0.211 | 0.103 | 0.211 | 0.365 | 0.206 | 0.08 | 0.206 |

| D-AL-W-1 | 0.727 | 0.716 | 0.724 | 0.716 | 0.707 | 0.689 | 0.702 | 0.689 | 0.441 | 0.376 | 0.228 | 0.376 |

| D-AL-W-All | 0.73 | 0.719 | 0.73 | 0.719 | 0.715 | 0.712 | 0.714 | 0.712 | 0.441 | 0.376 | 0.228 | 0.376 |

| D-AL-W-2 | 0.727 | 0.716 | 0.724 | 0.716 | 0.707 | 0.689 | 0.702 | 0.689 | 0.441 | 0.376 | 0.228 | 0.376 |

| U-ML-1 | 0.723 | 0.546 | 0.455 | 0.546 | 0.6 | 0.197 | 0.076 | 0.197 | 0.16 | 0.266 | 0.105 | 0.266 |

| U-ML-All | 0.723 | 0.546 | 0.455 | 0.546 | 0.556 | 0.19 | 0.072 | 0.19 | 0.16 | 0.266 | 0.105 | 0.266 |

| U-ML-2 | 0.723 | 0.546 | 0.455 | 0.546 | 0.556 | 0.19 | 0.072 | 0.19 | 0.16 | 0.266 | 0.105 | 0.266 |

| U-ML-W-1 | 0.727 | 0.722 | 0.731 | 0.722 | 0.751 | 0.665 | 0.632 | 0.665 | 0.22 | 0.296 | 0.13 | 0.296 |

| U-ML-W-All | 0.727 | 0.722 | 0.731 | 0.722 | 0.731 | 0.657 | 0.617 | 0.657 | 0.22 | 0.296 | 0.13 | 0.296 |

| U-ML-W-2 | 0.727 | 0.722 | 0.731 | 0.722 | 0.731 | 0.657 | 0.617 | 0.657 | 0.22 | 0.296 | 0.13 | 0.296 |

| D-ML-1 | 0.69 | 0.449 | 0.418 | 0.449 | 0.412 | 0.187 | 0.1 | 0.187 | 0.16 | 0.266 | 0.105 | 0.266 |

| D-ML-All | 0.69 | 0.449 | 0.418 | 0.449 | 0.461 | 0.189 | 0.101 | 0.189 | 0.16 | 0.266 | 0.105 | 0.266 |

| D-ML-2 | 0.69 | 0.449 | 0.418 | 0.449 | 0.422 | 0.19 | 0.102 | 0.19 | 0.16 | 0.266 | 0.105 | 0.266 |

| D-ML-W-1 | 0.724 | 0.716 | 0.725 | 0.716 | 0.736 | 0.446 | 0.395 | 0.446 | 0.22 | 0.296 | 0.13 | 0.296 |

| D-ML-W-All | 0.728 | 0.723 | 0.732 | 0.723 | 0.738 | 0.461 | 0.396 | 0.461 | 0.22 | 0.296 | 0.13 | 0.296 |

| D-ML-W-2 | 0.724 | 0.716 | 0.725 | 0.716 | 0.726 | 0.466 | 0.417 | 0.466 | 0.22 | 0.296 | 0.13 | 0.296 |

| Method | K-Nearest Neighbors | | | | Decision Tree | | | | Gradient Boosting | | | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc |

| AL | 0.589 | 0.143 | 0.104 | 0.143 | 0.352 | 0.18 | 0.069 | 0.18 | 0.348 | 0.168 | 0.064 | 0.168 |

| ML | 0.696 | 0.079 | 0.072 | 0.079 | 0.337 | 0.216 | 0.104 | 0.216 | 0.38 | 0.237 | 0.11 | 0.237 |

| AL-W | 0.498 | 0.494 | 0.468 | 0.494 | 0.519 | 0.498 | 0.496 | 0.498 | 0.576 | 0.561 | 0.564 | 0.561 |

| ML-W | 0.491 | 0.492 | 0.463 | 0.492 | 0.515 | 0.493 | 0.493 | 0.493 | 0.563 | 0.567 | 0.556 | 0.567 |

| U-AL-1 | 0.589 | 0.143 | 0.104 | 0.143 | 0.341 | 0.172 | 0.065 | 0.172 | 0.348 | 0.168 | 0.064 | 0.168 |

| U-AL-All | 0.474 | 0.135 | 0.103 | 0.135 | 0.338 | 0.209 | 0.079 | 0.209 | 0.345 | 0.201 | 0.076 | 0.201 |

| U-AL-2 | 0.589 | 0.143 | 0.104 | 0.143 | 0.334 | 0.182 | 0.069 | 0.182 | 0.348 | 0.168 | 0.064 | 0.168 |

| U-AL-W-1 | 0.498 | 0.494 | 0.468 | 0.494 | 0.497 | 0.479 | 0.485 | 0.479 | 0.576 | 0.561 | 0.564 | 0.561 |

| U-AL-W-All | 0.498 | 0.494 | 0.468 | 0.494 | 0.509 | 0.489 | 0.489 | 0.489 | 0.576 | 0.561 | 0.564 | 0.561 |

| U-AL-W-2 | 0.498 | 0.494 | 0.468 | 0.494 | 0.516 | 0.495 | 0.493 | 0.495 | 0.576 | 0.561 | 0.564 | 0.561 |

| D-AL-1 | 0.471 | 0.275 | 0.269 | 0.275 | 0.524 | 0.491 | 0.491 | 0.491 | 0.348 | 0.168 | 0.064 | 0.168 |

| D-AL-All | 0.401 | 0.255 | 0.273 | 0.255 | 0.524 | 0.491 | 0.491 | 0.491 | 0.345 | 0.201 | 0.076 | 0.201 |

| D-AL-2 | 0.471 | 0.275 | 0.269 | 0.275 | 0.524 | 0.491 | 0.491 | 0.491 | 0.348 | 0.168 | 0.064 | 0.168 |

| D-AL-W-1 | 0.498 | 0.494 | 0.468 | 0.494 | 0.519 | 0.498 | 0.496 | 0.498 | 0.576 | 0.561 | 0.564 | 0.561 |

| D-AL-W-All | 0.498 | 0.494 | 0.468 | 0.494 | 0.519 | 0.498 | 0.496 | 0.498 | 0.576 | 0.561 | 0.564 | 0.561 |

| D-AL-W-2 | 0.498 | 0.494 | 0.468 | 0.494 | 0.519 | 0.498 | 0.496 | 0.498 | 0.576 | 0.561 | 0.564 | 0.561 |

| U-ML-1 | 0.833 | 0.111 | 0.087 | 0.111 | 0.341 | 0.216 | 0.103 | 0.216 | 0.38 | 0.237 | 0.11 | 0.237 |

| U-ML-All | 0.833 | 0.111 | 0.087 | 0.111 | 0.34 | 0.216 | 0.103 | 0.216 | 0.38 | 0.237 | 0.11 | 0.237 |

| U-ML-2 | 0.833 | 0.111 | 0.087 | 0.111 | 0.34 | 0.216 | 0.103 | 0.216 | 0.38 | 0.237 | 0.11 | 0.237 |

| U-ML-W-1 | 0.484 | 0.494 | 0.473 | 0.494 | 0.511 | 0.489 | 0.488 | 0.489 | 0.563 | 0.567 | 0.556 | 0.567 |

| U-ML-W-All | 0.484 | 0.494 | 0.473 | 0.494 | 0.511 | 0.489 | 0.489 | 0.489 | 0.563 | 0.567 | 0.556 | 0.567 |

| U-ML-W-2 | 0.484 | 0.494 | 0.473 | 0.494 | 0.515 | 0.493 | 0.493 | 0.493 | 0.563 | 0.567 | 0.556 | 0.567 |

| D-ML-1 | 0.506 | 0.278 | 0.276 | 0.278 | 0.507 | 0.422 | 0.467 | 0.422 | 0.38 | 0.237 | 0.11 | 0.237 |

| D-ML-All | 0.506 | 0.278 | 0.276 | 0.278 | 0.507 | 0.422 | 0.467 | 0.422 | 0.38 | 0.237 | 0.11 | 0.237 |

| D-ML-2 | 0.506 | 0.278 | 0.276 | 0.278 | 0.507 | 0.422 | 0.467 | 0.422 | 0.38 | 0.237 | 0.11 | 0.237 |

| D-ML-W-1 | 0.484 | 0.494 | 0.473 | 0.494 | 0.516 | 0.493 | 0.493 | 0.493 | 0.563 | 0.567 | 0.556 | 0.567 |

| D-ML-W-All | 0.484 | 0.494 | 0.473 | 0.494 | 0.516 | 0.493 | 0.493 | 0.493 | 0.563 | 0.567 | 0.556 | 0.567 |

| D-ML-W-2 | 0.484 | 0.494 | 0.473 | 0.494 | 0.516 | 0.493 | 0.493 | 0.493 | 0.563 | 0.567 | 0.556 | 0.567 |

| Method | Random Forest | | | | Random Subspace | | | | AdaBoost | | | |

| | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc |

| AL | 0.329 | 0.184 | 0.078 | 0.184 | 0.317 | 0.187 | 0.071 | 0.187 | 0.203 | 0.231 | 0.088 | 0.231 |

| ML | 0.501 | 0.219 | 0.097 | 0.219 | 0.138 | 0.138 | 0.053 | 0.138 | 0.256 | 0.255 | 0.097 | 0.255 |

| AL-W | 0.566 | 0.549 | 0.542 | 0.549 | 0.576 | 0.569 | 0.528 | 0.569 | 0.379 | 0.325 | 0.155 | 0.325 |

| ML-W | 0.57 | 0.554 | 0.513 | 0.554 | 0.682 | 0.334 | 0.192 | 0.334 | 0.256 | 0.255 | 0.097 | 0.255 |

| U-AL-1 | 0.329 | 0.184 | 0.078 | 0.184 | 0.308 | 0.193 | 0.076 | 0.193 | 0.203 | 0.231 | 0.088 | 0.231 |

| U-AL-All | 0.355 | 0.202 | 0.077 | 0.202 | 0.254 | 0.198 | 0.075 | 0.198 | 0.271 | 0.212 | 0.081 | 0.212 |

| U-AL-2 | 0.329 | 0.184 | 0.078 | 0.184 | 0.316 | 0.189 | 0.072 | 0.189 | 0.203 | 0.231 | 0.088 | 0.231 |

| U-AL-W-1 | 0.566 | 0.549 | 0.542 | 0.549 | 0.578 | 0.568 | 0.527 | 0.568 | 0.379 | 0.325 | 0.155 | 0.325 |

| U-AL-W-All | 0.566 | 0.549 | 0.542 | 0.549 | 0.573 | 0.565 | 0.52 | 0.565 | 0.379 | 0.325 | 0.155 | 0.325 |

| U-AL-W-2 | 0.566 | 0.549 | 0.542 | 0.549 | 0.573 | 0.565 | 0.52 | 0.565 | 0.379 | 0.325 | 0.155 | 0.325 |

| D-AL-1 | 0.565 | 0.299 | 0.171 | 0.299 | 0.286 | 0.156 | 0.077 | 0.156 | 0.207 | 0.233 | 0.089 | 0.233 |

| D-AL-All | 0.657 | 0.3 | 0.169 | 0.3 | 0.288 | 0.155 | 0.064 | 0.155 | 0.271 | 0.212 | 0.081 | 0.212 |

| D-AL-2 | 0.565 | 0.299 | 0.171 | 0.299 | 0.308 | 0.155 | 0.075 | 0.155 | 0.207 | 0.233 | 0.089 | 0.233 |

| D-AL-W-1 | 0.566 | 0.549 | 0.542 | 0.549 | 0.531 | 0.449 | 0.39 | 0.449 | 0.379 | 0.325 | 0.155 | 0.325 |

| D-AL-W-All | 0.566 | 0.549 | 0.542 | 0.549 | 0.519 | 0.403 | 0.32 | 0.403 | 0.379 | 0.325 | 0.155 | 0.325 |

| D-AL-W-2 | 0.566 | 0.549 | 0.542 | 0.549 | 0.517 | 0.447 | 0.392 | 0.447 | 0.379 | 0.325 | 0.155 | 0.325 |

| U-ML-1 | 0.501 | 0.219 | 0.097 | 0.219 | 0.138 | 0.138 | 0.053 | 0.138 | 0.256 | 0.255 | 0.097 | 0.255 |

| U-ML-All | 0.501 | 0.219 | 0.097 | 0.219 | 0.138 | 0.138 | 0.053 | 0.138 | 0.256 | 0.255 | 0.097 | 0.255 |

| U-ML-2 | 0.502 | 0.22 | 0.1 | 0.22 | 0.138 | 0.138 | 0.053 | 0.138 | 0.256 | 0.255 | 0.097 | 0.255 |

| U-ML-W-1 | 0.57 | 0.554 | 0.513 | 0.554 | 0.688 | 0.343 | 0.209 | 0.343 | 0.256 | 0.255 | 0.097 | 0.255 |

| U-ML-W-All | 0.57 | 0.554 | 0.513 | 0.554 | 0.684 | 0.334 | 0.188 | 0.334 | 0.256 | 0.255 | 0.097 | 0.255 |

| U-ML-W-2 | 0.57 | 0.554 | 0.513 | 0.554 | 0.684 | 0.334 | 0.188 | 0.334 | 0.256 | 0.255 | 0.097 | 0.255 |

| D-ML-1 | 0.632 | 0.328 | 0.181 | 0.328 | 0.477 | 0.174 | 0.081 | 0.174 | 0.256 | 0.255 | 0.097 | 0.255 |

| D-ML-All | 0.646 | 0.329 | 0.184 | 0.329 | 0.486 | 0.173 | 0.078 | 0.173 | 0.256 | 0.255 | 0.097 | 0.255 |

| D-ML-2 | 0.632 | 0.328 | 0.181 | 0.328 | 0.498 | 0.176 | 0.082 | 0.176 | 0.256 | 0.255 | 0.097 | 0.255 |

| D-ML-W-1 | 0.57 | 0.554 | 0.513 | 0.554 | 0.546 | 0.334 | 0.242 | 0.334 | 0.256 | 0.255 | 0.097 | 0.255 |

| D-ML-W-All | 0.57 | 0.554 | 0.513 | 0.554 | 0.64 | 0.321 | 0.223 | 0.321 | 0.256 | 0.255 | 0.097 | 0.255 |

| D-ML-W-2 | 0.57 | 0.554 | 0.513 | 0.554 | 0.552 | 0.338 | 0.243 | 0.338 | 0.256 | 0.255 | 0.097 | 0.255 |

| Method | K-Nearest Neighbors | | | | Decision Tree | | | | Gradient Boosting | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc |

| AL | 0.496 | 0.166 | 0.122 | 0.166 | 0.276 | 0.151 | 0.075 | 0.151 | 0.398 | 0.186 | 0.072 | 0.186 |

| ML | 0.064 | 0.064 | 0.053 | 0.064 | 0.354 | 0.234 | 0.179 | 0.234 | 0.397 | 0.212 | 0.127 | 0.212 |

| AL-W | 0.591 | 0.504 | 0.513 | 0.504 | 0.546 | 0.528 | 0.475 | 0.528 | 0.561 | 0.547 | 0.511 | 0.547 |

| ML-W | 0.553 | 0.497 | 0.504 | 0.497 | 0.552 | 0.502 | 0.468 | 0.502 | 0.542 | 0.531 | 0.497 | 0.531 |

| U-AL-1 | 0.519 | 0.138 | 0.098 | 0.138 | 0.38 | 0.186 | 0.088 | 0.186 | 0.398 | 0.186 | 0.072 | 0.186 |

| U-AL-All | 0.576 | 0.13 | 0.084 | 0.13 | 0.358 | 0.161 | 0.089 | 0.161 | 0.138 | 0.138 | 0.053 | 0.138 |

| U-AL-2 | 0.556 | 0.104 | 0.078 | 0.104 | 0.348 | 0.204 | 0.157 | 0.204 | 0.398 | 0.186 | 0.072 | 0.186 |

| U-AL-W-1 | 0.591 | 0.504 | 0.513 | 0.504 | 0.54 | 0.516 | 0.459 | 0.516 | 0.561 | 0.547 | 0.511 | 0.547 |

| U-AL-W-All | 0.591 | 0.504 | 0.513 | 0.504 | 0.544 | 0.525 | 0.467 | 0.525 | 0.561 | 0.547 | 0.511 | 0.547 |

| U-AL-W-2 | 0.591 | 0.504 | 0.513 | 0.504 | 0.55 | 0.534 | 0.48 | 0.534 | 0.561 | 0.547 | 0.511 | 0.547 |

| D-AL-1 | 0.434 | 0.286 | 0.265 | 0.286 | 0.242 | 0.14 | 0.06 | 0.14 | 0.369 | 0.186 | 0.072 | 0.186 |

| D-AL-All | 0.431 | 0.282 | 0.259 | 0.282 | 0.259 | 0.14 | 0.058 | 0.14 | 0.138 | 0.138 | 0.053 | 0.138 |

| D-AL-2 | 0.434 | 0.286 | 0.265 | 0.286 | 0.242 | 0.14 | 0.06 | 0.14 | 0.369 | 0.186 | 0.072 | 0.186 |

| D-AL-W-1 | 0.588 | 0.501 | 0.507 | 0.501 | 0.546 | 0.498 | 0.463 | 0.498 | 0.561 | 0.547 | 0.511 | 0.547 |

| D-AL-W-All | 0.588 | 0.501 | 0.507 | 0.501 | 0.541 | 0.497 | 0.463 | 0.497 | 0.561 | 0.547 | 0.511 | 0.547 |

| D-AL-W-2 | 0.588 | 0.501 | 0.507 | 0.501 | 0.546 | 0.498 | 0.463 | 0.498 | 0.561 | 0.547 | 0.511 | 0.547 |

| U-ML-1 | 0.147 | 0.065 | 0.056 | 0.065 | 0.372 | 0.227 | 0.163 | 0.227 | 0.397 | 0.212 | 0.127 | 0.212 |

| U-ML-All | 0.064 | 0.064 | 0.053 | 0.064 | 0.413 | 0.277 | 0.248 | 0.277 | 0.397 | 0.212 | 0.127 | 0.212 |

| U-ML-2 | 0.064 | 0.064 | 0.053 | 0.064 | 0.398 | 0.22 | 0.221 | 0.22 | 0.397 | 0.212 | 0.127 | 0.212 |

| U-ML-W-1 | 0.53 | 0.498 | 0.473 | 0.498 | 0.541 | 0.481 | 0.443 | 0.481 | 0.542 | 0.531 | 0.497 | 0.531 |

| U-ML-W-All | 0.514 | 0.485 | 0.447 | 0.485 | 0.542 | 0.509 | 0.465 | 0.509 | 0.542 | 0.531 | 0.497 | 0.531 |

| U-ML-W-2 | 0.513 | 0.488 | 0.448 | 0.488 | 0.551 | 0.519 | 0.473 | 0.519 | 0.542 | 0.531 | 0.497 | 0.531 |

| D-ML-1 | 0.446 | 0.164 | 0.213 | 0.164 | 0.533 | 0.353 | 0.359 | 0.353 | 0.397 | 0.212 | 0.127 | 0.212 |

| D-ML-All | 0.446 | 0.164 | 0.213 | 0.164 | 0.533 | 0.353 | 0.359 | 0.353 | 0.397 | 0.212 | 0.127 | 0.212 |

| D-ML-2 | 0.446 | 0.164 | 0.213 | 0.164 | 0.533 | 0.353 | 0.359 | 0.353 | 0.397 | 0.212 | 0.127 | 0.212 |

| D-ML-W-1 | 0.555 | 0.477 | 0.489 | 0.477 | 0.542 | 0.483 | 0.464 | 0.483 | 0.542 | 0.531 | 0.497 | 0.531 |

| D-ML-W-All | 0.555 | 0.477 | 0.489 | 0.477 | 0.542 | 0.483 | 0.464 | 0.483 | 0.542 | 0.531 | 0.497 | 0.531 |

| D-ML-W-2 | 0.555 | 0.477 | 0.489 | 0.477 | 0.542 | 0.483 | 0.464 | 0.483 | 0.542 | 0.531 | 0.497 | 0.531 |

| Method | Random Forest | | | | Random Subspace | | | | AdaBoost | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc |

| AL | 0.386 | 0.177 | 0.069 | 0.177 | 0.249 | 0.149 | 0.06 | 0.149 | 0.361 | 0.282 | 0.11 | 0.282 |

| ML | 0.464 | 0.237 | 0.121 | 0.237 | 0.138 | 0.138 | 0.053 | 0.138 | 0.134 | 0.132 | 0.084 | 0.132 |

| AL-W | 0.566 | 0.54 | 0.514 | 0.54 | 0.497 | 0.442 | 0.368 | 0.442 | 0.418 | 0.389 | 0.203 | 0.389 |

| ML-W | 0.581 | 0.539 | 0.504 | 0.539 | 0.496 | 0.215 | 0.121 | 0.215 | 0.101 | 0.137 | 0.093 | 0.137 |

| U-AL-1 | 0.386 | 0.177 | 0.069 | 0.177 | 0.218 | 0.149 | 0.06 | 0.149 | 0.36 | 0.281 | 0.11 | 0.281 |

| U-AL-All | 0.138 | 0.138 | 0.053 | 0.138 | 0.15 | 0.14 | 0.054 | 0.14 | 0.389 | 0.337 | 0.131 | 0.337 |

| U-AL-2 | 0.386 | 0.177 | 0.069 | 0.177 | 0.226 | 0.151 | 0.06 | 0.151 | 0.36 | 0.281 | 0.11 | 0.281 |

| U-AL-W-1 | 0.566 | 0.54 | 0.514 | 0.54 | 0.497 | 0.44 | 0.367 | 0.44 | 0.419 | 0.389 | 0.203 | 0.389 |

| U-AL-W-All | 0.566 | 0.54 | 0.514 | 0.54 | 0.497 | 0.44 | 0.367 | 0.44 | 0.419 | 0.389 | 0.203 | 0.389 |

| U-AL-W-2 | 0.566 | 0.54 | 0.514 | 0.54 | 0.497 | 0.44 | 0.367 | 0.44 | 0.419 | 0.389 | 0.203 | 0.389 |

| D-AL-1 | 0.651 | 0.312 | 0.206 | 0.312 | 0.22 | 0.158 | 0.061 | 0.158 | 0.374 | 0.279 | 0.109 | 0.279 |

| D-AL-All | 0.688 | 0.303 | 0.202 | 0.303 | 0.197 | 0.146 | 0.056 | 0.146 | 0.389 | 0.337 | 0.131 | 0.337 |

| D-AL-2 | 0.651 | 0.312 | 0.206 | 0.312 | 0.22 | 0.158 | 0.061 | 0.158 | 0.374 | 0.279 | 0.109 | 0.279 |

| D-AL-W-1 | 0.566 | 0.54 | 0.514 | 0.54 | 0.524 | 0.518 | 0.417 | 0.518 | 0.419 | 0.389 | 0.203 | 0.389 |

| D-AL-W-All | 0.566 | 0.54 | 0.514 | 0.54 | 0.518 | 0.504 | 0.419 | 0.504 | 0.419 | 0.389 | 0.203 | 0.389 |

| D-AL-W-2 | 0.566 | 0.54 | 0.514 | 0.54 | 0.524 | 0.518 | 0.417 | 0.518 | 0.419 | 0.389 | 0.203 | 0.389 |

| U-ML-1 | 0.464 | 0.237 | 0.12 | 0.237 | 0.138 | 0.138 | 0.053 | 0.138 | 0.134 | 0.132 | 0.084 | 0.132 |

| U-ML-All | 0.464 | 0.237 | 0.12 | 0.237 | 0.138 | 0.138 | 0.053 | 0.138 | 0.134 | 0.132 | 0.084 | 0.132 |

| U-ML-2 | 0.465 | 0.238 | 0.122 | 0.238 | 0.138 | 0.138 | 0.053 | 0.138 | 0.134 | 0.132 | 0.084 | 0.132 |

| U-ML-W-1 | 0.581 | 0.539 | 0.504 | 0.539 | 0.496 | 0.215 | 0.121 | 0.215 | 0.101 | 0.137 | 0.093 | 0.137 |

| U-ML-W-All | 0.581 | 0.539 | 0.504 | 0.539 | 0.496 | 0.215 | 0.121 | 0.215 | 0.101 | 0.137 | 0.093 | 0.137 |

| U-ML-W-2 | 0.581 | 0.539 | 0.504 | 0.539 | 0.496 | 0.215 | 0.121 | 0.215 | 0.101 | 0.137 | 0.093 | 0.137 |

| D-ML-1 | 0.575 | 0.345 | 0.237 | 0.345 | 0.209 | 0.152 | 0.067 | 0.152 | 0.134 | 0.132 | 0.084 | 0.132 |

| D-ML-All | 0.575 | 0.345 | 0.237 | 0.345 | 0.209 | 0.152 | 0.067 | 0.152 | 0.134 | 0.132 | 0.084 | 0.132 |

| D-ML-2 | 0.575 | 0.345 | 0.237 | 0.345 | 0.209 | 0.152 | 0.067 | 0.152 | 0.134 | 0.132 | 0.084 | 0.132 |

| D-ML-W-1 | 0.581 | 0.539 | 0.504 | 0.539 | 0.535 | 0.268 | 0.155 | 0.268 | 0.101 | 0.137 | 0.093 | 0.137 |

| D-ML-W-All | 0.581 | 0.539 | 0.504 | 0.539 | 0.519 | 0.244 | 0.142 | 0.244 | 0.101 | 0.137 | 0.093 | 0.137 |

| D-ML-W-2 | 0.581 | 0.539 | 0.504 | 0.539 | 0.535 | 0.268 | 0.155 | 0.268 | 0.101 | 0.137 | 0.093 | 0.137 |
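In the tables above, the Recall and Acc columns carry the same value in every row. That pattern is what support-weighted averaging of per-class recall produces, since it reduces algebraically to overall accuracy. The sketch below illustrates this in plain Python. The weighted averaging mode, the function name `weighted_metrics`, the zero-precision convention for classes that are never predicted, and the toy labels are all assumptions made for illustration, not details taken from the experiments.

```python
from collections import Counter


def weighted_metrics(y_true, y_pred):
    """Return (precision, recall, balanced_accuracy, accuracy).

    Precision and recall are averaged over classes weighted by class
    support. This averaging mode is an assumption, not a documented
    setting of the reported experiments.
    """
    n = len(y_true)
    support = Counter(y_true)    # number of true instances per class
    predicted = Counter(y_pred)  # number of predictions per class
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)

    # Support-weighted precision; a class that is never predicted
    # contributes 0 (a convention chosen here for the sketch).
    precision = sum(
        support[c] / n * (hits[c] / predicted[c] if predicted[c] else 0.0)
        for c in support
    )
    # Support-weighted recall: the support terms cancel, leaving
    # (total correct) / n, which is the overall accuracy.
    recall = sum(support[c] / n * hits[c] / support[c] for c in support)
    # Balanced accuracy: unweighted mean of per-class recall.
    balanced_acc = sum(hits[c] / support[c] for c in support) / len(support)
    accuracy = sum(hits.values()) / n
    return precision, recall, balanced_acc, accuracy


# Toy, imbalanced three-class example (hypothetical labels).
y_true = ["a", "a", "a", "a", "b", "b", "c", "c"]
y_pred = ["a", "a", "a", "b", "b", "a", "a", "c"]
prec, rec, bacc, acc = weighted_metrics(y_true, y_pred)
print(f"Prec.={prec:.3f} Recall={rec:.3f} BAcc={bacc:.3f} Acc={acc:.3f}")
```

On this toy input, weighted recall and accuracy are both 0.625, while balanced accuracy is lower because the two minority classes are recognized less often than the majority class. The same mechanism would explain why BAcc sits below Acc in most rows of the tables.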

| Method | K-Nearest Neighbors | | | | Decision Tree | | | | Gradient Boosting | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc |

| AL | 0.488 | 0.14 | 0.158 | 0.14 | 0.147 | 0.145 | 0.056 | 0.145 | 0.198 | 0.146 | 0.055 | 0.146 |

| ML | 0.43 | 0.091 | 0.097 | 0.091 | 0.327 | 0.26 | 0.177 | 0.26 | 0.309 | 0.263 | 0.195 | 0.263 |

| AL-W | 0.435 | 0.402 | 0.411 | 0.402 | 0.454 | 0.406 | 0.369 | 0.406 | 0.458 | 0.437 | 0.42 | 0.437 |

| ML-W | 0.419 | 0.38 | 0.39 | 0.38 | 0.515 | 0.428 | 0.41 | 0.428 | 0.498 | 0.438 | 0.425 | 0.438 |

| U-AL-1 | 0.539 | 0.141 | 0.154 | 0.141 | 0.158 | 0.145 | 0.056 | 0.145 | 0.156 | 0.144 | 0.055 | 0.144 |

| U-AL-All | 0.287 | 0.16 | 0.121 | 0.16 | 0.143 | 0.154 | 0.075 | 0.154 | 0.138 | 0.138 | 0.053 | 0.138 |

| U-AL-2 | 0.34 | 0.135 | 0.138 | 0.135 | 0.267 | 0.214 | 0.125 | 0.214 | 0.176 | 0.145 | 0.055 | 0.145 |

| U-AL-W-1 | 0.417 | 0.399 | 0.406 | 0.399 | 0.475 | 0.409 | 0.363 | 0.409 | 0.484 | 0.462 | 0.438 | 0.462 |

| U-AL-W-All | 0.419 | 0.388 | 0.393 | 0.388 | 0.488 | 0.446 | 0.393 | 0.446 | 0.484 | 0.462 | 0.438 | 0.462 |

| U-AL-W-2 | 0.43 | 0.386 | 0.385 | 0.386 | 0.478 | 0.447 | 0.394 | 0.447 | 0.484 | 0.462 | 0.438 | 0.462 |

| D-AL-1 | 0.323 | 0.182 | 0.185 | 0.182 | 0.288 | 0.084 | 0.066 | 0.084 | 0.182 | 0.145 | 0.055 | 0.145 |

| D-AL-All | 0.291 | 0.179 | 0.182 | 0.179 | 0.318 | 0.086 | 0.066 | 0.086 | 0.138 | 0.138 | 0.053 | 0.138 |

| D-AL-2 | 0.323 | 0.182 | 0.185 | 0.182 | 0.288 | 0.084 | 0.066 | 0.084 | 0.182 | 0.145 | 0.055 | 0.145 |

| D-AL-W-1 | 0.435 | 0.402 | 0.411 | 0.402 | 0.479 | 0.417 | 0.375 | 0.417 | 0.486 | 0.463 | 0.438 | 0.463 |

| D-AL-W-All | 0.435 | 0.402 | 0.411 | 0.402 | 0.478 | 0.423 | 0.378 | 0.423 | 0.486 | 0.463 | 0.438 | 0.463 |

| D-AL-W-2 | 0.435 | 0.402 | 0.411 | 0.402 | 0.479 | 0.417 | 0.375 | 0.417 | 0.486 | 0.463 | 0.438 | 0.463 |

| U-ML-1 | 0.536 | 0.098 | 0.116 | 0.098 | 0.316 | 0.257 | 0.171 | 0.257 | 0.309 | 0.263 | 0.195 | 0.263 |

| U-ML-All | 0.624 | 0.092 | 0.109 | 0.092 | 0.402 | 0.301 | 0.246 | 0.301 | 0.309 | 0.263 | 0.195 | 0.263 |

| U-ML-2 | 0.628 | 0.093 | 0.11 | 0.093 | 0.449 | 0.251 | 0.283 | 0.251 | 0.309 | 0.263 | 0.195 | 0.263 |

| U-ML-W-1 | 0.421 | 0.383 | 0.392 | 0.383 | 0.521 | 0.407 | 0.368 | 0.407 | 0.498 | 0.438 | 0.425 | 0.438 |

| U-ML-W-All | 0.482 | 0.301 | 0.314 | 0.301 | 0.502 | 0.436 | 0.414 | 0.436 | 0.498 | 0.438 | 0.425 | 0.438 |

| U-ML-W-2 | 0.475 | 0.285 | 0.286 | 0.285 | 0.496 | 0.449 | 0.413 | 0.449 | 0.498 | 0.438 | 0.425 | 0.438 |

| D-ML-1 | 0.317 | 0.139 | 0.131 | 0.139 | 0.323 | 0.158 | 0.31 | 0.158 | 0.309 | 0.263 | 0.195 | 0.263 |

| D-ML-All | 0.317 | 0.139 | 0.131 | 0.139 | 0.323 | 0.158 | 0.31 | 0.158 | 0.309 | 0.263 | 0.195 | 0.263 |

| D-ML-2 | 0.317 | 0.139 | 0.131 | 0.139 | 0.323 | 0.158 | 0.31 | 0.158 | 0.309 | 0.263 | 0.195 | 0.263 |

| D-ML-W-1 | 0.404 | 0.382 | 0.409 | 0.382 | 0.508 | 0.419 | 0.386 | 0.419 | 0.498 | 0.438 | 0.425 | 0.438 |

| D-ML-W-All | 0.404 | 0.382 | 0.409 | 0.382 | 0.508 | 0.419 | 0.386 | 0.419 | 0.498 | 0.438 | 0.425 | 0.438 |

| D-ML-W-2 | 0.404 | 0.382 | 0.409 | 0.382 | 0.508 | 0.419 | 0.386 | 0.419 | 0.498 | 0.438 | 0.425 | 0.438 |

| Method | Random Forest | | | | Random Subspace | | | | AdaBoost | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc | Prec. | Recall | BAcc | Acc |

| AL | 0.198 | 0.167 | 0.078 | 0.167 | 0.129 | 0.145 | 0.055 | 0.145 | 0.202 | 0.183 | 0.075 | 0.183 |

| ML | 0.341 | 0.251 | 0.183 | 0.251 | 0.138 | 0.138 | 0.053 | 0.138 | 0.107 | 0.143 | 0.062 | 0.143 |

| AL-W | 0.47 | 0.423 | 0.419 | 0.423 | 0.259 | 0.238 | 0.159 | 0.238 | 0.268 | 0.292 | 0.145 | 0.292 |

| ML-W | 0.528 | 0.438 | 0.403 | 0.438 | 0.208 | 0.148 | 0.056 | 0.148 | 0.112 | 0.154 | 0.084 | 0.154 |

| U-AL-1 | 0.198 | 0.167 | 0.078 | 0.167 | 0.125 | 0.145 | 0.055 | 0.145 | 0.196 | 0.184 | 0.073 | 0.184 |

| U-AL-All | 0.168 | 0.164 | 0.062 | 0.164 | 0.138 | 0.138 | 0.053 | 0.138 | 0.119 | 0.141 | 0.054 | 0.141 |

| U-AL-2 | 0.198 | 0.167 | 0.078 | 0.167 | 0.144 | 0.143 | 0.054 | 0.143 | 0.243 | 0.192 | 0.073 | 0.192 |

| U-AL-W-1 | 0.485 | 0.447 | 0.422 | 0.447 | 0.299 | 0.269 | 0.143 | 0.269 | 0.331 | 0.316 | 0.168 | 0.316 |

| U-AL-W-All | 0.49 | 0.45 | 0.423 | 0.45 | 0.299 | 0.269 | 0.143 | 0.269 | 0.331 | 0.316 | 0.168 | 0.316 |

| U-AL-W-2 | 0.485 | 0.447 | 0.422 | 0.447 | 0.299 | 0.269 | 0.143 | 0.269 | 0.331 | 0.316 | 0.168 | 0.316 |

| D-AL-1 | 0.252 | 0.189 | 0.135 | 0.189 | 0.221 | 0.147 | 0.061 | 0.147 | 0.211 | 0.194 | 0.079 | 0.194 |

| D-AL-All | 0.267 | 0.172 | 0.114 | 0.172 | 0.139 | 0.14 | 0.058 | 0.14 | 0.119 | 0.141 | 0.054 | 0.141 |

| D-AL-2 | 0.252 | 0.189 | 0.135 | 0.189 | 0.221 | 0.147 | 0.061 | 0.147 | 0.211 | 0.194 | 0.079 | 0.194 |

| D-AL-W-1 | 0.481 | 0.446 | 0.421 | 0.446 | 0.378 | 0.302 | 0.202 | 0.302 | 0.331 | 0.316 | 0.168 | 0.316 |

| D-AL-W-All | 0.481 | 0.446 | 0.421 | 0.446 | 0.375 | 0.306 | 0.198 | 0.306 | 0.331 | 0.316 | 0.168 | 0.316 |

| D-AL-W-2 | 0.481 | 0.446 | 0.421 | 0.446 | 0.378 | 0.302 | 0.202 | 0.302 | 0.331 | 0.316 | 0.168 | 0.316 |

| U-ML-1 | 0.341 | 0.251 | 0.183 | 0.251 | 0.138 | 0.138 | 0.053 | 0.138 | 0.107 | 0.143 | 0.062 | 0.143 |

| U-ML-All | 0.341 | 0.251 | 0.183 | 0.251 | 0.138 | 0.138 | 0.053 | 0.138 | 0.107 | 0.143 | 0.062 | 0.143 |

| U-ML-2 | 0.341 | 0.251 | 0.183 | 0.251 | 0.138 | 0.138 | 0.053 | 0.138 | 0.107 | 0.143 | 0.062 | 0.143 |

| U-ML-W-1 | 0.528 | 0.438 | 0.403 | 0.438 | 0.202 | 0.149 | 0.057 | 0.149 | 0.112 | 0.154 | 0.084 | 0.154 |

| U-ML-W-All | 0.528 | 0.438 | 0.403 | 0.438 | 0.202 | 0.149 | 0.057 | 0.149 | 0.112 | 0.154 | 0.084 | 0.154 |