A Helping Hand: A Survey About AI-Driven Experimental Design for Accelerating Scientific Research

Abstract

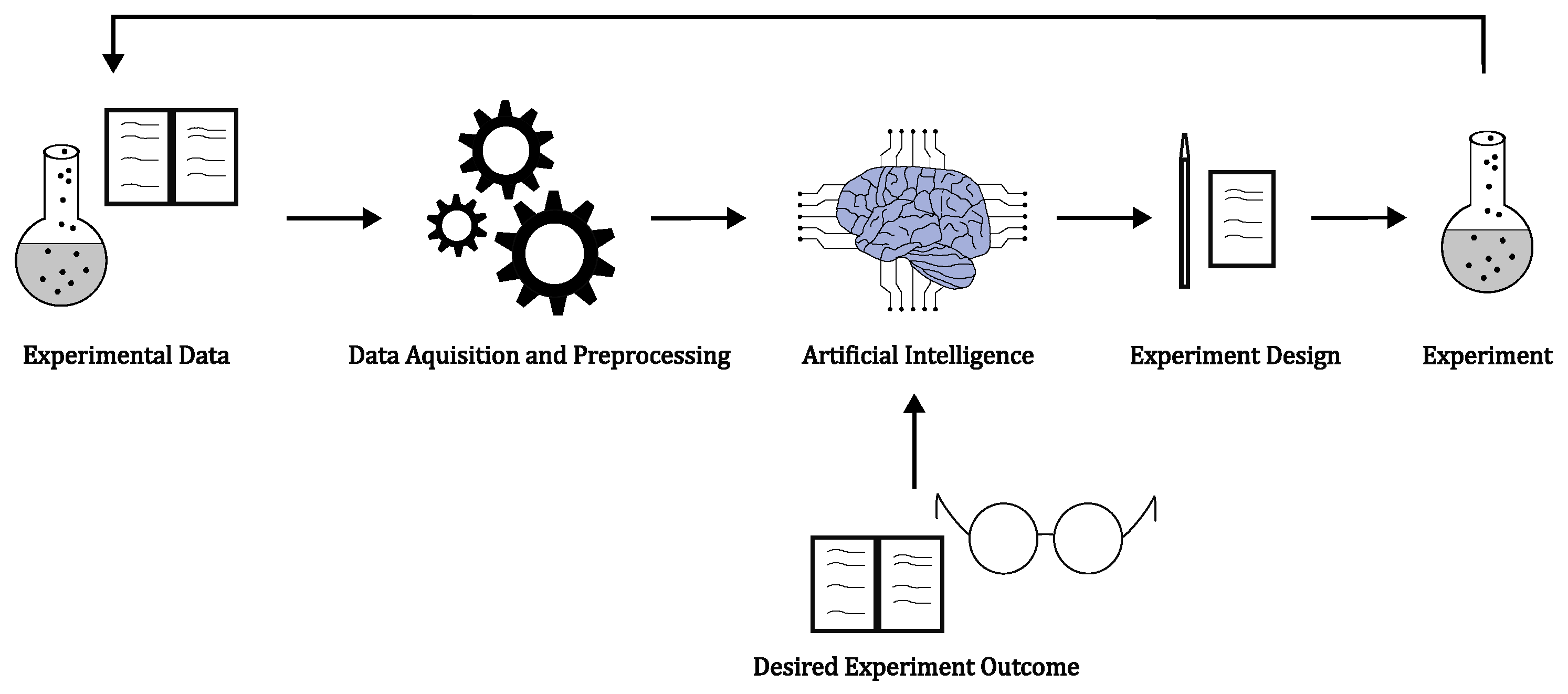

1. Introduction

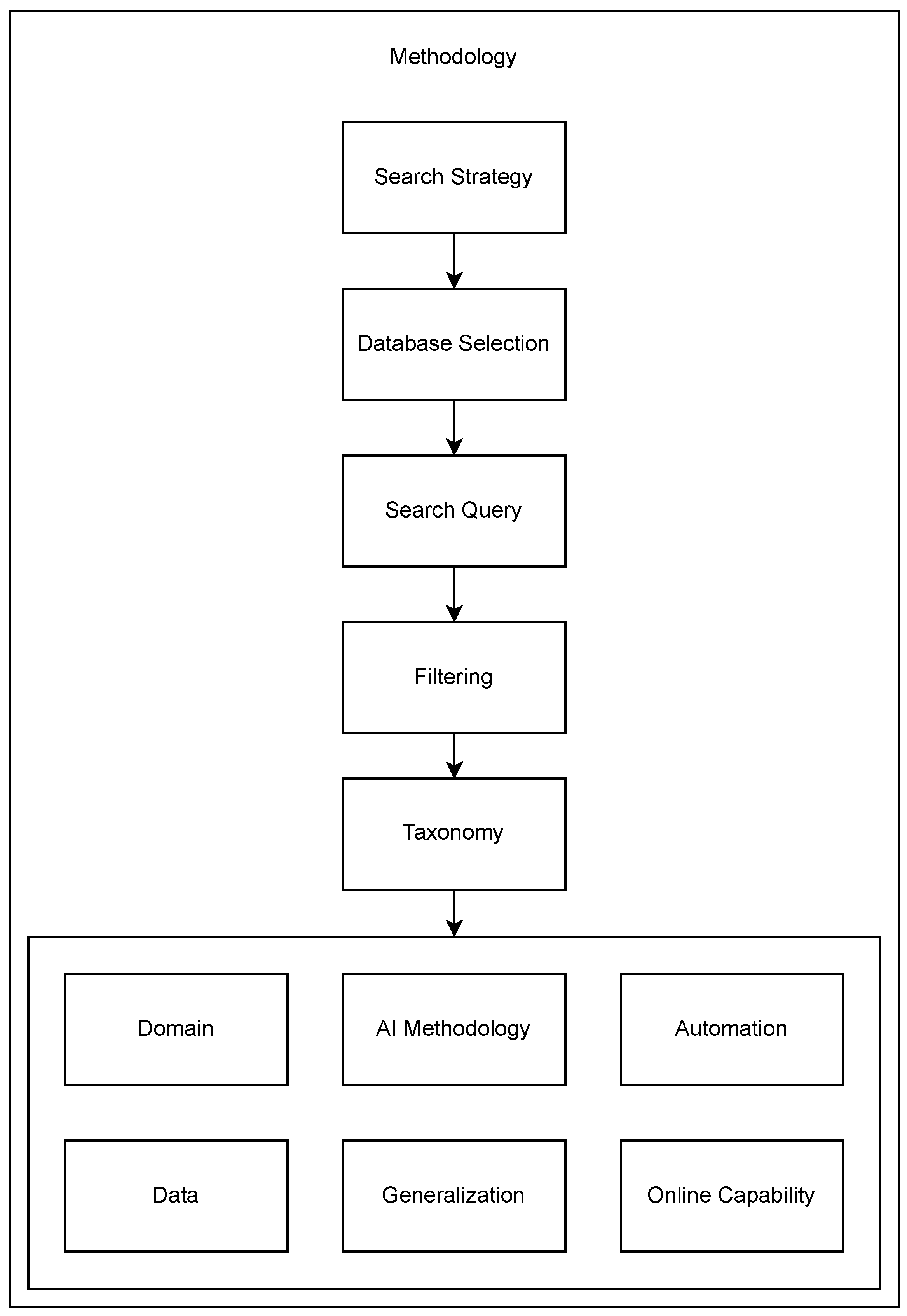

2. Methodology

2.1. Search Strategy

- Define research questions for guiding the systematic research.

- Create an appropriate search query by defining meaningful search terms and assessing relevant databases.

- Determine filter criteria to exclude non-relevant studies.

- Apply the filter criteria through appropriate procedures.

- In which research fields and kinds of applications are AI techniques used to automate experimental design?

- Which quantitative methods are used to implement AI in experimental design?

- Which tasks in the experimental design process are addressed by these techniques?

- What kind of data are used?

- Are the proposed frameworks online-capable, and can they generalize well to new data?

2.2. Filtering

2.3. Application of Filter Criteria

2.3.1. Filter Criterion 1

2.3.2. Filter Criterion 2

2.3.3. Filter Criterion 3

2.3.4. Filter Criterion 4

2.4. Taxonomy

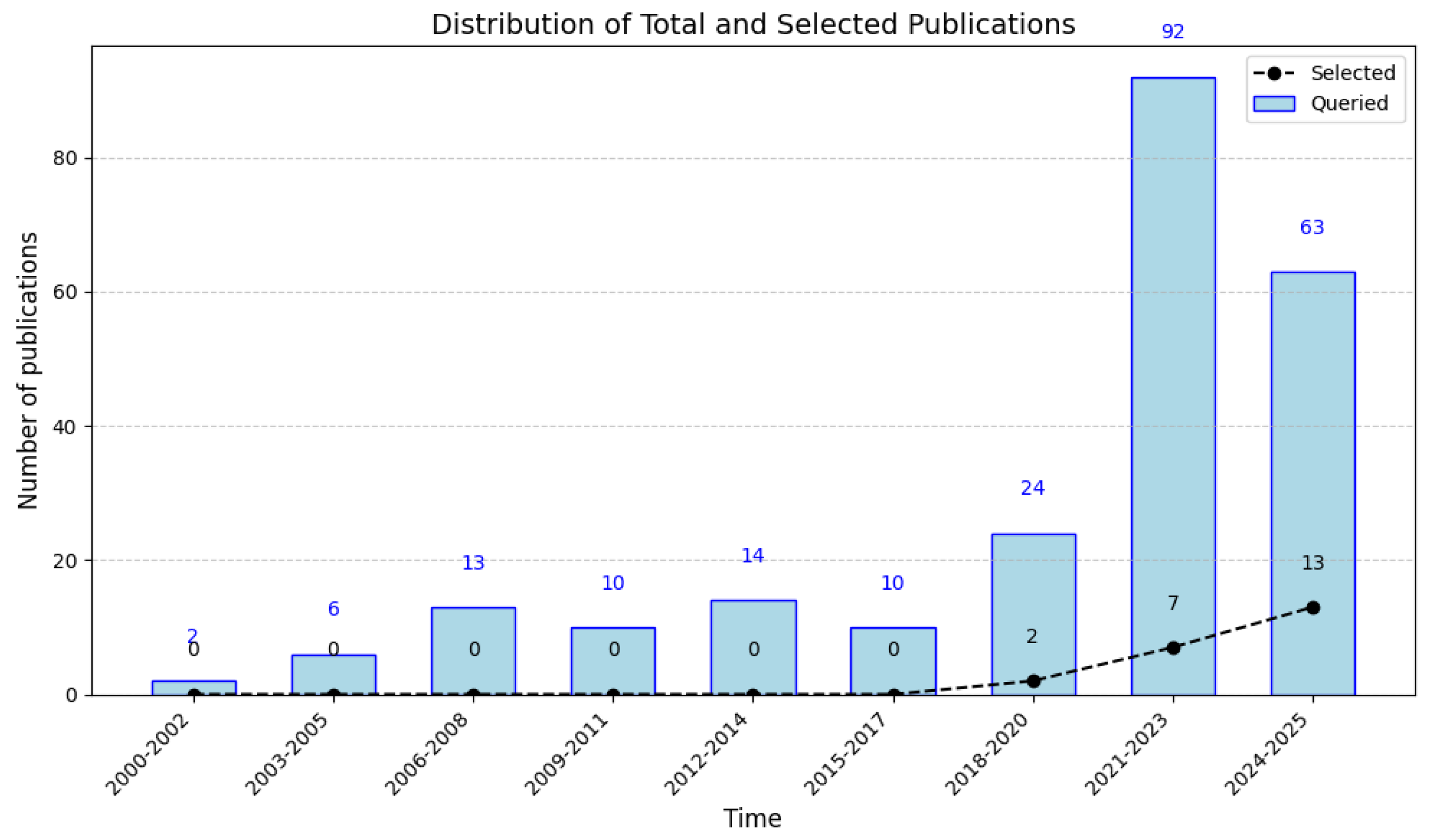

3. Results

3.1. Domains of Application

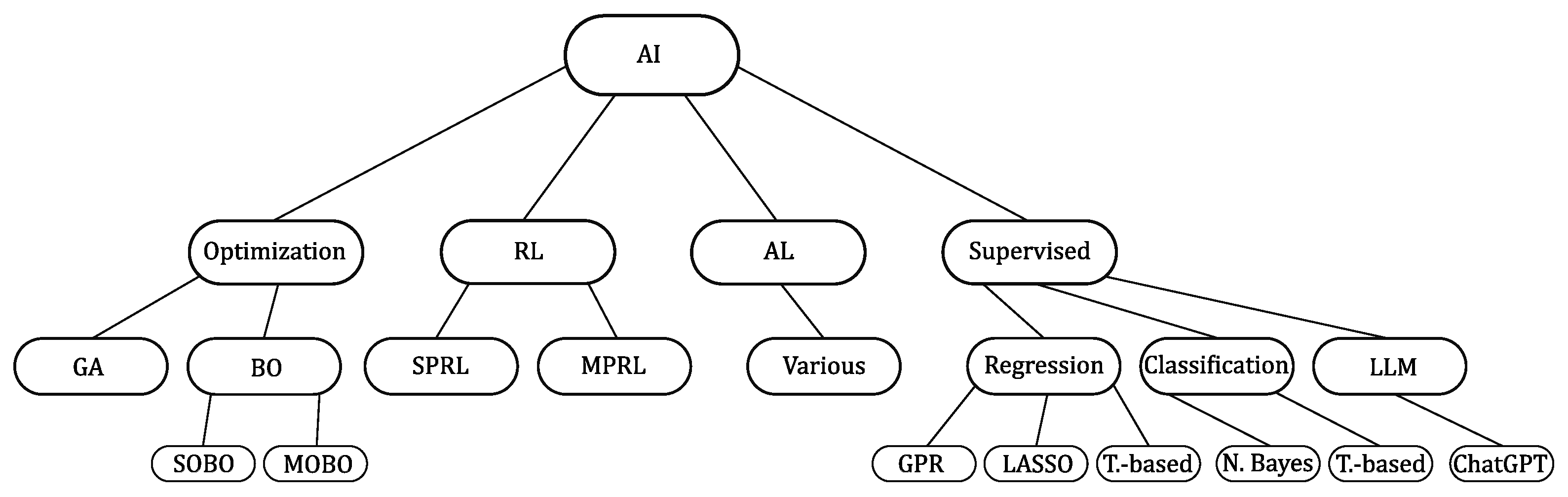

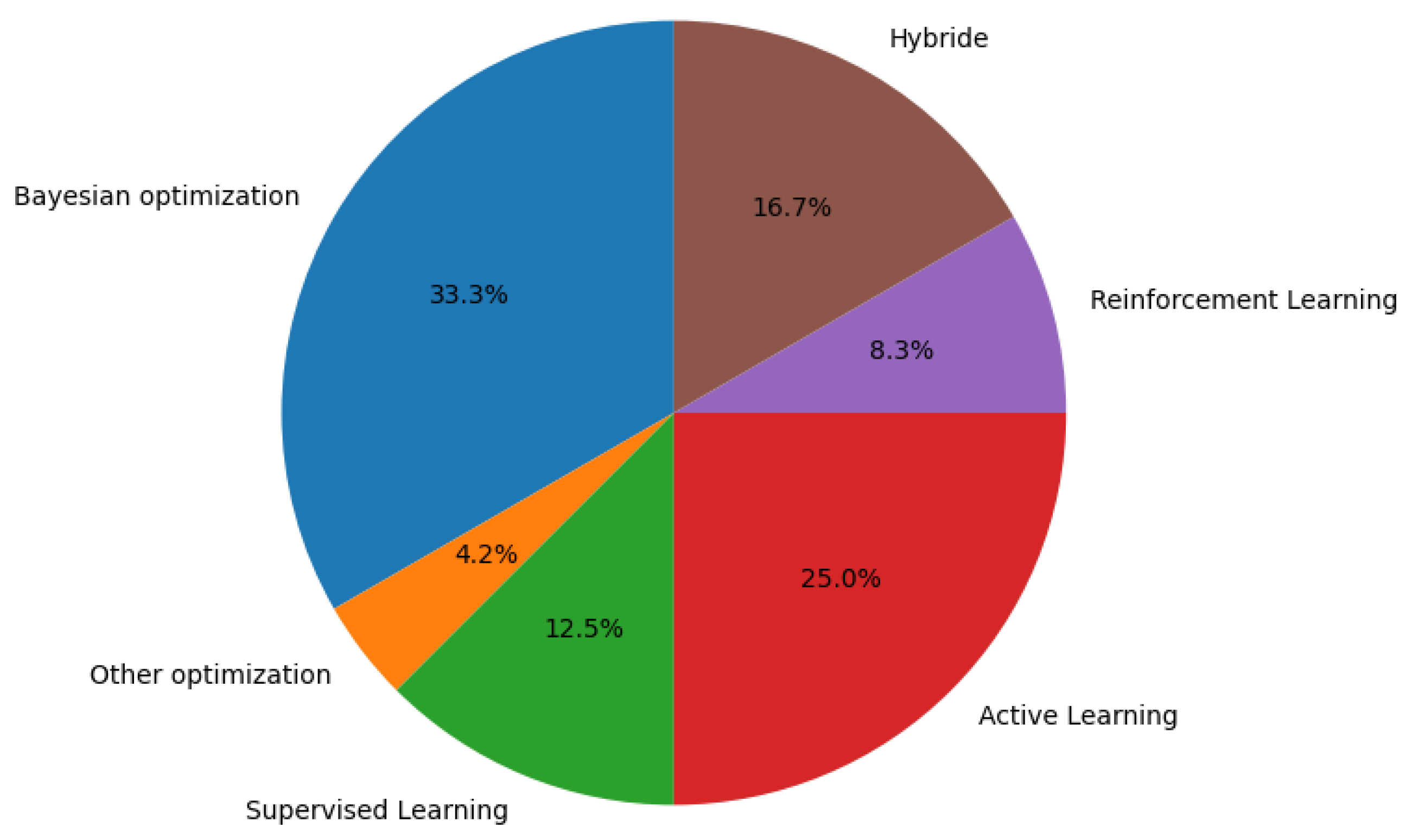

3.2. AI Methodologies for Experimental Design

3.2.1. Single Approaches

3.2.2. Hybrid Approaches

3.2.3. Summary

3.3. Degree of Automation

3.4. Kind of Data

3.5. Online Capability

3.6. Generalization Ability

3.7. Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| AI | Artificial intelligence |

| AL | Active learning |

| BO | Bayesian optimization |

| DL | Deep learning |

| DT | Decision tree |

| EDNN | Ensemble deep neural network |

| EHI | Expected hypercube improvement |

| EI | Expected improvement |

| ENN | Ensemble neural network |

| FC | Filter criteria |

| GA | Genetic algorithm |

| GP | Gaussian process |

| LASSO | Least absolute shrinkage and selection operator |

| LHS | Latin hypercube sampling |

| LLM | Large language model |

| MC | Monte Carlo |

| MOBO | Multi-objective Bayesian optimization |

| NN | Neural network |

| PLSR | Partial least square regression |

| PV | Predictive variance |

| RL | Reinforcement learning |

| RF | Random forest |

| SOBO | Single-objective Bayesian optimization |

References

- Piliuk, K.; Tomforde, S. Artificial intelligence in emergency medicine. A systematic literature review. Int. J. Med. Inform. 2023, 180, 105274. [Google Scholar] [CrossRef] [PubMed]

- Hysmith, H.; Foadian, E.; Padhy, S.P.; Kalinin, S.V.; Moore, R.G.; Ovchinnikova, O.S.; Ahmadi, M. The future of self-driving laboratories: From human in the loop interactive AI to gamification. Digit. Discov. 2024, 3, 621–636. [Google Scholar] [CrossRef]

- Su, Y.; Wang, X.; Ye, Y.; Xie, Y.; Xu, Y.; Jiang, Y.; Wang, C. Automation and machine learning augmented by large language models in a catalysis study. Chem. Sci. 2024, 15, 12200–12233. [Google Scholar] [CrossRef] [PubMed]

- Häse, F.; Roch, L.M.; Aspuru-Guzik, A. Next-Generation Experimentation with Self-Driving Laboratories. Trends Chem. 2019, 1, 282–291. [Google Scholar] [CrossRef]

- Lo, S.; Baird, S.G.; Schrier, J.; Blaiszik, B.; Carson, N.; Foster, I.; Aguilar-Granda, A.; Kalinin, S.V.; Maruyama, B.; Politi, M.; et al. Review of low-cost self-driving laboratories in chemistry and materials science: The “frugal twin” concept. Digit. Discov. 2024, 3, 842–868. [Google Scholar] [CrossRef]

- Hickman, R.J.; Bannigan, P.; Bao, Z.; Aspuru-Guzik, A.; Allen, C. Self-driving laboratories: A paradigm shift in nanomedicine development. Matter 2023, 6, 1071–1081. [Google Scholar] [CrossRef]

- Bennett, J.A.; Abolhasani, M. Autonomous chemical science and engineering enabled by self-driving laboratories. Curr. Opin. Chem. Eng. 2022, 36, 100831. [Google Scholar] [CrossRef]

- Sadeghi, S.; Canty, R.B.; Mukhin, N.; Xu, J.; Delgado-Licona, F.; Abolhasani, M. Engineering a Sustainable Future: Harnessing Automation, Robotics, and Artificial Intelligence with Self-Driving Laboratories. ACS Sustain. Chem. Eng. 2024, 12, 12695–12707. [Google Scholar] [CrossRef]

- Tom, G.; Schmid, S.P.; Baird, S.G.; Cao, Y.; Darvish, K.; Hao, H.; Lo, S.; Pablo-García, S.; Rajaonson, E.M.; Skreta, M.; et al. Self-Driving Laboratories for Chemistry and Materials Science. Chem. Rev. 2024, 124, 9633–9732. [Google Scholar] [CrossRef]

- Beaucage, P.A.; Sutherland, D.R.; Martin, T.B. Automation and Machine Learning for Accelerated Polymer Characterization and Development: Past, Potential, and a Path Forward. Macromolecules 2024, 57, 8661–8670. [Google Scholar] [CrossRef]

- Sadeghi, S.; Bateni, F.; Kim, T.; Son, D.Y.; Bennett, J.A.; Orouji, N.; Punati, V.S.; Stark, C.; Cerra, T.D.; Awad, R.; et al. Autonomous nanomanufacturing of lead-free metal halide perovskite nanocrystals using a self-driving fluidic lab. Nanoscale 2024, 16, 580–591. [Google Scholar] [CrossRef] [PubMed]

- Snapp, K.L.; Brown, K.A. Driving school for self-driving labs. Digit. Discov. 2023, 2, 1620–1629. [Google Scholar] [CrossRef]

- Seifrid, M.; Pollice, R.; Aguilar-Granda, A.; Morgan Chan, Z.; Hotta, K.; Ser, C.T.; Vestfrid, J.; Wu, T.C.; Aspuru-Guzik, A. Autonomous Chemical Experiments: Challenges and Perspectives on Establishing a Self-Driving Lab. Accounts Chem. Res. 2022, 55, 2454–2466. [Google Scholar] [CrossRef] [PubMed]

- Morgan, D.; Pilania, G.; Couet, A.; Uberuaga, B.P.; Sun, C.; Li, J. Machine learning in nuclear materials research. Curr. Opin. Solid State Mater. Sci. 2022, 26, 100975. [Google Scholar] [CrossRef]

- Kalinin, S.V.; Ziatdinov, M.; Hinkle, J.; Jesse, S.; Ghosh, A.; Kelley, K.P.; Lupini, A.R.; Sumpter, B.G.; Vasudevan, R.K. Automated and Autonomous Experiments in Electron and Scanning Probe Microscopy. ACS Nano 2021, 15, 12604–12627. [Google Scholar] [CrossRef]

- Aal E Ali, R.S.; Meng, J.; Khan, M.E.I.; Jiang, X. Machine learning advancements in organic synthesis: A focused exploration of artificial intelligence applications in chemistry. Artif. Intell. Chem. 2024, 2, 100049. [Google Scholar] [CrossRef]

- Bannigan, P.; Aldeghi, M.; Bao, Z.; Häse, F.; Aspuru-Guzik, A.; Allen, C. Machine learning directed drug formulation development. Adv. Drug Deliv. Rev. 2021, 175, 113806. [Google Scholar] [CrossRef]

- Li, X.; Xu, Z.; Bu, D.; Cai, J.; Chen, H.; Chen, Q.; Chen, T.; Cheng, F.; Chi, L.; Dong, W.; et al. Recent progress on surface chemistry II: Property and characterization. Chin. Chem. Lett. 2025, 36, 110100. [Google Scholar] [CrossRef]

- Smeaton, M.A.; Abellan, P.; Spurgeon, S.R.; Unocic, R.R.; Jungjohann, K.L. Tutorial on In Situ and Operando (Scanning) Transmission Electron Microscopy for Analysis of Nanoscale Structure—Property Relationships. ACS Nano 2024, 18, 35091–35103. [Google Scholar] [CrossRef]

- Anker, A.S.; Aspuru-Guzik, A.; Mahmoud, C.B.; Bennett, S.; Briling, K.R.; Changiarath, A.; Chong, S.; Collins, C.M.; Cooper, A.I.; Crusius, D.; et al. Discovering structure-property correlations: General discussion. Faraday Discuss. 2024, 256, 373–412. [Google Scholar] [CrossRef]

- Batool, M.; Sanumi, O.; Jankovic, J. Application of artificial intelligence in the materials science, with a special focus on fuel cells and electrolyzers. Energy AI 2024, 18, 100424. [Google Scholar] [CrossRef]

- Wang, L.; Ma, C.; Feng, X.; Zhang, Z.; Yang, H.; Zhang, J.; Chen, Z.; Tang, J.; Chen, X.; Lin, Y.; et al. A survey on large language model based autonomous agents. Front. Comput. Sci. 2024, 18, 186345. [Google Scholar] [CrossRef]

- Madanchian, M.; Taherdoost, H. AI-Powered Innovations in High-Tech Research and Development: From Theory to Practice. Comput. Mater. Contin. 2024, 81, 2133–2159. [Google Scholar] [CrossRef]

- Abstracts. Fuel Energy Abstr. 2024, 65, 506–608. [CrossRef]

- Nian, M.; Braun, G.; Escher, B.I.; Fang, M. Toxicological Study of Human Exposure to Mixtures of Chemicals: Challenges and Approaches. Environ. Sci. Technol. Lett. 2024, 11, 773–782. [Google Scholar] [CrossRef]

- Groo, L.; Juhl, A.T.; Baldwin, L.A. Toward soft robotic inspection for aircraft: An overview and perspective. MRS Commun. 2024, 14, 741–751. [Google Scholar] [CrossRef]

- Achenbach, P.; Adhikari, D.; Afanasev, A.; Afzal, F.; Aidala, C.; Al-bataineh, A.; Almaalol, D.; Amaryan, M.; Androić, D.; Armstrong, W.; et al. The present and future of QCD. Nucl. Phys. A 2024, 1047, 122874. [Google Scholar] [CrossRef]

- Wu, Y.; Walsh, A.; Ganose, A.M. Race to the bottom: Bayesian optimisation for chemical problems. Digit. Discov. 2024, 3, 1086–1100. [Google Scholar] [CrossRef]

- Li, Q.; Xing, R.; Li, L.; Yao, H.; Wu, L.; Zhao, L. Synchrotron radiation data-driven artificial intelligence approaches in materials discovery. Artif. Intell. Chem. 2024, 2, 100045. [Google Scholar] [CrossRef]

- Xie, J.; Zhou, Y.; Faizan, M.; Li, Z.; Li, T.; Fu, Y.; Wang, X.; Zhang, L. Designing semiconductor materials and devices in the post-Moore era by tackling computational challenges with data-driven strategies. Nat. Comput. Sci. 2024, 4, 322–333. [Google Scholar] [CrossRef]

- Orouji, N.; Bennett, J.A.; Sadeghi, S.; Abolhasani, M. Digital Pareto-front mapping of homogeneous catalytic reactions. React. Chem. Eng. 2024, 9, 787–794. [Google Scholar] [CrossRef]

- Baró, E.L.; Nadal Rodríguez, P.; Juárez-Jiménez, J.; Ghashghaei, O.; Lavilla, R. Reaction Space Charting as a Tool in Organic Chemistry Research and Development. Adv. Synth. Catal. 2024, 366, 551–573. [Google Scholar] [CrossRef]

- Volk, A.A.; Abolhasani, M. Performance metrics to unleash the power of self-driving labs in chemistry and materials science. Nat. Commun. 2024, 15, 1378. [Google Scholar] [CrossRef] [PubMed]

- Voogdt, C.G.P.; Tripathi, S.; Bassler, S.O.; McKeithen-Mead, S.A.; Guiberson, E.R.; Koumoutsi, A.; Bravo, A.M.; Buie, C.; Zimmermann, M.; Sonnenburg, J.L.; et al. Randomly barcoded transposon mutant libraries for gut commensals II: Applying libraries for functional genetics. Cell Rep. 2024, 43, 113519. [Google Scholar] [CrossRef]

- Williams, B.M.; Hanson, B.R.; Pandya, R. Chapter 15—Geoscience-society interface: How to improve dialog and build actions for the benefit of human communities. In Geoethics for the Future; Peppoloni, S., Capua, G.D., Eds.; Elsevier: Amsterdam, The Netherlands, 2024; pp. 191–206. [Google Scholar] [CrossRef]

- Von Stosch, M. Digital Process Development and Manufacturing of Biopharmaceuticals: Is It a Revolution? In Innovation in Life Sciences; Management for Professionals; Schönbohm, A., Von Horsten, H.H., Plugmann, P., Von Stosch, M., Eds.; Springer Nature Switzerland: Cham, Switzerland, 2024; pp. 61–75. [Google Scholar] [CrossRef]

- Volkamer, A.; Riniker, S.; Nittinger, E.; Lanini, J.; Grisoni, F.; Evertsson, E.; Rodríguez-Pérez, R.; Schneider, N. Machine learning for small molecule drug discovery in academia and industry. Artif. Intell. Life Sci. 2023, 3, 100056. [Google Scholar] [CrossRef]

- Zhao, Y.; Zhu, Z.; Chen, B.; Qiu, S.; Huang, J.; Lu, X.; Yang, W.; Ai, C.; Huang, K.; He, C.; et al. Toward parallel intelligence: An interdisciplinary solution for complex systems. Innovation 2023, 4, 100521. [Google Scholar] [CrossRef]

- Schrier, J.; Norquist, A.J.; Buonassisi, T.; Brgoch, J. In Pursuit of the Exceptional: Research Directions for Machine Learning in Chemical and Materials Science. J. Am. Chem. Soc. 2023, 145, 21699–21716. [Google Scholar] [CrossRef]

- Bustillo, L.; Laino, T.; Rodrigues, T. The rise of automated curiosity-driven discoveries in chemistry. Chem. Sci. 2023, 14, 10378–10384. [Google Scholar] [CrossRef]

- Xu, Y.; Ge, J.; Ju, C.W. Machine learning in energy chemistry: Introduction, challenges and perspectives. Energy Adv. 2023, 2, 896–921. [Google Scholar] [CrossRef]

- Burkert, V.; Elouadrhiri, L.; Afanasev, A.; Arrington, J.; Contalbrigo, M.; Cosyn, W.; Deshpande, A.; Glazier, D.; Ji, X.; Liuti, S.; et al. Precision studies of QCD in the low energy domain of the EIC. Prog. Part. Nucl. Phys. 2023, 131, 104032. [Google Scholar] [CrossRef]

- Pelkie, B.G.; Pozzo, L.D. The laboratory of Babel: Highlighting community needs for integrated materials data management. Digit. Discov. 2023, 2, 544–556. [Google Scholar] [CrossRef]

- Wang, X.Q.; Chen, P.; Chow, C.L.; Lau, D. Artificial-intelligence-led revolution of construction materials: From molecules to Industry 4.0. Matter 2023, 6, 1831–1859. [Google Scholar] [CrossRef]

- Automate and digitize. Nat. Synth. 2023, 2, 459. [CrossRef]

- Vriza, A.; Chan, H.; Xu, J. Self-Driving Laboratory for Polymer Electronics. Chem. Mater. 2023, 35, 3046–3056. [Google Scholar] [CrossRef]

- Cavasotto, C.N.; Di Filippo, J.I. The Impact of Supervised Learning Methods in Ultralarge High-Throughput Docking. J. Chem. Inf. Model. 2023, 63, 2267–2280. [Google Scholar] [CrossRef]

- Kaur, D.P.; Singh, N.P.; Banerjee, B. A review of platforms for simulating embodied agents in 3D virtual environments. Artif. Intell. Rev. 2023, 56, 3711–3753. [Google Scholar] [CrossRef]

- Espino, M.T.; Tuazon, B.J.; Espera, A.H.; Nocheseda, C.J.C.; Manalang, R.S.; Dizon, J.R.C.; Advincula, R.C. Statistical methods for design and testing of 3D-printed polymers. MRS Commun. 2023, 13, 193–211. [Google Scholar] [CrossRef]

- Pacheco Gutierrez, D.; Folkmann, L.M.; Tribukait, H.; Roch, L.M. How to Accelerate R&D and Optimize Experiment Planning with Machine Learning and Data Science. Chimia 2023, 77, 7–16. [Google Scholar] [CrossRef]

- Leins, D.A.; Haase, S.B.; Eslami, M.; Schrier, J.; Freeman, J.T. Collaborative methods to enhance reproducibility and accelerate discovery. Digit. Discov. 2023, 2, 12–27. [Google Scholar] [CrossRef]

- Peng, X.; Wang, X. Next-generation intelligent laboratories for materials design and manufacturing. MRS Bull. 2023, 48, 179–185. [Google Scholar] [CrossRef]

- Abolhasani, M.; Kumacheva, E. The rise of self-driving labs in chemical and materials sciences. Nat. Synth. 2023, 2, 483–492. [Google Scholar] [CrossRef]

- Kardynska, M.; Kogut, D.; Pacholczyk, M.; Smieja, J. Mathematical modeling of regulatory networks of intracellular processes—Aims and selected methods. Comput. Struct. Biotechnol. J. 2023, 21, 1523–1532. [Google Scholar] [CrossRef] [PubMed]

- Kabir, R.; Sivasubramanian, M.; Hitch, G.; Hakkim, S.; Kainesie, J.; Vinnakota, D.; Mahmud, I.; Hoque Apu, E.; Syed, H.Z.; Parsa, A.D. Chapter 17—“Deep learning” for healthcare: Opportunities, threats, and challenges. In Deep Learning in Personalized Healthcare and Decision Support; Garg, H., Chatterjee, J.M., Eds.; Academic Press: Cambridge, MA, USA, 2023; pp. 225–244. [Google Scholar] [CrossRef]

- Gongora, A.E.; Saygin, V.; Snapp, K.L.; Brown, K.A. Chapter 12—Autonomous experimentation in nanotechnology. In Intelligent Nanotechnology; Zheng, Y., Wu, Z., Eds.; Elsevier: Amsterdam, The Netherlands, 2023; pp. 331–360. [Google Scholar] [CrossRef]

- Lin, D.Z.; Fang, G.; Liao, K. Synthesize in a Smart Way: A Brief Introduction to Intelligence and Automation in Organic Synthesis. In Machine Learning in Molecular Sciences; Challenges and Advances in Computational Chemistry and, Physics; Qu, C., Liu, H., Eds.; Springer International Publishing: Cham, Switzerland, 2023; Volume 36, pp. 227–275. [Google Scholar] [CrossRef]

- Botifoll, M.; Pinto-Huguet, I.; Arbiol, J. Machine learning in electron microscopy for advanced nanocharacterization: Current developments, available tools and future outlook. Nanoscale Horizons 2022, 7, 1427–1477. [Google Scholar] [CrossRef]

- Arrington, J.; Battaglieri, M.; Boehnlein, A.; Bogacz, S.; Brooks, W.; Chudakov, E.; Cloët, I.; Ent, R.; Gao, H.; Grames, J.; et al. Physics with CEBAF at 12 GeV and future opportunities. Prog. Part. Nucl. Phys. 2022, 127, 103985. [Google Scholar] [CrossRef]

- Abdul Khalek, R.; Accardi, A.; Adam, J.; Adamiak, D.; Akers, W.; Albaladejo, M.; Al-bataineh, A.; Alexeev, M.; Ameli, F.; Antonioli, P.; et al. Science Requirements and Detector Concepts for the Electron-Ion Collider: EIC Yellow Report. Nucl. Phys. A 2022, 1026, 122447. [Google Scholar] [CrossRef]

- Munyebvu, N.; Lane, E.; Grisan, E.; Howes, P.D. Accelerating colloidal quantum dot innovation with algorithms and automation. Mater. Adv. 2022, 3, 6950–6967. [Google Scholar] [CrossRef]

- Barends, T.R.M.; Stauch, B.; Cherezov, V.; Schlichting, I. Serial femtosecond crystallography. Nat. Rev. Methods Prim. 2022, 2, 59. [Google Scholar] [CrossRef]

- Jia, S.; Yang, P.; Gao, Z.; Li, Z.; Fang, C.; Gong, J. Recent progress in antisolvent crystallization. CrystEngComm 2022, 24, 3122–3135. [Google Scholar] [CrossRef]

- Serov, N.; Vinogradov, V. Artificial intelligence to bring nanomedicine to life. Adv. Drug Deliv. Rev. 2022, 184, 114194. [Google Scholar] [CrossRef]

- Mayr, F.; Harth, M.; Kouroudis, I.; Rinderle, M.; Gagliardi, A. Machine Learning and Optoelectronic Materials Discovery: A Growing Synergy. J. Phys. Chem. Lett. 2022, 13, 1940–1951. [Google Scholar] [CrossRef]

- Schmitz, C.; Cremanns, K.; Bissadi, G. Chapter 5—Application of machine learning algorithms for use in material chemistry. In Computational and Data-Driven Chemistry Using Artificial Intelligence; Akitsu, T., Ed.; Elsevier: Amsterdam, The Netherlands, 2022; pp. 161–192. [Google Scholar] [CrossRef]

- Oettmeier, C.; Fessel, A.; Döbereiner, H.G. Chapter 12—Integrated biology of Physarum polycephalum: Cell biology, biophysics, and behavior of plasmodial networks. In Myxomycetes, 2nd ed.; Rojas, C., Stephenson, S.L., Eds.; Academic Press: Cambridge, MA, USA, 2022; pp. 453–492. [Google Scholar] [CrossRef]

- Liu, Z.; Zheng, Y. Start of the “Age of Exploration” of AI Governance. In AI Ethics and Governance; Springer Nature Singapore: Singapore, 2022; pp. 111–125. [Google Scholar] [CrossRef]

- Li, B.; Zhong, Y.; Zhang, T.; Hua, N. Transcending the COVID-19 crisis: Business resilience and innovation of the restaurant industry in China. J. Hosp. Tour. Manag. 2021, 49, 44–53. [Google Scholar] [CrossRef]

- Epps, R.W.; Volk, A.A.; Ibrahim, M.Y.; Abolhasani, M. Universal self-driving laboratory for accelerated discovery of materials and molecules. Chem 2021, 7, 2541–2545. [Google Scholar] [CrossRef]

- Stach, E.; DeCost, B.; Kusne, A.G.; Hattrick-Simpers, J.; Brown, K.A.; Reyes, K.G.; Schrier, J.; Billinge, S.; Buonassisi, T.; Foster, I.; et al. Autonomous experimentation systems for materials development: A community perspective. Matter 2021, 4, 2702–2726. [Google Scholar] [CrossRef]

- Lin, A.; Uva, A.; Babi, J.; Tran, H. Materials design for resilience in the biointegration of electronics. MRS Bull. 2021, 46, 860–869. [Google Scholar] [CrossRef]

- Tao, H.; Wu, T.; Aldeghi, M.; Wu, T.C.; Aspuru-Guzik, A.; Kumacheva, E. Nanoparticle synthesis assisted by machine learning. Nat. Rev. Mater. 2021, 6, 701–716. [Google Scholar] [CrossRef]

- Rodríguez-Martínez, X.; Pascual-San-José, E.; Campoy-Quiles, M. Accelerating organic solar cell material’s discovery: High-throughput screening and big data. Energy Environ. Sci. 2021, 14, 3301–3322. [Google Scholar] [CrossRef]

- Pollice, R.; dos Passos Gomes, G.; Aldeghi, M.; Hickman, R.J.; Krenn, M.; Lavigne, C.; Lindner-D’Addario, M.; Nigam, A.; Ser, C.T.; Yao, Z.; et al. Data-Driven Strategies for Accelerated Materials Design. Accounts Chem. Res. 2021, 54, 849–860. [Google Scholar] [CrossRef]

- Bittner, M.I. Rethinking data and metadata in the age of machine intelligence. Patterns 2021, 2, 100208. [Google Scholar] [CrossRef]

- Shi, Y.; Prieto, P.L.; Zepel, T.; Grunert, S.; Hein, J.E. Automated Experimentation Powers Data Science in Chemistry. Accounts Chem. Res. 2021, 54, 546–555. [Google Scholar] [CrossRef]

- Zhuo, Y.; Brgoch, J. Opportunities for Next-Generation Luminescent Materials through Artificial Intelligence. J. Phys. Chem. Lett. 2021, 12, 764–772. [Google Scholar] [CrossRef]

- Hayashi, Y. Time Economy in Total Synthesis. J. Org. Chem. 2021, 86, 1–23. [Google Scholar] [CrossRef] [PubMed]

- Bergemann, D.; Ottaviani, M. Chapter 8—Information markets and nonmarkets. In Handbook of Industrial Organization; Ho, K., Hortaçsu, A., Lizzeri, A., Eds.; Elsevier: Amsterdam, The Netherlands, 2021; Volume 4, pp. 593–672. [Google Scholar] [CrossRef]

- Idrobo-Ávila, E.; Loaiza-Correa, H.; Muñoz-Bolaños, F.; van Noorden, L.; Vargas-Cañas, R. A Proposal for a Data-Driven Approach to the Influence of Music on Heart Dynamics. Front. Cardiovasc. Med. 2021, 8, 699145. [Google Scholar] [CrossRef] [PubMed]

- Hosszu, A.; Kaucsar, T.; Seeliger, E.; Fekete, A. Animal Models of Renal Pathophysiology and Disease. In Preclinical MRI of the Kidney; Methods in Molecular Biology; Pohlmann, A., Niendorf, T., Eds.; Springer: New York, NY, USA, 2021; Volume 2216, pp. 27–44. [Google Scholar] [CrossRef]

- Han, S.; Kashfipour, M.A.; Ramezani, M.; Abolhasani, M. Accelerating gas-liquid chemical reactions in flow. Chem. Commun. 2020, 56, 10593–10606. [Google Scholar] [CrossRef] [PubMed]

- Zhong, J.; Riordon, J.; Wu, T.C.; Edwards, H.; Wheeler, A.R.; Pardee, K.; Aspuru-Guzik, A.; Sinton, D. When robotics met fluidics. Lab Chip 2020, 20, 709–716. [Google Scholar] [CrossRef]

- Siedler, M.; Eichling, S.; Huelsmeyer, M.; Angstenberger, J. Chapter 13: Formulation Development for Biologics Utilizing Lab Automation and In Vivo Performance Models. In Development of Biopharmaceutical Drug-Device Products; AAPS Advances in the Pharmaceutical Sciences Series; Jameel, F., Skoug, J.W., Nesbitt, R.R., Eds.; Springer International Publishing: Cham, Switzerland, 2020; Volume 35, pp. 299–341. [Google Scholar] [CrossRef]

- Cuadrado-Gallego, J.J.; Demchenko, Y. Data Science Body of Knowledge. In The Data Science Framework; Cuadrado-Gallego, J.J., Demchenko, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 43–73. [Google Scholar] [CrossRef]

- Stein, H.S.; Gregoire, J.M. Progress and prospects for accelerating materials science with automated and autonomous workflows. Chem. Sci. 2019, 10, 9640–9649. [Google Scholar] [CrossRef]

- De Almeida, A.F.; Moreira, R.; Rodrigues, T. Synthetic organic chemistry driven by artificial intelligence. Nat. Rev. Chem. 2019, 3, 589–604. [Google Scholar] [CrossRef]

- Riordon, J.; Sovilj, D.; Sanner, S.; Sinton, D.; Young, E.W. Deep Learning with Microfluidics for Biotechnology. Trends Biotechnol. 2019, 37, 310–324. [Google Scholar] [CrossRef]

- Melo, S.M.; Carver, J.C.; Souza, P.S.; Souza, S.R. Empirical research on concurrent software testing: A systematic mapping study. Inf. Softw. Technol. 2019, 105, 226–251. [Google Scholar] [CrossRef]

- Chandra, C.; Grabis, J. Methodology for Supply Chain Configuration. In Supply Chain Configuration; Springer: New York, NY, USA, 2016; pp. 87–107. [Google Scholar] [CrossRef]

- Park, Y.; Kellis, M. Deep learning for regulatory genomics. Nat. Biotechnol. 2015, 33, 825–826. [Google Scholar] [CrossRef]

- Bouquet, F.; Chipeaux, S.; Lang, C.; Marilleau, N.; Nicod, J.M.; Taillandier, P. 1—Introduction to the Agent Approach. In Agent-Based Spatial Simulation with Netlogo; Banos, A., Lang, C., Marilleau, N., Eds.; Elsevier: Oxford, UK, 2015; pp. 1–28. [Google Scholar] [CrossRef]

- Holzinger, A.; Dehmer, M.; Jurisica, I. Knowledge Discovery and interactive Data Mining in Bioinformatics—State-of-the-Art, future challenges and research directions. BMC Bioinform. 2014, 15, I1. [Google Scholar] [CrossRef]

- Klie, S.; Mutwil, M.; Persson, S.; Nikoloski, Z. Inferring gene functions through dissection of relevance networks: Interleaving the intra- and inter-species views. Mol. Biosyst. 2012, 8, 2233–2241. [Google Scholar] [CrossRef] [PubMed]

- Griffiths, G. Steps towards autonomy: From current measurements to underwater vehicles. Methods Oceanogr. 2012, 1–2, 22–48. [Google Scholar] [CrossRef]

- Potyrailo, R.; Rajan, K.; Stoewe, K.; Takeuchi, I.; Chisholm, B.; Lam, H. Combinatorial and High-Throughput Screening of Materials Libraries: Review of State of the Art. ACS Comb. Sci. 2011, 13, 579–633. [Google Scholar] [CrossRef] [PubMed]

- Kleiner, R.E.; Dumelin, C.E.; Liu, D.R. Small-molecule discovery from DNA-encoded chemical libraries. Chem. Soc. Rev. 2011, 40, 5707–5717. [Google Scholar] [CrossRef]

- Sparkes, A.; Aubrey, W.; Byrne, E.; Clare, A.; Khan, M.N.; Liakata, M.; Markham, M.; Rowland, J.; Soldatova, L.N.; Whelan, K.E.; et al. Towards Robot Scientists for autonomous scientific discovery. Autom. Exp. 2010, 2, 1. [Google Scholar] [CrossRef]

- Abstracts from Fuel and Energy Research Publications. Fuel Energy Abstr. 2008, 49, 382–462. [CrossRef]

- Perales, J.C.; Shanks, D.R. Models of covariation-based causal judgment: A review and synthesis. Psychon. Bull. Rev. 2007, 14, 577–596. [Google Scholar] [CrossRef]

- Complex Systems and the Evolution of Economies. In Thinking in Complexity; Springer: Berlin/Heidelberg, Germany, 2007; pp. 311–365. [CrossRef]

- Goldfarb, D. High field ENDOR as a characterization tool for functional sites in microporous materials. Phys. Chem. Chem. Phys. 2006, 8, 2325–2343. [Google Scholar] [CrossRef]

- López-García, I.; Campillo, N.; Arnau-Jerez, I.; Hernández-Córdoba, M. ETAAS determination of gallium in soils using slurry sampling. J. Anal. At. Spectrom. 2004, 19, 935–937. [Google Scholar] [CrossRef]

- Mainzer, K. Complex Systems and the Evolution of Human Society. In Thinking in Complexity; Springer: Berlin/Heidelberg, Germany, 2004; pp. 313–385. [Google Scholar] [CrossRef]

- Stewart, L.; Clark, R.; Behnke, C. High-throughput crystallization and structure determination in drug discovery. Drug Discov. Today 2002, 7, 187–196. [Google Scholar] [CrossRef]

- Fei, Y.; Rendy, B.; Kumar, R.; Dartsi, O.; Sahasrabuddhe, H.P.; McDermott, M.J.; Wang, Z.; Szymanski, N.J.; Walters, L.N.; Milsted, D.; et al. AlabOS: A Python-based reconfigurable workflow management framework for autonomous laboratories. Digit. Discov. 2024, 3, 2275–2288. [Google Scholar] [CrossRef]

- Pham, L.M.; Le, D.T. High-performance simulation of disease outbreaks in growing-finishing pig herds raised by the precision feeding method. Comput. Electron. Agric. 2024, 225, 109335. [Google Scholar] [CrossRef]

- Chen, T.; She, C.; Wang, L.; Duan, S. Memristive leaky integrate-and-fire neuron and learnable straight-through estimator in spiking neural networks. Cogn. Neurodynamics 2024, 18, 3075–3091. [Google Scholar] [CrossRef] [PubMed]

- Sim, M.; Vakili, M.G.; Strieth-Kalthoff, F.; Hao, H.; Hickman, R.J.; Miret, S.; Pablo-García, S.; Aspuru-Guzik, A. ChemOS 2.0: An orchestration architecture for chemical self-driving laboratories. Matter 2024, 7, 2959–2977. [Google Scholar] [CrossRef]

- Seifrid, M.; Strieth-Kalthoff, F.; Haddadnia, M.; Wu, T.C.; Alca, E.; Bodo, L.; Arellano-Rubach, S.; Yoshikawa, N.; Skreta, M.; Keunen, R.; et al. Chemspyd: An open-source python interface for Chemspeed robotic chemistry and materials platforms. Digit. Discov. 2024, 3, 1319–1326. [Google Scholar] [CrossRef]

- Liu, H.; Li, Y.; Xin, H.; Wang, F.; Du, Y.; Wu, J. The Innovation and Practice of a Remote Physical Experiment Method. In Proceedings of the 2024 International Symposium on Artificial Intelligence for Education, New York, NY, USA, 6–8 September 2024; ISAIE ’24. pp. 583–588. [Google Scholar] [CrossRef]

- He, L.; Song, L.; Li, X.; Lin, S.; Ye, G.; Liu, H.; Zhao, X. Study of andrographolide bioactivity against Pseudomonas aeruginosa based on computational methodology and biochemical analysis. Front. Chem. 2024, 12, 1388545. [Google Scholar] [CrossRef]

- Engelmann, C.; Somnath, S. Science Use Case Design Patterns for Autonomous Experiments. In Proceedings of the 28th European Conference on Pattern Languages of Programs, Irsee, Germany, 5–9 July 2024. EuroPLoP ’23. [Google Scholar] [CrossRef]

- Kovtun, V.; Grochla, K.; Kharchenko, V.; Haq, M.A.; Semenov, A. Stochastic forecasting of variable small data as a basis for analyzing an early stage of a cyber epidemic. Sci. Rep. 2023, 13, 22810. [Google Scholar] [CrossRef]

- Guevarra, D.; Kan, K.; Lai, Y.; Jones, R.J.R.; Zhou, L.; Donnelly, P.; Richter, M.; Stein, H.S.; Gregoire, J.M. Orchestrating nimble experiments across interconnected labs. Digit. Discov. 2023, 2, 1806–1812. [Google Scholar] [CrossRef]

- Misko, V.R.; Baraban, L.; Makarov, D.; Huang, T.; Gelin, P.; Mateizel, I.; Wouters, K.; Munck, N.D.; Nori, F.; Malsche, W.D. Selecting active matter according to motility in an acoustofluidic setup: Self-propelled particles and sperm cells. Soft Matter 2023, 19, 8635–8648. [Google Scholar] [CrossRef]

- Brian, K.; Stella, M. Introducing mindset streams to investigate stances towards STEM in high school students and experts. Phys. A Stat. Mech. Its Appl. 2023, 626, 129074. [Google Scholar] [CrossRef]

- Coleman, A.; Eser, J.; Mayotte, E.; Sarazin, F.; Schröder, F.; Soldin, D.; Venters, T.; Aloisio, R.; Alvarez-Muñiz, J.; Alves Batista, R.; et al. Ultra high energy cosmic rays The intersection of the Cosmic and Energy Frontiers. Astropart. Phys. 2023, 149, 102819. [Google Scholar] [CrossRef]

- Junior, W.; Azzolini, F.; Mundim, L.; Porto, A.; Amani, H. Shipyard facility layout optimization through the implementation of a sequential structure of algorithms. Heliyon 2023, 9, e16714. [Google Scholar] [CrossRef] [PubMed]

- Szlachetko, J.; Szade, J.; Beyer, E.; Błachucki, W.; Ciochoń, P.; Dumas, P.; Freindl, K.; Gazdowicz, G.; Glatt, S.; Guła, K.; et al. SOLARIS National Synchrotron Radiation Centre in Krakow, Poland. Eur. Phys. J. Plus 2023, 138, 10. [Google Scholar] [CrossRef]

- Varotsos, C.A.; Krapivin, V.F.; Mkrtchyan, F.A.; Xue, Y. Global Problems of Ecodynamics and Hydrogeochemistry. In Constructive Processing of Microwave and Optical Data for Hydrogeochemical Applications; Springer International Publishing: Cham, Switzerland, 2023; pp. 1–118. [Google Scholar] [CrossRef]

- Furtado, V.R.; Vignando, H.; Luz, C.D.; Steinmacher, I.F.; Kalinowski, M.; OliveiraJr, E. Controlled Experimentation of Software Product Lines. In UML-Based Software Product Line Engineering with SMarty; OliveiraJr, E., Ed.; Springer International Publishing: Cham, Switzerland, 2023; pp. 417–443. [Google Scholar] [CrossRef]

- Zhao, Y.; Boley, M.; Pelentritou, A.; Karoly, P.J.; Freestone, D.R.; Liu, Y.; Muthukumaraswamy, S.; Woods, W.; Liley, D.; Kuhlmann, L. Space-time resolved inference-based neurophysiological process imaging: Application to resting-state alpha rhythm. NeuroImage 2022, 263, 119592. [Google Scholar] [CrossRef]

- Wang, Y.; Laforge, F.; Goun, A.; Rabitz, H. Selective photo-excitation of molecules enabled by stimulated Raman pre-excitation. Phys. Chem. Chem. Phys. 2022, 24, 10062–10068. [Google Scholar] [CrossRef]

- Lucas-Rhimbassen, M. The COST of Joining Legal Forces on a Celestial Body of Law and Beyond: Anticipating Future Clashes between Corpus Juris Spatialis, Lex Mercatoria, Antitrust and Ethics. Space Policy 2022, 59, 101445. [Google Scholar] [CrossRef]

- Nau, J.; Henke, K.; Streitferdt, D. New Ways for Distributed Remote Web Experiments. In Learning with Technologies and Technologies in Learning; Lecture Notes in Networks and Systems; Auer, M.E., Pester, A., May, D., Eds.; Springer International Publishing: Cham, Switzerland, 2022; Volume 456, pp. 257–284. [Google Scholar] [CrossRef]

- Engelmann, C.; Kuchar, O.; Boehm, S.; Brim, M.J.; Naughton, T.; Somnath, S.; Atchley, S.; Lange, J.; Mintz, B.; Arenholz, E. The INTERSECT Open Federated Architecture for the Laboratory of the Future. In Accelerating Science and Engineering Discoveries Through Integrated Research Infrastructure for Experiment, Big Data, Modeling and Simulation; Communications in Computer and Information Science; Doug, K., Al, G., Pophale, S., Liu, H., Parete-Koon, S., Eds.; Springer Nature Switzerland: Cham, Switzerland, 2022; Volume 1690, pp. 173–190. [Google Scholar] [CrossRef]

- Tran, N.N.; Gelonch, M.E.; Liang, S.; Xiao, Z.; Sarafraz, M.M.; Tišma, M.; Federsel, H.J.; Ley, S.V.; Hessel, V. Enzymatic pretreatment of recycled grease trap waste in batch and continuous-flow reactors for biodiesel production. Chem. Eng. J. 2021, 426, 131703. [Google Scholar] [CrossRef]

- Pasquinelli, M.; Joler, V. The Nooscope manifested: AI as instrument of knowledge extractivism. AI Soc. 2021, 36, 1263–1280. [Google Scholar] [CrossRef]

- Gupta, N.; Kini, P.; Gupta, S.; Darbari, H.; Joshi, N.; Khosravy, M. Six Sigma based modeling of the hydraulic oil heating under low load operation. Eng. Sci. Technol. Int. J. 2021, 24, 11–21. [Google Scholar] [CrossRef]

- Demchenko, Y.; Maijer, M.; Comminiello, L. Data Scientist Professional Revisited: Competences Definition and Assessment, Curriculum and Education Path Design. In Proceedings of the 2021 4th International Conference on Big Data and Education, ICBDE ’21, London, UK, 3–5 February 2021; pp. 52–62. [Google Scholar] [CrossRef]

- Wang, R.; Luo, Y.; Jia, H.; Ferrell, J.R.; Ben, H. Development of quantitative 13C NMR characterization and simulation of C, H, and O content for pyrolysis oils based on 13C NMR analysis. RSC Adv. 2020, 10, 25918–25928. [Google Scholar] [CrossRef]

- Brings, J.; Daun, M.; Weyer, T.; Pohl, K. Analyzing goal variability in cyber-physical system networks. SIGAPP Appl. Comput. Rev. 2020, 20, 19–35. [Google Scholar] [CrossRef]

- Faqeh, R.; Fetzer, C.; Hermanns, H.; Hoffmann, J.; Klauck, M.; Köhl, M.A.; Steinmetz, M.; Weidenbach, C. Towards Dynamic Dependable Systems Through Evidence-Based Continuous Certification. In Leveraging Applications of Formal Methods, Verification and Validation: Engineering Principles; Lecture Notes in Computer Science; Margaria, T., Steffen, B., Eds.; Springer International Publishing: Cham, Switzerland, 2020; Volume 12477, pp. 416–439. [Google Scholar] [CrossRef]

- Daviran, M.; Longwill, S.M.; Casella, J.F.; Schultz, K.M. Rheological characterization of dynamic remodeling of the pericellular region by human mesenchymal stem cell-secreted enzymes in well-defined synthetic hydrogel scaffolds. Soft Matter 2018, 14, 3078–3089. [Google Scholar] [CrossRef] [PubMed]

- Wilderen, L.J.G.W.v.; Neumann, C.; Rodrigues-Correia, A.; Kern-Michler, D.; Mielke, N.; Reinfelds, M.; Heckel, A.; Bredenbeck, J. Picosecond activation of the DEACM photocage unravelled by VIS-pump-IR-probe spectroscopy. Phys. Chem. Chem. Phys. 2017, 19, 6487–6496. [Google Scholar] [CrossRef]

- Sarraf, M.; Razak, B.A.; Dabbagh, A.; Nasiri-Tabrizi, B.; Kasim, N.H.A.; Basirun, W.J. Optimizing PVD conditions for electrochemical anodization growth of well-adherent Ta2O5 nanotubes on Ti-6Al-4V alloy. RSC Adv. 2016, 6, 78999–79015. [Google Scholar] [CrossRef]

- Cink, R.B.; Song, Y. Appropriating scientific vocabulary in chemistry laboratories: A multiple case study of four community college students with diverse ethno-linguistic backgrounds. Chem. Educ. Res. Pract. 2016, 17, 604–617. [Google Scholar] [CrossRef]

- De Giacomo, G.; Gerevini, A.E.; Patrizi, F.; Saetti, A.; Sardina, S. Agent planning programs. Artif. Intell. 2016, 231, 64–106. [Google Scholar] [CrossRef]

- Zangiabady, M.; Aguilar-Fuster, C.; Rubio-Loyola, J. A Virtual Network Migration Approach and Analysis for Enhanced Online Virtual Network Embedding. In Proceedings of the 12th Conference on International Conference on Network and Service Management, CNSM 2016, Montreal, QC, Canada, 31 October–4 November 2016; pp. 324–329. [Google Scholar]

- Hohaus, T.; Gensch, I.; Kimmel, J.; Worsnop, D.R.; Kiendler-Scharr, A. Experimental determination of the partitioning coefficient of β-pinene oxidation products in SOAs. Phys. Chem. Chem. Phys. 2015, 17, 14796–14804. [Google Scholar] [CrossRef]

- Wu, Z.; Sekar, R.; Hsieh, S.j. Study of factors impacting remote diagnosis performance on a PLC based automated system. J. Manuf. Syst. 2014, 33, 589–603. [Google Scholar] [CrossRef]

- Wagner, S.; Kronberger, G.; Beham, A.; Kommenda, M.; Scheibenpflug, A.; Pitzer, E.; Vonolfen, S.; Kofler, M.; Winkler, S.; Dorfer, V.; et al. Architecture and Design of the HeuristicLab Optimization Environment. In Advanced Methods and Applications in Computational Intelligence; Topics in Intelligent Engineering and Informatics; Klempous, R., Nikodem, J., Jacak, W., Chaczko, Z., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2014; Volume 6, pp. 197–261. [Google Scholar] [CrossRef]

- Gašpar, V.; Madarász, L.; Andoga, R. Scientific Research Information System as a Solution for Assessing the Efficiency of Applied Research. In Advances in Soft Computing, Intelligent Robotics and Control; Topics in Intelligent Engineering and Informatics; Fodor, J., Fullér, R., Eds.; Springer International Publishing: Cham, Switzerland, 2014; Volume 8, pp. 273–293. [Google Scholar] [CrossRef]

- Alemi, O.; Polajnar, D.; Polajnar, J.; Mumbaiwala, D. A simulation framework for design-oriented studies of interaction models in agent teamwork. In Proceedings of the 2014 Symposium on Agent Directed Simulation, ADS ’14, Tampa, FL, USA, 13–16 April 2014. [Google Scholar]

- Klarborg, B.; Lahrmann, H.; NielsAgerholm, n.; Tradisauskas, N.; Harms, L. Intelligent speed adaptation as an assistive device for drivers with acquired brain injury: A single-case field experiment. Accid. Anal. Prev. 2012, 48, 57–62. [Google Scholar] [CrossRef]

- Barišić, A.; Monteiro, P.; Amaral, V.; Goulão, M.; Monteiro, M. Patterns for evaluating usability of domain-specific languages. In Proceedings of the 19th Conference on Pattern Languages of Programs, PLoP ’12, Tucson, AZ, USA, 19–26 October 2012. [Google Scholar]

- Soldatova, L.N.; Rzhetsky, A. Representation of research hypotheses. J. Biomed. Semant. 2011, 2, S9. [Google Scholar] [CrossRef]

- Duff, A.; Sanchez Fibla, M.; Verschure, P.F. A biologically based model for the integration of sensory-motor contingencies in rules and plans: A prefrontal cortex based extension of the Distributed Adaptive Control architecture. Presence Brian, Virtual Real. Robot. 2011, 85, 289–304. [Google Scholar] [CrossRef] [PubMed]

- Wombacher, A. A-Posteriori Detection of Sensor Infrastructure Errors in Correlated Sensor Data and Business Workflows. In Business Process Management; Lecture Notes in Computer Science; Rinderle-Ma, S., Toumani, F., Wolf, K., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6896, pp. 329–344. [Google Scholar] [CrossRef]

- He, L.; Friedman, A.M.; Bailey-Kellogg, C. Algorithms for optimizing cross-overs in DNA shuffling. In Proceedings of the 2nd ACM Conference on Bioinformatics, Computational Biology and Biomedicine, Chicago, IL, USA, 1–3 August 2011; BCB ’11. pp. 143–152. [Google Scholar] [CrossRef]

- Apgar, J.F.; Witmer, D.K.; White, F.M.; Tidor, B. Sloppy models, parameter uncertainty, and the role of experimental design. Mol. Biosyst. 2010, 6, 1890–1900. [Google Scholar] [CrossRef] [PubMed]

- Sparkes, A.; King, R.D.; Aubrey, W.; Benway, M.; Byrne, E.; Clare, A.; Liakata, M.; Markham, M.; Whelan, K.E.; Young, M.; et al. An Integrated Laboratory Robotic System for Autonomous Discovery of Gene Function. SLAS Technol. 2010, 15, 33–40. [Google Scholar] [CrossRef]

- Barnaud, C.; Bousquet, F.; Trebuil, G. Multi-agent simulations to explore rules for rural credit in a highland farming community of Northern Thailand. Ecol. Econ. 2008, 66, 615–627. [Google Scholar] [CrossRef]

- Yilmaz, L.; Davis, P.; Fishwick, P.A.; Hu, X.; Miller, J.A.; Hybinette, M.; Ören, T.I.; Reynolds, P.; Sarjoughian, H.; Tolk, A. Sustaining the growth and vitality of the M&S discipline. In Proceedings of the 40th Conference on Winter Simulation, Winter Simulation Conference, WSC ’08, Miami, FL, USA, 7–10 December 2008; pp. 677–688. [Google Scholar]

- King, R.D.; Karwath, A.; Clare, A.; Dehaspe, L. Logic and the Automatic Acquisition of Scientific Knowledge: An Application to Functional Genomics. In Computational Discovery of Scientific Knowledge; Lecture Notes in Computer Science; Džeroski, S., Todorovski, L., Eds.; Springer: Berlin/Heidelberg, Germany, 2007; Volume 4660, pp. 273–289. ISSN 0302-9743, 1611-3349. [Google Scholar] [CrossRef]

- Brandes, T.; Schwamborn, H.; Gerndt, M.; Jeitner, J.; Kereku, E.; Schulz, M.; Brunst, H.; Nagel, W.; Neumann, R.; Müller-Pfefferkorn, R.; et al. Monitoring cache behavior on parallel SMP architectures and related programming tools. Future Gener. Comput. Syst. 2005, 21, 1298–1311. [Google Scholar] [CrossRef]

- Whelan, K.E.; King, R.D. Intelligent software for laboratory automation. Trends Biotechnol. 2004, 22, 440–445. [Google Scholar] [CrossRef]

- Kell, D.B.; Mendes, P. Snapshots of Systems. In Technological and Medical Implications of Metabolic Control Analysis; Cornish-Bowden, A., Cárdenas, M.L., Eds.; Springer: Dordrecht, The Netherlands, 2000; pp. 3–25. [Google Scholar] [CrossRef]

- Ma, X.; Huo, Z.; Lu, J.; Wong, Y.D. Deep Forest with SHapley additive explanations on detailed risky driving behavior data for freeway crash risk prediction. Eng. Appl. Artif. Intell. 2025, 141, 109787. [Google Scholar] [CrossRef]

- Li, J.; Chen, H.; Wang, X.B.; Yang, Z.X. A comprehensive gear eccentricity dataset with multiple fault severity levels: Description, characteristics analysis, and fault diagnosis applications. Mech. Syst. Signal Process. 2025, 224, 112068. [Google Scholar] [CrossRef]

- Subasi, A.; Qaisar, S.M. Chapter 16—EEG-based emotion recognition using AR burg and ensemble machine learning models. In Artificial Intelligence and Multimodal Signal Processing in Human-Machine Interaction; Subasi, A., Qaisar, S.M., Nisar, H., Eds.; Academic Press: Cambridge, MA, USA, 2025; pp. 303–329. [Google Scholar] [CrossRef]

- Liu, D.; Zhang, B.; Jiang, Y.; An, P.; Chen, Z. Deep learning driven inverse solving method for neutron diffusion equations and three-dimensional core power reconstruction technology. Nucl. Eng. Des. 2024, 429, 113590. [Google Scholar] [CrossRef]

- Huang, J.; Huang, S.; Moghaddam, S.K.; Lu, Y.; Wang, G.; Yan, Y.; Shi, X. Deep Reinforcement Learning-Based Dynamic Reconfiguration Planning for Digital Twin-Driven Smart Manufacturing Systems with Reconfigurable Machine Tools. IEEE Trans. Ind. Inform. 2024, 20, 13135–13146. [Google Scholar] [CrossRef]

- Anthony, B. Decentralized AIoT based intelligence for sustainable energy prosumption in local energy communities: A citizen-centric prosumer approach. Cities 2024, 152, 105198. [Google Scholar] [CrossRef]

- Chang, W.; D’Ascenzo, N.; Antonecchia, E.; Li, B.; Yang, J.; Mu, D.; Li, A.; Xie, Q. Deep denoiser prior driven relaxed iterated Tikhonov method for low-count PET image restoration. Phys. Med. Biol. 2024, 69. [Google Scholar] [CrossRef] [PubMed]

- Widanage, C.; Mohotti, D.; Lee, C.; Wijesooriya, K.; Meddage, D. Use of explainable machine learning models in blast load prediction. Eng. Struct. 2024, 312, 118271. [Google Scholar] [CrossRef]

- Schaefer, M.; Reichl, S.; ter Horst, R.; Nicolas, A.M.; Krausgruber, T.; Piras, F.; Stepper, P.; Bock, C.; Samwald, M. GPT-4 as a biomedical simulator. Comput. Biol. Med. 2024, 178, 108796. [Google Scholar] [CrossRef]

- Naseer, F.; Khan, M.N.; Tahir, M.; Addas, A.; Aejaz, S.M.H. Integrating deep learning techniques for personalized learning pathways in higher education. Heliyon 2024, 10, e32628. [Google Scholar] [CrossRef]

- Amuzuga, P.; Bennebach, M.; Iwaniack, J.L. Model reduction for fatigue life estimation of a welded joint driven by machine learning. Heliyon 2024, 10, e30171. [Google Scholar] [CrossRef]

- Wojnar, T.; Hryszko, J.; Roman, A. Mi-Go: Tool which uses YouTube as data source for evaluating general-purpose speech recognition machine learning models. EURASIP J. Audio Speech Music Process. 2024, 2024, 24. [Google Scholar] [CrossRef]

- Jiang, W.; Ding, L. Unsafe hoisting behavior recognition for tower crane based on transfer learning. Autom. Constr. 2024, 160, 105299. [Google Scholar] [CrossRef]

- Guo, Y.; Yu, X.; Wang, Y.; Huang, K. Health prognostics of lithium-ion batteries based on universal voltage range features mining and adaptive multi-Gaussian process regression with Harris Hawks optimization algorithm. Reliab. Eng. Syst. Saf. 2024, 244, 109913. [Google Scholar] [CrossRef]

- Guan, S.; Wang, Y.; Liu, L.; Gao, J.; Xu, Z.; Kan, S. Ultra-short-term wind power prediction method based on FTI-VACA-XGB model. Expert Syst. Appl. 2024, 235, 121185. [Google Scholar] [CrossRef]

- Hu, X.; Liu, J.; Li, H.; Liu, H.; Xue, X. An effective transformer based on dual attention fusion for underwater image enhancement. PeerJ Comput. Sci. 2024, 10, e1783. [Google Scholar] [CrossRef] [PubMed]

- Fu, J.; Fang, R. Thermal Fault Warning of Turbine Generators Based on Cluster Heatmap CNN-GRU-Attention Method. In Proceedings of the 9th International Symposium on Hydrogen Energy, Renewable Energy and Materials; Springer Proceedings in Physics. Kolhe, M.L., Liao, Q., Eds.; Springer Nature Singapore: Singapore, 2024; Volume 399, pp. 125–134. [Google Scholar] [CrossRef]

- Liu, D.; Wang, S.; Fan, Y.; Liang, Y.; Fernandez, C.; Stroe, D.I. State of energy estimation for lithium-ion batteries using adaptive fuzzy control and forgetting factor recursive least squares combined with AEKF considering temperature. J. Energy Storage 2023, 70, 108040. [Google Scholar] [CrossRef]

- Okuda, K.; Nakajima, K.; Kitamura, C.; Ljungberg, M.; Hosoya, T.; Kirihara, Y.; Hashimoto, M. Machine learning-based prediction of conversion coefficients for I-123 metaiodobenzylguanidine heart-to-mediastinum ratio. J. Nucl. Cardiol. Off. Publ. Am. Soc. Nucl. Cardiol. 2023, 30, 1630–1641. [Google Scholar] [CrossRef]

- Muckley, E.S.; Vasudevan, R.; Sumpter, B.G.; Advincula, R.C.; Ivanov, I.N. Machine Intelligence-Centered System for Automated Characterization of Functional Materials and Interfaces. ACS Appl. Mater. Interfaces 2023, 15, 2329–2340. [Google Scholar] [CrossRef]

- Foroughi, P.; Brockners, F.; Rougier, J.L. ADT: AI-Driven network Telemetry processing on routers. Comput. Netw. 2023, 220, 109474. [Google Scholar] [CrossRef]

- Bertin, P.; Rector-Brooks, J.; Sharma, D.; Gaudelet, T.; Anighoro, A.; Gross, T.; Martínez-Peña, F.; Tang, E.L.; Suraj, M.; Regep, C.; et al. RECOVER identifies synergistic drug combinations in vitro through sequential model optimization. Cell Rep. Methods 2023, 3, 100599. [Google Scholar] [CrossRef]

- Ghasemi, M.; Hasani Zonoozi, M.; Rezania, N.; Saadatpour, M. Predicting coagulation-flocculation process for turbidity removal from water using graphene oxide: A comparative study on ANN, SVR, ANFIS, and RSM models. Environ. Sci. Pollut. Res. Int. 2022, 29, 72839–72852. [Google Scholar] [CrossRef]

- Cao, L.; Su, J.; Wang, Y.; Cao, Y.; Siang, L.C.; Li, J.; Saddler, J.N.; Gopaluni, B. Causal Discovery Based on Observational Data and Process Knowledge in Industrial Processes. Ind. Eng. Chem. Res. 2022, 61, 14272–14283. [Google Scholar] [CrossRef]

- Novitski, P.; Cohen, C.M.; Karasik, A.; Shalev, V.; Hodik, G.; Moskovitch, R. All-cause mortality prediction in T2D patients with iTirps. Artif. Intell. Med. 2022, 130, 102325. [Google Scholar] [CrossRef]

- Guevarra, D.; Zhou, L.; Richter, M.H.; Shinde, A.; Chen, D.; Gomes, C.P.; Gregoire, J.M. Materials structure-property factorization for identification of synergistic phase interactions in complex solar fuels photoanodes. NPJ Comput. Mater. 2022, 8, 57. [Google Scholar] [CrossRef]

- Zhang, Y.; Xiao, B.; Al-Hussein, M.; Li, X. Prediction of human restorative experience for human-centered residential architecture design: A non-immersive VR—DOE-based machine learning method. Autom. Constr. 2022, 136, 104189. [Google Scholar] [CrossRef]

- Seifrid, M.; Hickman, R.J.; Aguilar-Granda, A.; Lavigne, C.; Vestfrid, J.; Wu, T.C.; Gaudin, T.; Hopkins, E.J.; Aspuru-Guzik, A. Routescore: Punching the Ticket to More Efficient Materials Development. ACS Cent. Sci. 2022, 8, 122–131. [Google Scholar] [CrossRef] [PubMed]

- Lang, S.; Reggelin, T.; Schmidt, J.; Müller, M.; Nahhas, A. NeuroEvolution of augmenting topologies for solving a two-stage hybrid flow shop scheduling problem: A comparison of different solution strategies. Expert Syst. Appl. 2021, 172, 114666. [Google Scholar] [CrossRef]

- Gu, P.; Lan, X.; Li, S. Object Detection Combining CNN and Adaptive Color Prior Features. Sensors 2021, 21, 2796. [Google Scholar] [CrossRef]

- Lucchese, L.V.; de Oliveira, G.G.; Pedrollo, O.C. Investigation of the influence of nonoccurrence sampling on landslide susceptibility assessment using Artificial Neural Networks. CATENA 2021, 198, 105067. [Google Scholar] [CrossRef]

- Higgins, K.; Valleti, S.M.; Ziatdinov, M.; Kalinin, S.V.; Ahmadi, M. Chemical Robotics Enabled Exploration of Stability in Multicomponent Lead Halide Perovskites via Machine Learning. ACS Energy Lett. 2020, 5, 3426–3436. [Google Scholar] [CrossRef]

- Rohr, B.; Stein, H.S.; Guevarra, D.; Wang, Y.; Haber, J.A.; Aykol, M.; Suram, S.K.; Gregoire, J.M. Benchmarking the acceleration of materials discovery by sequential learning. Chem. Sci. 2020, 11, 2696–2706. [Google Scholar] [CrossRef]

- Ghnatios, C.; Hage, R.M.; Hage, I. An efficient Tabu-search optimized regression for data-driven modeling. Data-Based Eng. Sci. Technol. 2019, 347, 806–816. [Google Scholar] [CrossRef]

- Feng, N.; Wang, H.; Hu, F.; Gouda, M.A.; Gong, J.; Wang, F. A fiber-reinforced human-like soft robotic manipulator based on sEMG force estimation. Eng. Appl. Artif. Intell. 2019, 86, 56–67. [Google Scholar] [CrossRef]

- Hoef, S.V.D.; Mårtensson, J.; Dimarogonas, D.V.; Johansson, K.H. A Predictive Framework for Dynamic Heavy-Duty Vehicle Platoon Coordination. ACM Trans. Cyber-Phys. Syst. 2019, 4. [Google Scholar] [CrossRef]

- Chaudhuri, T.; Soh, Y.C.; Li, H.; Xie, L. A feedforward neural network based indoor-climate control framework for thermal comfort and energy saving in buildings. Appl. Energy 2019, 248, 44–53. [Google Scholar] [CrossRef]

- Rodrigues, A.; Rodrigues, G.N.; Knauss, A.; Ali, R.; Andrade, H. Enhancing context specifications for dependable adaptive systems: A data mining approach. Inf. Softw. Technol. 2019, 112, 115–131. [Google Scholar] [CrossRef]

- Oliveira, P.; Santos Neto, P.; Britto, R.; Rabêlo, R.; Braga, R.; Souza, M. CIaaS—computational intelligence as a service with Athena. Comput. Lang. Syst. Struct. 2018, 54, 95–118. [Google Scholar] [CrossRef]

- Navarro, L.C.; Navarro, A.K.; Rocha, A.; Dahab, R. Connecting the dots: Toward accountable machine-learning printer attribution methods. J. Vis. Commun. Image Represent. 2018, 53, 257–272. [Google Scholar] [CrossRef]

- Fagerholm, F.; Sanchez Guinea, A.; Mäenpää, H.; Münch, J. The RIGHT model for Continuous Experimentation. J. Syst. Softw. 2017, 123, 292–305. [Google Scholar] [CrossRef]

- Taghavifar, H.; Mardani, A. On the modeling of energy efficiency indices of agricultural tractor driving wheels applying adaptive neuro-fuzzy inference system. J. Terramechanics 2014, 56, 37–47. [Google Scholar] [CrossRef]

- Kowalski, K.C.; He, B.D.; Srinivasan, L. Dynamic analysis of naive adaptive brain-machine interfaces. Neural Comput. 2013, 25, 2373–2420. [Google Scholar] [CrossRef]

- Vidal, S.A.; Marcos, C.A. Toward automated refactoring of crosscutting concerns into aspects. J. Syst. Softw. 2013, 86, 1482–1497. [Google Scholar] [CrossRef]

- Olejnik-Krugły, A.; Różewski, P.; Zaikin, O.; Sienkiewicz, P. Approach for color management in printing process in open manufacturing systems. 7th Ifac Conf. Manuf. Model. Manag. Control 2013, 46, 2104–2109. [Google Scholar] [CrossRef]

- Westermann, D.; Happe, J.; Krebs, R.; Farahbod, R. Automated inference of goal-oriented performance prediction functions. In Proceedings of the 27th IEEE/ACM International Conference on Automated Software Engineering, ASE ’12, Essen, Germany, 3–7 September 2012; pp. 190–199. [Google Scholar] [CrossRef]

- Krzhizhanovskaya, V.; Shirshov, G.; Melnikova, N.; Belleman, R.; Rusadi, F.; Broekhuijsen, B.; Gouldby, B.; Lhomme, J.; Balis, B.; Bubak, M.; et al. Flood early warning system: Design, implementation and computational modules. Procedia Comput. Sci. 2011, 4, 106–115. [Google Scholar] [CrossRef]

- Seeger, M.W. Bayesian Inference and Optimal Design for the Sparse Linear Model. J. Mach. Learn. Res. 2008, 9, 759–813. [Google Scholar]

- Kubera, Y.; Mathieu, P.; Picault, S. Interaction Selection Ambiguities in Multi-agent Systems. In Proceedings of the 2008 IEEE/WIC/ACM International Conference on Web Intelligence and Intelligent Agent Technology—Volume 02, WI-IAT ’08, Washington, DC, USA, 9–12 December 2008; pp. 75–78. [Google Scholar] [CrossRef]

- Ince, H.; Trafalis, T.B. A hybrid model for exchange rate prediction. Decis. Support Syst. 2006, 42, 1054–1062. [Google Scholar] [CrossRef]

- Awate, S.P.; Tasdizen, T.; Foster, N.; Whitaker, R.T. Adaptive Markov modeling for mutual-information-based, unsupervised MRI brain-tissue classification. Med. Image Anal. 2006, 10, 726–739. [Google Scholar] [CrossRef] [PubMed]

- Cheng, S.; Sabes, P.N. Modeling sensorimotor learning with linear dynamical systems. Neural Comput. 2006, 18, 760–793. [Google Scholar] [CrossRef] [PubMed]

- Terada, J.; Vo, H.; Joslin, D. Combining genetic algorithms with squeaky-wheel optimization. In Proceedings of the 8th Annual Conference on Genetic and Evolutionary Computation, GECCO ’06, Seattle, WA, USA, 8–12 July 2006; pp. 1329–1336. [Google Scholar] [CrossRef]

- Joslin, D.; Poole, W. Agent-based simulation for software project planning. In Proceedings of the 37th Conference on Winter Simulation, Winter Simulation Conference, WSC ’05, Orlando, FL, USA, 4–7 December 2005; pp. 1059–1066. [Google Scholar]

- Wai, R.J.; Lin, C.M.; Peng, Y.F. Adaptive hybrid control for linear piezoelectric ceramic motor drive using diagonal recurrent CMAC network. IEEE Trans. Neural Netw. 2004, 15, 1491–1506. [Google Scholar] [CrossRef]

- Adams, F.; McDannald, A.; Takeuchi, I.; Kusne, A.G. Human-in-the-loop for Bayesian autonomous materials phase mapping. Matter 2024, 7, 697–709. [Google Scholar] [CrossRef]

- Aldeghi, M.; Häse, F.; Hickman, R.J.; Tamblyn, I.; Aspuru-Guzik, A. Golem: An algorithm for robust experiment and process optimization. Chem. Sci. 2021, 12, 14792–14807. [Google Scholar] [CrossRef]

- Hickman, R.J.; Aldeghi, M.; Häse, F.; Aspuru-Guzik, A. Bayesian optimization with known experimental and design constraints for chemistry applications. Digit. Discov. 2022, 1, 732–744. [Google Scholar] [CrossRef]

- Schilter, O.; Gutierrez, D.P.; Folkmann, L.M.; Castrogiovanni, A.; García-Durán, A.; Zipoli, F.; Roch, L.M.; Laino, T. Combining Bayesian optimization and automation to simultaneously optimize reaction conditions and routes. Chem. Sci. 2024, 15, 7732–7741. [Google Scholar] [CrossRef]

- Epps, R.W.; Volk, A.A.; Reyes, K.G.; Abolhasani, M. Accelerated AI development for autonomous materials synthesis in flow. Chem. Sci. 2021, 12, 6025–6036. [Google Scholar] [CrossRef]

- Plommer, H.; Betinol, I.O.; Dupree, T.; Roggen, M.; Reid, J.P. Extraction yield prediction for the large-scale recovery of cannabinoids. Digit. Discov. 2024, 3, 155–162. [Google Scholar] [CrossRef]

- Eyke, N.S.; Green, W.H.; Jensen, K.F. Iterative experimental design based on active machine learning reduces the experimental burden associated with reaction screening. React. Chem. Eng. 2020, 5, 1963–1972. [Google Scholar] [CrossRef]

- Waelder, R.; Park, C.; Sloan, A.; Carpena-Núñez, J.; Yoho, J.; Gorsse, S.; Rao, R.; Maruyama, B. Improved understanding of carbon nanotube growth via autonomous jump regression targeting of catalyst activity. Carbon 2024, 228, 119356. [Google Scholar] [CrossRef]

- Yoon, J.W.; Kumar, A.; Kumar, P.; Hippalgaonkar, K.; Senthilnath, J.; Chellappan, V. Explainable machine learning to enable high-throughput electrical conductivity optimization and discovery of doped conjugated polymers. Knowl.-Based Syst. 2024, 295, 111812. [Google Scholar] [CrossRef]

- Fu, W.; Chien, C.F.; Chen, C.H. Advanced quality control for probe precision forming to empower virtual vertical integration for semiconductor manufacturing. Comput. Ind. Eng. 2023, 183, 109461. [Google Scholar] [CrossRef]

- Lai, N.S.; Tew, Y.S.; Zhong, X.; Yin, J.; Li, J.; Yan, B.; Wang, X. Artificial Intelligence (AI) Workflow for Catalyst Design and Optimization. Ind. Eng. Chem. Res. 2023, 62, 17835–17848. [Google Scholar] [CrossRef]

- Yonge, A.; Gusmão, G.S.; Fushimi, R.; Medford, A.J. Model-Based Design of Experiments for Temporal Analysis of Products (TAP): A Simulated Case Study in Oxidative Propane Dehydrogenation. Ind. Eng. Chem. Res. 2024, 63, 4756–4770. [Google Scholar] [CrossRef]

- Almeida, A.F.; Branco, S.; Carvalho, L.C.R.; Dias, A.R.M.; Leitão, E.P.T.; Loureiro, R.M.S.; Lucas, S.D.; Mendonça, R.F.; Oliveira, R.; Rocha, I.L.D.; et al. Benchmarking Strategies of Sustainable Process Chemistry Development: Human-Based, Machine Learning, and Quantum Mechanics. Org. Process Res. Dev. 2024. [Google Scholar] [CrossRef]

- Almeida, A.F.; Ataíde, F.A.P.; Loureiro, R.M.S.; Moreira, R.; Rodrigues, T. Augmenting Adaptive Machine Learning with Kinetic Modeling for Reaction Optimization. J. Org. Chem. 2021, 86, 14192–14198. [Google Scholar] [CrossRef]

- Bosten, E.; Pardon, M.; Chen, K.; Koppen, V.; Van Herck, G.; Hellings, M.; Cabooter, D. Assisted Active Learning for Model-Based Method Development in Liquid Chromatography. Anal. Chem. 2024, 96, 13699–13709. [Google Scholar] [CrossRef]

- Liang, W.; Zheng, S.; Shu, Y.; Huang, J. Machine Learning Optimizing Enzyme/ZIF Biocomposites for Enhanced Encapsulation Efficiency and Bioactivity. JACS Au 2024, 4, 3170–3182. [Google Scholar] [CrossRef] [PubMed]

- Cruse, K.; Baibakova, V.; Abdelsamie, M.; Hong, K.; Bartel, C.J.; Trewartha, A.; Jain, A.; Sutter-Fella, C.M.; Ceder, G. Text Mining the Literature to Inform Experiments and Rationalize Impurity Phase Formation for BiFeO3. Chem. Mater. 2024, 36, 772–785. [Google Scholar] [CrossRef] [PubMed]

- Dama, A.C.; Kim, K.S.; Leyva, D.M.; Lunkes, A.P.; Schmid, N.S.; Jijakli, K.; Jensen, P.A. BacterAI maps microbial metabolism without prior knowledge. Nat. Microbiol. 2023, 8, 1018–1025. [Google Scholar] [CrossRef] [PubMed]

- Suvarna, M.; Zou, T.; Chong, S.H.; Ge, Y.; Martín, A.J.; Pérez-Ramírez, J. Active learning streamlines development of high performance catalysts for higher alcohol synthesis. Nat. Commun. 2024, 15, 5844. [Google Scholar] [CrossRef]

- Chen, J.; Hou, J.; Wong, K.C. Categorical Matrix Completion With Active Learning for High-Throughput Screening. IEEE/ACM Trans. Comput. Biol. Bioinform. 2020, 18, 2261–2270. [Google Scholar] [CrossRef]

- Wang, Y.; Yao, Q.; Kwok, J.T.; Ni, L.M. Generalizing from a few examples: A survey on few-shot learning. ACM Comput. Surv. (Csur) 2020, 53, 1–34. [Google Scholar] [CrossRef]

- Biswas, A.; Md Abdullah Al, N.; Imran, A.; Sejuty, A.T.; Fairooz, F.; Puppala, S.; Talukder, S. Generative adversarial networks for data augmentation. In Data Driven Approaches on Medical Imaging; Springer: Berlin/Heidelberg, Germany, 2023; pp. 159–177. [Google Scholar]

- Schumann, R.; Rehbein, I. Active learning via membership query synthesis for semi-supervised sentence classification. In Proceedings of the 23rd conference on computational natural language learning (CoNLL), Hong Kong, China, 3–4 November 2019; pp. 472–481. [Google Scholar]

| ID | Criterion | # Studies Excluded |

|---|---|---|

| FC1 | No concrete solution | 104 |

| FC2 | No AI technique | 54 |

| FC3 | Does not focus on experimental design | 55 |

| FC4 | Selection of another version | 0 |

| ID | Criterion | # Studies Excluded |

|---|---|---|

| FC1 | No concrete solution | [2,3,4,5,6,7,8,9,10,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105,106] |

| FC2 | No AI technique | [107,108,109,110,111,112,113,114,115,116,117,118,119,120,121,122,123,124,125,126,127,128,129,130,131,132,133,134,135,136,137,138,139,140,141,142,143,144,145,146,147,148,149,150,151,152,153,154,155,156,157,158,159,160] |

| FC3 | Does not focus on experimental design | [161,162,163,164,165,166,167,168,169,170,171,172,173,174,175,176,177,178,179,180,181,182,183,184,185,186,187,188,189,190,191,192,193,194,195,196,197,198,199,200,201,202,203,204,205,206,207,208,209,210,211,212,213,214,215] |

| FC4 | Selection of another version |

| Authors | Domain | Application | Technique | Task |

|---|---|---|---|---|

| Aldeghi et al. [217] | General framework | Chemistry | Tree-based regression | Assistance in robustness estimation |

| Hickman et al. [218] | General framework | Chemistry | BO | Optimization of experimental constraints |

| Schilter et al. [219] | Chemistry | Optimization of different terminal alkynes’ reaction routes | BO | Selecting experiments and optimizing experimental design |

| Sadeghi et al. [11] | Materials science | Nano-manufacturing of lead-free metal halide perovskite nanocrystals | BO | Selecting experiments and optimizing experimental design |

| Epps et al. [220] | Materials science | Microfluidic material synthesis | Single-period RL + surrogate model (Naive classifier + GPR) | Surrogate model determines the feasibility of experimental parameters and predicts output; RL selects experiments |

| Plommer et al. [221] | Biology | Extraction of cannabinoids | RF | Prediction of experimental yields under different experiment conditions; assistance in decision-making |

| Eyke et al. [222] | Chemistry | Reduction of reaction screening | AL: ENN; single-trained models with MC dropout masks | Selecting experiments and optimizing experimental design |

| Adams et al. [216] | Materials science | Composition-structure phase mapping | BO | Selecting experiments and optimizing experimental design assisted by humans |

| Waelder et al. [223] | Materials science | Carbon nanotube growth | AL: Jump regression surrogate | Selecting experiments and optimizing experimental design |

| Yoon et al. [224] | Materials science | High-throughput electrical conductivity optimization and discovery of doped conjugated polymers | RF classifier + LASSO regression | Classifier excludes low conductivity material; regressor predicts experimental output |

| Fu et al. [225] | Fabrication | Quality control for probe precision forming in semiconductor manufacturing | GA (fitness function: PLSR) | Selecting experiments and optimizing experimental design |

| Lai et al. [226] | Materials science | Catalyst design and optimization | LLM + BO | LLM extracts process data; BO selects experiments and optimizes experimental design |

| Yonge et al. [227] | Chemistry | Temporal analysis of products | Model-based design of experiments | Selecting experiments and optimizing experimental design |

| Almeida et al. [228] | Chemistry | Sustainable chemistry processes | MOBO, AL: RF | Selecting experiments and optimizing experimental design |

| Almeida et al. [229] | Chemistry | Reaction optimization using kinetic modeling | AL: RF | Selecting experiments and optimizing experimental design |

| Bosten et al. [230] | Chemistry | Liquid chromatography | Assisted AL | Selecting experiments and optimizing experimental design |

| Liang et al. [231] | Materials science | Synthesis optimization for formulation of enzymes/ZIFs (zeolitic imidazolate framework) | BO | Selecting experiments and optimizing experimental design |

| Cruse et al. [232] | Materials science | Formation of impurity phases in BiFeO3 thin-film synthesis | DT classifier | Prediction of experimental output based on various conditions |

| Dama et al. [233] | Biology | Microbial metabolism mapping | Multi-period RL | Selecting experiments and optimizing experimental design |

| Suvarna et al. [234] | Chemistry | High-performance catalyst development for higher alcohol synthesis | MOBO | Selecting experiments and optimizing experimental design |

| Chen et al. [235] | Biology | Guidance of high-throughput screening | AL: Matrix completion | Selecting experiments and optimizing experimental design |

| Orouji et al. [31] | Chemistry | Optimization of transition metal-based homogeneous catalytic reactions | MOBO + EDNN (Ground-truth simulator for evaluation) | Selecting experiments and optimizing experimental design using MOBO, with evaluation by EDNN |

| Authors | Automation | Data | Online-Capable | Generalizable |

|---|---|---|---|---|

| Aldeghi et al. [217]. | Supportive | Reaction data | Yes | Yes |

| Hickman et al. [218] | Partially autonomous | Reaction data | Yes | Yes |

| Schilter et al. [219] | Fully autonomous | Reaction data | Yes | Limited to different reactions |

| Sadeghi et al. [11] | Fully autonomous | Synthesis data | Yes | Limited due to fluidics lab platform |

| Epps et al. [220] | Fully autonomous | Synthesis data | Yes | Limited to flow chemistry |

| Plommer et al. [221] | Supportive | Extraction data and condition data | No | Yes |

| Eyke et al. [222] | Partially autonomous | Reaction data | Yes | Yes |

| Adams et al. [216] | Partially autonomous | X-ray diffraction data | Yes | Limited to domain experts |

| Waelder et al. [223] | Fully autonomous | Catalyst reaction data and Raman spectrum | Yes | Limited to catalyst research |

| Yoon et al. [224] | Supportive | Optical spectra and process data | No | Yes |

| Fu et al. [225] | Partially autonomous | Quality and process data | Yes | Yes |

| Lai et al. [226] | Partially autonomous | Text data and catalyst synthesis data | Yes | Yes |

| Yonge et al. [227] | Partially autonomous | Kinetic process data | Yes | Yes |

| Almeida et al. [228] | Partially autonomous | Reaction data | Yes | Yes |

| Almeida et al. [229] | Partially autonomous | Reaction data | Yes | Yes |

| Bosten et al. [230] | Partially autonomous | Chromatography data | Yes | Yes |

| Liang et al. [231] | Partially autonomous | Synthesis data | Yes | Yes |

| Cruse et al. [232] | Supportive | Synthesis data | No | Yes |

| Dama et al. [233] | Fully autonomous | Growth data | Yes | Yes |

| Suvarna et al. [234] | Partially autonomous | Reaction data | Yes | Yes |

| Chen et al. [235] | Partially autonomous | Condition data | Yes | Yes |

| Orouji et al. [31] | Partially autonomous | Catalyst reaction data | Yes | Limited to catalyst research |

| Domain | Amount |

|---|---|

| General frameworks | 1 |

| Biology | 3 |

| Chemistry | 9 |

| Materials science | 8 |

| Fabrication | 1 |

| Category | Total Number (Separate) |

|---|---|

| Optimization | 10 (9) |

| Supervised | 8 (3) |

| AL | 5 (5) |

| RL | 2 (1) |

| Methodology | Number |

|---|---|

| BO | 9 |

| GA | 1 |

| GP regression | 1 |

| Tree-based regression | 2 |

| Tree-based classifier | 2 |

| Naive Bayes Classifier | 1 |

| LLM | 1 |

| LASSO regression | 1 |

| AL | 5 |

| RL | 2 |

| Category | Methodology/ Technique | Task | |

|---|---|---|---|

| Optimization | SOBO | Surrogate models:

| Models data using surrogate models and iteratively selects experiments based on surrogate models using acquisition functions; the obtained data are used to optimize the surrogate. |

| MOBO | Surrogate models:

| Optimizes multiple targets, models data with surrogate models, and iteratively selects experiments based on surrogate models using acquisition functions; the obtained data are used to optimize the surrogate. | |

| Optimization | GA | Fitness function:

| Determines the “fitness” of parameter combinations and iteratively selects experiments based on the fitness function; biology-inspired optimization using the obtained data. It is used as an additional agent for finding a human-interpretable rule for experimental observations in an RL-based hybrid framework. |

| Supervised | LLMs | ChatGPT | Extracts data from the literature. |

| Regression |

| Acts as a surrogate model or fitness function; in some frameworks, DTs, RFs, and LASSO regression are used independently to predict experimental output to assist in scientists’ decision-making. | |

| Classification |

| Exclusion of parameter combinations with potentially poor experimental output; GP classifier for integrating human feedback into models. | |

| Active Learning | Various | Reducing uncertainty:

| Iteratively selects the most informative experiments while reducing uncertainty and adapts predictive models for experimental output using the obtained data. |

| Reinforcement Learning | Single-period RL | Belief models:

| Selects and optimizes parameters and experiments. |

| Multi-period RL | Belief model:

| Selects and optimizes parameters and experiments. |

| Automation | Number |

|---|---|

| Fully autonomous | 5 |

| Partially autonomous | 13 |

| Supportive | 4 |

| Online Capable | Number |

|---|---|

| Yes | 19 |

| No | 3 |

| Generalizability | Number |

|---|---|

| Yes | 16 |

| Limited | 6 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Nolte, L.; Tomforde, S. A Helping Hand: A Survey About AI-Driven Experimental Design for Accelerating Scientific Research. Appl. Sci. 2025, 15, 5208. https://doi.org/10.3390/app15095208

Nolte L, Tomforde S. A Helping Hand: A Survey About AI-Driven Experimental Design for Accelerating Scientific Research. Applied Sciences. 2025; 15(9):5208. https://doi.org/10.3390/app15095208

Chicago/Turabian StyleNolte, Lukas, and Sven Tomforde. 2025. "A Helping Hand: A Survey About AI-Driven Experimental Design for Accelerating Scientific Research" Applied Sciences 15, no. 9: 5208. https://doi.org/10.3390/app15095208

APA StyleNolte, L., & Tomforde, S. (2025). A Helping Hand: A Survey About AI-Driven Experimental Design for Accelerating Scientific Research. Applied Sciences, 15(9), 5208. https://doi.org/10.3390/app15095208