Abstract

Space-time image velocimetry (STIV) plays an important role in river velocity measurement owing to its safety and efficiency. However, its practical application is affected by complex scene conditions, which cause significant errors in texture angle estimation. This paper proposes a method that predicts texture angles from frequency domain images using an improved ShuffleNetV2. The second 1 × 1 convolution in the main branch of the downsampling unit and the basic unit is deleted, the kernel size of the depthwise separable convolutions is enlarged, and a Bottleneck Attention Module (BAM) is introduced to strengthen the capture of important feature information, which effectively improves the precision of the texture angles. In addition, measurements from a current meter are used as the reference for comparing the proposed method with established and recent approaches, and the method is further validated through comparative experiments in both artificial and natural river channels. The experimental results at the Agu, Panxi, and Mengxing hydrological stations show that the relative errors of the discharge measured by the proposed method are 2.20%, 3.40%, and 2.37%, and the relative errors of the mean velocity are 1.47%, 3.64%, and 1.87%, confirming that it achieves higher measurement accuracy and stability than the other methods.

1. Introduction

China has a vast territory, numerous rivers, and abundant water resources, which are essential for human survival and have greatly promoted the industrial and social development of the country. However, severe floods have repeatedly struck some areas, threatening residents' lives and causing huge economic losses. Therefore, it is crucial to strengthen continuous river monitoring in order to improve the accuracy and timeliness of flood warnings and to develop effective flood prevention strategies [1].

Although traditional techniques for measuring river flow velocity can deliver precise results, they are all intrusive contact methods and require substantial manpower, funding, and equipment. The current meter is currently the most widely used velocity measuring instrument: water flowing through the instrument drives its rotor, and the velocity is calculated from the number of rotor revolutions recorded in a specified time. It requires complex deployment, depends on manual operation, and can generally only obtain data over a local range during the daytime. The Acoustic Doppler Current Profiler (ADCP) [2] is another contact instrument, which applies the acoustic Doppler principle to calculate fluid velocity from the frequency shift of the echoes. These methods can become infeasible or even hazardous in extreme weather and cannot satisfy the demands of continuous monitoring. Non-contact flow measurement methods therefore have significant research value in this field [3], as they make up for the shortcomings of conventional contact methods.

Non-contact techniques based on video image recognition have attracted widespread attention owing to their high accuracy, low cost, safety, and strong real-time capability [4]; they have gradually become a mainstream technology and have been successfully deployed to monitor river velocities and discharges. Particle image velocimetry (PIV) [5] disperses tracer particles in the flow field and determines fluid velocity through cross-correlation analysis of their images. Large-scale particle image velocimetry (LSPIV) [6] was the first extension of PIV to large-scale flow fields; it uses cameras to capture the movement of natural floating objects such as foam, plant debris, and small ripples on the river surface. It eliminates the need for manual tracer seeding, but it can be limited by the availability and density of natural tracers and tends to require long computation times. Optical flow velocimetry (OFV) [7], based on the assumption of constant brightness between consecutive frames, obtains motion vectors by calculating pixel displacements between adjacent frames. Space-time image velocimetry (STIV) [8] synthesizes space-time images (STIs) by sampling, from a sequence of river surface images, the pixels on velocimetry lines parallel to the flow direction, and calculates the velocity by analyzing the main orientation of the image texture. Fujita et al. [9] determined the texture angle of STIs using the Gradient Tensor Method (GTM), which divides the STI into several small windows of equal size, calculates the texture angle of each window from its grayscale information, and then derives the texture angle of the whole image. This method effectively addresses the limitations of LSPIV and greatly improves computational efficiency, with measurement precision comparable to that of LSPIV.
Further, Fujita et al. [10] proposed detecting the texture angle by calculating the two-dimensional autocorrelation function of the image intensity in the STI (QESTA), in which the gradient of the highly correlated region indicates the effective texture direction, from which the texture angle is derived. Zhen et al. [11] introduced a frequency domain STIV method based on the Fast Fourier Transform (FFT), which exploits the orthogonality between the main orientation of texture (MOT) and the main orientation of spectrum (MOS): the MOT is obtained by identifying the MOS. However, these methods are highly sensitive to noise, which not only reduces measurement accuracy but also makes them difficult to apply in diverse complex environments. To improve the detection accuracy of the MOT, Zhao et al. [12] proposed a denoising method incorporating frequency domain filtering, which generates a noise-free STI with clear texture, and applied it to river velocity measurement. Lu et al. [13] first preprocessed STIs, mainly through contrast enhancement and noise filtering, and then further suppressed residual noise with frequency domain filtering to obtain a precise texture angle. Yuan et al. [14] proposed the OT-STIV-SC algorithm, which effectively enhances the trajectory texture information of STIs and integrates statistical features to detect texture angles.

Methods that detect the STI texture angle with deep learning have been highly favored by hydrological researchers in recent years. Watanabe et al. [15] combined deep learning with STIV by feeding STIs into a CNN, which achieved high texture angle recognition accuracy in validation experiments on both synthetic and real datasets. Li et al. [16] employed residual networks to construct regression models for detecting MOT values. Furthermore, Huang et al. [17] improved texture angle recognition accuracy by training a deep residual network with relation-aware global attention on the original STIs. Although these methods have improved the robustness of measurement, the lack of publicly available datasets and the large numbers of network parameters and computations they require remain obstacles to be addressed.

Traditional STIV generates STIs that contain considerable noise in complex scenes, which makes it difficult to determine the texture orientation directly and accurately. Enhancement and filtering operations are often needed to obtain images with clear texture trajectories, but these processes rely heavily on manual parameter settings and cannot adapt their parameters to different scenes. This paper introduces a novel method for measuring STI texture angles in the frequency domain, which avoids complex filtering steps and addresses the large angle detection errors of traditional STIV in complex noisy environments. Texture angle detection in STIs is treated as an image classification problem. To compensate for the shortage of datasets, frequency domain image datasets covering multiple scenarios are established; ShuffleNetV2 is then used to build and train a classification model, and texture angles are predicted with the saved optimal weight file. The whole measurement process requires no parameter tuning, and the improved ShuffleNetV2 remains lightweight compared with the CNN and residual networks used in previous studies. Finally, the river mean velocity and discharge are calculated from the detected angles using the velocity–area method.

2. Theory and Methods

2.1. Generation of Space-Time Images

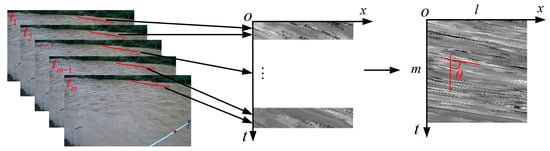

STIV is a non-contact measurement method that uses velocimetry lines as the analysis area and estimates the surface velocity by detecting the texture angle of the synthesized space-time images [18]. First, a camera installed on the bank collects a sequence of $N$ frames of the river surface over a time interval $T$; then, a series of velocimetry lines is set along the actual direction of water movement, each one pixel wide and $L$ pixels long. Next, a space-time image (STI) of $L \times N$ pixels is synthesized in a rectangular coordinate system constructed from the motion distance and time. Figure 1 shows the generation of STIs: black and white texture trajectories appear in the STI, and the angle $\theta$ between these trajectories and the vertical direction is defined as the main orientation of texture (MOT).

Figure 1.

Schematic diagram of space-time image synthesis.
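The synthesis step can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: it assumes grayscale frames already loaded into an array of shape (N, H, W) and, for simplicity, a velocimetry line lying along a single image row. Real velocimetry lines are usually oblique and would need sampling along an arbitrary direction with interpolation. The function name `build_sti` is hypothetical.

```python
import numpy as np

def build_sti(frames, row, col_start, length):
    """Stack one 1-pixel-wide velocimetry line from every frame into an STI.

    frames: grayscale video, shape (N, H, W).
    row, col_start, length: a hypothetical horizontal line along image row
    `row`, covering columns col_start .. col_start + length - 1.
    Returns an (N, length) array: time runs down the rows (vertical axis)
    and distance along the line runs across the columns (horizontal axis).
    """
    frames = np.asarray(frames)
    return frames[:, row, col_start:col_start + length].copy()

# Synthetic check: a bright feature moving 1 pixel per frame to the right.
N, H, W = 20, 8, 40
video = np.zeros((N, H, W))
for t in range(N):
    video[t, 4, 5 + t] = 255.0
sti = build_sti(video, row=4, col_start=0, length=30)
```

In the synthetic check, a feature that moves one pixel per frame leaves a diagonal trajectory in the STI, that is, a texture angle of 45° from the vertical.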

Suppose that, in the physical coordinate system, a river surface flow feature moves a distance $D$ along the velocimetry line in time $\Delta t$, which corresponds to a displacement of $l$ pixels within $\tau$ frames in the image coordinate system. The river surface velocity $V$ on the velocimetry line is then given by Equation (1).

$$V = \frac{D}{\Delta t} = \frac{l\,S_x}{\tau\,S_t} = S_x f \tan\theta \tag{1}$$

where $S_x$ (m/pixel) is the actual distance represented by each pixel, $S_t$ (s/pixel) is the time interval between two frames, $f = 1/S_t$ (pixel/s) is the camera frame rate, and $\tan\theta = l/\tau$ is the tangent of the texture angle $\theta$.
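Equation (1) reduces to a one-line function. The sketch below is illustrative: the name `surface_velocity` and the parameter values in the example are hypothetical, and the angle is assumed to be measured from the vertical (time) axis of the STI.

```python
import math

def surface_velocity(theta_deg, s_x, fps):
    """Eq. (1): V = S_x * f * tan(theta), with f = 1 / S_t.

    theta_deg: texture angle from the vertical (time) axis, in degrees.
    s_x: ground distance represented by one pixel on the line (m/pixel).
    fps: camera frame rate f (frames per second).
    """
    return s_x * fps * math.tan(math.radians(theta_deg))

# Hypothetical example: 0.01 m/pixel, 60 fps, texture angle of 45 degrees.
v = surface_velocity(45.0, s_x=0.01, fps=60)   # about 0.6 m/s
```

Because the tangent grows quickly toward the upper end of the angle range, a 1° angle error matters much more at steep angles: tan 85° / tan 84° ≈ 1.20, whereas tan 46° / tan 45° ≈ 1.04.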

2.2. Construction of Datasets

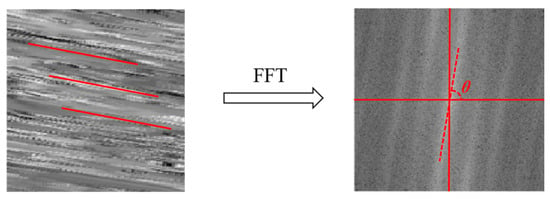

The texture direction of STIs generated from river video is often chaotic and difficult to judge directly and accurately. Therefore, the STIs are converted into a frequency domain representation, as shown in Figure 2. The feature extraction ability of deep convolutional neural networks is used to learn the mapping from image space to angle space from a large amount of training data, and the learned mapping is then used for angle prediction, giving a simple and efficient end-to-end process.

Figure 2.

The process of FFT.
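The conversion in Figure 2 can be sketched with NumPy's FFT routines. This is an illustration under stated assumptions rather than the authors' preprocessing: it removes the image mean, centers the spectrum with `np.fft.fftshift`, log-scales the magnitude, and rescales it to 8-bit; resizing to 224 × 224 pixels for the network is omitted. The synthetic check uses a stripe pattern whose texture runs along x - y = const and confirms that the spectral peak lies in the perpendicular direction, which is the MOT/MOS orthogonality that frequency domain STIV relies on.

```python
import numpy as np

def sti_spectrum(sti):
    """Log-magnitude, zero-centred 2-D spectrum of an STI, scaled to uint8."""
    img = np.asarray(sti, dtype=float)
    img = img - img.mean()                      # suppress the DC component
    spec = np.fft.fftshift(np.fft.fft2(img))    # zero frequency at the centre
    mag = np.log1p(np.abs(spec))                # compress the dynamic range
    mag -= mag.min()
    if mag.max() > 0:
        mag *= 255.0 / mag.max()
    return mag.astype(np.uint8)

# Synthetic check: stripes running along x - y = const (45 deg from vertical).
N = 64
y, x = np.mgrid[0:N, 0:N]
stripes = np.cos(2 * np.pi * 4 * (x - y) / N)
spec = sti_spectrum(stripes)
r, c = np.unravel_index(np.argmax(spec), spec.shape)
dy, dx = int(r) - N // 2, int(c) - N // 2   # offset of the spectral peak
```

The texture runs along the direction (1, 1) in (x, y), while the peak offset (dx, dy) has opposite signs, i.e. it points along (1, -1), perpendicular to the texture.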

At present, no publicly available river image datasets exist for deep learning-based STIV, so a high-definition video camera was installed at a fixed position on the bank of the hydrological station to record river flow videos at different times for constructing the datasets. The texture angles of STIs commonly fall within [5°, 85°]. Because river videos of specific complex scenes are difficult to collect, and to keep the constructed dataset representative, four common scenes (normal, exposure, turbulence, and blur conditions) are selected to generate STIs. Texture angles are labeled manually from the spectra and rounded to integers, which serve as the classification labels. STIs generated under real river conditions contain a lot of noise; a dataset consisting entirely of such images could cause the model to learn noise patterns during training, and their angles may be labeled incorrectly, reducing the accuracy of the dataset. Therefore, some synthetic images created with Perlin noise are incorporated into the datasets; these are ideal STIs with no noise interference and accurately known MOT values.

Since STIs cannot be generated for every angle, the original images are augmented to increase the diversity and variability of the training data. The augmentation proceeds as follows: the images are rotated about their centers in 1° steps to generate 81 classes of STIs with known texture angles (one class per integer angle from 5° to 85°), and these images are then transformed into frequency domain representations of 224 × 224 pixels for training. Figure 3 shows randomly selected dataset images from the various scenarios, in the order normal, exposure, turbulence, blur, and synthetic. Table 1 lists the dataset distribution.
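The label scheme and the rotation needed for each class can be written down explicitly. This sketch covers only the bookkeeping; the image rotation itself is left to an image library. It assumes half-up rounding of manually labeled angles and, hypothetically, that rotating an image by +δ degrees increases its texture angle by δ (the sign convention depends on the rotation routine and axis orientation). All names are illustrative.

```python
import math

ANGLE_MIN, ANGLE_MAX = 5, 85              # texture-angle range of the dataset, degrees
NUM_CLASSES = ANGLE_MAX - ANGLE_MIN + 1   # 81 integer-degree classes

def angle_to_class(angle_deg):
    """Round a labelled angle half-up to an integer degree and map it to a class index."""
    a = math.floor(angle_deg + 0.5)
    if not ANGLE_MIN <= a <= ANGLE_MAX:
        raise ValueError(f"angle {angle_deg} is outside [{ANGLE_MIN}, {ANGLE_MAX}]")
    return a - ANGLE_MIN

def class_to_angle(idx):
    """Inverse mapping used when turning a predicted class back into an angle."""
    return ANGLE_MIN + idx

def rotation_plan(base_angle_deg):
    """Rotation (degrees) needed to move an STI with the given angle to every class.

    Assumes, hypothetically, that rotating by +delta increases the angle by delta.
    """
    base = math.floor(base_angle_deg + 0.5)
    return {class_to_angle(i): class_to_angle(i) - base for i in range(NUM_CLASSES)}
```

For a source STI labeled 30°, `rotation_plan(30)` lists rotations from -25° (class 5°) to +55° (class 85°), one per class.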

Figure 3.

Dataset images of different types.

Table 1.

Dataset distributions.

2.3. Structure of ShuffleNetV2

ShuffleNetV2 [19], proposed by Megvii Technology, is an efficient, lightweight convolutional neural network. It retains core operations from its predecessor ShuffleNetV1 [20], such as channel shuffle, group convolution, and depthwise separable convolution. In addition, ShuffleNetV2 takes into account the impact of memory access cost (MAC) on model performance, so that the network architecture is optimized for actual speed. The network design follows four guidelines: keep the input and output channel widths equal, avoid excessive group convolution, minimize network fragmentation, and reduce element-wise operations.

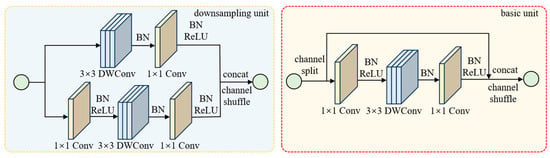

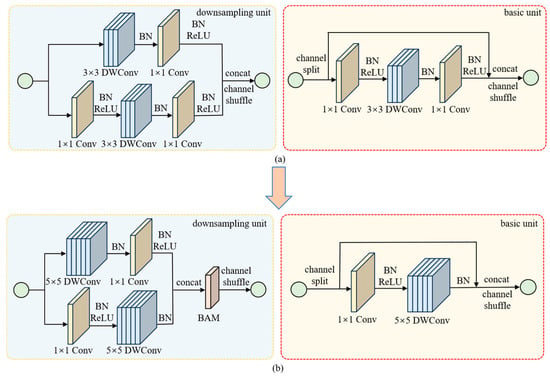

The basic structure of ShuffleNetV2, depicted in Figure 4, consists mainly of a downsampling unit and a basic unit. The downsampling unit uses a stride of 2, which halves the spatial dimensions of the input features. The input feature map is processed by two distinct branches. The main branch applies a 1 × 1 convolution, a 3 × 3 depthwise separable convolution (DWConv) with a stride of 2 for feature extraction, and a second 1 × 1 convolution, while the other branch applies a 3 × 3 DWConv with a stride of 2 followed by a 1 × 1 convolution. Finally, the feature maps of the two branches are concatenated and a channel shuffle is performed. In the basic unit, which has a stride of 1, the input feature map is divided into two branches by a channel split. The main branch performs the same operations as in the downsampling unit (with a stride of 1), while the other branch is left unchanged. Finally, the channels from both branches are concatenated and a channel shuffle is performed.

Figure 4.

Basic structure of ShuffleNetV2.
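The channel split and channel shuffle operations in Figure 4 are plain tensor reshapes. The NumPy sketch below uses the (N, C, H, W) layout that PyTorch also uses; the main branch is replaced by an identity placeholder so that only the channel bookkeeping is visible, and the function names are illustrative.

```python
import numpy as np

def channel_split(x):
    """Split an (N, C, H, W) array into two halves along the channel axis."""
    half = x.shape[1] // 2
    return x[:, :half], x[:, half:]

def channel_shuffle(x, groups=2):
    """ShuffleNet channel shuffle: (N, g, C/g, H, W) -> swap g and C/g -> flatten."""
    n, c, h, w = x.shape
    if c % groups:
        raise ValueError("channel count must be divisible by groups")
    x = x.reshape(n, groups, c // groups, h, w).transpose(0, 2, 1, 3, 4)
    return x.reshape(n, c, h, w)

# Basic unit with an identity placeholder for the main branch:
# split -> (branch operations) -> concatenate -> shuffle.
x = np.arange(8, dtype=float).reshape(1, 8, 1, 1)
left, right = channel_split(x)
out = channel_shuffle(np.concatenate([left, right], axis=1))
```

After the shuffle the channel order is 0, 4, 1, 5, 2, 6, 3, 7, so channels from the two branches are interleaved and information can flow between them in the next unit.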

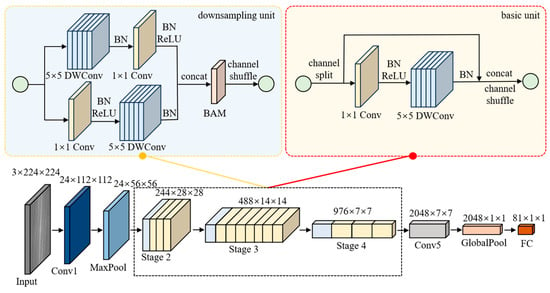

2.4. Improvement of ShuffleNetV2

We take ShuffleNetV2_2.0 as the backbone network in this research and improve it. The overall structure of the improved network is shown in Figure 5. Firstly, the second 1 × 1 convolution in the main branch of both the downsampling unit and basic unit is deleted; secondly, the kernel size of all depthwise separable convolutions is increased from 3 × 3 to 5 × 5; additionally, the Bottleneck Attention Module (BAM) [21] is introduced, and it is added after the channel concatenation operation of the downsampling unit.

Figure 5.

Overall structure of the improved ShuffleNetV2_2.0.

2.4.1. Delete the Second Convolution and Enlarge DWConv Kernel Size

Typically, 1 × 1 convolutions are used before and after a DWConv for two purposes: to fuse inter-channel information, which compensates for DWConv operating on each channel separately, and to adjust the channel dimension, either reducing or expanding it. The ShuffleNetV2 main branch uses two 1 × 1 convolutions to fuse channel information, but this task can be accomplished by a single 1 × 1 convolution. Therefore, deleting the second 1 × 1 convolution in the main branch of the downsampling unit and the basic unit not only preserves the ability to fuse information but also reduces the computational cost of the model.

The distribution of the computational cost of ShuffleNetV2 shows that the vast majority of computation is concentrated in the 1 × 1 convolutions, with DWConv contributing a relatively small portion. To improve detection accuracy without significantly increasing the computational effort, we change the kernel size of all DWConvs from 3 × 3 to 5 × 5. In the PyTorch implementation, the padding must be changed from 1 to 2 so that the spatial size of the output feature maps remains unchanged.
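The padding rule and the cost argument above can be checked with plain arithmetic. The sketch counts weights only (bias and BatchNorm ignored) for the main branch of a single basic unit; with stride 1 each weight is used once per output pixel, so weight ratios equal FLOP ratios. The channel count c = 122 is an illustrative assumption (half of the 244 channels in the first stage of ShuffleNetV2_2.0), and the numbers are not meant to reproduce the whole-network figures in Table 3.

```python
def conv_out_size(n, k, stride=1, padding=0, dilation=1):
    """Spatial output size of a 2-D convolution (same formula as torch.nn.Conv2d)."""
    return (n + 2 * padding - dilation * (k - 1) - 1) // stride + 1

def conv_weights(c_in, c_out, k, groups=1):
    """Weight count of a k x k convolution (bias and BatchNorm ignored)."""
    return (c_in // groups) * c_out * k * k

def main_branch_weights(c, k_dw, n_pointwise):
    """Main branch of a basic unit: n_pointwise 1x1 convs plus one k_dw x k_dw DWConv."""
    return n_pointwise * conv_weights(c, c, 1) + conv_weights(c, c, k_dw, groups=c)

c = 122   # illustrative: half of the 244 channels in ShuffleNetV2_2.0's first stage
original = main_branch_weights(c, k_dw=3, n_pointwise=2)   # 1x1 -> 3x3 DW -> 1x1
improved = main_branch_weights(c, k_dw=5, n_pointwise=1)   # 1x1 -> 5x5 DW
pointwise_share = 2 * conv_weights(c, c, 1) / original     # share held by the 1x1 convs
```

Here the two 1 × 1 convolutions hold about 96% of the original branch's 30,866 weights, so removing one of them more than pays for enlarging the DWConv kernel (17,934 weights after both changes). A 5 × 5 kernel with padding 1 would shrink a 56 × 56 map to 54 × 54, whereas padding 2 keeps it at 56 × 56.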

2.4.2. Bottleneck Attention Module (BAM)

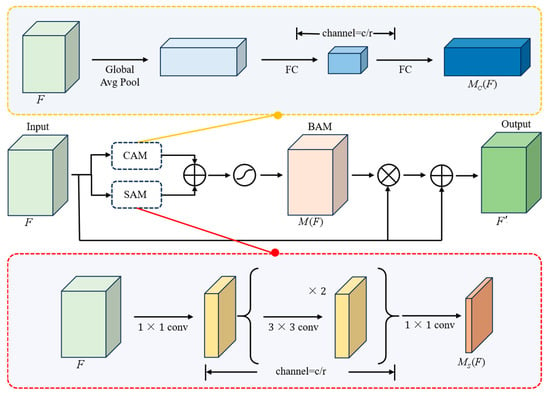

To enable the network to learn feature information in complex scenes and improve texture angle recognition accuracy, we insert a BAM after the channel concatenation in the downsampling unit, followed by the channel shuffle. BAM, shown in Figure 6, is a lightweight attention module consisting of a Channel Attention Module (CAM) and a Spatial Attention Module (SAM).

Figure 6.

BAM attention module.

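In the CAM, global average pooling is applied to the input feature map $F \in \mathbb{R}^{C \times H \times W}$ to aggregate the information of each channel, and the resulting channel vector is passed through a multilayer perceptron (MLP) with one hidden layer, i.e., two fully connected layers, to generate the channel attention $M_c(F) \in \mathbb{R}^{C \times 1 \times 1}$. The calculation is shown in Equation (2), where $W_0 \in \mathbb{R}^{C/r \times C}$ and $W_1 \in \mathbb{R}^{C \times C/r}$ are the weights of the two fully connected layers, $r$ is the reduction ratio, and BN denotes batch normalization.

$$M_c(F) = \mathrm{BN}\big(W_1(W_0\,\mathrm{AvgPool}(F) + b_0) + b_1\big) \tag{2}$$

The SAM adopts a bottleneck structure similar to ResNet to emphasize or suppress features at different spatial locations. First, the feature map is projected to a reduced dimension $C/r$ by a 1 × 1 convolution; then two 3 × 3 dilated convolutions are used to exploit contextual information effectively; finally, a 1 × 1 convolution further reduces the dimension to obtain the spatial attention map $M_s(F) \in \mathbb{R}^{1 \times H \times W}$. The calculation is shown in Equation (3), where $f_i^{k \times k}$ denotes the $i$-th convolution with a $k \times k$ kernel.

$$M_s(F) = \mathrm{BN}\big(f_3^{1\times1}(f_2^{3\times3}(f_1^{3\times3}(f_0^{1\times1}(F))))\big) \tag{3}$$

$M_c(F)$ and $M_s(F)$ are broadcast to $\mathbb{R}^{C \times H \times W}$, added together, and passed through the sigmoid function $\sigma$ to generate the 3D attention map $M(F)$, as shown in Equation (4). $M(F)$ is multiplied element-wise with the input feature map $F$, and the result is added to $F$ to obtain the final feature map $F'$, as shown in Equation (5).

$$M(F) = \sigma\big(M_c(F) + M_s(F)\big) \tag{4}$$

$$F' = F + F \otimes M(F) \tag{5}$$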
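The data flow of BAM can be checked with a small NumPy forward pass. This is a sketch under several assumptions, not the authors' code: batch normalization is omitted, ReLU is placed after the hidden layer and after the first three spatial convolutions, the weights are random, and the reduction ratio r = 16 and dilation d = 4 follow the values used in the BAM paper [21] rather than settings stated in this study. All function and parameter names are hypothetical.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def conv1x1(x, w):
    """x: (C_in, H, W); w: (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)

def dilated_conv3x3(x, w, d):
    """'Same'-padded 3x3 convolution with dilation d; w: (C_out, C_in, 3, 3)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (d, d), (d, d)))
    out = np.zeros((w.shape[0], h, wd))
    for i in range(3):
        for j in range(3):
            out += conv1x1(xp[:, i * d:i * d + h, j * d:j * d + wd], w[:, :, i, j])
    return out

def bam(f, p, d=4):
    """BAM forward pass for one feature map f of shape (C, H, W); BN omitted."""
    # Channel branch: global average pooling -> MLP with one hidden layer.
    hidden = relu(p["w0"] @ f.mean(axis=(1, 2)) + p["b0"])
    m_c = (p["w1"] @ hidden + p["b1"])[:, None, None]       # (C, 1, 1)
    # Spatial branch: 1x1 reduce -> two dilated 3x3 -> 1x1 down to one map.
    s = relu(conv1x1(f, p["s0"]))
    s = relu(dilated_conv3x3(s, p["s1"], d))
    s = relu(dilated_conv3x3(s, p["s2"], d))
    m_s = conv1x1(s, p["s3"])                               # (1, H, W)
    # Broadcast sum, sigmoid, then residual re-weighting F + F * M(F).
    m = sigmoid(m_c + m_s)                                  # (C, H, W)
    return f + f * m

C, H, W, r = 32, 10, 10, 16
rng = np.random.default_rng(0)
params = {
    "w0": 0.1 * rng.standard_normal((C // r, C)), "b0": np.zeros(C // r),
    "w1": 0.1 * rng.standard_normal((C, C // r)), "b1": np.zeros(C),
    "s0": 0.1 * rng.standard_normal((C // r, C)),
    "s1": 0.1 * rng.standard_normal((C // r, C // r, 3, 3)),
    "s2": 0.1 * rng.standard_normal((C // r, C // r, 3, 3)),
    "s3": 0.1 * rng.standard_normal((1, C // r)),
}
feat = rng.random((C, H, W)) + 0.1     # strictly positive input for the check
out = bam(feat, params)
```

Because the sigmoid output lies in (0, 1), each element of the output lies between the input value and twice the input value, so BAM rescales features but never suppresses them completely.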

2.5. Camera Calibration

After the texture angle of the STI is obtained, the principle of projective transformation is used to convert between the world coordinate system and the pixel coordinate system, so as to obtain the actual distance represented by each pixel, denoted by $S_x$. A calibration point on the riverbank is selected as the origin of the world coordinate system; the line connecting this origin to another calibration point on the same horizontal plane defines the x-axis direction, and the perpendicular from the origin to the calibration point on the opposite bank defines the y-axis direction. Based on the positional relationship of the ground calibration points, the world coordinates of each calibration point are measured with a total station and matched one-to-one with their pixel coordinates. Assuming that a point $(u, v)$ in the pixel coordinate system corresponds to the position $(X, Y)$ in the world coordinate system, the transformation can be expressed as Equation (6), where $H$ is the corresponding perspective transformation (homography) matrix and $s$ is a scale factor.

$$s\begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = H \begin{bmatrix} X \\ Y \\ 1 \end{bmatrix}, \qquad H = \begin{bmatrix} h_{11} & h_{12} & h_{13} \\ h_{21} & h_{22} & h_{23} \\ h_{31} & h_{32} & h_{33} \end{bmatrix} \tag{6}$$

Expanding the equation gives the final coordinate relationship in Equation (7). Therefore, the positions of the ground calibration points and of the velocimetry points on the river section in the world coordinate system can be accurately located in the pixel coordinate system.

$$u = \frac{h_{11}X + h_{12}Y + h_{13}}{h_{31}X + h_{32}Y + h_{33}}, \qquad v = \frac{h_{21}X + h_{22}Y + h_{23}}{h_{31}X + h_{32}Y + h_{33}} \tag{7}$$
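The homography can be estimated from the four ground calibration points with the standard direct linear transform (DLT), which stacks two linear equations per point pair derived from the expanded form $u(h_{31}X + h_{32}Y + h_{33}) = h_{11}X + h_{12}Y + h_{13}$ (and likewise for $v$) and takes the null vector via SVD. The sketch below is illustrative: the world and pixel coordinates are made up rather than the Agu survey data, no coordinate normalization is applied (adequate for four exactly measured points, although Hartley normalization is advisable with noisy data), and the helper `metres_per_pixel`, which averages the ground distance per pixel along a segment to approximate $S_x$, is hypothetical.

```python
import numpy as np

def estimate_homography(world_xy, pixel_uv):
    """DLT estimate of H with s*[u, v, 1]^T = H [X, Y, 1]^T, scaled so h33 = 1."""
    rows = []
    for (X, Y), (u, v) in zip(world_xy, pixel_uv):
        rows.append([-X, -Y, -1, 0, 0, 0, u * X, u * Y, u])
        rows.append([0, 0, 0, -X, -Y, -1, v * X, v * Y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    h = vt[-1].reshape(3, 3)     # right singular vector of the smallest singular value
    return h / h[2, 2]

def apply_homography(h, pts):
    """Map (n, 2) points with h and divide by the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ h.T
    return homog[:, :2] / homog[:, 2:3]

def metres_per_pixel(h, p0, p1):
    """Hypothetical S_x helper: mean ground distance per pixel along segment p0 -> p1."""
    w = apply_homography(np.linalg.inv(h), [p0, p1])
    return np.linalg.norm(w[1] - w[0]) / np.linalg.norm(np.subtract(p1, p0))

# Made-up ground truth: four calibration points (world, metres) and their pixels.
H_true = np.array([[40.0, 5.0, 300.0], [2.0, -20.0, 800.0], [0.001, 0.01, 1.0]])
world = np.array([[0.0, 0.0], [22.0, 0.0], [0.0, 10.0], [22.0, 10.0]])
pixel = apply_homography(H_true, world)
H_est = estimate_homography(world, pixel)
```

Four points in general position (no three collinear) give exactly the eight equations needed for the eight degrees of freedom of $H$; additional calibration points would turn the SVD solution into a least-squares fit.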

2.6. Calculation of River Velocity and Discharge

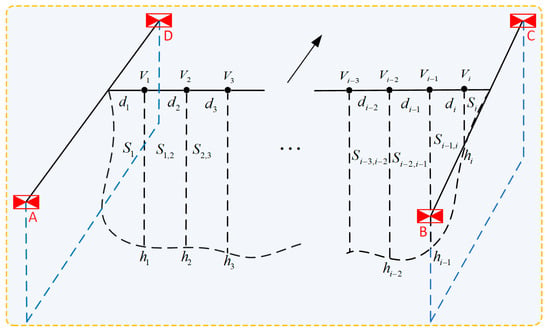

After the river surface velocities are calculated, the total discharge and mean velocity are obtained with the velocity–area method. In Figure 7, the arrow indicates the flow direction. Let $V_{si}$ be the surface velocity at measuring point $i$ ($i = 1, \dots, n$), $b_i$ the width between measuring points $i$ and $i+1$, and $d_i$ and $d_{i+1}$ the actual water depths (m) on the verticals of measuring points $i$ and $i+1$, respectively, with the two water edges treated as points $0$ and $n+1$. The partial area between the two measuring points is then given by Equation (8).

$$A_i = \frac{d_i + d_{i+1}}{2}\, b_i \tag{8}$$

Figure 7.

Schematic diagram of river cross-section.

The vertical mean velocity is given by Equation (9), where $K$ denotes the surface velocity coefficient.

$$V_{mi} = K\, V_{si} \tag{9}$$

The partial mean velocity between two measuring points is given by Equation (10).

$$\bar{V}_i = \frac{V_{mi} + V_{m(i+1)}}{2} \tag{10}$$

The partial mean velocities next to the banks are given by Equations (11) and (12), where $\alpha$ denotes the bank velocity coefficient, whose value usually follows the Chinese national standard GB 50179-2015 [22]: $\alpha$ ranges from 0.67 to 0.75 on sloping banks where the water depth gradually decreases to zero, is 0.8 on uneven steep banks and 0.9 on smooth steep banks, and is 0.6 where the bank borders stagnant water.

$$\bar{V}_0 = \alpha\, V_{m1} \tag{11}$$

$$\bar{V}_n = \alpha\, V_{mn} \tag{12}$$

The discharge of each part is the product of its partial mean velocity and partial area, and the total discharge $Q$ is the sum over all parts, as shown in Equation (13).

$$Q = \sum_{i=0}^{n} \bar{V}_i A_i \tag{13}$$

The mean velocity $\bar{V}$ is calculated from the total discharge $Q$ and the cross-section area $A$, as shown in Equation (14).

$$\bar{V} = \frac{Q}{A}, \qquad A = \sum_{i=0}^{n} A_i \tag{14}$$
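The velocity–area computation can be sketched as a single function. This is a minimal illustration assuming the layout described above: positions and depths include both water edges, surface velocities are given only at the interior verticals, each partial area uses the trapezoid of the two bounding depths, and the bank segments use the bank coefficient times the adjacent vertical mean velocity. The function name and the toy cross-section are hypothetical.

```python
def velocity_area(positions, depths, surface_v, k_surface, alpha_bank):
    """Total discharge Q and mean velocity by the velocity-area method.

    positions: distances (m) of the left water edge, each vertical, and the right edge.
    depths: water depths (m) at the same positions (edge depths are often 0).
    surface_v: surface velocities (m/s) at the interior verticals only.
    k_surface: surface velocity coefficient K; alpha_bank: bank coefficient alpha.
    """
    n = len(positions)
    if n < 3 or len(depths) != n or len(surface_v) != n - 2:
        raise ValueError("need both water edges plus at least one vertical")
    v_vert = [k_surface * vs for vs in surface_v]            # vertical mean velocities
    q_total = area_total = 0.0
    for i in range(n - 1):
        b = positions[i + 1] - positions[i]
        area = 0.5 * (depths[i] + depths[i + 1]) * b         # partial area
        if i == 0:
            v_part = alpha_bank * v_vert[0]                  # left bank segment
        elif i == n - 2:
            v_part = alpha_bank * v_vert[-1]                 # right bank segment
        else:
            v_part = 0.5 * (v_vert[i - 1] + v_vert[i])       # between two verticals
        q_total += v_part * area                             # partial discharge
        area_total += area
    return q_total, q_total / area_total

q, v_mean = velocity_area([0, 2, 4, 6], [0.0, 1.0, 1.0, 0.0], [1.0, 1.0],
                          k_surface=0.9, alpha_bank=0.7)
```

In this toy section the three partial areas are 1, 2, and 1 m², giving Q = 0.63 + 1.8 + 0.63 = 3.06 m³/s and a mean velocity of 3.06 / 4 = 0.765 m/s.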

3. Results and Discussion

3.1. Model Training

The hardware environment consists of an Intel(R) Core(TM) i7-6700K CPU @ 4.00 GHz and an NVIDIA GeForce RTX 2060 GPU with 6 GB of video memory; the software environment is 64-bit Windows 10, Python 3.8, and the PyTorch 1.9 deep learning framework. The network is trained with the Adam optimizer and the cross-entropy loss. The initial learning rate is 0.001, the number of training iterations is 200, and the batch size is 32.

3.2. Model Performance Comparison

TOP1 and TOP5 accuracy are used to evaluate the classification performance of the model, and the number of parameters (Params) and floating-point operations (FLOPs) are used to evaluate model complexity and hardware requirements. TOP1 accuracy is the proportion of samples for which the category with the highest predicted probability matches the true label. TOP5 accuracy is the proportion of samples whose true label appears among the five categories with the highest predicted probabilities. The number of parameters is directly related to the model size and its demand for computing and storage resources. FLOPs count the floating-point operations required for one forward pass (not operations per second) and strongly influence how fast the model runs: the greater the amount of computation, the longer the training time and the higher the required computing capability.
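The TOP-k definitions above translate directly into code. The sketch below is a generic NumPy implementation with a toy three-class example; in this study there would be 81 classes and k = 1 or 5. Ties between equal scores are broken by `np.argsort` order, which is an implementation detail of this sketch.

```python
import numpy as np

def topk_accuracy(logits, labels, k=1):
    """Fraction of samples whose true class is among the k highest-scoring classes."""
    logits = np.asarray(logits, dtype=float)
    labels = np.asarray(labels)
    topk = np.argsort(-logits, axis=1)[:, :k]          # indices of the k largest scores
    return float(np.mean(np.any(topk == labels[:, None], axis=1)))

# Toy example with 3 classes (the study uses 81 angle classes).
scores = [[0.1, 0.5, 0.4],
          [0.7, 0.2, 0.1]]
labels = [2, 0]
top1 = topk_accuracy(scores, labels, k=1)   # 0.5
top2 = topk_accuracy(scores, labels, k=2)   # 1.0
```

The first sample is wrong at TOP1 (class 1 scores highest) but correct at TOP2, which is why TOP5 accuracy is always at least as high as TOP1 accuracy.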

Comparative experiments are conducted using original ShuffleNetV2, improved ShuffleNetV2, and other networks to comprehensively evaluate the performance.

3.2.1. Comparison of ShuffleNetV2 Series Models

As with ShuffleNetV1, the number of channels in each block of ShuffleNetV2 is scaled to create networks of different complexity, denoted ShuffleNetV2_0.5, ShuffleNetV2_1.0, ShuffleNetV2_1.5, and ShuffleNetV2_2.0, where the suffixes _0.5, _1.0, _1.5, and _2.0 are scaling factors applied to the number of output channels in each layer. Their experimental results on the datasets are given in Table 2.

Table 2.

Experimental results of ShuffleNetV2 series.

Table 2 shows that, with the same experimental platform and hyperparameter settings, ShuffleNetV2_2.0 achieves higher classification accuracy than the other three models, although its parameters and FLOPs are relatively high. After weighing these factors, we chose to improve ShuffleNetV2_2.0 further and conducted the remaining experiments with it on the frequency domain image datasets.

3.2.2. Ablation Experiment

In order to facilitate the ablation experiments, we designed models 0 to 3. The basic structure of the module for model 0 is shown in Figure 8a, while the basic structure of the modules for models 1 to 3 is shown in Figure 8b.

Figure 8.

Module structure improvement of ShuffleNetV2_2.0. (a) Module structure of model 0; (b) module structure of models 1 to 3.

Model 0 is the original ShuffleNetV2_2.0; model 1 only deletes the second 1 × 1 convolution in the main branch of the downsampling unit and basic unit; model 2 additionally enlarges the DWConv kernel from 3 × 3 to 5 × 5; and model 3 further adds BAM to model 2. The ablation results are detailed in Table 3, where Lite_1 × 1 denotes deleting the 1 × 1 convolution, K_size = 5 denotes changing the DWConv kernel to 5 × 5, and “√” indicates that the operation is applied.

Table 3.

Ablation experiment results of ShuffleNetV2_2.0.

The performance of the model improves progressively as each modification is added. Specifically, model 1 reduces the number of parameters by 27.52% and FLOPs by 34.92% compared with model 0, and model 2 increases the TOP1 and TOP5 accuracy by 2.97% and 0.81%, respectively. Model 3, which integrates all the modifications, improves performance significantly: its TOP1 accuracy is 5.99% higher and its TOP5 accuracy 0.75% higher than those of the original ShuffleNetV2_2.0, while its parameters and FLOPs are reduced by 20.14% and 34.92%, respectively. In summary, deleting the 1 × 1 convolution, enlarging the DWConv kernel, and introducing BAM all contribute to the model's performance, demonstrating that the improvements are effective.

In addition, to further verify the advantage of BAM in improving the model, BAM was compared with the Efficient Channel Attention (ECA) [23], Convolutional Block Attention Module (CBAM) [24], and Squeeze-and-Excitation (SE) [25] attention mechanisms; the experimental results are shown in Table 4.

Table 4.

Effects of different attention mechanisms on model performance.

The results show that adding different attention mechanisms to model 2 affects classification performance differently, and BAM is the most effective. By contrast, ECA and CBAM perform worse than the base model in this experiment, while SE brings some improvement but remains less effective than BAM. BAM emphasizes informative image features more effectively than the other attention mechanisms while suppressing less relevant ones. Although adding BAM slightly increases the parameters and computation, the increase is worthwhile.

3.2.3. Comparison of Different Network Models

Comparative experiments with other networks, namely ResNet34 [26], DenseNet [27], MobileNetV2 [28], EfficientNetV2 [29], and GhostNetV1 [30], are conducted on the same experimental platform to comprehensively evaluate the performance of the improved network. All relevant parameters are kept constant to ensure a fair comparison. The experimental results of these networks are presented in Table 5.

Table 5.

Comparison of experimental results of different networks.

As shown in Table 5, the TOP1 accuracy of the improved model reaches 64.69%, notably higher than those of the original model and the other networks. Its TOP5 accuracy is 90.56%, and it requires far fewer parameters and FLOPs than ResNet34, DenseNet, and EfficientNetV2. Compared with MobileNetV2 and GhostNetV1, the improved model achieves higher detection accuracy. These results indicate that the improvements boost accuracy without incurring excessive computational cost, giving the model a more favorable overall performance.

3.3. Experiments in the Measurement of River Velocity and Discharge

The texture angle of each STI is detected by image classification, the surface velocity at each velocimetry point is calculated, and the total discharge and mean velocity of the river are then obtained. Current meter results are usually regarded as the true values and used as the reference for comparing other methods of river velocity and discharge measurement. In this research, the vertical mean velocity, total discharge, and mean velocity are chosen as the key measurement indicators. River images are captured at a resolution of 1920 × 1080 in all experiments. While the current meter is operating, comparative measurements are carried out in one artificially repaired river and two natural rivers using GTM [9], FFT [11], FD-DIS-G [31], and the proposed method. FD-DIS-G, proposed by Wang et al. [31], combines frame differencing with fast optical flow estimation using Dense Inverse Search (DIS) [32]: it generates a motion saliency map that captures water surface motion features from the differences between image frames, calculates dense optical flow with the DIS algorithm, and finally removes outliers (singular values) from the resulting data by grouping. The errors of each method are then compared and analyzed to assess their accuracy and reliability.

3.3.1. Experiment in an Artificially Repaired River

To verify the practical measurement performance of the proposed method, the Agu hydrological station in Yimen County, Yuxi City, Yunnan Province, was selected as the experimental site. The channel at Agu station has been artificially repaired, so the river section is regular and the velocity is stable. According to the station's annual measurement records, the bank velocity coefficient is 0.75 and the surface velocity coefficient is 0.89. The camera view used for video capture is shown in Figure 9: points A, B, C, and D, marked with red boxes, are the selected ground calibration points, and AB is the cross-section line. The velocimetry points, shown as yellow solid dots, are located 2 m, 4 m, 6 m, 8 m, 10 m, 12 m, 14 m, 16 m, 18 m, and 20 m from point A and are numbered sequentially from No. 1 to No. 10. The yellow lines are the velocimetry lines laid at each measuring point.

Figure 9.

Ground calibration points and velocimetry lines in Agu.

A Hikvision DS-2DC7423 camera, fixed at distances of 24.2 m, 2.7 m, 8.5 m, and 29.3 m from points A, B, C, and D, respectively, records 5 s of river video at a frame rate of 60 fps. Table 6 presents the cross-section data and the values measured by the LS25-1 current meter. The results and errors of the various methods are shown in Table 7, and the results and errors of the vertical mean velocity, total discharge, and mean velocity are compared visually in Figure 10.

Table 6.

Data of cross-section and the LS25-1 current meter in Agu.

Table 7.

Comparison of measured results and errors of different methods in Agu.

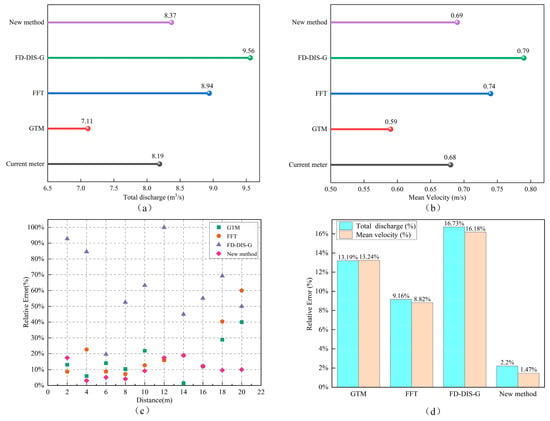

Figure 10.

The results and analysis of different methods. (a) Results of the total discharge; (b) results of the mean velocity; (c) errors of the vertical mean velocity; (d) errors of the total discharge and mean velocity.

The total discharge and mean velocity measured by the proposed method are 8.37 m3/s and 0.69 m/s, respectively. The absolute errors are 0.18 m3/s for discharge and 0.01 m/s for mean velocity, corresponding to relative errors of 2.20% and 1.47%. Compared with the GTM, FFT, and FD-DIS-G methods, the relative error of discharge is lower by 10.99, 6.96, and 14.53 percentage points, respectively. The relative error of mean velocity is lower by 11.77, 7.35, and 14.71 percentage points. These results show that the new method is closest to the current-meter true values and highly consistent with them.

For the individual velocimetry points (Figure 10c), the relative errors of the vertical mean velocity stay below 10% at points 2, 3, 4, 5, 9, and 10. At points 7 and 8, the errors are identical to those of the FFT method, and the remaining errors lie between 12% and 19%. High accuracy is therefore maintained even where the illumination interference is most severe, which confirms the method's robustness and stability. By contrast, the GTM and FFT methods perform moderately. FD-DIS-G shows the largest fluctuations in both measured values and errors, indicating poor stability, and at one velocimetry point its error reaches 100%. This is attributed to the sensitivity of FD-DIS-G to environmental noise: the pronounced uneven illumination in the river video clearly causes large deviations at every point, so its robustness needs to be improved.
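The Agu figures can be cross-checked. The relative error is |measured - reference| / reference. With a measured discharge of 8.37 m3/s and an absolute error of 0.18 m3/s, the reference must be either 8.19 or 8.55 m3/s. Only 8.19 m3/s reproduces the stated 2.20%. This reference value is inferred from the text, not copied from Table 7, and the helper below is a minimal sketch of that arithmetic.

```python
def relative_error_pct(measured: float, reference: float) -> float:
    """Relative error (%) of a measurement against the current-meter reference."""
    return abs(measured - reference) / abs(reference) * 100.0


measured_q = 8.37   # m^3/s, proposed method at Agu (from the text)
abs_error_q = 0.18  # m^3/s, reported absolute error (from the text)

# The reference lies 0.18 m^3/s either below or above the measurement.
for reference in (measured_q - abs_error_q, measured_q + abs_error_q):
    err = relative_error_pct(measured_q, reference)
    print(f"reference {reference:.2f} m^3/s -> relative error {err:.2f}%")
```

The first line prints 2.20% and the second 2.11%, so the proposed method slightly overestimates the discharge. The same check on the mean velocity (0.69 m/s, absolute error 0.01 m/s) gives 1.47% only for a reference of 0.68 m/s.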

FD-DIS-G has the shortest running time, only 32.75 s, followed by GTM (50.96 s) and FFT (67.03 s). The proposed method takes 115.43 s, roughly 3.5 times as long as FD-DIS-G. Deep learning requires a lengthy training phase, but once training is complete, its gain in precision is substantial. The longer running time of the proposed method is therefore of minor concern in practical measurement work.

3.3.2. Experiment in Natural Rivers

To assess the applicability of the proposed method in natural rivers, a verification experiment is conducted at the Panxi hydrological station in Huaning County, Yuxi City, Yunnan Province. At this site the banks are irregular, the riverbed contains many rocks, and vortices form in some areas of the water surface. Based on the station's long-term measurement experience, the velocity coefficients of the left and right banks are 0.80 and 0.70, respectively, and the surface velocity coefficient is 0.88. As shown in Figure 11, ground calibration points A, B, C, and D, marked with red boxes, are set on both sides of the river, and EF is the cross-section line. The five solid yellow dots are velocimetry points located 35 m, 40 m, 45 m, 50 m, and 55 m from point E and numbered No. 1 to No. 5 in turn. The yellow velocimetry lines are laid along the direction of water flow at equal spacing.

Figure 11.

Ground calibration points and velocimetry lines in Panxi.

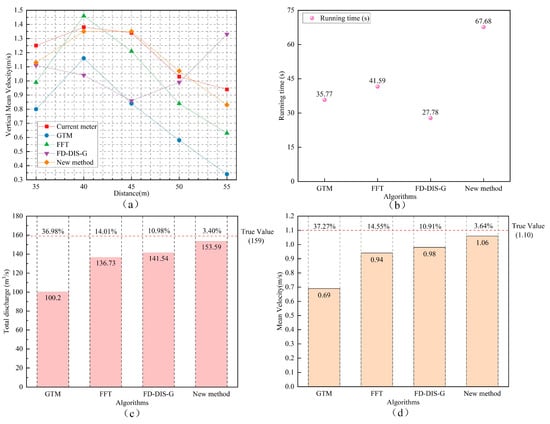

A Hikvision DS-2DC1225-I3/I5 camera, located 13.1 m, 4.5 m, 36.4 m, and 44.8 m from points A, B, C, and D, respectively, records a 15 s river video at 25 frames per second. Table 8 details the cross-section data and the measurements of the LS25-1 current meter. Table 9 presents the results of the comparison experiments conducted by the different methods over the same period, together with their errors relative to the LS25-1 reference values. Figure 12 compares the different methods.

Table 8.

Data of cross-section and the LS25-1 current meter in Panxi.

Table 9.

Comparison of measured results and errors of different methods in Panxi.

Figure 12.

The results and analysis of different methods. (a) Results of the vertical mean velocity; (b) comparison of running time; (c) results and errors of total discharge; (d) results and errors of mean velocity.

According to the experimental data, the discharge and mean velocity measured by the proposed method are 153.59 m3/s and 1.06 m/s, respectively. Relative to the current-meter true values, the absolute and relative errors are 5.41 m3/s and 3.40% for discharge and 0.04 m/s and 3.64% for mean velocity. Both are more accurate than the results of the GTM, FFT, and FD-DIS-G methods. As Figure 12a shows, the vertical mean velocities measured by GTM, FFT, and FD-DIS-G fluctuate considerably from point to point. Those of the new method agree closely with the current-meter results, with all relative errors within 12% (maximum 11.70%). At the three middle velocimetry points, the absolute errors of vertical mean velocity are 0.03 m/s, 0.01 m/s, and 0.04 m/s, with relative errors of 2.17%, 0.75%, and 3.88%, respectively. These are the smallest error fluctuations of the four methods, indicating superior overall performance. However, the velocimetry points near the banks are affected by the lens aberration of the camera, which makes their errors relatively large. Even so, their errors remain lower than those of the other three methods.

Figure 12b shows the running times of the four algorithms. The new method runs longer than the GTM, FFT, and FD-DIS-G methods. However, it provides more reliable measurement results, and the additional time is acceptable for monitoring at hydrological stations.

These two sets of experiments demonstrate that the proposed method is well suited to monitoring river velocity and discharge in both artificial and natural rivers and yields reliable measurements in complex natural environments. Further research and verification are required to establish its applicability under other river conditions.

The applicability of the proposed method is further verified at the Mengxing hydrological station in Lincang City, Yunnan Province. The riverbank is covered with vegetation and weeds, and signs and guardrails stand along the shore. During the measurement, a Hikvision DS-2DC1225-I3/I5 camera is installed 39.4 m, 35.8 m, 80.3 m, and 85.6 m from points A, B, C, and D, respectively, and records a 20 s river video at 25 frames per second. The bank velocity coefficient is 0.70, and the surface velocity coefficient is 0.90. Figure 13 shows the layout of ground calibration points A, B, C, and D and of the 10 velocimetry lines. EF is the cross-section line, with E as the starting point and F as the end point. The velocimetry points, numbered No. 1 to No. 10 and shown as solid yellow dots, are spaced 4 m apart. Table 10 presents the cross-section data and the true values obtained from the LS25-3A current meter at the velocimetry points. Table 11 compares the measured results and errors of several methods, including the proposed one.

Figure 13.

Ground calibration points and velocimetry lines in Mengxing.

Table 10.

Data of cross-section and the LS25-3A current meter in Mengxing.

Table 11.

Comparison of measured results and errors of different methods in Mengxing.

The proposed method gives the most precise results. The measured total discharge is 86.11 m3/s with a relative error of 2.37%, which is 10.31 and 6.14 percentage points lower than the errors of GTM and FFT, respectively. For mean velocity, the relative error of the proposed method is only 1.87%, which is 10.28 and 6.54 percentage points lower than the errors of GTM and FFT, respectively. This represents a substantial improvement in accuracy and stability. The error of vertical mean velocity at the last velocimetry point reaches 104.76%. This point is far from the lens and the flow field near the bank is chaotic, so the space-time images generated there contain more noise and less effective texture information. The relative errors at all remaining points stay within 28%. Although GTM and FFT yield lower vertical mean velocity errors than the proposed method at certain points, their overall performance is poorer. Taken together, the three sets of experiments indicate that the proposed method achieves reliable and stable measurements, which could support wider adoption of STIV for velocity monitoring.

4. Conclusions

This study proposes a river velocity and discharge measurement method based on an improved ShuffleNetV2. The method detects the river texture angle by image classification without additional image processing. It strengthens image-information extraction in complex river scenes by adjusting and optimizing the network structure and introducing the BAM attention mechanism. This addresses the limited accuracy and robustness of traditional STIV in complex, noisy environments. On the dataset, the improved ShuffleNetV2 achieves a Top-1 accuracy of 64.69% and a Top-5 accuracy of 90.56%. In all three sets of experiments, the discharge and mean velocity measured by the proposed method are the closest to the current-meter true values. In the artificial river at Agu, the relative errors of discharge and mean velocity are 2.20% and 1.47%, respectively. In the natural rivers at Panxi and Mengxing, the relative errors of discharge are 3.40% and 2.37%, and those of mean velocity are 3.64% and 1.87%, respectively. Compared with the existing GTM, FFT, and FD-DIS-G methods, the proposed method is more accurate and stable, which confirms the potential of deep learning in the hydrological field. It provides a new technical means and promotes the construction and development of intelligent hydrology.
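Top-1 and Top-5 accuracy count a prediction as correct when the true class is the highest-scoring class, or is among the five highest-scoring classes. For a texture-angle classifier, a Top-5 hit therefore means that the true angle class is among the five most likely classes, and Top-k accuracy can only grow with k. The minimal sketch below uses hypothetical scores and class indices, not the paper's angle bins.

```python
from typing import Sequence


def top_k_accuracy(scores: Sequence[Sequence[float]], labels: Sequence[int], k: int) -> float:
    """Fraction of samples whose true class is among the k highest-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        # Indices of the k largest scores; ties are broken by the lower class index.
        top_k = sorted(range(len(row)), key=lambda j: (-row[j], j))[:k]
        hits += label in top_k
    return hits / len(labels)


# Hypothetical 3-class scores for 3 samples.
scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
labels = [1, 1, 0]
print(top_k_accuracy(scores, labels, 1), top_k_accuracy(scores, labels, 2))
```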

However, the proposed method still has some limitations in practical application. Future research should collect more diverse river video data covering different geographical features and climatic conditions and expand the scope and variety of the dataset. It should also conduct validation experiments in more river environments to further improve the generalization ability and adaptability of the model. In addition, only rivers with turbid water and flow velocities of about 1 m/s have been studied so far. Future work could apply the method to faster flows or clearer water to test whether its advantages still hold.

Author Contributions

Conceptualization, R.L. and J.W.; methodology, R.L.; validation, R.L., J.J. and J.W.; formal analysis, R.L.; investigation, R.L.; resources, D.H., N.L. and X.P.; writing—original draft preparation, R.L.; writing—review and editing, R.L.; visualization, R.L.; supervision, J.J. and J.W.; project administration, J.J. and J.W.; funding acquisition, J.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Natural Science Foundation of China (No. 62363017) and ‘Yunnan Xingdian Talents Support Plan’ Project (No. KKXY202203006).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data supporting the findings of this study can be found within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Ju, Z. Research on River Velocity Measurement Based on Video and Image Recognition. Master’s Thesis, Zhejiang University of Technology, Hangzhou, China, 2018.

2. Kimiaghalam, N.; Goharrokhi, M.; Clark, S.P. Assessment of wide river characteristics using an acoustic Doppler current profiler. J. Hydrol. Eng. 2016, 21, 06016012.

3. Xu, L.; Zhang, Z.; Yan, X.; Wang, H.; Wang, X. Advances of Non-contact Instruments and Techniques for Open-channel Flow Measurements. Water Resour. Inform. 2013, 3, 37–44.

4. Yang, D.; Shao, G.; Hu, W.; Liu, G.; Liang, J.; Wang, H.; Xu, C. Review of image-based river surface velocimetry research. J. Zhejiang Univ. Sci. 2021, 55, 1752–1763.

5. Adrian, R.J. Scattering particle characteristics and their effect on pulsed laser measurements of fluid flow: Speckle velocimetry vs particle image velocimetry. Appl. Opt. 1984, 23, 1690–1691.

6. Fujita, I.; Muste, M.; Kruger, A. Large-scale particle image velocimetry for flow analysis in hydraulic engineering applications. J. Hydraul. Res. 1998, 36, 397–414.

7. Khalid, M.; Pénard, L.; Mémin, E. Optical flow for image-based river velocity estimation. Flow Meas. Instrum. 2019, 65, 110–121.

8. Fujita, I.; Tsubaki, R. A Novel Free-Surface Velocity Measurement Method Using Spatio-Temporal Images. In Proceedings of the 2002 Hydraulic Measurements and Experimental Method Specialty Conference, Estes Park, CO, USA, 28 July–1 August 2002; pp. 1–7.

9. Fujita, I.; Watanabe, H.; Tsubaki, R. Development of a non-intrusive and efficient flow monitoring technique: The space-time image velocimetry (STIV). Int. J. River Basin Manag. 2007, 5, 105–114.

10. Fujita, I.; Notoya, Y.; Tani, K.; Tateguchi, S. Efficient and accurate estimation of water surface velocity in STIV. Environ. Fluid Mech. 2019, 19, 1363–1378.

11. Zhen, Z.; Huabao, L.; Yang, Z.; Jian, H. Design and evaluation of an FFT-based space-time image velocimetry (STIV) for time-averaged velocity measurement. In Proceedings of the 2019 14th IEEE International Conference on Electronic Measurement & Instruments, Changsha, China, 1–3 November 2019; pp. 503–514.

12. Zhao, H.; Chen, H.; Liu, B.; Liu, W.; Xu, C.Y.; Guo, S.; Wang, J. An improvement of the Space-Time Image Velocimetry combined with a new denoising method for estimating river discharge. Flow Meas. Instrum. 2021, 77, 101864.

13. Lu, J.; Yang, X.; Wang, J. Velocity Vector Estimation of Two-Dimensional Flow Field Based on STIV. Sensors 2023, 23, 955.

14. Yuan, Y.; Che, G.; Wang, C.; Yang, X.; Wang, J. River video flow measurement algorithm with space-time image fusion of object tracking and statistical characteristics. Meas. Sci. Technol. 2024, 35, 055301.

15. Watanabe, K.; Fujita, I.; Iguchi, M.; Hasegawa, M. Improving Accuracy and Robustness of Space-Time Image Velocimetry (STIV) with Deep Learning. Water 2021, 13, 2079.

16. Li, H.; Zhang, Z.; Chen, L.; Meng, J.; Sun, Y.; Cui, W. Surface space-time image velocimetry of river based on residual network. J. Hohai Univ. 2023, 51, 118–128.

17. Huang, Y.; Chen, H.; Huang, K.; Chen, M.; Wang, J.; Liu, B. Optimization of Space-Time image velocimetry based on deep residual learning. Measurement 2024, 232, 114688.

18. Zhang, Z.; Li, H.; Yuan, Z.; Dong, R.; Wang, J. Sensitivity analysis of image filter for space-time image velocimetry in frequency domain. Chin. J. Sci. Instrum. 2022, 43, 43–53.

19. Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. ShuffleNetV2: Practical Guidelines for Efficient CNN Architecture Design. In Proceedings of the 15th European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 122–138.

20. Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6848–6856.

21. Park, J.; Woo, S.; Lee, J.Y.; Kweon, I.S. BAM: Bottleneck Attention Module. arXiv 2018, arXiv:1807.06514.

22. GB 50179-2015; Code for Liquid Flow Measurement in Open Channels. Beijing China Planning Publishing House: Beijing, China, 2015. (In Chinese)

23. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.

24. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

25. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

26. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

27. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

28. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

29. Tan, M.; Le, Q. EfficientNetV2: Smaller models and faster training. In Proceedings of the 38th International Conference on Machine Learning, PMLR, Virtual, 18–24 July 2021; pp. 10096–10106.

30. Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More features from cheap operations. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1580–1589.

31. Wang, J.; Zhu, R.; Zhang, G.; He, X.; Cai, R. Image Flow Measurement Based on the Combination of Frame Difference and Fast and Dense Optical Flow. Adv. Eng. Sci. 2022, 54, 195–207.

32. Kroeger, T.; Timofte, R.; Dai, D.; Van Gool, L. Fast optical flow using dense inverse search. In Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 471–488.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).