1. Introduction

Real-world engineering optimization problems often involve many conflicting objectives and some constraints [

1]. A constrained multi-objective optimization problem (CMOP) with inequality constraints can be expressed as:

where

is a d-dimensional decision variable,

is the objective function to be optimized, and

is the first

inequality constraint. For CMOP, constraint violation (CV) can be computed in the following way:

A feasible solution refers to a solution whose CV value is less than or equal to 0. When a solution

outperforms solution

x on all objectives,

dominates

x. If

is not dominated by other solutions,

is called a non-dominated solution. The set of objective vectors formed by non-dominated solutions is called the constrained Pareto front. The goal of CMOP is to find the constrained Pareto front (CPF). There are a large number of constrained multi-objective problems in engineering problems in the real world. Some of these problems require expensive computational costs when being solved, such as fuel cell vehicle design [

2], wire manufacturing [

3], and space system design [

4]. These types of problem are called expensive constrained multi-objective problems.

The ECMOP requires the algorithm to find the optimal solution under limited computational resources. However, the feasible domain is extremely narrow in MW1, which makes it difficult for the algorithm to find the feasible domain quickly. Meanwhile, the feasible domain of the problem is very broad for CF1 if the computational resources are heavily used in the constraint-value dominated search process, and the algorithm often falls into local convergence, making it difficult to approach the Pareto frontier. Therefore, the algorithm must use different search strategies for different feasible domains under different feasible domain conditions. In the case that the feasible domain is narrow and difficult to find, the algorithm needs to find the feasible domain with as few evaluations as possible, and in the case that there are already enough individuals in the feasible domain, it will not obtain good results if it pays too much attention to the convergence and feasibility. However, due to the limitation of computational resources, it is difficult for the algorithm to search for the optimal solution in a large decision space, requiring the algorithm to converge quickly to find the feasible domain. On the other hand, the complexity of CPF makes it difficult to explore the feasible domain with a limited number of solutions, which requires the algorithm to have good diversity.

Evolutionary algorithms are widely used in constrained multi-objective problems due to their advantages, such as simple implementation and strong search ability [

5]. Constrained multi-objective evolutionary algorithms require using a large number of function evaluations to search the objective space to ensure the good performance of the algorithm. However, for ECMOPs, where the number of available function evaluations is limited, traditional constrained multi-objective evolutionary algorithms are not adept at generating solutions that meet the requirements [

6].

In order to solve such problems, many researchers have used surrogate models instead of real evaluation functions to produce suitable solutions within a limited number of evaluations; this method is called surrogate-assisted MOEAs (SA-MOEAs). SA-MOEAs have become a promising direction in recent years [

7] that has received increasing attention. Commonly used surrogate models in recent years include artificial neural networks and support vector machines [

8,

9], radial basis functions [

10], and Gaussian regression (Kriging) [

11]. The Kriging model is a stochastic model and, due to its good approximation ability to nonlinear functions with stochastic properties [

12], it is one of the most representative and potential surrogate modeling methods in the field of surrogate-assisted evolutionary algorithms [

13,

14]. Surrogate-assisted evolutionary algorithms guide the search process of the algorithm by using existing solutions that have been evaluated with real functions to build surrogate models to predict the performance of the unevaluated ones. The use of surrogate models allows the algorithm to explore the decision space more fully within the constraints of a limited number of real evaluations and greatly reduces the amount of computation due to the computationally inexpensive nature of surrogate models.

SA-MOEA needs to find the optimal solution under the limitation of only a few evaluation times. Therefore, the strategy of the evolutionary algorithm needs to be improved to adapt to SA-MOEA [

15]. The mainstream improvement directions can be divided into three types: offspring generation, surrogate model management, and fill sampling criteria. In terms of offspring generation, KTA2 [

16] uses an archived population to generate multiple sets of candidate solutions to ensure the diversity and convergence of the population. In order to meet the preference requirements within a limited number of evaluations, K-RVEA [

17] uses the angle penalty distance to balance the diversity and convergence of solutions in decision space and dynamically adjust the reference vector distribution simultaneously. In terms of surrogate model management. In order to improve the prediction accuracy of the surrogate model, MOEA/D-EGO [

18] adopts the idea based on decomposition and uses the MOEA/D algorithm to decompose the multi-objective problem into multiple single-objective problems and establishes a surrogate model separately for each single-objective problem. K-RVEA utilized the uncertain information of the Kriging model to design the training data selection strategy for the Kriging model in order to improve the performance of Kriging. In terms of the fill sampling criterion, CSEA [

8] uses the surrogate model to predict the dominant relationship between the candidate solution and the reference solution and selects the dominant solution for real function evaluation. ABSAEA [

19] uses two fill sampling criteria; one of them selects the non-inferior solution to enhance the convergence of the population, and the other selects the solution with the maximum uncertainty to enhance the diversity of the population.

For ECMOPs, another problem that SAMOEA needs to solve is how to optimize the objectives under the premise of meeting the constraint conditions [

20]. For SA-MOEA, predicting both the constraint values and the objective values simultaneously often leads to a large prediction error of the surrogate model [

21], especially when there is an overlap between the constrained Pareto front and the unconstrained Pareto front. However, not predicting the constraint values often leads to the algorithm being unable to find the feasible region and making it difficult to search for effective feasible solutions. Therefore, SA-MOEA needs to use appropriate constraint handling techniques to balance the optimization of objective values and the satisfaction of constraint conditions.

In ECMOPs, the feasible domain of the problem may be extremely small, and then the algorithm needs to accelerate the convergence to ensure that the feasible domain can be found with limited computational resources; however, existing algorithms such as KTS [

22] that only consider the correlation between the constraints and the objective values are unable to accelerate the convergence in this case. On the other hand, if the algorithm focuses too much on finding the feasible domain in the early stage, the algorithm may fall into localized convergence and fail to cross the feasible domain to converge to the CPF.

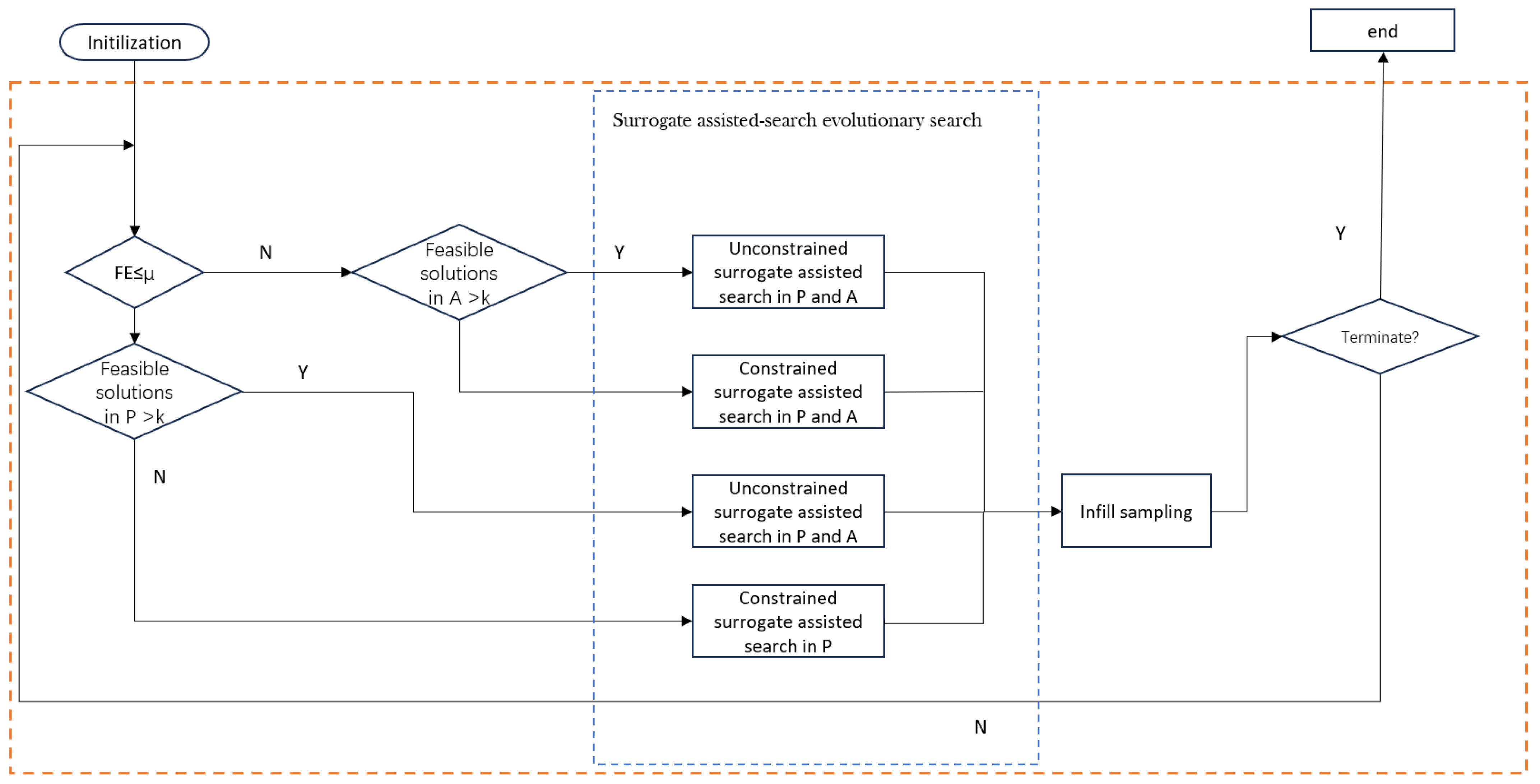

To solve the above problems, we propose a preference model-based surrogate-assisted constrained multi-objective evolutionary algorithm, which performs different offspring generation strategies based on the current difficulty of finding a feasible solution. In addition, a surrogate model management strategy is used to adjust the surrogate model evaluation strategy and the new offspring selection strategy according to the performance of the current surrogate model. Specifically, this paper’s contributions are as follows:

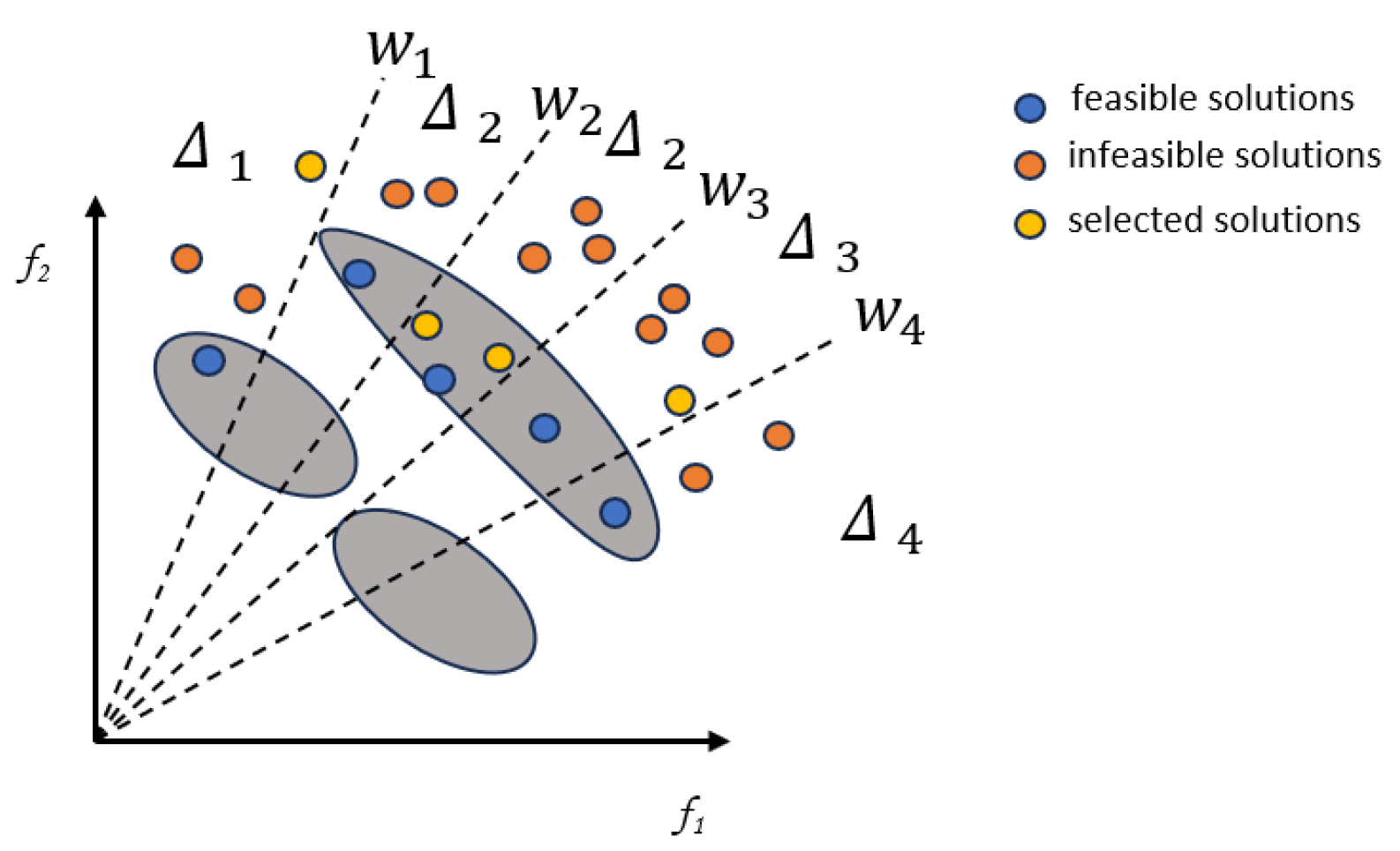

To meet the optimization requirements of the population at different stages, we propose a multi-preference search model based on evolutionary demands. In the early stage of evolution, the waste of computing resources is reduced by rapidly converging to the feasible region. In the middle stage of evolution, for the feasibility search requirements of the population, a feasible solution archive and constrained preference strategy are used to help the population cross infeasible regions. For the diversity requirements of the population, the objective value preference algorithm is used to help the population get rid of local optima. In the later stage, the model balances diversity and convergence demands to facilitate the exploration and exploitation of feasible regions.

In surrogate-assisted evolutionary algorithms, the accuracy of the surrogate model plays a crucial role in the algorithm’s performance. To better utilize the surrogate model for evaluating newly generated solutions, a strategy to modify the number of predictions of the surrogate model based on the prediction error of the current realistically evaluated solutions is proposed.

Target constrained Pareto frontier search and unconstrained Pareto frontier search address the different optimization needs of populations. Convergence to the feasible domain is accelerated by auxiliary populations when the main population lacks feasible solutions and is converted to objective-value dominated to converge across the feasible domain to the unconstrained Pareto front (UPF) when there are sufficient feasible solutions.

2. Preliminaries

2.1. Kriging

In this study, we use the Kriging model to approximate the objective function and constraint functions. As a typical surrogate model, Kriging can effectively fit complex functions and provide uncertainty estimates for the prediction results. The dynamic evaluation strategy relies on the prediction accuracy and uncertainty information of the Kriging model. By monitoring the model prediction errors (such as the mean square error and the feasibility misclassification rate), it dynamically adjusts the usage frequency of the surrogate model. When the model reliability is low, the number of surrogate evaluations is reduced to prevent it from misleading the search direction. When the reliability is high, the number of surrogate evaluations is increased to save computational resources. The mathematical model of the Kriging model can be expressed as:

where

is a uniformly distributed Gaussian noise and

and

are two constants independent of

x.

Given the collected data

and its corresponding output

, for an unevaluated point

, the estimated output

, the mean

, and the variance

are calculated as follows:

Among others,

X is the average vector of

X, the mean vector of

is the average vector of

X, and

is the vector of covariances between

K, where

K is the covariance matrix of

X. The covariance matrix of two data points is measured using the squared exponential function as the covariance function of

X and

. The similarity between the two data points

X and

is given by:

Predicting the mean

is directly used as

, the predicted value, and the predicted variance

represents the uncertainty. The hyperparameters in the covariance function can be obtained by maximizing the log-marginal likelihood function as follows:

2.2. Co-Evolution

In this study, we introduce the co-evolutionary algorithm into the multi-preference search mode based on evolutionary demand. Through the co-evolution of the main population and the auxiliary population, the overall optimization performance is enhanced. This collaborative mechanism enables the algorithm to dynamically balance feasibility and convergence at different stages of evolution. The cooperative co-evolutionary algorithm is an optimization method based on evolutionary algorithms. Its main aim is to decompose a complex problem into several relatively simple sub-problems, solve these sub-problems separately, and then combine their solutions to obtain the overall solution. During this process, the solutions of different sub-problems interact with each other through cooperation and competition, thereby optimizing the overall problem.

The basic steps of the co-evolutionary algorithm are as follows:

Problem decomposition: Decompose the original problem into multiple subproblems. These sub-problems should be relatively independent and can be solved relatively easily.

Individual representation: Designing a suitable individual representation for each subproblem, usually by encoding the solution of the subproblem as an individual.

Subproblem solving: Use evolutionary algorithms (e.g., Genetic Algorithm, Particle Swarm Algorithm, etc.) to solve each subproblem separately, and get the local optimal solution of each subproblem.

Merging and competition: Combining locally optimal solutions to individual subproblems and influencing each other competitively or collaboratively to further optimize those solutions.

Iterative optimization: The above steps are repeated until the stopping condition is reached (e.g., the maximum number of iterations is reached, the convergence condition is satisfied, etc.).

The advantage of the co-evolutionary algorithm is that it can deal with complex, high-dimensional problems and make full use of the structural information of the problem to improve the solution efficiency. It is often used in multi-objective optimization, large-scale optimization, and optimization problems with complex structures.

4. Experimental Setup

In this paper, to demonstrate the ability of SA-MPCMOEA to solve expensive constrained multi-objective problems, two test suites, CF and LIRCMOP, are used to compare SA-MPCMOEA with the current state-of-the-art algorithms. The number of decision variables is set to 10 for all test instances. It is assumed that the evaluation of their objectives and constraints is expensive. The performance of the algorithms is evaluated using IGD metrics

where

denotes the minimum Euclidean distance from

x to

and

denotes the number of points in

. A total of 10,000 reference points are used to compute the IGD values. Smaller IGD values indicate better convergence and diversity of the algorithm’s non-dominated set.

The hypervolume (HV) is a metric used to measure the volume of the objective space covered by a solution set relative to a reference point. A larger HV value indicates that the solution set obtained by the algorithm covers a wider range of the true Pareto front, demonstrating better solution quality and algorithm efficiency. The HV calculation method is shown in the following equation:

where

denotes the Lebesgue measure,

S represents the non-dominated solution set, and

represents the hypervolume formed by the reference point and the non-dominated solutions. In this paper, we adopt the nadir point method for reference point selection, which uses the maximum value of all objective functions as the reference point. The advantage of the HV indicator lies in its ability to be calculated without prior knowledge of the true Pareto front of test problems, making it particularly suitable for real-world applications.

To ensure the fairness of the experiments, the parameter settings for all compared algorithms are configured as follows:

All algorithms are independently executed 30 times.

The maximum number of real function evaluations for each algorithm is set to 1000.

The population size is uniformly set to 100 across all test problems.

4.1. Experiments Results

In this section, our algorithm SA-MPCMOEA is compared with the following state-of-the-art algorithms: The proposed SA-MPCMOEA was empirically compared with six state-of-the-art CMOEAs: CTAEA [

29], RVEAa [

30], ABSAEA, CCMO, and NSGA-II. to examine its performance.

All compared algorithms were implemented in MATLAB (R2023a) within the PlatEMO platform [

31] and executed on a computer equipped with a 13th Gen Intel Core i5-13400 2.50 GHz CPU and the Microsoft Windows 10 operating system.

CTAEA balances convergence, diversity, and feasibility by maintaining two collaborative archives: the convergence-oriented archive (CA) and the diversity-oriented archive (DA). By adaptively selecting individuals from these archives based on their distinct evolutionary states to generate offspring solutions, the algorithm achieves superior performance on constrained multi-objective optimization problems.

RVEAa employs a reference vector regeneration strategy to address constrained many-objective optimization problems with irregular PFs (Pareto Fronts) and utilizes the angle-penalized distance to maintain a balance between convergence and diversity.

ABSAEA employs an adaptive Bayesian surrogate-assisted evolutionary algorithm to address expensive multi-objective optimization problems, where the hyperparameters of the acquisition function are dynamically tuned to select candidate solutions for real function evaluations, thereby reducing the computational cost of evaluations.

CMOEAD ensures the diversity of solutions by performing niching operations on individuals within the population. Through the adaptive updating of reference points, the CMOEAD algorithm can simultaneously search for multiple Pareto-optimal points in a single run to achieve efficient exploration.

NSGA-II improves computational efficiency by utilizing fast non-dominated sorting and an enhanced dominance mechanism. Owing to its selection operator, which constructs the mating pool based on fitness and distribution characteristics, the algorithm achieves well-distributed solution sets across most optimization problems while demonstrating superior convergence performance near the true Pareto-optimal front in practical scenarios.

4.1.1. The Computational Complexity of SA-MPCMOEA

When using the Kriging model, the time complexity of the algorithm is mainly determined by the surrogate model training. The model training of Kriging requires the calculation of the inverse of the covariance matrix, and the time complexity is

, where

N is the population size. The independent modeling of each objective function increases the total training cost to

(

M is the dimension of the objective function). In each iteration, the surrogate prediction of the offspring individuals requires

time (traversing

M models, and the single prediction complexity of each model is

). The overall complexity is

, where

T is the number of iterations. As shown in

Table 1, SA-MPCMOEA is compared with other algorithms on CF test problems and LIRCMOP test problems, respectively. Using 1000 evaluations, each algorithm is independently run once.

4.1.2. Result on CF Test Suit

The comparative results of SA-MPCMOEA and other algorithms on the CF test problems [

32] are presented in

Table 2.

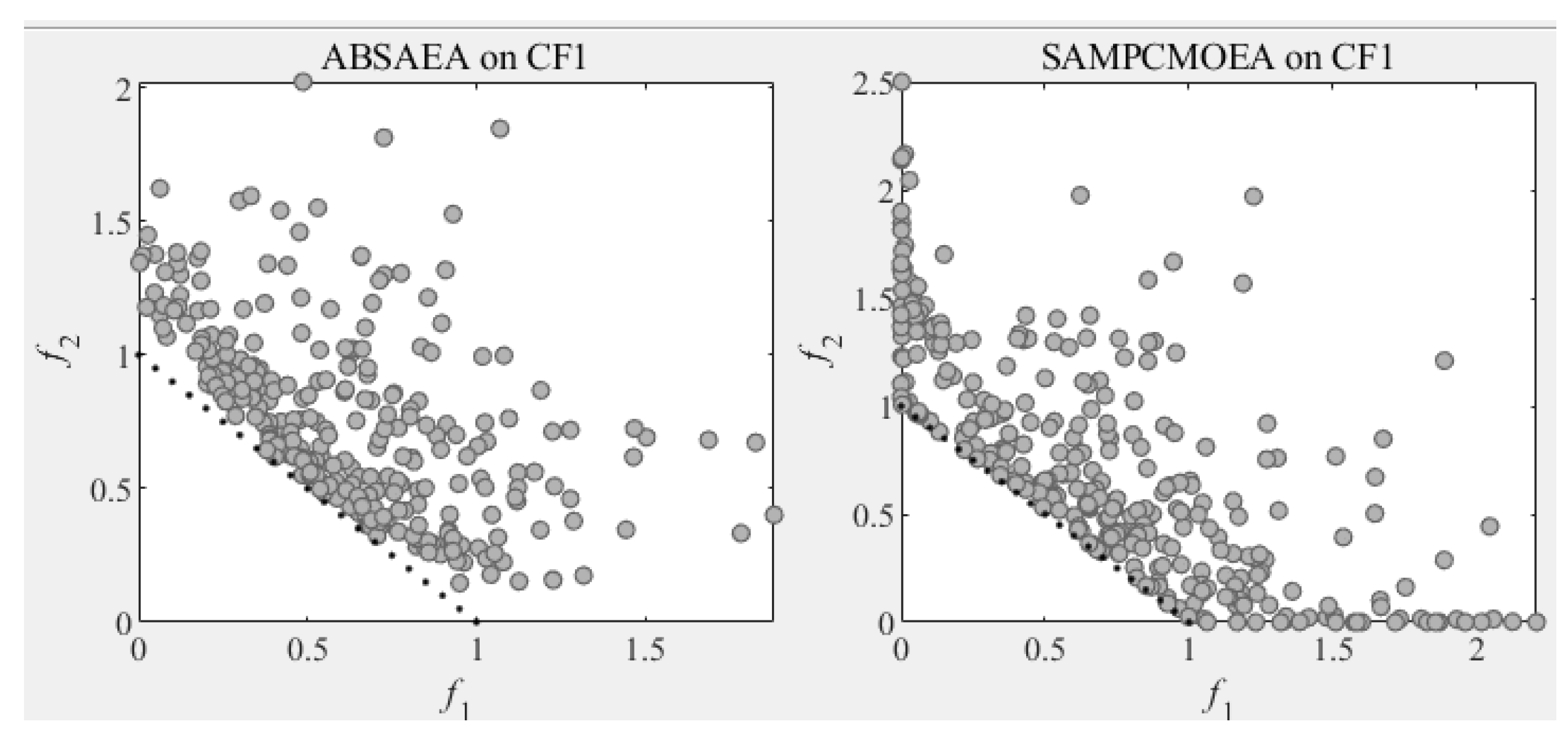

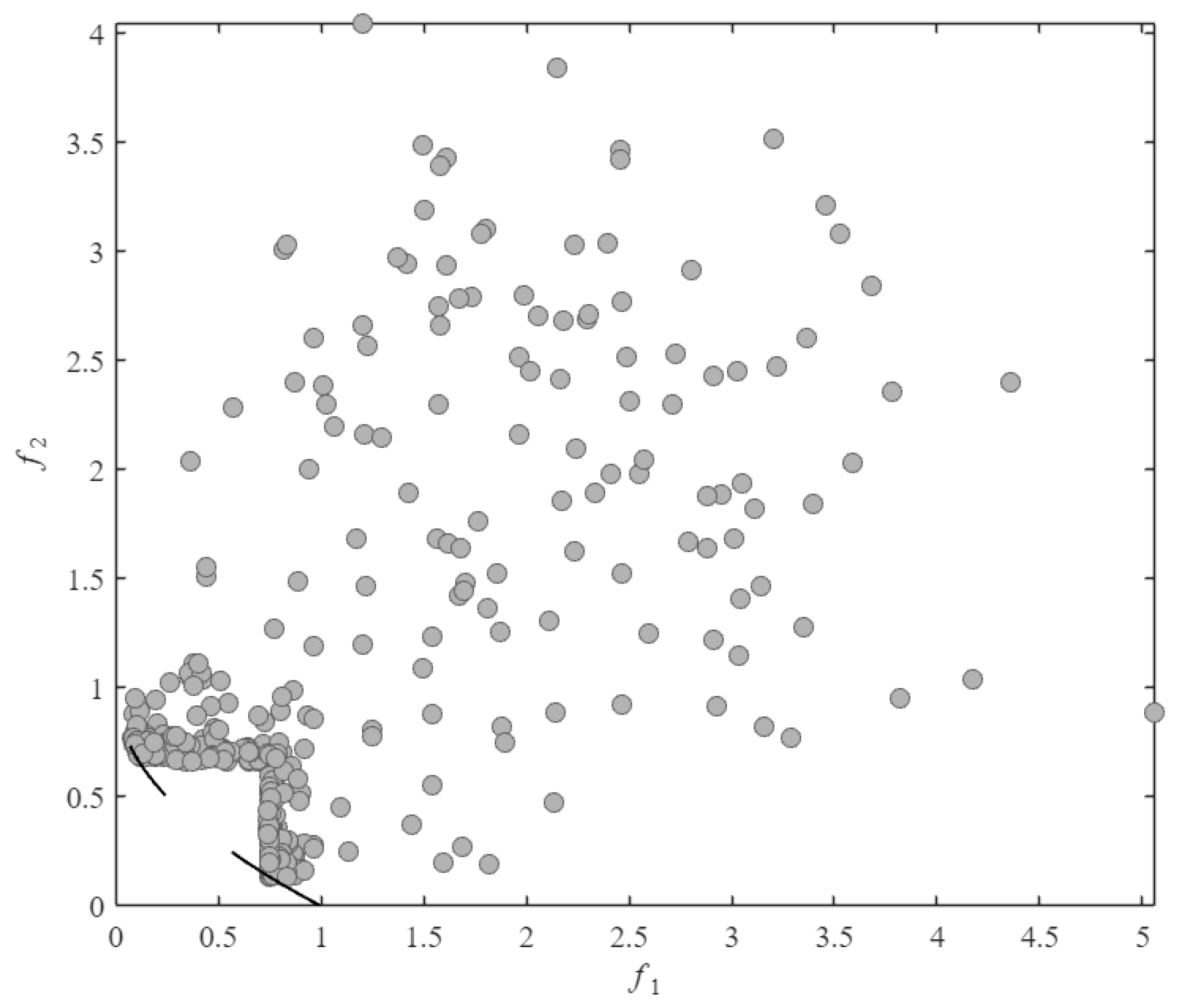

As shown in

Table 2, the SA-MPCMOEA algorithm demonstrates a significant advantage across the CF benchmark problems. Among all compared algorithms, SA-MPCMOEA is only outperformed by ABSAEA on three problems (CF1, CF3, and CF10) in terms of IGD values because the feasible region of the problem is relatively large in CF1, CF3, and CF10. SA-MPCMOEA starts to focus on the diversity of the population after finding a sufficient number of feasible solutions, as shown in

Figure 3, while maintaining superiority or equivalent performance on the remaining seven problems. Notably, SA-MPCMOEA exhibits exceptional performance on complex constrained problems (CF2, CF4, CF6, and CF7), particularly in the equality-constrained problem CF7, where it achieves the best results, highlighting its robust constraint-handling capability. In high-dimensional problems (CF8, CF9, and CF10), the infeasible regions disrupt the feasible domains, leading to the inferior performance of other algorithms compared to SA-MPCMOEA and ABSAEA. Benefiting from the angle-based convergence model for feasible domains, SA-MPCMOEA efficiently traverses complex and vast search spaces, rapidly crossing infeasible regions to locate feasible solutions.

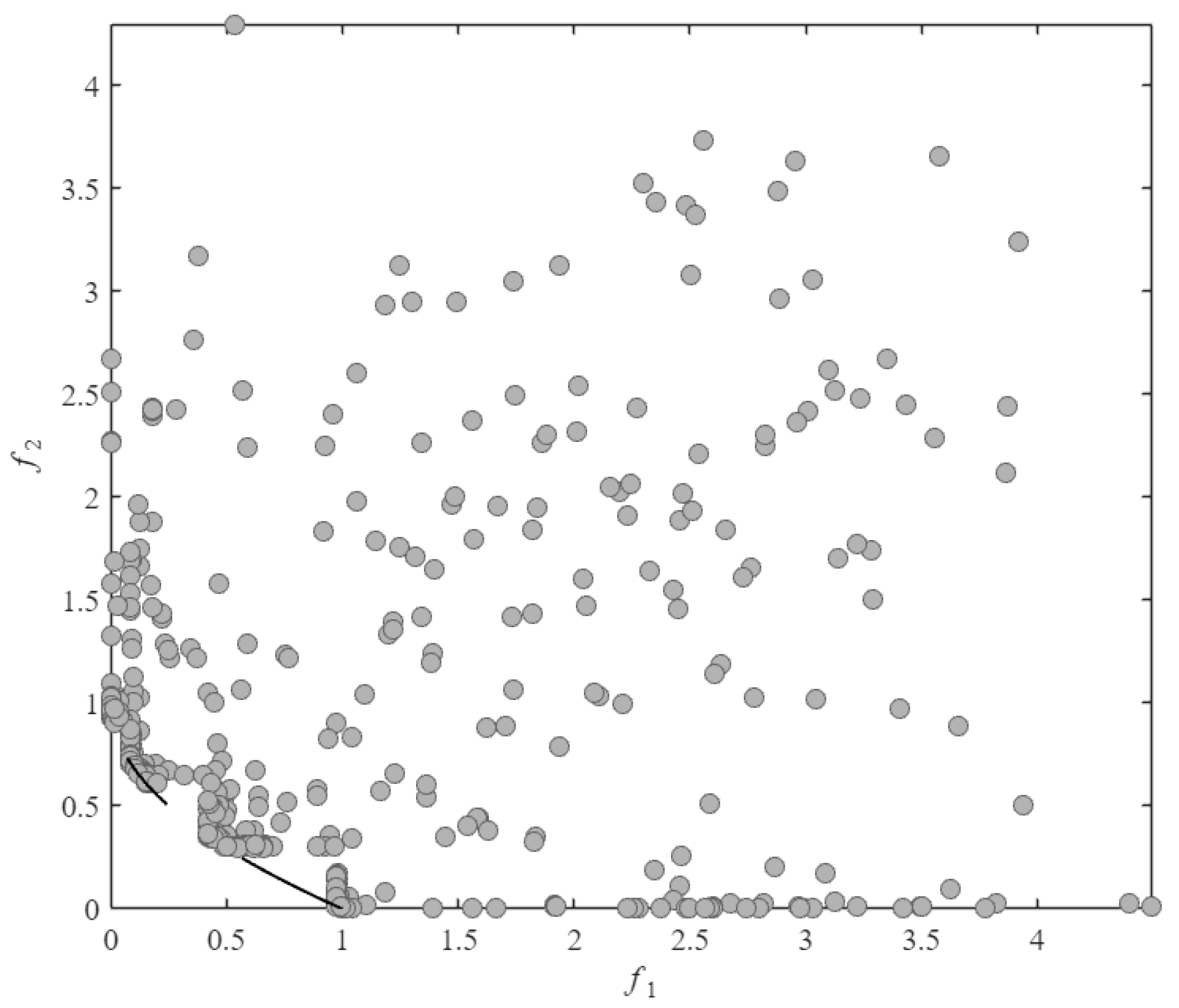

As observed in

Figure 4 and

Figure 5, SA-MPCMOEA effectively prevents population convergence toward CPF gap regions through its constraint-dominated exploration mechanism when handling problems with a discontinuous constrained Pareto front. Compared to ABSAEA, SA-MPCMOEA achieves more uniform distribution along the CPF, demonstrating superior diversity preservation capabilities in maintaining solution spread.

4.1.3. Result on LIRCMOP Test Suit

The comparative results of SA-MPCMOEA and other algorithms on the LIRCMOP [

33] high-dimensional complex constrained multi-objective optimization problems are summarized in

Table 3. As shown in LIRCMOP1–LIRCMOP4, where feasible regions are extremely limited, all compared algorithms fail to converge due to their inability to traverse infeasible regions before exhausting computational resources. In contrast, SA-MPCMOEA successfully obtains feasible solutions by leveraging its dynamic assessment strategy, which enables the surrogate model to efficiently screen candidate solutions and prioritize promising regions, thereby minimizing unnecessary real function evaluations and validating the robustness of its constraint-handling mechanism in extreme scenarios. For more complex problems (LIRCMOP5–LIRCMOP12), SA-MPCMOEA significantly outperforms conventional algorithms in most cases, although it slightly underperforms RVEAa on LIRCMOP5 and LIRCMOP6. These results highlight SA-MPCMOEA’s superior search efficiency in high-dimensional discontinuous feasible domains, particularly in balancing exploration–exploitation trade-offs under severe constraint conflicts.

4.1.4. Ablation Experiments

To verify the proposed methods in SA-MPCMOEA, this paper conducted ablation experiments for verification. The verification methods were divided into four algorithms: the SA-MPCMMOEA algorithm proposed in this paper; SA-MPCMOEA/MP without multi-preference modeling; SA-MPCMOEA/MP, which uses tournament selection [

34] to generate offsprings; SA-MPCMOEA/FS without fill sampling strategy, which uses non-dominated sorting to select non-dominated candidate solutions for real function evaluation; SA-MPCMOEA/DA without dynamic assessment strategy, which uses a fixed

of 20.

The four methods were run ten times independently on the CF test suit using the same random seeds. As shown in

Table 4, among the 24 problems, SA-MPCMOEA achieved the optimal value or a value close to the optimal value.

In the experiments, SA-MPCMOEA/MP performed the worst because the control of search direction in ECMOPs has an important impact on the algorithm’s performance, generating offspring without considering the current evolutionary needs of the population, which leads to a waste of computational resources.

In the experiments, the performance of SA-MPCMOEA is better than that of SA-MPCMOEA/FS in 18 problems and is similar in 6 problems. The fill sampling strategy is responsible for selecting candidate solutions for real function evaluation. A good fill sampling strategy needs to select candidate solutions that can optimize the surrogate model and guide the population search. The fill sampling strategy based on optimization requirements effectively selects solutions with the greatest potential for evaluation, avoiding the population from getting trapped in local optima.

In the experiments, SA-MPCMOEA outperformed SA-MPCMOEA/DA in 16 problems, and the performance was similar in 6 problems. In surrogate-assisted evolutionary algorithms, a larger number of surrogate model evaluations means generating more candidate solutions to explore the decision space. However, as the number of surrogate model evaluations increases, the error of the approximate objective values obtained by evaluating the newly generated candidate solutions through the surrogate model also increases, which may mislead the population to evolve in the wrong direction.

4.1.5. Parameter Sensitivity Analysis Experiments

There are three parameters in SA-MPCMOEA: the threshold adjustment parameter (), the threshold number of feasible solutions in Populations P and A (k), and the scaling factor (). Different parameter values may affect the performance of SA-MPCMOEA. Therefore, we conduct experiments in the CF test suit to analyze the parameter sensitivity of SA-MPCMOEA.

In order to test the impact of different values on the algorithm performance, we set to 0.2, 0.25, 0.3, 0.35, and 0.4 respectively, with k = 30 and = 20.

As shown in

Table 5, the performance is best when

= 0.25, and the worst when

= 0.4. This may be because, when

= 0.4, the algorithm uses too many computational resources to meet the convergence condition, causing the algorithm to be prone to getting trapped in local optima.

After that, we adjust k to verify the influence of the threshold of the number of feasible solutions in the population. k is set to 10, 20, 30, 40, and 50, respectively, with

= 0.25 and

= 20. It can be seen in

Table 6 that different k values will have an impact on the performance of SA-MPCMOEA. This may be because, when the feasible solution threshold k is too large, the algorithm fails to enter search mode 3 to explore the feasible region.

Finally, we analyze the influence of the value of the scaling factor

on the SA-MPCMOEA; we set

to 10, 15, 20, 25, 30 respectively, with

= 0.25 and k = 20. The value of

controls the maximum number of evaluations that the surrogate model can use and affects the number of offspring solutions generated. As can be seen from

Table 7, the effect is best when the value of

is 20. This may be because, at this time, the algorithm can generate as many offspring solutions as possible under the condition of a relatively high surrogate model accuracy. When

= 30, the performance of the algorithm begins to decline. This may be because the algorithm generates offspring solutions when the surrogate model has poor accuracy, which misleads the filling sampling strategy.

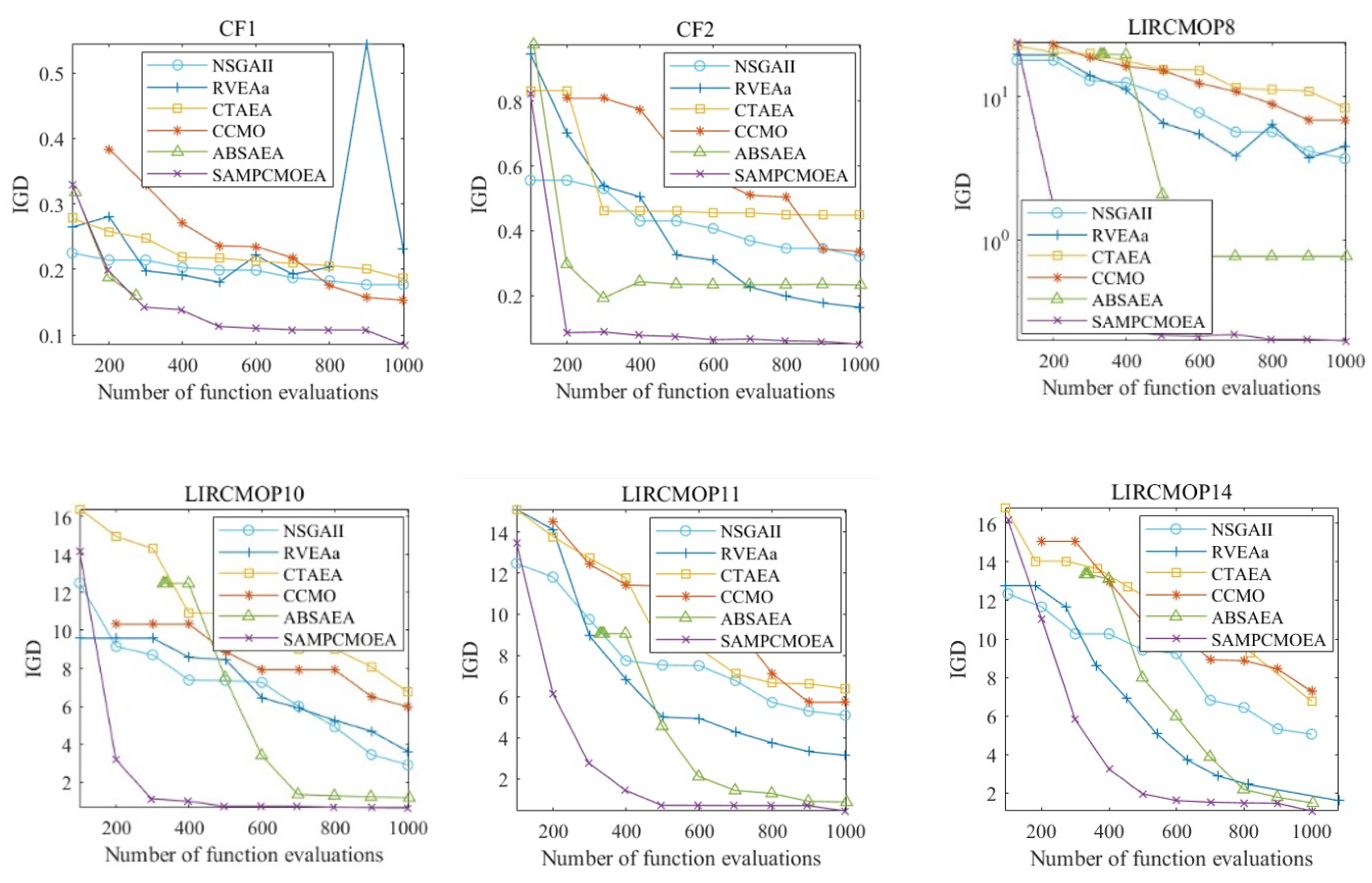

4.1.6. IGD Value Convergence Experiment

In order to demonstrate the convergence curves of the SA-MPCMOEA algorithm compared to other algorithms in terms of the IGD value, we conducted a convergence analysis in CF1, CF2, LIRCMOP8, LIRCMOP10, LIRCMOP11, and LIRCMOP14, respectively. For each test problem, we selected 6 values from 1000 function evaluations to show the convergence variation of the algorithm.

As can be seen in

Figure 6, SA-MPCMOEA converges faster than other algorithms when in search mode 1, which demonstrates the effectiveness of the angle-based convergence model. This is mainly because, given limited computational resources, there are fewer individuals available to explore the feasible region. If the algorithm cannot converge quickly to reduce the search range, the population will spend some computational resources on ineffective exploration.

4.1.7. Real-World Case Study

In order to verify the ability of SA-MPCMOEA to solve practical application problems, we conduct verification respectively in RW1 (Pressure Vessel Design), RW2 (Simply Supported I-beam Design), RW3 (Cantilever Beam Design), RW4 (Crash Energy Management for High-speed Trains), and RW5 (Water Resources Management). The mathematical expressions of these five engineering design problems can be found in [

35]. In each problem, it is necessary to find the minimum value of different objective functions.

From

Table 8, it can be seen that SA-MPCMOEA has achieved the optimal solution or near-optimal solution in all practical engineering problems, demonstrating its applicability in solving practical engineering problems.