Abstract

Numerous methods have been developed to address various problems, each with its own advantages and limitations. To overcome these limitations, hybrid structures that integrate multiple techniques have emerged as effective computational methods, often offering better performance and efficiency than single-method solutions. In this paper, we introduce a baseline method that combines the strengths of fuzzy logic, wavelet theory, and kernel-based extreme learning machines to classify facial expressions efficiently. We call this method the Fuzzy Wavelet Mexican Hat Kernel Extreme Learning Machine (FWMHKELM). To evaluate the classification performance of this mathematically defined hybrid method, we apply it to both an original dataset and the JAFFE dataset, combining it with different feature extraction methods. On the JAFFE dataset, the algorithm achieved an average classification accuracy of 94.55% with local binary patterns and 94.27% with histograms of oriented gradients, outperforming previous studies conducted on the same dataset. On the original dataset, the proposed method was compared with an extreme learning machine and a wavelet neural network and achieved higher accuracy, precision, and recall than both, at the cost of a longer training time.

1. Introduction

Data have become the cornerstone of modern research and are available in a rapidly expanding range of forms. Communication and body language, for example, are important sources of data. Nonverbal communication, defined as the transfer of information without the use of words, accounts for about 93% of overall communication: facial expressions, body movements, and the perception of distance account for 55%, and tone of voice for 38%. Facial expressions provide important information about people’s emotions, thoughts, and states of mind, and play a vital role in human-to-human communication. The hundreds of variations in facial expressions complicate accurate identification and classification; research has shown that humans can make around 250,000 different expressions with their faces. Facial expression identification and classification was initially a research topic in psychology. In 1978, researchers first used image sets as inputs to analyze facial expressions, bringing the problem into the field of image processing [1]. Since then, technological advances have enabled successful progress in face detection, tracking, and recognition, and work on analyzing facial expressions has accelerated [2]. More recently, soft computing methods, including neural networks, fuzzy logic, and wavelet structures, have been applied to categorize facial expressions [3,4,5]. For example, Sahoolizadeh et al. proposed a robust Gabor wavelet-based approach for facial gesture recognition and face identification that reduces dimensionality and improves discriminative capability in combination with linear discriminant analysis. In their experiments, the proposed Gabor wavelet approach achieved a maximum correct recognition rate of 93% on the ORL dataset without any preprocessing [6].
Zong and Huang showed that the kernel ELM, an extreme learning machine based on least-squares solutions, can be implemented with kernels for face recognition problems, and they compared its performance with that of traditional least-squares support vector machines on a face recognition dataset [7]. Abidin and Alamsyah classified facial expression images into seven classes using backpropagation neural networks (BPNNs) as classifiers. They applied three wavelet transforms (Haar, Daubechies, and Coiflet) for feature extraction and tested their method on the JAFFE database with promising results [8]. Mahmud and Maun presented an ELM-based facial expression recognition (FER) model. In their approach, the face image is first captured, and salient facial features such as the eyes, mouth, and nose are then detected. These feature segments are converted into feature vectors through morphological image processing and edge detection, and an ELM classifies the facial expressions into six basic categories on the JAFFE database [9]. Sailaja and Deepthi used Log Gabor wavelet filters for feature extraction because the wavelet structure is “sensitive to changes in light and variations in pose”, and used an SVM for classification on the JAFFE database [10]. Kar et al. conducted extensive experiments on two reference datasets, JAFFE and Cohn-Kanade Extended (CK+). Their methodology, used as the classifier of an FER system, integrates a meta-heuristic optimization algorithm (the Whale Optimization Algorithm) and a relatively new decomposition algorithm, Variational Mode Decomposition, with a KELM [11]. Padmanabhan and Kanchikere proposed a KELM optimized with an adaptive neuro-fuzzy inference system (ANFIS) for an efficient face recognition (FR) system. They compared the hybrid technique with existing methods in terms of several metrics (precision, sensitivity, etc.) and showed that the proposed FR system produced more efficient results [12].

Undoubtedly, each of these methods has both strengths and weaknesses. Therefore, recent studies have proposed more efficient hybrid algorithms that combine their strengths and compensate for their weaknesses. For example, Takagi and Sugeno integrated neural networks with a fuzzy structure to solve complex problems with uncertain parameters more efficiently [13]. Ho, Zhang, and Xu created an algorithm called the Fuzzy Wavelet Neural Network (FWNN), which aims to exploit the time–frequency and multiresolution properties of the wavelet structure and its capacity to independently stop a set of responses [14]. Huang, Zhu, and Siew introduced a single-hidden-layer feed-forward (FF) neural network, called the extreme learning machine (ELM), to overcome problems of classical neural networks such as long computation times and becoming stuck at local optima [16], and in ‘Extreme Learning Machine: RBF Network Case’, Huang and Siew extended the ELM structure to the radial basis function (RBF) network case [15]. Pati and Krishnaprasad proposed the wavelet neural network (WNN) structure, integrating wavelets and neural networks, to overcome the tendency of backpropagation to converge to local minima when a sigmoidal activation function is used [17,18]. Subasi et al. integrated WNNs with a feed-forward error backpropagation artificial neural network (FEBANN) and used the algorithm as a classifier [19]. Yilmaz and Oysal introduced an FWNN model by replacing the THEN part of the Takagi–Sugeno–Kang fuzzy rule set with a wavelet function [20]. Finally, Golestaneh, Zekri, and Sheikholeslam combined a fuzzy wavelet structure with ELM methods to reduce network complexity, introducing the fuzzy wavelet extreme learning machine (FW-ELM) framework [21].

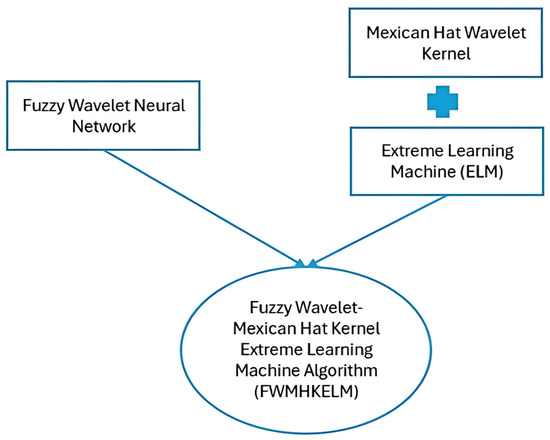

Considering all the above, in this study we propose a hybrid baseline algorithm based on fuzzy logic, wavelet structures, and kernel-based extreme learning machines (KELMs) for facial expression classification. The hybrid approach combines a fuzzy wavelet network and a KELM to form a simple network structure, improve accuracy, and mitigate the effect of parameters randomly assigned by the researcher. The resulting structure, named FWMHKELM, is presented in Figure 1.

Figure 1.

Schematic representation of the proposed hybrid model.

The main motivation of this paper is to construct a hybrid baseline algorithm that provides robust results for facial expression classification problems. Unlike iterative learning algorithms, our hybrid approach determines the network's learning parameters analytically and captures diverse network behaviors in the learning function by using wavelets with different parameters in each fuzzy rule. Finally, this study contributes a new facial expression dataset to the literature.

The rest of the paper is organized as follows. In Section 2, we briefly recall the KELM and FWNN algorithms and then present the mathematical structure of our hybrid approach. In this context, we show the equivalence of a KELM and an FW model, using the theorem that the Mexican hat wavelet is an admissible ELM kernel. In the hybrid algorithm, all fuzzy rules have an IF-THEN structure, with the sigmoid activation function used in the IF part and the Mexican hat wavelet kernel function in the THEN part. In Section 3, we present the experiments designed to evaluate the hybrid algorithm, the datasets used, the experimental results, and comparisons with classical baseline methods. Section 4 discusses the findings, and Section 5 gives recommendations for future studies.

2. Methods

In this section, we give a brief overview of the FWNN and kernel ELM methods. In the final subsection, we integrate a Mexican hat wavelet kernel-based ELM into a fuzzy wavelet structure, which is possible because the Mexican hat (MH) wavelet is an admissible ELM kernel according to the translation-invariant kernel theorem.

2.1. Kernel-Based ELM

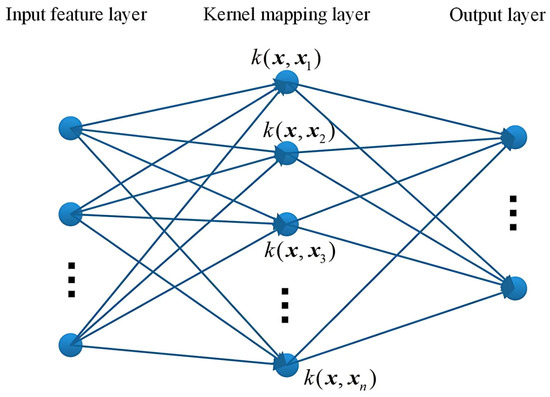

The ELM was introduced to train single-hidden-layer (HL) feed-forward neural networks (SLFNs), one of the most widely used artificial neural network structures. A traditional SLFN consists of three layers: the input, hidden, and output layers. The goal of an ELM is to minimize both the training error and the norm of the output weights. To achieve this, an ELM randomly assigns the input weights and biases and then computes the output weights analytically using the Moore–Penrose generalized inverse [22]. The weakness of the classical ELM model is that its randomly assigned weights lead to different accuracies across trials. To eliminate this inconvenience, the kernel-based ELM replaces the random mapping between the input and hidden layers with a kernel function [23]. A brief overview of the KELM model follows; its structure is shown in Figure 2.

Figure 2.

A structural representation of the kernel extreme learning machine (KELM).

The mathematical framework of an ELM can be summarized as in Equation (1) [24]:

where t is the target (desired output) vector, x is the input feature vector, w is the weight vector between the input layer (IL) and the hidden layer (HL), b is the bias, N is the number of hidden nodes, and K is the number of training samples. Under ideal conditions, the network fits the training samples with zero error, that is, the difference between the target value (t) and the model output (o) is zero [23].

In this case, Equation (1) can be rewritten as Equation (2):

As a result, Equation (2) can be written compactly as Equation (3).

Since Equation (3) is a linear system, its solution is obtained with the Moore–Penrose generalized inverse H† of the hidden-layer output matrix H.
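The ELM equations referred to above are not reproduced in this version of the text. For reference, the standard formulation from the ELM literature, consistent with the symbols defined above, is restated here in order: network output, zero-error condition, matrix form, and pseudoinverse solution. The symbols β (output weights), g (hidden-layer activation), H (hidden-layer output matrix), and T (target matrix) are our notation and are assumed, not taken from the original equations.

```latex
\begin{aligned}
\mathbf{o}_j &= \sum_{i=1}^{N} \boldsymbol{\beta}_i\, g(\mathbf{w}_i \cdot \mathbf{x}_j + b_i), && j = 1, \dots, K \\
\mathbf{t}_j &= \sum_{i=1}^{N} \boldsymbol{\beta}_i\, g(\mathbf{w}_i \cdot \mathbf{x}_j + b_i), && j = 1, \dots, K \\
\mathbf{H}\boldsymbol{\beta} &= \mathbf{T}, && H_{ji} = g(\mathbf{w}_i \cdot \mathbf{x}_j + b_i),\ \mathbf{H} \in \mathbb{R}^{K \times N} \\
\hat{\boldsymbol{\beta}} &= \mathbf{H}^{\dagger}\mathbf{T}
\end{aligned}
```

Because H† is the Moore–Penrose generalized inverse, β̂ is the minimum-norm least-squares solution of Hβ = T, which is how an ELM minimizes both the training error and the output weight norm.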

In a KELM, the kernel maps the data from the input layer to the hidden layer, and the output weights are computed by an orthogonal projection. Applying Mercer’s condition, the kernel matrix of the KELM is defined as follows [25]:

where the terms denote the hidden layer’s output matrix, the kernel matrix, and the kernel function, respectively. The output of the KELM model is calculated using Equations (6) and (7).

where the kernel function matrix is computed on the training inputs X [25].
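A minimal NumPy sketch of kernel ELM training and prediction, following the standard regularized formulation of Huang et al., in which the output weights are (I/C + Ω)⁻¹T and a new input is scored by its kernel values against the training inputs. The Gaussian kernel, the regularization constant C, and the class name `KELM` are our assumptions; the paper's Equations (6) and (7) may differ in detail.

```python
import numpy as np

def rbf_kernel(A, B, gamma=1.0):
    """Gaussian kernel matrix with entries exp(-gamma * ||a_i - b_j||^2)."""
    sq_dist = (np.sum(A**2, axis=1)[:, None] + np.sum(B**2, axis=1)[None, :]
               - 2.0 * A @ B.T)
    return np.exp(-gamma * np.maximum(sq_dist, 0.0))

class KELM:
    """Regularized kernel ELM: alpha = (I/C + Omega)^-1 T, f(x) = k(x) alpha."""

    def __init__(self, kernel=rbf_kernel, C=100.0):
        self.kernel = kernel
        self.C = C

    def fit(self, X, T):
        self.X_train = np.asarray(X, dtype=float)
        omega = self.kernel(self.X_train, self.X_train)   # kernel matrix Omega
        n = omega.shape[0]
        # Solve the linear system instead of forming an explicit inverse.
        self.alpha = np.linalg.solve(np.eye(n) / self.C + omega,
                                     np.asarray(T, dtype=float))
        return self

    def predict(self, X):
        # Row i holds [K(x_i, x_1), ..., K(x_i, x_n)] over the training inputs.
        k = self.kernel(np.asarray(X, dtype=float), self.X_train)
        return k @ self.alpha

# Usage: XOR with one-hot targets, which no linear model can separate.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
T = np.array([[1, 0], [0, 1], [0, 1], [1, 0]], dtype=float)
model = KELM(C=1000.0).fit(X, T)
pred = model.predict(X).argmax(axis=1)
```

No hidden-layer weights are drawn at random here, which is why repeated runs give identical results, the property the text attributes to the KELM.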

2.2. Fuzzy Wavelet Neural Network (FWNN)

Wavelets are mathematical functions used to represent data or other functions. A wavelet is a waveform of effectively limited duration with an average value of zero. Wavelet neural networks (WNNs) combine wavelets with neural networks; their novelty is that a wavelet is used as the HL activation function of a classical neural network. The output of the network is given in Equation (8).

where n is the size of the input and the weights are the output weight coefficients; the dilation and translation parameters of the wavelets are free parameters to be initialized.
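A minimal NumPy sketch of a WNN output of the kind described in Equation (8). It assumes the common multidimensional form in which each hidden unit is a product of dilated and translated one-dimensional wavelets, with the Mexican hat as the mother wavelet; the function names and the product form are our assumptions, not taken from the paper.

```python
import numpy as np

def mexican_hat(u):
    """Mexican hat mother wavelet (1 - u^2) exp(-u^2 / 2), unnormalized."""
    return (1.0 - u**2) * np.exp(-u**2 / 2.0)

def wnn_output(x, w, a, b, psi=mexican_hat):
    """Single-output WNN: y = sum_i w_i * prod_j psi((x_j - b_ij) / a_ij).

    x: (n,) input; w: (M,) output weights; a, b: (M, n) dilations and translations.
    """
    u = (x[None, :] - b) / a          # (M, n) scaled, shifted inputs per hidden unit
    phi = np.prod(psi(u), axis=1)     # (M,) multidimensional wavelet activations
    return float(w @ phi)
```

Only the output weights w enter linearly; the dilations a and translations b are the free parameters that must be initialized, and in a classical WNN they are tuned iteratively.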

The FWNN structure was created by combining a fuzzy inference system (FIS) and a WNN. Because the FWNN incorporates fuzzy rules, wavelet functions, and neural networks, it inherits some of their strengths, for example, the reasoning capability of fuzzy logic and the high-precision approximation performance of wavelets. The set of fuzzy rules that governs this network is shown in Equation (9) [21].

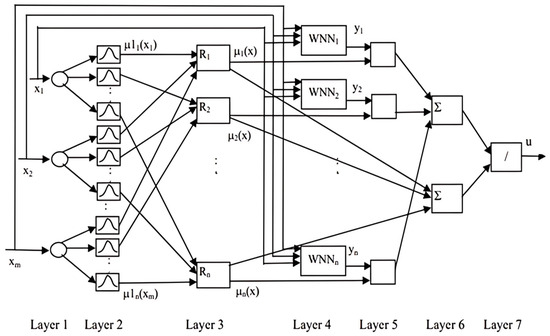

where c is the number of fuzzy rules and m is the size of the input. The output of the FWNN is given in Equation (10), and its general structure is shown in Figure 3.

Figure 3.

A structural representation of the fuzzy wavelet NN [14].

The FWNN has seven layers. In the first layer, the nodes, one per input signal, pass the input signals on. In the second layer, each node corresponds to a linguistic term and computes the degree to which the input belongs to the corresponding fuzzy set. In the third layer, each node represents a fuzzy rule, and the AND (min) operation is used to compute the layer's output signals. The fourth layer incorporates the wavelets into the neural network mechanism. In the fifth layer, the output signals of the third layer are multiplied by the output signals of the WNNs. Defuzzification is carried out in the sixth and seventh layers to determine the final output of the network [14].
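The seven layers can be traced in a short forward-pass sketch. This is a hypothetical NumPy illustration: Gaussian membership functions, a per-rule WNN that sums weighted Mexican hat wavelets over the inputs, and weighted-average defuzzification are our assumptions, because the text does not fix these choices.

```python
import numpy as np

def mexican_hat(u):
    return (1.0 - u**2) * np.exp(-u**2 / 2.0)

def fwnn_forward(x, centers, sigmas, w, a, b):
    """Forward pass of an FWNN with c rules and m inputs (layer numbers as in the text).

    centers, sigmas: (c, m) Gaussian membership parameters (assumed shape).
    w, a, b: (c, m) wavelet weights, dilations, and translations of each rule's WNN.
    """
    # Layer 1: the inputs are passed through unchanged.
    # Layer 2: membership degree of each input in each rule's linguistic term.
    mu = np.exp(-((x[None, :] - centers) ** 2) / (2.0 * sigmas**2))   # (c, m)
    # Layer 3: rule firing strength with the AND (min) operator.
    firing = mu.min(axis=1)                                           # (c,)
    # Layer 4: each rule's WNN output, sum_j w_ij * psi((x_j - b_ij) / a_ij).
    wnn = np.sum(w * mexican_hat((x[None, :] - b) / a), axis=1)       # (c,)
    # Layer 5: firing strength multiplied by the WNN output.
    weighted = firing * wnn
    # Layers 6 and 7: defuzzification by weighted average.
    return float(weighted.sum() / firing.sum())
```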

2.3. Fuzzy Wavelet Mexican Hat Wavelet Kernel Extreme Learning Machine (FWMHKELM) Algorithm

In this section, we introduce our proposed baseline hybrid algorithm. The work in [21] showed the equivalence of the FW structure and the ELM structure. Edge detection and robustness to noise are important in facial expression classification; therefore, in this study, the FW structure is integrated with a KELM, and the Mexican hat wavelet is selected as the kernel. The MH wavelet is defined, up to sign and normalization, as the second derivative of the Gaussian function (Equation (11)). This derivative property helps to locate distinct change points in the signal or data more precisely, and capturing sharp transitions is important in classification and pattern recognition problems. The MH wavelet kernel was chosen for this study because of its edge detection ability, its real-valued and positive semi-definite form, its robustness to noise, and its good performance over a wide spectrum.
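The relation between the Mexican hat and the Gaussian can be checked numerically. Strictly, the unnormalized Mexican hat ψ(t) = (1 − t²)exp(−t²/2) is the negative second derivative of g(t) = exp(−t²/2). The plain-Python sketch below compares ψ with a finite-difference estimate of −g'' and confirms the zero-mean property that makes ψ a wavelet; the grid and step sizes are illustrative choices.

```python
import math

def gaussian(t):
    return math.exp(-t * t / 2.0)

def mexican_hat(t):
    """Unnormalized Mexican hat wavelet (1 - t^2) exp(-t^2 / 2)."""
    return (1.0 - t * t) * math.exp(-t * t / 2.0)

def neg_second_derivative(f, t, h=1e-4):
    """Central finite-difference estimate of -f''(t)."""
    return -(f(t + h) - 2.0 * f(t) + f(t - h)) / (h * h)

# Largest gap between psi(t) and -g''(t) on a grid over [-5, 5].
max_err = max(abs(mexican_hat(t) - neg_second_derivative(gaussian, t))
              for t in [k / 10.0 for k in range(-50, 51)])

# Riemann sum of psi over [-10, 10]; a wavelet must integrate to zero.
area = sum(mexican_hat(-10.0 + k * 0.001) * 0.001 for k in range(20001))
```

The zero crossings at t = ±1 and the negative side lobes are what make the MH wavelet respond strongly to sharp intensity transitions such as edges.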

The hybrid model has a hierarchical and sequential fusion structure. First, the input data are fuzzified by the fuzzy inference system (FIS) to represent their ambiguity. Then, the frequency components of the data are analyzed by applying the wavelet transform. Finally, classification is performed with the Mexican hat wavelet kernel ELM (MHKELM). The process is sequential in that each step operates on the output of the previous one.

As noted above, the MH wavelet function is an admissible ELM kernel [25]: Wang et al. proved that the Fourier transform of the MH wavelet is nonnegative, so the kernel satisfies the translation-invariant kernel theorem [25]. The theorem that a translation-invariant kernel is admissible if its Fourier transform is nonnegative is given in [26]; we do not repeat its proof in this paper.
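Admissibility can also be illustrated numerically, since a kernel with a nonnegative Fourier transform produces positive semi-definite Gram matrices. The NumPy sketch below assumes the usual translation-invariant product form of the MH wavelet kernel, K(x, x') = Π_i (1 − d_i²)exp(−d_i²/2) with d_i = (x_i − x'_i)/a, which may differ in normalization from the paper's Equations (11) and (12); the dilation a = 1.5 and the random data are arbitrary.

```python
import numpy as np

def mh_wavelet_kernel(A, B, a=1.0):
    """Translation-invariant Mexican hat wavelet kernel (product over features).

    K(x, y) = prod_i (1 - d_i^2) * exp(-d_i^2 / 2), with d_i = (x_i - y_i) / a.
    """
    d = (A[:, None, :] - B[None, :, :]) / a            # (nA, nB, n_features)
    return np.prod((1.0 - d**2) * np.exp(-d**2 / 2.0), axis=2)

rng = np.random.default_rng(0)
X = rng.normal(size=(30, 4))
K = mh_wavelet_kernel(X, X, a=1.5)
# Smallest eigenvalue of the Gram matrix; nonnegative up to rounding error.
min_eig = np.linalg.eigvalsh(K).min()
```

A kernel without this property could yield an indefinite kernel matrix, for which the regularized KELM system is no longer guaranteed to behave like a least-squares problem.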

When the MH wavelet is used as the mother wavelet, the MH wavelet kernel function is derived as in Equations (11) and (12):

The Fourier transform of the MH wavelet is given in Equation (13):

Evaluating this integral by repeated integration by parts yields Equation (14).
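For completeness, the derivation can be sketched as follows, using the convention f̂(ω) = ∫ f(x)e^(−iωx) dx; the paper's normalization may differ, but the sign of the result does not. Two integrations by parts (the boundary terms vanish because the Gaussian and its derivative decay to zero) give a transform that is nonnegative for every ω, and the same holds after dilation by a > 0 and for the product kernel over d features.

```latex
\begin{aligned}
g(x) &= e^{-x^2/2}, \qquad \hat{g}(\omega) = \sqrt{2\pi}\, e^{-\omega^2/2}, \qquad
\psi(x) = (1 - x^2)\, e^{-x^2/2} = -g''(x), \\
\int_{-\infty}^{\infty} g''(x)\, e^{-i\omega x}\, dx
  &= \Big[g'(x)\, e^{-i\omega x}\Big]_{-\infty}^{\infty}
   + i\omega \int_{-\infty}^{\infty} g'(x)\, e^{-i\omega x}\, dx \\
  &= i\omega \Big( \Big[g(x)\, e^{-i\omega x}\Big]_{-\infty}^{\infty}
   + i\omega\, \hat{g}(\omega) \Big) = -\omega^2\, \hat{g}(\omega), \\
\hat{\psi}(\omega) &= \omega^2\, \hat{g}(\omega) = \sqrt{2\pi}\, \omega^2\, e^{-\omega^2/2} \;\ge\; 0, \\
\widehat{\psi(\cdot / a)}(\omega) &= a\, \hat{\psi}(a\omega) = \sqrt{2\pi}\, a^3 \omega^2\, e^{-a^2\omega^2/2} \;\ge\; 0, \\
K(\mathbf{x}, \mathbf{x}') &= \prod_{i=1}^{d} \psi\!\left(\frac{x_i - x'_i}{a}\right)
  \;\Rightarrow\; \hat{K}(\boldsymbol{\omega}) = \prod_{i=1}^{d} \sqrt{2\pi}\, a^3 \omega_i^2\, e^{-a^2\omega_i^2/2} \;\ge\; 0 .
\end{aligned}
```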

The focus of this paper is how to combine the MH wavelet, an admissible ELM kernel as discussed above, and the ELM method within the fuzzy wavelet structure. In this context, we argue that the fuzzy wavelet (FW) structure is equivalent to the MH wavelet kernel-based extreme learning machine structure and then present the steps of the proposed algorithm.

To show that the fuzzy wavelet (FW) structure is equivalent to the kernel-based extreme learning machine structure, consider a TSK model with k inputs and r outputs, defined as in Equation (15) [21]:

where

- x is the k-dimensional input vector;

- y is the r-dimensional output vector;

- the fuzzy membership function of rule i determines that rule's firing strength;

- the output of rule i is calculated with the help of Equation (16) [18]:

When the FW model is expressed as an FIS model, Equations (15) and (16) take the form of Equations (17) and (18):

where each rule's output is a combination of wavelet functions, calculated as in Equation (18).

In Equation (18), s denotes the FW structure and ψ_ij denotes the wavelet function of the jth input of the ith rule. In Equations (17) and (18), the THEN part has one coefficient for each input, so all c rules together have c × k coefficients. The output of the FWNN, given in Equation (10), can again be restated as Equation (19).
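The equivalence with the ELM rests on one observation: once the membership and wavelet parameters are fixed, the FW output is linear in the wavelet coefficients. Each (rule, input) pair then acts like a hidden node whose activation is the normalized firing strength times the wavelet value, and the coefficients can be fitted like ELM output weights. The NumPy sketch below checks this numerically under assumed choices (sigmoid memberships, a product t-norm, Mexican hat wavelets, and randomly drawn parameters); it illustrates the argument rather than reproducing the paper's Equations (17) to (19).

```python
import numpy as np

rng = np.random.default_rng(1)
K, m, c = 200, 2, 3                              # samples, inputs, fuzzy rules
X = rng.uniform(-1.0, 1.0, size=(K, m))

# Hypothetical randomly drawn IF-part (sigmoid) and THEN-part (wavelet) parameters.
centers, slopes = rng.uniform(-1.0, 1.0, (c, m)), rng.uniform(1.0, 4.0, (c, m))
trans, dil = rng.uniform(-1.0, 1.0, (c, m)), rng.uniform(0.5, 2.0, (c, m))

mu = 1.0 / (1.0 + np.exp(-slopes * (X[:, None, :] - centers)))   # (K, c, m) memberships
firing = mu.prod(axis=2)                                          # (K, c) product t-norm
u = (X[:, None, :] - trans) / dil
psi = (1.0 - u**2) * np.exp(-u**2 / 2.0)                          # (K, c, m) MH wavelets

# Direct TSK evaluation: rule i outputs sum_j w_ij * psi_ij(x_j), and the rules
# are averaged with weights equal to their firing strengths.
w_true = rng.normal(size=(c, m))
rule_out = (psi * w_true).sum(axis=2)                             # (K, c)
y_direct = (firing * rule_out).sum(axis=1) / firing.sum(axis=1)

# Same output as a linear model: hidden column (i, j) is normalized firing_i * psi_ij.
norm_firing = firing / firing.sum(axis=1, keepdims=True)
H = (norm_firing[:, :, None] * psi).reshape(K, c * m)
y_linear = H @ w_true.reshape(-1)

# Hence the coefficients can be fitted like ELM output weights, by least squares.
w_hat, *_ = np.linalg.lstsq(H, y_direct, rcond=None)
```

The number of rules c plays the role of the number of hidden nodes N, and the normalized firing strength plays the role of the hidden-node activation.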

When Equations (19) and (1) are compared, it can be seen that the KELM and FW models are equivalent. Moreover, both models have analogous elements: the number of hidden nodes (N) corresponds to the number of rules (c), and the strength with which each rule fires corresponds to the activation function of each hidden node. In the FWMHKELM structure, the FW model is defined as follows:

Depending on whether n is odd or even, this term is represented by Equation (21) or Equation (22):

In Equation (20), the sigmoid function is used in the IF part of the rule, while the Mexican hat wavelet kernel is used in the THEN part. These functions are shown in Equations (23) and (24):

where the sigmoid is parameterized by its center and by its slope at the center.
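For the IF part, a sigmoid membership function of the form μ(x) = 1/(1 + exp(−s(x − c))) is assumed here, since the exact parameterization of Equation (23) is not reproduced in this text. With this form, μ equals 0.5 at the center c and its slope there is s/4, which the plain-Python sketch below checks numerically; the parameter values are illustrative.

```python
import math

def sigmoid_membership(x, center, slope):
    """Sigmoid membership 1 / (1 + exp(-slope * (x - center))); equals 0.5 at the center."""
    return 1.0 / (1.0 + math.exp(-slope * (x - center)))

center, slope, h = 0.3, 5.0, 1e-6
mid_value = sigmoid_membership(center, center, slope)
# Central-difference estimate of the slope at the center (analytically slope / 4).
slope_at_center = (sigmoid_membership(center + h, center, slope)
                   - sigmoid_membership(center - h, center, slope)) / (2 * h)
```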

For an arbitrary training dataset consisting of input vectors and their corresponding output vectors, c is the number of fuzzy rules.

The mathematical model of FW can be defined as Equations (25) and (26):

where the MH wavelet kernel (MHW-KELM) matrix is defined as in Equation (27):

Equation (25) can be compactly written as follows:

where H is the hidden matrix.

The output equation of the new classifier is shown as Equation (29):

The steps of the FWMHKELM algorithm are summarized below:

Step 1: Randomly select the parameters for the membership function and wavelet coefficients.

Step 2: Construct the hidden matrix using Equations (26) and (28).

Step 3: Calculate the matrix Q.
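Steps 1 to 3 can be sketched end to end in NumPy. This is one plausible reading, not the authors' exact formulation: sigmoid memberships combined by a product t-norm and normalized across rules, Mexican hat wavelets in the THEN part, and a regularized least-squares solution Q = (I/C + HᵀH)⁻¹HᵀT for the output weights. The class name `FWMHKELMSketch`, the parameter ranges, and C are hypothetical.

```python
import numpy as np

def mexican_hat(u):
    return (1.0 - u**2) * np.exp(-u**2 / 2.0)

class FWMHKELMSketch:
    """Hypothetical sketch of Steps 1-3; not the authors' exact formulation."""

    def __init__(self, n_rules=10, C=100.0, seed=0):
        self.c, self.C = n_rules, C
        self.rng = np.random.default_rng(seed)

    def _hidden(self, X):
        # IF part: sigmoid memberships, product over inputs, normalized across rules.
        mu = 1.0 / (1.0 + np.exp(-self.slope * (X[:, None, :] - self.center)))
        firing = mu.prod(axis=2)
        firing = firing / firing.sum(axis=1, keepdims=True)
        # THEN part: Mexican hat wavelet of each input for each rule.
        psi = mexican_hat((X[:, None, :] - self.trans) / self.dil)
        return (firing[:, :, None] * psi).reshape(len(X), -1)

    def fit(self, X, y):
        m = X.shape[1]
        # Step 1: random membership and wavelet parameters, kept fixed afterwards.
        self.center = self.rng.uniform(-2.0, 2.0, (self.c, m))
        self.slope = self.rng.uniform(0.5, 3.0, (self.c, m))
        self.trans = self.rng.uniform(-2.0, 2.0, (self.c, m))
        self.dil = self.rng.uniform(0.5, 2.0, (self.c, m))
        # Step 2: hidden matrix H with one column per (rule, input) pair.
        self.H = self._hidden(X)
        self.classes = np.unique(y)
        self.T = (y[:, None] == self.classes[None, :]).astype(float)  # one-hot targets
        # Step 3: regularized output weights Q = (I/C + H^T H)^-1 H^T T.
        L = self.H.shape[1]
        self.Q = np.linalg.solve(np.eye(L) / self.C + self.H.T @ self.H,
                                 self.H.T @ self.T)
        return self

    def predict(self, X):
        # Our stand-in for the classifier output: the class with the largest score.
        return self.classes[np.argmax(self._hidden(X) @ self.Q, axis=1)]

# Usage on two well-separated synthetic clusters (illustrative data only).
rng = np.random.default_rng(42)
X = np.vstack([rng.normal(-1.5, 0.25, (100, 2)), rng.normal(1.5, 0.25, (100, 2))])
y = np.repeat([0, 1], 100)
model = FWMHKELMSketch(n_rules=10).fit(X, y)
train_acc = np.mean(model.predict(X) == y)
```

Because Hᵀ(I/C + HHᵀ)⁻¹ = (I/C + HᵀH)⁻¹Hᵀ, the same Q can be written in the KELM dual form with Ω = HHᵀ; the primal form is used here because H has only c·m = 20 columns.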

3. Experimental Results and Analysis

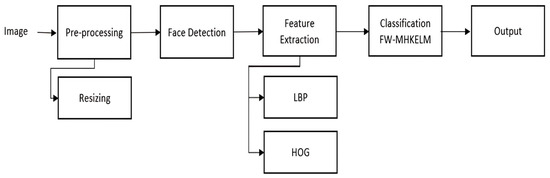

In this study, two experiments were designed. The first used the JAFFE dataset, which is publicly available [27] and frequently used in facial expression classification studies. It consists of 213 grayscale facial photographs of 10 Japanese women showing 7 facial expressions (six basic emotions plus neutral), with each participant displaying each expression more than once. The workflow of the algorithm developed for facial expression classification is shown in Figure 4.

Figure 4.

The architectural design of the facial expression classification system.

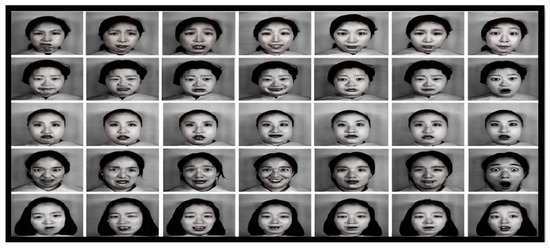

Examples of these 256 × 256-pixel images are shown in Figure 5.

Figure 5.

Sample images from the JAFFE database [27] for facial expression recognition.

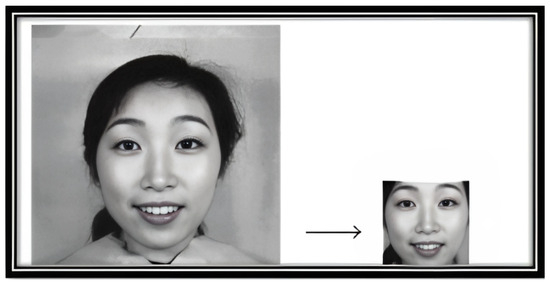

During preprocessing, image intensities were normalized, and each image was cropped to the region containing the face expressing the emotion. Removing the background reduced the original 256 × 256 images to 168 × 120 pixels. An example is presented in Figure 6.

Figure 6.

Example of image resizing used for preprocessing.

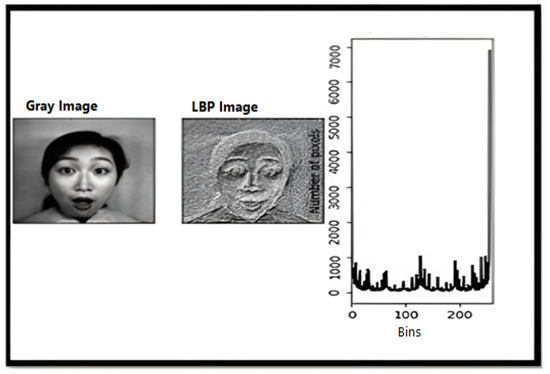

Histogram equalization was then applied to reduce lighting effects. For feature extraction, we used the local binary pattern (LBP) method and, for comparison, the histogram of oriented gradients (HOG) method.
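Global histogram equalization maps each gray level through the normalized cumulative histogram, spreading intensities over the full 0 to 255 range. A minimal NumPy sketch for 8-bit images follows; it is not necessarily identical to the MATLAB routine the authors used.

```python
import numpy as np

def equalize_histogram(img):
    """Global histogram equalization of an 8-bit grayscale image via its CDF."""
    img = np.asarray(img, dtype=np.uint8)
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]                    # first non-zero CDF value
    denom = max(cdf[-1] - cdf_min, 1)            # avoid division by zero for flat images
    lut = np.clip(np.round((cdf - cdf_min) / denom * 255), 0, 255).astype(np.uint8)
    return lut[img]                              # apply the lookup table pixel-wise

# Usage: a dark, low-contrast patch is stretched to the full intensity range.
dark = np.array([[10, 10, 20], [20, 30, 30], [40, 40, 50]], dtype=np.uint8)
eq = equalize_histogram(dark)
```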

The output image (Figure 7) shows that LBP processing enhances the boundaries of facial landmarks. In facial expression detection, it is very important to analyze how the pixel positions of key regions, such as the eyebrows, eyes, and mouth, change with respect to a given reference point. For the classification phase, the architecture (network structure) of the proposed algorithm must be established. In the developed algorithm, the wavelet coefficients are randomly generated and then kept constant, and the initial parameters are user inputs that are defined randomly. At this stage, the structure of the network is created.
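A basic LBP descriptor compares each pixel with its eight neighbours, packs the comparison results into an 8-bit code, and concatenates normalized code histograms over a grid of cells. The NumPy sketch below assumes radius 1, eight neighbours, and a 4 × 4 grid; the paper does not state these settings.

```python
import numpy as np

# Clockwise neighbour offsets starting at the top-left pixel; bit k has weight 2**k.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_codes(img):
    """Basic 3x3 LBP code of every interior pixel (neighbour >= centre sets the bit)."""
    img = np.asarray(img, dtype=np.int32)
    center = img[1:-1, 1:-1]
    codes = np.zeros_like(center)
    rows, cols = img.shape
    for bit, (dr, dc) in enumerate(OFFSETS):
        neighbour = img[1 + dr:rows - 1 + dr, 1 + dc:cols - 1 + dc]
        codes |= (neighbour >= center).astype(np.int32) << bit
    return codes

def lbp_feature(img, grid=(4, 4)):
    """Concatenate normalized 256-bin LBP histograms over a grid of cells."""
    codes = lbp_codes(img)
    feats = []
    for band in np.array_split(codes, grid[0], axis=0):
        for cell in np.array_split(band, grid[1], axis=1):
            hist = np.bincount(cell.ravel(), minlength=256).astype(float)
            feats.append(hist / max(hist.sum(), 1.0))
    return np.concatenate(feats)
```

The cell grid keeps coarse spatial information, so changes around the eyebrows, eyes, and mouth affect different parts of the feature vector.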

Figure 7.

Example of local binary pattern (LBP) processing in feature extraction.

In this study, the network structure was defined by user input: the number of neurons (fuzzy rules) in the HL was set to 10, and the minimum error was set to 0.001. Because the number of images in the dataset is limited, the average classification performance was computed with 10-fold cross-validation; in each fold, the classifier was trained on the training images and then used to classify the held-out test images. All analyses were performed using MATLAB R2021b.
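The 10-fold protocol can be sketched as follows: the indices are shuffled once and dealt into ten disjoint folds, and each fold serves once as the test set while the other nine are used for training. Whether the authors' folds were stratified or subject-independent is not stated, so this plain shuffled split is an assumption, and `train_and_score` is a hypothetical callback standing in for training and testing FWMHKELM.

```python
import random

def k_fold_indices(n_samples, k=10, seed=0):
    """Shuffle sample indices once and deal them into k disjoint folds."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    return [idx[f::k] for f in range(k)]

def cross_validate(n_samples, train_and_score, k=10, seed=0):
    """Mean of train_and_score(train_idx, test_idx) over the k folds."""
    folds = k_fold_indices(n_samples, k, seed)
    scores = []
    for f, test_idx in enumerate(folds):
        train_idx = [i for g, fold in enumerate(folds) if g != f for i in fold]
        scores.append(train_and_score(train_idx, test_idx))
    return sum(scores) / k

# Usage with a hypothetical scorer; JAFFE contains 213 images.
def fraction_tested(train_idx, test_idx):
    assert not set(train_idx) & set(test_idx)    # train and test folds never overlap
    return len(test_idx) / 213

mean_fraction = cross_validate(213, fraction_tested)
```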

When a dataset contains more than two classes or an unequal distribution of observations across classes, accuracy alone may be misleading. Therefore, in addition to accuracy, a confusion matrix was generated to summarize the classifier's performance; it shows which types of errors the model makes. Table 1 gives the confusion matrix for the different facial expressions.
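A confusion matrix counts, for each true class (row), how often each class was predicted (column). The per-expression percentages quoted for Tables 1 and 2 are assumed here to be row-normalized diagonal entries, that is, the fraction of each expression classified correctly; the text does not state this explicitly. A plain-Python sketch with made-up labels:

```python
def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes."""
    index = {lab: i for i, lab in enumerate(labels)}
    cm = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        cm[index[t]][index[p]] += 1
    return cm

def per_class_rate(cm):
    """Fraction of each true class classified correctly (row-normalized diagonal)."""
    return [row[i] / sum(row) if sum(row) else 0.0 for i, row in enumerate(cm)]

def accuracy(cm):
    """Overall fraction of correct predictions."""
    return sum(cm[i][i] for i in range(len(cm))) / sum(map(sum, cm))

# Usage with illustrative labels: fear and disgust are confused with each other.
labels = ["happy", "fear", "disgust"]
y_true = ["happy", "happy", "fear", "fear", "disgust", "disgust"]
y_pred = ["happy", "happy", "fear", "disgust", "disgust", "fear"]
cm = confusion_matrix(y_true, y_pred, labels)
```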

Table 1.

Classification results: the confusion matrix of the LBP + FWMHKELM approach.

According to Table 1, the classifier achieves 96.13% for happiness, 91.89% for fear, and 97.63% for surprise. More than 90% of the samples of every expression were classified correctly by the proposed algorithm, and the average classification accuracy was 94.55%. To observe how the proposed algorithm performs with different facial feature extraction methods, the experiment was repeated on the same dataset with HOG in the feature extraction step and FWMHKELM in the classification phase. The resulting confusion matrix is presented in Table 2.

Table 2.

Classification results: the confusion matrix of the HOG + FWMHKELM approach.

Table 2 shows that the classifier achieves 97.78% for happiness, 98.89% for fear, and 84.22% for disgust. With histograms of oriented gradients, more than 90% of the samples of every expression except disgust were classified correctly, and the average classification accuracy was 94.27%. The developed algorithm is also compared with other studies in the literature that used the same dataset; the results are presented in Table 3.

Table 3.

Performance comparison of various classification methods.

Table 3 shows that the hybrid algorithm, combined with LBP feature extraction, achieves more accurate results than the other methods compared.

In the second experiment, an original dataset was used to test our approach. It consists of images of 38 people of Turkish origin (17 men and 21 women), each showing six facial expressions (happy, sad, fearful, angry, surprised, and neutral). Before the dataset was created, Ethics Committee approval was obtained, and the participants consented to the sharing of their personal data through a Voluntary Participation Form. To balance gender and age distribution and reduce sampling bias, the study protocol included stratified participant recruitment. Neutral images were captured twice under controlled lighting conditions to minimize within-dataset bias. The original image size is 768 × 1024 pixels. Sample images from the database are shown in Figure 8.

Figure 8.

Sample images from the original database (approved by the Ethics Committee).

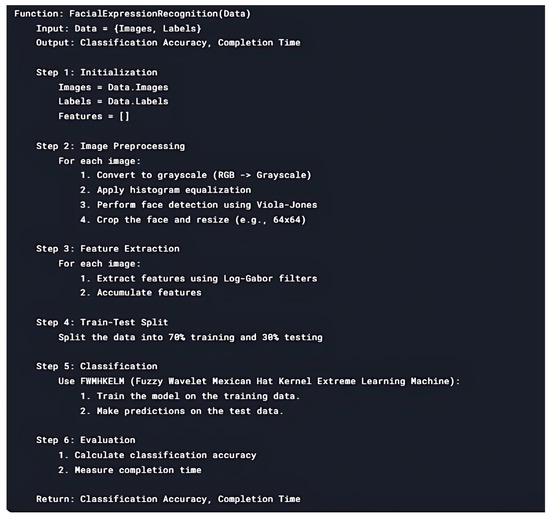

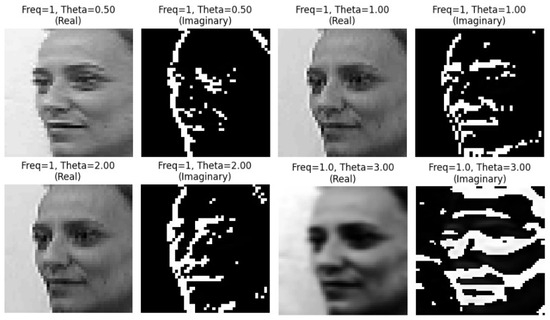

The pseudocode of this experiment is presented in Figure 9. The hybrid method was again run in MATLAB R2021b. The data were split 70:30 into training and test sets, so that the network could be trained with as much data as possible while leaving enough images to test the accuracy of the algorithm. In the preprocessing step, image intensities were normalized, and only the face region expressing an emotion was retained and resized. The face images were converted to grayscale, and histogram equalization was applied to reduce the effects of lighting. The Viola–Jones algorithm was used to detect and crop the face region of each image. Log Gabor filters were used for feature extraction.
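A log-Gabor filter is usually built in the frequency domain as a log-Gaussian radial profile multiplied by a Gaussian angular profile, with no DC component. The NumPy sketch below follows that common construction; the centre frequency, bandwidth ratio, and orientation values are illustrative assumptions, since the paper does not report its filter-bank settings. Because the angular term covers only one side of the frequency plane, the spatial response is complex, with an even-symmetric real part and an odd-symmetric imaginary part.

```python
import numpy as np

def log_gabor_filter(shape, f0=0.1, sigma_ratio=0.55, theta0=0.0, sigma_theta=np.pi / 8):
    """Frequency-domain 2-D log-Gabor filter (radial log-Gaussian x angular Gaussian).

    f0: centre frequency in cycles/pixel; sigma_ratio: bandwidth ratio sigma/f0;
    theta0: filter orientation in radians; sigma_theta: angular spread.
    """
    rows, cols = shape
    fy = np.fft.fftfreq(rows)[:, None]
    fx = np.fft.fftfreq(cols)[None, :]
    radius = np.hypot(fx, fy)
    radius[0, 0] = 1.0                      # placeholder to avoid log(0); DC is zeroed below
    radial = np.exp(-(np.log(radius / f0) ** 2) / (2 * np.log(sigma_ratio) ** 2))
    radial[0, 0] = 0.0                      # log-Gabor filters have no DC component
    theta = np.arctan2(fy, fx)
    # Wrapped angular distance from the filter orientation.
    dtheta = np.arctan2(np.sin(theta - theta0), np.cos(theta - theta0))
    angular = np.exp(-(dtheta ** 2) / (2 * sigma_theta ** 2))
    return radial * angular

def log_gabor_response(img, **kwargs):
    """Complex filter response: even-symmetric real part, odd-symmetric imaginary part."""
    spectrum = np.fft.fft2(np.asarray(img, dtype=float))
    return np.fft.ifft2(spectrum * log_gabor_filter(spectrum.shape, **kwargs))
```

A full feature extractor would apply several scales and orientations and pool the response magnitudes; a single filter is shown to keep the construction visible.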

Figure 9.

The algorithm for facial expression classification.

Figure 10.

Preprocessing steps for face recognition—color image to grayscale conversion and face detection.

Figure 11.

Analysis of real and imaginary components of frequency response with Log Gabor filter with respect to phase angle (θ).

In the classification phase, the initial parameters were chosen by the user: the number of neurons in the HL was set to 5, the learning rate (LR) to 0.25, the minimum error to 0.001, and the maximum number of iterations to 250. After training, the test images were classified using the FWMHKELM classifier. The results are presented in Table 4.

Table 4.

Performance metrics comparison for various methods of face recognition.

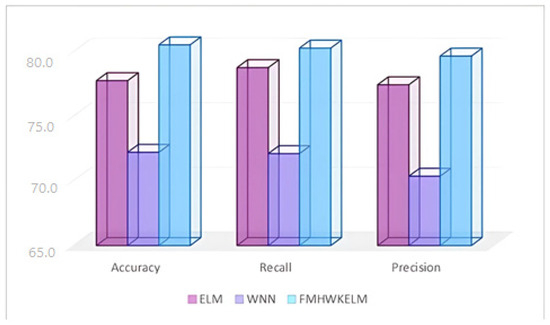

As Table 4 shows, the FWMHKELM algorithm achieves better classification performance than the ELM and WNN algorithms, although its training time is longer, which can be attributed to the fuzzy rules in the model. To evaluate the classification performance of FWMHKELM, we calculated accuracy, precision, and recall. Accuracy was calculated by comparing the labels predicted for the test data with the true labels; the average accuracy was 80.6%. Figure 12 compares all algorithms in terms of accuracy, recall, and precision, showing that the proposed FWMHKELM algorithm outperformed the ELM and WNN.
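Accuracy, precision, and recall can be computed directly from the true and predicted labels. The text does not say how precision and recall were averaged over the expression classes, so the plain-Python sketch below assumes unweighted (macro) averaging; the labels in the usage example are made up.

```python
def macro_precision_recall(y_true, y_pred):
    """Return (accuracy, macro precision, macro recall) over all observed labels."""
    labels = sorted(set(y_true) | set(y_pred))
    precisions, recalls = [], []
    for lab in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == lab and p == lab)
        predicted = sum(1 for p in y_pred if p == lab)    # tp + false positives
        actual = sum(1 for t in y_true if t == lab)       # tp + false negatives
        precisions.append(tp / predicted if predicted else 0.0)
        recalls.append(tp / actual if actual else 0.0)
    acc = sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)
    return acc, sum(precisions) / len(labels), sum(recalls) / len(labels)

# Usage with illustrative labels.
acc, prec, rec = macro_precision_recall(["happy", "sad", "sad", "angry"],
                                        ["happy", "sad", "angry", "angry"])
```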

Figure 12.

Comparative classification analysis of ELM, WNN, and FWMHKELM methods.

4. Discussion

The current work presents a hybrid method that brings a new perspective to the problem of facial expression classification. The hybrid method combines fuzzy rules, wavelets, and a kernel-based ELM; we call it FWMHKELM for short. The key advantages of FWMHKELM are its simplicity, high accuracy, and generalization performance.

Building on this theoretical foundation, the FWMHKELM model was used to classify facial expressions. Facial expression classification helps build flexible facial expression identification models that map behavioral features to physiological biometric features. People have distinct facial expressions associated with emotions such as happiness, sadness, shock, and fear; in the classification system, these expressions are identified through established matching templates based on the geometry of the face.

The paper presents the mathematical basis of the structure. Using the fact that the Mexican hat wavelet is an admissible ELM kernel, we showed how a fuzzy wavelet structure can be integrated into a kernel-based extreme learning machine. The theoretical equivalence between the fuzzy wavelet structure and the Mexican hat (MH) kernel-based ELM, shown in Equations (19) and (26), allows the model to capture both local and global features of facial expressions through wavelet decomposition and fuzzy rule-based reasoning. The high classification accuracies (94.55% with LBP and 94.27% with HOG) support this design: the MH kernel’s edge-detection properties (Equations (11) and (12)) enhance feature discrimination, while the fuzzy rules handle the inherent uncertainty in expression data. For instance, the confusion matrices (Table 1 and Table 2) reflect the model’s ability to exploit wavelet-transformed features (e.g., eyebrow and mouth movements) through the MH kernel, with misclassifications occurring mainly between expressions with overlapping geometric cues (e.g., fear and disgust). This alignment between theory and results suggests that the hybrid structure’s mathematical design, which combines fuzzy adaptability, wavelet multiresolution analysis, and the ELM’s efficient optimization, is reflected in its performance metrics (Figure 12). Future work could examine this relationship further by visualizing feature maps or kernel responses for specific expressions.

In this study, the algorithm and all comparison algorithms were coded in MATLAB (R2021b). Combining the algorithm with the feature extraction methods used for the facial expression datasets (Log Gabor filters, local binary patterns, and histograms of oriented gradients) produced high classification rates. Feature extraction techniques such as LBP and HOG further improve classification performance by capturing critical geometric and textural features of facial expressions. Comparison with existing techniques in the literature, such as ELM and WNN, shows the superiority of the proposed method, especially in handling the intrinsic uncertainty associated with human emotions and expressions.

In the experiments, the hybrid algorithm had the most difficulty distinguishing fear and disgust expressions. One of the main reasons why the FWMHKELM model misclassifies some facial expressions is the overlap between the features of expressions such as “fear” and “disgust”. These expressions can show highly correlated features because they involve similar facial muscle movements, such as nose wrinkling, eyebrows pulling in, and lips stretching. This can limit the discrimination ability of the model, especially when classical feature extraction methods are used. To reduce misclassifications, synthetic training data with more variation in these expressions could be generated, or dynamic data augmentation and 3D morphable model techniques could be integrated to reduce the effect of anatomical similarities.

Regarding dataset considerations, while the original dataset used in this study (Section 3) was carefully curated with ethical approval and demographic diversity (38 participants, 17 men and 21 women), we acknowledge that its modest size may raise concerns about potential biases. To mitigate this, we employed two strategies: (1) cross-dataset validation, in which the model’s performance was first tested on the JAFFE benchmark (achieving 94.55% accuracy), demonstrating consistency with the established literature (Table 3); and (2) feature extraction with Log Gabor filters and LBP/HOG, which reduces sensitivity to dataset-specific artifacts by emphasizing general texture and edge features (Figure 7 and Figure 11), as demonstrated in [10]. Together, these steps enhance the model’s robustness to dataset-specific artifacts while preserving expression-related features across diverse populations. Nevertheless, biases could persist in subtle cultural expressions (e.g., Turkish vs. Japanese facial norms in JAFFE). Future work will expand the dataset with multicultural samples (CK+, FER2013) and integrate adversarial training to further reduce demographic bias.

5. Conclusions

In summary, this study presents a novel and fundamental approach to the facial expression classification problem through the proposed Fuzzy Wavelet Mexican Hat Kernel Extreme Learning Machine (FWMHKELM) framework. By combining high classification accuracy with efficient feature extraction techniques, the FWMHKELM framework makes an important contribution to this problem. Because the study provides the basic structure, we highlight several areas for improvement that can guide future research. First, the random initialization of parameters can affect the consistency of the results. This could be addressed by using heuristic optimization algorithms such as Genetic Algorithms, the Whale Optimization Algorithm, or the Grey Wolf Optimizer to determine optimal parameter values automatically. Second, a better pre-processing approach could narrow the input down to the region most likely to contain the face. Third, the Mexican hat wavelet used in the proposed structure could be replaced with other wavelet kernels, such as Morlet or Haar. Finally, comparative analyses with deep learning architectures (e.g., ResNet or Vision Transformer) will be conducted in future studies to evaluate the competitiveness of the model more comprehensively.

Owing to the use of fuzzy logic, we believe that the model offers a certain level of interpretability, since fuzzy rules allow the decision mechanism to be analyzed with some degree of clarity. However, the combination of a fuzzy wavelet structure and an ELM may still cause the model to be perceived as a “black box”. Future studies could therefore apply explainability techniques such as SHAP or LIME to further clarify the decisions of the proposed model.
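The following Python sketch illustrates the kernel component and the suggested Mexican hat/Morlet substitution. It implements a plain kernel ELM with the closed-form output weights of Huang et al. [23] and a product-form Mexican hat wavelet kernel. The Morlet wavelet kernel of Zhang et al. [26] is included as a drop-in alternative. The sketch deliberately omits the fuzzy layer, so it is a simplified stand-in rather than the FWMHKELM model itself. The class and function names, the ±1 target coding, and the default values of the regularization constant `C` and dilation `a` are assumptions.

```python
import numpy as np


def mexican_hat_kernel(A, B, a=1.0):
    """K[i, j] = prod_k (1 - u_k^2) exp(-u_k^2 / 2), with u = (A[i] - B[j]) / a."""
    U = (A[:, None, :] - B[None, :, :]) / a
    return np.prod((1.0 - U**2) * np.exp(-0.5 * U**2), axis=2)


def morlet_kernel(A, B, a=1.0):
    """Morlet wavelet kernel: prod_k cos(1.75 u_k) exp(-u_k^2 / 2)."""
    U = (A[:, None, :] - B[None, :, :]) / a
    return np.prod(np.cos(1.75 * U) * np.exp(-0.5 * U**2), axis=2)


class KernelELM:
    """Kernel ELM: output weights beta = (I / C + Omega)^-1 T, with Omega = K(X, X)."""

    def __init__(self, kernel=mexican_hat_kernel, C=10.0, a=1.0):
        self.kernel, self.C, self.a = kernel, C, a

    def fit(self, X, y):
        self.X_ = np.asarray(X, dtype=np.float64)
        y = np.asarray(y)
        self.classes_ = np.unique(y)
        # Targets coded as +1 for the true class and -1 elsewhere (an assumption).
        T = np.where(y[:, None] == self.classes_[None, :], 1.0, -1.0)
        omega = self.kernel(self.X_, self.X_, self.a)
        n = omega.shape[0]
        # Solve the regularized linear system instead of forming an explicit inverse.
        self.beta_ = np.linalg.solve(np.eye(n) / self.C + omega, T)
        return self

    def decision_function(self, X):
        K = self.kernel(np.asarray(X, dtype=np.float64), self.X_, self.a)
        return K @ self.beta_

    def predict(self, X):
        return self.classes_[np.argmax(self.decision_function(X), axis=1)]


# Toy usage: two well-separated 2-D clusters.
X = np.array([[0, 0], [0, 0.2], [0.2, 0], [3, 3], [3, 3.2], [3.2, 3]])
y = np.array([0, 0, 0, 1, 1, 1])
model = KernelELM(kernel=mexican_hat_kernel, C=10.0, a=1.0).fit(X, y)
print(model.predict([[0.1, 0.1], [3.1, 3.1]]))  # [0 1]
```

`C` and `a` are natural candidates for the heuristic parameter search (Genetic Algorithms, Whale, Grey Wolf) mentioned above. The kernel is a product over feature dimensions, so high-dimensional inputs such as LBP histograms can drive kernel values toward zero. To avoid this, scale `a` with the feature dimension or reduce the features first.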

With further refinements, the FWMHKELM framework has the potential to become a strong reference model in affective computing and pattern recognition. Its hybrid design sits between traditional machine learning and modern deep learning, offering a potentially scalable solution for applications that require both high accuracy and explainability. Future work could also explore deployment on low-power edge devices, enabling real-time emotion detection in IoT and wearable technologies.

In conclusion, this study lays a solid foundation for advancing facial expression classification systems. By addressing the outlined limitations and integrating cutting-edge techniques, the proposed framework can evolve into a versatile tool for both academic research and industrial applications, paving the way for innovative solutions in emotion-aware AI systems.

Author Contributions

Conceptualization, A.K.K. and O.O.; data curation, A.K.K.; formal analysis, A.K.K. and O.O.; investigation, O.O.; methodology, A.K.K. and O.O.; project administration, O.O.; resources, A.K.K. and O.O.; software, A.K.K. and O.O.; supervision, A.K.K. and O.O.; validation, A.K.K. and O.O.; visualization, A.K.K. and O.O.; writing—original draft, A.K.K.; writing—review and editing, A.K.K. and O.O. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by Eskisehir Technical University Scientific Research Project Commission under grant No. 21DRP054.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Ethics Committee of Eskisehir Technical University (protocol code E-87914409-050.03.04-2200020290; date of approval 21 November 2022).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The first experiment uses an existing, publicly available dataset from the literature (JAFFE [27]). For the second experiment, the raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| ANN | Artificial Neural Network |
| BPNN | Backpropagation Neural Network |
| CNN | Convolutional Neural Network |
| ELM | Extreme Learning Machine |
| FER | Facial Expression Recognition |
| FF | Feed Forward |
| FIS | Fuzzy Inference System |
| FLD | Fisher’s Linear Discriminant |
| FNN | Fuzzy Neural Network |
| FWNN | Fuzzy Wavelet Neural Network |
| HL | Hidden Layer |
| HOG | Histogram of Oriented Gradients |
| IL | Input Layer |
| KELM | Kernel-Based Extreme Learning Machine |
| LBP | Local Binary Pattern |
| LIME | Local Interpretable Model-Agnostic Explanations |
| MH | Mexican Hat |
| MIP | Morphological Image Processing |
| NN | Neural Network |
| SHAP | Shapley Additive Explanations |
| WNN | Wavelet Neural Network |

References

- Suwa, M.; Sugie, N.; Fujimora, K. A preliminary note on pattern recognition of human emotional expression. In Proceedings of the 4th International Joint Conference on Pattern Recognition, Kyoto, Japan, 7–10 November 1978; pp. 408–410.

- Fasel, B.; Luettin, J. Automatic facial expression analysis: A survey. Pattern Recognit. 2003, 36, 259–275.

- Wang, J.; Wang, C.; Chen, C. The bounded capacity of fuzzy neural networks (FNNs) via a new fully connected neural fuzzy inference system (F-CONFIS) with its applications. IEEE Trans. Fuzzy Syst. 2014, 22, 1373–1386.

- Lin, C.K. H∞ reinforcement learning control of robot manipulators using fuzzy wavelet networks. Fuzzy Sets Syst. 2009, 160, 1765–1786.

- Reyneri, L. Unification of neural and wavelet networks and fuzzy systems. IEEE Trans. Neural Netw. 1999, 10, 801–814.

- Sahoolizadeh, H.; Sarikhanimoghadam, D.; Dehghani, H. Face detection using Gabor wavelets and neural networks. World Acad. Sci. Eng. Technol. 2008, 45, 552–554.

- Zong, W.; Huang, G.B. Face recognition based on extreme learning machine. Neurocomputing 2011, 74, 2541–2551.

- Abidin, Z.; Alamsyah, A. Wavelet-based approach for facial expression recognition. Int. J. Adv. Intell. Inform. 2015, 1, 7–14.

- Mahmud, F.; Maun, M.A. Facial expression recognition system using extreme learning machines. Int. J. Sci. Eng. Res. 2017, 8, 26–30.

- Sailaja, G.; Deepthi, V.H. Wavelet-based feature extraction for facial expression recognition. Int. J. Pure Appl. Math. 2018, 118, 1–7.

- Kar, N.B.; Babu, K.S.; Bakshi, S. Facial expression recognition system based on variational mode decomposition and whale optimized KELM. Image Vis. Comput. 2022, 123, 104445.

- Padmanabhan, S.; Kanchikere, J. An efficient face recognition system based on hybrid optimized KELM. Multimed. Tools Appl. 2020, 79, 10677–10697.

- Takagi, T.; Sugeno, M. Fuzzy identification of systems and its applications for modeling and control. IEEE Trans. Syst. Man Cybern. 1985, 15, 116–132.

- Ho, D.; Zhang, P.A.; Xu, J. Fuzzy wavelet networks for function learning. IEEE Trans. Fuzzy Syst. 2001, 9, 200–211.

- Huang, G.B.; Siew, C.K. Extreme learning machine: RBF network case. In Proceedings of the 8th International Conference on Control, Automation, Robotics and Vision (ICARCV), Kunming, China, 6–9 December 2004; IEEE: New York, NY, USA, 2004; pp. 1029–1036.

- Huang, G.B.; Zhu, Q.Y.; Siew, C.K. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501.

- Pati, Y.C.; Krishnaprasad, P.S. Analysis and synthesis of feedforward neural networks using discrete affine wavelet transforms. IEEE Trans. Neural Netw. 1993, 4, 73–85.

- Zhang, Q.; Benveniste, A. Wavelet networks. IEEE Trans. Neural Netw. 1992, 3, 889–898.

- Subaşı, A.; Alkan, A.; Köklükaya, A.; Kiymik, M.K. Wavelet neural network classification of EEG signals by using AR model with MLE preprocessing. Neural Netw. 2005, 18, 985–997.

- Yılmaz, S.; Oysal, Y. Fuzzy wavelet neural network models for prediction and identification of dynamical systems. IEEE Trans. Neural Netw. 2010, 21, 1599–1609.

- Golestaneh, P.; Zekri, M.; Sheikholeslam, F. Fuzzy wavelet extreme learning machine. Fuzzy Sets Syst. 2018, 342, 90–108.

- Ozdemir, O.; Kaya, A. Aşırı Öğrenme Makinelerinin Performansının Değerlendirilmesi ve Moore-Penrose Matrisinin Hesaplanması [Evaluation of the Performance of Extreme Learning Machines and Calculation of the Moore-Penrose Matrix]; Ekin Basım Yayın Dağıtım: Bursa, Türkiye, 2019; pp. 145–159.

- Huang, G.B.; Zhou, H.M.; Ding, X.J.; Zhang, R. Extreme learning machine for regression and multiclass classification. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2012, 42, 513–529.

- Kisi, O.; Alizamir, M. Modelling reference evapotranspiration using a new wavelet conjunction heuristic method: Wavelet extreme learning machine vs wavelet neural networks. Agric. For. Meteorol. 2018, 263, 41–58.

- Wang, J.; Song, Y.F.; Ma, T.L. Mexican hat wavelet kernel ELM for multiclass classification. Comput. Intell. Neurosci. 2017, 2017, 7479140.

- Zhang, L.; Zhou, W.; Jiao, L. Wavelet support vector machine. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2004, 34, 34–39.

- JAFFE Dataset. Available online: https://zenodo.org/records/3451524 (accessed on 22 March 2025).

- Abidin, Z.; Harjoko, A. A neural network-based facial expression recognition using Fisherface. Int. J. Comput. Appl. 2012, 59, 30–34.

- Ghimire, D.; Lee, J. Extreme learning machine ensemble using bagging for facial expression recognition. J. Inf. Process. Syst. 2014, 10, 443–458.

- Dharani, P.; Vibhute, A.S. Face recognition using wavelet neural network. Int. J. Adv. Res. Comput. Sci. Softw. Eng. 2017, 7, 101–107.

- Shi, W.; Jiang, M. Fuzzy wavelet network with feature fusion and LM algorithm for facial emotion recognition. In Proceedings of the IEEE International Conference on Safety Produce Informatization (IICSPI), Chongqing, China, 10–12 December 2018; IEEE: New York, NY, USA, 2018; pp. 582–586.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).