Abstract

Multimodal target classification plays a crucial role when external interference is present. Traditional single-modal classification systems are limited by their single data representation and their sensitivity to environmental conditions, which makes it difficult to meet the robustness requirements of target classification under external disturbances. This paper addresses these shortcomings by proposing a target classification algorithm based on audiovisual fusion. The contributions of this work are as follows. (1) To resolve the lack of correlation between audio signals and image signals, we introduce a method that converts audio signals into spectrograms and fuses them with target images. The spectrogram makes full use of the effective information in the audio and provides a stable representation, and it also addresses the challenge of fusing one-dimensional time-series audio signals with two-dimensional discrete image signals. (2) We propose a convolutional extraction and modal fusion network framework that incorporates an attention mechanism module during the fusion process to ensure the stability and robustness of the fused data for audiovisual target classification. Validation was conducted on both a custom dataset and the YouTube-8M dataset. On the custom dataset, the proposed method improves accuracy by 2.9%, 2.4%, 1.2%, and 0.9% compared with other multimodal fusion target classification methods. These results demonstrate the effectiveness of the proposed multimodal fusion recognition approach and support the theoretical rationale behind the method.

1. Introduction

A growing number of researchers are directing their attention towards the field of object classification due to its excellent potential for practical applications. Autonomous driving [1], for instance, facilitates vehicles in making accurate driving decisions through the detection and classification of traffic signs. Image retrieval [2] is enhanced by identifying and classifying image content, resulting in improved retrieval efficiency. Security monitoring [3] involves the process of detecting, recognizing, and categorizing individuals or items that are deemed suspicious to ensure public safety. Medical imaging [4] is used by clinicians to classify and diagnose lesions. Agricultural applications [5] involve the identification and classification of field crops, as well as the detection of the growth of crops.

With the continuous development of machine learning, single-modal target classification technologies have also advanced rapidly. Deep learning has revolutionized the field of target classification through its end-to-end feature learning mechanism. In image classification, Convolutional Neural Networks (CNNs) [6] automatically extract hierarchical features (from edge textures to semantic information) through multiple layers of non-linear transformations. Models such as ResNet [7], EfficientNet [8], and VGG [9] have surpassed human classification accuracy on the ImageNet dataset, significantly improving classification efficiency. In the field of sound classification, end-to-end models based on Recurrent Neural Networks (RNNs) and Transformers (such as WaveNet [10]) can directly learn the temporal dependencies from raw audio signals, exhibiting stronger capabilities in sound classification. The core breakthrough of deep learning lies in eliminating the bottleneck of manual feature design by obtaining more essential feature representations through data-driven methods and improving overall performance by jointly optimizing feature extraction and classification processes. However, single-modal deep learning target classification has significant limitations. First, its semantic representation is incomplete, as a single modality can only capture partial attributes of the target. Second, it is highly sensitive to the environment, with a single modality being susceptible to interference from other data sources (e.g., visual models affected by lighting changes, speech models sensitive to background noise). Third, it has weak generalization with small sample sizes, and model performance significantly degrades when data for a single modality is scarce.

The rapid development of multimodal fusion technology [11,12,13] can be attributed to several key factors. First, multimodal fusion technology enables a more accurate description of the same entity through different modalities. Second, multimodal fusion technology can fully utilize the correlated information between modalities when classifying objects, thus complementing each other to classify the same object. Third, in multimodal fusion technology, if one modality’s information is missing or incomplete, the robust nature of multimodal fusion ensures that the system remains stable and operates without being affected. Multimodal fusion effectively overcomes the bottleneck of single-modal classification by leveraging the synergistic complementarity of heterogeneous data. Firstly, joint learning of vision and hearing can mutually correct environmental interference (e.g., using emotional features to compensate for noise in speech classification [14]). Secondly, multimodal pre-training constructs a unified semantic space through cross-modal alignment, enhancing fine-grained classification capabilities (e.g., combining pathological images and clinical text to improve disease diagnosis accuracy [15]). Moreover, through feature sharing between modalities (e.g., fusion of infrared and visible light images [16]), the reliance on labeled data from a single modality is reduced.

Despite the widespread application of multimodal fusion classification technologies [17,18,19,20,21], during the complementary process of cross-modal data fusion, the heterogeneity generated due to the distinct semantic characteristics of each modality can lead to insufficient feature information in the fused data. To address the heterogeneity issue in multimodal data fusion, this paper proposes an audiovisual fusion-based target classification framework. The innovations of this paper are as follows.

(1) A transformation converts the one-dimensional sound sequence into a two-dimensional discrete spectrogram. This visualizes the sound and yields a more stable representation of the sound signal. It also resolves the difficulty of fusing a one-dimensional sound sequence with a two-dimensional image, strengthens the complementarity of sound and image, and improves the accuracy of object classification.

(2) Under the condition of dual-channel input of sound and images, this study combines Convolutional Neural Networks (CNNs) with feature-level fusion. To address the misalignment issue between spectrograms and images during the fusion process, this paper introduces a self-attention mechanism module during feature fusion. This approach utilizes a weighted method to resolve the heterogeneity between the spectrogram and image fusion.

2. Related Work

2.1. Single-Modal Target Classification

Image classification, as a popular research topic in the field of computer vision, has developed rapidly in recent years. It employs a series of complex algorithms and models to deeply analyze various features in images, such as color, texture, shape, and edges, thereby accurately extracting the core information of the image. This information is then processed and transformed into a form that machines can understand and process. For example, in the ImageNet challenge in 2012, the introduction of the AlexNet model [22] achieved an overwhelming victory. Its network structure significantly improved the accuracy of image classification and solved the problems of gradient vanishing and overfitting during model training in deep learning. Subsequently, the introduction of GoogLeNet [23], VGG [9], and ResNet [7] accelerated progress in the field of image classification. They all optimized the network structure to enhance the model’s ability to process images and improve its classification accuracy. In 2020, Google proposed Vision Transformer (ViT) [24], which achieved end-to-end modeling of high-resolution images through a global attention mechanism, significantly enhancing the performance of image classification tasks. In 2025, YJ Ban [25] introduced the DINOv2 model, which employs self-supervised learning strategies to extract universal visual features without labeled data, significantly improving the adaptability of cross-domain image classification.

Sound classification, another research hotspot in the field of target classification, has also been widely explored by researchers. Sound signals possess unique spatiotemporal features that can provide effective information in complex environments. Therefore, extracting and analyzing these features has become the key to research. Deep learning models, trained on large-scale data, can learn more robust feature representations, thereby demonstrating higher robustness in complex environments with noise, accents, and varying speech rates. In 2019, Kong et al. [26] proposed a large-scale pre-trained audio neural network (PANNS) for audio pattern classification. This method introduced the Wavegram–Log-Mel–CNN architecture, which combines log-mel spectrograms and waveforms as input features, significantly enhancing the performance of audio pattern classification. In 2020, Desplanques et al. [27] proposed the ECAPA_TDNN method, which introduced the Squeeze-and-Excitation (SE) module to enhance the model’s ability to focus on important feature channels and aggregate features from multiple layers. By leveraging complementary information from different levels, this method improved the model’s feature representation capability and enhanced sound classification performance.

Despite the significant breakthroughs achieved in sound classification through deep learning, monomodal sound classification exhibits poor robustness in complex environments. Moreover, under the interference of noise, deep learning-based sound classification techniques may fail to classify targets. In the field of deep learning-based image classification, reliance on large-scale data annotation and extensive model training is significant. Additionally, under conditions of varying lighting and target occlusion, robustness tends to decline, leading to a substantial decrease in the accuracy of image target classification. Therefore, to enhance classification robustness, it is necessary to introduce other modalities to assist in target classification.

2.2. Multimodal Target Classification

Multimodal fusion object detection builds on the fusion of multimodal data and has been one of the important research directions in computer vision in recent years [28]. With the continuous improvement of computing power and sensor technology, data processing has progressed from monomodal object classification to multimodal object classification. Multimodal fusion object detection can combine different data structures, make full use of the complementary relationships between data, understand the environment more comprehensively, and improve the accuracy and robustness of object classification.

In recent years, deep learning has played a key role in multimodal fusion object detection. In 2021, Xu et al. proposed a multimodal fusion framework based on Transformer, which effectively fused image and LiDAR data through self-attention mechanisms [29]. This method performed excellently in object detection tasks in autonomous driving scenarios, significantly improving classification accuracy and speed. Aligning and fusing cross-modal features is one of the core challenges in multimodal fusion object detection. In 2024, K. Islam et al. proposed a joint enhancement method for multimodal data [30], which reduced the information loss between different modalities through collaborative enhancement at the data level. This method performed particularly well in complex environments such as low light and occlusion. In 2025, Chen et al. further improved the Transformer model [31] and proposed a multi-scale multimodal fusion network (MMFN). This network can perform feature fusion at different scales, thereby better capturing the details and contextual information of objects.

Despite the progress made in multimodal fusion object detection in the above cases, there are still problems to be solved in object detection. For example, when describing the same object, different modalities often have significant semantic differences due to differences in sensor working principles, imaging mechanisms, and data representation methods. This semantic gap is not only reflected in the mismatch of features between different modalities but also in the inconsistency of descriptions of the same object. Therefore, how to solve the semantic gap between features of different modalities is the key to achieving efficient and accurate multimodal fusion object detection.

In response to the above problems, this research starts from the task of using audio to assist image classification, aiming to transfer image features from training set samples (containing known categories) to test set samples (containing unknown categories) through shared semantic features. In this process, this paper explores the mapping mechanism of semantic feature space to image feature space and its generalization ability. Meanwhile, to address the semantic gap between different modal features, this paper introduces a feature matching mechanism in the multimodal information fusion process. Through this mechanism, this paper can effectively align the feature representations of different modalities, thereby reducing their heterogeneity differences.

3. Proposed Method

3.1. Audio-Visual Fusion Network Model

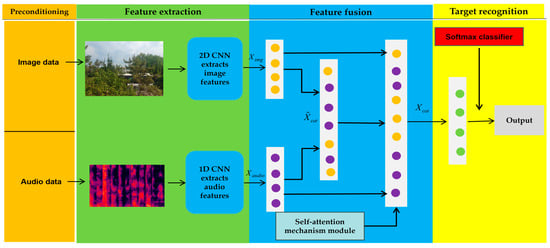

Figure 1 illustrates the architecture of M-AVFA. To address the issue of cross-modal fusion between audio and visual data, we explored a novel approach. First, since audio signals are one-dimensional temporal signals, we leveraged the relationship between the time domain and the frequency domain. By applying the Fast Fourier Transform (FFT), we converted the temporal information into frequency information. Subsequently, we extracted audio features through a series of operations, including triangular filters and discrete cosine transforms, and transformed them into corresponding audio feature spectrograms.

Figure 1. General framework of M-AVFA.
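The triangular filtering and discrete cosine transform steps mentioned above correspond to the usual MFCC pipeline. The following is a minimal, standard-library-only sketch of those two steps and not the authors' implementation. The function names, the filter and coefficient counts, and the assumption that the input is a one-sided magnitude spectrum covering 0 Hz to half the sample rate are illustrative choices that do not come from the paper.

```python
import math


def hz_to_mel(freq_hz):
    """Convert a frequency in Hz to the mel scale (common 2595*log10 form)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_filterbank(num_filters, num_bins, sample_rate):
    """Build triangular filters over `num_bins` bins spanning 0..sample_rate/2.

    `num_filters` and `num_bins` are hypothetical parameters chosen by the caller.
    """
    nyquist = sample_rate / 2.0
    max_mel = hz_to_mel(nyquist)
    # num_filters + 2 edge frequencies, equally spaced on the mel scale.
    edges_hz = [mel_to_hz(max_mel * i / (num_filters + 1))
                for i in range(num_filters + 2)]
    bin_hz = [nyquist * b / (num_bins - 1) for b in range(num_bins)]
    bank = []
    for m in range(1, num_filters + 1):
        lo, mid, hi = edges_hz[m - 1], edges_hz[m], edges_hz[m + 1]
        row = []
        for f in bin_hz:
            if lo < f <= mid:
                row.append((f - lo) / (mid - lo))   # rising edge of the triangle
            elif mid < f < hi:
                row.append((hi - f) / (hi - mid))   # falling edge of the triangle
            else:
                row.append(0.0)
        bank.append(row)
    return bank


def dct_ii(values):
    """Unnormalized type-II discrete cosine transform."""
    n = len(values)
    return [sum(v * math.cos(math.pi * k * (i + 0.5) / n)
                for i, v in enumerate(values))
            for k in range(n)]


def mfcc_from_magnitudes(magnitudes, sample_rate, num_filters=8, num_coeffs=4):
    """Mel filterbank energies -> log -> DCT, keeping the first coefficients."""
    bank = mel_filterbank(num_filters, len(magnitudes), sample_rate)
    energies = [sum(w * m * m for w, m in zip(row, magnitudes)) for row in bank]
    log_energies = [math.log(e + 1e-10) for e in energies]  # avoid log(0)
    return dct_ii(log_energies)[:num_coeffs]
```

In practice a numerical library would replace the explicit loops; the sketch only makes the order of operations explicit.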

To fully exploit the semantic correlations between auditory and visual modalities, we designed a dual-stream network architecture. The audio spectrogram and target image are independently processed through a 1D CNN and 2D CNN, respectively, to extract modality-specific audio and visual features. These unimodal features are then fused through cross-modal interaction to generate a joint semantic representation. To preserve modality-specific discriminability while enhancing complementary information, both the original unimodal features and the fused representation are adaptively integrated via a self-attention-based feature fusion module, producing the final unified semantic embedding. This hierarchical fusion mechanism ensures the retention of critical unimodal patterns while capturing cross-modal dependencies. The resulting embedding is subsequently fed into a Softmax classifier to accomplish unified target classification.

3.2. The Conversion of the Spectrogram (Audio Preprocessing)

Because sound acquisition is affected by environmental factors and the extraction of sound characteristics degrades under strong noise, we use multiple microphone arrays on the hardware side and apply beamforming to lock onto the direction of the sound source, which yields a good signal-to-noise ratio.

The sound captured by the microphone array is a one-dimensional time series signal. First, the sound signal is passed through a high-pass filter, given by Equation (1):

$$y(t) = x(t) - \alpha\, x(t-1) \tag{1}$$

where the filter coefficient $\alpha$ is set to 0.95, $t$ is the time index of the sound signal, $x(t)$ is the input signal, and $y(t)$ is the output signal. This completes the pre-emphasis of the input sound signal and compensates for the high-frequency part of the speech signal that is suppressed by the sound production system.
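The pre-emphasis step can be sketched in a few lines. This assumes the usual first-order form, in which each output sample is the input sample minus 0.95 times the previous input sample; the function name and the choice to pass the first sample through unchanged are illustrative assumptions.

```python
def pre_emphasis(signal, alpha=0.95):
    """First-order pre-emphasis: out[t] = signal[t] - alpha * signal[t - 1].

    The first sample has no predecessor and is passed through unchanged
    (an assumption; other boundary conventions exist).
    """
    if not signal:
        return []
    out = [float(signal[0])]
    for t in range(1, len(signal)):
        out.append(signal[t] - alpha * signal[t - 1])
    return out
```

A constant input is almost entirely removed after the first sample, while rapid sample-to-sample changes pass through, which is the high-pass behaviour the text describes.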

Because the sound signal is approximately stationary over short intervals, we apply a framing operation to the output of the high-pass filter. To smooth each frame, we multiply it by a Hamming window, which increases the continuity between the left and right ends of the frame. Let the signal after framing be $s(n)$, $n = 0, 1, 2, \ldots, N-1$, where $N$ is the length of the window. By weighting the signal, the Hamming window gradually attenuates the frame edges, which reduces the leakage of signal energy into adjacent frequency components, that is, the interference between different frequency components, and thereby suppresses the influence of noise. Each frame is multiplied by the Hamming window as in Equation (2):

$$s'(n) = s(n)\, w(n) \tag{2}$$

where $s(n)$ is a frame indexed by $n$ and $w(n)$ is the Hamming window, defined by Equation (3):

$$w(n) = 0.54 - 0.46 \cos\left(\frac{2\pi n}{L-1}\right), \quad 0 \le n \le L-1 \tag{3}$$

where $w(n)$ is the weight applied to the signal at the $n$th discrete time point of the window, $L$ is the length of the window (equal to the frame length $N$), and $n$ is the sample index within the window.
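Framing and windowing can be sketched as follows, assuming the standard Hamming coefficients 0.54 and 0.46. The frame length and hop length are hypothetical parameters, since the values used in the experiments are not stated here.

```python
import math


def hamming_window(length):
    """Hamming window w(n) = 0.54 - 0.46 * cos(2*pi*n / (length - 1))."""
    if length == 1:
        return [1.0]
    return [0.54 - 0.46 * math.cos(2.0 * math.pi * n / (length - 1))
            for n in range(length)]


def frame_signal(signal, frame_length, hop_length):
    """Split a signal into overlapping frames; a trailing partial frame is dropped."""
    return [signal[start:start + frame_length]
            for start in range(0, len(signal) - frame_length + 1, hop_length)]


def window_frames(frames):
    """Multiply every frame sample by the matching Hamming weight."""
    if not frames:
        return []
    window = hamming_window(len(frames[0]))
    return [[s * w for s, w in zip(frame, window)] for frame in frames]
```

The window tapers each frame to 0.08 of its amplitude at both ends and leaves the centre untouched, which is what reduces spectral leakage in the next step.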

The characteristics of a signal are easier to observe in its frequency-domain energy distribution, and different sounds can be distinguished by their different energy distributions. We therefore perform an N-point FFT on each windowed frame to compute its spectrum, as in Equation (4):

$$X(k) = \sum_{n=0}^{N-1} s(n)\, w(n)\, e^{-j 2\pi k n / N}, \quad 0 \le k \le N-1 \tag{4}$$

where $s(n)$ is the input frame data, $w(n)$ is the Hamming window function, $N$ is the number of Fourier transform points, and $X(k)$ is the frequency-domain expression of the input audio signal. The absolute values of the Fourier transform results give the spectral amplitude.

The spectral amplitude of each time frame is arranged into a two-dimensional matrix according to Equation (5):

$$S(i, k) = \left| X_i(k) \right| \tag{5}$$

where $i$ is the index of the time frame, $k$ is the index of the frequency, $X_i(k)$ is the Fourier transform of the $i$th frame, and $S$ is a two-dimensional matrix. This matrix is the spectrogram of the sound.
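The transform and the stacking of per-frame amplitudes into a matrix can be sketched as below. For readability the sketch evaluates the discrete Fourier transform directly instead of using a fast algorithm; an FFT produces the same values more efficiently. Function names are illustrative, and the frames are assumed to be already windowed.

```python
import cmath


def dft_magnitude(frame):
    """Magnitude of the N-point DFT of one (already windowed) frame."""
    n_points = len(frame)
    magnitudes = []
    for k in range(n_points):
        acc = 0j
        for n, sample in enumerate(frame):
            acc += sample * cmath.exp(-2j * cmath.pi * k * n / n_points)
        magnitudes.append(abs(acc))
    return magnitudes


def spectrogram(windowed_frames):
    """Rows are time frames, columns are frequency bins."""
    return [dft_magnitude(frame) for frame in windowed_frames]
```

For example, `dft_magnitude([1.0, 0.0, -1.0, 0.0])` concentrates its energy in bins 1 and 3, the positive- and negative-frequency images of a tone at one quarter of the sampling rate.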

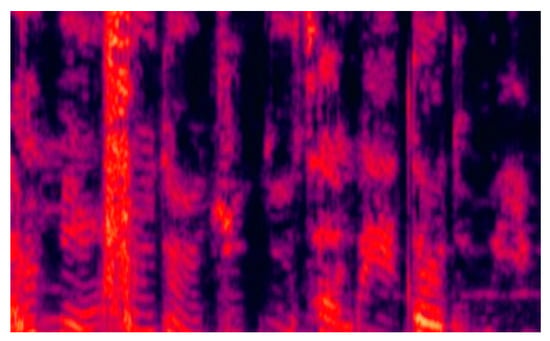

This completes the conversion of the one-dimensional acoustic signal into a two-dimensional spectrogram. Figure 2 shows the resulting spectrogram.

Figure 2. Spectrogram.

3.3. Feature Extraction

3.3.1. Sound Feature Extraction

In Section 3.2, preprocessing operations were applied to the audio data. This paper employs Mel-Frequency Cepstral Coefficients (MFCC) to extract features of sound targets in complex environments. The principle behind MFCC extraction is based on the spectrogram of the sound. However, when fusing these features with image features, MFCC is unable to capture higher-level phonetic information from the sound. Therefore, this paper further extracts the semantic information of the sound using a one-dimensional convolutional neural network (1D CNN). During the extraction process, the sound features are used as the input, denoted as . As shown in Equation (6),

Among them, represents the semantic features of the sound, conv denotes the convolution operation, and represents the size of the convolution kernel.
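As a rough illustration of the convolution operation referred to above, the sketch below applies a single 1D kernel to a sequence and passes the result through a ReLU. The kernel values, the stride parameter, and the ReLU activation are illustrative assumptions; the layer configuration of the paper's 1D CNN is not specified in this section.

```python
def conv1d(sequence, kernel, stride=1):
    """Valid (no padding) 1D cross-correlation, the operation CNN layers compute."""
    k = len(kernel)
    return [sum(sequence[i + j] * kernel[j] for j in range(k))
            for i in range(0, len(sequence) - k + 1, stride)]


def relu(values):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in values]
```

With a kernel of size k and stride 1, an input of length n yields n - k + 1 outputs, so the kernel size directly controls how much temporal context each output feature sees.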

3.3.2. Image Feature Extraction

In the design of this paper, a 2D CNN is specifically selected to process image data because of its proficiency in capturing spatial features of images. The designed 2D CNN consists of multiple convolutional layers, pooling layers, and fully connected layers. Convolutional layers extract local features from images using different convolution kernels, while pooling layers are used to reduce the dimensionality of feature maps, decrease computational load, and retain important features. Fully connected layers map the extracted features to the target category space, providing a basis for subsequent feature fusion and target classification. This section uses the image features obtained from the aforementioned image as input, denoted as . As shown in Equation (7),

Among them, represents the semantic features of the target image, conv denotes the convolution operation, H and W represent the height and width of the image, and C represents the number of channels in the image.
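The convolution and pooling layers described above can be illustrated on a single-channel image. This is a simplified sketch under stated assumptions: one channel, one kernel, no padding, and max pooling with a hypothetical 2x2 window. A real 2D CNN would stack many such kernels over C input channels.

```python
def conv2d(image, kernel):
    """Valid 2D cross-correlation of a single-channel image with one kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; incomplete border windows are dropped."""
    pooled = []
    for r in range(0, len(feature_map) - size + 1, size):
        row = []
        for c in range(0, len(feature_map[0]) - size + 1, size):
            row.append(max(feature_map[r + i][c + j]
                           for i in range(size) for j in range(size)))
        pooled.append(row)
    return pooled
```

Pooling a 4x4 feature map with a 2x2 window leaves a 2x2 map, which is the dimensionality reduction the text attributes to the pooling layers.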

3.4. Modal Fusion

When fusing audio and visual data, it is essential to ensure alignment between the modalities. The data collected in this study consists of 10 s videos, from which the visual and audio data are separated. The visual data have a sampling rate of 24 frames per second, while the audio data are sampled at 24 Hz. By matching each frame of the video with its corresponding audio segment, this study achieves alignment between the audio and visual modalities. The visual modality contains rich detail information, while the audio modality provides complementary auditory details to the visual modality.
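The frame-to-audio matching described above amounts to mapping each video frame index to the span of audio samples recorded during that frame. The following sketch shows one way to compute those spans; the function names are hypothetical, and the audio sampling rate is left as a parameter.

```python
def audio_span_for_frame(frame_index, fps, audio_rate):
    """Half-open range [start, end) of audio samples covering one video frame."""
    start = frame_index * audio_rate // fps
    end = (frame_index + 1) * audio_rate // fps
    return start, end


def align_clip(duration_s, fps, audio_rate):
    """One audio span per video frame for a clip of the given duration."""
    num_frames = int(duration_s * fps)
    return [audio_span_for_frame(i, fps, audio_rate) for i in range(num_frames)]
```

For a 10 s clip at 24 frames per second, `align_clip` returns 240 consecutive, non-overlapping spans, so every audio sample is assigned to exactly one frame.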

As discussed in Section 3.3.1 and Section 3.3.2, this study represents the high-level semantic features of images and sounds as and , respectively. A multimodal fusion network structure is constructed to fuse these features. The high-level semantic features extracted from images and sounds using the CNN are mapped into a shared semantic subspace through a joint architecture for feature fusion, resulting in the fused high-level semantic feature , as shown in Equation (8):

After obtaining the fused high-level semantic feature in the feature fusion stage, this paper introduces a Self-Attention module (SA module) to learn the representations of image features and audio features. The high-level semantic feature , the image features extracted by the 2D CNN , and the audio features extracted by the 1D CNN are passed through a self-channel attention module and ultimately weighted to obtain the result. The optimized high-level semantic feature , the optimized image feature , and the optimized audio feature are then fused. The attention weights , , and between each modality are calculated for , , and , respectively, as shown in Equation (9):

Among them, and .

The self-attention weights are then used to perform a weighted fusion of the modal features, which yields the final high-level semantic information, as shown in Equation (10):

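To complete the target classification task, the Softmax function is used to normalize the outputs into a probability distribution over the classes. The Softmax formula is shown in Equation (11):

$$\mathrm{Softmax}(z_i) = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}} \tag{11}$$

where $z_i$ is the score for class $i$ and $K$ is the number of classes.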

The audiovisual fusion network proposed in this paper gradually enhances the matching degree and fusion effect between sound and visual features. During iteration, the network adjusts and optimizes the feature extraction and fusion of each modality according to the current feature representation and interaction weights, so as to obtain the final fused high-level semantic information. This cross-modal interaction and fusion not only improves the robustness and generalization ability of the feature representation but also addresses the data heterogeneity among modalities. It makes full use of the complementarity between modalities and provides strong support for the subsequent classification output.
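The weighted fusion and Softmax classification described in this section can be illustrated with a small sketch. It is not the authors' network: the scaled dot-product scoring, the `query` vector standing in for learned attention parameters, and the `class_weights` matrix are all assumptions introduced for illustration and are not taken from Equations (8)-(10).

```python
import math


def softmax(scores):
    """Numerically stable softmax: subtract the maximum before exponentiating."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def attention_fuse(features, query):
    """Weight each feature vector by softmax(feature . query / sqrt(d)) and sum.

    `features` would hold the fused, image, and audio feature vectors;
    `query` is a hypothetical learned vector of the same dimension d.
    """
    dim = len(query)
    scores = [dot(f, query) / math.sqrt(dim) for f in features]
    weights = softmax(scores)
    fused = [sum(w * f[i] for w, f in zip(weights, features)) for i in range(dim)]
    return fused, weights


def classify(fused, class_weights):
    """Linear scores per class followed by softmax (one weight row per class)."""
    logits = [dot(row, fused) for row in class_weights]
    return softmax(logits)
```

Because the attention weights sum to one, the fused vector is a convex combination of the three inputs, so a noisy modality can be down-weighted without being discarded.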

4. Experimental Results and Analysis

4.1. Dataset Description and Experimental Platform

The self-built dataset used to evaluate M-AVFA consists of common everyday objects in four categories: automobiles, buses, people, and bicycles. Images are captured with a camera provided by our laboratory, and a microphone array collects the corresponding sound signals. In the sound dataset, a car's driving, braking, and horn sounds are labeled as car-related; a bus's driving sounds and sounds from the bus station are labeled as bus-related; a bicycle's brake, chain drive, and bell sounds are labeled as bicycle-related; and the sounds of people walking and speaking are labeled as people-related. In the image dataset, cars, buses, bicycles, and people are labeled by visual inspection. The dataset was primarily collected outdoors, and samples of the outdoor data are shown in Figure 3.

Figure 3. Data acquisition. Panel (e) shows the microphone used to collect the sound corresponding to panels (a) “occluded bicycles”, (b) “occluded buses”, (c) “occluded cars”, and (d) “occluded pedestrians” (captured by the camera), while simultaneously acquiring environmental acoustic data. These data are as follows: (a) bicycle sounds in outdoor environments, (b) bus noises in exterior settings, (c) vehicular traffic sounds in open-road conditions, and (d) human speech in indoor crowded spaces.

To strengthen the nonlinearity and robustness of the image dataset, we adopted a series of operations, such as adding noise, resizing images, and adjusting contrast ratios, for data augmentation, thereby expanding the image dataset to a total of 8408 samples across four object categories. For the audio dataset, each sound file was segmented into 3 s clips for storage, with incomplete segments (shorter than 3 s) retained to ensure temporal alignment with visual counterparts. Consequently, the audio dataset was correspondingly expanded to 8408 samples. (To maintain modality consistency, the number of image and audio samples per target category was strictly matched.)
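The 3 s segmentation with the trailing short segment retained can be sketched as follows. The function name is illustrative, and the sample rate is a parameter because it is not stated in this paragraph.

```python
def segment_audio(samples, sample_rate, clip_seconds=3.0):
    """Cut a recording into fixed-length clips, keeping a shorter final clip."""
    clip_len = int(sample_rate * clip_seconds)
    if clip_len <= 0:
        raise ValueError("sample_rate * clip_seconds must be at least one sample")
    return [samples[start:start + clip_len]
            for start in range(0, len(samples), clip_len)]
```

Keeping the final short clip, instead of discarding it, preserves a one-to-one pairing between audio clips and their visual counterparts.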

4.2. Introduction of Experimental Platform and Evaluation Indicators

4.2.1. Evaluation Metrics

To assess the effectiveness of the proposed object classification method, we use Accuracy, Precision, Recall, F1 score, and G-Mean as evaluation metrics, defined in Equations (12)–(16):

TP, TN, FP, and FN denote true positives, true negatives, false positives, and false negatives, respectively. Accuracy alone is an insufficient measure, especially for unbalanced datasets, so the additional metrics are included to measure class-based performance.
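The metrics can be computed from the four counts as in the sketch below, using their conventional definitions. One point is an assumption: G-Mean is taken here as the geometric mean of recall and specificity, which is the most common definition, and the paper's Equation (16) may define it differently. For a four-class problem the counts would be obtained per class in a one-vs-rest fashion.

```python
import math


def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall, F1, and G-Mean from confusion counts.

    Ratios with a zero denominator are reported as 0.0.
    """
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    specificity = tn / (tn + fp) if (tn + fp) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    # Assumption: G-Mean = sqrt(recall * specificity).
    g_mean = math.sqrt(recall * specificity)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "g_mean": g_mean,
    }
```

Unlike accuracy, G-Mean collapses toward zero when either the positive or the negative class is poorly recognized, which is why it is informative on unbalanced data.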

4.2.2. Introduction to the Experimental Platform

This section validates the effectiveness of the M-AVFA fusion classification proposed in this paper. First, the architecture of the 2D-CNN was determined. Once the CNN architecture was established, we assessed the effects of various parameters. Afterwards, we compared the audio-visual fusion recognition method with image recognition algorithms and sound recognition algorithms.

During training, the predicted labels and the actual labels of the training objects reached a well-fitted state. The sound and image data used for training were randomly selected from the self-built dataset. Both the sound dataset and the image dataset were randomly divided into training and validation sets at a 9:1 ratio. All parameters are listed in Table 1. To obtain the best result while preventing overfitting, we used the model at the point where the loss had just converged. The proposed method was implemented in Python 3.8 with the PyTorch 1.10.1 framework and runs on Ubuntu 20.04 with an RTX 4090 GPU.
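A 9:1 random split can be sketched as below. The sketch assumes that each image is split together with its matching audio clip so that the two modalities stay paired; the function name, the fixed seed, and the pairing strategy are illustrative assumptions, not details given in the paper.

```python
import random


def split_pairs(pairs, train_fraction=0.9, seed=0):
    """Shuffle (image, audio) pairs and split them into training and validation sets."""
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]
```

Applied to 8408 paired samples, this yields 7567 training pairs and 841 validation pairs.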

Table 1. Experimental parameters.

4.3. Comparative Test

4.3.1. CNN Structure Experiment

This section evaluates 2D-CNN models with different convolutional architectures to find the best-performing model. Inspired by Farzin KazemiFae’s comparative experiment, we conducted convolution depth tests on the AVE dataset. We first focus on the depth of the convolutional layers and measure each metric after fusion for different depths. Four groups of comparison experiments were run for each object class, with convolution depths of one, two, three, and four layers, as shown in Table 2.

Table 2. AVE dataset convolution depth test.

As shown in Table 2, when the convolutional depth is three or four layers, the metrics of Accuracy, Recall, Precision, F1 score, and G-Mean show little difference. In contrast, when the convolutional depth is one or two layers, these metrics are significantly lower. Therefore, the model performs better with a convolutional depth of three or four layers. However, as the convolutional depth increases, the computational cost also rises correspondingly, and there is a risk of overfitting.

Therefore, considering the indicators of Precision, Recall, Accuracy, F1 score and G-Mean, this paper selects a convolution depth of three layers.

4.3.2. Cross-Scene Experiment

In order to verify that the proposed method can adapt to more diverse scenes, indoor and noisy environments were selected, respectively, to test the proposed method and the sound target classification method. The experimental results of the indoor environment are shown in Table 3.

Table 3. Indoor environment test.

In the indoor environment, M-AVFA improved accuracy by 6.17% compared to PANNS_CNN10 and by 4.88% compared to ECAPA_TDNN. The experimental results show that the proposed M-AVFA method outperforms the single-modal sound target classification algorithms in the indoor environment.

In order to verify that the proposed method can adapt to more diverse scenarios, a noisy environment was selected to test the proposed method and the sound target classification method, respectively. The experimental results of the noisy environment are shown in Table 4.

Table 4. Noisy environment experiment.

In the noisy environment, the sound target classification methods are clearly disturbed. The accuracy of M-AVFA increased by 10.53% compared to PANNS_CNN10 and by 7.69% compared to ECAPA_TDNN. The experimental results show that the proposed M-AVFA method outperforms the single-modal sound target classification algorithms in the noisy environment.

In order to verify that the proposed method can adapt to more diverse scenes, occlusion and low-light environments were selected to test the proposed method and the image target classification methods, respectively. The experimental results under the occluded environment are shown in Table 5.

Table 5. Occlusion environment experiment.

In the occlusion environment, the accuracy of M-AVFA improved by 10.26% compared to ViT and by 13.16% compared to VGG16. The experimental results show that the proposed M-AVFA method outperforms the single-modal image object classification algorithms in the occlusion environment.

In order to verify the influence of low-light conditions on image target classification, this paper chose a low-light environment to test the proposed method and image target classification method, and the experimental results are shown in Table 6.

Table 6. Low-light environment experiment.

In the low-light environment, the accuracy of M-AVFA increased by 8.86% compared to ViT and by 10.26% compared to VGG16. The experimental results show that the proposed M-AVFA method outperforms the single-modal image object classification algorithms in the low-light environment.

4.3.3. Ablation Test

Ablation experiments evaluate the contribution of specific components or features to a model's performance by removing or modifying them one at a time. To examine the importance of each component of the M-AVFA algorithm, this paper designs a series of ablation experiments. Key modules (the feature extraction modules and the fusion strategy) are systematically removed or replaced, and the results under the different configurations are compared in order to quantify each component's impact on overall performance and its contribution to the target recognition task.

Experiment 1: Ablation experiment of feature extraction module

The first experiment focuses on the feature extraction modules. The image feature extraction module and the audio feature extraction module, the core components of the visual and auditory modalities, respectively, are each removed in turn, and the performance of the resulting model on the dataset is observed. The experimental results are shown in Table 7.

Table 7. Feature extraction module ablation experiment.

The experimental results show that removing the feature extraction module of either modality significantly degrades the performance of the model on the test set. Both image features and sound features are therefore indispensable to the target recognition task, and they complement each other to give the model a rich source of information. Performance drops most when the fusion module is removed, which further supports the central role of audiovisual information fusion in target recognition in complex environments. By integrating multimodal information, the fusion module compensates for the limitations of a single modality in a specific scene and improves the robustness and accuracy of the model.

Experiment 2: Ablation experiment of fusion strategy

To examine the influence of different fusion strategies on multimodal target recognition performance, this study designed a series of ablation experiments that analyze the effectiveness and applicability of each strategy. Specifically, the experiment centers on the key modules of the M-AVFA algorithm and observes the effect on model performance of replacing or removing specific fusion strategies one at a time.

The experiment compares three fusion strategies: feature fusion, decision fusion, and the M-AVFA fusion proposed in this paper. The results are shown in Table 8.

Table 8. Ablation experiment of fusion strategy.

Feature fusion makes full use of the original information of each modality by directly splicing (concatenating) the multimodal features, but it easily introduces noise in complex scenes. Decision fusion combines the decision results of each modality by weighting or voting, which is robust; however, in some cases it cannot make full use of the complementary information across modalities. In the comparison in Table 8, the fusion strategy proposed in this paper shows advantages over both.
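The difference between the two baseline strategies can be illustrated with a minimal sketch. It is not the implementation used in this paper: the function names, the fusion weight `w_image`, and all numeric values are hypothetical and chosen only for illustration. Feature fusion splices the per-modality feature vectors before classification, whereas decision fusion combines per-modality class probabilities (by weighting) or predicted labels (by voting).

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def feature_fusion(image_feat, audio_feat):
    """Feature-level fusion: splice (concatenate) the two feature vectors."""
    return list(image_feat) + list(audio_feat)


def decision_fusion(image_probs, audio_probs, w_image=0.5):
    """Decision-level fusion: weighted average of per-modality class probabilities."""
    w_audio = 1.0 - w_image
    return [w_image * pi + w_audio * pa for pi, pa in zip(image_probs, audio_probs)]


def majority_vote(labels):
    """Decision-level fusion by voting over predicted labels."""
    counts = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    return max(counts, key=counts.get)


# Hypothetical toy features and classifier scores (not taken from the paper).
image_feat = [0.2, 0.7, 0.1]
audio_feat = [0.9, 0.4]
fused_feat = feature_fusion(image_feat, audio_feat)  # length 3 + 2 = 5

image_probs = softmax([2.0, 0.5, 0.1])  # image branch favors class 0
audio_probs = softmax([0.3, 1.8, 0.2])  # audio branch favors class 1
fused_probs = decision_fusion(image_probs, audio_probs, w_image=0.6)
fused_label = max(range(len(fused_probs)), key=fused_probs.__getitem__)
```

In this toy case the two branches disagree, and the weighted decision follows whichever branch carries the larger weight. This is the sense in which decision fusion is robust but does not exploit feature-level complementarity.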

Experiment 3: Comparison of feature fusion methods

To examine the influence of different feature fusion methods on multimodal target recognition performance, a series of ablation experiments was designed to analyze the effectiveness and applicability of each method. Specifically, the experiment focused on the key modules of the M-AVFA algorithm and observed the effect on model performance of replacing or removing specific feature fusion methods one at a time, as shown in Table 9.

Table 9. Feature fusion ablation experiment.

The experimental results show that the choice of feature fusion method has a significant effect on multimodal target recognition performance. Self-attention fusion is superior to splicing feature fusion and weighted feature fusion in accuracy, recall, precision, and F1 score, indicating that it captures the complementary information among multimodal features more effectively and thus improves the model's adaptability to complex scenes and the accuracy of target recognition. This result provides a basis for optimizing the feature fusion module of the M-AVFA algorithm and supports the use of the self-attention mechanism in multimodal target recognition.
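To make the comparison concrete, the following is a minimal sketch of self-attention fusion next to weighted feature fusion. It is an illustration under stated assumptions rather than the M-AVFA fusion module itself: each modality is reduced to a single token, the queries, keys, and values are the tokens themselves (a trained model would use learned projections), and the function names and feature values are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def self_attention_fusion(tokens):
    """Scaled dot-product self-attention over modality tokens.

    Assumption: identity projections, so Q = K = V = tokens.
    Returns the mean of the attended tokens as the fused feature vector.
    """
    d = len(tokens[0])
    attended = []
    for q in tokens:
        # Attention weights of this token over all modality tokens.
        weights = softmax([dot(q, k) / math.sqrt(d) for k in tokens])
        attended.append(
            [sum(w * v[i] for w, v in zip(weights, tokens)) for i in range(d)]
        )
    n = len(attended)
    return [sum(tok[i] for tok in attended) / n for i in range(d)]


def weighted_fusion(image_feat, audio_feat, alpha=0.5):
    """Weighted feature fusion with a fixed, input-independent weight alpha."""
    return [alpha * a + (1.0 - alpha) * b for a, b in zip(image_feat, audio_feat)]


# Hypothetical features of equal dimension (not taken from the paper).
image_feat = [1.0, 0.0, 0.5, 0.2]
audio_feat = [0.1, 0.9, 0.4, 0.3]

fused_attention = self_attention_fusion([image_feat, audio_feat])
fused_weighted = weighted_fusion(image_feat, audio_feat, alpha=0.5)
```

The design difference is that the attention weights are computed from the features of each input, whereas `alpha` in weighted fusion is fixed for all inputs, and splicing applies no weighting at all.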

4.3.4. Model Feasibility Experiment

To verify the feasibility of the M-AVFA model proposed in this paper, it was tested on the audiovisual event (AVE) dataset and compared with other multimodal fusion classification methods, namely STNet and PCTDF. The experimental results are shown in Table 10.

Table 10. Test on AVE dataset.

As shown in Table 10, the M-AVFA method proposed in this paper classifies audiovisual events on the AVE dataset better than both the PCTDF method and the STNet method. In terms of accuracy, M-AVFA improves on PCTDF by 1.77% and on STNet by 1.49%. In terms of G-Mean, M-AVFA improves on PCTDF by 1.69% and on STNet by 1.5%. These results show the advantage of the proposed method on the AVE dataset.
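For readers unfamiliar with the two indices, the sketch below shows one common way to compute them. The paper does not define G-Mean in this section, so the definition used here (the geometric mean of the per-class recalls) is an assumption, and the labels are hypothetical toy data rather than AVE results.

```python
import math


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def per_class_recall(y_true, y_pred):
    """Recall of every class that occurs in y_true."""
    recalls = {}
    for cls in sorted(set(y_true)):
        total = sum(1 for t in y_true if t == cls)
        hits = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
        recalls[cls] = hits / total
    return recalls


def g_mean(y_true, y_pred):
    """Assumed definition: geometric mean of the per-class recalls."""
    recalls = list(per_class_recall(y_true, y_pred).values())
    return math.prod(recalls) ** (1.0 / len(recalls))


# Hypothetical labels for a three-class toy problem.
y_true = ["dog", "dog", "dog", "dog", "siren", "siren", "bell", "bell"]
y_pred = ["dog", "dog", "dog", "siren", "siren", "siren", "bell", "dog"]

acc = accuracy(y_true, y_pred)  # 6 of 8 correct
gm = g_mean(y_true, y_pred)     # recalls: bell 0.5, dog 0.75, siren 1.0
```

Under this definition, G-Mean penalizes a model that performs poorly on any single class, which is why it is often reported alongside accuracy when classes are imbalanced.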

4.3.5. Comparison with SOTA Methods

To evaluate the validity of the proposed method, this paper compares it with both monomodal target recognition algorithms and the current mainstream multimodal fusion recognition algorithms. For monomodal audio recognition, the methods selected are PANNS_CNN [26] and ECAPA_TDNN [27]. For monomodal image recognition, the methods selected are ViT [24] and VGG [9]. For multimodal fusion target recognition, the methods chosen are STNet [32], proposed by Li et al. in 2024; the personalized CTD framework (PCTDF) [33], proposed by Borsoi et al. in 2024; AFT-SAM [34], proposed by Che et al. in 2025; and PSFMMA [35], proposed by Li et al. in 2025. These algorithms are compared with the M-AVFA proposed in this paper. The recognition performance of each algorithm was obtained by averaging the results of five experiments.

As shown in Table 11, the proposed method in this paper achieves an average recognition rate of 84.32%, which outperforms other methods.

Table 11. Quantitative results compared with SOTA methods.

- Comparison with monomodal audio recognition methods: Compared with PANNS_CNN, the proposed method in this paper increases the average recognition rate by 7.9%. Compared with ECAPA_TDNN, the proposed method increases the average recognition rate by 7.7%. This indicates that multimodal fusion methods can effectively compensate for the shortcomings of monomodal methods in audio feature extraction, thereby enhancing recognition performance.

- Comparison with monomodal image recognition methods: Compared with ViT, the proposed method increases the average recognition rate by 7.5%. Compared with VGG, the proposed method increases the average recognition rate by 9.7%. The results show that multimodal fusion further enhances the accuracy of target recognition, especially in complex scenarios where the complementarity of multimodal information plays a significant role.

- Comparison with the latest multimodal fusion recognition methods: Compared with the PCTDF method, the proposed method increases the average recognition rate by 2.6%. Compared with the STNet method, the recognition accuracy of the proposed method is increased by 2.4%. Compared with the AFT-SAM method, the proposed method increases the average recognition rate by 1.2%. Compared with the PSFMMA method, the recognition accuracy of the proposed method is increased by 0.9%. These results demonstrate that the proposed M-AVFA algorithm has significant advantages in multimodal fusion, effectively integrating audio and visual information to enhance target recognition performance in complex environments.

In summary, the proposed M-AVFA algorithm performs exceptionally well in multimodal target recognition tasks, outperforming not only monomodal methods but also the latest multimodal fusion algorithms. This further validates the effectiveness and superiority of the M-AVFA algorithm in handling complex environment target recognition tasks.

5. Conclusions

Traditional monomodal recognition systems, due to their inherent limitations in data representation and sensitivity to environmental conditions, are increasingly unable to meet the robustness requirements for target recognition. Faced with significant technical challenges, such as the difficulty in aligning heterogeneous modality features and the substantial semantic gap across modalities, this study proposes a multimodal fusion framework based on audio-visual fusion. The network architecture employs a 2D CNN and a 1D CNN to extract semantic features from images and sounds, respectively, thereby providing high-quality modality-specific feature representations for subsequent fusion.

To address the heterogeneity among modalities during fusion, this paper proposes a feature fusion strategy based on a self-attention mechanism. Compared with the latest multimodal fusion recognition methods, the proposed method increases the average recognition rate by 2.6% over PCTDF, 2.4% over STNet, 1.2% over AFT-SAM, and 0.9% over PSFMMA. These results demonstrate that the proposed M-AVFA algorithm has significant advantages in multimodal fusion, effectively integrating audio and visual information to enhance target recognition performance in complex environments.

The multimodal fusion-based target classification in this study is built primarily on convolutional neural networks (CNNs). Future research will explore deeper network architectures, such as ResNet structures, and will prioritize validating the proposed method through classification experiments in complex outdoor environments. Adapting the current audio-visual fusion approach to target classification under extreme weather conditions also remains an area for future investigation.

Author Contributions

P.C. contributed to this paper. Conceptualization, X.Z. and H.Z.; methodology, P.C. and H.C.; writing—original draft preparation, P.C., X.C. and X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Innovative Research Group Project of the National Natural Science Foundation of China (51821003), the Excellent Youth foundation of Shanxi Province (202103021222011), the Key Research and Development Project of Shanxi Province (202202020101002), the Fundamental Research Program of Shanxi Province (202303021211150), the Aviation Science Foundation (2022Z0220U0002), and the Shanxi Province Key Laboratory of Quantum Sensing and Precision Measurement (201905D121001).

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding authors.

Acknowledgments

Thanks to all the authors for their help with this paper. Here I would like to thank Xuan Zhang for proposing the concept of this paper and for contributing analysis, experimental verification, and data preservation; Huijun Zhao for supporting the concepts and methods proposed in this paper and for helping with software writing and visualization; Huiliang Cao for the situation analysis, the investigation of the relevant background, and help with experimental verification and data preservation; and Xuemei Chen and Xiaochen Liu for their supervision, theoretical guidance, and project and funding support for the first draft of this paper.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Yurtsever, E.; Lambert, J.; Carballo, A.; Takeda, K. A survey of autonomous driving: Common practices and emerging technologies. IEEE Access 2020, 8, 58443–58469.

- Shamsipour, G.; Fekri-Ershad, S.; Sharifi, M.; Alaei, A. Improve the efficiency of handcrafted features in image retrieval by adding selected feature generating layers of deep convolutional neural networks. Signal Image Video Process. 2024, 18, 2607–2620.

- Lagraa, S.; Husák, M.; Seba, H.; Vuppala, S.; State, R.; Ouedraogo, M. A review on graph-based approaches for network security monitoring and botnet detection. Int. J. Inf. Secur. 2024, 23, 119–140.

- Zhang, S.; Metaxas, D. On the challenges and perspectives of foundation models for medical image analysis. Med. Image Anal. 2024, 91, 102996.

- Al-Nuaimy, M.N.M.; Azizi, N.; Nural, Y.; Yabalak, E. Recent advances in environmental and agricultural applications of hydrochars: A review. Environ. Res. 2024, 250, 117923.

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Van den Oord, A.; Dieleman, S.; Zen, H.; Simonyan, K.; Vinyals, O.; Graves, A.; Kalchbrenner, N.; Senior, A.; Kavukcuoglu, K. Wavenet: A generative model for raw audio. arXiv 2016, arXiv:1609.03499.

- Wang, S.; Mei, L.; Liu, R.; Jiang, W.; Yin, Z.; Deng, X.; He, T. Multi-modal fusion sensing: A comprehensive review of millimeter-wave radar and its integration with other modalities. IEEE Commun. Surv. Tutor. 2024, 27, 322–352.

- Kazemi, F.; Asgarkhani, N.; Jankowski, R. Optimization-based stacked machine-learning method for seismic probability and risk assessment of reinforced concrete shear walls. Expert Syst. Appl. 2024, 255, 124897.

- Ren, G.; Diao, L.; Guo, F.; Hong, T. A co-attention based multi-modal fusion network for review helpfulness prediction. Inf. Process. Manag. 2024, 61, 103573.

- Kim, B.; Kwon, Y. Searching for Effective Preprocessing Method and CNN-based Architecture with Efficient Channel Attention on Speech Emotion Recognition. arXiv 2024, arXiv:2409.04007.

- Huang, S.Y.; Hsu, R.J.; Liu, D.W.; Hsu, W.L. Using a machine learning algorithm and clinical data to predict the risk factors of disease recurrence after adjuvant treatment of advanced-stage oral cavity cancer. Tzu Chi Med. J. 2025, 37, 91–98.

- Xu, H.; Nie, R.; Cao, J.; Xie, G.; Ding, Z. IMQFusion: Infrared and visible image fusion via implicit multi-resolution preservation and query aggregation. Expert Syst. Appl. 2024, 257, 125014.

- Xu, X.; Cong, F.; Chen, Y.; Chen, J. Sleep stage classification with multi-modal fusion and denoising diffusion model. IEEE J. Biomed. Health Inform. 2024, 1–12.

- Liu, X.; Tang, J.; Shen, C.; Wang, C.; Zhao, D.; Guo, X.; Liu, J. Brain-like position measurement method based on improved optical flow algorithm. ISA Trans. 2023, 143, 221–230.

- Shen, C.; Wu, Y.; Qian, G.; Wu, X.; Cao, H.; Wang, C.; Tang, J.; Liu, J. Intelligent bionic polarization orientation method using biological neuron model for harsh conditions. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 47, 789–806.

- Wu, X.; Cao, H.; Wang, C.; Shen, C.; Tang, J.; Liu, J. Heading measurement frame based on atmospheric scattering beams for intelligent vehicle. IEEE Trans. Intell. Transp. Syst. 2024, 25, 14932–14947.

- Tang, P.; Yan, X.; Nan, Y.; Hu, X.; Menze, B.H.; Krammer, S.; Lasser, T. Joint-individual fusion structure with fusion attention module for multi-modal skin cancer classification. Pattern Recognit. 2024, 154, 110604.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2017, 60-6, 84–90.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Ban, Y.J.; Lee, S.; Park, J.; Kim, J.E.; Kang, H.S.; Han, S. Dinov2_Mask R-CNN: Self-supervised Instance Segmentation of Diabetic Foot Ulcers. In Diabetic Foot Ulcers Grand Challenge; Springer: Cham, Switzerland, 2024; pp. 17–28.

- Kong, Q.; Cao, Y.; Iqbal, T.; Wang, Y.; Wang, W.; Plumbley, M.D. Panns: Large-scale pretrained audio neural networks for audio pattern recognition. IEEE/ACM Trans. Audio Speech Lang. Process. 2020, 28, 2880–2894.

- Desplanques, B.; Thienpondt, J.; Demuynck, K. Ecapa-tdnn: Emphasized channel attention, propagation and aggregation in tdnn based speaker verification. arXiv 2020, arXiv:2005.07143.

- Li, K. Applications of Deep Learning in Object Detection. In Proceedings of the 2022 International Conference on Computers, Information Processing and Advanced Education (CIPAE), Ottawa, ON, Canada, 26–28 August 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 436–442.

- Xu, S.; Zhou, D.; Fang, J.; Yin, J.; Bin, Z.; Zhang, L. Fusionpainting: Multimodal fusion with adaptive attention for 3d object detection. In Proceedings of the 2021 IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 3047–3054.

- Chen, Y.; Ma, Y.; Zou, H. Multifactorial modality fusion network for multimodal recommendation. Appl. Intell. 2025, 55, 139.

- Islam, K.; Zaheer, M.Z.; Mahmood, A.; Nandakumar, K.; Akhtar, N. Genmix: Effective data augmentation with generative diffusion model image editing. arXiv 2024, arXiv:2412.02366.

- Li, Y.; Liu, H.; Yang, B. STNet: Deep Audio-Visual Fusion Network for Robust Speaker Tracking. IEEE Trans. Multimed. 2024, 27, 1835–1847.

- Borsoi, R.A.; Usevich, K.; Brie, D.; Adali, T. Personalized Coupled Tensor Decomposition for Multimodal Data Fusion: Uniqueness and Algorithms. IEEE Trans. Signal Process. 2024, 73, 113–129.

- Che, N.; Zhu, Y.; Wang, H.; Zeng, X.; Du, Q. AFT-SAM: Adaptive Fusion Transformer with a Sparse Attention Mechanism for Audio–Visual Speech Recognition. Appl. Sci. 2025, 15, 199.

- Li, Y.; Li, Y.; He, X.; Fang, J.; Zhou, C.; Liu, C. Learner’s cognitive state recognition based on multimodal physiological signal fusion. Appl. Intell. 2025, 55, 127.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).