Abstract

The intent classification of Chinese questions about respiratory diseases (CQRD) can not only promote the development of smart medical care but also strengthen epidemic surveillance. The core of CQRD intent classification is text representation. This paper studies how to use keywords to construct CQRD representations. Based on the characteristics of CQRD, we propose a keywords-based reinforcement learning model, in which a word frequency reward function is designed to aid in generating the reward and determining keyword categories. To generate CQRD representations from keywords, we develop two models: keyword-driven LSTM (KD-LSTM) and keyword-driven GCN (KD-GCN). KD-LSTM incorporates two methods, one based on word weights and the other based on category vectors, while KD-GCN uses keywords to construct a weighted adjacency matrix for training. The word-weight method achieves the best results on the CQRD_28000 dataset, 0.72% higher than the Bi-LSTM model. The category-vector method outperforms the Bi-LSTM model on the CQRD_8000 dataset by 2.41%. Although KD-GCN does not attain the best overall result, it outperforms the GCN model by 3.12%. Both KD-LSTM and KD-GCN significantly improve the classification results for minority classes.

1. Introduction

With the relentless progress of information technology, online consultation has become a pivotal medical service modality in contemporary healthcare. Precisely identifying patients’ intentions not only improves the efficiency of medical services but also fosters advances in smart healthcare. Respiratory diseases, the most prevalent category of illness, include a range of respiratory infectious diseases characterized by diverse transmission routes and rapid transmission. It is therefore important to keep track of the latest developments in respiratory infectious diseases promptly. By analyzing the intentions expressed online by patients with respiratory ailments, consultations relevant to the dynamic assessment of infectious diseases can be identified quickly. Consequently, recognizing the intentions of patients with respiratory diseases plays an important role in monitoring respiratory infectious diseases. The intent classification of Chinese questions about respiratory diseases (CQRD) is a type of text classification: it focuses on questions posed by patients on the Internet and determines their intent. Based on an analysis of CQRD samples and the recommendations of professional medical consultants, we divide the intents into five categories:

- : The patient clearly states what kind of disease they are suffering from and asks about effective treatments (intervention measures).

- : The patient asks what disease they are suffering from or asks to distinguish which specific disease they have.

- : The patient asks about the disease burden or adverse reactions that a certain disease or drug may cause.

- : The patient asks about the cause of a certain disease.

- : The patient belongs to a specific group of people and asks about behaviors, diet, or other matters that require attention for a certain disease or drug.

Compared with general text datasets, the CQRD intent classification dataset has several distinctive features. The online question platforms impose no standardized format or presentation on questions, and online medical inquiries are colloquial and flexibly expressed. In addition, the questions contain a wide range of medical terminology (MT), primarily drug names (DrN), symptom names (SN), and disease names (DiN). Within the CQRD corpus, each record contains one or more intent-indicating words or phrases, which we term keywords.

The core of CQRD intent classification is text representation. With the advancement of neural networks, various text representation models have been proposed, mainly bag-of-words models [1], sequence representation models [2], structured models [3,4], graph neural networks [5,6], and attention-based methods [7]. Among recent models, attention-based approaches have gained increasing prominence. A typical attention-based method is the BERT pre-trained model [8], which has achieved significant success in various natural language processing (NLP) tasks. Unlike BERT, which is trained on general corpora, MC-BERT [9] and BERT-ehealth [10] are trained on Chinese medical and biological corpora. In addition, contrastive learning [11,12] can effectively mitigate the “collapse” issue of BERT and enhance the distinctiveness of sentence representations. Attention-based methods simulate human attention by enabling models to focus on important parts of the input while ignoring irrelevant information. Keywords likewise have a significant impact on text representation: using them effectively not only improves the quality of the representation but also addresses the interpretability limitations of attention-based methods.

In the CQRD corpus, there are numerous category-oriented words, which we refer to as keywords. The use of keywords in text classification has underscored their pivotal role within texts [13,14]. However, manually annotating relevant keywords requires not only expertise but also a substantial investment of time. In the absence of annotated resources, reinforcement learning has been introduced to address this problem to a certain degree [15]. Zhang et al. [16] proposed a reinforcement learning (RL) method that builds structured sentence representations by identifying task-relevant structures without explicit structure annotations. Because CQRD are flexibly expressed and vary widely in structure, these models do not perform well on CQRD intent classification.

In this paper, we construct CQRD intent classification datasets to advance research in this field. Based on the distinctive characteristics of CQRD, we propose a keywords-based reinforcement learning approach to improve the classification accuracy of CQRD. The approach consists of three components: a policy network (PNet), a CQRD representation model, and a classification network (CNet). First, PNet identifies the keywords, which are then passed to the CQRD representation model. We design a word frequency reward function that assigns each word a weight for each category, which assists in generating the reward and judging keyword categories. For the CQRD representation model, we propose KD-LSTM and KD-GCN based on the characteristics of CQRD. In KD-LSTM, we design two methods to use keyword information: one based on word weights and one based on category vectors. The word-weight method assigns each word a weight according to its attributes, with keywords of different categories receiving different weights. The category-vector method constructs category vectors according to the categories of the keywords contained in each CQRD to assist in intent classification. KD-GCN uses keywords to construct a weighted adjacency matrix for training. CNet performs intent classification of CQRD based on the CQRD representation and provides the reward computation to PNet.

2. Related Work

2.1. Keywords in Classification Tasks

Keywords carry important information in a text. In text classification, keywords point toward the text category, and using them appropriately can improve classification results. Keyword-based classification methods were already used before the rise of deep learning [17,18]. With the widespread use of deep learning, the roles of keywords in text classification have become diverse. Hu et al. [19] establish a keyword vocabulary and propose an LSTM-based model that is sensitive to the words in the vocabulary, so that the keywords leverage the semantics of the full document. The above work uses linear data as input; to exploit the structural relationships between keywords, graph neural networks have been introduced. Zhang et al. [14] propose a framework called ClassKG, which explores keyword–keyword correlations on a keyword graph using a GNN. Because training data must otherwise be annotated manually, weakly supervised methods have been proposed. Weakly supervised methods [20,21] leverage seed information to generate pseudo-labeled documents for model pre-training and use a self-training module that bootstraps on real unlabeled data for model refinement. With the rise of large language models, keyword information has also been used to optimize them [22,23]. Unlike previous work, our method does not require identifying keywords in advance; instead, it uses reinforcement learning to recognize keywords during training. Compared with keywords extracted by tf-idf and other keyword extraction methods, the keywords we generate are more relevant to the category.

2.2. Application of Reinforcement Learning in NLP Tasks

Reinforcement learning (RL) [24] is a promising approach that automatically and actively explores the environment and learns an optimal strategy for the final task. RL has been explored in NLP-related interactive tasks such as text-based games [25], text summarisation [26], dialogue systems [27], machine translation [28], information extraction [29], and text classification [30].

Compressive text summarisation offers a balance between the conciseness issue of extractive summarisation and the factual hallucination issue of abstractive summarisation. Dual-agent reinforcement learning [26] optimizes a summary’s semantic coverage and fluency in an unsupervised manner by simulating human judgment of summary quality. For sentence summarization, Hyun et al. [31] devise an RL-based abstractive model that requires no ground-truth summaries. To further enhance summary quality, they develop a multi-summary learning mechanism that generates multiple summaries of varying lengths for a given text and makes these summaries mutually enhance each other.

Applying RL after maximum likelihood estimation (MLE) pre-training is a versatile method for enhancing neural machine translation (NMT) performance. Yehudai et al. [28] explore the impact of vocabulary size and action-space dimension on NMT performance and find that effectively reducing the dimension of the action space without changing the vocabulary yields notable improvements, as evaluated by BLEU, semantic similarity, and human evaluation.

Information extraction (IE) has been studied extensively. Huang et al. [29] observe that different extraction orders can significantly affect the extraction results for a large portion of instances, and that the ratio of sentences sensitive to extraction order increases dramatically with the complexity of the IE task. They therefore propose an RL-based framework that dynamically generates the optimal extraction order for each instance.

Unlike the above studies, text classification assigns one or more category labels to a sequence of text tokens, and the main role of RL in text classification is to assist text feature extraction. Wang et al. [30] propose SentRL, a reinforcement learning-based framework for aspect-based sentiment classification, in which an agent walks on the dependency graphs of sentiment text, explores paths from target aspect nodes to their potential sentiment regions, and differentiates the effectiveness of different paths. In this paper, we propose a reinforcement learning model that extracts keywords and constructs high-quality CQRD representations for intent classification.

3. Methodology

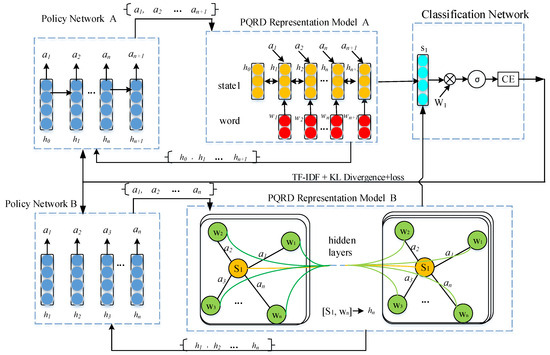

This paper proposes a keywords-based reinforcement learning method to improve classification accuracy. The overall process is shown in Figure 1. The approach consists of three components: a policy network (PNet), a CQRD representation model, and a classification network (CNet). PNet adopts a stochastic policy and samples an action at each state. It keeps sampling until the end of a sentence, producing an action sequence for the CQRD. PNet then passes the action sequence to the CQRD representation model, for which we design two models, KD-LSTM and KD-GCN. CNet performs intent classification of CQRD based on the CQRD representation and provides the reward computation to PNet.

Figure 1.

Illustration of the overall process. The policy network (PNet) samples an action at each state. The CQRD representation model provides state representations to PNet and, once all actions have been sampled, outputs the final sentence representation to CNet. CNet provides the reward to PNet; the reward consists of three parts. PNet A outputs actions sequentially, whereas PNet B is unordered. CQRD representation model A is based on Bi-LSTM, and CQRD representation model B is based on GCN. The CQRD representation model generates the states for PNet, and the state is constructed differently in the two models: in model A, it is the Bi-LSTM hidden state of the current word, whereas in model B, it is the concatenation of the sentence vector and the word vector within the graph. In CNet, the labeled symbols denote a parameter matrix, the activation function, and the cross-entropy loss.

3.1. Policy Network (PNet)

The policy network [32] adopts a stochastic policy and uses a delayed reward to guide policy learning. At each state, it samples an action according to the policy probability. The delayed rewards come from CNet and from corpus statistics, so the actions for the entire CQRD must be sampled before the corresponding reward can be obtained. We now describe the state, action, and reward function in detail.

3.1.1. State

The state encapsulates the contextual information of the current word. In the KD-LSTM model, the context of the current word is encoded by a Bi-LSTM to generate the current state. Specifically, in our experiments, the hidden states of the Bi-LSTM at each time step form a sequence of states, and PNet produces the corresponding actions from these states. In the KD-GCN model, by contrast, the state is generated by concatenating the representation of the current word with the representation of the CQRD node it is connected to. The key distinction between the two models lies in how the states are generated: KD-LSTM constructs the state sequence incrementally, step by step, whereas KD-GCN aggregates information through the Graph Convolutional Network (GCN) and can generate all states simultaneously.

3.1.2. Action

Actions are generated based on the current state. We design two actions that form a binary action space, indicating whether a word is a keyword or a common word of the CQRD.

We use a stochastic policy to guide decision-making. Let $a_t$ denote the action taken at state $s_t$. The policy, which governs the selection of actions, is defined as follows:

$$\pi(a_t \mid s_t; \Theta) = \sigma(W s_t + b)$$

where $\pi(a_t \mid s_t; \Theta)$ represents the probability of selecting $a_t$, $\sigma$ is the sigmoid activation function, and $\Theta = \{W, b\}$ is the set of parameters of PNet.
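To make the policy concrete, the sketch below samples a keyword/common-word action for each state with the sigmoid policy above. It assumes states are plain lists of floats, that action 1 means "keyword" and 0 means "common word", and that PNet is updated with a REINFORCE-style gradient of the log-probability scaled by the delayed reward. The function names are hypothetical, and the update rule is an assumption: the text only says that a delayed reward guides policy learning.

```python
import math
import random

def sigmoid(z: float) -> float:
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-z))

def keyword_prob(state, W, b):
    """pi(a_t = keyword | s_t) = sigmoid(W . s_t + b)."""
    return sigmoid(sum(w * s for w, s in zip(W, state)) + b)

def sample_actions(states, W, b, rng):
    """Sample one action per state: 1 = keyword, 0 = common word."""
    return [1 if rng.random() < keyword_prob(s, W, b) else 0 for s in states]

def reinforce_grad(states, actions, W, b, reward):
    """Gradient of reward * sum_t log pi(a_t | s_t) w.r.t. (W, b).

    Assumed REINFORCE update. For a Bernoulli policy with p = sigmoid(z),
    d/dz log pi(a) = a - p.
    """
    gW = [0.0] * len(W)
    gb = 0.0
    for s, a in zip(states, actions):
        coef = reward * (a - keyword_prob(s, W, b))
        gW = [g + coef * x for g, x in zip(gW, s)]
        gb += coef
    return gW, gb

# Usage: sample an action sequence, then compute an update direction
# once the delayed reward for the whole CQRD is known.
rng = random.Random(0)
states = [[0.5, -1.0], [2.0, 0.3], [-0.7, 0.1]]
W, b = [0.8, -0.2], 0.0
actions = sample_actions(states, W, b, rng)
gW, gb = reinforce_grad(states, actions, W, b, reward=1.0)
```

Because every action in the sequence shares one delayed reward, the same scalar scales each step's log-probability gradient.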

3.1.3. Reward

The reward is a typical delayed reward, because no reward can be obtained until the action sequence has been fully generated. In this model, the reward consists of three parts, including the word frequency reward and the loss reward.

Word frequency reward. The word frequency reward function gives each word an initial weight for each category. Formally, let x be a word, n the total number of occurrences of x in the training corpus, and s the total number of CQRD in the training corpus; the formula also uses the number of occurrences of x in category l and the number of documents in the training corpus that contain x. The formula is as follows:

where KL denotes the KL divergence, and the resulting quantity is the weight of x as a keyword in category l; the smaller this value, the higher the probability that x becomes a keyword of category l. The formula also defines the word frequency reward, and one of its parameters is chosen from a candidate set; based on the statistically annotated keywords, we set this parameter to 8.5.
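The exact word frequency formula is not reproduced here. As an illustration only, the sketch below computes the statistics the text defines (the total count of x, its count per category, the number of CQRD, and the number of documents containing x) from a toy tokenized corpus. It then scores each (word, category) pair with a hypothetical KL divergence between a one-hot distribution on category l and the word's empirical category distribution, which reduces to -log p(l | x), so smaller values mean x is more concentrated in l. It also includes an idf-style combination of the document counts. The smoothing constant, the scoring rule, and the idf helper are assumptions, not the paper's equation.

```python
import math
from collections import Counter, defaultdict

def word_stats(corpus):
    """corpus: list of (tokens, label).

    Returns n[x] (total count of x), n_l[x][l] (count of x in category l),
    s (number of CQRD) and s_x[x] (number of CQRD containing x).
    """
    n = Counter()
    n_l = defaultdict(Counter)
    s_x = Counter()
    for tokens, label in corpus:
        for tok in tokens:
            n[tok] += 1
            n_l[tok][label] += 1
        for tok in set(tokens):
            s_x[tok] += 1
    return n, n_l, len(corpus), s_x

def kl_onehot_score(x, l, n, n_l, labels, eps=1e-6):
    """Hypothetical score KL(onehot_l || p(. | x)) = -log p(l | x).

    p(l | x) = n_l[x][l] / n[x], smoothed with an assumed eps per label.
    """
    p_lx = (n_l[x][l] + eps) / (n[x] + eps * len(labels))
    return -math.log(p_lx)

def idf(x, s, s_x):
    """Assumed idf-style use of the document counts: log(s / s_x)."""
    return math.log(s / s_x[x])

# Usage with a toy corpus; the category names are hypothetical.
corpus = [(["咳嗽", "吃", "药"], "cat_A"),
          (["咳嗽", "原因"], "cat_B"),
          (["吃", "药"], "cat_A")]
n, n_l, s, s_x = word_stats(corpus)
```

Here "药" occurs only in cat_A and gets a score near 0, while "咳嗽" is split across two categories and gets about log 2.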

3.2. CQRD Representation Model

The CQRD representation model is based on Bi-LSTM and GCN. The Bi-LSTM generates the state sequence, from which a CQRD representation is constructed according to the action sequence from PNet. In contrast, the GCN generates unordered states for constructing a CQRD representation. We design KD-LSTM and KD-GCN as two separate CQRD representation models. In KD-LSTM, we use two methods to construct the CQRD representation. The method based on word weight assigns each word a weight according to its attributes, with keywords of different categories also receiving different weights. The method based on category vector constructs category vectors according to the categories of the keywords contained in each question to assist in question classification. KD-GCN assigns greater weight to the edges between keywords and CQRD to construct a weighted adjacency matrix.

3.2.1. Keyword-Driven LSTM

The main idea of keyword-driven LSTM (KD-LSTM) is to build a CQRD representation by screening the most important words and strengthening their effect on the representation. In this way, the model is expected to learn more task-relevant representations for classification.

Method based on word weight. This method assigns each word a weight according to its contribution when constructing a CQRD representation. By screening the most important words in a CQRD, the final representation can contain enhanced local information for classification. KD-LSTM translates the actions obtained from PNet into a CQRD representation. Formally, given a sentence, PNet produces a corresponding action sequence in which the action at each word position is chosen from the binary action space. The keyword action indicates that the word is a keyword of the CQRD and gives it more weight, whereas the common-word action means that the word is a common word with minimal impact on the final CQRD representation. The category of each word is determined by the word frequency reward function. Formally,

where the hidden states of the Bi-LSTM are weighted by the weight of each word for its category l and by a category parameter. The action acts as an indicator: it equals 1 when the word is a keyword and 0 otherwise. The category parameter takes the same value as the corresponding parameter in Equation (5).
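A minimal sketch of the word-weight idea follows. It assumes, hypothetically, that the raw weight of each hidden state is 1 + lam * a_t * w_t, where a_t is the 0/1 keyword action, w_t is the word's keyword weight for its category, and lam stands in for the category parameter. The weights are then normalized and used to pool the Bi-LSTM hidden states. The functional form is an assumption for illustration; the paper's equation may differ.

```python
def keyword_weighted_pool(hidden, actions, word_weights, lam):
    """Pool Bi-LSTM hidden states with weights 1 + lam * a_t * w_t (assumed form).

    hidden: T hidden-state vectors (lists of floats).
    actions: T ints from PNet, 1 = keyword, 0 = common word.
    word_weights: T floats, keyword weight of each word for its category.
    lam: hypothetical stand-in for the category parameter.
    """
    raw = [1.0 + lam * a * w for a, w in zip(actions, word_weights)]
    total = sum(raw)
    alphas = [r / total for r in raw]  # normalized word weights
    dim = len(hidden[0])
    return [sum(alpha * h[k] for alpha, h in zip(alphas, hidden)) for k in range(dim)]

# Usage: the first word is a keyword, so it receives 3/4 of the weight.
rep = keyword_weighted_pool([[1.0, 0.0], [0.0, 1.0]], [1, 0], [1.0, 1.0], lam=2.0)
```

When no keyword is selected, the pooling falls back to a plain mean of the hidden states.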

Method based on category vector. Like the word-weight method, the category-vector method first chooses the keywords. Unlike the word-weight method, however, it does not need to assign a weight to each word; it only needs a rough judgment of the categories of the keywords in the CQRD. When counting the keyword categories in a CQRD, we select only the two categories with the highest weights and use them to construct the category vectors. Formally,

where the two category vectors correspond to the two most likely keyword categories in the CQRD; if the keywords in the CQRD belong to only one category, the second category vector is a zero vector. The remaining terms denote the hidden states of the Bi-LSTM and the resulting CQRD representation.
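The category-vector construction can be sketched as follows. The sketch makes three hypothetical assumptions: a lookup table of learned category vectors, keyword categories ranked by the sum of their keyword weights, and the two category vectors concatenated with a mean-pooled Bi-LSTM representation. As the text states, the second vector is zero when the keywords cover only one category. The ranking rule and the concatenation are assumptions.

```python
from collections import defaultdict

def top2_categories(keyword_cats):
    """keyword_cats: (category, weight) pairs for keywords chosen by PNet.

    Returns up to two categories ranked by summed weight (assumed rule),
    with ties broken by category name.
    """
    score = defaultdict(float)
    for cat, w in keyword_cats:
        score[cat] += w
    return sorted(score, key=lambda c: (-score[c], c))[:2]

def category_representation(hidden, keyword_cats, cat_vectors):
    """Concatenate a mean-pooled hidden state with two category vectors (assumed)."""
    dim_c = len(next(iter(cat_vectors.values())))
    cats = top2_categories(keyword_cats)
    v1 = cat_vectors[cats[0]] if len(cats) > 0 else [0.0] * dim_c
    v2 = cat_vectors[cats[1]] if len(cats) > 1 else [0.0] * dim_c  # zero if one category
    T = len(hidden)
    mean_h = [sum(h[k] for h in hidden) / T for k in range(len(hidden[0]))]
    return mean_h + list(v1) + list(v2)

# Usage with hypothetical category vectors.
cv = {"A": [1.0, 0.0], "B": [0.0, 1.0], "C": [1.0, 1.0]}
rep = category_representation([[2.0], [4.0]], [("A", 0.9), ("C", 0.3)], cv)
```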

3.2.2. Keyword-Driven GCN

The main idea of keyword-driven GCN (KD-GCN) is to build a CQRD representation by screening the most important words and utilizing these words to assign different weight values to the adjacency matrix. Likewise, it is expected to learn more task-relevant representations for classification.

We construct a large-scale heterogeneous text graph comprising word nodes and CQRD nodes to explicitly model global word co-occurrence and facilitate the application of graph convolution. The total number of nodes in the text graph, denoted as , is the sum of the number of CQRDs (corpus size) and the number of unique words (vocabulary size) in the corpus. For simplicity, we initialize the feature matrix as an identity matrix, meaning each word or document is represented as a one-hot vector, which serves as the input to the text Graph Convolutional Network (GCN). Edges between nodes are established based on two criteria: (1) word occurrence within CQRDs (CQRD–word edges) and (2) word co-occurrence across the entire corpus (word–word edges). To quantify the strength of associations between word nodes, we utilize point-wise mutual information (PMI), a widely recognized metric for measuring word relationships. Our preliminary experiments indicate that employing PMI yields superior results compared to using raw word co-occurrence counts. Additionally, the edge weight between a CQRD node and a word node is determined by whether the word is a keyword, with keywords assigned higher weights and non-keywords assigned relatively lower weights. This weighting strategy ensures that semantically significant words contribute more prominently to the graph structure.

The specific values are established in accordance with Equation (10). Formally, the weight of the edge between node i and node j is defined as follows:

where the normalizing term is the maximum value that the weight attains over all words within the current category l.
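The graph construction described above can be sketched in the TextGCN style. Word–word edges use positive PMI computed over sliding windows, and each CQRD–word edge receives a larger weight when PNet marked the word as a keyword. The window size and the two document–word weights (`kw_weight`, `common_weight`) are hypothetical placeholders. The paper's own edge-weight definition, normalized by the category maximum, sets the actual keyword values.

```python
import math
from collections import Counter
from itertools import combinations

def pmi_edges(docs, window=3):
    """Positive PMI between word pairs over sliding windows."""
    windows = []
    for tokens in docs:
        if len(tokens) <= window:
            windows.append(tokens)
        else:
            windows.extend(tokens[i:i + window] for i in range(len(tokens) - window + 1))
    n_win = len(windows)
    word_cnt, pair_cnt = Counter(), Counter()
    for win in windows:
        uniq = sorted(set(win))  # sorted so each pair has one canonical order
        word_cnt.update(uniq)
        pair_cnt.update(combinations(uniq, 2))
    edges = {}
    for (wi, wj), c in pair_cnt.items():
        # PMI = log(p(i, j) / (p(i) p(j))) with window-based probabilities
        pmi = math.log(c * n_win / (word_cnt[wi] * word_cnt[wj]))
        if pmi > 0:  # keep only positive associations
            edges[(wi, wj)] = pmi
    return edges

def doc_word_edges(docs, keyword_sets, kw_weight=1.0, common_weight=0.1):
    """CQRD-word edges: hypothetical higher weight for PNet-selected keywords."""
    edges = {}
    for d, (tokens, kws) in enumerate(zip(docs, keyword_sets)):
        for tok in set(tokens):
            edges[(d, tok)] = kw_weight if tok in kws else common_weight
    return edges
```

Together with an identity feature matrix, these two edge sets would fill the weighted adjacency matrix that the GCN consumes.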

3.3. Classification Network (CNet)

For classification, the output of the CQRD representation model, denoted $h$, is fed into the classification network (CNet):

$$P(y \mid X) = \mathrm{softmax}(W_s h + b_s) \tag{11}$$

where $W_s \in \mathbb{R}^{l \times d}$ and $b_s \in \mathbb{R}^{l}$ are the parameters of CNet, d is the dimension of the hidden state, $y$ is the class label, and l is the number of categories.

CNet thus produces a probability distribution over class labels from the CQRD representation; its parameters are defined in Equation (11).

To train the CNet, we employ the cross-entropy loss function, a widely adopted objective function in classification tasks due to its effectiveness in quantifying the discrepancy between predicted probability distributions and ground truth labels. The cross-entropy loss is formulated as follows:

$$\mathcal{L} = -\sum_{X \in D} \sum_{y=1}^{l} p(y, X) \log P(y \mid X)$$

where $D$ is the training set, $p(y, X)$ is the ground-truth one-hot distribution for sample X, and $P(y \mid X)$ is the predicted probability distribution given in Equation (11). This loss function is consistent with that of Zhang et al. [16].
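For concreteness, here is a small standard-library sketch of the CNet output and the cross-entropy objective. A linear layer followed by softmax gives the predicted distribution. With one-hot gold distributions, the inner sum of the loss reduces to the negative log-probability of the gold class. The weight matrix maps a d-dimensional representation to l class scores; the function names are illustrative.

```python
import math

def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def cnet_predict(h, W, b):
    """P(y | X) = softmax(W h + b); W is l x d, h has length d, b has length l."""
    scores = [sum(w * x for w, x in zip(row, h)) + bias for row, bias in zip(W, b)]
    return softmax(scores)

def cross_entropy(batch):
    """batch: (predicted distribution, gold class index) pairs.

    With one-hot p(y, X), -sum_y p(y, X) log P(y | X) = -log P(gold | X);
    the loss sums this over the samples.
    """
    return -sum(math.log(probs[gold]) for probs, gold in batch)

# Usage: a zero weight matrix yields a uniform distribution over 2 classes.
probs = cnet_predict([1.0], [[0.0], [0.0]], [0.0, 0.0])
loss = cross_entropy([(probs, 0)])
```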

4. Experiments

4.1. Tasks and Datasets

4.1.1. Intention Classification of Chinese Questions on Respiratory Diseases (IC-CQRD) Dataset

The experimental data were collected from prominent Chinese Q&A platforms, including Baidu Knows, 360 Q&A, and Sogou Ask. Using web crawlers, we gathered approximately 40,000 CQRD samples from these platforms. After removing duplicates and filtering out CQRD that did not meet the specified criteria, we selected a subset of the corpus for manual labeling. The initial labeling phase produced 8926 annotated CQRD, of which 8056 were allocated for training and 870 for testing. During this process, the keywords in each CQRD were manually extracted and used for classification. However, because non-medical questions are broad and diverse, the non-medical category was subsequently removed. The resulting dataset was named CQRD_8000.

To further expand the dataset, an additional 28,521 CQRD samples were annotated based on the initial labeling framework. The expanded dataset includes 21,610 CQRD for training and 6263 CQRD for testing, with 648 CQRD belonging to multiple categories. This enhanced version of the dataset was named CQRD_28000.

4.1.2. Chinese Medical Intention Dataset (CMID)

The CMID dataset [33] comprises 12,254 sentences, categorized into four main categories and 36 subcategories. On examining the annotation of the CMID corpus, we observed partial alignment between its category annotation and our own.

Consequently, we adjusted the labels of the CMID corpus for our experiments, deriving mapping rules from label statistics to map CMID labels onto our designated labels. After excluding unmappable data, we reassigned 9224 sentences of the CMID corpus to our labels, of which 7224 were used for training and 2000 for testing.

4.2. Hyperparameters and Training Details

We initialized the word embeddings with 60-dimensional word vectors trained with word2vec. Dropout [34] with a rate of 0.5 is applied to the embeddings and hidden states. All models are optimized with the Adam optimizer [35], with an initial learning rate of 0.003 and a decay rate of 0.95. The batch size is 32, and the L2 regularization parameter is set to 0.00001.
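The hyperparameters above can be collected in a small configuration object. The text does not say how often the 0.95 decay is applied, so this sketch assumes a multiplicative decay once per epoch; the class and method names are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainConfig:
    embedding_dim: int = 60        # word2vec vector size
    dropout: float = 0.5           # on embeddings and hidden states
    learning_rate: float = 0.003   # initial Adam learning rate
    lr_decay: float = 0.95         # decay rate
    batch_size: int = 32
    l2: float = 1e-5               # L2 regularization coefficient

    def lr_at(self, epoch: int) -> float:
        """Learning rate after `epoch` decay steps (assumed per-epoch decay)."""
        return self.learning_rate * self.lr_decay ** epoch

# Usage: learning rate schedule for the first few epochs.
cfg = TrainConfig()
schedule = [cfg.lr_at(e) for e in range(3)]
```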

4.3. Chinese Questions on Respiratory Diseases (CQRD) Datasets Preprocessing

Because the CQRD dataset is gathered from Chinese search platforms and consists of genuine user inquiries, it contains many extraneous elements, such as irrelevant special symbols, excessive spaces, and tab characters, which are removed before training. Chinese preprocessing also involves word segmentation, for which we employed a word segmentation tool. To improve the tool's segmentation of medical text, we compiled domain dictionaries of drug names, symptom names, and disease names, comprising 17,513, 1101, and 4971 entries, respectively. These dictionaries enable more accurate segmentation and consequently improve the results of training on the medical data.
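The following sketch illustrates the cleaning step and shows why domain dictionaries help. It strips whitespace and special symbols with regular expressions, then segments text by forward maximum matching against a user dictionary. Forward maximum matching is a simple stand-in chosen for clarity, not the segmentation tool used in the experiments, and the tiny dictionary is hypothetical.

```python
import re

def clean(text: str) -> str:
    """Drop whitespace (spaces, tabs, newlines), then keep only CJK characters,
    ASCII letters/digits and common Chinese punctuation."""
    text = re.sub(r"\s+", "", text)
    return re.sub(r"[^\u4e00-\u9fffA-Za-z0-9，。？！、]", "", text)

def fmm_segment(text: str, dictionary: set, max_len: int = 8) -> list:
    """Forward maximum matching: at each position take the longest
    dictionary word, falling back to a single character."""
    tokens, i = [], 0
    while i < len(text):
        for j in range(min(len(text), i + max_len), i, -1):
            if text[i:j] in dictionary or j == i + 1:
                tokens.append(text[i:j])
                i = j
                break
    return tokens

# Usage: with the (hypothetical) drug dictionary, 阿莫西林 stays one token.
drug_dict = {"阿莫西林", "咳嗽", "吃"}
tokens = fmm_segment(clean("咳嗽 能吃\t阿莫西林吗？###"), drug_dict)
```

Without the dictionary entry, the drug name would fall apart into four single characters, which is the kind of error the compiled dictionaries are meant to prevent.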

4.4. Classification Results

In the experimental analysis, we evaluated the performance of various models, and all results were derived from our own independent testing.

In CQRD intent classification, the quality of the CQRD representation directly affects the classification result. The keywords of a CQRD play an important role in its intent classification, and the rational use of keyword information can improve the quality of the CQRD representation. In this study, keyword information is incorporated into CQRD representations in different ways, depending on how each model constructs its representation. The specific strategies are as follows:

- Composition Function-Based CQRD Representation: Because the composition function constructs CQRD representations directly from the word vectors of the question text, keyword information cannot be integrated into the representation directly. Instead, a one-hot vector representing the category is constructed and concatenated with the CQRD representation vector, thereby embedding keyword information into the representation.

- CQRD Representation Based on CNN and S-LSTM Models: In this approach, keyword information is incorporated in a semi-generalized manner. Specifically, category labels are appended to each keyword in the CQRD text and treated as independent words that participate in generating the CQRD representation, which strengthens the influence of keywords during representation generation.

- CQRD Representation Based on Bi-LSTM and LSTM Models: In this method, keywords and non-keywords in the question text are assigned different weights, forming a weight vector. Using an attention mechanism, the hidden state sequences generated by the Bi-LSTM and LSTM models are weighted and summed to produce the final CQRD representation, so that keywords contribute more to the representation than non-keywords.
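The first two strategies can be sketched on a toy tokenized question. The category names and the "<label>" token format are hypothetical choices for illustration. The third strategy is a weighted pooling of hidden states and is omitted here.

```python
def concat_category_onehot(rep, category, categories):
    """Strategy 1: append a one-hot category vector to a composed representation."""
    return list(rep) + [1.0 if c == category else 0.0 for c in categories]

def append_label_tokens(tokens, keyword_labels):
    """Strategy 2: insert a label token after each keyword, so the label
    is treated as an ordinary word by the CNN or S-LSTM encoder."""
    out = []
    for tok in tokens:
        out.append(tok)
        if tok in keyword_labels:
            out.append(f"<{keyword_labels[tok]}>")  # hypothetical token format
    return out

# Usage with a hypothetical label for the keyword 药 ("medicine").
rep = concat_category_onehot([0.5], "B", ["A", "B", "C"])
toks = append_label_tokens(["咳嗽", "吃", "什么", "药"], {"药": "cat_A"})
```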

In CQRD_8000, we manually extracted the keywords from each CQRD to construct a representation. As shown in Table 1, incorporating keyword information into the model significantly enhances the performance of CQRD classification. Specifically, the Bi-LSTM model augmented with keyword information achieved the highest classification accuracy, outperforming the standalone Bi-LSTM model by 1.6%.

Table 1.

Comparison of the results with and without keyword information (Accuracy × 100). The asterisk (*) indicates the best result.

From the aforementioned experiments, it is evident that incorporating keyword information during the generation of question representations enhances the performance of CQRD classification. However, manually extracting keywords from large-scale internet corpora is prohibitively costly. This paper proposes a method utilizing reinforcement learning to automatically identify keywords within CQRDs, thereby constructing CQRD representations based on these identified keywords.

We conducted experiments using multiple models on the CMID dataset. Given the similarity in data type and scale between the CMID and CQRD_8000 datasets, the performance of each model on CMID is comparable to that on CQRD_8000. As shown in Table 2, the KD-LSTM_vec model still achieves the highest performance, while the results of other models are largely consistent with those on the CQRD_8000 dataset. A notable difference, however, is observed in the BERT model, which demonstrates significant improvement on the CMID dataset.

Table 2.

Comparison of results from different models (Accuracy × 100). The bolded and highlighted models in the table are the ones we proposed. The asterisk (*) indicates the best result.

ERNIE-Bot [36] is a generative dialogue product launched by Baidu, built on the Wenxin large-model technology and known as “the Chinese version of ChatGPT”. For intent classification, ERNIE-Bot uses manually summarized category features. GCN_Tf-Idf uses tf-idf to identify keywords and construct a weighted adjacency matrix. Table 2 shows that KD-LSTM_vec performs strongly when the amount of data is relatively small, whereas KD-LSTM_weight surpasses KD-LSTM_vec as the amount of data increases. Although KD-GCN does not achieve the best results, it shows the largest improvement over its baseline model. By contrast, using tf-idf in GCN_Tf-Idf not only fails to improve the results but significantly lowers them. The BERT model does not perform well on these two datasets, and although MC-BERT and BERT-ehealth were trained on medical and biological corpora, they do not yield significant improvements. Because it does not use the training corpus, ERNIE-Bot achieves relatively stable classification results on both CQRD_28000 and CQRD_8000.

To verify the robustness of the models, we employed five-fold cross-validation. The primary advantage of K-fold cross-validation is that every data point is used for both training and testing, which makes the results more reliable. As shown in Table 3, KD-LSTM_weight achieved the highest accuracy on the first and fifth test folds, and KD-LSTM_vec performed best on the third and fourth. Although KD-GCN achieved the best result on the second test fold, its performance on the other folds was unsatisfactory.
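A minimal sketch of the five-fold protocol: the sample indices are shuffled once and cut into five disjoint folds, each fold serves as the test set exactly once, and the remaining four form the training set. The shuffling seed and the absence of stratification are assumptions, since the text does not specify them.

```python
import random

def k_fold_splits(n_samples, k=5, seed=0):
    """Yield (train_idx, test_idx) for k disjoint, near-equal folds."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        test = sorted(folds[i])
        train = sorted(j for f in folds[:i] + folds[i + 1:] for j in f)
        yield train, test

# Usage: every index appears in exactly one test fold.
splits = list(k_fold_splits(23, k=5, seed=1))
```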

Table 3.

Comparison of cross-validation results. The bolded and highlighted models in the table are the ones we proposed. The asterisk (*) indicates the best result.

Table 4 reports the accuracy for each category on the CQRD_28000 dataset. The results show that the KD-LSTM and KD-GCN models classify minority classes well, which partially mitigates the data imbalance problem. ERNIE-Bot performs remarkably well on the PRE category. An analysis of this category shows that it contains a substantial amount of vocabulary about food, beverages, and types of sports, among other topics, and ERNIE-Bot is highly capable of recognizing these attributes. To assess the statistical significance of the models’ performance on minority classes, we used a paired t-test. Bi-LSTM served as the baseline, and each of the other models was tested against it in turn. The results indicate that KD-LSTM_weight achieves statistically significant improvements.
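A paired t-test compares two models on matched observations, for example their accuracies on the same minority category or the same cross-validation fold; which pairing was used for Table 4 is not specified here. The sketch below computes only the t statistic and its degrees of freedom with the standard library; the function name `paired_t_statistic` is hypothetical. The p-value then follows from the t distribution with n − 1 degrees of freedom, and SciPy's `scipy.stats.ttest_rel` returns both the statistic and the p-value directly.

```python
import math
import statistics


def paired_t_statistic(model_scores: list[float], baseline_scores: list[float]) -> tuple[float, int]:
    """Return (t, degrees_of_freedom) for a paired t-test.

    t = mean(d) / (stdev(d) / sqrt(n)), where d are the per-pair differences
    model - baseline and stdev is the sample standard deviation (n - 1).
    """
    if len(model_scores) != len(baseline_scores) or len(model_scores) < 2:
        raise ValueError("need two equal-length lists with at least two pairs")
    diffs = [m - b for m, b in zip(model_scores, baseline_scores)]
    n = len(diffs)
    sd = statistics.stdev(diffs)
    if sd == 0:
        raise ValueError("differences are constant; t is undefined")
    return statistics.mean(diffs) / (sd / math.sqrt(n)), n - 1


# Hypothetical scores for illustration only (not values from Table 4).
t, dof = paired_t_statistic([2.0, 3.0, 4.0], [1.0, 1.0, 1.0])
print(round(t, 4), dof)
```

A positive t means the model scores higher than the baseline on average across the pairs.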

Table 4.

Comparison of classification results of different models for specific categories (Accuracy × 100). The bolded and highlighted models in the table are the ones we proposed. The asterisk (*) indicates the best result.

The keywords of each CQRD in the CQRD_8000 dataset were manually summarized. In the reinforcement learning-based intent classification model, keywords are identified first, so their quality directly affects the quality of the subsequent results. Human annotators can understand the meaning and context of a text in depth, which allows more accurate keyword extraction. By contrast, the keywords identified by reinforcement learning are those that are most beneficial to the current classification model under the given assumptions. In Table 5, we treat the manually summarized keywords as the gold standard and analyze how the keywords identified in the experiments agree with and differ from them.

Table 5.

Keyword analysis by multiple models (values × 100). The bolded and highlighted models in the table are the ones we proposed. The asterisk (*) indicates the best result.

Precision, recall, and F-score were used as evaluation metrics. As Table 5 shows, KD-LSTM_vec achieved the highest precision and F-score, while KD-LSTM_weight achieved the highest recall. Both KD-LSTM_vec and KD-LSTM_weight clearly outperformed the other methods in F-score. TF-IDF and WF-RF achieved similar precision, but their recall differed markedly, by 12.64%.
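Scoring extracted keywords against the manually summarized ones reduces to set overlap per question. The sketch below pools the counts over all questions (micro-averaging) before taking the ratios; whether Table 5 uses micro- or macro-averaging is not stated, so that choice and the function name `keyword_prf` are assumptions. Multiplying the returned values by 100 gives the scale used in Table 5.

```python
def keyword_prf(predicted: list[set[str]], gold: list[set[str]]) -> tuple[float, float, float]:
    """Micro-averaged precision, recall and F1 of predicted vs. gold keywords.

    predicted[i] and gold[i] are the keyword sets for question i.
    """
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must cover the same questions")
    true_pos = sum(len(p & g) for p, g in zip(predicted, gold))  # keywords found in both
    n_pred = sum(len(p) for p in predicted)
    n_gold = sum(len(g) for g in gold)
    precision = true_pos / n_pred if n_pred else 0.0
    recall = true_pos / n_gold if n_gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Hypothetical example: 2 of 3 predicted keywords are correct, 2 of 4 gold keywords are found.
p, r, f = keyword_prf([{"cough", "fever", "why"}], [{"cough", "fever", "asthma", "treat"}])
print(round(p * 100, 2), round(r * 100, 2), round(f * 100, 2))
```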

5. Discussion

In this paper, we propose a keyword-based reinforcement learning approach to improve the accuracy of CQRD intent classification. First, keywords are identified through reinforcement learning. A word frequency reward function assigns multiple weights to each word, which helps generate the reward and determine keyword categories. For keywords that have been assigned categories, we employ KD-LSTM and KD-GCN to construct the CQRD representation. In KD-LSTM, we designed two methods of using keyword information: one based on word weights and one based on category vectors. The word weight method achieves the best results on the CQRD_28000 dataset, 0.72% higher than the Bi-LSTM model. The category vector method is 2.41% higher than the Bi-LSTM model on the CQRD_8000 dataset. Although KD-GCN did not achieve the best overall result, it outperformed the GCN baseline by 3.12%. The improvements are most pronounced for the minority categories. Therefore, the keyword-based reinforcement learning approach can mitigate the CQRD data imbalance problem to a certain extent.
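The word frequency reward function itself is defined in the methods section and is not restated in this summary. Purely to illustrate what “multiple weights per word” can look like, the sketch below gives each word one weight per intent category from class-conditional frequencies. The weighting formula, its normalization, and the function name `category_word_weights` are assumptions for illustration, not the authors’ definition; only the five category labels come from the Abbreviations list.

```python
from collections import Counter

# Intent categories from the Abbreviations list.
CATEGORIES = ["HT", "WD", "WWH", "WHY", "PRE"]


def category_word_weights(questions: list[list[str]], labels: list[str]) -> dict[str, dict[str, float]]:
    """Hypothetical per-category word weights that sum to 1 for each word.

    A word's frequency inside each category is divided by that category's
    token count, so large (majority) categories do not dominate, and the
    resulting relative frequencies are normalized across categories.
    Every label must be one of CATEGORIES.
    """
    by_cat = {c: Counter() for c in CATEGORIES}
    for tokens, label in zip(questions, labels):
        by_cat[label].update(tokens)
    cat_sizes = {c: sum(counts.values()) for c, counts in by_cat.items()}
    vocab = set().union(*by_cat.values())
    weights = {}
    for word in vocab:
        rel = {c: by_cat[c][word] / cat_sizes[c] if cat_sizes[c] else 0.0 for c in CATEGORIES}
        total = sum(rel.values())  # > 0 because the word occurs in some category
        weights[word] = {c: r / total for c, r in rel.items()}
    return weights


# Hypothetical toy data.
w = category_word_weights([["cough", "treat"], ["cough", "why"], ["treat"]], ["HT", "WHY", "HT"])
print(w["cough"]["HT"], w["cough"]["WHY"])
```

Normalizing by category size is one way to keep minority categories from being drowned out, which is the concern the discussion raises about data imbalance.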

Author Contributions

Conceptualization, H.W. and D.H.; methodology, H.W.; software, H.W.; validation, H.W. and D.H.; formal analysis, H.W.; investigation, H.W.; resources, D.H.; data curation, H.W.; writing—original draft preparation, H.W.; writing—review and editing, H.W. and X.L.; visualization, H.W.; supervision, D.H.; project administration, D.H.; funding acquisition, D.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Key R&D Plans of Yunnan Province, grant number 202203AA080004, and the National Key R&D Plan, grant number 2020AAA0108004.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The IC-CQRD dataset was constructed by the authors and is available free of charge to any researcher who requires it at https://github.com/WHnihao/IC-CQRD-Dataset (accessed on 15 March 2025).

Acknowledgments

This work was supported by the Key R&D Plans of Yunnan Province (Approval No.: 202203AA080004) and the National Key R&D Plan (Approval No.: 2020AAA0108004).

Conflicts of Interest

All authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| IC-CQRD | Intention Classification of Chinese Questions on Respiratory Diseases |
| CQRD | Chinese Questions on Respiratory Diseases |
| KD-LSTM | Keyword-driven LSTM |
| KD-GCN | Keyword-driven GCN |
| RL | Reinforcement Learning |
| HT | How to Treat |
| WD | What Disease |
| WWH | What Will Happen |
| WHY | Why |
| PRE | Precautions |
| GDA | Grammar-based Data Augmentation |
| DrN | Drug Names |
| SN | Symptom Names |
| DiN | Disease Names |
| PNet | Policy Network |
| CQRD_RM | CQRD Representation Model |
| CNet | Classification Network |

References

- Joulin, A.; Grave, E.; Bojanowski, P.; Mikolov, T. Bag of tricks for efficient text classification. In Proceedings of the EACL 2017, Valencia, Spain, 3–7 April 2017; Volume 2: Short Papers, pp. 427–431.

- Hochreiter, S.; Schmidhuber, J. Long Short-term Memory. Neural Comput. 1997, 9, 1735–1780.

- Tai, K.S.; Socher, R.; Manning, C.D. Improved Semantic Representations from Tree-Structured Long Short-Term Memory Networks. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing, Beijing, China, 26–31 July 2015; pp. 1556–1566.

- Bao, X.; Jiang, X.; Wang, Z.; Zhang, Y.; Zhou, G. Opinion Tree Parsing for Aspect-based Sentiment Analysis. In Findings of the Association for Computational Linguistics: ACL 2023; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 7971–7984.

- Yuan, S.; Nie, E.; Färber, M.; Schmid, H.; Schuetze, H. GNNavi: Navigating the Information Flow in Large Language Models by Graph Neural Network. In Findings of the Association for Computational Linguistics: ACL 2024; Association for Computational Linguistics: Stroudsburg, PA, USA, 2024; pp. 3987–4001.

- Yao, L.; Mao, C.; Luo, Y. Graph Convolutional Networks for Text Classification. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence and Thirty-First Innovative Applications of Artificial Intelligence Conference and Ninth AAAI Symposium on Educational Advances in Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33.

- Lin, Z.; Feng, M.; Santos, C.N.d. A structured self-attentive sentence embedding. In Proceedings of the ICLR, Toulon, France, 24–26 April 2017.

- Devlin, J.; Chang, M.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the NAACL-HLT 2019, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- Zhang, N.; Jia, Q.; Yin, K.; Dong, L.; Gao, F.; Hua, N. Conceptualized Representation Learning for Chinese Biomedical Text Mining. arXiv 2020, arXiv:2008.10813.

- Wang, Q.; Dai, S.; Xu, B.; Lyu, Y.; Zhu, Y.; Wu, H.; Wang, H. Building Chinese Biomedical Language Models via Multi-Level Text Discrimination. arXiv 2021, arXiv:2110.07244.

- Chuang, Y.; Dangovski, R.; Luo, H.; Zhang, Y.; Chang, S.; Soljacic, M.; Li, S.; Yih, W.; Kim, Y.; Glass, J. DiffCSE: Difference-Based Contrastive Learning for Sentence Embeddings. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 10–15 July 2022; pp. 4207–4218.

- Wang, Y.; Chi, T.; Zhang, R.; Yang, Y. PESCO: Prompt-enhanced Self Contrastive Learning for Zero-shot Text Classification. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023; pp. 14897–14911.

- Meng, Y.; Shen, J.; Zhang, C.; Han, J. Weakly-Supervised Neural Text Classification. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management, Torino, Italy, 22–26 October 2018; pp. 983–992.

- Zhang, L.; Ding, J.; Xu, Y.; Liu, Y.; Zhou, S. Weakly-supervised Text Classification Based on Keyword Graph. In Proceedings of the EMNLP 2021, Virtual, 16–20 November 2021; pp. 2803–2813.

- Yogatama, D.; Blunsom, P.; Dyer, C.; Grefenstette, E.; Ling, W. Learning to Compose Words into Sentences with Reinforcement Learning. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

- Zhang, T.; Huang, M.; Zhao, L. Learning Structured Representation for Text Classification via Reinforcement Learning. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 6053–6060.

- Onan, A.; Korukoğlu, S.; Bulut, H. Ensemble of Keyword Extraction Methods and Classifiers in Text Classification. Expert Syst. Appl. 2016, 57, 232–247.

- El-Ghawi, Y.; Marzouk, A.; Khamis, A. LexiconLadies at FIGNEWS 2024 Shared Task: Identifying Keywords for Bias Annotation Guidelines of Facebook News Headlines on the Israel-Palestine 2023 War. In Proceedings of the Second Arabic Natural Language Processing Conference, Bangkok, Thailand, 16 August 2024; pp. 561–566.

- Hu, F.; Li, L.; Zhang, Z.L.; Wang, J.Y.; Xu, X.F. Emphasizing Essential Words for Sentiment Classification based on Recurrent Neural Networks. J. Comput. Sci. Technol. 2017, 32, 785–795.

- Mekala, D.; Shang, J. Contextualized Weak Supervision for Text Classification. In Proceedings of the ACL 2020, Online, 5–10 July 2020; pp. 323–333.

- Meng, Y.; Zhang, Y.; Huang, J.; Xiong, C.; Ji, H.; Zhang, C.; Han, J. Text Classification Using Label Names Only: A Language Model Self-Training Approach. In Proceedings of the EMNLP 2020, Online, 16–20 November 2020; pp. 9006–9017.

- Rrv, A.; Tyagi, N.; Uddin, M.N.; Varshney, N.; Baral, C. Chaos with Keywords: Exposing Large Language Models Sycophancy to Misleading Keywords and Evaluating Defense Strategies. In Findings of the Association for Computational Linguistics: ACL 2024; Association for Computational Linguistics: Stroudsburg, PA, USA, 2024; pp. 12717–12733.

- Kim, J.; Kim, Y.; Ro, Y.M. What if…?: Thinking Counterfactual Keywords Helps to Mitigate Hallucination in Large Multi-modal Models. In Findings of the Association for Computational Linguistics: EMNLP 2024; Association for Computational Linguistics: Stroudsburg, PA, USA, 2024; pp. 10672–10689.

- Pasini, T.; Scozzafava, F.; Scarlini, B. CluBERT: A Cluster-Based Approach for Learning Sense Distributions in Multiple Languages. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 4008–4018.

- Narasimhan, K.; Kulkarni, T.; Barzilay, R. Language Understanding for Text-based Games using Deep Reinforcement Learning. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1–11.

- Tang, P.; Gao, J.; Zhang, L.; Wang, Z. Efficient and Interpretable Compressive Text Summarisation with Unsupervised Dual-Agent Reinforcement Learning. In Proceedings of the Fourth Workshop on Simple and Efficient Natural Language Processing (SustaiNLP), Toronto, ON, Canada, 13 July 2023; pp. 227–238.

- Wang, W.Y.; Li, J.; He, X. Deep Reinforcement Learning for NLP. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics-Tutorial Abstracts, Melbourne, Australia, 15–20 July 2018; pp. 19–21.

- Yehudai, A.; Choshen, L.; Fox, L.; Abend, O. Reinforcement Learning with Large Action Spaces for Neural Machine Translation. In Proceedings of the 29th International Conference on Computational Linguistics, Gyeongju, Republic of Korea, 12–17 October 2022; pp. 4544–4556.

- Huang, W.; Liang, J.; Li, Z.; Xiao, Y.; Ji, C. Adaptive Ordered Information Extraction with Deep Reinforcement Learning. In Findings of the Association for Computational Linguistics: ACL 2023; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 13664–13678.

- Wang, L.; Zong, B.; Liu, Y.; Qin, C.; Cheng, W.; Yu, W.; Zhang, X.; Chen, H.; Fu, Y. Aspect-based Sentiment Classification via Reinforcement Learning. In Proceedings of the 2021 IEEE International Conference on Data Mining (ICDM), Auckland, New Zealand, 7–10 December 2021; pp. 1391–1396.

- Hyun, D.; Wang, X.; Park, C.; Xie, X.; Yu, H. Generating Multiple-Length Summaries via Reinforcement Learning for Unsupervised Sentence Summarization. In Proceedings of the EMNLP 2022, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 2939–2951.

- Sutton, R.S.; McAllester, D.; Singh, S.; Mansour, Y. Policy Gradient Methods for Reinforcement Learning with Function Approximation. Neural Inf. Process. Syst. 2000, 12, 1057–1063.

- Chen, N.; Su, X.; Liu, T.; Hao, Q.; Wei, M. A Benchmark Dataset and Case Study for Chinese Medical Question Intent Classification. BMC Med. Inform. Decis. Mak. 2020, 20, 125.

- Srivastava, N.; Salakhutdinov, R.R.; Hinton, G.E. Modeling Documents with Deep Boltzmann Machines. arXiv 2013, arXiv:1309.6865.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015; pp. 74–80.

- Baidu’s Knowledge-Enhanced Large Language Model Built on Full AI Stack Technology. 2023. Available online: http://research.baidu.com/Blog/index-view?id=183 (accessed on 24 March 2023).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).