Abstract

Safe street crossing poses significant challenges for visually impaired pedestrians, who must rely on non-visual cues to assess crossing safety. Conventional assistive technologies often fail to provide real-time, actionable information about oncoming traffic, making independent navigation difficult, particularly in uncontrolled or vehicle-based crossing scenarios. To address these challenges, we designed and evaluated two assistive systems utilizing haptic and visual feedback, tailored for traffic signal-controlled intersections and vehicle-based crossings. The results indicate that visual feedback significantly improved decision efficiency at signalized intersections, enabling users to make faster decisions, regardless of their confidence levels. However, in vehicle-based crossings, where real-time hazard assessment is crucial, haptic feedback proved more effective, enhancing decision efficiency by enabling quicker and more intuitive judgments about approaching vehicles. Moreover, users generally preferred haptic feedback in both scenarios, citing its comfort and intuitiveness. These findings highlight the distinct challenges posed by different street-crossing environments and confirm the value of multimodal feedback systems in supporting visually impaired pedestrians. Our study provides important design insights for developing effective assistive technologies that enhance pedestrian safety and independence across varied urban settings.

1. Introduction

Approximately 220 million people worldwide live with visual impairments [1], facing numerous challenges in daily life, with mobility safety being particularly critical [2,3]. For the general population, crossing the street is a simple task, but for individuals with visual impairments, it is fraught with risks and uncertainties [4,5]. Research shows that safe street-crossing decisions primarily depend on two key factors [6]: the time required for a pedestrian to cross the road and the available time before the next vehicle arrives. However, empirical evidence reveals concerning real-world conditions: drivers yield to pedestrians with white canes at a rate of only 37% [7], forcing visually impaired individuals to exercise extra caution in evaluating crossing opportunities. Moreover, the complexity of many intersections and the interference of street noise [8] further exacerbate the difficulties and safety risks faced by visually impaired pedestrians. Therefore, developing effective street-crossing assistive systems is of critical and urgent importance to improve the mobility safety of individuals with visual impairments.

With advancements in the Internet of Things (IoT) and vehicle-to-everything (V2X) technologies, smart infrastructure can now collect and communicate real-time traffic information, such as traffic light status and approaching vehicles. Wearable and handheld assistive devices for visually impaired pedestrians can use these data to provide timely feedback that improves their awareness of crossing conditions. Existing assistive technologies, such as smartphone navigation [9,10,11,12], smart canes [13,14,15], and intelligent guide robots [16,17,18], have made significant progress in daily navigation. These technologies, developed through both laboratory research and field studies, have demonstrated considerable success in general mobility assistance and route guidance. However, they still struggle to convey information effectively in street-crossing scenarios, particularly critical information such as the remaining time on pedestrian traffic lights [5,19,20] and safe crossing gaps [21]. Traditional auditory feedback has notable limitations in street environments: environmental noise can mask critical auditory cues [22], and auditory feedback can overload the auditory channel, impairing visually impaired individuals’ ability to perceive surrounding environmental sounds [23].

As promising alternatives, haptic and visual feedback provide visually impaired individuals with a clear and distinguishable means of receiving cues, even in noisy environments. Existing research has shown that haptic feedback can effectively avoid interference from environmental noise, enabling users to perceive vibration signals quickly and accurately [24,25,26]. At the same time, approximately 90% of legally blind individuals retain some degree of light perception [2,3,27], allowing them to detect the position, motion, and brightness changes of light sources [28]. This opens up significant opportunities for developing visual cue systems based on residual light perception [27]. Because this residual capability is so widespread, it represents an underutilized channel for assistive technology, particularly in navigational contexts that require rapid decision-making.

Despite the known advantages of both feedback modalities, a significant research gap remains in understanding their comparative effectiveness across different street-crossing contexts. While individual studies have explored haptic systems [29,30] and light-based cues [27] separately, few have systematically compared their performance or investigated how varying parameters within each modality affect decision-making. Research has shown that effectiveness varies with the task and environment [27], highlighting the need for context-specific evaluations. To address this gap, we explored feedback effectiveness in two distinct scenarios: signal-controlled intersections, where pedestrians follow traffic signals, and vehicle crossing scenarios, where they must judge approaching vehicles. We designed and evaluated two assistive systems to determine the optimal feedback mode and parameters for each context. Our findings reveal distinct advantages in different scenarios, summarized as follows:

- In the traffic light crossing scenario, visual feedback significantly improved users’ decision-making efficiency and facilitated faster decisions, regardless of their confidence levels.

- In the vehicle crossing scenario, haptic feedback significantly enhanced decision efficiency, with dynamic haptic feedback outperforming dynamic visual feedback.

- In both scenarios, high-frequency visual feedback encouraged users to make more cautious decisions, while haptic feedback was widely preferred by users for its comfort.

Based on these findings, we validated the value of haptic and visual feedback in assisting visually impaired individuals with crossing decisions and derived design insights for decision-support systems in traffic light-controlled and vehicle crossing scenarios. The results indicate that users can select their preferred feedback modality in traffic light scenarios according to personal preference, whereas haptic feedback shows significant advantages in vehicle crossing scenarios. These findings offer practical design strategies for improving the safety and convenience of daily mobility for visually impaired individuals.

The organization of this paper is as follows: Section 2 reviews related studies, focusing on haptic and visual feedback technologies for assisting visually impaired individuals. Section 3 details Experiment 1, which examined the effectiveness of haptic and visual feedback in a traffic light crossing scenario. Section 4 presents Experiment 2, exploring their utility in a vehicle crossing scenario. Section 5 discusses the results, analyzing the applicability and user preferences for different feedback modalities. Finally, Section 6 concludes the study and suggests future research directions.

2. Related Works

This section reviews previous research on supporting visually impaired people in complex tasks like crossing streets, obstacle avoidance, and navigation. We systematically examine the challenges in assisted mobility and existing solutions, investigate haptic feedback applications for critical information delivery, and discuss advances in visual feedback utilizing light perception. Both modalities aim to provide clear guidance for safe street-crossing decisions.

2.1. Challenges in Mobility for Visually Impaired Individuals

According to data from the World Health Organization, at least 2.2 billion people globally live with some form of vision impairment [1]. Among them, approximately 217 million people suffer from moderate to severe vision impairment, also referred to as “low vision”, and this number continues to grow [31]. Compared to individuals with low vision, those who are blind lack sufficient functional vision to support their daily activities [32,33,34]. However, it is noteworthy that a considerable proportion of legally blind individuals still retain some degree of usable vision, particularly light perception ability [27]. For example, in the United States, approximately 90% of blind individuals retain some level of light perception [2,3]. Although this degree of light perception is insufficient to perform complex visual tasks, it is often adequate for perceiving location, movement, and brightness variations in light sources [28]. Despite the retained light perception in some blind individuals, mobility and other daily activities that depend on vision remain significantly restricted for people with vision impairments across all age groups [30].

2.1.1. Street-Crossing Barriers for Visually Impaired Pedestrians

Crossing the street, a routine activity for sighted individuals, poses a significant challenge for visually impaired pedestrians [4,5]. They must not only identify and interpret the remaining time displayed on pedestrian traffic signals [5,19,20] but also assess whether a sufficiently large traffic gap exists, or rely on drivers to yield, in order to cross safely [21]. This safety assessment primarily depends on two critical factors [6]: the time required for the pedestrian to cross the street and the available time gap before the next vehicle arrives. Studies have shown that, even when pedestrians use a white cane, drivers yield to blind pedestrians only 37% of the time [7], forcing them to rely more heavily on their own judgment of traffic gaps. However, complex intersection designs and street noise severely impair this judgment [8]. For instance, at roundabouts and other segments without fixed crossing times, visually impaired pedestrians find it difficult to reliably assess whether drivers intend to yield, and in some cases they require intervention from others to avoid collisions [35]. These factors collectively lengthen decision-making times for visually impaired pedestrians, affecting both their travel efficiency and their safety.
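The two-factor safety criterion above can be written as a simple gap-acceptance check: a crossing is judged safe when the time before the next vehicle arrives exceeds the time needed to cross. The sketch below is illustrative only. It assumes constant walking and vehicle speeds and adds a hypothetical safety margin; the function names, the margin, and the example numbers are assumptions, not values taken from [6].

```python
import math


def crossing_time_s(road_width_m: float, walking_speed_mps: float,
                    start_delay_s: float = 0.0) -> float:
    """Time the pedestrian needs to reach the far curb (constant speed assumed)."""
    return start_delay_s + road_width_m / walking_speed_mps


def available_gap_s(vehicle_distance_m: float, vehicle_speed_mps: float) -> float:
    """Time until the next vehicle reaches the crossing (constant speed assumed)."""
    if vehicle_speed_mps <= 0:
        return math.inf  # stationary vehicle: no arrival expected
    return vehicle_distance_m / vehicle_speed_mps


def is_safe_gap(road_width_m: float, walking_speed_mps: float,
                vehicle_distance_m: float, vehicle_speed_mps: float,
                safety_margin_s: float = 1.0) -> bool:
    """Accept the gap only if it covers the crossing time plus a hypothetical margin."""
    needed_s = crossing_time_s(road_width_m, walking_speed_mps) + safety_margin_s
    return available_gap_s(vehicle_distance_m, vehicle_speed_mps) >= needed_s


# Hypothetical example: a 7 m road walked at 1.0 m/s needs 7 s (8 s with margin).
print(is_safe_gap(7.0, 1.0, vehicle_distance_m=100.0, vehicle_speed_mps=10.0))  # True (10 s gap)
print(is_safe_gap(7.0, 1.0, vehicle_distance_m=70.0, vehicle_speed_mps=10.0))   # False (7 s gap)
```

In practice, visually impaired pedestrians must estimate the vehicle terms from sound alone, which is exactly where noise and complex geometry degrade the judgment.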

To address the challenges faced by visually impaired individuals during mobility, researchers have developed a variety of assistive technological solutions. These solutions include smartphone-based navigation applications [9,10,11,12], smart canes [13,14,15], and intelligent guide robots [16,17,18]. These innovations provide essential functions such as obstacle detection, the interpretation of traffic patterns, and directional guidance. Despite their significant advancements in daily navigation, these technologies still encounter challenges in crossing scenarios, particularly in accurately conveying critical information such as the remaining time on pedestrian traffic signals [5,19,20] and safe traffic gaps [21].

2.1.2. Assistive Technology Solutions for Safe Crossing

To address these specific challenges, researchers have proposed various assistive systems to help visually impaired individuals cross streets safely. Among smartphone-based solutions, Ghilardi et al. developed a pedestrian traffic signal detection system capable of effectively recognizing various shapes and types of traffic signals [36], thereby providing crossing assistance to visually impaired users. Similarly, Montanha et al.’s system not only provides voice prompts for traffic signal and vehicle information but can also automatically adjust signal timing based on user needs, significantly aiding visually impaired individuals in crossing roads safely [37]. In the domain of wearable devices, Tian et al. designed a head-mounted device that delivers real-time audio feedback to convey information about crosswalk locations and traffic signal states. Experimental results have demonstrated the system’s effectiveness in providing safe crossing guidance for visually impaired individuals [4]. Li et al. introduced a wearable system capable of detecting the status of nearby traffic signals in real time and guiding users safely through intersections via voice alerts [38]. Additionally, Chen et al. developed an intelligent guide-dog harness system that identifies surrounding moving obstacles and traffic signal states, improving the safety of visually impaired pedestrians in complex traffic environments through voice reminders [39].

Subsequent research highlights the benefits of tactile notification methods, as audio feedback alone can be compromised by ambient noise and the cognitive demands of processing multiple auditory cues simultaneously [22,23]. Tactile cues, on the other hand, operate independently of hearing and demonstrate greater accuracy in complex traffic scenarios [25]. Comparative studies indicate that tactile feedback frequently surpasses auditory signals in terms of user preference and task efficiency [26,40]. Many visually impaired pedestrians also express a preference for tactile guidance, as it allows them to simultaneously monitor environmental audio cues [30]. Additionally, recent research has compared tactile and visual feedback for individuals with residual light perception, finding that visual cues provide superior performance in such cases [27].

2.2. Haptic Feedback for Visually Impaired Individuals

The human skin is densely populated with tactile receptors and nerve endings, which enable the effective capture of external stimuli and the transmission of signals to the central nervous system, forming a complete tactile experience [41]. Due to the immediacy and intuitiveness of tactile perception, vibration stimuli play a central role in tactile communication systems [42,43,44]. In practical applications, tactile feedback technology has been widely integrated into various wearable devices to provide users with diversified functional support, such as localization and navigation, motion control, and obstacle perception [45]. Research indicates that this technology can effectively guide users by simulating natural tactile cues, such as a “tap on the shoulder” [46]. For visually impaired individuals in particular, tactile feedback has become an important alternative sensory modality [24,47].

2.2.1. Haptic Perception Mechanisms and Assistive Applications

To fully leverage the advantages of tactile feedback in enhancing the safety of visually impaired individuals during mobility, researchers have designed and developed a variety of assistive devices. These devices can be broadly categorized into two groups: portable devices, including enhanced white canes [48,49] and handheld devices [50]; and wearable devices, which encompass belt-based systems [51,52,53,54], vest-based systems [55,56,57,58,59], head-mounted systems [60,61], earphone-based systems [62], footwear-based systems [63], and necklace-style systems [64]. In the field of portable navigation devices, Hertel et al. proposed a haptically enhanced white cane that provides visually impaired users with obstacle distance information via vibration feedback on the handle [49]. Experimental results demonstrated that users could recognize changes in obstacle positions with 90% accuracy [49]. To improve navigation precision, Liu et al. designed a handheld tactile navigation device featuring a rotatable tactile pointer to provide real-time directional feedback. Experiments showed that users were able to maintain their position within 30 cm of the central path for 92.6% of the navigation time [65]. Additionally, Leporini et al. developed a virtual white cane system that uses tactile feedback to simulate the sensation of a traditional cane touching obstacles [66]. Testing revealed that this system effectively helped blind individuals identify obstacles and door locations in indoor environments [66].

In the domain of wearable navigation devices, Van Erp et al. found that a vibrotactile belt could provide five distinguishable tactile cues in a laboratory environment [67]. However, in real walking scenarios with noise interference, the number of effectively recognizable cues significantly decreased to fewer than two [67]. To explore more effective tactile feedback solutions, Xu et al. proposed a wearable backpack-based navigation system that uses vibrations on the shoulder straps and waist to assist visually impaired individuals in recognizing and adjusting their direction, effectively supporting forward movement [29]. Lee et al. further developed a wrist-worn tactile display that innovatively combined static and dynamic tactile modes to deliver navigation information [68]. Experiments demonstrated that visually impaired users could accurately perceive the tactile feedback with a high recognition rate of 94.78% [68].

2.2.2. Haptic Feedback via Wearable Devices for Street-Crossing

Compared to portable devices, wearable devices have gained broader applications due to their unique advantages. Tactile feedback via wearable devices does not occupy the user’s hands, allowing visually impaired individuals to continue using a white cane for environmental exploration. Additionally, such devices can easily be integrated into everyday wearable items, providing users with a more natural and intuitive tactile feedback experience [29]. Despite significant advancements in obstacle detection and directional navigation, research into the application of tactile feedback for specific scenarios, such as street crossing for visually impaired individuals, remains insufficient. Notably, Adebiyi et al. found that, in scenarios like street crossing, where maintaining environmental auditory awareness is critical, visually impaired individuals subjectively preferred tactile feedback over voice prompts. This preference allows them to focus on perceiving surrounding sounds without interference [30]. In the exploration of assistive solutions for visually impaired individuals crossing streets, other forms of information cues are also worth investigating. For instance, Yang C et al. found that in navigation tasks for visually impaired users, visual feedback demonstrated higher efficiency, smoothness, and accuracy compared to tactile feedback [27]. Users also generally perceived visual feedback as more intuitive and user-friendly [27]. This finding opens up new directions for designing street-crossing assistance systems for visually impaired individuals.

2.3. Visual Feedback for Low-Vision and Blind Users

In the field of visual feedback for assisting visually impaired individuals, researchers primarily focus on providing informational support for those with low vision. These systems leverage the residual visual abilities of low-vision individuals and have achieved significant results in various application scenarios, such as obstacle recognition through visual enhancement technologies [28,69,70,71,72], path navigation guidance [73], gesture interaction [74], and stair-climbing assistance [75]. To enhance the usability of these systems, researchers have developed a range of visual optimization methods [76], such as image magnification, contrast adjustment, and edge enhancement, which are presented to users via head-mounted display devices or other visual interfaces.

2.3.1. Enhanced Visual Representation for Visually Impaired Assistance

Specific implementations include displaying enhanced obstacle images through head-mounted devices [69,71] or converting environmental depth information into high-contrast colors [77] and multi-level brightness variations [28,70]. Experimental evaluations demonstrate that these visual feedback methods have achieved positive results in information transmission. For instance, Huang et al. designed a sign-reading system that enhances the visual salience of indoor text (e.g., room numbers), enabling visually impaired users to accurately perceive and recognize this information [74]. In stair navigation research, researchers projected visually highlighted cues directly onto stair surfaces, and visually impaired participants not only perceived these visual feedback cues but also reported a stronger sense of safety [75]. Similarly, Zhao et al. developed a navigation system that used high-contrast visual markers to indicate directions, with validation results showing that visually impaired users could effectively perceive and interpret these navigation cues [73]. Katemake et al. explored the feasibility of LED light-based assistance for visually impaired individuals by installing LED strips along the edges of obstacles to enhance edge visibility. Their findings indicated that this approach helped visually impaired users better perceive and avoid obstacles [78]. These studies collectively demonstrate the feasibility of visual feedback as a medium for information transmission, showing that visually impaired individuals can accurately perceive and understand optimized visual cues.

2.3.2. Harnessing Light Perception for Visual Assistance

Although many visual assistive technologies require users to have functional residual vision, which makes it difficult for legally blind individuals to use these devices, studies have shown that approximately 90% of legally blind individuals still retain light perception abilities [2,3]. While such residual visual function is insufficient for performing fine visual tasks, it is sufficient for visually impaired individuals to perceive changes in light sources in their environment [28]. This basic light perception ability holds significant value in the daily activities of visually impaired individuals. For example, a study by Ross et al. found that visually impaired individuals can use environmental light sources (such as light at the end of a corridor) to assist with orientation and pathfinding [79]. Based on this finding, Yang C et al. developed a wearable navigation device using LED light strips. Experimental results demonstrated that this system significantly improved navigation efficiency (with the average completion time reduced by 28.5%) and accuracy (with an average deviation of less than 4°) [27]. In real-world environment testing, the collision rate of users employing this visual feedback device (0.25 collisions per 100 m) was significantly lower than the rate observed when using tactile feedback systems (1.58 collisions per 100 m) [27]. These findings provide important evidence for exploring new pathways in information acquisition for visually impaired individuals, indicating that well-designed light signal feedback systems can effectively leverage their light perception capability to provide environmental awareness support. Assistive technology applications for visually impaired individuals are summarized in Table 1.

Table 1. Assistive technology applications for visually impaired individuals.

Building on this research, this study compares the effectiveness of haptic and visual feedback in street-crossing scenarios for visually impaired individuals. Specifically, we design a light-based visual cue system [27] and a vibration-based tactile feedback device [29,67] and systematically evaluate how effectively each modality conveys traffic signal states and surrounding vehicle activity. By comparing the distinctive characteristics of light and tactile perception in visually impaired individuals, we aim to determine which feedback method provides optimal support in complex traffic environments. The findings are expected to provide theoretical foundations and practical guidance for developing safer and more intuitive assistive systems for visually impaired individuals.

3. Study 1: Haptic and Visual Feedback for Visually Impaired Pedestrians Crossing at Traffic Signals

This study investigates how well haptic and visual feedback support visually impaired pedestrians with residual light perception in crossing streets at signalized intersections. The feedback conveys timing information to help participants make crossing decisions: participants interpret the traffic light status from the urgency level of the feedback. We examine the factors that influence the effectiveness of haptic and visual feedback through interaction design, user evaluation, and analysis of experimental data.

3.1. Experimental Design

Our study used the Pico 4 headset for system development and compared two independent feedback channels: haptic feedback was delivered through a bHaptics Tactosy vest equipped with 20 haptic units, while visual feedback was provided via two floodlight strips, each containing six lighting units. We then evaluated how effectively each feedback method, under different parameter configurations, helped visually impaired individuals judge when it was safe to cross at traffic lights.

3.1.1. Haptic and Visual Feedback Design in Assistive Systems for Visually Impaired Pedestrians

Haptic feedback serves as an important alternative mode for visually impaired individuals to perceive external information [24,47]. Research on vibration-based feedback [42,43,44] has demonstrated varying tactile sensitivity across different body regions [80]. While studies have explored haptic feedback on various body locations, including the waist [81], arm [82], and leg [83], the chest and abdomen regions have shown particularly promising results. Kim et al. discovered that these regions exhibit exceptional tactile recognition capabilities, with accuracy rates reaching 95% [84]. Park et al. further demonstrated that, by controlling the vibration frequency and contact area parameters, chest and abdomen-based feedback can effectively convey different levels of urgency perception [85]. Studies have also revealed that approximately 90% of legally blind individuals retain basic light perception abilities [2,3], allowing them to detect changes in light characteristics [27,28]. Yang C et al. confirmed that head-mounted LED arrays could effectively convey information through controlled light patterns [27].

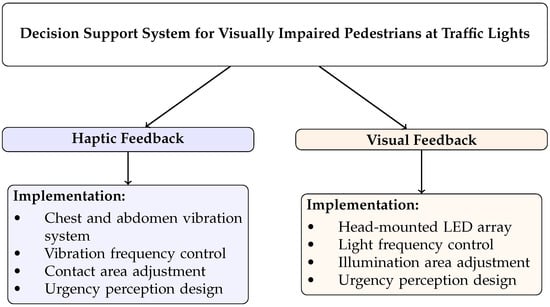

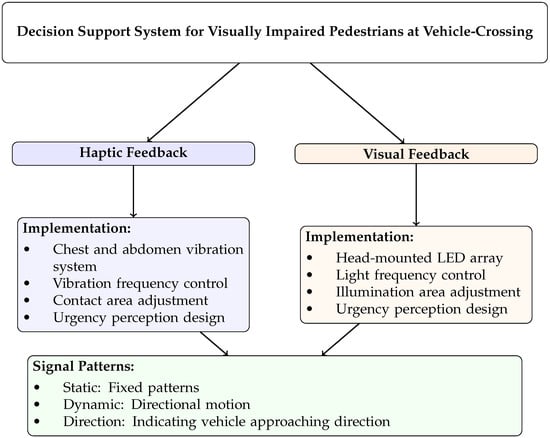

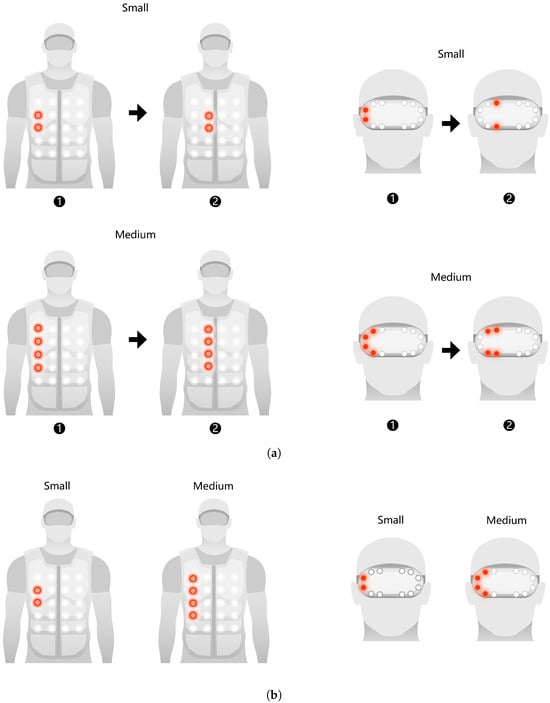

Based on these findings, this study implements two parallel feedback schemes, as shown in Figure 1: a chest and abdomen vibration system that conveys urgency information through controlled frequency and contact area parameters, and a head-mounted LED array system that communicates the same information through adjustments in light frequency and illumination area. This dual-mode design aims to accommodate diverse user preferences and needs while ensuring consistent urgency perception across both feedback channels.

Figure 1. Overview of haptic and visual feedback systems.

3.1.2. Experimental Settings

Study 1 used the Pico 4 as the experimental platform. The headset is equipped with two 2.56-inch fast LCD displays and a proprietary high-precision four-camera environmental tracking system, combined with an infrared optical positioning system that further improves tracking and positioning. The experimental application was developed in Unity 2021.3.27f1c2 with the PICO Unity Integration SDK, version 2.5.0. The vibration haptic devices were operated by a separate application built in Unity 2019.4.40f1c1 with the bHaptics haptic plugin and deployed on a Windows 11 desktop computer. The LED light strips were controlled by a Halo board. Commands were published over the MQTT (Message Queuing Telemetry Transport) protocol, which synchronized the vibration haptic devices and the LED light strips. The MQTT server was hosted on Tencent Cloud, running EMQX V4.0.4 on a CentOS 7 server. Both the haptic and the visual feedback control applications subscribed to the relevant pattern information and triggered the corresponding feedback mode upon receiving the data.
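The publish/subscribe arrangement described above, in which one application publishes a feedback pattern and both the haptic and the LED controllers subscribe to it, can be sketched without a network. The broker class below is a hypothetical in-process stand-in for the EMQX server (exact topic matching only, no QoS, retained messages, or wildcards). The topic name and JSON payload fields are likewise assumptions rather than the study's actual message format.

```python
import json
from collections import defaultdict
from typing import Callable, DefaultDict, List, Tuple

Callback = Callable[[str, bytes], None]


class InProcessBroker:
    """Hypothetical stand-in for an MQTT broker: exact topic match, synchronous delivery."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callback) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, payload: bytes) -> None:
        # Every subscriber to the topic receives the same message, so the vest
        # and the LED strips are both driven from a single published command.
        for callback in self._subscribers[topic]:
            callback(topic, payload)


PATTERN_TOPIC = "crossing/feedback/pattern"  # hypothetical topic name


def encode_pattern(modality: str, freq_hz: float, size: str) -> bytes:
    """Serialize one feedback condition as a JSON payload (assumed format)."""
    message = {"modality": modality, "freq_hz": freq_hz, "size": size}
    return json.dumps(message).encode("utf-8")


class FeedbackController:
    """Subscriber that triggers its own channel only for messages addressed to it."""

    def __init__(self, modality: str) -> None:
        self.modality = modality
        self.triggered: List[Tuple[float, str]] = []

    def on_message(self, topic: str, payload: bytes) -> None:
        message = json.loads(payload.decode("utf-8"))
        if message["modality"] == self.modality:
            # A real controller would start the vibration or light pattern here.
            self.triggered.append((message["freq_hz"], message["size"]))


broker = InProcessBroker()
vest = FeedbackController("haptic")
led_strips = FeedbackController("visual")
broker.subscribe(PATTERN_TOPIC, vest.on_message)
broker.subscribe(PATTERN_TOPIC, led_strips.on_message)

broker.publish(PATTERN_TOPIC, encode_pattern("haptic", 2.0, "medium"))
print(vest.triggered, led_strips.triggered)  # [(2.0, 'medium')] []
```

With a real broker, each controller would register its message handler through an MQTT client library and subscribe to the same topic. The fan-out logic would be unchanged, but delivery would then be asynchronous and subject to network latency.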

3.1.3. Independent Variables

Study 1 explored the impact of different feedback modes on street-crossing decision-making. The experiment included three independent variables, focusing on the effects of haptic and visual feedback. The specific independent variables are as follows:

- Feedback modality (two levels): haptic feedback and visual feedback.

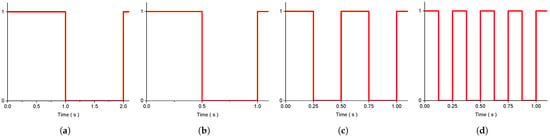

- Feedback frequency (four levels): 0.5 Hz, 1.0 Hz, 2.0 Hz, and 4.0 Hz, as shown in Figure 2.

Figure 2. Frequency patterns for haptic and visual feedback. 1 represents 100% intensity vibration or light on, while 0 indicates no vibration or light off. (a) 0.5 Hz, (b) 1.0 Hz, (c) 2.0 Hz, and (d) 4.0 Hz.

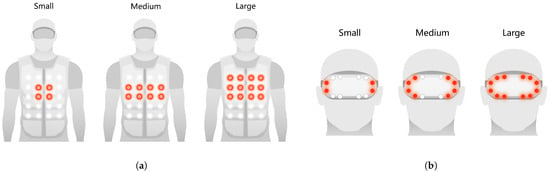

Figure 2. Frequency patterns for haptic and visual feedback. 1 represents 100% intensity vibration or light on, while 0 indicates no vibration or light off. (a) 0.5 Hz, (b) 1.0 Hz, (c) 2.0 Hz, and (d) 4.0 Hz. - Feedback size (three levels): small, medium, and large, as shown in Figure 3.

Figure 3. Configurations of haptic and visual feedback size. (a) Haptic stimulus size; (b) visual stimulus size.

Figure 3. Configurations of haptic and visual feedback size. (a) Haptic stimulus size; (b) visual stimulus size.
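The on/off encoding in Figure 2 can be expressed as a square wave sampled over time. The sketch below assumes that each cycle is split evenly between a full-intensity "on" phase and an "off" phase (a 50% duty cycle), and that the same function could drive both the vest motors and the LED strips. The function names and the sampling step are illustrative assumptions.

```python
from typing import List


def feedback_state(t_s: float, freq_hz: float, duty: float = 0.5) -> int:
    """Return 1 (full-intensity vibration / light on) or 0 (off) at time t_s.

    Assumes a square wave whose cycle is split evenly between on and off.
    """
    phase = (t_s * freq_hz) % 1.0  # position within the current cycle, in [0, 1)
    return 1 if phase < duty else 0


def sample_pattern(freq_hz: float, duration_s: float, dt_s: float) -> List[int]:
    """Sample the on/off pattern every dt_s seconds over duration_s seconds."""
    n_samples = round(duration_s / dt_s)
    return [feedback_state(i * dt_s, freq_hz) for i in range(n_samples)]


for f in (0.5, 1.0, 2.0, 4.0):
    print(f, sample_pattern(f, duration_s=2.0, dt_s=0.125))
```

Because the duty cycle is fixed, every frequency delivers the same total "on" time per unit of time. Only the rate of alternation changes, which keeps frequency separable from overall stimulus energy.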

3.1.4. Experimental Design

Both feedback modes used a periodic stimulation pattern (Figure 2), with haptic and visual feedback sharing the same frequency pattern. Each cycle was split evenly between a stimulation phase (1) and a pause phase (0), and the pattern repeated until the participant made a decision. Each mode featured 12 parameter combinations (4 frequencies × 3 sizes), for a total of 24 experimental conditions. The experiment proceeded in two stages. In the pre-experiment phase (24 trials), participants familiarized themselves with the characteristics of each feedback mode. In the formal experiment phase (24 trials), we randomized the order of the haptic and visual modes to minimize sequence effects. Within each mode, parameter combinations were ordered first by increasing size and then by increasing frequency, to aid participants’ recall in the subsequent questionnaires and interviews. This design facilitated more accurate evaluations of perceived urgency and decision confidence under each condition. In total, each participant completed 48 trials (24 haptic; 24 visual), 24 in the pre-experiment phase and 24 in the formal experiment phase.
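The trial structure above (12 conditions per modality, ordered by size and then frequency, with a randomized modality order) can be generated as follows. This is a sketch, not the study's code. The per-participant seed is hypothetical. Randomizing the modality order in the pre-experiment phase as well is an assumption, since the text states randomization only for the formal phase.

```python
import random
from typing import Dict, List, Tuple

FREQUENCIES_HZ = (0.5, 1.0, 2.0, 4.0)
SIZES = ("small", "medium", "large")  # listed in increasing order
MODALITIES = ("haptic", "visual")

Trial = Tuple[str, str, float]  # (modality, size, frequency in Hz)


def mode_block(modality: str) -> List[Trial]:
    """12 conditions for one modality: increasing size, then increasing frequency."""
    return [(modality, size, freq) for size in SIZES for freq in FREQUENCIES_HZ]


def phase_trials(rng: random.Random) -> List[Trial]:
    """24 trials: both modality blocks, in a randomized block order."""
    order = list(MODALITIES)
    rng.shuffle(order)  # counterbalance sequence effects between modalities
    return [trial for modality in order for trial in mode_block(modality)]


def participant_schedule(seed: int) -> Dict[str, List[Trial]]:
    """Pre-experiment (familiarization) and formal phases for one participant."""
    rng = random.Random(seed)  # hypothetical per-participant seed
    return {"pre": phase_trials(rng), "formal": phase_trials(rng)}


schedule = participant_schedule(seed=7)
print(len(schedule["pre"]) + len(schedule["formal"]))  # 48
print(schedule["formal"][:4])
```

Seeding per participant keeps each schedule reproducible while still varying the modality order across participants.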

3.1.5. Participants and Procedure

We recruited 16 participants (9 males; 7 females) from a university campus, with an average age of 23.7 years. Among the participants, 11 had prior experience with haptic or visual assistive devices, while 5 had no previous exposure to such technologies. During the experiment, participants closed their eyes to simulate visual impairment [6]. Hassan et al. demonstrated that sighted individuals with closed eyes and visually impaired pedestrians exhibit similar levels of decision consistency in street-crossing decisions when relying on non-visual senses [6]. While this simulation cannot perfectly replicate the experience of people with long-term visual impairments, it provides a valuable preliminary approach for studying the challenges they face and for evaluating potential assistive technologies in controlled settings.

Using a Pico controller, participants pushed the joystick forward to indicate “cross” or pulled it backward to indicate “wait” and then pressed the trigger to proceed to the next trial. After completing the trials, participants filled out two questionnaires. The first measured perceived urgency on a five-point scale [86]: 0 (not perceived), 1 (insignificant), 2 (low priority), 3 (high priority), and 4 (urgent). This choice aligns with research showing that users can distinguish at least four levels of urgency in vibration cues [86,87]. The second captured decision confidence on a scale from 0% to 100%, following methods used in prior human–machine interaction studies [88]. Together, these metrics offered a comprehensive view of how different feedback modes affected user decision-making.

3.2. Results

In Study 1, we collected data on user decisions, decision time, and behavioral metrics, including head rotation angles and movement distances, to evaluate performance. As the data were not normally distributed, we employed generalized estimating equations (GEEs) [89,90,91] to analyze our within-subjects experimental design with multiple factors (feedback modality, frequency, and size). GEE models can handle non-normally distributed dependent variables while accounting for the correlated nature of repeated-measures data [90,91]. For binary user decisions (0 or 1), we specified a binomial distribution with a probit link function. For decision time and behavioral metrics, we used a gamma distribution with a log link function. We also assessed users’ subjective perceptions through questionnaires. For urgency perception (which contained zero values), we applied a negative binomial distribution with an identity link function, whereas for decision confidence (range: 0–100; no zero values), we used a gamma distribution with a log link function. All models employed an exchangeable correlation structure to account for within-subject correlations and included feedback modality, frequency, and size as main effects, along with their interactions.
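The outcome-specific model choices and the shared exchangeable working correlation can be summarized in a short Python sketch. It does not fit a GEE (in practice a statistics package with GEE support would be used); the dictionary keys are hypothetical names, and the correlation estimator is the standard moment estimator of Liang and Zeger, which we assume rather than know matches the software used in this study.

```python
import math
from statistics import NormalDist

# Outcome -> (distribution, link function), as specified in the analysis plan.
MODEL_SPECS = {
    "cross_decision": ("binomial", "probit"),
    "decision_time": ("gamma", "log"),
    "head_behavior": ("gamma", "log"),
    "urgency": ("negative_binomial", "identity"),
    "confidence": ("gamma", "log"),
}

# Inverse links map the linear predictor eta back to the expected response mu.
INVERSE_LINK = {
    "probit": NormalDist().cdf,   # mu = Phi(eta), a probability in (0, 1)
    "log": math.exp,              # mu = exp(eta), always positive
    "identity": lambda eta: eta,  # mu = eta
}


def exchangeable_corr(n: int, alpha: float) -> list[list[float]]:
    """Working correlation for n repeated trials: 1 on the diagonal, alpha elsewhere."""
    return [[1.0 if i == j else alpha for j in range(n)] for i in range(n)]


def estimate_alpha(residuals_by_subject: list[list[float]], n_params: int) -> float:
    """Moment estimate of the common within-subject correlation.

    residuals_by_subject holds Pearson residuals grouped by participant;
    n_params is the number of regression coefficients in the mean model.
    """
    all_res = [r for subject in residuals_by_subject for r in subject]
    phi = sum(r * r for r in all_res) / (len(all_res) - n_params)  # scale estimate
    pair_sum, n_pairs = 0.0, 0
    for subject in residuals_by_subject:
        for i in range(len(subject)):
            for j in range(i + 1, len(subject)):
                pair_sum += subject[i] * subject[j]
                n_pairs += 1
    return pair_sum / ((n_pairs - n_params) * phi)


print(INVERSE_LINK["probit"](0.0))  # 0.5
print(exchangeable_corr(3, 0.2))
```

The exchangeable structure assumes that any two trials from the same participant are equally correlated, which is a reasonable default when trial order is not expected to drive the dependence.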

3.2.1. Cross Rate, Decision Time, and User Behavior Data in Study 1

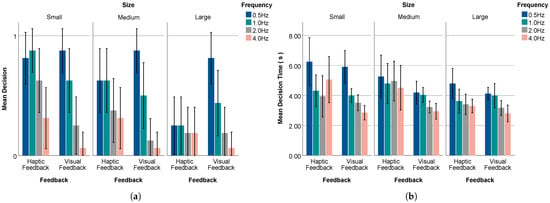

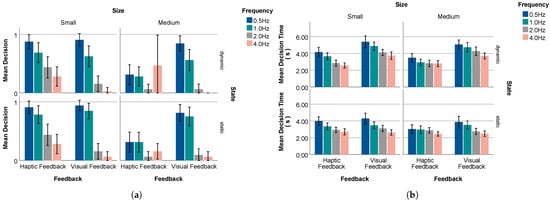

Cross rate.

We recorded participants’ crossing decisions under each feedback mode and parameter combination, coding a decision to cross as “1” and a decision to wait as “0”. Main effects were found for frequency and size. We also observed significant interaction effects between feedback and frequency and between feedback and size. The mean cross rate across feedback modality, frequency, and size is illustrated in Figure 4a. Post hoc Bonferroni pairwise comparisons showed that the cross rates at 0.5 Hz (0.72) and 1.0 Hz (0.55) were each significantly higher than those at 2.0 Hz (0.27) and 4.0 Hz (0.13). The cross rates for the small size (0.54) and the medium size (0.40) were each significantly higher than that for the large size (0.26).
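The cross rate is the proportion of trials coded 1, and the Bonferroni correction multiplies each raw pairwise p-value by the number of comparisons (six for four frequency levels, three for three size levels), capping the result at 1. The sketch below illustrates both steps; the function names are hypothetical, and no p-values from this study are used.

```python
from itertools import combinations


def cross_rate(decisions: list[int]) -> float:
    """Proportion of trials coded 1 (cross) rather than 0 (wait)."""
    return sum(decisions) / len(decisions)


def bonferroni_pairwise(levels: list[str],
                        raw_p: dict[tuple[str, str], float]) -> dict[tuple[str, str], float]:
    """Adjust raw pairwise p-values: p_adj = min(1, p * m), m = number of pairs."""
    pairs = list(combinations(levels, 2))
    m = len(pairs)  # e.g. 4 frequency levels -> 6 comparisons
    return {pair: min(1.0, raw_p[pair] * m) for pair in pairs}


# Made-up raw p-values for three size levels, for illustration only.
example = bonferroni_pairwise(
    ["small", "medium", "large"],
    {("small", "medium"): 0.20, ("small", "large"): 0.004, ("medium", "large"): 0.01},
)
print(example)
print(cross_rate([1, 0, 1, 1]))  # 0.75
```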

Figure 4.

Mean cross rate and decision time. (a) Mean cross rate; (b) mean decision time.

Decision time.

We recorded participants’ decision time under each feedback mode and parameter combination, defined as the interval from receiving the feedback signal to making the final crossing decision. Main effects were found for feedback, frequency, and size, along with significant interaction effects between feedback and frequency and between feedback and size. The mean decision time across feedback modality, frequency, and size is illustrated in Figure 4b. Post hoc Bonferroni pairwise comparisons showed that decision time was significantly longer with haptic feedback (4.46 s) than with visual feedback (3.66 s). Decision time at 4.0 Hz (3.49 s) was significantly shorter than at 0.5 Hz (5.05 s) and 1.0 Hz (4.11 s); decision time at 2.0 Hz (3.67 s) was significantly shorter than at 0.5 Hz (5.05 s) and 1.0 Hz (4.11 s); and decision time at 1.0 Hz (4.11 s) was significantly shorter than at 0.5 Hz (5.05 s). Decision time with the large size (3.61 s) was significantly shorter than with the small size (4.36 s) and the medium size (4.18 s).

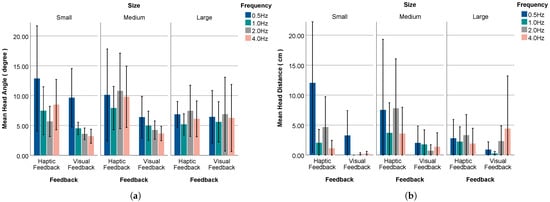

Head rotation angle.

We recorded the total head rotation angle during the decision time. A main effect was found for feedback. The mean head rotation angle across feedback modalities, frequencies, and sizes is illustrated in Figure 5a. Post hoc Bonferroni pairwise comparisons showed that the head rotation angle with haptic feedback (8.04 degrees) was significantly larger than with visual feedback (5.27 degrees).
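The section does not state how the total head rotation angle was computed. One plausible sketch, assuming the headset logs head orientation once per frame as unit quaternions in (w, x, y, z) order with y as the vertical axis, sums the frame-to-frame rotation angles over the decision window. All names below are hypothetical.

```python
import math

Quaternion = tuple[float, float, float, float]  # (w, x, y, z), assumed unit length


def angle_between(q1: Quaternion, q2: Quaternion) -> float:
    """Smallest rotation angle, in degrees, taking orientation q1 to q2."""
    dot = abs(sum(a * b for a, b in zip(q1, q2)))  # abs(): q and -q are the same rotation
    dot = min(1.0, dot)  # guard against rounding slightly above 1
    return math.degrees(2.0 * math.acos(dot))


def total_rotation(samples: list[Quaternion]) -> float:
    """Sum of frame-to-frame rotation angles over the decision window."""
    return sum(angle_between(a, b) for a, b in zip(samples, samples[1:]))


def yaw_quat(deg: float) -> Quaternion:
    """Unit quaternion for a rotation of `deg` degrees about the vertical (y) axis."""
    half = math.radians(deg) / 2.0
    return (math.cos(half), 0.0, math.sin(half), 0.0)


# Head turns 5 degrees one way, then 3 degrees back: 8 degrees in total.
print(round(total_rotation([yaw_quat(0), yaw_quat(5), yaw_quat(2)]), 6))  # 8.0
```

Summing incremental angles, rather than measuring only the start-to-end difference, counts back-and-forth scanning, which is the kind of searching behavior a longer decision window would be expected to produce.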

Figure 5.

The mean head rotation angle and head movement distance result of Study 1. (a) Mean head rotation angle; (b) mean head movement distance.

Head movement distance.

We recorded the total head movement distance during the decision time. Main effects were found for feedback, frequency, and size, along with a significant interaction effect between feedback and size. The mean total head movement distance across feedback modalities, frequencies, and sizes is illustrated in Figure 5b. Post hoc Bonferroni pairwise comparisons showed that the total head movement distance with haptic feedback (3.81 cm) was significantly longer than with visual feedback (1.23 cm).

We also recorded the head movement distance along the X, Y, and Z dimensions. For the X dimension, a main effect was found for feedback; post hoc Bonferroni pairwise comparisons showed that the distance with haptic feedback (4.66 cm) was significantly longer than with visual feedback (2.99 cm). For the Y dimension, main effects were found for feedback and frequency. Post hoc Bonferroni pairwise comparisons showed that the distance with haptic feedback (2.30 cm) was significantly longer than with visual feedback (1.18 cm), and that the distance at 0.5 Hz (2.34 cm) was significantly longer than at 1.0 Hz (1.53 cm) and 4.0 Hz (1.32 cm). For the Z dimension, a main effect was found for feedback; post hoc Bonferroni pairwise comparisons showed that the distance with haptic feedback (4.40 cm) was significantly longer than with visual feedback (3.13 cm).
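The distance measures are not formally defined in this section. The sketch below assumes per-frame head positions in centimetres and accumulates frame-to-frame displacement, both as a 3D path length and separately along each axis. These definitions are assumptions and may differ from those behind the reported values; the function name is hypothetical.

```python
import math

Position = tuple[float, float, float]  # (x, y, z) in centimetres


def path_lengths(samples: list[Position]) -> dict[str, float]:
    """Accumulate frame-to-frame head displacement over the decision window.

    Returns the 3D path length ("total") and the per-axis path lengths,
    i.e. the sum of absolute displacements along each axis.
    """
    totals = {"total": 0.0, "x": 0.0, "y": 0.0, "z": 0.0}
    for (x0, y0, z0), (x1, y1, z1) in zip(samples, samples[1:]):
        dx, dy, dz = x1 - x0, y1 - y0, z1 - z0
        totals["total"] += math.sqrt(dx * dx + dy * dy + dz * dz)
        totals["x"] += abs(dx)
        totals["y"] += abs(dy)
        totals["z"] += abs(dz)
    return totals


print(path_lengths([(0, 0, 0), (3, 4, 0), (3, 4, 2)]))
# {'total': 7.0, 'x': 3.0, 'y': 4.0, 'z': 2.0}
```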

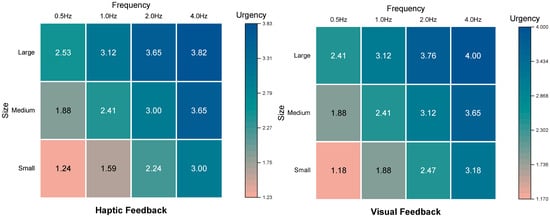

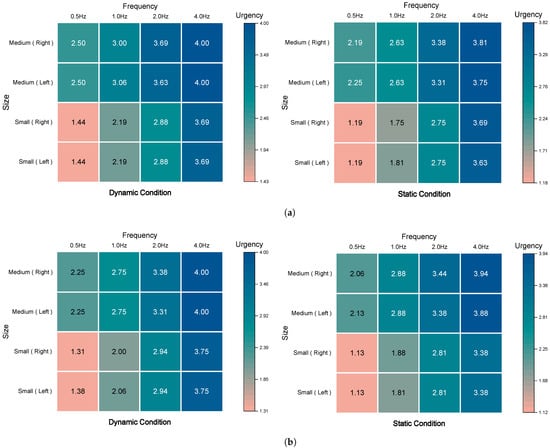

3.2.2. User Perception of Feedback Patterns

After the test, the participants completed a questionnaire measuring both urgency and confidence. GEEs revealed significant effects of frequency and size on urgency (Figure 6). Post hoc Bonferroni pairwise comparisons showed that urgency at 4.0 Hz (3.61) was significantly higher than at 2.0 Hz (3.04), 1.0 Hz (2.41), and 0.5 Hz (1.84); urgency at 2.0 Hz (3.07) was significantly higher than at 1.0 Hz (2.41) and 0.5 Hz (1.84); and urgency at 1.0 Hz (2.41) was significantly higher than at 0.5 Hz (1.84). Urgency for the large size (3.34) was significantly higher than for the medium size (2.77) and the small size (2.07), and urgency for the medium size (2.77) was significantly higher than for the small size (2.07).

Figure 6.

Mean user-perceived urgency of Study 1.

For confidence ratings, GEEs revealed significant effects of frequency and size on confidence (Figure 7). Post hoc Bonferroni pairwise comparisons showed that confidence at 4.0 Hz (92.87%) was significantly higher than at 2.0 Hz (85.73%), 1.0 Hz (79.77%), and 0.5 Hz (79.64%), and that confidence at 2.0 Hz (85.73%) was significantly higher than at 1.0 Hz (79.77%). Confidence for the large size (87.77%) was significantly higher than for the medium size (81.43%).

Figure 7.

Mean user-perceived confidence of Study 1.

Interview results show that over 62% of participants (10 of 16; the remaining 6 preferred visual feedback) explicitly expressed a preference for the haptic feedback system, stating that its dynamic changes in vibration frequency conveyed the urgency of a situation more intuitively. This immediate physical sensation helped them better understand and assess the level of danger in the current context. As one participant noted, “When the vibration frequency is very high, I can clearly feel that ‘danger is approaching’, which gives me more confidence in judging the level of urgency and making quick decisions”. However, some participants pointed out that excessive vibration amplitude or overly high frequencies could cause discomfort or fatigue, indicating the need to balance alertness against user comfort. In contrast, participants reported that visual feedback conveyed a stronger sense of urgency than haptic feedback, especially the intense urgency evoked by high-frequency flashing, which made them more cautious during decision-making. As the flashing frequency increased, participants’ confidence in their decisions also improved. They generally regarded flashing frequency as the key factor in conveying urgency: faster flashes effectively induced a sense of time pressure, while lower frequencies led some users to shift their attention to changes in size. However, the combination of high-frequency flashing and large size also had drawbacks, as it could cause perceptual fatigue and confusion in judgment. As one user observed, “When the light flashes intensely, it’s hard to tell whether the change comes from the frequency or the size. I feel overwhelmed by the flashing and have to make an immediate decision”.

The user feedback points to a trade-off between the intuitive nature of haptic feedback and the stronger urgency signals of visual feedback, suggesting that an optimal system should balance perceptual effectiveness with user comfort to avoid cognitive overload during critical decision-making moments. A few participants further suggested incorporating directional motion cues, such as left-to-right vibration sequences or light flows, to better perceive the movement of oncoming vehicles. They noted that, while frequency effectively conveyed urgency, directional information could enhance their ability to assess approaching hazards in more dynamic environments. Taken together, these insights identify frequency as the core parameter for both haptic and visual feedback, with size supporting users’ confidence and situational awareness. Building on these findings, we extended our investigation beyond controlled traffic light crossings to more complex, vehicle-based crossing scenarios. Study 2 explores how integrating directional cues alongside frequency and size can further support visually impaired pedestrians in making safer crossing decisions when navigating roads with moving vehicles.

4. Study 2: Haptic and Visual Feedback for Assisting Visually Impaired Pedestrians in Vehicle-Based Crossing Decisions

Unlike traffic light-controlled intersections, where pedestrian signals regulate vehicle movement and create predictable stop-and-go patterns, vehicle-based crossings present greater uncertainty for visually impaired pedestrians. In scenarios such as mid-block crossings or uncontrolled intersections, the absence of explicit stop signals increases cognitive load, requiring pedestrians to judge the speed, direction, and intent of approaching vehicles in real time. These conditions demand more dynamic situational awareness, making it essential to explore how additional sensory cues—such as directional motion feedback—can support safer crossing decisions.

Extending the experimental setup and feedback modalities from Study 1, Study 2 focused on the vehicle crossing scenario. While retaining the same frequency settings, we refined the size variable and incorporated the feedback state and direction as additional cue dimensions to enhance visually impaired users’ ability to judge safe crossing times. We assessed these two modes through interaction design, user evaluation, and experimental data to clarify how haptic or visual feedback might facilitate crossing decisions.

4.1. Experimental Design

Prior work indicates that haptic information transmission can be optimized through parameter adjustments including speed, direction, intensity, and positioning [92]. Dynamic haptic signals have shown multiple advantages over static feedback: they effectively guide attention and convey hazard information while improving processing speed and accuracy [93,94], provide more natural and intuitive interactions [95,96], and enable the robust recognition of continuous motion and direction [97]. For visual feedback, research confirms that many visually impaired individuals retain sufficient light perception capabilities to discern positional and brightness changes [27,28].

Building on these findings, Study 2 maintained the same feedback implementation as Study 1 (as shown in Figure 8) while introducing additional parameters to examine both static and dynamic patterns, as well as directional cues for indicating the vehicle approach. This enhanced design allows for a systematic comparison of how different signal patterns aid safe crossing decisions across both feedback channels.

Figure 8.

Overview of haptic and visual feedback systems with signal pattern types.

4.1.1. Independent Variables

In Study 2, based on the evaluation results from Study 1, we adopted and adjusted the settings to optimize the user experience. In the experiment, haptic feedback was compared with visual feedback. The independent variables included the following:

- Feedback modality (two levels): haptic feedback and visual feedback.

- Feedback direction (two levels): left and right.

- Feedback state (two levels): dynamic and static, as shown in Figure 9.

- Feedback frequency (four levels): 0.5 Hz, 1.0 Hz, 2.0 Hz, and 4.0 Hz, as shown in Figure 2.

- Feedback size (two levels): small and medium.

Figure 9. Haptic and Visual Feedback in Study 2. (a) Dynamic feedback design (right direction): stimulation transitions from position 1 to position 2, with an equal duration at each position. (b) Static feedback design (right direction): using the same stimulation pattern as in Study 1.
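To make the dynamic and static patterns concrete, the following sketch returns which of two stimulation positions is active at time t. It rests on several assumptions not stated in the text: for dynamic feedback, the stimulation half of each cycle is split equally between position 1 and position 2; the left direction reverses that order; and static feedback drives a single fixed position. The function name is hypothetical.

```python
def active_positions(t: float, freq_hz: float, state: str, direction: str) -> set[int]:
    """Which of two hypothetical stimulation positions (1, 2) are on at time t (s)."""
    phase = (t * freq_hz) % 1.0
    if phase >= 0.5:
        return set()  # pause half of the cycle, as in Study 1
    if state == "static":
        # Assumption: static feedback pulses one fixed position per direction.
        return {2 if direction == "right" else 1}
    # Dynamic: the stimulation half is split equally between the two positions,
    # moving 1 -> 2 for "right" and 2 -> 1 for "left" (assumed).
    first, second = (1, 2) if direction == "right" else (2, 1)
    return {first} if phase < 0.25 else {second}


# One 1 Hz cycle of dynamic, right-direction feedback sampled every 0.2 s.
print([active_positions(t / 5, 1.0, "dynamic", "right") for t in range(5)])
```

Under these assumptions, a higher frequency shortens the time spent at each position, so the apparent sweep speeds up as the cue becomes more urgent.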

4.1.2. Experimental Design

Study 2 employed both haptic and visual feedback modes, maintaining the same periodic stimulation pattern as in Study 1 (see Figure 2), with each mode containing 32 parameter combinations (2 directions × 2 states × 4 frequencies × 2 sizes). The experiment was divided into two stages: a pre-experiment phase and a formal experiment phase. Each phase consisted of 64 trials (32 haptic feedback; 32 visual feedback), resulting in a total of 128 trials per participant. The pre-experiment phase aimed to familiarize participants with the basic characteristics of the feedback modes. In the formal experiment phase, the presentation order of the variables feedback mode, state, and direction was randomized to avoid sequence effects. Meanwhile, within each feedback mode, the parameter combinations were presented in a fixed order (with the size gradually increasing first, followed by frequency gradually increasing). This design helped participants more accurately recall and evaluate their perceived urgency and decision confidence under each condition during subsequent questionnaire assessments and interviews.
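The Study 2 trial order can be sketched in the same way. This hypothetical generator assumes that mode order is randomized first, that the four state × direction blocks are shuffled within each mode, and that the eight frequency × size combinations keep a fixed order within each block, with size varying fastest.

```python
import itertools
import random

MODES = ["haptic", "visual"]
STATES = ["dynamic", "static"]
DIRECTIONS = ["left", "right"]
FREQUENCIES_HZ = [0.5, 1.0, 2.0, 4.0]
SIZES = ["small", "medium"]


def phase_schedule(rng: random.Random) -> list[tuple[str, str, str, float, str]]:
    """One phase: 2 modes x 4 state/direction blocks x 8 fixed-order combinations."""
    modes = MODES[:]
    rng.shuffle(modes)  # randomized mode order
    trials = []
    for mode in modes:
        blocks = list(itertools.product(STATES, DIRECTIONS))
        rng.shuffle(blocks)  # randomized state/direction order within a mode
        for state, direction in blocks:
            for freq in FREQUENCIES_HZ:  # fixed order: frequency increases...
                for size in SIZES:       # ...with size varying fastest
                    trials.append((mode, state, direction, freq, size))
    return trials


rng = random.Random(3)
total = len(phase_schedule(rng)) + len(phase_schedule(rng))
print(total)  # 128 trials per participant (pre-experiment + formal)
```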

4.1.3. Participants and Procedure

Study 2 recruited 16 participants (9 male; 7 female) from a university campus, with an average age of 23.7 years. Among the participants, nine had prior experience with haptic or visual assistive devices, while seven had no previous exposure to such technologies. As in Study 1, the participants were required to simulate a visually impaired state by closing their eyes and making crossing decisions based on the feedback received. After the experiment, the participants also completed two evaluation questionnaires concerning urgency and decision confidence, using the same assessment methods as in Study 1.

4.2. Results

In Study 2, we collected the same types of data as in Study 1, including user decisions, decision time, behavioral metrics, and subjective perceptions. We analyzed them with the same GEE approach, using the same distribution and link function specification for each data type as in Study 1. All models used an exchangeable correlation structure to account for within-subject correlations and included feedback modality, direction, state, frequency, and size as main effects, along with their interactions.

4.2.1. Cross Rate, Decision Time, and User Behavior Data in Study 2

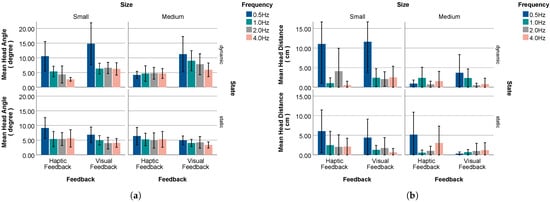

Cross rate.

Main effects were found for frequency, size, and state. We also observed significant interaction effects between feedback and frequency and between feedback and size. The mean cross rate across feedback modalities, frequencies, sizes, directions, and states is illustrated in Figure 10a. Post hoc Bonferroni pairwise comparisons showed that the cross rates at 0.5 Hz (0.76) and 1.0 Hz (0.60) were each significantly higher than those at 2.0 Hz (0.15) and 4.0 Hz (0.09). The cross rate for the small size (0.51) was significantly higher than that for the medium size (0.24).

Figure 10.

Mean cross rate and decision time. (a) Mean cross rate; (b) mean decision time.

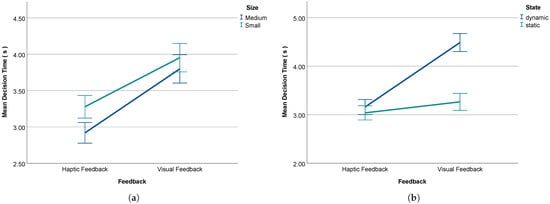

Decision time.

Main effects were found for feedback, frequency, size, and state, along with significant interaction effects between feedback and size and between feedback and state. The mean decision time across feedback modality, frequency, size, direction, and state is illustrated in Figure 10b. Post hoc Bonferroni pairwise comparisons showed that decision time was significantly longer with visual feedback (3.78 s) than with haptic feedback (3.07 s). Decision time at 4.0 Hz (2.85 s) was significantly shorter than at 0.5 Hz (4.10 s), 1.0 Hz (3.63 s), and 2.0 Hz (3.17 s); decision time at 2.0 Hz (3.17 s) was significantly shorter than at 0.5 Hz (4.10 s) and 1.0 Hz (3.63 s); and decision time at 1.0 Hz (3.63 s) was significantly shorter than at 0.5 Hz (4.10 s). Decision time with the medium size (3.28 s) was significantly shorter than with the small size (3.53 s), and decision time in the dynamic state (3.73 s) was significantly longer than in the static state (3.11 s).

Head rotation angle.

We recorded the total head rotation angle during the decision time. Main effects were found for frequency and state; no other main or interaction effects were found. The mean head rotation angle across feedback modalities, frequencies, sizes, directions, and states is illustrated in Figure 11a. Post hoc Bonferroni pairwise comparisons showed that the total head rotation angle at 0.5 Hz (8.07 degrees) was significantly larger than at 1.0 Hz (5.51 degrees), 2.0 Hz (5.13 degrees), and 4.0 Hz (4.64 degrees), and that the angle at 1.0 Hz (5.51 degrees) was significantly larger than at 4.0 Hz (4.64 degrees). The head rotation angle in the dynamic state (6.38 degrees) was significantly larger than in the static state (5.11 degrees).

Figure 11.

Mean head rotation angle and head movement distance results of Study 2. (a) Mean head rotation angle; (b) mean head movement distance.

Head movement distance.

We recorded the total head movement distance during the decision time. Main effects were found for feedback, frequency, and size. The mean head movement distance across feedback modalities, frequencies, sizes, directions, and states is illustrated in Figure 11b. Post hoc Bonferroni pairwise comparisons showed that the total head movement distance for the small size (2.80 cm) was significantly longer than for the medium size (1.56 cm).

We also recorded the head movement distance along the X, Y, and Z dimensions. For the X dimension, main effects were found for frequency and state. Post hoc Bonferroni pairwise comparisons showed that the distance at 0.5 Hz (4.72 cm) was significantly longer than at 1.0 Hz (3.16 cm) and 4.0 Hz (2.73 cm), and that the distance in the dynamic state (3.89 cm) was significantly longer than in the static state (2.93 cm). For the Y dimension, main effects were found for frequency, size, and state. Post hoc Bonferroni pairwise comparisons showed that the distance at 0.5 Hz (1.97 cm) was significantly longer than at 1.0 Hz (1.31 cm), 2.0 Hz (1.10 cm), and 4.0 Hz (1.03 cm), and that the distance at 1.0 Hz (1.31 cm) was significantly longer than at 2.0 Hz (1.10 cm) and 4.0 Hz (1.03 cm). The distance for the small size (1.42 cm) was significantly longer than for the medium size (1.20 cm), and the distance in the dynamic state (1.46 cm) was significantly longer than in the static state (1.17 cm). For the Z dimension, a main effect was found for frequency; post hoc Bonferroni pairwise comparisons showed that the distance at 0.5 Hz (4.74 cm) was significantly longer than at 1.0 Hz (3.24 cm), 2.0 Hz (2.82 cm), and 4.0 Hz (2.71 cm).

4.2.2. User Perception of Feedback Patterns

After the test, participants completed a questionnaire measuring both urgency and confidence. GEE analysis revealed significant effects of frequency, size, and state on urgency; no other main or interaction effects were found. The mean urgency across feedback modalities, frequencies, sizes, directions, and states is illustrated in Figure 12. Post hoc Bonferroni pairwise comparisons showed that urgency at 4.0 Hz (3.81) was significantly higher than at 0.5 Hz (1.73), 1.0 Hz (2.38), and 2.0 Hz (3.16); urgency at 2.0 Hz (3.16) was significantly higher than at 0.5 Hz (1.73) and 1.0 Hz (2.38); and urgency at 1.0 Hz (2.38) was significantly higher than at 0.5 Hz (1.73). Urgency for the medium size (3.18) was significantly higher than for the small size (2.37), and urgency in the dynamic state (2.88) was significantly higher than in the static state (2.67).

Figure 12.

Mean user-perceived urgency of Study 2. (a) Haptic feedback urgency; (b) visual feedback urgency.

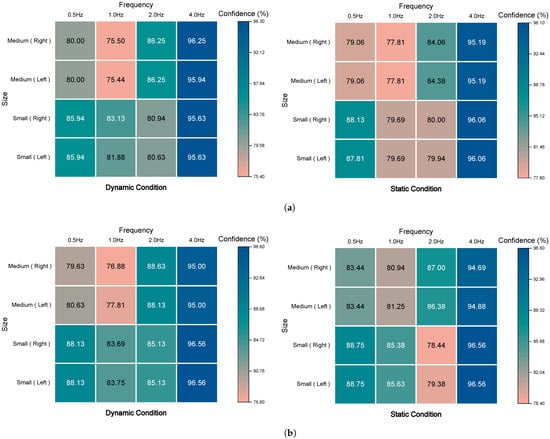

For confidence ratings, GEE analysis revealed a significant effect of frequency; no other main or interaction effects were found. The mean confidence across feedback modalities, frequencies, sizes, directions, and states is illustrated in Figure 13. Post hoc Bonferroni pairwise comparisons showed that confidence at 4.0 Hz (95.74%) was significantly higher than at 0.5 Hz (84.14%), 1.0 Hz (80.35%), and 2.0 Hz (83.82%).

Figure 13.

Mean user-perceived confidence of Study 2. (a) Haptic feedback confidence; (b) visual feedback confidence.

In post-experiment interviews, over 81% of respondents (13 for haptic feedback; 3 for visual feedback) explicitly expressed a preference for the haptic feedback system, particularly favoring its dynamic tactile presentation (11 for the dynamic condition; 5 for the static condition). They felt that dynamic haptic feedback helped them perceive the vehicle’s trajectory more intuitively and accurately. As one participant noted, “The dynamic Haptic Feedback allowed me to clearly feel the process of the vehicle crossing by the side of my torso, which significantly boosted my confidence in making decisions”. Additionally, users unanimously agreed that changes in feedback frequency were crucial for perceiving the distance and urgency of approaching vehicles. They stated that the gradual increase in vibration or light frequency effectively conveyed a sense of “urgency” as the vehicle approached, while changes in the feedback area helped them judge the vehicle’s distance. The combination of these two dimensions allowed them to quickly assess danger and make cautious decisions. Most participants pointed out that high-frequency vibrations enabled them to recognize imminent danger more immediately than high-frequency visual cues, giving them greater confidence in their decision-making. Regarding the feedback area, one participant commented as follows: “A smaller vibration area allowed me to perceive the cues more precisely; combined with frequency changes, I felt more confident in my decision-making”. Another user stated, “Frequency changes within a small area allowed me to quickly understand the cues while consuming fewer cognitive resources”.

The user feedback revealed a strong user preference for haptic feedback systems that combine dynamic presentation with frequency modulation, suggesting that tactile cues may offer cognitive advantages for urgent safety decisions by providing spatially intuitive information while imposing a lower cognitive load compared to visual alternatives. However, some participants suggested that, in specific scenarios, static visual cues might offer unique advantages, particularly when multiple vehicles needed to be perceived; changes in light areas could intuitively convey information about traffic density. Participants also offered suggestions for enhancing the feedback modes. Some proposed using different frequencies or sizes to represent specific vehicle information, such as vehicle size, type, and speed, to better assist blind individuals in perceiving vehicular details and making more informed crossing decisions. As one participant stated, “Using different sizes to indicate vehicle sizes, and including non-motorized vehicles in the perceptual information, could ensure safety during daily travel”. These suggestions highlight the users’ need for multi-dimensional traffic information acquisition.

5. Discussion

We explored the effectiveness of haptic and visual feedback in assisting visually impaired individuals with crossing decisions. By systematically adjusting different feedback parameter combinations, we compared the performance of the two feedback methods in conveying critical information such as traffic light status and vehicle movement. The study found that, in the traffic light scenario (Study 1), visual feedback significantly reduced users’ decision-making time, whereas, in the vehicle crossing scenario (Study 2), haptic feedback demonstrated a more pronounced advantage. This section discusses the theoretical significance of these findings based on the existing literature, provides an in-depth analysis of the study’s limitations, and proposes future research directions and practical recommendations.

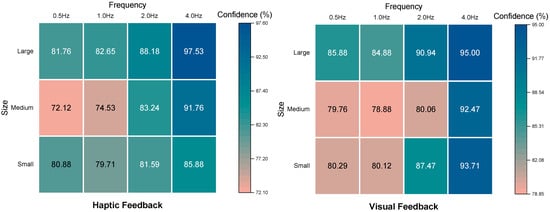

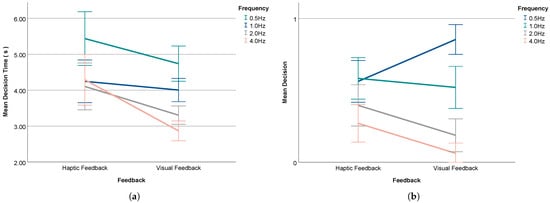

5.1. Effects of Feedback Urgency on Decision Time and Crossing Outcomes

In Study 1, focusing on the traffic light crossing scenario, visual feedback led to significantly shorter decision times than haptic feedback. Across frequency conditions, participants interpreted visual feedback as conveying higher urgency, which shortened their decision times (see Figure 14a) and clarified their crossing intentions (Figure 14b). These findings suggest that visual feedback may promote timely and cautious decisions for individuals with residual light perception. However, from a user-experience perspective, most participants still preferred haptic feedback. While visual feedback showed advantages in decision-making speed, prior research has identified potential limitations, including perceptual fatigue and possible confusion with ambient lighting during extended use [27]. Overall, haptic feedback offered a more comfortable experience, whereas visual feedback supported faster decision-making in traffic signal scenarios.

Figure 14.

Decision time and cross rate across feedback and frequency of Study 1. (a) Mean decision time for feedback and frequency; (b) mean cross rate for feedback and frequency.

Further analysis showed that the modality difference remained significant across different feedback area conditions. Visual feedback consistently led to faster decision times (see Figure 15a). However, participants’ crossing intentions were less explicit with visual feedback compared to haptic feedback (see Figure 15b). This suggests that, while visual feedback enhances decision speed, haptic feedback may better support clear action intentions, highlighting the complementary strengths of both modalities for different scenarios or preferences.

Figure 15.

Decision time and cross rate across feedback and size of Study 1. (a) Mean decision time for feedback and size; (b) mean cross rate for feedback and size.

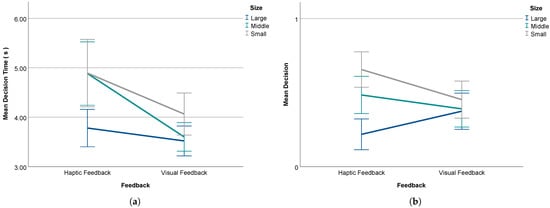

In Study 2, which addressed vehicle crossing, haptic feedback yielded two key benefits. First, it effectively reduced decision-making time; second, its ability to convey vehicle proximity in a tangible manner earned widespread user approval. Notably, when frequency-related decisions were considered (see Figure 16a), visual feedback maintained its clarity advantage observed in Study 1. However, area-based decisions (Figure 16b) revealed stronger performance for haptic feedback, as participants more readily sensed urgency through changes in vibration area [85].

Figure 16.

Cross rate across feedback, frequency, and size of Study 2. (a) Mean cross rate for feedback and frequency; (b) mean cross rate for feedback and size.

Moreover, the advantages of haptic feedback extended beyond decision quality to decision speed under various feedback parameters. The data showed that haptic feedback consistently produced shorter decision times than visual feedback, both across feedback area parameters (see Figure 17a) and across feedback state parameters (see Figure 17b). The speed advantage of haptic feedback was especially pronounced under dynamic feedback, underscoring its effectiveness in scenarios requiring rapid responses to moving vehicles.

Figure 17.

Decision time across the feedback, size, and state of Study 2. (a) Mean decision time for feedback and size; (b) mean decision time for feedback and state.

The parameter analysis identified several feedback combinations that performed well in each scenario. In Study 1 (see Table 2), the combinations small (1.0 Hz) and large (1.0 Hz) for haptic feedback, and medium (0.5 Hz) and small (4.0 Hz) for visual feedback, effectively distinguished between “cross” and “wait” decision scenarios. In Study 2 (see Table 3), directional haptic feedback used the combinations small (0.5 Hz) and medium (2.0 Hz) in both dynamic and static conditions, while visual feedback consistently used small (0.5 Hz) and small (4.0 Hz) in both states. These parameter combinations supported timely decisions and an appropriate level of alertness while avoiding discomfort caused by excessive urgency. Beyond technical parameters, we also examined subjective user preferences: 62% of participants preferred haptic feedback in the traffic light crossing scenario, rising to 81% in the vehicle crossing scenario. Because the performance advantage nonetheless shifted between modalities across the two scenarios (visual feedback was faster at signalized intersections, haptic feedback in vehicle-based crossings), combining both feedback types may offer complementary benefits for comprehensive crossing assistance. In addition to preference data, we analyzed performance metrics to further understand the optimal implementation approaches. Although users’ overall decision times were shorter in the static state during Study 2, further analysis showed no significant difference in decision time between dynamic and static modes for haptic feedback, whereas visual feedback was significantly faster under static than under dynamic conditions. Consequently, haptic feedback may adopt either a dynamic or a static approach based on user preference, whereas static visual feedback appears optimal for efficient decision-making regarding vehicle movement.

Table 2.

Effects of feedback parameters on user responses in Study 1.

Table 3.

Effects of feedback parameters on user responses in Study 2.

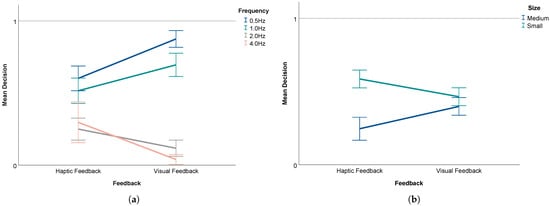

5.2. Analysis of Confidence Levels in Relation to Decision Time and Crossing Behavior

In both Study 1 and Study 2, we conducted correlation analyses between users’ decision confidence and crossing frequencies (see Table 4 and Table 5). Focusing on fully confident decisions (confidence = 100%), we found that the visual feedback group consistently produced more such decisions than the haptic feedback group. The visual feedback group made 73 fully confident decisions in Study 1, 21.67% more than the haptic feedback group’s 60, and 180 in Study 2 (90 dynamic, 90 static), about 9.1% more than the haptic feedback group’s 165 (87 dynamic, 78 static). In addition, within the haptic feedback group the dynamic condition yielded more fully confident decisions than the static condition (87 vs. 78), whereas the visual feedback group showed no such difference (90 vs. 90). As the feedback frequency increased in both the signal-controlled (Study 1) and vehicle crossing (Study 2) scenarios, users receiving visual feedback more often chose to wait. This pattern likely arises from the clarity of visual feedback [27]: higher frequencies heighten environmental awareness and encourage more cautious decisions. However, environmental conditions such as intense sunlight may reduce the perceptibility of visual cues [27], potentially diminishing user confidence in visual feedback under certain circumstances.
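The percentage differences above are relative increases over the haptic baseline, that is, (visual − haptic) / haptic × 100. The short check below reproduces them from the reported counts; the function name is illustrative only.

```python
def relative_increase(value: float, baseline: float) -> float:
    """Percentage by which `value` exceeds `baseline`."""
    return (value - baseline) / baseline * 100


# Counts of fully confident (confidence = 100%) decisions from the text.
STUDY1 = {"visual": 73, "haptic": 60}
STUDY2 = {"visual": 90 + 90, "haptic": 87 + 78}  # dynamic + static

for name, counts in (("Study 1", STUDY1), ("Study 2", STUDY2)):
    pct = relative_increase(counts["visual"], counts["haptic"])
    print(f"{name}: +{pct:.2f}%")
# Study 1: +21.67%
# Study 2: +9.09%
```

The Study 2 value of 9.09% corresponds to the “about 9.1%” stated in the text.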

Table 4.

User responses to various feedback parameters in Study 1.

Table 5.

User responses to various feedback parameters in Study 2.

From the perspective of confidence, we analyzed decision times by grouping confidence into three levels: full confidence (100%), high confidence (90–99%), and low confidence (<90%). In Study 1 (see Table 6), we examined how confidence level related to decision time in the signal-controlled crossing scenario. Decision times (see Table 7) for haptic feedback (full confidence: 3.65 s < high confidence: 4.24 s < low confidence: 4.79 s) were longer than those for visual feedback (full confidence: 3.45 s < low confidence: 3.82 s < high confidence: 4.15 s) at every confidence level. This result indicates that visual feedback supports faster decisions across all confidence levels. The gap was largest at low confidence (4.79 s vs. 3.82 s), suggesting that when users lack confidence, high-frequency flashing in visual feedback generates a stronger sense of urgency, prompting quicker action.
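The level-by-level comparison can be checked directly from the reported means. The sketch below, using illustrative names and only the values quoted in the text, computes the haptic-minus-visual difference at each confidence level and identifies where the gap is largest.

```python
# Study 1 mean decision times (s) by confidence level, transcribed from the text.
STUDY1_TIMES = {
    "haptic": {"full": 3.65, "high": 4.24, "low": 4.79},
    "visual": {"full": 3.45, "high": 4.15, "low": 3.82},
}


def haptic_minus_visual(times: dict) -> dict:
    """Per-level difference (haptic - visual), rounded to 0.01 s."""
    return {
        level: round(times["haptic"][level] - times["visual"][level], 2)
        for level in times["haptic"]
    }


gaps = haptic_minus_visual(STUDY1_TIMES)
print(gaps)  # {'full': 0.2, 'high': 0.09, 'low': 0.97}
print("largest gap at:", max(gaps, key=gaps.get))  # largest gap at: low
```

A positive difference at every level corresponds to visual feedback being faster throughout; the largest difference at low confidence is consistent with the urgency interpretation above.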

Table 6.

Study 1: decision time (s) and confidence levels across different feedback parameters.

Table 7.

Decision time (s) analysis across confidence levels and feedback types.

In Study 2, we examined both dynamic and static conditions in the vehicle crossing scenario (see Table 8). Under dynamic conditions (Table 7), both feedback modalities showed their longest decision times at full confidence: haptic feedback (high confidence: 2.88 s < low confidence: 3.27 s < full confidence: 3.53 s) and visual feedback (low confidence: 4.32 s < high confidence: 4.59 s < full confidence: 4.79 s). Moreover, at each confidence level, haptic feedback yielded shorter decision times than visual feedback, possibly because dynamic vibration cues intuitively signal impending hazards [93]. Under static conditions, haptic feedback (low confidence: 2.99 s < high confidence: 3.10 s < full confidence: 3.26 s) remained faster than visual feedback (low confidence: 2.89 s < full confidence: 3.36 s < high confidence: 3.48 s) at high and full confidence, but not at low confidence. Thus, at or above high confidence, haptic feedback consistently enabled shorter decision times than visual feedback in both states. The differing effect of confidence in Studies 1 and 2 may reflect the distinct traffic environments: fixed light signals provide clearer crossing windows [5], whereas vehicle-based judgments demand more cautious strategies [6], leading confident users to take additional time before proceeding.
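The crossover described above, where haptic feedback is faster at every level in the dynamic state but only at high and full confidence in the static state, can be verified from the reported means. The helper names below are illustrative and the values are those quoted in the text.

```python
# Study 2 mean decision times (s) by state, modality, and confidence level.
STUDY2_TIMES = {
    "dynamic": {
        "haptic": {"full": 3.53, "high": 2.88, "low": 3.27},
        "visual": {"full": 4.79, "high": 4.59, "low": 4.32},
    },
    "static": {
        "haptic": {"full": 3.26, "high": 3.10, "low": 2.99},
        "visual": {"full": 3.36, "high": 3.48, "low": 2.89},
    },
}


def haptic_faster_levels(state: str) -> list:
    """Confidence levels at which haptic feedback was faster than visual."""
    t = STUDY2_TIMES[state]
    return sorted(lvl for lvl in t["haptic"] if t["haptic"][lvl] < t["visual"][lvl])


def slowest_level(state: str, modality: str) -> str:
    """Confidence level with the longest mean decision time."""
    times = STUDY2_TIMES[state][modality]
    return max(times, key=times.get)


for state in STUDY2_TIMES:
    print(state, haptic_faster_levels(state))
# dynamic ['full', 'high', 'low']
# static ['full', 'high']
```

Calling `slowest_level("dynamic", m)` returns `"full"` for both modalities, matching the observation that fully confident users took the longest under dynamic conditions.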

Table 8.

Study 2: decision time (s) and confidence levels across different feedback parameters.

5.3. Implications for Haptic and Visual Feedback Design

Our study highlights the distinct advantages of haptic and visual feedback in different street-crossing contexts: visual feedback enhances decision efficiency at traffic lights, while haptic feedback proves especially useful in vehicle-based scenarios. Based on these findings, we offer the following design recommendations:

- In signal-controlled crossings, visual feedback significantly improved decision-making efficiency, conveying a sense of urgency that enabled users to act quickly even at lower confidence levels. Designers targeting individuals with residual light perception should leverage visual cues to foster timely and effective decisions.

- In vehicle-based crossings, haptic feedback markedly enhanced decision efficiency, particularly for users with higher confidence, who responded more rapidly than with visual feedback. Assistive systems requiring quick, confident decisions should prioritize haptic feedback to facilitate swift user reactions.

- Visual feedback induced more cautious behavior, likely owing to its stronger sense of urgency, particularly with high-frequency cues. This property may be beneficial in scenarios that demand heightened risk awareness, such as crossing busy multi-lane roads.

- Across both scenarios, a notable user preference emerged for haptic feedback, with participants citing comfort and intuitive information delivery. Given the respective strengths of both modalities, assistive systems should allow users to select their preferred method based on personal needs and specific crossing circumstances.
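The last recommendation implies a selection policy: a scenario-dependent default that yields to an explicit user choice. The sketch below is one hypothetical way to express it; the function and parameter names are assumptions, the defaults follow the scenario findings summarized above, and restricting visual cues to users with residual light perception follows the first recommendation.

```python
from typing import Optional

# Hypothetical defaults following the findings above: visual cues at
# signal-controlled crossings, haptic cues at vehicle-based crossings.
DEFAULT_MODALITY = {"signal": "visual", "vehicle": "haptic"}


def select_modality(scenario: str,
                    has_light_perception: bool = True,
                    user_preference: Optional[str] = None) -> str:
    """Pick a feedback modality, letting an explicit user choice win when usable."""
    if scenario not in DEFAULT_MODALITY:
        raise ValueError(f"unknown scenario: {scenario!r}")
    # Visual cues are assumed usable only with residual light perception.
    usable = {"haptic", "visual"} if has_light_perception else {"haptic"}
    if user_preference in usable:
        return user_preference
    default = DEFAULT_MODALITY[scenario]
    return default if default in usable else "haptic"


print(select_modality("signal"))                              # visual
print(select_modality("signal", has_light_perception=False))  # haptic
print(select_modality("vehicle", user_preference="visual"))   # visual
```

Letting the user preference override the default reflects the recommendation that users select their preferred method. Falling back to haptic whenever visual cues are unusable is a design choice of this sketch, not a tested configuration.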

6. Conclusions

This study investigated the effectiveness of haptic and visual feedback in assisting visually impaired individuals with safe street-crossing decisions. Through two controlled experiments, we examined how different feedback modalities influenced decision-making efficiency, user confidence, and perceived urgency across distinct crossing scenarios: traffic signal-controlled intersections and vehicle-based crossings. Key findings indicate that, in signal-controlled crossings, visual feedback significantly reduced decision time, enabling quicker and more confident decisions, though some users experienced perceptual fatigue. Haptic feedback, while slightly slower, was preferred for its intuitive and comfortable nature. In vehicle-based crossings, haptic feedback was more effective, particularly in dynamic conditions, enhancing situational awareness and enabling faster decisions.

These findings underscore the importance of tailoring assistive feedback to specific street-crossing environments. Future research should explore hybrid feedback systems that integrate both modalities to optimize safety and user experience. We acknowledge the limitations of our study, particularly the use of simulated environments rather than real-world settings, and the lack of participants with actual visual impairments. Future studies should expand to real-world urban environments with larger, more diverse participant groups, particularly individuals with varying degrees of visual impairment, including those with residual light perception. Field testing should occur across multiple intersection types under various traffic and environmental conditions, followed by longitudinal studies to assess adaptation and long-term benefits. This ecological approach would substantially strengthen the system’s validation and generalizability. By leveraging multimodal feedback systems, we could significantly improve the mobility independence and safety of visually impaired pedestrians across various urban settings.

Author Contributions

Conceptualization, G.R., J.H.L. and G.W.; methodology, G.R. and T.H.; software, G.R.; validation, T.H. and J.H.L.; formal analysis, Z.H.; investigation, Z.H. and W.L.; resources, G.W.; data curation, Z.H.; writing—original draft preparation, G.R. and Z.H.; writing—review and editing, G.R., J.H.L. and G.W.; visualization, W.L.; supervision, G.W.; project administration, G.R. and G.W.; funding acquisition, G.W. and J.H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by (1) the Korea Institute of Police Technology (KIPoT; Police Lab 2.0 program) grant funded by MSIT (RS-2023-00281194), (2) a research grant (2024-0035) funded by HAII Corporation, (3) the Fujian Province Social Science Foundation Project (No. FJ2025MGCA042), and (4) the 2024 Fujian Provincial Lifelong Education Quality Improvement Project (No. ZS24005).

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Institutional Review Board of the School of Design Arts, Xiamen University of Technology (Approval Number: XMUT-SDA-IRB-2024-10/043, approval date: 15 October 2024).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

All data are contained within the manuscript. Raw data are available from the corresponding author upon request.

Acknowledgments

We appreciate all participants who took part in the studies.

Conflicts of Interest

Author Jee Hang Lee was employed by the company Institute for Advanced Intelligence Study. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| 3D | Three-Dimensional |
| IoT | Internet of Things |
| V2X | Vehicle-to-Everything |
| MQTT | Message Queuing Telemetry Transport |
| GEEs | Generalized Estimating Equations |

References

- World Health Organization. World Report on Vision; Technical Report; World Health Organization: Geneva, Switzerland, 2019.

- Weiland, J.; Humayun, M. Intraocular retinal prosthesis. IEEE Eng. Med. Biol. Mag. 2006, 25, 60–66.

- Morley, J.W.; Chowdhury, V.; Coroneo, M.T. Visual Cortex and Extraocular Retinal Stimulation with Surface Electrode Arrays. In Visual Prosthesis and Ophthalmic Devices; Humana Press: Totowa, NJ, USA, 2007; pp. 159–171.

- Tian, S.; Zheng, M.; Zou, W.; Li, X.; Zhang, L. Dynamic Crosswalk Scene Understanding for the Visually Impaired. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 1478–1486.

- Huang, C.Y.; Wu, C.K.; Liu, P.Y. Assistive technology in smart cities: A case of street crossing for the visually-impaired. Technol. Soc. 2022, 68, 101805.

- Hassan, S.E. Are normally sighted, visually impaired, and blind pedestrians accurate and reliable at making street crossing decisions? Investig. Ophthalmol. Vis. Sci. 2012, 53, 2593–2600.

- Geruschat, D.R.; Hassan, S.E. Driver behavior in yielding to sighted and blind pedestrians at roundabouts. J. Vis. Impair. Blind. 2005, 99, 286–302.

- Ihejimba, C.; Wenkstern, R.Z. DetectSignal: A Cloud-Based Traffic Signal Notification System for the Blind and Visually Impaired. In Proceedings of the 2020 IEEE International Smart Cities Conference (ISC2), Piscataway, NJ, USA, 28 September–1 October 2020; pp. 1–6, ISBN 9781728182940.

- Ahmetovic, D.; Gleason, C.; Ruan, C.; Kitani, K.; Takagi, H.; Asakawa, C. NavCog: A navigational cognitive assistant for the blind. In Proceedings of the 18th International Conference on Human-Computer Interaction with Mobile Devices and Services, Florence, Italy, 6–9 September 2016; pp. 90–99, ISBN 9781450344081.

- Khan, A.; Khusro, S. An insight into smartphone-based assistive solutions for visually impaired and blind people: Issues, challenges and opportunities. Univers. Access Inf. Soc. 2021, 20, 265–298.

- Budrionis, A.; Plikynas, D.; Daniušis, P.; Indrulionis, A. Smartphone-based computer vision travelling aids for blind and visually impaired individuals: A systematic review. Assist. Technol. 2022, 34, 178–194.

- See, A.R.; Sasing, B.G.; Advincula, W.D. A smartphone-based mobility assistant using depth imaging for visually impaired and blind. Appl. Sci. 2022, 12, 2802.

- Dhod, R.; Singh, G.; Singh, G.; Kaur, M. Low cost GPS and GSM based navigational aid for visually impaired people. Wirel. Pers. Commun. 2017, 92, 1575–1589.

- Bhatnagar, A.; Ghosh, A.; Florence, S.M. Android integrated voice based walking stick for blind with obstacle recognition. In Proceedings of the 2022 Fourth International Conference on Emerging Research in Electronics, Computer Science and Technology (ICERECT), Mandya, India, 26–27 December 2022; pp. 1–4, ISBN 9781665456357.

- Mai, C.; Chen, H.; Zeng, L.; Li, Z.; Liu, G.; Qiao, Z.; Qu, Y.; Li, L.; Li, L. A smart cane based on 2D LiDAR and RGB-D camera sensor-realizing navigation and obstacle recognition. Sensors 2024, 24, 870.

- Guerreiro, J.; Sato, D.; Asakawa, S.; Dong, H.; Kitani, K.M.; Asakawa, C. CaBot: Designing and evaluating an autonomous navigation robot for blind people. In Proceedings of the 21st International ACM SIGACCESS Conference on Computers and Accessibility, Pittsburgh, PA, USA, 28–30 October 2019; pp. 68–82, ISBN 9781450366762.

- Wang, L.; Chen, Q.; Zhang, Y.; Li, Z.; Yan, T.; Wang, F.; Zhou, G.; Gong, J. Can Quadruped Guide Robots be Used as Guide Dogs? In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 4094–4100, ISBN 9781665491907.