A Unified Denoising Framework for Restoring the LiDAR Point Cloud Geometry of Reflective Targets

Abstract

1. Introduction

- (1) Unlike existing denoising algorithms, which typically address only one type of noise, our method tackles the veiling effect, range anomalies, and the blooming effect within a unified framework, offering a comprehensive solution to the challenges encountered in complex scenarios.

- (2)

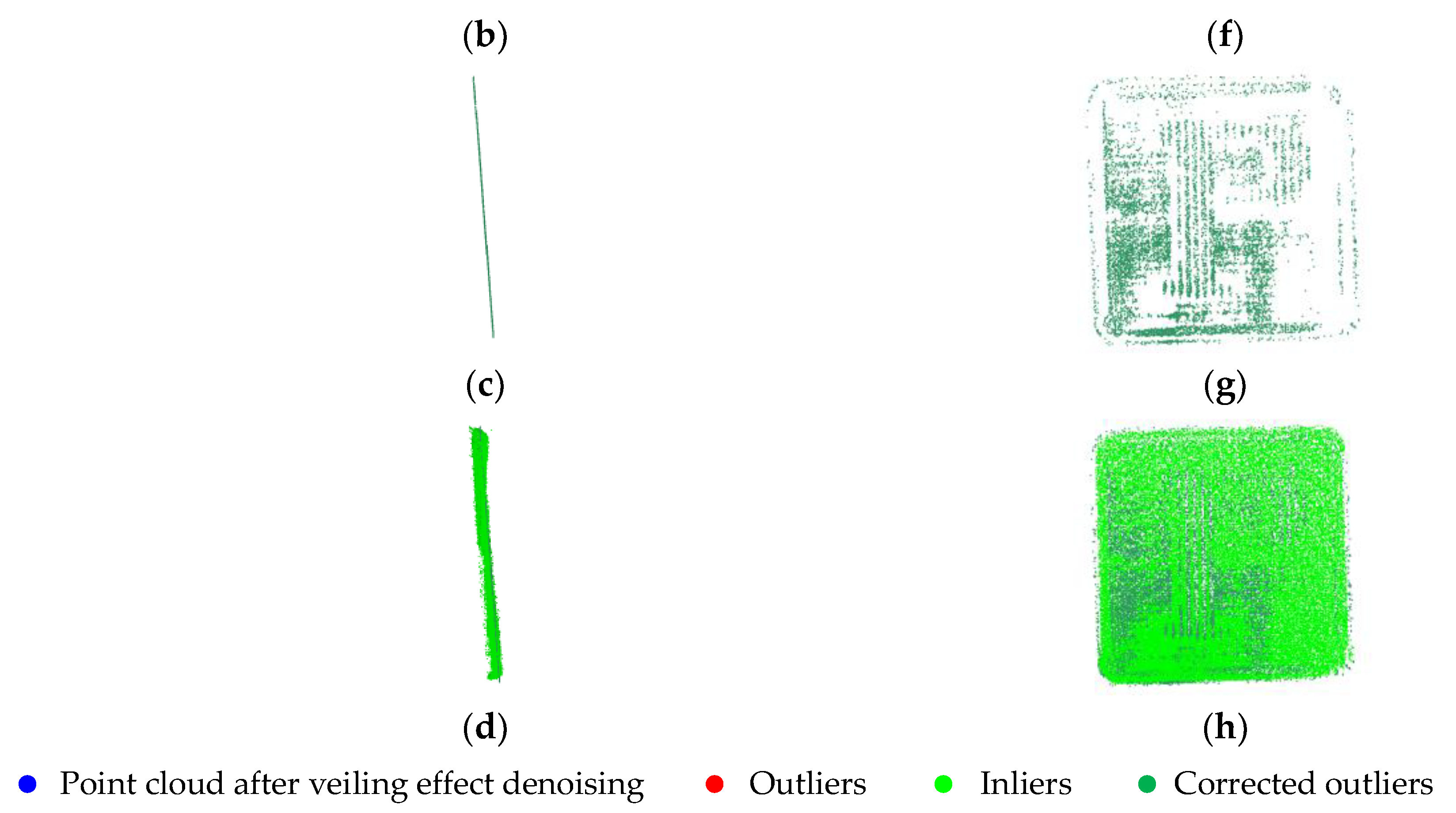

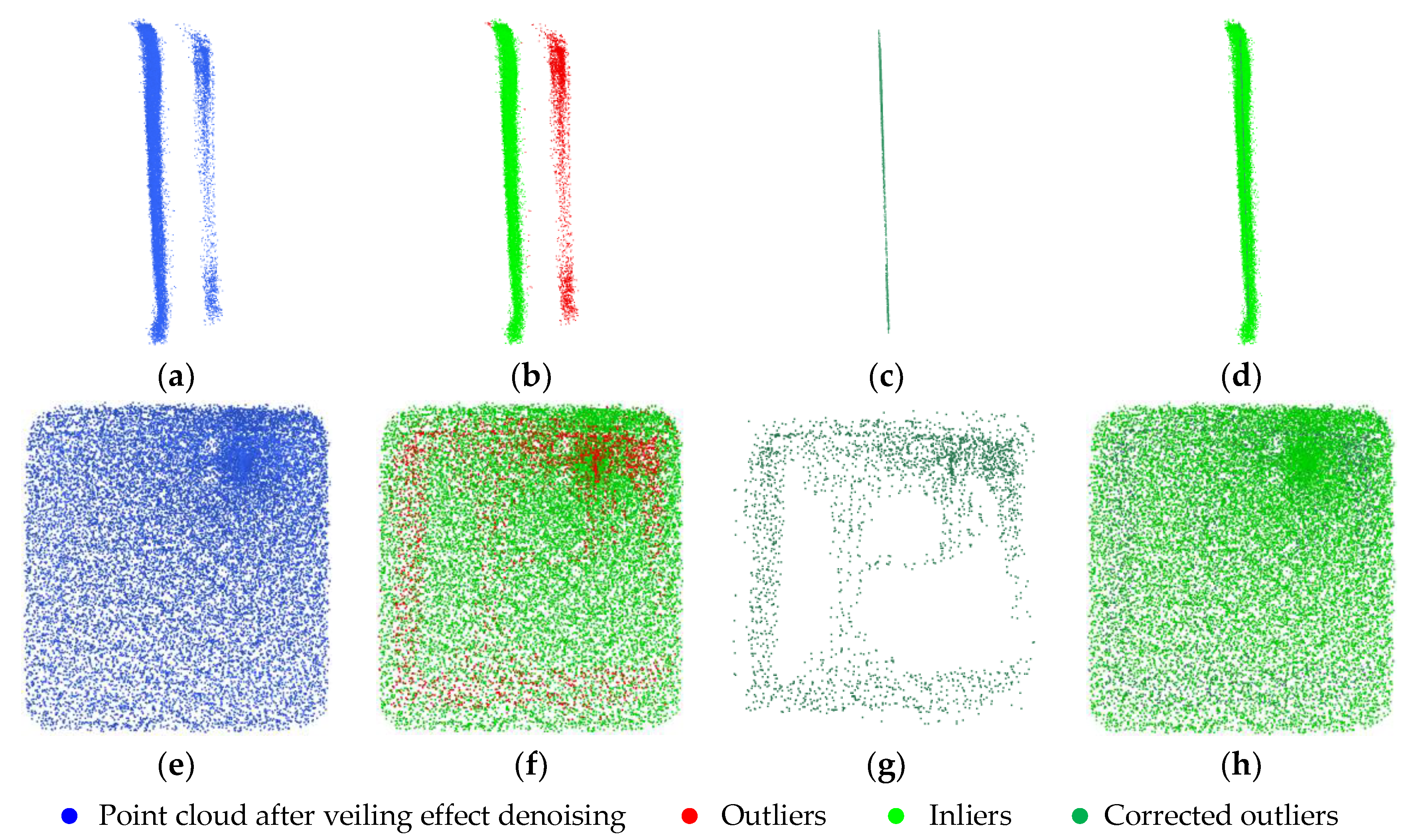

- By integrating the MSAC-based plane fitting algorithm with a ray-projection approach, the range-anomalous points are corrected back to the fitted plane. Compared with denoising algorithms based on spatial statistical strategies, which tend to treat these points as noise and remove them, our method recovers these points to the target’s surface, resulting in a denser and more complete point cloud representation.

- (3) To the best of our knowledge, our method is the first to utilize the spatial energy distribution of Gaussian laser beams to eliminate noise points caused by the blooming effect. An adaptive error ellipse is used to refine the boundary of the blooming point cloud. The parameters of the error ellipse are adaptively determined based on the divergence angle of the laser beam, the target distance, and the normal vector along the boundary. With simple adjustments to the algorithm’s parameters, it can be applied to various LiDAR models with different divergence angles.

2. Materials and Methods

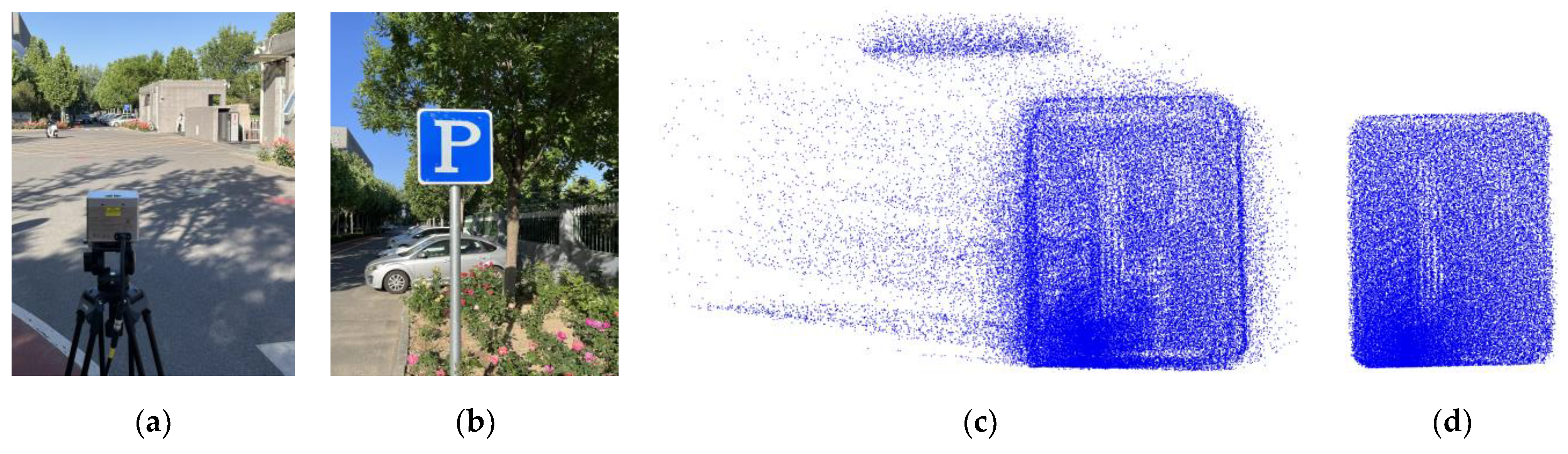

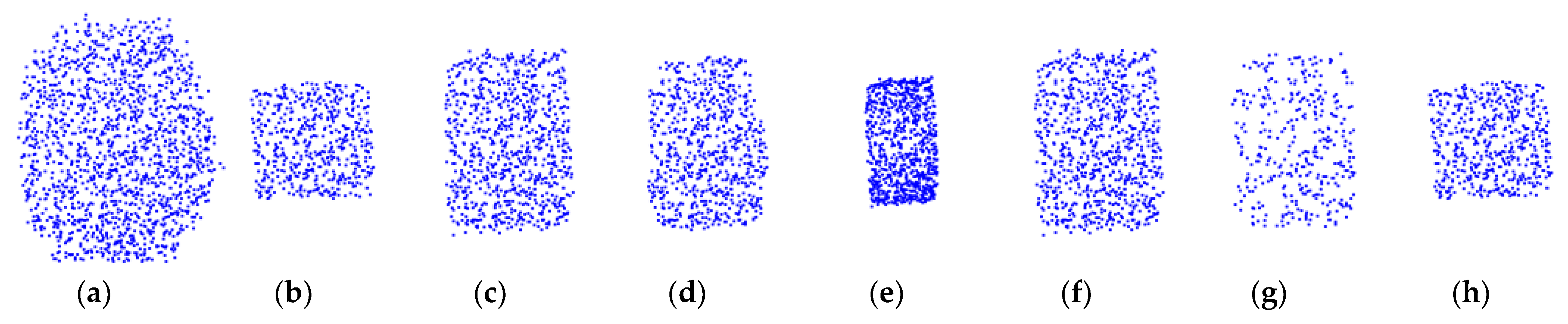

2.1. Dataset

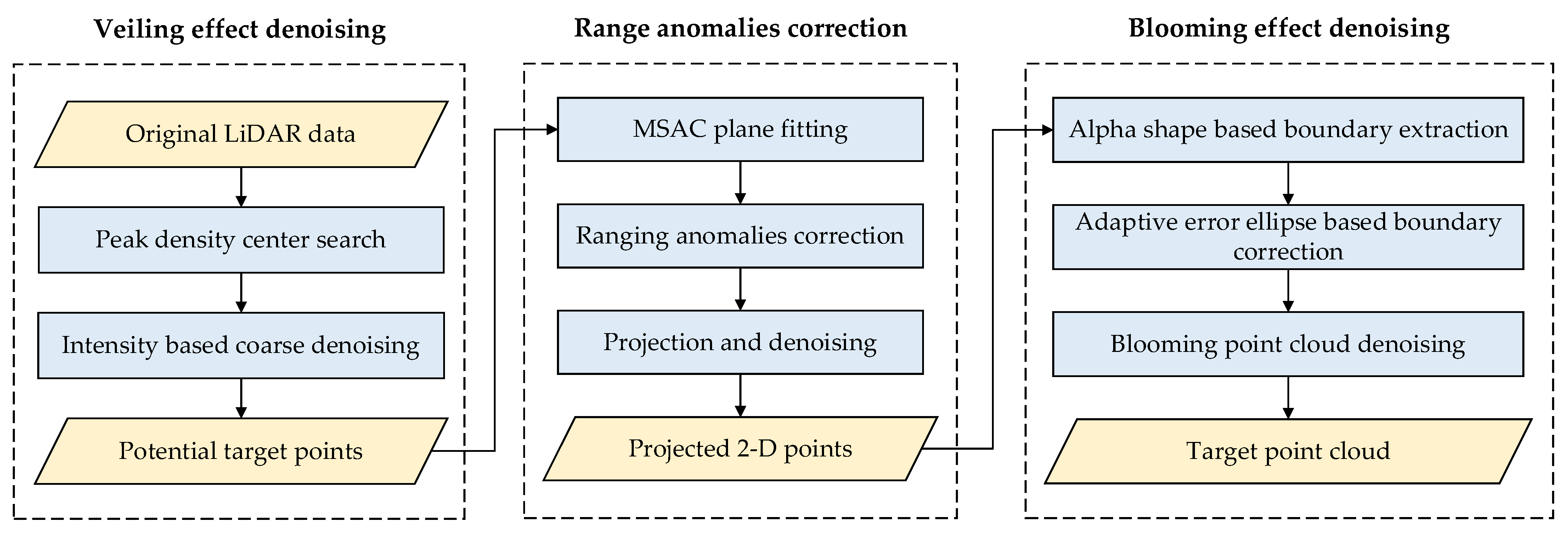

2.2. Methods

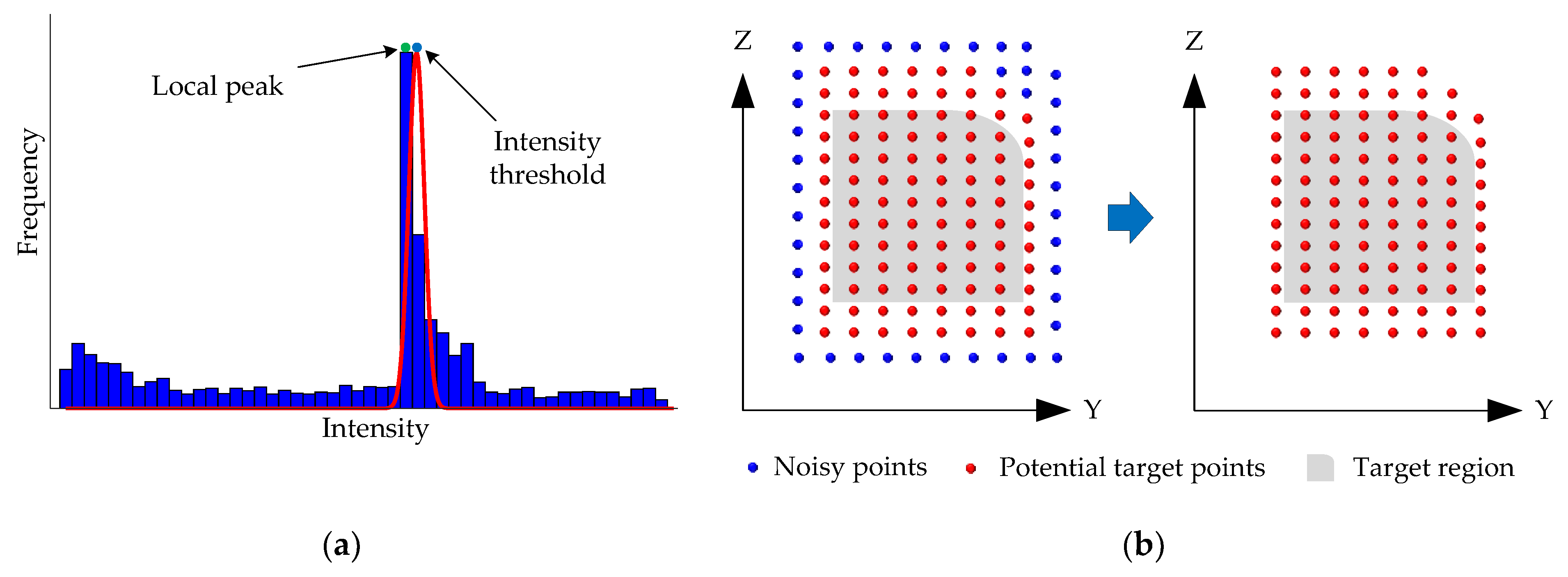

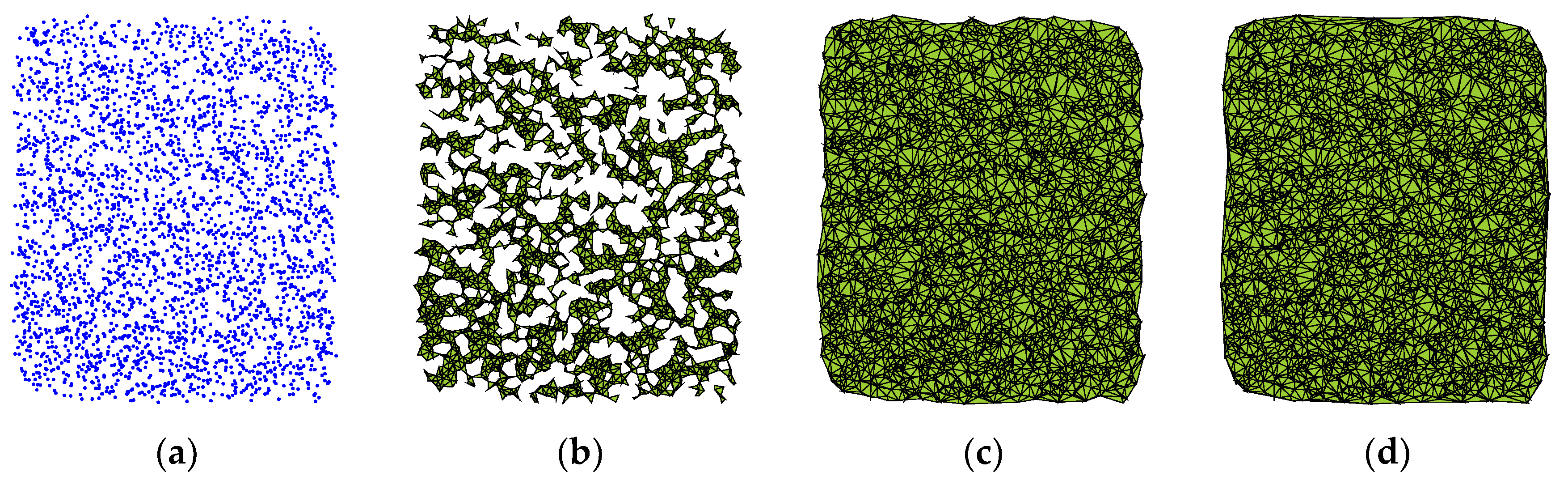

2.2.1. Veiling Effect Denoising

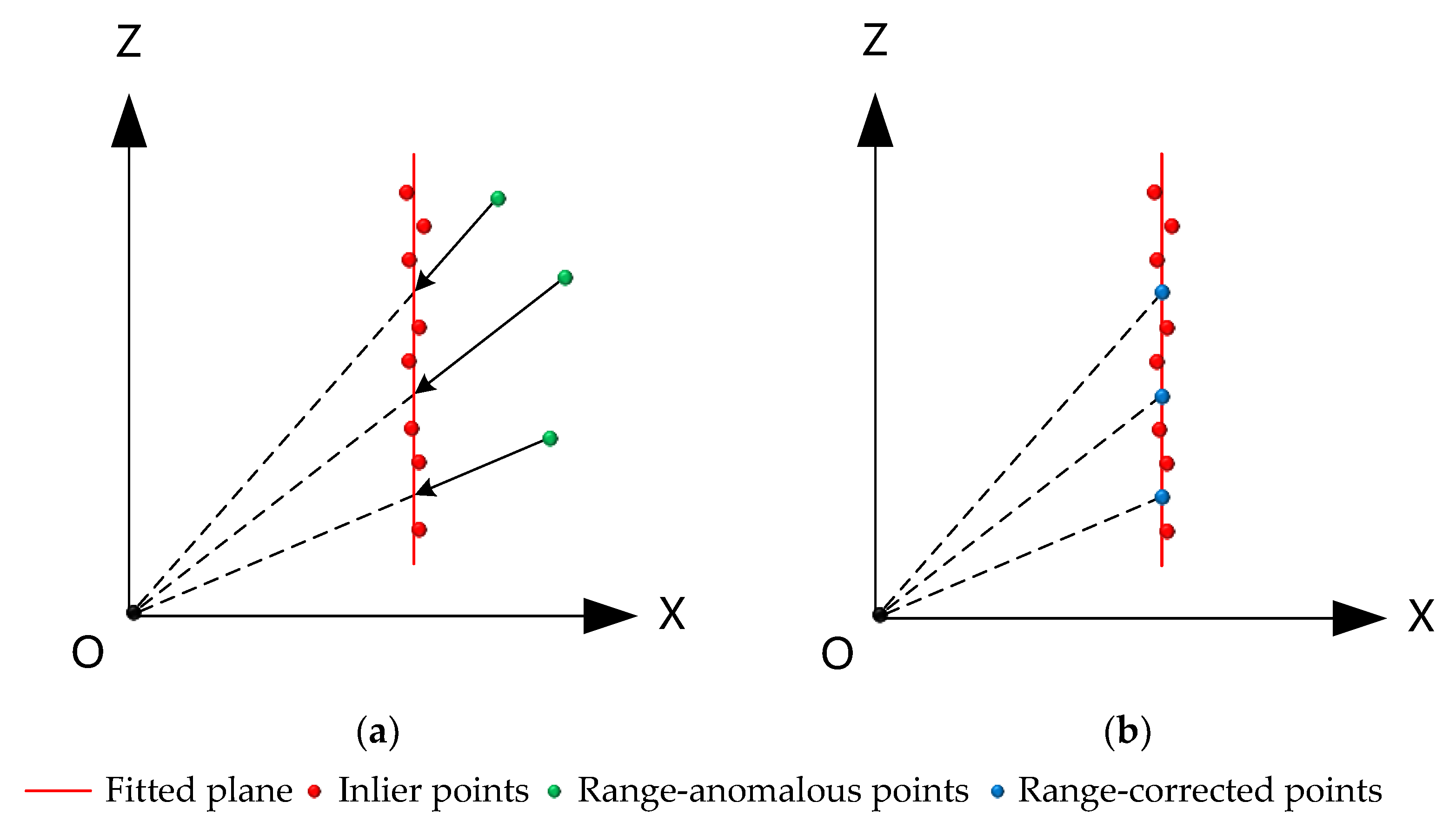

2.2.2. Range Anomalies Correction

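| Algorithm 1: The range anomalies correction algorithm |
|---|
| Input: Potential target points and the maximum distance from an inlier point to the plane |
| Output: Projected 2D points |
| (1) Fit a plane to the potential target points using the MSAC algorithm to obtain the plane equation $a_0x + b_0y + c_0z + d_0 = 0$ |
| (2) Classify points as inliers or outliers according to the maximum distance |
| (3) if the outlier set is not empty then |
| (4) for each outlier point do |
| (5) Define the origin of the LiDAR coordinate system as O (0, 0, 0) |
| (6) Construct the ray from O through the outlier point |
| (7) Intersect the ray with the plane $a_0x + b_0y + c_0z + d_0 = 0$ |
| (8) Take the intersection as the corrected point |
| (9) end for |
| (10) end if |
| (11) Combine the inliers with the corrected points |
| (12) Project the points onto the YOZ plane and remove isolated noise points |
| (13) return the projected 2D points |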
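The plane-fitting and ray-projection steps of Algorithm 1 can be sketched as follows. This is a minimal illustration in Python with NumPy, not the authors' implementation: the function names, the iteration count, and the synthetic data are assumptions made for the example. MSAC is written in its textbook form (RANSAC hypotheses scored by a truncated squared-distance cost), and the removal of isolated noise points in step (12) is omitted.

```python
import numpy as np

def fit_plane_msac(points, max_dist, n_iter=500, seed=0):
    """Fit a plane n.p + d = 0 with MSAC (truncated squared-distance cost)."""
    rng = np.random.default_rng(seed)
    best_cost, best_plane = np.inf, None
    for _ in range(n_iter):
        p0, p1, p2 = points[rng.choice(len(points), 3, replace=False)]
        n = np.cross(p1 - p0, p2 - p0)
        norm = np.linalg.norm(n)
        if norm < 1e-12:                      # skip degenerate (collinear) samples
            continue
        n = n / norm
        d = -n @ p0
        dist = np.abs(points @ n + d)
        # MSAC cost: squared residual for inliers, constant penalty for outliers
        cost = np.minimum(dist**2, max_dist**2).sum()
        if cost < best_cost:
            best_cost, best_plane = cost, (n, d)
    n, d = best_plane
    inliers = np.abs(points @ n + d) <= max_dist
    return n, d, inliers

def project_along_rays(points, n, d, eps=1e-9):
    """Intersect the ray from the sensor origin O through each point with the plane."""
    denom = points @ n                        # a point t*P on the ray satisfies t*(n.P) + d = 0
    ok = np.abs(denom) > eps                  # rays parallel to the plane are left unchanged
    out = points.copy()
    out[ok] = points[ok] * (-d / denom[ok])[:, None]
    return out

def correct_range_anomalies(points, max_dist):
    n, d, inliers = fit_plane_msac(points, max_dist)
    corrected = points.copy()
    if (~inliers).any():
        corrected[~inliers] = project_along_rays(points[~inliers], n, d)
    return corrected, (n, d), inliers

# Synthetic target: a plane at x = 10 m facing the sensor (illustrative data only)
rng = np.random.default_rng(1)
yz = rng.uniform(-0.5, 0.5, size=(240, 2))
on_plane = np.column_stack([np.full(240, 10.0), yz])
on_plane[:200, 0] += rng.normal(0.0, 0.001, 200)   # small noise on valid returns
points = on_plane.copy()
points[200:] *= 1.1                                 # 40 returns with a 10 % range error along their rays

corrected, (n, d), inliers = correct_range_anomalies(points, max_dist=0.02)
projected_2d = corrected[:, 1:]                     # drop X: projection onto the YOZ plane
```

Because each anomalous point is moved along its own line of sight, it keeps its angular position and only its range changes, which is why the corrected points fill in the target surface instead of being discarded.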

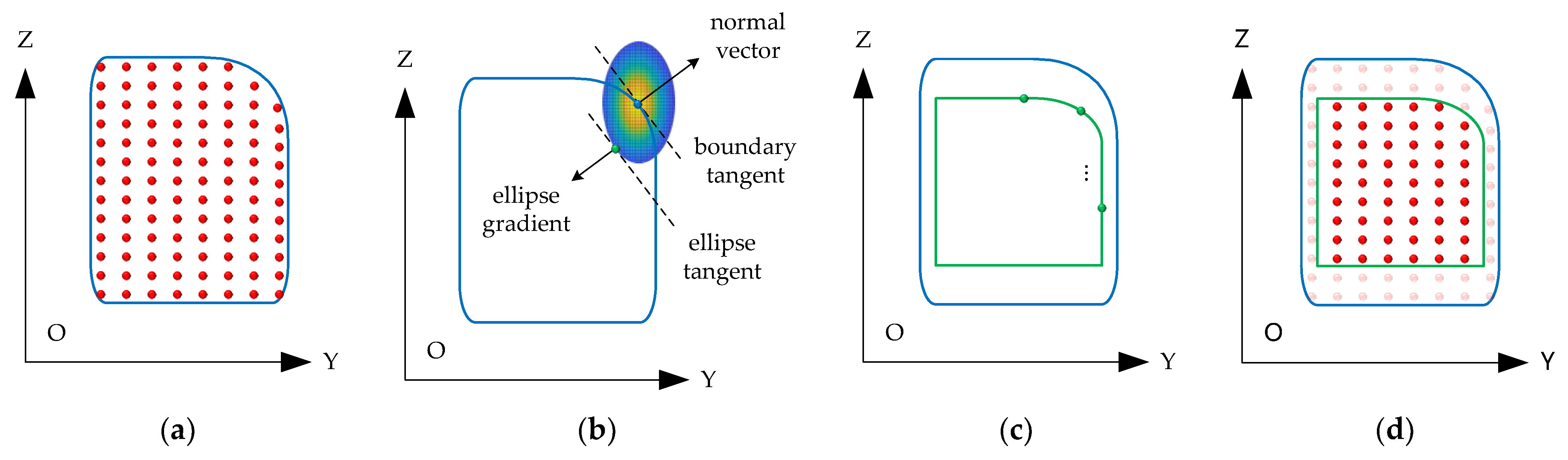

2.2.3. Blooming Effect Denoising

- Boundary extraction: Use the alpha shape algorithm to extract the boundary of the point cloud, obtaining boundary segments and boundary points.

- Error ellipse construction: Construct an error ellipse centered at each boundary point. The lengths of the semi-major and semi-minor axes of the ellipse (Equation (3)) are determined by the LiDAR beam divergence angles (vertical and horizontal), the target distance, and the blooming factors (vertical and horizontal). The blooming factors can be estimated through a simple experiment: collect point cloud data of a target with known dimensions at a specific distance and compare the size of the point cloud with the actual size of the target. In the equation, the two subscripts denote the vertical and horizontal directions, respectively.

- Normal vector calculation: For each boundary point, compute the normal vectors of its adjacent left and right boundary segments. The normalized sum of these two vectors is used as the normal vector (Equation (4)) of the boundary point.

- Boundary point correction: Draw the tangent and the normal vector at each boundary point. Shift the tangent along the direction of the normal vector until it is tangent to the error ellipse. The point of tangency is regarded as the corrected boundary point. The gradient unit vector of the ellipse at this point is given by Equation (5), from which the absolute value of the slope of the line connecting this point to the center of the ellipse can be obtained (Equation (6)). The absolute value of the boundary point coordinate offset is given in Equation (7).

- Noise removal: Traverse all boundary points of the original point cloud to generate a new refined boundary. Points located between the original boundary and the corrected boundary are identified as noise and are removed.
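The tangency construction in the boundary point correction step can be made explicit with a short derivation. The notation is introduced here for illustration and may differ from the paper's Equations (5)–(7): the ellipse is written in local coordinates centered at the boundary point, its semi-axes $a$ and $b$ are assumed to be aligned with the coordinate axes, and $\mathbf{n} = (n_x, n_y)$ is the unit normal at the boundary point.

```latex
\begin{aligned}
&\text{Ellipse: } F(x, y) = \frac{x^2}{a^2} + \frac{y^2}{b^2} - 1 = 0,
\qquad \nabla F = \left(\frac{2x}{a^2},\ \frac{2y}{b^2}\right). \\[4pt]
&\text{A line with normal } \mathbf{n} \text{ touches the ellipse where } \nabla F \parallel \mathbf{n}:
\qquad x_t = \lambda a^2 n_x, \quad y_t = \lambda b^2 n_y. \\[4pt]
&\text{Substituting into } F = 0:
\qquad \lambda^2 \left(a^2 n_x^2 + b^2 n_y^2\right) = 1
\;\Rightarrow\; \lambda = \pm \frac{1}{\sqrt{a^2 n_x^2 + b^2 n_y^2}}. \\[4pt]
&\text{Slope of the line from the center to the tangency point } (n_x \neq 0):
\qquad k = \left|\frac{y_t}{x_t}\right| = \frac{b^2 \lvert n_y \rvert}{a^2 \lvert n_x \rvert}. \\[4pt]
&\text{Coordinate offsets:}
\qquad \lvert \Delta x \rvert = \frac{a^2 \lvert n_x \rvert}{\sqrt{a^2 n_x^2 + b^2 n_y^2}},
\qquad \lvert \Delta y \rvert = \frac{b^2 \lvert n_y \rvert}{\sqrt{a^2 n_x^2 + b^2 n_y^2}} = k \,\lvert \Delta x \rvert.
\end{aligned}
```

The sign of $\lambda$ selects which of the two parallel tangent lines is used; for boundary refinement it is the one on the inward side of the point cloud, so the corrected point is the boundary point shifted by $(\lvert\Delta x\rvert, \lvert\Delta y\rvert)$ against the outward normal.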

| Algorithm 2: The boundary correction algorithm based on adaptive error ellipse |
|---|
| Input: Projected 2D points and alpha radius |
| Output: Target points |
| (1) Extract the boundary of the point cloud using alpha shape with the alpha radius |
| (2) Obtain the facets (each facet represents an edge segment in a 2D plane) and the corresponding boundary points (each facet contains two boundary points) |
| (3) Initialize the normal vector for each boundary point with zero values |
| (4) for each facet do |
| (5) Compute the normal vector for the facet using its two endpoints |
| (6) Normalize the facet normal to make it a unit vector |
| (7) Accumulate the facet normal to the normals of its two endpoints |
| (8) end for |
| (9) Initialize the set of corrected boundary points |
| (10) for each boundary point do |
| (11) Compute the unit normal vector for the point |
| (12) Compute the distance L from the point to the origin of the LiDAR coordinate system |
| (13) Define the adaptive error ellipse centered at the point; calculate the semi-major axis a and semi-minor axis b of the ellipse |
| (14) Calculate the absolute value of the slope k between the original boundary point and the corrected boundary point |
| (15) Calculate the corrected boundary point coordinate based on the adaptive ellipse parameters |
| (16) end for |
| (17) Connect all corrected boundary points to form the new boundary |
| (18) for each projected 2D point do |
| (19) if the point is located between the original and the corrected boundaries, then |
| (20) Mark the point as an inflated point and remove it |
| (21) else if the point is located inside the corrected boundary, then |
| (22) Mark the point as a target point and retain it |
| (23) end if |
| (24) return the target points |
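A compact sketch of steps (3) to (22) of Algorithm 2 is given below, in Python with NumPy. Several things are assumptions rather than the paper's method: the boundary is taken as an already ordered, counter-clockwise closed polygon (the alpha shape extraction is skipped), the function names are hypothetical, and `ellipse_axes` uses an assumed stand-in for Equation (3), namely the beam footprint radius `dist * tan(theta / 2)` scaled by a blooming factor. The divergence angles, blooming factors, and distance in the demo are arbitrary.

```python
import numpy as np

def boundary_normals(boundary):
    """Outward unit normals at the vertices of a counter-clockwise closed polygon."""
    edge = np.roll(boundary, -1, axis=0) - boundary           # facet i joins vertex i and i+1
    facet_n = np.stack([edge[:, 1], -edge[:, 0]], axis=1)     # edge rotated by -90 degrees
    facet_n /= np.linalg.norm(facet_n, axis=1, keepdims=True)
    vert_n = facet_n + np.roll(facet_n, 1, axis=0)            # sum of the two adjacent facet normals
    return vert_n / np.linalg.norm(vert_n, axis=1, keepdims=True)

def ellipse_axes(dist, theta_h, theta_v, k_h, k_v):
    """ASSUMED form of the semi-axes, not the paper's Equation (3)."""
    a = k_h * dist * np.tan(theta_h / 2.0)    # horizontal semi-axis
    b = k_v * dist * np.tan(theta_v / 2.0)    # vertical semi-axis
    return a, b

def correct_boundary(boundary, a, b):
    """Shift each boundary point inward to the tangency point of its error ellipse."""
    n = boundary_normals(boundary)
    s = np.sqrt((a * n[:, 0])**2 + (b * n[:, 1])**2)
    offset = np.stack([a**2 * n[:, 0], b**2 * n[:, 1]], axis=1) / s[:, None]
    return boundary - offset

def inside_polygon(points, poly):
    """Even-odd ray casting test of each point against a closed polygon."""
    x, y = points[:, :1], points[:, 1:2]
    x0, y0 = poly[:, 0], poly[:, 1]
    x1, y1 = np.roll(x0, -1), np.roll(y0, -1)
    crosses = (y0 > y) != (y1 > y)
    with np.errstate(divide="ignore", invalid="ignore"):
        hit = x < x0 + (y - y0) * (x1 - x0) / (y1 - y0)
    return (np.sum(crosses & hit, axis=1) % 2) == 1

# Demo: an inflated square outline of half-width 0.6 m, sampled counter-clockwise
side = np.linspace(-0.6, 0.6, 12, endpoint=False)
boundary = np.concatenate([
    np.stack([side, np.full(12, -0.6)], axis=1),     # bottom edge, left to right
    np.stack([np.full(12, 0.6), side], axis=1),      # right edge, bottom to top
    np.stack([-side, np.full(12, 0.6)], axis=1),     # top edge, right to left
    np.stack([np.full(12, -0.6), -side], axis=1),    # left edge, top to bottom
])
a, b = ellipse_axes(dist=20.0, theta_h=3e-3, theta_v=1.5e-3, k_h=5.0, k_v=5.0)
corrected = correct_boundary(boundary, a, b)

pts = np.array([[0.0, 0.03], [0.55, 0.03], [0.7, 0.03]])
in_original = inside_polygon(pts, boundary)
in_corrected = inside_polygon(pts, corrected)
is_target = in_corrected                      # retained as target points
is_inflated = in_original & ~in_corrected     # between the two boundaries: removed
```

On the straight edges the corrected boundary moves inward by exactly `a` (left and right sides) or `b` (top and bottom). At sharp convex corners this simplified sketch can produce small loops in the corrected polygon, so a practical implementation would need to clean those up before the inside test.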

3. Experiments and Results

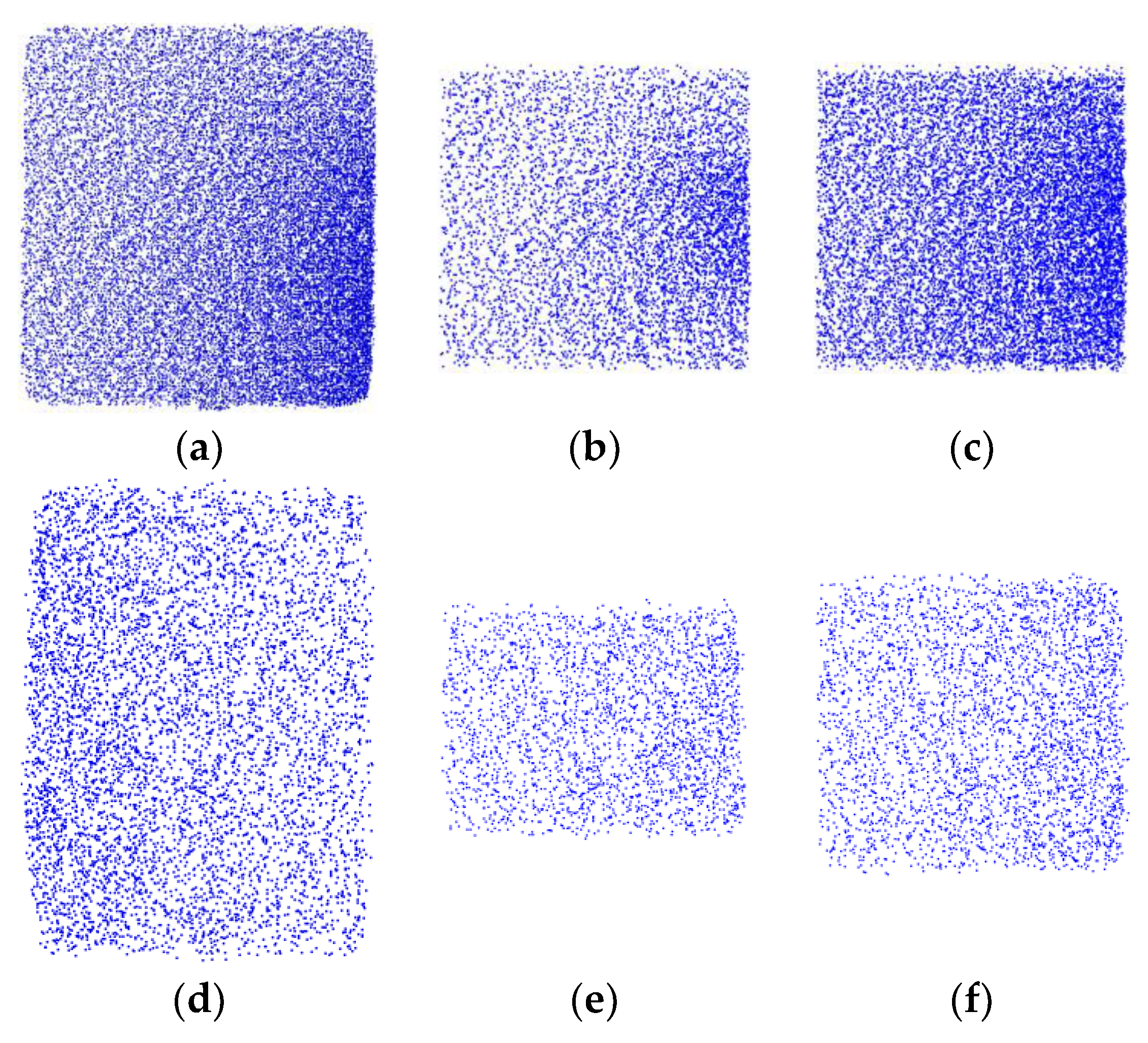

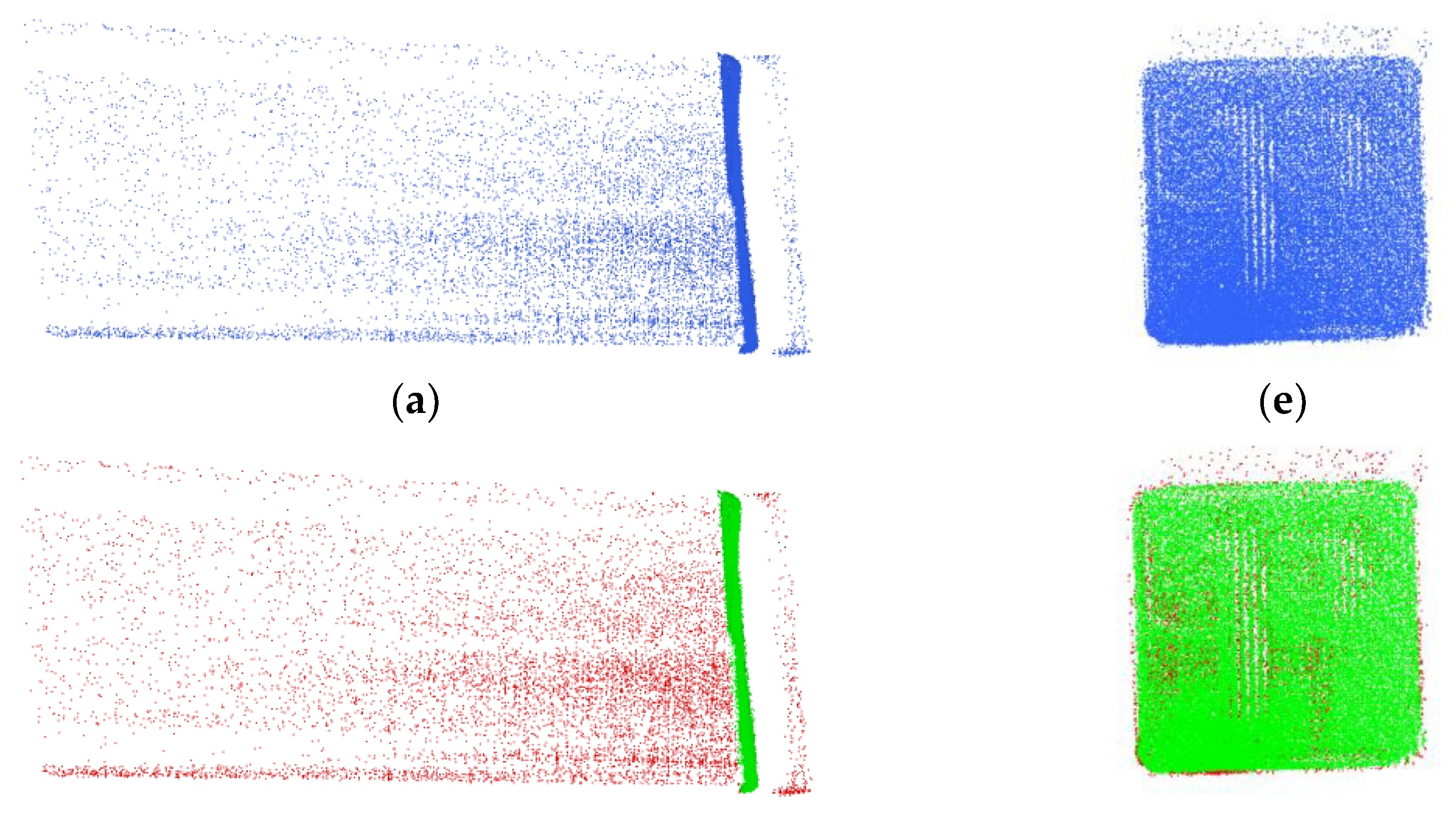

3.1. Experimental Setup

3.2. Evaluation Metrics

3.3. Experimental Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Li, Y.; Ibanez-Guzman, J. Lidar for Autonomous Driving: The Principles, Challenges, and Trends for Automotive Lidar and Perception Systems. IEEE Signal Process. Mag. 2020, 37, 50–61.

2. Feng, D.; Haase-Schütz, C.; Rosenbaum, L.; Hertlein, H.; Gläser, C.; Timm, F.; Wiesbeck, W.; Dietmayer, K. Deep Multi-Modal Object Detection and Semantic Segmentation for Autonomous Driving: Datasets, Methods, and Challenges. IEEE Trans. Intell. Transp. Syst. 2021, 22, 1341–1360.

3. Li, Y.; Ma, L.; Zhong, Z.; Liu, F.; Chapman, M.A.; Cao, D.; Li, J. Deep Learning for LiDAR Point Clouds in Autonomous Driving: A Review. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 3412–3432.

4. Jin, X.; Yang, H.; He, X.; Liu, G.; Yan, Z.; Wang, Q. Robust LiDAR-Based Vehicle Detection for On-Road Autonomous Driving. Remote Sens. 2023, 15, 3160.

5. Xue, F.; Lu, W.; Chen, Z.; Webster, C.J. From LiDAR point cloud towards digital twin city: Clustering city objects based on Gestalt principles. ISPRS J. Photogramm. Remote Sens. 2020, 167, 418–431.

6. Kulawiak, M. A Cost-Effective Method for Reconstructing City-Building 3D Models from Sparse Lidar Point Clouds. Remote Sens. 2022, 14, 1278.

7. Franzini, M.; Casella, V.M.; Monti, B. Assessment of Leica CityMapper-2 LiDAR Data within Milan’s Digital Twin Project. Remote Sens. 2023, 15, 5263.

8. Borowiec, N.; Marmol, U. Using LiDAR System as a Data Source for Agricultural Land Boundaries. Remote Sens. 2022, 14, 1048.

9. Debnath, S.; Paul, M.; Debnath, T. Applications of LiDAR in Agriculture and Future Research Directions. J. Imaging 2023, 9, 57.

10. Karim, M.R.; Reza, M.N.; Jin, H.; Haque, M.A.; Lee, K.-H.; Sung, J.; Chung, S.-O. Application of LiDAR Sensors for Crop and Working Environment Recognition in Agriculture: A Review. Remote Sens. 2024, 16, 4623.

11. Dassot, M.; Constant, T.; Fournier, M. The use of terrestrial LiDAR technology in forest science: Application fields, benefits and challenges. Ann. For. Sci. 2011, 68, 959–974.

12. Li, W.; Guo, Q.; Jakubowski, M.K.; Kelly, M. A new method for segmenting individual trees from the lidar point cloud. Photogramm. Eng. Remote Sens. 2012, 78, 75–84.

13. Fassnacht, F.E.; White, J.C.; Wulder, M.A.; Næsset, E. Remote sensing in forestry: Current challenges, considerations and directions. For. Int. J. For. Res. 2023, 97, 11–37.

14. Yang, D.; Liu, Y.; Chen, Q.; Chen, M.; Zhan, S.; Cheung, N.-k.; Chan, H.-Y.; Wang, Z.; Li, W.J. Development of the high angular resolution 360° LiDAR based on scanning MEMS mirror. Sci. Rep. 2023, 13, 1540.

15. Cheong, S.; Ha, J. LiDAR Blooming Artifacts Estimation Method Induced by Retro-Reflectance with Synthetic Data Modeling and Deep Learning. In Proceedings of the 2024 IEEE International Conference on Consumer Electronics-Asia (ICCE-Asia), Danang, Vietnam, 3–6 November 2024; pp. 1–4.

16. Uttarkabat, S.; Appukuttan, S.; Gupta, K.; Nayak, S.; Palo, P. BloomNet: Perception of Blooming Effect in ADAS using Synthetic LiDAR Point Cloud Data. In Proceedings of the 2024 IEEE Intelligent Vehicles Symposium (IV), Jeju Island, Republic of Korea, 2–5 June 2024; pp. 1886–1892.

17. Han, X.-F.; Jin, J.S.; Wang, M.-J.; Jiang, W.; Gao, L.; Xiao, L. A review of algorithms for filtering the 3D point cloud. Signal Process. Image Commun. 2017, 57, 103–112.

18. Zhou, L.; Sun, G.; Li, Y.; Li, W.; Su, Z. Point cloud denoising review: From classical to deep learning-based approaches. Graph. Models 2022, 121, 101140.

19. Rusu, R.B.; Cousins, S. 3D is here: Point Cloud Library (PCL). In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 1–4.

20. Miknis, M.; Davies, R.; Plassmann, P.; Ware, A. Near real-time point cloud processing using the PCL. In Proceedings of the 2015 International Conference on Systems, Signals and Image Processing (IWSSIP), London, UK, 10–12 September 2015; pp. 153–156.

21. Miknis, M.; Davies, R.; Plassmann, P.; Ware, A. Efficient point cloud pre-processing using the point cloud library. Int. J. Image Process. 2016, 10, 63–72.

22. Nurunnabi, A.; West, G.; Belton, D. Outlier detection and robust normal-curvature estimation in mobile laser scanning 3D point cloud data. Pattern Recognit. 2015, 48, 1404–1419.

23. Carrilho, A.C.; Galo, M.; Santos, R.C. Statistical Outlier Detection Method For Airborne Lidar Data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 42, 87–92.

24. Fleishman, S.; Drori, I.; Cohen-Or, D. Bilateral mesh denoising. In Proceedings of the ACM SIGGRAPH 2003 Papers, San Diego, CA, USA, 27–31 July 2003; pp. 950–953.

25. Digne, J.; de Franchis, C. The Bilateral Filter for Point Clouds. Image Process. Line 2017, 7, 278–287.

26. Guoqiang, W.; Hongxia, Z.; Zhiwei, G.; Wei, S.; Dagong, J. Bilateral filter denoising of Lidar point cloud data in automatic driving scene. Infrared Phys. Technol. 2023, 131, 104724.

27. Duan, Y.; Yang, C.; Li, H. Low-complexity adaptive radius outlier removal filter based on PCA for lidar point cloud denoising. Appl. Opt. 2021, 60, E1–E7.

28. Szutor, P.; Zichar, M. Fast Radius Outlier Filter Variant for Large Point Clouds. Data 2023, 8, 149.

29. Luo, Y.; Shi, S.; Zhang, K. Multibeam Point Cloud Denoising Method Based on Modified Radius Filter. In Proceedings of the 4th International Conference on Geology, Mapping and Remote Sensing (ICGMRS 2023), Wuhan, China, 23 January 2024; pp. 98–103.

30. Fleishman, S.; Cohen-Or, D.; Silva, C.T. Robust moving least-squares fitting with sharp features. ACM Trans. Graph. 2005, 24, 544–552.

31. Preiner, R.; Mattausch, O.; Arikan, M.; Pajarola, R.; Wimmer, M. Continuous projection for fast L1 reconstruction. ACM Trans. Graph. 2014, 33, 1–13.

32. Xu, Z.; Foi, A. Anisotropic Denoising of 3D Point Clouds by Aggregation of Multiple Surface-Adaptive Estimates. IEEE Trans. Vis. Comput. Graph. 2021, 27, 2851–2868.

33. Yu, L.; Li, X.; Fu, C.-W.; Cohen-Or, D.; Heng, P.-A. Ec-net: An edge-aware point set consolidation network. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 386–402.

34. Rakotosaona, M.-J.; La Barbera, V.; Guerrero, P.; Mitra, N.J.; Ovsjanikov, M. PointCleanNet: Learning to Denoise and Remove Outliers from Dense Point Clouds. Comput. Graph. Forum 2020, 39, 185–203.

35. Pistilli, F.; Fracastoro, G.; Valsesia, D.; Magli, E. Learning Robust Graph-Convolutional Representations for Point Cloud Denoising. IEEE J. Sel. Top. Signal Process. 2021, 15, 402–414.

36. Chen, S.; Duan, C.; Yang, Y.; Li, D.; Feng, C.; Tian, D. Deep Unsupervised Learning of 3D Point Clouds via Graph Topology Inference and Filtering. IEEE Trans. Image Process. 2020, 29, 3183–3198.

37. Luo, S.; Hu, W. Differentiable Manifold Reconstruction for Point Cloud Denoising. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 1330–1338.

38. Luo, S.; Hu, W. Score-Based Point Cloud Denoising. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 4563–4572.

39. Sezan, M.I. A peak detection algorithm and its application to histogram-based image data reduction. Comput. Vis. Graph. Image Process. 1990, 49, 36–51.

40. Guo, H. A Simple Algorithm for Fitting a Gaussian Function [DSP Tips and Tricks]. IEEE Signal Process. Mag. 2011, 28, 134–137.

41. Schnabel, R.; Wahl, R.; Klein, R. Efficient RANSAC for Point-Cloud Shape Detection. Comput. Graph. Forum 2007, 26, 214–226.

42. Torr, P.H.S.; Zisserman, A. MLESAC: A New Robust Estimator with Application to Estimating Image Geometry. Comput. Vis. Image Underst. 2000, 78, 138–156.

43. Edelsbrunner, H.; Kirkpatrick, D.; Seidel, R. On the shape of a set of points in the plane. IEEE Trans. Inf. Theory 1983, 29, 551–559.

44. Congalton, R.G. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1991, 37, 35–46.

45. Zeng, J.; Cheung, G.; Ng, M.; Pang, J.; Yang, C. 3D Point Cloud Denoising Using Graph Laplacian Regularization of a Low Dimensional Manifold Model. IEEE Trans. Image Process. 2020, 29, 3474–3489.

| Ground-Truth | Denoised as Target Points | Denoised as Noise Points |
|---|---|---|
| Target points | TP | FN |
| Noise points | FP | TN |
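The four cells of this confusion matrix are enough to compute point-level error rates and a Kappa coefficient. The sketch below uses definitions that are common in point cloud filtering evaluation and are assumed here rather than taken from the paper: Type I error is the share of target points rejected as noise, Type II error is the share of noise points kept as target, total error is the share of all misclassified points, and Kappa is Cohen's coefficient. The function name and the example counts are illustrative.

```python
def denoising_metrics(tp, fn, fp, tn):
    """Error rates and Cohen's Kappa from confusion-matrix counts (assumed definitions)."""
    total = tp + fn + fp + tn
    type_1 = fn / (tp + fn)            # target points wrongly removed
    type_2 = fp / (fp + tn)            # noise points wrongly retained
    total_error = (fn + fp) / total
    p_observed = (tp + tn) / total
    # Agreement expected by chance, from the row and column totals
    p_expected = ((tp + fn) * (tp + fp) + (fp + tn) * (fn + tn)) / total**2
    kappa = (p_observed - p_expected) / (1.0 - p_expected)
    return {"type_1": type_1, "type_2": type_2,
            "total_error": total_error, "kappa": kappa}

# Hypothetical counts: 100 true target points and 100 true noise points
metrics = denoising_metrics(tp=90, fn=10, fp=5, tn=95)
```

With these counts the chance agreement is 0.5, so a total error of 7.5 % corresponds to a Kappa of 0.85; multiplying each value by 100 gives percentages.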

| Sample | T.I (%) | T.II (%) | T.E (%) | Kappa (%) | MSE (cm²) | MCD (cm) | R.H (%) | R.W (%) |
|---|---|---|---|---|---|---|---|---|
| Sample 1 | 2.59 | 2.10 | 2.47 | 93.32 | 0.02 | 0.05 | 0.40 | 3.12 |
| Sample 2 | 0.06 | 5.74 | 2.22 | 95.24 | 0.03 | 0.06 | 1.25 | 2.78 |
| Sample 3 | 0.01 | 4.53 | 1.85 | 96.14 | 0.02 | 0.02 | 1.53 | 2.01 |
| Sample 4 | 0.01 | 3.73 | 1.64 | 96.66 | 0.02 | 0.02 | 1.40 | 1.57 |
| Sample 5 | 0.41 | 3.20 | 1.73 | 96.52 | 0.03 | 0.02 | 1.65 | 2.47 |
| Sample 6 | 0.42 | 3.33 | 1.87 | 96.26 | 0.06 | 0.03 | 2.29 | 0.16 |
| Sample 7 | 0.42 | 3.91 | 2.29 | 95.40 | 0.07 | 0.04 | 3.19 | 2.08 |
| Sample 8 | 0.43 | 0.42 | 0.42 | 99.15 | 0.02 | 0.01 | 0.12 | 0.52 |
| Sample 9 | 0.44 | 5.54 | 3.51 | 92.78 | 0.17 | 0.07 | 5.00 | 4.05 |
| Sample 10 | 0.01 | 1.27 | 0.79 | 98.33 | 0.06 | 0.02 | 0.69 | 1.25 |
| Sample 11 | 1.05 | 2.76 | 2.16 | 95.32 | 0.17 | 0.06 | 2.86 | 2.35 |
| Sample 12 | 2.76 | 0.85 | 1.50 | 96.65 | 0.11 | 0.05 | 1.42 | 0.85 |
| Sample 13 | 4.56 | 2.89 | 3.45 | 92.29 | 0.12 | 0.04 | 0.42 | 2.03 |
| Sample 14 | 6.94 | 1.60 | 3.40 | 92.32 | 1.18 | 0.23 | 4.60 | 1.51 |
| Avg. | 1.44 | 2.99 | 2.09 | 95.46 | 0.15 | 0.05 | 1.92 | 1.91 |
| Max. | 6.94 | 5.74 | 3.51 | 99.15 | 1.18 | 0.23 | 5.00 | 4.05 |
| Min. | 0.01 | 0.42 | 0.42 | 92.29 | 0.02 | 0.01 | 0.12 | 0.16 |
| Std. | 2.01 | 1.58 | 0.89 | 2.05 | 0.29 | 0.05 | 1.46 | 1.01 |

MSE (cm²) by method:

| Sample | Rusu [19] | Nurunnabi [22] | Digne [25] | Rakotosaona [34] | Duan [27] | Ours |
|---|---|---|---|---|---|---|
| Sample 1 | 238.28 | 2.00 | 223.48 | 238.34 | 1.52 | 0.02 |
| Sample 2 | 5.77 | 2.30 | 10.01 | 5.87 | 2.02 | 0.03 |
| Sample 3 | 0.73 | 0.37 | 9.77 | 0.73 | 0.73 | 0.02 |
| Sample 4 | 1.18 | 0.63 | 10.15 | 1.18 | 1.13 | 0.02 |
| Sample 5 | 1.74 | 1.03 | 9.72 | 1.74 | 1.56 | 0.03 |
| Sample 6 | 2.68 | 1.40 | 10.26 | 2.68 | 2.47 | 0.06 |
| Sample 7 | 3.62 | 2.32 | 9.86 | 3.62 | 3.30 | 0.07 |
| Sample 8 | 5.17 | 3.02 | 10.57 | 5.17 | 4.68 | 0.02 |
| Sample 9 | 6.81 | 4.44 | 9.49 | 6.81 | 5.65 | 0.17 |
| Sample 10 | 10.88 | 6.43 | 12.41 | 10.88 | 9.05 | 0.06 |
| Sample 11 | 12.45 | 6.35 | 11.33 | 12.45 | 8.58 | 0.17 |
| Sample 12 | 18.57 | 13.44 | 14.52 | 18.57 | 16.17 | 0.11 |
| Sample 13 | 16.67 | 8.74 | 13.52 | 16.67 | 12.08 | 0.12 |
| Sample 14 | 24.81 | 13.09 | 19.46 | 24.81 | 19.94 | 1.18 |
| Avg. | 24.95 | 4.68 | 26.75 | 24.97 | 6.35 | 0.15 |
| Max. | 238.28 | 13.44 | 223.48 | 238.34 | 19.94 | 1.18 |
| Min. | 0.73 | 0.37 | 9.49 | 0.73 | 0.73 | 0.02 |
| Std. | 59.58 | 4.23 | 54.63 | 59.60 | 5.84 | 0.29 |

MCD (cm) by method:

| Sample | Rusu [19] | Nurunnabi [22] | Digne [25] | Rakotosaona [34] | Duan [27] | Ours |
|---|---|---|---|---|---|---|
| Sample 1 | 3.16 | 0.16 | 5.21 | 3.24 | 0.13 | 0.05 |
| Sample 2 | 0.70 | 0.34 | 1.86 | 0.89 | 0.43 | 0.06 |
| Sample 3 | 0.21 | 0.14 | 1.77 | 0.21 | 0.40 | 0.02 |
| Sample 4 | 0.28 | 0.20 | 1.85 | 0.28 | 0.49 | 0.02 |
| Sample 5 | 0.36 | 0.27 | 1.80 | 0.37 | 0.54 | 0.02 |
| Sample 6 | 0.48 | 0.34 | 1.93 | 0.48 | 0.75 | 0.03 |
| Sample 7 | 0.59 | 0.47 | 1.88 | 0.59 | 0.87 | 0.04 |
| Sample 8 | 0.74 | 0.55 | 2.04 | 0.74 | 1.01 | 0.01 |
| Sample 9 | 0.89 | 0.72 | 1.81 | 0.89 | 1.09 | 0.07 |
| Sample 10 | 1.18 | 0.87 | 2.27 | 1.18 | 1.51 | 0.02 |
| Sample 11 | 1.26 | 0.85 | 2.21 | 1.26 | 1.37 | 0.06 |
| Sample 12 | 1.63 | 1.34 | 2.48 | 1.63 | 1.96 | 0.05 |
| Sample 13 | 1.49 | 1.02 | 2.46 | 1.49 | 1.67 | 0.04 |
| Sample 14 | 1.85 | 1.20 | 3.21 | 1.85 | 2.17 | 0.23 |
| Avg. | 1.06 | 0.61 | 2.34 | 1.08 | 1.03 | 0.05 |
| Max. | 3.16 | 1.34 | 5.21 | 3.24 | 2.17 | 0.23 |
| Min. | 0.21 | 0.14 | 1.77 | 0.21 | 0.13 | 0.01 |
| Std. | 0.77 | 0.38 | 0.88 | 0.78 | 0.61 | 0.05 |

R.H (%) by method:

| Sample | Original Data | Rusu [19] | Nurunnabi [22] | Digne [25] | Rakotosaona [34] | Duan [27] | Ours |
|---|---|---|---|---|---|---|---|
| Sample 1 | 42.27 | 36.48 | 4.86 | 9.39 | 35.80 | 3.56 | 0.40 |
| Sample 2 | 62.33 | 12.45 | 12.20 | 24.74 | 12.59 | 10.93 | 1.25 |
| Sample 3 | 57.17 | 19.38 | 13.27 | 20.83 | 19.38 | 14.95 | 1.53 |
| Sample 4 | 44.81 | 23.11 | 15.92 | 17.95 | 23.11 | 18.48 | 1.40 |
| Sample 5 | 52.69 | 26.96 | 21.18 | 15.24 | 26.96 | 23.52 | 1.65 |
| Sample 6 | 62.18 | 31.55 | 20.54 | 12.27 | 31.55 | 25.16 | 2.29 |
| Sample 7 | 67.77 | 36.34 | 26.09 | 7.81 | 36.34 | 29.85 | 3.19 |
| Sample 8 | 72.40 | 36.75 | 28.11 | 6.84 | 36.75 | 31.80 | 0.12 |
| Sample 9 | 80.68 | 45.61 | 34.79 | 1.76 | 45.61 | 41.01 | 5.00 |
| Sample 10 | 95.34 | 50.70 | 38.55 | 2.34 | 50.70 | 41.41 | 0.69 |
| Sample 11 | 104.93 | 55.87 | 37.16 | 5.06 | 55.87 | 43.54 | 2.86 |
| Sample 12 | 111.04 | 56.32 | 47.61 | 8.27 | 56.33 | 47.40 | 1.42 |
| Sample 13 | 121.50 | 61.85 | 41.53 | 9.33 | 61.83 | 48.91 | 0.42 |
| Sample 14 | 123.16 | 62.42 | 46.23 | 16.19 | 62.42 | 47.99 | 4.60 |
| Avg. | 78.45 | 39.70 | 27.72 | 11.29 | 39.66 | 30.61 | 1.92 |
| Max. | 123.16 | 62.42 | 47.61 | 24.74 | 62.42 | 48.91 | 5.00 |
| Min. | 42.27 | 12.45 | 4.86 | 1.76 | 12.59 | 3.56 | 0.12 |
| Std. | 26.91 | 15.56 | 13.08 | 6.63 | 15.55 | 14.40 | 1.46 |

R.W (%) by method:

| Sample | Original Data | Rusu [19] | Nurunnabi [22] | Digne [25] | Rakotosaona [34] | Duan [27] | Ours |
|---|---|---|---|---|---|---|---|
| Sample 1 | 50.03 | 6.37 | 2.66 | 34.27 | 6.24 | 2.06 | 3.12 |
| Sample 2 | 45.79 | 3.78 | 3.45 | 33.48 | 3.88 | 2.27 | 2.78 |
| Sample 3 | 40.87 | 4.06 | 1.35 | 34.41 | 4.06 | 1.99 | 2.01 |
| Sample 4 | 42.38 | 4.08 | 0.63 | 35.62 | 4.07 | 1.63 | 1.57 |
| Sample 5 | 42.78 | 5.28 | 0.06 | 35.93 | 5.29 | 2.42 | 2.47 |
| Sample 6 | 45.50 | 3.56 | 2.05 | 36.81 | 3.56 | 0.49 | 0.16 |
| Sample 7 | 50.65 | 5.84 | 0.91 | 36.51 | 5.83 | 1.06 | 2.08 |
| Sample 8 | 52.64 | 5.00 | 3.78 | 37.68 | 5.00 | 1.80 | 0.52 |
| Sample 9 | 54.91 | 8.73 | 0.55 | 37.44 | 8.72 | 3.06 | 4.05 |
| Sample 10 | 58.98 | 7.08 | 2.05 | 37.82 | 7.08 | 3.70 | 1.25 |
| Sample 11 | 64.87 | 8.60 | 0.15 | 38.52 | 8.60 | 6.07 | 2.35 |
| Sample 12 | 74.53 | 6.60 | 0.11 | 40.93 | 6.61 | 1.94 | 0.85 |
| Sample 13 | 74.67 | 10.39 | 1.60 | 39.87 | 10.39 | 6.61 | 2.03 |
| Sample 14 | 80.59 | 9.44 | 0.02 | 39.79 | 9.44 | 1.39 | 1.51 |
| Avg. | 55.66 | 6.34 | 1.38 | 37.08 | 6.34 | 2.61 | 1.91 |
| Max. | 80.59 | 10.39 | 3.78 | 40.93 | 10.39 | 6.61 | 4.05 |
| Min. | 40.87 | 3.56 | 0.02 | 33.48 | 3.56 | 0.49 | 0.16 |
| Std. | 12.71 | 2.16 | 1.22 | 2.15 | 2.15 | 1.70 | 1.01 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xie, T.; Zhu, J.; Wang, C.; Li, F.; Meng, Z. A Unified Denoising Framework for Restoring the LiDAR Point Cloud Geometry of Reflective Targets. Appl. Sci. 2025, 15, 3904. https://doi.org/10.3390/app15073904
