Predicting College Student Engagement in Physical Education Classes Using Machine Learning and Structural Equation Modeling

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants and Procedure

2.2. Measures

2.2.1. Digital Technology Usage

2.2.2. Student Classroom Engagement

2.2.3. Perceived Usefulness and Perceived Ease of Use

2.2.4. Academic Self-Efficacy

2.3. Interpretable Machine Learning Modeling

2.3.1. Model Development and Performance Evaluation

2.3.2. Interpretable Methods and Variable Importance Analysis

2.3.3. SHAP Value-Based Interpretability

2.4. Statistical Analysis

3. Results

3.1. Descriptive Statistics, Correlation Analysis, and Variable Selection

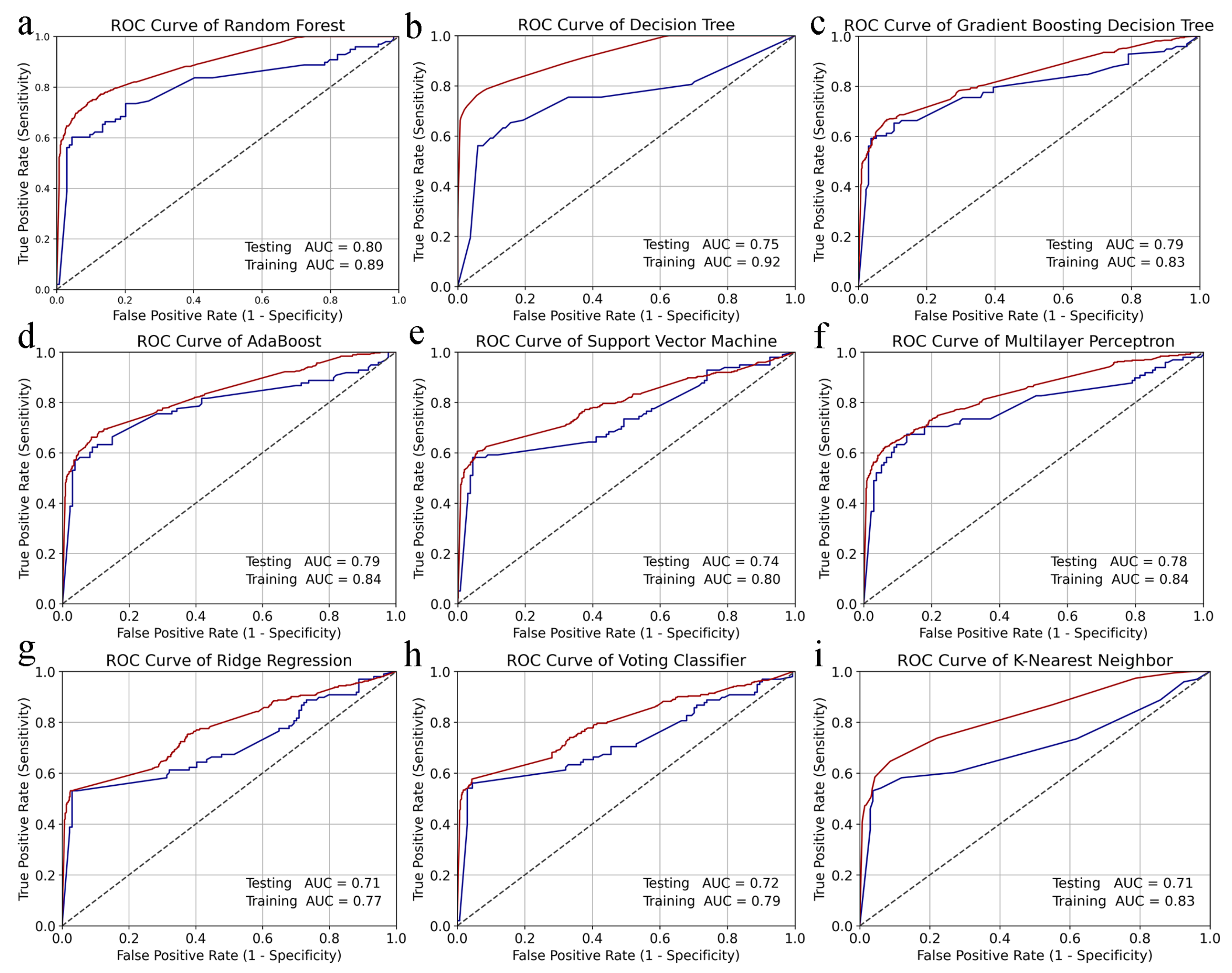

3.2. Model Performance Evaluation

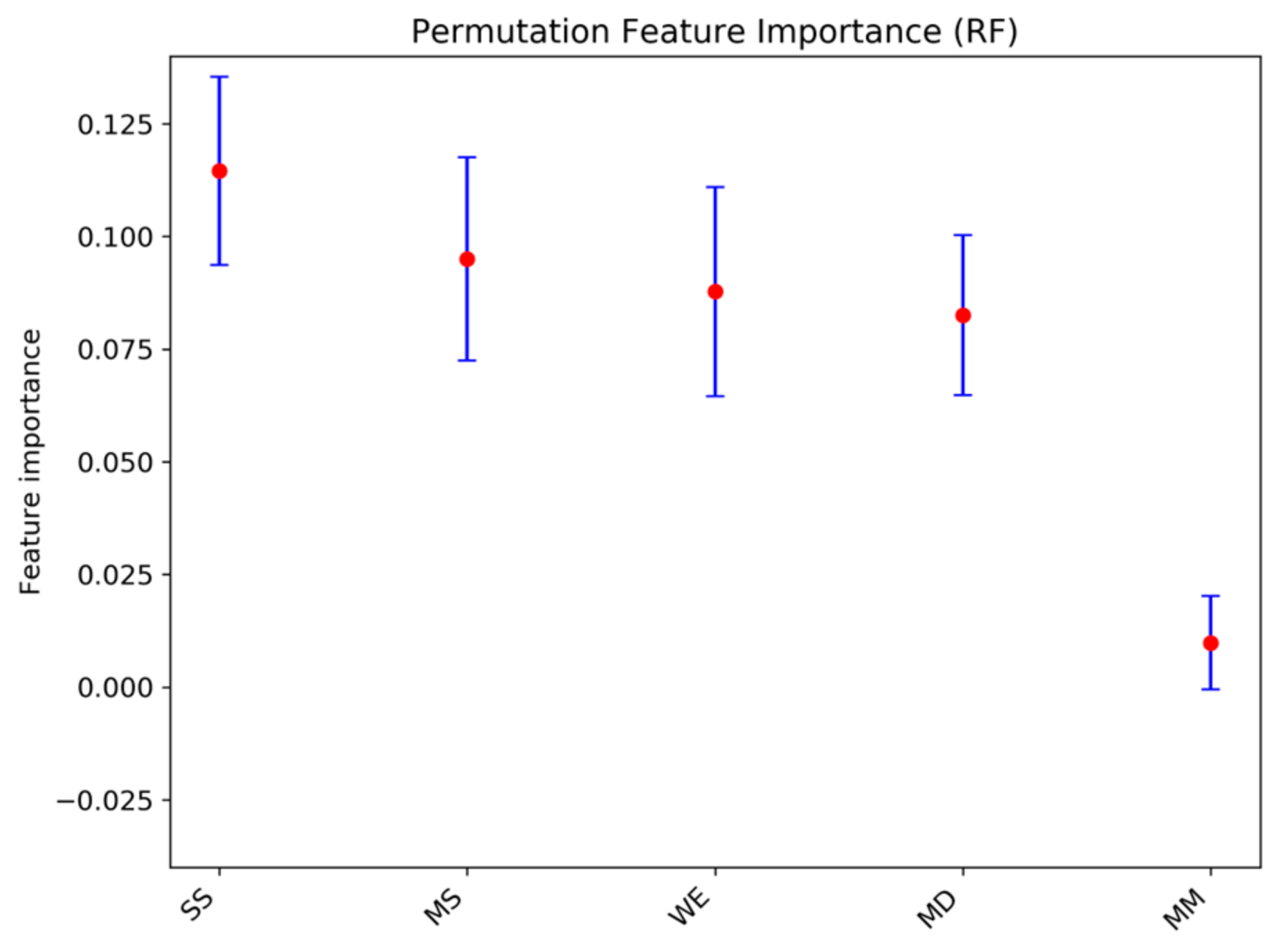

3.3. Interpretable Modeling Approach

3.3.1. Importance Ranking of Digital Technologies

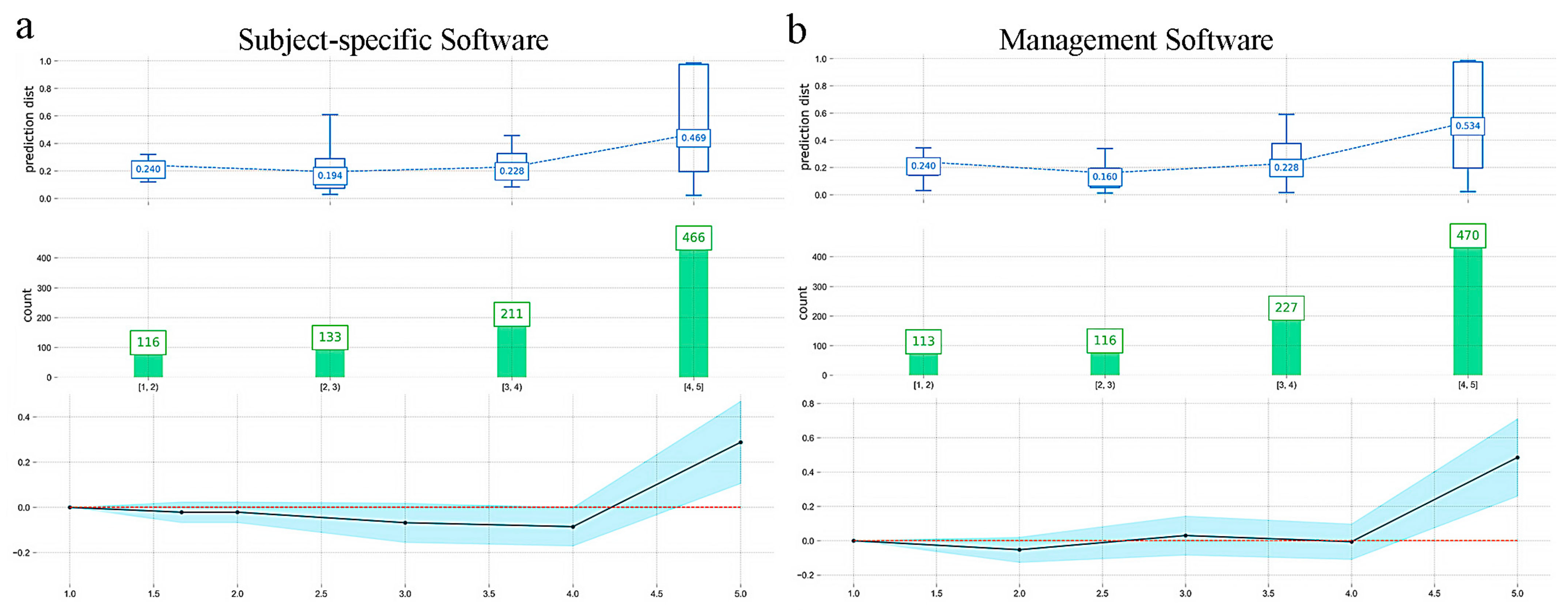

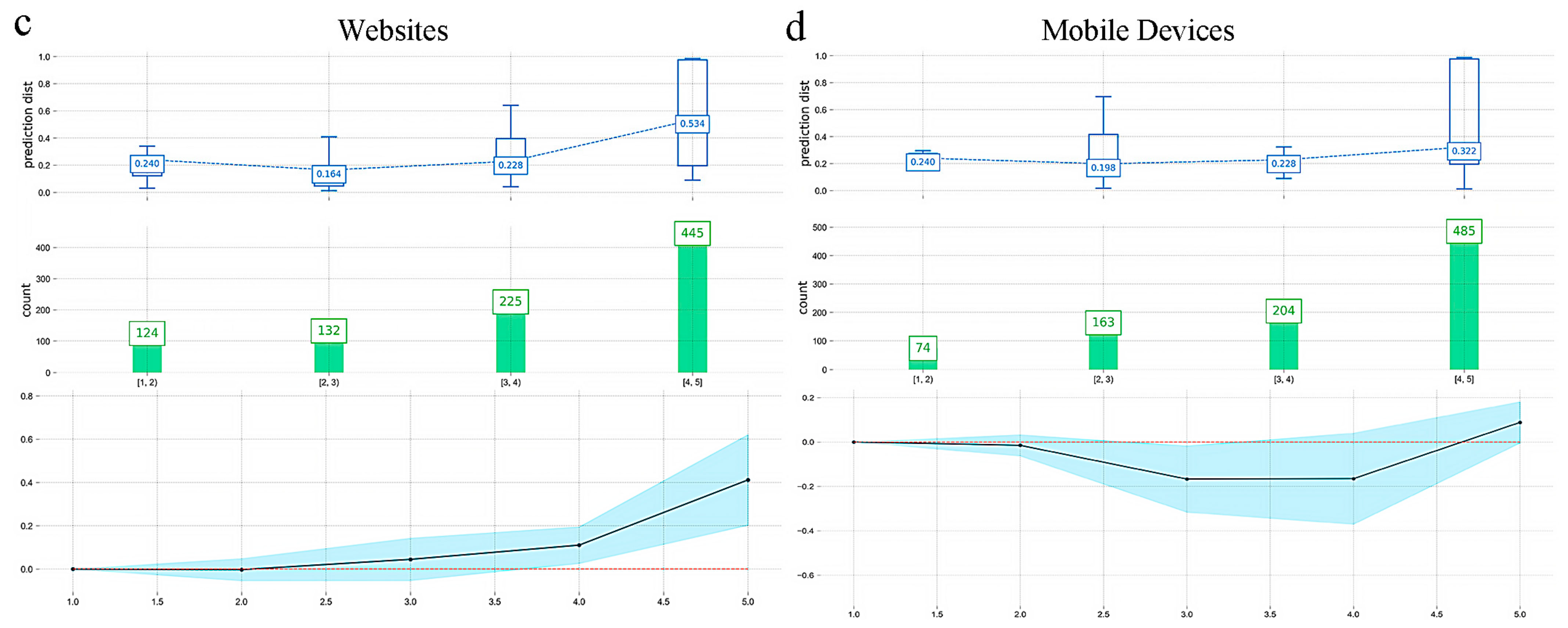

3.3.2. Relationships Between Key Digital Technologies and Classroom Engagement

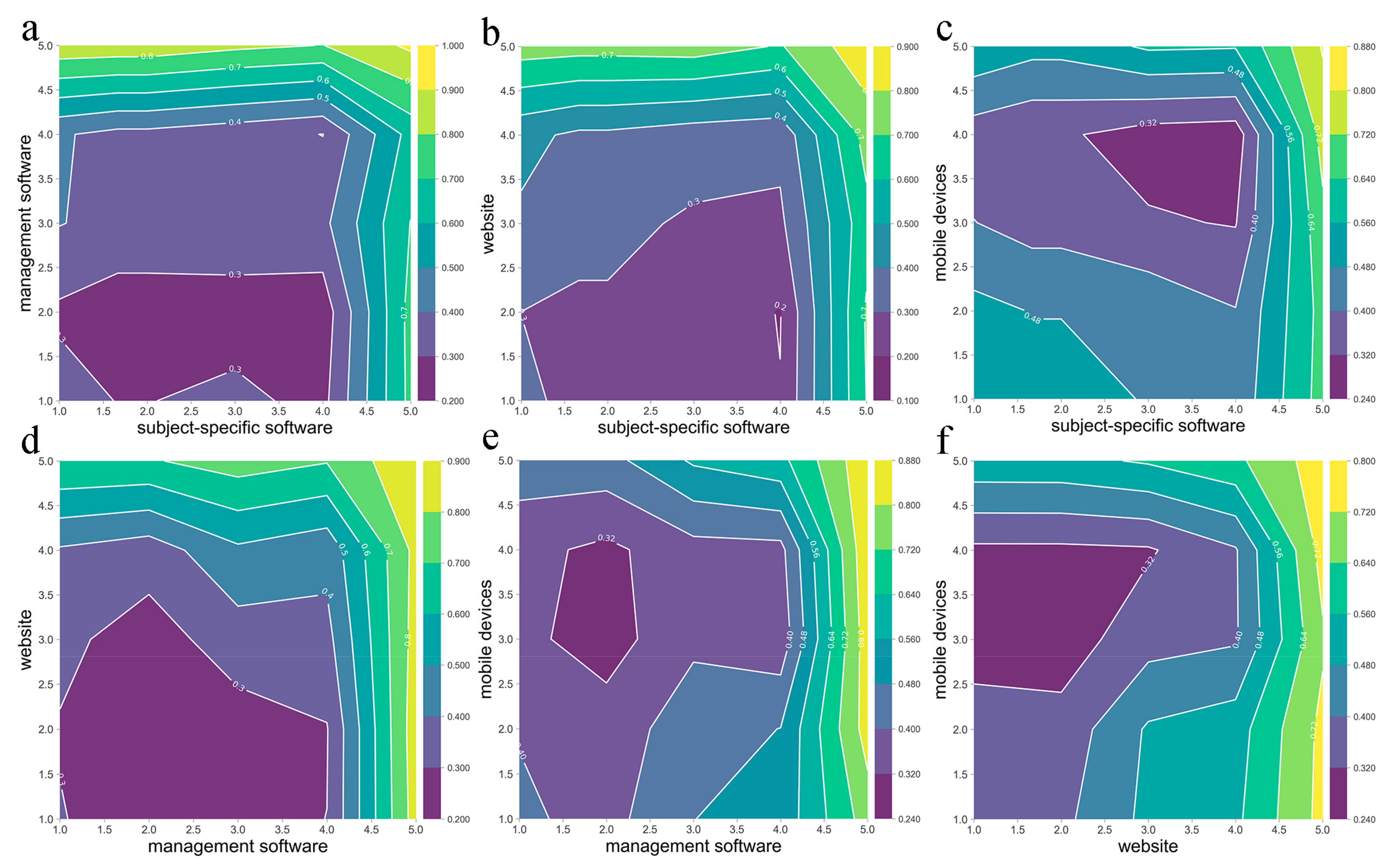

3.3.3. Synergistic Effects of Key Digital Technologies on Student Classroom Engagement

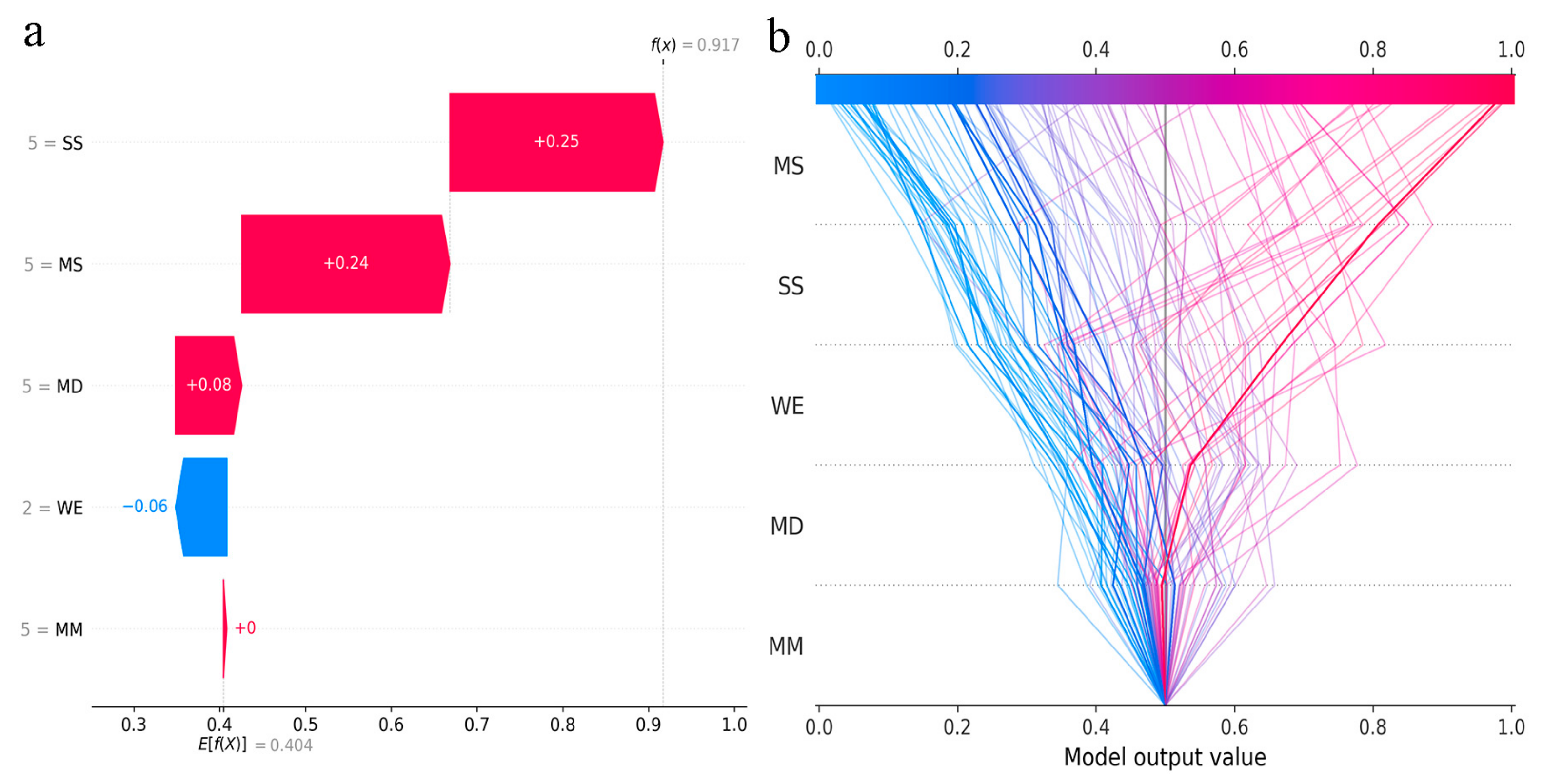

3.3.4. SHAP Analysis for Model Interpretation

3.4. Structural Equation Modeling Analysis

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| PE | Physical Education |
| PU | Perceived Usefulness |
| PEU | Perceived Ease of Use |
| ASE | Academic Self-Efficacy |
| χ²/df | Chi-Square to Degrees of Freedom Ratio |
| GFI | Goodness-of-Fit Index |
| AGFI | Adjusted Goodness-of-Fit Index |
| CFI | Comparative Fit Index |
| TLI | Tucker–Lewis Index |
| RMSEA | Root Mean Square Error of Approximation |
| NFI | Normed Fit Index |
| IFI | Incremental Fit Index |
| RFI | Relative Fit Index |
| CI | Confidence Interval |

Appendix A

Appendix A.1. Digital Technology Usage Questionnaire

Appendix A.2. Student Classroom Engagement Questionnaire

Appendix A.3. Perceived Usefulness of Digitization Questionnaire

Appendix A.4. Perceived Ease of Use of Digitization Questionnaire

Appendix A.5. Academic Self-Efficacy Questionnaire

References

- Appleton, J.J.; Christenson, S.L.; Furlong, M.J. Student engagement with school: Critical conceptual and methodological issues of the construct. Psychol. Sch. 2008, 45, 369–386.

- Bautista-Vallejo, J.M.; Hernández-Carrera, R.M.; Moreno-Rodriguez, R.; Lopez-Bastias, J.L. Improvement of memory and motivation in language learning in primary education through the interactive digital whiteboard (IDW): The future in a post-pandemic period. Sustainability 2020, 12, 8109.

- Lin, Y.T.; Cheng, C.T. Effects of technology-enhanced board game in primary mathematics education on students’ learning performance. Appl. Sci. 2022, 12, 11356.

- Ateş, H.; Köroğlu, M. Online collaborative tools for science education: Boosting learning outcomes, motivation, and engagement. J. Comput. Assist. Learn. 2024, 40, 1052–1067.

- Lin, X.P.; Li, B.B.; Yao, Z.N.; Yang, Z.; Zhang, M. The impact of virtual reality on student engagement in the classroom–a critical review of the literature. Front. Psychol. 2024, 15, 1360574.

- Xu, L. Navigating the educational landscape: The transformative power of smart classroom technology. J. Knowl. Econ. 2024, 1–32.

- Zhang, X.; Qian, W.; Chen, C. The effect of digital technology usage on higher vocational student satisfaction: The mediating role of learning experience and learning engagement. Front. Educ. 2024, 9, 1508119.

- Teo, T.; Khazaie, S.; Derakhshan, A. Exploring teacher immediacy-(non)dependency in the tutored augmented reality game-assisted flipped classrooms of English for medical purposes comprehension among the Asian students. Comput. Educ. 2022, 179, 104406.

- Pikhart, M.; Klimova, B.; Al-Obaydi, L.H. Exploring university students’ preferences and satisfaction in utilizing digital tools for foreign language learning. Front. Educ. 2024, 9, 1412377.

- Ahadi, A.; Bower, M.; Lai, J.; Singh, A.; Garrett, M. Evaluation of teacher professional learning workshops on the use of technology—A systematic review. Prof. Dev. Educ. 2024, 50, 221–237.

- Mayer, R.E. Multimedia Learning, 2nd ed.; Cambridge University Press: Cambridge, UK, 2009.

- Osterlie, O.; Sargent, J.; Killian, C.; Garcia-Jaen, M.; Garcia-Martinez, S.; Ferriz-Valero, A. Flipped learning in physical education: A scoping review. Eur. Phys. Educ. Rev. 2023, 29, 125–144.

- Calabuig-Moreno, F.; Huertas Gonzalez-Serrano, M.; Fombona, J.; Garcia-Tascon, M. The Emergence of Technology in Physical Education: A General Bibliometric Analysis with a Focus on Virtual and Augmented Reality. Sustainability 2020, 12, 2728.

- Wang, N.; Abdul Rahman, M.N.; Lim, B.-H. Teaching and Curriculum of the Preschool Physical Education Major Direction in Colleges and Universities under Virtual Reality Technology. Comput. Intell. Neurosci. 2022, 2022, 3250986.

- Alenezi, M.; Wardat, S.; Akour, M. The Need of Integrating Digital Education in Higher Education: Challenges and Opportunities. Sustainability 2023, 15, 4782.

- Karaoglan Yilmaz, F.G.; Yilmaz, R. Learning Analytics Intervention Improves Students’ Engagement in Online Learning. Technol. Knowl. Learn. 2022, 27, 449–460.

- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340.

- Kim, S.S. Motivators and concerns for real-time online classes: Focused on the security and privacy issues. Interact. Learn. Environ. 2023, 31, 1875–1888.

- Sprenger, D.A.; Schwaninger, A. Technology acceptance of four digital learning technologies (classroom response system, classroom chat, e-lectures, and mobile virtual reality) after three months’ usage. Int. J. Educ. Technol. High. Educ. 2021, 18, 8.

- Bai, Y.-Q.; Jiang, J.-W. Meta-analysis of factors affecting the use of digital learning resources. Interact. Learn. Environ. 2024, 32, 522–533.

- Lee, W. The Effect of Self-Efficacy and Service Quality on Ease of Use and Usefulness of an e-Learning System. J. Inf. Syst. 2003, 12, 41–56.

- Bufford, R.K. Social foundations of thought and action—A social cognitive theory—Bandura, A. J. Psychol. Theol. 1986, 14, 341–342.

- Eghterafi, W.; Tucker, M.C.; Zhang, I.; Son, J.Y. Effect of Feedback with Video-based Peer Modeling on Learning and Self-efficacy. Online Learn. 2022, 26, 1–5.

- Han, J.; Geng, X.; Wang, Q. Sustainable Development of University EFL Learners’ Engagement, Satisfaction, and Self-Efficacy in Online Learning Environments: Chinese Experiences. Sustainability 2021, 13, 11655.

- Kuo, T.M.; Tsai, C.-C.; Wang, J.-C. Linking web-based learning self-efficacy and learning engagement in MOOCs: The role of online academic hardiness. Internet High. Educ. 2021, 51, 100819.

- Morales-Sanchez, V.; Hernandez-Martos, J.; Reigal, R.E.; Morillo-Baro, J.P.; Caballero-Cerban, M.; Hernandez-Mendo, A. Physical Self-Concept and Motor Self-Efficacy Are Related to Satisfaction/Enjoyment and Boredom in Physical Education Classes. Sustainability 2021, 13, 8829.

- Wang, J.; Tigelaar, D.E.H.; Luo, J.; Admiraal, W. Teacher beliefs, classroom process quality, and student engagement in the smart classroom learning environment: A multilevel analysis. Comput. Educ. 2022, 183, 104501.

- Wang, J.; Tigelaar, D.E.H.; Admiraal, W. Connecting rural schools to quality education: Rural teachers’ use of digital educational resources. Comput. Hum. Behav. 2019, 101, 68–76.

- Reeve, J.; Tseng, C.-M. Agency as a fourth aspect of students’ engagement during learning activities. Contemp. Educ. Psychol. 2011, 36, 257–267.

- Jang, H.; Kim, E.J.; Reeve, J. Longitudinal Test of Self-Determination Theory’s Motivation Mediation Model in a Naturally Occurring Classroom Context. J. Educ. Psychol. 2012, 104, 1175–1188.

- Lee, M.-C. Explaining and predicting users’ continuance intention toward e-learning: An extension of the expectation-confirmation model. Comput. Educ. 2010, 54, 506–516.

- Pintrich, P.R.; De Groot, E.V. Motivational and self-regulated learning components of classroom academic performance. J. Educ. Psychol. 1990, 82, 33–40.

- Liang, Y.S. Study on Achievement Goals, Attribution Styles and Academic Self-Efficacy of College Students. Master’s Thesis, Central China Normal University, Wuhan, China, 2000, unpublished.

- Yu, Q.; Ji, W.; Prihodko, L.; Ross, C.W.; Anchang, J.Y.; Hanan, N.P. Study becomes insight: Ecological learning from machine learning. Methods Ecol. Evol. 2021, 12, 2117–2128.

- Chang, S.-C.; Chu, C.-L.; Chen, C.-K.; Chang, H.-N.; Wong, A.M.K.; Chen, Y.-P.; Pei, Y.-C. The comparison and interpretation of machine-learning models in post-stroke functional outcome prediction. Diagnostics 2021, 11, 1784.

- Park, J.; Lee, W.H.; Kim, K.T.; Park, C.Y.; Lee, S.; Heo, T.-Y. Interpretation of ensemble learning to predict water quality using explainable artificial intelligence. Sci. Total Environ. 2022, 832, 155070.

- Chen, H.; Lundberg, S.M.; Lee, S.-I. Explaining a series of models by propagating Shapley values. Nat. Commun. 2022, 13, 4512.

- Yu, H.; Shi, G.; Li, J.; Yang, J. Analyzing the Differences of Interaction and Engagement in a Smart Classroom and a Traditional Classroom. Sustainability 2022, 14, 8184.

- Wang, Y.; Cao, Y.; Gong, S.; Wang, Z.; Li, N.; Ai, L. Interaction and learning engagement in online learning: The mediating roles of online learning self-efficacy and academic emotions. Learn. Individ. Differ. 2022, 94, 102128.

- Lu, G.; Xie, K.; Liu, Q. What influences student situational engagement in smart classrooms: Perception of the learning environment and students’ motivation. Br. J. Educ. Technol. 2022, 53, 1665–1687.

- Cleary, P.F.; Pierce, G.; Trauth, E.M. Closing the digital divide: Understanding racial, ethnic, social class, gender and geographic disparities in Internet use among school age children in the United States. Univers. Access Inf. Soc. 2006, 4, 354–373.

- Kalyanpur, M.; Kirmani, M.H. Diversity and technology: Classroom implications of the digital divide. J. Spec. Educ. Technol. 2005, 20, 9–18.

- Ke, C.-H.; Sun, H.-M.; Yang, Y.-C. Effects of user and system characteristics on perceived usefulness and perceived ease of use for the web-based classroom response system. Turk. Online J. Educ. Technol. 2012, 11, 128–143.

- Zhang, T.; Zhao, J.; Shen, B. The influence of perceived teacher and peer support on student engagement in physical education of Chinese middle school students: Mediating role of academic self-efficacy and positive emotions. Curr. Psychol. 2024, 43, 10776–10785.

- Hanham, J.; Lee, C.B.; Teo, T. The influence of technology acceptance, academic self-efficacy, and gender on academic achievement through online tutoring. Comput. Educ. 2021, 172, 104252.

- Khan, M.J.; Reddy, L.K.V.; Khan, J.; Narapureddy, B.R.; Vaddamanu, S.K.; Alhamoudi, F.H.; Vyas, R.; Gurumurthy, V.; Altijani, A.A.G.; Chaturvedi, S. Challenges of E-Learning: Behavioral Intention of Academicians to Use E-Learning during COVID-19 Crisis. J. Pers. Med. 2023, 13, 555.

- Abdullah, F.; Ward, R. Developing a General Extended Technology Acceptance Model for E-Learning (GETAMEL) by analysing commonly used external factors. Comput. Hum. Behav. 2016, 56, 238–256.

- Banos, R.; Calleja-Nunez, J.J.; Espinoza-Gutierrez, R.; Granero-Gallegos, A. Mediation of academic self-efficacy between emotional intelligence and academic engagement in physical education undergraduate students. Front. Psychol. 2023, 14, 1178500.

| Variable | Category | Number | % |
|---|---|---|---|
| Gender | Female | 441 | 38.08 |
| | Male | 717 | 61.92 |
| Age | ≤18 years old | 37 | 3.20 |
| | 18–25 years old | 1115 | 96.29 |
| | 26–30 years old | 4 | 0.35 |
| | ≥30 years old | 2 | 0.17 |
| Ethnicity | Han | 1043 | 90.07 |
| | Ethnic minority | 115 | 9.93 |
| Grade | First-year undergraduate | 653 | 56.39 |
| | Second-year undergraduate | 402 | 34.72 |
| | Third-year undergraduate | 91 | 7.86 |
| | Fourth-year undergraduate | 12 | 1.04 |
| Region | East China | 393 | 33.94 |
| | South China | 183 | 15.80 |
| | Central China | 329 | 28.41 |
| | North China | 10 | 0.86 |
| | Northwest China | 1 | 0.09 |
| | Southwest China | 84 | 7.25 |
| | Northeast China | 158 | 13.64 |

| Metric | RF | DT | GBDT | AB | SVM | MLP | RR | VC | KNN |
|---|---|---|---|---|---|---|---|---|---|
| AUC | 0.80 | 0.75 | 0.79 | 0.79 | 0.74 | 0.78 | 0.71 | 0.72 | 0.75 |
| Accuracy | 0.80 | 0.77 | 0.79 | 0.79 | 0.79 | 0.78 | 0.63 | 0.65 | 0.78 |
| Sensitivity (recall) | 0.60 | 0.59 | 0.55 | 0.56 | 0.56 | 0.56 | 0.61 | 0.61 | 0.52 |
| Specificity | 0.94 | 0.90 | 0.97 | 0.96 | 0.96 | 0.94 | 0.64 | 0.68 | 0.96 |
| FPR | 0.06 | 0.10 | 0.03 | 0.04 | 0.04 | 0.06 | 0.36 | 0.32 | 0.04 |
| FNR | 0.40 | 0.41 | 0.45 | 0.44 | 0.44 | 0.44 | 0.39 | 0.39 | 0.48 |
| PPV | 0.88 | 0.81 | 0.93 | 0.92 | 0.90 | 0.87 | 0.56 | 0.58 | 0.91 |
| NPV | 0.76 | 0.75 | 0.75 | 0.75 | 0.75 | 0.75 | 0.69 | 0.71 | 0.73 |
| F1 score | 0.72 | 0.68 | 0.69 | 0.70 | 0.69 | 0.68 | 0.58 | 0.60 | 0.66 |
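The threshold-based rows of this table are linked by simple identities: FPR = 1 − specificity and FNR = 1 − sensitivity (for RF, specificity 0.94 gives FPR 0.06 and sensitivity 0.60 gives FNR 0.40), while F1 is the harmonic mean of PPV and sensitivity. The minimal Python sketch below derives every row except AUC from the four confusion-matrix counts; AUC is left out because it requires the models' predicted scores rather than counts. The counts in the usage example are hypothetical, chosen only to show the arithmetic, and the names `ConfusionCounts` and `binary_metrics` are illustrative, not taken from the study's code.

```python
from dataclasses import dataclass


@dataclass
class ConfusionCounts:
    """Counts for a binary classifier; 'positive' is whichever class is treated as the target."""
    tp: int  # true positives
    fp: int  # false positives
    tn: int  # true negatives
    fn: int  # false negatives


def binary_metrics(c: ConfusionCounts) -> dict:
    """Return the threshold-based metrics listed in the model comparison table (AUC excluded)."""
    sensitivity = c.tp / (c.tp + c.fn)   # recall, true positive rate
    specificity = c.tn / (c.tn + c.fp)   # true negative rate
    ppv = c.tp / (c.tp + c.fp)           # precision
    npv = c.tn / (c.tn + c.fn)
    total = c.tp + c.fp + c.tn + c.fn
    return {
        "accuracy": (c.tp + c.tn) / total,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "fpr": 1.0 - specificity,        # equals fp / (fp + tn)
        "fnr": 1.0 - sensitivity,        # equals fn / (fn + tp)
        "ppv": ppv,
        "npv": npv,
        # Harmonic mean of precision and recall.
        "f1": 2 * ppv * sensitivity / (ppv + sensitivity),
    }


if __name__ == "__main__":
    # Hypothetical counts for illustration only; they are not the study's data.
    metrics = binary_metrics(ConfusionCounts(tp=30, fp=3, tn=47, fn=20))
    for name, value in metrics.items():
        print(f"{name:12s}{value:.2f}")
```

Recomputing F1 from the rounded PPV and sensitivity in the table can differ from the reported F1 by about 0.01 (RF: 2 × 0.88 × 0.60 / 1.48 ≈ 0.71 versus 0.72), which is consistent with rounding of the table entries.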

| Variable | Mean | SD | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|---|
| 1. Digital technology | 29.827 | 10.707 | — | | | | |
| 2. Classroom participation | 41.586 | 7.139 | 0.519 *** | — | | | |
| 3. Perceived usefulness | 12.434 | 2.220 | 0.506 *** | 0.774 *** | — | | |
| 4. Perceived ease of use | 12.476 | 2.186 | 0.487 *** | 0.752 *** | 0.874 *** | — | |
| 5. Academic self-efficacy | 83.867 | 12.400 | 0.478 *** | 0.628 *** | 0.566 *** | 0.572 *** | — |

Note: *** p < 0.001.

| Index | χ²/df | GFI | AGFI | CFI | TLI | RMSEA | NFI | IFI | RFI |
|---|---|---|---|---|---|---|---|---|---|
| Model 1 | 5.754 | 0.972 | 0.950 | 0.947 | 0.924 | 0.064 | 0.937 | 0.947 | 0.909 |
| Model 2 | 5.436 | 0.974 | 0.953 | 0.949 | 0.926 | 0.062 | 0.938 | 0.949 | 0.911 |
| Recommended value | <3.0 | >0.9 | >0.8 | >0.9 | >0.9 | <0.08 | >0.9 | >0.9 | >0.9 |
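The RMSEA values can be reproduced from the χ²/df ratios with the usual point-estimate formula, RMSEA = √(max(χ²/df − 1, 0) / (N − 1)). The sketch below assumes that both models were fitted on the full sample of N = 1158 respondents implied by the participant table; `rmsea_from_ratio` is a hypothetical helper name. Under that assumption it yields 0.064 for Model 1 and 0.062 for Model 2, in line with the reported values.

```python
import math


def rmsea_from_ratio(chi2_over_df: float, n: int) -> float:
    """Point estimate of RMSEA from the chi-square/df ratio and the sample size.

    Uses sqrt(max(chi2/df - 1, 0) / (N - 1)), which is algebraically the same as
    sqrt(max(chi2 - df, 0) / (df * (N - 1))). Some programs divide by N instead.
    """
    return math.sqrt(max(chi2_over_df - 1.0, 0.0) / (n - 1))


if __name__ == "__main__":
    N = 1158  # assumption: total sample from the participant table, used for both models
    for label, ratio in [("Model 1", 5.754), ("Model 2", 5.436)]:
        print(label, round(rmsea_from_ratio(ratio, N), 3))
```

Dividing by N instead of N − 1 does not change the third decimal at this sample size.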

| Model | Path | B | S.E. | C.R. | β | p |
|---|---|---|---|---|---|---|
| Model 1 | Digital Technology → Classroom Participation | 0.072 | 0.014 | 5.279 | 0.108 | <0.001 |
| | Digital Technology → Perceived Usefulness | 0.105 | 0.005 | 19.931 | 0.506 | <0.001 |
| | Perceived Usefulness → Classroom Participation | 1.841 | 0.070 | 26.187 | 0.572 | <0.001 |
| | Digital Technology → Academic Self-efficacy | 0.298 | 0.031 | 9.524 | 0.258 | <0.001 |
| | Academic Self-efficacy → Classroom Participation | 0.148 | 0.012 | 11.947 | 0.256 | <0.001 |
| | Perceived Usefulness → Academic Self-efficacy | 2.436 | 0.151 | 16.120 | 0.436 | <0.001 |
| Model 2 | Digital Technology → Classroom Participation | 0.090 | 0.014 | 6.478 | 0.135 | <0.001 |
| | Digital Technology → Perceived Ease of Use | 0.099 | 0.005 | 18.974 | 0.487 | <0.001 |
| | Perceived Ease of Use → Classroom Participation | 1.755 | 0.073 | 23.971 | 0.535 | <0.001 |
| | Digital Technology → Academic Self-efficacy | 0.303 | 0.031 | 9.852 | 0.261 | <0.001 |
| | Academic Self-efficacy → Classroom Participation | 0.152 | 0.013 | 11.850 | 0.263 | <0.001 |
| | Perceived Ease of Use → Academic Self-efficacy | 2.524 | 0.150 | 16.776 | 0.445 | <0.001 |

Note: B = unstandardized coefficient; S.E. = standard error; C.R. = critical ratio (B/S.E.); β = standardized coefficient.
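Because the β column reports standardized coefficients, the effect of digital technology on classroom participation can be split into a direct path and three indirect paths by multiplying coefficients along each route (for Model 1, digital technology → perceived usefulness → classroom participation gives 0.506 × 0.572 ≈ 0.289). The sketch below hard-codes the β values from the table and sums the routes; the short labels (DT, PU, PEU, ASE, CP) and the helper names are illustrative. For both models the summed effect comes out at about 0.52, close to the zero-order correlation of 0.519 between digital technology and classroom participation, which is what one would expect when every directed path among the four constructs is estimated. These are point estimates only; the significance of indirect effects is usually judged from bootstrap confidence intervals, which this sketch does not compute.

```python
from math import prod

# Standardized coefficients (beta) copied from the path table.
# Keys are (from, to): DT = digital technology, PU = perceived usefulness,
# PEU = perceived ease of use, ASE = academic self-efficacy, CP = classroom participation.
MODEL_1 = {
    ("DT", "CP"): 0.108, ("DT", "PU"): 0.506, ("PU", "CP"): 0.572,
    ("DT", "ASE"): 0.258, ("ASE", "CP"): 0.256, ("PU", "ASE"): 0.436,
}
MODEL_2 = {
    ("DT", "CP"): 0.135, ("DT", "PEU"): 0.487, ("PEU", "CP"): 0.535,
    ("DT", "ASE"): 0.261, ("ASE", "CP"): 0.263, ("PEU", "ASE"): 0.445,
}


def route_effect(betas: dict, route: list) -> float:
    """Product of standardized coefficients along a directed route, e.g. ["DT", "PU", "CP"]."""
    return prod(betas[(a, b)] for a, b in zip(route, route[1:]))


def decompose(betas: dict, mediator: str) -> dict:
    """Direct, indirect, and total effect of DT on CP for the chain DT -> mediator -> ASE -> CP."""
    routes = {
        "direct": ["DT", "CP"],
        f"via {mediator}": ["DT", mediator, "CP"],
        "via ASE": ["DT", "ASE", "CP"],
        f"via {mediator} then ASE": ["DT", mediator, "ASE", "CP"],
    }
    effects = {name: route_effect(betas, r) for name, r in routes.items()}
    effects["total"] = sum(effects.values())  # summed before "total" is added
    return effects


if __name__ == "__main__":
    for label, betas, mediator in [("Model 1", MODEL_1, "PU"), ("Model 2", MODEL_2, "PEU")]:
        print(label)
        for name, value in decompose(betas, mediator).items():
            print(f"  {name:20s}{value:.3f}")
```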

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, L.; Gao, J.; Zhao, L.; Liu, Z.; Guan, A. Predicting College Student Engagement in Physical Education Classes Using Machine Learning and Structural Equation Modeling. Appl. Sci. 2025, 15, 3884. https://doi.org/10.3390/app15073884

Zhang L, Gao J, Zhao L, Liu Z, Guan A. Predicting College Student Engagement in Physical Education Classes Using Machine Learning and Structural Equation Modeling. Applied Sciences. 2025; 15(7):3884. https://doi.org/10.3390/app15073884

Chicago/Turabian Style: Zhang, Liguo, Jiarui Gao, Liangyu Zhao, Zetan Liu, and Anlin Guan. 2025. "Predicting College Student Engagement in Physical Education Classes Using Machine Learning and Structural Equation Modeling" Applied Sciences 15, no. 7: 3884. https://doi.org/10.3390/app15073884

APA Style: Zhang, L., Gao, J., Zhao, L., Liu, Z., & Guan, A. (2025). Predicting College Student Engagement in Physical Education Classes Using Machine Learning and Structural Equation Modeling. Applied Sciences, 15(7), 3884. https://doi.org/10.3390/app15073884