Abstract

Clothing image synthesis has emerged as a crucial technology in the fashion domain, enabling designers to rapidly transform creative concepts into realistic visual representations. However, the existing methods struggle to effectively integrate multiple guiding information sources, such as sketches and texture patches, limiting their ability to precisely control the generated content. This often results in issues such as semantic inconsistencies and the loss of fine-grained texture details, which significantly hinders the advancement of this technology. To address these issues, we propose the Fast Fourier Asymmetric Context Aggregation Network (FCAN), a novel image generation network designed to achieve controllable clothing image synthesis guided by design sketches and texture patches. In the FCAN, we introduce the Asymmetric Context Aggregation Mechanism (ACAM), which leverages multi-scale and multi-stage heterogeneous features to achieve efficient global visual context modeling, significantly enhancing the model’s ability to integrate guiding information. Complementing this, the FCAN also incorporates a Fast Fourier Channel Dual Residual Block (FF-CDRB), which utilizes the frequency-domain properties of Fast Fourier Convolution to enhance fine-grained content inference while maintaining computational efficiency. We evaluate the FCAN on the newly constructed SKFashion dataset and the publicly available VITON-HD and Fashion-Gen datasets. The experimental results demonstrate that the FCAN consistently generates high-quality clothing images aligned with the design intentions while outperforming the baseline methods across multiple performance metrics. Furthermore, the FCAN demonstrates superior robustness to varying texture conditions compared to the existing methods, highlighting its adaptability to diverse real-world scenarios. These findings underscore the potential of the FCAN to advance this technology by enabling controllable and high-quality image generation.

1. Introduction

In recent years, the incorporation of artificial intelligence (AI) technology has driven a revolutionary transformation in the fashion design industry, fostering innovation and creating new opportunities [1]. Traditional fashion design is a complex and time-consuming task that relies heavily on manual effort: designers must translate creative concepts into visually appealing garments through stages such as sketching, texture refinement, and prototyping. This process is not only intricate but also constrained by seasonal cycles, with designers facing tight deadlines for various apparel types, such as summer dresses or winter coats [2]. These pressures highlight the need for efficient solutions that help designers manage this complexity. AI-driven tools have emerged as a powerful answer to these challenges, enabling designers to generate ideas, refine concepts, and visualize designs more quickly and precisely. Among the most promising advances is clothing image synthesis, which automatically generates photo-realistic images from diverse inputs such as sketches, texture patches, and textual descriptions. This technology streamlines design workflows and provides designers with more precise visual outputs. Clothing image synthesis is also valuable for applications such as virtual try-on systems, fashion recommendation, and digital prototyping. By bridging the gap between abstract design concepts and realistic visual outputs, it enables designers to refine their ideas efficiently while offering consumers more interactive and personalized experiences. Therefore, this technology not only enhances creative efficiency but also contributes to the sustainable development and continued innovation of the industry.

Although clothing image synthesis has enabled broader applications in fashion design, generating high-quality, semantically consistent, and texture-rich clothing images remains a challenging task. These difficulties arise from the inherent complexity of diverse garment designs and the intricate details of textures. Addressing them is essential not only to improve the photo-realism and accuracy of synthesized images but also to enable more efficient and controllable design processes, which have substantial practical value for the fashion industry. However, many current clothing image synthesis methods do not fully meet the need for subjective controllability in design tasks. For instance, some approaches use text descriptions [3,4,5], cross-domain style images [6,7,8], or existing clothing images [9,10,11] as guidance for image generation. While these methods can generate visually plausible results, the outputs often deviate from the designer’s original intent. In contrast, design sketches directly reflect the designer’s structural intent by encoding rich semantic information about garment shape and structure. Using design sketches as structural guidance therefore helps keep generated clothing images aligned with the designer’s creative intent. However, sketches alone cannot capture essential texture attributes, such as fabric color, material texture, and intricate details, which are critical for a garment’s visual appeal and consumer perception. To address this limitation of sketch-based methods, some approaches [12,13,14] incorporate texture style content as supplementary stylistic guidance. While these methods improve results for simple and repetitive patterns, the structural complexity of garment regions makes it considerably harder to map texture content accurately onto these areas. 
This challenge is especially evident when the texture inputs are limited or contain intricate details, a situation frequently encountered in practice. In addition, creating detailed texture references is laborious and time-consuming, posing further challenges for designers. Although some texture synthesis studies [15,16,17] have achieved impressive results from limited texture content, their outputs are often restricted to simple, repetitive patterns and suffer from issues such as edge-seam artifacts. Moreover, these methods struggle to capture critical features of clothing images, such as lighting effects and fabric folds. Consequently, using such techniques as a source of clothing style remains fraught with difficulties.

The above issues highlight the need for technologies that seamlessly integrate the structural guidance of design sketches with precise texture control to generate realistic garment images. Such methods would allow designers to quickly obtain high-quality images that match their creative intentions, significantly enhancing the practical value of these techniques. To this end, we propose the Fast Fourier Asymmetric Context Aggregation Network (FCAN), a novel fashion clothing image generation network that combines Fast Fourier Convolution (FFC) [18] with a novel Asymmetric Context Aggregation Mechanism (ACAM) to generate clothing images consistent with the intended design structures and textures. By using dual guidance from design sketches and texture patches, the method preserves the structural semantic information of the sketches while enabling precise control over texture styles. Within the FCAN, we leverage the spectral transformation capability of FFC to design a Fast Fourier Channel Dual Residual Block (FF-CDRB), which efficiently handles complex textures and detailed information during content inference. Additionally, we introduce the ACAM, which aggregates global context information from multi-stage heterogeneous features. This mechanism partially compensates for the inadequate context modeling of existing methods, enabling accurate integration of sketch information with limited texture content. Extensive comparative experiments show that this method is more robust to variations in texture patch size than existing advanced methods and can synthesize high-quality clothing images across diverse texture conditions. 
In addition, extensive ablation studies demonstrate that, within the FCAN, the ACAM effectively addresses the limitations of the existing methods in long-range context modeling, significantly improving the quality of synthesized images and enhancing the model’s robustness to variations in texture patch size. The integration of the FF-CDRB further boosts the model’s ability to capture complex textures and fine details while optimizing parameter efficiency. Together, these innovations establish the FCAN as a practical and effective solution, capable of making a positive impact in real-world applications, particularly in enhancing the efficiency of fashion design processes.

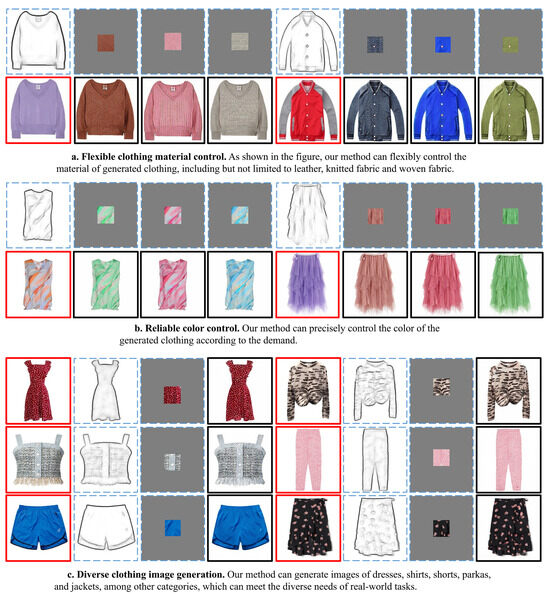

Figure 1 shows clothing images synthesized by our proposed method. By guiding the generation process with texture patches and design sketches that include brief internal contours, our method flexibly synthesizes complex structures and diverse texture content. It also produces plausible details in areas such as wrinkles and shadows. Note that the texture patches in the images are obtained by cropping fabric images, compositing in Photoshop, or cutting from real garments. Regardless of the source, our method consistently achieves strong generative performance, highlighting its flexibility and reliability.

Figure 1.

Showcase of garment images generated using our method. The real images are marked with red solid line frames, while the generated images are marked with black solid line frames.

In summary, our main contributions are as follows:

- We propose a novel clothing image synthesis method guided by design sketches and texture patches, enabling precise control over both the structural appearance and texture style of the clothing. Our method provides innovative and practical solutions for scenarios such as personalized clothing design and rapid prototyping.

- We design a new content inference module, the Fast Fourier Channel Dual Residual Block (FF-CDRB), which exploits the global receptive field of Fast Fourier Convolution (FFC). This module improves the network’s ability to capture contextual information and perform accurate content inference while also improving parameter efficiency. With the FF-CDRB, the model generates high-quality images with optimized parameter utilization, balancing accuracy and computational efficiency.

- We propose a novel Asymmetric Context Aggregation Mechanism (ACAM), which constructs global context by effectively leveraging heterogeneous features with distinct information representation characteristics from different scales and stages. This approach addresses the limitations of the existing methods in context modeling. The ACAM significantly improves both the perceptual and pixel-level quality of generated images while also enhancing the model’s robustness to variations in texture patch size. To the best of our knowledge, the ACAM is an innovative method for context information interaction, providing potentially valuable references for the research community.

- Extensive experiments were conducted on our self-constructed dataset, SKFashion, and two public datasets, VITON-HD and Fashion-Gen, to validate the effectiveness of the FCAN. The results show that images generated by the FCAN outperform those of advanced baseline methods in both perceptual and pixel-level quality. Moreover, the FCAN is more robust to variations in texture patch conditions than the other methods, making it better suited to practical application requirements.

2. Related Work

2.1. Image Generation Models

Image generation models have become a pivotal tool in the field of computer vision research, designed to synthesize realistic or stylized images by learning the underlying distributions from data. These models play a critical role in a wide range of applications, such as creative content generation [19,20,21,22,23], medical imaging [24,25,26,27,28], multi-scene imaging optimization [29,30,31,32,33], and fashion design [34,35,36]. In recent years, the evolution of image generation models has been predominantly influenced by two significant methodologies: generative adversarial networks (GANs) [37] and diffusion models [38].

GANs, originally introduced by Goodfellow et al. [39], typically consist of two neural networks: a generator, which learns to produce realistic images that can deceive the discriminator, and a discriminator, which evaluates the authenticity of the generated samples. This adversarial setup drives the generator to iteratively improve its outputs, yielding highly realistic image synthesis. Over the years, various GAN-based architectures have made impressive strides in generation quality, reduced data dependency, and controllability of generated content, significantly advancing image generation technology. For instance, the StyleGAN [40] series proposed by researchers at NVIDIA enabled the synthesis of highly detailed high-resolution images, raising the quality of GAN-generated content to a new level. Similarly, CycleGAN [41], introduced by Zhu et al., enables image translation with unpaired data, offering a key solution to the dependence on paired training data. Moreover, conditional generative adversarial networks (cGANs) [42], proposed by Mirza et al., laid the foundation for controllable GANs by conditioning generation on embedded auxiliary information.

However, despite these advances, GANs still face challenges such as training instability and mode collapse, which limit their ability to produce diverse and consistent results across tasks. Researchers have made significant efforts to improve GAN stability and have proposed a range of effective solutions. For instance, Arjovsky et al. [43] introduced the Wasserstein distance into training, which yields smoother and more stable gradients, partially mitigating vanishing gradients and thereby improving training stability. Similarly, Mao et al. [44] employed a least squares loss function, alleviating the vanishing gradient problem in both the generator and the discriminator. These contributions have been pivotal to the development of GANs, which have since become a representative image generation technology widely applied across generative tasks.
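As a rough illustration of why a least squares objective helps, the NumPy sketch below compares the gradient the generator receives for a generated sample that the discriminator rejects with a large margin. It assumes the common target coding a = 0, b = c = 1 for the least squares loss and contrasts it with the original minimax generator loss log(1 − sigmoid(s)), where s is the discriminator's raw score; all function names are illustrative and not taken from any library.

```python
import numpy as np

def lsgan_d_loss(s_real, s_fake, a=0.0, b=1.0):
    """Least squares discriminator loss: pull real scores toward b and fake scores toward a."""
    return 0.5 * np.mean((s_real - b) ** 2) + 0.5 * np.mean((s_fake - a) ** 2)

def lsgan_g_loss(s_fake, c=1.0):
    """Least squares generator loss: pull the scores of generated samples toward c."""
    return 0.5 * np.mean((s_fake - c) ** 2)

def grad_lsgan_g(s, c=1.0):
    """d/ds of 0.5 * (s - c)^2 for one sample: grows linearly with the distance to c."""
    return s - c

def grad_minimax_g(s):
    """d/ds of log(1 - sigmoid(s)) for one sample: equals -sigmoid(s), which vanishes as s -> -inf."""
    return -1.0 / (1.0 + np.exp(-s))

# A generated sample that the discriminator rejects with a large margin (raw score -5).
s = -5.0
print("least squares gradient:", grad_lsgan_g(s))
print("minimax gradient:      ", grad_minimax_g(s))
```

For this sample the least squares gradient has magnitude 6, while the minimax gradient is below 0.01, so the generator still receives a useful training signal under the least squares loss.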

Diffusion models, on the other hand, represent a fundamentally different approach to image generation. Inspired by non-equilibrium thermodynamics, these models progressively add noise to input images during training and then learn to reverse this process, effectively generating images from noise. The Denoising Diffusion Probabilistic Model (DDPM) [45] proposed by Ho et al. represents a milestone in the field. By simplifying the model structure and optimizing the training process, the DDPM significantly reduces the training complexity of diffusion models while markedly improving the quality of generated samples. This advancement has demonstrated the immense potential of diffusion models for high-quality and high-resolution image synthesis, sparking widespread academic interest in diffusion-based approaches. Building on this progress, Song et al. introduced Denoising Diffusion Implicit Models (DDIMs) [46], which, by employing a deterministic sampling strategy, effectively reduce inference time without sacrificing quality. This innovation has paved the way for the practical application of diffusion models.
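The forward (noising) process described above has a closed form in the DDPM: with a variance schedule β_1, …, β_T and ᾱ_t = ∏_{s=1}^{t} (1 − β_s), a noisy sample is x_t = √ᾱ_t · x_0 + √(1 − ᾱ_t) · ε with ε ~ N(0, I). The NumPy sketch below assumes the linear schedule from 10⁻⁴ to 0.02 over T = 1000 steps used by Ho et al.; the learned reverse (denoising) network is not shown, and `q_sample` is an illustrative name.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)      # linear variance schedule beta_1..beta_T
alpha_bars = np.cumprod(1.0 - betas)    # alpha_bar_t = prod_{s<=t} (1 - beta_s)

def q_sample(x0, t, rng):
    """Sample x_t ~ q(x_t | x_0) in one step (t is a 0-based index into the schedule)."""
    eps = rng.standard_normal(x0.shape)
    xt = np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps
    return xt, eps  # the DDPM network is trained to predict eps from (xt, t)

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(3, 8, 8))   # a toy "image" scaled to [-1, 1]
x_early, _ = q_sample(x0, 10, rng)            # still close to x0
x_late, _ = q_sample(x0, T - 1, rng)          # almost pure Gaussian noise
```

Because ᾱ_T is close to zero at the end of the schedule, x_T is nearly independent of x_0, which is why generation can start from pure noise and run the learned reverse process.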

Although diffusion models have demonstrated impressive generative capabilities, their very high computational requirements remain a significant obstacle to their development. To address this, Rombach et al. [47] proposed latent diffusion models (LDMs), which move the training and inference processes from the high-dimensional pixel space to a compressed latent space. This shift substantially reduces the computational overhead of diffusion models and has contributed greatly to their rapid advancement. Additionally, hybrid approaches such as UFOGen [48] aim to combine the fast sampling of GANs with the superior quality of diffusion models, offering new possibilities for balancing quality and efficiency.

Moreover, with the proliferation of large-scale datasets and the remarkable advancements in computational hardware, significant progress has been achieved in large-scale image generation models in recent years. A series of large-scale image generation models with unprecedented generative capabilities have emerged, marking the onset of a new era in the field. For instance, NVIDIA introduced StyleGAN-XL [49], based on the GAN architecture, which is capable of handling larger datasets and generating higher-resolution images with more intricate details. Similarly, GigaGAN [50], developed collaboratively by several universities and Adobe Research, breaks through the scale limitations of previous models, showcasing the exceptional generative capabilities of multimodal image generation within the GAN framework. It outperforms large models based on diffusion architectures in terms of generation speed and image quality, underscoring the continued potential of GANs in the era of big data. Moreover, major tech companies such as OpenAI and Stability AI have introduced large-scale image generation models based on diffusion architectures, such as the DALL·E series [51] and the Stable Diffusion series [52]. Backed by vast computational resources and massive datasets, these models have further demonstrated the immense potential of generative models, significantly influencing the related field.

2.2. Fashion Clothing Image Synthesis

Fashion image synthesis has become a key research direction in image generation, focusing mainly on the creation and editing of fashion images such as clothing, accessories, models, and fabric textures. Among these directions, clothing image synthesis and editing have attracted particular attention because clothing is the core element of most tasks in the fashion field. Applications of fashion clothing image synthesis span a wide range of fields, such as virtual fashion design, personalized clothing generation, and automated fashion creation. Over the years, various innovative models have been developed to address the unique challenges of this domain, demonstrating tremendous potential for practical applications.

Currently, fashion clothing image synthesis primarily relies on deep generative models such as GANs and diffusion models due to their ability to generate realistic images. One of the pioneering works in fashion image synthesis is FashionGAN [53], proposed by Cui et al., which can conditionally generate fashion images based on specific attributes (e.g., color, style, or texture), laying the foundation for subsequent research. Since then, numerous fashion image synthesis methods have been proposed, introducing new technological tools to the fashion domain.

The controllability and quality of generated images are key areas of focus in the current research on garment image synthesis [1] as they directly impact the applicability of these methods in critical tasks such as fashion design, prototype presentation, and virtual try-on, thereby significantly influencing their practical value in the fashion industry.

As the quality of clothing images directly affects the practicality of these methods, the existing approaches are often specifically optimized based on the characteristics of their respective tasks to ensure the quality of the generated images. For example, MT-VTON, proposed by Lee et al. [54], introduces a transfer strategy that enhances the realism of generated clothing by optimizing wrinkles, shadows, and texture effects. Similarly, Jiang et al. designed an alternating patch-global optimization methodology in [8] to ensure semantic consistency of clothing content. These methods have demonstrated promising performance in certain specific scenarios, serving as an inspiration for advancements in related research.

In terms of controllability, current methods mainly use text-based guidance, sketch- or contour-based guidance, and texture-style guidance to control the appearance, structure, and texture of the generated clothing. Sketches provide relatively accurate guidance for appearance and structure, while text descriptions, when paired with text encoders, provide features aligned with the semantic content of the clothing, enabling control over structure or texture. Texture styles can further guide the details of the texture content. However, because each type of guidance is limited when used alone to control structure or texture, existing approaches often combine them, with promising results. For instance, Sun et al. proposed SGDiff [5], a fashion garment generation model that leverages a pre-trained text-to-image diffusion model to generate clothing images controlled by both textual descriptions and style examples. However, even state-of-the-art text encoders such as CLIP [55] still struggle to achieve fine-grained content alignment in specialized domains. Furthermore, textual descriptions are inherently limited in their ability to express subjective intentions. These constraints reduce both the quality and the subjective consistency of clothing images generated by SGDiff, hindering its applicability in tasks such as design and virtual try-on. Gu et al. introduced PAINT [56], a GAN-based fashion image generation framework that combines clothing semantic contours and texture patch content to generate coherent fashion images, offering a novel way to improve efficiency in fashion design. Nevertheless, PAINT, which is driven by texture patches, performs poorly in context modeling and fails to fully exploit texture patch information, often producing artifacts when synthesizing complex texture content. 
Furthermore, PAINT shows poor robustness under varying texture patch conditions and typically requires more complete texture patch content to generate reasonable outputs, limiting its scalability to diverse application scenarios. Gao et al. proposed a controllable fashion image synthesis method guided by clothing edge contours and texture patches [57]. Although this method is capable of generating reasonable clothing content, it struggles to capture fine-grained internal structures or texture features. As a result, the generated clothing content is often limited to solid colors or simple repetitive texture patterns, making it difficult to achieve flexible and diverse applications. In addition, there are other approaches, such as DiffFashion [6] proposed by Cao et al., which leverage the generative capabilities of pre-trained models to synthesize garment images and control the generation process through style transfer strategies. Despite this, these methods are limited in their adaptability to different requirements and may generate unreasonable out-of-domain content due to the broad knowledge embedded in pre-trained models.

In summary, despite some progress, existing methods still have clear limitations. Specifically, sketch-guided and texture-guided approaches struggle to balance image quality with precise control over diverse stylistic content. Text-based methods are constrained by the limited expressiveness of text and the current capabilities of text encoders, which makes them difficult to apply in practical tasks such as fashion design. Other methods based on pre-trained models face similar issues in controllability and image quality. These limitations continue to hinder the development of related methods and remain a critical open issue in this field.

2.3. Fast Fourier Convolution

FFC, proposed by Chi et al. [18], is an operator based on the Fast Fourier Transform (FFT). It uses Fourier transforms to move spatial signals into the frequency domain, addressing limitations of conventional convolution in computational efficiency and feature extraction, most notably its local receptive field. In computer vision tasks, FFC exploits frequency-domain properties to obtain a receptive field covering the entire image even in the early layers of a network, enabling efficient extraction of global features without a substantial increase in computational cost. In recent years, FFC has been widely applied in computer vision. For instance, Chu proposed an FFC-based image inpainting method [58] that exploits the sensitivity of FFC to repetitive textures, achieving effective inpainting of images with repetitive textured content. Zhou et al. proposed a non-uniform dehazing method using FFC [59], in which the global receptive field of FFC enabled effective haze removal. Additionally, Quattrini et al. applied FFC to ink detection on volumetric data for digital document restoration [60], achieving excellent detection results. These FFC-based works have opened new technical pathways for various tasks in related fields, positively influencing the progress of related research.
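The global receptive field follows from the convolution theorem: a pointwise multiplication in the frequency domain corresponds to a (circular) convolution whose kernel generally spans the whole image. The NumPy sketch below, which uses random weights purely for illustration and hypothetical helper names, feeds a single-pixel impulse through a 3 × 3 spatial convolution and through a per-frequency spectral filter, then counts how many output pixels respond.

```python
import numpy as np

rng = np.random.default_rng(0)
H, W = 32, 32

def local_conv3x3(x, k):
    """3x3 convolution with circular padding, written as a sum of shifted copies of x."""
    y = np.zeros_like(x)
    for di in (-1, 0, 1):
        for dj in (-1, 0, 1):
            y += k[di + 1, dj + 1] * np.roll(x, shift=(di, dj), axis=(0, 1))
    return y

def spectral_filter(x, wf):
    """Multiply each frequency of the real FFT by its own complex weight, then invert."""
    return np.fft.irfft2(np.fft.rfft2(x) * wf, s=x.shape)

# Unit impulse: a change at a single pixel.
delta = np.zeros((H, W))
delta[H // 2, W // 2] = 1.0

k = rng.standard_normal((3, 3))
wf = rng.standard_normal((H, W // 2 + 1)) + 1j * rng.standard_normal((H, W // 2 + 1))

n_local = np.count_nonzero(np.abs(local_conv3x3(delta, k)) > 1e-12)
n_global = np.count_nonzero(np.abs(spectral_filter(delta, wf)) > 1e-12)
print(f"3x3 conv: {n_local} pixels respond; spectral filter: {n_global} of {H * W}")
```

The spatial convolution affects only the 3 × 3 neighborhood of the impulse, whereas a single spectral layer already propagates it to essentially every pixel, at a cost dominated by the O(HW log HW) FFT.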

3. Proposed Method

3.1. Overview

Our method takes as input a design sketch S, a texture patch T, and a clothing region mask M, and aims to synthesize a clothing image whose semantics align with both S and T. The sources and combination strategies of these input components are described in the following sections.

The primary challenge of this task lies in ensuring that the synthesized clothing image not only conforms to the appearance pattern of the sketch but also propagates texture content effectively and coherently across the clothing regions. This places stringent demands on the alignment of global information within the image. We also consider the interplay between texture patch size and synthesis performance: larger texture patches provide richer texture information but require more effort from the designer to obtain. Consequently, a synthesis strategy that is more robust to variations in texture patch size can significantly enhance the practical applicability of our method.

To address these challenges, we propose a frequency-domain content inference block (FF-CDRB) that leverages frequency-domain characteristics to synthesize fine-grained texture content. Furthermore, we introduce the ACAM, which uses heterogeneous features during the decoding phase to construct more comprehensive global relationships. By enhancing features with texture patch information, the ACAM makes better use of the limited guidance provided by the texture patches. This approach not only improves the consistency between the appearance structure and the texture content but also enhances the model’s robustness to variations in texture patch size.

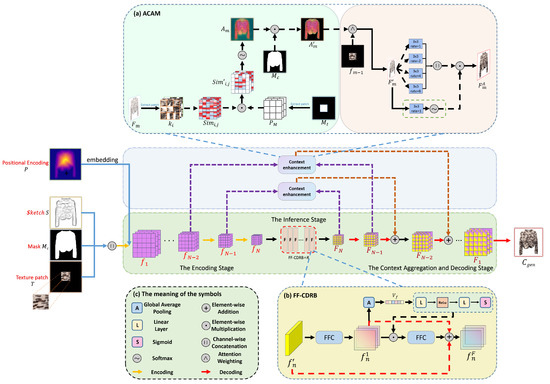

Figure 2 shows the overall framework of the FCAN. In this section, we describe the FCAN workflow in detail.

Figure 2.

The overall framework of FCAN. The FCAN workflow consists of three stages: the encoding stage, the content inference stage, and the context aggregation and decoding stage. (a) Encoding Stage: FCAN uses an encoder to encode the input information into multi-scale features. (b) Content Inference Stage: FCAN employs cascaded FF-CDRBs to perform content inference and initial context modeling on the deepest features obtained in the encoding stage. (c) Context Aggregation and Decoding Stage: the proposed ACAM aggregates global context from heterogeneous features of different stages, and the enhanced features are fused into the corresponding decoding steps to generate the final clothing image.

3.2. The Encoding Stage

In the encoding stage, we first concatenate the design sketch $S$, texture patch $T$, and clothing mask $M$ along the channel dimension to obtain the fused feature $F_{\mathrm{in}}$:

$$F_{\mathrm{in}} = \mathrm{Concat}(S, T, M),$$

where $\mathrm{Concat}(\cdot)$ denotes concatenation along the channel dimension. $S$ and $T$ provide structural and texture guidance, respectively, while $M$ prevents content from overflowing the garment region. During training, $S$, $T$, and $M$ are extracted from real clothing images. Subsequently, FCAN employs $N$ sequential lightweight encoding blocks $E_i$ ($i = 1, \dots, N$) built on vanilla convolution to encode $F_{\mathrm{in}}$ into multi-scale features $\{F_1, \dots, F_N\}$. In addition, the first encoding block introduces a sinusoidal position encoding that embeds the positional information $P$ of the garment regions, improving the efficiency of feature alignment. A detailed implementation can be found in [61].

The structure of the encoding block is shown in Figure 3, and the embedding process of $P$ is illustrated in Figure 4. The multi-scale encoding process can be expressed as follows:

$$F_i = E_i(F_{i-1}), \quad i = 1, \dots, N, \quad F_0 = F_{\mathrm{in}},$$

where the first block $E_1$ additionally embeds $P$ as illustrated in Figure 4.

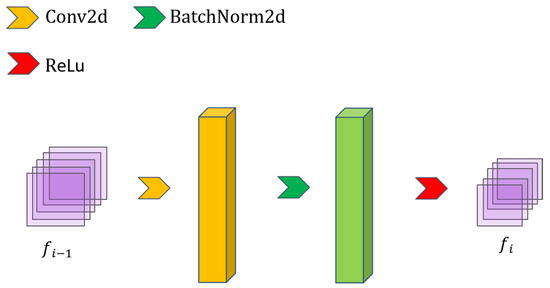

Figure 3.

Structure of the encoding block.

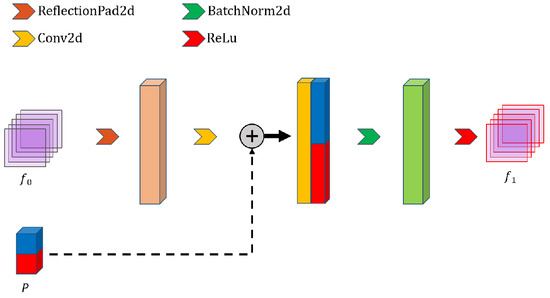

Figure 4.

The positional information embedding process.
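As a concrete illustration of the input construction and the positional encoding described above, the NumPy sketch below concatenates a sketch, a texture patch, and a garment mask along the channel axis and builds a Transformer-style 2D sinusoidal encoding in which half of the channels encode the row index and half the column index. The channel counts (a one-channel sketch, an RGB texture patch pasted onto an image-sized canvas, a binary mask), the 2D layout of the encoding, and the helper name `sinusoidal_encoding_2d` are assumptions for illustration; how FCAN injects the encoding into the first encoding block follows Figure 4 and [61] and is not reproduced here.

```python
import numpy as np

def sinusoidal_encoding_2d(h, w, channels):
    """Transformer-style sinusoidal encoding on a 2D grid, returned as (channels, h, w).

    The first half of the channels encodes the row index and the second half the column
    index, each with sin/cos pairs at geometrically spaced frequencies.
    """
    if channels % 4 != 0:
        raise ValueError("channels must be divisible by 4")
    q = channels // 4
    freqs = 1.0 / (10000.0 ** (np.arange(q) / q))   # (q,) geometric frequency ladder
    rows = np.outer(freqs, np.arange(h))            # (q, h) phase per frequency and row
    cols = np.outer(freqs, np.arange(w))            # (q, w) phase per frequency and column
    pe = np.empty((channels, h, w))
    pe[0 * q:1 * q] = np.sin(rows)[:, :, None]      # broadcast over columns
    pe[1 * q:2 * q] = np.cos(rows)[:, :, None]
    pe[2 * q:3 * q] = np.sin(cols)[:, None, :]      # broadcast over rows
    pe[3 * q:4 * q] = np.cos(cols)[:, None, :]
    return pe

H, W = 64, 64
rng = np.random.default_rng(0)
sketch = (rng.random((1, H, W)) > 0.95).astype(np.float32)   # hypothetical 1-channel line drawing
texture = np.zeros((3, H, W), dtype=np.float32)               # hypothetical RGB canvas
texture[:, 24:40, 24:40] = rng.random((3, 16, 16))            # small texture patch pasted in the middle
mask = np.zeros((1, H, W), dtype=np.float32)
mask[:, 8:56, 12:52] = 1.0                                    # hypothetical rectangular garment region

fused = np.concatenate([sketch, texture, mask], axis=0)       # channel-wise concatenation -> (5, H, W)
pos = sinusoidal_encoding_2d(H, W, channels=16)               # (16, H, W), values in [-1, 1]
```

Because every channel of the encoding is a bounded periodic function of a coordinate, nearby pixels receive similar codes while distant ones differ, giving convolutional layers an explicit sense of absolute position inside the garment region.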

3.3. The Content Inference Stage

In the content inference stage, the deepest encoding feature is fed into the content inference module, which consists of R connected FF-CDRB blocks. This module synthesizes garment content by aligning texture and structure through frequency-domain characteristics. Next, we will provide a detailed description of the FF-CDRB.

Fast Fourier Channel Dual Residual Block

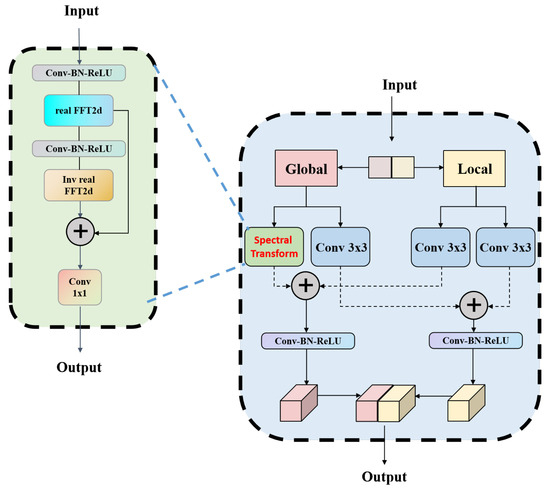

Inspired by the residual design in ResNet [62], we introduce the Fast Fourier Channel Dual Residual Block (FF-CDRB) based on FFC, aiming to facilitate apparel content inference. The structure of FF-CDRB is shown in the lower part of Figure 2.

The FF-CDRB consists of two serially connected FFCs. As shown in Figure 5, FFC divides the channels into a local branch and a global branch. The local branch captures local features using standard convolution, while the global branch interprets global context through FFT. In the global branch, FFC employs a spectral transformation module based on the Discrete Fourier Transform to convert signals from the spatial domain to the frequency domain, enabling convolution operations with a global receptive field. Finally, the conjugate symmetry of real-valued signals is used to perform an inverse transform to restore spatial structures. The specific operations in the global branch are as follows, including the real and complex spaces, respectively. Real and image denote the real and imaginary parts, respectively. Referring to [63], the specific operations of the global branch of FFC can be expressed as

Figure 5.

Structure of FFC.

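- Apply the real FFT to the input features:

$$\mathrm{RealFFT}: \mathbb{R}^{H\times W\times C} \rightarrow \mathbb{C}^{H\times \frac{W}{2}\times C},$$

where $H$, $W$, and $C$ represent the height, width, and number of channels of the tensor, respectively, and × separates the height, width, and channel dimensions that together define the size of the feature map. $\mathbb{R}^{H\times W\times C}$ and $\mathbb{C}^{H\times \frac{W}{2}\times C}$ represent a real-valued tensor with spatial dimensions $H\times W$ and a complex tensor with spatial dimensions $H\times \frac{W}{2}$, respectively. RealFFT denotes the real-valued Fourier transform; a detailed explanation and derivation can be found in [64].

- Concatenate the real and imaginary parts:

$$\mathrm{ComplexToReal}: \mathbb{C}^{H\times \frac{W}{2}\times C} \rightarrow \mathbb{R}^{H\times \frac{W}{2}\times 2C},$$

where ComplexToReal concatenates the real and imaginary parts of the input tensor along the channel dimension.

- Apply convolution in the frequency domain:

$$\mathrm{Conv}: \mathbb{R}^{H\times \frac{W}{2}\times 2C} \rightarrow \mathbb{R}^{H\times \frac{W}{2}\times 2C},$$

where Conv represents the convolution operation.

- Apply the inverse transform to recover the spatial structure:

$$\mathrm{RealToComplex}: \mathbb{R}^{H\times \frac{W}{2}\times 2C} \rightarrow \mathbb{C}^{H\times \frac{W}{2}\times C}, \qquad \mathrm{InverseRealFFT}: \mathbb{C}^{H\times \frac{W}{2}\times C} \rightarrow \mathbb{R}^{H\times W\times C},$$

where RealToComplex converts the real-valued tensor back into a complex-valued tensor so that the inverse transform can be applied, and InverseRealFFT applies the inverse real-valued FFT to restore the tensor to its real-valued spatial form. The detailed process of the inverse transform can likewise be found in [64].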
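To make the four global-branch steps concrete, the following NumPy sketch traces them on a single feature map. It is an illustration under stated assumptions, not the authors’ PyTorch implementation: features use an (H, W, C) layout, the frequency-domain Conv is modeled as a 1 × 1 convolution (a per-frequency linear mix of the 2C real channels) with a hypothetical weight matrix `weight`, and the normalization and activation layers that usually follow it are omitted. NumPy’s `rfft2` keeps ⌊W/2⌋ + 1 frequency columns, which is the exact size of the half-spectrum that conjugate symmetry allows.

```python
import numpy as np


def spectral_transform(x, weight):
    """Sketch of the FFC global branch on an (H, W, C) real feature map.

    weight: hypothetical (2C, 2C) matrix of a 1x1 convolution that acts on the
            concatenated real/imaginary channels at every frequency position.
    """
    h, w, c = x.shape
    # Step 1: real 2-D FFT over the spatial axes -> complex (H, W//2 + 1, C)
    spec = np.fft.rfft2(x, axes=(0, 1))
    # Step 2: ComplexToReal, concatenate real and imaginary parts -> (H, W//2 + 1, 2C)
    stacked = np.concatenate([spec.real, spec.imag], axis=-1)
    # Step 3: 1x1 convolution = the same linear channel map at every frequency
    mixed = stacked @ weight.T
    # Step 4: RealToComplex, then inverse real FFT back to (H, W, C)
    spec_out = mixed[..., :c] + 1j * mixed[..., c:]
    return np.fft.irfft2(spec_out, s=(h, w), axes=(0, 1))
```

With an identity `weight` the round trip returns the input unchanged. With a general `weight`, each output value depends on every input position, because every frequency coefficient is computed from the entire feature map; this is the global receptive field described above.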

However, although the FFC can efficiently extract features via spectral transformation, it does not fully account for the relationship between the local and global feature branches. Specifically, since the two feature extraction branches in the FFC are independent, a simple concatenation of their outputs may lead to a loss of certain feature information. To address this, the first FFC in the FF-CDRB applies adaptive channel weighting to the outputs of both branches using the Squeeze-and-Excitation (SE) attention [65], thereby effectively adjusting the response levels of feature branches.

The feature input to the $k$-th FF-CDRB block is denoted as $X_k$, and the output of the first FFC applied to $X_k$ is denoted as $X_k^{1}$. Adaptive channel weighting is applied to $X_k^{1}$ as follows:

- Apply global average pooling to compress $X_k^{1}$ into a vector that captures global information:

$$z = \mathrm{GAP}\big(X_k^{1}\big), \qquad z \in \mathbb{R}^{C},$$

where $z$ is the result of compressing the features with global average pooling.

- Map $z$ through a learnable fully connected layer and apply the sigmoid function to obtain the adaptive weight vector $w$:

$$w = \sigma\big(\mathrm{FC}(z)\big),$$

where FC is a learnable fully connected layer that captures the information in the compressed vector, and $\sigma$ is the sigmoid function, which normalizes the result into the weight vector $w$.

- Use the channel-adaptive weights to adjust the response levels of the local- and global-branch features in $X_k^{1}$, obtaining the adaptively weighted representation:

$$\hat{X}_k = w \odot X_k^{1},$$

where $\hat{X}_k$ is the result of the element-wise multiplication, with the weight $w$ broadcast over the spatial dimensions of $X_k^{1}$.
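The three SE steps can be sketched in NumPy as below, again assuming an (H, W, C) layout. The text describes a single learnable fully connected layer, so the sketch uses one (hypothetical `fc_weight` and `fc_bias`); the original SE design [65] instead places a two-layer bottleneck with a ReLU between the pooling and the sigmoid, and either variant follows the same squeeze, excite, and scale pattern.

```python
import numpy as np


def se_channel_weighting(feat, fc_weight, fc_bias):
    """SE-style channel weighting of an (H, W, C) feature map.

    fc_weight: hypothetical (C, C) weights of the fully connected layer
    fc_bias:   hypothetical (C,) bias of the fully connected layer
    Returns the reweighted feature map and the weight vector w.
    """
    # Squeeze: global average pooling over the spatial axes -> (C,)
    z = feat.mean(axis=(0, 1))
    # Excitation: fully connected layer followed by a sigmoid -> values in (0, 1)
    w = 1.0 / (1.0 + np.exp(-(fc_weight @ z + fc_bias)))
    # Scale: broadcast the per-channel weight over every spatial position
    return feat * w, w
```

Because the local- and global-branch outputs are concatenated along the channel axis, a single weight vector rescales channels of both branches, which is how the block adjusts their relative response levels.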

The adaptively weighted feature $\hat{X}_k$ is fed into the second FFC of the FF-CDRB block for further content inference, and the output of the second FFC is denoted as $X_k^{2}$. Following the residual design of ResNet, a dual-path residual mechanism is incorporated into the FF-CDRB to ease optimization and to mitigate the feature degradation that the serial FFC structure may cause, thereby enhancing the module’s expressive capability. Specifically, the block input $X_k$ and the first-FFC feature $X_k^{1}$ are both merged into the final output through skip connections. The final output of the $k$-th FF-CDRB block can be expressed as

$$X_{k+1} = X_k^{2} \oplus X_k \oplus X_k^{1},$$

where ⊕ represents element-wise addition.

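In summary, the content inference process in FCAN can be described as follows:

$$X_{k+1} = \mathcal{B}_k\big(X_k\big), \qquad k = 1, \dots, K,$$

where $\mathcal{B}_k$ denotes the $k$-th FF-CDRB block in the FCAN and $K$ is the number of FF-CDRB blocks.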
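The block-level data flow, including the dual-path residual and the serial stacking of blocks, can be summarized with the following sketch. The FFC layers and the SE weighting are passed in as callables (hypothetical stand-ins for the trained layers), and adding the first-FFC output taken before, rather than after, the SE weighting reflects our reading of the description. The function names are illustrative only.

```python
import numpy as np


def ff_cdrb(x, ffc1, se, ffc2):
    """Hypothetical FF-CDRB forward pass with a dual-path residual.

    ffc1, ffc2: shape-preserving callables standing in for the two FFC layers
    se:         callable standing in for the SE channel weighting
    """
    x = np.asarray(x, dtype=float)
    y1 = ffc1(x)        # output of the first FFC
    y1_w = se(y1)       # adaptive channel weighting of both branches
    y2 = ffc2(y1_w)     # output of the second FFC
    # Dual residual: add both the block input and the first-FFC output
    return y2 + x + y1


def content_inference(x, blocks):
    """Apply the FF-CDRB blocks in series, as in the summary equation."""
    for block in blocks:
        x = block(x)
    return x
```

Two skip paths mean the second FFC only has to learn a correction on top of both the raw input and the first FFC’s output, which is the optimization benefit the residual design aims for.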

3.4. The Context Aggregation and Decoding Stage

In the context aggregation and decoding stage, we integrate the proposed ACAM into the decoding process to aggregate multi-stage heterogeneous features, achieving more comprehensive interaction among contextual relationships. We use $N$ decoding blocks $\{D_i\}_{i=1}^{N}$, each constructed by stacking a transposed convolution, batch normalization, and a ReLU activation function in sequence. The proposed ACAM is explained in detail next.

Asymmetric Context Aggregation Mechanism

Since texture patches provide limited information guidance, generating clothing content that aligns semantically with these texture patches becomes challenging, especially when the texture patches are small. To ensure the model can fully utilize the information from texture patches and generate semantically consistent image content under varying patch sizes, we propose a novel Asymmetric Context Aggregation Mechanism (ACAM).

The design of ACAM follows from the characteristics of heterogeneous feature representations. Encoding-stage features capture the initial guiding information more accurately than decoding-stage features, because progressive processing inevitably causes some loss of representation. Decoding features, in contrast, have passed through the FF-CDRB blocks and therefore encode preliminary global relationships, providing a more comprehensive interpretation of the fused multi-source features. ACAM therefore constructs global contextual attention from the decoding features, extracts the initial guidance representations from the multi-scale encoding features, and then uses the resulting attention scores to aggregate the encoding and decoding features.

Notably, we observed suboptimal performance when ACAM was constructed from cross-stage features of the same size. We attribute this mainly to the redundancy between features of identical size: their information representations share more in common and are therefore less complementary than those of features with different sizes. Based on this observation, we implement ACAM with cross-stage features of heterogeneous sizes.

Specifically, the features from the decoding stage are denoted as $\{F^{d}_i\}_{i=1}^{N}$, where $F^{d}_1$ represents the deepest features. As shown in the upper part of Figure 2, ACAM first extracts patches from a decoding-stage feature $F^{d}_i$ and calculates the cosine similarity between them:

$$s_{m,n} = \frac{\langle p_m, p_n \rangle}{\lVert p_m \rVert_2 \, \lVert p_n \rVert_2},$$

where $p_m$ and $p_n$ are the $m$-th and $n$-th patch vectors extracted from $F^{d}_i$, $\lVert p_m \rVert_2$ and $\lVert p_n \rVert_2$ are their Euclidean norms, and $s_{m,n}$ is the cosine similarity score between them. Because the encoding-stage features contain the semantic representation of the sketch, ACAM focuses on aligning the global information in $F^{d}_i$ with the texture content inside the texture patch region, so as to make better use of the limited information in the texture patches. Specifically, ACAM uses the texture patch region mask $M_t$ to compute the cosine similarity scores between the segments outside and inside the texture patch in the feature map:

$$s_{h,l} = \frac{\langle p_h, p^{t}_{l} \rangle}{\lVert p_h \rVert_2 \, \lVert p^{t}_{l} \rVert_2},$$

where $p^{t}_{l}$ is the $l$-th patch vector extracted from $F^{d}_i \odot M_t$, $p_h$ is the $h$-th patch vector from outside the texture patch region, and ⊙ represents element-wise multiplication. Softmax is then applied to the scores to obtain the attention weights:

$$\alpha_{h,l} = \frac{\exp\big(s_{h,l}\big)}{\sum_{l'=1}^{H} \exp\big(s_{h,l'}\big)},$$

where $H$ is the total number of patches extracted from the texture patch region of $F^{d}_i$. Since no context enhancement is required in the background outside the clothing area, ACAM uses the clothing-area binary mask to restrict the context attention enhancement to the clothing area and thus avoid content overflow:

$$\tilde{A} = A \odot M_c,$$

where $A$ is the attention weight matrix composed of the $\alpha_{h,l}$, and $M_c$ is the binary mask of the clothing area. ACAM then uses $\tilde{A}$ and the texture patch feature segments of an encoding-stage feature $E_j$, whose spatial size differs from that of $F^{d}_i$, to aggregate the heterogeneous features and obtain the context-enhanced feature $C_i$:

$$\hat{q}_h = \sum_{l=1}^{H} \tilde{\alpha}_{h,l}\, q^{t}_{l}, \qquad h = 1, \dots, H_o,$$

where $\tilde{\alpha}_{h,l}$ is the $(h, l)$ entry of $\tilde{A}$, $\hat{q}_h$ is the aggregated $h$-th patch vector for the region outside the texture patch in $E_j$, and $q^{t}_{l}$ denotes the $l$-th patch from the region within the texture patch in $E_j$. $H$ and $H_o$ are the total numbers of patches extracted from within and outside the texture patch region, respectively. The context-enhanced feature $C_i$ is ultimately obtained by concatenating $\{\hat{q}_h\}$ and $\{q^{t}_{l}\}$.
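The masked cosine-similarity attention and the heterogeneous aggregation can be sketched in NumPy as follows. This is a simplified illustration, not the authors’ implementation: each decoding-feature position is treated as a 1 × 1 patch, the encoding feature is assumed to be exactly r times larger so that each decoding position maps to one non-overlapping r × r encoding patch, and masking is done by boolean indexing rather than an explicit ⊙ product. All function names are hypothetical.

```python
import numpy as np


def extract_patches(feat, r):
    """Split an (H*r, W*r, C) map into H*W non-overlapping r x r patch vectors (row-major)."""
    hr, wr, c = feat.shape
    h, w = hr // r, wr // r
    p = feat.reshape(h, r, w, r, c).transpose(0, 2, 1, 3, 4)
    return p.reshape(h * w, r * r * c)


def fold_patches(patches, h, w, r, c):
    """Inverse of extract_patches: place patch vectors back at their spatial positions."""
    p = patches.reshape(h, w, r, r, c).transpose(0, 2, 1, 3, 4)
    return p.reshape(h * r, w * r, c)


def acam_aggregate(dec, enc, tex_mask, cloth_mask, r):
    """dec: (H, W, Cd) decoding feature; enc: (H*r, W*r, Ce) encoding feature;
    tex_mask, cloth_mask: (H, W) binary masks on the decoding grid."""
    h, w, _ = dec.shape
    inside = tex_mask.reshape(-1).astype(bool)
    # Unit-normalize decoding patches so that dot products are cosine similarities
    q = dec.reshape(h * w, -1)
    q = q / (np.linalg.norm(q, axis=1, keepdims=True) + 1e-8)
    sim = q[~inside] @ q[inside].T            # (outside, inside) similarity scores
    # Softmax over the texture-patch positions, numerically stabilized
    e = np.exp(sim - sim.max(axis=1, keepdims=True))
    attn = e / e.sum(axis=1, keepdims=True)
    # Clothing mask: zero the attention rows of background positions
    attn = attn * cloth_mask.reshape(-1)[~inside][:, None]
    # Aggregate encoding-stage texture patches into the outside positions
    enc_p = extract_patches(enc, r)
    out = enc_p.copy()
    out[~inside] = attn @ enc_p[inside]
    return fold_patches(out, h, w, r, enc.shape[-1])
```

Patches inside the texture region pass through unchanged, each clothing patch outside it becomes an attention-weighted average of the encoding-stage texture patches, and background rows are zeroed by the clothing mask. Because the attention is computed on the coarser decoding grid but applied to the finer encoding grid, the sketch also shows why heterogeneous sizes are easy to combine in this scheme.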

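As shown in the upper part of Figure 2 and following [56], ACAM refines the enhanced feature $C_i$ using a set of parallel dilated convolutions [66] with different dilation rates; the feature refined through this multi-scale processing is denoted as $C^{r}_i$:

$$C^{r}_i = \Big[\mathrm{DConv}_{d_1}\big(C_i\big), \ldots, \mathrm{DConv}_{d_m}\big(C_i\big)\Big],$$

where $\mathrm{DConv}_{d}$ denotes a dilated convolution with dilation rate $d$ and $[\cdot]$ denotes channel-wise concatenation.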

Although the parallel dilated convolutions provide richer scale information, they may also cause a loss of local information that impairs the final feature representation. To address this, ACAM introduces an adaptive gating mechanism that coordinates $C_i$ with the refined residual features $C^{r}_i$. Specifically, an adaptive gate $g$ is first computed from $C_i$ through a standard convolution, normalization, and Softmax, and is then used to balance the response levels of $C_i$ and $C^{r}_i$, yielding the final output $\hat{C}_i$:

$$\hat{C}_i = (1 - g) \odot C_i + g \odot C^{r}_i,$$

where $g$ is

$$g = \mathrm{Softmax}\Big(\mathrm{Norm}\big(\mathrm{Conv}(C_i)\big)\Big),$$

where $g$ represents the obtained gating weights, Softmax denotes the Softmax function, Norm represents normalization, and Conv denotes a learnable convolution.
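A NumPy sketch of the gate follows. The convolution is taken to be 1 × 1 with a hypothetical weight matrix `conv_w`, Norm is assumed to standardize each channel over the spatial positions, and Softmax is assumed to act over the channel axis. The convex combination `(1 - g) * c + g * c_ref` is likewise an assumed form of "balancing" the two responses, borrowed from common gated-residual designs rather than confirmed by the text.

```python
import numpy as np


def gated_fusion(c, c_ref, conv_w, eps=1e-5):
    """c, c_ref: (H, W, C) context-enhanced and dilated-conv-refined features.
    conv_w: hypothetical (C, C) weights of a 1x1 convolution producing the gate."""
    logits = c @ conv_w.T                                   # 1x1 convolution
    # Norm (assumed): standardize each channel over the spatial positions
    logits = (logits - logits.mean(axis=(0, 1))) / (logits.std(axis=(0, 1)) + eps)
    # Softmax (assumed over channels), numerically stabilized
    e = np.exp(logits - logits.max(axis=-1, keepdims=True))
    g = e / e.sum(axis=-1, keepdims=True)
    # Gate: g weights the refined features, (1 - g) keeps the original response
    return (1.0 - g) * c + g * c_ref, g
```

When the refined features add nothing new (`c_ref` equal to `c`), the output reduces to `c` whatever the gate values, so the gate can only redistribute, not distort, the two responses.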

Finally, the context-enhanced feature is integrated into the decoding features during the decoding phase to achieve comprehensive context modeling. Similarly, is incorporated into the asymmetric decoding step to obtain richer information representations. The specific operation can be expressed as follows:

The processing of the context aggregation and decoding stage can be represented as follows:

where ACAM denotes the context aggregation operation described above, $D_i$ denotes the $i$-th decoding block based on transposed convolution, and $\hat{I}$ represents the final generated image.

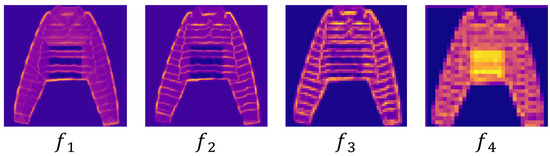

To provide a clearer explanation of the internal modeling details of ACAM, we conducted further analysis and interpretation of its feature modeling process using feature visualization techniques. For illustrative purposes, we take FCAN, which consists of four encoding blocks and four decoding blocks, as an example to present the visualization results. Figure 6 shows the visualization results of multi-scale features during the encoding phase. As shown in the figure, the shallow features in the encoding stage are more adept at capturing low-level information such as textures and edges, while deeper features are capable of extracting richer high-level semantic information.

Figure 6.

The visualization of multi-scale features from the encoding stage.

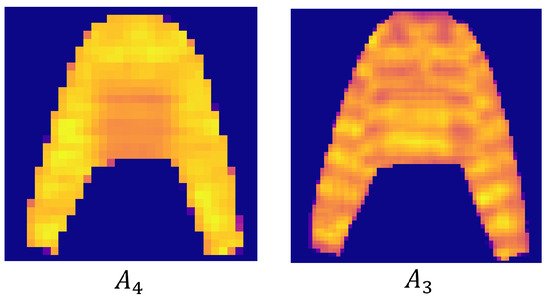

Next, following the ACAM workflow described in this section, Figure 7 presents the context attention maps constructed by ACAM from a deeper and a shallower decoding feature, respectively. As shown in Figure 7, the attention maps extracted from the deeper decoding features emphasize global high-level information, whereas those extracted from the shallower features focus more on local structures and texture details. By aggregating context across cross-level and cross-stage heterogeneous structures, ACAM thus enhances the model’s ability to represent diverse feature details.

Figure 7.

The context attention visualization maps constructed by ACAM.

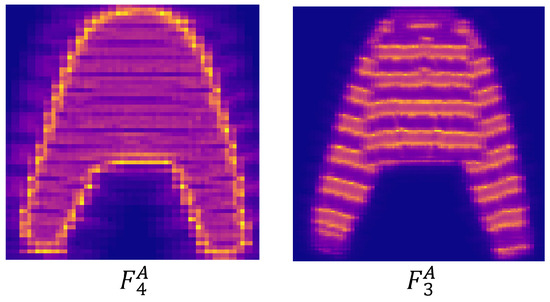

Figure 8 presents the context-enhanced features obtained from the deeper and the shallower attention maps, respectively. As indicated, the feature derived from the deeper attention map achieves a more comprehensive aggregation of global information, whereas the one derived from the shallower map provides a more detailed interpretation of local features. These results visually illustrate the effectiveness of ACAM in heterogeneous feature aggregation.

Figure 8.

The visualization maps of context-enhanced features constructed by ACAM.

Finally, to give a clear picture of the overall process, Algorithm 1 summarizes the operation of FCAN in pseudocode.

| Algorithm 1. Algorithm of the FCAN |
|---|
| Input: sketch $S$, clothing mask, and texture patch $T$ |
| Output: synthesized clothing image |

3.5. Loss Functions

Due to the complexity of apparel content, training the generative model presents certain challenges. Based on [56,58,61], we employ adversarial loss based on PatchGAN [67], perceptual loss based on VGG [68], style loss based on Gram matrices [69], and pixel-level loss to jointly train FCAN.

The pixel-level loss guides the network to learn the underlying data distribution, giving it basic image synthesis capabilities. It is computed as

$$\mathcal{L}_{\text{pix}} = \big\lVert I - \hat{I} \big\rVert_1,$$

where $I$ and $\hat{I}$ represent the real image and the generated image, respectively.

Because the texture patches provide only limited style information, the pixel-level reconstruction loss based on the absolute error cannot accurately guide the network to reconstruct apparel content. Relying solely on $\mathcal{L}_{\text{pix}}$ may therefore lead the network to generate unrealistic content in challenging regions of the garment. Note that the goal of our task is not to replicate the exact apparel content but to generate apparel images that are semantically consistent with the guiding information and contextually plausible. To this end, we employ a perceptual loss based on a pre-trained VGG network and a style loss based on Gram matrices to help the network produce semantically consistent apparel content. The perceptual loss measures the difference between images in the feature space of a pre-trained neural network and, compared with the absolute error, aligns more closely with human perception of images.

We calculate the perceptual loss using a pre-trained VGG network as follows:

$$\mathcal{L}_{\text{perc}} = \sum_{i=1}^{U} \big\lVert \phi_i(I) - \phi_i(\hat{I}) \big\rVert_1,$$

where $\phi_i(I)$ and $\phi_i(\hat{I})$ represent the features extracted from the $i$-th selected layer of VGG for the real image and the generated image, respectively, and $U$ denotes the number of VGG layers used.

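The style loss ensures that the generated images are consistent in overall style with the real images, preventing results that are plausible but clearly misaligned in style semantics. The pre-trained VGG is first used to calculate the Gram matrix:

$$G_i(x) = \frac{1}{C_i H_i W_i}\, \phi_i(x)\, \phi_i(x)^{\top},$$

where $\phi_i(x)$ is reshaped into a $C_i \times H_i W_i$ matrix, $C_i$ and $H_i W_i$ are the number of channels and the spatial dimension of the features, respectively, and $x$ is the input image. The style loss can be expressed as

$$\mathcal{L}_{\text{style}} = \sum_{i=1}^{U} \big\lVert G_i(I) - G_i(\hat{I}) \big\rVert_1.$$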
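The pixel, perceptual, and style terms can be sketched on precomputed feature maps as follows. VGG feature extraction is deliberately left out (lists of (H, W, C) arrays stand in for the selected VGG layers), and each L1 distance is averaged over elements, which is one common normalization choice rather than necessarily the one used for FCAN. The function names are illustrative.

```python
import numpy as np


def l1(a, b):
    """Mean absolute error; also serves as the pixel-level loss on images."""
    return float(np.mean(np.abs(a - b)))


def gram(feat):
    """Gram matrix of an (H, W, C) feature map, normalized by C*H*W."""
    h, w, c = feat.shape
    f = feat.reshape(h * w, c)          # rows = spatial positions, columns = channels
    return f.T @ f / (c * h * w)        # (C, C) channel correlations


def perceptual_loss(feats_real, feats_fake):
    # Sum over selected layers of the L1 distance between feature maps
    return sum(l1(fr, ff) for fr, ff in zip(feats_real, feats_fake))


def style_loss(feats_real, feats_fake):
    # Sum over selected layers of the L1 distance between Gram matrices
    return sum(l1(gram(fr), gram(ff)) for fr, ff in zip(feats_real, feats_fake))
```

A quick way to see the difference between the two terms: flipping a feature map spatially leaves its Gram matrix unchanged, so `style_loss` is zero while `perceptual_loss` is not. The style term therefore constrains texture statistics, and the perceptual term constrains spatial layout.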

To improve the quality of the generated images, avoid blur, and keep the loss design simple, we introduce a PatchGAN-based adversarial loss for adversarial training. PatchGAN divides the image into small local patches and classifies each patch as real or fake, which helps the generation network improve the quality of local textures. The PatchGAN-based discriminator loss is

$$\mathcal{L}_{D} = -\frac{1}{P}\sum_{p=1}^{P}\Big[\log D_p(I) + \log\big(1 - D_p(\hat{I})\big)\Big],$$

where $D_p(I)$ is the output of the discriminator for the real sample on patch $p$, $D_p(\hat{I})$ is its output for the generated sample on patch $p$, and $P$ is the number of patches. The goal of the generation network is to fool the discriminator on as many patches as possible, so its loss is

$$\mathcal{L}_{G} = -\frac{1}{P}\sum_{p=1}^{P}\log D_p(\hat{I}).$$

In summary, the adversarial loss is

$$\mathcal{L}_{\text{adv}} = \lambda_{G}\,\mathcal{L}_{G} + \lambda_{D}\,\mathcal{L}_{D},$$

where $\lambda_{G}$ and $\lambda_{D}$ are the weight coefficients of the generator and discriminator losses, respectively. The total training loss is

$$\mathcal{L}_{\text{total}} = \lambda_{\text{pix}}\,\mathcal{L}_{\text{pix}} + \lambda_{\text{perc}}\,\mathcal{L}_{\text{perc}} + \lambda_{\text{style}}\,\mathcal{L}_{\text{style}} + \mathcal{L}_{\text{adv}},$$

where $\lambda_{\text{pix}}$, $\lambda_{\text{perc}}$, and $\lambda_{\text{style}}$ are the weight coefficients of the corresponding losses.
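The PatchGAN terms and the weighted total can be sketched as follows, taking the discriminator’s per-patch probabilities as input. The sketch assumes the standard binary cross-entropy formulation on sigmoid outputs, and no weight values are filled in because the paper’s settings are not reproduced here. In practice the generator and discriminator terms are minimized by separate optimizers in alternating steps, even though they appear together in one weighted sum.

```python
import numpy as np


def patchgan_d_loss(d_real, d_fake, eps=1e-7):
    """d_real, d_fake: per-patch discriminator probabilities, e.g. shape (h, w)."""
    d_real = np.clip(d_real, eps, 1 - eps)
    d_fake = np.clip(d_fake, eps, 1 - eps)
    # Binary cross-entropy averaged over patches: real -> 1, generated -> 0
    return float(-np.mean(np.log(d_real)) - np.mean(np.log(1 - d_fake)))


def patchgan_g_loss(d_fake, eps=1e-7):
    # Push every patch of the generated image toward being classified as real
    return float(-np.mean(np.log(np.clip(d_fake, eps, 1 - eps))))


def total_loss(l_pix, l_perc, l_style, l_g, l_d,
               lam_pix, lam_perc, lam_style, lam_g, lam_d):
    l_adv = lam_g * l_g + lam_d * l_d
    return lam_pix * l_pix + lam_perc * l_perc + lam_style * l_style + l_adv
```

A discriminator that outputs 0.5 on every patch, the point of maximal confusion, gives a discriminator loss of 2 ln 2 and a generator loss of ln 2.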

4. Experiments

4.1. Dataset

To the best of our knowledge, there is currently no publicly available dataset featuring diverse categories of paired design sketches and fashion images. To address this gap, we constructed a dataset named SKFashion, based on [70], which includes a wide range of fashion categories. This dataset enables the evaluation of our method’s performance in generating images for various types of apparel.

Additionally, to further validate the robustness of our proposed approach under different conditions, we conducted extensive experiments on two publicly available datasets: VITON-HD [71] and Fashion-Gen [72].

- SKFashion: The SKFashion dataset consists of 20,421 paired design sketches and fashion images, each with a resolution of 256 × 256 pixels. It covers dozens of fashion categories, including long sleeves, short sleeves, dresses, shorts, skirts, padded coats, jackets, and vests. Figure 9 presents a selection of image pairs from SKFashion. Following the setup in [56], we divided the SKFashion dataset into a training set containing 17,396 image pairs and a test set comprising 3025 image pairs.

Figure 9. Partial data demonstration of the SKFashion dataset.

- VITON-HD: The VITON-HD dataset includes images of various upper-body clothing categories and is widely used in fashion image generation tasks such as virtual try-on. We standardized the resolution of the clothing images in VITON-HD to 192 × 256 pixels and utilized the garment structure edge maps extracted using DexiNed (Dense Extreme Inception Network for Edge Detection) [73] as design sketches. We divided the dataset into a training set comprising 10,309 sketch–clothing image pairs and a test set with 1010 pairs. Figure 10 illustrates a selection of image pairs from the VITON-HD dataset.

Figure 10. Partial data demonstration of the VITON-HD dataset.

- Fashion-Gen: The Fashion-Gen is a large-scale multimodal dataset designed for fashion-related tasks and is widely used in multimodal fashion image generation. We extracted clothing images from Fashion-Gen using [74] and generated corresponding design sketches by applying DexiNed to obtain structural edge maps of the garments. Following the setup in [53,56,57], we randomly sampled 10,282 sketch–clothing image pairs as the training set and 800 pairs as the test set. Figure 11 showcases a selection of image pairs from the Fashion-Gen dataset.

Figure 11. Partial data demonstration of the Fashion-Gen dataset.


4.2. Evaluation Metrics

We conduct a comprehensive performance evaluation of the proposed method using five popular evaluation metrics:

- Fréchet Inception Distance (FID): FID [75] is an objective metric widely used to evaluate the quality of images generated by generative models. It measures the distance between the feature distributions of generated and real images, computed with a pre-trained Inception network, and thereby quantifies their perceptual similarity. Because it compares global feature distributions, FID assesses the overall quality of generated images in a way that correlates well with human visual perception. We use FID to evaluate the overall diversity and global perceptual quality of the generated images. FID is calculated as

$$\mathrm{FID} = \lVert \mu_g - \mu_r \rVert_2^2 + \mathrm{Tr}\Big(\Sigma_g + \Sigma_r - 2\big(\Sigma_g \Sigma_r\big)^{1/2}\Big),$$

where $\mu_g$ and $\Sigma_g$ represent the mean vector and covariance matrix of the generated image features, respectively, $\mu_r$ and $\Sigma_r$ represent those of the real image features, $\lVert \mu_g - \mu_r \rVert_2^2$ is the squared Euclidean norm of the difference between the two mean vectors, and Tr denotes the trace of a matrix.

- Structural Similarity (SSIM): SSIM [76] measures the similarity of two images in terms of luminance, contrast, and structure. We use SSIM to evaluate the local structural quality of the generated images. SSIM is calculated as

$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x\mu_y + c_1)(2\sigma_{xy} + c_2)}{(\mu_x^2 + \mu_y^2 + c_1)(\sigma_x^2 + \sigma_y^2 + c_2)},$$

where $x$ and $y$ represent the real image and the generated image, respectively, $\mu$ denotes the mean pixel value, $\sigma$ represents the standard deviation of the grayscale intensity, $c_1$ and $c_2$ are constant coefficients, and $\sigma_{xy}$ is defined as

$$\sigma_{xy} = \frac{1}{N-1}\sum_{k=1}^{N}(x_k - \mu_x)(y_k - \mu_y),$$

with $N$ the number of pixels.

- Learned Perceptual Image Patch Similarity (LPIPS): LPIPS [77] evaluates the perceptual similarity between two images using features extracted directly from a pre-trained neural network, which makes it more aligned with human visual perception. LPIPS places greater emphasis on local perceptual similarity, and we employ it to evaluate the local perceptual quality of the generated images. LPIPS is calculated as

$$\mathrm{LPIPS}(x, y) = \sum_{l} \frac{1}{H_l W_l} \sum_{h, w} \Big\lVert w_l \odot \big(\hat{y}^{l}_{hw} - \hat{y}^{l}_{0hw}\big) \Big\rVert_2^2,$$

where $\hat{y}^{l}$ and $\hat{y}^{l}_{0}$ represent the normalized features of the two images at layer $l$, $H_l$ and $W_l$ are the spatial dimensions of that layer, and $w_l$ is the scaling weight vector.

- Peak Signal-to-Noise Ratio (PSNR): PSNR is a pixel-level image quality metric that is sensitive to random noise in the image. We use PSNR to evaluate the pixel-level quality of the generated images. PSNR is calculated as

$$\mathrm{PSNR} = 10 \log_{10}\frac{\mathrm{MAX}_I^2}{\mathrm{MSE}},$$

where $\mathrm{MAX}_I$ represents the maximum pixel value of the image, and MSE is the mean squared error between the real image and the generated image, averaged over the three RGB channels.

- TOPIQ: TOPIQ is a deep-learning-based image quality assessment method that utilizes multi-scale features and cross-scale attention mechanisms to evaluate image quality. By simulating the characteristics of the human visual system, TOPIQ leverages high-level semantic information to guide the network’s focus on semantically important local distortion regions, thereby assessing image quality. TOPIQ includes both Full-Reference (FR) and No-Reference (NR) forms. In this paper, we use them to evaluate the perceptual quality of the generated images. The specific details of TOPIQ can be found in [78].
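Three of these metrics reduce to short closed-form computations, sketched in NumPy below. These are illustrative sketches, not the reference implementations: `fid_from_features` expects precomputed features (Inception feature extraction is omitted) and evaluates the matrix square-root trace through the eigenvalues of Σ_g Σ_r; `ssim_global` evaluates the SSIM formula over one window spanning the whole grayscale image, whereas standard SSIM averages it over local Gaussian-weighted windows; and the constants c1 = (0.01·MAX)² and c2 = (0.03·MAX)² follow the commonly used defaults of [76]. LPIPS and TOPIQ depend on pretrained networks and are not reproduced.

```python
import numpy as np


def fid_from_features(feat_g, feat_r):
    """FID between two sets of (N, D) features, e.g. Inception activations."""
    mu_g, mu_r = feat_g.mean(axis=0), feat_r.mean(axis=0)
    sig_g = np.cov(feat_g, rowvar=False)
    sig_r = np.cov(feat_r, rowvar=False)
    diff = mu_g - mu_r
    # Tr((Sg Sr)^(1/2)) = sum of square roots of the eigenvalues of Sg Sr, which are
    # real and non-negative for covariance matrices (clip tiny round-off errors)
    eig = np.linalg.eigvals(sig_g @ sig_r)
    tr_sqrt = np.sqrt(np.clip(eig.real, 0.0, None)).sum()
    return float(diff @ diff + np.trace(sig_g) + np.trace(sig_r) - 2.0 * tr_sqrt)


def psnr(real, fake, max_val=255.0):
    # Mean over all H x W x 3 values = average of the per-channel MSEs
    mse = np.mean((real.astype(np.float64) - fake.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / mse))


def ssim_global(x, y, max_val=255.0):
    """Single-window SSIM over a whole grayscale image."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1, c2 = (0.01 * max_val) ** 2, (0.03 * max_val) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(ddof=1), y.var(ddof=1)
    cov = ((x - mx) * (y - my)).sum() / (x.size - 1)
    return float((2 * mx * my + c1) * (2 * cov + c2)
                 / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2)))
```

For identical feature sets the FID is zero up to round-off, and shifting every feature by a constant only adds the squared mean shift, since the covariance terms cancel.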

4.3. Baseline Methods

The proposed method is extensively compared with five advanced existing methods: ZITS [61], PAINT [56], MST [79], Albahar-MT [80], and EdgeConnect [81]. Since the method proposed by Albahar et al. is not explicitly named in [80], we refer to it as Albahar-method (Albahar-MT) in this paper. We retrained all baseline methods on each dataset, and for a fair comparison, their hyperparameters follow the default settings of the corresponding papers.

4.4. Implementation Details

In our experiments, training is conducted using a fixed learning rate of , with the Adam optimizer () to optimize the network parameters until the model converges. We initialized the loss weights in our experiments based on the settings in Refs. [56,61,79] as the weights in these papers performed well on similar tasks. To further optimize, we performed a grid search within a 50% range of the initial weights to ensure the selection of the most appropriate weight combination, ultimately determining the final weights. The loss weights in the experiments are set as , , and . Considering a balance between performance and computational cost, we set the number of FF-CDRB blocks in the FCAN as and the number of encoding and decoding blocks as . While deeper network architectures and additional context aggregation operations may lead to improved performance, they would also increase computational resource consumption.
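The weight search described above can be sketched as a simple grid generator. The paper’s initial weights and grid resolution are not given here, so the values in the usage example are placeholders, and `weight_grid` and its `steps` parameter are hypothetical; each candidate combination would then be used to train and evaluate a model.

```python
import itertools


def weight_grid(initial, rel_range=0.5, steps=3):
    """Candidate loss-weight combinations within +/- rel_range of each initial weight.

    initial: dict mapping loss name -> initial weight (placeholder values)
    steps:   hypothetical number of evenly spaced grid points per weight (>= 2)
    """
    if steps < 2:
        raise ValueError("steps must be at least 2")
    axes = {}
    for name, w0 in initial.items():
        lo, hi = w0 * (1 - rel_range), w0 * (1 + rel_range)
        axes[name] = [lo + i * (hi - lo) / (steps - 1) for i in range(steps)]
    names = list(axes)
    # Cartesian product over all loss weights
    return [dict(zip(names, combo))
            for combo in itertools.product(*(axes[n] for n in names))]
```

For example, `weight_grid({"pix": 1.0, "perc": 1.0, "style": 1.0})` yields 27 combinations with each weight drawn from {0.5, 1.0, 1.5}; the grid grows as `steps ** len(initial)`, which is why the search is restricted to a window around weights already known to work.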

Additionally, following [56,80], we crop randomly sized patches from the clothing areas to serve as texture patches. To ensure fairness, the same random seed is used across all experiments so that every method receives the same texture content.

FCAN was trained for 100 epochs on all three datasets, with a batch size of 16 on SKFashion and VITON-HD and a batch size of 8 on Fashion-Gen.

We adopted the texture patch size specified in [56], setting 60–70 pixels as the standard texture patch size. Based on this standard, the texture patch size was reduced by approximately 20–30% and 40–50% to represent medium-sized and small-sized texture patches, respectively. Extensive experiments were conducted under varying texture patch sizes to evaluate the performance of different generation methods under diverse conditions.
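The patch-size protocol can be sketched as follows. We read "reduced by approximately 20–30%" as shrinking the 60–70 pixel range to roughly 42–56 pixels (and 40–50% to roughly 30–42 pixels), interpret the size as the side length of a square patch, and require the patch to lie entirely inside the clothing mask; all three are assumptions, and `sample_texture_patch` is a hypothetical helper. The fixed seed mirrors the use of one random seed so that every method sees the same texture content.

```python
import numpy as np

# Side-length ranges in pixels (assumed interpretation of the text)
SIZE_RANGES = {
    "standard": (60, 70),
    "medium": (42, 56),   # 60 * 0.7 .. 70 * 0.8
    "small": (30, 42),    # 60 * 0.5 .. 70 * 0.6
}


def sample_texture_patch(cloth_mask, size="standard", seed=0, max_tries=1000):
    """Return (top, left, side) of a square patch fully inside the clothing mask,
    or None if no valid placement is found within max_tries attempts."""
    rng = np.random.default_rng(seed)   # fixed seed -> identical patches for all methods
    lo, hi = SIZE_RANGES[size]
    height, width = cloth_mask.shape
    for _ in range(max_tries):
        side = int(rng.integers(lo, hi + 1))           # inclusive upper bound
        if side > height or side > width:
            continue
        top = int(rng.integers(0, height - side + 1))
        left = int(rng.integers(0, width - side + 1))
        if cloth_mask[top:top + side, left:left + side].all():
            return top, left, side
    return None
```

Rejection sampling keeps the sketch simple; for thin garments with few valid placements, a larger `max_tries` or sampling the patch position from a distance transform of the mask would be more efficient.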

The primary software environment used in the experiments is Python 3.8 (Ubuntu 20.04) and PyTorch 2.0.0. The main hardware environment consists of a single RTX 4090 (24 GB) GPU, Xeon(R) Platinum 8352 V CPU, and 120 GB of memory.

4.5. Comparison with Existing Approaches

4.5.1. Quantitative Comparison

Table 1, Table 2 and Table 3 report the quantitative comparison between our method and existing approaches. As discussed in [77], although PSNR and SSIM effectively measure pixel-level image quality, their outcomes may sometimes contradict human perceptual judgments; for instance, a blurry result that humans judge as poor can still receive favorable PSNR and SSIM scores. Because garment image generation places greater emphasis on human perceptual judgment, our quantitative experiments prioritize perceptual quality metrics such as FID, LPIPS, and TOPIQ, and use PSNR and SSIM as supplementary references for pixel-level image quality.

Table 1.

Quantitative experimental results on the SKFashion dataset.

Table 2.

Quantitative experimental results on the VITON-HD dataset.

Table 3.

Quantitative experimental results on the Fashion-Gen dataset.

Table 1 presents the results of the quantitative comparison experiments conducted on the SKFashion dataset. The results show that, under different texture patch size conditions, our method outperforms the baseline methods in terms of FID and LPIPS metrics while achieving comparable results in PSNR and SSIM. This indicates that the garment images generated by our method exhibit superior perceptual quality and also perform well in terms of pixel-level quality.

As the texture patch size decreases, all methods show some degree of performance fluctuation. Among them, PAINT [56], which models context based on a single texture patch, is the least robust to changes in texture patch size and shows the largest metric fluctuations. Apart from our method, ZITS [61] is the most robust to texture patch size variation, which can be attributed to the positional encoding it introduces to improve performance with small texture patches.

Additionally, as shown in Table 4, our approach achieves this superior performance with only approximately one-third to one-half of the parameters required by the baseline methods, underscoring its efficiency and lightweight design.

Table 4.

Comparison of the model parameters.

Table 2 presents the quantitative comparison results on the VITON-HD dataset. Our proposed FCAN achieves the best performance across all evaluation metrics. Notably, the VITON-HD dataset provides approximately 40% less training data than SKFashion, which makes the evaluation results more sensitive to the texture patch size. With small texture patches, only our FCAN and ZITS maintain consistently high performance, whereas the performance of the other baseline methods fluctuates significantly.

Table 3 presents the quantitative comparison results on the Fashion-Gen dataset, showing that our method achieves the best performance across all metrics, further validating the effectiveness of our approach. Among all the baseline methods, MST [79] achieves performance metrics closest to FCAN under both standard and medium texture patch sizes. However, under small texture patch sizes, the performance of MST [79] deteriorates significantly, indicating that it lacks robustness to texture patch size and struggles to adapt to the varying requirements of different practical tasks.

Note that the clothing occupies a smaller proportion of each image in Fashion-Gen than in SKFashion and VITON-HD, and the dataset contains fewer clothing categories with simpler garment structures. As a result, with standard and medium-sized texture patches, the SSIM scores of all methods are relatively close. Moreover, although the VITON-HD and Fashion-Gen datasets are only about half the size of SKFashion, our method still maintains robust performance on both. This suggests that, compared with the baseline methods, our approach is more robust to the scale of the training data, which supports its reliability.

Table 4 compares the parameter counts of all methods and shows that our approach has the fewest parameters. Together with the quantitative results, this demonstrates that our method uses its parameters efficiently, achieving the best performance among the compared methods with the smallest model.

4.5.2. Qualitative Comparison

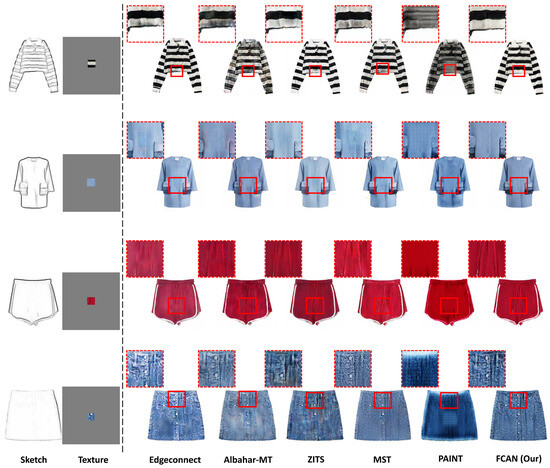

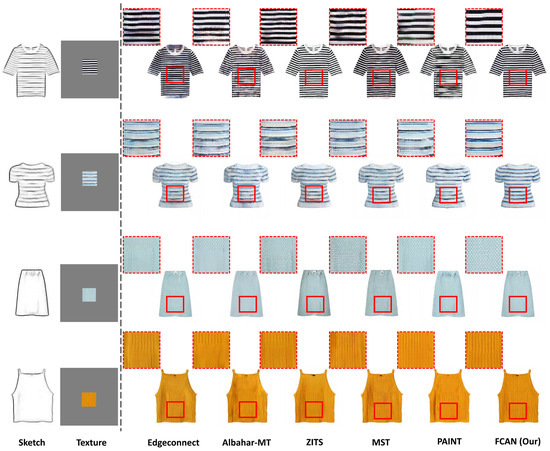

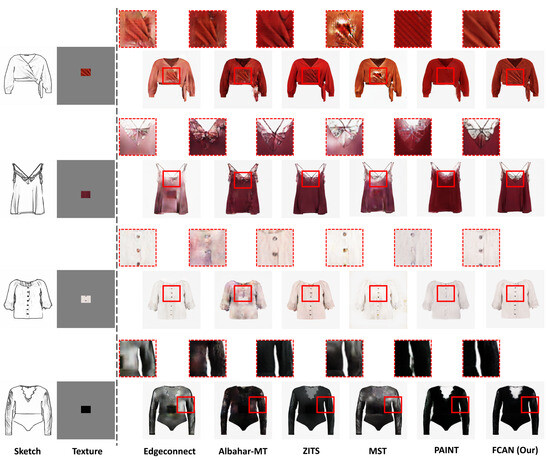

Figure 12, Figure 13 and Figure 14 present the qualitative comparison results on the SKFashion dataset. To facilitate comparison, we zoom in on selected details; note that the annotated details are not the only noticeable differences, and we recommend zooming in on the images for a better overall comparison. The qualitative comparison results on VITON-HD and Fashion-Gen are presented in the appendix.

Figure 12.

Qualitative comparison results under small texture patch sizes on the SKFashion dataset.

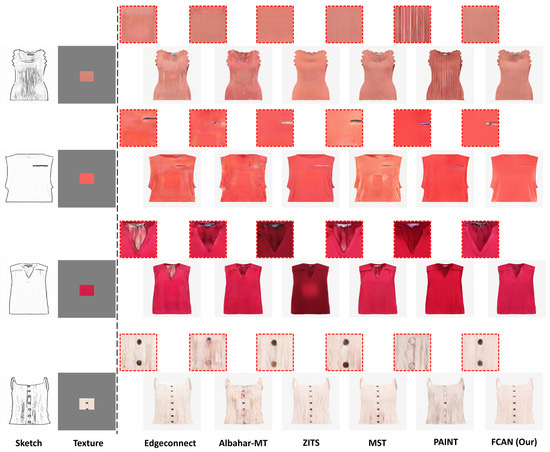

Figure 13.

Qualitative comparison results under medium texture patch sizes on the SKFashion dataset.

Figure 14.

Qualitative comparison results under standard texture patch sizes on the SKFashion dataset.

As shown in Figure 12, under small texture patch sizes, the images generated by our method exhibit significant advantages over the baseline methods in terms of texture details, semantic consistency, and other aspects. The images generated by the baseline methods suffer from issues such as style leakage (e.g., black-and-white texture spillover on the striped top in the first row), color mismatches and artifacts (e.g., color discrepancies and meaningless artifacts on the blue coat in the second row and the denim skirt in the fourth row), as well as structural and semantic loss (e.g., missing waistband on the red sports shorts in the third row). These issues indicate that the baseline methods perform poorly in integrating guidance information and content inference.

As shown in Figure 13, as the texture patch size increases, the quality of the images generated by all methods improves to some extent. However, when generating densely repetitive textures and large-scale texture propagation, the baseline methods still encounter issues such as texture detail loss (e.g., texture propagation failure on the yellow knit vest in the first row of Figure 13) and inconsistency in dense texture content (e.g., loss of dense white dots on the green and white patterned top in the third row). Furthermore, the clothing images generated by the baseline methods still exhibit artifacts and color discrepancies (e.g., in the second and fourth rows).

Figure 14 presents the qualitative comparison results under the standard texture patch size condition on the SKFashion dataset. As the texture patch size increases, the quality of the generated images improves across all methods. However, the baseline methods still struggle when generating dense texture content, exhibiting issues such as texture loss or overflow.

Leveraging the advancements in content reasoning and contextual modeling introduced by ACAM and FF-CDRB, our method seamlessly integrates the structural semantics of design sketches with the stylistic content of texture patches, thereby establishing coherent and consistent global contextual relationships. As a result, our method maintains stable performance across all three texture patch sizes. The generated images demonstrate significant advantages over the baseline methods in terms of color consistency, texture details, and structural-semantic alignment while also showing robust performance with respect to variations in texture patch size.

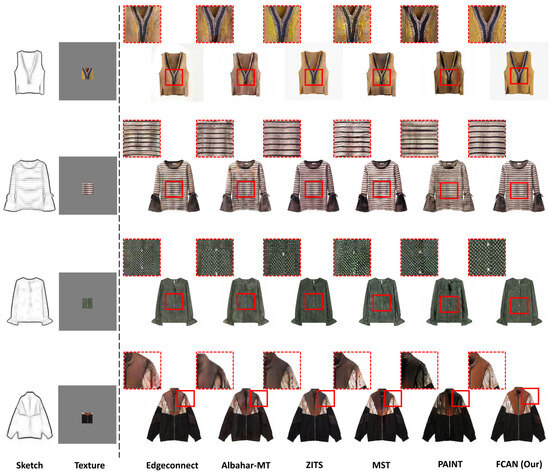

Figure A1, Figure A2, Figure A3, Figure A4, Figure A5 and Figure A6 in the appendix present the experimental results on the VITON-HD and Fashion-Gen datasets. The results demonstrate that our method achieves excellent performance on both datasets. Even with a significantly reduced training dataset, our approach can still generate high-quality clothing images, guided by sketches and texture patches.

Extensive comparative experiments conducted across the three datasets show that our method offers significant advantages in terms of objective performance metrics, visual quality of the generated images, and model size.

4.5.3. Ablation Studies

We conducted extensive ablation experiments to assess the importance of each component of our method and its contribution to performance.

Table 5, Table 6 and Table 7 present the results of the ablation experiments on the three datasets. Specifically, “w/o Dual residual” indicates the removal of the dual-path residual connections in FF-CDRB, “w/o ACAM” means the removal of ACAM, “w/o Gating” refers to the removal of the adaptive gating mechanism in ACAM, “ResNet+ACAM” denotes replacing FF-CDRB with standard ResNet residual blocks in the content inference module of FCAN, and “Skip Connection” denotes replacing ACAM with conventional skip connections.

Table 5.

Ablation experiment results on the SKFashion dataset.

Table 6.

Ablation experiment results on the VITON-HD dataset.

Table 7.

Ablation experiment results on the Fashion-Gen dataset.

FF-CDRB: The ablation results show that FF-CDRB improves the FID, LPIPS, and TOPIQ scores of the generated images, confirming its effectiveness for content inference. As noted in the qualitative comparisons, the clothing structures in the Fashion-Gen dataset are relatively simple and the clothing regions occupy a small part of each image, so the SSIM scores of the ablated variants on Fashion-Gen differ only slightly.
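To illustrate why image-level SSIM changes little when the clothing occupies only a small part of the frame, consider the following toy sketch. It assumes a simplified SSIM averaged over non-overlapping 8 × 8 windows (the standard formulation uses an 11 × 11 Gaussian window), synthetic random images instead of real data, and that every model variant reproduces the background exactly; `block_ssim` is a hypothetical helper written only for this illustration.

```python
import numpy as np


def block_ssim(x: np.ndarray, y: np.ndarray, block: int = 8,
               data_range: float = 1.0) -> float:
    """Mean SSIM over non-overlapping block x block windows.

    Simplification (assumption): uniform, non-overlapping windows instead of
    the sliding Gaussian window used by the standard SSIM definition.
    """
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    h, w = x.shape
    scores = []
    for i in range(0, h - block + 1, block):
        for j in range(0, w - block + 1, block):
            a = x[i:i + block, j:j + block]
            b = y[i:i + block, j:j + block]
            mu_a, mu_b = a.mean(), b.mean()
            cov = ((a - mu_a) * (b - mu_b)).mean()
            num = (2 * mu_a * mu_b + c1) * (2 * cov + c2)
            den = (mu_a ** 2 + mu_b ** 2 + c1) * (a.var() + b.var() + c2)
            scores.append(num / den)
    return float(np.mean(scores))


rng = np.random.default_rng(0)
ref = rng.random((128, 128))                      # synthetic "ground truth"
noise = 0.3 * rng.standard_normal((128, 128))     # synthetic generation error

# Errors confined to the garment region; the background is left untouched.
small = ref.copy()
small[48:80, 48:80] += noise[48:80, 48:80]        # garment covers 1/16 of the frame
large = ref.copy()
large[16:112, 16:112] += noise[16:112, 16:112]    # garment covers 9/16 of the frame

s_small, s_large = block_ssim(ref, small), block_ssim(ref, large)
print(f"small garment: {s_small:.3f}, large garment: {s_large:.3f}")
```

Windows that are unchanged score (essentially) 1, so when the garment is small the mean is dominated by the background, which compresses the SSIM differences between variants.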

Table 8 compares the parameter counts of the model variants after each component is ablated. Compared with conventional convolution-based residual blocks, FF-CDRB not only improves performance but also reduces the number of model parameters, yielding more efficient and lightweight content inference.
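The parameter saving can be illustrated with simple arithmetic. The sketch below assumes, hypothetically, an FFC-style Fourier unit in which the real and imaginary parts of the 2-D real FFT are stacked into 2C channels and mixed by a single 1 × 1 convolution, and compares it with the two 3 × 3 convolutions of a standard ResNet basic block. The exact layer configuration of FF-CDRB is not reproduced here, so the numbers are illustrative and are not those of Table 8.

```python
def conv2d_params(c_in: int, c_out: int, k: int, bias: bool = True) -> int:
    """Learnable parameters of a standard k x k 2-D convolution."""
    return c_in * c_out * k * k + (c_out if bias else 0)


def resnet_basic_block_params(c: int) -> int:
    """Two 3x3 convolutions with c channels (normalization layers ignored)."""
    return 2 * conv2d_params(c, c, 3)


def fourier_unit_params(c: int) -> int:
    """Hypothetical FFC-style Fourier unit: real and imaginary spectra are
    stacked into 2c channels and mixed by one 1x1 convolution in the
    frequency domain (not the exact FF-CDRB configuration)."""
    return conv2d_params(2 * c, 2 * c, 1)


if __name__ == "__main__":
    for c in (64, 128, 256):
        print(f"C={c}: ResNet block {resnet_basic_block_params(c):,}, "
              f"Fourier unit {fourier_unit_params(c):,}")
```

A 3 × 3 spatial convolution costs about 9C² weights, whereas the frequency-domain 1 × 1 convolution costs about 4C²; after the inverse FFT, every output pixel depends on all input positions, so the cheaper layer also has an image-wide receptive field.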

Table 8.

Comparison of parameter counts after ablation.

ACAM: The results confirm the effectiveness of ACAM for context modeling. ACAM substantially improves our method across the evaluated metrics, and the more comprehensive context modeling it provides yields a notable gain in the perceptual quality of the generated images. Moreover, the ablation results show that ACAM's ability to aggregate global information becomes more important as the texture patch size decreases, indicating that it improves the model's robustness when only very small texture patches are available. Table 8 further shows that ACAM improves performance without adding a significant number of parameters, because it builds global context attention from the heterogeneous features produced after content inference and aggregates context explicitly.
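The low parameter overhead of explicit context aggregation can be seen in a minimal NumPy sketch. Because this section does not detail ACAM's layers, the code below is a generic gated global-context block in the spirit of the global context module of GCNet, not ACAM itself. The roles of `query_feat` (a feature after content inference) and `context_feat` (a feature from another stage or scale, resized to the same shape), as well as all weight names, are hypothetical.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max()  # numerical stability
    e = np.exp(z)
    return e / e.sum()


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def gated_context_aggregation(query_feat, context_feat, w_key, w_val, w_gate):
    """Hypothetical gated global-context aggregation over two feature maps.

    query_feat, context_feat: (C, H, W) arrays
    w_key:  (C,)     1x1 projection giving one attention logit per position
    w_val:  (C, C)   1x1 transform applied to the pooled context vector
    w_gate: (C, 2C)  per-channel gate computed from [query mean, context vector]
    """
    c, h, w = query_feat.shape
    ctx = context_feat.reshape(c, h * w)
    attn = softmax(w_key @ ctx)          # (H*W,) attention over all positions
    pooled = ctx @ attn                  # (C,) attention-weighted global pooling
    transformed = w_val @ pooled         # (C,)
    q_mean = query_feat.reshape(c, h * w).mean(axis=1)
    gate = sigmoid(w_gate @ np.concatenate([q_mean, transformed]))  # (C,) in (0, 1)
    # Broadcast the gated context vector to every position (residual fusion).
    return query_feat + (gate * transformed)[:, None, None]


def num_params(c: int) -> int:
    """Learnable weights in this sketch: C + C^2 + 2C^2, independent of H and W."""
    return c + c * c + 2 * c * c


rng = np.random.default_rng(0)
C, H, W = 64, 32, 32
q = rng.standard_normal((C, H, W))
k = rng.standard_normal((C, H, W))
out = gated_context_aggregation(
    q, k,
    w_key=rng.standard_normal(C) / np.sqrt(C),
    w_val=rng.standard_normal((C, C)) / np.sqrt(C),
    w_gate=rng.standard_normal((C, 2 * C)) / np.sqrt(2 * C),
)
print(out.shape, num_params(C))
```

All learnable weights act on C-dimensional vectors, so the parameter count (3C² + C in this sketch) does not grow with spatial resolution, and the attention costs O(C·HW) operations rather than the O((HW)²) of full self-attention.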

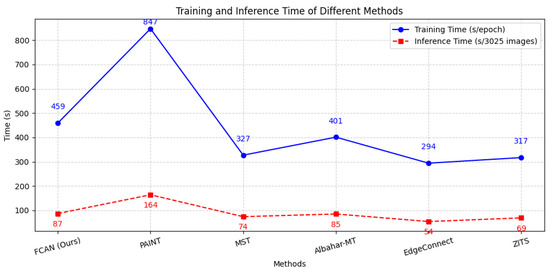

4.5.4. Time Performance

We evaluated the training and inference time of the proposed FCAN on the SKFashion dataset and compared it with that of the baseline methods. The training and inference time costs are shown in Figure 15.

Figure 15.

Time evaluation and comparison.

The training and inference time of the proposed FCAN is on par with that of the baseline methods and, in some cases, slightly higher. Given the performance gains, we consider this slight increase in time cost acceptable. In future work, we will continue to optimize the model structure and hyperparameter settings to achieve comparable results at lower cost.
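This section does not describe the timing protocol behind Figure 15. As a hedged sketch of one common way to measure per-image inference time, the helper below discards warm-up calls and averages wall-clock time over several passes; `measure_latency` and the stand-in `dummy_generator` are hypothetical and are not part of FCAN.

```python
import statistics
import time
from typing import Any, Callable, Dict, Sequence


def measure_latency(fn: Callable[[Any], Any], inputs: Sequence[Any],
                    warmup: int = 3, repeats: int = 5) -> Dict[str, float]:
    """Mean and standard deviation (seconds) of per-input wall-clock time."""
    if not inputs:
        raise ValueError("inputs must not be empty")
    for x in inputs[:warmup]:
        fn(x)  # warm-up calls (caches, lazy initialization) are not timed
    per_input = []
    for _ in range(repeats):
        start = time.perf_counter()
        for x in inputs:
            fn(x)
        per_input.append((time.perf_counter() - start) / len(inputs))
    stdev = statistics.stdev(per_input) if len(per_input) > 1 else 0.0
    return {"mean_s": statistics.mean(per_input), "stdev_s": stdev}


def dummy_generator(n: int) -> int:
    """Stand-in workload; a real benchmark would call the trained generator."""
    return sum(i * i for i in range(n))


stats = measure_latency(dummy_generator, [10_000] * 8)
print(f"{stats['mean_s'] * 1e3:.3f} ms per input")
```

When the model runs on a GPU with PyTorch, `torch.cuda.synchronize()` should be called before each clock read, because CUDA kernels launch asynchronously and the timer would otherwise stop before the computation has finished.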

5. Conclusions

This paper proposes a novel clothing image generation method driven by texture patches and design sketches and reviews related techniques, methods, and the current state of research in this field. Against the background of the limited controllability and poor image quality of existing methods, we highlight the advantages of our approach.

Because our method is driven by texture patches and design sketches, designers retain direct control over the generated content, which underpins its practical value. To address the poor image quality reported in related research, we also introduce the ACAM and FF-CDRB components, which improve our model's performance in multiple respects. In particular, given the importance of contextual relationship modeling in computer vision, we believe that the heterogeneous feature modeling approach of ACAM can offer useful insights to the research community.

We compared our method with five advanced baseline methods under different texture patch sizes. In the quantitative experiments on the SKFashion dataset, our method achieved the best FID, PSNR, LPIPS, and TOPIQ scores under the standard, medium, and small texture patch conditions, with comparable SSIM and TOPIQ-NR results. Under these three conditions, FID improved by approximately 23.2%, 22.4%, and 19.7%, respectively, over the second-best method, and LPIPS improved by about 13.5%, 11.8%, and 12.8%. On the publicly available Fashion-Gen and VITON-HD datasets, our method improved metrics such as FID, LPIPS, and PSNR by approximately 5–18% over the second-best methods. Extensive ablation experiments further demonstrated the effectiveness of each component's design.
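Relative improvements of this kind can be computed from per-metric scores once a convention is fixed. The section does not state the formula, so the sketch below assumes the common choice of expressing the gain as a fraction of the runner-up's score, with the sign flipped for lower-is-better metrics such as FID and LPIPS. The scores in the example are hypothetical and are not values from the tables in this paper.

```python
def relative_improvement(ours: float, runner_up: float, lower_is_better: bool) -> float:
    """Gain of `ours` over the runner-up, as a fraction of the runner-up's score.

    Assumed convention: positive means better, whatever the metric direction.
    """
    if runner_up == 0:
        raise ValueError("runner-up score must be non-zero")
    gain = runner_up - ours if lower_is_better else ours - runner_up
    return gain / abs(runner_up)


# Hypothetical scores for illustration only.
fid_gain = relative_improvement(ours=15.36, runner_up=20.0, lower_is_better=True)
psnr_gain = relative_improvement(ours=26.0, runner_up=25.0, lower_is_better=False)
print(f"FID: {fid_gain:.1%}, PSNR: {psnr_gain:.1%}")
```

Under this convention, a 23.2% FID improvement means that the proposed method's FID is 76.8% of the runner-up's FID.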

In addition, extensive qualitative comparisons showed that our method generates high-quality clothing images under various texture patch conditions and outperforms the baseline methods in semantic consistency and fine-grained detail. Notably, it achieves these gains while remaining parameter-efficient, with the fewest parameters among all tested methods, which we believe makes it well suited to local deployment on a range of devices. These results highlight the progress our method makes in controllable clothing image synthesis, and we expect it to offer useful insights for research in related fields.

In summary, our method offers an innovative solution for various tasks in fashion design and related fields and can reduce designers' workload during early prototyping. It may also help transform production methods in the fashion industry and support its sustainable development.

6. Discussion

Although our method has shown promising advancements in controllability and the quality of generated images, there are still several factors worth discussing regarding both the strengths and limitations of our approach.

One of the key advantages of our method is its fine-grained control over generated clothing images, a significant improvement over traditional methods. By using texture patches and design sketches as guidance, we achieve a high degree of customization while keeping the generated content consistent. This level of control is particularly important in practical fashion design, where semantic coherence and adherence to design specifications are crucial. However, this enhanced controllability can, in some cases, limit the model's generative capabilities. Specifically, although our method excels in controlled scenarios, it may be less effective at generating highly diverse clothing styles than methods based on pre-trained generative models, which are designed to produce a wider range of outputs. This limitation highlights the challenge of balancing control and diversity in generative models.

Another key contribution of our work is the ACAM component, which plays a crucial role in improving the model's performance. ACAM focuses on heterogeneous feature modeling, which helps establish contextual relationships in the generated images and leads to more realistic and semantically consistent clothing representations. Such methods are a focal point of research in this field because contextual modeling is often critical in computer vision tasks. Despite these advantages, ACAM still faces challenges. In certain cases, especially when the design sketch lacks detail or the clothing features are complex, the model may struggle to generate accurate, realistic textures and patterns, which suggests that ACAM needs further improvement to handle these more challenging scenarios.

Time cost is also a key concern for generative models. Currently, our method's training and inference times are on par with those of existing methods and, in some cases, slightly higher. Although we consider a modest increase in time cost acceptable in exchange for significant performance gains, it could still constrain the future development of the model. Therefore, substantially reducing time cost while improving performance remains a direction worth exploring.

In summary, our method leaves considerable room for future improvement. Future research will focus on, but is not limited to, the following areas:

- Optimizing the acquisition process of sketch and texture data to enhance the practical value of the method;

- Improving its synthesis performance under more extreme guidance conditions to further broaden its applicability;

- Improving time efficiency while maintaining or enhancing performance;

- Exploring cross-applications with related tasks such as virtual try-on, clothing retrieval, and recommendation systems to expand its use cases.

Moving forward, we will continue to investigate clothing image synthesis technologies based on artificial intelligence, building upon these aspects.

Author Contributions

Conceptualization, H.L. and Y.H.; methodology, H.L. and Y.H.; software, Y.H.; validation, Y.H., M.D., and Z.L.; formal analysis, G.L.; investigation, Y.H.; resources, H.L.; data curation, Y.H.; writing—original draft preparation, Y.H.; writing—review and editing, H.L.; visualization, H.L.; supervision, H.L.; project administration, M.W.; funding acquisition, H.L. and G.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research is jointly supported by the Natural Science Foundation of Jiangxi Province (20224BAB202018), National Natural Science Foundation of China (62462032, 62266023), Key Research and Development Program of Jiangxi Province (20223BBE51039, 20232BBE50020), and Science Fund for Distinguished Young Scholars of Jiangxi Province (20232ACB212007).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The VITON-HD and Fashion-Gen datasets used in this study are available at https://github.com/shadow2496/VITON-HD (accessed on 1 October 2024) and https://fashion-gen.com (accessed on 1 October 2024), respectively. Because related research is still ongoing, our self-constructed SKFashion dataset is temporarily not publicly available. Further inquiries can be directed to the first or second author.

Acknowledgments

This research was supported by multiple projects, including the National Natural Science Foundation of China, which covered experimental equipment, office supplies, and other related expenses.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

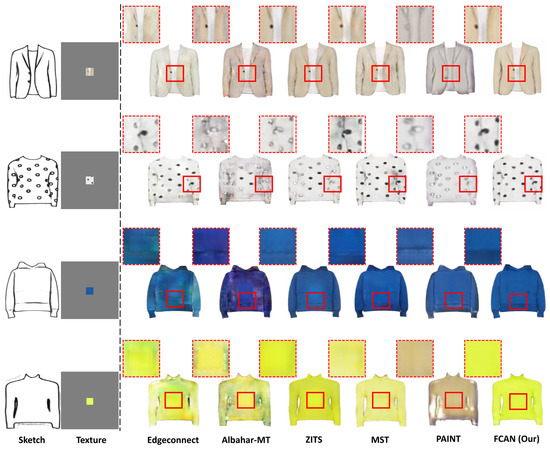

Figure A1.

Qualitative comparison results under small texture patch sizes on the VITON-HD dataset.

The qualitative comparison results on the VITON-HD dataset are shown in Figure A1, Figure A2 and Figure A3. On this dataset, the baseline methods exhibit significant color discrepancies and artifacts under both small and medium texture patch sizes, whereas our method generates clothing images with clearly better visual quality. When the texture patch size increases to the standard size, the baseline methods lose detail when handling complex, dense textures (as seen in the first and third rows of Figure A3, where dense textures fail to propagate properly to the clothing area). In contrast, our method handles dense texture content effectively.

Figure A2.

Qualitative comparison results under medium texture patch sizes on the VITON-HD dataset.

Figure A3.

Qualitative comparison results under standard texture patch sizes on the VITON-HD dataset.

Figure A4, Figure A5 and Figure A6 present the qualitative comparison results on the Fashion-Gen dataset. Similar to the VITON-HD dataset, the clothing structures in the Fashion-Gen dataset are relatively simple, and the clothing images generated by all methods maintain consistent structural semantics with the design sketches. However, on the Fashion-Gen dataset, the baseline methods still exhibit significant issues with color discrepancies, artifacts, and loss of texture details. In contrast, our method demonstrates stable performance on the Fashion-Gen dataset, producing clothing images with the best visual quality under all three texture patch sizes.

Figure A4.

Qualitative comparison results under small texture patch sizes on the Fashion-Gen dataset.

Figure A5.

Qualitative comparison results under medium texture patch sizes on the Fashion-Gen dataset.

Figure A6.

Qualitative comparison results under standard texture patch sizes on the Fashion-Gen dataset.

References

- Guo, Z.; Zhu, Z.; Li, Y.; Chen, S.C.H.; Wang, G. AI Assisted Fashion Design: A Review. IEEE Access 2023, 11, 88403–88415.

- Oh, J.; Ha, K.J.; Jo, Y.H. A Predictive Model of Seasonal Clothing Demand with Weather Factors. Asia-Pac. J. Atmos. Sci. 2022, 58, 667–678.

- Liu, L.; Zhang, H.; Li, Q.; Ma, J.; Zhang, Z. Collocated Clothing Synthesis with GANs Aided by Textual Information: A Multi-Modal Framework. ACM Trans. Multimedia Comput. Commun. Appl. 2024, 20, 25.

- Lampe, A.; Stopar, J.; Jain, D.K.; Omachi, S.; Peer, P.; Štruc, V. DiCTI: Diffusion-based Clothing Designer via Text-guided Input. In Proceedings of the 2024 IEEE 18th International Conference on Automatic Face and Gesture Recognition (FG), Istanbul, Turkiye, 27–31 May 2024; pp. 1–9.

- Sun, Z.; Zhou, Y.; He, H.; Mok, P.Y. SGDiff: A Style Guided Diffusion Model for Fashion Synthesis. In Proceedings of the 31st ACM International Conference on Multimedia (MM ’23), Association for Computing Machinery, New York, NY, USA, 29 October–3 November 2023; pp. 8433–8442.

- Cao, S.; Chai, W.; Hao, S.; Zhang, Y.; Chen, H.; Wang, G. DiffFashion: Reference-Based Fashion Design With Structure-Aware Transfer by Diffusion Models. IEEE Trans. Multimed. 2024, 26, 3962–3975.

- Kim, B.-K.; Kim, G.; Lee, S.-Y. Style-controlled synthesis of clothing segments for fashion image manipulation. IEEE Trans. Multimed. 2020, 22, 298–310.

- Jiang, S.; Li, J.; Fu, Y. Deep Learning for Fashion Style Generation. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 4538–4550.

- Wu, D.; Yu, Z.; Ma, N.; Jiang, J.; Wang, Y.; Zhou, G.; Deng, H.; Li, Y. StyleMe: Towards Intelligent Fashion Generation with Designer Style. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (CHI ’23), Association for Computing Machinery, New York, NY, USA, 23–28 April 2023; pp. 1–16.