Abstract

In product design and manufacturing, the effective management and representation of system requirements (SRs) are crucial for ensuring product quality and consistency. However, current methods are hindered by document ambiguity, weak requirement interdependencies, and limited semantic expressiveness in model-based systems engineering. To address these challenges, this paper proposes a prompt-driven integrated framework that combines large language models (LLMs) and knowledge graphs (KGs) to automate structured knowledge extraction from SR text and its visualization. Specifically, this paper introduces an information extraction template applicable to arbitrary requirement documents, designed around five SysML-defined SR categories: functional requirements, interface requirements, performance requirements, physical requirements, and design constraints. Structured elements are defined for each category, and the GPT-4 model is used to extract the corresponding key information from unstructured text and present it in structured form. The system then constructs a knowledge graph that represents the system requirements and visually illustrates the interdependencies and constraints between them. A case study applying this approach to Chapters 18–22 of the ‘Code for Design of Metro’ demonstrates the effectiveness of the proposed method in automating requirement representation, enhancing requirement traceability, and improving requirement management. Moreover, a comparison of information extraction accuracy among GPT-4, GPT-3.5-turbo, BERT, and RoBERTa on the same dataset shows that GPT-4 achieves an overall extraction accuracy of 84.76%, compared with 79.05% for GPT-3.5-turbo and 59.05% for both BERT and RoBERTa. These results support the effectiveness of the proposed method in information extraction and suggest a new technical pathway for intelligent requirement management.

1. Introduction

Existing requirement management primarily relies on document-based formats [1], which, while facilitating communication and understanding, suffer from the inherent ambiguity and vagueness of natural language [2]. This often leads to inconsistencies in interpretation [3], poor information interrelation [4], and increased management complexity [5]. Especially when dealing with large and intricate requirement documents, traditional manual management approaches are prone to information loss and misinterpretation, adversely affecting both the efficiency and quality of the design process [6]. Furthermore, the lack of an effective traceability mechanism complicates the retrieval of historical change information, exacerbating communication barriers between requirements and design [7].

To address the aforementioned challenges, the Model-Based Systems Engineering (MBSE) approach, particularly the application of the Systems Modeling Language (SysML), provides theoretical support for the standardization and structuring of requirements. SysML defines five major categories of system requirements—functional requirements, interface requirements, performance requirements, physical requirements, and design constraints [8]—offering a structured framework that effectively supports requirements management [9], traceability [7], and verification [10]. However, the creation of SysML models still heavily relies on the manual interpretation and processing of natural language requirements by designers, which not only demands substantial time investment, but also requires designers to possess deep domain knowledge [11]. When faced with large volumes of complex requirement documents, maintaining a consistent understanding of the document contents remains a significant challenge, further limiting the application of SysML in efficient requirement management [12]. Additionally, SysML faces limitations in the semantic representation of requirements, making it difficult to effectively capture the implicit relationships between requirements in complex systems, thus hindering its effectiveness in managing complex requirement relationships [13].

To bridge this gap, AI-driven approaches have emerged as promising solutions. Large Language Models (LLMs), leveraging their robust knowledge repositories and exceptional contextual comprehension capabilities, provide efficient and precise technical support for extracting critical information from unstructured requirement documents [14]. Using prompt engineering, designers can construct task-specific prompts to further optimize a model’s recognition and extraction efficiency of essential requirement components, significantly enhancing data processing accuracy and consistency [15,16,17]. This foundational capability positions LLMs as pivotal enablers for knowledge graph construction. Integrated with graph representation techniques [18], LLMs systematically analyze entity relationships and dependency patterns within requirement texts, transforming unstructured textual information into multi-relational tuples [19]. This transformation facilitates the establishment of structured semantic models for requirement networks in complex systems by using knowledge graph formalization.

To address the issues discussed above, this paper proposes a prompt-engineering-based system for constructing requirement representation knowledge graphs, which automatically converts system requirements (SRs) from requirement documents into a visualized knowledge graph structure. To process requirement documents effectively, the system adopts SysML’s standardized, structured definitions of requirements and categorizes them into five major types: functional requirements, interface requirements, performance requirements, physical requirements, and design constraints. A general information extraction template is designed for each category, clearly defining the key information elements in the requirement documents, such as subjects, objects, and actions. These templates form the basis of the prompts that guide the large language model in the information extraction task, converting unstructured text into structured data comprising nodes (subject, attribute, object), edges (action), and textual elements (function, value, principle, condition). A domain-specific requirement representation knowledge graph is then constructed from the extracted structured data, systematically presenting the semantic relationships and hierarchical connections between the requirements.

In summary, our contributions are as follows:

- (1) Design of an SR information extraction template: A general information extraction template is proposed that, through the explicit definition of structured elements, adapts to information extraction tasks for requirement documents with varying design attribute demands. The five types of requirement labels, based on SysML requirement definitions, comprehensively cover the elements of system design, ensuring that all types of requirement information are extracted effectively. The proposed template demonstrates strong versatility, supporting requirement change management and ensuring the consistency and traceability of requirement documents throughout their lifecycle.

- (2) SR representation knowledge graph: An SR representation knowledge graph is constructed that illustrates the dependencies and constraints between requirements in graphical form. The visualization, implemented using the Pyvis tool (version 0.3.2), not only provides an intuitive display of the semantic relationships among requirements, but also supports the dynamic management and traceability of requirements. The SR representation knowledge graph provides a foundation for the requirement analysis of other specific systems within the field, facilitating the in-depth exploration and management of their requirements.

2. Related Work

Knowledge graph construction refers to the process of extracting key information from documents and systematically presenting knowledge resources and their interrelationships using intelligent processing and visualization techniques [20]. Among these, information extraction is the core component of knowledge graph construction. Efficient information extraction technologies can accurately convert unstructured data into structured forms, providing strong support for subsequent graph representation [21]. Commonly used information extraction techniques include rule-based approaches, as well as machine learning and deep learning methods [22].

Wang et al. [23] proposed a novel information extraction method called QuantityIE, which utilizes syntactic features from constituent parsing and dependency parsing to establish rules for structuring numerical information. The method also employs a QuantityIE-based numerical information retrieval approach to answer related questions, demonstrating its effectiveness. Chen et al. [24] introduced a structure–function knowledge extraction method based on AskNature, which combines keyword extraction with dependency parsing rules. This method was applied to fan noise reduction design, showcasing its potential in extracting structure–function knowledge. Wang et al. [25], addressing the challenges of managing and querying large-scale heterogeneous book data from multiple sources, constructed extraction rules based on the semi-structured characteristics of the textual data. Their practical application revealed significant improvements in management and query efficiency compared to traditional methods. However, rules are typically written manually based on domain-specific features, and as the dataset grows in size and complexity, writing and maintaining these rules becomes exceedingly difficult [26]. Additionally, due to the inherent ambiguity and polysemy of natural language, rule-based information extraction methods face considerable limitations when handling complex linguistic phenomena [27].

Several studies have begun utilizing machine learning/deep learning approaches for information extraction from text data to address the aforementioned issues. Zhang et al. [28] proposed a deep neural network model combining Long Short-Term Memory (LSTM) networks with Conditional Random Fields (CRF) for extracting semantic and syntactic information from regulatory documents. The model demonstrated superior performance in evaluation metrics such as accuracy, recall, and F1 score. Liu et al. [29] introduced a deep neural network model called BBMC which integrates BERT, BiLSTM, a multi-head attention mechanism (MHATT), and CRF to extract semantic and syntactic information from biomedical texts. Experiments showed that this model excelled in accuracy, recall, and F1 score, with particular advantages in recognizing low-frequency entities, polysemy, and abbreviations. These methods partially alleviate issues such as the large volume of requirement documents and frequent changes in requirements. However, their application still faces limitations. For instance, the construction of these models relies on extensive and labor-intensive data annotation, which demands high-quality annotations, and the model training process is time-consuming [30]. Furthermore, the effectiveness of these models in extracting complex texts [31] and nested entities remains an area for improvement [32].

In recent years, large language models (LLMs), built on the Transformer architecture with billions of parameters [33], have demonstrated remarkable potential in natural language processing tasks such as natural language understanding; extensive pretraining enables them to perform such tasks without task-specific training [34]. Wang et al. [35] compared the performance of GPT-4 and ChemDataExtractor in extracting bandgap information in materials science. The evaluation results showed that GPT-4 significantly outperformed the rule-based method in accuracy (87.95% vs. 51.08%) and excelled in complex name recognition and information parsing. Enhanced prompt design further improved GPT-4’s accuracy, providing substantial support for the application of LLMs in domain-specific information extraction. Tang et al. [36] investigated the impact of prompt engineering on GPT-3.5 and GPT-4.0 in medical information extraction, employing role-setting, chain-of-thought, and few-shot prompting strategies. The results showed that GPT-4.0 achieved an average accuracy ranging from 0.688 to 0.964 under the optimal prompt strategy, with the best performance observed in sample extraction (0.964). The study concluded that prompt engineering significantly enhanced the accuracy of GPT models in complex information extraction tasks.

Large language models (LLMs) have demonstrated formidable capabilities in information extraction, requiring neither extensive data annotation nor a high level of domain expertise from users due to their rich knowledge base [37,38,39]. However, their information extraction performance is highly dependent on the design of the prompts, and the extracted textual data must exhibit a high level of standardization to serve as effective input for knowledge graphs [40]. Given the complexity of unstructured text and the widespread presence of nested sentences in requirement documents, there is an urgent need to design an information extraction template suitable for general requirement documents. This template would serve as the foundation for constructing prompts that guide the large language model, thereby enhancing the standardization and generalizability of information extraction.

3. Research Methodology

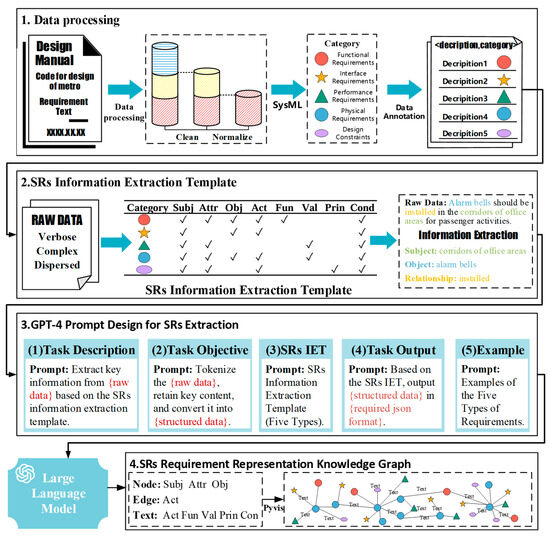

This paper proposes a prompt-engineering-based system for constructing requirement representation knowledge graphs capable of automatically converting unstructured requirement texts into visualized graph structures. The system’s framework is shown in Figure 1.

Figure 1.

The schematic diagram of the proposed framework.

To address the complexity and unstructured format of requirement documents, and the need for designers to interpret them consistently, the system adopts SysML’s definition of requirements for document management. It classifies system requirements (SRs) into five major categories: functional requirements, interface requirements, performance requirements, physical requirements, and design constraints. Because the specific elements differ between categories, a generalized information extraction template is designed for each one. These templates clearly define key information elements in the requirement documents, such as subjects, objects, and actions. Based on these templates, prompts are designed to standardize the large language model’s extraction of elements, transforming unstructured text into structured data comprising nodes (subject, attribute, object), edges (action), and textual elements (function, value, principle, condition). Finally, a domain-specific requirement representation knowledge graph is constructed using the extracted structured data, systematically presenting the semantic relationships and hierarchical connections between the requirements, thereby providing intuitive support for requirement analysis and management.

3.1. Data Preprocessing

In natural language processing tasks, data cleaning is a crucial step to ensure data quality and enhance model performance [41,42]. Given the characteristics of requirement documents, this study designs and implements three data cleaning strategies to ensure data consistency and usability:

- (1) Removal of irrelevant numbering and markings: Requirement documents are typically organized by chapters and often contain irrelevant noise, such as chapter numbers, section markers, and other formatting symbols. If this noise is included in the input, it could interfere with the subsequent text parsing performed by the large language model.

- (2) Text format normalization: The raw documents frequently contain extra spaces, line breaks, and other special characters, which may cause errors in text segmentation or parsing difficulties.

- (3) Maintaining consistency in requirement text context: Requirement documents often have multi-level nested structures, so the coherence and integrity of the requirement descriptions must be preserved. For sub-items or specific functionality entries that follow a main requirement description, their content is concatenated with the primary description so that the requirement subject remains consistent throughout.
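The three cleaning strategies can be sketched with Python's standard `re` module. This is a minimal illustration, not the authors' implementation: the patterns `CLAUSE_NUMBER` and `SUB_ITEM`, the function names, and the sample sentence are all assumptions, since the source does not list its regular expressions.

```python
import re

# Hypothetical patterns; the source does not publish its regular expressions.
CLAUSE_NUMBER = re.compile(r"^\s*\d+(?:\.\d+)*\s+")     # leading "18.3.4 "
SUB_ITEM = re.compile(r"^\s*(?:\(?\d+\)|[a-z]\))\s*")   # leading "1) ", "(2) ", "a) "


def normalize(text: str) -> str:
    """Strategy 2: collapse runs of spaces, tabs and line breaks into one space."""
    return re.sub(r"\s+", " ", text).strip()


def clean_clause(main: str, sub_items: list[str]) -> list[str]:
    """Return one cleaned requirement per sub-item (or the main text alone)."""
    # Strategy 1: drop the clause number, then normalize whitespace.
    main = normalize(CLAUSE_NUMBER.sub("", main))
    if not sub_items:
        return [main]
    # Strategy 3: prepend the main description so every sub-item keeps its subject.
    stem = main.rstrip(":")
    return [f"{stem} {normalize(SUB_ITEM.sub('', item))}" for item in sub_items]


# Invented example text, used only to exercise the functions.
requirements = clean_clause(
    "18.3.4  The  signal system shall:",
    ["1) monitor train position;", "2) report faults."],
)
```

Here one numbered clause with two sub-items becomes two standalone requirements that both retain the subject "The signal system".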

After completing the data cleaning process, this paper annotated the requirement document data according to the five types of requirement labels defined in SysML: functional requirements, interface requirements, performance requirements, physical requirements, and design constraints. These labels comprehensively cover the main types of requirements in complex system design and provide clear standards for the classification and analysis of requirement documents. The document descriptions corresponding to these five requirement labels are collectively referred to as system requirements (SRs). By classifying the scattered SRs into categories, this process not only improves the organization of information but also lays the foundation for the subsequent construction of information extraction templates. The specific definitions and examples of the five requirement labels are provided in Table 1. This study strictly adheres to the definition standards for each requirement label and systematically annotates the SRs to ensure the accuracy and consistency of the annotation results, thus providing a high-quality corpus for subsequent research.

Table 1.

Five types of requirements and their descriptions and examples.

3.2. Construction of SRs Information Extraction Templates

The information extraction templates serve as the core foundation for enabling large language models to perform information extraction tasks, and their completeness directly impacts the accuracy and reliability of the extracted information. To achieve the precise extraction of information from different categories of system requirements (SRs), this paper designs corresponding structured information extraction templates based on the five requirement labels defined by SysML. Each template clearly defines the key information elements that should be present for each requirement type, aiming to ensure consistency and completeness in the extraction process. The design of these templates is based on a comprehensive analysis of the requirement categories, thoroughly considering the unique characteristics and shared attributes of various requirements in complex system design, ensuring the accurate extraction of the core components of each requirement category from the requirement documents.

The elements within the templates encompass various types of characteristic information, including the following:

Subject: The subject of each requirement represents the initiator, executor, or the primary system components involved. In the five types of requirements, the subject is a core component that clarifies the responsible party or related system elements, helping to define the key roles and their functions within the requirement. In the process of requirement data extraction using large language models, the subject is a necessary information element.

Attribute: In some SRs, in addition to the subject, specific attributes related to the subject may also appear, typically expressed in the form of “AA’s BB”, where BB is the core subject of the requirement. These attributes are used to further describe the specific characteristics or functionalities of the subject.

Object: In SRs, the object is the target entity involved when the subject performs certain actions or behaviors. It refers to the specific thing or system component that the described operation or function in the requirement acts upon.

Action: In the requirement document, the action refers to the operation that the subject performs on the object; it describes the interaction between the two.

Function: In functional requirements, the function refers to the behavior or operational capability that a system or component should possess. It specifies the tasks that the system needs to perform or the services it needs to provide. For functional requirements, functionality is an essential key information element.

Value: In performance and physical requirements, the value refers to specific performance metrics or physical attributes that a subject or object must achieve.

Principle: In design constraints, the principle refers to the standards, norms, or guidelines that must be followed during the system design process. For design constraints, principles are essential key information elements.

Condition: In the requirements document, a condition refers to the prerequisite or specific environment that must be met to fulfill a particular requirement.

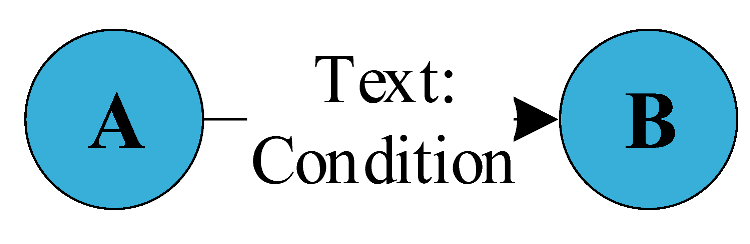

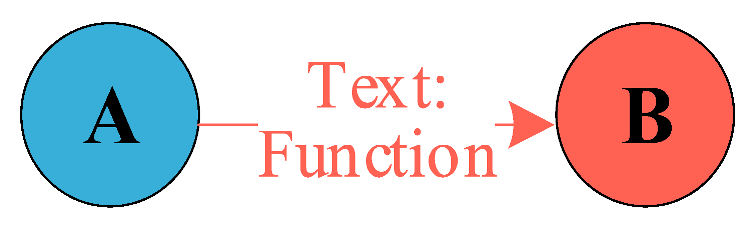

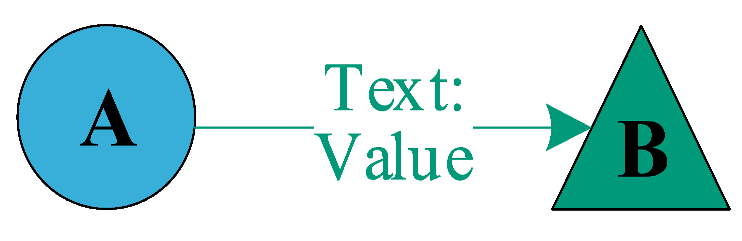

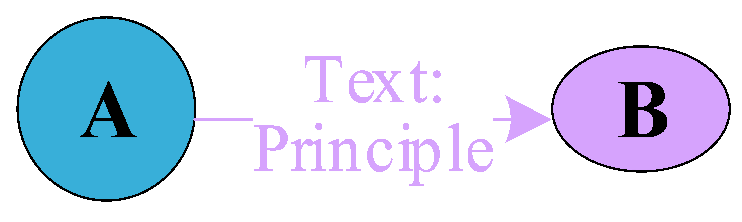

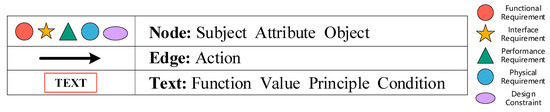

For the five types of requirement text content in the requirement documents, this paper selects the key elements that each category should contain. The specific template structure is shown in Table 2, where ✓ indicates the key elements that should be present for each requirement type. Within a given SR, an element may appear multiple times: a single SR may involve several subjects, objects, or functions. To incorporate the structured data into the knowledge graph construction, this paper categorizes the elements of the SR into three types based on the characteristics of the information extraction templates: nodes, edges, and text. As shown in Figure 2, the node elements are subject, attribute, and object, which describe the key entities in the requirements. The edge element is action, which represents the relationships between different nodes, with the specific content of the behavior recorded within the edge. The text elements are function, value, principle, and condition, which further describe the specific content of the requirements. During graph construction, nodes, edges, and text are progressively combined, so that the domain knowledge graph is continuously expanded into a comprehensive representation of requirement information.

Table 2.

SR information extraction templates.

Figure 2.

Basic elements of knowledge graph construction.
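The mapping from template elements to graph roles can be written down directly. The sketch below follows the node/edge/text grouping stated in the text; the function name `split_elements` and the sample record are hypothetical.

```python
# Element-to-role mapping as described in the text.
NODE_ELEMENTS = ("subject", "attribute", "object")
EDGE_ELEMENTS = ("action",)
TEXT_ELEMENTS = ("function", "value", "principle", "condition")


def split_elements(record: dict) -> dict:
    """Group the filled-in elements of one extracted SR by their graph role.

    Values may be strings or lists, since an element can occur several
    times in one SR; empty or missing elements are skipped.
    """
    roles = {"nodes": NODE_ELEMENTS, "edges": EDGE_ELEMENTS, "text": TEXT_ELEMENTS}
    return {
        role: {key: record[key] for key in keys if record.get(key)}
        for role, keys in roles.items()
    }


# Invented record for illustration only.
parts = split_elements(
    {"subject": "pump", "attribute": "flow rate", "value": ">= 30 m3/h", "condition": ""}
)
```

Keeping the grouping in one place means the later graph-building steps only need to ask which role an element plays, not which requirement category it came from.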

3.3. Design of GPT-4 Prompts for SRs Information Extraction

With its powerful natural language understanding and generation capabilities, GPT-4 excels in processing complex texts, recognizing contextual relationships, and generating structured outputs. Especially when dealing with technically demanding texts such as requirement documents, GPT-4 is capable of accurately identifying key elements within the text and effectively transforming them into structured data. Based on this, GPT-4 is selected as the core model for information extraction in this paper, and prompts are designed to guide it in performing the information extraction tasks, with these prompts being grounded in the information extraction templates.

The construction of prompts is a critical step in guiding large language models to perform specific tasks. Although several studies have explored how to design efficient prompt templates [43], creating a highly effective template remains a process that requires continuous iteration and experience accumulation. To achieve the precise extraction of SRs, this paper designs the following prompt templates, aiming to ensure that GPT-4 can efficiently and accurately complete the information extraction tasks. The design of this prompt is primarily divided into the following modules:

- (1) Task description: The task description clearly defines GPT-4’s core responsibility, which is to extract key information from the requirements document based on the provided <description, category> using the defined SRs information extraction template.

- (2) Task objective: The task objective involves tokenizing the text, removing stop words, retaining the main content and key terms, and ultimately transforming the text into structured data. During this process, GPT-4 will strictly follow the SRs information extraction template to simplify the complex content into a structured output that is easy to understand and process. This objective ensures that the model can efficiently and accurately extract core information from technical documents.

- (3) SRs information extraction template: This section outlines the SRs information extraction template established based on the characteristics of the requirement documents. The template offers specific extraction elements for the five types of requirements, such as subject, object, action, and conditions, ensuring that the extraction results are structured and accurate.

- (4) Output format: To ensure that the extracted structured data are easy to process and interpret in subsequent stages, GPT-4 is instructed to output the results in a standardized JSON format. The output format includes a “category” label and “structured data”, where the “structured data” section is filled in accordance with the SRs information extraction template. This approach ensures that all extracted content maintains consistency and clarity.

- (5) Examples: To further guide GPT-4 in understanding the task, few-shot learning [44] is employed by providing multiple examples. Each example includes input text and its corresponding expected output, ensuring that the model can generate structured results in accordance with the template.

After using GPT-4 for SRs information extraction, the originally unstructured requirement texts are transformed into labeled tuples. Each structured data tuple fully represents all of the information within the requirement text. These structured SR tuples and their corresponding category labels are then used as input for further construction of the knowledge graph for requirement representation.
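The five prompt modules and the JSON output format can be illustrated as follows. This is only a sketch: the wording of each module, the function names `build_prompt` and `parse_reply`, and the sample requirement are assumptions, because the source does not reproduce its actual prompt text. No model call is made; the reply string stands in for a model response.

```python
import json


def build_prompt(description, category, elements, examples):
    """Assemble a prompt from the five modules described above (wording assumed)."""
    shots = "\n".join(
        f"Input: {text}\nOutput: {json.dumps(expected)}" for text, expected in examples
    )
    return "\n\n".join([
        "Task description: extract key information from the requirement given as "
        "<description, category>, using the SRs information extraction template.",
        "Task objective: tokenize the text, remove stop words, keep the main content "
        "and key terms, and convert the text into structured data.",
        f"Template for '{category}': " + ", ".join(elements),
        'Output format: JSON with the keys "category" and "structured data".',
        "Examples:\n" + shots,
        f"<{description}, {category}>",
    ])


def parse_reply(reply: str):
    """Turn the model's JSON reply into a (category, structured data) tuple."""
    data = json.loads(reply)
    return data["category"], data["structured data"]


# Invented few-shot example and reply, for illustration only.
example = (
    "The fan shall exhaust smoke.",
    {"category": "functional requirement",
     "structured data": {"subject": "fan", "function": "exhaust smoke"}},
)
prompt = build_prompt(
    "The pump shall drain water.", "functional requirement",
    ["subject", "function"], [example],
)
category, structured = parse_reply(
    '{"category": "functional requirement", '
    '"structured data": {"subject": "pump", "function": "drain water"}}'
)
```

Parsing the reply with `json.loads` makes a malformed response fail loudly, which is one reason to request a fixed JSON format rather than free text.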

3.4. Construction of SRs Requirement Representation Knowledge Graph

Requirement analysis for complex systems often involves intricate interactions and relationships between multiple entities. To better understand and manage these requirements, a knowledge graph serves as an effective visualization tool, depicting key elements and their interrelationships in system requirements with nodes and edges [18]. The knowledge graph not only visually represents the structure of the requirements, but also uncovers the underlying dependencies and functional interactions between them, aiding designers in gaining a comprehensive understanding of the overall SRs.

Upon analyzing the structure of the five types of requirements, it was found that physical requirements define the components of the system and their interrelationships, exhibiting static structural characteristics. Therefore, physical requirements can serve as the foundational framework for constructing the requirement representation knowledge graph. By describing the relationships between subjects, objects, and their attributes, physical requirements provide a clear structural backbone for the knowledge graph. Based on this framework, the other types of requirements (functional requirements, interface requirements, performance requirements, and design constraints) gradually expand and refine the graph. Each requirement category further enriches the graph content based on its specific elements (e.g., functions in functional requirements, interface specifications in interface requirements, numerical indicators in performance requirements, etc.), ensuring that the logical relationships between different types of requirements are fully represented. The relationships between various nodes are shown in Table 3, and the SRs representation knowledge graph is constructed based on these node relationships to form the domain knowledge graph.

Table 3.

Schematic representation of node relationships.

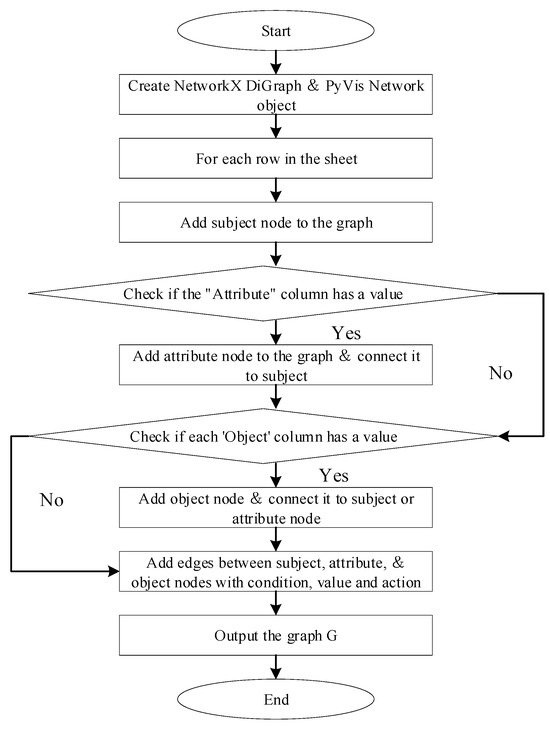

The framework for constructing the knowledge graph based on physical requirements is shown in Figure 3. The first step of the algorithm is to create an empty directed graph G to store all of the entities and their interrelationships within the physical requirements. As the requirement data are parsed, nodes and edges are incrementally added to the graph. In the second step, the algorithm iterates through each row of the physical requirement sheet, adding the values from the “subject” column as nodes to graph G. If the “attribute” column in the row has a value, the subject has specific attributes: the algorithm adds these attributes as nodes to the graph and connects the subject node to the attribute node via directed edges. Next, if the “object” column in the row contains a value, the algorithm adds the object as a node to the graph and connects it to the corresponding subject or attribute node through directed edges. If the row contains values in the “action”, “value”, or “condition” columns, the corresponding text is attached to the relevant edge (between the subject and the object, between the subject and the attribute, between the attribute and the object, or a self-loop on the subject) as a supplementary description of that connection. Finally, the graph G is output.

Figure 3.

Algorithm flowchart for constructing the knowledge graph framework based on physical requirements.
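The construction steps for the physical-requirement framework can be sketched in plain Python. To stay dependency-free, the graph is a dictionary of nodes and edge tuples rather than a graph-library object. Two choices are assumptions not fixed by the text: the supplementary text is attached to the last edge created for a row, and a self-loop is added only when a row has text but neither attribute nor object. The sample rows are invented.

```python
def build_physical_graph(rows):
    """Build a directed graph from physical-requirement rows (dicts keyed by column)."""
    graph = {"nodes": set(), "edges": []}  # each edge: (source, target, text dict)
    for row in rows:
        subject = row["subject"]
        graph["nodes"].add(subject)
        text = {k: row[k] for k in ("action", "value", "condition") if row.get(k)}

        edges = []
        tail = subject  # node that an object, if present, will be connected from
        if row.get("attribute"):
            graph["nodes"].add(row["attribute"])
            edges.append((subject, row["attribute"]))
            tail = row["attribute"]
        if row.get("object"):
            graph["nodes"].add(row["object"])
            edges.append((tail, row["object"]))
        if not edges and text:
            edges.append((subject, subject))  # self-loop on the subject

        # Assumption: supplementary text goes on the last edge of the row.
        for i, (src, dst) in enumerate(edges):
            is_last = i == len(edges) - 1
            graph["edges"].append((src, dst, text if is_last else {}))
    return graph


# Invented rows, for illustration only.
G = build_physical_graph([
    {"subject": "station", "object": "platform", "action": "contains"},
    {"subject": "platform", "attribute": "width", "value": "2.5 m"},
    {"subject": "station", "condition": "underground"},
])
```

Because nodes are stored in a set, a subject that appears in many rows is added only once, which is what lets separate requirements share structure in the graph.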

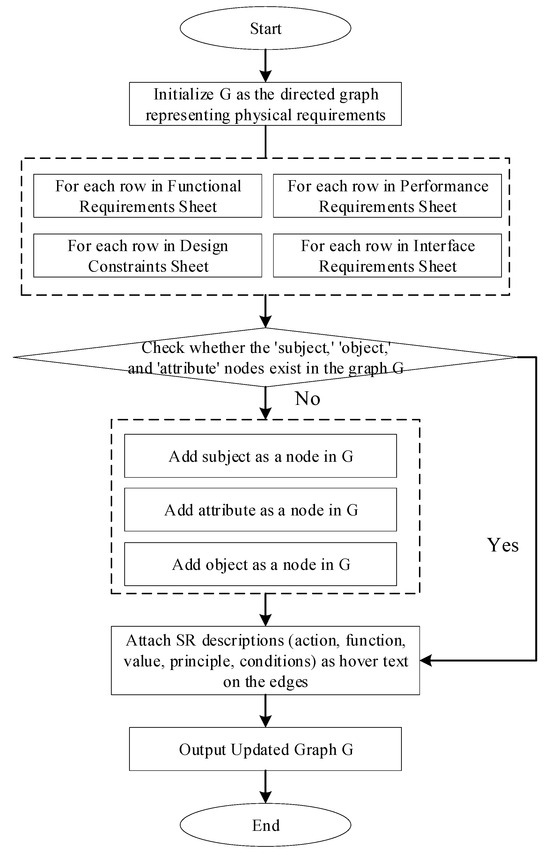

With the basic framework of the domain-specific requirement knowledge graph built from the physical requirement data, the functional requirements, performance requirements, design constraints, and interface requirements are integrated into the graph step by step. The specific algorithmic steps are shown in Figure 4.

- (1) Functional requirements: The algorithm imports the knowledge graph G built from the physical requirements and traverses each row of the functional requirements sheet. It checks whether the values in the “subject”, “attribute”, and “object” columns already exist in G; any missing values are added as nodes. If the “attribute” column contains functional attributes, directed edges are created to connect the subject to the attribute. If the “object” column contains values, directed edges connect the subject or attribute node to the object node. The contents of the “function”, “action”, and “condition” columns are attached as hover text on the edges.

- (2) Performance requirements: The algorithm traverses each row of the performance requirements sheet, checking whether the values in the “subject” and “attribute” columns exist in G; any missing values are added as nodes. If the “attribute” column contains performance attributes, directed edges are created to connect the subject to the attribute. If the attribute has specific values or conditions, this information is attached as hover text on the edges.

- (3) Design constraints: The algorithm traverses each row of the design constraints sheet, checking whether the values in the “subject” and “attribute” columns exist in G; any missing values are added as nodes. If design constraint attributes are present in the “attribute” column, they are added as nodes and connected to the subject nodes via directed edges. Descriptions of “action”, “principle”, or “condition” are appended as hover text on the edges, clearly displaying the design constraints.

- (4) Interface requirements: The algorithm traverses each row of the interface requirements sheet, checking whether the values in the “subject” and “object” columns exist in G; any missing values are added as nodes. If the “object” column contains values, directed edges connect the subject node to the object node. Descriptions of the interface requirement actions and conditions are attached as hover text on the edges.

Figure 4.

Algorithm flowchart for knowledge graph expansion based on additional requirements.

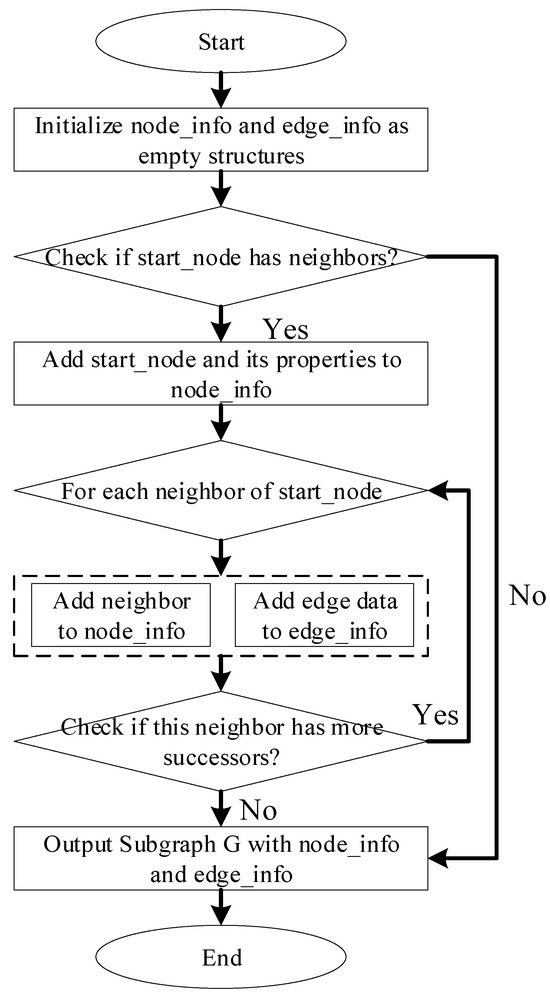

Based on the aforementioned steps, a complete knowledge graph for the domain-specific requirement documentation can be constructed. Considering the designer’s focus on specific nodes, a node extraction algorithm is designed to meet these requirements. This algorithm can extract all of the relationships and associated nodes of a target node from the knowledge graph, thereby presenting all of the related requirement information in a comprehensive manner. The algorithmic logic is depicted in Figure 5. The first step is to import the domain-specific requirement knowledge graph G and initialize two empty structures: one for storing node information and the other for storing edge information. The second step involves extracting the properties of the target node from the network objects and storing them in the node information structure. In the third step, the algorithm traverses all adjacent nodes pointed to by the target node, collects their properties, and adds them to the node information. Simultaneously, it retrieves the edge information between the adjacent nodes and the target node, storing this in the edge information structure. The fourth step entails recursively traversing all neighbors of the adjacent nodes, collecting their properties and the edge information between them, continuing this process until no further related nodes are found. The final step is to plot the relationship graph G’ of the target node, based on all of the collected node and edge information.
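The traversal described above can be sketched as follows. This sketch uses an iterative breadth-first search with a visited set rather than explicit recursion, and it represents the graph as a plain mapping from `(source, target)` pairs to hover text; both choices, and the function name `extract_subgraph`, are assumptions for illustration. Node properties are omitted for brevity.

```python
from collections import deque


def extract_subgraph(edges, target):
    """Collect all nodes reachable from `target` along directed edges, plus those edges."""
    # Build an adjacency list of outgoing edges for each node.
    adjacency = {}
    for (source, dest), hover in edges.items():
        adjacency.setdefault(source, []).append((dest, hover))

    nodes = {target}   # node information structure
    sub_edges = {}     # edge information structure
    queue = deque([target])
    while queue:
        current = queue.popleft()
        for dest, hover in adjacency.get(current, []):
            sub_edges[(current, dest)] = hover
            if dest not in nodes:  # visited check so that cycles terminate
                nodes.add(dest)
                queue.append(dest)
    return nodes, sub_edges


edges = {
    ("Automatic Fare Collection System", "Station Computer System"): "",
    ("Station Computer System", "Server"): "",
    ("Server", "Automatic Fare Collection System"): "",
    ("Clock", "Automatic Fare Collection System"): "",
}
nodes, sub_edges = extract_subgraph(edges, "Automatic Fare Collection System")
print(sorted(nodes))
```

Because only outgoing edges are followed, a node that merely points to the target (here the illustrative "Clock") is not included in the extracted graph G’.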

Figure 5.

Node extraction algorithm flowchart.

4. Case Study

4.1. Data Preparation

In order to further illustrate the functionality of the system’s modules with a practical case, Chapters 18–22 of the national standard document ‘Code for Design of Metro’ (GB 50157-2013) [45] were selected as the case study. The raw data contained a large number of irrelevant elements, such as spaces, chapter numbers, and symbols, which were unrelated to the main content. To ensure the quality of the data, regular expressions were employed to remove these extraneous elements, following the data cleaning principles outlined earlier. As the requirements text is usually presented in paragraph form, regular expressions were applied for filtering and processing, as shown in Table 4. The table illustrates the raw form of the requirement document and the form after processing with regular expressions. This processing split one requirement into two distinct requirements and removed irrelevant chapter numbers, such as 18.3.4, that were not needed for the input model. After data cleaning, a total of 516 requirements were collected.
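A minimal sketch of this kind of cleaning is given below. The patterns are assumptions rather than the expressions used in the study: `CLAUSE_NUMBER` removes a leading clause number such as 18.3.4, and `WHITESPACE` collapses runs of whitespace. The rule that splits one requirement into two is not specified in the text and is therefore not reproduced here.

```python
import re

# A leading clause number: two or more dot-separated integers, e.g. "18.3.4".
CLAUSE_NUMBER = re.compile(r"^\s*\d+(?:\.\d+)+\s*")
# Any run of whitespace characters.
WHITESPACE = re.compile(r"\s+")


def clean_requirement(line: str) -> str:
    """Strip a leading clause number and normalize whitespace."""
    text = CLAUSE_NUMBER.sub("", line)
    return WHITESPACE.sub(" ", text).strip()


print(clean_requirement("18.3.4  The ticket   gate shall open. "))
```

For Chinese source text, whitespace would more likely be removed than collapsed to a single space; the replacement string can be changed accordingly.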

Table 4.

Data before and after preprocessing.

After completing the data cleaning process, the SRs were categorized into five types: functional requirements, interface requirements, performance requirements, physical requirements, and design constraints. Based on the definitions of these five requirement types, the research team members annotated the collected requirements. During the annotation process, each requirement was carefully reviewed, and the corresponding category label was assigned according to the SysML requirement definition standard. To ensure label accuracy, other team members verified the preliminary classification results, ensuring the correctness and consistency of the requirement labels. In total, 516 requirement data entries were collected, of which 198 (38.37%) were labeled as functional requirements, 50 (9.69%) as interface requirements, 57 (11.05%) as performance requirements, 109 (21.12%) as physical requirements, and 102 (19.77%) as design constraints. A sample of the data is presented in Table 5, which includes the requirement description text and its corresponding requirement category.
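The category shares can be recomputed directly from the reported counts. The short check below uses only the counts stated above; the variable names are illustrative.

```python
counts = {
    "functional": 198,
    "interface": 50,
    "performance": 57,
    "physical": 109,
    "design constraint": 102,
}
total = sum(counts.values())
# Percentage share of each category, rounded to two decimals.
shares = {name: round(100 * n / total, 2) for name, n in counts.items()}
for name, share in shares.items():
    print(f"{name}: {share}%")
```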

Table 5.

Partial data from the ‘Code for Design of Metro’ dataset.

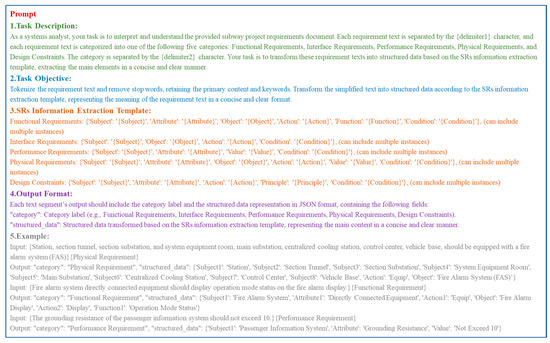

4.2. Structured Information Extraction Based on Prompt Engineering

After data cleaning and annotation, dedicated prompts were designed for the metro design domain of the ‘Code for Design of Metro’ (GB 50157-2013) to achieve the structured extraction of requirement information. The prompts are built from the components of the GPT-4 information extraction prompt template described in Section 3.3, as shown in Figure 6. The prompt design encompasses all of the modules mentioned in Section 3.3, ensuring that the key elements of the requirement text can be effectively captured during information extraction and converted into standardized structured data according to the information extraction template.

Figure 6.

Structure of SR information extraction task prompt template.

In the specific implementation process, we input the data into the GPT-4 API in the format of ⟨requirement description text, requirement category⟩ and guide GPT-4 to perform information extraction based on predefined prompts. In terms of model configuration, a lower temperature value makes the output more deterministic. To ensure higher accuracy and consistency, the temperature is set to 0.3 [46], and max-tokens is set to 300 based on the length of the input text. GPT-4 returns JSON-structured data according to the specified output format, where each data field corresponds to the information extraction template of different requirement categories to ensure the accuracy and consistency of the extracted information.
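The configuration above can be expressed as a request body in the style of the OpenAI Chat Completions API. The sketch below only assembles the payload and makes no network call. The function name `build_extraction_request`, the placement of the prompt template in a system message, and the JSON encoding of the input pair are assumptions; only the model name, the temperature of 0.3, and the 300-token limit come from the text.

```python
import json


def build_extraction_request(requirement_text: str, category: str, prompt_template: str) -> dict:
    """Assemble a Chat Completions-style request body for one requirement."""
    user_payload = {
        "requirement_text": requirement_text,
        "requirement_category": category,
    }
    return {
        "model": "gpt-4",
        "temperature": 0.3,   # lower values make the output more deterministic
        "max_tokens": 300,    # chosen in the study based on input length
        "messages": [
            # Hypothetical layout: template as system message, data as user message.
            {"role": "system", "content": prompt_template},
            {"role": "user", "content": json.dumps(user_payload, ensure_ascii=False)},
        ],
    }


request = build_extraction_request(
    "The automatic fare collection system should implement interfaces with relevant systems.",
    "interface requirement",
    "<prompt template from Figure 6 goes here>",
)
print(json.dumps(request, ensure_ascii=False, indent=2))
```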

To ensure that the model’s output format strictly adheres to the predefined information extraction template and to minimize inconsistencies in knowledge graph construction caused by hallucinations, this study introduces an automatic format validation mechanism in the code implementation process. Specifically, after receiving the JSON structure generated using GPT-4, the code checks whether the output key names match the key information elements required in the information extraction template. If extra fields or format mismatches are detected, the data are re-input into GPT-4, requesting it to generate the output again until the output key names fully match. Additionally, if the generated data do not contain the “subject” field, the model is also instructed to regenerate the output.
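A minimal sketch of such a validation loop is shown below. Several details are assumptions: the key set in `TEMPLATE_KEYS` is a hypothetical template for functional requirements, numbered keys such as "Function 1" are reduced to their base name before comparison, and a cap on the number of attempts is added so that the loop always terminates. The `generate` argument stands in for the call to GPT-4.

```python
import json
import re

# Hypothetical template; the actual key sets come from the extraction template.
TEMPLATE_KEYS = {
    "functional": {"subject", "attribute", "object", "action", "function", "condition"},
}


def base_key(key: str) -> str:
    """Map 'Function 2' -> 'function' so numbered keys match the template."""
    return re.sub(r"\s*\d+$", "", key).strip().lower()


def is_valid(output: dict, category: str) -> bool:
    """Require a subject and forbid keys that are not in the template."""
    keys = {base_key(k) for k in output}
    return "subject" in keys and keys <= TEMPLATE_KEYS[category]


def extract_with_retry(generate, text, category, max_attempts=3):
    """Call `generate` until its JSON output passes validation or attempts run out."""
    for _ in range(max_attempts):
        raw = generate(text, category)
        try:
            parsed = json.loads(raw)
        except json.JSONDecodeError:
            continue  # malformed JSON counts as a format mismatch
        if isinstance(parsed, dict) and is_valid(parsed, category):
            return parsed
    return None


# Stub generator: the first response has an extra field, the second is valid.
responses = iter([
    '{"subject": "Gate", "color": "red"}',
    '{"Subject": "Gate", "Function 1": "open", "Function 2": "close"}',
])
result = extract_with_retry(lambda text, category: next(responses), "text", "functional")
print(result)
```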

Table 6 presents the partial results of structured information extraction performed using GPT-4 under the guidance of prompts, where the example data are derived from the translated version of the original Chinese requirement text. With the assistance of prompt examples, GPT-4 can identify key information in the text based on the information extraction template. In some cases, it may also expand the content contextually to enhance the completeness and readability of the extracted results. For example, in Table 6, within the functional requirement description “The semi-automatic ticket vending machine should have the following key functions: processing ticket refunds, ticket replacements, fare top-ups, ticket validation, and ticket exchanges for passengers.”, when extracting information under the “function” category, GPT-4 automatically added “for passengers”. This addition does not appear in all function descriptions, but, from the context, it reasonably supplements the applicable subject of the function, making the information expression clearer.

Table 6.

GPT-4 structured information extraction examples.

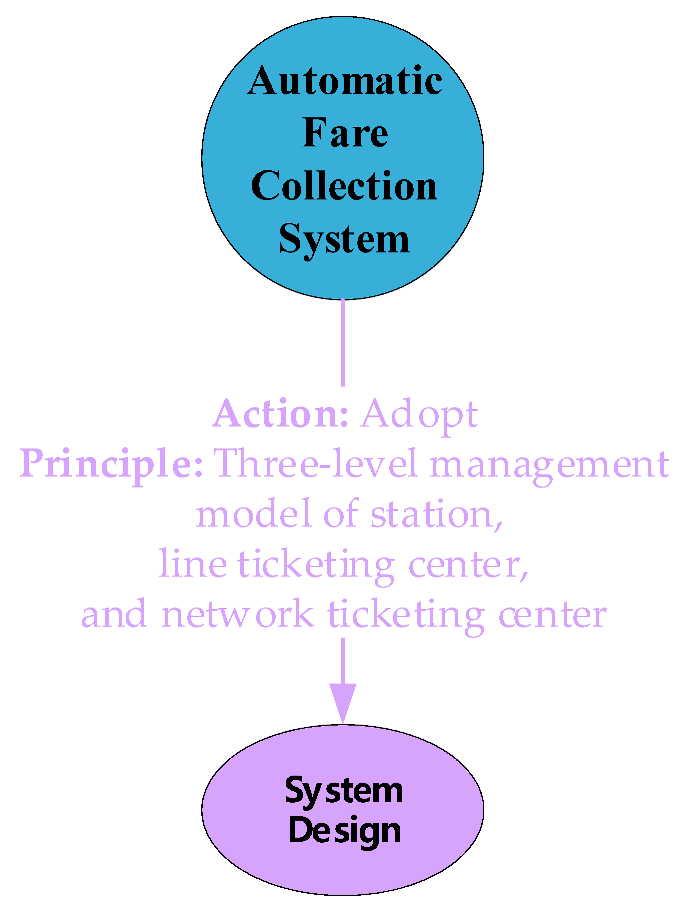

However, in some cases, GPT-4 may also produce extraction errors, especially when processing requirement statements with strong hierarchical dependencies. For example, in the design constraint “The automatic fare collection system design should follow the principles of centralized management, hierarchical control, and resource sharing.”, GPT-4 extracts the structure as {‘Subject’: ‘Automatic Fare Collection System Design’, ‘Principle 1’: ‘Centralized Management’, ‘Principle 2’: ‘Hierarchical Control’, ‘Principle 3’: ‘Resource Sharing’}. However, the actual subject should be “Automatic Fare Collection System”, and “Design” should be treated as an attribute. The structure thus needs to be corrected to {‘Subject’: ‘Automatic Fare Collection System’, ‘Attribute’: ‘Design’, ‘Principle 1’: ‘Centralized Management’, ‘Principle 2’: ‘Hierarchical Control’, ‘Principle 3’: ‘Resource Sharing’}. In this case, GPT-4 failed to correctly distinguish between subject and attribute, mistakenly identifying “Design” as part of the subject rather than as its attribute. This indicates that when handling complex hierarchical dependencies, GPT-4 may misclassify descriptive phrases as part of the subject while overlooking the actual attribute relationships. Additionally, when dealing with multi-level requirement structures, GPT-4 may lose or misinterpret hierarchical information, causing the extracted results to be inconsistent with the structure of the original requirement text, thereby affecting the accuracy and consistency of knowledge graph construction.

4.3. Performance Evaluation of Information Extraction Compared to Other Models

4.3.1. Dataset Partitioning and Annotation

To validate the effectiveness of the proposed approach, which integrates GPT-4 with an information extraction template, we compared its extraction accuracy with that of BERT and RoBERTa, two pre-trained deep learning models widely used in information extraction tasks, and with that of the generative pre-trained model GPT-3.5-turbo. Pre-trained models such as BERT and RoBERTa typically require annotated data. Considering the varying proportions of different requirement categories within the overall dataset, we adopted a random sampling strategy to partition the data, allocating approximately 80% as the training set and 20% as the test set. Specifically, the training set comprises 411 data entries, while the test set consists of 105 entries. The test data encompass five requirement categories: 40 functional requirements, 10 interface requirements, 12 performance requirements, 22 physical requirements, and 21 design constraints. This distribution is roughly proportional to the category sizes in the full dataset, which ensures a comprehensive evaluation of each model’s extraction performance across different requirement types.
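One way to obtain a split with these per-category counts is to sample within each category and round the 20% share up. The sketch below is an assumption about the procedure, not the authors' code; the function name `stratified_split`, the fixed seed, and the synthetic records are illustrative.

```python
import random
from collections import Counter, defaultdict


def stratified_split(records, seed=0):
    """Split (text, category) records, sending ceil(20%) of each category to the test set."""
    by_category = defaultdict(list)
    for record in records:
        by_category[record[1]].append(record)

    rng = random.Random(seed)
    train, test = [], []
    for category in sorted(by_category):
        items = by_category[category]
        rng.shuffle(items)
        n_test = (len(items) + 4) // 5  # integer ceiling of len(items) / 5
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test


counts = {"functional": 198, "interface": 50, "performance": 57,
          "physical": 109, "design constraint": 102}
# Synthetic placeholder records with the reported category sizes.
records = [(f"{cat}-{i}", cat) for cat, n in counts.items() for i in range(n)]
train, test = stratified_split(records)
test_counts = Counter(cat for _, cat in test)
print(len(train), len(test), dict(test_counts))
```

With the reported category sizes, rounding up within each category yields 40, 10, 12, 22, and 21 test entries, which sums to 105 and leaves 411 entries for training.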

Based on the elements outlined in the information extraction template in Section 3.2, we adopted the “BIO” annotation strategy, where “B” denotes the beginning of an entity, “I” represents the inside of an entity, and “O” indicates non-entity segments. Following the information extraction template, we established a corresponding labeling system, which is shown in Table 7. The annotated dataset has been uploaded to the Hugging Face platform for further research use (dataset access link: https://huggingface.co/datasets/OrangeeSofty/Metro_Code_Chapters_18_to_22_Data/tree/main/out_bio_txt, accessed on 12 February 2025).
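The BIO scheme can be illustrated on a sentence from the dataset. In the sketch below, the label names `SUB` and `OBJ` are hypothetical stand-ins for the labels in the labeling system, the spans are given as token index ranges, and tokens are single characters, which is a simplifying assumption.

```python
def bio_tags(tokens, spans):
    """Convert (start, end_exclusive, label) spans over token indices to BIO tags."""
    tags = ["O"] * len(tokens)
    for start, end, label in spans:
        tags[start] = f"B-{label}"          # first token of the entity
        for i in range(start + 1, end):
            tags[i] = f"I-{label}"          # remaining tokens of the entity
    return tags


tokens = list("自动售检票系统应实现与相关系统的接口")
spans = [
    (0, 7, "SUB"),    # 自动售检票系统 (automatic fare collection system)
    (11, 15, "OBJ"),  # 相关系统 (relevant systems)
]
tags = bio_tags(tokens, spans)
for token, tag in zip(tokens, tags):
    print(token, tag)
```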

Table 7.

Element labels of the information extraction template.

4.3.2. Baseline Model Configuration

In the experiment, we utilized the Hugging Face Transformers library to load the pre-trained BERT model (bert-base-chinese) and RoBERTa model (hfl/rbt6) as baseline models. Their encoding layers were employed for feature representation in the information extraction task, and a fully connected layer combined with Softmax was used for classification.

Given the limited dataset size, we employed pre-trained word embeddings during training and froze the model parameters without fine-tuning. The hyperparameter settings, as shown in Table 8, were selected based on commonly used values in deep learning networks. Additionally, the number of epochs was dynamically adjusted according to the loss reduction trend during training, ultimately set to 30. The GPT-3.5-turbo model configuration is the same as GPT-4, with the temperature set to 0.3 and max-tokens set to 300. The entire experiment was implemented using the PyTorch framework (version 2.4.1) and executed on a device equipped with an NVIDIA GeForce GTX 1060 GPU (Nvidia, Santa Clara, CA, USA).

Table 8.

Pre-trained deep learning model parameter configuration.

4.3.3. Evaluation System Construction

To evaluate the accuracy of different models in information extraction tasks, research team members manually annotated the randomly sampled test set (20% of the data). Cross-validation was conducted to ensure the high reliability of the manually annotated extraction results. Given the significant differences in core elements across various requirement categories, this study establishes a unified matching standard applicable to GPT-4, GPT-3.5-turbo, BERT, and RoBERTa, based on the information extraction template outlined in Section 3.2. The evaluation system integrates exact matching and cosine similarity [47] computation to ensure the accuracy and rationality of the structured data extraction.

The subject, attribute, and object elements form the nodes of the graph and represent key entities in the requirements. Any deviation in these nodes may result in the introduction of incorrect nodes during the subsequent graph construction process. To mitigate this issue, we employ a strict string-matching approach, where every term extracted from the results must exactly match the manually labeled terms; otherwise, it is considered a mismatch. When multiple subjects, objects, or attributes are present within the requirement text, the number of correctly matched elements is used as the score for that item, and the matching accuracy is calculated using the following formula:

$$S_e^{\mathrm{exact}} = \frac{1}{N_e} \sum_{i=1}^{N_e} \mathbb{I}\left(\hat{y}_{e,i} = y_{e,i}\right)$$

where $S_e^{\mathrm{exact}}$ represents the exact matching score for element $e$; $N_e$ denotes the total number of instances of element $e$ in the manually annotated data; $\hat{y}_{e,i}$ represents the $i$-th value predicted by the model for element $e$; and $\mathbb{I}(\cdot)$ is an indicator function, which equals 1 if $\hat{y}_{e,i}$ is identical to the corresponding manually annotated value $y_{e,i}$, and 0 otherwise.
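The exact matching score can be sketched in a few lines. The version below matches predicted values against annotated values regardless of order and counts each predicted value at most once; this order-insensitive reading, the handling of an empty annotation, and the function name are assumptions.

```python
def exact_match_score(predicted, annotated):
    """Fraction of annotated values reproduced exactly by the prediction."""
    if not annotated:
        # Assumed convention: nothing to find, so only an empty prediction is correct.
        return 1.0 if not predicted else 0.0
    remaining = list(predicted)
    hits = 0
    for value in annotated:
        if value in remaining:
            remaining.remove(value)  # each predicted value may match only once
            hits += 1
    return hits / len(annotated)


print(exact_match_score(["关系统"], ["相关系统"]))
print(exact_match_score(["Station", "Clock"], ["Station", "Server"]))
```

A truncated term such as "关系统" therefore scores zero against "相关系统", which reflects the strictness of this criterion for node elements.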

For edge elements and textual components, such as actions, functions, values, principles, and conditions, which are characterized by lengthy text content and rich semantic information, relying solely on exact matching is insufficient for comprehensively assessing the semantic similarity between the model’s extracted results and the manually annotated data. Cosine similarity calculation can serve as a metric for evaluating the similarity between the two. Therefore, this study employs a cosine similarity computation method based on BERT word embeddings (bert-base-chinese) for matching [48]. In actual requirement entries, the same data may contain multiple elements of the same category. For instance, during the structured information extraction of functional requirements, multiple functional items such as Function 1, Function 2, … Function N may be extracted. Before computing cosine similarity, all text elements of the same category are first merged and concatenated to form a unified textual representation. The overall similarity is then calculated by comparing this aggregated representation with the manually annotated elements.

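Let $\mathbf{v}_e^{\mathrm{pred}}$ denote the semantic vector representation of element $e$ extracted by the model and $\mathbf{v}_e^{\mathrm{gold}}$ denote the semantic vector representation of element $e$ taken from the manual annotation. The cosine similarity score for this element is defined as

$$S_e^{\cos} = \frac{\mathbf{v}_e^{\mathrm{pred}} \cdot \mathbf{v}_e^{\mathrm{gold}}}{\lVert \mathbf{v}_e^{\mathrm{pred}} \rVert \, \lVert \mathbf{v}_e^{\mathrm{gold}} \rVert}$$

where $S_e^{\cos}$ represents the cosine similarity score for element $e$; $\lVert \cdot \rVert$ denotes the L2 norm of the vector; and the cosine similarity lies in the range [−1, 1], where values closer to 1 indicate a higher semantic similarity between the model-extracted text and the manually annotated text.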
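For reference, the cosine similarity itself can be computed as below. The sketch operates on plain lists of numbers; obtaining the vectors from BERT embeddings is outside its scope, and returning 0.0 for a zero vector is a convention chosen here rather than something stated in the text.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # assumed convention for zero vectors
    return dot / (norm_u * norm_v)


print(cosine_similarity([1.0, 0.0], [1.0, 0.0]))
print(cosine_similarity([1.0, 2.0], [-1.0, -2.0]))
```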

Furthermore, considering that different requirement categories place varying levels of emphasis on different elements, applying uniform weights to all elements would fail to accurately reflect the model’s actual information extraction capability across different requirement types. Therefore, to more precisely evaluate the effectiveness of the model’s extraction performance, this study adopts a differentiated weight allocation strategy for the core elements of each requirement category. For example, in the structured data of functional requirements, the primary elements include subject, attribute, object, action, function, condition. However, in practical requirement analysis, functional requirements place greater emphasis on the subject performing the function and its specific functional content, while elements such as action receive relatively less attention. Therefore, assigning equal evaluation weights to all elements may lead to an inaccurate assessment of the model’s performance in extracting key elements.

To optimize the evaluation framework, we establish distinct weight allocation strategies for the elements of different requirement categories, ensuring that the assessment results align more closely with real-world application scenarios. This approach enhances the scientific rigor and reliability of the information extraction model. The weight allocation for each requirement category is presented in Table 9.

Table 9.

Weight allocation for different requirement categories.

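The final evaluation score for each structured requirement data entry is computed as follows:

$$S = \sum_{e \in E} w_e \, S_e$$

where $S$ represents the final score of the requirement data entry; $E$ is the set of elements defined for the given requirement category; $S_e$ denotes the score of element $e$, where, for node elements, $S_e = S_e^{\mathrm{exact}}$ (the exact matching score), and for textual elements, $S_e = S_e^{\cos}$ (the cosine similarity score); $w_e$ represents the weight of element $e$ within the given requirement category; and $\sum_{e \in E} w_e = 1$, ensuring that the weights of all elements sum to 1, thereby achieving normalization.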
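The weighted combination can be sketched as follows. The weights in the example are hypothetical and are not the values from the weight allocation table; treating a missing element score as 0 is likewise an assumption.

```python
def weighted_score(element_scores, weights):
    """Weighted sum of per-element scores; the weights must sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    # Assumption: an element with no score contributes 0.
    return sum(w * element_scores.get(element, 0.0) for element, w in weights.items())


# Hypothetical weights for a functional requirement.
weights = {"subject": 0.5, "function": 0.5}
scores = {"subject": 1.0, "function": 0.8}
final = weighted_score(scores, weights)
print(final)
```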

4.3.4. Performance Evaluation of Information Extraction by GPT-4 and Other Models

The final score of the data depends on the comprehensive assessment of exact matching and cosine similarity. Based on existing research on the accuracy of information extraction models and text similarity standards [49,50,51], we set the determination criterion as $S \geq 0.85$. That is, when the structured extraction score reaches or exceeds 0.85, the extracted result is deemed correct. Based on this criterion, we conducted an evaluation on a test set of 105 data entries. The experimental results indicate that the GPT-4 model correctly extracted 89 entries, including 35 functional requirements, 9 interface requirements, 10 performance requirements, 19 physical requirements, and 16 design constraints, achieving an overall extraction accuracy of 84.76%, according to the evaluation criterion. In comparison, the extraction accuracy of the GPT-3.5-turbo model was 79.05%, while both the BERT and RoBERTa models achieved the same extraction accuracy of 59.05%. The extraction accuracy for different requirement categories across the models is presented in Table 10.
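The overall GPT-4 accuracy follows directly from the reported counts, as the short check below shows. Only the counts stated in the text are used; the helper names are illustrative.

```python
THRESHOLD = 0.85


def is_correct(score: float) -> bool:
    """An extraction counts as correct when its final score reaches the threshold."""
    return score >= THRESHOLD


# GPT-4 correct extractions and test-set sizes per category, as reported.
correct = {"functional": 35, "interface": 9, "performance": 10,
           "physical": 19, "design constraint": 16}
tested = {"functional": 40, "interface": 10, "performance": 12,
          "physical": 22, "design constraint": 21}

total_correct = sum(correct.values())
total_tested = sum(tested.values())
overall_accuracy = round(100 * total_correct / total_tested, 2)
print(total_correct, total_tested, overall_accuracy)
```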

Table 10.

Model performance comparison.

The results of this study demonstrate that GPT-4 significantly outperforms traditional pre-trained fine-tuned models, slightly surpassing the generative pre-trained model GPT-3.5-turbo as well. One of the primary reasons for this disparity is the limitation in the amount of training data in our experiment. With only 411 labeled data points, models like BERT and RoBERTa, which rely on supervised fine-tuning, struggle to develop adequate generalization capability [52,53,54]. According to the definitions of the five requirement categories in Section 3.1, Table 1, pre-trained fine-tuned models often encounter significant challenges when processing requirement texts such as interface requirements and design constraints. This is because these texts involve multiple levels of dependency and nested semantics. In these cases, BERT and RoBERTa often fail to fully capture the semantic context, leading to incomplete extraction results or erroneous word segmentation. For example, as shown in Table 11, when extracting the phrase “相关系统” (relevant systems) from the sentence “自动售检票系统应实现与相关系统的接口” (“The automatic fare collection system should implement interfaces with relevant systems”), BERT mistakenly extracts “关系统”, which is not a valid term. These issues further affect the final scores of BERT and RoBERTa, resulting in a decline in the extraction accuracy of the models.

Table 11.

Examples of structured data extracted using different models.

In contrast, GPT-4 and GPT-3.5-turbo demonstrate superior performance due to their adoption of a different learning paradigm. Unlike BERT and RoBERTa, both GPT-4 and GPT-3.5-turbo do not rely on large amounts of labeled data for fine-tuning; instead, they employ few-shot learning [44], where a small number of example data points are provided in the prompt to adapt to new tasks. By embedding the information extraction template in the prompt design, both GPT-4 and GPT-3.5-turbo can effectively learn the structure of the requirement text and align their outputs accordingly. The models’ robust contextual understanding enables them to capture subtle dependencies in the text, making them particularly effective in extracting complex semantic structures such as interface requirements and design constraints, outperforming BERT and RoBERTa in these tasks.

Between GPT-4 and GPT-3.5-turbo, GPT-4 is an iterative upgrade of GPT-3.5-turbo. GPT-4 is widely reported to have a substantially larger number of parameters than GPT-3.5-turbo, on the order of trillions compared to billions, although OpenAI has not officially disclosed these figures. This larger capacity is thought to enable GPT-4 to better understand complex contextual information, thereby enhancing its reasoning ability and the accuracy of text generation. Current research shows that GPT-4 outperforms GPT-3.5 in various tasks. According to an official report from OpenAI, in complex information extraction tasks, GPT-4’s error rate is 25% lower than that of GPT-3.5-turbo [55,56,57]. In SR information extraction tasks, GPT-4 likewise demonstrates higher extraction accuracy than GPT-3.5-turbo.

For requirement descriptions primarily composed of noun phrases, such as functional requirements, physical requirements, and performance requirements, the performance of all models is relatively strong. These requirement categories rely more on explicit object–action relationships rather than deep contextual interpretations, so even label-based models like BERT and RoBERTa can achieve high accuracy.

5. System Development and Design

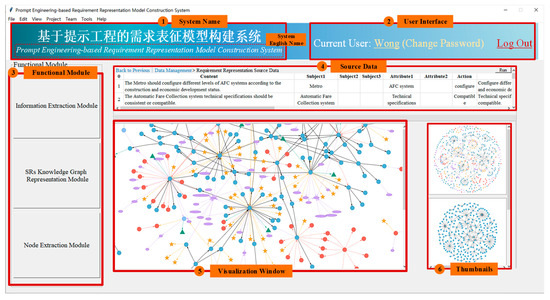

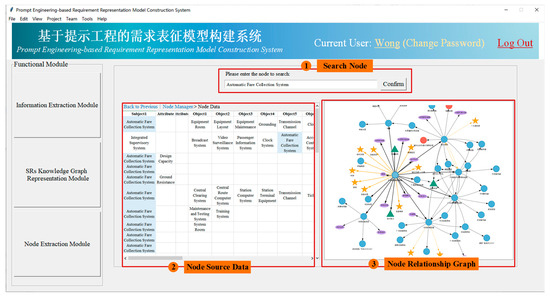

A prototype system for requirement representation knowledge graph construction based on prompt engineering was developed using the methodology outlined above, designed to assist designers in efficiently managing and parsing requirement documents. The system was developed using PyCharm 2021, coupling Python (version 3.8) with Tkinter (version 8.6) for numerical analysis and visualization. The system consists of three key modules: the SRs information extraction module, the SRs representation knowledge graph construction module, and the target node extraction module. Specifically, the SRs information extraction module constructs a prompt template based on the SRs information extraction template and integrates it with GPT-4, utilizing its language generation capabilities to perform information extraction. The SRs representation knowledge graph construction module generates a hierarchical requirement representation model based on structured data and node relationships. The target node extraction module is capable of extracting the associated node elements for any node in the requirement representation knowledge graph model. The prototype system for constructing requirement representation knowledge graphs based on prompt engineering is shown in Figure 7. Area 1 displays the system name, providing a clear platform identification. Area 2 contains user login and logout information, managing user access and sessions. Area 3 shows a list of functionalities for the three core modules, allowing users to easily browse and select specific operations. Area 4 displays the source data related to the selected functional module, ensuring transparency and data traceability. Area 5 is a visualization window that presents outputs or results in an intuitive graphical manner. Lastly, Area 6 provides a thumbnail view for quick navigation and a compact overview of the system content.

Figure 7.

Prototype system for requirement representation knowledge graph construction based on prompt engineering.

5.1. The Information Extraction Module

The information extraction module automatically extracts requirement data based on the principles outlined in Section 4.2, as shown in Figure 8. Users need to input two pieces of data, “Requirement Text” and “Requirement Category”, into the system or upload an Excel file containing these two columns. The system calls the OpenAI GPT-4 API, extracts structured data based on the prompts, and returns the results. After receiving the structured information from the API, the SRs information extraction module displays the extracted structured data in the text box on the interface and supports downloading it as an Excel file, which serves as the basis for the next module, the construction of the requirement representation knowledge graph. This study introduces a manual correction step in the information extraction process to ensure data consistency. For example, the design constraint requirement in Table 12 involves the “Integrated Backup Panel (IBP)”, which may be referred to as “IBP” in other requirement descriptions. If the same entity is not uniformly processed across different descriptions, the knowledge graph may contain multiple nodes that point to the same concept but have different names, thereby affecting the accuracy of requirement analysis. Therefore, the research team checked and corrected GPT-4’s structured extraction results, ensuring consistency in the entities representing the same concept throughout the knowledge graph construction process and preventing semantic fragmentation. The elements manually corrected in this case are shown in Table 12.
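The study performs this alignment manually. Once the corrected terms are known, they could be applied programmatically with a simple alias table, as in the sketch below. The alias mapping, the choice of canonical name, and the function name `normalize_entities` are hypothetical and are not taken from the terminology alignment table.

```python
# Hypothetical alias table mapping variant names to one canonical node name.
ALIASES = {"IBP": "Integrated Backup Panel (IBP)"}
NODE_FIELDS = ("subject", "attribute", "object")


def normalize_entities(record, aliases=ALIASES):
    """Replace aliased node values so one concept maps to one graph node."""
    normalized = {}
    for key, value in record.items():
        if key.lower() in NODE_FIELDS:
            normalized[key] = aliases.get(value, value)
        else:
            normalized[key] = value  # textual fields are left unchanged
    return normalized


record = {"Subject": "IBP", "Action": "shall be installed", "Condition": "IBP"}
normalized = normalize_entities(record)
print(normalized)
```

Only node fields are rewritten, since those determine node identity in the graph; free-text fields keep the wording of the source requirement.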

Figure 8.

Example of the information extraction module.

Table 12.

Standardized terminology alignment for information extraction.

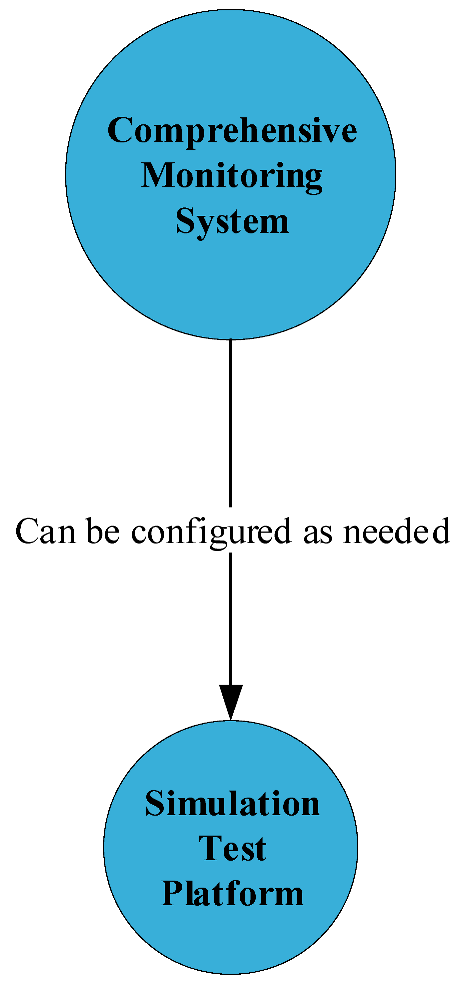

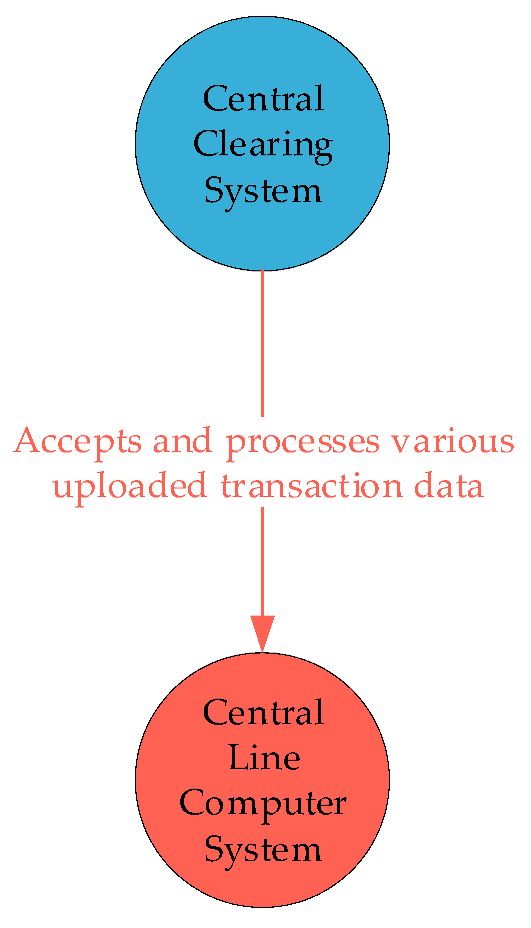

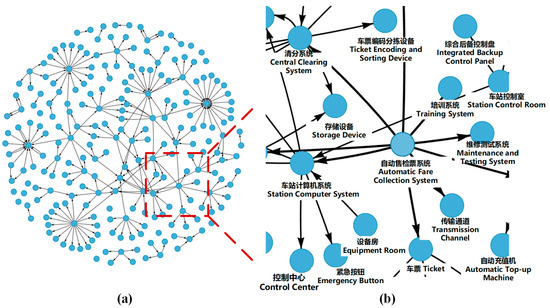

5.2. The SRs Knowledge Graph Requirement Representation Module

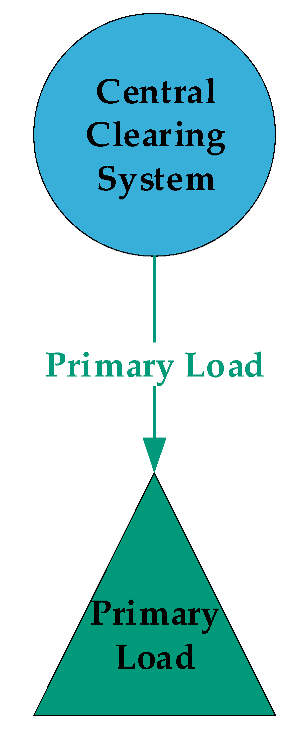

The SRs representation knowledge graph construction module is the core component of the entire prototype model. Based on the principles of domain knowledge graph construction proposed in Section 3.4, the structured requirement data are utilized to extract node attributes (subject, attribute, object), edge attributes (action), and textual elements (function, value, principle, condition) to establish the requirement representation graph. Using physical requirements as the foundational framework for the domain requirement representation knowledge graph, its specific function is shown in Figure 9. Taking a partial magnified view of the “Automatic Fare Collection System” node as an example, this system is composed of several subsystems, including “Station Computer System, Central Clearing System, Training System, Transmission Channel, Maintenance and Testing System, Tickets”, etc. Each subsystem contains the corresponding constituent elements that it should possess. Using this graph framework, the knowledge structure based on physical requirements is clearly presented, encompassing the core nodes and elements of the entire metro system.

Figure 9.

(a) Knowledge graph framework based on physical requirements, (b) physical relationship graph of the node “Automatic Fare Collection System”.

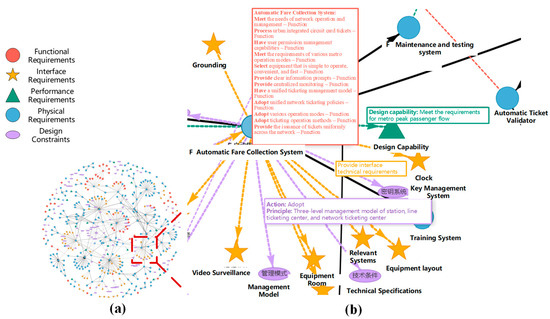

Based on this framework, the remaining four types of requirements (functional requirements, interface requirements, performance requirements, and design constraints) are gradually added to the requirement graph, thereby forming a complete system requirement representation. The SR representation knowledge graph for Chapters 18–22 of the ‘Code for Design of Metro’ is shown in Figure 10. When hovering over the node “Automatic Fare Collection System”, the functionalities that this node should possess will be displayed, such as processing urban-integrated circuit card tickets, having user permission management capabilities, and providing clear information prompts, among others. All nodes with functional requirements will have the prefix “F” added to their names to indicate that the node contains functional requirements, making it easier for users to understand the functionalities it should possess. Design constraints will add specific limitations to the attributes associated with the nodes, and these design constraints are displayed in purple ellipses. When hovering over a node or connecting edge, the specific constraints of the corresponding attribute will be shown. For example, when hovering over the connecting edge between the “Automatic Fare Collection System” and “Management Mode” nodes, the requirement that the system must adopt a “three-level management model of station, line ticketing center, and network ticketing center” will appear. Performance requirements are indicated by green triangles on the attribute nodes. When hovering over an attribute node or its connecting edge, the specific requirements for that attribute from the subject will be displayed. For example, the “Design Capacity” attribute of the “Automatic Fare Collection System” requires “meeting the requirements for metro peak passenger flow”. 
Interface requirements reflect the interconnection between subjects and associated objects, represented by orange pentagrams on the attribute nodes of the associated objects. When hovering over the connecting edge between the subject and the associated object, the system will display the specific requirements for the interface interaction between the subject and the object. For example, the connection between the “Automatic Fare Collection System” and the associated object “Clock” requires that the “Automatic Fare Collection System” provides “Interface Technical Requirements” to the “Clock”.

Figure 10.

(a) SRs representation knowledge graph, (b) example of other requirements for the “Automatic Fare Collection System” node.

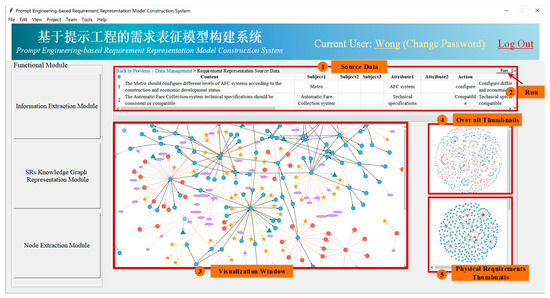

The SRs knowledge graph requirement representation module construction logic is integrated into the system, as shown in Figure 11. The SRs knowledge graph requirement representation module contains multiple functional areas. Region 1 represents the source data for the requirement representation graph, Region 2 is the code execution button, Region 3 is the visual display area for the generated graph, Region 4 is the overall thumbnail of the SRs requirement representation knowledge graph, and Region 5 is the thumbnail containing only physical requirements.

Figure 11.

Example of the SR knowledge graph requirement representation module.

5.3. The Node Extraction Module

The algorithm of the target node extraction module follows the principles described in Section 3.4 and is integrated into the node extraction module. An example of this module is shown in Figure 12. Area 1 displays the target node input by the user, Area 2 shows the source data related to the target node, and Area 3 presents the relationship graph of the target node. Using this functionality, users can intuitively view the information contained within the node in the knowledge graph, including physical requirements, functional requirements, performance requirements, design constraints, and interface requirements, thereby gaining a deeper understanding of the node’s overall requirements and relationships. The figure illustrates the relationship graph of the “Automatic Fare Collection System”, where designers can clearly understand the complete set of requirements associated with this node, including its physical requirements, such as the physical components “Transmission Channel”, “Maintenance and Testing System”, and “Central Clearing System”. The algorithm performs a layer-by-layer search for relevant neighboring nodes of the target node. It first retrieves the neighboring nodes related to the “Automatic Fare Collection System” and then gradually explores the child nodes of these neighbors. This process continues until no more child nodes exist. The target node extraction module provides designers with complete information about the target node while preserving the consistency of the requirement relationships in the original graph.

Figure 12. Example of the node extraction module for the automatic fare collection system.
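The layer-by-layer search described in this section can be sketched as a breadth-first traversal. The code below is a minimal illustration under stated assumptions, not the implementation from Section 3.4: the adjacency-map layout, the function name `extract_subgraph`, and the sample graph (including the "Bandwidth", "Signal System", and "Interlocking" nodes) are hypothetical. The `visited` set is also an added assumption that guards against revisiting nodes when the graph contains shared children or cycles.

```python
from collections import deque

# Hypothetical graph: node -> list of (relation category, child node).
GRAPH = {
    "Automatic Fare Collection System": [
        ("physical", "Central Clearing System"),
        ("physical", "Transmission Channel"),
    ],
    "Central Clearing System": [("interface", "Transmission Channel")],
    "Transmission Channel": [("performance", "Bandwidth")],
    "Signal System": [("physical", "Interlocking")],  # unrelated to the target
}


def extract_subgraph(graph, target):
    """Collect every edge reachable from target, one layer at a time."""
    visited = {target}
    queue = deque([target])
    edges = []
    while queue:
        node = queue.popleft()
        for relation, child in graph.get(node, []):
            # Keep the original edge so relationships stay consistent
            # with the full graph.
            edges.append((node, relation, child))
            if child not in visited:
                visited.add(child)
                queue.append(child)  # explored in a later layer
    return edges


afc_edges = extract_subgraph(GRAPH, "Automatic Fare Collection System")
```

In this sketch the traversal stops once the queue is empty, which corresponds to the condition that no more child nodes exist. Edges are copied unchanged, so the extracted subgraph never contains a relationship that is absent from the full graph.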

6. Conclusions and Future Work

6.1. Conclusions

To address the challenges of disorganized requirement management and difficult requirement representation, this paper proposes a method for constructing a prototype requirement representation system based on prompt engineering. The system combines the information extraction capabilities of large language models with the structural representation advantages of knowledge graphs, and it manages requirement documents through a three-layer architecture of requirement analysis, information extraction, and graph construction. Requirements are classified according to the SysML standard, and dedicated information extraction templates and prompt templates are designed for each requirement type. GPT-4 performs the structured data extraction, which is combined with automated processing and manual correction to produce a knowledge graph that presents the semantic relationships and hierarchical structure of the requirements and thus supports requirement analysis and management.

To verify the feasibility of this method, the paper uses Chapters 18–22 of the national standard ‘Code for Design of Metro’ (GB 50157-2013) as a case study to construct a dataset, which is divided into 80% training data and 20% test data. The experiment compares GPT-4 with GPT-3.5-turbo, BERT, and RoBERTa under the same evaluation criteria. GPT-4 achieves an extraction accuracy of 84.76%, which is 5.71 percentage points higher than GPT-3.5-turbo (79.05%) and 25.71 percentage points higher than BERT and RoBERTa (59.05% each). This indicates that the proposed method, which combines an information extraction template with GPT-4, performs well in SR information extraction tasks and outperforms the mainstream deep learning baselines (BERT and RoBERTa) by a wide margin. Furthermore, GPT-4 can perform SR information extraction without extensive data labeling, requiring only a few examples. This highlights the application potential of large language models combined with prompt engineering in requirement management and representation.

In addition, based on the extracted structured data, this paper constructs a requirement representation knowledge graph for the metro domain, which further supports the effectiveness of combining a large language model with prompt engineering and knowledge graph construction. This provides a new technical pathway for intelligent requirement management.

6.2. Future Work

During this study, we also identified limitations of GPT-4 in information extraction tasks. Future research can focus on the following aspects:

- (1) The current work does not fully address the challenges that GPT-4 faces in handling hierarchical dependencies and entity disambiguation. At this stage, multi-level requirement structures and polysemous entities are interpreted primarily by the GPT-4 model itself. Future research could explore integrating GPT-4 with dependency syntax analysis [58] to calibrate and optimize prompt design more precisely. Additionally, applying Chain of Thought (CoT) [59] methods and comparing them with existing approaches could support a deeper analysis of multi-level requirement structures and clarify the specific roles of entities within these requirements, thereby improving the interpretability and accuracy of data extraction using GPT-4.

- (2) The prompts designed in this study use few-shot learning for information extraction; however, other prompting strategies, such as zero-shot, one-shot [60], and CoT, may affect the model’s extraction accuracy, and their effect remains to be studied. Additionally, as a general-purpose language model, GPT-4 may not fully satisfy the stringent accuracy requirements of specific domains when processing domain-specific data. Future research could therefore investigate domain-specific fine-tuning of GPT-4 to improve its accuracy in those specialized fields.

- (3) Although this study designed an automatic format validation mechanism to correct hallucinations and inconsistencies in the structured data generated by GPT-4, the model is still influenced by its training data [61] during semantic understanding and content generation, which leads to errors in some cases. To address this issue, future research could explore more refined post-processing mechanisms that verify the accuracy of generated content and reduce the occurrence of hallucinations.

- (4) Due to the complexity of node relationships in Chinese requirement texts, the current system has not yet implemented uniform relationship edges connecting all nodes, which remains a problem for further investigation. Additionally, this paper focuses mainly on requirement document representation, and there is still significant room for exploring the implicit relationships between different requirements and requirement mining. Future work could examine these aspects in more depth.

Author Contributions

L.W.: Conceptualization, Resources, Writing – review and editing; M.-C.W.: Conceptualization, Methodology, Software, Writing – original draft; Y.-R.Z.: Methodology, Investigation, Visualization, Validation; J.M.: Writing – review and editing; H.-Y.S.: Writing – review and editing; Z.-X.C.: Methodology, Data curation, Writing – review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Key Research and Development Projects of China (No. 2024YFB3309900).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Li, B.; Nong, X. Automatically classifying non-functional requirements using deep neural network. Pattern Recognit. 2022, 132, 108948.

- Aslam, K.; Iqbal, F.; Altaf, A.; Hussain, N.; Villar, M.G.; Flores, E.S.; De La Torre Díez, I.; Ashraf, I. Detecting Pragmatic Ambiguity in Requirement Specification Using Novel Concept Maximum Matching Approach Based on Graph Network. IEEE Access 2024, 12, 15651–15661.

- Wang, Z.; Pan, J.-S.; Chen, Q.; Yang, S. BiLSTM-CRF-KG: A Construction Method of Software Requirements Specification Graph. Appl. Sci. 2022, 12, 6016.

- Majidzadeh, A.; Ashtiani, M.; Zakeri-Nasrabadi, M. Multi-type requirements traceability prediction by code data augmentation and fine-tuning MS-CodeBERT. Comput. Stand. Interfaces 2024, 90, 103850.

- Gupta, A.; Siddiqui, S.T.; Qidwai, K.A.; Haider, A.S.; Khan, H.; Ahmad, M.O. Software Requirement Ambiguity Avoidance Framework (SRAAF) for Selecting Suitable Requirement Elicitation Techniques for Software Projects. In Proceedings of the 2022 IEEE International Conference on Current Development in Engineering and Technology (CCET), Bhopal, India, 23–24 December 2022; pp. 1–6.

- Kasauli, R.; Knauss, E.; Horkoff, J.; Liebel, G.; de Oliveira Neto, F.G. Requirements engineering challenges and practices in large-scale agile system development. J. Syst. Softw. 2021, 172, 110851.

- Pauzi, Z.; Capiluppi, A. Applications of natural language processing in software traceability: A systematic mapping study. J. Syst. Softw. 2023, 198, 111616.

- Friedenthal, S.; Moore, A.; Steiner, R. A Practical Guide to SysML: The Systems Modeling Language; Morgan Kaufmann: Burlington, MA, USA, 2009.

- Wu, Z.; Ma, G. NLP-based approach for automated safety requirements information retrieval from project documents. Expert Syst. Appl. 2024, 239, 122401.

- Herber, D.R.; Narsinghani, J.B.; Shahroudi, K.E. Model-Based Structured Requirements in SysML. In Proceedings of the 2022 IEEE International Systems Conference (SysCon), Montreal, QC, Canada, 25–28 April 2022; pp. 1–8.

- Zhong, S.; Scarinci, A.; Cicirello, A. Natural Language Processing for systems engineering: Automatic generation of Systems Modelling Language diagrams. Knowl. Based Syst. 2023, 259, 110071.

- Zhao, L.; Alhoshan, W.; Ferrari, A.; Letsholo, K.J.; Ajagbe, M.A.; Chioasca, E.-V.; Batista-Navarro, R.T. Natural Language Processing for Requirements Engineering: A Systematic Mapping Study. ACM Comput. Surv. 2021, 54, 1–41.

- Chen, J.; Hu, B.; Diao, W.; Huang, Y. Automatic generation of SysML requirement models based on Chinese natural language requirements. In Proceedings of the 2022 6th International Conference on Electronic Information Technology and Computer Engineering, Xiamen, China, 21–23 October 2022; pp. 242–248.

- Rula, A.; D’Souza, J. Procedural Text Mining with Large Language Models. In Proceedings of the 12th Knowledge Capture Conference 2023, Pensacola, FL, USA, 5–7 December 2023.

- Son, M.; Won, Y.-J.; Lee, S. Optimizing Large Language Models: A Deep Dive into Effective Prompt Engineering Techniques. Appl. Sci. 2025, 15, 1430.

- Sundberg, L.; Holmström, J. Innovating by prompting: How to facilitate innovation in the age of generative AI. Bus. Horiz. 2024, 67, 561–570.

- Giray, L. Prompt Engineering with ChatGPT: A Guide for Academic Writers. Ann. Biomed. Eng. 2023, 51, 2629–2633.

- Peng, C.; Xia, F.; Naseriparsa, M.; Osborne, F. Knowledge Graphs: Opportunities and Challenges. Artif. Intell. Rev. 2023, 56, 13071–13102.

- Lehnen, N.C.; Dorn, F.; Wiest, I.C.; Zimmermann, H.; Radbruch, A.; Kather, J.N.; Paech, D.; Panzer, A. Data Extraction from Free-Text Reports on Mechanical Thrombectomy in Acute Ischemic Stroke Using ChatGPT: A Retrospective Analysis. Radiology 2024, 311, e232741.

- Nguyen, H.L.; Vu, D.T.; Jung, J.J. Knowledge graph fusion for smart systems: A Survey. Inf. Fusion 2020, 61, 56–70.

- Zhou, W. Research on information extraction technology applied for knowledge graphs. Appl. Comput. Eng. 2023, 4, 26–31.

- Abdullah, M.H.A.; Aziz, N.; Abdulkadir, S.J.; Alhussian, H.S.A.; Talpur, N. Systematic Literature Review of Information Extraction From Textual Data: Recent Methods, Applications, Trends, and Challenges. IEEE Access 2023, 11, 10535–10562.

- Wang, Z.; Li, T.; Li, Z. Unsupervised Numerical Information Extraction via Exploiting Syntactic Structures. Electronics 2023, 12, 1977.

- Chen, C.; Tao, Y.; Li, Y.; Liu, Q.; Li, S.; Tang, Z. A structure-function knowledge extraction method for bio-inspired design. Comput. Ind. 2021, 127, 103402.

- Wang, D.; Liu, L.; Liu, Y. Normalized Storage Model Construction and Query Optimization of Book Multi-Source Heterogeneous Massive Data. IEEE Access 2023, 11, 96543–96553.

- Tabebordbar, A.; Beheshti, A.; Benatallah, B.; Barukh, M.C. Feature-Based and Adaptive Rule Adaptation in Dynamic Environments. Data Sci. Eng. 2020, 5, 207–223.

- Yadav, A.; Patel, A.; Shah, M. A comprehensive review on resolving ambiguities in natural language processing. AI Open 2021, 2, 85–92.

- Zhang, R.; El-Gohary, N. Hierarchical Representation and Deep Learning–Based Method for Automatically Transforming Textual Building Codes into Semantic Computable Requirements. J. Comput. Civ. Eng. 2022, 36, 04022022.

- Liu, J.; Gao, L.; Guo, S.; Ding, R.; Huang, X.; Ye, L.; Meng, Q.; Nazari, A.; Thiruvady, D. A hybrid deep-learning approach for complex biochemical named entity recognition. Knowl. Based Syst. 2021, 221, 106958.

- Liu, P.; Guo, Y.; Wang, F.; Li, G. Chinese named entity recognition: The state of the art. Neurocomputing 2022, 473, 37–53.

- Ji, W.; Wen, K.; Ding, L.; Song, B. A Relation Extraction Method Based on Multi-layer Index and Cascading Binary Framework. In Advanced Data Mining and Applications; Springer: Singapore, 2024; pp. 113–126.

- Parikh, A. Information Extraction from Unstructured data using Augmented-AI and Computer Vision. arXiv 2023.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2023.

- Polak, M.P.; Morgan, D. Extracting accurate materials data from research papers with conversational language models and prompt engineering. Nat. Commun. 2024, 15, 1569.

- Wang, X.; Huang, L.; Xu, S.; Lu, K. How Does a Generative Large Language Model Perform on Domain-Specific Information Extraction? A Comparison between GPT-4 and a Rule-Based Method on Band Gap Extraction. J. Chem. Inf. Model. 2024, 64, 7895–7904.

- Tang, Y.; Xiao, Z.; Li, X.; Zhang, Q.; Chan, E.W.; Wong, I.C.; Force, R.D.C.T. Large Language Model in Medical Information Extraction from Titles and Abstracts with Prompt Engineering Strategies: A Comparative Study of GPT-3.5 and GPT-4. medRxiv 2024, 2024, 24304572.

- Davoodi, L.; Mezei, J. A Large Language Model and Qualitative Comparative Analysis-Based Study of Trust in E-Commerce. Appl. Sci. 2024, 14, 10069.

- Dagdelen, J.; Dunn, A.; Lee, S.; Walker, N.; Rosen, A.S.; Ceder, G.; Persson, K.A.; Jain, A. Structured information extraction from scientific text with large language models. Nat. Commun. 2024, 15, 1418.

- Xing, X.; Chen, P. Entity Extraction of Key Elements in 110 Police Reports Based on Large Language Models. Appl. Sci. 2024, 14, 7819.