Featured Application

A novel automatic segmentation approach for quality assessment in robotic gas metal arc welding.

Abstract

In industry, the robotic gas metal arc welding (GMAW) process has a wide range of applications, including the automotive sector, construction companies, the shipping industry, and many more. Automatic quality inspection in robotic welding is crucial because it ensures the uniformity, strength, and safety of welded joints without the need for constant human intervention. Detecting defects in real time prevents defective products from reaching later production stages, reducing reprocessing costs. In addition, material use is optimized by avoiding defective welds that require rework, contributing to cleaner production. This paper presents a novel dataset of robotic GMAW images for experimental purposes, including human-expert segmentation and expert labeling of the different errors that may appear in welding. In addition, it tests an automatic segmentation approach for robotic GMAW quality assessment. The results confirm that automatic segmentation is comparable to human segmentation, supporting a reliable welding quality assessment that can provide feedback on the robot welding process.

1. Introduction

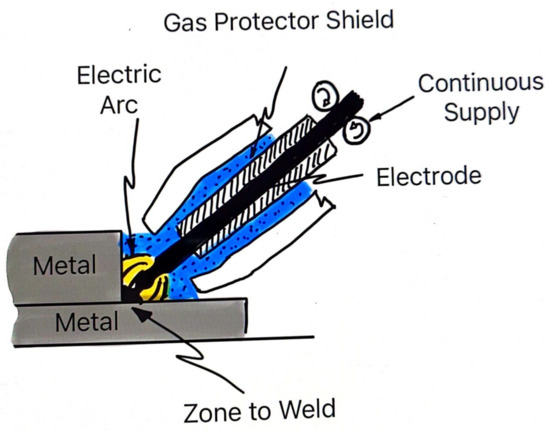

The American Welding Society (AWS) defines GMAW as a welding process that uses an electric arc between a continuously fed consumable electrode and the area of the material to be welded. The main characteristic of this process is that it requires a shielding gas, as shown in Figure 1, according to the “Standard Welding Terms and Definitions” of the AWS A3.0 standard [1]. This process is generally used with automatic or semi-automatic welding equipment [2].

Figure 1.

Elements of the GMAW process in fitting welding.

The robotic GMAW process has a wide range of industrial applications, including the automotive sector, construction companies, the shipping industry, and many more. Nowadays, with modern manufacturing and Industry 4.0 applications, industries need to monitor welding quality, waste generation, and contamination, and to reduce process costs [3]. The fundamental problem is to monitor whether the robot deposits the weld bead correctly, given many input variables such as output current, pulse time, voltage, wire feed speed, geometry profile, and robot path [1]. In this process, toxic fumes that pollute the environment are released when the steel is exposed to the high temperatures of the electric arc in the argon and carbon dioxide shielding gas. Every minute spent welding a part therefore adds to its environmental footprint and energy consumption.

In addition, when parts are manufactured in series, the operation time, from the start of welding until the weld seam is completed, is precisely defined. Therefore, the inspection time should not exceed the operation time [4].

Taking this period as a reference, several techniques exist for inspecting the parts. Currently, quality assessment is performed by a specialist, although automated inspection is increasingly being developed and implemented. One such quality control method is to photograph the welded parts and analyze features such as their geometry, color, and path.

The main goal is to replace the specialist with an artificial intelligence method that uses an inexpensive camera and free software [5]. Recently, several methods for the automatic analysis of robotic weld seams have been developed. They differ in how the weld image is acquired, which features are analyzed in the image, and which image processing algorithm gives the best results.

The choice of a specific model depends mainly on image quality. In this sense, there are high-cost cameras built to withstand the high temperatures inherent to the welding process, and low-cost cameras, such as webcams, which are less robust and must be placed far from the welding robot.

Another difference lies in the image processing techniques. In some works, specialized software (usually supplied with the camera) is used, and only the segments need to be identified. In other studies, free software has been used, which requires filtering the image to clean it before identifying the features of interest [3,5].

Research has considered different cameras for the welding process, as well as additional elements such as the welding electrode’s distance, width, area, and angle. When low-cost cameras such as webcams are used, more elaborate processing methods are needed, such as YOLOv5 (You Only Look Once), PAN (Path Aggregation Network), CNN (Convolutional Neural Network), and FPN (Feature Pyramid Network) [6].

The automatic segmentation of welding images is a crucial advancement in developing solutions for welding processes, particularly when aiming for both environmental and economic sustainability. This process involves using computer vision and machine learning techniques to analyze and segment images captured during welding to identify key features such as weld beads, joint quality, heat-affected zones, and defects.

Furthermore, accurately identifying the region of interest (ROI) in weld seam images is essential for quality assurance in industrial manufacturing. However, this is a significant challenge, as incorrect ROI identification can allow welding defects to go undetected, compromising the structural integrity of the parts [4]. In this study, a new approach based on Rembg is proposed to overcome these limitations.

The main contributions of this paper are:

- A publicly available real-world GMAW welding dataset for quality assessment

- Human-expert segmentation and labeling of the welding images in the dataset, including some of the most common errors

- An evaluation of automatic segmentation by traditional and deep neural network-based methods

This project also has a social impact, which includes improving the working conditions of the people involved in this environment, reducing environmental impact toward carbon (CO2) neutrality, and contributing to Industry 5.0, which aims to establish a smart, sustainable, and circular industrial zone that benefits businesses and society.

The rest of this paper is organized as follows: Section 2 covers related works on welding quality assessment. Section 3 presents the collected data, the corresponding benchmark dataset of GMAW images, and the segmentation methodology. Section 4 evaluates the segmentation algorithms against human segmentation, including deep neural network-based segmentation. Finally, Section 5 gives the conclusions and avenues for future research.

2. Related Works

This section presents several related works in the field of automatic welding quality assessment that justify the relevance of this topic and describe the background of the field. Notably, research on using computers to improve welding quality assessment dates back to 1968 [7].

Pico et al. [4] reviewed the use of machine learning models in the metallurgical and other industries. Their results showed the adaptability and potential applicability of artificial intelligence techniques to almost any stage of the manufacturing process of any product, owing to complementary techniques that adapt to the particularities of the data, production processes, and quality requirements.

Automatic weld quality detection is a growing research topic due to its direct impact on the safety, reliability, and efficiency of various industries, such as automotive, aerospace, shipbuilding, and infrastructure manufacturing. This field of research is of great importance for several reasons, including the following:

- Safety: Defective welds can lead to severe structural failures in products or infrastructure, posing a significant risk to human safety. Detecting these defects early can prevent accidents and loss of life.

- Efficiency: Inspecting welds manually is a process that can be slow, expensive, and prone to human error. Automatic systems can speed up inspection and allow more quality tests to be performed in less time, improving productivity and reducing manufacturing costs.

- Quality and Consistency: Weld quality can vary depending on factors such as welder technique, environmental conditions, and material quality. Automatic detection methods can provide a more accurate and consistent weld quality assessment compared to traditional visual inspections.

- Cost Reduction: Automatic detection can reduce costs associated with repairing defective welds and labor costs for inspection. It also improves traceability and quality management throughout the manufacturing process.

- Defect Complexity: Welds can have very complex defects that are not always visible to the naked eye, and their exact location can be challenging to determine. Detecting them using automatic technologies, such as ultrasound, X-ray, or computer vision, requires advanced approaches to ensure that potential flaws are not overlooked.

Several studies related to the use of machine learning algorithms to improve welding processes are reviewed below.

GMAW is widely studied because of its extensive industrial use, for example in automotive and heavy equipment manufacturing, owing to its high productivity and processing speed and the mechanical properties it provides [8]. Its automatic quality control dates back to 1980 [9].

Panttilä et al. [10] investigated using Artificial Neural Networks (ANN) to develop an adaptive GMAW parameter control system. Their study combined a computer vision system with an artificial neural network-based welding parameter control system to achieve a more consistent welding result and higher weld quality under varying welding conditions. The study further enabled the development of an ANN-controlled robotic welding system for multi-pass butt welding on a 12 mm S420MC plate without root support. It was also established how the developed ANN system adapted to varying welding conditions and pit welds in a robotic welding application. Their later experiments [11] included using the Levenberg–Marquardt Algorithm (LMA) for ANN training and incorporating a welding adaptive feedback control system for welding process parameters in a laboratory environment.

Reisgen et al. focused on classifying different deviations of the gas metal arc welding process using artificial neural networks [12]. To do so, they used transient optical and electrical data of the welding process. The deviations examined ranged from large process disturbances due to insufficient shielding gas coverage to a slight shift in the process operating point due to a poorly positioned welding torch. Their study showed that the best results were obtained when optical (photodiode) and electrical (current and voltage) process data were used together [12].

Njock-Bayock et al. [13] also used artificial neural networks, in their case to determine the mechanical properties of high-strength steels. Their study generated yield and tensile strength data as a function of the welding parameters, using a filler wire with a similar carbon equivalent. They stressed the importance of considering additional factors, such as filler wire characteristics, to accurately predict the mechanical properties of welded joints.

Nomura et al. focused on burn-through prediction and weld depth estimation in GMAW [14]. The study attributed the variation in over-penetration and burn-through among samples to the unavoidable influence of disturbances during welding and to the instability of the welding arc. They developed a deep convolutional neural network model that satisfactorily assessed both phenomena.

Designing a static feature pattern that allows consistent detection of the weld pool boundary or position under different welding conditions is relatively complicated. Yu et al. [15] also used deep neural networks for this purpose. Their study aimed to automatically track the weld pool boundary to monitor the process and collect operational and process data for operator analysis and modeling. In their case study, intense arc radiation and unpredictable operation presented additional challenges, which were overcome using U-Net architectures.

Enríquez et al. [16] also used deep neural networks to improve welding. Their research focused on identifying and classifying the weld current. They tested several architectures (in alphabetical order: DarkNet53 [17], DenseNet201 [18], EfficientNetB0 [19], InceptionV3 [20], MobileNetV2 [21], NASNetLarge [22], ResNet18 [23], ResNet101 [23], and Xception [24]) for weld current detection and classification, with good results.

In addition, Yang and Zou designed lightweight networks for automatic weld seam tracking [25]. They used denoising and object detection, followed by information-driven structural pruning with Bayesian optimization.

Reddy et al. [5] focused on a machine vision-based algorithm for robotic weld path detection, gap measurement, and weld length calculation in the GMAW process. They used a low-cost webcam mounted on the robotic arm to capture images, which were then converted to grayscale. In addition, YOLOv5 [26] was used to identify atypical weld regions.

Li et al. leveraged Generative Adversarial Networks combined with Gated Recurrent Units in a human–robot collaborative system for GMAW [27]. Their system used time-series torch movement data and corresponding weld pool images.

From a different perspective, Wu et al. [28] pioneered the use of fuzzy sets for GMAW analysis. Using only the measured welding voltage and current signals, they developed a fuzzy logic system capable of recognizing common disturbances during automatic GMAW and of classifying experiments as disturbed or undisturbed.

Cavazos-Hernández et al. also employed fuzzy systems to assess the geometry and quality of weld beads [29]. They emphasized the uncertainties of the semi-automated GMAW process and leveraged soft computing to obtain satisfactory results. Their prediction model considered parameters such as wire feed speed, travel speed, contact tip-to-work distance, and electrode working angle.

In addition, Wordofa et al. [30] applied fuzzy logic to GMAW quality assessment. They proposed a fuzzy model that uses expert fuzzy rules, triangular membership functions, and the centroid (center-of-area) defuzzification method. This enabled them to predict acceptable parameters for robotic gas metal arc welding that meet the quality standards set out in AWS B4.0:2016 and BS EN ISO 5817:2014.
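To illustrate the two ingredients named above, the following Python sketch implements a triangular membership function and centroid defuzzification on a discretized output universe, with Mamdani min/max inference. It is a generic textbook construction, not Wordofa et al.'s model: the 0 to 10 quality score, the output sets `poor` and `good`, and the rule firing strengths are hypothetical.

```python
import numpy as np

def triangular(x, a, b, c):
    """Triangular membership: 0 at a and c, 1 at the peak b (requires a < b < c)."""
    x = np.asarray(x, dtype=float)
    rising = (x - a) / (b - a)
    falling = (c - x) / (c - b)
    return np.clip(np.minimum(rising, falling), 0.0, 1.0)

def centroid(universe, membership):
    """Centroid (center-of-area) defuzzification on a uniformly sampled universe."""
    total = membership.sum()
    if total == 0:
        raise ValueError("no rule fired: aggregated fuzzy set is empty")
    return float((universe * membership).sum() / total)

# Hypothetical output variable: a weld quality score on [0, 10]
score = np.linspace(0.0, 10.0, 1001)
poor = triangular(score, 0.0, 2.5, 5.0)
good = triangular(score, 5.0, 7.5, 10.0)

def infer(strength_poor, strength_good):
    """Mamdani inference: clip each output set at its rule strength (min), aggregate with max."""
    aggregated = np.maximum(np.minimum(poor, strength_poor),
                            np.minimum(good, strength_good))
    return centroid(score, aggregated)

print(round(infer(1.0, 0.0), 3))  # 2.5, the peak of the symmetric "poor" set
print(round(infer(0.2, 0.8), 3))  # shifted toward the "good" set
```

The discrete centroid, sum(x·μ)/sum(μ), approximates the continuous center of area when the universe is sampled uniformly; a finer grid gives a closer approximation.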

Chen et al. [31] analyzed weld deposition behavior using images captured by a high-speed camera in a short-circuit GMAW process. Their study focused on recognizing the weld’s length, grain size, and microstructure. In contrast, Wang et al. [32] studied robotic GMAW beads for additive manufacturing, aiming to find the optimal voltage, wire feed speed, and travel speed.

Aoyade and Steele focused on developing an online welding quality assessment system [33]. To do this, they relied on radiographic data to detect subsurface porosity, assess defects, and show how to objectively quantify the occurrence of porosity at the surface. This research analyzed individual 1 mm thick sections of data and the corresponding weld section results, identifying each section as a pass or fail weld and labeling it accordingly.

Cullen and Ji [34] proposed an innovative approach based on acoustic signal monitoring to automatically detect faults in GMAW by tracking changes in the droplet transfer mode during welding. This contributes to a long-standing research topic [35]. The proposed fault detection algorithm showed promising results, exhibiting a remarkable ability to identify and locate defects such as burn-through and porosity in the different natural droplet transfer modes in GMAW.

Biber et al. [36] analyzed robotic GMAW using vision systems, including a monochrome camera whose real-time images are used to extract geometric information and measure the weld bead.

In this research, we focused on using low-cost cameras for GMAW quality assessment.

3. Materials and Methods

This section details how the images of the weld beads on the metal parts were obtained and describes the composition of the dataset, i.e., which weld beads are good, which have defects that can be corrected, and which should be discarded. It then describes the hardware and software used in this study and the automatic segmentation methodology.

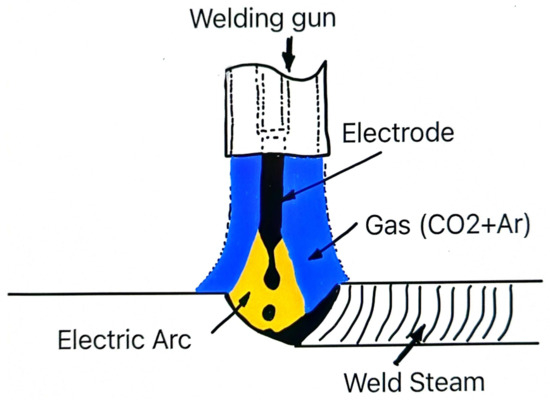

3.1. Dataset of Images

The images in this research come from robotic welding practices carried out at the School of Mechanical and Electrical Engineering (ESIME) Azcapotzalco Unit. The shielding gas used in the school’s MAG welding practice is a mixture of carbon dioxide (CO2) and argon (Ar). This gas is supplied through the welding gun to protect the electric arc and the molten weld metal from the surrounding atmosphere, as shown in Figure 2.

Figure 2.

Elements of the GMAW process.

The automotive parts used in this investigation are made of low-carbon black sheet plates, 0.25 inch thick, cut by water jet. The parts were machined from ASTM A1011/1018 steel, known as black sheet or black iron, which is classified as hot-rolled steel sheet and designated Commercial Steel Type A (CS Type A). Table 1 and Table 2 provide its chemical composition and mechanical properties, respectively.

Table 1.

Chemical composition of the parts.

Table 2.

Mechanical properties of the parts.

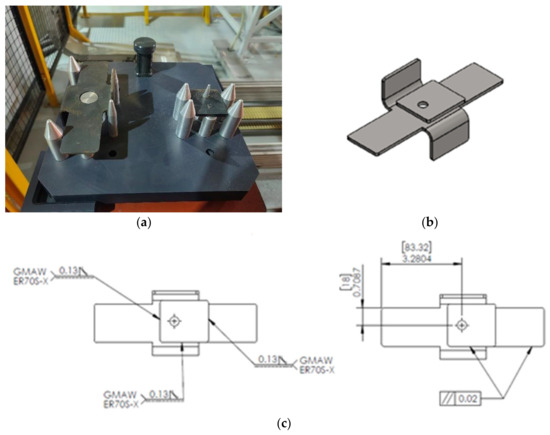

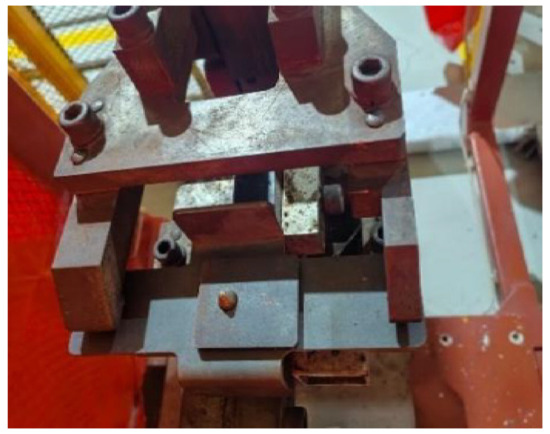

The assembly consists of two parts, a cross and a small square, both carried on a base called a pallet, as shown in Figure 3.

Figure 3.

Automotive parts. (a) Pallet with two automotive parts; (b) sketch of the welded parts; (c) geometrical properties of the parts and welding specifications.

During welding, both parts are held in automatic fixtures called clamps (Figure 4). The clamps serve two purposes: first, they prevent the parts from moving during welding; second, because they are metallic and in contact with the parts, they conduct electricity.

Figure 4.

Automotive parts in clamps.

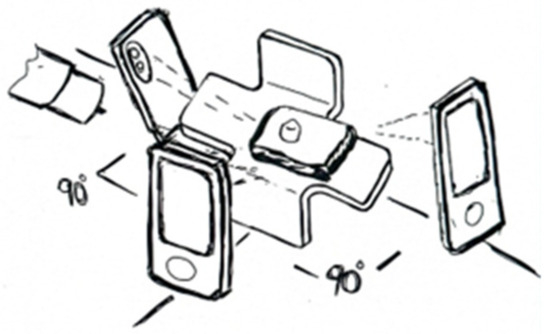

The experimental setup was as follows. The robot deposits the three weld beads in a program cycle previously written by a student. When the torch moves away and the clamps release the welded piece, an operator approaches, stands at the positions shown in Figure 5, and takes the photographs, trying to keep the cell phone perpendicular to the faces of the part. A limitation of our study is the possible variation in the distance between the operator’s hand and the parts.

Figure 5.

Cell phone position relative to the welded parts.

The welding process parameters (Wire feed, Trim, Voltage, Travel speed, Pulse, Air mix, and Feedback Current) are detailed in Table 3.

Table 3.

Parameters of the welding process.

To assess welding quality, the school defines four categories:

- Good weld seam (Good)

- Bad weld seam that can be reworked by a human expert (Human)

- Bad weld seam that can be reworked by a robot (Robot)

- Very bad weld seam, discarded as scrap (Scrap)

Table 4 presents the qualitative description of each category.

Table 4.

Qualitative criteria for the four categories analyzed.

In addition to the raw images, the dataset includes human-expert knowledge about several common errors in the welding process: incorrect trajectory, lack of welding, the need to remove weld spatter or the weld seam, and the presence of holes. Each image is labeled with its specific errors or, if applicable, as a good weld.

The weld seams were not evaluated quantitatively, by length or height measurements, but qualitatively, through visual geometric parameters in the images. A human expert manually labeled the images into the four categories.
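The labeling scheme described above, four quality categories plus a set of error types, maps naturally onto a small typed record. The Python sketch below is hypothetical: the class and field names, the example file names, and the consistency rule (a Good seam lists no errors, every other category lists at least one) are assumptions for illustration, not the format in which the dataset is distributed.

```python
from dataclasses import dataclass, field
from enum import Enum

class Category(Enum):
    GOOD = "Good"    # good weld seam
    HUMAN = "Human"  # bad seam, can be reworked by a human expert
    ROBOT = "Robot"  # bad seam, can be reworked by a robot
    SCRAP = "Scrap"  # very bad seam, discarded as scrap

class WeldError(Enum):
    INCORRECT_TRAJECTORY = "incorrect trajectory"
    LACK_OF_WELDING = "lack of welding"
    REMOVE_SPATTER = "remove weld spatter"
    REMOVE_SEAM = "remove weld seam"
    HOLES = "presence of holes"

@dataclass
class WeldLabel:
    image_file: str  # hypothetical file name, not the dataset's naming scheme
    category: Category
    errors: frozenset = field(default_factory=frozenset)

    def __post_init__(self):
        # Assumed rule: Good <=> no errors listed; defective categories list at least one
        if (self.category is Category.GOOD) == bool(self.errors):
            raise ValueError(
                f"{self.category.value} label inconsistent with errors {set(self.errors)}")

    @property
    def reworkable(self):
        """True for seams that can be reworked rather than accepted or scrapped."""
        return self.category in (Category.HUMAN, Category.ROBOT)

label = WeldLabel("img_001.jpg", Category.ROBOT, frozenset({WeldError.HOLES}))
print(label.reworkable)  # True
```

Using enumerations rather than free-text strings keeps the category and error vocabularies closed, so a typo in an annotation fails at load time instead of silently creating a new class.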

This dataset allows for quickly and accurately identifying the most common defects that can appear during welding. The images are a visual reference for technicians, engineers, and students, helping them recognize problems in practice. In addition, images serve to illustrate what not to do, which is crucial for training. In the School of Mechanical and Electrical Engineering (ESIME) Azcapotzalco Unit, the students can study the visual examples of errors and learn how to avoid them, improving welding quality. They can also better understand what an error looks like in the process, which complements the theoretical knowledge acquired.

Finally, from the perspective of research and development of advanced technologies, such as automated weld inspection using computer vision and artificial intelligence, a dataset of real-world welding images with examples of errors allows machine learning models to be trained to recognize faults automatically. This can optimize inspection and reduce the margin of human error.

The dataset of welding images will be donated to the University of California, Irvine (UCI) Machine Learning Repository to make it publicly available. In the meantime, researchers can download it from this link: https://github.com/MetatronEMDI/Welding-Dataset-ESIME, accessed on 19 February 2025.

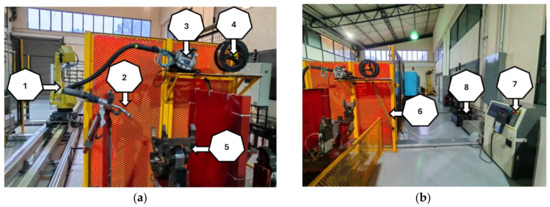

3.2. Hardware and Software Used

The welding equipment used in the Automotive Cell Laboratory is shown in Figure 6, and its characteristics are described below:

Figure 6.

Equipment of the Automotive Cell Laboratory, ESIME Azcapotzalco Unit–IPN: (a) first view; (b) second view.

- Fanuc Robot M710iC/50

- Lincoln Electric welding gun

- Wire feeder: Lincoln Electric AutoDrive 4R90

- Welding wire

- Clamps

- Safety device

- Fanuc robot controller R30iA

- Power source: Lincoln Electric Power Wave i400

We obtained the images with a Huawei Nova 10 cellphone with a 50 MP Ultra Vision Photography camera.

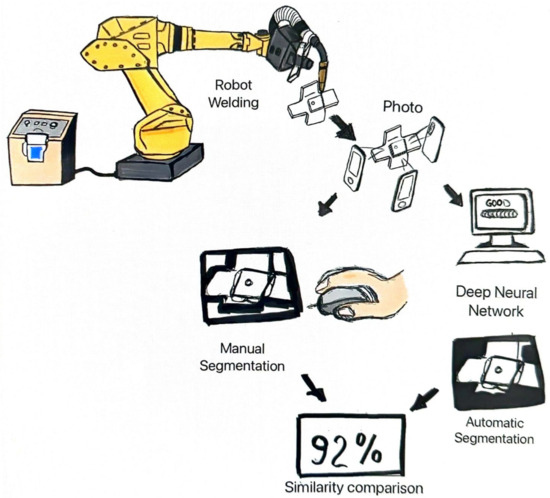

3.3. Automatic Segmentation Methodology

The automated inspection of weld beads in the metallurgical industry, particularly in GMAW processes, plays a fundamental role in ensuring the quality and structural integrity of the welded components. Defect identification in welded joints remains a critical challenge, as undetected flaws can compromise mechanical performance and lead to premature failure of industrial structures [6,37,38].

Traditional inspection methods rely on visual examination by certified welders, an inherently time-consuming process susceptible to human error. To overcome these limitations, it is necessary to implement advanced image segmentation techniques to identify regions of interest accurately without manual intervention.

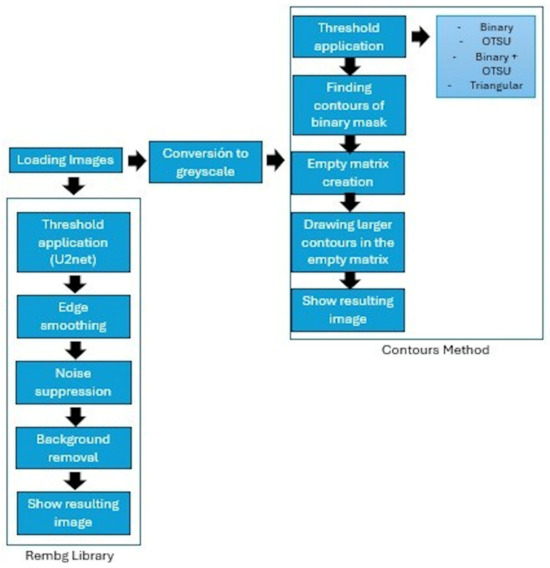

The research methodology of this paper includes the welding, the image acquisition, and the segmentation of the region of interest (Figure 7).

Figure 7.

Research methodology.

This study acquired a dataset of 30 weld bead images under controlled conditions to facilitate a comprehensive analysis of segmentation performance. These images encompass a range of weld bead characteristics, including geometric irregularities, porosity, and structural deviations (Figure 8), providing a representative sample for evaluating segmentation accuracy and reliability.

Figure 8.

Image of welding seams in the automotive parts. The image presents the photograph captured in the central position. This image will be further used as a reference throughout the paper.

A human expert manually delineated the ROI within the automotive component to evaluate the performance of various segmentation algorithms. This expert segmentation served as the ground truth for comparative analysis, enabling an objective assessment of each algorithm’s ability to identify the region of interest accurately.

Original images were acquired in RGB format and converted to grayscale to standardize preprocessing and enhance contrast for segmentation. Multiple segmentation techniques were then applied to determine their effectiveness in isolating the ROI. The algorithms implemented included binary segmentation, binary segmentation combined with Otsu thresholding, standalone Otsu thresholding, and binary segmentation combined with triangular thresholding.
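The thresholding variants listed above can be sketched in plain NumPy. This is an illustrative re-implementation, not the authors' code: the grayscale weights are the ITU-R BT.601 luma coefficients (the ones OpenCV's `cv2.cvtColor` uses for RGB to gray), the fixed threshold of 127 for plain binary segmentation is a hypothetical value, and the triangle method is a simplified version that may differ by a bin from OpenCV's `THRESH_TRIANGLE`.

```python
import numpy as np

def to_gray(rgb):
    """RGB (H, W, 3) uint8 -> grayscale uint8 using BT.601 luma weights."""
    rgb = np.asarray(rgb, dtype=float)
    gray = 0.299 * rgb[..., 0] + 0.587 * rgb[..., 1] + 0.114 * rgb[..., 2]
    return np.clip(np.rint(gray), 0, 255).astype(np.uint8)

def binary(gray, t):
    """Binary mask: 255 where gray > t, else 0 (same convention as cv2.THRESH_BINARY)."""
    return np.where(gray > t, 255, 0).astype(np.uint8)

def otsu_threshold(gray):
    """Threshold t that maximizes the between-class variance of the gray-level histogram."""
    prob = np.bincount(gray.ravel(), minlength=256) / gray.size
    omega = np.cumsum(prob)                  # probability of class {<= t}
    mu = np.cumsum(prob * np.arange(256))    # first moment of class {<= t}
    mu_total = mu[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        between = (mu_total * omega - mu) ** 2 / (omega * (1.0 - omega))
    between[~np.isfinite(between)] = 0.0     # an empty class contributes no variance
    return int(np.argmax(between))

def triangle_threshold(gray):
    """Simplified triangle method: the histogram bin lying farthest below the line
    that joins the histogram peak to the far end of its longer tail."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    occupied = np.flatnonzero(hist)
    lo, hi = int(occupied[0]), int(occupied[-1])
    peak = int(np.argmax(hist))
    end = lo if peak - lo > hi - peak else hi
    if end == peak:
        return peak
    xs = np.arange(min(peak, end), max(peak, end) + 1)
    line = hist[peak] + (hist[end] - hist[peak]) * (xs - peak) / (end - peak)
    # For a fixed line, the vertical gap is proportional to the perpendicular distance
    return int(xs[np.argmax(line - hist[xs])])

def segment_variants(rgb, fixed_t=127):  # fixed_t is a hypothetical value
    gray = to_gray(rgb)
    return {
        "binary": binary(gray, fixed_t),
        "binary+otsu": binary(gray, otsu_threshold(gray)),
        "binary+triangle": binary(gray, triangle_threshold(gray)),
    }
```

In this sketch, standalone Otsu and binary + Otsu produce the same mask; the text does not specify how the authors' two Otsu variants differed.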

Once the binary masks were generated, the Contours method was employed to identify and extract the most prominent contours within the segmented images. A blank matrix matching the original image’s dimensions was created to facilitate further analysis, onto which the largest detected contours were mapped.
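The text does not give the implementation of the Contours step. In OpenCV it would typically be `cv2.findContours` followed by `cv2.drawContours` on a zero matrix; the dependency-free sketch below swaps in a closely related technique, keeping the largest 8-connected foreground region, found by breadth-first flood fill, and writing it onto a blank matrix of the image's size. Unlike drawing a filled contour, this does not fill interior holes.

```python
from collections import deque

import numpy as np

def largest_component(mask):
    """Return a blank uint8 image of the mask's shape in which only the largest
    8-connected foreground region of `mask` is set to 255."""
    fg = np.asarray(mask) > 0
    h, w = fg.shape
    labels = np.zeros((h, w), dtype=np.int32)  # 0 = not yet visited
    best_label, best_size, current = 0, 0, 0
    for r in range(h):
        for c in range(w):
            if not fg[r, c] or labels[r, c]:
                continue
            current += 1                       # start a new region
            labels[r, c] = current
            size, queue = 0, deque([(r, c)])
            while queue:                       # breadth-first flood fill
                y, x = queue.popleft()
                size += 1
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if 0 <= ny < h and 0 <= nx < w and fg[ny, nx] and not labels[ny, nx]:
                            labels[ny, nx] = current
                            queue.append((ny, nx))
            if size > best_size:
                best_label, best_size = current, size
    out = np.zeros((h, w), dtype=np.uint8)    # blank matrix matching the image size
    if best_label:
        out[labels == best_label] = 255
    return out
```

The pure-Python loop is meant only to make the step explicit; for full-resolution photographs, OpenCV's `cv2.connectedComponentsWithStats` or `cv2.findContours` performs the same kind of operation far faster.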

Additionally, the Rembg library (a neural network-based background removal tool [9]) was used as an alternative segmentation approach. The key steps of this segmentation methodology are illustrated in Figure 9.

Figure 9.

Schematic of the segmentations performed to obtain the ROI.

In our pipeline, the input images were supplied to Rembg in .jpeg format. This technique uses a pre-trained deep learning model (U2Net) to generate an initial segmentation mask. The resulting mask then undergoes post-processing to refine its quality by eliminating artifacts and enhancing contour definition. This refinement is achieved through morphological operations, which reduce noise, improve the structural integrity of the segmented region, and smooth the mask edges.
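The morphological post-processing described above can be sketched as an opening (erosion then dilation, which removes specks smaller than the structuring element) followed by a closing (dilation then erosion, which fills small gaps and holes). The 3 × 3 square structuring element (radius 1) is an assumption, since the text does not specify the operations' parameters, and the input mask stands in for the U2Net mask produced by Rembg; generating that mask is not shown because it requires the pre-trained model weights. Recent rembg releases expose an `only_mask` option on `rembg.remove` for obtaining the mask directly, but check the installed version.

```python
import numpy as np

def erode(mask, radius=1):
    """Binary erosion with a (2r+1) x (2r+1) square; pixels outside the image count as foreground."""
    padded = np.pad(mask, radius, mode="constant", constant_values=True)
    h, w = mask.shape
    out = np.ones((h, w), dtype=bool)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            out &= padded[dy:dy + h, dx:dx + w]
    return out

def dilate(mask, radius=1):
    """Binary dilation with a (2r+1) x (2r+1) square; pixels outside the image count as background."""
    padded = np.pad(mask, radius, mode="constant", constant_values=False)
    h, w = mask.shape
    out = np.zeros((h, w), dtype=bool)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            out |= padded[dy:dy + h, dx:dx + w]
    return out

def refine_mask(mask, radius=1):
    """Opening then closing of a binary mask; returns a uint8 mask with values 0/255."""
    m = np.asarray(mask) > 0
    opened = dilate(erode(m, radius), radius)       # removes isolated noise
    closed = erode(dilate(opened, radius), radius)  # fills small holes, smooths edges
    return closed.astype(np.uint8) * 255
```

Opening before closing is the usual order when the raw mask contains both spurious specks and small holes: removing the specks first prevents the closing step from merging them into the region of interest.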

The background removal process crops the image and combines the original input with its corresponding binary mask, so that only the region of interest remains. Finally, the processed images are saved in the same format as the input data, maintaining consistency for further analysis.
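Combining the original image with its binary mask and cropping can be sketched as follows. Cropping to the bounding box of the mask is an assumption about what "cropping" means here, since the text does not specify it.

```python
import numpy as np

def apply_mask_and_crop(image, mask):
    """Keep only ROI pixels (background set to 0) and crop to the mask's bounding box."""
    image = np.asarray(image)
    fg = np.asarray(mask) > 0
    if fg.shape != image.shape[:2]:
        raise ValueError("mask must match the image's height and width")
    if not fg.any():
        raise ValueError("mask contains no foreground pixels")
    keep = fg[..., None] if image.ndim == 3 else fg  # broadcast over color channels
    masked = np.where(keep, image, 0).astype(image.dtype)
    rows = np.flatnonzero(fg.any(axis=1))           # rows containing ROI pixels
    cols = np.flatnonzero(fg.any(axis=0))           # columns containing ROI pixels
    return masked[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]
```

The function works for both color (H, W, 3) and grayscale (H, W) images, and it preserves the input dtype so the result can be written back in the original format.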

4. Results and Discussion

This section presents and analyses the results obtained in image segmentation, evaluating the performance of the proposed methods compared to manual segmentation performed by an expert. The accuracy of region of interest (ROI) identification is analyzed, and the advantages and limitations of each technique are discussed.

4.1. Comparison with Manual Segmentation

Manual segmentation by an expert provides a fundamental benchmark for evaluating the effectiveness of the automatic methods. In this study, the expert manually identified and marked the ROI in each image, which allows validation of the results obtained by the automatic algorithms. This ground truth is especially valuable for images with complex backgrounds or similarly textured elements that can make segmentation difficult.

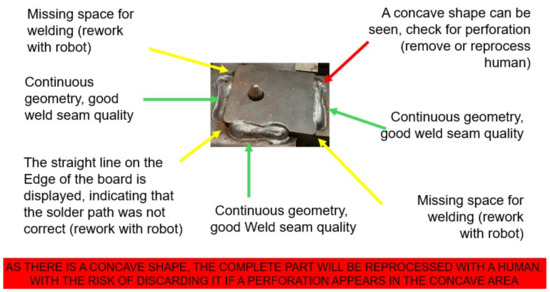

Figure 10 shows an example of the manual segmentation performed by the expert. The image includes the annotation of different areas of interest that are highlighted. Yellow arrows indicate regions that require reprocessing in the weld, while green arrows indicate correctly welded areas. In addition, a red arrow indicates an area that needs further inspection to determine if there is a structural defect.

Figure 10.

Human segmentation and knowledge of the weld seam of the metal part.

This visual analysis helps assess the accuracy of the segmentation and its usefulness in quality inspection.

4.2. Assessment of Segmentation Methods

The segmentation methods were evaluated qualitatively and quantitatively. The qualitative evaluation was performed by visual inspection of the segmented images, while the quantitative evaluation measured the similarity between manual segmentation and automatic techniques.

4.2.1. Visual Comparison

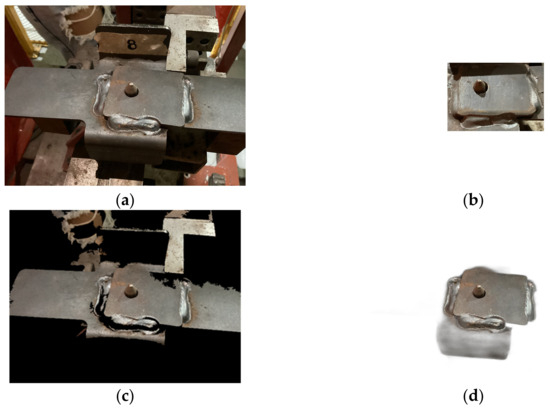

A comparison between manual segmentation and automatic methods is presented in Figure 11. The original image shows the metal part and its welds, while the expert’s manual segmentation is used as a reference (ground truth). The contour method (Contours) presents difficulties in accurately identifying the ROI and preserving details in the welds. In contrast, Rembg segmentation achieves more accurate ROI identification and preserves weld information. Table 5 exemplifies the results of the compared automatic segmentation algorithms.

Figure 11.

Results of traditional segmentation and deep learning-based segmentation: (a) original image; (b) human segmentation; (c) binary segmentation + contours; (d) segmentation by Rembg.

Table 5.

Results of applying different segmentation methods and the obtained ROI of the image of Figure 8.

The traditional segmentation algorithms obtained similar results, except for triangular thresholding, which failed to isolate any ROI. Given this behavior, we selected binary segmentation followed by the Contours method as representative of the traditional approach and compared it with Rembg segmentation of the same image to illustrate segmentation performance.

Figure 11a shows the original image of the metal part with the weld seams, which, according to the expert, shows a good weld that requires no further inspection or changes. Figure 11b shows the segmentation made by the human expert. Figure 11c shows the result of the Contours segmentation, which, although it isolates the metal part, does not remove some background details and leaves the ROI poorly defined.

This segmentation also loses detail in the weld seams, some of which turn black, making the ROI identification inaccurate. In contrast, Figure 11d shows that the Rembg method identifies the ROI well and keeps the weld seams visible, a noticeable difference between the two types of segmentation.

4.2.2. Quantitative Comparison

The similarity between the human segmentation and the results of the traditional segmentation (Contours method) and the neural network-based segmentation (Rembg) was quantified using the Structural Similarity Index Measure (SSIM), which measures the similarity between two images [39]. The SSIM is computed as follows:

$$\mathrm{SSIM}(x,y)=\frac{(2\mu_x\mu_y+C_1)(2\sigma_{xy}+C_2)}{(\mu_x^2+\mu_y^2+C_1)(\sigma_x^2+\sigma_y^2+C_2)}$$

where $x$ and $y$ are the images to be compared; $\mu_x$ and $\mu_y$ are the mean pixel intensities of $x$ and $y$, respectively; $\sigma_{xy}$ is the covariance between $x$ and $y$ (which measures how much the pixel intensities of the two images vary together); $\sigma_x^2$ and $\sigma_y^2$ are the variances of $x$ and $y$, respectively (which measure how much the pixel intensities within each image vary); and $C_1$ and $C_2$ are stabilization constants that avoid division by zero when the means or variances are close to zero. Thus, the similarity is expressed as a percentage:

$$\mathrm{Similarity}(\%)=100\times\mathrm{SSIM}(x,y)$$
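A minimal NumPy sketch of the SSIM comparison described in this subsection, evaluated once over the whole image (global statistics). The constants follow the usual choice from the original SSIM formulation, $C_1 = (0.01L)^2$ and $C_2 = (0.03L)^2$ with $L = 255$ for 8-bit images; the text does not state which constants or which windowing the authors used. Library implementations such as scikit-image's `structural_similarity` compute SSIM over local sliding windows and average the results, so their values will generally differ from this global form.

```python
import numpy as np

def ssim_global(x, y, data_range=255.0, k1=0.01, k2=0.03):
    """Global SSIM: one mean, variance, and covariance per image pair."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if x.shape != y.shape:
        raise ValueError("images must have the same shape")
    c1 = (k1 * data_range) ** 2                   # stabilizes the luminance term
    c2 = (k2 * data_range) ** 2                   # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(num / den)

def similarity_percent(x, y):
    """SSIM expressed as a percentage, as in the similarity table."""
    return 100.0 * ssim_global(x, y)

rng = np.random.default_rng(0)
a = rng.integers(0, 256, size=(32, 32))
print(similarity_percent(a, a))  # 100.0 for identical images (up to rounding)
```

An image compared with itself scores 1 (100%), and the measure is symmetric in its two arguments, which is what allows either the human mask or the automatic mask to be passed first.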

Table 6 shows the similarity between the segmentation methods and the segmentation made by the human expert.

Table 6.

Similarity (in percentage) between human segmentation (ground truth) and the compared segmentation methods (Contour Method and Rembg Method).

Table 6 shows that the traditional Contours-based binary segmentation and the neural network-based Rembg segmentation differ markedly in their similarity to the human segmentation. The similarity obtained by the traditional segmentation does not exceed 30%, indicating that it falls far short of manual segmentation. In contrast, the neural network-based segmentation achieves a similarity above 80%, indicating that it recognizes the ROI and is competitive with human segmentation.

The image segmentation results show that Rembg identifies the regions of interest with high similarity to the expert segmentation. However, further optimization is required to improve the detection of specific defects. Compared with traditional visual inspection, the automated segmentation methodology significantly improved efficiency and the consistency of the results. These findings suggest that automating weld bead inspection through image segmentation can substantially improve the quality assurance process in industry, reducing both inspection time and operating costs.

Despite these promising results, challenges remain regarding the precision and robustness of the methods under varying lighting conditions and image quality. Addressing these limitations will be a key focus of future research.
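The sensitivity to lighting can be illustrated with a small experiment on a synthetic image. The sketch below uses a hypothetical fixed global threshold (`t = 100`) as a stand-in for a traditional segmentation and simulates illumination changes by scaling pixel intensities. It then reports the fraction of pixels whose foreground/background label is unchanged. This is an illustration only, not the evaluation protocol used in this work.

```python
import numpy as np


def fixed_threshold_mask(gray, t=100):
    """Hypothetical fixed global threshold: True where the pixel is brighter than t."""
    return np.asarray(gray) > t


def simulate_lighting(gray, factor):
    """Scale intensities to mimic brighter (>1) or darker (<1) illumination."""
    scaled = np.asarray(gray, dtype=np.float64) * factor
    return np.clip(scaled, 0, 255).astype(np.uint8)


def mask_agreement(mask_a, mask_b):
    """Fraction of pixels on which two binary masks agree (1.0 = identical)."""
    return float(np.mean(mask_a == mask_b))


# Synthetic horizontal gradient standing in for a weld photograph.
img = np.tile(np.linspace(0, 255, 16), (16, 1)).astype(np.uint8)
reference = fixed_threshold_mask(img)
for factor in (0.6, 0.8, 1.0, 1.2):
    changed = fixed_threshold_mask(simulate_lighting(img, factor))
    print(factor, round(mask_agreement(reference, changed), 3))
```

With a fixed threshold, darkening the image pushes pixels below `t`, so the mask agreement drops below 1.0 even though the scene itself has not changed.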

5. Conclusions

By adopting best practices and advanced technologies, such as automated inspection systems, industries can minimize the use of toxic materials and improve the quality of their products more sustainably. This paper introduced a novel welding image dataset that includes examples of common welding errors. In particular, the proposed dataset includes human experts’ knowledge regarding several common welding errors, such as incorrect trajectory, lack of welding, the need to remove weld spatter or the weld seam, and the presence of holes. Each image is labeled with its specific errors or as a good weld. This publicly available dataset can support the training and development of new technologies and positively impact clean production and the environment.
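One way to hold per-image expert labels of this kind in code is a small record type. This is a hypothetical schema for illustration only. The class and field names are assumptions, and the released dataset stores the expert's knowledge in a .pdf file rather than in this form.

```python
from dataclasses import dataclass, field
from enum import Enum


class WeldDefect(Enum):
    """Error categories listed in the dataset description (identifiers are hypothetical)."""
    INCORRECT_TRAJECTORY = "incorrect trajectory"
    LACK_OF_WELDING = "lack of welding"
    REMOVAL_NEEDED = "need to remove spatter or weld seam"
    HOLES = "presence of holes"


@dataclass
class WeldImageLabel:
    """Hypothetical per-image annotation; an empty defect set marks a good weld."""
    image_name: str
    defects: set[WeldDefect] = field(default_factory=set)

    @property
    def is_good_weld(self) -> bool:
        return not self.defects


label = WeldImageLabel("image_001.jpg", {WeldDefect.HOLES})  # hypothetical file name
print(label.is_good_weld)  # False
```

Using a set of defects allows one image to carry several errors at once, while an empty set encodes a good weld without a separate flag.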

In addition, we analyzed automatic segmentation methods. The experimental results show that deep-neural-network-based segmentation is comparable to human segmentation, reaching up to 87% similarity.

The significance of this study lies in the impact of automatic segmentation on the GMAW quality assessment process. Automatic segmentation also contributes to predictive maintenance, minimizing unplanned downtime and emergency repairs. Furthermore, it enables energy-efficient welding by supporting precise adjustment of parameters such as heat input and welding speed, leading to lower energy consumption and a smaller environmental footprint. In summary, automatic segmentation enables precise process control, improving consistency, reducing labor costs, and increasing overall production efficiency.

Through real-time image analysis, welding defects can be detected early, avoiding costly rework and reducing material waste. From both an economic and an environmental perspective, automatic segmentation therefore improves sustainability by increasing process efficiency and optimizing resource use.

In future work, we plan to use machine learning algorithms to classify the automatically segmented welding images and to integrate the computer vision models into a complete setup, including cloud services.

Author Contributions

Conceptualization, Y.V.-R. and O.C.-N.; methodology, J.A.L.-I. and E.M.D.-I.; software, E.M.D.-I.; validation, Y.V.-R. and O.C.-N.; investigation, J.A.L.-I. and E.M.D.-I.; data curation, J.A.L.-I. and Y.V.-R.; writing—original draft preparation, J.A.L.-I. and E.M.D.-I.; writing—review and editing, Y.V.-R. and O.C.-N.; visualization, J.A.L.-I. and E.M.D.-I.; supervision, Y.V.-R. and O.C.-N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset introduced in this research will be donated to the University of California, Irvine (UCI) Machine Learning Repository to make it publicly available. In the meantime, researchers can download it from https://github.com/MetatronEMDI/Welding-Dataset-ESIME, accessed on 19 February 2025. The dataset includes the original images, the images segmented by a human expert, and a .pdf file with the human expert’s knowledge regarding the errors present in each image (similar to Figure 10 of this paper). In addition, the source code for the segmentation experiments is available at https://github.com/MetatronEMDI/Supplementary---code, accessed on 19 February 2025.

Acknowledgments

The authors would like to thank the Instituto Politécnico Nacional (Secretaría Académica, Secretaría de Investigación y Posgrado, ESIME Culhuacan, ESIME Azcapotzalco, and Centro de Innovación y Desarrollo Tecnológico en Cómputo), the Secretaría de Ciencia, Humanidades, Tecnología e Innovación, and Sistema Nacional de Investigadores for their economic support to develop this work. The authors also want to thank the anonymous reviewers for their valuable comments that helped improve the paper.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Moinuddin, S.Q.; Saheb, S.H.; Dewangan, A.K.; Cheepu, M.M.; Balamurugan, S. Automation in the Welding Industry: Incorporating Artificial Intelligence, Machine Learning and Other Technologies; John Wiley & Sons: Hoboken, NJ, USA, 2024.

- Diaz-Cano, I.; Morgado-Estevez, A.; Rodríguez Corral, J.M.; Medina-Coello, P.; Salvador-Dominguez, B.; Alvarez-Alcon, M. Automated fillet weld inspection based on deep learning from 2D images. Appl. Sci. 2025, 15, 899.

- Li, H.; Ma, Y.; Duan, M.; Wang, X.; Che, T. Defects detection of GMAW process based on convolutional neural network algorithm. Sci. Rep. 2023, 13, 21219.

- Pico, L.E.A.; Marroquín, O.J.A.; Lozano, P.A.D. Application of Deep Learning for the Identification of Surface Defects Used in Manufacturing Quality Control and Industrial Production: A Literature Review. Ingeniería 2023, 28, 1–20.

- Reddy, J.; Dutta, A.; Mukherjee, A.; Pal, S.K. A low-cost vision-based weld-line detection and measurement technique for robotic welding. Int. J. Comput. Integr. Manuf. 2024, 37, 1538–1558.

- Kim, D.-Y.; Lee, H.W.; Yu, J.; Park, J.-K. Application of Convolutional Neural Networks for Classifying Penetration Conditions in GMAW Processes Using STFT of Welding Data. Appl. Sci. 2024, 14, 4883.

- Kralj, V. Biocybernetic Investigations of Hand Movements of Human Operator in Hand Welding; International Institute of Welding: Genoa, Italy, 1968.

- Kim, D.-Y.; Yu, J. Evaluation of Continuous GMA Welding Characteristics Based on the Copper-Plating Method of Solid Wire Surfaces. Metals 2024, 14, 1300.

- Tomizuka, M.; Dornfeld, D.; Purcell, M. Application of microcomputers to automatic weld quality control. J. Dyn. Syst. Meas. Control 1980, 102, 62–68.

- Penttilä, S.; Kah, P.; Ratava, J.; Eskelinen, H. Artificial neural network controlled GMAW system: Penetration and quality assurance in a multi-pass butt weld application. Int. J. Adv. Manuf. Technol. 2019, 105, 3369–3385.

- Penttilä, S.; Lund, H.; Skriko, T. Possibilities of artificial intelligence-enabled feedback control system in robotized gas metal arc welding. J. Manuf. Mater. Process. 2023, 7, 102.

- Reisgen, U.; Mann, S.; Oster, L.; Gött, G.; Sharma, R.; Uhrlandt, D. Study on identifying GMAW process deviations by means of optical and electrical process data using ANN. In Proceedings of the IEEE 16th International Conference on Automation Science and Engineering (CASE), Hong Kong, China, 20–21 August 2020; pp. 1596–1601.

- Njock Bayock, F.; Nlend, R.; Mbou Tiaya, E.; Appiah Kesse, M.; Kamdem, B.; Kah, P. Development of an Artificial Intelligence-Based Algorithm for Predicting the Mechanical Properties of Weld Joints of Dissimilar S700MC-S960QC Steel Structures. Civ. Eng. Infrastruct. J. 2025, in press.

- Nomura, K.; Fukushima, K.; Matsumura, T.; Asai, S. Burn-through prediction and weld depth estimation by deep learning model monitoring the molten pool in gas metal arc welding with gap fluctuation. J. Manuf. Process. 2021, 61, 590–600.

- Yu, R.; Kershaw, J.; Wang, P.; Zhang, Y. Real-time recognition of arc weld pool using image segmentation network. J. Manuf. Process. 2021, 72, 159–167.

- Enriquez, M.L.; Concepcion, R.; Relano, R.-J.; Francisco, K.; Mayol, A.P.; Española, J.; Vicerra, R.R.; Bandala, A.; Co, H.; Dadios, E. Prediction of Weld Current Using Deep Transfer Image Networks Based on Weld Signatures for Quality Control. In Proceedings of the 2021 IEEE 13th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management (HNICEM), Manila, Philippines, 28–30 November 2021; pp. 1–6.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Huang, G.; Liu, Z.; Pleiss, G.; Van Der Maaten, L.; Weinberger, K.Q. Convolutional networks with dense connectivity. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 44, 8704–8716.

- Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.-C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Zoph, B.; Vasudevan, V.; Shlens, J.; Le, Q.V. Learning transferable architectures for scalable image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8697–8710.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Yang, J.; Zou, Y. Enhanced automatic weld seam tracking: Bayesian optimization and information-based pruning of compact denoising and object detection models. Expert Syst. Appl. 2025, 266, 126132.

- Jocher, G. Ultralytics YOLOv5, Version 7.0. 2020. Available online: https://docs.ultralytics.com/models/yolov5/#citations-and-acknowledgements (accessed on 19 February 2025).

- Li, T.; Cao, Y.; Ye, Q.; Zhang, Y. Generative adversarial networks (GAN) model for dynamically adjusted weld pool image toward human-based model predictive control (MPC). J. Manuf. Process. 2025, 141, 210–221.

- Wu, C.; Polte, T.; Rehfeldt, D. A fuzzy logic system for process monitoring and quality evaluation in GMAW. Weld. J. 2001, 80, 33.

- Cavazos Hernández, E.A.; Chiñas Sánchez, P.; Navarro González, J.L.; López Juárez, I. Geometries and quality assesment of weld beads in 5052-H32 aluminum alloys joined by semiautomated GMAW through a double fuzzy system. Int. J. Adv. Manuf. Technol. 2023, 129, 2011–2030.

- Wordofa, T.N.; Perumalla, J.R.; Sharma, A. An artificial intelligence system for quality level–based prediction of welding parameters for robotic gas metal arc welding. Int. J. Adv. Manuf. Technol. 2024, 132, 3193–3212.

- Chen, T.; Xue, S.; Wang, B.; Zhai, P.; Long, W. Study on short-circuiting GMAW pool behavior and microstructure of the weld with different waveform control methods. Metals 2019, 9, 1326.

- Wang, H.; Klaric, S.; Havrlišan, S. Preliminary Study of Bead-On-Plate Welding Bead Geometry for 316L Stainless Steel Using GMAW. FME Trans. 2024, 52, 563–572.

- Ayoade, A.; Steele, J. Heterogeneous Measurement System for Data Mining Robotic GMAW Weld Quality. Weld. J. 2022, 101, 96–110.

- Cullen, M.; Ji, J. Online defect detection and penetration estimation system for gas metal arc welding. Int. J. Adv. Manuf. Technol. 2025, 136, 2143–2164.

- Jolly, W. Acoustic emission exposes cracks during welding (Acoustic emission from welds in stainless steel plates used for detecting defects in single and multiple pass machine welds). Weld. J. 1969, 48, 21–27.

- Biber, A.; Sharma, R.; Reisgen, U. Robotic welding system for adaptive process control in gas metal arc welding. Weld. World 2024, 68, 2311–2320.

- Kim, G.-G.; Kim, Y.-M.; Kim, D.-Y.; Park, J.-K.; Park, J.; Yu, J. Vision-Based Online Molten Pool Image Acquisition and Assessment for Quality Monitoring in Gas-Metal Arc Welding. Appl. Sci. 2024, 14, 5998.

- Mobaraki, M.; Ahani, S.; Gonzalez, R.; Yi, K.M.; Van Heusden, K.; Dumont, G.A. Vision-based seam tracking for GMAW fillet welding based on keypoint detection deep learning model. J. Manuf. Process. 2024, 117, 315–328.

- Bakurov, I.; Buzzelli, M.; Schettini, R.; Castelli, M.; Vanneschi, L. Structural similarity index (SSIM) revisited: A data-driven approach. Expert Syst. Appl. 2022, 189, 116087.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).