Abstract

Fabric defect detection is a crucial step in ensuring product quality within the textile industry. However, current detection methods face challenges in processing efficiency for high-resolution images, detail recovery during upsampling, and the adaptability of loss functions to low-quality samples, which limit detection accuracy and real-time performance. To overcome these limitations, this paper introduces an improved YOLOv8-based model that addresses all three issues for fabric defect detection. First, we introduce an efficient RG-C2f module to improve processing speed for high-resolution images. Second, the DySample upsampling operator is adopted to enhance edge and detail preservation, improving detail recovery within defect regions. Finally, an adaptive inner-WIoU loss function is designed to dynamically adjust the focus on low-quality samples, thereby strengthening the model’s generalization capability. Experimental results on the TILDA and Tianchi datasets show that, compared with YOLOv8, the proposed model achieves mAP improvements of 6.4% and 1.5%, respectively, while also increasing detection speed. This advancement provides strong support for fabric defect detection.

1. Introduction

Fabric defect detection is a critical task in textile manufacturing, as defects can significantly impact both product quality and production efficiency. These defects, including cracks, color variations, and holes, are often caused by factors such as raw material quality, manufacturing processes, and equipment [1]. Moreover, defects not only affect the aesthetic value of the products but also pose potential risks to fabric durability and safety [2]. Therefore, accurate and effective defect detection during the production process is crucial to maintaining product quality and controlling costs.

However, fabric defect detection faces many challenges. Fabric defects typically exhibit complex characteristics, such as large size variations, numerous small defects, and complex background textures, which make accurate detection difficult [3]. Achieving detection that is both real-time and accurate remains technically challenging [4]. Traditional manual inspection and machine vision-based methods are often limited by subjectivity, reliance on human expertise, and handcrafted feature extraction, making it difficult to adapt them to the varied characteristics and detection needs of fabric defects [5]. In recent years, deep learning has demonstrated high accuracy and real-time capabilities in defect detection, particularly convolutional neural network-based models like YOLO. These models have become the dominant approach due to their efficiency and speed [6].

Although the YOLO model has excellent detection performance, it still suffers from high computational cost, poor detail recovery, and limited generalization ability when detecting fabric defects in high-resolution images. For this reason, this paper proposes an improved model based on YOLOv8, which makes the following three improvements to address these problems:

1. To address the high computational cost of the traditional Bottleneck residual block, the lightweight RG-C2f module is designed to improve inference speed and meet the demand for real-time detection of high-resolution images.

2. To address the insufficient recovery of edge details in the upsampling method, the DySample upsampling operator is introduced to improve the recovery of edge details during upsampling and enhance the localization accuracy of defect edges.

3. To address the insufficient adaptability of the CIoU loss function to differences in sample quality, the adaptive inner-WIoU loss function is designed to dynamically adjust the penalties for samples of different quality, enhancing the robustness and generalization ability of the model.

Experimental results show that the improved YOLOv8 model proposed in this paper achieves significant improvement in both accuracy and efficiency, demonstrating its application potential and technical advantages in fabric defect detection tasks.

The rest of the paper is organized as follows: Section 2 describes the related work; Section 3 details the improved model proposed in this paper, including the network architecture and key technologies; Section 4 describes the experimental design, covering the dataset, experimental environment, evaluation metrics, ablation experiments, and comparative experiments; and Section 5 summarizes the full paper and looks forward to future research directions.

2. Related Work

Currently, deep learning-based methods are widely applied in industrial defect detection, including fabric defects [7], metal surface defects [8], and PCB defects [9]. Target detection methods in deep learning are generally divided into two categories: single-stage and two-stage networks, with the latter including models such as R-CNN [10], Fast R-CNN [11], and Faster R-CNN [12]. By first generating candidate regions and then classifying and localizing defects, these models typically offer higher detection accuracy. However, the high computational complexity of such networks results in slow processing, making real-time performance challenging. Consequently, researchers have proposed various improvements for two-stage networks. Chen et al. [13] improved fabric defect detection in complex backgrounds by incorporating a Gabor filter within Faster R-CNN and optimizing training with a genetic algorithm. However, this method relies on a two-stage training approach with the genetic algorithm, resulting in a long model training time, which makes it difficult to meet high real-time demands. Wang et al. [14] enhanced Cascade R-CNN by integrating an adaptive fusion attention module (AFAM), which improves the detection of small and medium-sized fabric defects by augmenting feature mapping and attentional information flow across spatial and channel dimensions.

The single-stage network simplifies target detection by using a single structure for classification and localization, achieving higher speed and making it ideal for real-time scenarios. As a representative single-stage model, the YOLO series enhances detection speed and accuracy by optimizing network architecture and feature extraction. In recent years, YOLO-based methods have been increasingly applied to fabric defect detection. Liu et al. [15] proposed an improved fabric defect detection algorithm based on YOLOv8, which improves the feature response to small targets by introducing the GS-Conv and Slim-neck feature fusion module, adds a P2 detection layer to enhance the detection of small defects, and adopts the EMA-Slide Loss function to improve classification stability and training; it outperforms the original YOLOv8n model in terms of both mAP and detection speed. Li et al. [6] introduced PEI-YOLOv5 for fabric defect detection, which improves the efficiency of spatial feature extraction by introducing particle depth convolution, enhances the BiFPN module to improve multi-scale feature fusion, and adopts the IN loss function to improve the detection accuracy for small targets while accelerating model convergence. Zhang et al. [16] developed an anchor-free YOLOv8 network using DsPAN, which boosts the detection of small defects in multi-scale environments by leveraging the lightweight PAN and LCBHAM modules while preserving local details and location information during deep feature extraction. Wang et al. [17] developed a lightweight multi-type defect detection approach using DCP-YOLOv8, improving accuracy through deformable convolution and feature fusion while optimizing efficiency for real-time performance. Yu et al. [18] introduced the ABG-YOLOv8n model, an improved version of YOLOv8n, which boosts detection performance with a lightweight network, a bi-directional feature pyramid, and the ASFF mechanism, resulting in a 4.5% increase in mAP to meet the real-time monitoring needs of smart grids.

3. Methods

There is a wide variety of fabric defects; common ones include cracks, color differences, and holes. The complexity and diversity of these defects, especially the differences in size, make the inspection task more difficult and exceed the capabilities of traditional machine vision methods, which cannot meet the high accuracy and real-time performance required in practical applications. Although the YOLO series models perform well in target detection, they still face challenges such as high computational cost, incomplete detail recovery, and insufficient generalization capability when applied to high-resolution fabric images.

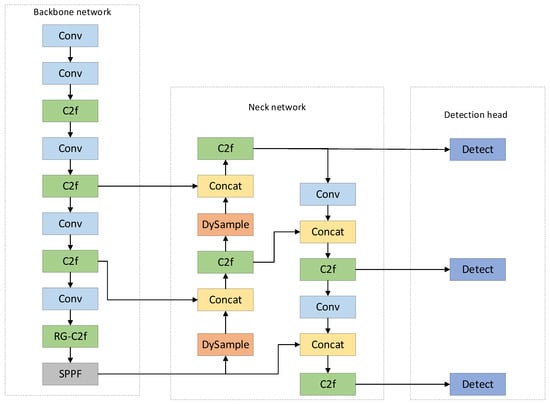

To address these issues, YOLOv8n is used as the baseline model, and the YOLOv8 architecture is enhanced to better adapt to fabric defect detection. The improved network structure is shown in Figure 1 and includes three key parts: the RG-C2f module, the DySample upsampling operator, and the inner-WIoU loss function. First, the RG-C2f module is proposed, which combines the RepConv structure with an adjustable scaling factor to enhance feature extraction while reducing computation and parameter count, ensuring fast inference on high-resolution images. Second, the DySample upsampling operator replaces the traditional nearest neighbor interpolation method; by learning the optimal sampling pattern, it preserves image details and improves detection accuracy. In addition, the inner-WIoU loss function combines dynamic sample weights with auxiliary boxes of different scales, which optimizes bounding box regression and boosts the model’s capacity to identify complex fabric defects. These improvements enable YOLOv8 to achieve higher detection accuracy and efficiency in fabric defect detection tasks.

Figure 1. Structure of the improved YOLOv8.

3.1. RG-C2f Module

In the fabric defect detection application of YOLOv8, the backbone network’s feature extraction is crucial for improving detection accuracy. However, although the traditional BottleNeck residual block in C2f is excellent at feature extraction, it has a high computational cost and a large parameter count when dealing with high-resolution images, which slows inference and fails to meet the demands of real-time detection. In addition, redundant information in the residual block may affect the generalization ability of the model: against complex fabric texture backgrounds, it easily introduces noise and degrades the accuracy of defect detection.

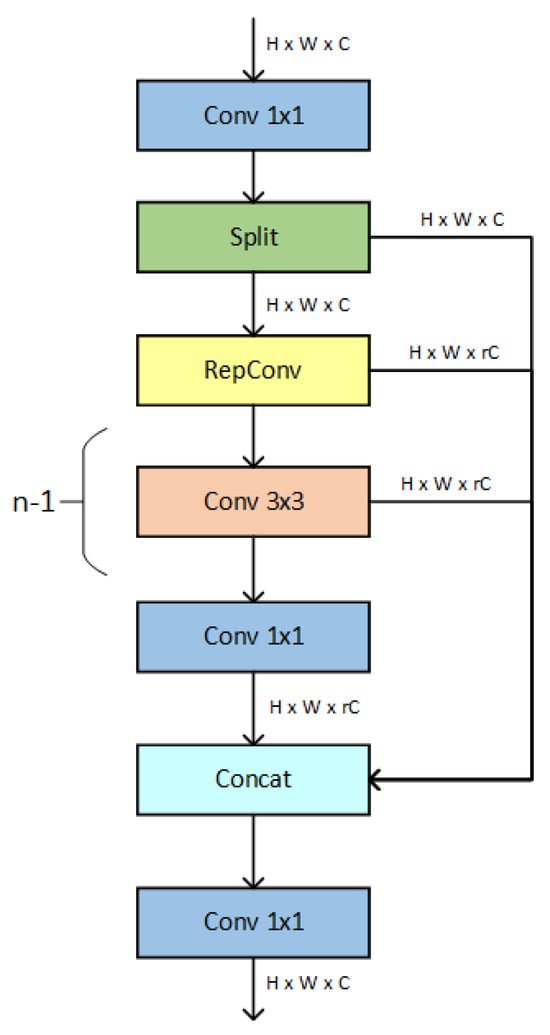

To address this problem, this paper proposes an improved C2f module structure named RG-C2f, as shown in Figure 2. Inspired by GhostNet [19], the RG-C2f module reduces the consumption of computational resources by using inexpensive operations to generate redundant feature maps. Unlike the traditional BottleNeck residual block, the RG-C2f module discards the residual structure commonly used in YOLOv5 and YOLOv8. To compensate for the performance loss caused by removing the residual structure, the RepConv [20] operation is applied to the gradient flow branch to boost feature extraction and optimize gradient flow. Meanwhile, the convolutional kernel fusion technique of RepConv further improves efficiency in the inference stage, combining low computational cost with high inference speed.

Figure 2. RG-C2f Structure.

Another advantage of the RG-C2f module is its adjustable scaling factor, which gives it the flexibility to adapt to models of different sizes. In fabric defect detection tasks, this means that the complexity of the model can be adjusted according to different fabric types and defect characteristics in order to balance performance and efficiency. With the RG-C2f module, YOLOv8’s backbone network becomes computationally more efficient while maintaining high detection accuracy; when processing high-resolution images in particular, it shows faster inference and lower latency, providing a more efficient solution for fabric defect detection.
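The kernel fusion idea behind RepConv can be illustrated with a minimal sketch. It assumes a single channel, no bias, and no BatchNorm, all of which are simplifications introduced here for illustration; the helper names `conv2d_same` and `fuse_3x3_and_1x1` are hypothetical and not part of the RG-C2f module. Because convolution is linear, a parallel 3×3 branch and 1×1 branch can be folded into one 3×3 kernel by adding the 1×1 weight to the center tap.

```python
def conv2d_same(image, kernel):
    """Single-channel 2-D cross-correlation with zero padding (same output size)."""
    k = len(kernel)            # square kernel with odd side length
    pad = k // 2
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(k):
                for dj in range(k):
                    y, x = i + di - pad, j + dj - pad
                    if 0 <= y < h and 0 <= x < w:
                        acc += kernel[di][dj] * image[y][x]
            out[i][j] = acc
    return out


def fuse_3x3_and_1x1(k3, k1):
    """Fold a 1x1 branch (a scalar here) into the center tap of a 3x3 kernel."""
    fused = [row[:] for row in k3]
    fused[1][1] += k1
    return fused


image = [[1.0, 2.0, 0.0, 1.0],
         [0.0, 3.0, 1.0, 2.0],
         [2.0, 1.0, 0.0, 1.0]]
k3 = [[0.0, 1.0, 0.0],
      [1.0, -4.0, 1.0],
      [0.0, 1.0, 0.0]]
k1 = 0.5  # illustrative weight of the 1x1 branch

# Training-time form: two parallel branches whose outputs are summed.
branch_3x3 = conv2d_same(image, k3)
branch_1x1 = conv2d_same(image, [[k1]])
two_branch = [[a + b for a, b in zip(r3, r1)]
              for r3, r1 in zip(branch_3x3, branch_1x1)]

# Inference-time form: a single convolution with the fused kernel.
single_branch = conv2d_same(image, fuse_3x3_and_1x1(k3, k1))
```

Both forms give the same output, but the fused form needs only one convolution per layer at inference time, which is where the speed benefit comes from.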

3.2. DySample Upsampling Operator

In YOLOv8’s neck network, nearest neighbor interpolation assigns the value of the nearest known pixel to the target pixel during upsampling. Because only the value of the nearest pixel is considered, the interpolated image may show jagged artifacts, and edges may not look smooth and continuous. The inaccuracy becomes more pronounced at high magnification, where the detail and sharpness of the image may be significantly degraded.
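The blocky behavior described above is easy to see on a tiny example. The following sketch is a plain-Python illustration with a hypothetical helper name (`nearest_upsample`), not the YOLOv8 implementation; it upsamples a 2 × 2 pattern by a factor of 2.

```python
def nearest_upsample(feature, scale):
    """Upsample a 2-D list by an integer factor using nearest neighbor interpolation."""
    out = []
    for row in feature:
        # Repeat every value `scale` times horizontally ...
        wide = [v for v in row for _ in range(scale)]
        # ... and repeat the widened row `scale` times vertically.
        out.extend([wide[:] for _ in range(scale)])
    return out


edge = [[0, 1],
        [1, 0]]
up = nearest_upsample(edge, 2)
for row in up:
    print(row)
# [0, 0, 1, 1]
# [0, 0, 1, 1]
# [1, 1, 0, 0]
# [1, 1, 0, 0]
```

Each input pixel simply becomes a 2 × 2 block, so a diagonal edge turns into a staircase; no new detail is created.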

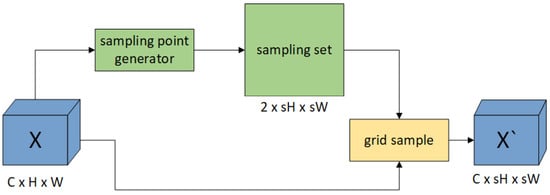

To solve this problem, this paper replaces the default upsampling operator in YOLOv8 with a new upsampling operator, DySample [21]. DySample is a data-driven upsampling method whose core idea is to determine the optimal sampling pattern through learning. Unlike traditional interpolation methods, DySample does not simply apply a fixed interpolation formula but allows the network to learn how to recover high-quality, high-resolution feature maps from low-resolution ones. This approach preserves image details, reduces artifacts, and improves the performance of downstream tasks such as target detection.

The execution flow of DySample, shown in Figure 3, consists of two main steps: generating the sampling set and performing grid sampling. The specific flow is as follows:

Figure 3. Structure of DySample.

An initial offset $O$ is first generated from the input feature map $X$ of size $C \times H \times W$. Given an upsampling scale factor $s$, a linear layer with $C$ input channels and $2s^2$ output channels produces an offset $O$ of size $2s^2 \times H \times W$. A pixel shuffling operation then reshapes it to size $2 \times sH \times sW$.

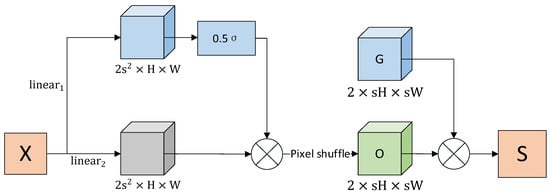

To increase the flexibility of the offset, DySample further introduces a dynamic range factor, as shown in Figure 4. The dynamic range factor is generated by applying a linear transformation to the input feature map X followed by the Sigmoid activation function; it scales the offset produced by a second linear transformation, so that the offset range adapts to the feature map content. The new offset formula is as follows:

$$O = 0.5 \cdot \sigma(\operatorname{linear}_1(X)) \cdot \operatorname{linear}_2(X)$$

Figure 4. Flowchart for generating a sample set using dynamic range factors.

The sampling set S is formed by combining the original grid G with the generated offsets O, i.e., $S = G + O$.

Finally, the input feature map X is resampled at the positions in the dynamic sampling set S using the grid sample function to generate the final upsampled feature map $X'$, as shown in Equation (3).

$$X' = \operatorname{grid\_sample}(X, S) \tag{3}$$
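The two steps (building the sampling set, then grid sampling) can be sketched in plain Python for a single-channel feature map. This is an illustrative sketch under several assumptions that are not taken from the paper: the offsets are supplied by hand rather than learned, they are expressed in input-pixel units, sampling is bilinear, and positions outside the map are clamped to the border. The function names are hypothetical.

```python
import math


def bilinear_sample(feature, y, x):
    """Bilinearly sample a 2-D list at fractional (y, x), clamping to the border."""
    h, w = len(feature), len(feature[0])
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    wy, wx = y - y0, x - x0
    top = (1 - wx) * feature[y0][x0] + wx * feature[y0][x1]
    bottom = (1 - wx) * feature[y1][x0] + wx * feature[y1][x1]
    return (1 - wy) * top + wy * bottom


def dynamic_upsample(feature, offsets_y, offsets_x, scale):
    """Resample `feature` at S = G + O, where G is the regular upsampling grid."""
    h, w = len(feature), len(feature[0])
    out = []
    for i in range(h * scale):
        row = []
        for j in range(w * scale):
            # Original grid G: center of output pixel (i, j) in input coordinates.
            gy = (i + 0.5) / scale - 0.5
            gx = (j + 0.5) / scale - 0.5
            # Sampling set S = G + O, followed by grid sampling.
            row.append(bilinear_sample(feature, gy + offsets_y[i][j], gx + offsets_x[i][j]))
        out.append(row)
    return out


feature = [[0.0, 2.0]]
scale = 2
zeros = [[0.0] * 4 for _ in range(2)]

# With zero offsets, the result is ordinary bilinear upsampling.
plain = dynamic_upsample(feature, zeros, zeros, scale)

# A non-zero offset moves one sampling point toward the brighter pixel.
shift_x = [[0.0, 0.25, 0.0, 0.0] for _ in range(2)]
shifted = dynamic_upsample(feature, zeros, shift_x, scale)
```

In DySample the offsets come from the learned linear layers, so the network itself decides where each output pixel should sample; the hand-written `shift_x` only stands in for that learned signal.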

3.3. Inner-WIoU Loss Function

In the object detection task, YOLOv8 adopts the CIoU loss function as the main optimization objective for bounding box regression. Beyond the overlap between the predicted and ground truth boxes measured by the traditional IoU, CIoU introduces two additional factors, the distance between the box centers and the aspect ratio, so that the model fits the geometry of the object more accurately. Specifically, the CIoU loss function is shown in Equation (5).

$$L_{CIoU} = 1 - IoU + \frac{\rho^2(b, b^{gt})}{c^2} + \alpha v \tag{5}$$

where IoU is the overlap ratio of the predicted box and the ground truth box, $\rho(b, b^{gt})$ denotes the Euclidean distance between their center points, c is the diagonal length of the smallest enclosing box, v measures the consistency of the aspect ratios of the two boxes, and α is the coefficient regulating the aspect ratio weight.
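As a worked illustration of the CIoU terms, the sketch below computes the loss for two axis-aligned boxes given as `(x1, y1, x2, y2)`. The box format, the function name `ciou_loss`, and the standard definitions $v = \frac{4}{\pi^2}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^2$ and $\alpha = \frac{v}{(1 - IoU) + v}$ are assumptions taken from the usual CIoU formulation rather than from this paper; boxes are assumed to have positive width and height.

```python
import math


def ciou_loss(box, gt):
    """CIoU loss for two boxes in (x1, y1, x2, y2) format with positive size."""
    x1, y1, x2, y2 = box
    gx1, gy1, gx2, gy2 = gt
    w, h = x2 - x1, y2 - y1
    gw, gh = gx2 - gx1, gy2 - gy1

    # Overlap term: intersection over union.
    inter_w = max(0.0, min(x2, gx2) - max(x1, gx1))
    inter_h = max(0.0, min(y2, gy2) - max(y1, gy1))
    inter = inter_w * inter_h
    iou = inter / (w * h + gw * gh - inter)

    # Center-distance term: squared center distance over squared enclosing diagonal.
    rho2 = ((x1 + x2) / 2 - (gx1 + gx2) / 2) ** 2 + ((y1 + y2) / 2 - (gy1 + gy2) / 2) ** 2
    c2 = (max(x2, gx2) - min(x1, gx1)) ** 2 + (max(y2, gy2) - min(y1, gy1)) ** 2

    # Aspect-ratio term and its trade-off coefficient.
    v = (4 / math.pi ** 2) * (math.atan(gw / gh) - math.atan(w / h)) ** 2
    alpha = v / ((1 - iou) + v) if v > 0 else 0.0

    return 1 - iou + rho2 / c2 + alpha * v


same = ciou_loss((0.0, 0.0, 2.0, 2.0), (0.0, 0.0, 2.0, 2.0))
shifted = ciou_loss((0.0, 0.0, 2.0, 2.0), (1.0, 1.0, 3.0, 3.0))
```

For the shifted pair, the aspect ratios match, so only the overlap and center-distance terms contribute: $1 - \frac{1}{7} + \frac{2}{18}$.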

The advantage of CIoU is that it considers several geometric factors, making it more accurate than the traditional IoU, DIoU [22], GIoU [23], etc. However, during training, CIoU uses the same weight-updating strategy for all samples, ignoring the differences in difficulty between samples. For complex or low-quality samples, CIoU may not provide enough gradient information, which leads the model to prefer optimizing simple samples and to neglect those that are difficult to locate. Moreover, although the CIoU loss function takes into account the overlap region, center distance, and aspect ratio of the boxes, it tends to lead to poor convergence and performance on small targets such as fabric defects, especially small defects like holes and defects with extreme aspect ratios.

To overcome these challenges, the WIoU [24] loss function is introduced in this paper, which ensures that the model can handle complex samples more efficiently during training by dynamically adjusting the weights of the samples. The core idea of WIoU is to regulate the weight of each sample according to the sample’s outlier degree β so as to enhance the model’s generalization ability. The loss function of the WIoU is shown in Equation (6).

where $L_{WIoUv3}$ denotes the 3rd version of the WIoU loss function, r is the gradient weight factor, and $L_{IoU}$ denotes the IoU loss function value. The weight factor r is determined by the outlier degree β of the sample, which is calculated as

$$\beta = \frac{L_{IoU}^{*}}{\overline{L_{IoU}}}$$

where $L_{IoU}^{*}$ denotes the IoU loss value of the current sample, and $\overline{L_{IoU}}$ is the average of the IoU loss values of all the samples in the training process. The specific formula for the weight r is

$$r = \frac{\beta}{\delta \alpha^{\beta - \delta}}$$

where α and δ are two hyperparameters that control the weight gain of the samples, enabling the WIoU to dynamically adjust the gradient according to the sample outlier degree.
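To make the effect of the weight r concrete, the following sketch evaluates the outlier degree and the weight for a few samples, using the standard WIoU v3 form $r = \beta / (\delta \alpha^{\beta - \delta})$. The values α = 1.9 and δ = 3 are illustrative defaults assumed here, since this section does not state the settings used, and the function names are hypothetical.

```python
def outlier_degree(loss_iou, mean_loss_iou):
    """beta: IoU loss of the current sample relative to the average IoU loss."""
    return loss_iou / mean_loss_iou


def focusing_weight(beta, alpha=1.9, delta=3.0):
    """r = beta / (delta * alpha ** (beta - delta)); alpha and delta are assumed values."""
    return beta / (delta * alpha ** (beta - delta))


mean_loss = 0.4  # illustrative average IoU loss over training
for loss in (0.04, 0.6, 2.4):
    beta = outlier_degree(loss, mean_loss)
    print(f"L_IoU={loss:.2f}  beta={beta:.2f}  r={focusing_weight(beta):.3f}")
```

The weight is small for very well-fitted samples (small β) and for extreme outliers (large β), and largest for samples of ordinary quality, which is how the loss avoids being dominated by either easy samples or harmful low-quality ones.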

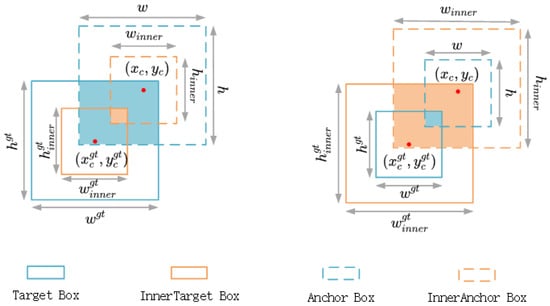

Also, this paper introduces the inner-IoU [25] loss function, which accelerates bounding box regression by using auxiliary boxes of different scales for loss calculation. With this approach, the model converges faster, which in fabric defect detection helps to improve the detection of defects of multiple sizes. In inner-IoU, the GT box and anchor box are denoted as $b^{gt}$ and $b$, respectively, and their centers are $(x_c^{gt}, y_c^{gt})$ and $(x_c, y_c)$, respectively, as shown in Figure 5. A scaling factor $ratio$, whose value range is [0.5, 1.5], is also introduced to adjust the size of the auxiliary boxes.

Figure 5. Inner-IoU auxiliary bounding box.

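The inner IoU is defined as the ratio of the intersection area to the union area of the auxiliary boxes, and the inner-IoU loss is one minus this ratio. By introducing auxiliary boxes of different scales, this method enables more robust optimization of the predicted box’s position and size during the bounding box regression process. Its formulation is as follows:

$$IoU^{inner} = \frac{inter}{union}, \qquad L_{Inner\text{-}IoU} = 1 - IoU^{inner}$$

The formulas for the intersection area and union area are as follows:

$$inter = \left(\min(b_r^{gt}, b_r) - \max(b_l^{gt}, b_l)\right) \cdot \left(\min(b_b^{gt}, b_b) - \max(b_t^{gt}, b_t)\right)$$

$$union = (w^{gt} \cdot h^{gt}) \cdot ratio^2 + (w \cdot h) \cdot ratio^2 - inter$$

Here, $b_l^{gt}$, $b_r^{gt}$, $b_t^{gt}$, $b_b^{gt}$ and $b_l$, $b_r$, $b_t$, $b_b$ denote the left, right, top, and bottom boundaries of the ground truth box and anchor box, respectively, under the scaling ratio; $w^{gt}$, $h^{gt}$ and $w$, $h$ are the widths and heights of the two boxes, whose centers are $(x_c^{gt}, y_c^{gt})$ and $(x_c, y_c)$; and $ratio$ is the scaling ratio. The formulas for the boundaries of the ground truth box and anchor box under the scaling ratio are as follows:

$$b_l^{gt} = x_c^{gt} - \frac{w^{gt} \cdot ratio}{2}, \quad b_r^{gt} = x_c^{gt} + \frac{w^{gt} \cdot ratio}{2}, \quad b_t^{gt} = y_c^{gt} - \frac{h^{gt} \cdot ratio}{2}, \quad b_b^{gt} = y_c^{gt} + \frac{h^{gt} \cdot ratio}{2}$$

$$b_l = x_c - \frac{w \cdot ratio}{2}, \quad b_r = x_c + \frac{w \cdot ratio}{2}, \quad b_t = y_c - \frac{h \cdot ratio}{2}, \quad b_b = y_c + \frac{h \cdot ratio}{2}$$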
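The auxiliary-box computation can be sketched in a few lines. Boxes are given here as `(xc, yc, w, h)`, which is a format chosen for this illustration, and the function name `inner_iou` is hypothetical. The sketch also clamps negative overlaps to zero, a common implementation detail that is an assumption on top of the Inner-IoU definition.

```python
def inner_iou(gt, anchor, ratio=1.0):
    """IoU of the auxiliary boxes obtained by scaling both boxes about their centers."""

    def bounds(box):
        xc, yc, w, h = box
        half_w, half_h = w * ratio / 2, h * ratio / 2
        # left, right, top, bottom of the scaled (auxiliary) box
        return xc - half_w, xc + half_w, yc - half_h, yc + half_h

    g_l, g_r, g_t, g_b = bounds(gt)
    a_l, a_r, a_t, a_b = bounds(anchor)

    # Clamp to zero so that non-overlapping auxiliary boxes give an IoU of 0.
    inter_w = max(0.0, min(g_r, a_r) - max(g_l, a_l))
    inter_h = max(0.0, min(g_b, a_b) - max(g_t, a_t))
    inter = inter_w * inter_h

    union = gt[2] * gt[3] * ratio ** 2 + anchor[2] * anchor[3] * ratio ** 2 - inter
    return inter / union


gt_box = (0.0, 0.0, 2.0, 2.0)
anchor_box = (1.0, 0.0, 2.0, 2.0)
for ratio in (0.5, 1.0, 1.5):
    print(ratio, inner_iou(gt_box, anchor_box, ratio))
```

With `ratio` below 1 the auxiliary boxes shrink and the measured overlap drops, while a ratio above 1 enlarges them and raises it; for this pair the values are 0, 1/3, and 0.5.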

Finally, this paper combines WIoU with inner-IoU to propose the inner-WIoU loss function. This loss function leverages the strengths of both, improving complex fabric defect detection and accelerating bounding box regression. Its formulation is as follows:

By using the inner-WIoU loss function, YOLOv8 demonstrates higher accuracy and better real-time detection capabilities in fabric defect detection tasks, making it more suitable for practical application requirements.
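One plausible way to combine the two ideas is sketched below. It is an assumption, not the formulation confirmed by this section: it follows the pattern used in the Inner-IoU paper, where an existing loss is extended by adding $IoU - IoU^{inner}$, and it takes the WIoU v3 term as $r \cdot R_{WIoU} \cdot (1 - IoU)$, with $R_{WIoU}$ the distance-based attention factor from the WIoU paper. All inputs are passed as precomputed scalars, and the function name is hypothetical.

```python
def inner_wiou_loss(iou, iou_inner, r, r_wiou=1.0):
    """Assumed combination: L = r * R_WIoU * (1 - IoU) + IoU - IoU_inner.

    iou       -- IoU of the original boxes
    iou_inner -- IoU of the scaled auxiliary boxes
    r         -- WIoU focusing weight derived from the outlier degree
    r_wiou    -- WIoU distance attention factor (1.0 when the centers coincide)
    """
    wiou_v3 = r * r_wiou * (1.0 - iou)
    return wiou_v3 + iou - iou_inner


perfect = inner_wiou_loss(iou=1.0, iou_inner=1.0, r=1.0)
partial = inner_wiou_loss(iou=0.5, iou_inner=0.4, r=1.0)
```

Under this assumed form, a perfectly matched box gives zero loss, and an auxiliary-box overlap lower than the original overlap adds an extra penalty on top of the weighted IoU term.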

4. Experimental Results and Analysis

4.1. Datasets

In the field of fabric defect detection, dataset annotation is crucial. Only accurately annotated data can be used for model training. During training, the model’s predictions are compared with the ground truth to compute the error, and the model parameters are adjusted through backpropagation. This ultimately allows the model to achieve the best possible alignment with real-world defect localization. Therefore, the quality of the dataset directly impacts the model’s performance and accuracy. In this paper, training and validation are conducted on two publicly available fabric defect datasets: the TILDA dataset and the Tianchi dataset.

4.1.1. TILDA Dataset

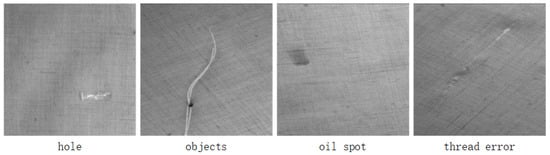

The TILDA textile defect dataset is specifically designed for surface defect detection in textiles. It contains 896 images with a resolution of 416 × 416 pixels, covering four different categories of textile defects: holes, foreign objects, oil stains, and misaligned threads, as shown in Figure 6.

Figure 6. TILDA dataset samples.

4.1.2. Tianchi Dataset

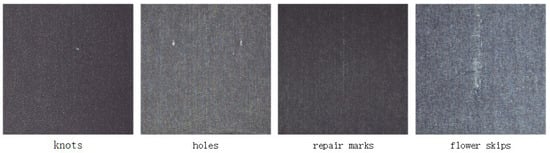

The Tianchi dataset consists of fabric images collected on-site by the Tianchi platform in a textile workshop in Nanhai, Foshan. It contains a total of 5913 image samples, all with a resolution of 2446 × 1000 pixels, covering 34 categories of important fabric defects in the textile industry. The defect categories are imbalanced: some categories have only a small number of samples, and some defect types have similar characteristics and can be merged into the same category. Therefore, after manual classification and screening, we selected 20 common fabric defect categories, including holes, three silk, stains, knots, repair marks, flower skips, and hundred feet. Some of the samples are shown in Figure 7.

Figure 7. Some samples of the Tianchi dataset.

4.2. Experimental Environment

This paper uses Python 3.8 and the PyTorch 1.13.1 framework to train and test the network model. The operating system is Windows 10, the CPU is an Intel(R) Core(TM) i5-9400F, the GPU is an NVIDIA GeForce RTX 2070 SUPER, and the system has 16 GB of RAM. The model is trained with the SGD optimizer, using a learning rate of 0.01, a momentum of 0.937, a weight decay of 0.0005, a batch size of 32, and 300 epochs.
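For reference, the stated hyperparameters can be collected in one place. The sketch below is only a plain dictionary; the key names follow the training-argument names of the Ultralytics YOLOv8 package (`optimizer`, `lr0`, `momentum`, `weight_decay`, `batch`, `epochs`), which is an assumption, since the paper does not state which training wrapper was used.

```python
# Hyperparameters as stated in the text; key names are assumed (Ultralytics style).
train_config = {
    "optimizer": "SGD",
    "lr0": 0.01,             # initial learning rate
    "momentum": 0.937,
    "weight_decay": 0.0005,
    "batch": 32,             # batch size
    "epochs": 300,
}

for name, value in train_config.items():
    print(f"{name:>12}: {value}")
```

Keeping the settings in a single mapping makes it easier to apply exactly the same configuration to the baseline and to each ablation variant.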

4.3. Evaluation Metrics

In object detection experiments, selecting appropriate evaluation metrics is crucial. This paper uses precision (P), recall (R), mean average precision (mAP), and frames per second (FPS) as the primary metrics. Precision and recall assess detection accuracy and completeness, mAP evaluates overall model performance across categories, and FPS indicates real-time efficiency.
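The relationship between these metrics can be sketched as follows. The code assumes that detections have already been matched to ground truth boxes (for example at a fixed IoU threshold, which this section does not specify) and uses all-point interpolation of the precision-recall curve; detection toolkits may use a different interpolation, so the numbers are illustrative only. The function names are hypothetical.

```python
def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(is_true_positive, num_ground_truth):
    """Area under the precision-recall curve for one class.

    `is_true_positive` lists detections sorted by descending confidence.
    """
    tp = fp = 0
    recalls, precisions = [], []
    for hit in is_true_positive:
        if hit:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Replace each precision by the maximum precision at any higher recall.
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(ap_per_class):
    """mAP is the mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


p, r = precision_recall(tp=8, fp=2, fn=2)
ap = average_precision([True, False, True], num_ground_truth=2)
```

FPS is measured separately as the number of images processed per second, so a model can improve mAP and still lose FPS, as the ablation results show for individual modules.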

4.4. Ablation Experiments

To evaluate their effects on model performance, ablation experiments were performed for the RG-C2f module, the DySample upsampling operator, and the inner-WIoU loss function. YOLOv8 is used as the baseline model, and the key modules are added individually and then in combination. The models were trained on the TILDA dataset; the symbol “√” indicates that the method was used, and the results are shown in Table 1.

Table 1. Results of ablation experiments.

According to the table, the baseline model records a mAP of 0.756 and an FPS of 103.5. Adding the RG-C2f module yields a slight mAP improvement to 0.761 and raises the FPS to 119.3, while the FLOPs and parameter count are significantly reduced, suggesting that RG-C2f optimizes the computational paths and improves inference efficiency. After adding DySample, the mAP improves to 0.763, but the FPS decreases to 95.4, indicating that it increases the computational overhead while improving detection performance. After the introduction of inner-WIoU, the precision and mAP improve to 0.783 and 0.772, respectively, and the FPS is essentially unchanged, indicating that it enhances localization accuracy without significantly affecting inference speed. When RG-C2f, DySample, and inner-WIoU are introduced together, the model achieves its best performance, with the mAP rising to 0.82 at 114.7 FPS, while the FLOPs remain low at 7.9G. These results verify the effectiveness of the improved model.
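The headline gains follow directly from the figures quoted above. A short check, using only the baseline and full-model values stated in the text:

```python
baseline = {"mAP": 0.756, "FPS": 103.5}
improved = {"mAP": 0.82, "FPS": 114.7}

# Absolute mAP gain in percentage points and relative FPS gain in percent.
map_gain_points = (improved["mAP"] - baseline["mAP"]) * 100
fps_gain_percent = (improved["FPS"] / baseline["FPS"] - 1) * 100

print(f"mAP gain: {map_gain_points:.1f} points")   # mAP gain: 6.4 points
print(f"FPS gain: {fps_gain_percent:.1f}%")        # FPS gain: 10.8%
```

The 6.4-point mAP gain on TILDA matches the improvement reported in the abstract, and it is obtained together with a higher frame rate rather than at its expense.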

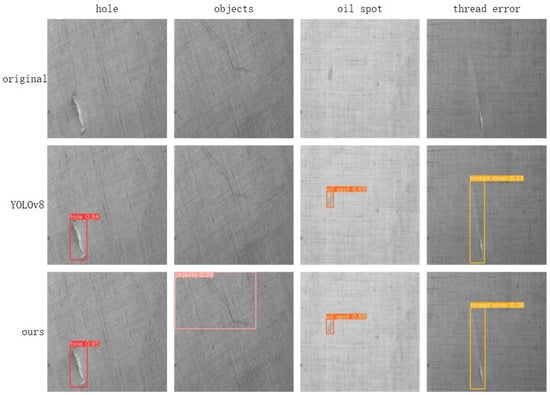

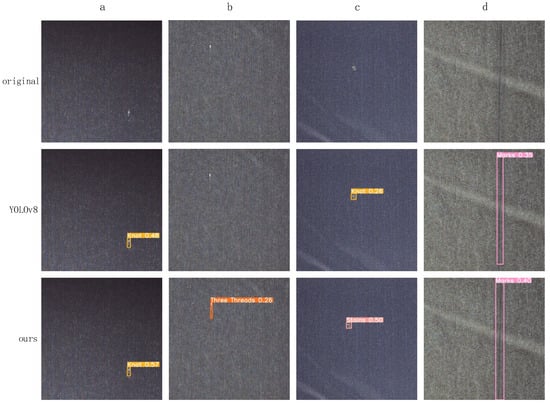

A comparison of detection results between the proposed model and YOLOv8 on the TILDA dataset is illustrated in Figure 8, which shows results for the four categories: holes, foreign objects, oil stains, and misaligned threads. The original YOLOv8 misses a detection in the foreign objects category, and its accuracy in the other categories is also low. The proposed model improves both accuracy and the missed detection rate, showing its good performance.

Figure 8. Comparison of detection results on the TILDA dataset.

4.5. Comparison Experiment

4.5.1. Comparative Analysis of Different Modules

In order to verify the lightweight effect of the proposed modules, the C3, ELAN, C2f, and RG-C2f modules are selected to evaluate the inference speed and resource consumption individually in this paper. The average inference time, frame rate, floating-point operations, and parameters of each module were recorded by performing 2000 inference tests on GPUs. The results are shown in Table 2.

Table 2. Comparative experimental results of different modules.

The experimental results show that the C3 module has moderate computation and parameter counts and relatively fast inference, which makes it suitable for scenarios that balance performance and computational complexity. The RG-C2f module has the highest inference speed (481.65 FPS) and the lowest resource consumption (3.565 GFLOPs and 217,920 parameters), while the ELAN module has the most parameters (492,288) and the slowest inference (328.00 FPS). This indicates that the RG-C2f module has a significant advantage in scenarios that require high inference efficiency.

4.5.2. Comparative Analysis of Different Algorithms

This paper evaluates the effectiveness of the proposed method in fabric defect detection by comparing it with mainstream models such as Faster R-CNN [12], Cascade R-CNN [26], TPH-YOLOv5 [27], YOLOv5, and YOLOv8 [28] on the TILDA dataset. To ensure a fair comparison of inference speed, FPS (Frames Per Second) is measured after a warm-up phase of 200 iterations, followed by 1000 inference iterations to obtain an average inference time per image. The comparison results are shown in Table 3.
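The measurement protocol (warm-up, then timed iterations) can be sketched as below. The function name `measure_fps` and the stand-in `dummy_infer` are hypothetical; a real benchmark would call the detector on an input image, and on a GPU it would also need to synchronize the device before reading the clock (for example with `torch.cuda.synchronize()`), which is omitted here to keep the sketch dependency-free.

```python
import time


def measure_fps(infer, sample, warmup=200, iterations=1000):
    """Return (frames per second, mean latency in seconds) for `infer(sample)`."""
    # Warm-up runs are excluded from timing so that one-off startup costs
    # (caching, lazy initialization) do not distort the average.
    for _ in range(warmup):
        infer(sample)

    start = time.perf_counter()
    for _ in range(iterations):
        infer(sample)
    elapsed = time.perf_counter() - start

    mean_latency = elapsed / iterations
    return 1.0 / mean_latency, mean_latency


calls = []


def dummy_infer(x):
    """Stand-in for a model forward pass; records each call."""
    calls.append(1)
    return sum(v * v for v in x)


fps, latency = measure_fps(dummy_infer, list(range(1000)), warmup=5, iterations=20)
print(f"{fps:.1f} FPS, {latency * 1000:.3f} ms per image")
```

Averaging over many iterations after a warm-up makes the reported FPS less sensitive to startup effects, which matters when models whose speeds differ by only a few frames per second are compared.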

Table 3. Comparative test results of different models.

From the table, the proposed model demonstrates significant advantages in fabric defect detection compared with several mainstream models while retaining the lightweight characteristics of the YOLO series of networks. The mAP of Faster R-CNN is only 0.71, which is attributed to the large difference between its initial anchor boxes and the ground truth boxes in the dataset; its performance declines particularly on fabric defects with extreme aspect ratios. Furthermore, because Faster R-CNN is a two-stage detection network, its detection speed is restricted to 25 FPS, which fails to meet real-time detection requirements. Although YOLOv5 achieves a faster detection speed of 118 FPS, its accuracy is relatively low, with a mAP of 0.686. YOLOv8, as the mainstream network of the YOLO series, achieves a mAP of 0.756 and a detection speed of 103.5 FPS, outperforming both YOLOv5 and Faster R-CNN in accuracy and Faster R-CNN in speed. The model in this paper is therefore built on YOLOv8; it is slightly slower than YOLOv5 but faster than most of the other methods, and it achieves a good balance between accuracy and speed that can meet actual detection needs.

To further evaluate the model’s performance and robustness on a large-scale fabric defect dataset, this paper also introduces the Tianchi fabric defect dataset for extended experiments, and the experimental results are shown in Table 4.

Table 4. Comparative experimental results of different models.

As can be seen from the table, the performance of all the models on the Tianchi dataset is degraded compared to the results on the TILDA dataset. This is because the Tianchi dataset is more complex and diverse, with as many as 20 types of defects and significant differences in shapes, which makes accurate recognition considerably more difficult and poses a greater challenge to each model. Specifically, the mAP value of YOLOv8 is similar to that of Cascade R-CNN, reaching 0.427, but the detection speed of Cascade R-CNN is only 35.1 FPS, which is well below the requirement of real-time detection. In contrast, YOLOv8 has a detection speed of 103.5 FPS while maintaining a comparable mAP, but it is still not optimal.

The improved algorithm proposed in this paper is built on YOLOv8 and still shows excellent performance on the Tianchi dataset. The model not only achieves superior precision and recall, with the mAP reaching 0.448, but also inherits the lightweight design of the YOLO series, and its detection speed of 110.2 FPS is sufficient to meet real-time detection demands. This shows that the algorithm maintains high robustness and efficiency in the face of complex and variable defect detection tasks and is suitable for different datasets and application scenarios.

Figure 9 presents a comparison of detection results between the proposed model and YOLOv8 on the Tianchi dataset. In both sample (a) and sample (d), although YOLOv8 correctly recognizes the defect categories, its confidence level is slightly lower than that of the proposed model. In sample (b), YOLOv8 misses the detection and does not recognize the three threads defect. In sample (c), YOLOv8 produces a false detection, incorrectly recognizing the stain as a hole. The proposed model significantly improves the classification and detection accuracy of fabric defects.

Figure 9. Comparison of detection results on the Tianchi dataset: (a) knot, (b) three threads, (c) stains, (d) marks.

5. Conclusions

This study presents an enhanced YOLOv8 model to improve the efficiency of high-resolution image processing, preserve image details, and adapt better to low-quality samples in fabric defect detection. Detection accuracy and speed are significantly improved by the following innovations. First, the RG-C2f module reduces parameters and computation, which improves inference speed on high-resolution images. Second, the DySample upsampling operator replaces the traditional nearest neighbor interpolation method and retains more image details by learning the optimal sampling pattern, thus improving the detection of small defects. Finally, the designed inner-WIoU loss function introduces dynamic sample weights, which enhances the generalization ability of the model in bounding box regression, especially when dealing with different defect types and large differences in sample quality.

Compared to the original YOLOv8, the enhanced model increases mAP by 6.4% on the TILDA dataset and by 1.5% on the Tianchi dataset. The frame rate of the model is 110.2 FPS on the Tianchi dataset, which meets the real-time detection requirements of industrial production. Therefore, the improved model not only outperforms multiple existing single-stage detection methods in terms of accuracy but also achieves higher inference speed than the YOLOv8 baseline, making it suitable for industrial online defect detection.

Although the improved model significantly enhances accuracy and efficiency, it still faces some limitations. False detections may occur in complex backgrounds and for defects with high similarity. Additionally, the model shows limited adaptability to fabrics under extreme color or lighting changes. The model’s adaptability to various industrial environments and hardware platforms, especially in terms of resource consumption and inference speed for real-time inspection systems, also requires further verification. Future work can focus on improving the model’s robustness and adaptability for broader application scenarios.

Author Contributions

Conceptualization, X.L.; methodology, X.L.; software, K.N.; validation, K.N.; formal analysis, S.W.; investigation, S.W.; resources, X.Z.; data curation, X.Z.; writing—original draft preparation, X.L.; writing—review and editing, Y.J.; visualization, Z.Z.; supervision, Y.J.; project administration, T.W.; funding acquisition, Y.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Natural Science Foundation of Shanxi Province, grant number 202103021224218.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used to support the findings of this study are available from the corresponding author upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Liangtai, F.; Zijing, D.; Wenbo, Z.; Xinyuan, W.; Mimi, Z.; Lingjie, Y.; Runjun, S. Research Progress on Fabric Surface Defect Detection. Wool Text. J. 2023, 4, 94–101.

- Chandurkar, P.; Kakde, M.; Patil, C.; Shirpur, D. Minimization of Defects in Knitted Fabric. Int. J. Text Eng. Process 2016, 2, 13–18.

- Liu, Q.; Wang, C.; Li, Y.; Gao, M.; Li, J. A Fabric Defect Detection Method Based on Deep Learning. IEEE Access 2022, 10, 4284–4296.

- Yang, H.; Jing, J.; Wang, Z.; Huang, Y.; Song, S. YOLOV4-TinyS: A New Convolutional Neural Architecture for Real-Time Detection of Fabric Defects in Edge Devices. Text. Res. J. 2024, 94, 49–68.

- Prunella, M.; Scardigno, R.M.; Buongiorno, D.; Brunetti, A.; Longo, N.; Carli, R.; Dotoli, M.; Bevilacqua, V. Deep Learning for Automatic Vision-Based Recognition of Industrial Surface Defects: A Survey. IEEE Access 2023, 11, 43370–43423.

- Li, X.; Zhu, Y. A Real-Time and Accurate Convolutional Neural Network for Fabric Defect Detection. Complex Intell. Syst. 2024.

- Guo, Y.; Kang, X.; Li, J.; Yang, Y. Automatic Fabric Defect Detection Method Using AC-YOLOv5. Electronics 2023, 12, 2950.

- Zhang, H.; Li, S.; Miao, Q.; Fang, R.; Xue, S.; Hu, Q.; Hu, J.; Chan, S. Surface Defect Detection of Hot Rolled Steel Based on Multi-Scale Feature Fusion and Attention Mechanism Residual Block. Sci. Rep. 2024, 14, 7671.

- Xiao, G.; Hou, S.; Zhou, H. PCB Defect Detection Algorithm Based on CDI-YOLO. Sci. Rep. 2024, 14, 7351.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Chen, M.; Yu, L.; Zhi, C.; Sun, R.; Zhu, S.; Gao, Z.; Ke, Z.; Zhu, M.; Zhang, Y. Improved Faster R-CNN for Fabric Defect Detection Based on Gabor Filter with Genetic Algorithm Optimization. Comput. Ind. 2022, 134, 103551.

- Wang, J.; Yang, J.; Lu, G.; Zhang, C.; Yu, Z.; Yang, Y. Adaptively Fused Attention Module for the Fabric Defect Detection. Adv. Intell. Syst. 2023, 5, 2200151.

- Weihong, L.; Min, L.; Ping, Z.; Shuqin, C.; Xiaoyun, Y. Improved Fabric Defect Detection Algorithm Based on YOLOv8n. Cotton Text. Technol. 2024, 52, 19–25.

- Zhang, Y.; Zhang, H.; Huang, Q.; Han, Y.; Zhao, M. DsP-YOLO: An Anchor-Free Network with DsPAN for Small Object Detection of Multiscale Defects. Expert Syst. Appl. 2024, 241, 122669.

- Wang, Y.; Zhang, L.; Xiong, X.; Kuang, J.; Xiang, S. A Lightweight and Efficient Multi-Type Defect Detection Method for Transmission Lines Based on DCP-YOLOv8. Sensors 2024, 24, 4491.

- Yu, Y.; Lv, H.; Chen, W.; Wang, Y. Research on Defect Detection for Overhead Transmission Lines Based on the ABG-YOLOv8n Model. Energies 2024, 17, 5974.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More Features From Cheap Operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1580–1589.

- Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. RepVGG: Making VGG-Style ConvNets Great Again. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13733–13742.

- Liu, W.; Lu, H.; Fu, H.; Cao, Z. Learning to Upsample by Learning to Sample. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 6027–6037.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. Proc. AAAI Conf. Artif. Intell. 2020, 34, 12993–13000.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized Intersection Over Union: A Metric and a Loss for Bounding Box Regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

- Tong, Z.; Chen, Y.; Xu, Z.; Yu, R. Wise-IoU: Bounding Box Regression Loss with Dynamic Focusing Mechanism. arXiv 2023, arXiv:2301.10051.

- Zhang, H.; Xu, C.; Zhang, S. Inner-IoU: More Effective Intersection over Union Loss with Auxiliary Bounding Box. arXiv 2023, arXiv:2311.02877.

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving Into High Quality Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–28 June 2018; pp. 6154–6162.

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-Captured Scenarios. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 10–17 October 2021; pp. 2778–2788.

- Varghese, R.; Sambath, M. YOLOv8: A Novel Object Detection Algorithm with Enhanced Performance and Robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).