1. Introduction

The printed circuit board (PCB) is the heart of modern electronic devices, providing the foundation for components to be connected functionally and compactly. As technological advances continue, the complexity and miniaturization of PCBs have dramatically increased. Consequently, the inspection and detection of defects in PCBs have become vital aspects of ensuring the quality, functionality, and reliability of electronic products. PCBs are integral to a wide range of industries, including consumer electronics, automotive, aerospace, and telecommunications. Defects such as misaligned components, soldering issues, cracks, and short circuits can adversely affect the performance of electronic devices. Therefore, accurate, efficient, and automated defect detection methods are essential to minimize the risks of faulty products reaching the market.

Traditional PCB inspection techniques, such as manual visual inspection and simple image processing methods, often fail to meet the growing demands for precision and efficiency [1,2]. Manual inspection is time-consuming and prone to human error, and it struggles to detect subtle defects, especially those that are small or hidden within the board’s intricate layers. Basic image processing methods, including edge detection and thresholding, are also limited in their capacity to handle complex images, especially those containing noise or poor contrast. Given these challenges, researchers have increasingly turned to advanced image fusion techniques to improve the accuracy and robustness of PCB defect detection.

Image fusion is a technique that combines information from multiple source images to produce a single image that contains enhanced features and details [3]. In the context of PCB inspection, image fusion can provide a more comprehensive view of the board by merging different types of images. Doing so helps preserve critical information, such as fine details of the edges, textures, and structures, which are essential for accurate defect detection. Among the various image fusion techniques, wavelet-based fusion methods have gained significant attention due to their unique ability to decompose images into different frequency bands, capturing both low-frequency structural information and high-frequency details like edges and textures.

Wavelet-based image fusion methods rely on the discrete wavelet transform (DWT), which decomposes an image into a set of wavelet coefficients corresponding to different frequency subbands [4,5]. The low-frequency bands capture the overall structure and large-scale features of the image, while the high-frequency bands retain fine details, such as edges, textures, and small-scale features. These properties make wavelet-based fusion particularly useful for applications like PCB inspection, where both the macrostructure and microstructure are equally important for accurate defect detection. Wavelet fusion aims to combine the strengths of multiple images by selecting the most relevant information from each frequency band.

Genetic algorithms (GAs) are a class of optimization techniques inspired by the principles of natural selection and evolution [6]. GAs have been successfully applied to many optimization problems, including image fusion, because they can explore a large solution space and converge towards near-optimal solutions. A genetic algorithm evolves a population of candidate solutions over several generations, with the best solutions selected for reproduction in the next iteration. The key strength of GAs lies in their ability to balance exploration of the search space with exploitation of promising solutions.

Despite the advantages of wavelet-based image fusion, effectively optimizing the fusion process remains a significant challenge. Selecting appropriate coefficients from each image and determining their optimal combination is complex. Traditional fusion techniques often rely on heuristic rules or statistical measures, which may not always yield optimal results. Additionally, these methods may struggle to balance high fusion quality with computational efficiency, particularly when handling large or high-resolution images. Meanwhile, conventional GAs are susceptible to premature convergence, where the algorithm converges to a suboptimal solution due to a loss of diversity within the population.

To address these issues, we propose an improved genetic algorithm-based wavelet image fusion technique. The elite strategy ensures that the best solutions in each generation are retained and passed on to the next iteration, preserving the most promising candidates and preventing the algorithm from losing high-quality solutions during the evolutionary process. By integrating the elite strategy into the genetic algorithm, we can improve both the convergence speed and the overall fusion quality, leading to a more effective and efficient fusion process. The contributions of this paper are listed below:

- (1) An elite strategy-enhanced genetic algorithm (ESGA) is proposed to improve the robustness and convergence efficiency of fusion weight optimization. By preserving high-quality individuals across generations and integrating an adaptive mutation mechanism, ESGA effectively mitigates premature convergence while maintaining solution diversity, enhancing fusion performance.

- (2) A synergistic framework combining the improved genetic algorithm with the DWT is developed for multiscale image decomposition. The Haar wavelet is selected as the basis function because of its computational efficiency and its ability to balance localization in the spatial and frequency domains. The low-frequency subbands capture the overall structure of the image, while the high-frequency subbands retain critical details such as edges and textures. This approach effectively integrates the strengths of both source images, avoiding deficiencies in either overall appearance or detail retention.

- (3) A prototype system was developed and tested in a realistic environment to validate the effectiveness of the proposed method. Experimental results demonstrate that the proposed image fusion method significantly enhances the accuracy and efficiency of PCB detection, providing a reliable solution for automated defect detection and quality assessment.

2. Related Work

Image fusion has become a pivotal technique for combining complementary information from multiple image sources, enhancing visual interpretation and decision-making in fields such as medical diagnosis, remote sensing, and surveillance. X. Li, G. Zhang et al. present a competitive mask-guidance fusion method for infrared and visible images, utilizing a multimodal semantic-sensitive mask selection network and a bidirectional-collaboration region fusion strategy to effectively integrate advantageous target regions from different modalities, significantly improving the saliency and structural integrity of fused images [7]. Cheng, C. et al. introduce FusionBooster, a model designed for image fusion tasks, which improves fusion performance through a divide-and-conquer strategy controlled by an information probe, consisting of probe units, a booster layer, and an assembling module, with experimental results showing significant improvements in fusion and downstream detection tasks [8]. Yang Z, Li Y, Tang X and Xie M present a novel infrared and visible image fusion method that leverages a multimodal large language model with CLIP-driven Information Injection (CII) and CLIP-guided Feature Fusion (CFF) strategies to enhance image quality and address complex scene challenges without relying on complex network structures [9]. ReFusion is a meta-learning-based image fusion framework that dynamically optimizes fusion loss through source image reconstruction, employing a parameterized loss function and a three-module structure to adapt to various fusion tasks and consistently achieve superior results [10].

Other contributions in the field include a wavelet-based medical image fusion method proposed by Yang et al., which combines human visual system characteristics with the physical meaning of wavelet coefficients [11]. Furthermore, Wang-yang et al. present a wavelet-based multi-focus image fusion algorithm that combines SSIM and edge energy matrices to select high-frequency coefficients, improving fusion quality by reducing ringing effects and information loss [12]. Similarly, Jadhav proposes a wavelet-based image fusion technique using the dual-tree complex wavelet transform to preserve local perceptual features, thus avoiding ghosting, aliasing, and haloing when combining images captured from different instruments [13]. An advanced wavelet-based fusion method, proposed by Yuan and Yang, integrates the wavelet transform with a Fourier random measurement matrix [14]; this approach reduces time complexity while improving performance through compressive sensing sampling. In the domain of multi-focus image fusion, Yang et al. utilize a dual-tree complex wavelet-based framework that improves fusion accuracy through contrast-based initial fusion and decision-map-guided final fusion [15].

In the field of wavelet-based image fusion, Pei et al. introduce a fusion algorithm integrating histogram equalization and sharpening techniques to enhance degraded images by improving contrast and detail clarity [16]. Singh et al. present a wavelet-based multi-focus image fusion method using method noise and anisotropic diffusion, which enhances real-time surveillance images by improving clarity and visual quality [17]. Additionally, Dou et al. propose a wavelet-based multi-focus image fusion method that optimizes image quality using genetic algorithms and human visual system (HVS)-based weighting to ensure enhanced perceptual clarity [18]. A wavelet-based multi-view fusion method enhances light-sheet fluorescence microscopy (LSFM) imaging by integrating directional information, improving contrast and detail without requiring knowledge of the point spread function (PSF) [19].

Several studies have integrated GAs into wavelet-based fusion [20,21], optimizing the fusion process by dynamically selecting fusion rules and parameters [22,23,24]. A. Ganjehkaviri, M.N. Mohd Jaafar, S.E. Hosseini and H. Barzegaravval explore GAs in multi-objective energy system optimization, addressing Pareto front accuracy, convergence, uniqueness, and dimension reduction, and propose effective quantitative methodologies [25]. Recent advances have introduced novel frameworks such as hybrid genetic algorithms for feature selection and super-resolution algorithms for medical image enhancement, which combine discrete wavelet transforms with adaptive feature selection to improve CT image quality [26,27].

Furthermore, wavelet-based fusion techniques continue to evolve, as demonstrated by Soniminde and Biradar, who improve wavelet-based image fusion by incorporating weighted averages of great boost and CLAHE, enhancing image quality by reducing blurring and artifacts [28]. Singh et al. also target real-time surveillance with a wavelet-based multi-focus fusion approach that uses method noise and anisotropic diffusion [17]. Srivastava et al. develop the Deep-AF model, which integrates CNN-based decision maps, sparse coding, and cross-modality transitions to improve multimodal medical image fusion [29].

Wavelet transforms also play a significant role in enhancing image clarity and preserving features across various fields. The integration of Particle Swarm Optimization (PSO) and the Stationary Wavelet Transform (SWT) has been proposed to optimize parameters for better edge resolution and detail preservation [30]. Additionally, Ravichandran et al. propose an image fusion method using PSO and the Dual-Tree Discrete Wavelet Transform (DTDWT), optimizing fusion weights to improve fusion quality [31].

In summary, image fusion techniques, particularly wavelet-based methods, combine complementary information to improve image quality, and integrating GAs optimizes fusion by dynamically selecting the best parameters, enhancing detail preservation. In PCB detection, a GA-based wavelet fusion approach can significantly improve clarity, aiding the detection of subtle defects and fault analysis, and thus offers substantial practical value for industrial applications.

3. Method

In this paper, the DWT serves as the cornerstone of the multiscale image fusion framework because it decomposes images into spatially localized frequency components while preserving critical edge and texture information. For multispectral fusion of visible (VS) and infrared (IR) images, the DWT-driven mechanism systematically addresses spectral disparities through hierarchical decomposition and reconstruction.

To ensure compatibility between input images, a preprocessing module enforces dimensional consistency through automated resizing and interpolation. Let $\hat{I}_{IR}$ represent the resized IR image of dimensions $p \times q$, derived from the original IR image $I_{IR}$:

$$\hat{I}_{IR} = \mathcal{B}\left(I_{IR};\, p, q\right),$$

where $\mathcal{B}(\cdot)$ denotes a bicubic interpolation operator. This step mitigates spatial misalignment artifacts in subsequent fusion operations.
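The resizing operator can be illustrated with a minimal NumPy sketch. It assumes the Keys cubic-convolution kernel with a = -0.5 (the usual "bicubic" choice), a half-pixel coordinate mapping, and edge replication at the borders; the paper does not state which bicubic variant it uses, so these choices and the function names are our own. Production code would normally call a library resize routine instead.

```python
import numpy as np

def keys_kernel(t: np.ndarray, a: float = -0.5) -> np.ndarray:
    """Keys cubic-convolution kernel (assumed bicubic variant, a = -0.5)."""
    t = np.abs(t)
    w = np.zeros_like(t)
    near = t <= 1
    far = (t > 1) & (t < 2)
    w[near] = (a + 2) * t[near] ** 3 - (a + 3) * t[near] ** 2 + 1
    w[far] = a * t[far] ** 3 - 5 * a * t[far] ** 2 + 8 * a * t[far] - 4 * a
    return w

def interp_matrix(n_in: int, n_out: int) -> np.ndarray:
    """(n_out, n_in) matrix that resamples a 1-D signal with 4-tap bicubic weights."""
    # Map output pixel centres back to input coordinates (half-pixel convention).
    x = (np.arange(n_out) + 0.5) * (n_in / n_out) - 0.5
    taps = np.floor(x).astype(int)[:, None] + np.arange(-1, 3)[None, :]
    weights = keys_kernel(x[:, None] - taps)
    taps = np.clip(taps, 0, n_in - 1)  # replicate edge pixels at the border
    m = np.zeros((n_out, n_in))
    rows = np.repeat(np.arange(n_out), 4)
    np.add.at(m, (rows, taps.ravel()), weights.ravel())  # accumulate clipped taps
    return m

def bicubic_resize(img: np.ndarray, p: int, q: int) -> np.ndarray:
    """Separable bicubic resize of a 2-D image to p rows by q columns."""
    return interp_matrix(img.shape[0], p) @ img @ interp_matrix(img.shape[1], q).T
```

For example, `bicubic_resize(frame, 240, 320)` would upsample a 24 × 32 thermal frame by a factor of 10. Because the kernel weights sum to one, constant regions stay constant, and resizing to the original size returns the input unchanged.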

The decomposition process begins by applying a mother wavelet to each input image, splitting it into approximation and detail coefficients at multiple scales. The approximation coefficients capture low-frequency content, representing the overall structure of the image, while the detail coefficients encode high-frequency information, such as edges and textures, in three orientations: vertical, horizontal, and diagonal. Iterating this decomposition across multiple levels yields a hierarchical representation of the input images, enabling precise control over feature extraction at different spatial resolutions. This multiscale approach ensures that both global thermal patterns and fine structural details are preserved for subsequent fusion.

The subband fusion process employs distinct strategies for low-frequency and high-frequency components to maximize the retention of complementary information. For low-frequency subbands, an energy-adaptive weighting scheme dynamically allocates fusion weights based on the relative energy contributions of the VS and IR modalities. This prioritizes the modality with the stronger energy signature in each coarse-scale region, preserving thermal patterns from the IR image and structural integrity from the VS image. For high-frequency subbands, a gradient saliency maximization criterion selectively retains the coefficients with higher edge activity by comparing local gradient energy between modalities.
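A single-level sketch of the decomposition and of the two subband fusion rules is given below in NumPy. The Haar filters match the basis named in the contributions, but the concrete rule formulas are assumptions because the text does not give them: the low-frequency weight is taken as the local (3 × 3) energy of the VS coefficients divided by the summed local energy of both modalities, and the high-frequency rule keeps, per coefficient, whichever modality has the larger local gradient energy. All function names are hypothetical; a multilevel version would recurse on the LL subband.

```python
import numpy as np

def haar_dwt2(img):
    """One level of the orthonormal 2-D Haar DWT; image sides must be even."""
    a, b = img[0::2, 0::2], img[0::2, 1::2]
    c, d = img[1::2, 0::2], img[1::2, 1::2]
    ll = (a + b + c + d) / 2  # approximation (low-frequency)
    lh = (a + b - c - d) / 2  # row differences: horizontal detail
    hl = (a - b + c - d) / 2  # column differences: vertical detail
    hh = (a - b - c + d) / 2  # diagonal detail
    return ll, lh, hl, hh

def haar_idwt2(ll, lh, hl, hh):
    """Exact inverse of haar_dwt2 (perfect reconstruction)."""
    out = np.empty((2 * ll.shape[0], 2 * ll.shape[1]))
    out[0::2, 0::2] = (ll + lh + hl + hh) / 2
    out[0::2, 1::2] = (ll + lh - hl - hh) / 2
    out[1::2, 0::2] = (ll - lh + hl - hh) / 2
    out[1::2, 1::2] = (ll - lh - hl + hh) / 2
    return out

def local_sum(x, r=1):
    """Sum over a (2r+1) x (2r+1) window, replicating edge values."""
    p = np.pad(x, r, mode="edge")
    out = np.zeros(x.shape)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += p[dy:dy + x.shape[0], dx:dx + x.shape[1]]
    return out

def fuse_low(ll_vs, ll_ir, eps=1e-12):
    """Energy-adaptive weighting (assumed form): weight proportional to local energy."""
    e_vs, e_ir = local_sum(ll_vs ** 2), local_sum(ll_ir ** 2)
    w = e_vs / (e_vs + e_ir + eps)
    return w * ll_vs + (1 - w) * ll_ir

def fuse_high(h_vs, h_ir):
    """Gradient-saliency maximum (assumed form): keep the more salient coefficient."""
    def saliency(h):
        gy, gx = np.gradient(h)
        return local_sum(gx ** 2 + gy ** 2)
    return np.where(saliency(h_vs) >= saliency(h_ir), h_vs, h_ir)

def fuse_haar(vs, ir):
    """Single-level DWT fusion of two equally sized images with even sides."""
    ll_v, *high_v = haar_dwt2(vs)
    ll_i, *high_i = haar_dwt2(ir)
    fused_high = [fuse_high(hv, hi) for hv, hi in zip(high_v, high_i)]
    return haar_idwt2(fuse_low(ll_v, ll_i), *fused_high)
```

Fusing an image with itself returns the image unchanged, which is a quick sanity check that both rules and the inverse transform are consistent.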

The inverse wavelet reconstruction phase synthesizes the fused approximation and detail coefficients into a final fused image. This critical step reverses the multilevel decomposition process through the inverse discrete wavelet transform (IDWT). The fused low-frequency subband provides the foundational structure of the image, while the fused high-frequency subbands inject detailed edge and texture information. The fused approximation $LL_F$ and detail coefficients $\{LH_F, HL_F, HH_F\}$ are synthesized through the IDWT, denoted $\mathcal{W}^{-1}$:

$$I_F = \mathcal{W}^{-1}\left(LL_F, LH_F, HL_F, HH_F\right).$$

Because the DWT is perfectly invertible, the reconstruction step itself introduces no information loss; which information is retained from each source image is determined by the fusion rules.

In addition, in this study, we use a specialized version of GA, called the Elite Decision-Making Genetic Algorithm (EDA), to optimize the parameters involved in wavelet-based image fusion. The elite decision-making approach ensures that the best solutions from each generation are retained, increasing the convergence rate of the algorithm. When applying EDA to wavelet-based image fusion, the selection and dynamic adjustment of key parameters, including mutation rate, crossover probability, elite strategy, population size, and selection pressure, are essential for optimizing performance and ensuring effective convergence. In this study, the mutation rate is initially set to 0.2 to promote exploration by introducing diversity into the population. As the algorithm progresses, the mutation rate is reduced according to a linear decay function, allowing more focused exploitation of high-quality solutions. Similarly, the crossover probability is set to 0.8 to promote the recombination of superior solutions, and it may be decreased in the later stages of optimization to fine-tune existing solutions. The elite strategy further accelerates convergence by retaining the top 20% of individuals in each generation and passing them directly to the next generation without modification. The population size is set to 50, which balances exploration against computational cost; it can also be adjusted dynamically during the run to improve convergence.
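The parameter settings above can be tied together in a compact sketch of an elite-preserving GA. Only the numbers (population 50, crossover probability 0.8, initial mutation rate 0.2 with linear decay, top 20% kept as elites) come from the text. The real-valued encoding in [0, 1], binary tournament selection, blend crossover, Gaussian mutation with standard deviation 0.1, and the mutation-rate floor of 0.01 are assumptions made to obtain a runnable example; the function name `esga` is hypothetical.

```python
import numpy as np

def esga(fitness, n_genes, pop_size=50, generations=60, p_cross=0.8,
         p_mut0=0.2, p_mut_min=0.01, elite_frac=0.2, seed=0):
    """Maximise `fitness` over vectors in [0, 1]^n_genes with elitism."""
    rng = np.random.default_rng(seed)
    pop = rng.random((pop_size, n_genes))                 # random initial population
    n_elite = max(1, int(round(elite_frac * pop_size)))   # 20% of 50 -> 10 elites
    history = []
    for g in range(generations):
        fit = np.array([fitness(ind) for ind in pop])
        order = np.argsort(fit)[::-1]                      # best individual first
        history.append(fit[order[0]])
        elites = pop[order[:n_elite]].copy()               # carried over unchanged
        # Linear decay of the mutation rate from p_mut0 towards p_mut_min.
        p_mut = p_mut0 - (p_mut0 - p_mut_min) * g / max(1, generations - 1)

        def tournament():
            # Binary tournament selection (assumed selection scheme).
            i, j = rng.integers(pop_size, size=2)
            return pop[i] if fit[i] >= fit[j] else pop[j]

        children = []
        while len(children) < pop_size - n_elite:
            p1, p2 = tournament(), tournament()
            child = p1.copy()
            if rng.random() < p_cross:                     # blend (arithmetic) crossover
                alpha = rng.random()
                child = alpha * p1 + (1 - alpha) * p2
            mask = rng.random(n_genes) < p_mut             # per-gene Gaussian mutation
            child[mask] += rng.normal(0.0, 0.1, mask.sum())
            children.append(np.clip(child, 0.0, 1.0))
        pop = np.vstack([elites, np.array(children)])
    fit = np.array([fitness(ind) for ind in pop])
    best = int(np.argmax(fit))
    return pop[best], fit[best], history
```

Because the elites are copied unchanged and the fitness is deterministic, the best fitness recorded in `history` can never decrease from one generation to the next, which is the practical effect of the elite strategy described above.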

The fitness function $F(\mathbf{x}_i)$ evaluates the quality of an individual solution by measuring how well the fusion performs in terms of edge preservation, contrast enhancement, and feature extraction. Each individual $i$ is a vector $\mathbf{x}_i = (x_{i1}, x_{i2}, \ldots, x_{in})$, where $x_{ij}$ represents a parameter related to the wavelet transformation. A composite fitness score combines multiple performance metrics:

$$F(\mathbf{x}_i) = \omega_1 R + \omega_2 P + \omega_3 S + \omega_4 C,$$

where $R$ represents RSD, $P$ represents PSNR, $S$ represents SF, $C$ represents image clarity, and $\omega_1$, $\omega_2$, $\omega_3$, and $\omega_4$ are weight coefficients. RSD measures the consistency and uniformity of the image fusion process; lower RSD values indicate better consistency across the fused image. PSNR measures the quality of the reconstructed image; higher PSNR values indicate that the fused image retains more of the original image quality. SF measures the amount of information or detail in the image; a higher spatial frequency indicates more spatial detail, which is important for accurate feature extraction. Image clarity quantifies the sharpness and overall visual clarity of the fused image; higher clarity values suggest better preservation of important features and edges.

The elite selection mechanism ensures that only the top solutions are chosen to form the next generation. Crossover and mutation operations are applied to generate new candidate solutions from the selected individuals. After crossover and mutation, the best solutions (the elite individuals) are carried over to the next generation without alteration, improving the convergence speed and avoiding premature stagnation. The genetic algorithm proceeds iteratively until convergence is reached or a predefined number of generations is completed.
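The composite fitness can be sketched as follows. PSNR and SF use their standard definitions; image clarity is implemented as the average gradient, a common sharpness measure, and RSD as the ratio of standard deviation to mean. Both of those definitions, the choice of reference image for PSNR, the default weights, and the negative sign on the RSD term (chosen because lower RSD is described as better) are assumptions rather than the paper's exact formulas.

```python
import numpy as np

def psnr(ref, img, peak=1.0):
    """Peak signal-to-noise ratio in dB for images scaled to [0, peak]."""
    mse = np.mean((np.asarray(ref, float) - np.asarray(img, float)) ** 2)
    return np.inf if mse == 0 else 10 * np.log10(peak ** 2 / mse)

def spatial_frequency(img):
    """SF = sqrt(RF^2 + CF^2) from horizontal and vertical first differences."""
    img = np.asarray(img, float)
    rf2 = np.mean(np.diff(img, axis=1) ** 2)  # squared row frequency
    cf2 = np.mean(np.diff(img, axis=0) ** 2)  # squared column frequency
    return np.sqrt(rf2 + cf2)

def average_gradient(img):
    """Average gradient, used here as the 'image clarity' measure (assumption)."""
    img = np.asarray(img, float)
    gx = np.diff(img, axis=1)[:-1, :]
    gy = np.diff(img, axis=0)[:, :-1]
    return np.mean(np.sqrt((gx ** 2 + gy ** 2) / 2))

def rsd(img, eps=1e-12):
    """Relative standard deviation std / mean (assumed definition of RSD)."""
    img = np.asarray(img, float)
    return np.std(img) / (np.mean(img) + eps)

def fitness(fused, reference, w=(1.0, 1.0, 1.0, 1.0)):
    """Weighted composite score; RSD is subtracted since lower RSD is better."""
    w1, w2, w3, w4 = w
    return (-w1 * rsd(fused) + w2 * psnr(reference, fused)
            + w3 * spatial_frequency(fused) + w4 * average_gradient(fused))
```

In practice the four terms have very different magnitudes (PSNR in dB versus SF and clarity in intensity units), so the weights would also need to act as normalizing factors.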

Finally, the fused image $I_F(x, y)$ is obtained by applying the two-dimensional inverse discrete wavelet transform (IDWT) to each subband after fusion:

$$I_F(x, y) = \mathcal{W}^{-1}\left(LL_F, LH_F, HL_F, HH_F\right),$$

where $I_F(x, y)$ is the final fused image in the spatial domain, giving the pixel intensity at spatial location $(x, y)$ after the fusion process is complete; $\mathcal{W}^{-1}$ denotes the IDWT operator, whose role is to reconstruct the spatial-domain image from the wavelet subband coefficients; $LL_F$ is the fused low-frequency subband; $LH_F$ is the fused high-frequency subband containing horizontal detail information; $HL_F$ is the fused high-frequency subband containing vertical detail information; and $HH_F$ is the fused high-frequency subband corresponding to diagonal details.

4. Experiment Results and Discussion

This study employs a comprehensive set of evaluation metrics for image fusion assessment, encompassing RSD, PSNR, SF, and image clarity. In addition, the experimental platform includes a 13th Gen Intel(R) Core(TM) i9-13900HX 2.20 GHz (Intel Corporation, Santa Clara, CA, USA) and an NVIDIA GeForce RTX 4060 Laptop GPU (NVIDIA Corporation, Santa Clara, CA, USA).

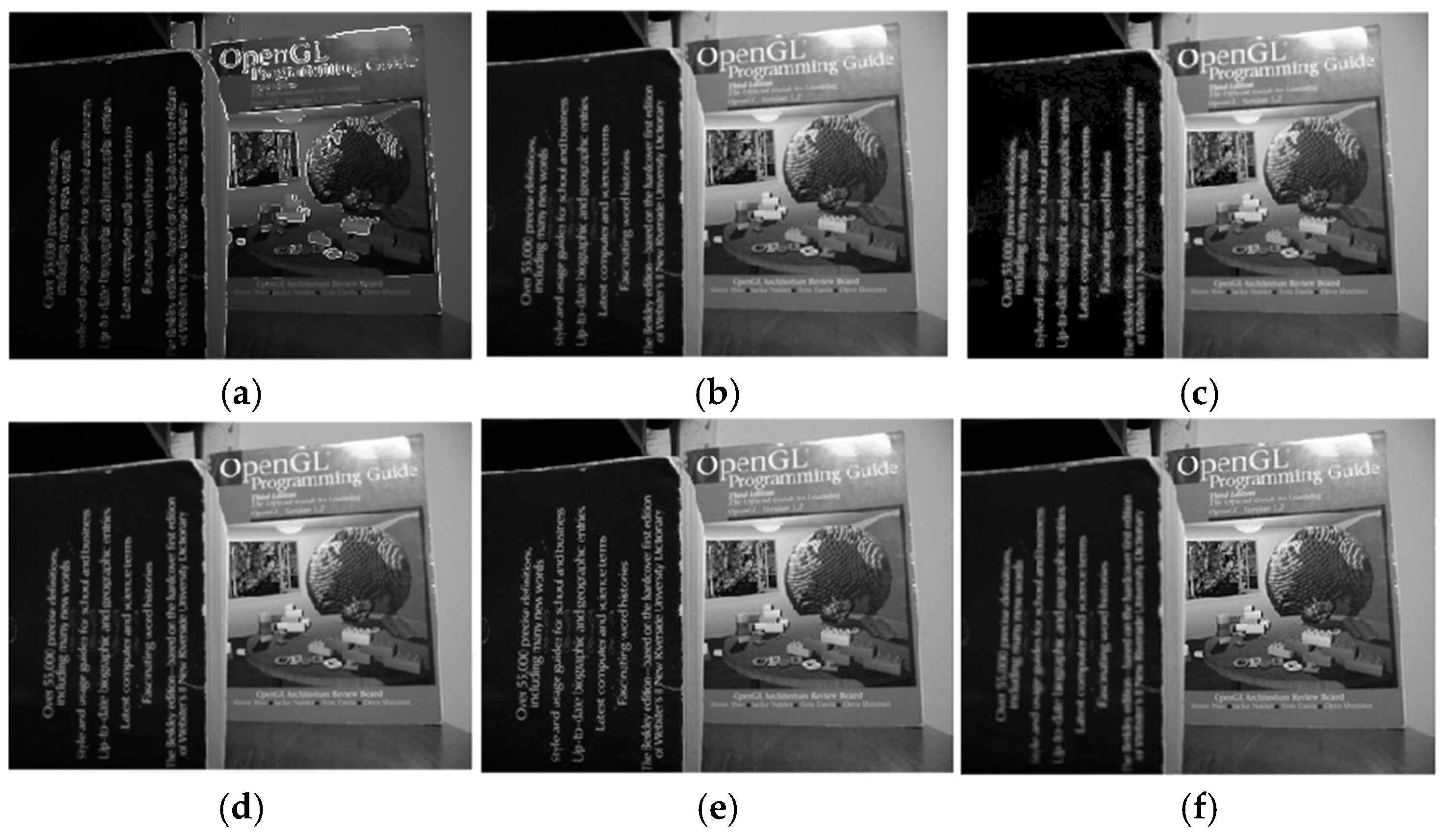

The designed model first loads the two images to be fused from the specified file paths, retrieves the imported image data through the handles structure, converts the data into double-precision matrices, and normalizes them to the range [0, 1]. The dimensions of the two images are then made identical using the clip_images function, and both images are decomposed using a two-dimensional wavelet transform. Next, the wavelet coefficients of the two images are input into the improved genetic algorithm. The algorithm first generates a random initial population, in which each individual represents a potential solution, and evaluates each individual with a fitness function designed to assess the quality of that solution; in this study, the fitness function incorporates RSD, PSNR, SF, and image clarity as evaluation criteria. In each generation, the individual with the highest fitness is retained, so the best solution found so far is never lost. This accelerates the convergence of the genetic algorithm, preserves high-quality solutions, and ultimately yields the best fusion weight combination. A set of image fusion results is illustrated in Figure 1.
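The loading and alignment steps can be approximated in a few lines of NumPy. The paper's model uses its own clip_images function, whose exact behavior is not described; `crop_to_common_size` below is a hypothetical stand-in that center-crops both images to their common size (rounded down to even sides so that one Haar level applies), and min-max scaling is assumed for the [0, 1] normalization.

```python
import numpy as np

def to_unit_double(img):
    """Convert to float64 and min-max normalize to [0, 1] (assumed normalization)."""
    x = np.asarray(img, dtype=np.float64)
    lo, hi = x.min(), x.max()
    return np.zeros_like(x) if hi == lo else (x - lo) / (hi - lo)

def crop_to_common_size(a, b, even=True):
    """Center-crop two 2-D images to their shared size (hypothetical clip_images stand-in)."""
    h = min(a.shape[0], b.shape[0])
    w = min(a.shape[1], b.shape[1])
    if even:  # a single Haar level needs even side lengths
        h -= h % 2
        w -= w % 2

    def center(x):
        top = (x.shape[0] - h) // 2
        left = (x.shape[1] - w) // 2
        return x[top:top + h, left:left + w]

    return center(a), center(b)
```

Cropping discards border pixels, whereas the bicubic resizing described in the Method section keeps the full field of view; which is preferable depends on how well the two cameras are aligned.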

Figure 1a,b show the original images to be fused, while Figure 1c presents the final fusion result. A total of ten image groups were used for testing in this study, with the results presented in Table 1.

Based on the results, the RSD values of the improved method are generally close to or equal to 1, indicating that image stability has not improved significantly. In some cases, the RSD values increased slightly; for example, in Test4 the RSD rose from 0.91353 to 0.91938. However, the proposed method demonstrates outstanding performance in enhancing PSNR, SF, and overall image clarity, leading to a significant improvement in image quality. The PSNR values increase notably after enhancement, particularly in Test1-enhanced and Test5-enhanced, where the values are substantially higher than those of the original test method. This suggests that the enhancement process effectively improves image quality and reduces noise. Although in a few cases the PSNR did not increase significantly or decreased slightly, the overall trend indicates an improvement in PSNR after enhancement. SF generally increases following enhancement, implying that the enhancement typically improves image details and sharpness; while some tests show a slight decrease in SF, the overall effect is an increase in spatial frequency. Furthermore, image clarity is generally improved, with the enhancement process showing a significant effect on image sharpness, particularly in the representation of image details.

Additionally, the proposed approach was compared with commonly used image fusion methods, including the pixel averaging method and the Pulse Coupled Neural Network (PCNN) method [32,33]. A set of representative examples illustrating the image fusion performance of the improved approach, the conventional method, the pixel averaging method, and the PCNN method is presented in Figure 2. Specifically, Figure 2a shows the fusion result obtained using the pixel averaging method, Figure 2b displays the result from the PCNN method, Figure 2c presents the fusion outcome of the conventional method before improvement, and Figure 2d demonstrates the fusion result achieved by the proposed approach.

As observed in Figure 2, from a human visual perspective, the pixel averaging method (Figure 2a) exhibits relatively poor performance. In the PCNN result (Figure 2b), the distant object (flag) and the foreground object (person) appear somewhat blurred compared with the proposed approach (Figure 2d). Similarly, in the conventional method (Figure 2c), the distant flag appears less sharp than in Figure 2d. The proposed approach, shown in Figure 2d, achieves clearer image fusion across all regions. Furthermore, a comparative analysis of the fusion performance of the proposed method, the pixel averaging method, and the PCNN method across five sets of images is presented in Table 2.

As shown in Table 3, the proposed approach outperforms the PCNN method on multiple key metrics, particularly PSNR and image clarity, and exhibits greater stability and sharpness than pixel-level fusion methods. The enhanced fusion approach effectively improves image quality while achieving well-balanced performance across these aspects, preserving image details, sharpness, and overall quality.

The proposed method shows a significant advantage in PSNR, with particularly high values for Test1-enhanced (PSNR = 123.239) and Test5-enhanced (PSNR = 120.192), where it notably outperforms both the PCNN and pixel-level methods. This indicates that the enhanced fusion method better retains image details and signal integrity while reducing noise introduced during the fusion process. A high PSNR value typically signifies superior image quality with minimal distortion.

Furthermore, the proposed method consistently achieves an RSD value of approximately 1, indicating excellent stability and consistency in image fusion. While pixel-level methods tend to have lower RSD values, the enhanced method maintains well-balanced performance through optimization, minimizing variation between images without compromising image quality. In terms of SF, the Test-enhanced method exhibits moderate yet effective performance, particularly for Test3-enhanced (SF = 477.058) and Test2-enhanced (SF = 41.3933), demonstrating a strong capability to preserve image details. Although its SF values are generally lower than those of pixel-level fusion methods, the proposed approach maintains clearer image structures and detail than the PCNN method, particularly in edge and texture representation. The Test-enhanced method also achieves consistently high image clarity, particularly for Test1-enhanced (image clarity = 292.1254) and Test5-enhanced (image clarity = 160.055), highlighting its effectiveness in enhancing image sharpness. Higher image clarity signifies more distinct details and improved visual perception, allowing fine features and contours to be presented better. However, although the Test-enhanced method generally improves image clarity, it does not always produce a perceptible improvement across all images, and its effect may vary with the fusion context and image properties. Nevertheless, for many application scenarios, the Test-enhanced method provides a high-quality fusion solution and is a strong choice for improving image quality and sharpness.

To further evaluate the proposed method, additional experiments were performed; the results are shown in Figure 3.

The proposed method outperforms the other methods on several metrics, most notably PSNR, which reaches 124.5946 and far exceeds all other methods, indicating that its fused images have a very high signal-to-noise ratio and fidelity. Its RSD of 1 is lower than those of PCNN, CVT, and PCA, demonstrating better grayscale stability. Its SF of 15,101.3567 is lower than those of PCNN and CVT but still relatively high, so the detailed information of the image is effectively preserved. In addition, its image clarity of 1022.9067 is better than that of the traditional method and PCA, and second only to CVT and PCNN. Overall, the proposed method performs outstandingly in PSNR and achieves excellent levels on the other key indicators, making it particularly suitable for application scenarios that require very high fusion quality.

Subsequently, the proposed method was applied to a more practical scenario involving the detection of temperature anomalies in components on printed circuit boards (PCBs). During PCB operation, components may malfunction, especially when the circuit input is subjected to external excitation. In these cases, the malfunctioning component typically experiences abnormal heating, resulting in a higher temperature rise than during normal circuit operation. This thermal anomaly is often a key indicator of component failure.

To address this issue, a fault detection system was designed to identify and mark areas with abnormally high temperatures in infrared images. The method aims to accurately detect thermal anomalies that could signify potential faults, thus providing a reliable tool for monitoring the health of PCBs. The prototype designed for this purpose is shown in Figure 4.

Figure 4a presents the overall framework of the prototype, while Figure 4b,c show the front and back views of the physical prototype, respectively. This system aims to enhance the detection and diagnosis of temperature-related faults in PCBs, which is essential for improving the reliability and safety of electronic devices.

The design of the prototype closely resembles that of a standard camera, with a familiar form factor and operating logic that align with user expectations. The front of the prototype is equipped with a sensor module responsible for capturing image data and ensuring the quality and accuracy of the data acquisition process. This sensor module consists of an infrared array sensor and a visible light camera. The MLX90640 infrared array sensor, a pre-calibrated 32 × 24 pixel thermal infrared sensor that adheres to industrial standards, was chosen for its low cost compared with other infrared sensors on the market. The sensor communicates with the Raspberry Pi via I2C, enabling the collection of infrared image data from the target area. To capture visible light images, a generic USB camera was selected; it is easy to integrate, highly compatible, and capable of capturing high-quality visible light images. On the rear of the prototype, a display module shows the collected images and provides intuitive visual feedback to the user. The top of the prototype has a simple, user-friendly interface consisting of a power button and a capture button, allowing users to power on the device and capture images with a single press. Internally, the prototype contains all necessary circuit connections, the power modules, and the main control board, a Raspberry Pi 4B, which ensures the system’s performance and stability while keeping the external design simple.

The overall operational flow of the prototype is illustrated in Figure 5. The image acquisition module is responsible for capturing image data on the prototype. The infrared image acquisition function establishes communication with the thermal imaging camera via the SMBus library, configures the camera parameters, and uses the mlx90640 library to retrieve temperature data. The temperature data are then normalized, and bilinear interpolation is applied to increase the image resolution, ultimately generating a colorized thermal map. In testing, the infrared image refresh rate reached 2 frames per second. The visible light image acquisition function integrates physical buttons controlled via GPIO. It interacts with the USB visible light camera through the OpenCV library and, upon button activation, captures and stores the current visible light and infrared images locally for subsequent processing. Based on user input, the data transmission module sends the image data to a central processing platform via a TCP socket.

After transmission, the acquired visible light and thermal images are processed to ensure uniformity in format and size. The images then undergo DWT decomposition into four subbands: LL, LH, HL, and HH. LL is the low-frequency subband, while LH, HL, and HH are the high-frequency subbands capturing the horizontal, vertical, and diagonal components, respectively. Each subband is assigned a weight calculated by the system’s ESGA, based on the RSD, PSNR, SF, and image clarity values achievable for the fused image. Once the weights are assigned, the system produces the fused subbands LLF, LHF, HLF, and HHF, where ‘F’ denotes the fused version of the subband. The system then performs the IDWT to obtain the fused image and outputs its RSD, PSNR, SF, and image clarity values.
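The per-subband weighting step can be written as a small function. We assume each ESGA individual holds four weights in [0, 1], one per subband, and that each fused subband is the convex combination w · VS + (1 - w) · IR; the text does not state the exact combination rule, so this is an illustrative sketch, and `fuse_weighted` and the dictionary layout are hypothetical.

```python
import numpy as np

SUBBANDS = ("LL", "LH", "HL", "HH")

def fuse_weighted(vs_bands, ir_bands, weights):
    """Combine matching subbands as w * VS + (1 - w) * IR, one weight per subband.

    vs_bands, ir_bands: dicts keyed by 'LL', 'LH', 'HL', 'HH' (assumed layout).
    weights: four values in [0, 1], e.g. the genes of the best ESGA individual.
    Returns a dict keyed 'LLF', 'LHF', 'HLF', 'HHF'.
    """
    if len(weights) != len(SUBBANDS):
        raise ValueError("expected one weight per subband")
    fused = {}
    for name, w in zip(SUBBANDS, weights):
        if not 0.0 <= w <= 1.0:
            raise ValueError(f"weight for {name} must lie in [0, 1], got {w}")
        fused[name + "F"] = w * vs_bands[name] + (1.0 - w) * ir_bands[name]
    return fused
```

Keeping each weight in [0, 1] makes every fused coefficient an interpolation between the two modalities, so the GA search space stays bounded.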

In the test experiment illustrated in Figure 6, a typical functional PCB was powered on and tested with the proposed system. The visible light, infrared, and fused images of the PCB in its normal operating state were recorded, as shown in Figure 6a–c, respectively. Using these as reference images, an automatic annotation algorithm for thermal images was built into the system: when the system detects red high-temperature regions that deviate from the distribution pattern observed in Figure 6b, it automatically annotates the corresponding areas in the image.
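The section does not specify how deviations from the reference distribution are detected. One simple way to realize the idea, shown here purely as an assumed stand-in for the authors' annotation algorithm, is to subtract a temperature map recorded in the normal operating state from the current map, threshold the difference, and group the flagged pixels into 4-connected regions whose bounding boxes can be drawn on the image. The threshold `delta` (in °C), the minimum region size `min_pixels`, and the function name are hypothetical.

```python
import numpy as np
from collections import deque

def find_hot_regions(current, reference, delta=5.0, min_pixels=4):
    """Return inclusive bounding boxes (row0, col0, row1, col1) of regions
    where `current` exceeds `reference` by more than `delta`."""
    cur = np.asarray(current, dtype=np.float64)
    ref = np.asarray(reference, dtype=np.float64)
    mask = (cur - ref) > delta          # pixels hotter than in the normal state
    seen = np.zeros(mask.shape, dtype=bool)
    h, w = mask.shape
    boxes = []
    for r in range(h):
        for c in range(w):
            if not mask[r, c] or seen[r, c]:
                continue
            # Breadth-first flood fill over 4-connected neighbours.
            queue = deque([(r, c)])
            seen[r, c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            # Ignore isolated pixels, which are more likely sensor noise.
            if len(pixels) >= min_pixels:
                ys = [p[0] for p in pixels]
                xs = [p[1] for p in pixels]
                boxes.append((min(ys), min(xs), max(ys), max(xs)))
    return boxes
```

Each box is returned as (row0, col0, row1, col1). To draw it with OpenCV, pass `(col0, row0)` and `(col1, row1)` as the corner points to `cv2.rectangle`, scaling the coordinates first if the box was computed on the 32 × 24 grid but is drawn on an upsampled image.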

Next, a specific component region on the PCB was artificially heated to simulate a component failure. The PCB was then powered on and tested with the system, and the prototype captured both visible light and thermal images. These images were transmitted to the central processing platform, in this case a PC, where they were fed into the Wavelet Transform Image Fusion Scheme Based on the Improved Genetic Algorithm developed for this project. The scheme produced the fused image and highlighted the areas of abnormal heating, and the processed image was then sent to the prototype’s display screen. To display an image, the prototype first converts its format and scales it to 320 × 240 pixels to match the screen size. It then calls a drawing function that maps the image data onto the screen, converting the pixel color values to a 16-bit color format.
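The display step (scaling to 320 × 240 and converting to a 16-bit color format) can be sketched as follows. The section names neither the 16-bit format nor the scaling method, so this sketch assumes RGB565, a widely used 16-bit layout for small TFT screens, and nearest-neighbour scaling; the function names are hypothetical. The input is an 8-bit RGB array; frames captured through OpenCV are stored in BGR order and would first need `cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)`.

```python
import numpy as np

SCREEN_W, SCREEN_H = 320, 240

def resize_nearest(rgb, out_w=SCREEN_W, out_h=SCREEN_H):
    """Nearest-neighbour resize of an (H, W, 3) uint8 image."""
    in_h, in_w = rgb.shape[:2]
    rows = np.arange(out_h) * in_h // out_h   # source row for each output row
    cols = np.arange(out_w) * in_w // out_w   # source column for each output column
    return rgb[rows[:, None], cols[None, :]]

def to_rgb565(rgb):
    """Pack 8-bit R, G, B channels into 16-bit RGB565 values."""
    r = rgb[..., 0].astype(np.uint16)
    g = rgb[..., 1].astype(np.uint16)
    b = rgb[..., 2].astype(np.uint16)
    # Keep the top 5 bits of red and blue and the top 6 bits of green.
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)

def prepare_for_display(rgb):
    """Scale to the screen size, then convert to 16-bit color."""
    return to_rgb565(resize_nearest(rgb))
```

Green gets the extra bit because the eye is most sensitive to it. Many SPI display drivers expect each 16-bit value in big-endian byte order, in which case `values.astype('>u2').tobytes()` produces the byte stream; the required order depends on the particular display and is an assumption here.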

Figure 7 illustrates the process: Figure 7a shows the original visible light image, Figure 7b the thermal image, Figure 7c the fused image, and Figure 7d the image after automatic annotation of the abnormal heating areas.

The functionality tests and the analysis of the resulting images show that the locations of abnormally high temperatures can be identified, and these correspond to the components with the highest likelihood of failure. The proposed system monitors PCBs for faults by fusing and analyzing visible light and thermal infrared images. This dual-modal approach improves the detection of anomalies such as overheating, which may not be apparent from visual inspection alone. The captured visible light and thermal images are fused with the Wavelet Transform Image Fusion Scheme based on an Improved Genetic Algorithm, producing a fused image that highlights areas of abnormal heating and gives a more accurate and comprehensive view of the PCB’s operating status. The experiment demonstrates the system’s effectiveness in detecting errors: it captured and processed visible and thermal images of a PCB with an artificially heated component region, and the fused image clearly showed the overheated areas, which the automatic annotation algorithm then marked. This capability is important for real-time monitoring, because it allows overheating that could lead to component failure to be detected immediately. Combining thermal and visible light data represents both the thermal characteristics and the visual context of the board, which improves error detection in cases where visual inspection alone would miss hidden problems such as hot spots or malfunctions. Automatic annotation of the abnormal heating areas further supports quick identification and localization of faults.