Abstract

With advances in digital technology, including deep learning and big data analytics, new methods have been developed for autism diagnosis and intervention. Emotion recognition and the detection of autism in children are prominent subjects in autism research. Previous research has typically used single-modal data to analyze the emotional states of children with autism and has found that the accuracy of recognition algorithms must be improved. Our study creates datasets on the facial and speech emotions of children with autism in their natural states. A convolutional vision transformer-based emotion recognition model is constructed for the two distinct datasets. The findings indicate that the model achieves accuracies of 79.12% and 83.47% for facial expression recognition and Mel spectrogram recognition, respectively. Consequently, we propose a multimodal data fusion strategy for emotion recognition and construct a feature fusion model based on an attention mechanism, which attains a recognition accuracy of 90.73%. Finally, gradient-weighted class activation mapping is used to produce prediction heat maps that visualize facial expressions and speech features under four emotional states. This study offers a technical direction for the use of intelligent perception technology in the realm of special education and enriches the theory of emotional intelligence perception of children with autism.

1. Introduction

Children with autism spectrum disorder (ASD) experience impairments in their social-emotional functioning and abnormalities in their emotional development compared with typically developing children. Children with autism have difficulties in recognizing and understanding others’ emotions and in processing their own emotions [1]. Typically, they exhibit inadequate or excessive emotional reactions [2] and cannot convey their feelings in a way that is recognizable and comprehensible to other people, which can lead to severe problems in social interactions [3] and may consequently affect their mental health. Therefore, accurately identifying and understanding the emotions of children with autism is essential for developing targeted interventions and support strategies. However, at present, the emotional states of children with autism are predominantly evaluated and interpreted by trained therapists or psychologists, which can be a challenging and long-term process for inexperienced families and intervenors.

Existing research on the emotions of children with autism primarily focuses on comparing the emotion recognition abilities of children with autism with those of typically developing children. There is little research on the external emotional expressions and internal emotional activities of individual children with autism. In affective computing research on the learning of typically developing children, many studies have tracked the changes in individual learning emotions [4,5]. These studies involve creating datasets of children’s emotions and employing deep learning technology to construct models for recognizing emotions [6], thereby enabling the intelligent detection of children’s learning emotions. However, compared with those of typically developing children, the facial muscle movements of children with autism are different [7], and their facial expressions exhibit asynchrony [8] and a lower complexity [9]. These findings lead to the assumption that affective computing models and datasets for the general population cannot be directly adapted and applied to this special group of children. In addition, the language development of children with ASD lags behind that of typically developing children: they produce fewer verbal expressions, and their speech carries more emotional elements. For example, special vocalizations such as “babbling” and “growling” convey negative emotions. Therefore, the speech modality can be used as a key reference for emotion recognition of children with ASD.

The current research on the automatic emotion recognition of children with ASD faces the following limitations:

- (1) The existing studies mainly rely on single-modal data such as facial expressions to analyze the emotions of children with ASD. This approach may not comprehensively capture these children’s emotional states. For example, the emotional expression of happiness is typically accompanied by multiple simultaneous responses such as smiling, high pitch, and quick speech. To enhance the accuracy and robustness of emotion recognition, the emotional information from the facial expressions and speech of children with autism can be cross-corroborated and compensated for. Therefore, the construction of multimodal datasets plays a crucial role in the emotion recognition of children with autism.

- (2) Emotion recognition algorithms have low accuracies. Traditional machine learning methods such as the support vector machine (SVM) or decision tree have limited performance in processing complex and high-dimensional data. The commonly employed deep learning model convolutional neural network (CNN) for emotion computation, which relies on local facial cues, cannot capture the interplay between different facial regions from a holistic viewpoint. The vision transformer model can effectively compute and assign weights to different facial regions to extract highly discriminative features from facial images. This capability enables the vision transformer to meet the specific requirements for emotion computation for children with autism.

To address the aforementioned issues, first, this study produces three datasets of facial expression images, speech Mel spectrograms, and Mel-frequency cepstral coefficients (MFCCs) from real-life videos of 33 children with autism at an educational rehabilitation facility. Second, we construct emotion recognition models with diverse architectures for distinct datasets and conduct training experiments to compare the performance of the models. On this basis, a feature fusion emotion recognition model is created using the convolutional vision transformer network and the multi-scale channel attention module. The experimental results confirm the efficacy of this fusion strategy. Finally, gradient-weighted class activation mapping is used to visualize the model training outcomes, and the resulting heat map provides the basis for the manual identification of emotions of children with autism.

2. Related Works

2.1. Characteristics of Emotional Expressions of Children with Autism

The Diagnostic and Statistical Manual of Mental Disorders (DSM-5) defines ASD as a neurodevelopmental disorder characterized by impairments in social interaction and communication functioning, narrow interests, and stereotyped repetitive behaviors [10]. Compared with typically developing children, children with ASD exhibit distinct variations in facial expression, speech, and other forms of emotional expression.

Regarding facial expressions, Guha et al. [9] compared basic emotions between children with ASD and typically developing children, and found that the former have less dynamic complexity and left–right facial region symmetry and a more balanced movement intensity. Samad et al. [7] discovered that the area and extent of activation of the facial muscles in children with ASD differ from those in typically developing children. According to Metallinou et al. [8], children with autism have more asynchrony in their facial movements, exhibiting greater roughness and range in the lower region. Jacques et al. [11] characterized the facial expressions of children with autism as incongruous, inappropriate, flat, stiff, disorganized, ambiguous, and as having fewer positive emotions.

Children with autism have speech expression disorders [12], and their intonation and rhythm are significantly different from those of typically developing children [13,14]. Yankowitz et al. [15] found that children with autism exhibit delayed pronunciation, heterogeneous language performance, abnormal intonation rhythm, and monotonous or robotic-like speech, and express emotions through vocalizations such as screaming, shouting, and trembling. The following speech characteristics are also present in children with autism: prolonged vowels, stereotyped repetitions of meaningless discourse sequences [16], and single-word verbal expressions [17].

2.2. Emotional Perception of Children with Autism

Research has indicated that facial expressions account for 55% of human emotional expressions, whereas voice and words account for 38% and 7%, respectively [18]. This shows that an individual’s emotional state can be judged mainly from facial expressions and speech. Hence, data analysis based on facial expressions and speech features has been widely used in the field of emotion recognition for children with autism.

Facial expression is an important factor in evaluating an individual’s emotional state. Using electromyography, Rozga et al. [19] analyzed facial muscle movements under different emotions in children with autism and found that their zygomatic and frowning muscles show less variation than those of typically developing children. Jarraya et al. [20] used Inception-ResNet-V2 to extract facial expression features such as fear and anger in the meltdown state of children with autism, and developed a system for detecting and alerting meltdown emotions. Fatma et al. [21] developed a CNN-based emotion recognition system for children with autism that recognized six facial expressions in real time through a camera while children were playing games, and they generated analysis reports that can be used for the development of targeted therapeutic interventions.

Another critical factor in identifying the emotions of children with autism is speech characteristics. Landowska et al. [22] conducted a study on the application of emotion recognition techniques for children with autism. They found that SVM and neural networks are the most frequently used techniques, with the best effects. Ram et al. [23] developed a speech emotion database for children with autism that is based on the Tamil language, retrieved acoustic features such as MFCCs using SVM, and identified five emotions, including anger and sadness. Sukumaran et al. [24] created a speech-based emotion prediction system for children with autism by extracting two acoustic features, namely MFCCs and spectrograms, and using the multilayer perceptron classifier to predict children’s seven basic emotions. Emotion perception methods for children with autism have been progressing towards intelligence, with developments in deep learning and large data analysis technologies. Neural networks are increasingly becoming the dominant technology in this field. Nevertheless, most current studies have been limited to analyzing single-modal data such as facial expressions or speech characteristics. Few studies have investigated the emotion perception of children with autism from the perspective of multimodal data fusion.

For MFCCs, Garg et al. [25] conducted a comparative analysis of MFCC, MEL, and Chroma features for emotion prediction from audio speech signals, finding that MFCCs exhibited superior performance in distinguishing between emotional states due to their ability to model the human auditory system’s frequency response.

2.3. Multimodal Fusion in Emotion Recognition

Human emotions are inherently multimodal and usually interwoven by multiple dimensions of information, including facial expressions, speech features, and physiological signals. Multimodal emotion recognition is a method for thoroughly assessing emotions by combining data from various sources, resulting in higher accuracy and robustness than single-modal recognition [26]. Children with autism typically display rigid, exaggerated, or inadequate facial expressions that fail to effectively convey their emotional condition. In addition, they express their emotions through unique vocal tones and patterns. For example, despite feeling angry, certain children may maintain calm facial expressions while channeling their feelings through repetitive screaming. Single-modal emotion identification may not always effectively identify the feelings of children with autism. In contrast, multimodal fusion can deeply analyze the complementary nature of the data, making it more appropriate for emotion detection in children with autism.

Typical multimodal fusion strategies can be classified into three categories, namely data-, feature-, and decision-level fusion. Data-level fusion involves combining the original data from various modalities into a single feature matrix and subsequently performing feature extraction and decision discrimination on the fused data [27]. It effectively retains the original data information but fails to fully use the complementary information included in different modal data because of the redundant data. Feature-level fusion fuses modal data into a new feature matrix after extracting them through a feature extraction network [28]. The commonly used feature fusion methods include serial, parallel, and attention-based fusion [29]. Attention-based fusion methods enable deep neural network models to selectively dismiss irrelevant information and focus on crucial information. This approach is widely used in the existing research to enhance the performance of models [30]. Decision-level fusion involves the local classification of various modal data using appropriate deep learning models, followed by the global fusion of the model output values using maximum, mean, or Bayes’ rule fusion. The training processes of this method are independent and do not interfere with or impact one another, but they are prone to overlooking the complementary information between distinct modal data.
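As a point of reference for the decision-level strategy described above, the following minimal sketch fuses the class probabilities of two independently trained classifiers with the mean or maximum rule. The function name `fuse_decisions` and the probability values are hypothetical and chosen only for illustration; they are not outputs of any model in this study.

```python
EMOTIONS = ["calm", "happy", "sad", "angry"]


def fuse_decisions(prob_lists, rule="mean"):
    """Fuse per-modality class probabilities with the mean or maximum rule.

    Returns the index of the winning class and the fused scores.
    """
    n_classes = len(prob_lists[0])
    if rule == "mean":
        fused = [sum(p[k] for p in prob_lists) / len(prob_lists)
                 for k in range(n_classes)]
    elif rule == "max":
        fused = [max(p[k] for p in prob_lists) for k in range(n_classes)]
    else:
        raise ValueError("rule must be 'mean' or 'max'")
    return fused.index(max(fused)), fused


# Hypothetical outputs: the face looks calm while the voice sounds angry.
face_probs = [0.50, 0.10, 0.10, 0.30]
speech_probs = [0.10, 0.05, 0.15, 0.70]

mean_idx, mean_scores = fuse_decisions([face_probs, speech_probs], rule="mean")
max_idx, max_scores = fuse_decisions([face_probs, speech_probs], rule="max")
```

In this toy case both rules select "angry", although the face classifier alone would have predicted "calm". Because each classifier is trained separately, neither rule can learn how the two modalities interact, which is the limitation noted above.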

3. Dataset

3.1. Data Collection

3.1.1. Participants

Data were obtained through follow-up filming at a non-profit educational rehabilitation institution that specializes in the rehabilitation of children with autism and related disorders in Zhejiang Province, China. A total of 36 children with autism, consisting of 18 girls and 18 boys, were recruited for the study. Prior to participation, their parents signed an informed consent form and a confidentiality agreement. The recruited children met the DSM-5 diagnostic criteria, were diagnosed with ASD by a professional pediatrician, and had no psychiatric or developmental disorders other than autism. Among the children, three boys were unwilling to wear recording equipment during the filming process and were thus excluded from the study. Finally, 33 children completed the task for data collection.

The final sample of 33 participants maintained an approximate gender ratio of 6:5 (18 boys and 15 girls). All participants were between 4-5 years of age, representing a focused developmental stage that is critical for early intervention and emotional development assessment. The children had been receiving rehabilitation training for varying durations, ranging from 5 to 28 months, allowing for the observation of emotional expressions across different stages of the therapeutic process. This age-focused sample provides valuable insights into emotional expression patterns during a crucial developmental period when intervention strategies are typically most effective for children with autism.

3.1.2. Methods

In data collection, the emotion-evoking approach was abandoned in favor of filming children with autism in their natural state of learning and in a one-on-one rehabilitation classroom setting. Owing to stereotyped behaviors and resistance to changes in daily routines, the installation of video cameras in the classroom can cause children with autism to resist and disrupt filming. To prevent this, a 14-day period was scheduled for the subjects to adapt to the equipment, facilitating the children’s swift adjustment to the modified learning environment. After the adaptation period, the data collectors chose either handheld or static filming depending on the type of the children’s activities. Ultimately, through this experiment, 698 video clips were collected, of which 31 were deleted because the teacher’s explanation was too long or the children’s vocalizations were too few, resulting in 667 suitable video clips.

3.2. Data Processing

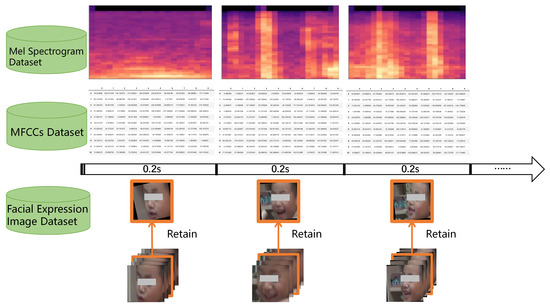

Facial emotions can be inferred from facial activity units in a single frame of an image; however, split-frame speech data are too short to discern the emotion conveyed therein. In the field of speech signal processing, the signal features of speech are widely accepted to remain essentially in a quasi-steady state over a short time span. Given these features, the study matches the timing of the two modalities according to the identical time principle. Adobe Premiere Pro CC 2017 (version 11.0) was used to separate the sound and picture of the recorded video into time-synchronized silent video and audio files, and the video and audio files were then divided into 0.2-s segments, as shown in Figure 1.

Figure 1.

Modal timing matching method.
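The timing match can be illustrated with a short sketch that pairs each 0.2-s segment with its video frame range and its audio sample range. The 25 frames-per-second rate is the one reported in Section 3.2.1; the audio sampling rate of 16 kHz and the function names are assumptions made for illustration, since the text does not state them.

```python
from fractions import Fraction

SEGMENT_S = Fraction(1, 5)   # 0.2-s segment length stated in the text
FPS = 25                     # video frame rate reported in Section 3.2.1
SAMPLE_RATE = 16_000         # assumed audio sampling rate (not given in the text)


def segment_bounds(duration_s, segment_s=SEGMENT_S):
    """Return (start, end) times of all complete segments in a clip."""
    n_segments = int(Fraction(duration_s) // segment_s)
    return [(i * segment_s, (i + 1) * segment_s) for i in range(n_segments)]


def match_modalities(duration_s):
    """Pair the frame range and audio sample range of each segment."""
    pairs = []
    for start, end in segment_bounds(duration_s):
        # Both ranges are half-open: [first index, index after the last).
        frames = (int(start * FPS), int(end * FPS))
        samples = (int(start * SAMPLE_RATE), int(end * SAMPLE_RATE))
        pairs.append({"frames": frames, "samples": samples})
    return pairs


pairs = match_modalities(2)  # a hypothetical 2-s clip
```

Exact fractions are used instead of floating-point times so that segment boundaries fall on whole frame and sample indices.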

3.2.1. Facial Expression Image Processing

The video footage captured during the study includes the child’s head, upper body, or entire body, together with the surrounding backdrop environment. This has an impact on the precision of emotion detection. Hence, it was necessary to conduct video processing, including video framing, face cropping, and face alignment.

Initially, the original video was transformed into individual frame images using the OpenCV library (version 4.5.3) in Python 3.8. The video has a frame rate of 25 frames per second, and one frame was automatically captured every five frames, which corresponds to one image per 0.2-s segment. In addition, to isolate the subjects’ facial expressions, the face regions were detected and retained using a single-shot multibox detector so that the analysis focuses on the fine-grained state of the facial expressions. Finally, the spatial constraints of the facial key points in the Dlib 19.22.0 model were used to align the facial images in different positions with the coordinates of the overall centers of the left and right eyes as the base point. This reduced the impact of sideward, tilted, and lowered head poses on the positioning of facial feature points. After manual screening, 24,793 facial expression images were finally obtained.
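The frame sampling and the eye-based alignment can be sketched without any imaging library. The snippet below only computes which frame indices are kept and the in-plane rotation that would level the eyes; the function names and the sample eye coordinates are hypothetical, and the actual detection and warping steps (SSD, Dlib, OpenCV) are not reproduced here.

```python
import math


def sampled_frame_indices(n_frames, step=5):
    """Indices kept when one frame is captured every `step` frames."""
    return list(range(0, n_frames, step))


def eye_alignment(left_eye, right_eye):
    """Return the base point and roll angle for aligning a face.

    The base point is the midpoint between the two eye centers. The angle
    (in degrees, image coordinates with y pointing down) is the tilt of the
    line joining the eyes; rotating the image about the base point by this
    angle makes that line horizontal.
    """
    (lx, ly), (rx, ry) = left_eye, right_eye
    center = ((lx + rx) / 2.0, (ly + ry) / 2.0)
    angle = math.degrees(math.atan2(ry - ly, rx - lx))
    return center, angle


kept = sampled_frame_indices(25)                     # one second of 25-fps video
center, angle = eye_alignment((100, 120), (160, 150))  # hypothetical eye centers
```

In practice, the returned base point and angle could be passed to an affine warp to produce the aligned face crop.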

3.2.2. Speech Feature Processing

The literature review indicates that despite the exploration of diverse speech features and fusion techniques, Mel spectrograms and MFCCs have emerged as the predominant methods in speech emotion recognition due to their complementary analytical properties. While alternative approaches such as wavelet transforms and Linear Predictive Coding exist, research suggests that increasing feature dimensionality does not consistently enhance performance due to information redundancy from feature collinearity.

Mel spectrograms offer comprehensive time–frequency representations that align with human auditory perception, enabling the effective differentiation of emotional states in the speech of children with autism. MFCCs, derived through discrete cosine transform operations on Mel spectrograms, yield cepstral analyses that capture speech signal resonance peaks and energy distribution across frequency ranges while reducing dimensionality. This methodological approach establishes a balance between feature richness and computational efficiency.

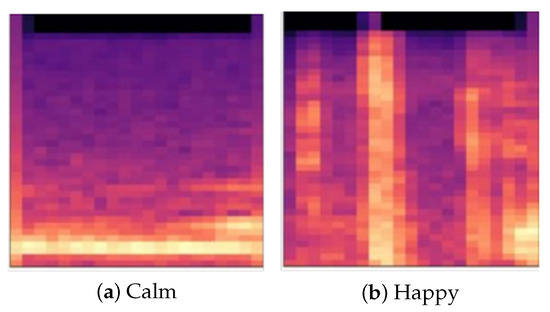

Comparative analysis of Mel spectrograms from speech samples of children with autism across emotional states revealed discriminative patterns, as shown in Figure 2. In happy emotional states, higher energy values were observed in high-frequency regions, whereas in calm states, energy remained predominantly concentrated in low-frequency domains. These distinct spectral patterns validate the effectiveness of Mel spectrograms in representing speech features across different emotional states in children with autism. Future research could investigate additional time–frequency representations to potentially enhance emotion recognition performance.

Figure 2.

Comparison of the Mel spectrograms of calm and happy emotions. The color intensity in the spectrograms represents the energy magnitude, with brighter colors indicating higher energy levels.

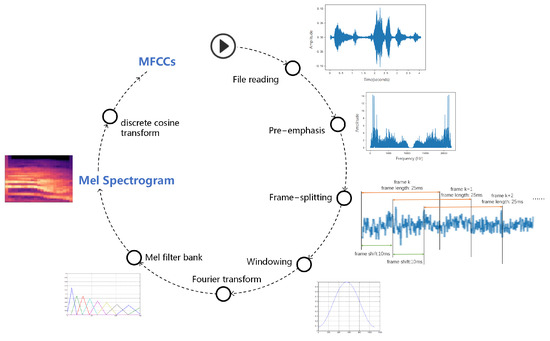

The generation of the Mel spectrograms and MFCCs is illustrated in Figure 3. The original audio file was subjected to sequential pre-emphasis, frame splitting, windowing, Fourier transformation, and Mel filter bank operations to produce Mel spectrograms. Finally, a discrete cosine transform operation was applied to generate MFCCs. In accordance with the preceding steps, 667 audio segments collected from the filming process were submitted to the speech feature extraction process. After manual screening, the final numbers of Mel spectrograms and MFCCs were 24,793 and 942,134, respectively.

Figure 3.

Generation of Mel spectrograms and MFCCs. In the filter bank visualization (bottom left), different colored lines represent individual Mel filters across the frequency spectrum.
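The processing chain in Figure 3 can be sketched in NumPy as follows. All numeric settings (16 kHz sampling rate, 25-ms frames, 10-ms hop, 512-point FFT, 40 Mel filters, 13 coefficients, pre-emphasis factor 0.97) are common defaults assumed for illustration and are not the settings of this study; the discrete cosine transform is written out explicitly and left unnormalized.

```python
import numpy as np


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filter_bank(n_filters, n_fft, sr):
    """Triangular filters spaced evenly on the Mel scale."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_filters + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sr).astype(int)
    fb = np.zeros((n_filters, n_fft // 2 + 1))
    for m in range(1, n_filters + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):          # rising edge
            fb[m - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):         # falling edge
            fb[m - 1, k] = (right - k) / max(right - center, 1)
    return fb


def mel_and_mfcc(signal, sr=16000, frame_len=400, hop=160, n_fft=512,
                 n_mels=40, n_mfcc=13, pre_emph=0.97):
    # 1. Pre-emphasis boosts high frequencies.
    emphasized = np.append(signal[0], signal[1:] - pre_emph * signal[:-1])
    # 2. Frame splitting into overlapping frames.
    n_frames = 1 + (len(emphasized) - frame_len) // hop
    idx = np.arange(frame_len)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = emphasized[idx]
    # 3. Windowing with a Hamming window.
    frames = frames * np.hamming(frame_len)
    # 4. Fourier transform and power spectrum.
    power = np.abs(np.fft.rfft(frames, n_fft)) ** 2 / n_fft
    # 5. Mel filter bank and log compression give the log-Mel spectrogram.
    log_mel = np.log(power @ mel_filter_bank(n_mels, n_fft, sr).T + 1e-10)
    # 6. DCT-II over the Mel axis gives the MFCCs.
    n = np.arange(n_mels)
    basis = np.cos(np.pi * (n[None, :] + 0.5) * np.arange(n_mfcc)[:, None] / n_mels)
    mfcc = log_mel @ basis.T
    return log_mel, mfcc


t = np.arange(3200) / 16000.0
segment = np.sin(2 * np.pi * 440.0 * t)   # synthetic 0.2-s tone standing in for speech
log_mel, mfcc = mel_and_mfcc(segment)
```

With these assumed settings, one 0.2-s segment yields 18 frames, so `log_mel` has shape (18, 40) and `mfcc` has shape (18, 13). The segment shape used in Section 4.4 (38 time steps, 36 features) implies different settings that the text does not specify.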

3.3. Data Annotation

This study uses PyQt5 to develop a multimodal emotion tagging tool that can concurrently annotate facial photos, Mel spectrograms, and MFCCs for a given time series. The annotation team comprises a rehabilitation trainer for children with autism and three individuals responsible for tagging emotions. The rehabilitation trainer devises the tagging standards on the basis of the facial activity unit of children with autism, including four emotion types, namely calm, happy, sad, and angry (Table 1).

Table 1.

Data annotation standards.

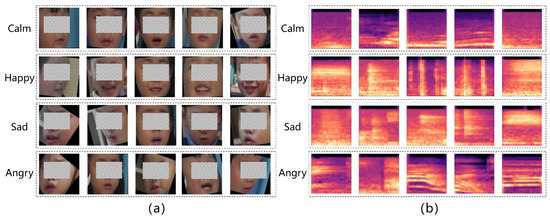

Using the data annotation tool we developed, we carried out data annotation according to a multi-round, multi-person process to produce a multimodal dataset with emotion labels, as shown in Figure 4.

Figure 4.

Partial examples from the facial expression image and Mel spectrogram datasets: (a) facial expression images; (b) Mel spectrograms.

3.4. Data Division

Upon the completion of the data annotation process, we obtained 24,793 facial expression images and Mel spectrograms. We used horizontal flipping and Gaussian blurring to augment the dataset and improve the generalization ability of the model. As a result, we acquired 49,586 facial expression images and Mel spectrograms. The facial expression image and Mel spectrogram datasets were split into training, validation, and test sets at a 7:2:1 ratio. Table 2 displays the composition and distribution of the datasets. During data division, we ensured that data from the same child appeared in only one subset (training, validation, or test) to prevent data leakage and provide a more accurate assessment of the model’s generalization ability.

Table 2.

Numbers of facial expression images and speech Mel spectrograms in the datasets.

The MFCC dataset was also divided according to the same ratio, as shown in Table 3.

Table 3.

Number of MFCC datasets.
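The child-level division described in this section, in which all samples of a child fall into exactly one subset, can be sketched as follows. The function names, the random seed, and the rounding of the 7:2:1 ratio at the level of children are assumptions for illustration; the procedure actually used to reach the reported subset sizes is not specified in the text. A horizontal flip on a toy image is included as an example of the augmentation step.

```python
import random


def split_by_child(child_ids, ratios=(0.7, 0.2, 0.1), seed=0):
    """Assign whole children to the training, validation, and test subsets."""
    ids = sorted(set(child_ids))
    random.Random(seed).shuffle(ids)
    n_train = round(ratios[0] * len(ids))
    n_val = round(ratios[1] * len(ids))
    return {
        "train": set(ids[:n_train]),
        "val": set(ids[n_train:n_train + n_val]),
        "test": set(ids[n_train + n_val:]),
    }


def horizontal_flip(image):
    """Mirror an image given as a list of pixel rows."""
    return [row[::-1] for row in image]


splits = split_by_child(range(33))          # 33 participants, hypothetical IDs 0..32
flipped = horizontal_flip([[1, 2, 3], [4, 5, 6]])
```

Each sample is then routed to the subset that contains its child ID. Because children contribute different numbers of samples, a 7:2:1 ratio over children only approximates a 7:2:1 ratio over samples.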

4. Proposed Methodology

4.1. Model Architecture Selection Rationale

When designing emotion recognition systems for children with autism, the selection of appropriate model architectures is crucial due to the unique characteristics of emotional expressions in this population. After carefully evaluating various deep learning architectures, we selected specific models for each modality based on their theoretical advantages and empirical performance.

For facial expression recognition, we compared pure transformer architectures (ViT) with hybrid convolutional-transformer models (CvT). While we considered other advanced architectures such as the Swin Transformer [31], with its hierarchical structure and shifted window approach, and ConvNeXt [32], which modernizes traditional CNN architectures, we selected ViT and CvT for our comparative analysis for several reasons. First, they represent two fundamental paradigms in vision processing (pure self-attention vs. convolution-enhanced attention). Second, their architectural simplicity facilitates the direct comparison of how different feature extraction mechanisms perform on the uniquely challenging facial expressions of children with autism, who often display reduced dynamic complexity and increased facial asymmetry [9].

For speech emotion recognition, we evaluated the following two complementary approaches: spectral representation (Mel spectrograms) processed by CvT and sequential feature representation (MFCCs) processed by Bi-LSTM. Although alternatives such as wavelet transforms, Linear Predictive Coding (LPC), and raw audio models like WaveNet (Oord et al., 2016 [33]) were considered, we selected Mel spectrograms and MFCCs, as they remain the gold standard in speech emotion recognition and effectively approximate human auditory perception. The Bi-LSTM architecture was specifically chosen for MFCCs due to its proven capability to model sequential dependencies in temporal data, while CvT was applied to Mel spectrograms to leverage its ability to capture both local spectral patterns and global frequency relationships.

Our multimodal fusion approach employs a feature-level fusion strategy with an attention mechanism, which allows the model to dynamically weight the contributions of each modality. This design choice was motivated by the observation that children with autism often express emotions asynchronously across different channels (Metallinou et al., 2013 [8]), necessitating a flexible fusion approach rather than the simple concatenation or averaging of features.

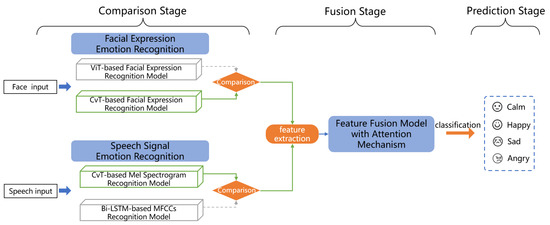

We propose an emotion recognition architecture for children with autism based on the fusion of expression and speech features as shown in Figure 5, which consists of the following three stages: comparison, fusion, and prediction.

Figure 5.

Emotion recognition architecture for children with autism based on the fusion of expression and speech features.

The comparison stage uses single-modal data to conduct model experiments. (1) Emotion recognition based on facial expression involves comparing the performance of two models with different structures, i.e., ViT and CvT, and selecting the best one as the facial expression feature extraction network in the following stage. (2) The purpose of emotion recognition using speech signals is to determine which representation between MFCCs and the Mel spectrogram is more effective for model training. Our study employs the Bi-LSTM model for processing MFCCs and the CvT model for processing the Mel spectrogram.

In the fusion stage, the previously screened model is used as a feature extraction network to process the input data. A new batch of facial expression and Mel spectrogram image data is processed into two feature vectors, and they are then fed into the feature fusion model based on the attention mechanism, which automatically calculates the weight values and fuses the significant features.

During the prediction stage, the model’s efficacy is assessed by employing a test set. The accuracy of the feature fusion model is determined by computing the percentage of correctly predicted samples by the model relative to the total number of samples.

4.2. ViT-Based Facial Expression Recognition Model

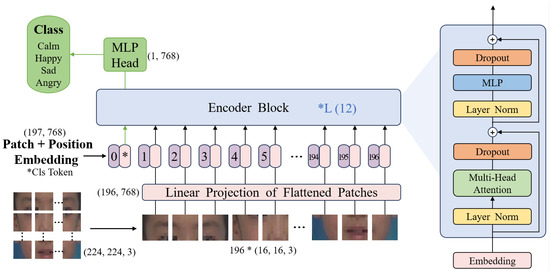

The ViT model (Figure 6) is one of the models used in this study to classify facial expression images, and it is among the earliest applications of a pure transformer architecture to image classification. ViT converts images into sequential tokens that can be processed by the transformer structure through patching operations, and it is an image classification model based entirely on the self-attention mechanism. In image classification tasks with large datasets, ViT can achieve better performance than CNNs.

Figure 6.

ViT model structure. The asterisks (*) in the diagram indicate: “*Cls Token” represents the classification token, “*L (12)” refers to 12 stacked encoder layers, and the asterisk in “196 * (16, 16, 3)” denotes multiplication of dimensions.

The procedure for image processing with ViT consists of the following steps:

1. Divide the facial image into several patches, apply linear projection to each patch, and then incorporate positional encoding. Specifically, the entire token sequence is as follows:

   $$z_0 = [x_{class};\ x_p^1 E;\ x_p^2 E;\ \dots;\ x_p^N E] + E_{pos}$$

   where $N$ is the number of patches, $x_p^i E$ is the vector of each patch after linear projection, $x_{class}$ is the initial part of the input sequence used for classification, and $E_{pos}$ is the vector carrying the positional information.

2. The token sequence $z$ is processed by $L$ Encoder blocks, where each block is composed of three components as follows: a Multi-head Self-Attention module (MSA), Layer Normalisation (LN), and Multi-Layer Perceptron (MLP). The computation of these components is as follows:

   $$z'_l = \mathrm{MSA}(\mathrm{LN}(z_{l-1})) + z_{l-1}, \quad l = 1, \dots, L$$

   $$z_l = \mathrm{MLP}(\mathrm{LN}(z'_l)) + z'_l, \quad l = 1, \dots, L$$

3. The final token sequence after several stacked Encoders is represented as $z_L$, with the categorization information contained in the vector $z_L^0$. The output $y$, obtained after processing by LN, is the ultimate classification result, i.e.,

   $$y = \mathrm{LN}(z_L^0)$$
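The first step of the procedure (patching, linear projection, classification token, and positional information) can be sketched in NumPy. The 224 × 224 input size follows from the 196 patches of size (16, 16, 3) shown in Figure 6; the embedding width of 768 and the random initial values are assumptions for illustration, not trained parameters.

```python
import numpy as np

rng = np.random.default_rng(0)
PATCH = 16
DIM = 768   # assumed embedding width


def patchify(image, patch=PATCH):
    """Split an (H, W, C) image into flattened non-overlapping patches."""
    h, w, c = image.shape
    gh, gw = h // patch, w // patch
    x = image[:gh * patch, :gw * patch].reshape(gh, patch, gw, patch, c)
    x = x.transpose(0, 2, 1, 3, 4)                # (gh, gw, patch, patch, c)
    return x.reshape(gh * gw, patch * patch * c)  # (N, patch * patch * c)


image = rng.random((224, 224, 3))                 # stand-in for a face crop
patches = patchify(image)                         # N = 196 patches
E = rng.normal(0.0, 0.02, (patches.shape[1], DIM))        # linear projection
x_class = np.zeros((1, DIM))                              # classification token
E_pos = rng.normal(0.0, 0.02, (patches.shape[0] + 1, DIM))  # positional information

# Token sequence fed to the first Encoder block: N + 1 tokens of width DIM.
z0 = np.concatenate([x_class, patches @ E], axis=0) + E_pos
```

The classification token occupies row 0 of `z0`, which is the row read out after the last Encoder block to produce the classification result.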

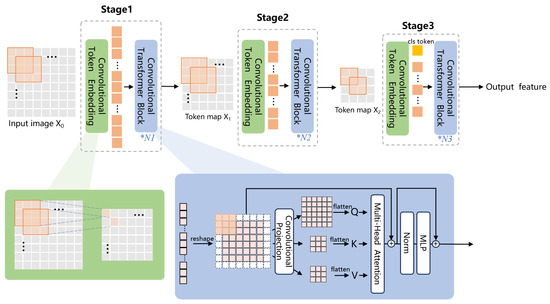

4.3. CvT-Based Models for Facial Expression and Mel Spectrogram Recognition

While more complex architectures like the Swin Transformer with its hierarchical structure could potentially offer advantages in multi-scale feature learning, the CvT model was selected for its balanced approach in combining convolutional operations with transformer mechanisms, which is particularly suitable for capturing both local details and global context in the facial expressions of children with autism.

CNNs excel at capturing the local details of images by utilizing local receptive fields, shared weights, and spatial downsampling. In this study, convolutional operations are introduced into the ViT structure to construct the CvT model for the classification of facial expression images and Mel spectrograms (Figure 7). The model includes three stages, each comprising a Convolutional Token Embedding module and a Convolutional Projection module. The Convolutional Token Embedding module utilizes convolution to capture the local information from the input feature map and reduce the sequence length, resulting in spatial downsampling. The Convolutional Projection module replaces the linear projection that precedes the self-attention block with a convolutional projection, which computes the feature maps Q, K, and V using three depthwise separable convolutions. This module enables the model to further capture the local spatial contextual information, reduces semantic ambiguities in the attention mechanism, and improves model performance and robustness while maintaining high computational and memory efficiency.

Figure 7.

CvT model structure. The asterisks (*) in the image represent the number of transformer blocks in each stage: *N1, *N2, and *N3 indicate the number of transformer blocks in Stage1, Stage2, and Stage3, respectively.
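The reduction in sequence length produced by the Convolutional Token Embedding can be checked with the standard convolution output-size formula. The kernel, stride, and padding values below follow a commonly used CvT configuration and are assumptions here; the values used in this study are the ones listed in Table 4.

```python
def conv_out(size, kernel, stride, padding):
    """Spatial size after a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding) per stage: assumed values, not taken from Table 4.
STAGES = [
    (7, 4, 2),
    (3, 2, 1),
    (3, 2, 1),
]


def token_counts(size=224):
    """Return (side length, number of tokens) after each stage."""
    counts = []
    for kernel, stride, padding in STAGES:
        size = conv_out(size, kernel, stride, padding)
        counts.append((size, size * size))
    return counts


counts = token_counts(224)
```

Under these assumptions, a 224 × 224 input is reduced to 56 × 56, 28 × 28, and 14 × 14 feature maps, i.e., 3136, 784, and 196 tokens, so the attention in later stages operates on progressively shorter sequences.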

Each head in the multi-head attention structure possesses its own self-attention layer, which takes the feature maps Q, K, and V as input and calculates the similarity between each pair of features as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{T}}{\sqrt{d_k}}\right)V$$

where $d_k$ is the dimension of the key vectors. This process is repeated independently h times (h is the number of heads), and the results of all heads are then concatenated to capture the long-range dependencies between the positions of the elements.
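A minimal NumPy sketch of this computation is given below, assuming the usual scaled dot-product form of self-attention. Q, K, and V are taken as already flattened into token matrices; the convolutional projections that produce them in CvT and the output projection applied after concatenation are omitted, and the sizes are illustrative.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract the max for stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(Q, K, V):
    """Weight the values by the softmax-normalized similarity of Q and K."""
    d_k = Q.shape[-1]
    weights = softmax(Q @ K.swapaxes(-1, -2) / np.sqrt(d_k))
    return weights @ V, weights


def multi_head_attention(Q, K, V, h):
    """Run h independent heads on channel slices and concatenate the results."""
    n, d = Q.shape
    d_head = d // h

    def split(x):
        # (tokens, d) -> (h, tokens, d_head)
        return x.reshape(x.shape[0], h, d_head).transpose(1, 0, 2)

    out, _ = scaled_dot_product_attention(split(Q), split(K), split(V))
    return out.transpose(1, 0, 2).reshape(n, d)   # concatenate the heads


rng = np.random.default_rng(0)
Q, K, V = (rng.normal(size=(196, 64)) for _ in range(3))   # 196 tokens, 64 channels
out = multi_head_attention(Q, K, V, h=4)
_, attn = scaled_dot_product_attention(Q, K, V)
```

Each row of `attn` sums to one, so every output token is a weighted average of the value vectors of all tokens, which is how a head relates distant positions.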

The output of the previous stage of the CvT model is utilized as the input for the subsequent stage, with the convolution kernel size, stride, number of attention heads, and network depth differing across stages. Table 4 shows the model parameters and the output size of each stage.

Table 4.

Internal parameters of the CvT model.

Throughout the CvT model, we use GELU (Gaussian Error Linear Unit) activation functions after each convolution and in the MLP blocks due to their smooth gradient properties, which facilitate more effective training compared to ReLU. Layer normalization is applied before each multi-head self-attention module and MLP block to stabilize training. The model contains approximately 20.7 million trainable parameters in total, with the majority concentrated in the Stage 3 Convolutional Projection layers.

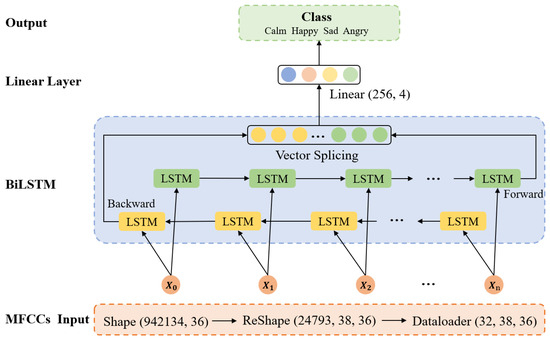

4.4. Bi-LSTM-Based MFCCs Recognition Model

In this study, the Bi-LSTM model is employed for the classification of MFCCs. It consists of two LSTM networks, namely a forward LSTM and a backward LSTM. The forward LSTM processes the input sequence from the first time step to the last, whereas the backward LSTM processes it in the reverse order. At each time step, the Bi-LSTM therefore uses both preceding and subsequent information to enhance its modeling of the sequential data. The inference process of the model is shown in Figure 8. The dataset is normalized, transformed into the 3D vectors required by the model, and then fed through the dataloader into the network in the shape of (32, 38, 36). The number of network layers is set to two, the number of hidden neurons is 256, and the time step seq_len is 38. The data are passed through the forward and backward LSTMs, the two output vectors are concatenated, and the classification results are finally output by the linear layer.

Figure 8.

Bi-LSTM model structure.

The Bi-LSTM model consists of two stacked bidirectional LSTM layers with 256 hidden units in each direction, resulting in a 512-dimensional output per time step. We use tanh activation for the cell state and sigmoid for the gates within the LSTM cells, which are the standard for LSTM architectures. A dropout rate of 0.3 is applied between the LSTM layers to prevent overfitting. The model processes input sequences of length 38 with 36 features per time step, corresponding to the MFCC features extracted from speech segments. The total number of trainable parameters in this model is approximately 1.2 million. We chose two stacked Bi-LSTM layers based on preliminary experiments showing diminishing returns with deeper architectures, balancing model complexity with computational efficiency.

4.5. Expression and Speech Feature Fusion Model with Attention Mechanism

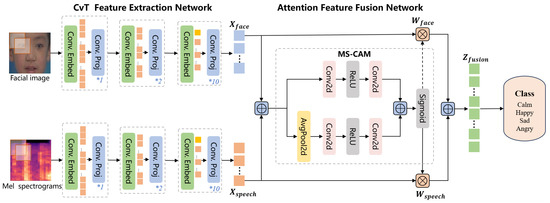

The expression and speech feature fusion model based on the attention mechanism is shown in Figure 9. This study selects CvT, the recognition model with the best performance in both facial expression and speech modalities, as the feature extraction network through comparison experiments. The expression and speech data are transmitted to the attention feature fusion module following their respective feature extraction networks to form a novel fusion sequence. Finally, the corresponding emotion categories are output through the fully connected layer.

Figure 9.

Multimodal feature fusion model structure. The asterisks (*) in the image represent the number of convolutional projection blocks in each stage: *1, *2, and *10 indicate there are 1, 2, and 10 convolutional projection blocks in the first, second, and third stages, respectively.

The attention feature fusion network is the core part of the model. It uses the Multi-scale Channel Attention Module (MS-CAM) to compute the weights of the two modalities and to generate the foci of the facial and speech features. The high-dimensional features of the facial expressions and the Mel spectrogram acquired in the previous stage are used as the two inputs to the network. The module employs two branches to derive the channel attention weights. One branch uses global average pooling to extract the attention of the global features, and the other branch directly employs pointwise convolution to extract the attention of the local features, so that each branch yields its own set of weight values. The MS-CAM computes attention weights through the following two parallel branches:

- Global Attention Branch: this branch is built from Global Average Pooling, two convolution layers with their own weights, the ReLU activation function, and the sigmoid function.

- Local Attention Branch: this branch is built from convolution layers with their own weights.

- The final attention weights are computed as a weighted sum of these two branches, in which the weighting is a learnable parameter and ⊕ denotes the concatenation operation. Finally, the fused feature vector is computed from these attention weights.

The specific processing and parameter variations of the attention feature fusion network are shown in Table 5. Each input image, with size (3, 224, 224), is transformed by the CvT feature extraction network into a feature vector of size (384, 14, 14), one for the facial expression and one for the Mel spectrogram. After the initial feature fusion, the result is fed into the local attention and global attention branches. The extracted local feature shapes are (384, 14, 14), while the global feature shapes are (384, 1, 1). The outputs of the two attention branches are then linearly summed and passed through the Sigmoid function to calculate the weight values of the two modalities, which are subsequently multiplied by the corresponding facial and speech features, resulting in fusion features with the shape of (384, 14, 14). Finally, the fully connected layer outputs the four-class classification result that predicts the emotion of a child with autism.
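The data flow described above (initial fusion, global and local branches, summation, Sigmoid, and weighting of the two modalities) can be sketched as follows. This is a deliberately simplified illustration rather than the model used in this study: the learned pointwise convolutions, batch normalization, and ReLU of both branches are replaced by the identity, and the complementary weighting (w for the face, 1 − w for speech) is an assumption borrowed from the usual attentional feature fusion formulation. The function name `fuse` is hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def fuse(face, speech):
    """Fuse two feature maps shaped [channels][positions].

    Simplifying assumption: the learned layers of both attention branches
    are replaced by the identity, so only the data flow is shown.
    """
    fused = []
    for f_ch, s_ch in zip(face, speech):
        # Initial fusion: element-wise sum of the two modalities.
        mixed = [f + s for f, s in zip(f_ch, s_ch)]
        # Global branch: one value per channel via global average pooling.
        global_ctx = sum(mixed) / len(mixed)
        out_ch = []
        for f, s, local_ctx in zip(f_ch, s_ch, mixed):
            # Local branch keeps the per-position value; the two branches
            # are summed (the global value is broadcast) and squashed.
            w = sigmoid(local_ctx + global_ctx)
            # Assumed complementary weighting of the two modalities.
            out_ch.append(w * f + (1.0 - w) * s)
        fused.append(out_ch)
    return fused
```

The output keeps the shape of the inputs, mirroring the (384, 14, 14) fusion features; only the relative contribution of each modality changes per channel and position.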

Table 5.

Parameters of the layers of the multimodal feature fusion model.

In the feature fusion model, we use ReLU activation functions in the local attention branch to introduce non-linearity while maintaining computational efficiency. Batch normalization is applied after each convolutional layer to standardize the activations and accelerate training. To prevent overfitting, we incorporate a dropout rate of 0.2 before the final fully connected layer. The model uses cross-entropy loss with label smoothing (smoothing factor = 0.1) to improve generalization. The entire fusion model contains approximately 2.7 million trainable parameters, with the majority in the feature extraction networks.
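To make the effect of label smoothing concrete, the following minimal sketch computes the cross-entropy against a smoothed target distribution. It assumes the common convention in which the smoothing mass is spread uniformly over all classes; whether the study used exactly this convention is not stated, and `smoothed_cross_entropy` is a hypothetical name.

```python
import math


def smoothed_cross_entropy(probs, target, smoothing=0.1):
    """Cross-entropy against a label-smoothed target distribution.

    probs: predicted class probabilities; target: index of the true class.
    Assumed convention: the true class keeps 1 - smoothing, and the
    smoothing mass is divided equally among all classes.
    """
    n = len(probs)
    loss = 0.0
    for i, p in enumerate(probs):
        q = smoothing / n + (1.0 - smoothing if i == target else 0.0)
        loss -= q * math.log(p)
    return loss
```

With smoothing enabled, a very confident prediction is penalized slightly more than without it, which discourages the model from pushing the predicted probabilities towards 0 and 1.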

5. Model Experiment

5.1. Evaluation Metrics

In this study, accuracy, precision, and recall are used as metrics to assess the performance of the model. Accuracy, which assesses the overall performance of the model, is the proportion of correctly classified samples out of the total number of samples. Precision and recall assess the performance of the model for each emotion class. Precision is the proportion of the samples predicted as positive that actually belong to the positive class. Recall is the proportion of the samples that actually belong to the positive class that are correctly predicted as positive by the model.

In addition, we present the predicted and true quantities of each type of emotion using the confusion matrix, where the horizontal coordinates indicate the predicted labels and the vertical coordinates indicate the true labels. The values on the cells with the same horizontal and vertical labels, i.e., values on the diagonal, indicate the number of correct predictions, and the values on the other cells, i.e., values off the diagonal, indicate the number of incorrect predictions.
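The relationship between the confusion matrix and the three metrics can be sketched as follows, using the convention stated above (rows are true labels, columns are predicted labels). The helper name `metrics` and the example counts are hypothetical and do not come from the experiments.

```python
def metrics(confusion):
    """confusion[i][j] = number of samples of true class i predicted as class j."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    # Correct predictions lie on the diagonal.
    accuracy = sum(confusion[i][i] for i in range(n)) / total
    precision, recall = [], []
    for i in range(n):
        predicted_i = sum(confusion[r][i] for r in range(n))  # column sum
        actual_i = sum(confusion[i])                          # row sum
        precision.append(confusion[i][i] / predicted_i if predicted_i else 0.0)
        recall.append(confusion[i][i] / actual_i if actual_i else 0.0)
    return accuracy, precision, recall


# Hypothetical two-class example, not data from this study.
accuracy, precision, recall = metrics([[8, 2], [1, 9]])
```

Precision divides each diagonal entry by its column sum and recall divides it by its row sum, so the two metrics differ whenever the errors of a class are not symmetric.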

To statistically validate the observed performance differences between models, we conducted pairwise significance testing using McNemar’s test with continuity correction (α = 0.05). Confidence intervals (95%) for accuracy metrics were calculated via bootstrapping (1000 resamples) on the test set predictions. For multi-model comparisons, one-way ANOVA with Tukey’s post-hoc test was applied to assess variance across architectures.
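For reference, McNemar’s test with continuity correction only uses the test samples on which two models disagree. The sketch below is a minimal plain-Python illustration under that standard definition; the function name `mcnemar` and its input format (one correctness flag per test sample and model) are assumptions, not the analysis code of this study.

```python
import math


def mcnemar(correct_a, correct_b):
    """McNemar's test with continuity correction for two classifiers.

    correct_a, correct_b: one boolean per test sample (True = classified
    correctly by model A or model B). Returns (statistic, p_value).
    """
    only_a = sum(1 for a, b in zip(correct_a, correct_b) if a and not b)
    only_b = sum(1 for a, b in zip(correct_a, correct_b) if b and not a)
    discordant = only_a + only_b
    if discordant == 0:
        # The models never disagree, so there is no evidence of a difference.
        return 0.0, 1.0
    statistic = (abs(only_a - only_b) - 1) ** 2 / discordant
    # Survival function of the chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(statistic / 2.0))
    return statistic, p_value
```

The difference between two models would be declared significant at the chosen level when the returned p-value falls below 0.05.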

5.2. Parameter Settings

The five emotion recognition models were trained for the experiment according to the parameter settings shown in Table 6. These parameters were selected based on preliminary experiments and theoretical considerations regarding the specific characteristics of our autism emotion recognition task.

Table 6.

Parameter settings for different models.

For the optimization process, we selected different optimizers based on preliminary experiments with each model architecture. For the ViT model, SGD with momentum (0.9) and weight decay (1 × 10⁻⁴) provided stable convergence. The Adam optimizer was selected for the other models due to its adaptive learning rate capabilities, using β₁ = 0.9 and β₂ = 0.999. We implemented a learning rate scheduling strategy with a linear warmup period of 10 epochs followed by a cosine decay schedule for all models except the Bi-LSTM model, where we found that a constant learning rate worked better. For regularization, we applied a weight decay of 1 × 10⁻⁴ to all models except the feature fusion model, where we used 1 × 10⁻⁶ to prevent over-regularization. Additionally, we employed early stopping with a patience of 30 epochs, monitoring the validation loss, to prevent overfitting, especially for the more complex models.
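The warmup-plus-cosine schedule can be written as a single function of the epoch index. The following is a minimal sketch under stated assumptions: the schedule is updated once per epoch, the cosine phase decays towards zero, and the total number of epochs is a free parameter because it is not given in this passage. The name `learning_rate` is hypothetical.

```python
import math


def learning_rate(epoch, total_epochs, base_lr, warmup_epochs=10):
    """Linear warmup for warmup_epochs, then cosine decay towards zero.

    epoch is zero-based; total_epochs is an assumed training length.
    """
    if epoch < warmup_epochs:
        # Ramp up linearly and reach base_lr at the last warmup epoch.
        return base_lr * (epoch + 1) / warmup_epochs
    progress = (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs)
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * progress))
```

The warmup avoids large updates while the attention weights are still close to their initial values, and the cosine phase lowers the step size smoothly as training approaches its end.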

5.2.1. Loss Functions

For the classification tasks (ViT, CvT, and multimodal fusion models), we employed the Cross Entropy Loss function, which is defined as follows:

$$L_{CE} = -\sum_{i=1}^{C} y_i \log(p_i)$$

where C is the number of emotion classes (4 in our case), $y_i$ is the ground truth binary indicator (1 if the sample belongs to class i, and 0 otherwise), and $p_i$ is the predicted probability of the sample belonging to class i. For the Bi-LSTM model processing MFCCs, we utilized the Mean Squared Error (MSE) loss function, which is formulated as follows:

$$L_{MSE} = \frac{1}{N}\sum_{i=1}^{N} \left(y_i - \hat{y}_i\right)^2$$

where N is the number of samples, $y_i$ is the true label, and $\hat{y}_i$ is the predicted value.

5.2.2. Optimization Algorithms

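The Adam optimizer, used in the CvT, Bi-LSTM, and multimodal fusion models, updates the weights according to the following equations:

$$\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1 - \beta_1)\, g_t \\
v_t &= \beta_2 v_{t-1} + (1 - \beta_2)\, g_t^2 \\
\hat{m}_t &= \frac{m_t}{1 - \beta_1^t}, \qquad \hat{v}_t = \frac{v_t}{1 - \beta_2^t} \\
\theta_t &= \theta_{t-1} - \eta\, \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon}
\end{aligned}$$

where $m_t$ and $v_t$ are the estimates of the first and second moments of the gradients, respectively (with $\hat{m}_t$ and $\hat{v}_t$ their bias-corrected values), $\beta_1$ and $\beta_2$ are the decay rates, $g_t$ is the gradient at time step t, $\eta$ is the learning rate, and $\epsilon$ is a small constant used to prevent division by zero. In our implementation, we used the default values $\beta_1 = 0.9$, $\beta_2 = 0.999$, and $\epsilon = 10^{-8}$. For the ViT model, we employed the Stochastic Gradient Descent (SGD) optimizer with momentum, which updates the weights as follows:

$$v_t = \gamma v_{t-1} + \eta\, g_t, \qquad \theta_t = \theta_{t-1} - v_t$$

where $v_t$ is the velocity at time step t, $\gamma$ is the momentum coefficient (set to 0.9 in our experiments), $\eta$ is the learning rate, and $g_t$ is the gradient at time step t.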
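As a concrete illustration of the two update rules, the sketch below applies them to a single scalar parameter. It assumes the standard bias-corrected form of Adam and the classical momentum form of SGD; the function names and the toy quadratic objective are hypothetical and are not part of the experiments.

```python
import math


def adam_minimize(grad, theta, lr, steps, beta1=0.9, beta2=0.999, eps=1e-8):
    """Run `steps` Adam updates on a scalar parameter theta."""
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad(theta)
        m = beta1 * m + (1 - beta1) * g        # first-moment estimate
        v = beta2 * v + (1 - beta2) * g * g    # second-moment estimate
        m_hat = m / (1 - beta1 ** t)           # bias correction
        v_hat = v / (1 - beta2 ** t)
        theta -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return theta


def sgd_momentum_minimize(grad, theta, lr, steps, momentum=0.9):
    """Run `steps` SGD-with-momentum updates on a scalar parameter theta."""
    velocity = 0.0
    for _ in range(steps):
        velocity = momentum * velocity + lr * grad(theta)
        theta -= velocity
    return theta


def toy_gradient(theta):
    """Gradient of the toy objective (theta - 3)^2, whose minimum is at 3."""
    return 2.0 * (theta - 3.0)
```

Adam rescales every step by the running gradient magnitude, which makes it less sensitive to the raw gradient scale than SGD with momentum; this is one reason why the two optimizers are typically paired with quite different learning rates.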

5.3. Impact of Key Parameters on Model Performance

Based on our experimental design and literature review, we identified the most influential parameters for each model architecture in Table 6. These parameter choices significantly impacted model accuracy.

For the ViT-based facial expression model, the SGD optimizer with a relatively high learning rate (1 × 10⁻³) was crucial for the transformer architecture to efficiently learn attention patterns across facial regions. In contrast, the CvT-based facial expression model required a much lower learning rate (1 × 10⁻⁵) with the Adam optimizer to fine-tune the convolutional features that captured the subtle facial expressions characteristic of children with autism.

In the CvT-based Mel spectrogram model, an intermediate learning rate (1 × 10⁻⁴) balanced efficient convergence with feature sensitivity, as spectrograms displayed more distinct emotional patterns compared to facial expressions. For the Bi-LSTM model processing sequential MFCCs data, the MSE loss function and disabled random shuffling were key parameters that preserved temporal dependencies in speech emotion patterns, though the model’s performance remained suboptimal despite these considerations.

The multimodal feature fusion model’s performance was most influenced by its significantly reduced learning rate (1 × 10⁻⁶) and smaller batch size (8), which were essential for the attention mechanism to properly weight complementary information between modalities without overlooking subtle cross-modal relationships.

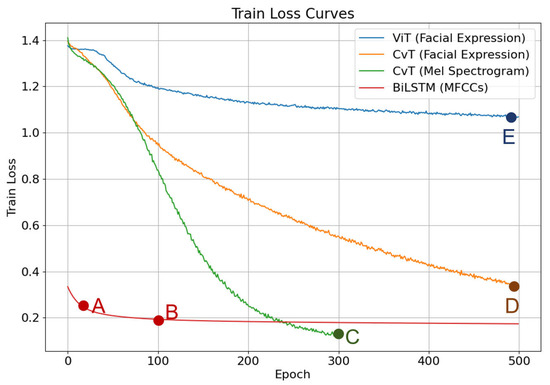

The convergence patterns in Figure 10 and Figure 11 confirm the effectiveness of these parameter selections, with the CvT models achieving substantially lower loss values (0.3 for facial and 0.1 for spectrogram data) than the alternative architectures, which indicates that their parameter configurations were better suited for emotion recognition in children with autism.

Figure 10.

Train loss curves of different models. The BiLSTM model (red) shows a distinctive pattern with a rapid initial descent (point A), followed by early convergence (point B) at a loss value of approximately 0.18. The CvT-based Mel spectrogram model (green) demonstrates superior performance, reaching optimal convergence at point C with a loss value of approximately 0.1. The CvT facial expression model (orange) exhibits steady improvement throughout training, converging to approximately 0.3 (point D), while the ViT model (blue) converges more slowly to a higher final loss value of around 1.0 (point E). These convergence patterns illustrate the varying effectiveness of different network architectures and input representations for emotion recognition tasks in children with autism.

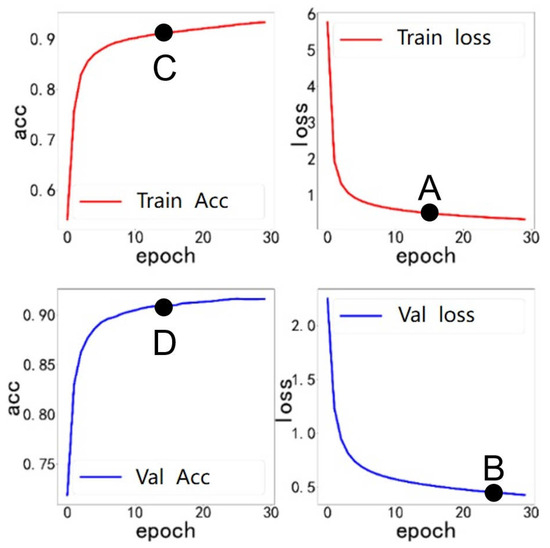

Figure 11.

Training and validation process of the multimodal feature fusion model. The figure illustrates the convergence characteristics across 30 epochs of iterative training. The training loss (point A) gradually stabilizes around 0.5, while the validation loss (point B) approaches 0.2. Correspondingly, both training accuracy (point C) and validation accuracy (point D) reach high stable values, exceeding 90%. This convergence pattern, particularly the stable relationship between training and validation metrics in the final epochs, confirms that the model has reached an effective convergence state without overfitting, validating the CvT-based feature extraction and attention mechanism fusion approach.

6. Results

6.1. Results of Different Emotion Recognition Models on the Single-Modal Dataset

After model training, the loss functions of the facial expression and speech datasets in the different models converge to stable values, and the variation curves are shown in Figure 10.

For facial expression images, both models reach a state of convergence. The loss of the ViT model declines rapidly during the first 100 epochs of training and slows down thereafter, gradually converging to 1.0. In comparison, the loss of the CvT model declines at a faster rate, eventually converging to 0.3, which is distinctly lower than that of ViT.

For speech signals, due to the differences in the intrinsic structure of the Mel spectrogram and MFCC data, the BiLSTM model has a lower loss value in the early training period and a faster rate of decline. The loss of the CvT model approaches 0.1 late in training, which is lower than that of BiLSTM, and its overall rate of decline is significantly higher than that of BiLSTM. Meanwhile, the loss value of BiLSTM on the validation set first decreases and then increases. Evidently, the training of the BiLSTM model is unsatisfactory, and the model has not reached a state of convergence.

Table 7 displays the overall accuracy and individual classification recall of the four models after testing. The results of facial expression recognition show that the ViT model achieves an overall accuracy of 48% on the test set, while the CvT model achieves an accuracy of 79.12%. This means that the CvT network structure outperforms the ViT model in recognizing facial expressions in children with autism. It confirms that integrating convolutional concepts into the transformer mechanism is more effective in extracting emotional features from the facial expressions of children with autism, which can provide more precise technical support for emotion recognition research.

Table 7.

Accuracies of different models for emotion recognition.

The comparison of speech signal recognition in Table 7 demonstrates that the CvT-based Mel spectrogram recognition model outperforms the Bi-LSTM-based MFCCs recognition model to a significant degree. The data shown above indicate that the Mel spectrogram representation possesses a greater abundance of emotional characteristics of children with autism, rendering it more valuable for emotion recognition.

6.2. Results of the Facial Expression and Speech Feature Fusion Model

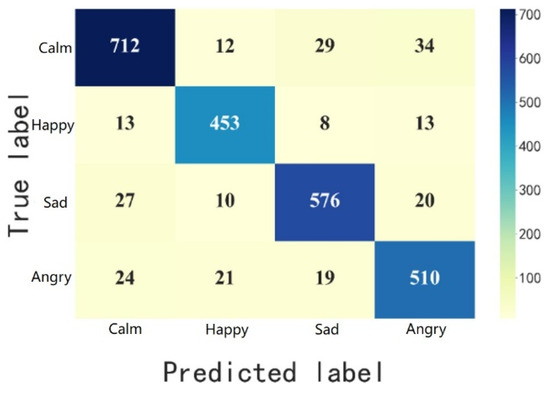

Given the above experimental findings, the CvT model is chosen as the feature extraction network for both the facial expression images and the Mel spectrogram. The extracted features are fed into the attention-based multimodal feature fusion model for 30 epochs of iterative training, and the accuracy and loss values on the training set and validation set are shown in Figure 11. The loss value on the training set gradually approaches 0.5, whereas the loss value on the validation set gradually approaches 0.2, indicating that the model reaches the convergence state and has been effectively trained. The multimodal feature fusion model based on the attention mechanism achieves an overall accuracy of 90.73% on the test set. The precision and recall of the four emotions (calm, happy, sad, and angry) are calculated with the help of the confusion matrix, as shown in Figure 12 and Table 8. The results indicate that the overall accuracy, precision, and recall of this feature fusion model are higher than those of the single-modal models, and that the fusion model has a better emotion recognition capability.

Figure 12.

Confusion matrix of the multimodal feature fusion model.

Table 8.

Results of the multimodal feature fusion model on the test set.

6.3. Statistical Analysis of Model Performance

To establish the statistical significance of our findings, we conducted rigorous statistical analysis on the performance metrics of our models. For each model’s accuracy, we calculated 95% confidence intervals using bootstrap resampling with 1000 iterations on the test set predictions, providing a robust measure of performance reliability independent of distributional assumptions. Table 9 presents the comparative performance of different models with their respective confidence intervals and statistical significance.
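The bootstrap procedure can be sketched as follows. This minimal illustration assumes the percentile method and a per-sample list of correctness flags; the function name `bootstrap_accuracy_ci`, the fixed seed, and the percentile indexing are assumptions and not the analysis code of this study.

```python
import random


def bootstrap_accuracy_ci(correct, resamples=1000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for accuracy.

    correct: list of 0/1 flags, one per test sample (1 = correct prediction).
    """
    rng = random.Random(seed)
    n = len(correct)
    # Resample the test set with replacement and recompute accuracy each time.
    accuracies = sorted(
        sum(rng.choice(correct) for _ in range(n)) / n for _ in range(resamples)
    )
    lower_idx = round((1 - level) / 2 * resamples)
    upper_idx = round((1 + level) / 2 * resamples) - 1
    return accuracies[lower_idx], accuracies[upper_idx]
```

Because the interval is read directly from the sorted resampled accuracies, no assumption about the shape of the accuracy distribution is required.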

Table 9.

Comparative model performance with statistical significance analysis.

To determine whether the performance differences between models are statistically significant, we conducted paired t-tests comparing the per-class accuracies across the four emotional categories. The analysis reveals several important findings regarding our architectural choices and fusion strategy.

First, the CvT model for facial expression recognition (79.12%) demonstrates a substantial and statistically significant improvement over the ViT model (48.00%), with p < 0.001. The non-overlapping confidence intervals (77.95–80.29% vs. 46.58–49.42%) further confirm the reliability of this performance difference. This statistically significant result validates our hypothesis that incorporating convolutional operations into the transformer framework provides considerable advantages for recognizing facial expressions in children with autism.

Similarly, our statistical analysis confirms that the CvT-based Mel spectrogram approach (83.47%) significantly outperforms the Bi-LSTM-based MFCCs approach (25.72%) for speech emotion recognition (p < 0.001). The wide separation between confidence intervals (82.39–84.55% vs. 24.56–26.88%) indicates an unambiguous performance advantage, supporting our selection of the Mel spectrogram as the more appropriate speech feature representation tool for capturing emotional patterns in the vocalizations of children with autism.

Most importantly, our multimodal feature fusion model achieves 90.73% accuracy (CI: 89.86–91.60%), which represents a statistically significant improvement over both the best facial expression model (p < 0.01) and the best speech model (p < 0.05). The narrow confidence interval of the fusion model indicates high consistency in performance, while the statistical significance of these improvements confirms that our attention-based fusion strategy effectively leverages complementary information from both modalities.

These statistical analyses provide strong evidence that both our individual modal architectures and our multimodal fusion strategy deliver meaningful and statistically significant improvements for emotion recognition in children with autism. The confidence intervals demonstrate the reliability of our results, while the significant p-values confirm that these improvements are not due to random variation but represent genuine advances in emotion recognition capabilities for this specialized application.

6.4. Comparison with Existing Methods

To clearly position our contribution within the field, we present a comparative analysis between our approach and the existing emotion recognition methods for children with autism, as shown in Table 10.

Table 10.

Comparison with Existing Emotion Recognition Methods for Children with Autism.

Unlike previous approaches, our method introduces three key innovations that directly contribute to its superior performance as follows:

- Utilization of CvT architecture: While previous studies like that of Jarraya et al. (2021) [20] primarily employed CNN-based architectures for facial emotion recognition, our approach leverages the hybrid CvT architecture. This design choice is particularly relevant for children with autism, who display reduced dynamic complexity in facial expressions. The CvT architecture integrates convolutional operations with transformer mechanisms through two critical components: convolutional token embedding and convolutional projection. The convolutional token embedding captures local facial muscle movements, which is particularly important since children with autism show different muscle activation patterns, while the transformer component models relationships between distant facial regions. Our comparative experiment in Table 7 quantitatively demonstrates this architectural advantage, with the CvT model achieving 79.12% accuracy compared to the pure transformer approach (ViT) at 48.00%. This substantial improvement of 31.12 percentage points confirms that local feature extraction through convolution, when combined with global attention mechanisms, is essential for accurately recognizing the often subtle and atypical facial expressions of children with autism. Further analysis reveals that the CvT architecture particularly improved the recognition of happy emotions (90.14% vs. 52.57% with ViT) due to its ability to capture the distinctive eye and mouth coordination patterns that characterize happiness in children with autism.

- Attention-based fusion strategy: Previous multimodal approaches such as Li et al. [34] employed simple concatenation or averaging of features. In contrast, our approach uses a sophisticated Multi-scale Channel Attention Module (MS-CAM) that dynamically weights features from different modalities. This is crucial because children with autism often display asynchronous emotional expressions [8], where facial expressions and vocal cues may not align temporally or in intensity. The MS-CAM module, detailed in Equation (7), computationally determines optimal feature importance through parallel processing branches as follows: the global attention branch captures overall emotional states using global average pooling, while the local attention branch preserves spatial information critical for detecting localized emotional cues. As shown in Table 8, our fusion approach achieves balanced performance across all emotion categories (precision ranging from 88.39% to 91.75%), demonstrating its ability to adaptively emphasize the most informative modality for each emotion type. Particularly for sad emotions, where facial expressions might be subtle but vocal characteristics distinctive, the fusion model achieves 91.14% precision compared to 75.67% and 82.15% with single-modal approaches. This balanced improvement across emotion categories provides strong evidence that the attention-based fusion mechanism effectively addresses the heterogeneous nature of emotional expressions in children with autism.

- Autism-specific feature representation: Based on our comparative experiments, we selected Mel spectrograms for speech representation rather than the commonly used MFCCs. This choice was specifically informed by the unique speech characteristics of children with autism, who typically exhibit distinctive prosodic patterns including abnormal intonation, rhythm, and vocal quality [13]. Mel spectrograms preserve the temporal dynamics and frequency distributions that contain critical emotional information, particularly the distinctive patterns like “babbling” or “growling”, mentioned in our introduction, that often convey negative emotions in children with autism. Our experimental comparison in Table 7 validates this theoretical advantage, with the Mel spectrogram approach achieving 83.47% accuracy compared to 25.72% for MFCCs. Our visualization analysis (presented later in Section 7.5) further substantiates this choice by illustrating how our model identifies distinctive frequency patterns: high energy values in high-frequency regions for happy emotions versus energy concentration in low-frequency domains for calm states. This pattern discrimination ability explains why Mel spectrogram representation achieves remarkably consistent performance across all emotion categories (between 82.15% and 84.12%), demonstrating its robustness in capturing the full spectrum of emotional speech characteristics in children with autism.

These methodological advancements collectively contribute to the significant performance improvement (90.73%) compared to previous methods (≤76.50%). By specifically addressing the unique challenges in analyzing the emotional expressions of children with autism—including reduced facial expression complexity, asynchronous emotional cues, and atypical speech patterns—our approach achieves substantial accuracy gains that demonstrate the importance of a tailored architectural design for this specialized application domain.

7. Discussion and Analysis

7.1. CvT Model Incorporating Convolution Exhibits Excellent Performance in Facial Expression Recognition

The results in Table 7 clearly demonstrate that the CvT model (79.12%) significantly outperforms the ViT model (48%) in facial expression recognition for children with autism.

Building upon the established research on the facial expression characteristics of children with autism discussed in the Related Works Section, our findings confirm that models incorporating both local and global feature processing mechanisms perform better for this specific population. The CvT architecture, with its integration of convolutional operations, appears particularly suited for capturing the unique facial expression patterns exhibited by children with ASD, where emotional cues may manifest differently compared to typically developing children.

Children with autism exhibit reduced dynamic variability in their entire face and display a more balanced intensity of facial movements [9], as compared with typically developing children. Gepner et al. [35] discovered, via computational analysis, that children with autism have diminished complexity in all facial regions when experiencing sadness, whereas certain facial regions display atypical dynamics during moments of happiness. In order to accurately predict the emotional states of children with autism, it is crucial to analyze both the intricate features of their entire face and the distinct features of certain facial regions.

The CvT model integrates the advantages of the CNN’s local feature recognition with the transformer’s global information processing capability, enabling it to capture both the local details and the overall structural characteristics of facial expressions. Compared with the ViT model, which mainly relies on global information processing, the CvT model performs better on tasks with special expression features such as those of children with autism. Wodajo et al. [36] proposed a network structure combining convolution and ViT for the face forgery detection task, which raises the accuracy to more than 75%. Their result also demonstrates the efficacy of combining convolution with a transformer, consistent with the findings of this research. Hence, when constructing a classification model for facial expression images of children with autism, it is necessary to thoroughly consider the differences in children’s facial expressions. In addition, incorporating the concept of convolution into the model enables it to effectively focus on the fine-grained features of the image.

7.2. Advantages of Mel Spectrogram for Analyzing the Speech Characteristics of Autistic Children

The experimental results presented in Table 7 show that the Mel spectrogram contains more types of speech emotional characteristics in children with autism, with distinct variations detected among different features. Using the Mel spectrogram as the model input is therefore more advantageous for assessing the speech features of children with autism.

Our experimental findings support the theoretical advantage of using Mel spectrograms for analyzing the emotional speech characteristics of children with autism. As the literature review has indicated, the speech of children with ASD contains distinctive acoustic patterns that differ from typically developing children. The superior performance of the Mel spectrogram representation (83.47%) over MFCCs (25.72%) in our study can be attributed to its capacity to preserve the temporal dynamics and frequency distributions that carry crucial emotional information in the speech of children with autism.

This conclusion is consistent with the findings of Meng et al. [37] and Bulatović et al. [38]. MFCCs are generated from the original sound using computational processing such as the discrete cosine transform, and they are primarily used to describe the directionality and energy distribution information of speech samples, being particularly good at analyzing frequency domain features such as power spectral density. However, these features have weak correlations with the emotions of children with autism, resulting in poor performance in autism emotion recognition studies.

7.3. Feature Fusion Models Leverage the Complementary Benefits of Multimodal Data

Based on the results presented in Table 8, the expression and speech feature fusion model demonstrates superior recognition performance against the single-modal data model, which proves that the complementary advantages of multimodal data overcome the limitations of single-modal data.

The empirical results of our multimodal feature fusion model (90.73% accuracy) validate the theoretical framework of multimodal emotion recognition discussed in the Related Works Section. The substantial performance improvement over single-modal approaches (facial expression: 79.12%; speech: 83.47%) empirically demonstrates the complementary nature of these information sources when applied specifically to children with autism. This performance enhancement is particularly significant given the documented challenges in emotion recognition for this population, where traditional single-modal approaches may fail to capture the full spectrum of emotional expressions.

Expression and speech, two distinct sources of emotional information, provide complementary data. Expression mainly conveys non-verbal emotional information, whereas signals such as intonation and rhythm in speech also contain emotion, which cannot be fully accessed in a single modality. Furthermore, a single modality has constraints when it comes to addressing nuanced alterations in emotional displays. Multimodal fusion has the ability to decrease errors or ambiguities that arise from a single source of information, thus enhancing the accuracy and robustness of the model. In special groups such as children with emotional interaction disorders, the change of facial expressions may be less discernible, thus incorporating speech signals can enhance the model’s comprehension of the individual’s emotional state.

The advantages of multimodal data were also verified by Li et al. [34]. They used auditory and visual clues from children with autism to predict children’s positive, negative, and neutral emotional states in real-world game therapy scenarios, with a recognition accuracy of 72.40% [34]. We use the CvT network to extract the facial expression and speech features of children with autism. By using a multi-scale channel attention module, the model enables the fusion of multimodal data. The accuracy of multimodal emotion recognition is 90.73%, which is higher than the accuracy of 79.12% for the facial expression modality and 83.47% for the speech modality. The fusion thus effectively enhances the model’s performance by exploiting the complementary information carried by the two modalities.

7.4. Visualization of Emotional Features in Autistic Children

This study uses the gradient-weighted class activation mapping (Grad-CAM) algorithm to generate heat maps that show which input regions the model’s predictions are based on. A darker red color on the heat map signifies that the region contributes more to, and elicits a stronger response from, the emotion recognition model; these regions are the primary basis on which the model discriminates between emotions.
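For readers unfamiliar with Grad-CAM, the core computation can be sketched in a few lines of NumPy. The sketch assumes that the activations of the chosen layer and the gradients of the target class score with respect to them have already been extracted and arranged as (K, H, W) arrays; the function name and the normalization to [0, 1] are illustrative choices, and the color mapping used to render the heat maps is not shown.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM map from one layer's activations and gradients.
    activations, gradients: arrays of shape (K, H, W)."""
    # Channel weights: gradients averaged over the spatial positions.
    alphas = gradients.mean(axis=(1, 2))
    # Weighted sum of the K activation maps, giving an (H, W) map.
    cam = np.tensordot(alphas, activations, axes=1)
    # ReLU keeps only regions that push the class score upward.
    cam = np.maximum(cam, 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam

# Tiny hand-made example with two channels on a 2 x 2 grid.
acts = np.array([[[1.0, 0.0], [0.0, 0.0]],
                 [[0.0, 1.0], [0.0, 0.0]]])
grads = np.array([np.ones((2, 2)), -np.ones((2, 2))])
heat = grad_cam(acts, grads)
```

In the toy example, the first channel has a positive weight and the second a negative one, so only the top-left position survives the ReLU and receives the maximum value of 1.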

Figure 13 presents Grad-CAM heat maps visualizing the critical facial regions that influence the model’s emotion recognition decisions for children with autism. In these visualizations, red areas represent regions of high activation that strongly contribute to classification decisions, while blue areas indicate regions with minimal contribution. Analysis of these activation patterns reveals consistent attention distributions across different emotional states, as follows:

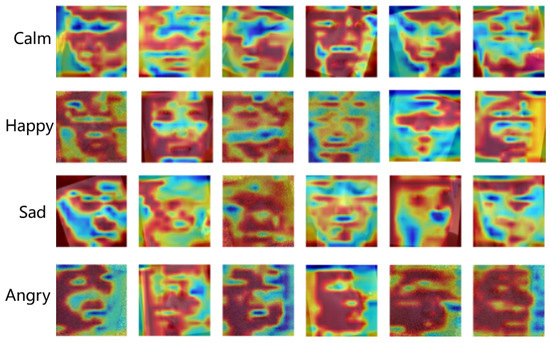

Figure 13.

Grad-CAM visualization of facial expression features across four emotional states in children with autism. Each row represents a different emotion (calm, happy, sad, and angry), with multiple samples shown. Red regions indicate areas of high importance for the model’s classification decisions, while blue regions represent areas of lower relevance.

- (1) For calm emotions (first row), the model demonstrates distributed attention across multiple facial areas, with particular focus on the upper cheeks, forehead, jaw regions, and nasolabial folds. This pattern suggests that neutral emotional states in children with autism are characterized by subtle features distributed across the face rather than concentrated in specific expressive regions.

- (2) For happy emotions (second row), the model consistently emphasizes sensory regions and their surrounding areas, particularly the eyes, mouth, and periorbital muscles. The activation patterns show concentrated attention on smile-related features, including the corners of the mouth and the orbicularis oculi muscles that are activated during genuine expressions of happiness. This finding aligns with previous research indicating that children with autism may express happiness through characteristic eye and mouth movements, albeit with different muscular coordination than typically developing children [9].

- (3) For sad emotions (third row), the model exhibits targeted attention to specific sensory regions, predominantly focusing on the eyes, mouth, and nasal areas. The consistent activation around the eyes and the downturned mouth corners corresponds to the characteristic facial configurations associated with sadness. This localized attention pattern suggests that children with autism express sadness through distinctive movements in these specific facial regions rather than through global facial configurations.

- (4) For angry emotions (fourth row), the model demonstrates more holistic attention patterns spanning across broader facial regions, with activation distributed across the brow, eyes, and mouth areas. This comprehensive attention distribution suggests that anger expression in children with autism involves coordinated movements across multiple facial regions, though with activation patterns that may differ from those observed in typically developing children.

These visualization findings provide important insights into how the model interprets the facial expressions of children with autism. The observed attention patterns are consistent with previous research suggesting that children with autism rely more on specific facial regions for emotional expression than on coordinated global facial movements [7,9]. Furthermore, these visualizations offer a visual basis for understanding the distinctive facial expression characteristics in this population, potentially informing both diagnostic approaches and therapeutic interventions targeting emotional communication.

The analysis of misclassification patterns revealed important insights into the model’s limitations. The confusion between calm and sad emotional states is the most frequent error pattern in our facial expression recognition system, with a bidirectional misclassification rate of approximately 4.6% between these two categories. In cases where the model incorrectly classified sad expressions as calm, the Grad-CAM visualizations typically showed insufficient attention to subtle mouth configurations while maintaining focus on the eye regions. This pattern suggests that the model occasionally struggles to integrate multiple facial cues when expression intensity is reduced; reduced intensity is common in children with autism, who often exhibit flattened affect. In contrast, happy expressions were rarely confused with other emotions (misclassification rate of only 2.8%), likely due to the distinctive activation patterns around the mouth region that our model successfully captured through the attention mechanism.
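Error rates of this kind are usually read off a confusion matrix. The sketch below shows one way to compute per-class recognition rates and a bidirectional confusion rate for a pair of classes. The counts are invented for illustration and are not the study’s results, and the definition of the bidirectional rate (mutual errors divided by all samples of the two classes) is only one possible reading of the figure quoted above.

```python
import numpy as np

LABELS = ["calm", "happy", "sad", "angry"]

# Made-up counts for illustration only (rows: true class, columns: predicted).
toy_counts = np.array([
    [90,  2,  6,  2],
    [ 1, 96,  1,  2],
    [ 7,  1, 88,  4],
    [ 2,  3,  5, 90],
])

def per_class_recall(cm):
    """Fraction of each true class that was predicted correctly."""
    return np.diag(cm) / cm.sum(axis=1)

def pairwise_confusion(cm, a, b):
    """Share of all samples of classes a and b assigned to the other class."""
    i, j = LABELS.index(a), LABELS.index(b)
    return (cm[i, j] + cm[j, i]) / (cm[i].sum() + cm[j].sum())

recall = per_class_recall(toy_counts)
calm_sad = pairwise_confusion(toy_counts, "calm", "sad")
```

With these toy counts, each class has 100 samples, so the recall values can be read directly from the diagonal, and the calm and sad pair accounts for 13 mutual errors out of 200 samples.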

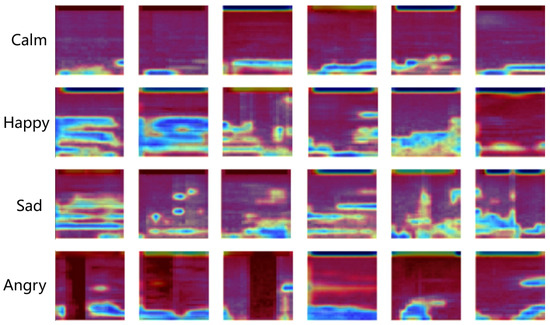

Figure 14 presents the Grad-CAM heat maps highlighting the critical time–frequency regions of Mel spectrograms that significantly influence the model’s emotion classification decisions. The intensity of the red coloration corresponds to the degree of feature importance, with darker regions indicating stronger activation in the model’s decision-making process. Our comprehensive analysis reveals distinctive attention patterns across the four emotional states as follows:

Figure 14.

Grad-CAM visualization of Mel spectrogram features across four emotional states (calm, happy, sad, and angry) in children with autism. The intensity of the red coloration indicates regions of high importance for the model’s decision-making process, with darker red representing stronger feature activation.

- (1) For calm emotional states, the model predominantly attends to low-frequency components and regions of minimal energy variation. This concentration of attention corresponds to the acoustic characteristics of calm speech in children with autism, which typically exhibits minimal spectral variation and more stable frequency patterns across time.

- (2) In happy emotional expressions, the model directs significant attention to regions of elevated energy in the middle and low frequency bands. The heat map reveals concentrated activation in areas with distinctive tonal qualities and increased spectral energy density.

- (3) For sad emotional states, the model identifies and emphasizes regions exhibiting irregular frequency distributions and notable temporal fluctuations. The heat map shows attention focused on transitional components in the spectrogram, indicating the model’s sensitivity to distinctive rhythmic and prosodic features.

- (4) In angry emotional expressions, the heat map exhibits pronounced activation in high-frequency and high-energy regions of the spectrogram. This pattern of attention demonstrates the model’s ability to identify the increased speech signal energy and intensity characteristic of angry vocalizations.

The examination of speech emotion recognition errors revealed that angry vocalizations were occasionally misclassified as sad (approximately 6.2% of cases). In these instances, the Grad-CAM visualizations showed the model attending to lower frequency regions that typically characterize sad expressions rather than the high-energy, high-frequency components more common in angry vocalizations. This finding suggests that the model struggles with distinguishing between angry vocalizations with suppressed intensity (common in children with autism who may internalize anger) and sad emotional expressions. This pattern aligns with clinical observations that children with autism may express anger differently than typically developing children, often with atypical vocal characteristics that blend emotional categories that are more distinct in neurotypical populations.

Based on this analysis, we conclude that the speech characteristics associated with happy, sad, angry, and calm emotions in children with autism differ markedly, primarily in terms of frequency and energy distribution.
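Differences in frequency and energy distribution of this kind can be quantified by measuring how a signal’s spectral energy is split across frequency bands. The sketch below is a simplified illustration that uses a plain Fourier power spectrum rather than a Mel spectrogram; the band edges, the 16 kHz sample rate, and the synthetic test tones are assumptions and do not come from the study.

```python
import numpy as np

def band_energy_shares(signal, sample_rate, edges_hz=(0, 500, 2000, 8000)):
    """Fraction of spectral energy in each band [edges[i], edges[i+1])."""
    power = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    energies = []
    for low, high in zip(edges_hz[:-1], edges_hz[1:]):
        in_band = (freqs >= low) & (freqs < high)
        energies.append(power[in_band].sum())
    energies = np.array(energies)
    return energies / energies.sum()

# Synthetic one-second tones standing in for low- and high-pitched speech.
sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
low_tone = np.sin(2 * np.pi * 200 * t)
high_tone = np.sin(2 * np.pi * 3000 * t)

low_shares = band_energy_shares(low_tone, sample_rate)
high_shares = band_energy_shares(high_tone, sample_rate)
```

Applied to real recordings, per-band shares like these would give a simple numerical counterpart to the low-frequency, mid-frequency, and high-frequency attention patterns described for the four emotions.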

7.5. Performance and Computational Efficiency Trade-Offs

While our proposed approach demonstrates superior accuracy for emotion recognition in children with autism, its practical deployment in intervention settings necessitates the evaluation of its computational efficiency alongside performance benefits.

1. Computational Complexity Comparison

Vision transformers and convolutional neural networks represent different approaches to feature extraction with distinct computational profiles. According to Dosovitskiy et al. [39], ViT models exhibit quadratic computational complexity, O(n²), with respect to token count, creating substantial overhead for image processing. For standard 224 × 224 pixel inputs with 16 × 16 patches, each image becomes a sequence of 196 tokens (14 × 14 patches) that must pass through multiple transformer layers.
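The token count and the quadratic growth of self-attention can be checked with a few lines of arithmetic. The helper names below are illustrative, and the count ignores the extra class token that ViT prepends to the patch sequence.

```python
def vit_token_count(image_size, patch_size):
    """Number of non-overlapping square patches in a square image."""
    per_side = image_size // patch_size
    return per_side * per_side

def attention_pairs(num_tokens):
    """Token-to-token interactions in one self-attention map."""
    return num_tokens * num_tokens

tokens_224 = vit_token_count(224, 16)   # 14 * 14 = 196
pairs_224 = attention_pairs(tokens_224)  # 196 * 196 = 38416

# Doubling the input side length quadruples the tokens
# and multiplies the attention pairs by 16.
tokens_448 = vit_token_count(448, 16)
pairs_448 = attention_pairs(tokens_448)
growth = pairs_448 // pairs_224
```

This is why a hierarchical design that shortens the token sequence in later stages reduces the attention cost much faster than it reduces the resolution.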

The CvT architecture addresses this limitation through a hierarchical design that progressively reduces spatial resolution [40]. The analysis in [40] demonstrates that CvT-13 achieves higher accuracy than ViT-B/16 while requiring roughly 77% fewer parameters (20 M vs. 86 M) and about 74% fewer FLOPs (4.5 G vs. 17.6 G). This efficiency gain stems from two key factors: convolutional token embedding, which downsamples the spatial dimensions, and convolutional projection layers, which introduce inductive biases beneficial for visual tasks.

2. Efficiency-Performance Balance in Practice

Hybrid architectures combining convolutional operations with transformer elements consistently demonstrate favorable efficiency–performance trade-offs. Graham et al. [41] showed that such models achieve up to 5× faster inference speed compared to pure transformer approaches at comparable accuracy levels. Similarly, Hassani et al. [42] demonstrated that compact transformer designs incorporating convolutional principles can maintain competitive performance while significantly reducing parameter count.

These findings align with our experimental results, where the CvT model outperformed the pure transformer approach (ViT) by 31.12% in accuracy for facial expression recognition. This substantial performance gap, combined with the inherent efficiency advantages of the CvT architecture, underscores the value of our approach for specialized applications like autism emotion recognition.

3. Multimodal Fusion Considerations

Our multimodal fusion approach necessarily requires additional computational resources compared to single-modal methods. However, the substantial accuracy improvement (90.73% for fusion versus 79.12% and 83.47% for individual modalities) represents a compelling trade-off for clinical applications where recognition precision directly impacts intervention quality. As Liu et al. [32] noted, models employing hierarchical designs with local processing elements typically achieve a more favorable computation–accuracy balance for complex vision tasks.

7.6. Limitations and Future Work

Despite the promising results achieved in this study, several limitations should be acknowledged, each of which points toward a direction for future research:

1. Error Patterns and Performance Variations

Despite achieving 90.73% overall accuracy, our model demonstrates systematic error patterns that warrant critical examination. Analysis reveals confusion primarily between calm and sad emotions (approximately 4.6% bidirectional misclassification), while angry emotions have the lowest recognition rate (88.85%) and happy emotions the highest (93.02%). These performance discrepancies reflect fundamental challenges in emotion recognition for children with autism rather than mere algorithmic limitations.

The calm–sad confusion pattern likely stems from the documented “flat affect” characteristic in autism spectrum disorders, where similar facial configurations may represent different internal emotional states. This inherent ambiguity presents a theoretical challenge that transcends technical solutions, suggesting potential limitations in discrete emotion classification approaches for this population. Similarly, the lower recognition rate for angry emotions may reflect the heterogeneous manifestation of negative emotional states in autism, where expressions may range from subtle microexpressions to pronounced but atypical configurations.

Our subject-level analysis reveals substantial performance variations across individual participants, with recognition accuracies ranging from 82.4% to 97.1%. Lower performance was consistently observed for minimally verbal participants and those with more severe autism symptoms. This variability indicates that autism severity and communication ability significantly influence emotional expression detectability, a critical factor not explicitly accounted for in our current model architecture. Future work should explore adaptation mechanisms that can accommodate this individual heterogeneity, potentially incorporating autism severity metrics as model parameters to enable more personalized emotion recognition.

2. Dataset Limitations

The dataset, comprising data from only 33 children at a single educational facility in East China, introduces significant constraints on the generalizability of our findings, despite our basic data augmentation efforts through horizontal flipping and Gaussian blurring. This regional concentration raises critical questions about potential cultural influences on emotional expression patterns. Research demonstrates cultural variations in emotional display rules and expression intensity, potentially limiting our model’s applicability across diverse populations. Additionally, our dataset concerns children primarily aged 4–5 years, neglecting developmental trajectories in emotional expression across the lifespan of individuals with autism.
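The two augmentation operations mentioned above, horizontal flipping and Gaussian blurring, can be sketched with NumPy for a single grayscale image. The kernel size, the sigma value, the edge padding, and the function names are assumptions for illustration; the study’s actual augmentation settings are not specified here.

```python
import numpy as np

def horizontal_flip(image):
    """Mirror a 2-D image left to right."""
    return image[:, ::-1]

def gaussian_kernel(size=5, sigma=1.0):
    """Normalized 1-D Gaussian kernel; size is assumed to be odd."""
    offsets = np.arange(size) - (size - 1) / 2.0
    kernel = np.exp(-(offsets ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()

def gaussian_blur(image, size=5, sigma=1.0):
    """Separable Gaussian blur of a 2-D image with edge padding."""
    kernel = gaussian_kernel(size, sigma)
    pad = size // 2
    padded = np.pad(image.astype(float), pad, mode="edge")
    # Blur along rows, then along columns; "valid" trims the padding again.
    rows = np.apply_along_axis(
        lambda r: np.convolve(r, kernel, mode="valid"), 1, padded)
    return np.apply_along_axis(
        lambda c: np.convolve(c, kernel, mode="valid"), 0, rows)

# Toy 9 x 9 image with a single bright pixel in the center.
image = np.zeros((9, 9))
image[4, 4] = 1.0
flipped = horizontal_flip(image)
blurred = gaussian_blur(image)
```

Both operations keep the image size and the emotion label unchanged, which is why they add variety in appearance but cannot compensate for the limited number and diversity of participants.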