Enhancing Security of Online Interfaces: Adversarial Handwritten Arabic CAPTCHA Generation

Abstract

1. Introduction

2. Literature Review

2.1. CAPTCHA Types

2.2. Latin Handwritten CAPTCHAs

2.3. Arabic Handwritten CAPTCHAs

2.4. Adversarial CAPTCHAs

- Chinese Script: Zhang et al. [45] employed multi-target adversarial attacks on Chinese character CAPTCHAs, reducing solver accuracy to 12% while maintaining 88% human accuracy.

- Cross-Script Analysis: Unlike Arabic, Latin and Chinese CAPTCHAs benefit from extensive datasets and standardized fonts. Arabic’s cursive nature, contextual letter forms (e.g., isolated, initial, medial, final), and diacritics introduce unique challenges, making adversarial perturbations more effective against segmentation and recognition models. This work bridges a critical gap by tailoring adversarial techniques to Arabic’s script-specific complexities.

3. Methodology

- Generation of Arabic handwritten words:

- Applying adversarial perturbation:

- We applied five adversarial techniques to the generated samples: EOT, SGTCS, JSMA, IAN, and CTC. Each generated CAPTCHA uses a single perturbation (e.g., JSMA-only, EOT-only). These approaches were selected for their empirical robustness against perturbation removal attacks, as demonstrated by Alsuhibany [49]. Using five approaches provided sufficient data while keeping the scope of the study manageable.

- Evaluation

- We have evaluated the security level of the generated samples via the Google Vision API [50].

- We have evaluated the usability level of the generated samples through an experimental study.

3.1. Arabic Handwritten CAPTCHA

Generation of Arabic Handwritten Words

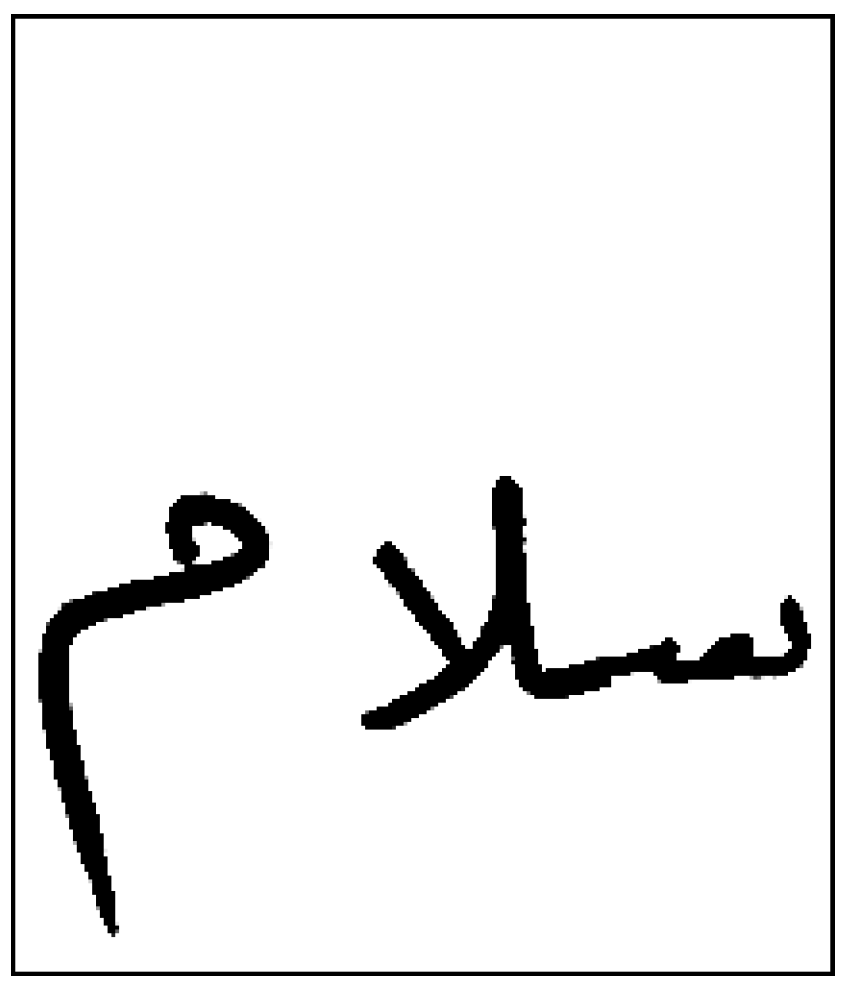

- Generation of Arabic handwritten meaningless words: Alsuhibany and Alquraishi’s [33] model programmatically generates handwritten Arabic words and assembles them into CAPTCHA images. This is done through a series of Hypertext Preprocessor (PHP) scripts. PHP is a general-purpose scripting language that can be used to create dynamic scripts that automate the entire process.

  PHP first selects a random word by combining characters from predefined Arabic letter arrays. The word is converted to its Unicode representation. Individual letter images are retrieved from the Arabic letters MySQL database, which contains over 250 images of letters in various positions and styles.

  The letters are arranged on a blank canvas using coordinates calculated from baseline positioning functions. Random wrinkles are applied and overlapped to make the handwriting appear natural. Joining points between letters are highlighted with colored ellipses. Baseline differences are accumulated to adjust letter placements vertically.

  An example of generated CAPTCHA images of meaningless words is shown in Figure 2.

- Generation of Arabic handwritten meaningful words: To enhance realism and usability, the word generation process is extended. Instead of combining random letters, meaningful Arabic words are used. An external dictionary file containing over 10,000 common words is incorporated into this process. The script is modified to retrieve validated words randomly from this dictionary instead of from the predefined character arrays. Additional validation filters out words containing rare characters unsupported by the letter image database. Punctuation and diacritics are also stripped for simplicity. This improves the linguistic validity and readability of the generated CAPTCHAs for human users: as real words, they are more intuitive to decipher than random strings. An example of generated CAPTCHA images of meaningful words is shown in Figure 3.
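The two word-selection strategies above can be illustrated with a short sketch. The original pipeline is written in PHP and backed by a MySQL letter-image database; the Python below is only an approximation of the selection logic, and the names `SUPPORTED_LETTERS`, `random_meaningless_word`, and `random_meaningful_word` are hypothetical. The 3–9 character length range is taken from the dataset description, and the letter set is an assumed stand-in for whatever the image database actually covers.

```python
import random
import unicodedata

# Assumption: the 28 basic Arabic letters stand in for the set of letters
# that have images in the letter database.
SUPPORTED_LETTERS = list("ابتثجحخدذرزسشصضطظعغفقكلمنهوي")


def random_meaningless_word(rng, min_len=3, max_len=9):
    """Build a random string of supported letters (3-9 characters)."""
    length = rng.randint(min_len, max_len)
    return "".join(rng.choice(SUPPORTED_LETTERS) for _ in range(length))


def strip_diacritics(word):
    """Drop combining marks; Arabic harakat are Unicode category 'Mn'."""
    return "".join(ch for ch in word if unicodedata.category(ch) != "Mn")


def is_supported(word):
    """Accept only non-empty words made entirely of supported letters."""
    return len(word) > 0 and all(ch in SUPPORTED_LETTERS for ch in word)


def random_meaningful_word(rng, dictionary):
    """Pick a random dictionary word after stripping diacritics and filtering."""
    candidates = [strip_diacritics(w) for w in dictionary]
    candidates = [w for w in candidates if is_supported(w)]
    return rng.choice(candidates)
```

In this sketch, a dictionary entry containing any character outside the supported set is silently skipped, which mirrors the validation step described above.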

3.2. Adversarial Techniques Used

- Expectation Over Transformation (EOT).

- Scaled Gaussian Translation with Channel Shifts (SGTCS).

- Jacobian-based Saliency Map Attack (JSMA).

- Immutable Adversarial Noise (IAN).

- Connectionist Temporal Classification (CTC).

- 1. Expectation Over Transformation (EOT): EOT aims to generate small input perturbations that lead a neural network to produce incorrect predictions. With EOT, adversarial perturbations are formulated as the expectation over infinitesimal transformations of the original input, as opposed to other attack methods that directly optimize the network loss or logits. The critical EOT steps are as follows:

  - (a) Identify a distribution of potential small input transformations. Gaussian noise or patch rotations and translations of the input image are common choices.
  - (b) Linearize the neural network function F around the original input x to obtain a Jacobian J that describes how network activations change with small input variations.
  - (c) Determine the expectation of the network predictions over the transformation distribution, approximated in practice from a finite number of samples n.
  - (d) Change the original input in a way that increases the expectation for an alternative target class while decreasing the expectation for the true class y. This yields an adversarial perturbation.
  - (e) Add the perturbation to the original input to produce the adversarial example. As a result of this process, the network should confidently and incorrectly predict the target class.

We show the design of EOT in Algorithm 1.

- Rationale for Selection. EOT was chosen for three reasons:
  - (a) Robustness to Preprocessing: CAPTCHAs often apply distortions to thwart attacks; EOT’s perturbations account for these transformations.
  - (b) Real-World Relevance: unlike attacks assuming static inputs, EOT mimics adversarial examples surviving sensor noise or rendering artifacts.
  - (c) Transferability: by optimizing over a distribution of transformations, EOT-generated attacks generalize better to black-box models.

- Contribution to CAPTCHA Security: EOT exposes vulnerabilities in CAPTCHAs that rely on deterministic preprocessing (e.g., fixed noise patterns). By showing that adversarial examples can persist through randomized transformations, our work argues for non-differentiable CAPTCHA augmentation (e.g., non-grid warping) to break gradient-based attacks.

- Experimental Parameters: For reproducibility, we configured EOT with the following:
  - Transformation Distribution: Gaussian noise (σ = 0.1), rotations (±15°), and translations (±5%).
  - Perturbation Budget: norm ≤ 0.05 (normalized pixel range).
  - Optimization: 200 iterations with a step size of 0.01.
  - Expectation Approximation: 50 samples per iteration.

Algorithm 1 EOT Adversarial Generation.

- Input: original image x; transformation distribution; target class; number of samples n.
- Identify the transformation distribution: specify a distribution over small transformations.
- Linearize F around x: compute the Jacobian J expressing the change in F with respect to the inputs.
- Determine the expectation:
  - for i = 1 to n do
    - Sample noise from the transformation distribution.
    - Perturb the image with the sampled noise.
    - Optimize the expectation for the target class over the true class y.
  - end for
- Return the adversarial image.
- Output: adversarial image.
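As an illustration of the expectation step, the following is a minimal sketch of an EOT-style attack on a toy linear-softmax classifier. It is not the authors' implementation: the model `W`, the signed-gradient update, the reading of the perturbation budget as a per-pixel (max-norm) bound, and all function names are assumptions made for the example, and only additive Gaussian noise is used as the transformation (rotations and translations are omitted). The default values follow the experimental parameters listed above.

```python
import math
import random


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def predict(W, x):
    """Class probabilities of a toy linear model F(x) = softmax(W x)."""
    return softmax([sum(w * v for w, v in zip(row, x)) for row in W])


def grad_log_prob(W, x, target):
    """Gradient of log p_target(x) with respect to x for the toy model."""
    p = predict(W, x)
    return [W[target][j] - sum(p[k] * W[k][j] for k in range(len(W)))
            for j in range(len(x))]


def eot_attack(W, x, target, sigma=0.1, budget=0.05, steps=200, lr=0.01,
               n_samples=50, seed=0):
    """Raise the expected target-class log-probability under random noise."""
    rng = random.Random(seed)
    delta = [0.0] * len(x)
    for _ in range(steps):
        avg = [0.0] * len(x)
        for _ in range(n_samples):
            # One sampled transformation: additive Gaussian noise.
            xt = [xi + di + rng.gauss(0.0, sigma) for xi, di in zip(x, delta)]
            g = grad_log_prob(W, xt, target)
            avg = [a + gi / n_samples for a, gi in zip(avg, g)]
        # Signed ascent step on the averaged gradient (assumed update rule),
        # then projection back onto the per-pixel budget.
        step = [lr * ((a > 0) - (a < 0)) for a in avg]
        delta = [max(-budget, min(budget, d + s)) for d, s in zip(delta, step)]
    return [xi + di for xi, di in zip(x, delta)]
```

Averaging the gradient over sampled transformations is what distinguishes this from a plain iterative gradient attack: the perturbation is pushed in a direction that helps the target class on average, rather than for one fixed rendering of the image.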

- 2. Scaled Gaussian Translation with Channel Shifts (SGTCS): SGTCS is a targeted adversarial perturbation technique that aims to have an input image incorrectly classified as belonging to a particular target class. To create an imperceptible disturbance, it applies scaled Gaussian noise to each channel of the image.

  The attack takes an original, correctly classified image, a target class label, and a pretrained convolutional neural network (CNN) model. It first generates randomly sampled Gaussian noise with zero mean and unit variance for each channel of the image. Next, a small hyperparameter (ε = 0.1) that controls the perturbation’s magnitude scales the noise values, and the scaled noise is added element-wise to each channel of the original image. As a result, each color channel of the perturbed image carries noise that is hard to distinguish visually.

  The perturbed image is then run through the pretrained CNN model and the predictions are observed. The attack succeeds if the model labels the image as belonging to the target class. Otherwise, the noise scaling factor is increased, and the process is repeated until misclassification occurs or a maximum number of iterations is reached. This allows progressively stronger perturbations until the model’s decision boundary is crossed.

  The SGTCS method targets all three dimensions of translation invariance: spatial, color, and instance translations. By adding scaled Gaussian noise individually to each color channel, it breaks the color translation invariance of CNNs. This forces the model to rely more on spatial and instance-based cues for classification. The randomness of the noise additionally helps in attacking spatial invariance.

  Mathematically, let x be the original input image. SGTCS computes the perturbation $\delta = \epsilon N$, where N is a tensor of Gaussian noise with zero mean and unit variance, and $\epsilon$ is a small hyperparameter controlling the perturbation magnitude. The perturbed image is then created as $x' = x + \delta$, where the noise is added element-wise to each color channel of x. The goal is to find the minimum $\epsilon$ that causes the model to misclassify the perturbed image as the target class, so that the perturbation is minimal yet difficult for the model to detect. We show the design of SGTCS in Algorithm 2.

- Rationale for Selection. SGTCS was chosen for three reasons:
  - (a) CAPTCHA-Specific Weaknesses: many CAPTCHAs rely on color/texture invariance for automated solving; SGTCS tests whether minor channel shifts can break this assumption.
  - (b) Controlled Perturbations: unlike untargeted noise attacks, SGTCS iteratively adjusts to find the minimal perceptible perturbation, mimicking real-world adversarial constraints.
  - (c) Diagnostic Value: by isolating failures in color/spatial invariance, SGTCS reveals which CAPTCHA features (e.g., hue consistency, edge alignment) are over-relied on by models.

- Contribution to CAPTCHA Security: SGTCS demonstrates that CAPTCHAs using color-based obfuscation (e.g., overlapping hues, gradient backgrounds) are vulnerable to channel-specific perturbations. Our results advocate for non-invariant CAPTCHA features, such as the following:
  - Non-grid-aligned characters (breaking spatial invariance).
  - High-contrast, non-RGB color spaces (e.g., CMYK patterns, disrupting channel-wise attacks).

- Experimental Parameters:
  - Initial Perturbation: ε = 0.1, increased by 0.05 per iteration.
  - Noise Distribution: N(0,1) sampled independently per channel.
  - Termination Conditions:
    - Maximum iterations: 50
    - Success threshold: (normalized pixel range).
  - Model Constraints: inputs clipped to [0,1] post-perturbation to maintain valid pixel ranges.

Algorithm 2 SGTCS Adversarial Generation.

- Input: original image x; epsilons; step size; number of iterations.
- Initialization: perturbation.
- for i = 1 to number of iterations do
  - Compute gradient.
  - Update perturbation.
  - Clip perturbation.
  - Generate adversarial image.
  - Clip image values.
  - Predict on adversarial image.
- end for
- Output: adversarial image.
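The prose description of SGTCS (per-channel Gaussian noise scaled by an increasing ε until the target class is predicted) can be sketched as follows. This follows the textual description and the listed experimental parameters rather than the gradient-based steps named in Algorithm 2, and it is an illustrative assumption throughout: `classify` is a hypothetical callable standing in for the pretrained CNN, images are represented as plain lists of channels, and fresh noise is drawn at every iteration.

```python
import random


def sgtcs_attack(image, target, classify, eps0=0.1, eps_step=0.05,
                 max_iters=50, seed=0):
    """Search for a noise scale that makes `classify` return `target`.

    image: list of channels, each a flat list of floats in [0, 1].
    classify: hypothetical callable mapping an image to a class label.
    Returns (adversarial_image, eps) on success, or (None, eps) on failure.
    """
    rng = random.Random(seed)
    eps = eps0
    for _ in range(max_iters):
        # Independent N(0, 1) noise per channel and pixel, scaled by eps,
        # then clipped to the valid pixel range.
        adv = [[min(1.0, max(0.0, p + eps * rng.gauss(0.0, 1.0)))
                for p in channel]
               for channel in image]
        if classify(adv) == target:
            return adv, eps
        eps += eps_step  # strengthen the perturbation and try again
    return None, eps
```

Because the noise is random rather than gradient-guided, success depends on the classifier and the seed; the escalating ε trades imperceptibility for a higher chance of crossing the decision boundary.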

- 3. Jacobian-based Saliency Map Attack (JSMA): JSMA is an iterative, greedy algorithm for creating adversarial examples. It operates by calculating the Jacobian matrix of the model’s logits with respect to the input image. This matrix shows how sensitive the model’s predictions are to changes in each pixel. From it, a saliency map is created to determine which pixels have the greatest influence over the model’s predictions.

  First, a target class t is chosen, into which the image should be incorrectly classified. The adversarial image is initialized to the original clean image. At each iteration, the algorithm calculates the Jacobian of the current adversarial image with respect to the logits. From the Jacobian, it computes a saliency map, which assigns each pixel a value indicating how much modifying that pixel would affect the predicted probability of class t versus the original predicted class. To raise the probability of class t, it then greedily changes the two pixels with the highest absolute saliency values, recalculating the Jacobian and saliency map at each iteration. The modification of pixels continues until the model classifies the image as class t or until a predetermined modification budget is exhausted. In this way, JSMA generates targeted adversarial perturbations with relatively few pixel modifications.

  Mathematically, let x be the original input image and y be the true class label. The goal of JSMA is to find a small perturbation that can be added to x to create an adversarial example that the model incorrectly classifies as the target class t. The JSMA algorithm calculates the Jacobian matrix J of the model’s output with respect to the input x, where $J = \partial F(x) / \partial x$ and $F(x)$ represents the model’s output logits. From the Jacobian matrix, a saliency map S is computed for the target class t. This saliency map identifies the pixels that have the greatest influence on increasing the probability of the target class t while decreasing the probability of the true class y. We show the design of JSMA in Algorithm 3.

- Rationale for Selection. JSMA was chosen for three reasons:
  - (a) Targeted Attack Capability: unlike untargeted attacks, JSMA forces misclassification to a specific class, mimicking real-world CAPTCHA bypass scenarios.
  - (b) Minimal Perturbations: by modifying only critical pixels, it tests whether CAPTCHAs are vulnerable to imperceptible alterations.
  - (c) White-Box Relevance: as CAPTCHA defenses often rely on obfuscation, JSMA’s reliance on model gradients highlights weaknesses in systems assuming attackers lack model knowledge.

- Contribution to CAPTCHA Security: JSMA’s saliency maps reveal which CAPTCHA features (e.g., character spacing, noise patterns) are most vulnerable to adversarial manipulation. By quantifying how few pixel changes are needed to deceive models, our work underscores the need for non-differentiable CAPTCHA designs (e.g., randomized distortions) that resist gradient-based attacks.

- Experimental Parameters:
  - Perturbation Budget: maximum $L_0$-norm of 15% of total pixels.
  - Iterations: 100 steps or until misclassification.
  - Target Class Selection: least-likely class (untargeted) or predefined labels (targeted).
  - Pixel Constraints: modifications limited to ±10 intensity values per step to mimic subtle adversarial alterations.

Algorithm 3 JSMA Adversarial Generation.

- Input: x: original image; t: target class; epsilon budget; mask.
- while the stopping condition is not met do
  - Fourier transform.
  - Compute gradient in Fourier domain.
  - Calculate the Jacobian J with respect to the model logits.
  - Calculate saliency map.
  - Apply mask.
  - Greedily select pixels p in S with the highest values.
  - Perturb selected pixels and neighbors.
  - Inverse Fourier transform.
  - Update the adversarial image.
- end while
- Return the adversarial image.
- Output: adversarial image.
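The core saliency-and-greedy-update loop of JSMA can be sketched on a toy model. The saliency rule used here is the commonly cited increasing-feature formulation (a pixel scores highly when raising it increases the target logit and decreases the sum of the other logits); whether the authors used exactly this rule is an assumption, since the formula did not survive in the text. The linear model `W`, the step `theta`, and the function names are hypothetical, and the Fourier-domain and masking steps of Algorithm 3 are not modeled.

```python
def logits(W, x):
    """Toy linear model: one logit per class."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]


def saliency_map(J, target):
    """Score each pixel by how much increasing it favors the target class."""
    scores = []
    for i in range(len(J[0])):
        a = J[target][i]                                         # d logit_t / d x_i
        b = sum(J[k][i] for k in range(len(J)) if k != target)   # other classes
        scores.append(0.0 if a < 0 or b > 0 else a * abs(b))
    return scores


def jsma_attack(W, x, target, theta=0.1, max_iters=100):
    """Greedily raise the two most salient pixels until `target` is predicted."""
    adv = list(x)
    for _ in range(max_iters):
        z = logits(W, adv)
        if z.index(max(z)) == target:
            break
        J = W  # for a linear model the Jacobian of the logits is W itself
        S = saliency_map(J, target)
        candidates = [i for i in range(len(adv)) if S[i] > 0 and adv[i] < 1.0]
        if not candidates:
            break  # no pixel can still help the target class
        candidates.sort(key=lambda i: S[i], reverse=True)
        for i in candidates[:2]:
            adv[i] = min(1.0, adv[i] + theta)
    return adv
```

For a real network the Jacobian changes with the input and would be recomputed by automatic differentiation at every iteration; the linear model keeps the example self-contained.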

- 4. Immutable Adversarial Noise (IAN): IAN is a training technique that adds imperceptible perturbations (known as adversarial noise) to inputs in order to fool deep learning models while preserving how humans perceive the input. This helps to make the models more robust against adversarial attacks. The fundamental goal of IAN is to identify small perturbations that can be added to inputs to alter model predictions while maintaining the inputs’ perceived meaning by humans. There are two main steps to achieving this:
  - (a) Perturbation Computation: IAN first feeds a clean input through the model to obtain the original prediction. It then computes an adversarial perturbation based on the gradient of the loss with respect to the input, which can change the prediction when added to it. This perturbation is computed so that it is imperceptible to humans.
  - (b) Adversarial Training: The computed perturbation is then combined with the clean input to generate an adversarial example. This perturbed input is fed into the model, which produces a different prediction from that for the clean input. The perturbation is interpreted as the “correct” or “target” output. This set-up is an adversarial training example that can be used to fine-tune the model weights.

Mathematically, IAN first computes the gradient $\nabla_x L(x, y)$ of the loss L with respect to the input x, where y is the true label. The perturbation is then derived from this gradient (Section 2.4) and scaled by $\epsilon$, a hyperparameter controlling the perturbation magnitude. The perturbed input is then used as an adversarial training example to fine-tune the model, with the goal of making the model robust to the computed perturbation.

IAN aims to train models that are robust to imperceptible perturbations by repeating the above process on multiple inputs from the training dataset. Using perceptual loss functions, the perturbations are constrained to remain small and imperceptible. This enables IAN to produce adversarial examples that do not significantly change how humans interpret inputs. We show the design of IAN in Algorithm 4.

- Rationale for Selection. IAN was chosen for two reasons:
  - (a) Human-Readable Perturbations: unlike standard adversarial training, IAN’s bounded noise ensures CAPTCHA legibility post-perturbation.
  - (b) Defense-Aware Evaluation: by training models with IAN, we test whether CAPTCHA recognition systems can resist perturbations without degrading human usability.

- Contribution to CAPTCHA Security: IAN reveals that CAPTCHA models trained without adversarial robustness fail catastrophically when perturbed, even with imperceptible noise.

- Experimental Parameters:
  - Perturbation Budget: (normalized pixel range), incrementally increased to .

Algorithm 4 IAN Adversarial Generation.

- Input: original image x; epsilons.
- Compute perturbation:
  - Sample noise N.
  - Form the perturbation from N and the epsilon values.
- Create adversarial example.
- Output: adversarial image.

- 5. Connectionist Temporal Classification (CTC): The CTC model represents an adversarial perturbation. CTC is a type of neural network architecture widely used in sequence labeling tasks such as speech recognition and handwriting recognition, where the input and output sequences are of different lengths. The main components of CTC are the following:
  - (a) Recurrent Neural Network (RNN): CTC employs an RNN as the primary component for encoding the input sequence. The RNN can take several forms, including a simple RNN, an LSTM network, or a GRU network. It processes the input sequence in order, maintaining a hidden state that retains information from previous time steps.
  - (b) Softmax Output Layer: The RNN’s output layer is a softmax layer that provides a probability for each possible label at each time step. These probabilities are calculated from the input sequence and the RNN’s current hidden state.
  - (c) CTC Loss Function: The CTC loss function computes the loss between the actual label sequence and the predicted probability distribution. It is defined as $L = -\log p(y \mid x)$, where L is the loss and $p(y \mid x)$ is the probability of the true label sequence y given the input sequence x. The CTC loss is computed using dynamic programming, which allows it to be calculated efficiently even for long input sequences.
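The dynamic-programming computation mentioned in item (c) can be illustrated with the standard CTC forward recursion. This is a minimal sketch for a single sequence, assuming per-time-step softmax probabilities are already available as nested lists and that index 0 is the blank symbol; the function name `ctc_loss` and its signature are illustrative and do not correspond to the Keras API used in the study.

```python
import math


def ctc_loss(probs, labels, blank=0):
    """Return -log p(labels | input) using the CTC forward recursion.

    probs[t][k]: probability of symbol k at time step t (rows sum to 1).
    labels: target label sequence without blanks.
    """
    # Interleave blanks: [a, b] -> [blank, a, blank, b, blank].
    ext = [blank]
    for label in labels:
        ext += [label, blank]
    S, T = len(ext), len(probs)

    # alpha[s]: total probability of all partial alignments ending at ext[s].
    alpha = [0.0] * S
    alpha[0] = probs[0][ext[0]]
    if S > 1:
        alpha[1] = probs[0][ext[1]]

    for t in range(1, T):
        new = [0.0] * S
        for s in range(S):
            total = alpha[s]
            if s >= 1:
                total += alpha[s - 1]
            # A blank may be skipped only between two different labels.
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                total += alpha[s - 2]
            new[s] = total * probs[t][ext[s]]
        alpha = new

    # Valid alignments end on the last label or on the trailing blank.
    p = alpha[-1] + (alpha[-2] if S > 1 else 0.0)
    return float("inf") if p == 0.0 else -math.log(p)
```

The recursion sums over all alignments in time proportional to the product of the input length and the extended label length, instead of enumerating the exponentially many alignment paths.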

Keras CTC was used to train a benchmark sequence recognition model. The model architecture consisted of a stack of LSTM layers, a dense layer, and a CTC loss layer. The model was trained on a sequence dataset until its performance was considered acceptable.

To generate adversarial examples, the Fast Gradient Sign Method (FGSM) can be applied to the CTC loss, computing the perturbation $\delta = \epsilon \cdot \mathrm{sign}(\nabla_x L)$. FGSM is a simple and computationally inexpensive method for determining perturbations that increase a model’s loss function. Given a test example, FGSM computes a single-step perturbation intended to change the model’s predictions.

For each test example, the loss gradient was calculated with respect to the input, and a step was taken in the direction of the gradient’s sign. The step size is a hyperparameter that determines the magnitude of the perturbation. Larger step sizes are more likely to affect model behavior, but they may introduce detectable noise.

The adversarial accuracy of the model was assessed by feeding perturbed examples into the CTC model and monitoring whether the predictions changed. Additionally, the perturbations’ qualitative impact, in terms of discernible noise or distortion in the inputs, was assessed. We show the design of CTC in Algorithm 5.

- Rationale for Selection. CTC was chosen for two reasons:
  - (a) Temporal CAPTCHA Relevance: many CAPTCHAs use animated/text-scrolling designs; CTC’s sequence alignment mirrors automated solvers’ temporal reasoning.
  - (b) Alignment Vulnerabilities: CTC’s reliance on input-output alignment makes it susceptible to perturbations that shift temporal features (e.g., character onset/offset).

- Contribution to CAPTCHA Security: Our CTC-based attacks reveal that temporal CAPTCHAs are vulnerable to gradient-aligned perturbations:
  - Key Insight: automated solvers over-rely on consistent character timing and spacing, which adversarial noise can disrupt.
  - Per-Frame Noise: add dynamic, non-differentiable noise to individual frames to block gradient-based attacks.

- Experimental Parameters:
  - Model Architecture:
    - 3 bidirectional LSTM layers (256 units each),
    - Optimizer (lr = 0.001)
  - Adversarial Settings:
    - (normalized pixel range),
    - Sequence perturbation budget: per frame

Algorithm 5 CTC Adversarial Generation.
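The FGSM step described for the CTC model (one step along the sign of the loss gradient) can be sketched as follows. To stay self-contained, the example approximates the gradient by central finite differences on an arbitrary `loss_fn`; in a real pipeline the gradient of the CTC loss would come from the framework's automatic differentiation. The function names and the flat-list input representation are assumptions for illustration.

```python
def numerical_gradient(loss_fn, x, h=1e-5):
    """Central-difference estimate of d loss / d x (stand-in for backprop)."""
    grad = []
    for i in range(len(x)):
        up = x[:i] + [x[i] + h] + x[i + 1:]
        down = x[:i] + [x[i] - h] + x[i + 1:]
        grad.append((loss_fn(up) - loss_fn(down)) / (2 * h))
    return grad


def fgsm(loss_fn, x, eps):
    """Single FGSM step: x' = clip(x + eps * sign(grad), 0, 1)."""
    grad = numerical_gradient(loss_fn, x)
    signs = [(g > 0) - (g < 0) for g in grad]
    return [min(1.0, max(0.0, xi + eps * s)) for xi, s in zip(x, signs)]
```

A possible usage, with a toy quadratic loss in place of the CTC loss:

```python
toy_loss = lambda v: (v[0] - 1.0) ** 2 + v[1] ** 2
x_adv = fgsm(toy_loss, [0.5, 0.5], eps=0.1)  # moves each pixel by 0.1 uphill
```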

3.3. Evaluation

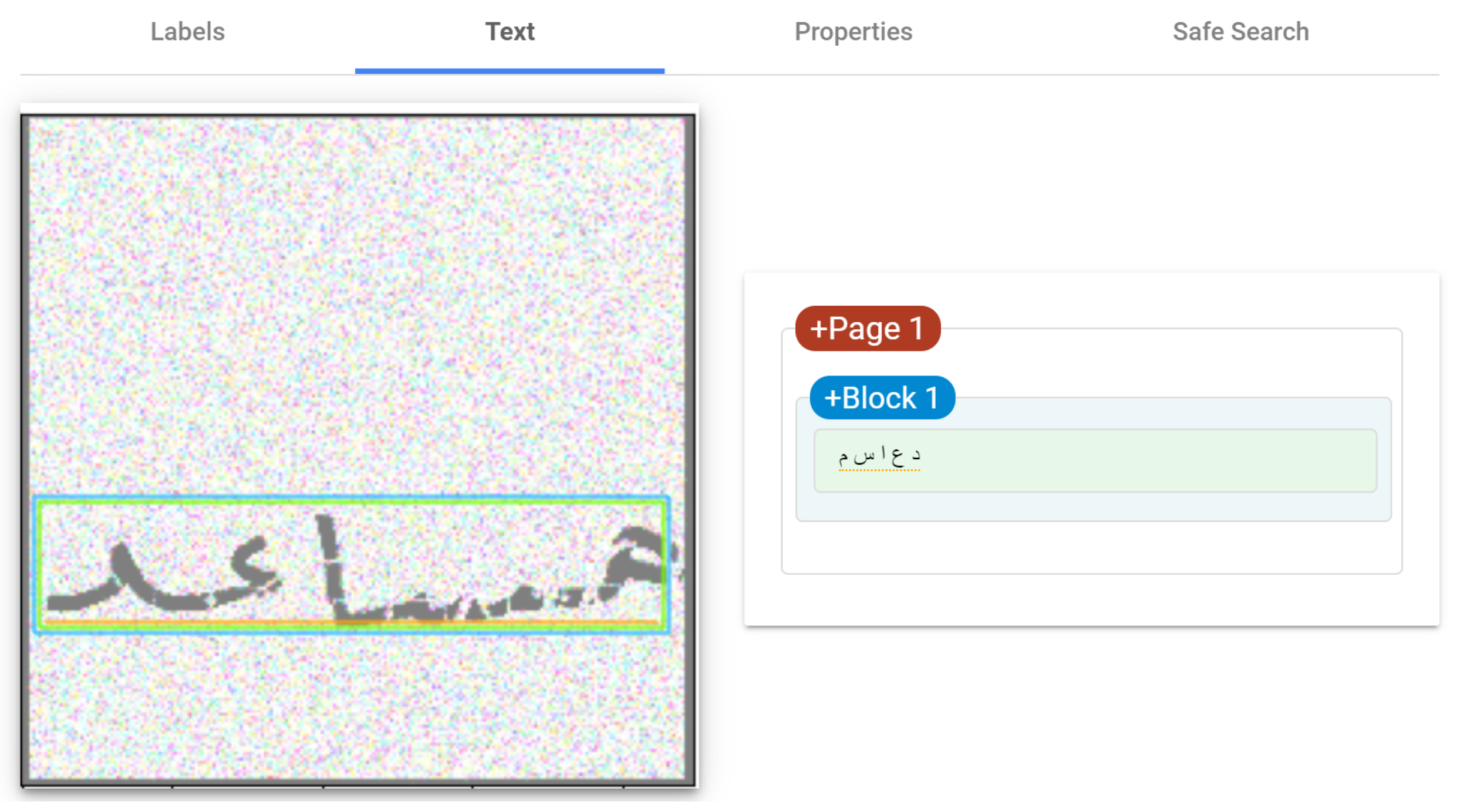

3.3.1. Security Evaluation

- Experimental Set-Up: This section describes the experimental set-up for the security evaluation.
  - System: To evaluate the security of the adversarial CAPTCHAs, the Google Vision API [50] was used to recognize the perturbed images. Google Vision is a machine-learning image recognition service that can be considered representative of modern bot capabilities. The Cloud Vision API provided an important benchmark for assessment, given its prevalence and high accuracy.
    - API Version: the testing was done using the latest stable version of the Google Cloud Vision API, which is currently v1.
    - Input Data Format: the Vision API can process a variety of input data formats, including image files (JPEG, PNG, GIF, BMP) and base64-encoded image data.
    - Computer Vision Tasks: the testing involves evaluating the API’s performance on common computer vision tasks, such as the following:
      - Image labeling: identifying and classifying objects, scenes, and activities in images.
      - Facial detection and recognition: detecting and identifying faces in images.
      - Optical character recognition (OCR): extracting text from images.
      - Logo detection: identifying logos within images.
      - Web detection: annotating images with information about the web entities they contain.
    - API Parameters: the testing involves experimenting with different API parameters, such as the following:
      - Image source (file, base64-encoded).
      - Vision feature types to enable (e.g., LABEL_DETECTION, FACE_DETECTION).
      - Language model to use for text-related features.
      - Confidence thresholds for detected entities.
    - Validation (Text Detection (OCR)):
      - Arabic Text: validate accuracy for handwritten/printed Arabic text.
      - Mixed Languages: test images with Arabic + Latin script.
    - Validation:
      - Ground Truth: compare API output with known text.
      - Accuracy Metric: Accuracy = (Correctly Recognized Characters / Total Characters) × 100
  - Dataset: The experimental set-up included creating a dataset of 60 Arabic handwritten word CAPTCHA images (30 meaningful words, 30 meaningless words). The meaningless words were generated using Alsuhibany and Alquraishi’s [33] model, and the meaningful words were generated using an improved version of that model. The images were categorized according to whether they were meaningful or meaningless. Perturbed Arabic handwritten word CAPTCHA images were then produced using five different perturbation techniques and grouped by the technique used. In total, a dataset of 300 perturbed Arabic handwritten word CAPTCHA images was compiled for the security evaluation experiment.
    - Size:
      - Base dataset: 60 Arabic handwritten CAPTCHAs (30 meaningless, 30 meaningful).
      - Perturbed dataset: 300 CAPTCHAs (5 techniques × 60 images).
    - Source: synthetic generation using PHP scripts and a MySQL database of 250+ Arabic letter images in varied styles.
    - Characteristics:
      - Meaningless words: random strings of 3–9 Arabic characters.
      - Meaningful words: selected from a dictionary of 10,000 common Arabic words, stripped of diacritics.
    - Training/Testing Split: no traditional split; all CAPTCHAs were generated and evaluated.

- Experimental Procedure: This section describes the experimental procedure for the security evaluation.
  - The 300 perturbed CAPTCHA images with different adversarial perturbations were tested using Google Vision [50].
  - The recognition results (text labels) were analyzed and classified into categories, as shown in Table 4.
  - The recognition rates in each category were calculated for each perturbation technique.
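The character-level accuracy metric defined in the validation step can be sketched as below. The source does not state how recognized characters are matched against the ground truth, so position-by-position comparison is an assumption here, as are the function names; an alignment-based comparison (for example, edit distance) would give different values when the OCR output inserts or drops characters.

```python
def character_accuracy(recognized, ground_truth):
    """Accuracy = (correctly recognized characters / total characters) x 100.

    Assumption: characters are compared position by position.
    """
    if not ground_truth:
        return 0.0
    correct = sum(1 for r, g in zip(recognized, ground_truth) if r == g)
    return 100.0 * correct / len(ground_truth)


def mean_accuracy(pairs):
    """Average character accuracy over (recognized, ground_truth) pairs."""
    scores = [character_accuracy(r, g) for r, g in pairs]
    return sum(scores) / len(scores)
```

Applied per perturbation technique, `mean_accuracy` would be called once for each group of 60 OCR outputs paired with their known words.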

3.3.2. Usability Evaluation

- Experimental Set-Up: The set-up of the usability experiment was as follows:
  - Participants: To enhance participant engagement, reduce respondent burden, and increase the likelihood of completing the entire experiment, the experiment was divided into two equal-length sections, accessible via separate links. Each link contained an identical number of images and a balanced distribution of meaningful and meaningless words. Of the 294 participants in total, 132 used Link 1 and 162 used Link 2.
  - Design: An online test containing 33 pages with two links was developed. There were 30 CAPTCHA questions in each link, based on 30 distinct CAPTCHA images chosen at random from the test dataset: 15 with meaningful words and 15 with meaningless words. Next to each CAPTCHA image was a text field for participants to enter their answer.
  - System: The usability testing system consisted of an online website, created using Figma and Weavely forms. It was responsively designed for both desktop and mobile use.

- Experimental Procedure:
  - The procedure of the usability experiment:
    - The initial page gathered demographic information such as age, gender, proficiency in Arabic, and experience with CAPTCHAs.
    - On the second page, a set of instructions explained the task and method of interaction.
    - Participants were presented with an example CAPTCHA image and detailed step-by-step instructions on how to complete it.
    - Each page displayed one CAPTCHA sample for participants to solve.
    - A progress bar tracked completion across all pages.
    - Participants could not advance pages without solving the CAPTCHA correctly.
    - The system did not provide feedback on answer correctness or hints.
    - Upon completion, a final thank-you page was displayed.
  - Usability Evaluation Metrics: Usability was evaluated based on the following:
    - Time spent solving CAPTCHAs: The average time taken to complete a CAPTCHA (in seconds), from the ‘Start’ button click to the ‘Submit’ button click. Quicker average solution times indicated a better user experience.
    - Accuracy in solving CAPTCHAs: Accuracy referred to the percentage of CAPTCHAs that participants were able to solve accurately. A higher accuracy rate indicated improved usability.
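The two usability metrics can be computed per perturbation technique with a short aggregation. The record layout (technique, seconds, correct) and the function name are assumptions for illustration; the study's actual response logs are not described at this level of detail.

```python
from collections import defaultdict


def usability_by_technique(attempts):
    """Summarize attempts as {technique: (average_seconds, accuracy_percent)}.

    attempts: iterable of (technique, seconds, correct) tuples, where
    `seconds` is the Start-to-Submit time and `correct` is a boolean.
    """
    grouped = defaultdict(list)
    for technique, seconds, correct in attempts:
        grouped[technique].append((seconds, correct))

    summary = {}
    for technique, rows in grouped.items():
        avg_time = sum(s for s, _ in rows) / len(rows)
        accuracy = 100.0 * sum(1 for _, c in rows if c) / len(rows)
        summary[technique] = (avg_time, accuracy)
    return summary
```

Lower average times and higher accuracy percentages both indicate better usability, so the two values are reported side by side rather than combined into one score.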

4. Results

4.1. Security Evaluation

4.2. Usability Evaluation

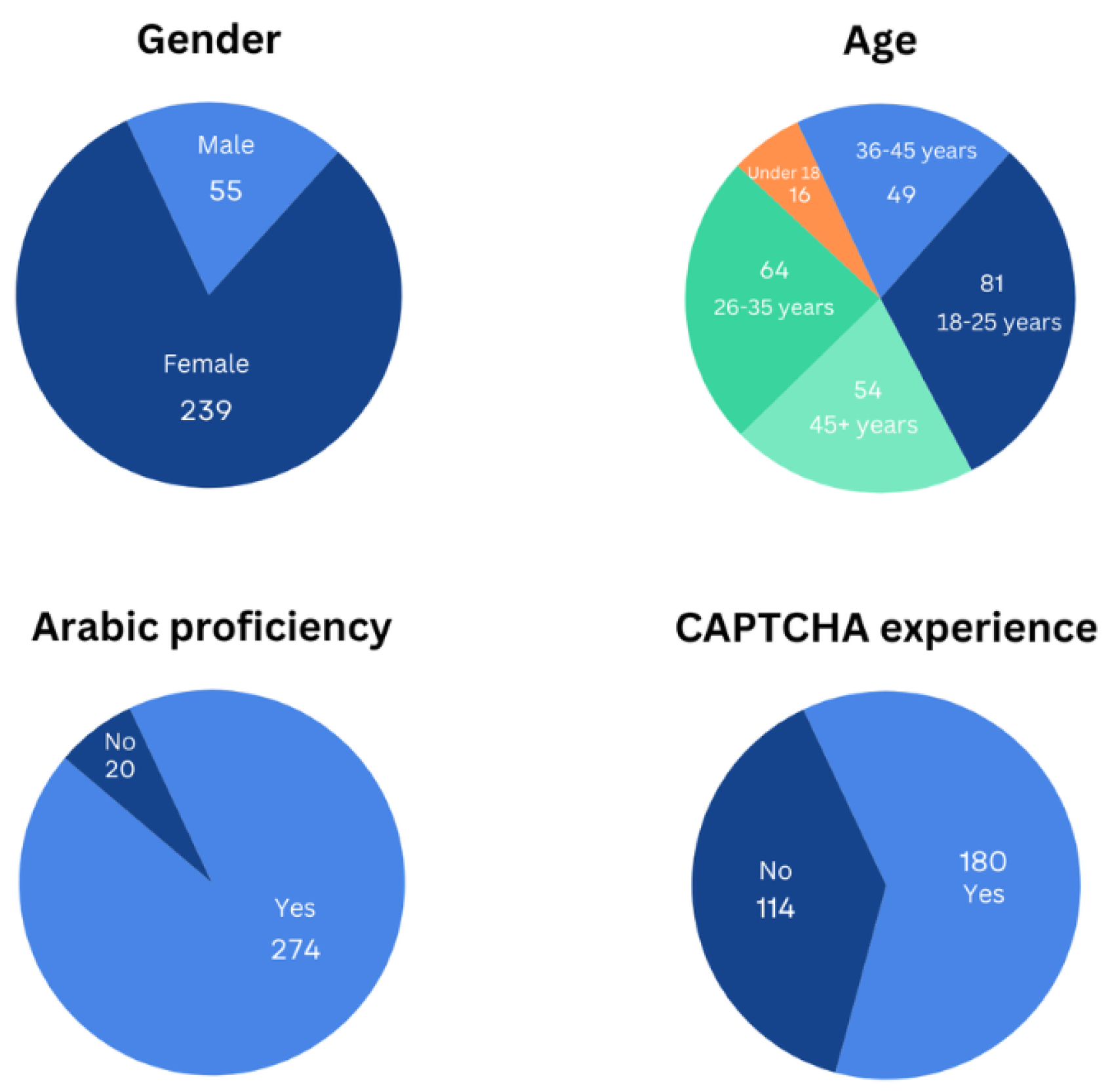

4.2.1. Participants

- Gender: female or male.

- Age: Under 18 years, 18–25 years, 26–35 years, 36–45 years, 45+ years.

- Arabic proficiency: Most participants were Arabic speakers, except 20 participants (approximately 7% of 294) who reported not speaking Arabic.

- CAPTCHA experience: yes or no.

4.2.2. Time Spent Solving CAPTCHAs

4.2.3. Accuracy in Solving CAPTCHAs

- The Accuracy rate for each technique was calculated using the following formula:

5. Discussion

5.1. Security Level

5.1.1. Meaningless Words

- CTC achieved a comparable 43.33% level of partial recognition, with a lower 23.33% rate of unrecognized results, indicating that it perturbed the text more efficiently than EOT in confounding machine vision systems.

- For EOT, none of the images were completely recognized correctly, indicating this technique successfully introduced some distortion. However, the high 66.66% figure of partial recognition suggests that the distortion had only a minimal impact, allowing the text to remain partially legible to machines.

- IAN resulted in a 26.66% incorrect recognition rate and a higher partial recognition rate of 60%, implying that its distortions did not completely obscure the text, unlike more successful techniques. IAN needs to improve its obscuring capabilities.

- JSMA was the only technique with a completely recognized image (one image, 3.33%). Its 30% rate of unrecognized images, the highest among the techniques, aligns with the following characteristics:
  - JSMA cannot identify the same level of influential pixels/features, as the meaningless words do not have inherent semantic meaning that the model has learned to recognize robustly.
  - With fewer identifiable influential features, JSMA can introduce smaller perturbations to cause a misclassification.
  - This results in a lower non-recognition rate (30%) for meaningless words, as the adversary can more easily perturb the input to bypass the CAPTCHA.

- Finally, SGTCS achieved a high partial recognition of 63.33% but a similar incorrect or unrecognized split to IAN, implying a comparable obscuring ability.

5.1.2. Meaningful Words

- CTC achieved complete recognition for 10% of the images. This technique was not as successful at obscuring meaningful words, as it had a higher rate when compared to using meaningless words. Machine vision systems may have exploited semantic understanding.

- EOT reached the highest complete recognition at 23.33%. Its distortions were the least effective at obscuring meaningful words, since it achieved the weakest obfuscation, as measured by recognition success. It also had 13.33% incomplete words and 33.33% unrecognized words, indicating mixed effectiveness.

- IAN achieved a partial recognition rate of 33.33%, with 13.33% completely recognized, indicating that it obscured some of the meaningful words.

- JSMA obtained the lowest complete and partial recognition rates (6.66% each):

  - JSMA creates a saliency map that identifies the pixels/features that have the greatest influence on the model’s prediction of the meaningful word.

  - To cause a misclassification, JSMA needs to introduce larger perturbations to those influential pixels/features, as the model has higher confidence in recognizing the meaningful word.

  - This results in a higher non-recognition rate (60%) for meaningful words, as the adversary needs to make more substantial changes to bypass the CAPTCHA.

  This suggests that JSMA’s distortions most successfully disrupted semantic analysis and most strongly perturbed the meaningful words, confounding machine solvers as intended.

- Finally, SGTCS resulted in a complete recognition rate of 6.66% and a partial recognition rate of 13.33%, with 63.33% unrecognized.
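The saliency map that JSMA relies on can be illustrated with a small sketch. It applies the standard JSMA saliency rule to a toy linear softmax classifier: a feature receives a non-zero score only if increasing it raises the target-class output while lowering the combined output of all other classes. The classifier, the weights `W`, and the helper names are hypothetical and chosen for illustration only; the recognition model and implementation used in this study are not shown here.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax over a 1-D score vector."""
    e = np.exp(z - np.max(z))
    return e / e.sum()


def jacobian(W, x):
    """Jacobian of F(x) = softmax(W @ x) w.r.t. x, shape (classes, features)."""
    p = softmax(W @ x)
    return (np.diag(p) - np.outer(p, p)) @ W


def saliency_map(J, target):
    """JSMA saliency for pushing the prediction toward class `target`.

    A feature scores zero unless increasing it raises the target output
    and lowers the summed outputs of all other classes.
    """
    d_target = J[target]
    d_others = J.sum(axis=0) - d_target
    useful = (d_target > 0) & (d_others < 0)
    return np.where(useful, d_target * np.abs(d_others), 0.0)


# Toy example with hypothetical weights: 2 classes, 3 input features.
W = np.array([[1.0, 0.0, -1.0],
              [0.0, 1.0, 0.0]])
x = np.zeros(3)
S = saliency_map(jacobian(W, x), target=0)
most_salient = int(np.argmax(S))  # feature JSMA would perturb first
print(S, most_salient)
```

In an image setting, each feature is a pixel, and the attack repeatedly perturbs the most salient pixels until the model's prediction changes. How many pixels must change, and by how much, depends on how confident the model is in its original prediction.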

5.2. Usability Level

5.2.1. Meaningless Words

5.2.2. Meaningful Words

5.3. Comparison to Previous Studies

- Synthetic Arabic handwritten CAPTCHA methods that use machine learning to generate authentic-looking handwritten words [37].

- Distorting Arabic word images through OCR procedures to improve the security of Arabic CAPTCHAs [35].

- A process of handwritten text segmentation that includes creating synthetic cursive Arabic word images and asking users to verify CAPTCHAs by recognizing segmentation points [34].

- Visual cryptography that splits images into two components that need to be properly aligned for decryption [33].

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Shivani, A.; Challa, R. CAPTCHA: A Systematic Review. In Proceedings of the 2020 IEEE International Conference on Advent Trends in Multidisciplinary Research and Innovation (ICATMRI), Buldhana, India, 30 December 2020; pp. 1–8.

2. Bursztein, E.; Martin, M.; Mitchell, J. Text-based CAPTCHA Strengths and Weaknesses. In Proceedings of the 18th ACM Conference on Computer and Communications Security, Chicago, IL, USA, 17–21 October 2011; pp. 125–138.

3. Hasan, W.K.A. A Survey of Current Research on CAPTCHA. Int. J. Comput. Sci. Eng. Surv. (IJCSES) 2016, 7, 1–21.

4. Sun, Y.; Xie, X.; Li, Z.; Yang, K. Batch-transformer for scene text image super-resolution. Vis. Comput. 2024, 40, 7399–7409.

5. Elanwar, R.; Betke, M. Generative adversarial networks for handwriting image generation: A review. Vis. Comput. 2024, 41, 2299–2322.

6. Aldosari, M.H.; Al-Daraiseh, A.A. Strong multilingual CAPTCHA based on handwritten characters. In Proceedings of the 2016 7th International Conference on Information and Communication Systems (ICICS), Irbid, Jordan, 5–7 April 2016; IEEE: Piscataway, NJ, USA; pp. 239–245.

7. Khan, B.; Alghathbar, K.; Khan, M.K.; Alkelabi, A.; Alajaji, A. Cyber Security Using Arabic CAPTCHA Scheme. Int. Arab J. Inf. Technol. 2013, 10, 76–83.

8. Shi, C.; Xu, X.; Ji, S.; Bu, K.; Chen, J.; Beyah, R.; Wang, T. Adversarial CAPTCHAs. IEEE Trans. Cybern. 2019, 52, 6095–6108.

9. Bursztein, E.; Aigrain, J.; Moscicki, A.; Mitchell, J. The end is nigh: Generic solving of text-based CAPTCHAs. In Proceedings of the Workshop on Offensive Technologies, San Diego, CA, USA, 19 August 2014.

10. Wang, P.; Gao, H.; Xiao, C.; Guo, X.; Gao, Y.; Zi, Y. Extended Research on the Security of Visual Reasoning CAPTCHA. IEEE Trans. Dependable Secur. Comput. 2023, 21, 4502–4516.

11. Panda, S. Recognizing CAPTCHA using Neural Networks. Int. J. Sci. Res. Eng. Manag. 2022, 10.

12. Wang, H.; Zheng, F.; Chen, Z.; Lu, Y.; Gao, J.; Wei, R. A CAPTCHA Design Based on Visual Reasoning. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 6029–6033.

13. Hossen, M.I.; Hei, X.S. aaeCAPTCHA: The Design and Implementation of Audio Adversarial CAPTCHA. In Proceedings of the 2022 IEEE 7th European Symposium on Security and Privacy, Genoa, Italy, 6–10 June 2022; pp. 430–447.

14. Conti, M.; Guarisco, C.; Spolaor, R. CAPTCHaStar! A Novel CAPTCHA Based on Interactive Shape Discovery. In Proceedings of the International Conference on Applied Cryptography and Network Security, New York, NY, USA, 2–5 June 2015.

15. Truong, H.D.; Turner, C.F.; Zou, C.C. iCAPTCHA: The Next Generation of CAPTCHA Designed to Defend against 3rd Party Human Attacks. In Proceedings of the 2011 IEEE International Conference on Communications (ICC), Kyoto, Japan, 5–9 June 2011; pp. 1–6.

16. Umar, M. A Review on Evolution of various CAPTCHA in the field of Web Security. Int. J. Res. Appl. Sci. Eng. Technol. 2022, 10, 90–96.

17. Usuzaki, S.; Aburada, K.; Yamaba, H.; Katayama, T.; Mukunoki, M.; Park, M.; Okazaki, N. Interactive Video CAPTCHA for Better Resistance to Automated Attack. In Proceedings of the 2018 Eleventh International Conference on Mobile Computing and Ubiquitous Network (ICMU), Auckland, New Zealand, 5–8 October 2018; pp. 1–2.

18. Alreshoodi, L.A.; Alsuhibany, S.A. A Proposed Methodology for Detecting Human Attacks on Text-based CAPTCHAs. Int. J. Eng. Res. Technol. 2020, 9, 193–202.

19. Rusu, A.; Govindaraju, V. Handwritten CAPTCHA: Using the difference in the abilities of humans and machines in reading handwritten words. In Proceedings of the 9th International Workshop on Frontiers in Handwriting Recognition (IWFHR-9 2004), Tokyo, Japan, 26–29 October 2004.

20. Rusu, A.; Thomas, A.; Govindaraju, V. Generation and use of handwritten CAPTCHAs. Int. J. Doc. Anal. Recognit. (IJDAR) 2010, 13, 49–64.

21. Govindaraju, V.; Thomas, A. Enhancing Cyber Security through the Use of Synthetic Handwritten Captchas; Computer Science Department 226 Bell Hall: Buffalo, NY, USA, 2010; ISBN 978-1-124-24557-7.

22. Thomas, A.O.; Rusu, A.; Govindaraju, V. Synthetic handwritten CAPTCHAs. Pattern Recognit. 2009, 42, 3365–3373.

23. Rusu, A.; Mislich, S.; Missik, L.; Schenker, B. A Multilingual Handwriting Approach to CAPTCHA. In Proceedings of the 2013 17th International Conference on Information Visualisation, London, UK, 16–18 July 2013; pp. 198–203.

24. Rusu, A.I.; Govindaraju, V. On the challenges that handwritten text images pose to computers and new practical applications. Document Recognition and Retrieval XII. Int. Soc. Opt. Photonics 2005, 5676, 84–91.

25. Rusu, A.; Govindaraju, V. Visual CAPTCHA with handwritten image analysis. In International Workshop on Human Interactive Proofs; Springer: Berlin/Heidelberg, Germany, 2005; pp. 42–52.

26. Rusu, A.; Govindaraju, V. A human interactive proof algorithm using handwriting recognition. In Proceedings of the Eighth International Conference on Document Analysis and Recognition (ICDAR’05), Seoul, Korea, 31 August–1 September 2005; IEEE: Piscataway, NJ, USA; pp. 967–971.

27. Achint, T.; Venu, G. Generation and performance evaluation of synthetic handwritten captchas. In Proceedings of the First International Conference on Frontiers in Handwriting Recognition, ICFHR, Montreal, QC, Canada, 19–21 August 2008.

28. Rao, M.; Singh, N. Random Handwritten CAPTCHA: Web Security with a Difference. Int. J. Inf. Technol. Comput. Sci. (IJITCS) 2012, 4, 53.

29. Rusu, A.I.; Docimo, R.; Rusu, A. Leveraging Cognitive Factors in Securing WWW with CAPTCHA. In Proceedings of the USENIX Conference on Web Application Development, Boston, MA, USA, 23–24 June 2010.

30. Rusu, A.; Docimo, R. Securing the web using human perception and visual object interpretation. In Proceedings of the 2009 13th International Conference Information Visualisation, Barcelona, Spain, 15–17 July 2009; IEEE: Piscataway, NJ, USA; pp. 613–618.

31. Alsuhibany, S.A.; Parvez, M.T. Attack-filtered interactive arabic CAPTCHAs. J. Inf. Secur. Appl. 2022, 70, 103318.

32. Lajmi, H.; Idoudi, F.; Njah, H.; Kammoun, H.M.; Njah, I. Strengthening Applications’ Security with Handwritten Arabic Calligraphy Captcha. In Proceedings of the 2024 IEEE 8th Forum on Research and Technologies for Society and Industry Innovation (RTSI), Milano, Italy, 18–20 September 2024.

33. Alsuhibany, S.A.; Alquraishi, M. Usability and Security of Arabic Text-based CAPTCHA Using Visual Cryptography. Information 2022, 13, 112.

34. Parvez, M.T.; Alsuhibany, S.A. Segmentation-validation based handwritten Arabic CAPTCHA generation. Comput. Secur. 2020, 93, 101829.

35. Alsuhibany, S.A.; Parvez, M.T. Secure Arabic Handwritten CAPTCHA Generation Using OCR Operations. In Proceedings of the 2016 15th International Conference on Frontiers in Handwriting Recognition (ICFHR), Shenzhen, China, 23–26 October 2016; IEEE: Piscataway, NJ, USA; pp. 126–131.

36. Abdalla, M.I.; Rashwan, M.A.; Elserafy, M.A. Generating realistic Arabic handwriting dataset. Int. J. Eng. Technol. 2019, 9, 3.

37. Alsuhibany, S.A.; Almohaimeed, F.N. Synthetic Arabic handwritten CAPTCHA. Int. J. Inf. Comput. Secur. 2021, 16, 385–398.

38. Alkhodidi, T.; Aljoudi, L.; Fallatah, A.; Bashy, A.; Ali, N.; Alqahtani, N.; Almajnooni, N.; Allhabi, A.; Albarakati, T.; Alafif, T.K.; et al. GEAC: Generating and Evaluating Handwritten Arabic Characters Using Generative Adversarial Networks. In Proceedings of the 2021 International Conference on Computational Intelligence and Knowledge Economy (ICCIKE), Dubai, United Arab Emirates, 17–18 March 2021; pp. 1–6.

39. Parvez, M.T.; Alsuhibani, A.M.; Alamri, A.H. Educational and Cybersecurity Applications of an Arabic CAPTCHA Gamification System. Ing. Syst. Inf. 2023, 28, 1275–1285.

40. Khan, B.; Alghathbar, K.S.; Khan, M.K.; AlKelabi, A.M.; AlAjaji, A. Using Arabic CAPTCHA for Cyber Security. In Security Technology, Disaster Recovery and Business Continuity; Kim, T., Fang, W., Khan, M.K., Arnett, K.P., Kang, H., Ślęzak, D., Eds.; Communications in Computer and Information Science, Vol. 122; Springer: Berlin/Heidelberg, Germany, 2010; pp. 1–10.

41. Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and Harnessing Adversarial Examples. arXiv 2014, arXiv:1412.6572.

42. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. Adv. Neural Inf. Process. Syst. 2014, 2, 2672–2680.

43. Terada, T.; Nguyen, V.N.K.; Nishigaki, M.; Ohki, T. Improving Robustness and Visibility of Adversarial CAPTCHA Using Low-Frequency Perturbation. In Proceedings of the International Conference on Advanced Information Networking and Applications, Sydney, Australia, 13–15 April 2022; pp. 586–597.

44. Osadchy, M.; Hernandez-Castro, J.; Gibson, S.J.; Dunkelman, O.; Pérez-Cabo, D. No Bot Expects the DeepCAPTCHA! Introducing Immutable Adversarial Examples, With Applications to CAPTCHA Generation. IEEE Trans. Inf. Forensics Secur. 2017, 12, 2640–2653.

45. Zhang, J.; Sang, J.; Xu, K.; Wu, S.; Zhao, X.; Sun, Y.; Hu, Y.; Yu, J. Robust CAPTCHAs Towards Malicious OCR. IEEE Trans. Multimed. 2021, 23, 2575–2587.

46. Dinh, N.; Tran-Trung, K.; Hoang, V.T. Augment CAPTCHA Security Using Adversarial Examples With Neural Style Transfer. IEEE Access 2023, 11, 83553–83561.

47. Wang, P.; Gao, H.; Guo, X.; Yuan, Z.; Nian, J. Improving the Security of Audio CAPTCHAs With Adversarial Examples. IEEE Trans. Dependable Secur. Comput. 2024, 21, 650–667.

48. Kwon, H.; Kim, Y.; Yoon, H.; Choi, D. CAPTCHA Image Generation Systems Using Generative Adversarial Networks. IEICE Trans. Inf. Syst. 2018, 101, 417–424.

49. Alsuhibany, S.A. A Survey on Adversarial Perturbations and Attacks on CAPTCHAs. Appl. Sci. 2023, 13, 4602.

50. Google Cloud. Cloud Vision API. 2022. Available online: https://cloud.google.com/vision (accessed on 1 September 2024).

51. Ghaddy. Ghaddy/Adversarial: Enhancing Security of Online Interfaces: Adversarial Handwritten Arabic CAPTCHA Generation (v1.0.0). Zenodo 2024.

| Study | CAPTCHA Type | Description |
|---|---|---|
| [14,15] | Interactive-based CAPTCHAs | Require users to perform interactive tasks like dragging and dropping shapes |
| [9,18] | Text-based CAPTCHAs | Present distorted text that users must correctly decipher |
| [13] | Audio CAPTCHAs | Contain audio clips of words/numbers for users to identify |
| [17] | Interactive video-based CAPTCHAs | Involve interaction with videos, such as pausing at an object |
| [10,12] | Puzzle-based CAPTCHAs | Comprise puzzles that must be solved correctly |
| [11] | Image-based CAPTCHAs | Feature images/objects for users to view and identify |
| [16] | Game-based CAPTCHAs | Implement game challenges |

| Adversarial Perturbation Technique | Output |
|---|---|
| Expected Over Transformation (EOT) |  |
| Scaled Gaussian Translation With Channel Shifts (SGTCS) |  |
| Jacobian-based Saliency Map Attack (JSMA) |  |
| Immutable Adversarial Noise (IAN) |  |
| Connectionist Temporal Classification (CTC) |  |

| Adversarial Perturbation Technique | Output |
|---|---|
| Expected Over Transformation (EOT) |  |
| Scaled Gaussian Translation With Channel Shifts (SGTCS) |  |
| Jacobian-based Saliency Map Attack (JSMA) |  |
| Immutable Adversarial Noise (IAN) |  |
| Connectionist Temporal Classification (CTC) |  |

| Recognition Category | Description |
|---|---|
| Completely | All characters recognized correctly |
| Partially | Some characters recognized correctly |
| Incorrectly | All characters recognized incorrectly |
| Not | No characters recognized |

| Perturbation | Text Type | Security | Usability |
|---|---|---|---|
| JSMA | meaningless words | 70% | 75.7% |
| JSMA | meaningful words | 86.66% | 90.6% |
| IAN | meaningless words | 40% | 58.8% |
| IAN | meaningful words | 53.33% | 80.6% |
| EOT | meaningless words | 33.33% | 24% |
| EOT | meaningful words | 46.66% | 86% |
| SGTCS | meaningless words | 36.66% | 65.7% |
| SGTCS | meaningful words | 80% | 82% |
| CTC | meaningless words | 56.66% | 45.77% |
| CTC | meaningful words | 50% | 90.5% |

| Perturbation | Completely | Partially | Incorrectly | Not |
|---|---|---|---|---|
| CTC | 0 (0%) | 13 (43.33%) | 10 (33.33%) | 7 (23.33%) |
| EOT | 0 (0%) | 20 (66.66%) | 6 (20%) | 4 (13.33%) |
| IAN | 0 (0%) | 18 (60%) | 8 (26.66%) | 4 (13.33%) |
| JSMA | 1 (3.33%) | 8 (26.66%) | 12 (40%) | 9 (30%) |
| SGTCS | 0 (0%) | 19 (63.33%) | 7 (23.33%) | 4 (13.33%) |

| Perturbation | Completely | Partially | Incorrectly | Not |
|---|---|---|---|---|
| CTC | 3 (10%) | 12 (40%) | 8 (26.66%) | 7 (23.33%) |
| EOT | 7 (23.33%) | 9 (30%) | 4 (13.33%) | 10 (33.33%) |
| IAN | 4 (13.33%) | 10 (33.33%) | 3 (10%) | 13 (43.33%) |
| JSMA | 2 (6.66%) | 2 (6.66%) | 8 (26.66%) | 18 (60%) |
| SGTCS | 2 (6.66%) | 4 (13.33%) | 5 (16.66%) | 19 (63.33%) |

| Group | Average Time |
|---|---|
| Group 1 | 10.90 s |
| Group 2 | 10.58 s |

| Technique | Number of Words | Correct Responses | Accuracy Rate |
|---|---|---|---|
| CTC | 6 | 390 | 45.77% |
| EOT | 6 | 206 | 24% |
| IAN | 6 | 510 | 58.8% |
| JSMA | 6 | 690 | 75.7% |
| SGTCS | 6 | 600 | 65.7% |

| Technique | Number of Words | Correct Responses | Accuracy Rate |
|---|---|---|---|
| CTC | 6 | 765 | 90.5% |
| EOT | 6 | 735 | 86% |
| IAN | 6 | 722 | 80.6% |
| JSMA | 6 | 864 | 90.6% |
| SGTCS | 6 | 748 | 82% |

| Technique | Accuracy Rate |
|---|---|
| SGTCS | 65.7% |
| JSMA | 75.7% |
| IAN | 58.8% |
| CTC | 45.77% |
| EOT | 24% |

| Technique | Accuracy Rate |
|---|---|
| JSMA | 90.6% |
| IAN | 80.6% |
| SGTCS | 82% |
| CTC | 90.5% |
| EOT | 86% |

| Study | Technique | Text Type | Security Evaluation Type | Security | Usability |
|---|---|---|---|---|---|
| [35] | OCR | Meaningful words | OCR | 51% difference in segments | More than 88% accuracy |
| [34] | Segmentation-validation | Meaningful words | OCR | 95.5% in segmentation attacks, 92.1% in recognition attacks | 95% |
| [37] | Synthetic handwritten CAPTCHA | Meaningless words | Tesseract, ABBYY, and OCR | 96.42% | 94.03% |
| [33] | Visual cryptography | Meaningful and meaningless words | CNN algorithm, GSA software 2.5, and Google Vision | 92% | 90% |
| Current study | JSMA | Meaningless words | Google Vision [50] | 70% | 75.7% |
| Current study | JSMA | Meaningful words | Google Vision [50] | 86.66% | 90.6% |
| Current study | IAN | Meaningless words | Google Vision [50] | 40% | 58.8% |
| Current study | IAN | Meaningful words | Google Vision [50] | 53.33% | 80.6% |
| Current study | EOT | Meaningless words | Google Vision [50] | 33.33% | 24% |
| Current study | EOT | Meaningful words | Google Vision [50] | 46.66% | 86% |
| Current study | SGTCS | Meaningless words | Google Vision [50] | 36.66% | 65.7% |
| Current study | SGTCS | Meaningful words | Google Vision [50] | 80% | 82% |
| Current study | CTC | Meaningless words | Google Vision [50] | 56.66% | 45.77% |
| Current study | CTC | Meaningful words | Google Vision [50] | 50% | 90.5% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Alrasheed, G.; Alsuhibany, S.A. Enhancing Security of Online Interfaces: Adversarial Handwritten Arabic CAPTCHA Generation. Appl. Sci. 2025, 15, 2972. https://doi.org/10.3390/app15062972
