Adaptive Feedback-Driven Segmentation for Continuous Multi-Label Human Activity Recognition

Abstract

1. Introduction

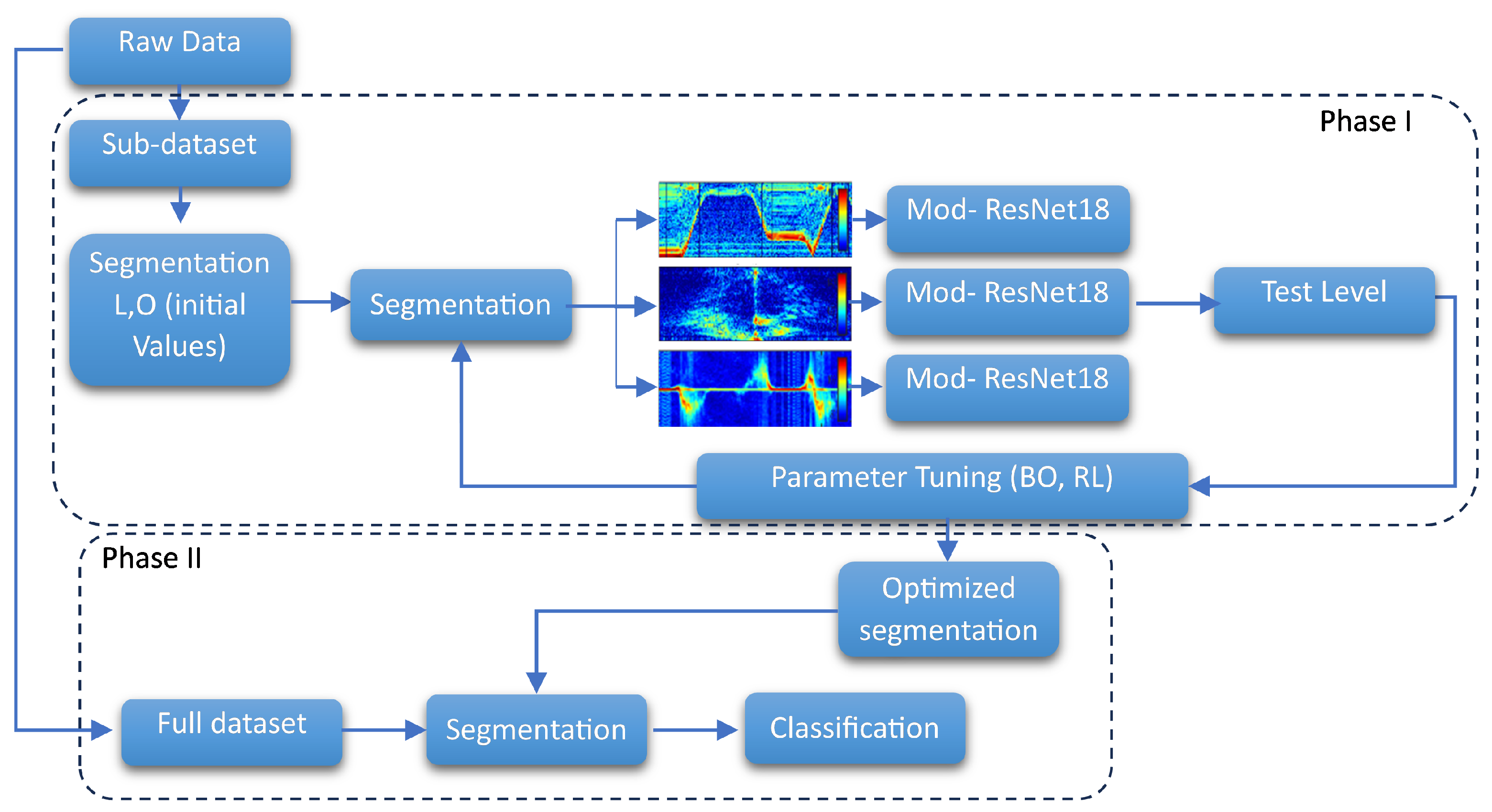

- Feedback-driven segmentation framework: A system that dynamically optimizes segmentation parameters (e.g., window length, overlap) using Bayesian optimization (BO) and reinforcement learning (RL). This framework iteratively refines parameters based on performance metrics (accuracy, F1-score), adapting to variable activity durations and overlapping behaviors.

- Multi-label ResNet18 architecture: A ResNet18 modified for multi-label classification. It uses independent sigmoid outputs trained with a binary cross-entropy loss, so that each of several concurrent activities can be detected in radar-based human activity recognition.

2. Related Work

2.1. Radar-Based HAR

2.2. Multi-Label Classification

2.3. Segmentation Techniques in HAR

- Dynamic Segmentation: The authors in [29] used STA/LTA motion detectors and multi-task learning to improve radar segmentation, handling activities effectively in mixed-motion scenarios. This built on earlier work such as Rényi-entropy-based segmentation [30], which improved the detection of transitions in micro-Doppler signatures under noisy conditions.

- Fixed-Length Windowing: To address the limitations of traditional methods in multi-label HAR, refs. [9,28] used fixed-length overlapping windows to classify multiple concurrent activities. Because consecutive windows overlap, an activity boundary that falls at the edge of one window is also covered by the next, which makes complex, overlapping behaviors easier to recognize.
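The STA/LTA detectors mentioned for [29] compare the average signal energy over a short time window (STA) with the average over a long time window (LTA) and flag a motion onset when the ratio exceeds a threshold. The following is a minimal sketch of that idea, assuming a 1-D per-frame energy signal (for example, micro-Doppler power summed over frequency). The window lengths, the threshold and the function names are hypothetical and are not taken from [29].

```python
def sta_lta_ratio(energy, sta_len, lta_len):
    """STA/LTA ratio per frame, using trailing windows that end at frame i.

    energy: sequence of non-negative per-frame energies (hypothetical input).
    Frames before the long window is full get a ratio of 0.
    """
    ratios = [0.0] * len(energy)
    for i in range(lta_len - 1, len(energy)):
        sta = sum(energy[i - sta_len + 1 : i + 1]) / sta_len   # short-window mean
        lta = sum(energy[i - lta_len + 1 : i + 1]) / lta_len   # long-window mean
        ratios[i] = sta / lta if lta > 0 else 0.0
    return ratios


def detect_onsets(energy, sta_len=3, lta_len=10, threshold=2.0):
    """Frames where the ratio first rises above the threshold (trigger on).

    The trigger re-arms once the ratio falls back to or below the threshold.
    """
    onsets, triggered = [], False
    for i, r in enumerate(sta_lta_ratio(energy, sta_len, lta_len)):
        if r > threshold and not triggered:
            onsets.append(i)
            triggered = True
        elif r <= threshold:
            triggered = False
    return onsets


# Toy signal: quiet background, then a burst of motion energy at frame 20.
signal = [1.0] * 20 + [8.0] * 5 + [1.0] * 15
print(detect_onsets(signal, threshold=1.5))  # → [20]
```

Raising the threshold trades missed onsets for fewer false triggers. With `threshold=2.0`, the same burst is detected one frame later.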

3. Methodology

3.1. Feedback-Driven Adaptive Segmentation

- A 20% subset of the dataset is used during optimization to reduce computational cost while maintaining a diverse representation of activities.
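The text does not say how the 20% subset is drawn. One simple option is to randomly sample whole recordings and retry until every activity label in the dataset appears at least once. The sketch below takes that approach as an assumption. `sample_subset` and the `labels_per_recording` format are hypothetical. Proper multi-label stratification, such as iterative stratification, would match label frequencies more closely.

```python
import random


def sample_subset(labels_per_recording, fraction=0.2, max_tries=100, seed=0):
    """Return sorted indices of a random `fraction` of the recordings whose
    labels together cover every activity label in the full dataset.

    labels_per_recording: list of sets of activity labels (hypothetical format).
    """
    rng = random.Random(seed)
    n = len(labels_per_recording)
    k = max(1, round(fraction * n))
    all_labels = set().union(*labels_per_recording)
    for _ in range(max_tries):
        idx = rng.sample(range(n), k)
        covered = set().union(*(labels_per_recording[i] for i in idx))
        if covered == all_labels:          # every activity is represented
            return sorted(idx)
    raise RuntimeError("no label-complete subset found; raise max_tries or fraction")


# Hypothetical toy dataset: 10 recordings, "sit" occurs in only half of them.
recordings = [{"walk", "sit"}] * 5 + [{"walk"}] * 5
subset = sample_subset(recordings)
print(subset)  # two indices, at least one of them below 5
```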

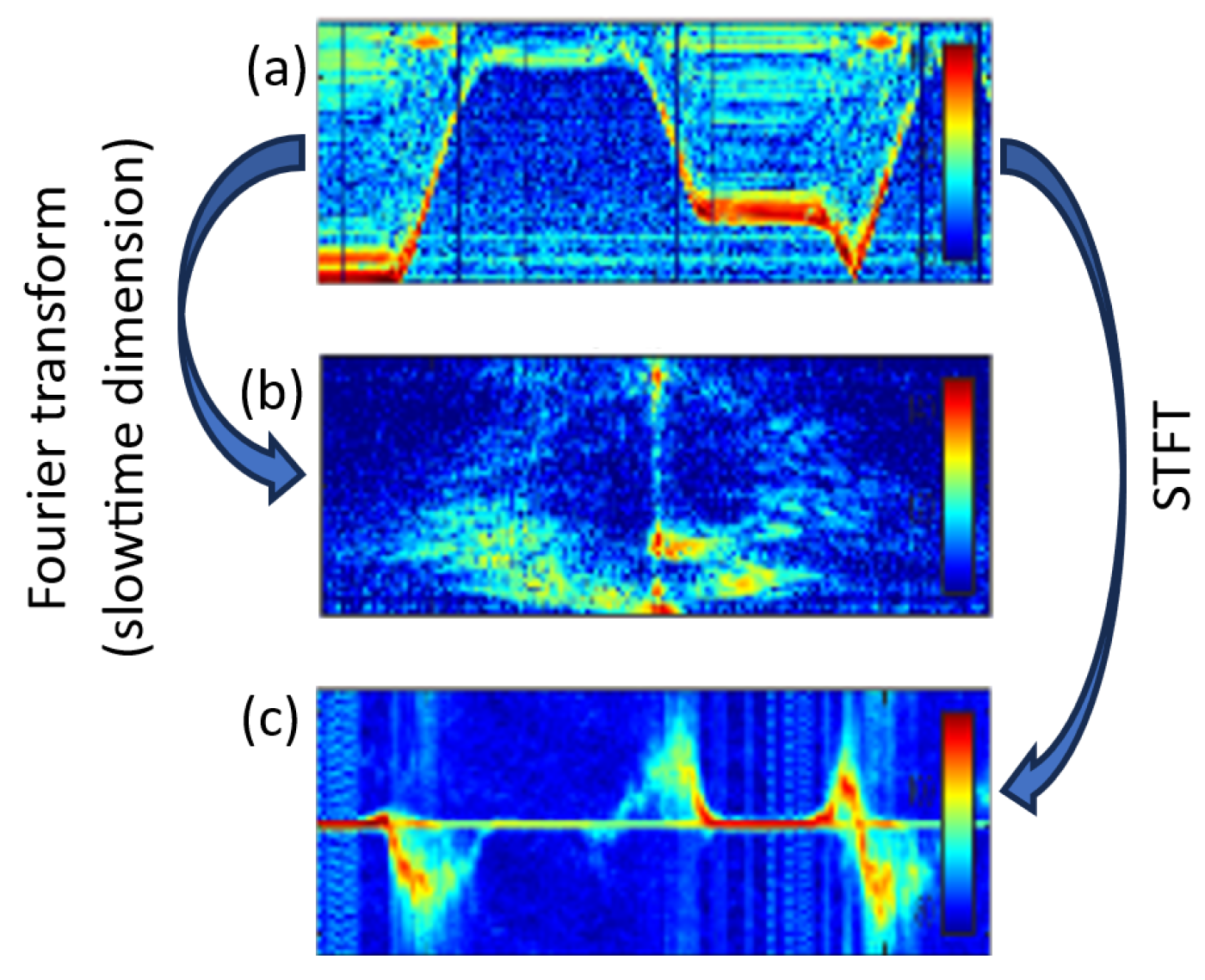

- From this subset, three distinct data representations are generated: spectrogram, range-Doppler, and range-time.

- Individual neural networks are trained on each of these representations to capture modality-specific features.
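The text does not describe how the three representations are computed. For FMCW radar, they are commonly derived from the de-chirped data matrix (fast time × slow time) using FFTs. The sketch below shows one such pipeline under stated assumptions:

- the input is real-valued ADC samples arranged as (samples per chirp, chirps);
- a Hann window is applied;
- subtracting the mean across chirps serves as a crude static-clutter filter;
- the caller chooses which range bins feed the spectrogram.

All function names and parameters are hypothetical. The synthetic target only checks that each map peaks where expected.

```python
import numpy as np

EPS = 1e-12  # avoids log10(0)


def range_profiles(raw):
    """raw: real ADC samples, shape (samples_per_chirp, n_chirps).
    Returns complex range profiles, shape (n_range_bins, n_chirps)."""
    win = np.hanning(raw.shape[0])[:, None]
    prof = np.fft.rfft(raw * win, axis=0)            # fast-time FFT -> range
    # Subtract each range bin's mean over chirps: a simple static-clutter filter.
    return prof - prof.mean(axis=1, keepdims=True)


def range_time(prof):
    """Range-time map in dB, shape (n_range_bins, n_chirps)."""
    return 20 * np.log10(np.abs(prof) + EPS)


def range_doppler(prof, start, n_chirps):
    """Range-Doppler map in dB for one frame of chirps; Doppler axis centred."""
    frame = prof[:, start:start + n_chirps] * np.hanning(n_chirps)[None, :]
    rd = np.fft.fftshift(np.fft.fft(frame, axis=1), axes=1)   # slow-time FFT
    return 20 * np.log10(np.abs(rd) + EPS)


def spectrogram(prof, range_bins, nperseg=64, hop=16):
    """Micro-Doppler spectrogram in dB: STFT over slow time of the complex
    signal summed over range_bins=(lo, hi). Shape (nperseg, n_frames)."""
    slow = prof[range_bins[0]:range_bins[1]].sum(axis=0)
    win = np.hanning(nperseg)
    starts = range(0, slow.size - nperseg + 1, hop)
    frames = np.stack([slow[s:s + nperseg] * win for s in starts], axis=1)
    spec = np.fft.fftshift(np.fft.fft(frames, axis=0), axes=0)
    return 20 * np.log10(np.abs(spec) + EPS)


# Synthetic target: range bin 20, Doppler of 0.125 cycles per chirp.
n, m = np.arange(128)[:, None], np.arange(512)[None, :]
raw = np.cos(2 * np.pi * (20 * n / 128 + 0.125 * m))
prof = range_profiles(raw)
rt = range_time(prof)
rd = range_doppler(prof, start=0, n_chirps=128)
sg = spectrogram(prof, range_bins=(20, 21))
```

In practice, the spectrogram range interval would follow the person's position, for instance the bins with the highest energy. A single bin is used here only to keep the check unambiguous.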

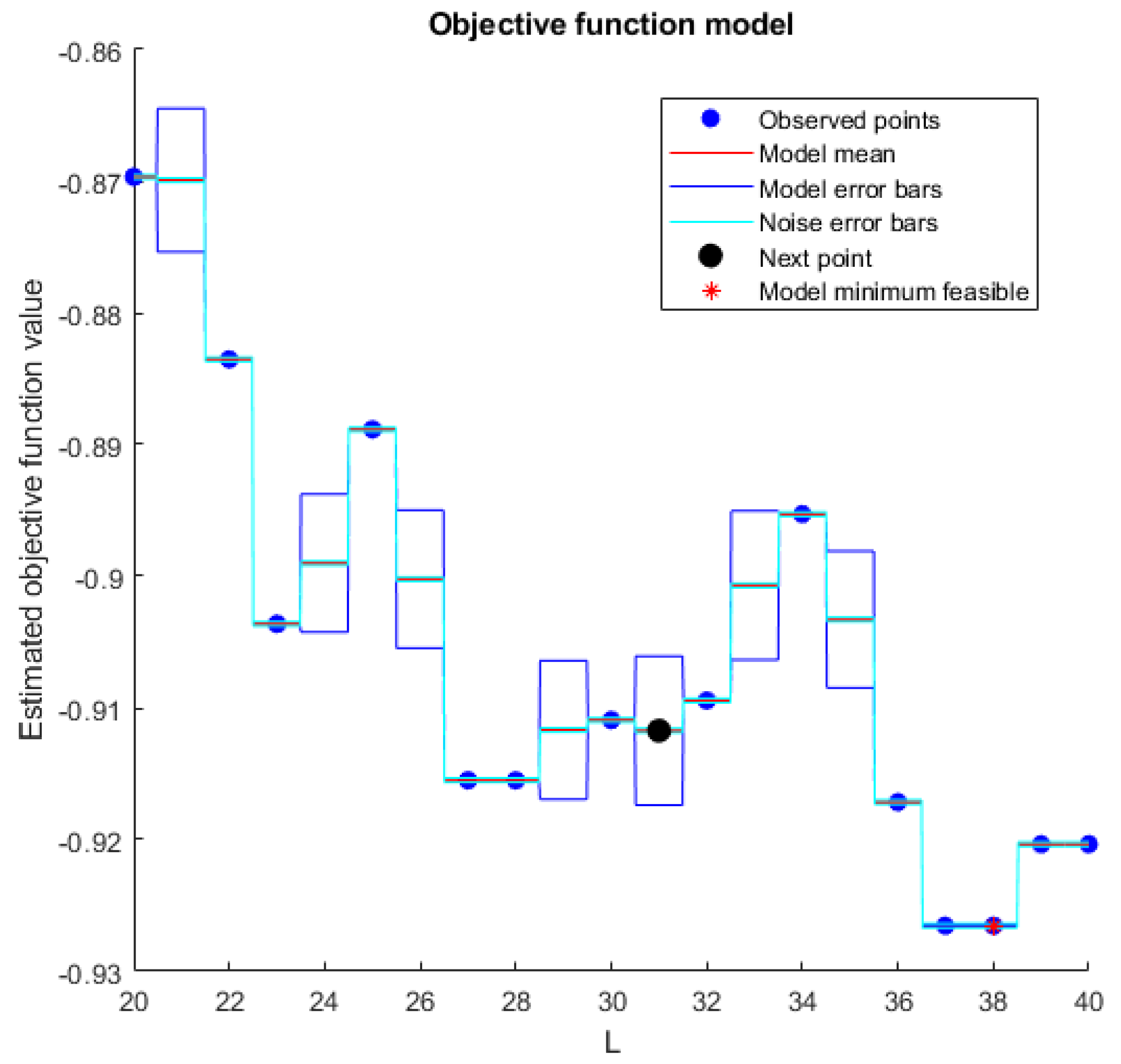

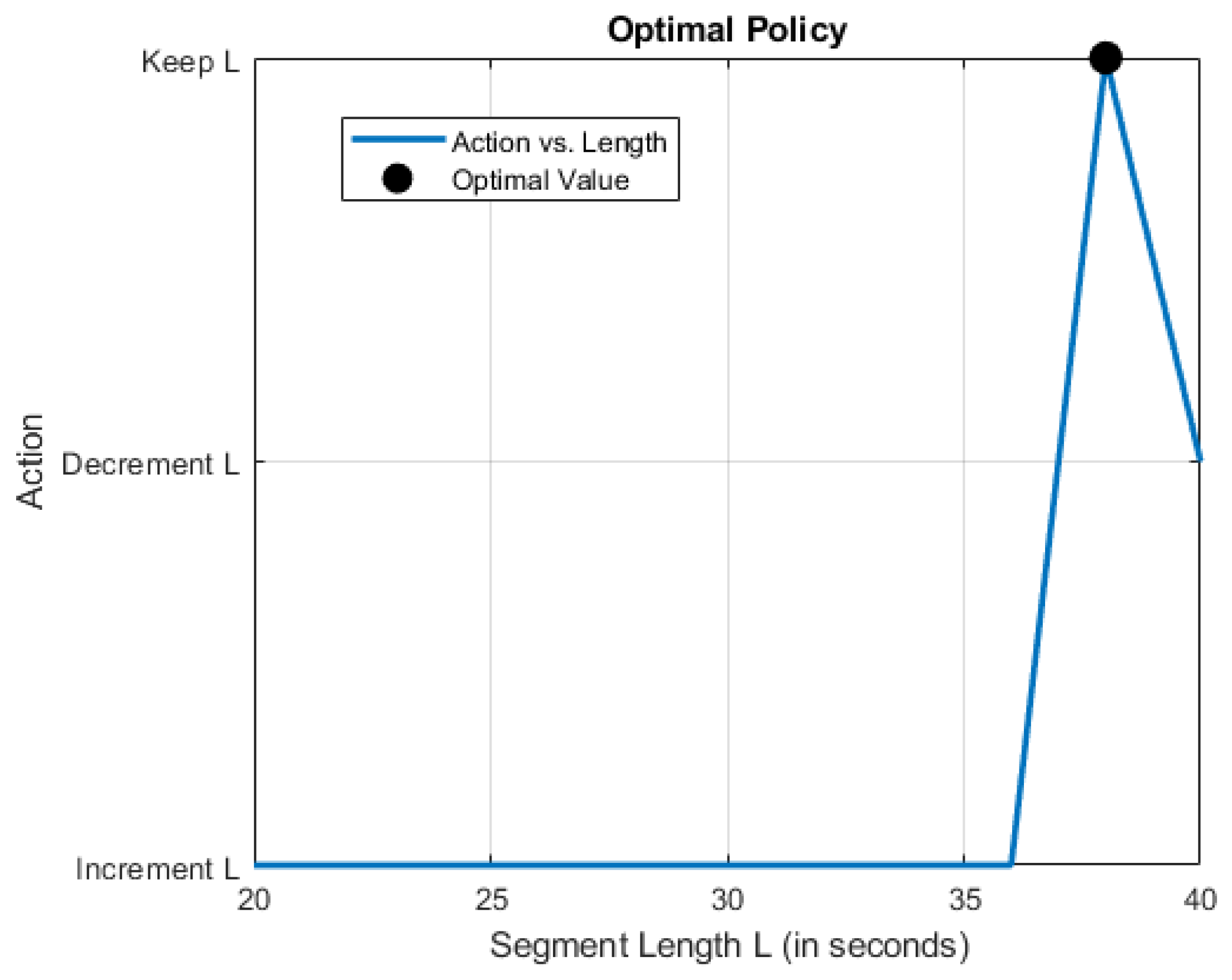

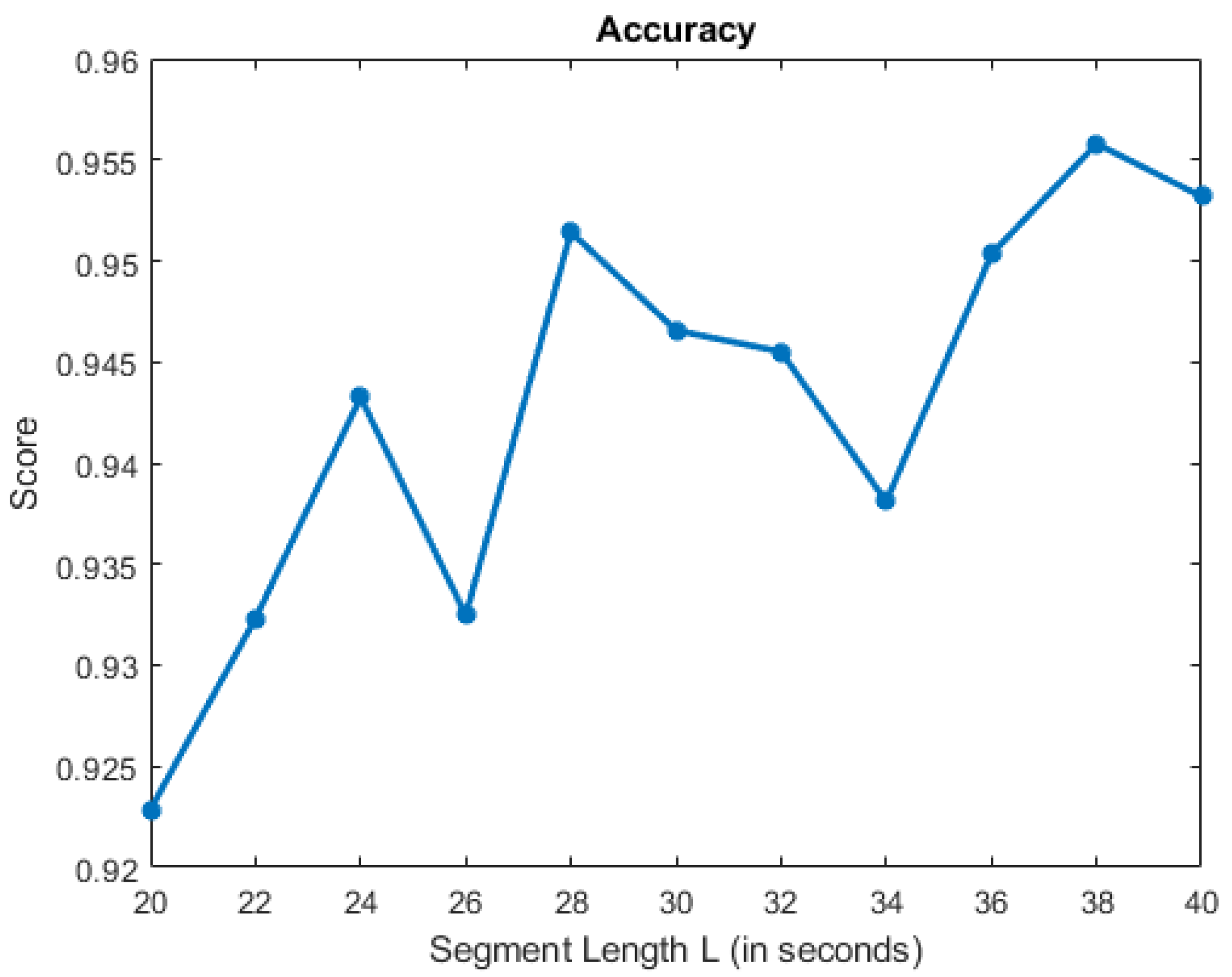

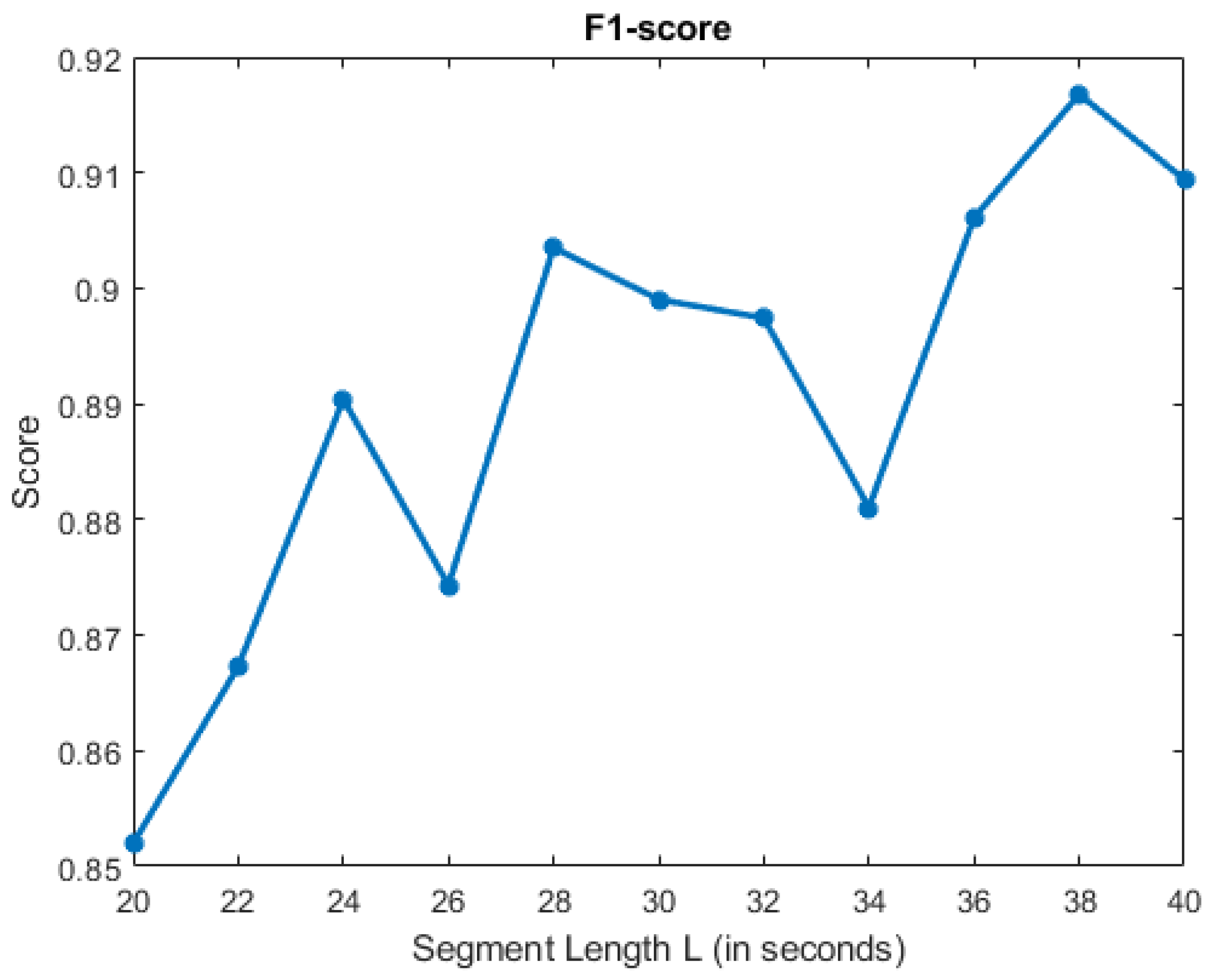

- The segmentation parameters, segment length (L) and overlap (O), are optimized iteratively, with BO and RL applied separately. This optimization is guided by metrics such as accuracy and F1-score [31]. The overlap is tied to the segment length as O = L/3 (≈33%), a value adopted from [9,28]. As a result, an activity occurring at the edge of one window is captured in the overlapping portion of the subsequent window, and the search effectively reduces to choosing L.

- Once the optimal segmentation parameters are identified, they are applied to the entire dataset, which is then classified with the modified ResNet18. This keeps the activity segmentation consistent and robust across all data.

- Classification results from the individual modalities are then fused in a post-processing step. The fusion combines the complementary strengths of the three representations to produce the final multi-label predictions, which is how the feedback-driven system reaches its best performance.

- Because BO and RL are applied separately to adjust the segmentation parameters, segment length (L) and overlap (O), their results are compared to determine which technique is more effective for parameter optimization.

- The adjustments are governed by a reward function that evaluates accuracy and F1-score. The same reward is used for both optimization techniques: $R = \alpha \cdot \mathrm{Accuracy} + \beta \cdot \mathrm{F1}$, where $\alpha$ and $\beta$ are weights that set the relative importance of each metric. In our experiments, these weights were set to $\alpha = 0.25$ and $\beta = 0.75$. This places greater emphasis on the F1-score, which balances precision and recall and is essential for handling imbalanced classes, while still accounting for accuracy as a measure of overall correctness.
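The fixed overlap rule O = L/3 determines how windows tile a recording: consecutive window starts are L - O = 2L/3 apart. The following is a minimal sketch, assuming window boundaries are expressed in seconds and that a trailing partial window is dropped; the text does not say how the end of a recording is handled. `segment_bounds` is a hypothetical helper.

```python
def segment_bounds(duration_s, L, overlap_fraction=1 / 3):
    """Return (start, end) times in seconds of fixed-length windows.

    Window length is L and overlap is O = overlap_fraction * L, so window
    starts are L - O apart. A trailing window that would run past the end
    of the recording is dropped (an assumption, not stated in the text).
    """
    O = overlap_fraction * L
    hop = L - O
    if hop <= 0:
        raise ValueError("overlap must be shorter than the window")
    bounds = []
    start = 0.0
    while start + L <= duration_s + 1e-9:   # small tolerance for float error
        bounds.append((start, start + L))
        start += hop
    return bounds


# A 90 s recording with L = 30 s gives O = 10 s and a 20 s hop.
print(segment_bounds(90, 30))
# → [(0.0, 30.0), (20.0, 50.0), (40.0, 70.0), (60.0, 90.0)]
```

Each 10 s overlap means that anything within the last third of one window is seen again in the first third of the next.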

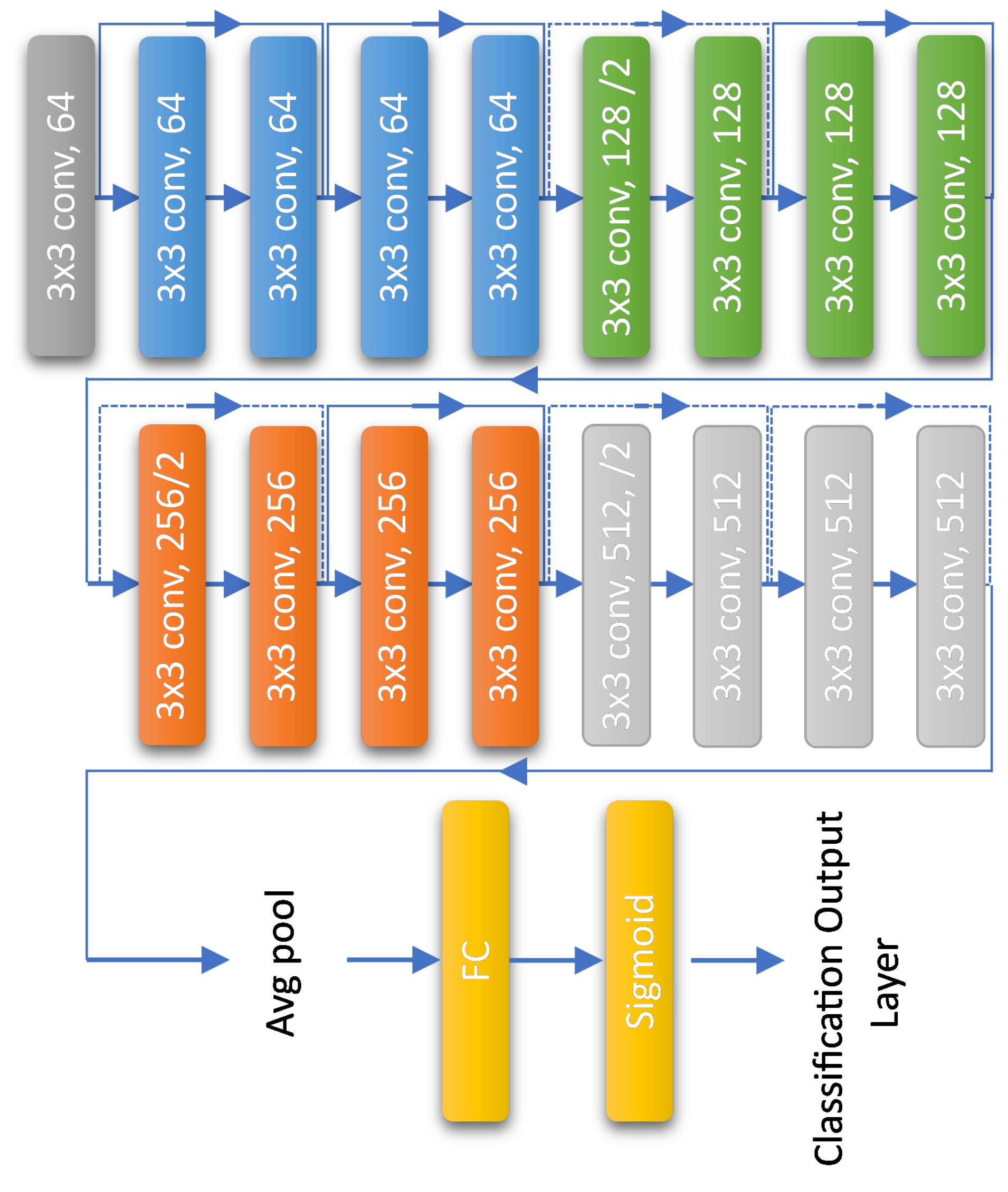
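The BO configuration used for the segmentation search is not detailed in the text. The sketch below shows one common form of BO under stated assumptions:

- a Gaussian-process surrogate with a squared-exponential kernel;
- an upper-confidence-bound (UCB) acquisition;
- a hypothetical discrete grid of candidate segment lengths.

`toy_objective` is a made-up stand-in for the expensive step: segment the 20% subset with length L and overlap L/3, train the per-modality networks, and return the reward. Its shape carries no information about the paper's results. The RL variant is not sketched.

```python
import numpy as np


def rbf(a, b, length=5.0):
    """Squared-exponential kernel matrix between 1-D arrays a and b."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length) ** 2)


def gp_posterior(x_obs, y_obs, x_cand, length=5.0, jitter=1e-6):
    """Zero-mean GP posterior mean/std at x_cand given standardized y_obs."""
    K = rbf(x_obs, x_obs, length) + jitter * np.eye(len(x_obs))
    Ks = rbf(x_cand, x_obs, length)                  # (n_cand, n_obs)
    mu = Ks @ np.linalg.solve(K, y_obs)
    v = np.linalg.solve(K, Ks.T)                     # (n_obs, n_cand)
    var = 1.0 - np.sum(Ks * v.T, axis=1)             # prior variance is 1
    return mu, np.sqrt(np.clip(var, 0.0, None))


def toy_objective(L):
    """HYPOTHETICAL stand-in for: segment with length L and overlap L/3,
    train the per-modality networks, return the reward. Made-up shape."""
    return 0.9 - 0.0004 * (L - 36.0) ** 2


def bayes_opt(objective, candidates, n_init=3, budget=10, kappa=2.0, seed=0):
    """GP-UCB search over a discrete set of segment lengths (maximization)."""
    rng = np.random.default_rng(seed)
    cand = np.asarray(candidates, dtype=float)
    tried = [int(i) for i in rng.choice(len(cand), size=n_init, replace=False)]
    ys = [float(objective(cand[i])) for i in tried]
    while len(tried) < min(budget, len(cand)):
        y = np.array(ys)
        z = (y - y.mean()) / (y.std() if y.std() > 0 else 1.0)  # standardize
        mu, sd = gp_posterior(cand[tried], z, cand)
        ucb = mu + kappa * sd
        ucb[tried] = -np.inf                  # never re-evaluate a tried length
        i = int(np.argmax(ucb))
        tried.append(i)
        ys.append(float(objective(cand[i])))
    best = int(np.argmax(ys))
    return float(cand[tried[best]]), ys[best], list(zip(cand[tried].tolist(), ys))


candidates = list(range(10, 62, 2))  # hypothetical grid of L values in seconds
best_L, best_reward, history = bayes_opt(toy_objective, candidates)
print(best_L, round(best_reward, 4))
```

A larger `kappa` favours exploring untried lengths. A smaller one concentrates evaluations near the current best, which matters when each evaluation means retraining networks.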

3.2. Multi-Label Classification Using Modified ResNet18

- Final Fully Connected Layer: The original network's final fully connected layer was replaced with one sized for our dataset, so that the network's output dimensionality matches the number of activity classes.

- Softmax Layer: The softmax activation, which constrains the outputs to a single probability distribution across classes, is replaced with a sigmoid function. Each output is then squashed to [0, 1] independently of the others, so every label can be predicted on its own. This is essential when several activity types may be present at the same time.

- Classification Output Layer: The standard classification output layer was replaced by a custom binary cross-entropy loss layer. This new layer is tailored to manage the outputs from the sigmoid activation, optimizing the network for scenarios where each instance may belong to multiple classes.
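The effect of replacing softmax with a sigmoid and training with binary cross-entropy is visible on a single vector of network outputs. The following is a minimal NumPy sketch using hypothetical logits for three activity classes, two of which are present at once. It is framework-agnostic and is not the authors' implementation.

```python
import numpy as np


def softmax(z):
    """Outputs compete: they are non-negative and sum to 1."""
    e = np.exp(z - z.max())
    return e / e.sum()


def sigmoid(z):
    """Each output is mapped to (0, 1) independently of the others."""
    return 1.0 / (1.0 + np.exp(-z))


def bce_loss(logits, targets, eps=1e-7):
    """Mean binary cross-entropy over labels; targets is a 0/1 multi-hot vector."""
    p = np.clip(sigmoid(logits), eps, 1 - eps)
    return float(-np.mean(targets * np.log(p) + (1 - targets) * np.log(1 - p)))


logits = np.array([3.0, 2.5, -4.0])   # hypothetical scores for 3 activities
targets = np.array([1.0, 1.0, 0.0])   # first two activities occur together

print(softmax(logits) > 0.5)          # [ True False False]: only one can win
print(sigmoid(logits) > 0.5)          # [ True  True False]: both detected
print(bce_loss(logits, targets))
```

The 0.5 decision threshold is an illustrative choice. Per-class thresholds tuned on validation data are a common alternative for imbalanced labels.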

4. Experimental Setup

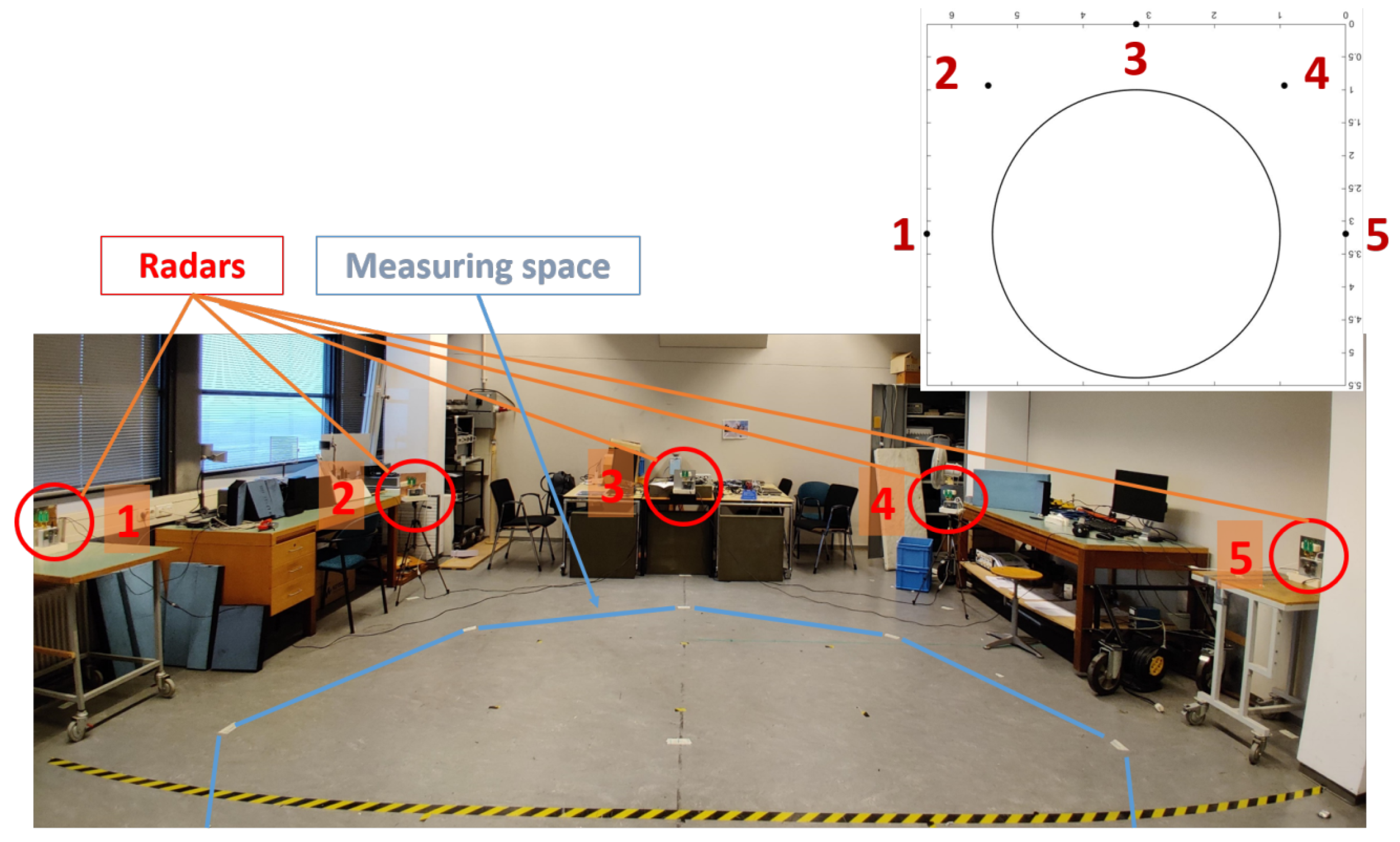

4.1. Dataset Used

4.2. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ASL | American Sign Language |
| Bi-LSTM | Bidirectional LSTM |
| BO | Bayesian optimization |
| CNN | Convolutional neural network |
| HAR | Human activity recognition |
| LSTM | Long short-term memory |
| LTA | Long time window |
| RNN | Recurrent neural network |
| RL | Reinforcement learning |
| STA | Short time window |
| SVM | Support vector machine |

References

- Fioranelli, F.; Le Kernec, J. Radar sensing for human healthcare: Challenges and results. In Proceedings of the 2021 IEEE Sensors, Virtual, 31 October–4 November 2021; pp. 1–4.

- Savvidou, F.; Tegos, S.A.; Diamantoulakis, P.D.; Karagiannidis, G.K. Passive Radar Sensing for Human Activity Recognition: A Survey. IEEE Open J. Eng. Med. Biol. 2024, 5, 700–706.

- Islam, M.M.; Nooruddin, S.; Karray, F.; Muhammad, G. Human activity recognition using tools of convolutional neural networks: A state of the art review, data sets, challenges, and future prospects. Comput. Biol. Med. 2022, 149, 106060.

- Miazek, P.; Żmudzińska, A.; Karczmarek, P.; Kiersztyn, A. Human Behavior Analysis Using Radar Data: A Survey. IEEE Access 2024, 12, 153188–153202.

- Papadopoulos, K.; Jelali, M. A Comparative Study on Recent Progress of Machine Learning-Based Human Activity Recognition with Radar. Appl. Sci. 2023, 13, 12728.

- Ullmann, I.; Guendel, R.G.; Kruse, N.C.; Fioranelli, F.; Yarovoy, A. A Survey on Radar-Based Continuous Human Activity Recognition. IEEE J. Microwaves 2023, 3, 938–950.

- Ding, W.; Guo, X.; Wang, G. Radar-Based Human Activity Recognition Using Hybrid Neural Network Model With Multidomain Fusion. IEEE Trans. Aerosp. Electron. Syst. 2021, 57, 2889–2898.

- Li, H.; Shrestha, A.; Heidari, H.; Le Kernec, J.; Fioranelli, F. Bi-LSTM Network for Multimodal Continuous Human Activity Recognition and Fall Detection. IEEE Sens. J. 2020, 20, 1191–1201.

- Ullmann, I.; Guendel, R.G.; Kruse, N.C.; Fioranelli, F.; Yarovoy, A. Radar-Based Continuous Human Activity Recognition with Multi-Label Classification. In Proceedings of the 2023 IEEE Sensors, Vienna, Austria, 29 October–1 November 2023; pp. 1–4.

- Kang, S.w.; Jang, M.h.; Lee, S. Identification of Human Motion Using Radar Sensor in an Indoor Environment. Sensors 2021, 21, 2305.

- Zhao, Y.; Zhou, H.; Lu, S.; Liu, Y.; An, X.; Liu, Q. Human Activity Recognition Based on Non-Contact Radar Data and Improved PCA Method. Appl. Sci. 2022, 12, 7124.

- Biswas, S.; Manavi Alam, A.; Gurbuz, A.C. HRSpecNET: A Deep Learning-Based High-Resolution Radar Micro-Doppler Signature Reconstruction for Improved HAR Classification. IEEE Trans. Radar Syst. 2024, 2, 484–497.

- Tan, T.H.; Tian, J.H.; Sharma, A.K.; Liu, S.H.; Huang, Y.F. Human Activity Recognition Based on Deep Learning and Micro-Doppler Radar Data. Sensors 2024, 24, 2530.

- Cao, L.; Liang, S.; Zhao, Z.; Wang, D.; Fu, C.; Du, K. Human Activity Recognition Method Based on FMCW Radar Sensor with Multi-Domain Feature Attention Fusion Network. Sensors 2023, 23, 5100.

- Li, Z.; Fioranelli, F.; Yang, S.; Zhang, L.; Romain, O.; He, Q.; Cui, G.; Le Kernec, J. Multi-domains based human activity classification in radar. In Proceedings of the IET International Radar Conference (IET IRC 2020), Virtual, 4–6 November 2020; Volume 2020, pp. 1744–1749.

- Huang, L.; Lei, D.; Zheng, B.; Chen, G.; An, H.; Li, M. Lightweight Multi-Domain Fusion Model for Through-Wall Human Activity Recognition Using IR-UWB Radar. Appl. Sci. 2024, 14, 9522.

- Gurbuz, S.Z.; Amin, M.G. Radar-Based Human-Motion Recognition With Deep Learning: Promising Applications for Indoor Monitoring. IEEE Signal Process. Mag. 2019, 36, 16–28.

- Yang, X.; Guendel, R.G.; Yarovoy, A.; Fioranelli, F. Radar-based Human Activities Classification with Complex-valued Neural Networks. In Proceedings of the 2022 IEEE Radar Conference (RadarConf22), New York, NY, USA, 21–25 March 2022; pp. 1–6.

- Li, H.; Shrestha, A.; Heidari, H.; Kernec, J.L.; Fioranelli, F. Activities Recognition and Fall Detection in Continuous Data Streams Using Radar Sensor. In Proceedings of the 2019 IEEE MTT-S International Microwave Biomedical Conference (IMBioC), Nanjing, China, 6–8 May 2019; Volume 1, pp. 1–4.

- Shrestha, A.; Li, H.; Le Kernec, J.; Fioranelli, F. Continuous Human Activity Classification From FMCW Radar With Bi-LSTM Networks. IEEE Sens. J. 2020, 20, 13607–13619.

- Vaishnav, P.; Santra, A. Continuous Human Activity Classification With Unscented Kalman Filter Tracking Using FMCW Radar. IEEE Sens. Lett. 2020, 4, 1–4.

- Guendel, R.G.; Fioranelli, F.; Yarovoy, A. Derivative Target Line (DTL) for Continuous Human Activity Detection and Recognition. In Proceedings of the 2020 IEEE Radar Conference (RadarConf20), Florence, Italy, 21–25 September 2020; pp. 1–6.

- Kurtoglu, E.; Gurbuz, A.C.; Malaia, E.; Griffin, D.; Crawford, C.; Gurbuz, S.Z. Sequential Classification of ASL Signs in the Context of Daily Living Using RF Sensing. In Proceedings of the 2021 IEEE Radar Conference (RadarConf21), Atlanta, GA, USA, 8–14 May 2021; pp. 1–6.

- Zhu, S.; Guendel, R.G.; Yarovoy, A.; Fioranelli, F. Continuous Human Activity Recognition with Distributed Radar Sensor Networks and CNN–RNN Architectures. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

- Guendel, R.G.; Fioranelli, F.; Yarovoy, A. Distributed radar fusion and recurrent networks for classification of continuous human activities. IET Radar Sonar Navig. 2022, 16, 1144–1161.

- Qu, L.; Li, X.; Yang, T.; Wang, S. Radar-Based Continuous Human Activity Recognition Using Multidomain Fusion Vision Transformer. IEEE Sens. J. 2025, 1.

- Feng, X.; Chen, P.; Weng, Y.; Zheng, H. CMDN: Continuous Human Activity Recognition Based on Multi-domain Radar Data Fusion. IEEE Sens. J. 2025, 1.

- Ullmann, I.; Guendel, R.G.; Christian Kruse, N.; Fioranelli, F.; Yarovoy, A. Classification Strategies for Radar-Based Continuous Human Activity Recognition With Multiple Inputs and Multilabel Output. IEEE Sens. J. 2024, 24, 40251–40261.

- Kurtoğlu, E.; Gurbuz, A.C.; Malaia, E.A.; Griffin, D.; Crawford, C.; Gurbuz, S.Z. ASL Trigger Recognition in Mixed Activity/Signing Sequences for RF Sensor-Based User Interfaces. IEEE Trans. Hum. Mach. Syst. 2022, 52, 699–712.

- Kruse, N.; Guendel, R.; Fioranelli, F.; Yarovoy, A. Segmentation of Micro-Doppler Signatures of Human Sequential Activities using Rényi Entropy. In Proceedings of the International Conference on Radar Systems (RADAR 2022), Edinburgh, UK, 24–27 October 2022; Volume 2022, pp. 435–440.

- Liu, S.; Wang, B. Optimized Modified ResNet18: A Residual Neural Network for High Resolution. In Proceedings of the 2024 IEEE 4th International Conference on Electronic Technology, Communication and Information (ICETCI), Changchun, China, 24–26 May 2024; pp. 1–5.

- Guendel, R.G.; Unterhorst, M.; Fioranelli, F.; Yarovoy, A. Dataset of continuous human activities performed in arbitrary directions collected with a distributed radar network of five nodes. 4TU.ResearchData 2021, 10, 16691500.

| Segment length L | Accuracy | F1-Score |
|---|---|---|
| 30 s | 94.66% | 89.90% |
| 38 s | 95.58% | 91.68% |
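As a worked check of the reward defined in Section 3.1, the two rows of the table above can be substituted into it. This assumes the reward is the linear weighted sum R = 0.25 · Accuracy + 0.75 · F1, with metrics expressed as fractions; the text does not state that the table was produced under this reward. The arithmetic also shows the overlap O = L/3 and the hop implied by each segment length. `reward` is a hypothetical helper.

```python
def reward(accuracy, f1, alpha=0.25, beta=0.75):
    """Assumed weighted reward R = alpha * accuracy + beta * F1."""
    return alpha * accuracy + beta * f1


# (L in seconds, accuracy, F1-score) from the table, as fractions.
rows = [(30, 0.9466, 0.8990), (38, 0.9558, 0.9168)]
for L, acc, f1 in rows:
    O = L / 3  # overlap is one-third of the segment length
    print(f"L={L} s  O={O:.2f} s  hop={L - O:.2f} s  R={reward(acc, f1):.3f}")
# → L=30 s  O=10.00 s  hop=20.00 s  R=0.911
# → L=38 s  O=12.67 s  hop=25.33 s  R=0.927
```

Under these assumptions, L = 38 s yields the higher reward (about 0.927 versus 0.911). Most of the gap comes from the F1 term, since F1 carries three times the weight of accuracy.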

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Belbekri, N.; Wang, W. Adaptive Feedback-Driven Segmentation for Continuous Multi-Label Human Activity Recognition. Appl. Sci. 2025, 15, 2905. https://doi.org/10.3390/app15062905

Belbekri N, Wang W. Adaptive Feedback-Driven Segmentation for Continuous Multi-Label Human Activity Recognition. Applied Sciences. 2025; 15(6):2905. https://doi.org/10.3390/app15062905
