Featured Application

The results of this study can be used for Chinese named entity recognition in the domain of electric submersible pump wells. This method has been applied to the construction of the knowledge graph for the working conditions of electric submersible pump wells at the Offshore Oil Production Plant of Shengli Oilfield, Sinopec.

Abstract

In specialized industrial fields such as electric submersible pump (ESP) wells, named entity recognition (NER) often suffers from low accuracy and incomplete entity recognition due to the scarcity of high-quality corpora and the prevalence of rare words and nested entities. To address these issues, this study introduces a character-level convolutional neural network (char-CNN) into the Flat-Lattice Transformer (FLAT) model and constructs nested entity matching rules for the ESP well domain, forming the char-CNN-FLAT-CRF model, which performs NER in the low-resource setting of ESP wells. In multiple experiments, the char-CNN-FLAT-CRF model outperforms mainstream models on this NER task and recognizes rare words and nested entities well. This research provides a methodological and conceptual reference for NER in other industrial fields that lack sufficient high-quality corpora.

1. Introduction

Knowledge graphs [1], an important data structure for storing domain knowledge and expert experience, offer advantages such as fast querying, computability, and well-structured organization. They have become a key driver of the “informatization” and “digitalization” of smart oil and gas fields [2,3,4]. In the ESP domain [5,6,7,8], knowledge originates primarily from textual literature, whose information is fragmented, highly repetitive, and incomplete in coverage, and high-quality corpora are scarce. These issues degrade the quality of the resulting knowledge graphs; for example, fault entities in ESP wells are insufficiently mined.

NER is fundamental to natural language processing and to the construction of domain-specific knowledge graphs. Mainstream NER models such as Bidirectional Encoder Representations from Transformers (BERT) [9,10,11] and Bidirectional Long Short-Term Memory (BiLSTM) [12,13,14] require large amounts of high-quality corpora. With the existing ESP corpora, these models can recognize common entities but fall short on rare entities such as “tubing scaling blockage”.

This paper addresses insufficient entity extraction in the ESP domain, caused by the scarcity of high-quality corpora, by developing an NER model for ESP well working conditions under low-resource conditions. It also aims to provide methods and ideas for NER in other industrial fields that suffer from limited corpora.

The main contributions of this paper are as follows:

(1) Introducing semantic analysis into dictionary-based word segmentation [15,16,17,18] and combining it with the domain-specific characteristics of ESP texts to improve segmentation accuracy.

(2) Constructing an NER algorithm based on FLAT [19] using character embedding [20] and word embedding [21], and establishing a nested entity [22] rule-matching mechanism that reflects the domain characteristics of ESP wells.

(3) Experimentally validating that the proposed algorithm learns character and word features more effectively and has advantages in entity recognition for small samples [23,24,25,26] and rare words [27,28].

2. Materials and Methods

2.1. Related Work on NER

The origins of NER date back to the 1970s, when early advances in computer technology led researchers to explore extracting structured information from text, particularly in specific domains such as news reports and the scientific literature. Early NER methods relied on manually defined rules and dictionaries, which performed well on small-scale texts in specific domains [29].

From the late 1990s to the early 2000s, statistical methods such as the Hidden Markov Model (HMM) [30] and the Support Vector Machine (SVM) [31] were increasingly used for sequence labeling in NER tasks. Among these, the Conditional Random Field (CRF) [32] became the mainstream approach because it captures contextual information, which led to significant performance improvements. However, traditional machine learning methods rely heavily on feature engineering, which requires manually designed feature templates. This is not only inefficient but also error-prone when prior knowledge is lacking.

Mainstream NER algorithms are mostly based on deep learning methods, which automatically extract features to avoid the complex feature engineering required in traditional machine learning. Common deep learning architectures include Recurrent Neural Network (RNN) [33], Convolutional Neural Network (CNN) [34], and Transformer architectures. Among these, the architecture combining Bidirectional Long Short-Term Memory networks with Conditional Random Fields (BiLSTM-CRF) [35] has become widely used in NER tasks. BiLSTM processes sequential data bidirectionally to capture bidirectional contextual information in text, while the CRF layer optimizes the sequence labeling results, significantly enhancing the performance of NER. Additionally, pre-trained language models such as BERT and Generative Pre-trained Transformer (GPT) [36], which undergo large-scale unsupervised pre-training, further enhance the models’ language understanding capabilities and have achieved remarkable results in NER tasks. These models can quickly adapt to specific tasks through fine-tuning without the need for training from scratch. The advantage of deep learning methods lies in their ability to automatically extract features, which not only improves efficiency but also reduces the need for manual involvement from linguistic experts.

However, deep learning methods typically require large amounts of high-quality data and annotations, which makes them difficult to transfer rapidly to specific domains with scarce high-quality corpora, or to other languages. In recent years, domain-specific NER has become the foundation of many scientific projects and has attracted wide attention from researchers. Chen Peng et al. [37] used generative adversarial networks for neural text generation to augment the corpus, achieving NER in Chinese electronic medical records [38,39]. He L et al. [40] adopted a multi-feature fusion deep learning method to recognize complex entities in unstructured shipping enterprise credit texts, laying a foundation for a credit knowledge graph of shipping enterprises. Such studies cover the medical, biological, aviation, and mechanical domains, among others, and focus on recognizing complex entities within these specific fields.

Building on these researchers’ experimental results, this paper addresses low-resource NER in the ESP well domain.

2.2. A Method for NER in the Field of Small Sample ESP Based on FLAT

Based on knowledge of ESP faults, this paper categorizes the entities in the literature into four types, “System”, “Component”, “Fault Symptom”, and “Fault”, which form four ontologies. Knowledge is unevenly distributed across these documents: some fault-related knowledge appears only rarely in the text and contains rare characters and words.

General-purpose Chinese NER models handle small-sample data and rare words poorly, tend to overfit, and recognize entities with low accuracy. To address these issues, this section constructs the char-CNN-FLAT-CRF model to perform NER on small-scale domain knowledge texts.

2.2.1. A Chinese Word Segmentation Method for ESP Domain Based on Dictionary Matching with Semantic Analysis

Dictionary-based word segmentation is an efficient way to obtain the word units needed for character and word embedding. It can handle the ambiguity and complexity of Chinese and suits the domain-specific knowledge of the ESP well field. To compensate for the poor adaptability of pure dictionary matching, this paper introduces a syntactic and semantic analysis module to assist with word segmentation.

To improve dictionary-based word segmentation in the ESP well domain, a domain-specific dictionary is built using the corpus data. The text data are preprocessed according to a general list of Chinese stop words to remove words and special symbols that lack practical significance in the corpus. The jieba word segmentation tool is used, in combination with the dictionary from the People’s Daily word segmentation corpus, to conduct initial segmentation and create the initial vocabulary set.

A hash table-based method is used to remove duplicate words from the dictionary. A hash table is constructed and the vocabulary set is traversed: a SHA-256 hash value is generated for each word, and hash values are compared to determine whether the word is a duplicate. Each element of the vocabulary set is thereby mapped to a fixed index position in the hash table, which removes duplicates.
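The deduplication step can be sketched in Python as follows. This is a minimal illustration, assuming the vocabulary is a list of strings; a Python `dict` stands in for the hash table and is keyed by the SHA-256 digest from the standard `hashlib` module. The function names `sha256_key` and `deduplicate` are hypothetical.

```python
import hashlib


def sha256_key(word: str) -> str:
    """Return the SHA-256 hex digest of a word (UTF-8 encoded)."""
    return hashlib.sha256(word.encode("utf-8")).hexdigest()


def deduplicate(vocabulary):
    """Remove duplicate words while preserving first-seen order.

    The dict acts as the hash table: each digest maps to the first word that
    produced it, so a repeated word is detected by a key lookup rather than
    by comparing it against every stored word.
    """
    table = {}
    for word in vocabulary:
        key = sha256_key(word)
        if key not in table:  # first occurrence: keep it
            table[key] = word
    return list(table.values())


vocab = ["潜油电泵", "电机", "潜油电泵", "机械密封", "电机"]
print(deduplicate(vocab))  # ['潜油电泵', '电机', '机械密封']
```

Because Python strings are already hashable, a plain `set` would give the same result; the explicit digest only mirrors the procedure described above.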

The deduplicated dictionary is then reviewed manually. Using domain knowledge of ESP wells, words with language errors are identified and eliminated to ensure the dictionary’s quality and accuracy, and the dictionary is enriched with specialized vocabulary and industry terminology. Finally, the frequency of each word in the corpus is calculated with Equation (1), and the words are sorted by frequency to improve the accuracy of the word segmentation algorithm.

$$f(w_i) = \frac{n(w_i)}{N} \tag{1}$$

Here, $f(w_i)$ is the frequency of the word $w_i$, $n(w_i)$ is the number of occurrences of $w_i$ in the corpus, and $N$ is the total number of words in the corpus.
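Equation (1) and the frequency-based ordering can be illustrated with a short sketch, assuming the corpus has already been tokenized into a list of words. The helper names and the toy corpus (电机 “motor”, 过载 “overload”, 欠载 “underload”) are hypothetical, and ties are broken by string order, which the text does not specify.

```python
from collections import Counter


def word_frequencies(tokens):
    """Compute f(w) = n(w) / N for every word w in a tokenized corpus."""
    counts = Counter(tokens)      # n(w): occurrences of each word
    total = sum(counts.values())  # N: total number of words
    return {w: n / total for w, n in counts.items()}


def sort_dictionary(freqs):
    """Return dictionary entries by descending frequency (ties by string order)."""
    return sorted(freqs, key=lambda w: (-freqs[w], w))


corpus = ["电机", "过载", "电机", "欠载", "电机", "过载"]
freqs = word_frequencies(corpus)
print(freqs["电机"])           # 0.5
print(sort_dictionary(freqs))  # ['电机', '过载', '欠载']
```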

The dictionary matching module completes the main work of word segmentation matching through the constructed ESP domain-specific dictionary. A syntactic and semantic analysis module is introduced based on the characteristics described in the ESP literature to assist in verifying the correctness of word segmentation. This paper analyzes the parts of speech of segmented words based on the particularity of domain vocabulary in ESP corpora. When multiple nouns, verbs, or polysemous words appear consecutively in segmentation, noun or verb phrases are formed based on their associative and mutually exclusive relationships. A check is carried out to see if the compound word exists in the domain dictionary, and the word is output if it does.
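The compound-forming check can be sketched as a greedy merge over part-of-speech-tagged tokens. This is an illustration under stated assumptions rather than the paper’s implementation: the tag set {`n`, `v`}, the maximum compound length of three words, the longest-match-first strategy, and tagging every merged compound as a noun are all hypothetical simplifications. The example uses the Chinese words for “mechanical”, “seal”, “leakage”, and “increase”.

```python
MERGEABLE = {"n", "v"}  # hypothetical tag set: nouns and verbs may combine


def merge_compounds(tagged, dictionary, max_len=3):
    """Greedily merge runs of adjacent nouns/verbs into dictionary compounds.

    tagged: list of (word, pos) pairs from the dictionary-matching step.
    dictionary: set of domain terms; merged compounds are tagged as nouns
    here, which is a simplification.
    """
    out, i = [], 0
    while i < len(tagged):
        merged = False
        # Try the longest candidate first so a three-word term beats a two-word one.
        for span in range(min(max_len, len(tagged) - i), 1, -1):
            window = tagged[i:i + span]
            if all(pos in MERGEABLE for _, pos in window):
                compound = "".join(w for w, _ in window)  # Chinese uses no spaces
                if compound in dictionary:
                    out.append((compound, "n"))
                    i += span
                    merged = True
                    break
        if not merged:
            out.append(tagged[i])
            i += 1
    return out


domain_dict = {"机械密封", "泄漏", "增大"}
tagged = [("机械", "n"), ("密封", "v"), ("泄漏", "v"), ("增大", "v")]
print(merge_compounds(tagged, domain_dict))
# [('机械密封', 'n'), ('泄漏', 'v'), ('增大', 'v')]
```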

Context-Free Grammar (CFG) is then used to verify the segmentation results and identify possible errors. In Chinese, verbs can function as complements; for example, in the sentence “He comes running”, “running” is a verb that complements the verb “comes”. For Chinese ESP corpora, the CFG therefore includes a verb-phrase production whose body consists of two consecutive verbs. The CFG rules are shown in Table 1, where S represents a sentence, NP a noun phrase, VP a verb phrase, N a noun, and V a verb.

Table 1.

Context-free grammar rules table.

For example, for the Chinese sentence “mechanical seal leakage increase”, the dictionary contains the words “mechanical”, “seal”, “leakage”, and “increase”, which can form compound terms such as “mechanical seal”, “seal leakage”, and “leakage increase”. Because the dictionary is sorted by word frequency and single words such as “seal” and “leakage” occur more frequently than the phrase “mechanical seal”, dictionary-based segmentation may incorrectly treat “seal” as a verb. This produces three or more consecutive verbs, which violates Chinese grammar rules. With the part-of-speech analysis module, “mechanical” is matched with “seal” to form the noun phrase “mechanical seal”, avoiding the incorrect segmentation. The final output is “mechanical seal”, “leakage”, and “increase”, which conforms to the CFG rules constructed in this paper. This approach improves segmentation accuracy and better aligns with the textual characteristics of the ESP domain.
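The CFG verification can be sketched as a small memoized recognizer over part-of-speech tags. Because the rules of Table 1 are not reproduced in this text, the grammar below is a hypothetical stand-in built only from the description above: a sentence is a noun phrase followed by a verb phrase, and a verb phrase consists of one verb or of two consecutive verbs (a verb plus its verbal complement).

```python
from functools import lru_cache

# Hypothetical grammar; the actual rules are those of Table 1.
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["N"], ["N", "NP"]],
    "VP": [["V"], ["V", "V"]],  # verb plus verbal complement
}


def accepts(tags, start="S"):
    """Return True if the POS-tag sequence can be derived from `start`."""
    tags = tuple(tags)

    @lru_cache(maxsize=None)
    def derive(symbol, i, j):
        # Can `symbol` derive exactly tags[i:j]?
        if symbol not in GRAMMAR:  # terminal symbol, i.e., a POS tag
            return j - i == 1 and tags[i] == symbol
        return any(derive_seq(tuple(body), i, j) for body in GRAMMAR[symbol])

    @lru_cache(maxsize=None)
    def derive_seq(body, i, j):
        # Can the symbol sequence `body` derive exactly tags[i:j]?
        if not body:
            return i == j
        first, rest = body[0], body[1:]
        # Each symbol covers at least one tag, so leave room for `rest`.
        for k in range(i + 1, j - len(rest) + 1):
            if derive(first, i, k) and derive_seq(rest, k, j):
                return True
        return False

    return len(tags) > 0 and derive(start, 0, len(tags))


print(accepts(["N", "V", "V", "V"]))  # False: three consecutive verbs
print(accepts(["N", "V", "V"]))       # True
```

Under this toy grammar, the erroneous segmentation of the example (a noun followed by three verbs) is rejected, while the corrected segmentation “mechanical seal” / “leakage” / “increase” is accepted.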

2.2.2. Char-CNN Character Embedding Method and BERT Word Embedding

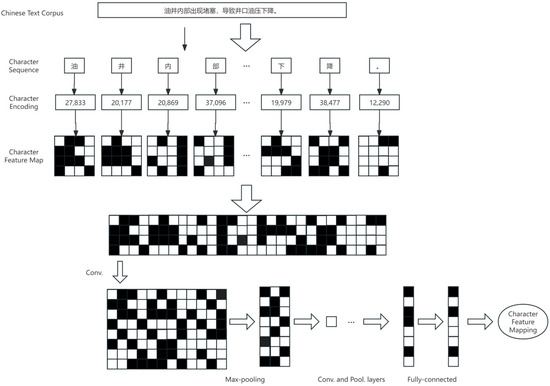

Char-CNN draws inspiration from the pixel-processing techniques in the field of computer vision to extract features from text at the character level. This approach significantly enhances the model’s adaptability in scenarios with limited labeled data for ESP wells. It reduces the dependence on large-scale annotated corpora, which traditional NER models often require. Figure 1 illustrates the architecture of char-CNN.

Figure 1.

The architecture of char-CNN.

For the ESP text corpus, the BERT pre-trained model maps each Chinese character in the text to its embedding vector. BERT captures the semantic and syntactic relationships between Chinese characters and thus extracts rich character features. These character vectors are then concatenated in order to form a character sequence. Because character sequences in ESP corpora vary in length, zero-padding is used so that all sequences have the same length.

The sentence from the Chinese text corpus shown in Figure 1 means: “A blockage has occurred inside the oil well, resulting in a decrease in the wellhead oil pressure.” The characters in the sequence mean the following: “油” means “oil”, “井” means “well”, “内” means “inside”, “部” means “part” or “interior”, “下” means “down” or “decrease”, “降” means “drop” or “decline”, and the period (。) marks the end of the sentence.

The padded character sequences are fed into the char-CNN model. Convolution operations are performed to learn local features of the character sequences and generate feature maps. These feature maps are then down-sampled by a max-pooling layer to reduce their dimensions, facilitating further feature extraction in subsequent layers. Finally, the output of the pooling layer is passed through fully connected layers to produce a vector representation of the text sequence.
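The padding and char-CNN steps can be sketched as a NumPy forward pass. This is a minimal sketch under several assumptions: random vectors stand in for the BERT character embeddings, the convolution uses a single filter width with valid padding and ReLU, max-pooling is applied over the whole sequence (global max-pooling), and all weights are random rather than trained. The model’s actual hyperparameters are not reproduced here, and the function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def pad_sequences(seqs, max_len):
    """Zero-pad (or truncate) each [length, dim] array to [max_len, dim]."""
    dim = seqs[0].shape[1]
    out = np.zeros((len(seqs), max_len, dim))
    for b, s in enumerate(seqs):
        n = min(len(s), max_len)
        out[b, :n] = s[:n]
    return out


def char_cnn(x, filters, bias, w_fc, b_fc):
    """x: [batch, max_len, dim]; filters: [n_filters, width, dim]."""
    n_filters, width, _ = filters.shape
    steps = x.shape[1] - width + 1
    # 1-D convolution with valid padding: slide each filter along the sequence.
    feature_maps = np.empty((x.shape[0], steps, n_filters))
    for t in range(steps):
        window = x[:, t:t + width, :]  # [batch, width, dim]
        feature_maps[:, t, :] = np.einsum("bwd,fwd->bf", window, filters) + bias
    feature_maps = np.maximum(feature_maps, 0.0)  # ReLU
    pooled = feature_maps.max(axis=1)             # max-pooling over time
    return pooled @ w_fc + b_fc                   # fully connected layer


dim, max_len, n_filters, width, out_dim = 8, 12, 4, 3, 6
# Stand-ins for the BERT character vectors of two sentences (7 and 12 characters).
sentences = [rng.normal(size=(7, dim)), rng.normal(size=(12, dim))]
x = pad_sequences(sentences, max_len)
vec = char_cnn(x, rng.normal(size=(n_filters, width, dim)), np.zeros(n_filters),
               rng.normal(size=(n_filters, out_dim)), np.zeros(out_dim))
print(x.shape, vec.shape)  # (2, 12, 8) (2, 6)
```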

The words and phrases obtained from tokenization are fed into the BERT model. Leveraging its multi-layer Transformer architecture, BERT is capable of capturing the semantic information of these words and phrases within the context and outputs word embedding vectors that contain contextual semantics. Subsequently, these vectors are concatenated in the order of the words in the text to form a complete sequence vector of the word groups. This sequence vector is then input into the char-CNN model to further generate a word vector corresponding to the input text. Such a word vector can more effectively capture the local features and semantic information of the text, providing a more powerful feature representation for subsequent natural language processing tasks.

2.2.3. FLAT Layer

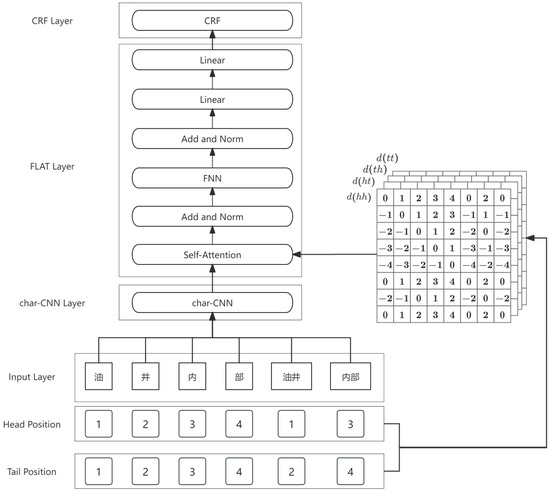

The structure of the FLAT Layer is shown in Figure 2. The model takes character embedding sequences and word embedding sequences as inputs. Drawing on the spatial span concept from the Lattice algorithm, the model categorizes the relationships between intervals in the sequences into three types: containment, intersection, and exclusion. These relationships are represented by Equations (2)–(5). For example, in the Chinese phrase “inner part of the oil well”, there are four Chinese characters, each of which can exist independently. They can also form words such as “oil well”, “inner part”, or “inner part of the oil well”. These different ways of segmentation reflect the idea of spatial span.

Figure 2.

Structure of the FLAT Layer.

The meanings of each Chinese character or vocabulary in the input layer are as follows: “油” means “oil”, “井” means “well”, “内” means “inside”, “部” means “part”, “油井” means “oil well”, “内部” means “internal”.

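$$d_{ij}^{(hh)} = head[i] - head[j] \tag{2}$$

$$d_{ij}^{(ht)} = head[i] - tail[j] \tag{3}$$

$$d_{ij}^{(th)} = tail[i] - head[j] \tag{4}$$

$$d_{ij}^{(tt)} = tail[i] - tail[j] \tag{5}$$

Here, $head[i]$ and $tail[i]$ represent the head and tail positions of the word or character span $x_i$, respectively, and $d_{ij}^{(hh)}$, $d_{ij}^{(ht)}$, $d_{ij}^{(th)}$, and $d_{ij}^{(tt)}$ denote the relative distances between the head and tail positions of spans $x_i$ and $x_j$. From these four distances, the intersection, exclusion, and containment relationships between spans $x_i$ and $x_j$ can be identified. The relative position encoding is computed by constructing a matrix from these relative positions, as given in Equation (6).

$$R_{ij} = \mathrm{ReLU}\left(W_r\left(p_{d_{ij}^{(hh)}} \oplus p_{d_{ij}^{(th)}} \oplus p_{d_{ij}^{(ht)}} \oplus p_{d_{ij}^{(tt)}}\right)\right) \tag{6}$$

Here, $R_{ij}$ represents the relative position encoding vector, which is obtained by concatenating ($\oplus$) the four position vectors $p_d$, each the embedding of a relative distance $d$, and then applying the learnable parameter $W_r$ and the ReLU activation function.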
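The four relative distances of Equations (2)–(5) and the resulting span relations can be illustrated on the lattice of “油井内部” (“inner part of the oil well”). The `Span` type, the decision rule in `relation`, and the extra span “井内” (“inside the well”, used only to show an intersection) are illustrative assumptions.

```python
from typing import NamedTuple


class Span(NamedTuple):
    text: str
    head: int  # index of the first character
    tail: int  # index of the last character


def relative_distances(a: Span, b: Span):
    """The four distances d_hh, d_ht, d_th, d_tt of Equations (2)-(5)."""
    return (a.head - b.head, a.head - b.tail, a.tail - b.head, a.tail - b.tail)


def relation(a: Span, b: Span) -> str:
    """Classify two spans as containment, intersection, or exclusion."""
    d_hh, d_ht, d_th, d_tt = relative_distances(a, b)
    if d_th < 0 or d_ht > 0:  # a ends before b starts, or starts after b ends
        return "exclusion"
    if (d_hh <= 0 and d_tt >= 0) or (d_hh >= 0 and d_tt <= 0):
        return "containment"  # one span lies inside the other
    return "intersection"     # partial overlap


oil_well = Span("油井", 0, 1)
inner = Span("内部", 2, 3)
phrase = Span("油井内部", 0, 3)
in_well = Span("井内", 1, 2)  # hypothetical overlapping span
print(relative_distances(oil_well, phrase))  # (0, -3, 1, -2)
print(relation(oil_well, phrase))  # containment
print(relation(oil_well, inner))   # exclusion
print(relation(in_well, oil_well))  # intersection
```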

The self-attention mechanism in the FLAT model captures the relationships between character and word vectors in the sequence, which allows the model to learn long-range dependencies in the text and further improves its understanding of sentence semantics. The self-attention score is calculated as shown in Equation (7).

$$A^{*}_{i,j} = W_q^{\top} E_{x_i}^{\top} E_{x_j} W_{k,E} + W_q^{\top} E_{x_i}^{\top} R_{ij} W_{k,R} + u^{\top} E_{x_j} W_{k,E} + v^{\top} R_{ij} W_{k,R} \tag{7}$$

Here, $E_{x_i}$ denotes the embedding vector of token $x_i$, $R_{ij}$ represents the relative position encoding between spans $x_i$ and $x_j$, and $W_q$, $W_{k,E}$, $W_{k,R}$, $u$, and $v$ are learnable parameters.
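Equations (6) and (7) can be sketched in NumPy for the six spans of the “油井内部” lattice. This sketch assumes that $p_d$ is the sinusoidal position embedding of the original Transformer, which is our understanding of the original FLAT design; all weights are random, the dimensions are arbitrary, the function names are hypothetical, and the softmax that turns the scores into attention weights is omitted.

```python
import numpy as np


def sinusoid(d, dim):
    """Sinusoidal embedding p_d of a (possibly negative) relative distance d."""
    k = np.arange(dim // 2)
    angles = d / (10000 ** (2 * k / dim))
    p = np.empty(dim)
    p[0::2] = np.sin(angles)
    p[1::2] = np.cos(angles)
    return p


def relative_encoding(heads, tails, w_r, dim):
    """R_ij = ReLU(W_r [p_hh ; p_th ; p_ht ; p_tt]) for every span pair (Eq. 6)."""
    n = len(heads)
    R = np.empty((n, n, w_r.shape[0]))
    for i in range(n):
        for j in range(n):
            dists = (heads[i] - heads[j], tails[i] - heads[j],
                     heads[i] - tails[j], tails[i] - tails[j])
            p = np.concatenate([sinusoid(d, dim) for d in dists])  # [4 * dim]
            R[i, j] = np.maximum(w_r @ p, 0.0)
    return R


def attention_scores(E, R, W_q, W_kE, W_kR, u, v):
    """Unnormalized scores A*_ij of Eq. (7) for all span pairs."""
    q = E @ W_q      # query vector of each span: [n, d_k]
    k_E = E @ W_kE   # content keys E_{x_j} W_{k,E}: [n, d_k]
    k_R = R @ W_kR   # position keys R_ij W_{k,R}: [n, n, d_k]
    return (q @ k_E.T                          # content-content term
            + np.einsum("id,ijd->ij", q, k_R)  # content-position term
            + (k_E @ u)[None, :]               # global content bias
            + k_R @ v)                         # global position bias


rng = np.random.default_rng(0)
heads, tails = [0, 1, 2, 3, 0, 2], [0, 1, 2, 3, 1, 3]  # 油 井 内 部 油井 内部
dim, d_r, d_model, d_k = 8, 16, 16, 8
R = relative_encoding(heads, tails, rng.normal(size=(d_r, 4 * dim)), dim)
E = rng.normal(size=(len(heads), d_model))  # stand-in token embeddings
A = attention_scores(E, R, rng.normal(size=(d_model, d_k)),
                     rng.normal(size=(d_model, d_k)), rng.normal(size=(d_r, d_k)),
                     rng.normal(size=d_k), rng.normal(size=d_k))
print(R.shape, A.shape)  # (6, 6, 16) (6, 6)
```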

Through the combined action of the feed-forward neural network (FNN) layer and the fully connected layer, the model transforms the character feature representations from the original embedding space into a form suitable as CRF input. This retains the characters’ semantic information and helps the CRF layer perform global optimization, thereby improving recognition accuracy for ESP entities.

2.2.4. CRF Layer

The Conditional Random Field layer is widely used in sequence labeling tasks because it fully exploits contextual information and the dependencies between labels. By learning a transition matrix between labels, it maps vector representations to the label space and generates the most likely label sequence for the input. Because ESP corpora contain polysemous words and nested entities, the CRF layer is used to model global information and constraints between labels, which helps resolve ambiguities in the text and improves NER accuracy.

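The CRF layer predicts the label sequence of the input text by learning and modeling the conditional probability distribution. For a given sentence $x = (x_1, x_2, \ldots, x_n)$ with label sequence $y = (y_1, y_2, \ldots, y_n)$, the output probability of the predicted sequence is calculated through Equations (8)–(10).

$$P(y \mid x) = \frac{1}{Z(x)} \exp\left(\sum_{i=1}^{n} \sum_{k} \lambda_k f_k(y_{i-1}, y_i, x, i)\right) \tag{8}$$

$$Z(x) = \sum_{y'} \exp\left(\sum_{i=1}^{n} \sum_{k} \lambda_k f_k(y'_{i-1}, y'_i, x, i)\right) \tag{9}$$

$$y^{*} = \arg\max_{y} P(y \mid x) \tag{10}$$

Here, $Z(x)$ is the normalization factor, and $\lambda_k$ represents the weight of the feature function $f_k$, which evaluates the combination of the input $x$ and the label $y$. The feature functions include both local features and global features.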

The CRF uses contextual information and the dependencies between labels to convert character-level annotations into BIO tag sequences for named entities, and the Viterbi algorithm finds the optimal tag sequence. Maximum likelihood estimation provides the training objective (the loss is the negative log-likelihood of the gold tag sequence), giving the model a clear optimization target for fitting the training data.
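The CRF computations can be sketched in NumPy for a single sentence. This sketch uses the common neural parameterization in which the weighted feature sums of Equation (8) are collapsed into per-position emission scores and a label transition matrix; start and end transitions are omitted for brevity. `log_partition` computes $\log Z(x)$ with the forward algorithm, `nll` is the negative log-likelihood used as the maximum likelihood loss, and `viterbi` returns the highest-scoring tag path. The three-tag set and all scores are hypothetical.

```python
import numpy as np


def logsumexp(a, axis):
    """Numerically stable log(sum(exp(a))) along one axis."""
    m = a.max(axis=axis, keepdims=True)
    return (m + np.log(np.exp(a - m).sum(axis=axis, keepdims=True))).squeeze(axis)


def sequence_score(emissions, transitions, tags):
    """Unnormalized log-score of one tag path: emission plus transition terms."""
    score = emissions[0, tags[0]]
    for t in range(1, len(tags)):
        score += transitions[tags[t - 1], tags[t]] + emissions[t, tags[t]]
    return score


def log_partition(emissions, transitions):
    """log Z(x) via the forward algorithm, summing over all tag paths."""
    alpha = emissions[0]  # [n_tags]
    for t in range(1, len(emissions)):
        # alpha_new[k] = logsumexp_j(alpha[j] + trans[j, k]) + emit[t, k]
        alpha = logsumexp(alpha[:, None] + transitions, axis=0) + emissions[t]
    return logsumexp(alpha, axis=0)


def nll(emissions, transitions, tags):
    """Negative log-likelihood -log P(y | x), the training loss."""
    return log_partition(emissions, transitions) - sequence_score(emissions, transitions, tags)


def viterbi(emissions, transitions):
    """Highest-scoring tag path, i.e., argmax over y of P(y | x)."""
    score = emissions[0]
    backptr = []
    for t in range(1, len(emissions)):
        cand = score[:, None] + transitions  # [previous tag, current tag]
        backptr.append(cand.argmax(axis=0))
        score = cand.max(axis=0) + emissions[t]
    best = [int(score.argmax())]
    for bp in reversed(backptr):
        best.append(int(bp[best[-1]]))
    return best[::-1]


TAGS = ["O", "B-Fault", "I-Fault"]
transitions = np.zeros((3, 3))
transitions[0, 2] = -1e4  # forbid O -> I-Fault, an invalid BIO transition
emissions = np.array([[2.0, 0.5, 0.1],
                      [0.3, 0.2, 1.0],
                      [0.1, 0.2, 1.5]])
path = viterbi(emissions, transitions)
print([TAGS[i] for i in path])  # ['O', 'B-Fault', 'I-Fault']
```

The large negative transition score from `O` to `I-Fault` forbids that invalid BIO transition, so Viterbi inserts `B-Fault` at the second position even though `I-Fault` has the highest emission score there.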

2.2.5. Nested Entity Matching Rule Mechanism

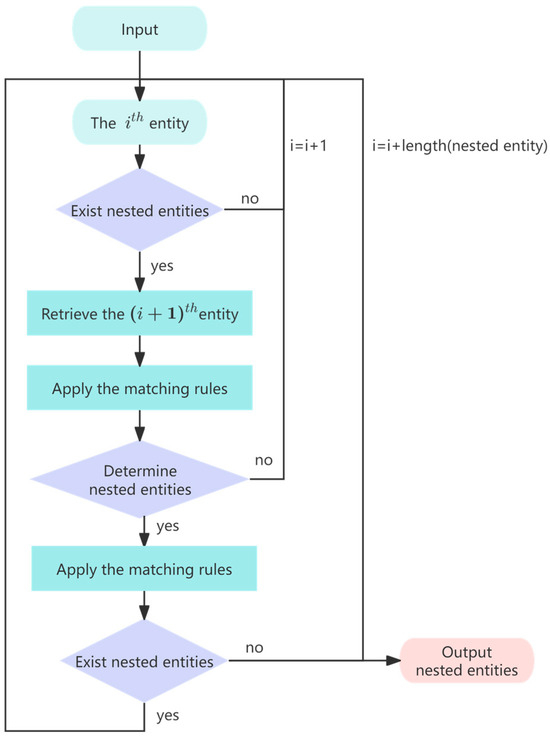

Component and fault entities in the ESP domain frequently contain nested entities. To handle them, this paper introduces a rule-based mechanism, whose flowchart is shown in Figure 3: specific entities are concatenated according to predefined rules to form nested entities, thereby improving NER accuracy in the ESP domain. The matching rules are shown in Table 2.

Figure 3.

Flowchart of nested entity matching.

Table 2.

Nested entity matching rules.

When encountering complex Chinese entities such as “motor centering bearing damage”, the model may identify them as separate entities: “motor” (B-Component), “centering bearing” (B-Component), and “damage” (B-Fault). This module first determines whether the entities “motor” and “centering bearing” can form a valid nested entity by applying matching rules. If they can, it then checks whether this nested entity can be combined with the next entity “damage” to form another nested entity. If they can be combined, the process iterates to form nested entities. If not, the loop is exited, and the current entity is output. Thus, the final nested entity obtained is “motor centering bearing damage” (B-Fault).
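The iterative merging described above can be sketched as follows. The rules in `RULES` are hypothetical stand-ins, since Table 2 is not reproduced here, and the sketch assumes the decoded entities are adjacent in the sentence; a fuller version would also check character offsets. English text with a space separator is used for readability; for Chinese text, `sep=""` would be appropriate.

```python
# Hypothetical rules: (left type, right type) -> type of the combined entity.
RULES = {
    ("Component", "Component"): "Component",
    ("Component", "Fault"): "Fault",
}


def merge_nested(entities, rules=RULES, sep=" "):
    """Iteratively concatenate adjacent entities that a rule allows to combine.

    entities: list of (text, type) pairs in sentence order, as decoded by the CRF.
    Returns the list after nested entities have been formed.
    """
    result = []
    i = 0
    while i < len(entities):
        text, etype = entities[i]
        j = i + 1
        # Keep extending while the current entity and the next one match a rule.
        while j < len(entities) and (etype, entities[j][1]) in rules:
            etype = rules[(etype, entities[j][1])]
            text = text + sep + entities[j][0]
            j += 1
        result.append((text, etype))  # no rule applies: output the current entity
        i = j
    return result


decoded = [("motor", "Component"), ("centering bearing", "Component"), ("damage", "Fault")]
print(merge_nested(decoded))  # [('motor centering bearing damage', 'Fault')]
```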

3. Named Entity Recognition Experiment

3.1. Experimental Setup

3.1.1. Experimental Corpus

This paper used 62 domestic and international documents, 10 ESP well reports from an oil production plant, and 14 historical documents of ESP wells as the NER corpus. The content covered multiple categories, including the structure of ESP wells, working principles, and fault diagnosis, comprising a total of 8983 sentences.

The poor quality of the corpus texts is mainly due to the presence of numerous irregular spaces, invalid characters (such as “0” characters), and improper use of both Chinese and English punctuation marks. These issues make it difficult to segment sentences and tokenize the text. To ensure consistency in text format and structure, this paper conducted initial data cleaning through scripting and further ensured the quality of cleaning by manual review and modification. Additionally, this paper used annotation software (http://www.jinglingbiaozhu.com/, accessed on 6 October 2023) to label the cleaned text corpus using the BIO scheme, ultimately forming a dataset suitable for model use.
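The cleaning and BIO labeling steps can be sketched as follows. The paper’s cleaning script and the export format of the annotation software are not described, so the cleaning rules (converting ASCII punctuation that follows a Chinese character to its full-width form and removing whitespace between CJK characters), the `(start, end, type)` span format, and the tag name `Fault_Symptom` are all assumptions.

```python
import re

# Assumed cleaning rules; the paper's script is not described in detail.
CJK = r"[\u3000-\u303f\u4e00-\u9fff\uff00-\uffef]"  # CJK punctuation, ideographs, full-width forms
ASCII_TO_FULL = {",": "，", ";": "；", ":": "：", "?": "？", "!": "！"}


def clean_text(text):
    """Normalize punctuation and remove irregular spaces in Chinese text."""
    # Replace ASCII punctuation that directly follows a Chinese character.
    text = re.sub(r"(?<=[\u4e00-\u9fff])[,;:?!]", lambda m: ASCII_TO_FULL[m.group()], text)
    # Remove whitespace runs between two CJK characters.
    text = re.sub(rf"(?<={CJK})\s+(?={CJK})", "", text)
    return text.strip()


def to_bio(text, spans):
    """Convert (start, end, type) character spans, end exclusive, into BIO tags."""
    tags = ["O"] * len(text)
    for start, end, etype in spans:
        tags[start] = f"B-{etype}"
        for k in range(start + 1, end):
            tags[k] = f"I-{etype}"
    return tags


raw = "油管 结垢堵塞,  电流下降。"  # "tubing scaling blockage, current drop."
text = clean_text(raw)
tags = to_bio(text, [(0, 6, "Fault"), (7, 11, "Fault_Symptom")])
print(text)                       # 油管结垢堵塞，电流下降。
print(list(zip(text, tags))[:2])  # [('油', 'B-Fault'), ('管', 'I-Fault')]
```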

The dataset was randomly shuffled and divided into three parts: 70% for training (6288 sentences), 15% for validation (1347 sentences), and 15% for testing (1347 sentences).

3.1.2. Experimental Evaluation Criteria

To evaluate and compare the NER performance of different models, this paper adopts precision, recall, and F1 score as evaluation metrics. Using a small corpus as the training set, the models’ ability to extract entities from ESP well texts with limited samples is assessed. Precision, recall, and F1 score are calculated with Equations (11)–(13).

$$P = \frac{T_p}{T_p + F_p} \tag{11}$$

$$R = \frac{T_p}{N} \tag{12}$$

$$F1 = \frac{2 \times P \times R}{P + R} \tag{13}$$

Here, $T_p$ represents the number of correctly identified entities, $F_p$ represents the number of entities identified by the model that are not actually entities, and $N$ represents the total number of entities in the text.
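Entity-level scoring under Equations (11)–(13) can be sketched as follows, assuming that an entity counts as correct only when both its boundaries and its type match the gold annotation (exact match), which the text does not state explicitly. Entities are extracted from BIO tags as `(start, end, type)` spans; treating a stray `I-` tag as the start of a new entity is one common convention, also assumed here.

```python
def extract_entities(tags):
    """Collect (start, end, type) spans from a BIO tag list (end exclusive)."""
    entities, start, etype = [], None, None
    for i, tag in enumerate(tags + ["O"]):  # sentinel flushes the last entity
        if tag.startswith("B-") or tag == "O" or (tag.startswith("I-") and tag[2:] != etype):
            if start is not None:
                entities.append((start, i, etype))
            start, etype = (i, tag[2:]) if tag != "O" else (None, None)
        # an I- tag of the current type simply extends the current entity
    return set(entities)


def prf1(gold_tags, pred_tags):
    gold, pred = extract_entities(gold_tags), extract_entities(pred_tags)
    tp = len(gold & pred)  # correctly identified entities
    fp = len(pred - gold)  # predicted entities that are not gold entities
    n = len(gold)          # total number of entities in the text
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / n if n else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


gold = ["B-Component", "I-Component", "O", "B-Fault", "I-Fault"]
pred = ["B-Component", "I-Component", "O", "B-Fault", "O"]  # fault boundary is wrong
print(prf1(gold, pred))  # (0.5, 0.5, 0.5)
```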

3.1.3. Model Parameters

The parameter settings for the char-CNN-FLAT-CRF model are shown in Table 3.

Table 3.

Parameters of the char-CNN-FLAT-CRF model.

3.2. Results and Analysis

3.2.1. Ablation Study

To evaluate the contribution of the CRF component in the char-CNN-FLAT-CRF model, an ablation study was conducted in which the CRF layer was replaced with a fully connected layer, yielding the char-CNN-FLAT model. This allows a direct assessment of the CRF layer’s label prediction and decoding capabilities within the model. The results of the ablation study are presented in Table 4.

Table 4.

Results of the ablation study.

As shown in Table 4, the CRF layer’s ability to learn transition probabilities and predict labels has a significant impact on the overall accuracy of the char-CNN-FLAT-CRF model in the NER task. Optimizing the CRF layer is therefore an effective way to improve the model’s recognition capability.

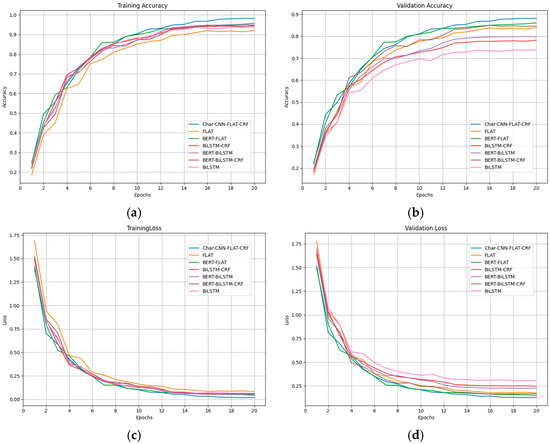

3.2.2. Comparison Experiment

To evaluate the performance of the char-CNN-FLAT-CRF model in NER under low-resource conditions, this paper conducted comparative experiments. Figure 4 shows the accuracy and loss curves of the char-CNN-FLAT-CRF model and several mainstream NER models, including BERT-BiLSTM-CRF, BERT-FLAT, and BiLSTM-CRF, on the training and validation sets of a small-sample ESP corpus. On the test set, precision, recall, and F1 score were used as evaluation metrics; the results are presented in Table 5.

Figure 4.

The accuracy and loss curves of multiple models on the training and validation sets: (a) Training accuracy curve; (b) Validation accuracy curve; (c) Training loss curve; (d) Validation loss curve.

Table 5.

Comparative experimental results.

As shown in Figure 4, all the models converge during training. However, their accuracy on the validation set differs significantly. This reflects differences in the models’ generalization ability for NER, specifically their adaptability to nested entities and rare words in the ESP context. Among these models, the char-CNN-FLAT-CRF and BERT-FLAT models perform best in this experiment.

As shown in Table 5, in the NER task for ESP wells with limited data, the FLAT-based NER models outperform the BiLSTM-based models. This suggests that BERT and BiLSTM models require larger-scale, higher-quality corpora to identify named entities effectively and are therefore more suitable for tasks with abundant training data.

In contrast, FLAT, by learning the relative relationships between characters and potential words, is able to better extract character and word features under low-resource conditions, thus demonstrating stronger recognition capabilities. Moreover, the char-CNN-based convolutional character and word embeddings applied in this study further enhance the performance of the FLAT model in low-resource tasks, giving it an edge over models like BERT that rely heavily on large training datasets.

Additionally, the rule-matching mechanism introduced after CRF decoding in this study effectively improves the NER performance in the domain of ESP working conditions. It can better eliminate the interference from complex phrases and word groups in the corpus, thereby significantly enhancing the accuracy and robustness of named entity recognition.

3.2.3. Rare Word Recognition Capability Analysis

Due to the uneven distribution of knowledge in the corpus of ESP working conditions, there are numerous rare words and nested entities. Analysis of the text corpus reveals that component entities often contain nested entities, such as “stator winding” and “rotor core”, while fault-related texts also include many nested entities, like “sand prevention malfunction”, as well as some rare fault entities, such as “paint film damage”. In contrast, entities in fault symptoms and system-related texts are usually simpler.

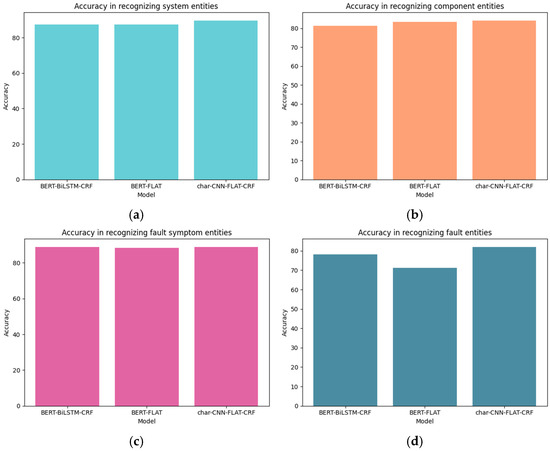

This paper conducts comparative experiments with the general BERT-BiLSTM-CRF model, the BERT-FLAT model, and the char-CNN-FLAT-CRF model. The models’ performance on component entities, which are characterized by nesting, is used to evaluate their nested entity recognition capability, and their performance on fault entities, which often contain rare words, is used to assess their rare word recognition capability.

As Figure 5 shows, the three models achieve very similar recognition accuracy for system entities and fault symptom entities. The char-CNN-FLAT-CRF model slightly outperforms the other two models on component entities, indicating that the proposed method offers some improvement in nested entity recognition. On fault entities, the char-CNN-FLAT-CRF model shows a clear advantage, reflecting its stronger rare word recognition.

Figure 5.

Comparison experiment results of rare words and nested entities: (a) Accuracy in recognizing system entities; (b) Accuracy in recognizing component entities; (c) Accuracy in recognizing fault symptom entities; (d) Accuracy in recognizing fault entities.

4. Discussion

4.1. Discussion on Comparison Experiment

The char-CNN-FLAT-CRF model performed best in the low-resource NER task for ESP, mainly owing to its character-level convolutional embeddings, the FLAT structure’s ability to handle nested entities, and the global constraints of the CRF layer. The BERT-FLAT model, which combines BERT’s pre-trained semantic information with the FLAT structure, achieved good recall in the low-resource task. The BERT-BiLSTM-CRF model benefited from BERT’s pre-training and, despite some limitations under low-resource conditions, still outperformed the model based solely on BiLSTM. In contrast, the BiLSTM-CRF model performed poorly in the low-resource task because of its strong dependence on the corpus and its lack of contextual semantic information.

Through ablation experiments, this paper finds that the CRF layer plays a significant role in enhancing model performance in the task of entity label prediction. The CRF layer effectively constrains the rationality of label sequences, ensuring that the generated label sequences comply with semantic and grammatical rules, thereby significantly improving the overall performance and stability of the model.

4.2. Discussion on the Recognition Capability of Rare Words and Nested Entities

Comparing the models’ accuracy on the training and validation sets shows that their abilities to handle nested entities and rare words in ESP-domain NER indeed differ, and that the model proposed in this paper has a clear advantage in this regard.

In the analysis of rare words and nested entities, the char-CNN-FLAT-CRF model performs well on component entities, which contain rich nested structures, and on fault entities, which include many rare words. This experiment demonstrates that the char-CNN-FLAT-CRF model outperforms general NER models in these respects.

For rare words and nested entities, the model demonstrates strong recognition capabilities through character-level convolutional embeddings and the FLAT structure. However, there is still room for improvement. In the task of recognizing fault entities, the complexity of rare words and nested structures continues to impact the model’s precision. This result indicates that the recognition of rare words remains a challenge under low-resource conditions.

Future research could focus on further optimizing the model architecture or increasing the amount of training data to enhance the recognition performance of rare words and complex nested structures.

5. Conclusions

This paper proposes a low-resource NER model tailored for the ESP domain. By integrating char-CNN into the FLAT framework for convolutional character and word embeddings and combining it with a CRF layer and rule-matching mechanism, the model significantly enhances its ability to recognize rare words and nested entities, achieving remarkable results. The study demonstrates that the char-CNN-FLAT-CRF model excels in low-resource NER tasks, particularly in handling rare words and nested entities. This indicates that the model can offer an effective solution and valuable reference for other NER tasks facing insufficient corpus issues.

Author Contributions

Conceptualization, F.G. and C.D.; methodology, S.T.; software, Z.W. and S.T.; validation, S.T. and Z.W.; formal analysis, S.Q.; investigation, S.Q.; resources, C.D.; data curation, Z.W.; writing—original draft preparation, S.T.; writing—review and editing, S.T.; visualization, S.T.; supervision, F.G. and C.D.; project administration, S.T. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the project 23-2-1-162-zyyd-jch funded by the Qingdao Natural Science Foundation.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Restrictions apply to the availability of these data. Data were obtained from the Sinopec Shengli Oilfield Marine Oil Extraction Plant in Shandong Province, China, and are available from the authors with the permission of the Sinopec Shengli Oilfield Marine Oil Extraction Plant.

Conflicts of Interest

The authors have no conflicts to disclose.

Abbreviations

| Abbreviation | Definition |
|---|---|
| ESP | Electric submersible pump |
| NER | Named entity recognition |
| char-CNN | Character-level convolutional neural network |
| FLAT | Flat-Lattice Transformer |
| BERT | Bidirectional Encoder Representations from Transformers |
| BiLSTM | Bidirectional Long Short-Term Memory |
| HMM | Hidden Markov Model |
| SVM | Support Vector Machine |
| CRF | Conditional Random Field |
| RNN | Recurrent Neural Network |
| CNN | Convolutional Neural Network |
| BiLSTM-CRF | Bidirectional Long Short-Term Memory networks with Conditional Random Fields |
| GPT | Generative Pre-trained Transformer |
| CFG | Context-Free Grammar |

References

1. Wang, Y.; Yao, R.; Zhao, K.; Wu, P.; Chen, W. Robotics Classification of Domain Knowledge Based on a Knowledge Graph for Home Service Robot Applications. Appl. Sci. 2024, 14, 11553.

2. Kalu-Ulu, T.C.; Okon, A.N.; Appah, D. Effective Marginal Field Production Using Electric Submersible Pump: Niger Delta Case Study. Asian J. Adv. Res. Rep. 2024, 18, 53–72.

3. Yongjie, W.; Liang, M. Heavy oil development: Shengli Oilfield. Geogr. Res. Bull. 2022, 1, 78–80.

4. Chen, Y.; Yan, Y.; Zhao, C.; Qi, Z.; Chen, Z. Energy—Oil and Gas Research; Findings from Sinopec Group Provide New Insights into Oil and Gas Research (Gini Coefficient: An Effective Way To Evaluate Inflow Profile Equilibrium of Horizontal Wells In Shengli Oil Field). Energy Wkly. News 2020, 195, 35–38.

5. Yang, J.; Wang, S.; Zheng, C.; Feng, G.; Du, G.; Tan, C.; Ma, D. Fault Diagnosis Method and Application of ESP Well Based on SPC Rules and Real-Time Data Fusion. Math. Probl. Eng. 2022, 2022, 8497299.

6. Solovyev, G.I.; Lapik, I.O.; Govorkov, A.D. Hydrodynamics of transient processes in a well with an electric submersible pump. Bulletin of the Tomsk Polytechnic University. Geo Assets Eng. 2023, 334, 50–60.

7. Wei, Q.; Tan, C.; Gao, X.; Guan, X.; Shi, X. Research on early warning model of electric submersible pump wells failure based on the fusion of physical constraints and data-driven approach. Geoenergy Sci. Eng. 2024, 233.

8. Liu, X.F.; Liu, C.H.; Zheng, Y.; Yu, J.F.; Zhou, W.; Wang, M.X.; Liu, P.; Zhou, Y.F. Numerical simulation of the electromagnetic torques of PMSM with two-way magneto-mechanical coupling and nonuniform spline clearance in electric submersible pumping wells. Pet. Sci. 2024, 21, 4417–4426.

9. Bogdanović, M.; Gligorijević, M.F.; Kocić, J.; Stoimenov, L. Improving Text Recognition Accuracy for Serbian Legal Documents Using BERT. Appl. Sci. 2025, 15, 615.

10. Jazuli, A.; Widowati; Kusumaningrum, R. Optimizing Aspect-Based Sentiment Analysis Using BERT for Comprehensive Analysis of Indonesian Student Feedback. Appl. Sci. 2024, 15, 172.

11. Yadav, A.; Khan, A.F.; Singh, V. A Multi-Architecture Approach for Offensive Language Identification Combining Classical Natural Language Processing and BERT-Variant Models. Appl. Sci. 2024, 14, 11206.

12. Melhem, Y.W.; Abdi, A.; Meziane, F. Deep Learning Classification of Traffic-Related Tweets: An Advanced Framework Using Deep Learning for Contextual Understanding and Traffic-Related Short Text Classification. Appl. Sci. 2024, 14, 11009.

13. Xiong, Y.; Chen, G.; Cao, J. Research on Public Service Request Text Classification Based on BERT-BiLSTM-CNN Feature Fusion. Appl. Sci. 2024, 14, 6282.

14. Wang, Y.; Zhou, Y.; Qi, H.; Wang, D.; Huang, A. A complex history browsing text categorization method with improved BERT embedding layer. Appl. Intell. 2025, 55, 398.

15. Chen, S.; Lang, B.; Chen, Y.; Xie, C. Detection of Algorithmically Generated Malicious Domain Names with Feature Fusion of Meaningful Word Segmentation and N-Gram Sequences. Appl. Sci. 2023, 13, 4406.

16. Tang, Y.; Deng, J.; Guo, Z. Candidate Term Boundary Conflict Reduction Method for Chinese Geological Text Segmentation. Appl. Sci. 2023, 13, 4516.

17. Tsang, Y.K.; Yan, M.; Pan, J.; Chan, M.Y. A corpus of Chinese word segmentation agreement. Behav. Res. Methods 2024, 57, 25.

18. Xu, Z.; Xiang, Y. Improving Chinese word segmentation with character–lexicon class attention. Neural Comput. Appl. 2024; prepublish, 1–11.

19. Liu, Y.; Zhang, K.; Tong, R.; Cai, C.; Chen, D.; Wu, X. A Flat-Span Contrastive Learning Method for Nested Named Entity Recognition. Int. J. Asian Lang. Process. 2025, prepublish.

20. Hou, Z.; Du, Y.; Li, W.; Hu, J.; Li, H.; Li, X.; Chen, X. C-BDCLSTM: A false emotion recognition model in micro blogs combined Char-CNN with bidirectional dilated convolutional LSTM. Appl. Soft Comput. J. 2022, 130, 109659.

21. Huang, J.; Ding, R.; Wu, X.; Chen, S.; Zhang, J.; Liu, L.; Zheng, Y. WERECE: An Unsupervised Method for Educational Concept Extraction Based on Word Embedding Refinement. Appl. Sci. 2023, 13, 12307.

22. Zhang, H.; Dang, Y.; Zhang, Y.; Liang, S.; Liu, J.; Ji, L. Chinese nested entity recognition method for the finance domain based on heterogeneous graph network. Inf. Process. Manag. 2024, 61, 103812.

23. Xia, Y.; Tong, Z.; Wang, L.; Liu, Q.; Wu, S.; Zhang, X.Y. MPE[formula omitted]: Learning meta-prompt with entity-enhanced semantics for few-shot named entity recognition. Neurocomputing 2025, 620, 129031.

24. Chen, J.; Su, L.; Li, Y.; Lin, M.; Peng, Y.; Sun, C. A multimodal approach for few-shot biomedical named entity recognition in low-resource languages. J. Biomed. Inform. 2024, 161, 104754.

25. Yang, S.; Lai, P.; Fang, R.; Fu, Y.; Ye, F.; Wang, Y. FE-CFNER: Feature Enhancement-based approach for Chinese Few-shot Named Entity Recognition. Comput. Speech Lang. 2025, 90, 101730.

26. Ren, Z.; Qin, X.; Ran, W. SLNER: Chinese Few-Shot Named Entity Recognition with Enhanced Span and Label Semantics. Appl. Sci. 2023, 13, 8609.

27. Yang, M.; Liu, S.; Chen, K.; Zhang, H.; Zhao, E.; Zhao, T. A Hierarchical Clustering Approach to Fuzzy Semantic Representation of Rare Words in Neural Machine Translation. IEEE Trans. Fuzzy Syst. 2020, 28, 992–1002.

28. Khassanov, Y.; Zeng, Z.; Pham, V.T.; Xu, H.; Chng, E.S. Enriching Rare Word Representations in Neural Language Models by Embedding Matrix Augmentation. arXiv 2019, arXiv:1904.0379.

29. Park, Y.; Son, G.; Rho, M. Biomedical Flat and Nested Named Entity Recognition: Methods, Challenges, and Advances. Appl. Sci. 2024, 14, 9302.

30. Zia, G.A.; Sharifi, A. ZHMM-Based Dari Named Entity Recognition for Information Extraction. In CS & IT Conference Proceedings; Computer Science & Information Technology (CS & IT): Cambridge, MA, USA, 2019; Volume 9.

31. Suganda, A.G.; Krisna, B.N. Six classes named entity recognition for mapping location of Indonesia natural disasters from twitter data. Int. J. Intell. Comput. Cybern. 2024, 17, 395–414.

32. Fang, T.; Yang, Y.; Zhou, L. Enhanced Precision in Chinese Medical Text Mining Using the ALBERT+Bi-LSTM+CRF Model. Appl. Sci. 2024, 14, 7999.

33. Jayakumar, H.; Krishnakumar, M.S.; Peddagopu, V.V.V.; Sridhar, R. RNN based question answer generation and ranking for financial documents using financial NER. Sādhanā 2020, 45, 1–10.

34. Tao, G.; Zhichao, Z. LB-BMBC: MHBiaffine-CNN to Capture Span Scores with BERT Injected with Lexical Information for Chinese NER. Int. J. Comput. Intell. Syst. 2024, 17, 269.

35. Smita, S.; Biswajit, P.; Deepa, G. Study of Word Embeddings for Enhanced Cyber Security Named Entity Recognition. Procedia Comput. Sci. 2023, 218, 449–460.

36. Ngo, H.D.; Koopman, B. From Free-text Drug Labels to Structured Medication Terminology with BERT and GPT. AMIA Annu. Symp. Proc. 2023, 2023, 540–549.

37. Chen, P.; Zhang, M.; Yu, X.; Li, S. Named entity recognition of Chinese electronic medical records based on a hybrid neural network and medical MC-BERT. BMC Med. Inform. Decis. Mak. 2022, 22, 315.

38. Zhao, H.; Xiong, W. A multi-scale embedding network for unified named entity recognition in Chinese Electronic Medical Records. Alex. Eng. J. 2024, 107, 665–674.

39. Li, M.; Gao, C.; Zhang, K.; Zhou, H.; Ying, J. A weakly supervised method for named entity recognition of Chinese electronic medical records. Med. Biol. Eng. Comput. 2023, 61, 2733–2743.

40. He, L.; Wang, S.; Cao, X. Multi-Feature Fusion Method for Chinese Shipping Companies Credit Named Entity Recognition. Appl. Sci. 2023, 13, 5787.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).