Abstract

Generative AI (Gen-AI) applies machine learning to generate new design solutions. It analyzes data to identify patterns, creates tailored designs, supports creativity, and allows designers to explore complex possibilities across diverse industries. This study applies a Gen-AI design generation process to develop an urban landscape fumigation service robot. It proposes a machine-learned multimodal and feedback-based variational autoencoder (MMF-VAE) model that incorporates a readily available spraying robot dataset and design considerations drawn from prior research to ensure real-time deployability. The objective is to demonstrate the effectiveness of data-driven and feedback-based approaches in generating design specifications for a fumigation robot with the targeted requirements of autonomous navigation, precision spraying, and an extended runtime. The design generation process comprises three stages: (1) parameter fixation, emphasizing functionality-based and aesthetic-based specifications; (2) design specification generation using the proposed MMF-VAE model with and without a spraying robot dataset; and (3) robot development based on the generated specifications. A comparative analysis evaluated the impact of dataset-driven design generation. The design generated with the dataset proved more feasible and better optimized for real-world deployment, owing to the integration of multimodal inputs and iterative feedback refinement. A working prototype was then constructed using the model’s parametric constraints and tested in actual fumigation scenarios to validate operational viability. This study highlights the potential of Gen-AI to transform robotic design workflows.

1. Introduction

Fumigation is a commonly used method of controlling pests with gaseous chemicals in settings such as urban landscapes, medical institutions, and industrial facilities. It supports healthy plant growth and compliance with safety standards, as reported by the World Health Organization [1]. Urban biodiversity, high population densities, varied structures, green spaces required for aesthetics, and inadequate green space maintenance create conditions for pest breeding, which leads to frequent fumigation [2]. Urban fumigation has therefore traditionally been manual: trained workers disperse fumigation chemicals, often in the form of sprays, smoke, or gas, at targeted locations. Manual fumigation controls pests effectively, but prolonged exposure to these toxic chemicals poses long-term health risks to workers, ranging from skin irritation and respiratory issues to neurological disorders and even cancer [3]. An autonomous fumigation robot operating in the urban landscape is an effective alternative that addresses these health risks. Such robots eliminate the need for human involvement in hazardous environments, making operations safer while delivering precise, uniform, and efficient fumigation services. Robotic fumigation minimizes human exposure, provides precise area coverage in complex urban structures, adheres to safety protocols, and improves overall effectiveness in pest control.

The design and development of a fumigation robot requires careful consideration of functionality-based and aesthetic-based parameters, including the robot’s size, payload capacity, mobility system, autonomy level, and battery capacity. These factors must align with the specific application, including the terrain conditions, spray type, fumigation duration, weather patterns, and fumigation location. Researchers and designers increasingly rely on tools such as artificial intelligence (AI) to address these complex requirements and accelerate various stages of the design process. AI-driven solutions for smart data collection, supervisory control data analysis, and predictive modeling are used in the early design phases of identifying user needs, brainstorming, and generating design concepts. By combining human creativity with AI technologies, designers can explore a broader range of design possibilities and uncover innovative solutions. With the advancements in AI, generative AI (Gen-AI) design has become a popular method for creating unique designs in the form of numerical, textual, or pictorial data. This method analyzes and learns patterns from existing datasets and incorporates randomness to produce novel outputs, combining explicit programming with implicit learning. Early methods relied solely on explicit programming based on human design expertise with predefined rules and frameworks. However, those methods cannot leverage the vast knowledge embedded in existing design datasets.

Gen-AI design tools use various algorithms with iterative learning to process designer-defined input specifications, generating and improving solutions that satisfy functional requirements or applications. The resulting Gen-AI designs often contain unique geometries or configurations that challenge and outperform the traditional human design creativity and workflow [4,5]. In engineering design, data-driven approaches like deep generative models (DGMs) are adopted in Gen-AI. DGMs are capable of approximating complex, high-dimensional probability distributions using large datasets and are uniquely suited for creating new design samples by synthesizing features learned from existing data [6]. Generative adversarial networks (GANs) and variational autoencoders (VAEs) are two prominent classes of DGMs that generate images, text, and structured data across multiple domains.

This paper explores Gen-AI design models and the development of an autonomous fumigation service robot. Section 2 reviews related studies and case studies on design generation using Gen-AI models. Section 3 elaborates on the proposed machine-learned multimodal and feedback-based variational autoencoder (MMF-VAE) model for design generation. Section 4 outlines the design fixation, generation, and development of the fumigation robot based on the Gen-AI design, emphasizing the role of the proposed Gen-AI model in this process. Lastly, Section 5 concludes the paper with a summary of key insights.

2. Related Studies

Gen-AI, including DGMs such as GANs and VAEs and transformer architectures such as the chat generative pre-trained transformer (ChatGPT), has gained attention for its ability to create new content. However, the nature of what it produces has not been fully explored [4]. Jana I Saadi et al. analyzed how Gen-AI affects the design process, concept generation, designer behavior, and overall design output using subjective assessments from student designers [7]. They observed that designers using Gen-AI design tools define the tool’s inputs by focusing on the main goals and limitations of the output, and then repeatedly adjust and refine these inputs to improve both qualitative and quantitative aspects of the design. This iterative process helps designers better understand the problem and explore possible solutions. Likewise, Samangi Wadinambiarachchi et al. studied whether Gen-AI image generators truly boost design fixation, human creativity, and divergent thinking during design tasks [5]. They conducted an experiment with 60 participants divided into three groups: no inspiration support, inspiration from Google search images, and Gen-AI support. The results showed that participants with Gen-AI support were more focused on their ideas (design fixation) and ideation behavior. According to the study, Gen-AI improves the quality of innovative design output and the speed of design creation, which ultimately contributes to higher design fixation among novice designers. This understanding opens up new research possibilities, especially regarding how humans and Gen-AI can work together effectively. Similarly, through case studies and applications, numerous researchers have demonstrated the role of Gen-AI design tools in creating high-performance products, paving the way for more efficient, innovative, and tailored solutions in various research applications.

2.1. LLM Models

Large language models (LLMs) like ChatGPT, together with natural language processing (NLP) techniques, are language-based AI models that use text-based prompts to understand and generate human-like text. Qihao Zhu et al. [8] investigated recent advancements in NLP and GPT to automate the early design phase of computer-aided design (CAD) models, transforming textual prompts into new design concepts in three steps: domain understanding, problem-driven synthesis, and evaluation-driven synthesis. Similarly, Adam Fitriawijaya et al. generated a building architectural design using ChatGPT and multimodal Gen-AI design, integrating the Ethereum blockchain into Gen-AI driven design to enhance traceability, authenticity, and the safeguarding of data ownership [9]. Christos Konstantinou et al. introduced a novel approach for human–robot collaboration in assembly operations using Gen-AI in industrial environments, adopting behavior tree-based system control, LLM, and NLP technologies for real-time alterations in assembly operations and offering dynamic adaptability with building blockchain instructions [10]. Sai H Vemprala et al. developed PromptCraft, an open-source research tool that provides prompts for various robotic applications and sample robotic simulations with the integration of ChatGPT [11]. The authors conducted an experimental study on free-form dialog prompts for robotic tasks, simulations, and form factors, incorporating logical, mathematical reasoning, geometrical, manipulation, and aerial navigation tools. Zhe Zhang et al. proposed an interactive robotics manipulation GPT (Mani-GPT) model that understands human instructions and responses and generates suitable manipulation and decision-making plans to assist humans [12]. A high-quality dialog dataset of text-based interactions covering multiple scenarios was designed and used to train the model, which was then deployed on a pick-and-place robot to carry out actions. Experimental evaluation achieved an accuracy of 84.6% in human intent recognition, understanding, and decision-making.

2.2. GAN

A GAN consists primarily of two neural networks: a generator, which creates new data from the trained dataset, and a discriminator, which evaluates the realism of the data. GANs produce high-dimensional and complex data. Many researchers have used GANs, often integrating them with machine learning techniques or enhanced GAN variants, to perform data augmentation or generate innovative designs tailored to specific applications. Sangeun Oh et al. used a GAN model to design a 2D wheel for performance and aesthetic optimization, incorporating design automation systems for topology, structural appearance, and new design generation [13]. Lyle Regenwetter et al. compared three DGMs for bicycle frame design: traditional GANs, multi-objective performance-augmented diverse GAN (MO-PaDGAN), and design target achievement index GAN (DTAI-GAN). The comparison focuses on conditioning, target achievement, performance, novelty, customer satisfaction, diversity, coverage, and realism [14]. MO-PaDGAN uses a performance-weighted kernel function for greater diversity and performance, while DTAI-GAN considers classifier guidance and performance targets. Yan Gan et al. created a social robot design that considers emotional and aesthetic preferences for customer satisfaction [15]. The researchers used a deep convolutional GAN (DCGAN) with the Kansei engineering method to incorporate pleasant, interesting, and intelligent features and aesthetic preferences like color, shape, and outer head features. DCGAN works similarly to GAN but employs convolutional filters in its discriminator and generator, enabling it to differentiate features during design generation. Christoforos Aristeidou et al. used neural networks and GAN-based Gen-AI for aircraft fuel tank inspection, utilizing a multimodal conditional approach to produce realistic and diverse images [16]. Convolutional neural networks (CNNs) were used for data collection and augmentation from simplified text prompts and captured images. Kailash Kumar Borkar et al. proposed a GAN-based DGM algorithm for path generation in narrow pathways for the TurtleBot4 wheeled robot running the robot operating system (ROS) software [17].

2.3. VAE

A VAE encodes input data into a compressed representation in a latent space and decodes it back to generate similar data. The variational component introduces randomness, which yields diverse outputs. A VAE is easy to understand and visualize and is easier to train than a GAN while ensuring diversity; however, it struggles to generate complex structural data. Wee Kiat Chan et al. studied a vector-quantized VAE model in Gen-AI to design a 3D model of a soft pneumatic robotic actuator [18]. A latent diffusion model was adopted to generate high-quality, high-dimensional data with complex structural properties, enabling broad exploration of functionalities, shapes, and material properties. Similarly, Liwei Wang et al. used VAE in reverse engineering to design multiscale, graded, and micro-structural metamaterials using DGM [19]. Martin Juan José Bucher et al. introduced performance-based Gen-AI in architectural engineering construction, specifically for network-tied arch bridges and multi-layered brick walls [20]. They introduced the conditional VAE (CVAE) generative model and compared it with genetic algorithms for performance attributes in parametric 2D and 3D modeling.

2.4. Other Models in Gen-AI

Alireza Ramezani et al. used Gen-AI design to create a morpho-functional Husky Carbon robot that incorporates quadrupedal-legged and aerial forms of locomotion, using Grasshopper’s evolutionary solver to address payload capacity and energy maintenance challenges [21]. H. Onan Demirel et al. introduced a human-centered Gen-AI design named human factor engineering AI (HFE-AI) that incorporates human intelligence and factors for concept generation and assessment, enhancing creativity and satisfying human attributes and needs with mixed reality [22]. Luka Gradisar et al. developed a machine-learned Gen-AI design to overcome the limitations of traditional models with respect to optimized solutions, repetitive tasks, collaboration, and interoperability [23]. Their model is trained on data collected through computational models, and the authors demonstrated it by generating robotic telescopic enclosures with complex structures.

Despite the substantial advancements in Gen-AI and the application of DGMs in engineering design creation, several gaps remain. (i) The recent literature has primarily focused on integrating Gen-AI into the design of complex structures using a unimodal approach, and few studies have explored multimodal inputs to address design constraints. (ii) Past studies did not implement feedback-based mechanisms or input refinement techniques, which are essential for validating outputs given the stochastic nature of AI generation. (iii) A comparative evaluation of designs generated from prompts with and without a dataset remains unaddressed. (iv) Lastly, although Gen-AI has been successfully integrated into diverse fields like CAD modeling, robotic actuators, and construction architectural designs, its use in the design of autonomous fumigation robots is still unexplored.

This study’s research objectives are categorized into three segments:

- The development and application of the “machine-learned multimodal and feedback-based VAE model” in design creation using Gen-AI.

- A comparative analysis of the design generation output with and without a spraying robot dataset.

- The development of an autonomous fumigation robot based on the data-driven design generated using the proposed model.

The novelties of this study encompass the following:

- Developing a “machine-learned multimodal and feedback-based VAE model” for design creation using Gen-AI, integrating multimodal data types and feedback mechanisms to enhance precision, relevance, robustness, and adaptability for real-time deployable service robots.

- Conducting a comparative analysis of design outputs generated with and without spraying robot datasets to evaluate the role of data input in improving design quality.

- Developing an autonomous fumigation robot for urban landscapes, using the proposed model for application-specific optimization and functionality.

3. Methods

Gen-AI produces new data or content, including text, images, or 3D designs, by identifying and learning patterns from existing datasets using text-to-image, image-to-text, or image-to-image generation techniques. DGMs use deep learning algorithms to produce high-dimensional, complex, unique, and realistic data by approximating the underlying probability distribution of the existing dataset. Among DGMs, VAEs generate diverse and interpretable outputs compared with GAN and transformer models. A VAE learns a structured latent space and integrates randomness, which makes it well suited to exploring data variations and to tasks like anomaly detection or controlled design generation. Unlike GANs, which are challenging to train due to adversarial competition and mode collapse, VAEs are simpler to optimize and give consistent results. Compared to GPT models, VAEs handle a wider range of data types, including text, images, and numerical data. While GPTs lack latent space interpretability, VAEs offer better control over the generative process, making them versatile for multi-domain applications.

In this study, the VAE model is adopted to generate a design of a fumigation service robot for urban landscape terrains. This study uses Python v3.9.19 in the Anaconda environment and interactive LLM models like ChatGPT/Ollama to develop and integrate the model. Python is the primary programming language for implementing machine learning algorithms, data processing, and model optimization. ChatGPT/Ollama is integrated to facilitate interactive design generation, allowing real-time feedback and the customization of parameters through natural language conversations. PyTorch (an open-source machine learning library with encoders/decoders, optimizers, and backpropagation) served as the primary framework for building the MMF-VAE neural networks. After receiving input prompts from the user, the model processes them in two steps, tokenization and self-attention, to understand the input data, produce a meaningful representation in latent space, and generate the desired output. Tokenization breaks down the input data (image, text, or numerical data) into smaller, more manageable units known as tokens, enabling faster and easier processing. During self-attention, the model weighs each token’s importance, relevance, and interdependency with the other tokens. Each token is processed through three components: query, key, and value. The query represents the token being analyzed and searches the input data for relevant information. The keys act as reference tokens and are matched against the query to determine their relevance. The values store the information about each token and supply the data used to generate the targeted output based on the token weights. In VAE design generation, tokenization and self-attention enhance the encoder’s understanding of complex inputs and help the decoder generate accurate, structured, high-quality outputs tailored to specific design requirements.
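The query, key, and value roles described above can be illustrated with a minimal scaled dot-product attention sketch. This is not the paper's implementation: the example prompt, the two-dimensional toy embeddings, and the choice to reuse the embeddings directly as queries, keys, and values (with no learned projection matrices) are all assumptions made for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def self_attention(queries, keys, values):
    """Scaled dot-product attention: one mixed value vector per query token."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        # Match the query against every key to get relevance weights.
        weights = softmax([dot(q, k) / scale for k in keys])
        # Blend the value vectors according to those weights.
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical prompt and toy embeddings (illustrative values only).
tokens = "compact robot with long runtime".split()
embed = {"compact": [1.0, 0.0], "robot": [0.5, 0.5], "with": [0.0, 0.1],
         "long": [0.0, 1.0], "runtime": [0.2, 0.9]}
x = [embed[t] for t in tokens]

# Assumption: embeddings serve as Q, K, and V without learned projections.
attended, weights = self_attention(x, x, x)
```

Each row of `weights` sums to one and shows how strongly a token attends to every other token; in a trained model the queries, keys, and values would come from learned linear projections of the token embeddings.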

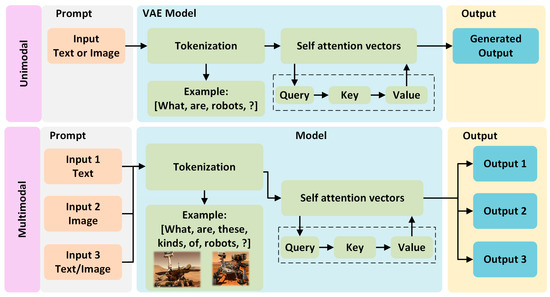

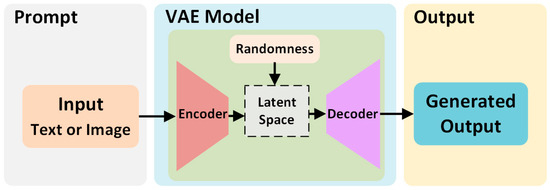

The VAE model can follow a unimodal or a multimodal approach, as illustrated in Figure 1. A unimodal VAE processes a single data modality, such as text, image, or numerical data. It compresses the input into a latent space and reconstructs the same type of data, with variations, from this representation. A unimodal VAE is easy to train, has a simpler architecture, and achieves high performance, but it adapts poorly because it cannot accept diverse input data types. A multimodal VAE processes multiple data modalities simultaneously, such as a combination of text, image, and numerical input prompts. It encodes the inputs into a common latent space and may reconstruct or produce outputs across several modalities, facilitating cross-modal tasks such as producing an image from a text prompt. It generates richer and more diverse outputs but requires more training and a larger dataset. In summary, unimodal VAEs are ideal for specific tasks, while multimodal VAEs are suited for comprehensive applications integrating diverse data types.

Figure 1. Architecture of unimodal and multimodal approaches in VAE.

In the VAE model, encoders and decoders are essential for data creation, compression, and transformation. The encoder processes the input data and compresses it into a reduced-dimensional representation known as the latent vector. This compression preserves vital information in the latent space while discarding unnecessary details. Mathematically, the encoder maps the input data (x) to a latent vector (y), as presented in Equation (1), where each $x_i$ represents one data modality in the multimodal approach. The decoder then uses the information in the latent space to reconstruct the original input data. Mathematically, the decoder transforms the y generated by the encoder into a reconstructed output ($\hat{x}$), as presented in Equation (2).

$$\text{Latent vector } (y) = f_{\text{encoder}}[x_1, x_2, x_3, x_4, \ldots, x_m] \tag{1}$$

$$\text{Reconstructed output } (\hat{x}) = f_{\text{decoder}}[y] \tag{2}$$

An autoencoder is an unsupervised learning model that combines the encoder and decoder and trains itself to reduce the difference between x and $\hat{x}$. The autoencoder employs the mean square error (MSE) loss function to measure the deviation of $\hat{x}$ from the original input data over the n input data points or specifications, as shown in Equation (3). The autoencoder loss function measures the reconstruction accuracy, but an autoencoder trained on it alone cannot generate new or diverse data.

$$\text{MSE} = \frac{1}{n} \sum_{i=1}^{n} \left(x_i - \hat{x}_i\right)^2 \tag{3}$$
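As a small worked example of the reconstruction loss, the sketch below computes the mean square error between an input vector and its reconstruction. The four normalized specification values are hypothetical and chosen only so the arithmetic is easy to follow.

```python
def mse_loss(x, x_hat):
    """Mean square error between an input and its reconstruction."""
    if len(x) != len(x_hat):
        raise ValueError("x and x_hat must have the same length")
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)

# Hypothetical normalized specifications and their reconstruction.
x = [0.60, 0.45, 0.30, 0.80]
x_hat = [0.55, 0.50, 0.30, 0.70]

# Squared differences: 0.0025, 0.0025, 0.0, 0.01; their mean is 0.00375.
loss = mse_loss(x, x_hat)
```

A loss of zero would mean a perfect reconstruction, which is why minimizing this term alone reproduces inputs rather than generating new designs.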

VAE is an extension of the autoencoder that introduces randomness (ϵ) and a probabilistic distribution into the system, allowing the generation of new and diverse outputs, as shown in Figure 2. In VAE, x is encoded as two probabilistic distribution parameters, mean (μ) and standard deviation (σ), which define the latent vector (y), as shown in Equation (4). VAE uses a structured latent space with a reparameterization (θ) method to maintain differentiability. The decoder reconstructs the input as $\hat{x}$ or generates new data (z) from the latent vector, as represented in Equation (5). This process iterates for several epochs until convergence is achieved.

$$\text{Latent vector } (y) = \mu + (\sigma \times \epsilon), \quad \epsilon \sim \mathcal{N}(0, 1) \tag{4}$$

$$\text{Generated output data } (z) = g_{\theta}[y] \tag{5}$$
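The sampling step that produces the latent vector can be sketched in a few lines. The values of μ and σ below are hypothetical, and a seeded generator is used only to make the sketch repeatable.

```python
import random

def reparameterize(mu, sigma, rng):
    """Sample y = mu + sigma * eps element-wise, with eps ~ N(0, 1)."""
    return [m + s * rng.gauss(0.0, 1.0) for m, s in zip(mu, sigma)]

rng = random.Random(0)        # seeded only for repeatability
mu = [0.5, -1.0, 2.0]         # hypothetical encoder means
sigma = [0.1, 0.0, 1.0]       # hypothetical encoder standard deviations

# Five latent vectors drawn around the same mean.
samples = [reparameterize(mu, sigma, rng) for _ in range(5)]
```

Because the randomness enters only through ϵ, the sample remains a differentiable function of μ and σ. A dimension with σ = 0 (the second one here) always returns its mean, while larger σ values spread the samples and give the decoder more varied latent vectors to work from.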

Figure 2. The framework of a generic VAE model.

A feedback-based VAE is a modified version of traditional VAE that incorporates an iterative feedback mechanism to enhance the quality and relevance of generated outputs. The reconstructed outputs may not always align with the desired results due to the stochastic nature of latent space sampling in the standard VAE technique. By integrating a feedback loop, the VAE model can iteratively refine its outputs, adjusting model parameters or latent variables accordingly. The feedback mechanism helps users minimize discrepancies between generated data and expected output, allowing them to propagate feedback error (α) back through the network to update encoder and decoder weights. This process helps the model learn more accurate mappings between output data and feedback latent vector (ȳ), improving reconstruction and generation capabilities, as presented in Equation (6). The feedback-based approach benefits applications requiring high reliability in data reconstruction, optimization, adaptive augmentation, and personalized design generation. The feedback term’s gradient (∇y) with respect to the latent space vector (y) represents the difference between the generated output (z) and the targeted output ().
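One plausible reading of this feedback loop is a gradient-based refinement of the latent vector toward a targeted output. The sketch below is an illustration under stated assumptions, not the paper's Equation (6): the decoder is replaced by a toy linear map `W`, the discrepancy is measured as a squared error, and `step_size` and `iterations` are hypothetical parameters.

```python
def decode(weights, y):
    """Toy linear decoder z = W y (stand-in for a trained decoder)."""
    return [sum(w * v for w, v in zip(row, y)) for row in weights]

def refine_latent(weights, y, target, step_size=0.1, iterations=200):
    """Move the latent vector so the decoded output approaches the target."""
    y = list(y)
    for _ in range(iterations):
        z = decode(weights, y)
        error = [zi - ti for zi, ti in zip(z, target)]
        # Gradient of sum(error^2) with respect to each latent component.
        grad = [2.0 * sum(error[i] * weights[i][j] for i in range(len(weights)))
                for j in range(len(y))]
        y = [yj - step_size * gj for yj, gj in zip(y, grad)]
    return y

W = [[1.0, 0.5], [0.0, 2.0]]   # hypothetical decoder weights
target = [0.8, 0.4]            # hypothetical targeted output
y_start = [0.0, 0.0]

y_refined = refine_latent(W, y_start, target)
z_refined = decode(W, y_refined)
```

In this toy setting the refined latent vector decodes to the target almost exactly. With a nonlinear trained decoder the same loop would rely on automatic differentiation, and the feedback could also be used to update encoder and decoder weights as the text describes.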

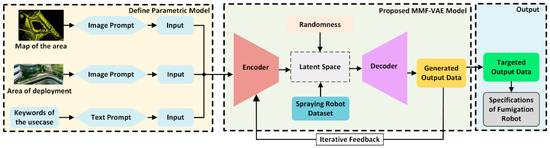

An advanced design generation methodology is proposed in this study to examine and enhance both functionality and aesthetic-based preferences in designing fumigation robots. This study proposes a machine-learned multimodal and feedback-based VAE (MMF-VAE) model. This approach improves the functionality of feedback-based VAE models by refining the latent space representation and provides the desired output quality, as illustrated in Figure 3. In this enhanced framework, textual or image-driven prompts are given to the encoder, while a database (d) with additional spraying robot training data guides the latent space. The model’s refinement is achieved by combining input prompts and external datasets, reducing feedback errors, and achieving better alignment between generated output (z) and desired results (), as represented in Equation (7). The function g represents the refinement function based on the database. Algorithm 1 elaborates the sequence of operations performed in the proposed MMF-VAE model to acquire the desired output.

Figure 3. The framework of the proposed Machine-Learned Multimodal and Feedback-Based VAE model.

Algorithm 1 constructs and trains an MMF-VAE using a dataset of robot attributes for fumigation services, denoted as data. These attributes include size (represented in three dimensions), weight, speed, drive type, etc. Each sample is flattened to match the specified input_dim (in this case, size, weight, battery, autonomy_type, drive_type, payload, sensors, PC, navigation_system, spraying_type, spray_system, terrain, and safety), which is then processed through an AttributeEncoder and SpecificationDecoder. The encoder outputs two vectors, μ and logvar, each of the dimension latent_dim (in this case 16). A reparameterization function draws from a normal distribution, producing a latent vector, z, that captures essential features of the input data. The hidden_dim parameter (in this case, 128) controls the capacity of internal layers, striking a balance between model expressiveness and computational cost.
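The flattening step can be sketched as follows for a reduced set of attributes. The category vocabularies, field names, and sample values are hypothetical; the scale factors of 100 for size and 50 for weight mirror the output scaling that appears in the decoder of Algorithm 1, which is an assumption about how the inputs were normalized.

```python
# Hypothetical vocabularies for two categorical attributes.
DRIVE_TYPES = ["differential", "skid_steer", "ackermann"]
AUTONOMY_TYPES = ["manual", "semi_autonomous", "autonomous"]

def one_hot(value, vocabulary):
    """Encode a categorical value as a one-hot list over its vocabulary."""
    if value not in vocabulary:
        raise ValueError(f"unknown category: {value}")
    return [1.0 if item == value else 0.0 for item in vocabulary]

def flatten_sample(sample):
    """Flatten one robot record into a fixed-length numeric vector."""
    length, width, height = sample["size_cm"]
    numeric = [length / 100.0, width / 100.0, height / 100.0,
               sample["weight_kg"] / 50.0]
    return (numeric
            + one_hot(sample["drive_type"], DRIVE_TYPES)
            + one_hot(sample["autonomy_type"], AUTONOMY_TYPES))

# Hypothetical dataset entry (not taken from the paper's dataset).
robot = {"size_cm": (80, 60, 50), "weight_kg": 35,
         "drive_type": "differential", "autonomy_type": "autonomous"}

vector = flatten_sample(robot)
input_dim = len(vector)  # 4 numeric values + 3 + 3 one-hot entries
```

With the full attribute list from the text (battery, payload, sensors, terrain, and so on), `input_dim` grows accordingly, and every sample must be flattened with the same ordering so that the encoder sees a consistent layout.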

During training, batch_size controls how many samples are processed in each iteration, while num_epochs determines the total number of passes over the entire dataset. An Adam optimizer with a learning_rate (lr = 0.0001) performs gradient-based updates on the network weights. A combined VAE loss, encompassing reconstruction error, KL divergence, and any domain-specific penalties, guides the model in accurately learning the robot attribute distribution. After training, the final MMF-VAE is saved as a model file and ready to be integrated with downstream applications such as large language models.
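The combined loss can be sketched as a sum of three terms. The KL term below is the standard closed form for a diagonal Gaussian posterior against a standard normal prior; the `kl_weight` and `penalty` arguments are hypothetical placeholders, since the text does not specify how the terms are weighted or what the domain-specific penalties are.

```python
import math

def kl_divergence(mu, logvar):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, logvar))

def vae_loss(x, x_hat, mu, logvar, kl_weight=1.0, penalty=0.0):
    """Reconstruction error + weighted KL divergence + optional penalty."""
    reconstruction = sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)
    return reconstruction + kl_weight * kl_divergence(mu, logvar) + penalty

# A posterior that already matches the prior contributes no KL cost.
kl_at_prior = kl_divergence([0.0, 0.0], [0.0, 0.0])

# Shifting one mean to 1.0 (unit variance) costs 0.5.
kl_shifted = kl_divergence([1.0], [0.0])

# Hypothetical values for a single training sample.
total = vae_loss(x=[0.6, 0.4], x_hat=[0.5, 0.4], mu=[1.0], logvar=[0.0])
```

The reconstruction term pulls the decoder output toward the training data, while the KL term keeps the latent distribution close to the prior so that randomly sampled latent vectors still decode to plausible specifications.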

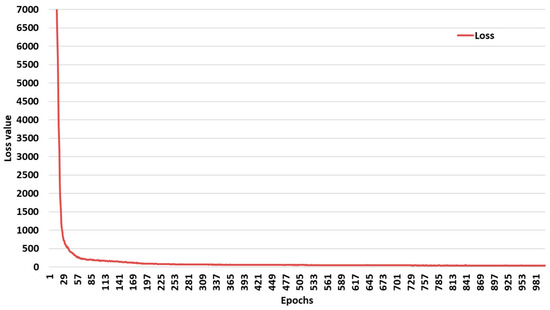

The training loop iterates until the loss, initially around 8000 (as seen in Figure 4), stabilizes below 10 after roughly 1000 iterations (though the example ran for 2000 total). Once trained, the MMF-VAE model is saved and integrated with a conversational LLM (e.g., ChatGPT/Ollama) to provide user-friendly interactions for specifying or refining robot designs. The user can submit prompts to the LLM, which queries or updates the MMF-VAE latent space and returns design suggestions or final specifications.

| Algorithm 1: Development of MMF-VAE Model |
| --- |
| Require: |
| data: robot attributes (Size, Weight, Speed, Drive Type, etc.) // Dataset of readily available fumigation robots |
| input_dim: total dimension of flattened input |
| latent_dim: dimension of latent vector // (16) |
| hidden_dim: dimension of hidden layers // (128) |
| batch_size: number of samples per training batch |
| num_epochs: number of training epochs |
| learning_rate: Adam optimizer step size |
| Objective: Generate an MMF-VAE model file from fumigation-robot data |
| 1: Define AttributeEncoder(input_dim, latent_dim, hidden_dim): |
| 2: net ← Sequential(Linear(input_dim, hidden_dim), ReLU(), Linear(hidden_dim, hidden_dim), ReLU()) |
| 3: fc_mu, fc_logvar ← Linear(hidden_dim, latent_dim), Linear(hidden_dim, latent_dim) |
| 4: forward(x): h ← net(x); return (fc_mu(h), fc_logvar(h)) |
| 5: end Define |
| 6: Define SpecificationDecoder(latent_dim, hidden_dim): |
| 7: net ← Sequential(Linear(latent_dim, hidden_dim), ReLU(), Linear(hidden_dim, hidden_dim), ReLU()) |
| 8: size_fc, weight_fc, speed_fc, motor_fc ← Linear(...), ... |
| 9: forward(z): h ← net(z); size = ReLU(size_fc(h)) × 100; weight = ReLU(weight_fc(h)) × 50 |
| 10: speed = ReLU(speed_fc(h)); motor_probs = Softmax(motor_fc(h)) |
| 11: return {size, weight, battery, autonomy_type, drive_type, payload, sensors, PC, navigation_system, spraying_type, spray_system, terrain, safety} |
| 12: end Define |
| 13: Define MMF_VAE(input_dim, latent_dim, hidden_dim): |
| 14: encoder ← AttributeEncoder(input_dim, latent_dim, hidden_dim) |
| 15: decoder ← SpecificationDecoder(latent_dim, hidden_dim) |
| 16: reparameterize(mu, logvar): std = exp(0.5 × logvar); eps = randn_like(std); return mu + eps × std |
| 17: forward(x): (mu, logvar) = encoder(x); z = reparameterize(mu, logvar); specs = decoder(z) |
| 18: return (specs, mu, logvar) |
| 19: end Define |
| 20: mmf_vae_model ← MMF_VAE(input_dim, latent_dim, hidden_dim) |
| 21: // Train via Adam and domain-specific feedback to get final model |
| 22: // e.g., for epoch in 1..num_epochs: forward pass, VAE + feedback loss, backprop |
| 23: // After training, save mmf_vae_model for LLM integration |
| 24: return mmf_vae_model // The trained model |

Figure 4. Loss graph during the training of the MMF-VAE model for the fumigation service robots.

Algorithm 2 illustrates how the trained MMF-VAE model file integrates with a conversational LLM like ChatGPT/Ollama. The MMF-VAE is loaded into a loop that listens for user prompts. When a user requests a new design, a random latent vector is sampled and decoded and then passed back as suggested specifications. If the user provides additional feedback, these textual constraints are translated into numeric adjustments that refine the latent space, generating an updated set of specifications. Throughout this process, the LLM mediates the user interaction, delivering suggestions, explanations, and refined outputs in a dialog-based fashion. The session concludes once the user has reached satisfactory fumigation robot attributes.

| Algorithm 2: Integrating MMF-VAE with LLM Model |
| --- |
| Input: |
| mmf_vae_model: a trained MMF-VAE model file |
| LLM_service: large language models (e.g., ChatGPT/Ollama) |
| user_prompt: user prompts/instructions |
| Output: Generate an output with targeted or desired requirements |
| Ensure: Interactive design refinements via LLM based on MMF-VAE outputs |
| Pseudocode: |
| 1: Load mmf_vae_model |
| 2: While user is active: |
| 3: prompt ← GET_INPUT_FROM_USER() |
| 4: if prompt requests new design: |
| 5: z_random ← SAMPLE_LATENT_VECTOR() |
| 6: generated_specs ← mmf_vae_model.decoder(z_random) |
| 7: SEND_TO_LLM(LLM_service, “Suggested Parameters: ” + STR(generated_specs)) |
| 8: else if prompt provides feedback: |
| 9: feedback_vector ← CONVERT_TO_CONSTRAINTS(prompt) |
| 10: z_refined ← APPLY_FEEDBACK_TO_LATENT(mmf_vae_model, feedback_vector) |
| 11: refined_specs ← mmf_vae_model.decoder(z_refined) |
| 12: SEND_TO_LLM(LLM_service, “Refined Parameters: ” + STR(refined_specs)) |
| 13: end if |
| 14: end While |
| 15: return // End interactive session |
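The control flow of Algorithm 2 can be sketched in plain Python with stand-ins for the trained model and the LLM. Everything below is hypothetical scaffolding: `decode_stub` replaces `mmf_vae_model.decoder`, `convert_to_constraints` is a simple keyword parser rather than an LLM call, the prompts are scripted instead of read interactively, and messages are appended to a transcript instead of being sent to ChatGPT/Ollama.

```python
import random

def decode_stub(z):
    """Stand-in for mmf_vae_model.decoder: latent vector to specifications."""
    return {"weight_kg": round(30 + 10 * z[0], 1),
            "runtime_h": round(4 + 2 * z[1], 1)}

def convert_to_constraints(prompt):
    """Hypothetical keyword parser mapping feedback text to latent offsets."""
    offsets = [0.0, 0.0]
    if "lighter" in prompt:
        offsets[0] -= 0.5
    if "longer runtime" in prompt:
        offsets[1] += 0.5
    return offsets

def run_session(prompts, latent_dim=2, seed=0):
    """Process scripted prompts and return the messages meant for the LLM."""
    rng = random.Random(seed)
    z = [0.0] * latent_dim
    transcript = []
    for prompt in prompts:
        text = prompt.lower()
        if "new design" in text:
            # Sample a fresh latent vector and decode it.
            z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
            transcript.append(("Suggested Parameters", decode_stub(z)))
        else:
            # Treat anything else as feedback that shifts the latent vector.
            offsets = convert_to_constraints(text)
            z = [zi + oi for zi, oi in zip(z, offsets)]
            transcript.append(("Refined Parameters", decode_stub(z)))
    return transcript

log = run_session(["New design please",
                   "Make it lighter with longer runtime"])
```

The key design point carried over from Algorithm 2 is that feedback modifies the current latent vector rather than resampling it, so each refinement stays close to the design the user was already looking at.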

4. Incorporation of Proposed Model in Urban Landscape Fumigation Service Robot

The proposed MMF-VAE model is incorporated into the design of an urban landscape fumigation service robot through a structured three-stage process: (i) design parameter fixation, which highlights the significance of each parameter in the robot’s development; (ii) design parameter generation using Gen-AI, comparing specifications generated with and without datasets using the proposed model; and (iii) the development of the fumigation robot, implementing the generated design specifications. Section 4.1 examines the critical role of parameters like locomotion, spraying mechanisms, and autonomy. Section 4.2 demonstrates the model’s ability to generate accurate, dataset-driven specifications and their impact on design refinement. Finally, Section 4.3 translates the generated specifications into a functional fumigation service robot optimized for urban landscapes. Implementing the proposed algorithm involves several key steps to generate design specifications for the urban fumigation service robot. First, input data, such as design parameters and sensor information, are processed using Python libraries. The MMF-VAE model is then applied to generate design specifications based on these inputs, incorporating feedback loops for iterative refinement. The algorithm uses multimodal data to inform decision-making and enhance output accuracy. Each stage, from data preprocessing to model optimization and final design generation, is defined in Algorithms 1 and 2 to support reproducibility. The integration of ChatGPT/Ollama allows for the real-time customization of design inputs.

4.1. Design Parameter Fixation of Fumigation Service Robot

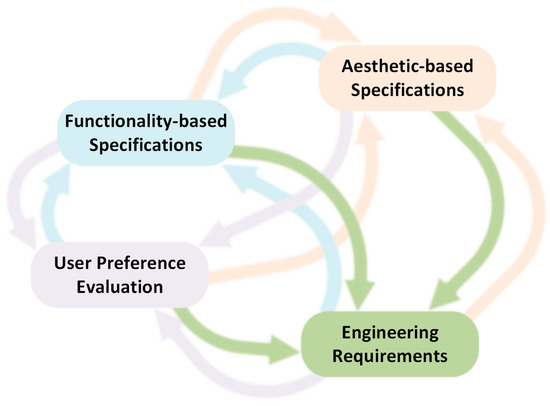

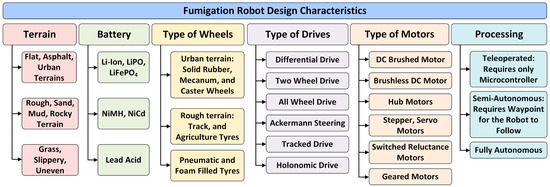

Design parameter fixation for the fumigation service robot addresses both engineering requirements and user preferences. The parameters are categorized into two primary segments, functionality-based and aesthetic-based specifications, as illustrated in Figure 5. The functionality-based specifications focus on key considerations, including the type of terrain the robot will navigate, its battery capacity to support extended operation, and the number and type of wheels to provide smooth and stable movement. The robot’s design must also integrate essential operational components, such as precise sensors for mosquito hotspot detection and obstacle avoidance. Additionally, the robot’s functionality must align with operational and regulatory guidelines, ensuring safety and environmental compliance during fumigation activities. Aesthetic-based specifications, on the other hand, enhance the robot’s visual appeal and usability. Compactness is a further critical factor, ensuring that the robot can navigate tight urban spaces without compromising performance.

Figure 5.

Key factors influencing parameter finalization.

The design specifications must meet several targeted engineering requirements. These include safety provisions, such as emergency stop mechanisms and collision avoidance systems, and water resistance to ensure durability during fumigation in outdoor environments. The robot’s navigation capabilities must be robust, allowing smooth movement across uneven terrains. User preferences also play a significant role in guiding these specifications. For instance, the robot should be fully autonomous and capable of navigating urban landscapes without human intervention. Advanced sensors and algorithms must enable the robot to identify mosquito hotspots and target fumigation efforts effectively. Obstacle detection and avoidance systems ensure safe and efficient operations in dynamic environments, such as urban parks or residential areas. By balancing these engineering requirements and user preferences, design parameter fixation ensures that the fumigation robot meets both functional and aesthetic needs. The basic specification requirements considered in designing a fumigation service robot are detailed in Figure 6.

Figure 6.

Parameters considered in designing the fumigation robot.

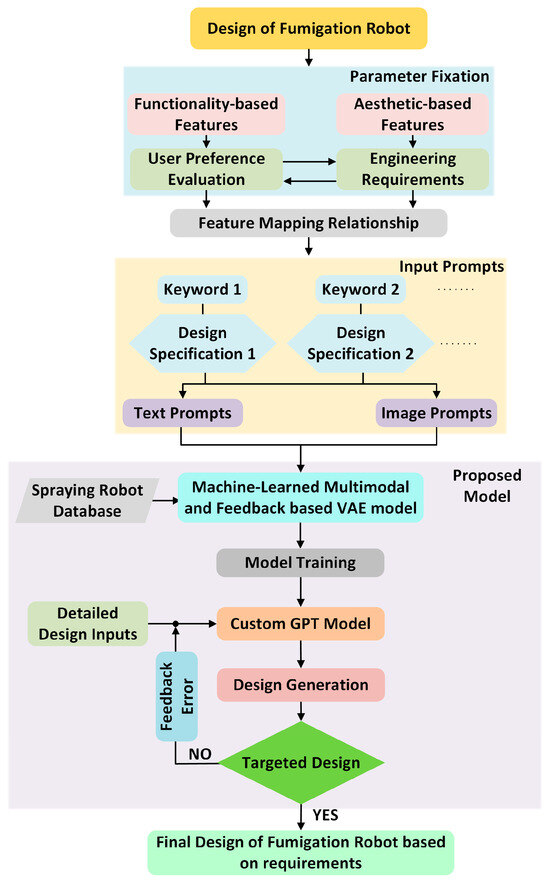

Figure 7 illustrates the systematic approach to designing a fumigation service robot using the proposed MMF-VAE model. The process begins with parameter fixation, guided by engineering requirements and user preference evaluations, ensuring that the robot meets functional and aesthetic specifications. Next, feature mapping is performed to finalize the input design parameters, which are then transformed into input prompts categorized as text and image prompts. Each keyword in a prompt represents a design specification requirement for the fumigation robot. These prompts are given as input to the proposed model, which is trained on the spraying robot dataset. The model generates initial designs from these inputs and uses a feedback mechanism to refine the outputs iteratively. If the generated design fails to meet the targeted requirements (i.e., a feedback error exists), the process loops back, refining the input parameters and retraining the model until a satisfactory output is achieved. Once the design aligns with the desired requirements, it is taken as the final fumigation robot design. This workflow balances functional performance, user preferences, and aesthetic appeal in the resulting design.
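The generate, evaluate, and refine loop of this workflow can be sketched as a small Python routine. This is an illustrative sketch only: the three callables are hypothetical placeholders for the model, the feedback-error evaluation, and the parameter adjustment, the 950 mm width target in the toy example is an assumed value, and the sketch adjusts input parameters without modelling the retraining step.

```python
from typing import Callable, Dict, Tuple

Params = Dict[str, float]


def refine_until_satisfied(
    generate: Callable[[Params], Params],
    feedback_error: Callable[[Params], float],
    adjust: Callable[[Params, float], Params],
    params: Params,
    max_iters: int = 10,
) -> Tuple[Params, int]:
    """Generate, evaluate, and adjust until the feedback error reaches zero."""
    for iteration in range(1, max_iters + 1):
        design = generate(params)
        error = feedback_error(design)
        if error <= 0.0:
            return design, iteration  # design meets the targets
        params = adjust(params, error)  # loop back with refined inputs
    raise RuntimeError("no design met the targets within max_iters")


# Toy stand-ins (hypothetical): the "model" echoes the requested width, and the
# feedback error is the overshoot beyond an assumed 950 mm width target.
def toy_generate(p: Params) -> Params:
    return {"width_mm": p["width_mm"]}


def toy_error(d: Params) -> float:
    return max(0.0, d["width_mm"] - 950.0)


def toy_adjust(p: Params, err: float) -> Params:
    return {"width_mm": p["width_mm"] - err}


design, iterations = refine_until_satisfied(
    toy_generate, toy_error, toy_adjust, {"width_mm": 1300.0}
)
print(design, iterations)
```

The iteration cap is a design choice added here so that an unsatisfiable set of targets ends with an explicit error instead of an endless loop.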

Figure 7.

Process flowchart of designing a fumigation robot using the proposed model.

4.2. Design Parameter Generation Using Proposed Model

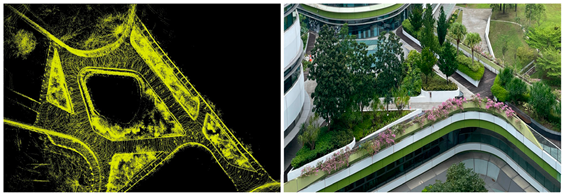

Design specification generation relies on the model’s ability to produce dataset-driven outputs that shape design refinement. For a fumigation service robot operating in urban landscape terrains, the generated specifications should align with the targeted requirements, ensuring both functionality and practicality for real-time deployment. A primary design consideration is maintaining compact physical dimensions of less than 1000 mm × 1000 mm, which enables the robot to navigate the tight spaces common in urban environments; an example of the terrain is provided as an input to the design model. The robot should also be lightweight so that the operator can transport and handle it easily. It should provide enough computational capability to support multi-sensor fusion, integrating LiDAR, depth cameras, and inertial measurement units (IMUs) for autonomous navigation and mosquito hotspot identification. A precision spraying system should minimize chemical usage and wastage through controlled application to specific target areas. To meet operational demands, the robot should support a 4 h running time through a robust power management system, and its drive mechanism should offer precise control and smooth torque for efficient navigation on urban terrains. With multimodal inputs and iterative refinement, the design generation process ensures that the fumigation robot meets these targeted requirements while addressing challenges unique to urban landscapes.
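The numeric targets named above (dimensions below 1000 mm × 1000 mm and a 4 h running time) can be expressed as a simple check on a candidate specification. The sketch below is a hypothetical helper, not part of the proposed model: the `RobotSpec` fields and the `check_targets` function are invented for illustration, and it assumes that the 1000 mm limit applies to length and width. The first example uses the dataset-driven values reported later in this section (800 mm × 600 mm × 1200 mm, 60 kg, 10 L); the second is a made-up oversized design.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RobotSpec:
    length_mm: float
    width_mm: float
    height_mm: float
    weight_kg: float  # recorded only; the text gives no numeric weight limit
    tank_l: float     # recorded only; the text gives no numeric tank limit
    runtime_h: float


def check_targets(
    spec: RobotSpec,
    max_side_mm: float = 1000.0,
    min_runtime_h: float = 4.0,
) -> List[str]:
    """Return the names of violated targets; an empty list means all pass."""
    violations = []
    # Assumption: the "less than 1000 mm x 1000 mm" target covers length and width.
    if spec.length_mm >= max_side_mm or spec.width_mm >= max_side_mm:
        violations.append("footprint")
    if spec.runtime_h < min_runtime_h:
        violations.append("runtime")
    return violations


with_dataset = RobotSpec(800, 600, 1200, 60, 10, 4)
hypothetical_oversized = RobotSpec(1200, 900, 1000, 90, 15, 3)  # made-up values

print(check_targets(with_dataset))
print(check_targets(hypothetical_oversized))
```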

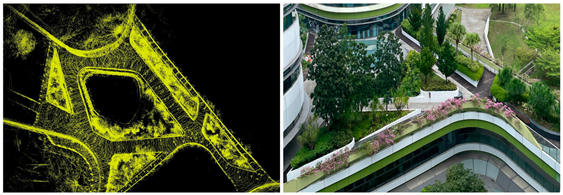

From the existing literature [24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39] on spraying robots used for urban fumigation, agricultural fumigation, and similar applications, a spraying robot dataset was prepared and provided as input to the proposed model for generating detailed design inputs. The dataset outlined in Figure 8 and Table 1 compiles the specifications of existing spraying robots, including their physical dimensions, power management systems, spraying technologies, tank capacities, navigation types, user interface designs, performance metrics, and intended terrains of application. Only terrain-based mobile robots for fumigation services are considered in this study. Incorporating this dataset grounds the design generation process in existing knowledge, enabling the proposed model to refine and produce specifications tailored to the targeted fumigation applications.

Figure 8.

Recent spraying/agricultural robot dataset used in training the proposed model, sourced sequentially from [24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39].

Table 1.

Detailed dataset of various spraying robots and their specifications in the existing literature.

Once the design parameters for the fumigation robot are provided to the proposed MMF-VAE model, the model is integrated with a custom-made GPT to facilitate interactive customization. As a conversational AI, ChatGPT/Ollama lets users refine and adjust the desired parameters by providing real-time feedback, so that targeted design parameters for the fumigation robot are generated from user inputs and preferences. This integration makes the design process more personalized and efficient, enabling users to refine specifications iteratively.

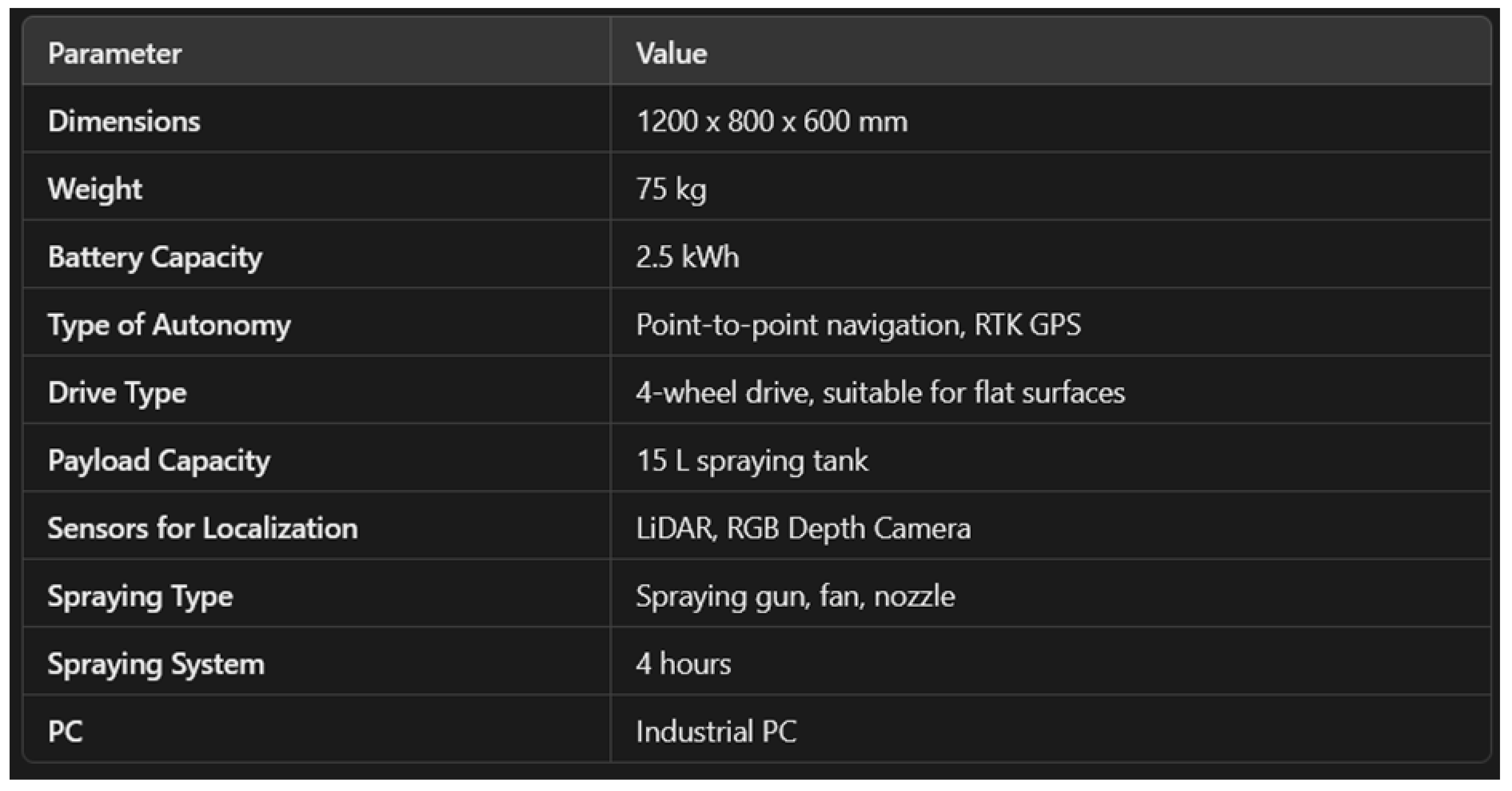

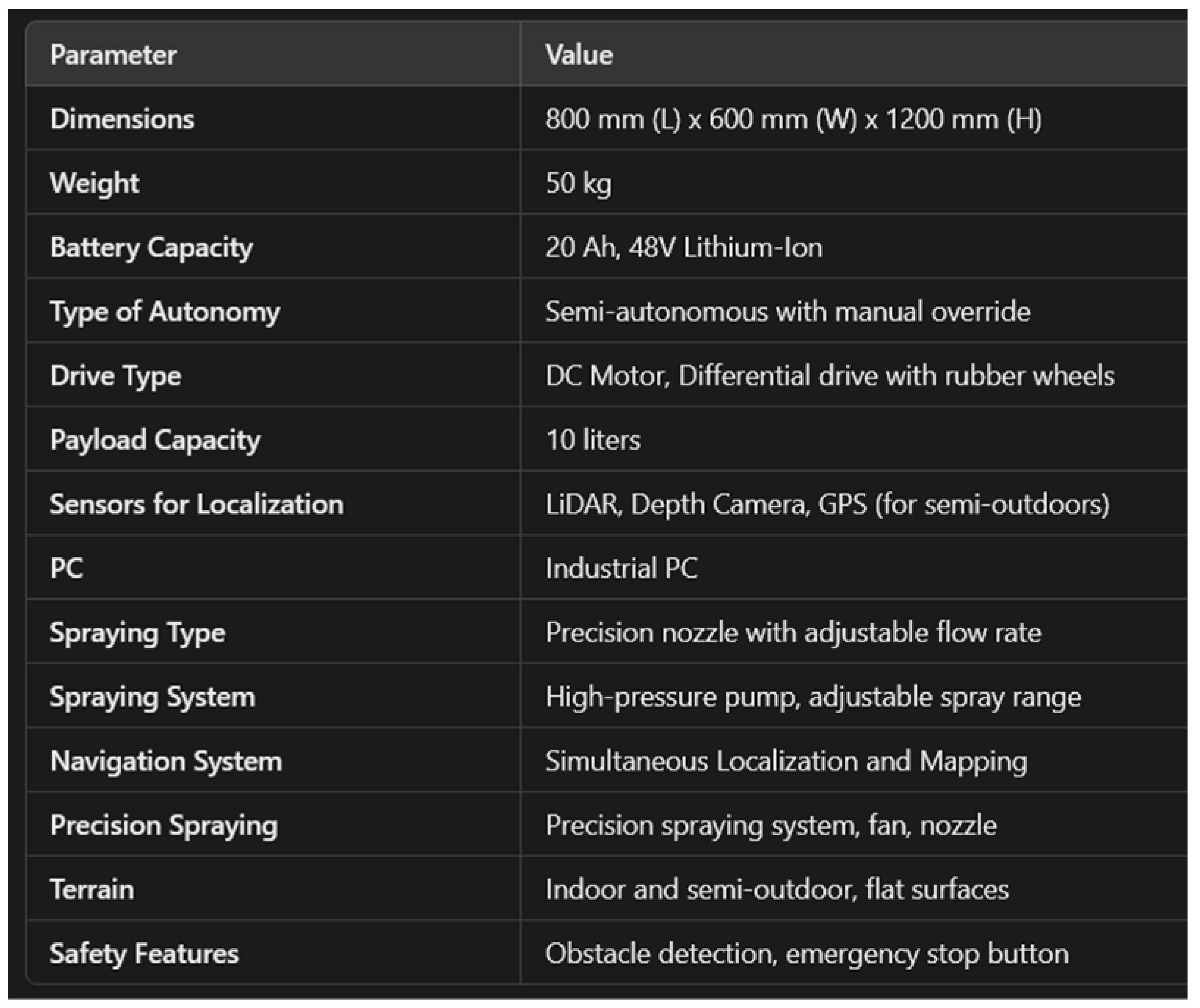

Table 2 outlines the design outputs generated without a dataset, highlighting several limitations in meeting practical deployment requirements. In this case, the model suggests specifications such as an oversized battery, increased weight, a four-wheel drive system, and RTK GPS-based point-to-point navigation, leading to increased costs and inefficiencies. While some suggestions, like autonomous capabilities, align with the requirements, the lack of dataset integration results in impractical outputs, such as a bulky 15-L tank and doubled battery weight.

Table 2.

Input prompts given to the proposed model to generate the design of a fumigation robot without a dataset.

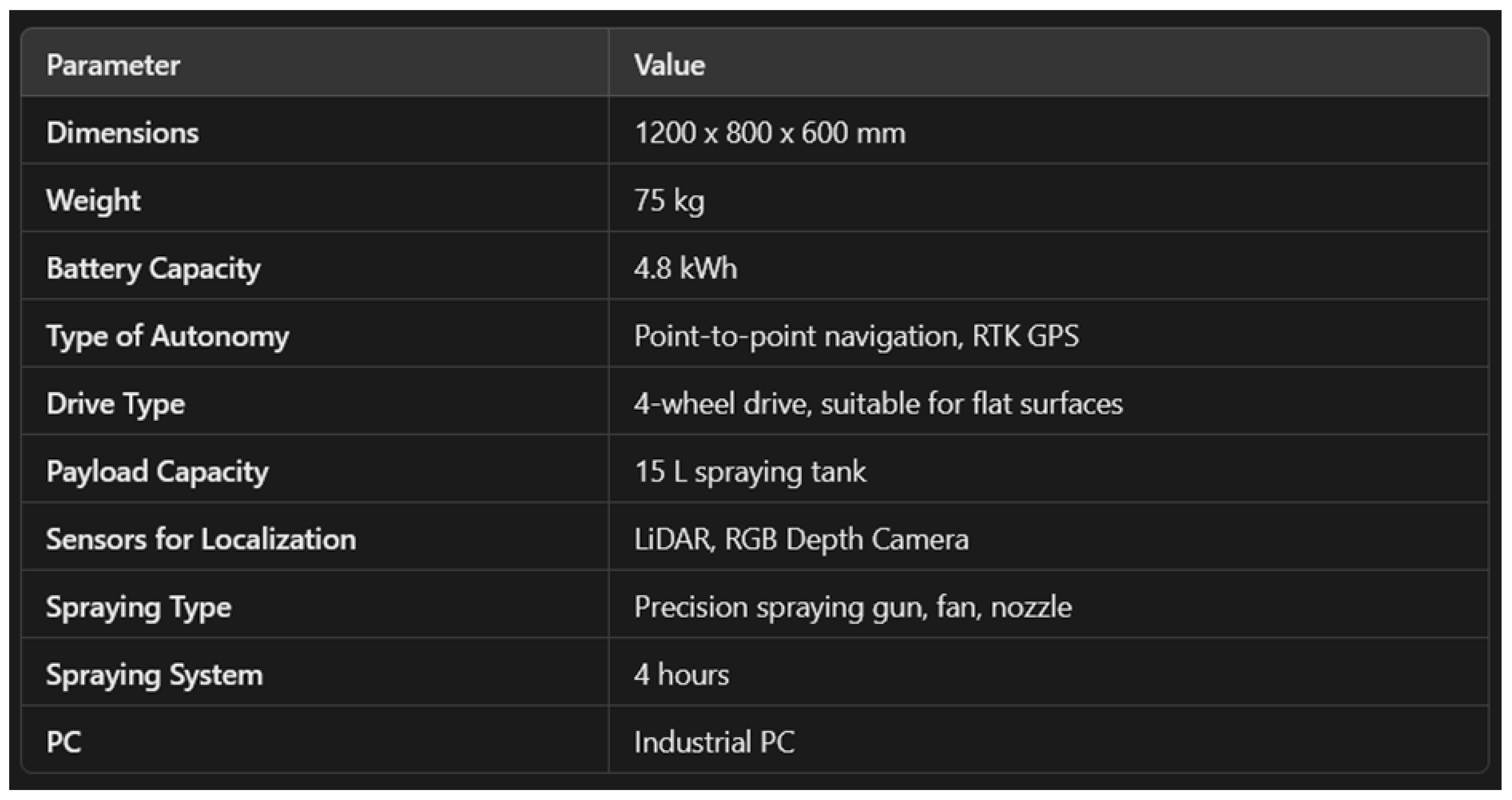

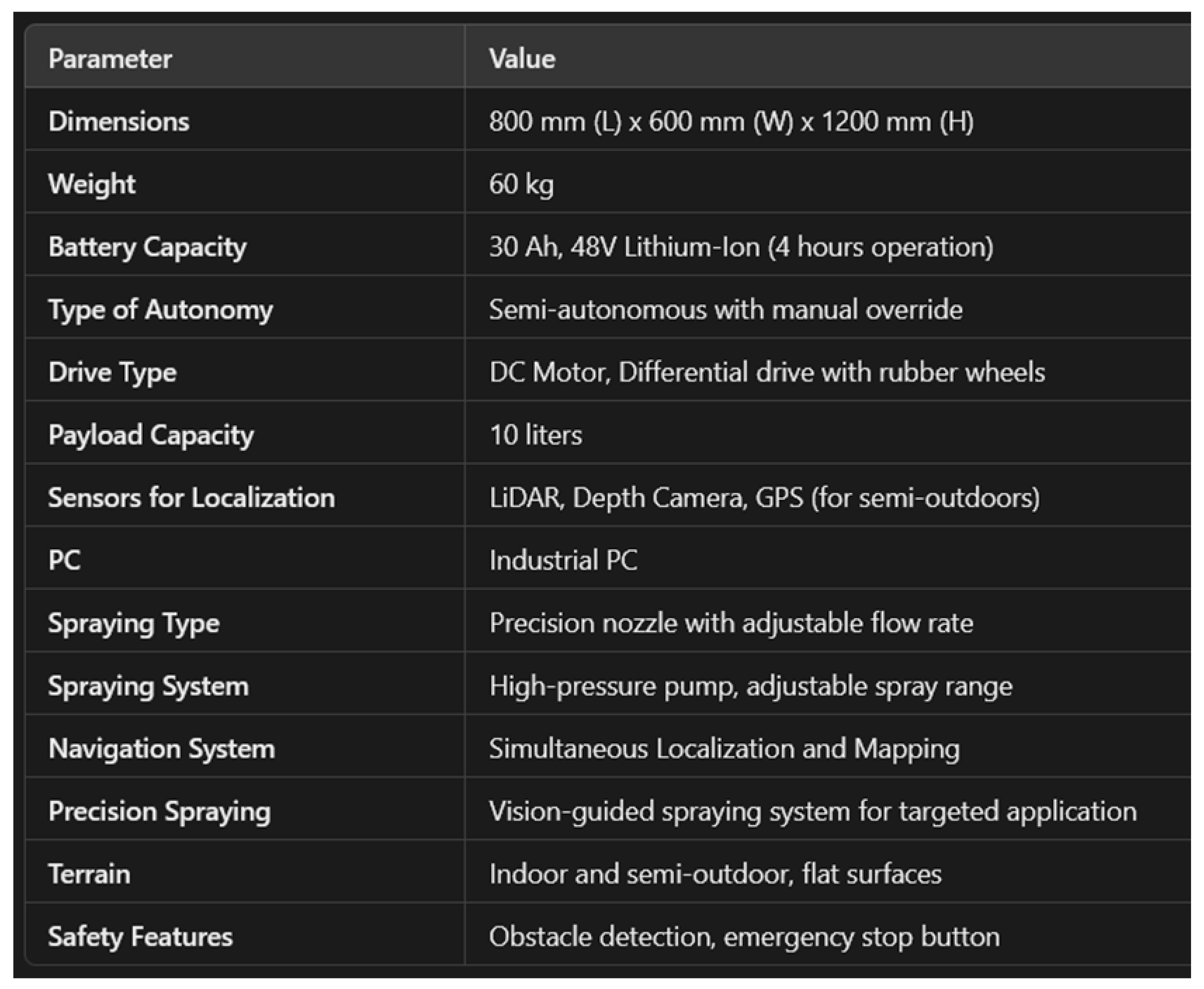

In contrast, Table 3 demonstrates the model’s improved performance with dataset integration. Leveraging the existing spraying robot dataset, the generated specifications are more practical and deployment-ready. The model outputs refined parameters such as a lightweight design (60 kg), a compact size (800 mm × 600 mm × 1200 mm), and a 10-L chemical tank optimized for urban fumigation. Moreover, integrating a differential-drive DC motor with rubber wheels and advanced sensing systems for autonomous navigation aligns closely with real-world needs.

Table 3.

Input prompts given to the proposed model to generate the design of a fumigation robot with a dataset.

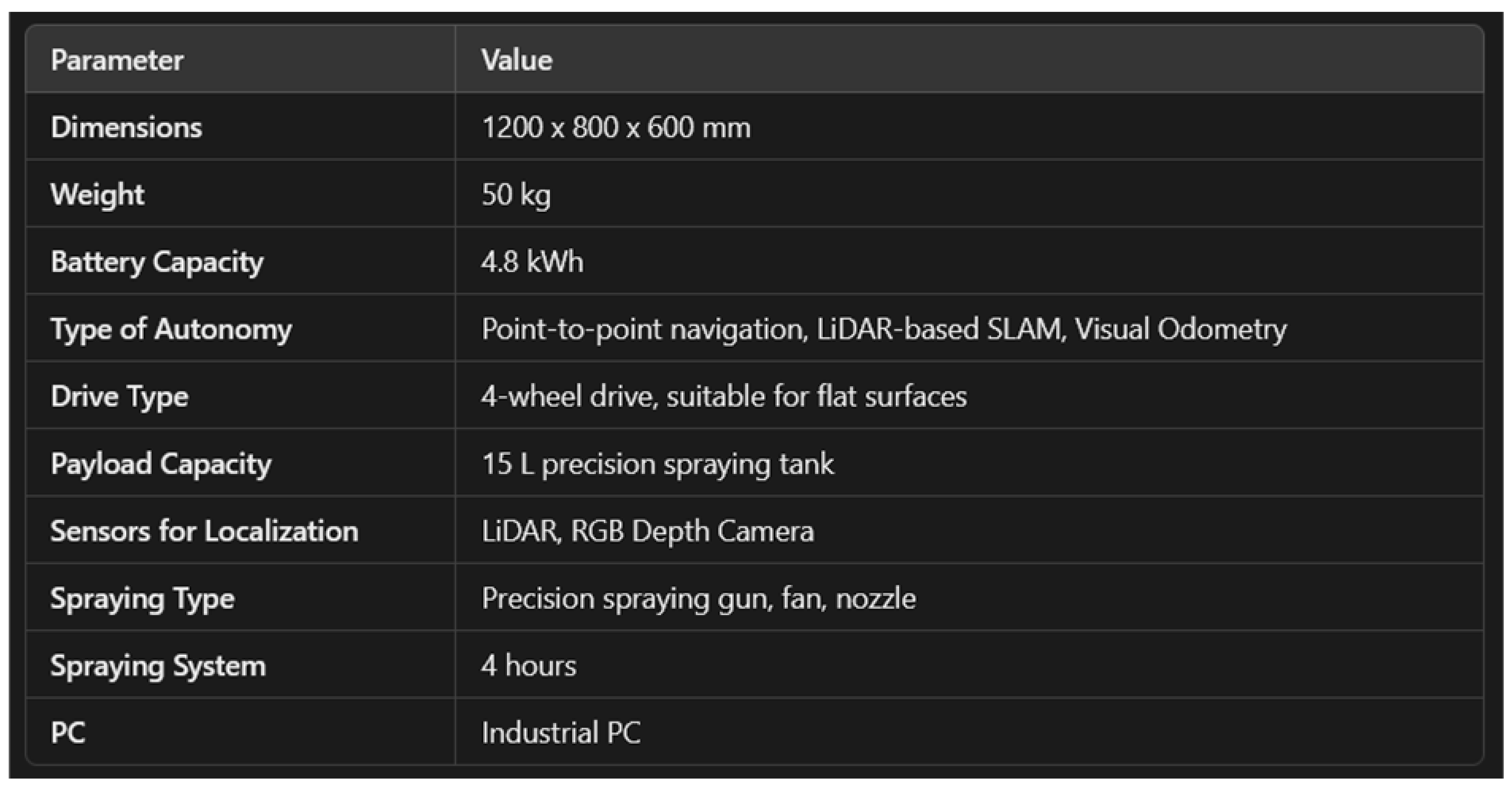

Table 4 provides a comprehensive summary of the design specifications generated for the fumigation service robot tailored to urban landscapes using the proposed model with and without the spraying robot dataset. Upon comparison, the specifications generated with the dataset are more practical and better suited to real-world deployment. Without the dataset, the robot’s design includes increased weight, an oversized battery, costly point-to-point RTK GPS navigation, an over-specified four-wheel drive, and a 15 L chemical tank that increases the payload and drains the battery quickly. In contrast, the specifications generated with the dataset are practically feasible: a lightweight design, a battery capacity optimized for extended operation, and a semi-autonomous navigation system with a differential drive mechanism and rubber wheels for smooth movement across urban terrains. Additionally, the robot features a 10 L chemical tank payload, an industrial-grade PC for efficient processing, and advanced sensors for precise hotspot detection. The precision spraying technique ensures controlled and efficient chemical application, reducing wastage and environmental impact. This comparison highlights the importance of a structured dataset in refining design specifications, resulting in an effective and operationally efficient urban fumigation robot for real-world applications.

Table 4.

Final specification comparison of the fumigation robots generated without and with the dataset.

Compared to the specifications generated without a dataset, the dataset-based design yields a more compact and practically feasible output, particularly in dimensions and overall weight. The recommended battery capacity is sufficient for four hours of continuous operation, avoiding the excessive mass of the 4.8 kWh battery proposed in the no-dataset version, which could add over 30 kg. Moreover, the payload weight is accounted for in the final robot configuration, ensuring a balanced design. The dataset-based specifications also introduce additional detail, such as precision spraying nozzles, simultaneous localization and mapping (SLAM) algorithms, vision-guided spraying, and terrain considerations—all missing from the no-dataset variant. These quantitative outcomes demonstrate the practical benefits of a data-driven design approach in achieving enhanced coverage, efficiency, and robustness for fumigation tasks.

4.3. Development of Fumigation Robot Based on Design Generation

Based on design parameter optimization and finalization using the proposed MMF-VAE model, suitable specifications for a fumigation service robot for urban-landscape applications were identified, as presented in Table 5.

Table 5.

Comparison of the design generated via the proposed model and the developed robot.

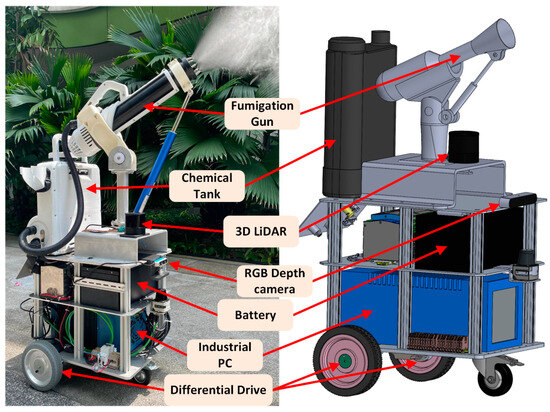

Table 5 compares the specifications generated using the proposed model with those of the developed fumigation robot. The specifications cover key design aspects such as dimensions, weight, fumigation unit capacity, CPU processor, sensors, battery, type of autonomy, and drive type. The comparison shows that the proposed model provides specifications that align closely with real-world requirements, balancing functionality and efficiency. It reflects weight optimization, sensor integration, and battery capacity improvements while maintaining the precise fumigation and robust navigation needed for targeted mosquito control. By integrating multimodal inputs, Gen-AI ensured that the robot’s components and functionalities align with the demands of urban environments, resulting in a robust and efficient solution for pest control. This development highlights the synergy between AI-driven design processes and practical implementation.

The development of the fumigation service robot focuses on efficient maneuverability, autonomous navigation, and precision in fumigation. In this design study, the robot’s locomotion uses a BLDC motor-based differential drive mechanism. BLDC motors offer durability, precise control, energy efficiency, and smooth torque, making them well suited to locomotion on the planar surfaces commonly found in urban landscaping infrastructure. Here, Oriental Motor BLHM450KC-30 motors are considered.

The fumigation robot is equipped with advanced sensing technologies, including three-dimensional light detection and ranging (3D LiDAR) and depth cameras. Three-dimensional LiDAR ensures accurate spatial mapping and precise environmental scanning, while depth cameras add detailed depth perception, obstacle detection, and feature recognition. Together, they enable efficient navigation, target identification, and safe fumigation, even in complex and dynamic urban landscapes. In this prototype, the fusion of the Hesai QT128 3D LiDAR and the Intel RealSense D435i depth camera enhances spatial awareness, ensuring robust detection and navigation.

This study adopts a controlled (precision) spraying technique, which ensures precise chemical application, reduces wastage, and minimizes environmental impact, making it better suited to autonomous fumigation robots than large-area spraying techniques. For fumigation, the robot is equipped with a 10 L chemical tank and a fumigation spray gun capable of delivering 50-micron droplets at a flow rate of 330 mL/min. The top and bottom ends of the spray gun are connected to one end of the linear actuator and to the top end of the 3D-printed mount, allowing tilt motion. The opposite end of the linear actuator is attached to the bottom end of a metal shaft coupled to a stepper motor, which provides panning motion. This setup enables the spray gun to rotate 360°, fumigating an area up to 4.5 m above the ground and within a range of 2.5 to 6 m from the robot. The combination of adjustable tilt and pan motion ensures comprehensive coverage of the target area.
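The spraying figures above imply two simple quantities that are worth working out. The Python sketch below is a back-of-the-envelope illustration rather than a reported result: it computes how long a full 10 L tank lasts at a continuous 330 mL/min, and the area of the ground ring between 2.5 m and 6 m from the robot, assuming flat ground, a stationary robot, and a full 360° sweep. The function names are hypothetical.

```python
import math


def continuous_spray_minutes(tank_l: float, flow_ml_per_min: float) -> float:
    """Minutes of uninterrupted spraying from a full tank."""
    return tank_l * 1000.0 / flow_ml_per_min


def reachable_ring_area_m2(inner_m: float, outer_m: float) -> float:
    """Area of the flat annulus between the inner and outer spray range."""
    return math.pi * (outer_m ** 2 - inner_m ** 2)


minutes = continuous_spray_minutes(10.0, 330.0)
area = reachable_ring_area_m2(2.5, 6.0)
print(f"{minutes:.1f} min of continuous spraying")  # 30.3 min
print(f"{area:.1f} m^2 reachable per stop")         # 93.5 m^2
```

Because a full tank covers only about half an hour of continuous spraying, a 4 h mission on a single tank would depend on spraying intermittently, which is consistent with the hotspot-targeted approach described in this section.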

An autonomous fumigation robot needs an industrial-grade processor to handle computationally demanding tasks such as object detection, multi-sensor integration, precise spraying, and efficient navigation. The Nuvo-10108GC-RTX3080 (Voltrium Systems Pte Ltd., Singapore) industrial processor is used in this study for efficient processing and real-time decision-making. Because fumigation is targeted at detected mosquito hotspots rather than applied continuously, a 48 V 25 Ah battery is sufficient for the 4 h operational runtime. Lithium iron phosphate (LiFePO4) batteries offer a higher energy density than lead-acid, nickel-metal hydride, and nickel–cadmium batteries, together with better thermal stability and cycle life than most other lithium-ion chemistries, making them well suited to autonomous robot applications. The final developed fumigation service robot for urban landscape navigation is presented in Figure 9. Its design demonstrates the integration of advanced navigation and payload systems, making it a robust tool for targeted mosquito control in urban landscape environments [40,41]. The study used design guidelines from existing lab-developed fumigation robots and a spraying robot dataset to generate design specifications with the MMF-VAE model. Based on these specifications, a new urban fumigation service robot was developed, tested, and validated in real-world conditions, demonstrating effective performance.
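The battery sizing can be checked with a short energy-budget calculation. The Python sketch below is illustrative only: it converts the 48 V 25 Ah rating into watt-hours and derives the average power draw that a 4 h runtime allows. The `usable_fraction` parameter and its 0.8 value are assumptions introduced here to show the effect of a depth-of-discharge margin; they are not figures from the study.

```python
def pack_energy_wh(voltage_v: float, capacity_ah: float) -> float:
    """Nominal pack energy in watt-hours."""
    return voltage_v * capacity_ah


def average_power_budget_w(
    energy_wh: float, runtime_h: float, usable_fraction: float = 1.0
) -> float:
    """Average draw the pack can sustain for the given runtime."""
    return energy_wh * usable_fraction / runtime_h


energy = pack_energy_wh(48.0, 25.0)                   # 1200 Wh, i.e., 1.2 kWh
ideal_budget = average_power_budget_w(energy, 4.0)    # 300 W with no margin
# Assumed 80 % usable capacity (hypothetical margin, not from the study).
derated_budget = average_power_budget_w(energy, 4.0, usable_fraction=0.8)

print(energy, ideal_budget, round(derated_budget, 1))
```

Under these assumptions, the whole robot would need to average at most 300 W over the mission (about 240 W with the assumed margin). For comparison, the 4.8 kWh pack proposed in the no-dataset design stores four times the energy of this 1.2 kWh pack.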

Figure 9.

Developed fumigation robot and its major components.

4.4. Limitations and Future Scope

Although Gen-AI offers significant advantages and eases design generation, several limitations need to be addressed:

- Interpretability challenges: misunderstandings or interaction conflicts can arise between the human designer and Gen-AI.

- Lack of contextual understanding: without proper training, this method can produce inaccurate outcomes.

- Biased output: output is generated based only on the training database.

- Overreliance on AI: this method lacks human-centric considerations and creativity.

- Generalization challenges: diverse datasets are required for task generalization and design adaptability.

- User inputs: continuous user input is essential to achieving accurate and desirable results.

- Irreplaceable human role: the designer’s role in defining the problem, interpreting results, and selecting solutions remains irreplaceable.

To overcome these challenges, the following directions for future research in design generation via Gen-AI need to be investigated:

- Hybrid architecture: incorporating additional DGMs, GANs, or transformers can improve diversity and quality while effectively managing complex multimodal data.

- Design validation metrics: integrating automated evaluation and validation metrics ensures that the generated design satisfies the required functionality, aesthetics, and core requirements.

- Interpretability and explainability: improving transparency makes generated designs easier to interpret and validate.

- Human collaboration: develop a framework for closer collaboration between AI and human designers in design generation.

- Energy and resource optimization: investigate approaches to integrating energy consumption and resource allocation metrics into the generative process, guiding designs to be more cost- and power-efficient.

- Cross-domain transfer learning: leverage insights from adjacent fields (e.g., automated manufacturing and construction robotics) to enrich the dataset and adapt the model’s learned representations for broader applications.

- Simulation-driven evaluation: employ large-scale simulation environments (e.g., Gazebo and Webots) to rapidly test generated designs in virtual conditions before physical deployment, reducing overall development cycles.

- Multi-agent collaboration: extend the MMF-VAE framework to design swarms or collaborative robot teams, focusing on communication protocols, task allocation, and synchronized operation.

- Explainable generative models: develop more interpretable architectures, such as feature-attribution or attention mechanisms, to clarify how specific design elements are selected and refined.

5. Conclusions

This study demonstrates the development of an urban landscape fumigation service robot using a Gen-AI design process integrated with a readily available spraying robot dataset. The proposed methodology highlights the significant impact of data-driven and feedback-based approaches in generating precise and practical design specifications, ensuring that the robot meets the functional, operational, and aesthetic requirements for urban fumigation applications. The design generation process was systematically structured into three key stages: parameter fixation, design specification generation, and robot development. The first stage identified the importance of both functionality-based and aesthetic-based specifications, ensuring a balance between operational efficiency and user preferences. The second stage used the proposed MMF-VAE model to generate design specifications with and without the spraying robot dataset. A comparative analysis revealed that designs generated using the dataset were more feasible, lightweight, and optimized for real-world deployment. The final stage focused on developing the fumigation robot, incorporating specifications generated from the proposed model. The integration of multimodal inputs, iterative feedback refinement, and a spraying robot dataset in the proposed model ensured a robust, accurate, and adaptable design process that enhanced the practicality of the generated specifications. This study emphasizes the potential of Gen-AI models in transforming traditional design workflows by leveraging structured datasets and feedback mechanisms. Future research can explore extending this approach to other autonomous service robots, integrating additional datasets, adopting hybrid architectures that combine multiple DGMs to handle complex design structures, and enhancing design generalization for broader applications.

Author Contributions

Conceptualization, P.K.C., B.P.D., P.V., S.M.B.P.S., M.A.V.J.M. and M.R.E.; methodology, P.K.C. and B.P.D.; software, P.K.C.; writing—original draft, P.K.C. and B.P.D.; resources, supervision, and funding acquisition, M.R.E. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the National Robotics Programme under its National Robotics Programme (NRP) BAU, Ermine III: Deployable Reconfigurable Robots, Award No. M22NBK0054, and it was also supported by the SUTD Growth Plan (SGP) Grant, Grant Ref. No. PIE-SGP-DZ-2023-01.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author. The data are not publicly available due to privacy concerns, proprietary or confidential information, and intellectual property belonging to the organization that restricts its public dissemination.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. World Health Organization. Disease Outbreak News, Dengue-Global Situation; World Health Organization: Geneva, Switzerland, 2023; pp. 1–14.

2. Onen, H.; Luzala, M.M.; Kigozi, S.; Sikumbili, R.M.; Muanga, C.-J.K.; Zola, E.N.; Wendji, S.N.; Buya, A.B.; Balciunaitiene, A.; Viškelis, J. Mosquito-Borne Diseases and Their Control Strategies: An Overview Focused on Green Synthesized Plant-Based Metallic Nanoparticles. Insects 2023, 14, 221.

3. Choi, J.; Cha, W.; Park, M.-G. Evaluation of the effect of photoplethysmograms on workers’ exposure to methyl bromide using second derivative. Front. Public Health 2023, 11, 1224143.

4. Bordas, A.; Le Masson, P.; Thomas, M.; Weil, B. What is generative in generative artificial intelligence? A design-based perspective. Res. Eng. Des. 2024, 35, 1–17.

5. Wadinambiarachchi, S.; Kelly, R.M.; Pareek, S.; Zhou, Q.; Velloso, E. The Effects of Generative AI on Design Fixation and Divergent Thinking. In Proceedings of the CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024; pp. 1–18.

6. Regenwetter, L.; Nobari, A.H.; Ahmed, F. Deep generative models in engineering design: A review. J. Mech. Des. 2022, 144, 071704.

7. Saadi, J.I.; Yang, M.C. Generative design: Reframing the role of the designer in early-stage design process. J. Mech. Des. 2023, 145, 041411.

8. Zhu, Q.; Luo, J. Generative transformers for design concept generation. J. Comput. Inf. Sci. Eng. 2023, 23, 041003.

9. Fitriawijaya, A.; Jeng, T. Integrating multimodal generative AI and blockchain for enhancing generative design in the early phase of architectural design process. Buildings 2024, 14, 2533.

10. Konstantinou, C.; Antonarakos, D.; Angelakis, P.; Gkournelos, C.; Michalos, G.; Makris, S. Leveraging Generative AI Prompt Programming for Human-Robot Collaborative Assembly. Procedia CIRP 2024, 128, 621–626.

11. Vemprala, S.H.; Bonatti, R.; Bucker, A.; Kapoor, A. ChatGPT for robotics: Design principles and model abilities. IEEE Access 2024, 12, 55682–55696.

12. Zhang, Z.; Chai, W.; Wang, J. Mani-GPT: A generative model for interactive robotic manipulation. Procedia Comput. Sci. 2023, 226, 149–156.

13. Oh, S.; Jung, Y.; Kim, S.; Lee, I.; Kang, N. Deep generative design: Integration of topology optimization and generative models. J. Mech. Des. 2019, 141, 111405.

14. Regenwetter, L.; Srivastava, A.; Gutfreund, D.; Ahmed, F. Beyond statistical similarity: Rethinking metrics for deep generative models in engineering design. Comput.-Aided Des. 2023, 165, 103609.

15. Gan, Y.; Ji, Y.; Jiang, S.; Liu, X.; Feng, Z.; Li, Y.; Liu, Y. Integrating aesthetic and emotional preferences in social robot design: An affective design approach with Kansei Engineering and Deep Convolutional Generative Adversarial Network. Int. J. Ind. Ergon. 2021, 83, 103128.

16. Aristeidou, C.; Dimitropoulos, N.; Michalos, G. Generative AI and neural networks towards advanced robot cognition. CIRP Ann. 2024, 73, 21–24.

17. Borkar, K.K.; Singh, M.K.; Dasari, R.K.; Babbar, A.; Pandey, A.; Jain, U.; Mishra, P. Path planning design for a wheeled robot: A generative artificial intelligence approach. Int. J. Interact. Des. Manuf. (IJIDeM) 2024, 1–12.

18. Chan, W.K.; Wang, P.; Yeow, R.C.-H. Creation of Novel Soft Robot Designs using Generative AI. arXiv 2024, arXiv:2405.01824.

19. Wang, L.; Chan, Y.-C.; Ahmed, F.; Liu, Z.; Zhu, P.; Chen, W. Deep generative modeling for mechanistic-based learning and design of metamaterial systems. Comput. Methods Appl. Mech. Eng. 2020, 372, 113377.

20. Bucher, M.J.J.; Kraus, M.A.; Rust, R.; Tang, S. Performance-based generative design for parametric modeling of engineering structures using deep conditional generative models. Autom. Constr. 2023, 156, 105128.

21. Ramezani, A.; Dangol, P.; Sihite, E.; Lessieur, A.; Kelly, P. Generative design of NU’s Husky Carbon, a morpho-functional, legged robot. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 4040–4046.

22. Demirel, H.O.; Goldstein, M.H.; Li, X.; Sha, Z. Human-centered generative design framework: An early design framework to support concept creation and evaluation. Int. J. Hum.–Comput. Interact. 2024, 40, 933–944.

23. Gradišar, L.; Dolenc, M.; Klinc, R. Towards machine learned generative design. Autom. Constr. 2024, 159, 105284.

24. Chen, M.; Sun, Y.; Cai, X.; Liu, B.; Ren, T. Design and implementation of a novel precision irrigation robot based on an intelligent path planning algorithm. arXiv 2020, arXiv:2003.00676.

25. Liu, Z.; Wang, X.; Zheng, W.; Lv, Z.; Zhang, W. Design of a sweet potato transplanter based on a robot arm. Appl. Sci. 2021, 11, 9349.

26. Naïo Technologies. Oz—The Farming Assistant for Time-Consuming and Arduous Tasks. Available online: https://www.naio-technologies.com/en/oz/ (accessed on 10 December 2024).

27. ViTiBOT. BAKUS—Electric Vine Straddle Robot That Meets the Challenges of Sustainable Viticulture. Available online: https://vitibot.fr/vineyards-robots-bakus/?lang=en (accessed on 10 December 2024).

28. ROWBOT. ROWBOT—The Future of Farming Robotic Solutions for Row Crop Agriculture. Available online: https://www.rowbot.com/ (accessed on 10 December 2024).

29. Chebrolu, N.; Lottes, P.; Schaefer, A.; Winterhalter, W.; Burgard, W.; Stachniss, C. Agricultural robot dataset for plant classification, localization and mapping on sugar beet fields. Int. J. Robot. Res. 2017, 36, 1045–1052.

30. Adamides, G.; Katsanos, C.; Christou, G.; Xenos, M.; Papadavid, G.; Hadzilacos, T. User interface considerations for telerobotics: The case of an agricultural robot sprayer. In Proceedings of the Second International Conference on Remote Sensing and Geoinformation of the Environment (RSCy2014), Paphos, Cyprus, 7–10 April 2014; pp. 541–548.

31. Tom. Tom Autonomously Digitises the Field, Helping Farmers Detect Every Weed and Understand the Crop’s Health. Available online: https://smallrobotco.com/#tom (accessed on 10 December 2024).

32. Zhou, L.; Hu, A.; Cheng, Y.; Zhang, W.; Zhang, B.; Lu, X.; Wu, Q.; Ren, N. Barrier-free tomato fruit selection and location based on optimized semantic segmentation and obstacle perception algorithm. Front. Plant Sci. 2024, 15, 1460060.

33. Bykov, S. World trends in the creation of robots for spraying crops. Proc. E3S Web Conf. 2023, 380, 01011.

34. Baltazar, A.R.; Santos, F.N.d.; Moreira, A.P.; Valente, A.; Cunha, J.B. Smarter robotic sprayer system for precision agriculture. Electronics 2021, 10, 2061.

35. Bhandari, S.; Raheja, A.; Renella, N.; Ramirez, R.; Uryeu, D.; Samuel, J. Collaboration between UAVs and UGVs for site-specific application of chemicals. In Proceedings of the Autonomous Air and Ground Sensing Systems for Agricultural Optimization and Phenotyping VIII, Orlando, FL, USA, 30 April–4 May 2023; pp. 123–130.

36. Iriondo, A.; Lazkano, E.; Ansuategi, A.; Rivera, A.; Lluvia, I.; Tubío, C. Learning positioning policies for mobile manipulation operations with deep reinforcement learning. Int. J. Mach. Learn. Cybern. 2023, 14, 3003–3023.

37. Bogue, R. Robots addressing agricultural labour shortages and environmental issues. Ind. Robot. Int. J. Robot. Res. Appl. 2024, 51, 1–6.

38. Cantelli, L.; Bonaccorso, F.; Longo, D.; Melita, C.D.; Schillaci, G.; Muscato, G. A small versatile electrical robot for autonomous spraying in agriculture. AgriEngineering 2019, 1, 391–402.

39. Oberti, R.; Marchi, M.; Tirelli, P.; Calcante, A.; Iriti, M.; Tona, E.; Hočevar, M.; Baur, J.; Pfaff, J.; Schütz, C. Selective spraying of grapevines for disease control using a modular agricultural robot. Biosyst. Eng. 2016, 146, 203–215.

40. Konduri, S.; Chittoor, P.K.; Dandumahanti, B.P.; Yang, Z.; Elara, M.R.; Jaichandar, G.H. Boa Fumigator: An Intelligent Robotic Approach for Mosquito Control. Technologies 2024, 12, 255.

41. Jeyabal, S.; Vikram, C.; Chittoor, P.K.; Elara, M.R. Revolutionizing Urban Pest Management with Sensor Fusion and Precision Fumigation Robotics. Appl. Sci. 2024, 14, 7382.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).