Enhancing Driving Safety of Personal Mobility Vehicles Using On-Board Technologies

Abstract

1. Introduction

2. Related Works

2.1. Driving Safety Enhancement Techniques by Obstacle Detection

2.2. Driving Safety Enhancement Techniques by On-Device AI

2.3. Situational Awareness and Warning System

2.4. Additional Considerations for Electric Wheelchair Safety and Performance

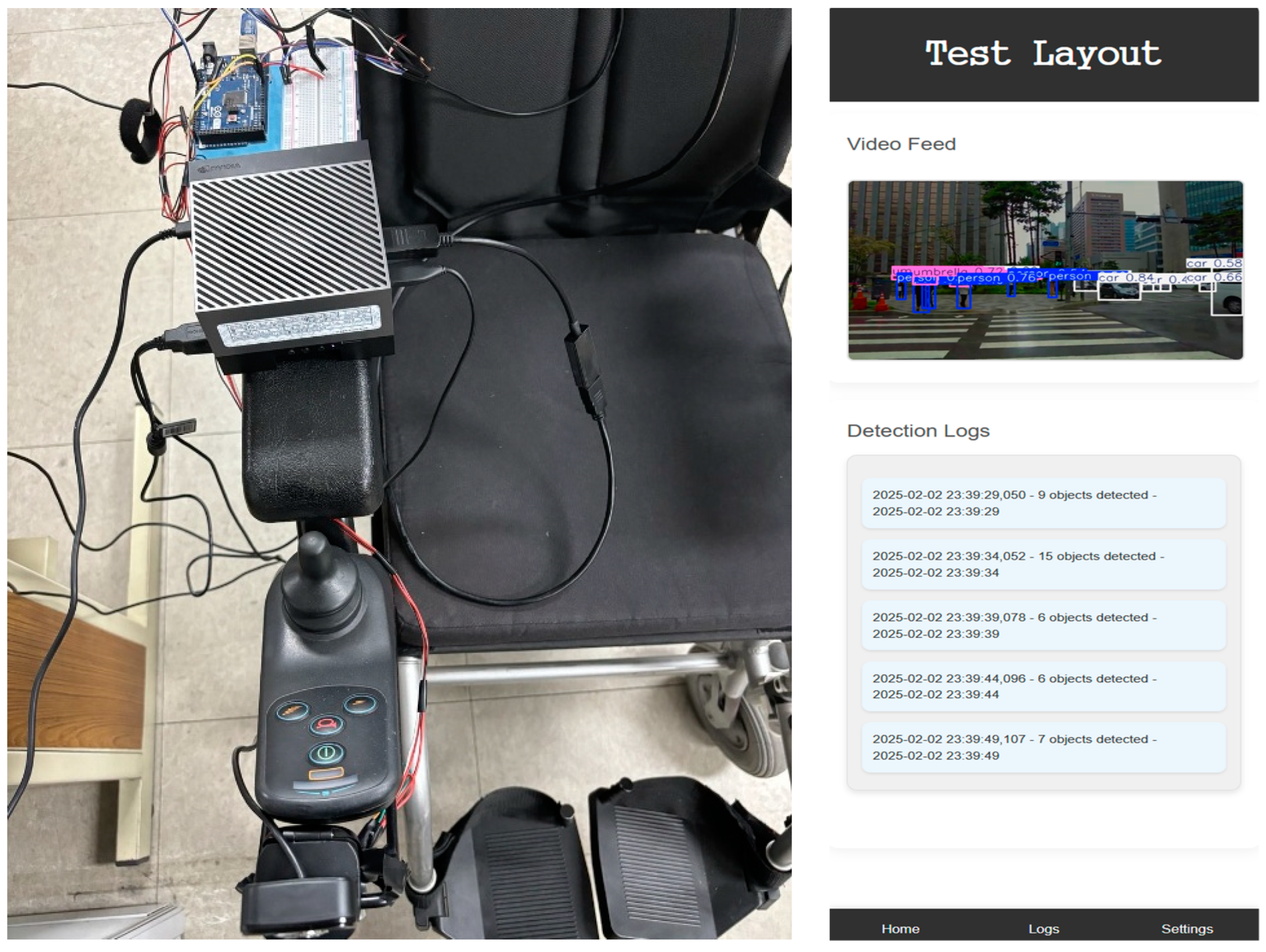

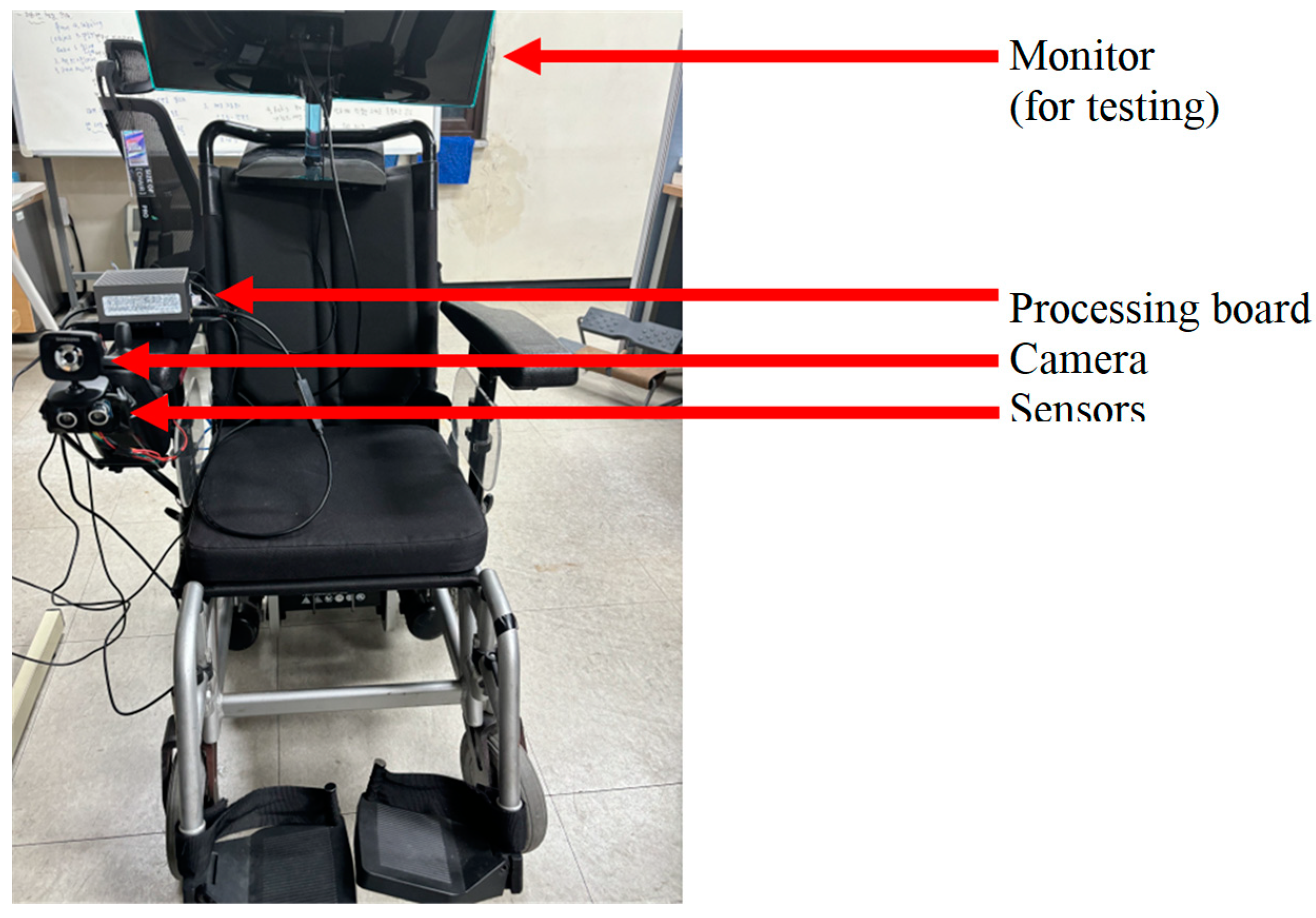

3. System Architecture, Design, and Implementation

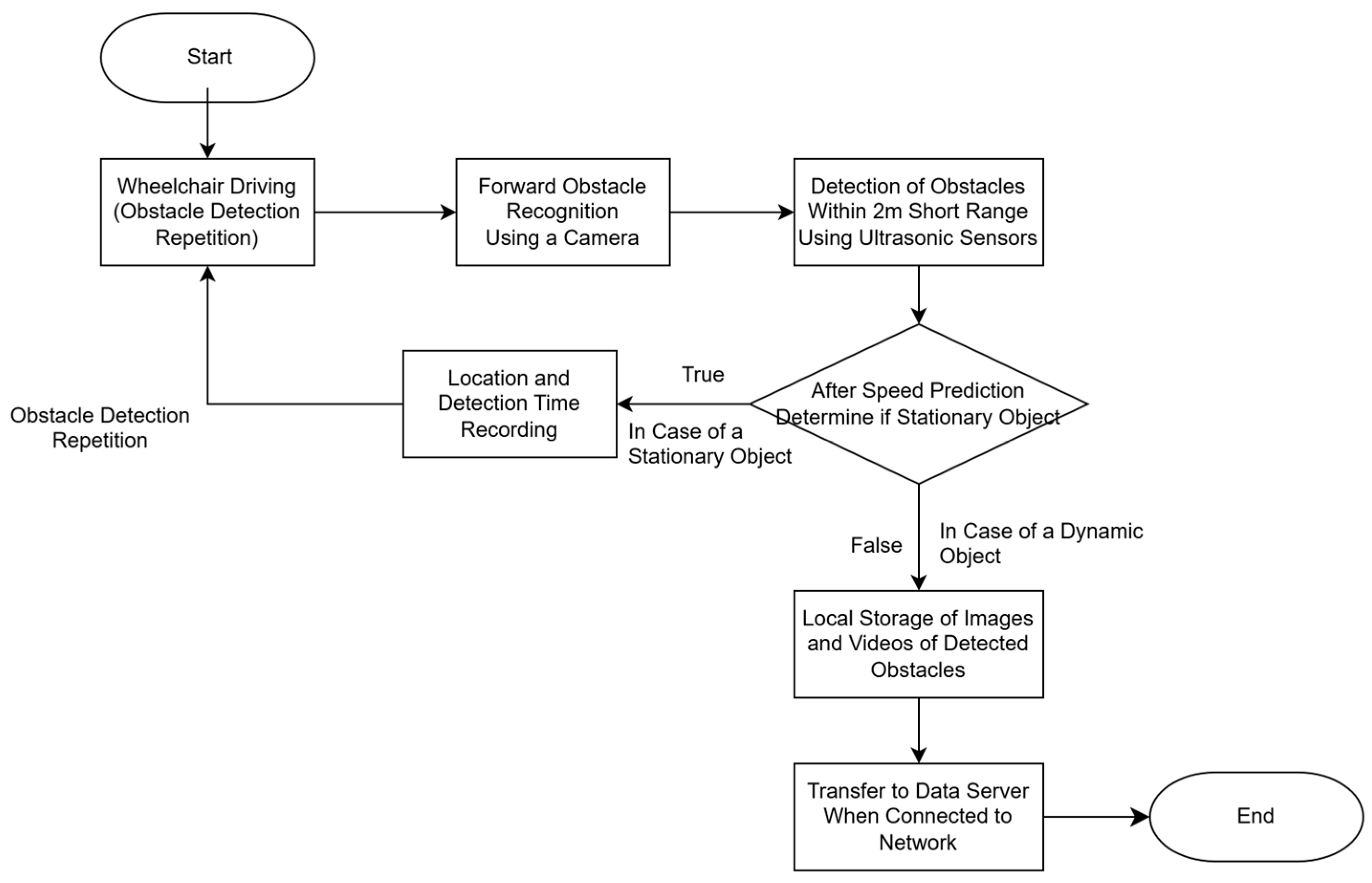

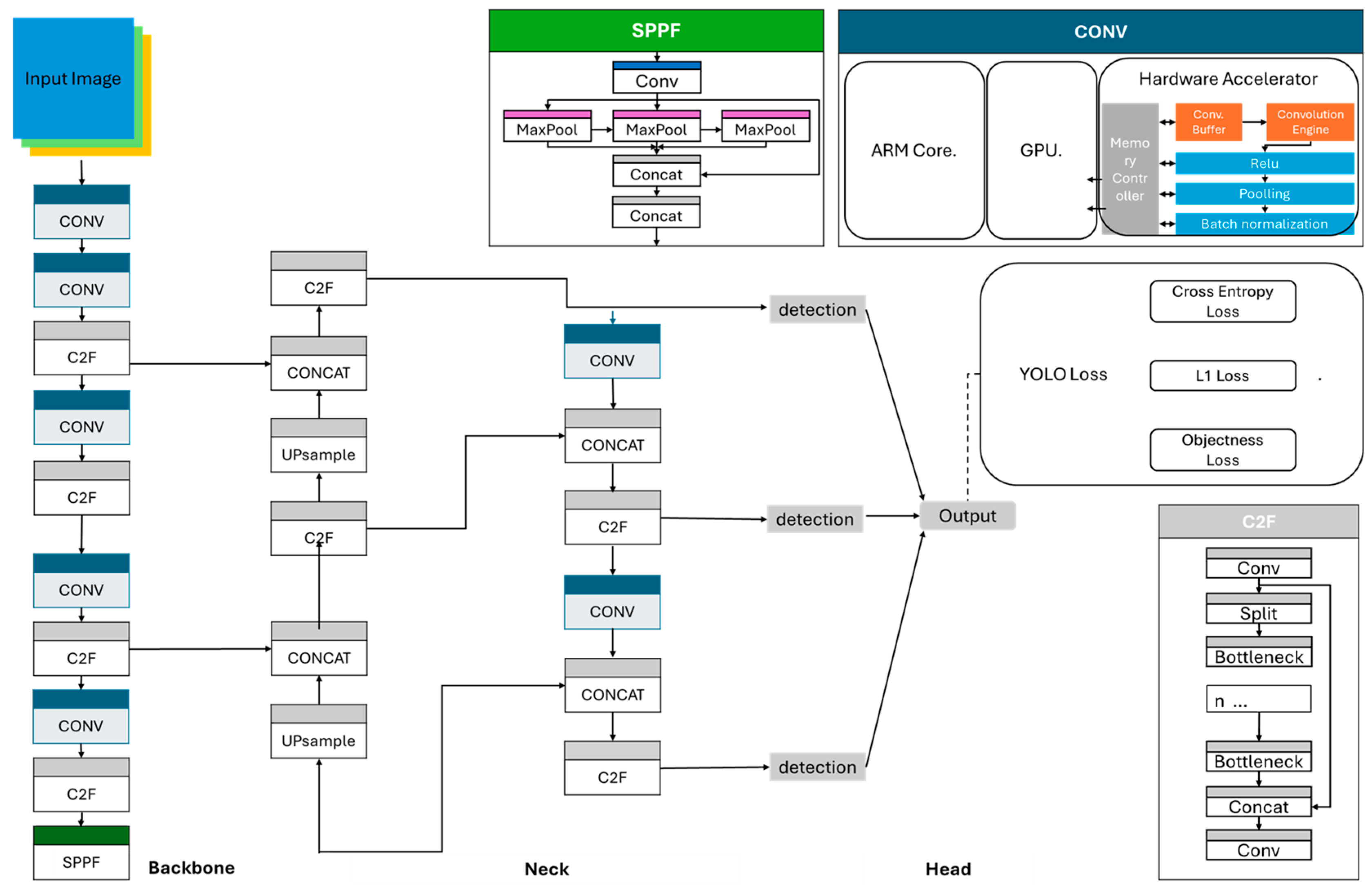

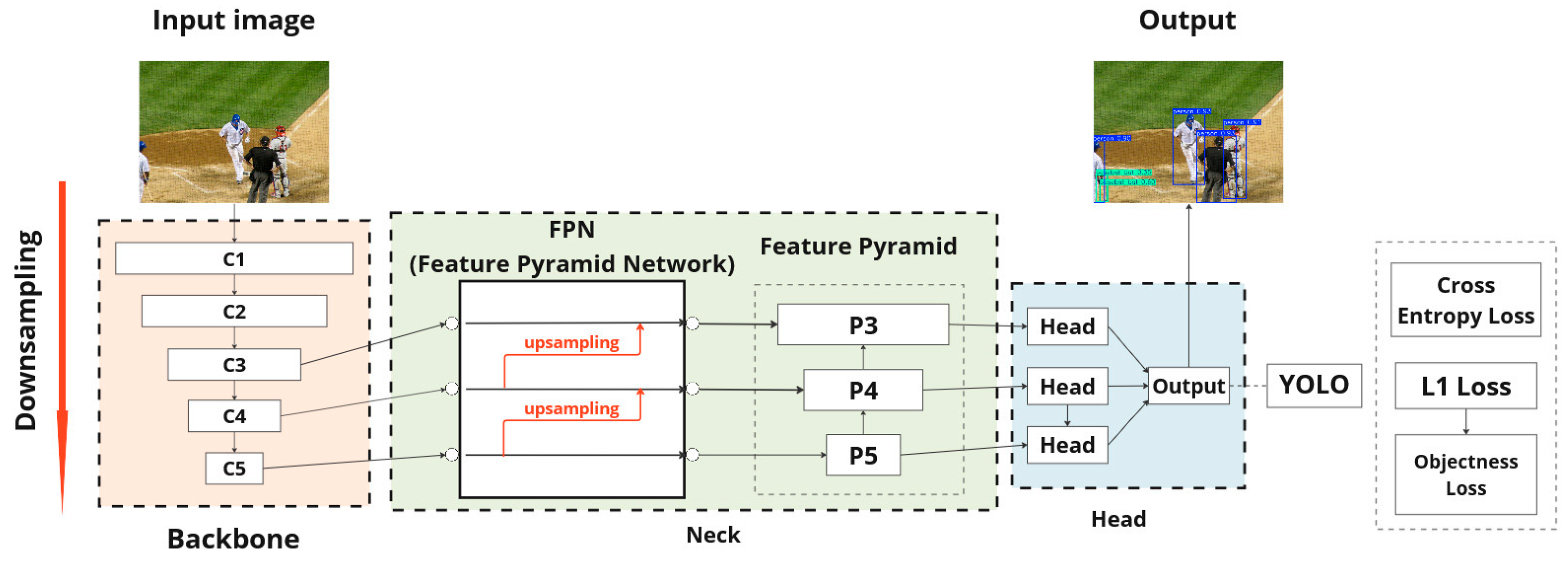

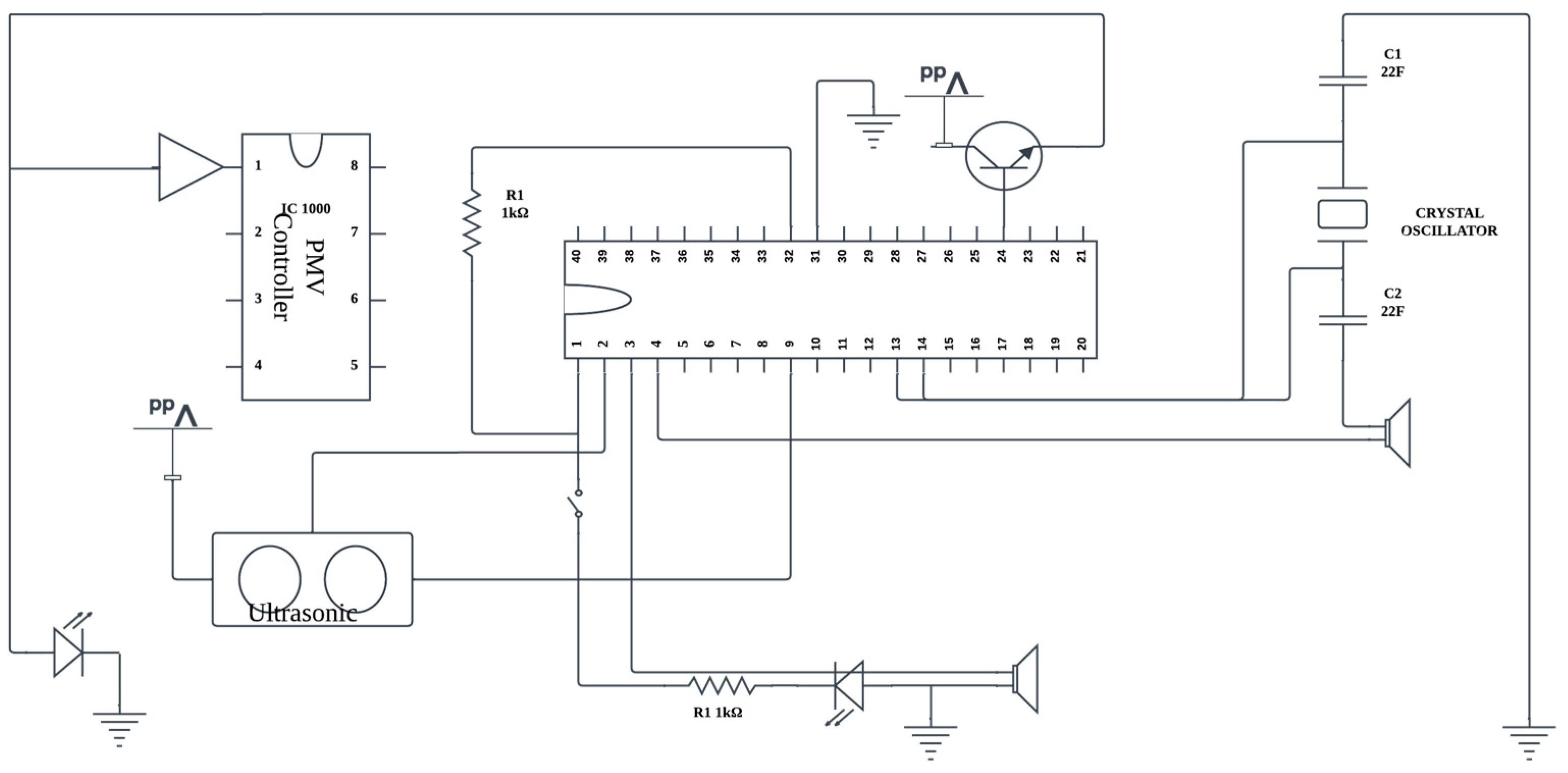

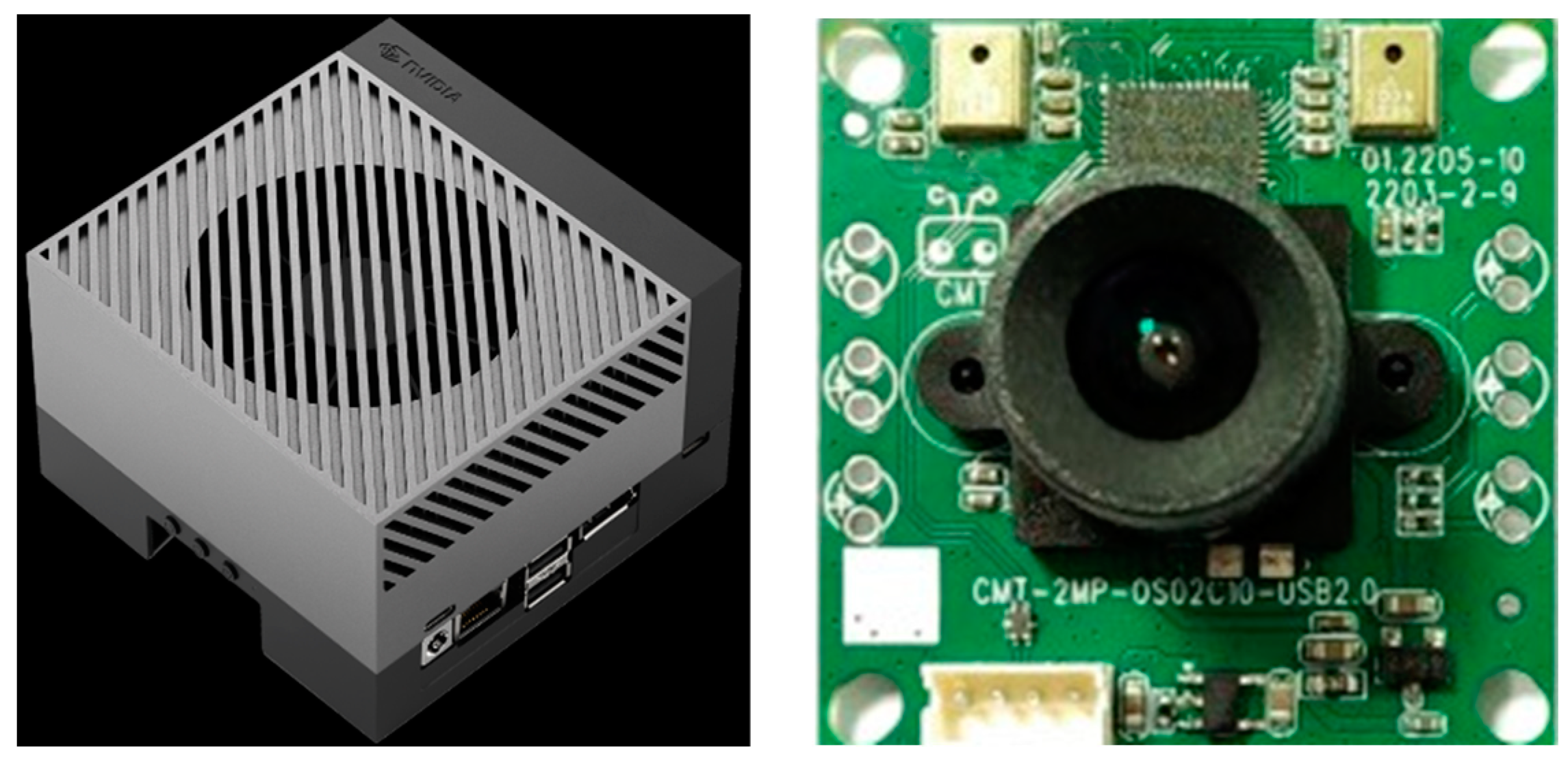

3.1. Enhancing Driving Safety by Forward Obstacle Detection

3.2. Enhancing Driving Safety by Ultrasonic Sensors and Emergency Stop

3.3. Data Acquisition and Statistics

4. Experimental Results

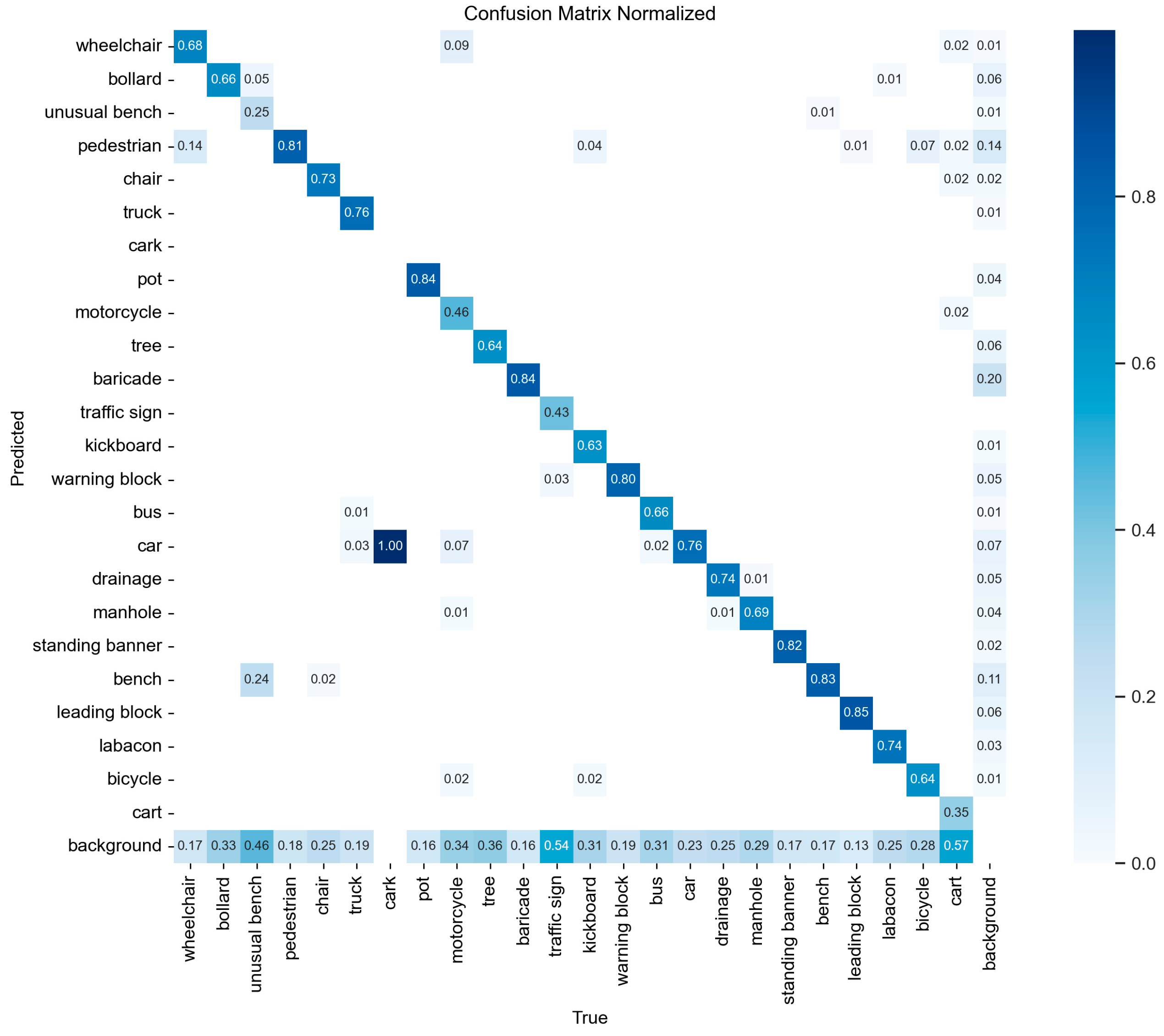

4.1. Confusion Matrix

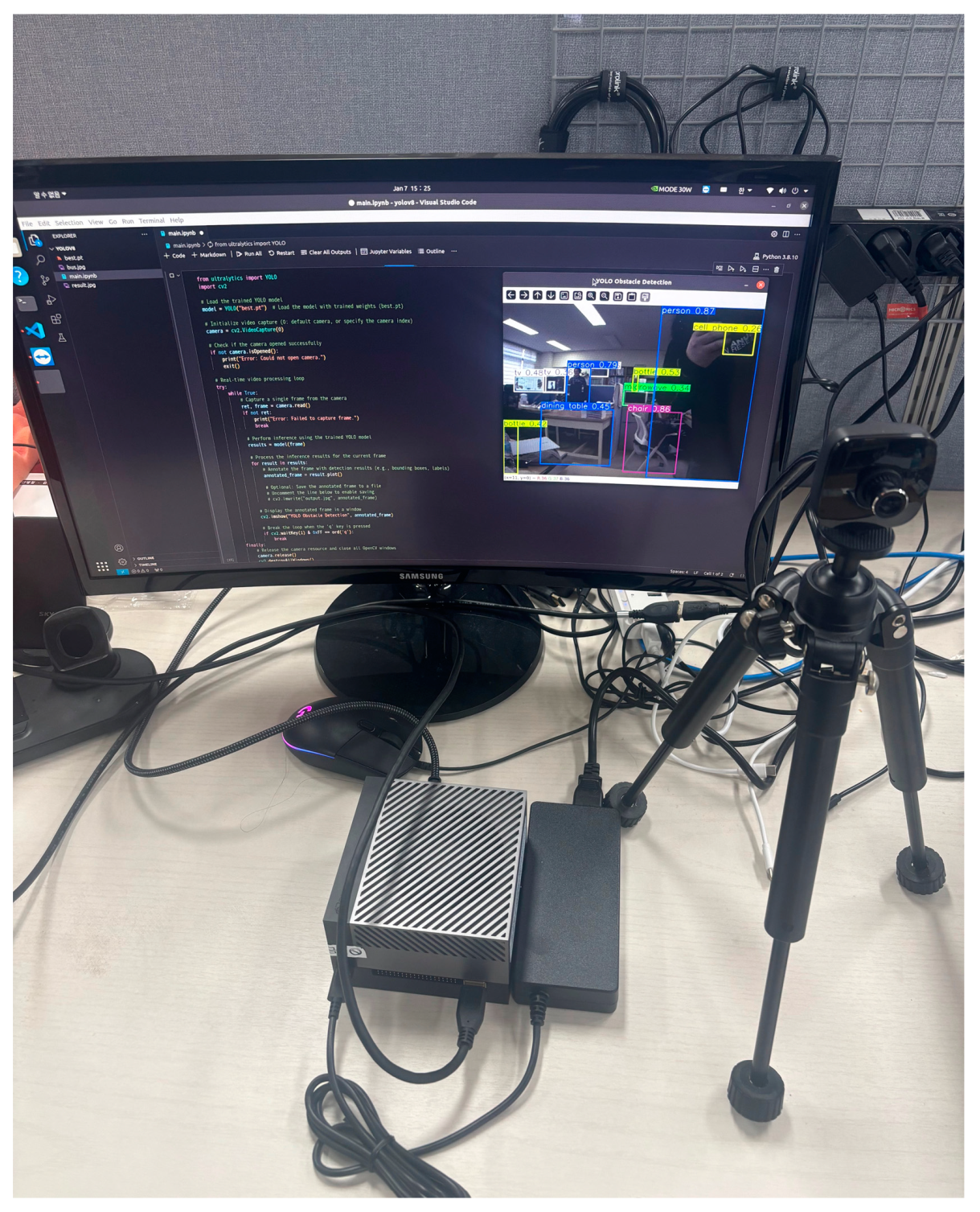

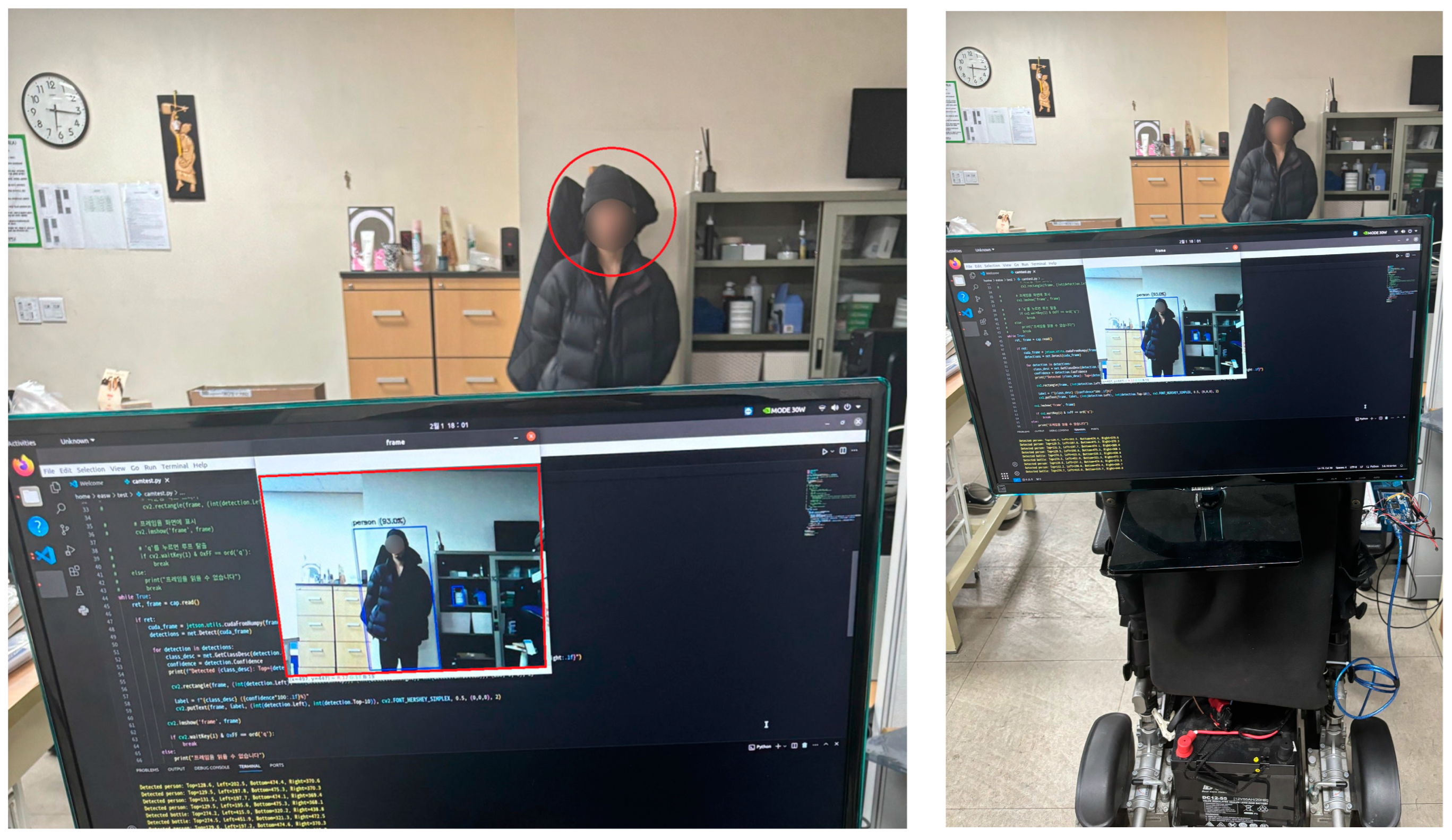

4.2. System Implementation and Experiments for Auto-Stop and TTS

```javascript
// Define the options for the fetch request
const options = {
  headers: {
    "content-type": "application/json; charset=UTF-8",
  },
  body: JSON.stringify(data),
  method: "POST",
};

fetch(api_url, options)
  .then((response) => {
    if (!response.ok) {
      throw new Error("Error with Text to Speech conversion");
    }
    // Return the parsing promise so that JSON errors also reach .catch()
    return response.json();
  })
  .then((result) => {
    const audioContent = result.audioContent; // base64 encoded audio
    const audioBuffer = Buffer.from(audioContent, "base64");
    res.send(audioBuffer);
  })
  .catch((error) => {
    res.status(500).send({ error: error.message });
  });
```

```javascript
// Function to perform Text-to-Speech (TTS) API call and play the resulting audio.
// arrayBufferToString and base64ToArrayBuffer are helper functions that are
// not included in this listing.
function ttsApi() {
  fetch('/tts')
    .then((response) => {
      if (!response.ok) {
        throw new Error(response.statusText);
      }
      return response.arrayBuffer();
    })
    .then((arrayBuffer) => {
      const audioContent = arrayBufferToString(arrayBuffer);
      const audioContext = new (window.AudioContext || window.webkitAudioContext)();
      const source = audioContext.createBufferSource();
      const audioData = base64ToArrayBuffer(audioContent);
      audioContext.decodeAudioData(audioData, function (buffer) {
        source.buffer = buffer;
        source.connect(audioContext.destination);
        source.start(0);
      });
    })
    .catch((error) => {
      console.error('Error:', error);
    });
}
```
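The client listing calls `arrayBufferToString` and `base64ToArrayBuffer` without showing them. The following is a minimal sketch of what such helpers might look like, not the authors' implementation. It assumes the `/tts` route returns the audio as base64 text. If the route instead returns raw audio bytes, the array buffer could presumably be passed to `decodeAudioData` directly, and neither helper would be needed.

```javascript
// Hypothetical helpers: the names come from the client listing, but the
// bodies below are an assumption, since the source does not show them.

// Interpret each byte of the buffer as one character (suitable for
// ASCII payloads such as base64 text).
function arrayBufferToString(arrayBuffer) {
  const bytes = new Uint8Array(arrayBuffer);
  let text = "";
  for (let i = 0; i < bytes.length; i++) {
    text += String.fromCharCode(bytes[i]);
  }
  return text;
}

// Decode a base64 string into an ArrayBuffer of raw bytes.
function base64ToArrayBuffer(base64) {
  const binary = atob(base64); // one character per decoded byte
  const bytes = new Uint8Array(binary.length);
  for (let i = 0; i < binary.length; i++) {
    bytes[i] = binary.charCodeAt(i);
  }
  return bytes.buffer;
}
```

The first helper recovers the base64 text from the response body, and the second turns that text back into the bytes that `decodeAudioData` expects.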

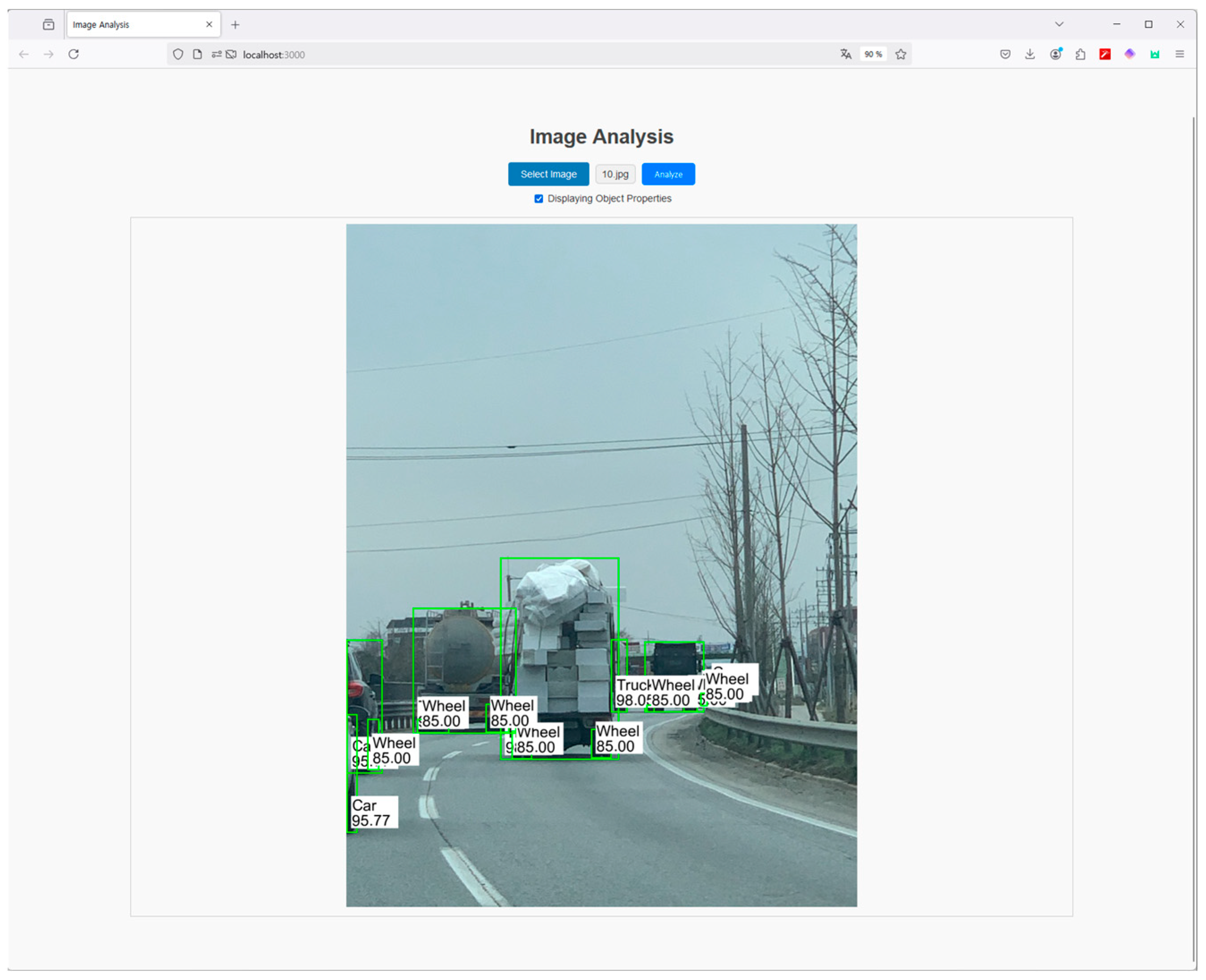

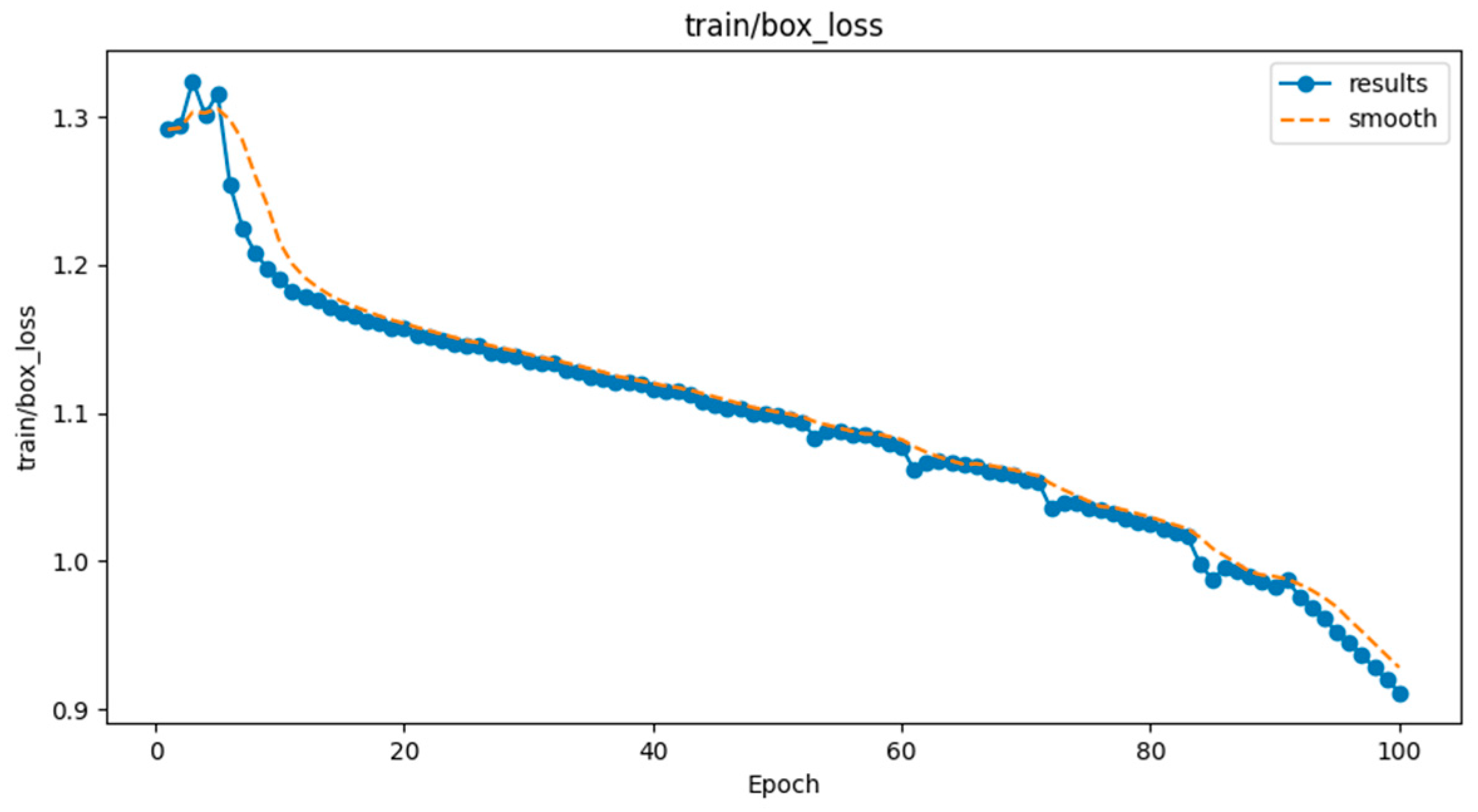

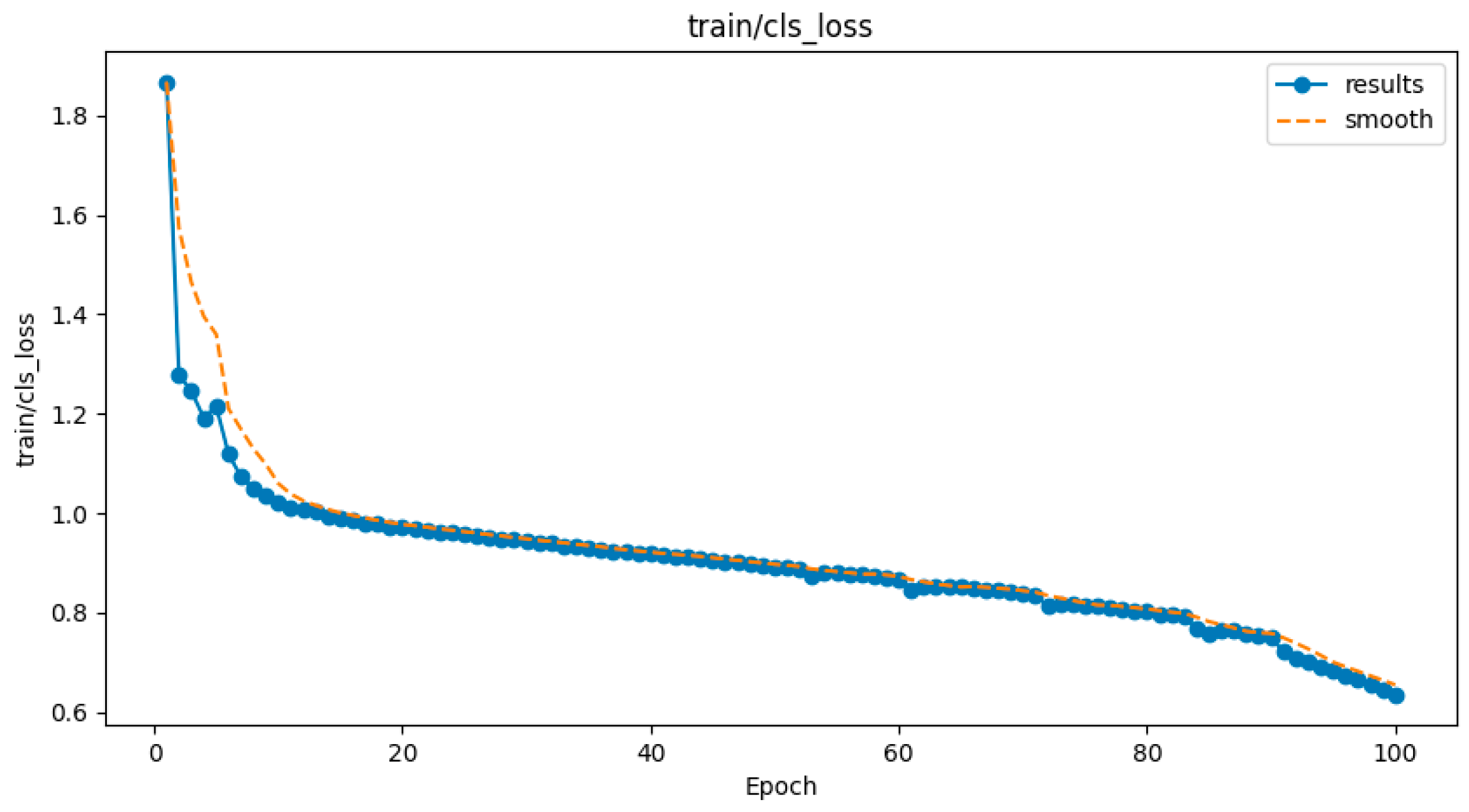

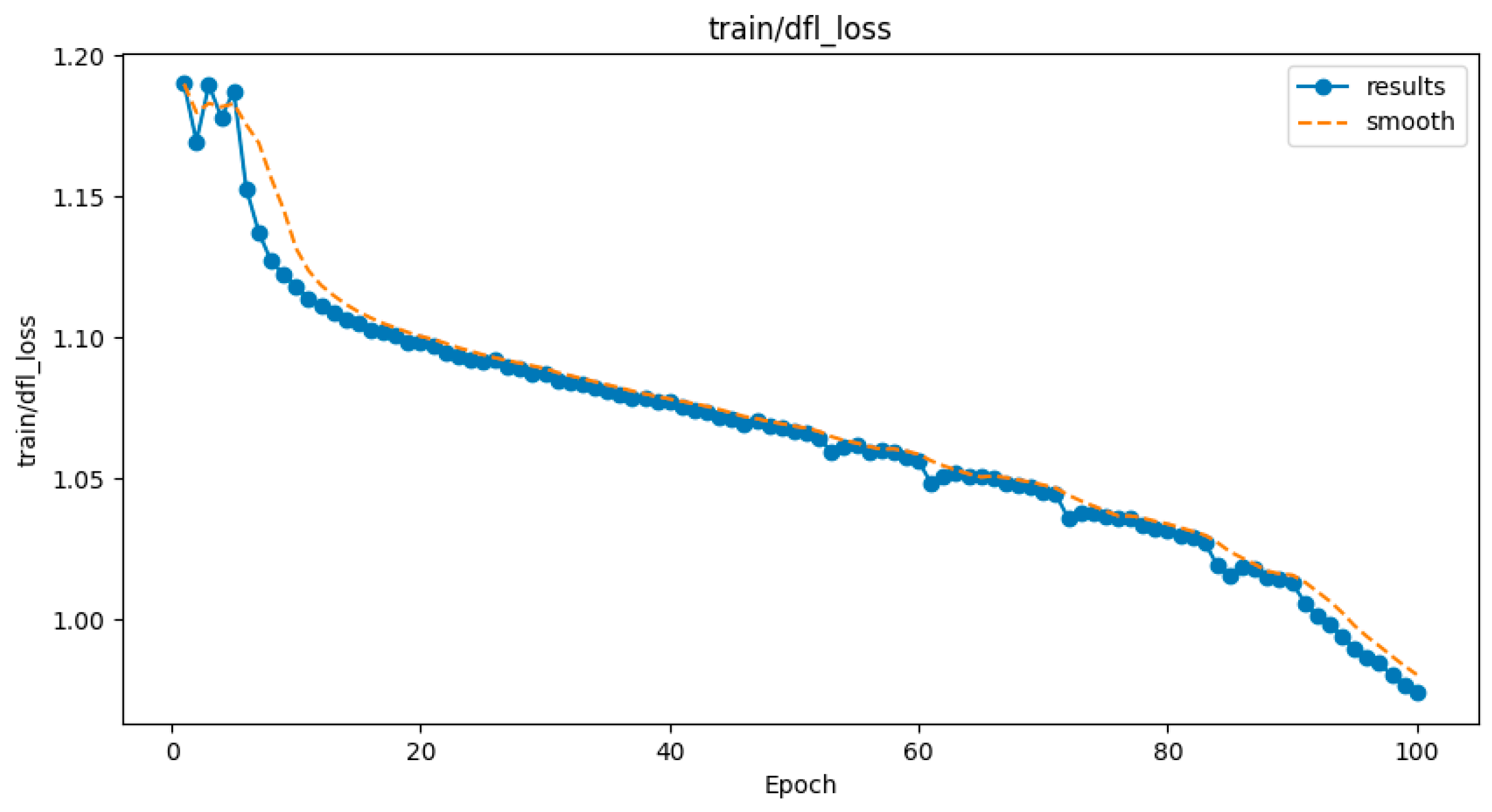

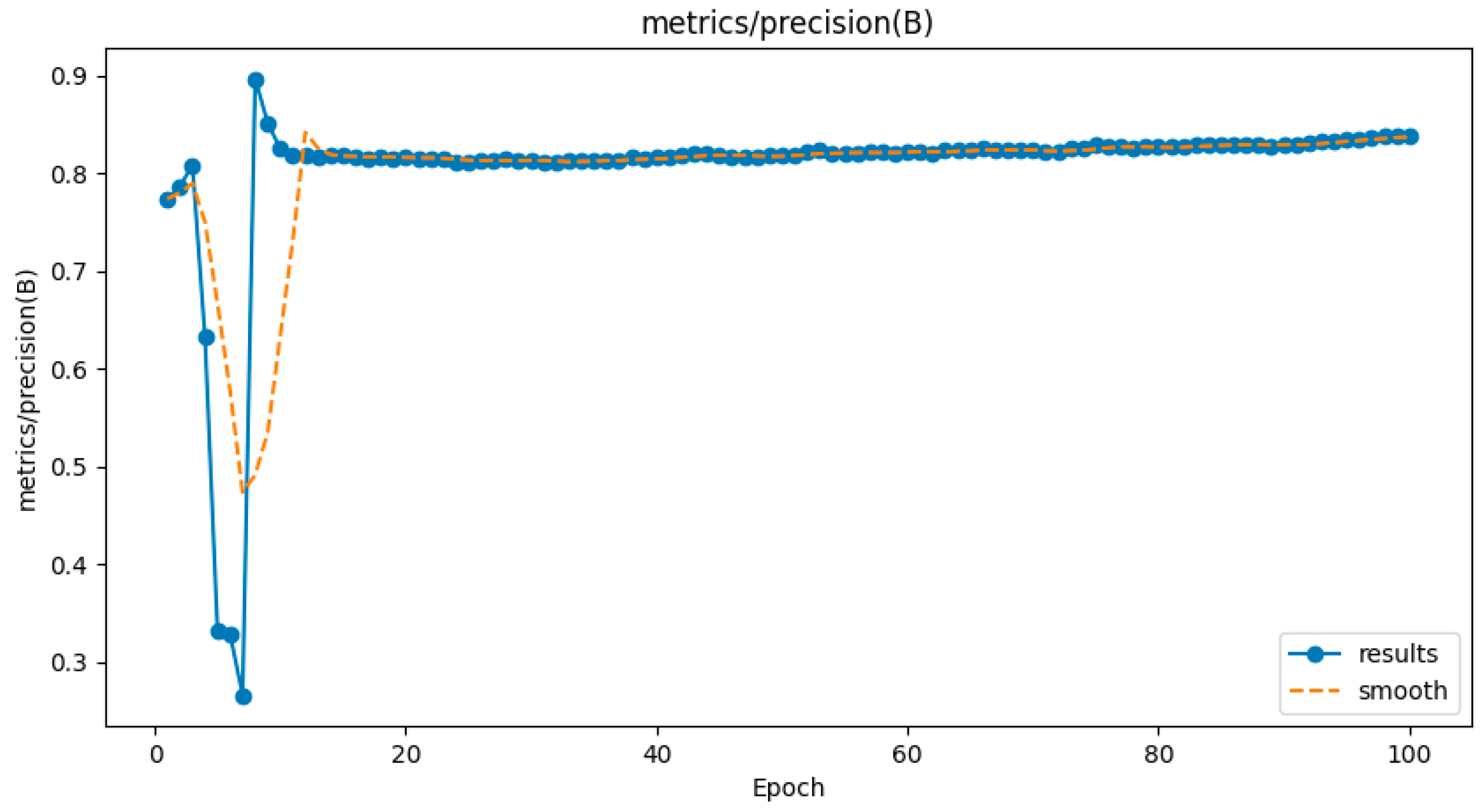

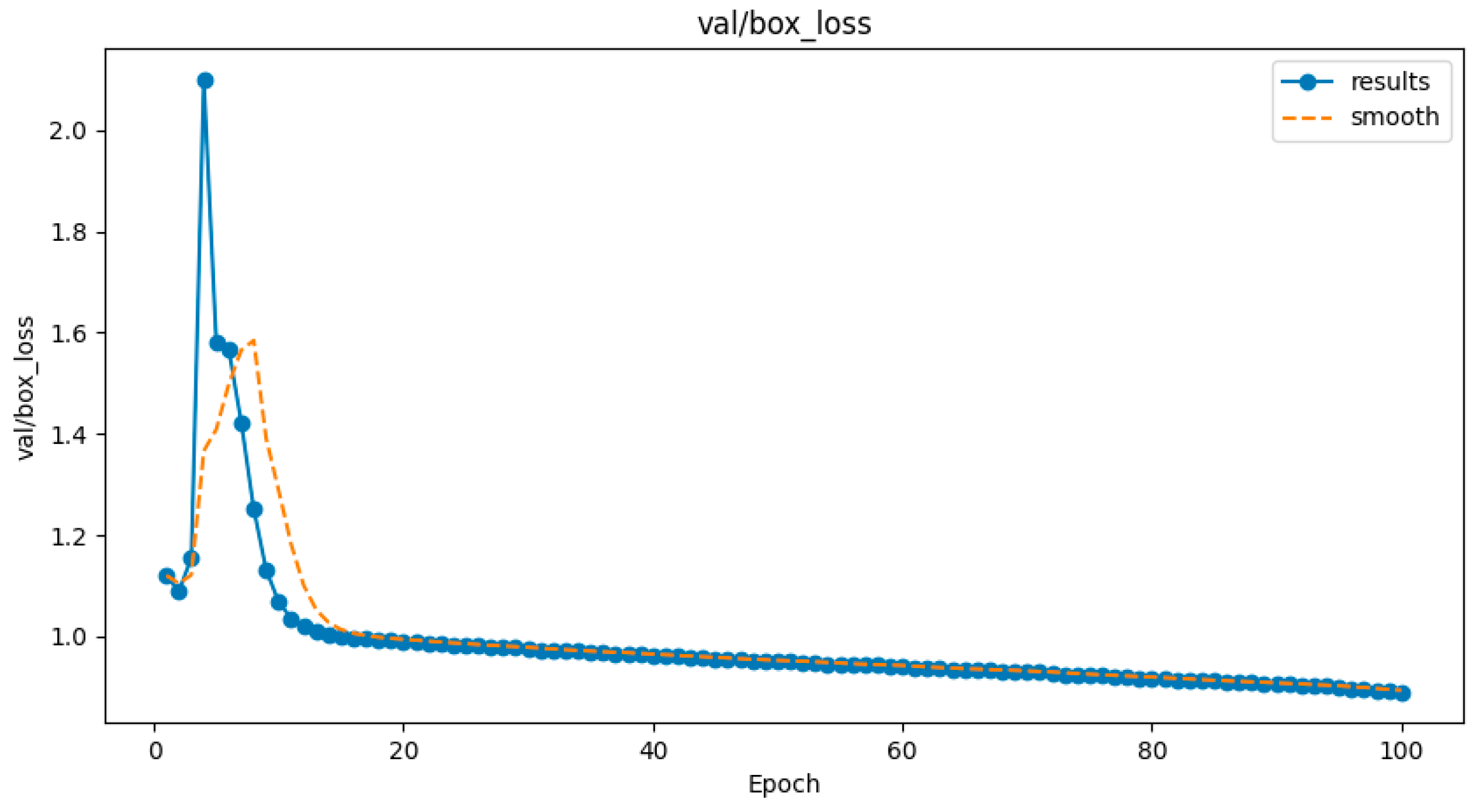

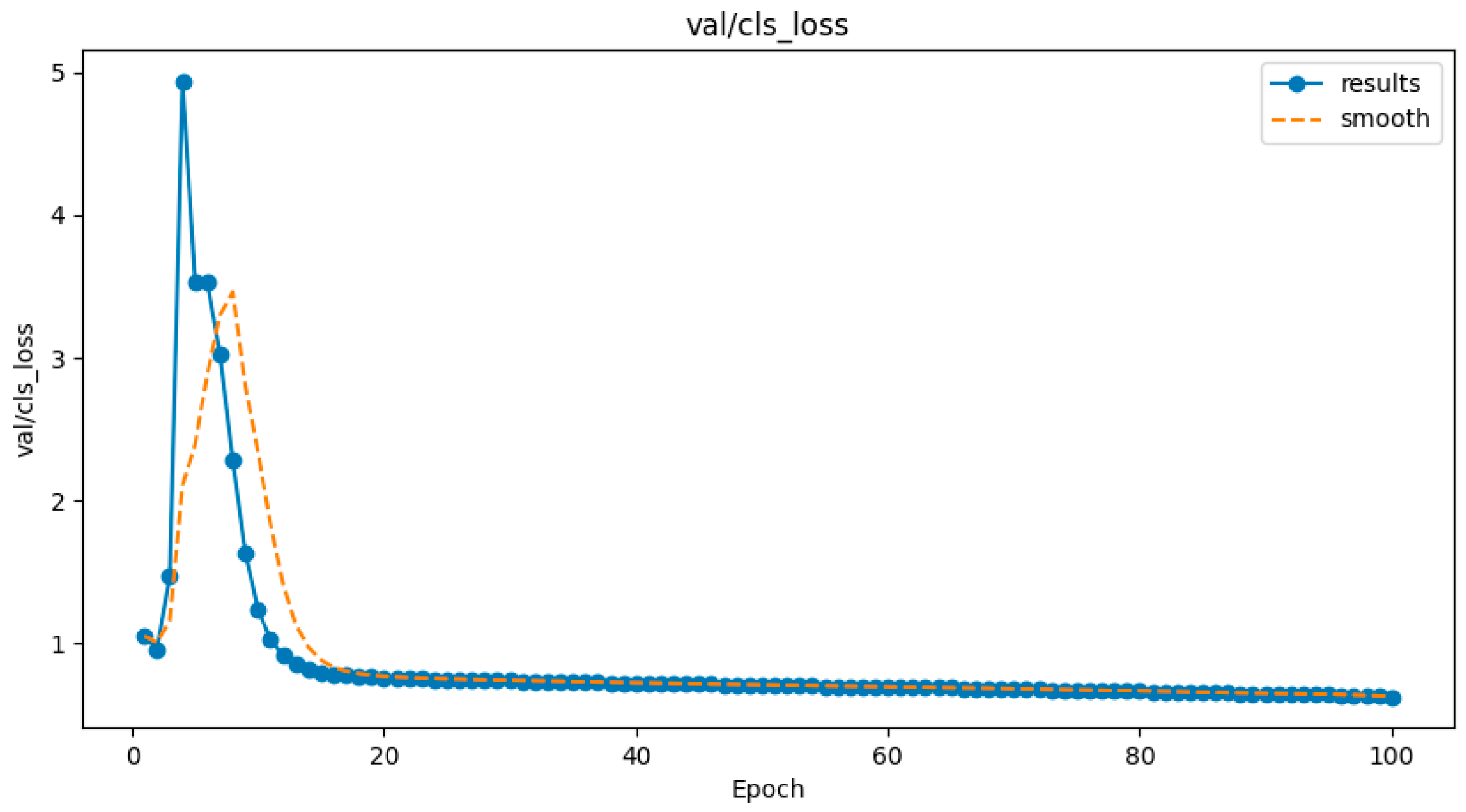

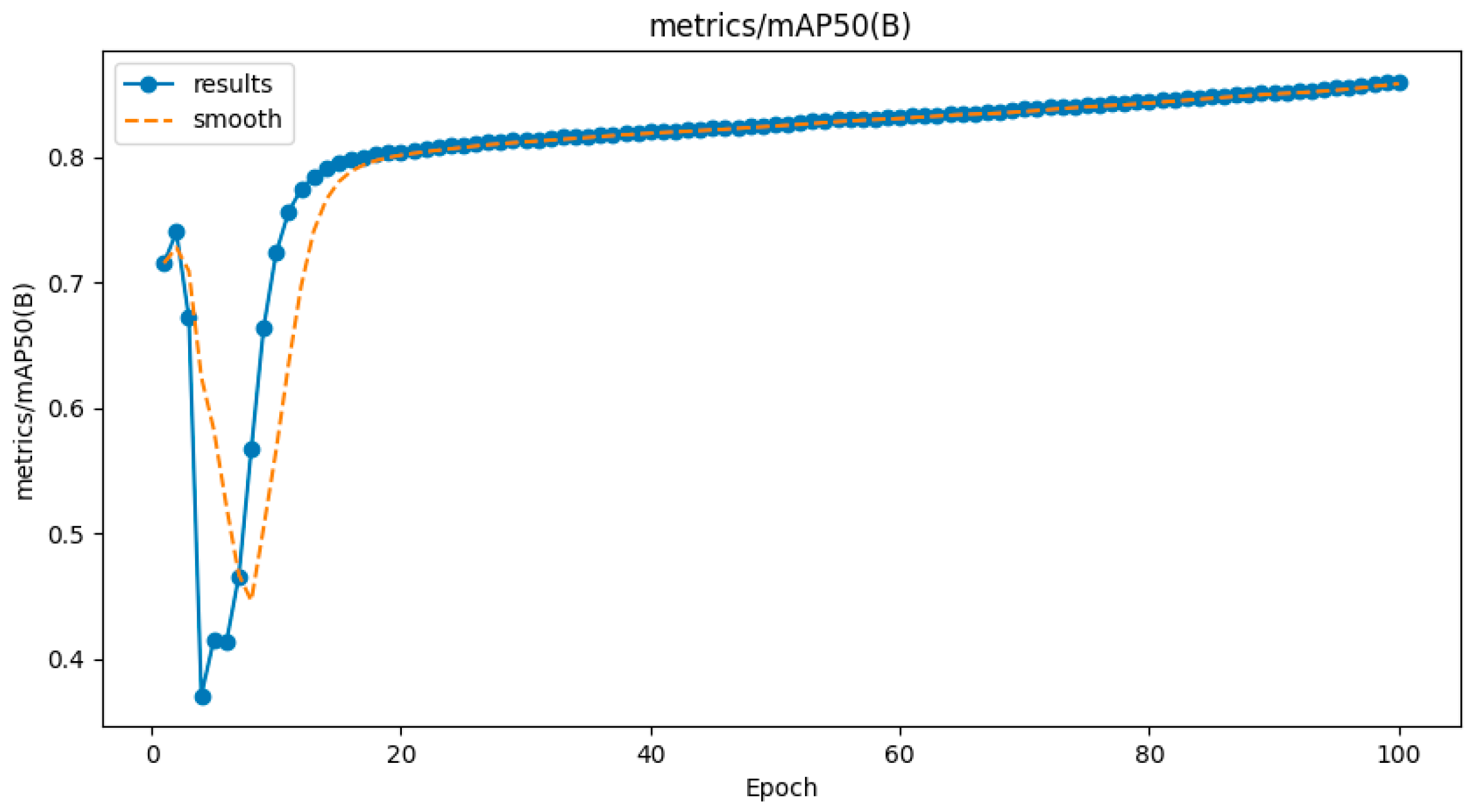

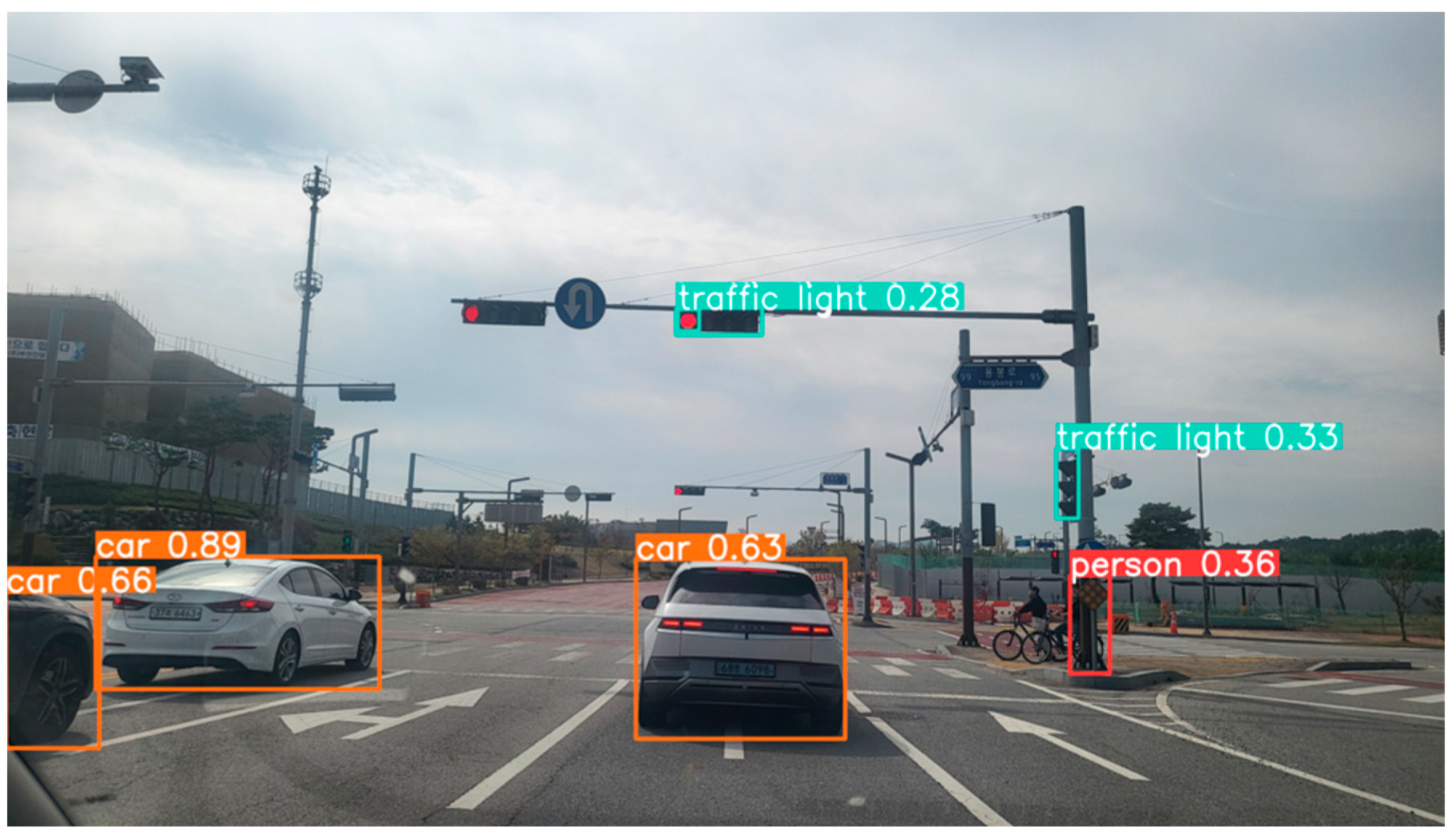

4.3. Forward Obstacle Detection Performance

4.4. Situational Obstacle Detection Performance Analysis

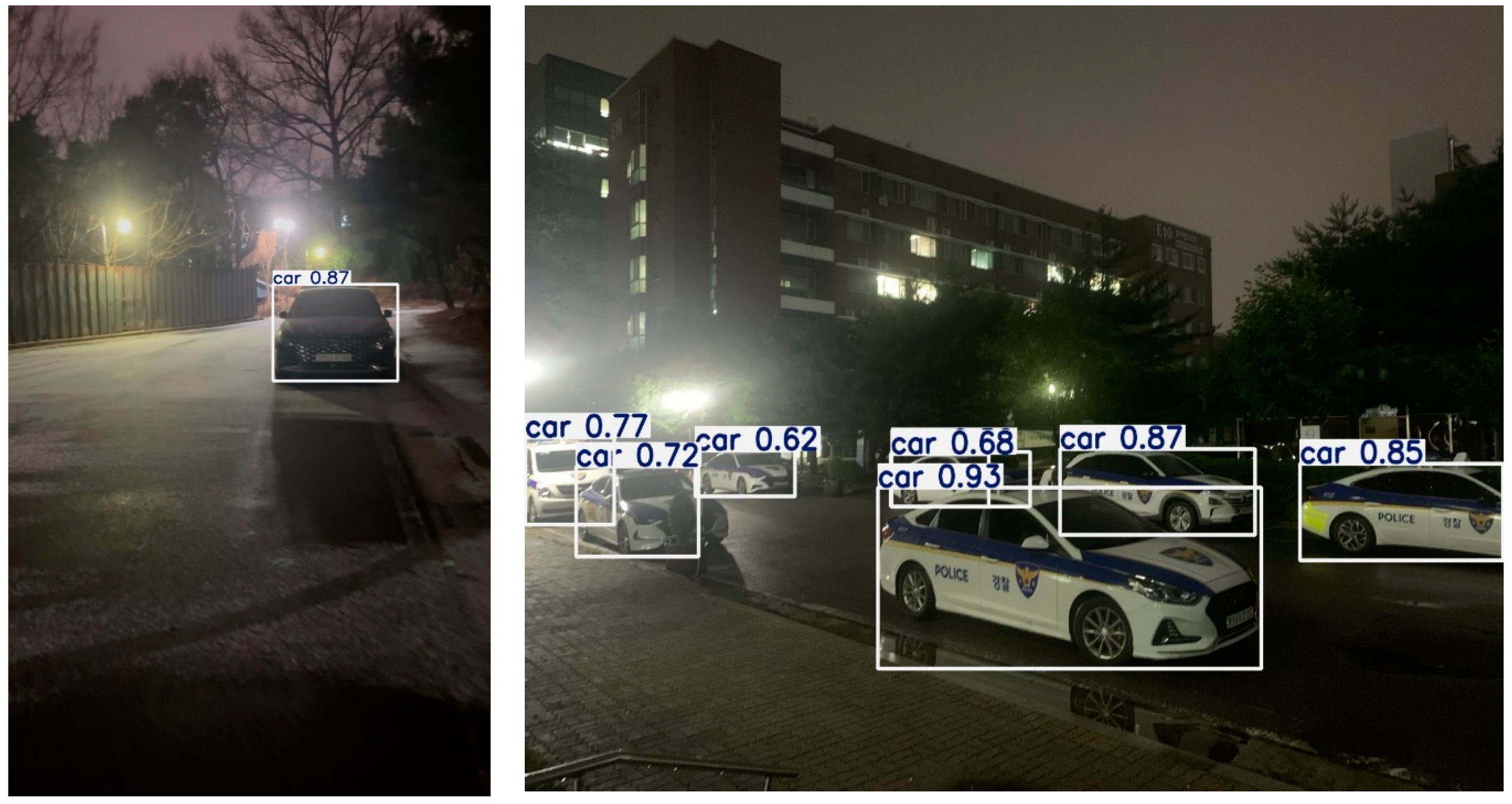

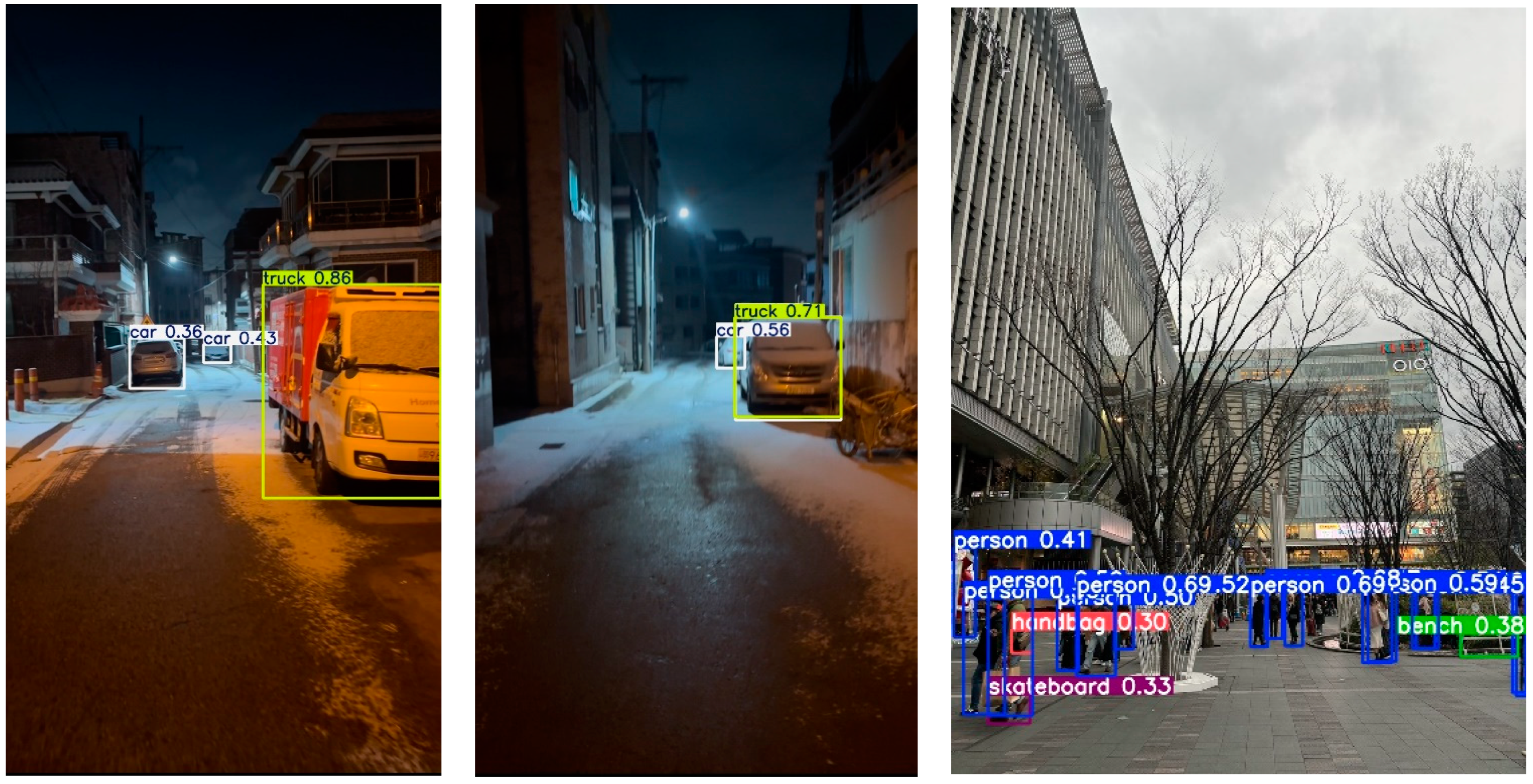

4.5. Experimental Tests Under Weather Conditions, Lighting Conditions, and Road Types

- (1) Additional Experiments Based on Lighting Conditions

- (2) Additional Experiments Based on Road Type

- (3) Additional Experiments Based on Weather Conditions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Sensor | Class | Training | Validation | Test |
|---|---|---|---|---|
| Image | Left | 115,200 | 14,400 | 14,400 |
| Image | Right | 115,200 | 14,400 | 14,400 |
| Total | Image | 230,400 | 28,800 | 28,800 |

| Sensor | Class | Training | Validation | Test |
|---|---|---|---|---|
| LiDAR | LiDAR | 115,200 | 14,400 | 14,400 |
| Total | LiDAR | 115,200 | 14,400 | 14,400 |
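The sample counts in the dataset tables work out to an 80/10/10 split between training, validation, and test data for both sensors. The short sketch below only redoes that arithmetic. The object layout and the `splitShares` function name are illustrative assumptions, not part of the original system.

```javascript
// Sample counts copied from the "Total" rows of the dataset tables.
const datasets = {
  image: { training: 230400, validation: 28800, test: 28800 },
  lidar: { training: 115200, validation: 14400, test: 14400 },
};

// Return each split's share of the total sample count, in percent.
function splitShares(counts) {
  const total = Object.values(counts).reduce((sum, n) => sum + n, 0);
  const shares = {};
  for (const [name, n] of Object.entries(counts)) {
    shares[name] = (100 * n) / total;
  }
  return shares;
}

for (const [sensor, counts] of Object.entries(datasets)) {
  console.log(sensor, splitShares(counts));
}
```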

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Choi, E.; Dinh, T.A.; Choi, M. Enhancing Driving Safety of Personal Mobility Vehicles Using On-Board Technologies. Appl. Sci. 2025, 15, 1534. https://doi.org/10.3390/app15031534
