Abstract

Video deblurring faces a fundamental challenge: blur degrades frames comprehensively, not only causing detail loss but also severely distorting structural information. This dual degradation across the low- and high-frequency domains makes it difficult for existing methods to restore both structure and detail through a unified approach. To address this issue, we propose a wavelet-based, blur-aware decoupled network (WBDNet) that decouples structure reconstruction from detail enhancement. Our method decomposes features into multiple frequency bands and employs specialized restoration strategies for different frequency domains. In the low-frequency domain, we construct a multi-scale feature pyramid with optical flow alignment, which enables accurate structure reconstruction through bottom-up progressive feature fusion. For high-frequency components, we combine deformable convolution with a blur-aware attention mechanism, which allows us to precisely extract and merge sharp details from multiple frames. Extensive experiments on benchmark datasets demonstrate the superior performance of our method, particularly in preserving structural integrity and detail fidelity.

1. Introduction

The proliferation of mobile smart devices has made video recording an essential part of daily life. However, captured videos often suffer from blur caused by camera shake and object motion. Effective video deblurring algorithms are therefore valuable for improving video quality and the overall viewing experience. Moreover, video deblurring serves as a crucial preprocessing step for high-level vision tasks, such as action recognition [1], object detection [2], and visual tracking [3], where the quality of input videos significantly impacts downstream performance.

Video deblurring remains a challenging restoration task, with its core difficulty lying in effectively utilizing temporal information from video sequences. Research [4] has shown that appropriate feature alignment strategies are vital for improving deblurring performance. Early methods primarily employed optical flow [5,6,7] for explicit alignment, but blur-induced inaccuracies in flow estimation propagated errors that compromised reconstruction quality. To address this limitation, researchers developed adaptive feature alignment schemes based on deformable convolution [8,9,10]. Recently, transformers have emerged as a promising solution [11,12,13] for video deblurring, demonstrating superior performance in complex scenarios through their powerful self-attention mechanisms and long-range dependency modeling capabilities.

However, video deblurring faces unique challenges compared to other video restoration tasks such as super-resolution. Blur not only results in the loss of high-frequency details but also significantly distorts the fundamental image structure. While existing methods have achieved remarkable progress in detail recovery, they often overlook the equally important aspect of structural information reconstruction. Jointly restoring high-frequency details and low-frequency structural information therefore remains a significant open challenge in video deblurring.

To address these issues, this paper proposes a wavelet-based, blur-aware decoupled network (WBDNet) that decouples structure recovery from detail enhancement. Unlike previous approaches [14,15,16] that merely used wavelet transforms for multi-scale feature extraction, we design specialized recovery strategies for different frequency bands. Specifically, we introduce a multi-scale progressive fusion (MSPF) module and a blur-aware detail enhancement (BADE) module. In the low-frequency domain, the MSPF module operates in several steps. It first employs optical flow for coarse alignment. Then, it constructs multi-scale feature pyramids and uses bottom-up progressive feature fusion to reconstruct the main image structure. In the high-frequency domain, the BADE module takes a different approach. It integrates blur-aware attention mechanisms with deformable convolution. This integration enhances features in sharp regions, thereby enabling more refined alignment. As a result, this approach enables the effective extraction and integration of valuable detailed information from multiple frames.

Our main contributions can be summarized as follows:

- We propose a novel multi-scale progressive fusion (MSPF) module that effectively reconstructs structural information through multi-scale feature fusion, significantly improving the restoration of low-frequency components.

- We design an innovative blur-aware detail enhancement module (BADE) that adaptively perceives and extracts sharp features across multiple frames for precise detail recovery, enabling more effective high-frequency information restoration.

- Experimental results on benchmark datasets demonstrate that our proposed method achieves significant performance improvements over state-of-the-art approaches, particularly in preserving both structural integrity and detail fidelity.

The remainder of this paper is organized as follows: Section 2 presents a comprehensive review of related work. Section 3 details the design of the proposed network architecture. Section 4 provides a comparative analysis with existing methods and experimental results on benchmark datasets. Section 5 conducts an in-depth investigation into the effectiveness of each network module. Finally, Section 6 concludes the paper with a summary of our findings.

2. Related Work

2.1. Single-Image Deblurring

Image deblurring research has evolved from traditional methods to deep learning approaches. Early studies primarily focused on image prior-based methods [17,18,19], where researchers assumed uniform blur kernels and designed various natural image priors to compensate for the ill-posed deblurring process. However, these methods showed limited effectiveness when handling non-uniform blur in complex dynamic scenes. To address this limitation, methods were proposed [20,21] that introduced additional segmentation to estimate blur kernels separately for different image patches, while other approaches [22,23] derived pixel-level blur kernels through motion field estimation.

With the advancement of deep learning, numerous CNN-based methods have been proposed to address dynamic scene deblurring. Sun et al. [24] first applied CNNs to non-uniform motion blur kernel estimation, achieving deblurring through local linear blur kernel prediction. Following the emergence of large-scale datasets for single-image deblurring, several works [25,26,27] began using CNNs to generate sharp images directly from blurry inputs in an end-to-end manner. Nah et al. [28] proposed a multi-scale CNN architecture that could restore sharp images without explicit blur kernel estimation. Tao et al. [29] introduced a scale-recurrent network, employing a coarse-to-fine strategy through recursive learning. By processing images at multiple scales, this approach effectively handled varying degrees of blur. Building on this concept, DMPHN [30] decomposed images into hierarchical patches for more efficient processing and better detail preservation. The emergence of the transformer architecture brought new possibilities to image deblurring. Chen et al. [31] proposed the image processing transformer, adapting the vision transformer architecture for image restoration tasks. Restormer [32] efficiently combined multi-head self-attention mechanisms with progressive feature extraction, achieving state-of-the-art performance while maintaining computational efficiency.

2.2. Video Deblurring

Video deblurring research initially focused on traditional image processing methods. Matsushita et al. [33] proposed utilizing motion compensation between adjacent frames for deblurring by identifying and restoring sharp image patches. Cho et al. [34] introduced a registration-based deblurring algorithm through patch-based motion trajectory modeling. Kim et al. [35] designed a joint optimization framework for simultaneous motion estimation and sharpening, achieving good results in dynamic scenes.

The rise of deep learning brought revolutionary changes to this field. Su et al. [36] first applied CNNs to video deblurring, implementing blur removal based on inter-frame information through a deep encoder–decoder network. Zhang et al. [37] further proposed a spatio-temporal CNN architecture, enhancing multi-frame information fusion capabilities. The subsequent STRCNN [38] and LSTM-based ESTRNN [39] effectively utilized long-term temporal dependencies in video sequences through recursive learning. Zhu et al. [40] further enhanced feature extraction through bidirectional propagation of features at different scales. The introduction of attention mechanisms marked a significant breakthrough in the field. Suin et al. [41] proposed a spatially attentive patch-hierarchical network that employed spatial attention mechanisms to significantly improve detail restoration capabilities. ARVo [42] further optimized performance by constructing an all-range volumetric pyramid that learns spatial correspondences between blurred video frames. With the rise of transformers in computer vision, VRT [13] and VDTR [11] successfully introduced transformer architectures to video deblurring tasks, achieving more effective long-range dependency modeling. FGST [12] further explored the application of transformer architectures in video deblurring through flow-guided sparse attention mechanisms, achieving efficient feature alignment and aggregation.

3. Wavelet-Based, Blur-Aware Decoupled Network

3.1. Network Overview

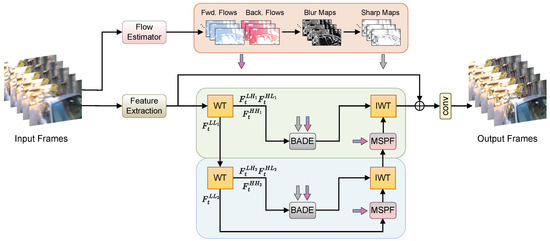

As shown in Figure 1, given a sequence of blurred video frames (), our goal is to reconstruct the corresponding sharp video frames (). The proposed network architecture consists of two main branches: a preprocessing branch and a feature reconstruction branch. The preprocessing branch estimates inter-frame optical flow and sharp maps to provide prior information for subsequent deblurring. In contrast, the feature reconstruction branch employs a wavelet transform-based design to recover structure and detail information from low-frequency and high-frequency sub-bands, respectively.

Figure 1. The architecture of the proposed wavelet-based, blur-aware decoupled network (WBDNet).

In the preprocessing branch, we first utilize an optical flow estimation network [43] to compute motion information between adjacent frames in a video sequence. Specifically, for any frame (), we calculate the optical flow () between this frame and others. Considering that motion is the primary cause of blur, with higher motion corresponding to increased blur, we use optical flow between adjacent frames to estimate the blur map () for video frame :

For the first and last frames of the video sequence, we set and , respectively. Subsequently, we normalize the blur map () to the interval to obtain the normalized blur map () and corresponding sharp map ():
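As a minimal sketch of this preprocessing step, the snippet below derives a normalized blur map and its complementary sharp map from optical flow. It rests on several assumptions that the text does not confirm: the blur map is taken as the average flow magnitude towards the available neighbouring frames, normalization is min-max to [0, 1], the sharp map is one minus the normalized blur map, and a missing neighbour at a sequence boundary is simply skipped. The function name `blur_and_sharp_maps` is hypothetical.

```python
import numpy as np

def blur_and_sharp_maps(flow_prev, flow_next, eps=1e-8):
    """Estimate per-pixel blur and sharpness from optical flow.

    flow_prev, flow_next: (H, W, 2) flow fields from the target frame to its
    previous / next neighbour; pass None where a neighbour does not exist
    (assumed handling of the first and last frames).
    """
    mags = [np.linalg.norm(f, axis=-1) for f in (flow_prev, flow_next) if f is not None]
    if not mags:
        raise ValueError("at least one neighbouring flow field is required")
    blur = np.mean(mags, axis=0)                # assumed: average motion magnitude
    lo, hi = blur.min(), blur.max()
    blur_norm = (blur - lo) / (hi - lo + eps)   # assumed: min-max normalization to [0, 1]
    sharp = 1.0 - blur_norm                     # assumed: sharp map is the complement
    return blur_norm, sharp
```

Under these assumptions, pixels with large apparent motion receive a blur value near 1 and a sharpness value near 0, which is the prior later consumed by the attention block.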

In the feature reconstruction branch, we first extract shallow features () from video frames through several residual blocks. We then use a wavelet transform to decompose these features into one low-frequency sub-band () and three high-frequency sub-bands (, , and ). To achieve multi-scale feature representation, we further decompose the low-frequency sub-band () into corresponding low- and high-frequency sub-bands.
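The decomposition can be illustrated with a single-level 2D Haar transform, sketched below in NumPy. The choice of the Haar wavelet is an assumption made for illustration, since the text does not name the wavelet family, and the helper names `haar_dwt2` and `haar_idwt2` are hypothetical. Naming conventions for the two mixed bands (LH and HL) vary between libraries.

```python
import numpy as np

def haar_dwt2(x):
    """Single-level 2D Haar transform over the last two axes (even height and width)."""
    a = x[..., 0::2, 0::2]   # top-left sample of each 2x2 block
    b = x[..., 0::2, 1::2]   # top-right
    c = x[..., 1::2, 0::2]   # bottom-left
    d = x[..., 1::2, 1::2]   # bottom-right
    ll = (a + b + c + d) / 2.0   # low-frequency sub-band (coarse structure)
    lh = (a + b - c - d) / 2.0   # top-minus-bottom differences
    hl = (a - b + c - d) / 2.0   # left-minus-right differences
    hh = (a - b - c + d) / 2.0   # diagonal differences
    return ll, lh, hl, hh

def haar_idwt2(ll, lh, hl, hh):
    """Inverse of haar_dwt2; reconstructs the input exactly."""
    out = np.empty(ll.shape[:-2] + (2 * ll.shape[-2], 2 * ll.shape[-1]), dtype=float)
    out[..., 0::2, 0::2] = (ll + lh + hl + hh) / 2.0
    out[..., 0::2, 1::2] = (ll + lh - hl - hh) / 2.0
    out[..., 1::2, 0::2] = (ll - lh + hl - hh) / 2.0
    out[..., 1::2, 1::2] = (ll - lh - hl + hh) / 2.0
    return out
```

A second decomposition level is obtained by calling `haar_dwt2` again on the returned `ll` band, which halves the spatial resolution once more.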

We design specialized processing modules based on the characteristics of different frequency band features. For low-frequency features, we develop the multi-scale progressive fusion (MSPF) module to handle structural reconstruction. For high-frequency features, we create the blur-aware detail enhancement (BADE) module to focus on detail recovery. This decoupled design enables the network to process frame features at different scales more specifically.

3.2. Multi-Scale Progressive Fusion Module

The low-frequency sub-bands obtained through the wavelet transform carry the main structural information of the frames. Unlike other video restoration tasks, video deblurring requires not only detail recovery but also correction of the structural distortions caused by blur. Based on this characteristic, we designed the multi-scale progressive fusion (MSPF) module specifically to address structural information reconstruction in low-frequency sub-band features.

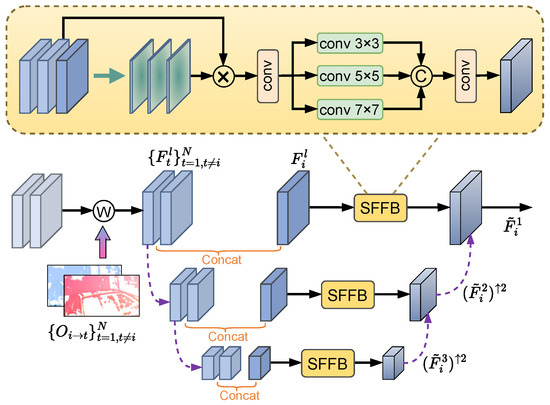

The core idea of the MSPF module is to utilize redundant structural information from multiple frames to assist in target frame restoration. As shown in Figure 2, we first align features from other frames to the target frame using optical flow information obtained from the preprocessing branch. Considering that low-frequency sub-bands mainly contain structural information, we adopt basic optical flow alignment instead of computationally intensive deformable convolution, maintaining effectiveness while improving computational efficiency. Denoting the target frame features as and aligned features from other frames as , where represents the original scale, we construct a three-level feature pyramid () using strided convolution filters for two 2× downsampling operations to expand the network’s receptive field to capture broader structural information.
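The two operations described above, flow-based alignment and pyramid construction, can be sketched as follows. This is an illustrative NumPy version under stated assumptions: alignment is a backward warp with bilinear interpolation and border clamping, and 2× average pooling stands in for the learned strided convolutions. The names `warp`, `downsample2`, and `build_pyramid` are hypothetical.

```python
import numpy as np

def warp(feat, flow):
    """Backward-warp neighbouring-frame features towards the target frame.

    feat: (C, H, W) features of the neighbouring frame.
    flow: (H, W, 2) displacement (dx, dy) from each target pixel to its
          location in the neighbouring frame.
    """
    _, h, w = feat.shape
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    x = np.clip(xs + flow[..., 0], 0, w - 1)   # clamp sampling points to the border
    y = np.clip(ys + flow[..., 1], 0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx, wy = x - x0, y - y0
    top = feat[:, y0, x0] * (1 - wx) + feat[:, y0, x1] * wx
    bottom = feat[:, y1, x0] * (1 - wx) + feat[:, y1, x1] * wx
    return top * (1 - wy) + bottom * wy

def downsample2(feat):
    """2x average pooling (stand-in for a learned strided convolution)."""
    c, h, w = feat.shape
    cropped = feat[:, : h - h % 2, : w - w % 2]
    return cropped.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))

def build_pyramid(feat, levels=3):
    """Return [original, 1/2 scale, 1/4 scale] for levels=3."""
    pyramid = [feat]
    for _ in range(levels - 1):
        pyramid.append(downsample2(pyramid[-1]))
    return pyramid
```

In the actual network the downsampling filters are learned, so the pooled pyramid here only conveys the shapes and data flow.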

Figure 2. Schematic diagram of the multi-scale progressive fusion (MSPF) module. For illustration purposes, we only demonstrate the module with two neighboring frames as an example input.

We employ a bottom-up fusion strategy, starting from the lowest scale () and proceeding level by level. At each scale level, we first concatenate the target frame features with other frame features along the channel dimension and input them into the structure feature fusion block (SFFB) for processing. The fusion process at can be expressed as follows:

where denotes the feature concatenation operation and represents 2× upsampling. This process continues progressively until output features are obtained at the original scale ().
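The bottom-up loop can be sketched as below. This is a schematic under assumptions: pyramids are lists indexed from the original scale (index 0) to the coarsest scale, nearest-neighbour interpolation is used for the 2× upsampling, and the fusion block is passed in as a callable. The helper `make_mean_fuse` is a hypothetical placeholder that merely averages the concatenated groups; it is not the SFFB.

```python
import numpy as np

def upsample2(x):
    """Nearest-neighbour 2x upsampling over the last two axes."""
    return x.repeat(2, axis=-2).repeat(2, axis=-1)

def progressive_fusion(target_pyr, neighbor_pyrs, fuse):
    """Fuse features from the coarsest pyramid level up to the original scale.

    target_pyr: list of (C, H_l, W_l) arrays, index 0 = original scale.
    neighbor_pyrs: list of such pyramids, one per aligned neighbouring frame.
    fuse: callable mapping a concatenated (k*C, H_l, W_l) stack to (C, H_l, W_l).
    Spatial sizes are assumed to halve exactly from one level to the next.
    """
    out = None
    for level in reversed(range(len(target_pyr))):
        feats = [target_pyr[level]] + [p[level] for p in neighbor_pyrs]
        if out is not None:
            feats.append(upsample2(out))    # carry the coarser result upwards
        out = fuse(np.concatenate(feats, axis=0))
    return out

def make_mean_fuse(channels):
    """Hypothetical placeholder for the fusion block: average the channel groups."""
    def fuse(stack):
        return stack.reshape(-1, channels, *stack.shape[1:]).mean(axis=0)
    return fuse
```

Passing the fusion block as a parameter keeps the control flow of the progressive strategy separate from the details of the block itself.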

In the SFFB, to emphasize common structural features and suppress interference, we first compute similarity maps between the target frame and other frame features, reflecting structural correlation at each position through similarity distances. By multiplying features with similarity maps, we obtain features with enhanced structural information. This process is represented as follows:

where and are feature embedding functions implemented through convolution layers, ⊙ denotes element-wise multiplication, and the sigmoid function normalizes similarity values to the interval. The enhanced features are concatenated along the channel dimension and compressed through a convolution layer. To obtain more comprehensive structural representations, SFFB employs parallel convolution kernels of different sizes to extract multi-receptive field features, fusing structural information at different scales for complementary enhancement. This design ensures the network can comprehensively capture and integrate structural information in both temporal and scale domains.
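A minimal sketch of the similarity-based enhancement is given below. It assumes that the two embedding functions are 1×1 convolutions (written here as channel-mixing matrices), that similarity is the per-pixel dot product of the embedded features, and that the resulting map gates the neighbouring-frame features. The names `conv1x1` and `similarity_gate` are hypothetical, and the exact formulation in the network may differ.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def conv1x1(feat, weight):
    """1x1 convolution: feat (C_in, H, W), weight (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, feat)

def similarity_gate(target, neighbor, w_theta, w_phi):
    """Weight neighbouring-frame features by their similarity to the target frame.

    Returns the gated features (C, H, W) and the similarity map (1, H, W),
    whose values lie in (0, 1) after the sigmoid.
    """
    emb_t = conv1x1(target, w_theta)
    emb_n = conv1x1(neighbor, w_phi)
    sim = sigmoid((emb_t * emb_n).sum(axis=0, keepdims=True))   # per-pixel dot product
    return neighbor * sim, sim
```

Positions where the two frames agree structurally receive a gate close to 1, while mismatched positions are attenuated before the features are concatenated and compressed.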

3.3. Blur-Aware Detail Enhancement Module

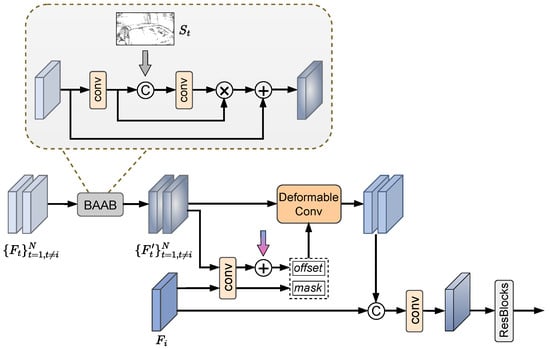

The high-frequency sub-bands produced by the wavelet transform contain rich detail information about the frames. To effectively recover these details, we propose the blur-aware detail enhancement (BADE) module. The core design principle of this module is to fully utilize temporal information from video sequences while accounting for the impact of motion blur, so that details can be recovered precisely. We first concatenate high-frequency sub-band features (, , and ) along the channel dimension as input to the BADE module.

The specific details of the BADE module are shown in Figure 3. Let denote the target frame features and denote features from other frames. Other frame features first pass through a blur-aware attention block (BAAB). In this block, we incorporate the sharp map () from the preprocessing branch. This sharp map provides crucial prior information about motion-blurred regions in the video. Using this information, we adaptively modulate inter-frame features to reduce the impact of blurred pixels. Specifically, the computation process of BAAB is expressed as follows:

where represents the feature concatenation operation and ⊙ denotes element-wise multiplication. This design enables the network to dynamically adjust feature responses based on sharpness information, enhancing representations in sharp regions while suppressing the influence of blurred regions. The residual connection ensures the effective transmission of original feature information.
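One plausible form of this modulation is sketched below. It is an assumption rather than the network's confirmed formulation: the sharp map is concatenated with the features, a hypothetical 1×1 convolution followed by a sigmoid produces per-channel attention, and the attended features are added back to the input through the residual connection.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def blur_aware_attention(feat, sharp_map, weight):
    """Modulate neighbouring-frame features with a sharpness prior.

    feat: (C, H, W) features; sharp_map: (H, W) with values in [0, 1];
    weight: (C, C + 1) hypothetical 1x1 convolution over [feat, sharp_map].
    """
    stacked = np.concatenate([feat, sharp_map[None]], axis=0)     # (C + 1, H, W)
    attn = sigmoid(np.einsum("oc,chw->ohw", weight, stacked))    # (C, H, W) in (0, 1)
    return feat + feat * attn                                     # residual connection
```

With a weight that responds positively to the sharp-map channel, features in sharp regions are amplified more strongly than those in blurred regions, while the residual path keeps the original features intact.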

Figure 3. Schematic diagram of the blur-aware detail enhancement (BADE) module. For illustration purposes, we only demonstrate the module with two neighboring frames as an example input.

After obtaining enhanced feature representations, we adopt a residual-flow learning approach to obtain sampling offsets for deformable convolution, which aligns to individually through deformable convolution:

where denotes a stack of convolutions, and and represent the offset and modulation factor for deformable convolution, respectively. This design offers two key advantages. The first advantage relates to feature enhancement. Through BAAB, detail features in sharp regions become more prominent, which leads to improved feature-matching accuracy. The second advantage concerns motion handling. By using residual-flow learning to guide the offset field estimation in deformable convolution, the network can better adapt to large-scale motion changes in complex scenes. Overall, this design facilitates the effective transmission of detailed information throughout the alignment process.
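The idea of residual-flow offsets can be illustrated by computing where a deformable kernel samples. In this sketch, which is an assumption about how the pieces combine, each kernel tap samples at its regular grid position shifted by the optical flow plus a learned per-tap residual. The residual is supplied as an array here rather than predicted by convolutions, and `sampling_positions` is a hypothetical helper name.

```python
import numpy as np

def sampling_positions(flow, residual, kernel=3):
    """Absolute (x, y) sampling positions of a deformable kernel.

    flow: (H, W, 2) optical flow used as the base offset for every tap.
    residual: (K, H, W, 2) per-tap residual offsets, K = kernel * kernel.
    Returns an array of shape (K, H, W, 2).
    """
    h, w, _ = flow.shape
    r = kernel // 2
    taps = np.array(
        [(dx, dy) for dy in range(-r, r + 1) for dx in range(-r, r + 1)], dtype=float
    )                                                        # (K, 2) regular kernel grid
    ys, xs = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    grid = np.stack([xs, ys], axis=-1).astype(float)         # (H, W, 2) pixel coordinates
    offsets = flow[None] + residual                          # flow plus learned residual
    return grid[None] + taps[:, None, None, :] + offsets
```

With zero flow and zero residual the positions reduce to an ordinary 3×3 neighbourhood, so the flow supplies the large displacement and the residual only needs to model the remaining fine correction.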

Finally, we concatenate all aligned frame features along the channel dimension, compress the channel dimension through one convolution layer, and use cascaded residual blocks for deep feature extraction. This enables the network to fully integrate multi-frame information, further enhancing the expressiveness of detail features.

4. Experiments

4.1. Datasets

The DVD dataset [36] consists of 71 video sequences captured using a high-speed camera at 240 fps. To generate realistic motion blur, blurred frames at 30 fps were synthesized by averaging consecutive sharp frames. The dataset provides paired blurred–sharp frame sequences covering diverse scenarios, including indoor and outdoor scenes, camera shake, object motion, and varying lighting conditions. The training set includes 61 sequences, with the remaining 10 sequences reserved for testing.

The GoPro dataset [28] contains 33 video sequences captured using a GoPro camera at 240 fps. Similar to the DVD dataset, motion blur at 30 fps was simulated by averaging multiple consecutive sharp frames, resulting in 3214 blurred–sharp frame pairs. These sequences primarily feature outdoor scenes with significant camera motion and dynamic objects, making them particularly challenging for deblurring tasks. The dataset is divided into 22 training sequences and 11 test sequences. The GoPro dataset is widely used due to its effective simulation of real motion blur characteristics encountered in consumer-grade cameras.
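Both synthetic datasets above obtain blurred frames by averaging consecutive sharp frames from a high-speed capture. A minimal sketch of that averaging follows, assuming non-overlapping windows of 8 frames (240 fps / 30 fps) and frames already in a linear floating-point range. The actual dataset pipelines may use different window lengths or additional steps, and `synthesize_blur` is a hypothetical name.

```python
import numpy as np

def synthesize_blur(frames, window=8):
    """Average non-overlapping windows of sharp frames to simulate motion blur.

    frames: (T, H, W, C) float array of sharp high-frame-rate frames.
    Returns an array of shape (T // window, H, W, C); trailing frames that do
    not fill a complete window are dropped.
    """
    usable = (frames.shape[0] // window) * window
    grouped = frames[:usable].reshape(-1, window, *frames.shape[1:])
    return grouped.mean(axis=1)
```

Each blurred output frame can then be paired with one sharp frame from its window, commonly the middle one, to form a training pair.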

The BSD dataset [39] is a real-world video deblurring dataset collected using a beam-splitter acquisition system with two synchronized cameras. It contains three different blur-intensity configurations (1 ms–8 ms, 2 ms–16 ms, and 3 ms–24 ms exposure pairs), with each containing 100 video sequences. The sequences cover diverse real-world scenarios featuring various motion patterns, including camera shake and object motion in both indoor and outdoor environments. The BSD dataset has demonstrated superior generalization capability compared to synthetic datasets, making it particularly valuable for developing and evaluating video deblurring algorithms intended for real-world applications.

4.2. Training Details

During training, we employ data augmentation to enhance the diversity of our training data, including horizontal or vertical flipping, rotation, and random cropping of the images. The batch size is set to 8, and the network extracts image patches with dimensions of for training. Our model is trained with the Adam optimizer [44] with , , and . The initial learning rate is . All experiments were conducted on two NVIDIA RTX 2080Ti GPUs using PyTorch (https://pytorch.org/). We train the network end-to-end by minimizing the L1 loss between the generated sharp frames and the ground-truth frames.
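The augmentation and loss described above can be sketched in NumPy as follows. The key point is that the blurred input and its sharp target must receive the same crop, flips, and rotation. The patch size is left as a parameter because the value is not given here, rotations are assumed to be multiples of 90 degrees, and the names `augment_pair` and `l1_loss` are hypothetical.

```python
import numpy as np

def augment_pair(blurred, sharp, patch, rng):
    """Apply one random crop, flip, and 90-degree rotation to both images.

    blurred, sharp: (H, W, C) arrays of identical shape; patch: square crop size.
    """
    h, w = blurred.shape[:2]
    top = int(rng.integers(0, h - patch + 1))
    left = int(rng.integers(0, w - patch + 1))
    b = blurred[top:top + patch, left:left + patch]
    s = sharp[top:top + patch, left:left + patch]
    if rng.random() < 0.5:            # horizontal flip
        b, s = b[:, ::-1], s[:, ::-1]
    if rng.random() < 0.5:            # vertical flip
        b, s = b[::-1], s[::-1]
    k = int(rng.integers(0, 4))       # rotate by k * 90 degrees
    return np.rot90(b, k).copy(), np.rot90(s, k).copy()

def l1_loss(pred, target):
    """Mean absolute error between restored and ground-truth frames."""
    return float(np.mean(np.abs(pred - target)))
```

For video input the same random parameters would additionally be shared across all frames of a training clip so that temporal correspondence is preserved.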

4.3. Comparison with State-of-the-Art Methods

4.3.1. Evaluations on the DVD Dataset

To validate the effectiveness of our proposed method, we conducted comprehensive performance evaluations on the DVD dataset. As shown in Table 1, our approach outperforms state-of-the-art methods in both PSNR and SSIM metrics, demonstrating its superior performance in video deblurring tasks. To further illustrate the visual quality advantages of our method, we present comparative deblurring results from different approaches in Figure 4. Through magnified comparisons of specific regions, the differences in detail restoration between methods become more apparent. In the “IMG_0030” sequence, the intersection sign region suffered severe structural degradation due to motion blur, and existing methods struggled to accurately restore its complete contour. In contrast, our method successfully reconstructed clear outlines of the sign through a strategy that decouples structural and detailed information processing, validating the effectiveness of this design approach. The advantages of our method are even more pronounced in the “IMG_0021” sequence comparison. Not only did it accurately restore the overall shape of the wheel, but it also precisely reconstructed fine structures such as spokes, achieving results closest to the ground truth. These outcomes further confirm that our proposed method can maintain both structural integrity and detail authenticity when processing complex scenes, demonstrating excellent deblurring capabilities.
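For reference, PSNR, one of the two metrics reported in these comparisons, can be computed as in the sketch below. It assumes images scaled to [0, 1] (so the peak value is 1.0); evaluation protocols differ in details such as colour space and border handling, which are not specified here.

```python
import numpy as np

def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB between a restored frame and its ground truth."""
    diff = pred.astype(np.float64) - target.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")           # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))
```

Because PSNR is logarithmic in the mean squared error, a gain of about 3 dB corresponds to roughly halving the error.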

Table 1. Quantitative comparisons on the DVD dataset. Bold indicates the best performance and underline indicates the second-best performance.

Figure 4. Comparison of visual results on the DVD dataset. Zoom in for better visualization.

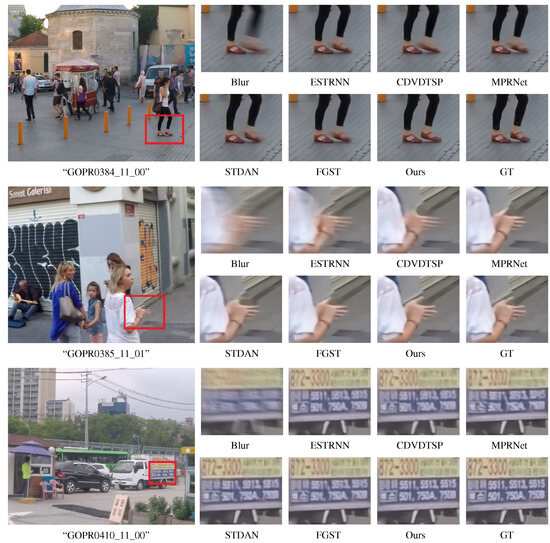

4.3.2. Evaluations on the GoPro Dataset

We also conducted comparative evaluations on the GoPro dataset. As shown in Table 2, our proposed method achieves the leading performance in both PSNR and SSIM on this dataset. Figure 5 presents visual comparison results of different methods on the GoPro dataset. Unlike the DVD dataset, which primarily contains static objects, the GoPro dataset includes more moving objects and complex textures, presenting new challenges for deblurring tasks. In the “GOPR0384_11_00” sequence, despite severe motion blur in the pedestrian’s foot area due to rapid movement, our method successfully reconstructed clear boundary contours. For the “GOPR0385_11_01” sequence, in complex regions where pedestrian hands overlap with building edges, our method not only recovered hand details but also avoided edge confusion, demonstrating strong robustness in handling complex scenes. In the “GOPR0410_11_00” sequence, while other methods could partially restore the basic outlines of numbers, our method reconstructed sharper and clearer digits that more closely match the visual quality of the original image.

Table 2. Quantitative comparisons on the GoPro dataset. Bold indicates the best performance and underline indicates the second-best performance.

Figure 5. Comparison of visual results on the GoPro dataset. Zoom in for better visualization.

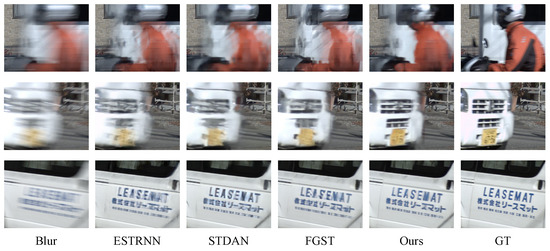

4.3.3. Evaluations on the BSD Dataset

To further validate our method’s generalization capability, we evaluate its performance on the BSD dataset. This dataset features diverse blur characteristics through different exposure-time configurations. Longer exposure times (3 ms–24 ms) lead to more severe blur, posing greater challenges for deblurring algorithms. As shown in Table 3, our method achieves superior performance across all exposure configurations.

Table 3. Quantitative comparisons on the BSD dataset. Bold indicates the best performance and underline indicates the second-best performance.

Figure 6 demonstrates our deblurring results under three typical motion patterns. In static camera scenes, we successfully restore sharp contours of blurred motorcyclists. The original clear background details are also well preserved. For scenes with aligned camera and object motion, our method shows excellent performance. It accurately reconstructs the car front’s edge structure and precisely recovers the front grille’s texture. In scenes with opposing camera and object motion, our method effectively recovers vehicle body text. The restored text maintains high clarity without introducing artifacts. These results demonstrate our method’s robustness and superiority in handling real-world motion blur patterns.

Figure 6. Comparison of visual results on the BSD dataset (3 ms–24 ms). Zoom in for better visualization.

4.3.4. Network Efficiency Analysis

Table 4 shows the comparison results in terms of parameters, inference time, computational complexity, and restoration quality for the processing of one 1280 × 720 resolution video frame from the GoPro dataset. The experimental results demonstrate that our method achieves the best restoration quality while maintaining moderate computational costs. In terms of model efficiency, our method employs 8.9 M parameters, which is higher than ESTRNN (2.47 M) and STFAN (5.37 M) but significantly lower than CDVD-TSP (16.2 M) and STDAN (13.8 M). Similarly, while the computational complexity of our model is higher than that of ESTRNN and STFAN, it remains more efficient than CDVD-TSP and STDAN. In terms of processing speed, our method can process one frame in 0.41 s, ranking in the middle among all compared methods, demonstrating a good balance between restoration quality and computational efficiency.

Table 4. Model parameters, inference time, and computational cost comparisons on the GoPro dataset.

5. Ablation Study

To gain deeper insights into the working mechanism of our proposed method and validate the effectiveness of core components, we conducted systematic ablation studies. Our network design is based on a key concept: decomposing the deblurring task into two subtasks through wavelet transform. The first subtask focuses on structure restoration, handled by the multi-scale progressive fusion (MSPF) module. The second subtask addresses detail enhancement, managed by the blur-aware detail enhancement (BADE) module.

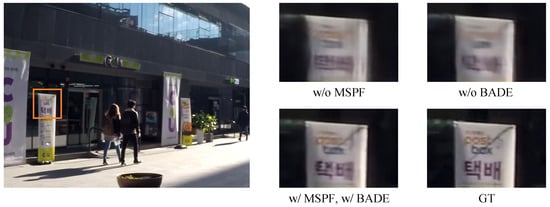

5.1. Effectiveness of the MSPF and BADE Modules

We first validate the necessity of our wavelet-based task decomposition design through ablation studies. As shown in Table 5, removing the MSPF module leads to a 3.4 dB drop in PSNR. Similarly, removing the BADE module results in a 4.99 dB decrease. These results demonstrate that both modules are crucial for effective deblurring.

Table 5. Ablation study of the proposed modules on the GoPro dataset.

To better understand the role of each module, we present visual comparison results in Figure 7. Without the MSPF module, the billboard’s edge structure shows obvious distortion and blur. This is because the network lacks a dedicated module for the processing of low-frequency structural information. Without the BADE module, the text details on the billboard become completely indiscernible. This verifies the importance of high-frequency detail enhancement. Only with both modules working together can the network achieve optimal restoration results. This synergy enables accurate reconstruction of both structural integrity and fine details.

Figure 7. Effectiveness of the proposed modules for video deblurring.

5.2. Effectiveness of Components in the MSPF Module

We evaluated the effectiveness of the MSPF module design. This module utilizes redundant structural information across multiple frames through a progressive fusion strategy and structure feature fusion block (SFFB). The experimental results reported in Table 6 show that removing the progressive fusion strategy and SFFB leads to PSNR decreases of 1.72 dB and 2.61 dB, respectively.

Table 6. Ablation study for MSPF module design on the GoPro dataset.

The visual comparison in Figure 8 demonstrates the effectiveness of two key components in the MSPF module. Without the progressive fusion strategy, the vehicle’s overall outline appears noticeably blurred. This highlights the importance of this strategy in structure reconstruction. When removing the SFFB module, the vehicle’s contours show motion trailing effects. This indicates that efficient multi-frame temporal information fusion is crucial for achieving ideal deblurring results. With both components working together, the reconstruction results achieve the best visual quality. The output closely matches the reference image. These results validate the effectiveness of our MSPF module design.

Figure 8. Visualization of ablation on the MSPF module.

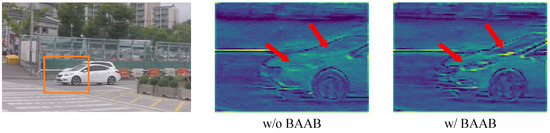

5.3. Effectiveness of Components in the BADE Module

We conducted an in-depth ablation analysis of two components of the BADE module: the blur-aware attention block (BAAB), which uses sharpness priors, and deformable convolution alignment based on optical flow residuals. As shown in Tables 5 and 7, adding BAAB alone yields a limited improvement (0.85 dB). However, incorporating BAAB on top of deformable convolution alignment yields a larger improvement (1.81 dB). This reveals an important synergy between BAAB and the feature alignment operation.

Table 7. Ablation study for BADE module design on the GoPro dataset.

To better understand BAAB’s working mechanism, we visualize intermediate feature maps in Figure 9. Without BAAB, the vehicle headlight area suffers more interference from blurred pixels. This leads to decreased detail restoration quality. BAAB adaptively modulates feature responses using sharpness prior information. It enhances feature responses in sharp regions while suppressing them in blurred areas. The combination of BAAB and deformable convolution alignment maximizes their respective strengths. BAAB provides sharpness awareness to help identify and preserve features in clear regions. Meanwhile, deformable convolution ensures effective temporal propagation and fusion of these valuable features. This synergy enables better detail recovery in complex dynamic scenes.

Figure 9. Feature map visualization of ablation on the blur-aware attention block (BAAB).

6. Conclusions

In this paper, we propose a novel wavelet-based, blur-aware decoupled network (WBDNet). Through wavelet transform, we decompose the deblurring task into two subtasks: structure restoration and detail enhancement. For the low-frequency domain, we design a multi-scale progressive fusion (MSPF) module that effectively integrates multi-frame structural information through a multi-scale feature fusion strategy and structure feature fusion block. For the high-frequency domain, we introduce a blur-aware detail enhancement (BADE) module that combines a blur-aware attention block utilizing sharpness priors and deformable convolution alignment based on optical flow residuals to enhance detail reconstruction. Experimental results on benchmark datasets demonstrate that our proposed method achieves excellent performance in both objective metrics and subjective visual quality.

Author Contributions

Conceptualization, H.W.; methodology, H.W.; software, H.W.; validation, H.W.; formal analysis, R.C.; investigation, H.W.; resources, R.C.; data curation, H.W.; writing—original draft preparation, H.W.; writing—review and editing, R.C. and P.P.; visualization, H.W.; supervision, R.C.; project administration, R.C.; funding acquisition, R.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research project was financially supported by the Faculty of Informatics, Mahasarakham University. The APC was funded by Mahasarakham University, Thailand.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors upon request.

Conflicts of Interest

The authors declare no conflicts of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).