Abstract

To improve intersection traffic flow and reduce vehicle energy consumption and emissions at intersections, a signal optimization method based on deep reinforcement learning (DRL) is proposed. The algorithm uses Rainbow DQN as the core framework, incorporating vehicle position, speed, and acceleration information into the state space. The reward function simultaneously considers two objectives: reducing vehicle waiting times and minimizing carbon emissions, with the vehicle queue length as a weighted factor. Additionally, an ACmix module, which integrates self-attention mechanisms and convolutional layers, is introduced to enhance the model’s feature extraction and information representation capabilities, improving computational efficiency. The model is tested using an actual intersection as the study object, with a signal intersection simulation built in SUMO. The proposed approach is compared with traditional Webster signal timing, actuated signal timing, and control strategies based on DQN and D3QN models. The results show that the proposed strategy, through real-time signal timing adjustments, reduces the average vehicle waiting time by approximately 27.58% and the average CO2 emissions by about 7.34% compared with the actuated signal timing method. A comparison with DQN and D3QN models further demonstrates the superiority of the proposed model, achieving a 15% reduction in average waiting time and a 6.5% reduction in CO2 emissions. The model’s applicability is validated under various scenarios, including different proportions of electric vehicles and traffic volumes. This study aims to provide a flexible signal control strategy to enhance intersection vehicle flow and reduce carbon emissions. It offers a reference for the development of green, intelligent transportation systems and holds practical significance for promoting urban carbon reduction efforts.

1. Introduction

Signal control systems have become crucial traffic management tools for mitigating congestion at urban intersections and reducing pollution. Fixed-time signal control, actuated signal control, and adaptive signal control are traditional traffic signal control systems [1,2]. Widely used systems such as SCATS, RHODES, and SCOOT still operate on deterministic control strategies [3,4,5]. These systems are generally criticized for their lack of adaptability, predictive capability, and real-time responsiveness, as well as their limited effectiveness in reducing traffic congestion and pollutant emissions. As traffic volume continues to increase, unconventional intersection designs such as flow direction restrictions and U-turn exits [6], continuous-flow intersections [7], staggered intersections [8], and left-turn intersections at exit points [9,10] have been proposed. However, these new intersection design methods often face the challenge of substantial engineering work in practical applications. The increasing complexity and time-variance of traffic systems have also led to the emergence of intelligent signal control methods, including those based on game theory [11], heuristic algorithms [12], and data-driven approaches [13]. Researchers have applied methods such as neural networks [14], particle swarm optimization [15], and genetic algorithms [16] to traffic signal control in an effort to enhance the system’s global optimization capability. However, these algorithms face inherent issues, including low computational accuracy, difficulty in convergence, and slow convergence speeds. These limitations restrict their effectiveness in finding optimal traffic signal timing solutions and improving the throughput efficiency of road networks.

Meanwhile, with the rapid development of data-driven methods, Deep Reinforcement Learning (DRL) has emerged as a powerful technology for traffic signal control. DRL combines reinforcement learning with deep neural networks, offering advantages such as high adaptability and strong learning capability. By leveraging trial-and-error learning, DRL can overcome the decision-making limitations of traditional models and find optimal strategies in high-dimensional environments. As a result, it has gradually become a popular method for optimizing intersection signals [17,18]. For instance, Lu et al. [19] proposed a signal optimization strategy based on a competitive double deep Q-network (3RDQN) algorithm, which introduced an LSTM network to reduce the algorithm’s reliance on state information, effectively improving intersection throughput. Chen et al. [20] presented an intersection signal timing optimization method based on a hybrid proximal policy optimization (PPO) approach. By parameterizing signal control actions, this method reduced the average vehicle travel time by 27.65% and the average queue length by 23.65%. Dong et al. [21] introduced an adaptive traffic signal priority control framework based on multi-objective DRL, which allocated weights based on vehicle type and passenger load to balance competing objectives within a public transport signal priority strategy. Results indicated that, under mixed traffic conditions, this framework performed best at a 75% Connected and Automated Vehicle (CAV) penetration rate, with the highest efficiency and safety achieved for buses, cars, and CAVs. The reward functions used in DRL-based intersection signal timing models typically consider traffic parameters such as queue length, waiting time, accumulated delay, vehicle speed, vehicle count, number of stops, and sudden braking events [22,23,24,25,26,27]. 
These reward functions are computed by weighting some or all of these parameters to achieve a suitable trade-off between traffic efficiency and safety.

As pollution and emissions have increasingly become a global focus, road transport systems are now recognized as one of the major sources of fuel waste and air pollution. Frequent acceleration and deceleration, as well as prolonged idling at intersections, contribute to significant traffic disruptions, leading to inefficiencies and energy wastage. More and more researchers are considering the reduction in pollutant emissions as one of the key objectives in intersection optimization. Coelho et al. [28] found that, in addition to speed-limiting effects, intersection signal control can also lead to increased emissions. Yao et al. [29] calibrated emission factors based on a vehicle power-to-weight ratio model and optimized signal control with the goal of minimizing both vehicle delays and emissions. Chen et al. [30] proposed a traffic signal control optimization method based on a coupled model that integrates macro-level traffic analysis and macro-level emission estimation. By focusing on vehicle emissions as the optimization target, they employed a genetic algorithm to optimize signals, effectively reducing vehicle travel time and emissions. Lin et al. [31] and Ding et al. [32] incorporated exhaust emissions into optimization objectives, constructing a multi-objective timing optimization model that considered intersection delay time, stop frequency, queue length, and exhaust emissions. Their model demonstrated significant improvements in both traffic and environmental benefits. Liu et al. [33] proposed a bus priority pre-signal model aimed at reducing fuel consumption and carbon emissions. Under the premise of bus priority, the “red first, then green” principle for pre-signals was introduced. Results indicated that the model significantly reduced carbon emissions at intersections, as well as delays and stop frequencies for buses. Zhang et al. 
[34] introduced an adaptive Meta-DQN Traffic Signal Control (MMD-TSC) method, which incorporated a dynamic weight adaptation mechanism, simultaneously optimizing traffic efficiency and energy savings while considering per capita carbon emissions as an energy metric. Compared to fixed-time TSC, the MMD-TSC model improved energy utilization efficiency by 35%. Wang et al. [35] proposed an intersection signal timing optimization method based on the D3QN model in mixed CAV (Connected and Automated Vehicle) and HV (Human-Driven Vehicle) scenarios. Using the VSP power-to-weight algorithm to calculate dynamic vehicle emissions, this method significantly reduced both vehicle carbon emissions and waiting times. Scholars have increasingly prioritized reducing energy consumption and emissions as key objectives for signal optimization and have demonstrated the effectiveness of DRL-based intersection signal optimization models in achieving carbon reduction [36,37,38].

In summary, existing research has successfully applied deep reinforcement learning (DRL) algorithms for multi-objective traffic signal control optimization, but there are still some shortcomings in the following areas: (1) Methodological limitations: Deep reinforcement learning algorithms such as DQN, DDQN, and D3QN have been widely used in traffic signal control optimization. Matteo Hessel et al. [39] proposed the Rainbow DQN algorithm, which integrates six advanced modules and has shown significant advantages in areas like lane-changing and path planning [40,41]. However, current studies have not yet applied Rainbow DQN to intersection signal timing optimization. (2) Carbon emission optimization in DRL: Existing literature has demonstrated the potential of DRL in carbon emission optimization, with most studies focusing on fuel-powered vehicles. However, with the development of new energy electric vehicles (EVs), the proportion of EVs is expected to rise in the future, and the mixed operation of fuel-powered and electric vehicles will become a prevailing trend. He et al. [42] pointed out that there are significant differences in carbon emissions between fuel-powered and electric vehicles. Therefore, in optimizing signal timing, it is essential to consider the carbon emission differences between fuel and electric vehicles and select appropriate carbon emission models for accurate calculation. To date, there is a lack of signal optimization strategies based on DRL that focus on carbon reduction in scenarios involving both fuel-powered and electric vehicles.

To fill the gaps mentioned above, this study explores the use of the Rainbow DQN algorithm for multi-objective optimization of urban intersection signals, fully leveraging the capabilities of DRL algorithms in optimizing signal timing, improving intersection traffic efficiency, and reducing energy consumption and pollutant emissions. The main contributions of this paper are as follows:

(1) Based on the Rainbow DQN algorithm framework, the ACmix module [43] is introduced to combine the advantages of convolution and self-attention mechanisms. By utilizing V2X (Vehicle-to-Everything) technology to obtain dynamic vehicle state information, the traffic signal control actions are defined as parameterized actions. A reward function is constructed with the aim of reducing carbon emissions and improving traffic efficiency while considering the differences between fuel-powered vehicles and electric vehicles. Different methods are employed to accurately calculate the instantaneous carbon emissions of vehicles, and the signal timing is dynamically adjusted based on real-time traffic flow at the intersection.

(2) The model’s effectiveness is validated through a comparative analysis with DQN, D3QN, Webster signal timing, and actuated signal timing methods, using real-world traffic data from an actual intersection. Finally, the model’s applicability is tested under various scenarios, including different proportions of electric vehicles, varying electric vehicle carbon emission factors, different traffic volumes, and the consideration of autonomous vehicle penetration rates.

2. Model Construction

2.1. Markov Decision Process

The classic Markov Decision Process (MDP) is defined by a five-tuple $\langle S, A, P, R, \gamma \rangle$. Here, $S$ represents the state space; $A$ denotes the action space; $P$ is the state transition probability function, expressed as $P(s' \mid s, a)$, representing the probability of transitioning to the next state $s'$ after taking action $a$ in state $s$; $R$ is the reward function, expressed as $R(s, a)$, indicating the feedback received after executing action $a$ in state $s$; and $\gamma$ is the discount factor, which determines how strongly future rewards are weighted relative to immediate ones, with $\gamma \in [0, 1]$. Intersection signal control, as a traffic management strategy, can optimize traffic flow and reduce congestion. By adjusting signal timing through an intelligent signal system, intersections can be managed flexibly according to real-time traffic flow, thereby optimizing intersection performance. The signal control problem can therefore be regarded as an MDP.

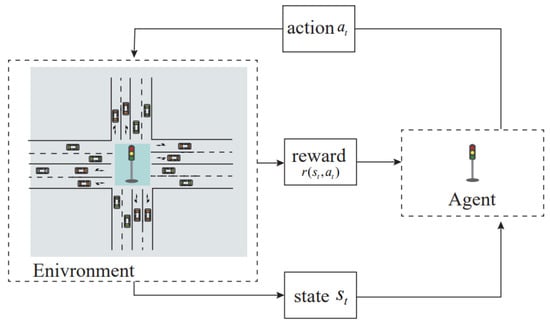

In DRL-based traffic signal control, the agent is represented by the traffic signal lights, and the environment corresponds to the road traffic conditions. In the decision-making process, the agent uses the input state to select appropriate actions and receives rewards as feedback from the environment. By designing suitable algorithms for the agent and training it to interact with the environment, the system can optimize signal timing and enable the agent to autonomously learn eco-friendly control strategies. The overall framework is illustrated in Figure 1.

Figure 1.

Agent-based intersection signal control framework.

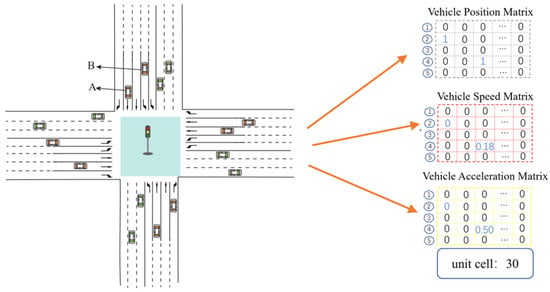

2.2. State Space

In urban intersections, the state refers to environmental variables that influence the agent’s decision-making process. Roadside equipment can use V2X technologies to obtain real-time vehicle information and make driving decisions based on the vehicle’s status and surrounding traffic environment. In this study, the state space designed for the agent includes information on vehicle position, speed, and acceleration. The Discrete Traffic State Encoding method is used to discretize the state space, thereby reducing its complexity and enhancing the expressiveness of the state information. The state space covers the approach lanes within 150 m of the stop line, divided into 5 m intervals, resulting in a state space matrix of size 3 × 19 × 30. To represent position information, elements 0 and 1 are used to indicate whether a vehicle is present at that position. When applying Min–Max normalization to vehicle speed and acceleration information, the calculation formula is as follows:

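(1) Normalization of vehicle instantaneous speed

$$v' = \frac{v - v_{\min}}{v_{\max} - v_{\min}}$$

where $v'$ represents the instantaneous normalized speed; $v$ represents the instantaneous speed; and $v_{\min}$ and $v_{\max}$ represent the minimum and maximum speeds.

(2) Normalization of vehicle instantaneous acceleration

① When the vehicle acceleration is positive, the formula is as follows:

$$a' = \frac{a - a_{\min}}{a_{\max} - a_{\min}}$$

where $a'$ represents the instantaneous normalized acceleration; $a$ represents the instantaneous acceleration; $a_{\min}$ represents the minimum acceleration, set to 0.0 m/s²; and $a_{\max}$ represents the maximum acceleration, set to 2.6 m/s².

② When the vehicle acceleration is negative, the formula is as follows:

$$d' = \frac{d - d_{\min}}{d_{\max} - d_{\min}}$$

Here, $d'$ represents the instantaneous normalized deceleration; $d$ represents the instantaneous deceleration; $d_{\min}$ represents the minimum deceleration, set to 0.0 m/s²; and $d_{\max}$ represents the maximum deceleration, set to −9.0 m/s².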

Taking the state space of the north entrance road as an example, the position information matrix corresponds to vehicles A and B at 0 m and 15 m from the stop line, and the processed velocity and acceleration information is entered into the matrix as illustrated in Figure 2.

Figure 2.

The state space of the northern entrance.
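The encoding described above can be illustrated for a single lane. This is a minimal sketch, not the authors' implementation: the function names, the `V_MAX` bound (taken here as the 50 km/h speed limit), the clamping to [0, 1], and the choice to store normalized deceleration as a positive value are all assumptions. The full state would stack such rows over all 19 approach lanes to form the 3 × 19 × 30 matrix.

```python
from typing import List, Tuple

CELL_M = 5.0                      # cell length (from the text)
RANGE_M = 150.0                   # encoded distance from the stop line (from the text)
N_CELLS = int(RANGE_M / CELL_M)   # 30 cells per lane
A_MAX = 2.6                       # m/s^2, maximum acceleration (from the text)
D_MAX = -9.0                      # m/s^2, maximum deceleration (from the text)
V_MAX = 13.89                     # m/s, ASSUMED bound (50 km/h speed limit)


def min_max(x: float, lo: float, hi: float) -> float:
    """Min-Max normalization of x with respect to the bounds lo and hi."""
    return (x - lo) / (hi - lo)


def clamp01(x: float) -> float:
    """Keep a normalized value inside [0, 1] (assumed safeguard)."""
    return min(max(x, 0.0), 1.0)


def encode_lane(
    vehicles: List[Tuple[float, float, float]]
) -> Tuple[List[float], List[float], List[float]]:
    """Encode one lane as (position, speed, acceleration) rows.

    Each vehicle is (distance_to_stop_line_m, speed_ms, accel_ms2).
    """
    pos = [0.0] * N_CELLS
    spd = [0.0] * N_CELLS
    acc = [0.0] * N_CELLS
    for dist, v, a in vehicles:
        if not 0.0 <= dist < RANGE_M:
            continue  # vehicle is outside the encoded range
        cell = int(dist // CELL_M)
        pos[cell] = 1.0
        spd[cell] = clamp01(min_max(v, 0.0, V_MAX))
        # Positive and negative accelerations use different bounds.
        bound = A_MAX if a >= 0 else D_MAX
        acc[cell] = clamp01(min_max(a, 0.0, bound))
    return pos, spd, acc
```

With vehicles at 0 m and 15 m from the stop line, as in the north-entrance example, cells 0 and 3 of the position row are set to 1.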

2.3. Action Space

In this study, an adaptive signal phase switching strategy is chosen as the action space. Compared to fixed signal phases, adaptive signal phases allow for more flexible decision-making by the agent. The action space comprises the phase actions listed in Table 1, where right-turning vehicles are allowed to turn right under safe conditions.

Table 1.

Phase action selection.

To minimize disruptions from frequent phase changes, the green time is limited to a minimum of 10 s and a maximum of 50 s, and the yellow time is set to 3 s [19,22]. The agent chooses between two types of actions: extending the current phase’s green time by 5 s, or switching to the next phase after the yellow time has elapsed.
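The timing constraints above can be sketched as a small action mask. This is an illustrative sketch only: the function names, the string labels for the two actions, the four-phase cycle (`n_phases=4`), and the way the minimum and maximum green times are enforced are assumptions, since the text does not spell out these details.

```python
from typing import List, Tuple

MIN_GREEN = 10   # s, from the text
MAX_GREEN = 50   # s, from the text
YELLOW = 3       # s, from the text
EXTEND = 5       # s, green extension per action (from the text)


def allowed_actions(green_elapsed: int) -> List[str]:
    """Return the actions permitted after `green_elapsed` seconds of green."""
    if green_elapsed < MIN_GREEN:
        return ["extend"]              # minimum green not yet served
    if green_elapsed + EXTEND > MAX_GREEN:
        return ["switch"]              # extending would exceed maximum green
    return ["extend", "switch"]


def apply_action(
    phase: int, green_elapsed: int, action: str, n_phases: int = 4
) -> Tuple[int, int, int]:
    """Return (next_phase, next_green_elapsed, seconds_consumed)."""
    if action == "extend":
        return phase, green_elapsed + EXTEND, EXTEND
    # Switching: the yellow interval runs, then the next phase starts.
    return (phase + 1) % n_phases, 0, YELLOW
```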

2.4. Reward Function

Deep reinforcement learning aims to maximize rewards. The agent learns a strategy based on the rewards obtained from each action taken to make better action decisions. The model’s reward function is established with a focus on reducing CO2 emissions and improving intersection traffic efficiency.

(1) Traffic efficiency

(2) Carbon emissions

To make more accurate decisions based on vehicle CO2 emissions, real-time speed and acceleration information are obtained using roadside sensors. The instantaneous carbon emissions of fuel vehicles are computed through the Vehicle Specific Power method (VSP), while the instantaneous carbon emissions of electric vehicles are computed from the perspective of energy conversion efficiency.

① Instantaneous CO2 emissions of conventional gasoline vehicles

The instantaneous energy consumption of gasoline vehicles is determined using the VSP method, known for its simplicity and wide applicability. As noted in the literature [44], the formula for VSP is as follows:

$$\mathrm{VSP} = v\,(1.1a + 9.81\sin\theta + 0.132) + 0.000302\,v^{3}$$

where $v$ and $a$ denote the instantaneous speed (m/s) and acceleration (m/s²) of the vehicle, respectively, and $\theta$ denotes the road gradient. Given that the study scenario involves urban intersections, we set $\theta = 0$, simplifying the calculation formula as follows:

$$\mathrm{VSP} = v\,(1.1a + 0.132) + 0.000302\,v^{3}$$

According to the simplified formula, the calculation of VSP only requires the vehicle’s instantaneous speed and instantaneous acceleration. The accurate division of specific power (VSP) intervals is crucial for the precision of vehicle exhaust emission measurements. Frey [45] divided the VSP into 14 operational condition intervals, with the emission rate for each interval representing the average instantaneous emission rate of various pollutants. The instantaneous CO2 emissions of a fuel-powered vehicle can be determined based on the CO2 emission rates corresponding to different specific power intervals, as shown in Table 2.

Table 2.

Vehicle CO2 emission rates for different VSP intervals.
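The VSP computation and interval lookup can be sketched as follows. This is a hedged illustration: the coefficients are those of the widely used light-duty VSP expression, and the bin edges are the commonly cited boundaries of the 14-mode scheme attributed to Frey; whether [44] and Table 2 use exactly these values is an assumption. The function names are hypothetical, and the CO2 rates of Table 2 are deliberately not reproduced here.

```python
from bisect import bisect_right

# ASSUMED upper edges (kW/t) separating the 14 VSP modes.
VSP_EDGES = [-2, 0, 1, 4, 7, 10, 13, 16, 19, 23, 28, 33, 39]


def vsp(speed_ms: float, accel_ms2: float, grade: float = 0.0) -> float:
    """Vehicle specific power (kW/t); grade = 0 gives the simplified form."""
    return (speed_ms * (1.1 * accel_ms2 + 9.81 * grade + 0.132)
            + 0.000302 * speed_ms ** 3)


def vsp_mode(value: float) -> int:
    """Map a VSP value to its mode index in 1..14."""
    return bisect_right(VSP_EDGES, value) + 1
```

A per-second CO2 estimate would then be obtained by looking up the emission rate of the returned mode in Table 2.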

② Instantaneous CO2 emissions of new energy electric vehicles

From the perspective of energy conversion, the instantaneous electricity consumption of electric vehicles is converted into instantaneous CO2 emissions using the national grid’s CO2 emission factor. The formula for calculating the electricity consumed to charge electric vehicle batteries at time is as follows:

where represents the instantaneous total electricity consumption of electric vehicles; represents the charging loss rate, set at 0.97; and represents the actual power consumption at the time .

As per the Ministry of Environmental Protection Climate Directive [2023] No.43 [46], the CO2 emission factor is specified as . The formula for calculating carbon emissions from electric vehicles is as follows:

where represents the actual carbon emissions per second of the vehicle.
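The energy-conversion approach for electric vehicles can be sketched as below. This is an assumption-laden illustration rather than the paper's exact formula: it treats the 0.97 value as a charging efficiency (so grid energy equals consumed energy divided by 0.97), ignores regenerated energy, and takes the grid emission factor as a required argument because its value is not reproduced in the text. The function and parameter names are hypothetical.

```python
def ev_co2_grams(
    power_kw: float,
    dt_s: float,
    grid_factor_kg_per_kwh: float,
    charge_eff: float = 0.97,
) -> float:
    """CO2 (g) attributed to an EV over a time step of dt_s seconds.

    power_kw: instantaneous traction power drawn from the battery.
    grid_factor_kg_per_kwh: grid CO2 emission factor (caller supplies it).
    charge_eff: ASSUMED interpretation of the 0.97 charging-loss value.
    """
    # Negative power (regeneration) is ignored in this sketch.
    consumed_kwh = max(power_kw, 0.0) * dt_s / 3600.0
    charged_kwh = consumed_kwh / charge_eff  # energy drawn from the grid
    return charged_kwh * grid_factor_kg_per_kwh * 1000.0
```

For example, with a purely illustrative factor of 0.5 kg/kWh and no charging loss, 36 kW drawn for 1 s corresponds to 0.01 kWh and 5 g of CO2.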

- (3)

- Comprehensive reward function

Combining both carbon emissions and traffic efficiency, the comprehensive reward function (Reward–CO2Reduction) is as follows:

where the two weighting factors are determined by the mean queue length of vehicles at the intersection. The formula for calculating these weights is as follows:

In this formula, the weights depend on the average number of queued vehicles per lane and on an average queue threshold, which can be adjusted to modify the weights. When fuel-powered and electric vehicles share the road, an idling electric vehicle emits less carbon than an idling fuel-powered vehicle, so the effect of optimizing the carbon emission index depends on the current vehicle composition at the intersection. The weight coefficients of carbon emissions and waiting time are therefore adjusted dynamically, allowing the agent to find the optimal solution. After multiple experiments, the threshold is set to 3.

3. Methodology

3.1. DQN

Reinforcement learning enables an agent to learn the optimal sequence of actions in decision-making problems under uncertain environments. The agent moves between environmental states by taking actions at discrete time steps: when an action is executed, the environment produces a reward and transitions to a new state, which is formalized as an MDP. The agent’s behavior is formalized as a policy $\pi$, which is evaluated by the action-value function $Q^{\pi}(s, a)$. The formula is as follows:

$$Q^{\pi}(s,a) = \mathbb{E}_{\pi}\!\left[\sum_{k=0}^{\infty}\gamma^{k} r_{t+k} \,\middle|\, s_t = s,\ a_t = a\right] = \mathbb{E}_{\pi}\!\left[r_t + \gamma\, Q^{\pi}(s_{t+1}, a_{t+1}) \,\middle|\, s_t = s,\ a_t = a\right]$$

Here, the first expression is the expected discounted return and the second is the Bellman recursive equation. The optimal policy is derived by calculating Q-values for each state-action pair and applying the Bellman equation, then using a greedy strategy to choose the action with the highest Q-value.

DQN [47] combines Q-learning with deep networks, with key techniques being experience replay and target networks:

- Setting up two neural networks can improve learning stability: one for determining actual values (main network) and one for predicting future values (target network). The target network is represented by a feedforward neural network consisting of three convolutional layers and two fully connected layers.

- A replay buffer D is set up to store experiences. Randomly sampling experiences for training improves data efficiency and reduces the correlation between samples.
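The two techniques above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the configuration used in the study: the class and function names are hypothetical, Q-values are passed in as plain lists instead of being produced by a network, and the discount value is a placeholder.

```python
import random
from collections import deque
from typing import Any, List, Tuple


class ReplayBuffer:
    """Fixed-capacity experience store; the oldest sample is dropped when full."""

    def __init__(self, capacity: int) -> None:
        self.data: deque = deque(maxlen=capacity)

    def push(self, s: Any, a: int, r: float, s_next: Any, done: bool) -> None:
        self.data.append((s, a, r, s_next, done))

    def sample(self, batch_size: int) -> List[Tuple]:
        # Uniform random sampling breaks the correlation between samples.
        return random.sample(list(self.data), batch_size)


def td_target(r: float, q_next_target: List[float], done: bool,
              gamma: float = 0.99) -> float:
    """One-step DQN target; q_next_target comes from the target network."""
    if done:
        return r
    return r + gamma * max(q_next_target)
```

The main network is trained to match `td_target`, while the target network that supplies `q_next_target` is only updated periodically, which is what stabilizes learning.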

3.2. Rainbow DQN

Traditional DQN cannot handle environments with large, continuous state-action spaces. Rainbow DQN [39], an advanced reinforcement learning algorithm, enhances the traditional DQN by integrating six major improvement methods: Double Q-learning, Dueling Q-networks, Prioritized Experience Replay, Multi-step Learning, Distributional RL, and Noisy Nets, thereby improving model performance:

- Double DQN decouples action selection from value estimation when computing the targets, mitigating the overestimation bias of the primary Q-network.

- Prioritized Experience Replay samples experiences with greater loss more frequently to accelerate training.

- Dueling Q-networks modify the DQN architecture to estimate the state value and the action advantages in separate streams.

- Multi-step Learning uses multi-step targets instead of single-step targets to compute the temporal difference error, speeding up the convergence of values.

- Noisy Nets add normally distributed noise parameters to each weight in the fully connected layers and learn them through backpropagation. These noisy linear layers replace the standard ε-greedy exploration strategy used in DQN.

- Distributional RL predicts values as distributions by minimizing the Kullback–Leibler loss, offering deeper insights when evaluating specific states through the distributional Q function.
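Two of the components listed above are easy to illustrate in isolation. The sketch below shows a multi-step return and proportional priority sampling probabilities; the function names and the default exponent and offset in `priority_probs` are assumptions for illustration, not values reported in this paper.

```python
from typing import List


def n_step_return(rewards: List[float], gamma: float,
                  bootstrap: float = 0.0) -> float:
    """Sum of gamma^k * r_k over the N rewards plus gamma^N * bootstrap.

    `bootstrap` is the value estimate of the state reached after N steps.
    """
    g = bootstrap
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def priority_probs(losses: List[float], alpha: float = 0.6,
                   eps: float = 1e-6) -> List[float]:
    """Sampling probabilities proportional to (|loss| + eps) ** alpha."""
    pr = [(abs(x) + eps) ** alpha for x in losses]
    total = sum(pr)
    return [p / total for p in pr]
```

With three rewards of 1, a discount of 0.5, and a bootstrap value of 8, the multi-step return is 1 + 0.5 + 0.25 + 0.125 × 8 = 2.75.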

3.3. Rainbow DQN Algorithm Process

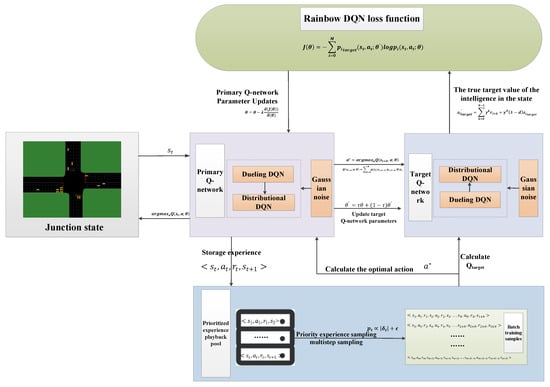

Figure 3 shows the framework of the intersection signal control algorithm based on Rainbow DQN_AM, which consists of two parts: the intersection signal control strategy and neural network parameter updates.

Figure 3.

Framework of the Rainbow DQN_AM algorithm.

The Rainbow DQN intersection signal control algorithm is based on DQN [47], so its algorithmic flow closely resembles that of the DQN algorithm. The specific steps are as follows:

Step 1: set the parameters, including the initial main Q-network parameters θ; the initial target Q-network parameters; the experience replay pool; the discount factor; the smoothing coefficient; the learning rate of the main Q-network; the exploration rate ε; the maximum capacity M of the experience replay pool; the training batch size; the target Q-network update frequency; the number of iterations; the total number of training episodes; and the simulation episode time.

Step 2: initialize the experience replay pool with a capacity of M; initialize the Rainbow DQN main network and its corresponding parameters θ; initialize the target network and its corresponding parameters.

Step 3: start the iteration, generate the traffic scenario, and obtain the initial intersection environment state.

Step 4: Generate a random probability value $p$. If $p < \varepsilon$, randomly select a control action $a_t$ for the traffic signal. If $p \geq \varepsilon$, the network outputs a control action based on the current intersection environment state $s_t$, $a_t = \arg\max_{a} Q(s_t, a; \theta)$.

Step 5: Execute the action $a_t$, and obtain the new intersection state $s_{t+1}$ and the corresponding reward $r_t$. Store the tuple $(s_t, a_t, r_t, s_{t+1})$ as a sample in the experience replay buffer, with the sample priority calculated based on the TD error. If the experience buffer is full, delete the oldest sample record.

Step 6: Network update: The neural network parameters are updated using prioritized experience replay. After the parameters are updated, if the iteration count has not reached the predefined value, proceed to Step 4. Otherwise, proceed to Step 7. The process for updating the neural network parameters is as follows:

(1) The prioritized experience replay strategy is used to sample tuples from the experience pool. It is important to note that, due to the multi-step learning strategy, samples from the current tuple to the next N steps in the future need to be collected.

(2) The optimal action is calculated using the main Q-network in the Double DQN network, as shown in the following formula:

$$a^{*} = \arg\max_{a} Q(s_{t+N}, a; \theta)$$

where $Q(s_{t+N}, a; \theta)$ represents the expected value of the action $a$ taken by the main Q-network at the state $s_{t+N}$. The expected value of the main network is calculated through the distributional output of the Distributional DQN, as shown in the following formula:

$$Q(s, a; \theta) = \sum_{i} z_i \, p_i(s, a; \theta)$$

where $z_i$ represents the value of the $i$-th sample point, and $p_i(s, a; \theta)$ denotes the probability of the $i$-th sample point when the main Q-network takes action $a$ at state $s$.

(3) The target Q-value distribution is computed using the target network, based on the multi-step learning strategy. The formula is as follows:

$$Z_{\text{target}} = \sum_{k=0}^{N-1} \gamma^{k} r_{t+k} + \gamma^{N} Z(s_{t+N}, a^{*}; \theta^{-})$$

where $Z(s_{t+N}, a^{*}; \theta^{-})$ is the Q-value distribution of the optimal action from the target Q network, $\gamma$ is the discount factor, and $Z_{\text{target}}$ represents the true target value of the agent at state $s_t$.

(4) The error between the true value distribution and the estimated value distribution is calculated using the cross-entropy loss function. The formula for the cross-entropy loss function is as follows:

$$L(\theta) = -\sum_{i} m_i \log p_i(s_t, a_t; \theta)$$

where $m_i$ represents the true probability of the $i$-th sample point computed by the target network, and the predicted probability $p_i(s_t, a_t; \theta)$ is jointly computed using Dueling DQN and Distributional DQN.

(5) Update the parameters of the main network: the parameters of the main network are updated by minimizing the loss function using stochastic gradient descent.

(6) Periodically synchronize and update the target network: The parameters of the target Q network are updated using delayed and soft update strategies to mitigate the overestimation problem in the main network. The update formula is as follows:

$$\theta^{-} \leftarrow \tau\,\theta + (1 - \tau)\,\theta^{-}$$

In the equation, $\tau$ is the smoothing factor, representing the degree to which the main Q network’s parameters influence the target network.

Step 7: end of the process.
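The quantities used in the network update of Step 6 can be sketched with plain Python lists. This is an illustrative sketch under stated assumptions, not the study's implementation: function names are hypothetical, distributions are given as lists of probabilities over a fixed set of sample points, network parameters are flattened into a list of floats, and the projection of the shifted target distribution back onto the fixed sample points is omitted.

```python
import math
from typing import List


def expected_q(atoms: List[float], probs: List[float]) -> float:
    """Expected value of a categorical value distribution."""
    return sum(z * p for z, p in zip(atoms, probs))


def double_dqn_action(atoms: List[float],
                      main_probs_per_action: List[List[float]]) -> int:
    """Pick the action whose main-network distribution has the highest mean."""
    qs = [expected_q(atoms, p) for p in main_probs_per_action]
    return max(range(len(qs)), key=qs.__getitem__)


def cross_entropy(target_probs: List[float], pred_probs: List[float],
                  eps: float = 1e-12) -> float:
    """Cross-entropy between the target and predicted distributions."""
    return -sum(m * math.log(p + eps)
                for m, p in zip(target_probs, pred_probs))


def soft_update(target: List[float], main: List[float],
                tau: float) -> List[float]:
    """Blend main-network parameters into the target network."""
    return [tau * w + (1.0 - tau) * w_t for w, w_t in zip(main, target)]
```

In a Double DQN setting, the action chosen by `double_dqn_action` from the main network is then evaluated with the target network's distribution for that action.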

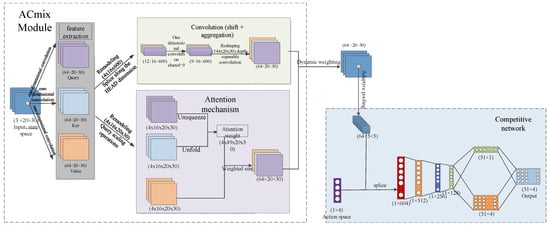

3.4. Algorithm Improvements Based on Rainbow DQN

Based on the Rainbow DQN framework, we designed an efficient and comprehensive Rainbow DQN_AM model by introducing the ACmix module to address the discretized state space. Traditional CNN models excel at extracting local data features but struggle to capture sufficient global information. On the other hand, while LSTM models can capture temporal patterns, they lack effective spatial modeling capabilities. In intersection signal optimization, traffic flow is complex and dynamically changing, which requires models to have strong state information capturing abilities. Moreover, real-time signal optimization demands high computational efficiency to quickly process large amounts of state data. Combining sufficient state information extraction with rapid computation efficiency helps to enhance the model’s decision-making capabilities. Pan [43] explored the relationship between self-attention mechanisms and convolution and proposed the ACmix module, which integrates both. This module retains the advantages of convolutional layers, such as parameter sharing, sparse interactions, and multi-channel processing, allowing it to efficiently learn features, reduce computational load, and enhance the model’s expressiveness. Additionally, by incorporating the self-attention mechanism, the ACmix module can handle long-range dependencies and global information, enabling a more comprehensive capture of global features in intersection traffic flow. The integration of these two modules compensates for CNN’s limitations in capturing global features while maintaining computational efficiency, thereby improving the model’s performance in complex traffic flow environments. The network structure is shown in Figure 4.

Figure 4.

Rainbow DQN_AM network structure diagram.

The pseudocode for the improved Rainbow DQN algorithm is given in Algorithm 1:

| Algorithm 1. Rainbow DQN algorithm |
| Input: Parameters |
| 1: for each training episode do |
| 2: Initialize the intersection road network environment |
| 3: for each time step of the episode do |
| 4: Observe the intersection road network environment; select an action based on the greedy policy and noisy network; execute the action, obtain the new state, and receive a reward |
| 5: Store the tuple as an experience sample in the experience replay buffer |
| 6: If the buffer exceeds its capacity, delete the sample with the lowest priority |
| 7: Sample a batch from the experience replay buffer using the prioritized experience replay strategy |
| 8: for each transition in the batch do: the primary Q-network determines the optimal action |
| 9: Compute the target value |
| 10: Determine the target value distribution based on the target value |
| 11: Update parameters using the cross-entropy loss function |
| 12: Apply the gradient descent step to the main network parameters |
| 13: Update the sample priorities in the experience replay buffer |
| 14: At each target-update interval, update the target network parameters |
| 15: end for |
| 16: end for |
| 17: end for |

4. Experiment and Results

4.1. Simulation Setup

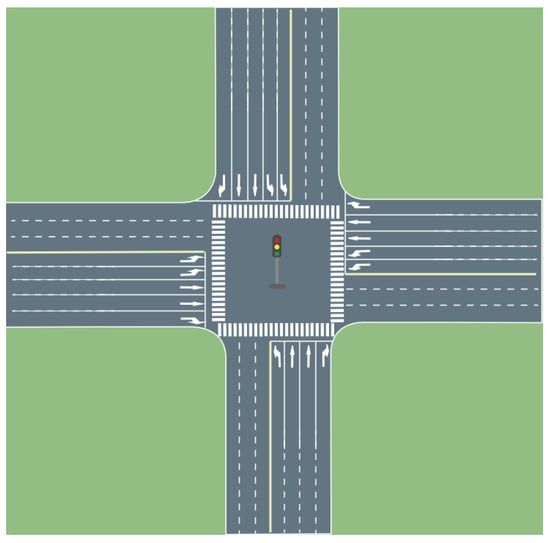

The LiZhou South Road—South City Road intersection in Jinhua City was chosen as the study site, and a simulation model was built in SUMO (version 1.19.0). The intersection’s approach length is 400 m, with a speed limit of 50 km/h and a lane width of 3.75 m. The vehicle length is 5 m. The car-following model adopted is the Intelligent Driver Model (IDM), with a maximum acceleration of 2.6 m/s² [35], a maximum deceleration of −4.5 m/s² [19], a minimum safe following distance of 7.26 m [48,49], and a minimum stopping distance of 2.5 m [22]. The road structure of the simulated intersection is shown in Figure 5.

Figure 5.

The network structure of the intersection.

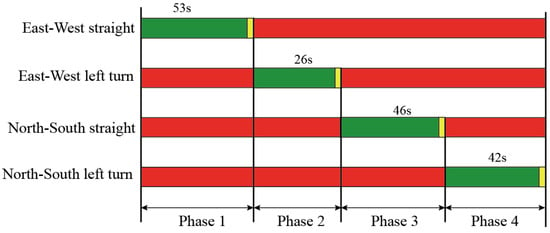

The signal timing data for the LiZhou South Road—South City Road intersection in Jinhua City were collected. The actual signal cycle for the intersection is 179 s, with a yellow light duration of 3 s. Right-turning vehicles at this intersection are not controlled by the traffic signals. The specific phase distribution is shown in Figure 6.

Figure 6.

Actual signal timing of the intersection.

The collected actual traffic flow data during peak hours is 4576 pcu/h, and the vehicle turning ratios at the intersection are shown in Table 3.

Table 3.

Vehicle turning ratios for each approach at the intersection.

Considering the traffic flow characteristics during the morning and evening peak hours on urban roads, the traffic volume during peak periods often exhibits extreme fluctuations, where the flow quickly increases within a short period, maintains a peak state, and then sharply decreases. The Weibull distribution is well suited for capturing the significant fluctuations, extreme values, and nonlinear characteristics of peak traffic, making it ideal for simulating traffic distribution during peak hours. Therefore, traffic volume is generated based on the Weibull distribution. The simulation duration is set to 2 h, based on the collected traffic volume data, and the parameters of the probability distribution function are configured according to the method in reference [35]. The probability density function is as follows:

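$$f(x; \lambda, k) = \frac{k}{\lambda}\left(\frac{x}{\lambda}\right)^{k-1} e^{-(x/\lambda)^{k}}, \quad x \ge 0$$

Here, $\lambda$ is the scale parameter, set to 1, and $k$ is the shape parameter, set to 2.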
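One way to turn this distribution into vehicle departure times is sketched below. It draws Weibull samples with scale 1 and shape 2, sorts them, and rescales them linearly onto the 2 h (7200 s) simulation window. The linear rescaling step and the vehicle count are assumptions for illustration; the text only states that the parameters follow the method in [35]. The function name is hypothetical.

```python
import random

def generate_departure_times(n_vehicles, sim_seconds=7200, shape=2.0,
                             scale=1.0, seed=0):
    """Return sorted integer departure times (s) following a Weibull profile."""
    if n_vehicles < 2:
        raise ValueError("need at least two vehicles to rescale the samples")
    rng = random.Random(seed)
    # random.weibullvariate takes (scale, shape) in that order.
    draws = sorted(rng.weibullvariate(scale, shape) for _ in range(n_vehicles))
    lo, hi = draws[0], draws[-1]
    # Assumed step: map the sampled range linearly onto [0, sim_seconds].
    return [round((d - lo) / (hi - lo) * sim_seconds) for d in draws]

times = generate_departure_times(1000)  # 1000 is a placeholder vehicle count
print(times[:5], times[-1])
```

With shape 2, the samples cluster early in the window and thin out toward the end, which gives the rise, peak, and decline profile described above.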

4.2. Comparison Experiment

(1) A comparison was conducted using D3QN, DQN, actual signal timing, Webster signal timing, actuated signal timing, and the Rainbow DQN algorithm-based signal timing. Among them, Webster signal timing and actuated signal timing are both traditional methods for signal optimization. For comparison, we selected two mainstream reinforcement learning models: DQN and D3QN. The DQN model serves as the foundational model for Rainbow DQN, while D3QN has garnered significant attention and is considered representative [50,51]. The DQN model configuration is based on the approach in [47], with the addition of a CNN module. The model setup and modules used in the D3QN model follow the configuration described in [35].

The actual signal timing is shown in Figure 6, and the key parameter settings for Webster signal timing and actuated signal timing are provided in Table 4. The Webster signal timing parameters are calculated based on the actual intersection’s road network structure, traffic volume, and phase distribution to determine the optimal fixed signal timing. The actuated signal timing is based on the Webster signal timing parameters, with the maximum and minimum durations set according to [19]. The hyperparameters required for the DQN and D3QN algorithms are consistent with those used in Rainbow DQN.
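For readers unfamiliar with Webster's method, the sketch below shows the classical calculation: the optimal cycle length is (1.5L + 5) / (1 − Y), where L is the total lost time per cycle and Y is the sum of the critical flow ratios, and the effective green time is split in proportion to each phase's flow ratio. The flow ratios and the 3 s lost time per phase used here are placeholder values, not the parameters in Table 4.

```python
def webster_timing(flow_ratios, lost_time_per_phase=3.0):
    """Webster optimal cycle length and effective green times (s).

    flow_ratios: critical flow ratio (flow / saturation flow) of each phase.
    lost_time_per_phase is an assumed value.
    """
    total_ratio = sum(flow_ratios)
    if total_ratio >= 1.0:
        raise ValueError("intersection is oversaturated (Y >= 1)")
    lost_time = lost_time_per_phase * len(flow_ratios)
    cycle = (1.5 * lost_time + 5.0) / (1.0 - total_ratio)
    # Distribute the effective green time in proportion to the flow ratios.
    greens = [(cycle - lost_time) * y / total_ratio for y in flow_ratios]
    return cycle, greens

cycle, greens = webster_timing([0.25, 0.15, 0.20, 0.10])  # placeholder ratios
print(round(cycle, 1), [round(g, 1) for g in greens])
```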

Table 4.

Signal timing parameter settings for the control group.

To further validate the effectiveness of the reward function, a comparative reward function, Reward–Wait Time, was established. The calculation formula is as follows:

where the two waiting-time terms represent the total vehicle waiting time on the intersection’s entry lanes at two consecutive time steps, and the coefficient is set to 0.9.

In summary, the experimental groups and control groups are set up as shown in Table 5.

Table 5.

Control group experimental design.

(2) Analyze the control impact of the model under different scenarios, including varying CO2 emission factors for electric vehicles, different proportions of electric vehicles, varying traffic volumes, and considering the penetration rate of autonomous vehicles.

4.3. Experimental Assumptions

(1) Roadside equipment can obtain real-time vehicle information through vehicular wireless communication technology (V2X). Communication delays and information errors are not considered.

(2) The study focuses only on passenger cars, ignoring the impact of vehicle size. From the perspective of vehicle power type, vehicles are categorized into two types: gasoline-powered and electric vehicles.

(3) All vehicles are equipped with the same devices, with the only difference being their power sources. Given the instability of start–stop devices in the current traffic environment [52,53], the impact of these devices on the model results is neglected.

(4) According to the 2023 Global Electric Vehicle Outlook [54], the current mix of gasoline and electric vehicles in traffic is set at 71% and 29%, respectively.

(5) The impact of pedestrians, non-motorized vehicles, and weather conditions on the road system is not considered [55].

(6) The study scenario is an urban intersection with a flat road surface, where factors such as slope have a negligible effect on the VSP-based power model [49].

4.4. Training Process

The Rainbow DQN_AM algorithm is trained by gradient descent with the Adam optimizer. The batch size is 64 in each iteration. Each epoch consists of 2880 simulation steps, and the model is trained for 100 epochs using real traffic flow data. The main hyperparameters of the algorithm are listed in Table 6, with values referenced from [39]. The learning rate α, discount factor γ, and experience replay capacity M are determined through experimental tuning.
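The training schedule described above can be sketched as a simple loop. The example below only shows the bookkeeping (100 epochs, 2880 steps per epoch, mini-batches of 64 drawn from a bounded replay memory). It uses uniform sampling and placeholder transitions as a simplification, whereas Rainbow DQN uses prioritized replay, and the buffer capacity is a made-up value rather than the tuned M.

```python
import random
from collections import deque

BATCH_SIZE = 64          # from the text
STEPS_PER_EPOCH = 2880   # from the text
EPOCHS = 100             # from the text

def run_schedule(capacity=10_000, epochs=EPOCHS, steps=STEPS_PER_EPOCH, seed=0):
    """Count mini-batch updates performed under the stated schedule."""
    rng = random.Random(seed)
    buffer = deque(maxlen=capacity)  # oldest transitions are dropped when full
    updates = 0
    for _ in range(epochs):
        for step in range(steps):
            # Placeholder (state, action, reward, next_state) tuple.
            buffer.append((step, rng.randrange(4), rng.random(), step + 1))
            if len(buffer) >= BATCH_SIZE:
                batch = rng.sample(buffer, BATCH_SIZE)
                assert len(batch) == BATCH_SIZE
                updates += 1  # a real agent would take one Adam step here
    return updates, len(buffer)

# Shortened run for illustration: 2 epochs of 100 steps.
print(run_schedule(epochs=2, steps=100))  # (137, 200)
```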

Table 6.

Main hyperparameters of Rainbow DQN_AM.

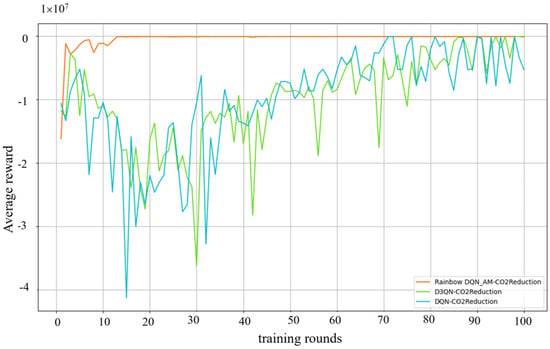

The network parameters from the training cycle with the highest average reward value were selected for model validation. Specifically, the parameters from the 92nd, 99th, and 71st generations were chosen for the Rainbow DQN_AM, D3QN, and DQN algorithms. As shown in Figure 7, the average reward values for the models indicate that the Rainbow DQN_AM algorithm exhibits the highest learning efficiency and fastest convergence, stabilizing after 14 generations. In contrast, the average reward curves for the DQN and D3QN algorithms show significant fluctuations. From the 5th to the 20th generation, the reward values drop sharply, and from the 20th to the 70th generation, the reward values fluctuate and rise but tend towards zero. Overall, the reward curves for these algorithms remain lower than that of the Rainbow DQN_AM algorithm.

Figure 7.

The average reward of the training process.

4.5. Training Results

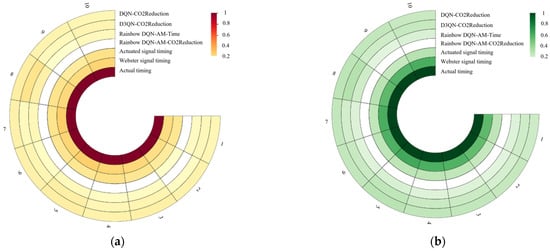

Using peak hour traffic data (4576 pcu/h) and the collected real traffic flow vehicle turn ratios, 10 sets of test traffic flows were randomly generated. The validity of the proposed signal control scheme was confirmed by assessing the average CO2 emissions and vehicle waiting times throughout the simulation period.

Figure 8 compares average vehicle CO2 emissions and average waiting times. Min–Max normalization was applied to both metrics, and the results are presented as a heatmap. Each ring in the heatmap represents the optimization effectiveness of one signal control scheme, with lighter colors indicating better results. Figure 8a shows the results for average vehicle waiting time. Compared with traditional signal control methods and the actual signal timing, the DRL-based signal control methods provided better optimization of average vehicle waiting time at the intersection. Among these, the Rainbow DQN_AM-CO2Reduction scheme performed best and was only slightly outperformed by the Rainbow DQN_AM-Time scheme in the second round of testing. Figure 8b displays the optimization effects on average vehicle CO2 emissions. As in Figure 8a, the DRL-based control schemes generally outperformed the traditional signal control algorithms across all test rounds. Furthermore, the Rainbow DQN_AM-CO2Reduction scheme consistently showed the best CO2 reduction across all 10 test rounds. The results indicate that the two schemes using the Rainbow DQN_AM algorithm outperform the DRL schemes using D3QN and DQN.
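The Min–Max normalization used for the heatmap maps each value to the interval [0, 1] by subtracting the minimum and dividing by the range. A minimal sketch follows. The input values are placeholders, and whether the normalization is applied per test round or across all rounds is not stated in the text.

```python
def min_max_normalize(values):
    """Scale values linearly so the smallest maps to 0 and the largest to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values identical: no spread to normalize.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

# Placeholder waiting times (s) for several schemes in one test round.
print(min_max_normalize([19.0, 21.0, 23.0, 27.0]))
```

Under this scaling, the scheme with the lowest waiting time or emissions receives 0, which corresponds to the lightest color if lighter means better.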

Figure 8.

The heatmap of optimization effects of different signal control strategies: (a) Average vehicle waiting times; (b) average vehicle CO2 emissions.

A statistical significance analysis was conducted on the results for two indicators: average vehicle waiting time and average CO2 emissions across different models. The Rainbow DQN_AM-CO2Reduction algorithm represents the optimized result, while the others serve as control groups. Data from 10 sets of traffic flow were used to perform an analysis of variance (ANOVA) to compare the indicator values from the control groups and the Rainbow DQN_AM-CO2Reduction algorithm. The results, shown in Table 7, demonstrate that there are significant differences between the control group models and the proposed model in both average waiting time and average CO2 emissions, with p-values less than 0.05. This indicates that the optimization effects of the model are statistically significant and representative.
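To make the test concrete, the sketch below computes the one-way ANOVA F statistic for two groups of results, such as the proposed scheme against one control scheme. The sample values are invented for illustration and are not the data behind Table 7. In practice, a routine such as `scipy.stats.f_oneway` returns both the F statistic and the p-value.

```python
def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of the observations around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    f_stat = (ss_between / (k - 1)) / (ss_within / (n - k))
    return f_stat, k - 1, n - k

proposed = [19.1, 19.8, 18.9, 19.5, 19.2]  # hypothetical waiting times (s)
control = [26.4, 27.1, 26.0, 26.9, 26.6]   # hypothetical waiting times (s)
print(one_way_anova_f(proposed, control))
```

A large F value relative to the critical value for the given degrees of freedom corresponds to a small p-value, which is the criterion reported in Table 7.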

Table 7.

Statistical significance analysis of optimization indicators.

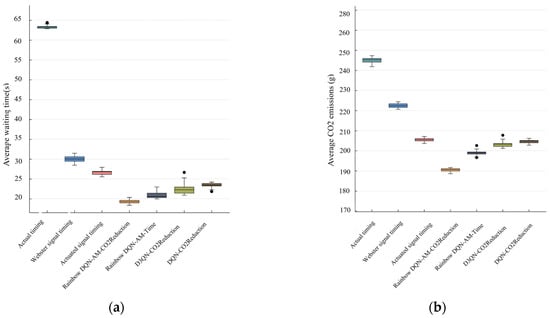

To analyze the degree of optimization of the different schemes and the model’s robustness under real traffic conditions, box plots of the average vehicle waiting time and average CO2 emissions are shown in Figure 9. Figure 9a shows that the average vehicle waiting time under the Rainbow DQN_AM-CO2Reduction signal control scheme is 19.30 s. This represents reductions of 7.48%, 14.50%, and 27.58% compared with the Rainbow DQN_AM-Time scheme, the D3QN-CO2Reduction scheme, and the actuated signal timing scheme, respectively. Compared with the actual timing scheme, the reduction is as high as 57.95%. As shown in Figure 9b, the average CO2 emissions per vehicle for the Rainbow DQN_AM-CO2Reduction scheme are only 190.33 g, which is 4.37%, 6.40%, and 7.34% lower than the Rainbow DQN_AM-Time scheme, the D3QN-CO2Reduction scheme, and the actuated signal timing scheme, respectively. In a scenario where electric vehicles account for 29% of the traffic, the average CO2 emissions are optimized to a lesser extent than the average waiting time due to the low-emission nature of the electric vehicles themselves. Additionally, the Rainbow DQN_AM-CO2Reduction scheme shows standard deviations of 0.56 for average vehicle waiting time and 1.06 for average CO2 emissions, significantly outperforming the Rainbow DQN_AM-Time scheme, which uses the same algorithm but with a different reward function. These results indicate that a reward function considering both waiting time and CO2 emissions helps the agent learn a more reliable control strategy.
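The percentage reductions can be converted back into the absolute values they imply for each baseline, which helps when reading the box plots. The short sketch below does this arithmetic. The resulting baseline values are derived here from the reported figures and are not quoted from the paper.

```python
def implied_baseline(value, reduction_pct):
    """Baseline value implied by `value` being `reduction_pct` percent lower."""
    return value / (1.0 - reduction_pct / 100.0)

# Waiting time: 19.30 s for the proposed scheme, reductions from the text.
waiting_reductions = {
    "Rainbow DQN_AM-Time": 7.48,
    "D3QN-CO2Reduction": 14.50,
    "Actuated": 27.58,
    "Actual timing": 57.95,
}
for scheme, pct in waiting_reductions.items():
    print(scheme, round(implied_baseline(19.30, pct), 2), "s")

# CO2: 190.33 g per vehicle for the proposed scheme.
for scheme, pct in {"Rainbow DQN_AM-Time": 4.37, "D3QN-CO2Reduction": 6.40,
                    "Actuated": 7.34}.items():
    print(scheme, round(implied_baseline(190.33, pct), 2), "g")
```

For example, a 27.58% reduction to 19.30 s implies an average waiting time of roughly 26.65 s under actuated signal timing.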

Figure 9.

Box plots of the optimization effects of different signal control strategies: (a) average vehicle waiting time; (b) average vehicle CO2 emissions.

By analyzing the data on average waiting time and average CO2 emissions, it is evident that in the simulation scenario using real traffic flow data, the proposed Rainbow DQN_AM-CO2Reduction model demonstrates clear superiority. Compared to the DQN and D3QN models, it reduces average waiting time by approximately 17.16% and 14.22%, respectively, and decreases average CO2 emissions by about 6.94% and 6.39%.

To provide a comprehensive comparative analysis of the model, the results of this study are critically compared with those of related literature. Given that reinforcement learning models are influenced by various factors such as state space and reward functions, which can lead to differences in outcomes, we selected a study [35] as a comparison due to its similarities in state space, reward function, and other aspects with the proposed model. The model in [35] considers a mixed traffic scenario with Connected and Autonomous Vehicles (CAVs) and Human-driven Vehicles (HVs), using a D3QN framework with a CNN module. The state space includes vehicle position, speed, and acceleration, while the reward function considers average vehicle waiting time and CO2 emissions with fixed weight parameters. A comparison of the optimization results between the proposed model and the model in [35], in relation to the optimization of signal timing with actuated signal control, is shown in Table 8. It is evident that, in the scenario based on real traffic data, the proposed model outperforms the D3QN_CNN model in terms of optimization. This suggests that the Rainbow DQN algorithm, as an integrated model, demonstrates superior performance in real-time signal optimization compared with the D3QN algorithm. Furthermore, the ACmix module introduced in this study enhances the model’s processing capabilities more effectively than the CNN module used in [35]. Additionally, compared with the fixed weighting of the reward function in [35], the dynamic adjustment of the queue length coefficient in the proposed model also contributes to the improved optimization performance. However, it is important to note that there are still some differences between the intersection scale, entry lane configuration, and traffic data used in [35] and those in this study. 
Furthermore, the mixed traffic scenario in [35] focusing on CAVs and HVs differs from the mixed traffic scenario with gasoline and electric vehicles in this study. Therefore, this comparison serves only as a reference.

Table 8.

Comparison of model optimization levels.

4.6. Comparative Analysis of Different CO2 Emission Factors for Electric Vehicles and Electric Vehicle Proportions

With the gradual development of electric vehicles (EVs), the proportion of EVs is expected to change in the future. At the same time, the CO2 emission factors for electric vehicles published by the government vary annually. This section explores the model performance under different CO2 emission factors for electric vehicles and varying proportions of electric vehicles, based on real traffic flow data.

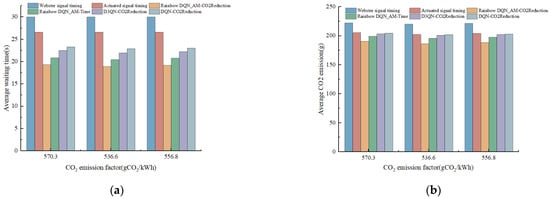

4.6.1. Comparison Analysis Under Different CO2 Emission Factors for Electric Vehicles

Due to the uncertainty in the future changes in CO2 emission factors, this section selects the CO2 emission factors for the years 2021, 2022, and 2023, as published by the Ministry of Ecology and Environment of China, to analyze their impact on the model results. The ratio of fuel vehicles to electric vehicles remains consistent with the assumptions, and the corresponding CO2 emission factors are 556.8 g CO2/kWh, 536.6 g CO2/kWh, and 570.3 g CO2/kWh, respectively. The changes in average vehicle waiting time and average CO2 emissions under different CO2 emission factors are shown in Figure 10. It can be clearly observed that, with variations in the CO2 emission factor, there is no significant change in vehicle average waiting time, and the change in average CO2 emissions is also relatively small. When the CO2 emission factor is at its lowest value of 536.6 g CO2/kWh, the Rainbow DQN-based optimization scheme shows the largest change in average CO2 emissions, with variations reaching 3.46 g and 2.69 g, respectively. However, overall, there is no significant change. The average waiting time only changes by 0.3 s. This may be because, in the scenario where the proportion of electric vehicles is only 29%, changing the CO2 emission factor has little impact on the vehicle’s average waiting time, and the change in CO2 emissions is also minor. Furthermore, the logic of the reward function in this study involves dynamically weighting the differences in vehicle CO2 emissions and waiting times between two consecutive time steps. When only the CO2 emission factor for electric vehicles is altered, the relative differences between the two time steps are relatively small, so the reward function has little effect, and no significant change is observed.
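The small sensitivity reported above is consistent with how little the emission factor itself varies. The sketch below assumes, for illustration, that EV emissions are computed as consumed electrical energy multiplied by the grid emission factor. The energy value is a placeholder, the function names are hypothetical, and using 2021 as the comparison base is an arbitrary choice.

```python
# Grid emission factors in g CO2/kWh, as listed in the text.
EV_EMISSION_FACTORS = {2021: 556.8, 2022: 536.6, 2023: 570.3}

def ev_co2_grams(energy_kwh, year):
    """CO2 attributed to an EV for a given electrical energy use."""
    return energy_kwh * EV_EMISSION_FACTORS[year]

def factor_change_pct(year, base_year=2021):
    """Relative change of the emission factor against the base year."""
    base = EV_EMISSION_FACTORS[base_year]
    return (EV_EMISSION_FACTORS[year] - base) / base * 100.0

for year in (2022, 2023):
    print(year, round(factor_change_pct(year), 2), "%")

# Placeholder: 0.05 kWh consumed while crossing the intersection.
print(round(ev_co2_grams(0.05, 2022), 2), "g")
```

The factors differ from the 2021 value by less than 4% in either direction, and the change applies only to the electric share of the fleet, so the fleet-average effect is smaller still.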

Figure 10.

Model results under different CO2 emission factors for electric vehicles: (a) average vehicle waiting time; (b) average vehicle CO2 emissions.

4.6.2. Comparison Analysis Under Different Proportions of Electric Vehicles

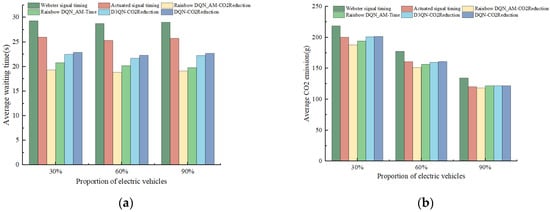

The proportion of electric vehicles was set to 30%, 60%, and 90% to explore the impact on the model’s optimization performance. The corresponding results for average vehicle waiting time and average CO2 emissions are shown in Figure 11.

Figure 11.

Model results for different proportions of electric vehicles: (a) average vehicle waiting time; (b) average vehicle CO2 emissions.

As shown in Figure 11a, the average waiting time for vehicles under different optimization schemes fluctuates as the proportion of electric vehicles increases. At 30% and 60% electric vehicle penetration, the Rainbow DQN_AM-CO2Reduction scheme reduces the average waiting time by more than 15% compared with the actuated signal timing. However, as the proportion of electric vehicles rises to 90%, the optimization effect of the proposed reward function weakens. The Rainbow DQN_AM-Time scheme, which focuses solely on the waiting time, maintains stable optimization performance. Additionally, due to the faster acceleration of electric vehicles, waiting times are reduced to some extent. Despite this, under the same reward function, the Rainbow DQN_AM-CO2Reduction method still achieves a more than 10% reduction in average waiting time compared with the DQN and D3QN models. As shown in Figure 11b, the increase in the proportion of electric vehicles leads to a natural decline in CO2 emissions at the intersection. When the proportion of electric vehicles is 30%, 60%, and 90%, the corresponding average CO2 emissions for the Rainbow DQN_AM-CO2Reduction scheme are 188.1 g, 151.44 g, and 118.3 g, respectively. As the proportion of electric vehicles increases, the optimization effect of the proposed scheme gradually weakens. At medium and low proportions, the scheme can reduce average CO2 emissions by 4% to 7% compared with the actuated signal timing, but at a 90% electric vehicle proportion, the reduction is only about 2%. It is noteworthy that when the proportion of electric vehicles reaches 90%, the average CO2 emissions from the D3QN-CO2Reduction and DQN-CO2Reduction optimization schemes show no significant difference compared with the actuated signal timing. This is because electric vehicles release much lower CO2 emissions during idling, acceleration, and deceleration than conventional gasoline vehicles. 
At high electric vehicle proportions, the CO2 emissions at the intersection are already at a low level, so the potential for further optimization is reduced.

4.7. Analysis of Experimental Outcomes Under Varying Traffic Volumes

Taking into account the significant variation in traffic flow at different times of the day on urban roads, this study not only validates the optimization effect of the model during peak hours but also compares and analyzes the performance of the intersection signal control strategy under three traffic intensities: 2400 pcu/h, 3600 pcu/h, and 4800 pcu/h. These traffic intensities correspond to vehicle arrival rates of 40 pcu/min, 60 pcu/min, and 80 pcu/min, respectively, simulating off-peak traffic conditions. By adjusting the green signal duration to 3 s and the yellow light duration to 2 s, the system can more effectively respond to varying traffic flow conditions.

As shown in Table 9, the average vehicle waiting time increases with traffic flow, with the actuated signal timing scheme experiencing the greatest rise and the Rainbow DQN_AM-based scheme showing the least. The Rainbow DQN_AM-CO2Reduction scheme achieves the best optimization effect under all three traffic intensities, with waiting times of 4.38 s, 6.88 s, and 11.46 s, respectively. At traffic volumes of 2400 pcu/h and 3600 pcu/h, the D3QN-CO2Reduction scheme performs better than the Rainbow DQN_AM-Time scheme, with waiting times of 4.85 s and 7.45 s, respectively. At the highest traffic intensity, the optimization effectiveness of the timing schemes using the DQN and D3QN algorithms declines significantly, while the scheme based on the Rainbow DQN_AM algorithm is more robust. Furthermore, the proposed scheme performs best under high traffic intensity, reducing average waiting times by 18.08%, 45.66%, and 68.09% compared with the Rainbow DQN_AM-Time, DQN-CO2Reduction, and Webster signal timing schemes, respectively.

Table 9.

Average vehicle waiting time for different traffic intensities.

As shown in Table 10, the average vehicle CO2 emissions increase overall with rising traffic flow. Consistent with the trend in vehicle waiting time, the Rainbow DQN_AM-CO2Reduction scheme achieves the best optimization results for average vehicle CO2 emissions across all three traffic intensities, with values of 175.18 g, 180.53 g, and 192.02 g, respectively. In the 2400 pcu/h and 3600 pcu/h scenarios, the proposed scheme reduces CO2 emissions by 1.73% and 1.41%, respectively, compared with the next best scheme, D3QN-CO2Reduction. In the 4800 pcu/h scenario, emissions are reduced by 6.26% compared with the next best scheme, Rainbow DQN_AM-Time. In medium and low traffic intensity scenarios, the optimization performance of the D3QN-CO2Reduction and DQN-CO2Reduction models, which are based on the same reward function, is inferior to that of the Rainbow DQN_AM-CO2Reduction scheme. As traffic intensity increases, the performance gap between the models widens further. Under high traffic intensity, the proposed model reduces average CO2 emissions by 9.42% and 13.16% compared with the D3QN-CO2Reduction and actuated signal timing schemes, respectively.

Table 10.

Average vehicle CO2 emissions at different traffic intensities.

Under different traffic volume conditions, the Rainbow DQN_AM-CO2Reduction model demonstrated significant advantages in both the algorithm and reward function compared with classical DRL algorithms and reward functions. This confirmed its effectiveness in reducing the average vehicle waiting time and average CO2 emissions at signalized intersections under mixed traffic conditions.

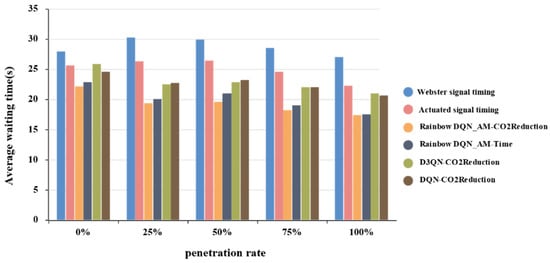

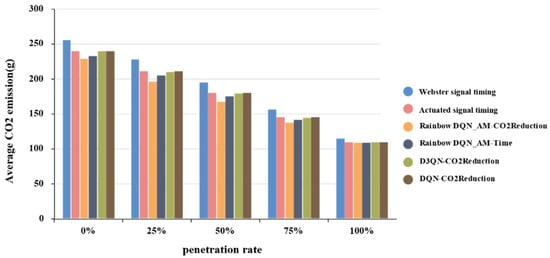

4.8. Analysis of Results Considering Different Autonomous Vehicle Penetration Rates

With the rapid development of autonomous driving technology, the proportion of autonomous vehicles in future transportation systems is expected to gradually increase. This trend will impact traffic flow characteristics, carbon emissions, and traffic efficiency. Therefore, it is necessary to analyze and compare the optimization effects of signal control schemes under different autonomous vehicle penetration rates. Table 11 presents global autonomous vehicle penetration rate data for 2023, and the market penetration rate of autonomous vehicles is expected to continue to rise in the future [54]. Based on this, this study assumes that the growth in autonomous vehicle market penetration will come primarily from autonomous electric vehicles, while the share of other vehicle types will decrease. A traffic flow of 4576 pcu/h is set, with manually driven vehicles modeled using the IDM model and autonomous vehicles modeled using the CACC model. Mixed traffic flows with 75%, 50%, and 25% connected vehicle penetration rates are randomly generated for testing. Additionally, the optimization effects of various signal control schemes are compared under two non-mixed traffic flow conditions: 100% and 0% penetration rates.

Table 11.

Current market penetration rate of vehicles.

Figure 12 illustrates the performance differences of the signal control schemes under varying penetration rates. Traditional signal control schemes, such as Webster and actuated signal control, reduce average vehicle waiting time less effectively in mixed traffic environments than in non-mixed traffic environments. This is because, in mixed traffic conditions, especially under low penetration rates, connected autonomous vehicles cannot form an effective collaborative driving environment. In contrast, the proposed scheme in this study demonstrates more stable control strategies in mixed traffic environments. As the penetration rate increases, the average vehicle waiting time decreases. Specifically, at a 50% penetration rate, the average waiting time is 19.63 s, which is 6.35% lower than the scheme with the same algorithm but a different reward function, 14.20% lower than the scheme with the same reward function but a different algorithm, and 25.78% lower than the actuated signal control scheme.

Figure 12.

Average vehicle waiting time at different penetration rates.

Figure 13 shows the trend of average vehicle CO2 emissions under different penetration rates. Similar to Figure 12, the proposed optimization scheme performs best at a 50% penetration rate, where the average vehicle CO2 emissions are 167 g. Compared to the Rainbow DQN_AM-Time scheme, D3QN-CO2Reduction, and the actuated signal timing scheme, emissions are reduced by 4.58%, 6.60%, and 6.97%, respectively. This is because, in a mixed-traffic environment, connected autonomous vehicles require frequent acceleration and deceleration, and other control schemes fail to learn the correct strategies effectively. It is noteworthy that at a 100% penetration rate, the CO2 emissions component in the reward function is smaller, and thus, the performance difference between the proposed scheme and the Rainbow DQN_AM-Time scheme is not significant.

Figure 13.

Average vehicle CO2 emissions under different penetration rates.

5. Discussion, Conclusions, and Limitations

5.1. Discussion

This study focuses on the mixed traffic scenario of gasoline vehicles and electric vehicles, aiming to propose an intersection signal timing optimization strategy based on deep reinforcement learning (Rainbow DQN) to improve intersection vehicle throughput and reduce CO2 emissions.

Theoretically, this study utilizes the state-of-the-art Q-learning model, Rainbow DQN, providing a novel theoretical framework for optimizing traffic signal timing. Considering the increasing proportion of electric vehicles in real-world scenarios, this study fills the gap in existing literature by addressing the mixed traffic scenario involving both electric and gasoline vehicles. It incorporates the emission differences between gasoline and electric vehicles, selects appropriate carbon emission models, and designs a multi-objective reward function considering both traffic efficiency and CO2 emissions. Additionally, the introduction of the ACmix module, which combines convolutional and self-attention mechanisms, enhances the model’s computational efficiency and expressive power. This also provides insights into mitigating the high computational and decision-making costs of real-time optimization while improving the potential application of reinforcement learning algorithms in complex traffic signal control problems.

In addition, by applying reinforcement learning algorithms to real-time traffic signal optimization, this study significantly improved traffic throughput and reduced CO2 emissions through the dynamic adjustment of intersection signals. This provides an efficient, low-carbon, and intelligent optimization solution for intersection signal timing, offering valuable practical insights for urban traffic management. Through SUMO simulations, the optimization effects of the model were analyzed under different electric vehicle proportions and traffic volumes. In scenarios with a high proportion of electric vehicles, the optimization effect of the model weakened, and the performance of the DQN and D3QN models was lower than that of the conventional traffic signal control. This finding provides useful guidance for urban traffic managers in formulating strategies and further promotes the development of low-carbon cities, reducing traffic emissions. However, the model still needs to be validated in real-world traffic scenarios, and further consideration of the computational power required by the model is necessary to assess its applicability under real traffic conditions, providing stronger data support for broader future applications.

5.2. Conclusions and Limitations

This paper is based on the Rainbow DQN algorithm and utilizes the Reward–CO2 Reduction function for training to optimize intersection signal timing. The conclusions are as follows:

(1) Rainbow DQN was used to explore real-time optimization of signal timing, and the introduction of the ACmix module, which integrates self-attention and convolution, significantly enhanced the model’s computational efficiency and performance. To facilitate efficient learning of signal control strategies by the agent, vehicle acceleration was incorporated into the state space modeling. The action space was defined based on variable phase sequences, while the reward function was formulated according to the average vehicle waiting time and CO2 emissions. The calculation of CO2 emissions at the intersection takes into account both the specific power model for fuel vehicles and the energy conversion model for electric vehicles, making it more reflective of real-world conditions.

(2) The model was validated using actual road intersection data, demonstrating the significant superiority of the Rainbow DQN_AM algorithm compared with signal timing based on DQN and D3QN algorithms. This also verified the effectiveness of the Reward-CO2 Reduction reward function. The proposed scheme reduces average vehicle waiting time by 7.48% and average CO2 emissions by 4.37% compared with the Rainbow DQN_AM-Time scheme. It also achieves a reduction of approximately 27.58% in vehicle waiting time and about 7.34% in average CO2 emissions compared with the actuated signal timing scheme.

(3) The impact of different CO2 emission factors of electric vehicles on the model is not significant. As the proportion of electric vehicles increases, the optimization effect of the proposed reward function in this study decreases. At medium and low proportions of electric vehicles, compared with the actuated signal timing, the Rainbow DQN_AM-CO2Reduction method can reduce the average waiting time by more than 15% and decrease the average CO2 emissions by 4% to 7%. Under varying traffic intensities, the Rainbow DQN_AM-CO2Reduction method adjusts its control strategy according to the traffic flow, reducing CO2 emissions at the intersection and improving traffic efficiency. In the 3600 pcu/h traffic volume scenario, the proposed method shows the best optimization effect, but as traffic volume increases, the optimization effect decreases to some extent.

This study has certain limitations. First, the research focuses solely on passenger cars and does not account for other types of vehicles. Future studies could include different vehicle types and categorize them based on their size and characteristics in the state input. Additionally, the model’s state-space input only considers factors such as vehicle position, speed, and acceleration. Previous studies [56] have demonstrated the feasibility of incorporating turn signal information into the state space. In the future, this information could be encoded as one of the state inputs to enhance the model’s adaptability at different intersections. Second, the model validation is primarily based on traffic flow data generated in a simulation environment and does not fully account for the complexities of real-world traffic scenarios, such as extreme weather conditions, unexpected events (e.g., traffic accidents), or fluctuations in pedestrian density. These factors may affect the model’s performance in actual environments, and further real-world testing is needed for validation. Lastly, the training process of Rainbow DQN is highly dependent on computational resources, especially in large-scale traffic networks. This could pose significant real-time challenges during training and deployment. Therefore, future research should incorporate real-world traffic data to improve the model’s generalization capability and explore more efficient algorithms to meet the demands of real-time optimization.

Author Contributions

Conceptualization, data curation, methodology, validation, and writing—original draft, J.L.; Conceptualization, data curation, and writing—review and editing Z.W.; conceptualization and writing—review and editing, J.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data is contained within the article.

Conflicts of Interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

References

- Fellendorf, M. VISSIM: A microscopic simulation tool to evaluate actuated signal control including bus priority. In Proceedings of the 64th Institute of Transportation Engineers Annual Meeting, Dallas, TX, USA, 16–19 October 1994; pp. 1–9.

- Mirchandani, P.; Head, L. A real-time traffic signal control system: Architecture, algorithms, and analysis. Transp. Res. Part C Emerg. Technol. 2001, 9, 415–432.

- Sims, A.G.; Dobinson, K.W. The Sydney coordinated adaptive traffic (SCAT) system philosophy and benefits. IEEE Trans. Veh. Technol. 1980, 29, 130–137.

- Mirchandani, P.; Wang, F.-Y. RHODES to intelligent transportation systems. IEEE Intell. Syst. 2005, 20, 10–15.

- Robertson, D.I.; Bretherton, R.D. Optimizing networks of traffic signals in real time-the SCOOT method. IEEE Trans. Veh. Technol. 1991, 40, 11–15.

- Nyame-Baafi, E.; Adams, C.A.; Osei, K.K. Volume warrants for major and minor roads left-turning traffic lanes at unsignalized T-intersections: A case study using VISSIM modelling. J. Traffic Transp. Eng. (Engl. Ed.) 2018, 5, 417–428.

- Zhao, J.; Ma, W.; Head, K.L.; Yang, X. Optimal operation of displaced left-turn intersections: A lane-based approach. Transp. Res. Part C Emerg. Technol. 2015, 61, 29–48.

- Xuan, Y.; Daganzo, C.F.; Cassidy, M.J. Increasing the capacity of signalized intersections with separate left turn phases. Transport. Res. B-Meth. 2011, 45, 769–781.

- Zhao, J.; Ma, W.; Han, Y. Optimization Model of Geometry and Signal Combination for Left-Turn Intersections at Exit Lanes. China J. Highw. Transp. 2017, 30, 120–127.

- Wu, J.; Liu, P.; Tian, Z.Z.; Xu, C. Operational analysis of the contraflow left-turn lane design at signalized intersections in China. Transp. Res. Part C Emerg. Technol. 2016, 69, 228–241.

- Lu, K.; Lin, G.; Xu, J.; Wang, Y. Simultaneous optimization model of signal phase design and timing at intersection. In Proceedings of the International Conference on Transportation and Development, Pittsburgh, PA, USA, 15–18 July 2018; pp. 65–74.

- Chen, X.; Wang, R.; Chen, Z.; Gao, M.; Guo, Y. Multi-Objective Optimization of Intersection Signal Timing Based on Improved NSGA-II. J. Qingdao Univ. Technol. 2024, 45, 111–117+125.

- Xu, J.; Xi, J. Signal Timing Model for Arterial Intersections Based on Q-Reinforcement Learning. J. Guangxi Univ. (Nat. Sci. Ed.) 2021, 46, 1036–1044.

- Kou, J.; Li, X.; Zhang, Y.; Hu, T.; Guo, X. Signal Timing Optimization Model Based on MOPSO-GRU Neural Network: A Case Study of Yan’an Intersection. Traffic Eng. 2024, 24, 36–43.

- Xu, M.; Han, Y. Intersection Signal Timing Optimization Based on Particle Swarm Algorithm. Logist. Technol. 2020, 43, 106–110.

- Lu, Y.; Lin, L. Signal Timing Optimization for At-Grade Intersections Based on Genetic Algorithm. For. Eng. 2020, 36, 103–109.

- Wang, L.; Wang, Y.-X.; Li, J.-K.; Liu, Y.; Pi, J.-T. Adaptive Traffic Signal Control Method Based on Offline Reinforcement Learning. Appl. Sci. 2024, 14, 10165.

- Shi, Y.; Wang, Z.; LaClair, T.J.; Wang, C.; Shao, Y.; Yuan, J. A novel deep reinforcement learning approach to traffic signal control with connected vehicles. Appl. Sci. 2023, 13, 2750.

- Lu, L.; Chen, K.; Chu, D.; Wu, C.; Qiu, Y. Adaptive traffic signal control based on competitive Double Q-Network. China J. Highw. Transp. 2022, 35, 267–277.

- Chen, X.; Zhu, Y.; Lv, C. Signal phase and timing optimization method for intersection based on hybrid proximal policy optimization. J. Transp. Syst. Eng. Inf. Technol. 2023, 23, 106–113.

- Dong, Y.; Huang, H.; Zhang, G.; Jin, J. Adaptive Transit Signal Priority Control for Traffic Safety and Efficiency Optimization: A Multi-Objective Deep Reinforcement Learning Framework. Mathematics 2024, 12, 3994.

- Ye, B.; Sun, R.; Wu, W.; Chen, B.; Yao, Q. Traffic Signal Control Method Based on Asynchronous Advantage Actor-Critic. J. Zhejiang Univ. (Eng. Ed.) 2024, 58, 1671–1680+1703.

- Kumar, N.; Rahman, S.S.; Dhakad, N. Fuzzy inference enabled deep reinforcement learning-based traffic light control for intelligent transportation system. IEEE Trans. Intell. Transp. Syst. 2020, 22, 4919–4928.

- Liu, Z.; Ye, B.; Zhu, Y.; Yao, Q.; Wu, W. Traffic Signal Control Method Based on Deep Reinforcement Learning. J. Zhejiang Univ. (Eng. Sci.) 2022, 56, 1249–1256.

- Zhang, X.; Nie, S.; Li, Z.; Zhang, H. Traffic Signal Control Based on Deep Reinforcement Learning with Self-Attention Mechanism. J. Transp. Syst. Eng. Inf. Technol. 2024, 24, 96–104.

- Wei, H.; Chen, C.; Zheng, G.; Wu, K.; Gayah, V.; Xu, K.; Li, Z. Presslight: Learning max pressure control to coordinate traffic signals in arterial network. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 1290–1298.

- Qiao, Z.; Ke, L. Traffic Signal Control Based on Deep Reinforcement Learning. Control Theory Appl. 2023, 40, 1–11. Available online: https://link.cnki.net/urlid/44.1240.TP.20231214.0847.036 (accessed on 12 January 2025).

- Coelho, M.C.; Farias, T.L.; Rouphail, N.M. Impact of speed control traffic signals on pollutant emissions. Transp. Res. D Transp. Environ. 2005, 10, 323–340.

- Yao, R.; Sun, L.; Long, M. VSP-based emission factor calibration and signal timing optimisation for arterial streets. IET Intell. Transp. Syst. 2019, 13, 228–241.

- Chen, X.; Yuan, Z. Environmentally friendly traffic control strategy-A case study in Xi’an city. J. Clean. Prod. 2020, 249, 119397.

- Lin, H.; Han, Y.; Cai, W.; Jin, B. Traffic signal optimization based on fuzzy control and differential evolution algorithm. IEEE Trans. Intell. Transp. Syst. 2022, 24, 8555–8566.

- Ding, X.; Wang, H.; Dang, X. Multi-objective Optimization of Intersection Signal Timing Considering Exhaust Emissions. J. Syst. Simul. 2024, 36, 1–17.

- Liu, Y. Research on optimization of bus priority pre-signal control. In Proceedings of the Fifth International Conference on Traffic Engineering and Transportation System (ICTETS 2021), Chongqing, China, 22 December 2021.

- Zhang, Y.; Zhou, Y.; Wang, B.; Song, J. MMD-TSC: An Adaptive Multi-Objective Traffic Signal Control for Energy Saving with Traffic Efficiency. Energies 2024, 17, 5015.

- Wang, Z.; Xu, L.; Ma, J. Carbon dioxide emission reduction-oriented optimal control of traffic signals in mixed traffic flow based on deep reinforcement learning. Sustainability 2023, 15, 16564.

- Haddad, T.A.; Hedjazi, D.; Aouag, S. A deep reinforcement learning-based cooperative approach for multi-intersection traffic signal control. Eng. Appl. Artif. Intell. 2022, 114, 105019.

- Ren, A.; Zhou, D.; Feng, J.; Tang, M.; Li, T. Traffic signal control based on attention mechanism and deep reinforcement learning. Appl. Res. Comput. 2023, 40, 430–434.

- Tang, C.R.; Hsieh, J.W.; Teng, S.Y. Cooperative Multi-Objective Reinforcement Learning for Traffic Signal Control and Carbon Emission Reduction. arXiv 2023, arXiv:2306.09662.

- Hessel, M.; Modayil, J.; Van Hasselt, H.; Schaul, T.; Ostrovski, G.; Dabney, W.; Horgan, D.; Piot, B.; Azar, M.; Silver, D. Rainbow: Combining improvements in deep reinforcement learning. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

- Yavas, U.; Kumbasar, T.; Ure, N.K. A new approach for tactical decision making in lane changing: Sample efficient deep Q learning with a safety feedback reward. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 1156–1161.

- Hou, B.; Zhang, K.; Gong, Z.; Li, Q.; Zhou, J.; Zhang, J.; de La Fortelle, A. SoC-VRP: A deep-reinforcement-learning-based vehicle route planning mechanism for service-oriented cooperative ITS. Electronics 2023, 12, 4191.

- He, Y.; Zhang, P.; Shao, Y.; Tao, Y. CO2 Emission Analysis during the Flow of Gasoline and Pure Electric Vehicles. J. Chongqing Jiaotong Univ. (Nat. Sci. Ed.) 2019, 38, 126–130.

- Pan, X.; Ge, C.; Lu, R.; Song, S.; Chen, G.; Huang, Z.; Huang, G. On the integration of self-attention and convolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 815–825.

- Jimenez-Palacios, J.L. Understanding and Quantifying Motor Vehicle Emissions with Vehicle Specific Power and TILDAS Remote Sensing; Massachusetts Institute of Technology: Cambridge, MA, USA, 1998.

- U.S. EPA. Methodology for Developing Modal Emission Rates for EPA’s Multi-Scale Motor Vehicle and Equipment Emission System; US Environmental Protection Agency: Washington, DC, USA, 2002.

- Notice on the Management of Greenhouse Gas Emission Reporting in the Power Generation Industry for 2023–2025. 2023. Available online: https://www.mee.gov.cn/xxgk2018/xxgk/xxgk06/202302/t20230207_1015569.html (accessed on 12 January 2025).

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533.

- Yang, H.; Jin, W.-L. A control theoretic formulation of green driving strategies based on inter-vehicle communications. Transp. Res. Part C Emerg. Technol. 2014, 41, 48–60.

- Huang, Y.; Song, G.; Peng, F.; Huang, J.; Zhang, Z. Ecological Driving Trajectory Optimization for Signalized Intersections Considering Queue Length. J. Traffic Transp. Eng. 2022, 20, 43–56.

- Zhang, G.; Chang, F.; Jin, J.; Yang, F.; Huang, H. Multi-objective deep reinforcement learning approach for adaptive traffic signal control system with concurrent optimization of safety, efficiency, and decarbonization at intersections. Accid. Anal. Prev. 2024, 199, 107451.

- Wang, P.; Ni, W. An Enhanced Dueling Double Deep Q-Network With Convolutional Block Attention Module for Traffic Signal Optimization in Deep Reinforcement Learning. IEEE Access 2024, 12, 44224–44232.

- Zhang, X.; Liu, H.; Mao, C.; Shi, J.; Meng, G.; Wu, J.; Pan, Y. The intelligent engine start-stop trigger system based on the actual road running status. PLoS ONE 2021, 16, e0253201.

- Salehi, S.; Ghatrehsamani, S.; Akbarzadeh, S.; Khonsari, M. Prediction of Wear in Start–Stop Systems Using Continuum Damage Mechanics. Tribol. Lett. 2025, 73, 11.

- IEA. Global EV Data Explorer; IOP Publishing: Paris, France, 2023.

- Li, C.; Zhang, F.; Wang, T.; Huang, D.; Tang, T. Research on Ecological Driving Strategy for Road Intersections Based on Deep Reinforcement Learning. J. Transp. Syst. Eng. Inf. Technol. 2024, 24, 81–92.

- Liao, L.; Liu, J.; Wu, X.; Zou, F.; Pan, J.; Sun, Q.; Li, S.E.; Zhang, M. Time difference penalized traffic signal timing by LSTM Q-network to balance safety and capacity at intersections. IEEE Access 2020, 8, 80086–80096.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).