Irony and Sarcasm Detection in Turkish Texts: A Comparative Study of Transformer-Based Models and Ensemble Learning

Abstract

1. Introduction

2. Materials and Methods

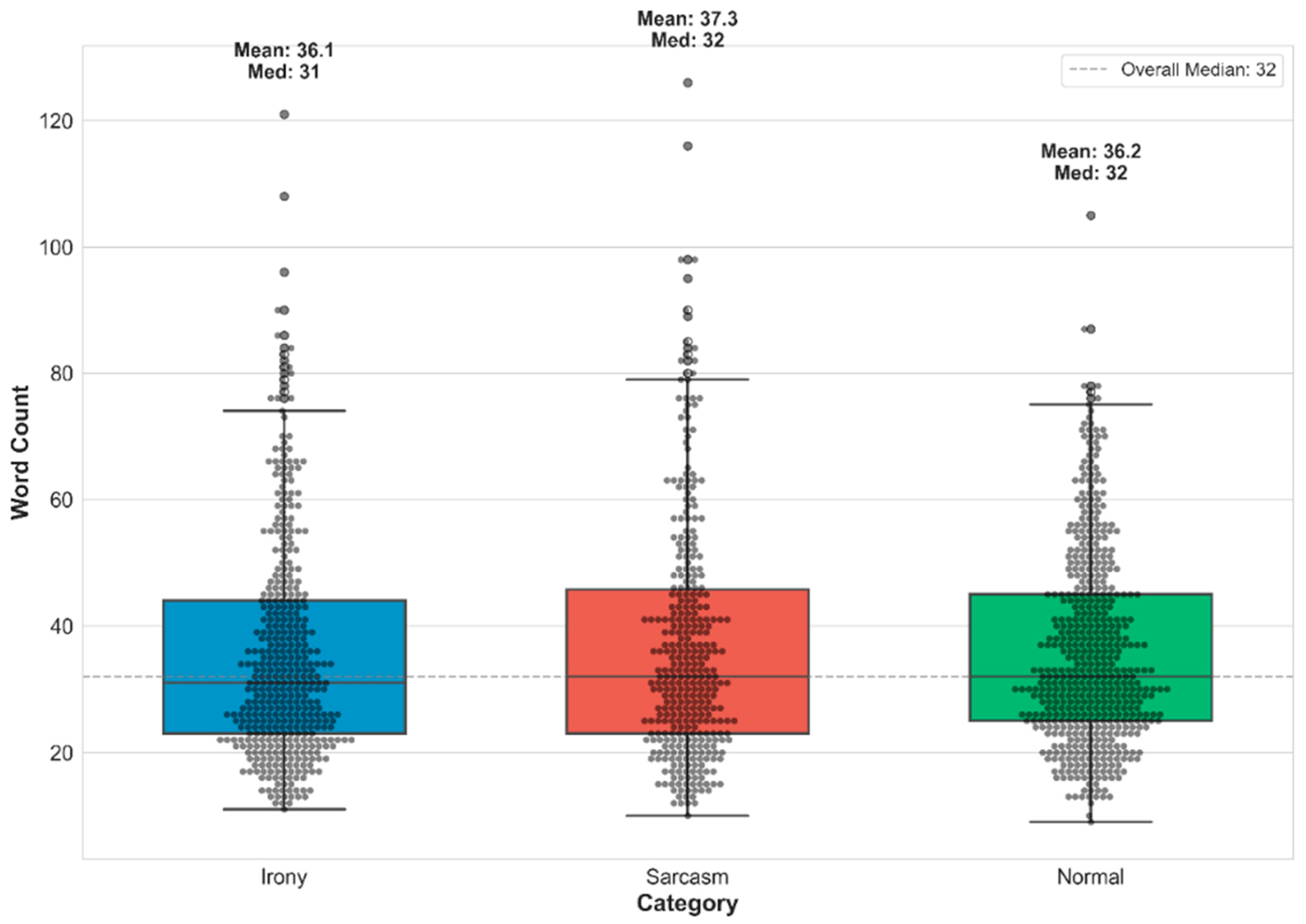

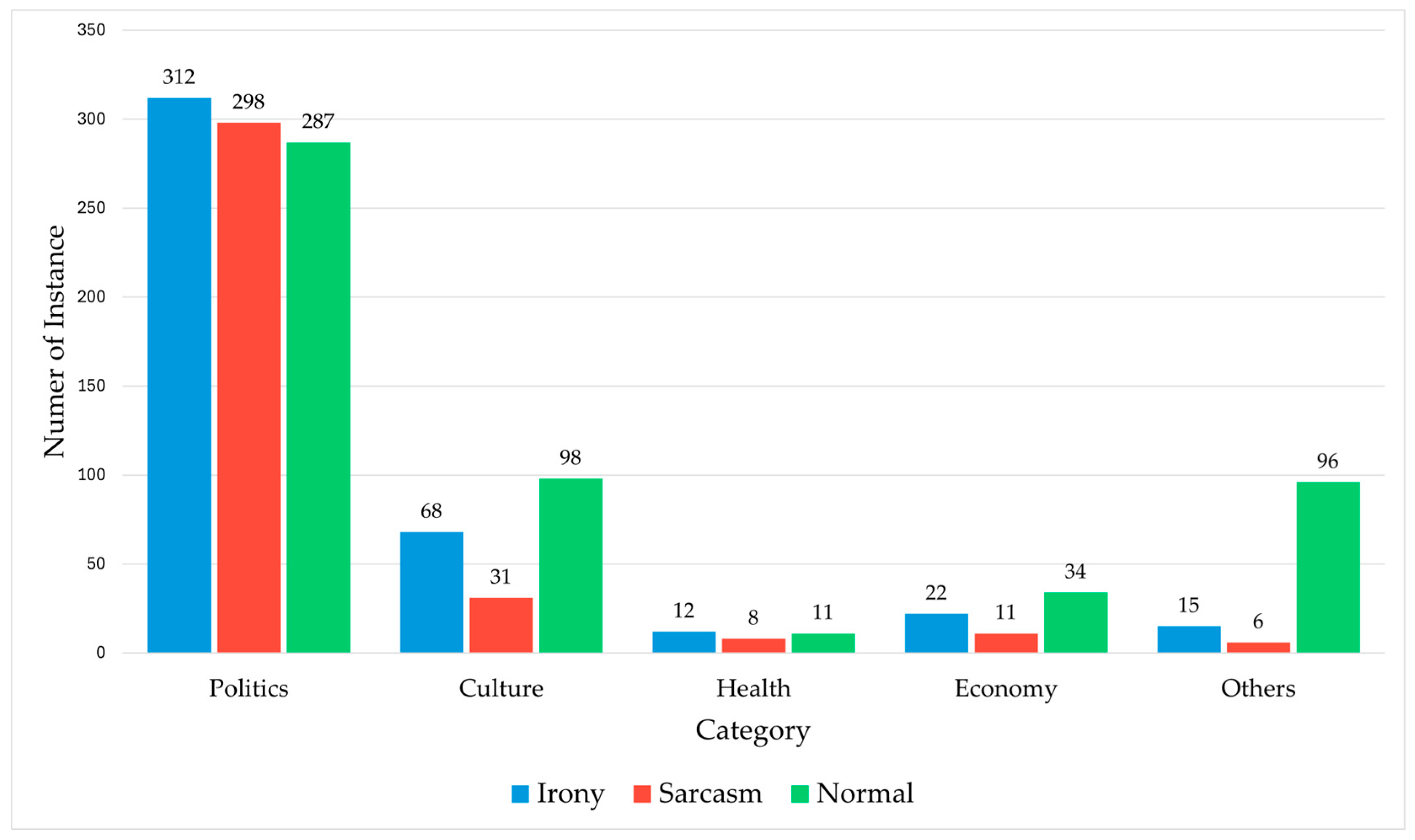

2.1. Dataset

| Class | Monogram Sample (one sentence) | Bigram Sample (two sentences) | Trigram Sample (three sentences) | Paragraph |
|---|---|---|---|---|
| Irony | Böylece siz de bir Danimarkalı kadar mutlu olabilirsiniz. (So you too can be as happy as a Dane.) | Yumuşak bir aydınlatma, hoş kokulu mumlar, lezzetli yemekler, samimi sohbetler, sıcak tutan yumuşak giysiler… Böylece siz de bir Danimarkalı kadar mutlu olabilirsiniz. (Soft lighting, fragrant candles, delicious food, heartfelt conversations, warm and cozy clothes… So you too can be as happy as a Dane.) | Üstelik yapılması gerekenler hiç de zor sayılmazdı. Yumuşak bir aydınlatma, hoş kokulu mumlar, lezzetli yemekler, samimi sohbetler, sıcak tutan yumuşak giysiler… Böylece siz de bir Danimarkalı kadar mutlu olabilirsiniz. (Moreover, what needed to be done was not difficult at all. Soft lighting, fragrant candles, delicious food, heartfelt conversations, warm and cozy clothes… So you too can be as happy as a Dane.) | Kitabı çok satanlar listesine taşıyan ve Türkçe dahil pek çok dilde yayınlanıp popülerleşmesini sağlayan da kuşkusuz insanların bu mutluluk formüllerini öğrenme ihtiyacıydı. Üstelik yapılması gerekenler hiç de zor sayılmazdı. Yumuşak bir aydınlatma, hoş kokulu mumlar, lezzetli yemekler, samimi sohbetler, sıcak tutan yumuşak giysiler… Böylece siz de bir Danimarkalı kadar mutlu olabilirsiniz. (What propelled the book onto bestseller lists and led to its publication and popularity in many languages, including Turkish, was undoubtedly people’s need to learn these formulas for happiness. Moreover, what needed to be done was not difficult at all. Soft lighting, fragrant candles, delicious food, heartfelt conversations, warm and cozy clothes… So you too can be as happy as a Dane.) |
| Sarcasm | Ne de olsa “Alamancı” onun bakışı bir başka… (After all, as an “Alamancı”, her perspective is different…) | Bir de dizileri uzun tutuyorlar, kısa yapsınlar! diyordu, o sevimli Türkçesiyle. Ne de olsa “Alamancı” onun bakışı bir başka… (“Also, they make the TV series too long; they should make them shorter!” she was saying, in her cute Turkish. After all, as an “Alamancı”, her perspective is different…) | Üç yıldır Türkiye’deyim, ilk defa bir ödül alıyorum. Bir de dizileri uzun tutuyorlar, kısa yapsınlar! diyordu, o sevimli Türkçesiyle. Ne de olsa “Alamancı” onun bakışı bir başka… (“I’ve been in Turkey for three years, and this is the first time I’m receiving an award. Also, they make the TV series too long; they should make them shorter!” she was saying, in her cute Turkish. After all, as an “Alamancı”, her perspective is different…) | Oysa “Hürrem Sultan” Meryem Uzerli’nin dediği başka. Üç yıldır Türkiye’deyim, ilk defa bir ödül alıyorum. Bir de dizileri uzun tutuyorlar, kısa yapsınlar! diyordu, o sevimli Türkçesiyle. Ne de olsa “Alamancı” onun bakışı bir başka… (Yet what “Hürrem Sultan” Meryem Uzerli says is different. She was saying, in her cute Turkish: “I’ve been in Turkey for three years, and this is the first time I’m receiving an award. Also, they make the TV series too long; they should make them shorter!” After all, as an “Alamancı”, her perspective is different…) |
| Normal | Kanalların ve yönetimlerinin geleceğini belirliyor, yapım şirketlerinin milyonlarca dolar kâr veya zarar etmesine neden oluyor. (It determines the future of TV channels and their management, and causes production companies to make millions of dollars in profit or loss.) | Her bir rating altın değil pırlanta değerinde. Kanalların ve yönetimlerinin geleceğini belirliyor, yapım şirketlerinin milyonlarca dolar kâr veya zarar etmesine neden oluyor. (Every single rating point is not just worth gold, but diamonds. It determines the future of TV channels and their management, and causes production companies to make millions of dollars in profit or loss.) | Her gün rating karnesi alan onlarca yapım şirketi kıyasıya mücadele ediyorlar. Her bir rating altın değil pırlanta değerinde. Kanalların ve yönetimlerinin geleceğini belirliyor, yapım şirketlerinin milyonlarca dolar kâr veya zarar etmesine neden oluyor. (Dozens of production companies, each receiving daily ratings reports, compete fiercely. Every single rating point is not just worth gold, but diamonds. It determines the future of TV channels and their management, and causes production companies to make millions of dollars in profit or loss.) | Şaşkınlık verici çünkü Türkiye’de rekabetin en sert ve en görünür olduğu yerlerden biri dizi sektörü. Her gün rating karnesi alan onlarca yapım şirketi kıyasıya mücadele ediyorlar. Her bir rating altın değil pırlanta değerinde. Kanalların ve yönetimlerinin geleceğini belirliyor, yapım şirketlerinin milyonlarca dolar kâr veya zarar etmesine neden oluyor. (It is astonishing, because one of the places where competition is the toughest and most visible in Turkey is the TV series sector. Dozens of production companies, each receiving daily ratings reports, compete fiercely. Every single rating point is not just worth gold, but diamonds. It determines the future of TV channels and their management, and causes production companies to make millions of dollars in profit or loss.) |
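The samples in the table above appear to be nested context windows: each wider sample keeps the labeled sentence and prepends more of the preceding text. A minimal Python sketch of that structure follows. It assumes the paragraph has already been split into sentences and that the labeled sentence comes last; the function name `build_context_samples` and the hand-split `irony_sentences` list are illustrative only and are not taken from the study.

```python
from typing import Dict, List


def build_context_samples(sentences: List[str]) -> Dict[str, str]:
    """Return monogram/bigram/trigram/paragraph samples for one target.

    `sentences` holds a paragraph already split into sentences, in order,
    with the labeled target sentence last (an assumption of this sketch).
    """
    if not sentences:
        raise ValueError("expected at least one sentence")
    samples: Dict[str, str] = {}
    # Each window keeps the target sentence and prepends preceding context.
    for size, name in ((1, "monogram"), (2, "bigram"), (3, "trigram")):
        if len(sentences) >= size:
            samples[name] = " ".join(sentences[-size:])
    samples["paragraph"] = " ".join(sentences)
    return samples


# Sentences of the Irony row in the table, split by hand for illustration.
irony_sentences = [
    "Kitabı çok satanlar listesine taşıyan ve Türkçe dahil pek çok dilde "
    "yayınlanıp popülerleşmesini sağlayan da kuşkusuz insanların bu mutluluk "
    "formüllerini öğrenme ihtiyacıydı.",
    "Üstelik yapılması gerekenler hiç de zor sayılmazdı.",
    "Yumuşak bir aydınlatma, hoş kokulu mumlar, lezzetli yemekler, samimi "
    "sohbetler, sıcak tutan yumuşak giysiler…",
    "Böylece siz de bir Danimarkalı kadar mutlu olabilirsiniz.",
]

samples = build_context_samples(irony_sentences)
for name, text in samples.items():
    print(f"{name}: {text}")
```

Taking pre-split sentences as input keeps the sketch independent of any particular Turkish sentence splitter, which the section does not specify.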

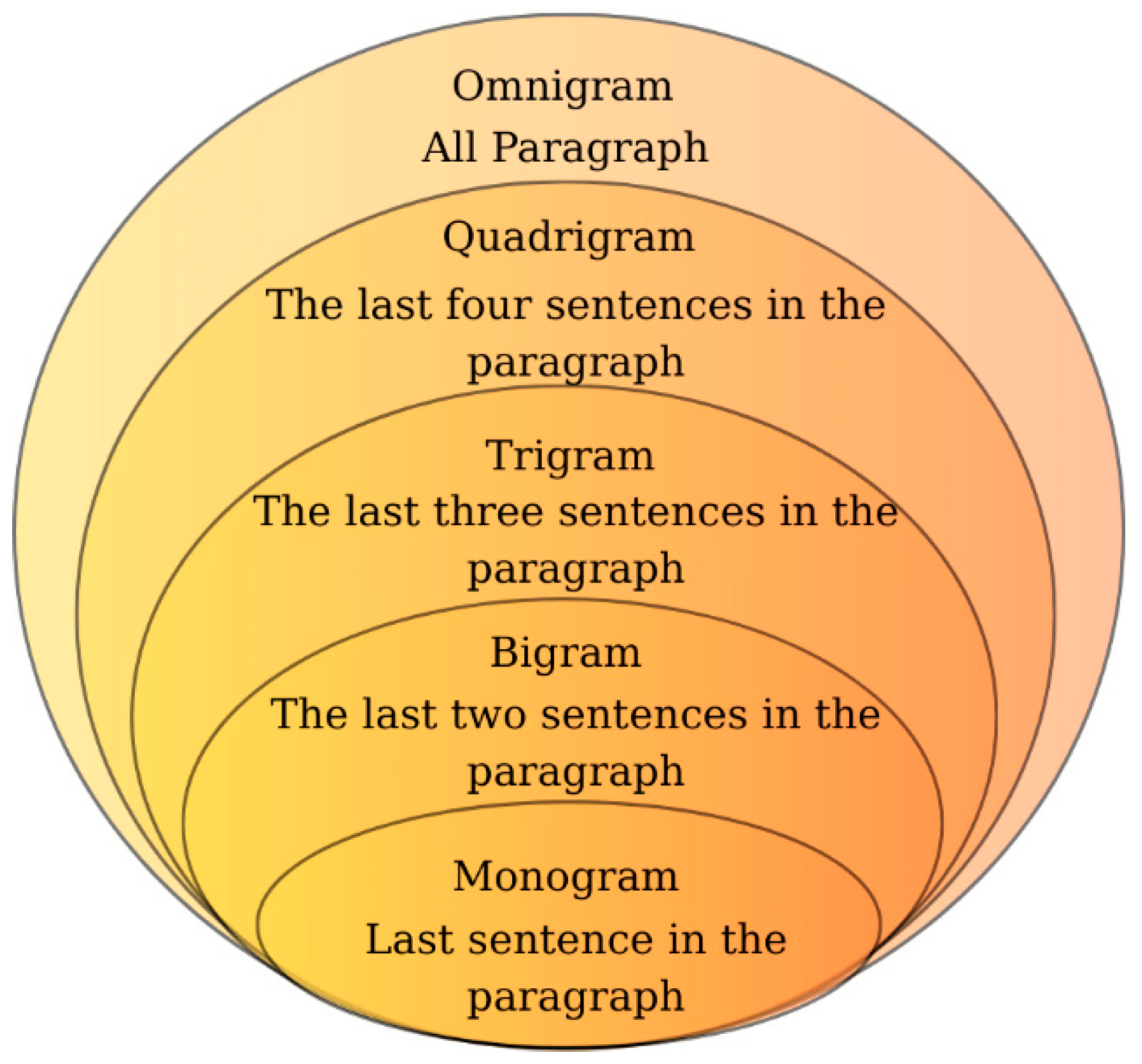

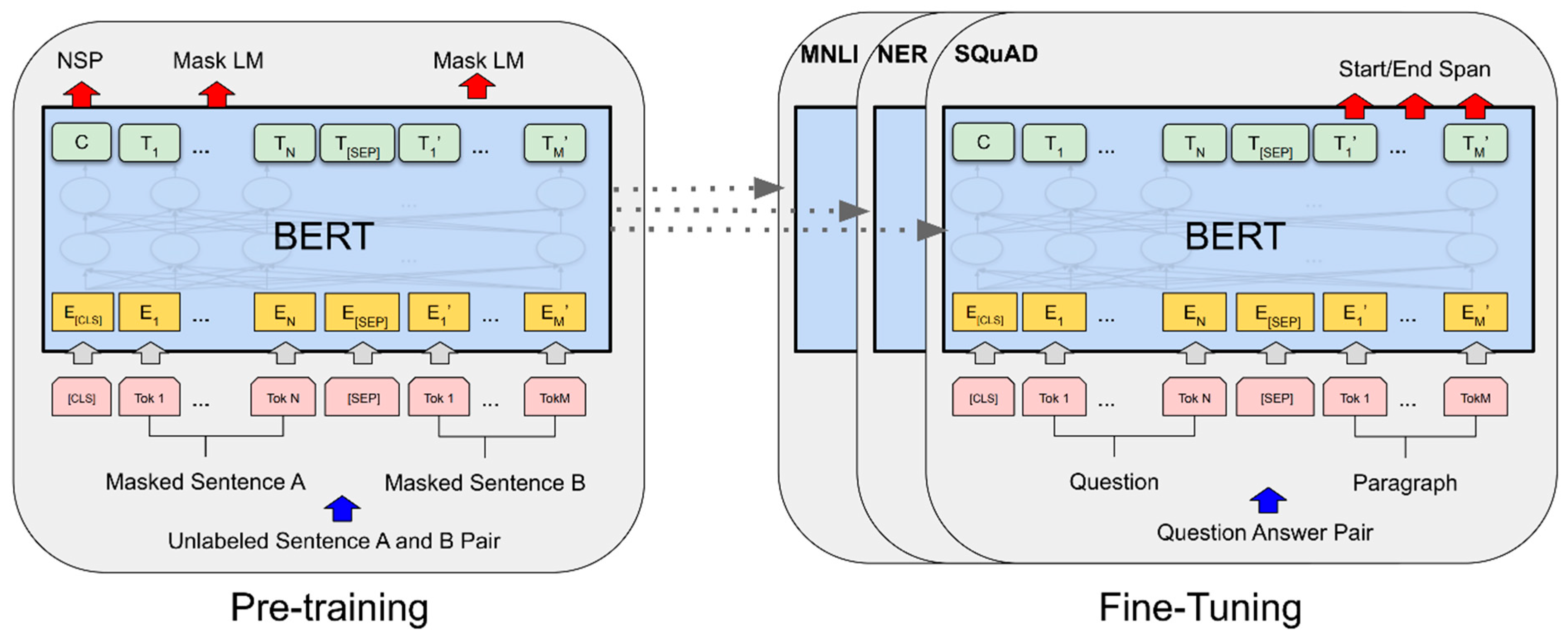

2.2. Modeling

2.2.1. Model Selection

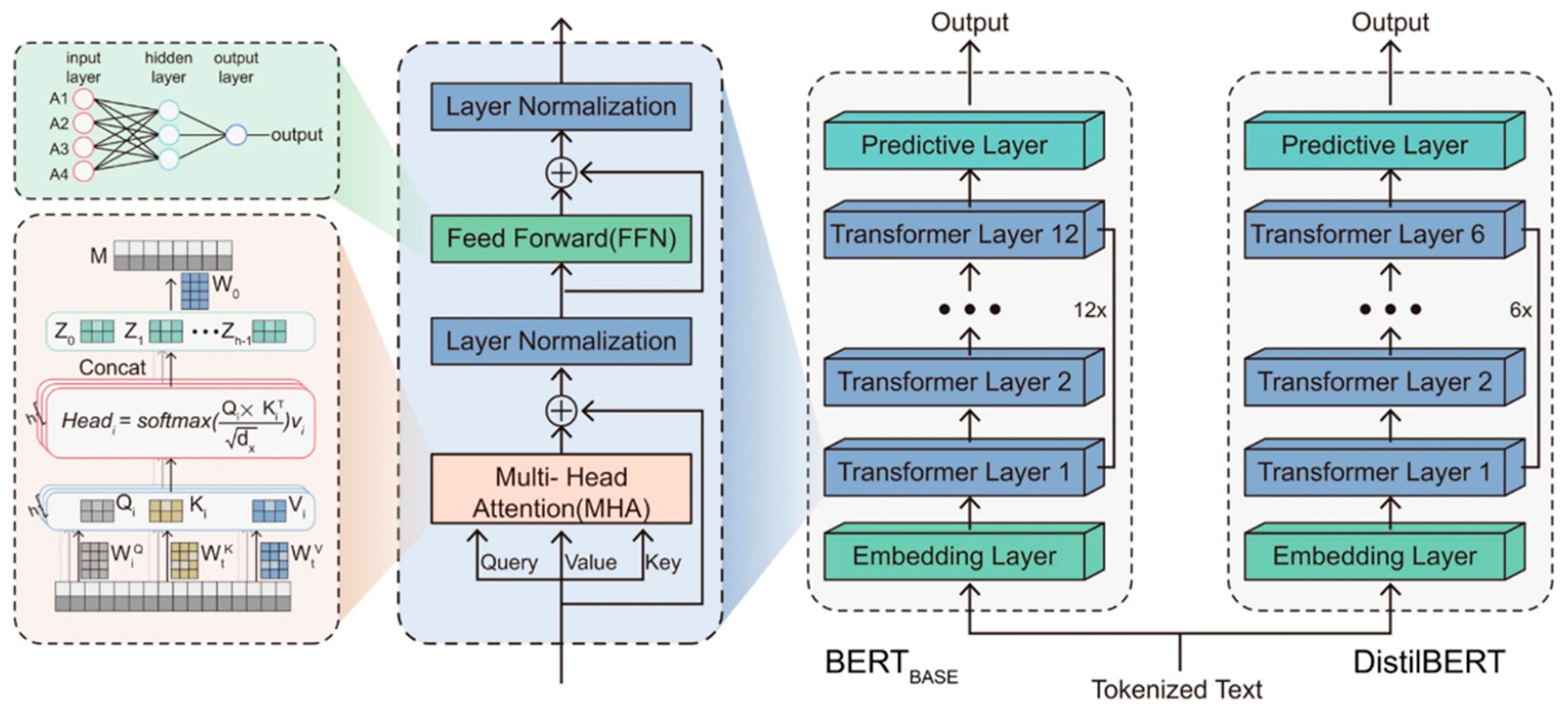

BERTurk

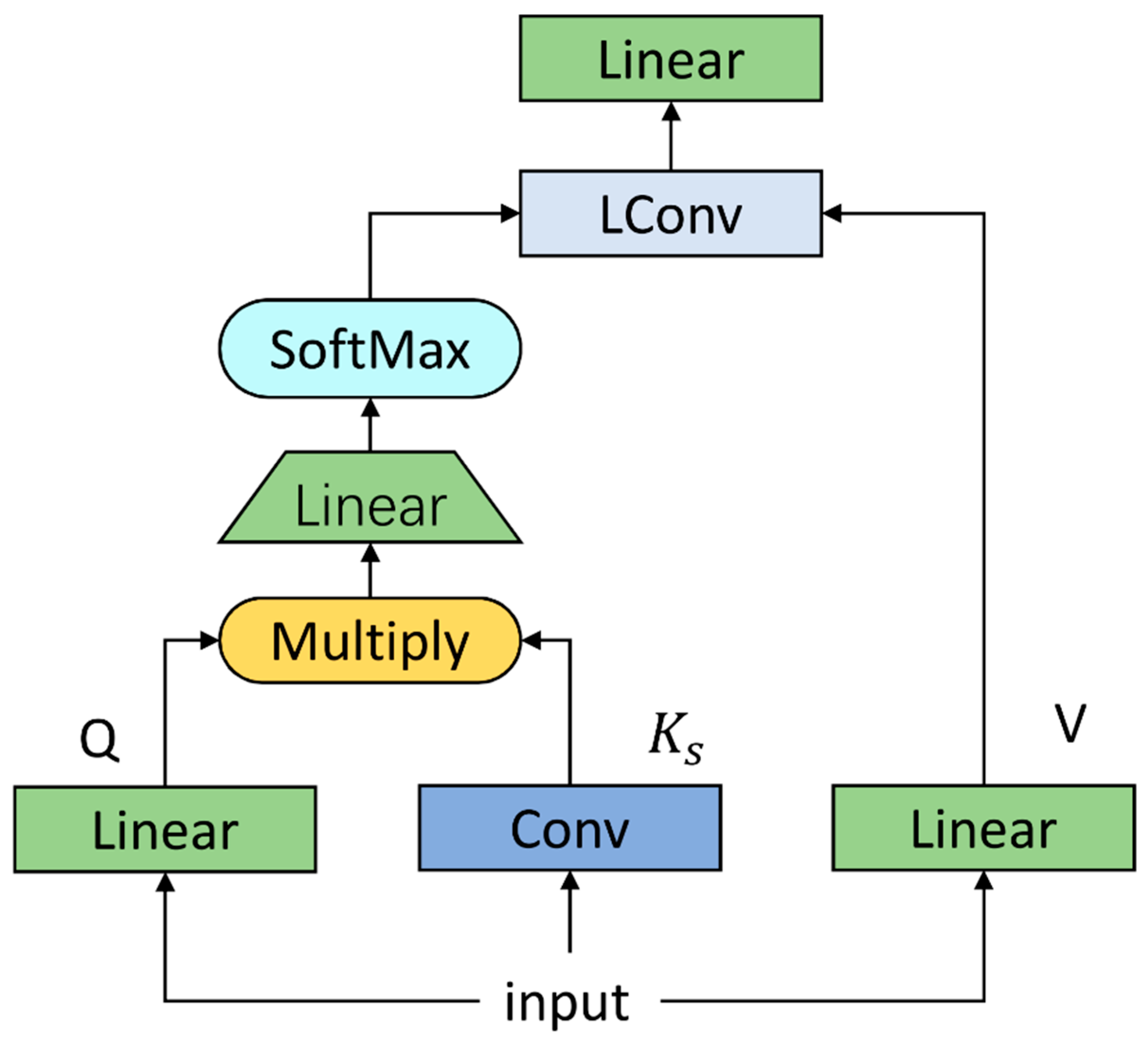

ConvBERTurk

DistilBERTurk

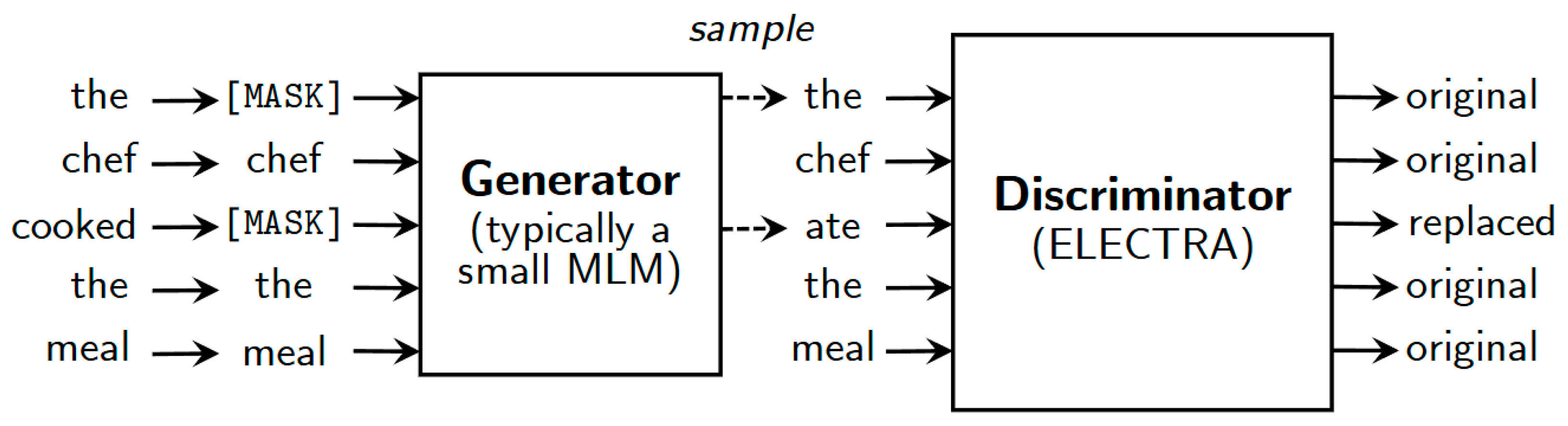

ELECTRATurk

RoBERTaTurk

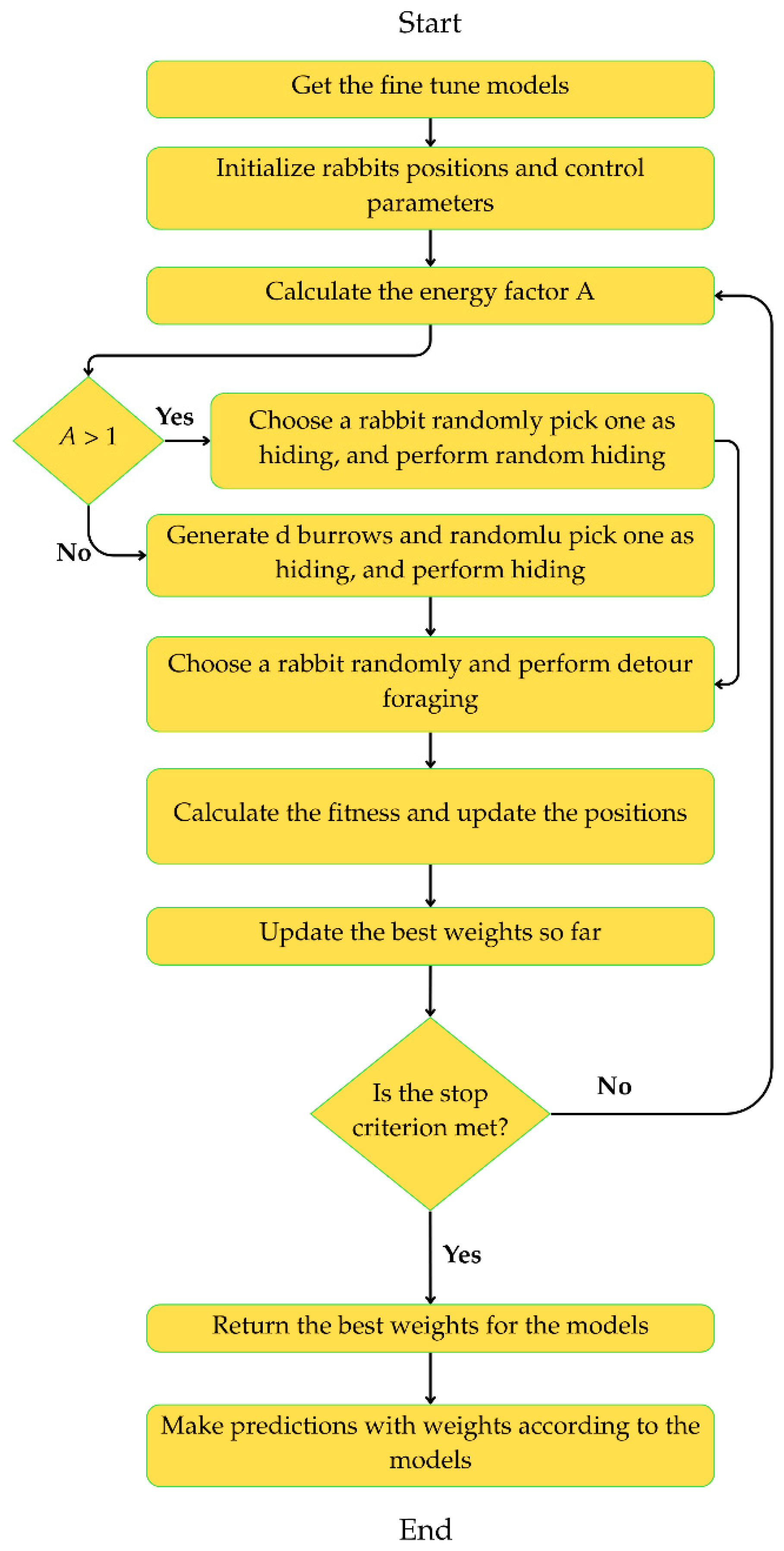

Artificial Rabbits Optimization Algorithm

2.2.2. Ensemble Learning Model

2.3. Evaluation Metrics

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


- Gregory, H.; Li, S.; Mohammadi, P.; Tarn, N.; Draelos, R.; Rudin, C. A Transformer Approach to Contextual Sarcasm Detection in Twitter. In Proceedings of the Second Workshop on Figurative Language Processing, Online, 9 July 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020. [Google Scholar] [CrossRef]

- Javdan, S.; Minaei-Bidgoli, B. Applying Transformers and Aspect-based Sentiment Analysis approaches on Sarcasm Detection. In Proceedings of the Second Workshop on Figurative Language Processing, Online, 9 July 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 67–71. [Google Scholar] [CrossRef]

- Milliyet. “Milliyet Gazetesi,” Milliyet Gazetecilik ve Yayıncılık A.Ş. Available online: https://www.milliyet.com.tr (accessed on 7 November 2025).

- Cumhuriyet. “Cumhuriyet Gazetesi,” Yeni Gün Haber Ajansı Basın ve Yayıncılık A.Ş. Available online: https://www.cumhuriyet.com.tr (accessed on 7 November 2025).

- Zhang, S.; Dong, L.; Li, X.; Zhang, S.; Sun, X.; Wang, S.; Li, J.; Hu, R.; Zhang, T.; Wu, F.; et al. Instruction Tuning for Large Language Models: A Survey. arXiv 2023. Available online: https://arxiv.org/pdf/2308.10792 (accessed on 21 August 2025). [CrossRef]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the NAACL HLT 2019—2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Volume 1, pp. 4171–4186. Available online: https://arxiv.org/abs/1810.04805v2 (accessed on 10 April 2025).

- Schweter, S. BERTurk-BERT models for Turkish. Zenodo 2020. [Google Scholar] [CrossRef]

- Jiang, Z.; Yu, W.; Zhou, D.; Chen, Y.; Feng, J.; Yan, S. ConvBERT: Improving BERT with Span-based Dynamic Convolution. arXiv 2020. Available online: https://arxiv.org/abs/2008.02496v3 (accessed on 10 April 2025).

- Sanh, V.; Debut, L.; Chaumond, J.; Wolf, T. DistilBERT, a distilled version of BERT: Smaller, faster, cheaper and lighter. arXiv 2019. Available online: https://arxiv.org/abs/1910.01108v4 (accessed on 10 April 2025).

- Abadeer, M. Assessment of DistilBERT performance on Named Entity Recognition task for the detection of Protected Health Information and medical concepts. In Proceedings of the 3rd Clinical Natural Language Processing Workshop, Online, 19 November 2020; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020; pp. 158–167. [Google Scholar] [CrossRef]

- Li, J.; Zhu, Y.; Sun, K. A novel iteration scheme with conjugate gradient for faster pruning on transformer models. Complex Intell. Syst. 2024, 10, 7863–7875. [Google Scholar] [CrossRef]

- Clark, K.; Luong, M.T.; Le, Q.V.; Manning, C.D. ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators. In Proceedings of the 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, 26–30 April 2020; Available online: https://arxiv.org/abs/2003.10555v1 (accessed on 11 April 2025).

- Husein, A.M.; Livando, N.; Andika, A.; Chandra, W.; Phan, G. Sentiment Analysis Of Hotel Reviews on Tripadvisor with LSTM and ELECTRA. Sink. J. Dan. Penelit. Tek. Inform. 2023, 7, 733–740. [Google Scholar] [CrossRef]

- Xu, Z.; Gong, L.; Ke, G.; He, D.; Zheng, S.; Wang, L.; Bian, J.; Liu, T.-Y. MC-BERT: Efficient Language Pre-Training via a Meta Controller. arXiv 2020. Available online: https://arxiv.org/abs/2006.05744v2 (accessed on 10 April 2025).

- Gargiulo, F.; Minutolo, A.; Guarasci, R.; Damiano, E.; De Pietro, G.; Fujita, H.; Esposito, M. An ELECTRA-Based Model for Neural Coreference Resolution. IEEE Access 2022, 10, 75144–75157. [Google Scholar] [CrossRef]

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019. Available online: https://arxiv.org/abs/1907.11692v1 (accessed on 9 November 2023).

- Wang, L.; Cao, Q.; Zhang, Z.; Mirjalili, S.; Zhao, W. Artificial rabbits optimization: A new bio-inspired meta-heuristic algorithm for solving engineering optimization problems. Eng. Appl. Artif. Intell. 2022, 114, 105082. [Google Scholar] [CrossRef]

| Reference | Methodology | Highlights |
|---|---|---|
| [102] | Rule Based | The algorithm employs two modules: semi-supervised pattern acquisition, which identifies sarcastic patterns that serve as features for a classifier, and a classification stage that assigns each sentence to a sarcasm class. A k-nearest neighbors strategy is used to score new examples in the test set. |
| [103] | Rule Based | After detecting sarcastic tweets, a multi-rule-based approach was used to classify them into four sarcasm types: polite, rude, raging, and deadpan. This approach relies on predefined rules and classifies the tweets based on lexical features, syntactic features, semantic features, and neutral tone with implicit contradiction. |
| [104] | Machine Learning | A supervised machine learning approach was used for sarcasm detection; the Naive Bayes classification algorithm was applied with different feature categories such as content words, function words, POS-based sarcastic patterns, and their combinations. |
| [105] | Machine Learning | To detect sarcasm in customer tweets, supervised learning algorithms using feature sets combining function words and content words were applied with Naive Bayes and maximum entropy classifiers. |
| [106] | Machine Learning | Human and machine learning classification performance were compared under varying amounts of contextual information, and machine performance was evaluated on balanced and unbalanced datasets as well as on manually labelled and automatically retrieved datasets. |
| [107] | Machine Learning | The method uses Naive Bayes classification on a feature space augmented using a domain adaptation technique. Features are extracted uniformly from both domains and mapped into source, target, and general versions before training. |
| [108] | Deep Learning | The study employs a Dual-Channel Network (DC-Net) that separates the input text into emotional words and the remaining context, encodes these parts separately through the literal and implied channels, and identifies sarcasm in the analyzer module by detecting emotional contradictions. |
| [101] | Deep Learning | The study employs a hybrid deep learning approach that combines features extracted from a soft attention-based bidirectional Long Short-Term Memory (BiLSTM) layer utilizing GloVe word embeddings with a convolutional neural network. |
| [109] | Deep Learning | A deep learning model was developed that performs sarcasm classification using a multi-head self-attention-based BiLSTM network, which takes both automatically learned and handcrafted features as input. |
| [110] | Deep Learning | The proposed approach is a deep learning method that performs sarcasm classification using a recurrent neural network (RNN) with Long Short-Term Memory (LSTM) after applying word embedding to the preprocessed dataset. The model cleans and encodes the training dataset with label and integer encoding, constructs a word vector matrix, feeds this matrix into the LSTM to record the weights, and calculates the accuracy using the test dataset. |
| [111] | Deep Learning | The study identifies sarcasm in textual data by training and evaluating Convolutional Neural Networks (CNN), RNN, and a hybrid combination of these models, while systematically analyzing the effects of training data size, number of epochs, dropout rates, and different word embeddings on classification performance using the large-scale Reddit corpus. |
| [112] | Ensemble Learning | The study detects sarcasm in tweets through a two-phase approach: first extracting sentiment and punctuation features followed by chi-square feature selection, and second combining the top 200 TF-IDF features with the selected sentiment and punctuation features. A Support Vector Machine is applied in the first phase and a voting classifier in the second phase for classification. |
| [113] | Ensemble Learning | The study detects sarcasm by leveraging positive sentiment attached to negative situations through two ensemble-based approaches (a voted ensemble classifier and a random forest classifier) trained on a corpus generated via a seeding algorithm, while also incorporating a pragmatic classifier to detect emoticon-based sarcasm. |
| [114] | Transformer-Based | The study detects sarcasm using the Adversarial and Auxiliary Features-Aware BERT (AAFAB) model, which encodes sentences with Bidirectional Encoder Representations from Transformers (BERT) contextual embeddings, combines them with manually extracted auxiliary features, and applies adversarial training by adding perturbations to input word embeddings for improved generalization. |
| [115] | Transformer-Based | The study identifies sarcasm in social media conversation texts using the BERT model and compares its performance with alternative approaches on Twitter and Reddit datasets with combined context-response and isolated response texts. |
| [116] | Transformer-Based | Sarcasm detection was performed by encoding the context and the response through separate BERT layers, processing the response with a BiLSTM, summarizing the context using convolution and BiLSTM, and finally classifying the output through a multi-channel CNN and a fully connected layer. |
| [117] | Transformer-Based | LSTM, Gated Recurrent Unit (GRU), and Transformer models were applied to detect sarcasm in Twitter posts, and the best performance was achieved through an ensemble combination of BERT, RoBERTa, XLNet, RoBERTa-large, and ALBERT models. |
| [118] | Transformer-Based | BERT and aspect-based sentiment analysis approaches were employed to extract the relationship between the contextual dialogue sequence and the response, and to determine whether the response is sarcastic or not. |

| Model | Dataset | Accuracy (%) | Precision (%) | Recall (%) | F-Score (%) |

|---|---|---|---|---|---|

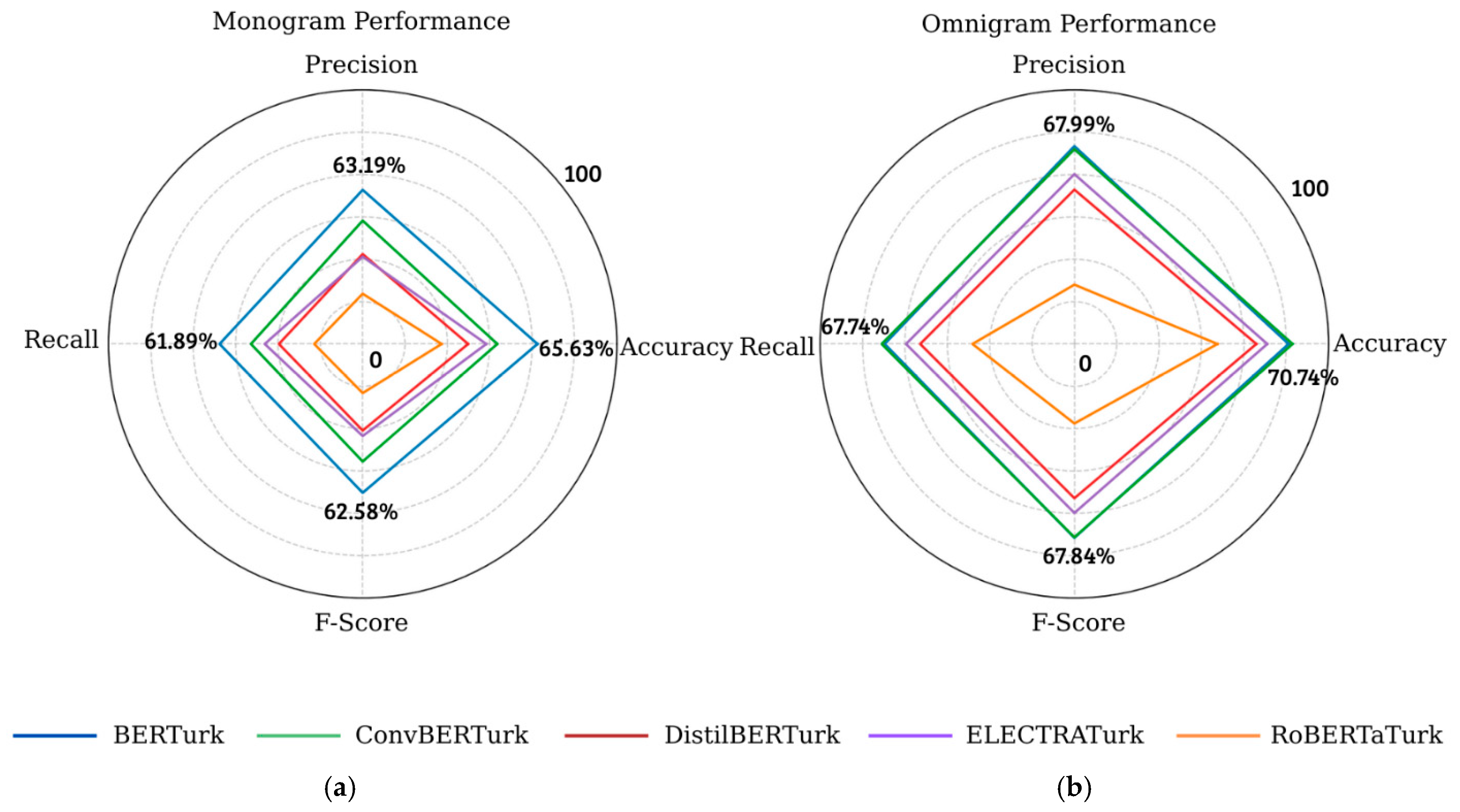

| BERTurk | monogram | 65.63 | 63.19 | 61.89 | 62.58 |

| ConvBERTurk | 60.89 | 59.56 | 58.19 | 58.88 | |

| DistilBERTurk | 57.45 | 55.61 | 54.89 | 55.22 | |

| ELECTRATurk | 59.59 | 55.30 | 56.56 | 55.87 | |

| RoBERTaTurk | 54.32 | 50.93 | 50.72 | 50.80 | |

| BERTurk | bigram | 66.72 | 64.72 | 63.5 | 64.12 |

| ConvBERTurk | 65.3 | 64.39 | 62.00 | 63.18 | |

| DistilBERTurk | 60.91 | 57.81 | 57.52 | 57.66 | |

| ELECTRATurk | 65.53 | 64.08 | 61.93 | 62.99 | |

| RoBERTaTurk | 61.24 | 60.99 | 56.83 | 58.84 | |

| BERTurk | trigram | 67.42 | 64.73 | 64.32 | 64.52 |

| ConvBERTurk | 65.62 | 61.58 | 61.97 | 61.77 | |

| DistilBERTurk | 62.71 | 60.23 | 59.63 | 59.93 | |

| ELECTRATurk | 66.17 | 63.74 | 62.96 | 63.35 | |

| RoBERTaTurk | 61.16 | 56.84 | 56.61 | 56.72 | |

| BERTurk | quadrigram | 67.07 | 65.08 | 63.99 | 64.50 |

| ConvBERTurk | 68.76 | 66.29 | 65.19 | 65.75 | |

| DistilBERTurk | 66.39 | 62.70 | 62.40 | 62.57 | |

| ELECTRATurk | 67.00 | 62.22 | 63.72 | 62.93 | |

| RoBERTaTurk | 61.11 | 57.62 | 56.51 | 57.05 | |

| BERTurk | omnigram | 70.28 | 68.35 | 67.40 | 67.90 |

| ConvBERTurk | 70.74 | 67.99 | 67.74 | 67.84 | |

| DistilBERTurk | 66.47 | 63.22 | 63.20 | 63.22 | |

| ELECTRATurk | 67.76 | 65.05 | 64.87 | 64.96 | |

| RoBERTaTurk | 61.88 | 52.00 | 57.03 | 54.39 |

| Model | Dataset | Accuracy (%) | Precision (%) | Recall (%) | F-Score (%) |
|---|---|---|---|---|---|
| BERTurk | monogram | 65.63 | 63.19 | 61.89 | 62.58 |
| BERTurk | bigram | 66.72 | 64.72 | 63.50 | 64.10 |
| BERTurk | trigram | 67.42 | 64.73 | 64.32 | 64.52 |
| BERTurk | quadrigram | 67.07 | 65.08 | 63.99 | 64.50 |
| BERTurk | omnigram | 70.28 | 68.35 | 67.40 | 67.90 |
| ConvBERTurk | monogram | 60.89 | 59.56 | 58.19 | 58.88 |
| ConvBERTurk | bigram | 65.30 | 64.39 | 62.00 | 63.17 |
| ConvBERTurk | trigram | 65.62 | 61.58 | 61.97 | 61.77 |
| ConvBERTurk | quadrigram | 68.76 | 66.29 | 65.19 | 65.75 |
| ConvBERTurk | omnigram | 70.74 | 67.99 | 67.74 | 67.84 |

| Model | Dataset | Accuracy (%) | Precision (%) | Recall (%) | F-Score (%) | Weights |

|---|---|---|---|---|---|---|

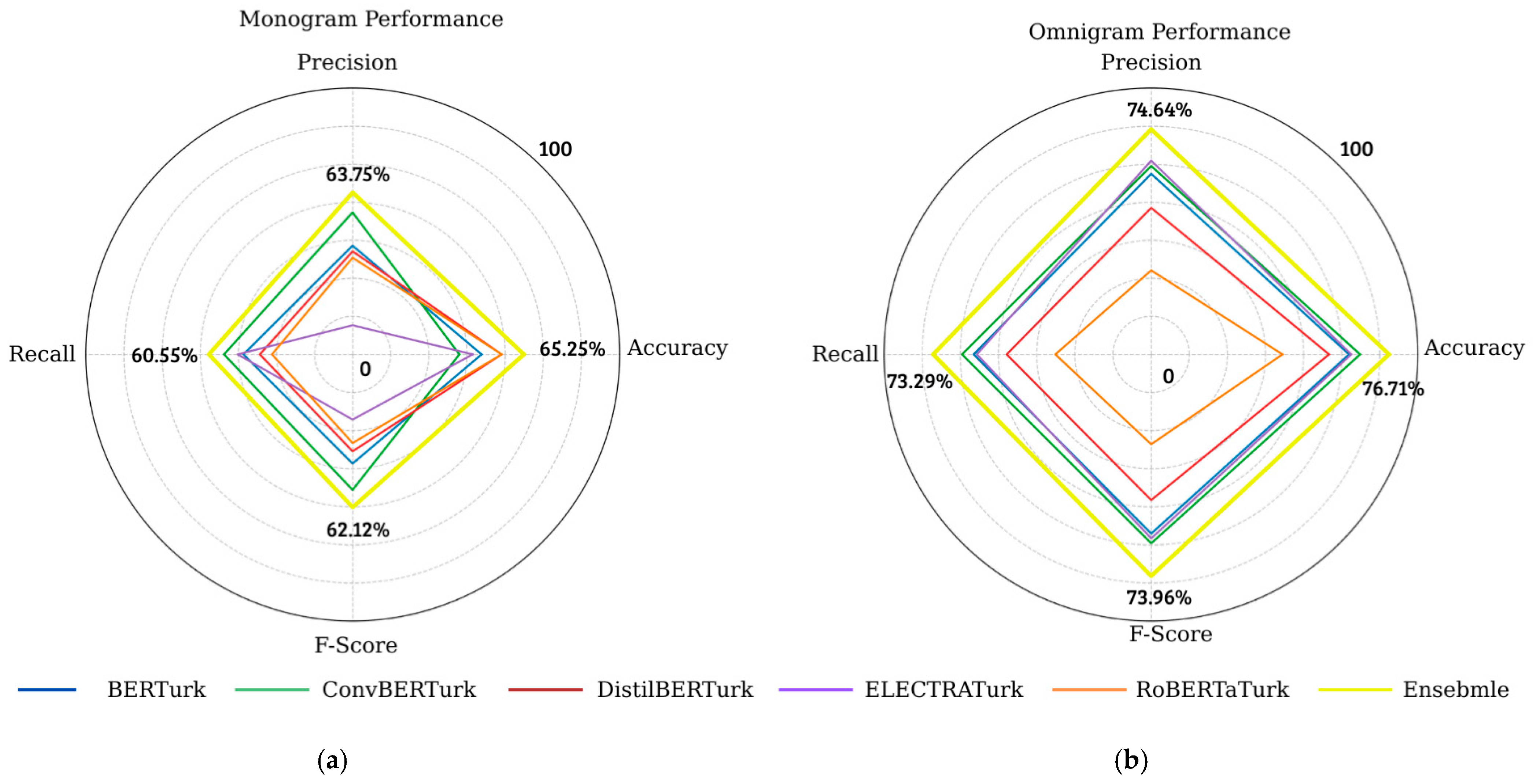

| BERTurk | monogram | 58.02 | 54.55 | 54.62 | 54.58 | 0.2916 |

| ConvBERTurk | 54.20 | 60.31 | 57.97 | 59.12 | 0.0671 | |

| DistilBERTurk | 61.45 | 53.58 | 51.80 | 52.48 | 0.0086 | |

| ELECTRATurk | 56.49 | 40.83 | 55.62 | 47.02 | 0.6122 | |

| RoBERTaTurk | 61.45 | 52.48 | 49.71 | 51.06 | 0.0205 | |

| Average | 58.32 | 52.75 | 53.94 | 52.85 | - | |

| Ensemble Model | 65.25 | 63.75 | 60.55 | 62.12 | - | |

| BERTurk | bigram | 61.07 | 56.26 | 56.50 | 56.38 | 0.0525 |

| ConvBERTurk | 62.21 | 59.26 | 58.45 | 58.85 | 0.0369 | |

| DistilBERTurk | 57.63 | 52.68 | 53.22 | 52.95 | 0.0601 | |

| ELECTRATurk | 64.89 | 59.17 | 59.70 | 59.43 | 0.4594 | |

| RoBERTaTurk | 56.49 | 58.91 | 54.60 | 56.68 | 0.3912 | |

| Average | 60.45 | 57.25 | 56.49 | 56.85 | - | |

| Ensemble Model | 69.84 | 66.42 | 65.18 | 65.80 | - | |

| BERTurk | trigram | 62.50 | 61.68 | 60.44 | 61.06 | 0.0434 |

| ConvBERTurk | 65.27 | 61.51 | 61.12 | 61.31 | 0.0761 | |

| DistilBERTurk | 64.50 | 64.32 | 61.58 | 62.93 | 0.4505 | |

| ELECTRATurk | 66.41 | 63.04 | 62.66 | 62.85 | 0.4267 | |

| RoBERTaTurk | 59.24 | 58.65 | 57.03 | 57.83 | 0.0032 | |

| Average | 63.58 | 61.84 | 60.56 | 61.19 | - | |

| Ensemble Model | 71.37 | 69.78 | 67.70 | 68.73 | - | |

| BERTurk | quadrigram | 64.12 | 59.90 | 59.60 | 59.75 | 0.1992 |

| ConvBERTurk | 61.83 | 62.20 | 61.70 | 61.95 | 0.2988 | |

| DistilBERTurk | 66.03 | 62.95 | 63.00 | 62.98 | 0.3102 | |

| ELECTRATurk | 66.79 | 59.77 | 60.30 | 60.03 | 0.0991 | |

| RoBERTaTurk | 65.27 | 57.29 | 56.80 | 57.04 | 0.0927 | |

| Average | 64.80 | 60.42 | 60.28 | 60.35 | ||

| Ensemble Model | 72.13 | 70.78 | 67.81 | 69.27 | - | |

| BERTurk | omnigram | 69.85 | 66.98 | 66.31 | 66.64 | 0.0639 |

| ConvBERTurk | 71.76 | 68.32 | 68.31 | 68.32 | 0.4383 | |

| DistilBERTurk | 66.41 | 61.10 | 60.64 | 60.87 | 0.0346 | |

| ELECTRATurk | 70.23 | 69.24 | 65.76 | 67.46 | 0.4601 | |

| RoBERTaTurk | 58.40 | 50.29 | 52.29 | 51.27 | 0.0031 | |

| Average | 67.33 | 63.18 | 62.66 | 62.91 | - | |

| Ensemble Model | 76.71 | 74.64 | 73.29 | 73.96 | - |
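
Within each dataset, the values in the Weights column sum to approximately 1, which is consistent with a weighted soft-voting ensemble. The sketch below illustrates that idea under this assumption only; it is not the authors' implementation. It combines per-model class probabilities using the omnigram weights from the table. The function name `weighted_soft_vote`, the two-class layout, and the probability values in `example_probs` are hypothetical and chosen purely for illustration.

```python
import numpy as np

# Omnigram weights copied from the table, in this model order.
MODELS = ["BERTurk", "ConvBERTurk", "DistilBERTurk", "ELECTRATurk", "RoBERTaTurk"]
WEIGHTS = np.array([0.0639, 0.4383, 0.0346, 0.4601, 0.0031])


def weighted_soft_vote(probs, weights):
    """Combine per-model class probabilities with a weighted average.

    probs:   array of shape (n_models, n_samples, n_classes)
    weights: array of shape (n_models,)
    Returns (predicted class index per sample, combined probabilities).
    """
    probs = np.asarray(probs, dtype=float)
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()  # guard against rounding in the table
    # Contract the model axis: result has shape (n_samples, n_classes).
    combined = np.tensordot(weights, probs, axes=1)
    return combined.argmax(axis=1), combined


# Hypothetical probabilities for one sample and two classes (not real model outputs).
example_probs = np.array([
    [[0.9, 0.1]],  # BERTurk
    [[0.4, 0.6]],  # ConvBERTurk
    [[0.8, 0.2]],  # DistilBERTurk
    [[0.3, 0.7]],  # ELECTRATurk
    [[0.9, 0.1]],  # RoBERTaTurk
])

preds, combined = weighted_soft_vote(example_probs, WEIGHTS)
# Class 1 wins here even though three of the five models favour class 0,
# because the two heavily weighted models favour class 1.
print(preds, combined.round(4))
```

With equal weights the same inputs would select class 0, so the example shows how strongly the learned weights can shift the ensemble decision toward the two dominant models.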

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Eser, M.; Bilgin, M. Irony and Sarcasm Detection in Turkish Texts: A Comparative Study of Transformer-Based Models and Ensemble Learning. Appl. Sci. 2025, 15, 12498. https://doi.org/10.3390/app152312498
