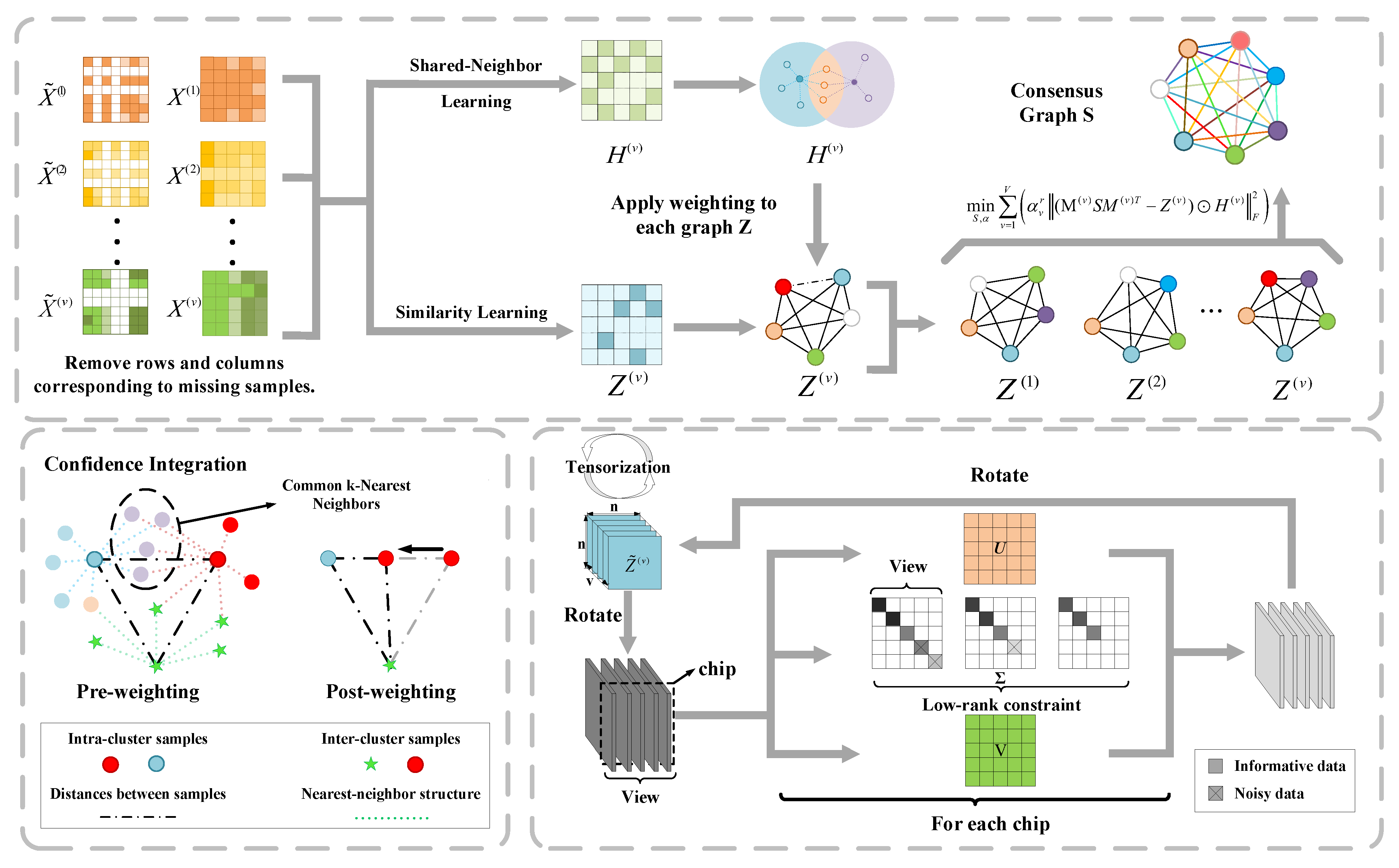

We present a graph-based consensus learning framework for incomplete multi-view clustering. TCGL comprises three key stages: addressing missing data, constructing KNN binary and confidence graphs, and consolidating the confidence graphs into a tensor for low-rank processing. The weighted confidence graph facilitates the generation of a consensus graph, which is subsequently clustered using K-means.

Consensus Graph Learning Method

The standard procedure for graph-based spectral clustering [26, 27] includes three key steps: affinity graph construction, eigen-decomposition of the graph Laplacian matrix, and final cluster assignment via k-means. The affinity graph is an n × n matrix, where n is the number of samples, and its elements encode pairwise relationships between samples, such as their similarity or distance. Among these steps, affinity graph construction is particularly important because it strongly affects the final clustering results.
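The three steps can be illustrated with a minimal NumPy sketch. The Gaussian-kernel affinity with bandwidth `sigma`, the unnormalized Laplacian L = D − A, the farthest-point initialization of k-means, and the function name `spectral_clustering` are assumptions chosen for illustration, not details taken from [26, 27].

```python
import numpy as np


def spectral_clustering(X, c, sigma=1.0, n_iter=100):
    """Cluster the rows of X into c groups with basic spectral clustering."""
    # Step 1: n x n affinity graph (Gaussian kernel, no self-loops).
    sq_dists = np.sum((X[:, None, :] - X[None, :, :]) ** 2, axis=-1)
    A = np.exp(-sq_dists / (2.0 * sigma ** 2))
    np.fill_diagonal(A, 0.0)

    # Step 2: eigen-decomposition of the unnormalized Laplacian L = D - A.
    L = np.diag(A.sum(axis=1)) - A
    _, eigvecs = np.linalg.eigh(L)        # eigenvalues returned in ascending order
    F = eigvecs[:, :c]                    # embedding from the c smallest eigenvectors

    # Step 3: k-means on the rows of F (farthest-point initialization).
    centers = [F[0]]
    for _ in range(1, c):
        dist = np.min([np.sum((F - m) ** 2, axis=1) for m in centers], axis=0)
        centers.append(F[np.argmax(dist)])
    centers = np.array(centers)
    for _ in range(n_iter):
        dist = np.sum((F[:, None, :] - centers[None, :, :]) ** 2, axis=-1)
        labels = np.argmin(dist, axis=1)
        new_centers = np.array([
            F[labels == k].mean(axis=0) if np.any(labels == k) else centers[k]
            for k in range(c)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels


# Two well-separated groups of three points each.
X = np.array([[0.0, 0.0], [0.1, 0.0], [0.0, 0.1],
              [5.0, 5.0], [5.1, 5.0], [5.0, 5.1]])
labels = spectral_clustering(X, c=2)
```

Using the eigenvectors of the c smallest eigenvalues makes well-separated groups collapse to tight, distinct points in the embedding F, which is why a simple k-means suffices in the last step; normalized Laplacians are a common alternative to the unnormalized one assumed here.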

In recent years, several methods for constructing high-quality affinity graphs have been proposed [28, 29, 30], aiming to better capture the intrinsic relationships between data points. One such method is the constrained Laplacian rank (CLR) approach [31]. CLR learns a new similarity matrix that reflects the cluster structure by requiring it to have exactly c connected components, so that, after a suitable permutation of the samples, it is block-diagonal with one block for each of the c clusters. The formulation of this problem is as follows:

where S denotes the consensus high-quality graph to be learned. The constraints ensure that the elements of S lie within the range [0, 1], that each row of S sums to 1, and that the rank of its Laplacian matrix equals n − c, where n is the number of samples and c is the number of clusters. In addition, each view v contributes a pre-constructed affinity graph, such as a k-nearest neighbor (k-NN) graph.
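For reference, a problem with exactly the constraints just described can be written as below. This is a sketch, not the paper's own equation: it assumes a squared Frobenius-norm fit between S and the pre-constructed graph of each of the l views, denoted here by the hypothetical symbol A^{(v)}, and uses the CLR-style Laplacian L_S = D_S − (Sᵀ + S)/2, where D_S is diagonal with i-th entry Σ_j (s_ij + s_ji)/2. Nonnegativity together with unit row sums already forces every s_ij into [0, 1].

```latex
\min_{S}\ \sum_{v=1}^{l} \bigl\| S - A^{(v)} \bigr\|_F^{2}
\quad \text{s.t.} \quad
s_{ij} \ge 0,\quad \sum_{j=1}^{n} s_{ij} = 1,\quad
\operatorname{rank}(L_S) = n - c
```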

The rationale for the rank constraint lies in the theorem stated in [31]: the number of connected components in a nonnegative graph S equals the multiplicity of the zero eigenvalue of its Laplacian matrix. Because this Laplacian is an n × n symmetric positive semidefinite matrix, a rank of n − c means it has exactly c zero eigenvalues. The constraint therefore ensures exactly c connected components in the affinity graph, consistent with the intrinsic cluster structure of the data.
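The theorem can be checked numerically. This sketch assumes a nonnegative graph, the symmetrized Laplacian L = D − (S + Sᵀ)/2, and a fixed tolerance for treating an eigenvalue as zero; the helper names are hypothetical. It counts zero eigenvalues, compares the count with a depth-first search over the graph, and confirms that the Laplacian rank equals n minus the number of components.

```python
import numpy as np


def laplacian(S):
    """Laplacian L = D - W of the symmetrized graph W = (S + S^T) / 2."""
    W = (S + S.T) / 2.0
    return np.diag(W.sum(axis=1)) - W


def count_zero_eigenvalues(S, tol=1e-9):
    """Multiplicity of the (numerically) zero eigenvalue of the Laplacian."""
    eigvals = np.linalg.eigvalsh(laplacian(S))
    return int(np.sum(np.abs(eigvals) < tol))


def count_components(S):
    """Connected components by depth-first search over nonzero edges."""
    n = S.shape[0]
    adj = (S + S.T) > 0
    seen = np.zeros(n, dtype=bool)
    count = 0
    for start in range(n):
        if seen[start]:
            continue
        count += 1
        seen[start] = True
        stack = [start]
        while stack:
            i = stack.pop()
            for j in np.flatnonzero(adj[i] & ~seen):
                seen[j] = True
                stack.append(j)
    return count


# Example graph: components {0, 1, 2}, {3, 4}, and the isolated node {5}.
S = np.zeros((6, 6))
S[0, 1] = S[1, 2] = 1.0
S[3, 4] = 1.0
n_zero = count_zero_eigenvalues(S)
rank_L = np.linalg.matrix_rank(laplacian(S))
```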

In graph-learning-based clustering methods, learning a high-quality graph that captures the intrinsic relationships between data points has been shown to be crucial for clustering performance. Therefore, under the assumption that different views should lead to a consistent clustering outcome, many multi-view clustering approaches learn a unified consensus graph S by integrating information from all available views [32]. A commonly employed formulation is as follows:

where

is defined as follows:

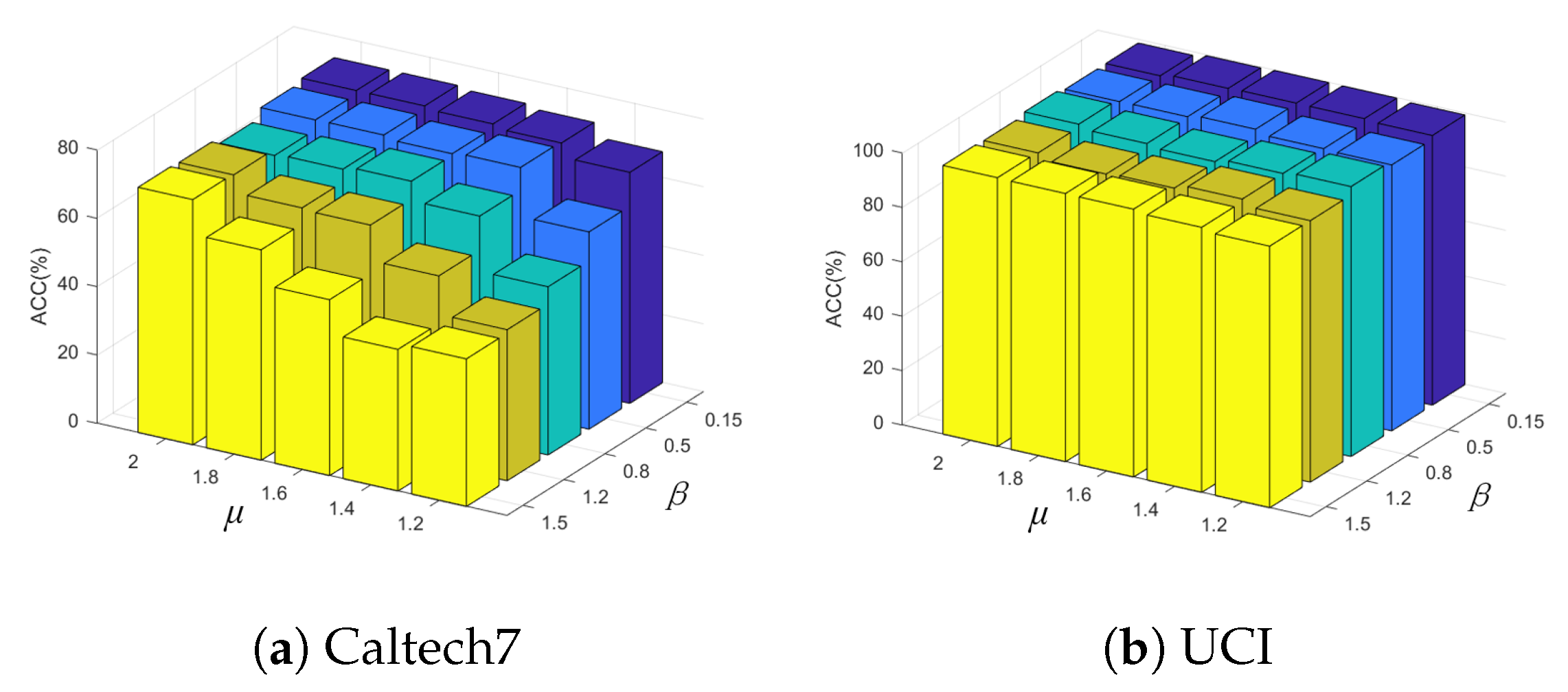

In multi-view clustering, views may carry different amounts of information. To integrate this information while acknowledging that views contribute differently to the final clustering result, it is useful to assign a weight to each view. This is why a view-specific weight is introduced in the formula, raised to a power r that further adjusts the influence of each view. By adjusting these weights, views that strongly affect the clustering results are emphasized and those with lesser impact are down-weighted, which improves overall clustering performance.
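To see how the exponent r shapes the view weights, assume the common auto-weighted form in which the objective contains Σ_v α_v^r d_v, where α_v is the weight of view v and d_v > 0 is that view's residual (for example, the squared Frobenius distance between S and the view's pre-constructed graph), subject to α_v ≥ 0 and Σ_v α_v = 1. Setting the derivative of the Lagrangian to zero gives α_v ∝ d_v^{1/(1−r)} for r > 1. The symbols, the residual, and this closed form are assumptions for the sketch; the paper's own weight update is not shown in this section.

```python
import numpy as np


def view_weights(residuals, r):
    """Minimize sum_v alpha_v**r * d_v subject to alpha_v >= 0, sum_v alpha_v = 1.

    Stationarity of the Lagrangian gives alpha_v proportional to d_v**(1 / (1 - r)).
    """
    d = np.asarray(residuals, dtype=float)
    if r <= 1:
        raise ValueError("r must be greater than 1")
    if np.any(d <= 0):
        raise ValueError("residuals must be strictly positive")
    w = d ** (1.0 / (1.0 - r))     # smaller residual -> larger weight
    return w / w.sum()


def weighted_objective(alpha, residuals, r):
    """Value of sum_v alpha_v**r * d_v for given weights."""
    return float(np.sum(np.asarray(alpha) ** r * np.asarray(residuals)))


d = [1.0, 2.0, 4.0]                # hypothetical per-view residuals
sharp = view_weights(d, r=2)       # [4/7, 2/7, 1/7]
flat = view_weights(d, r=10)       # much closer to uniform
```

As r approaches 1 from above, the weight concentrates on the view with the smallest residual; as r grows, the weights approach uniform. This is the sense in which r controls the distribution of the weight vector.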

Furthermore, the rank constraint in Equation (10) can be transformed into the minimization of the sum of the c smallest eigenvalues of the Laplacian matrix; since the Laplacian is positive semidefinite, this sum is zero exactly when the rank constraint holds. This transformation yields the following equivalent optimization problem:

where a constraint set collects the boundary constraints of the optimization variables, and l is the number of views of the data. After obtaining the consensus graph S by optimizing the above objective, spectral clustering or connected-component search can be applied to S to obtain the clustering result for the given data. The parameter r is tunable and controls the distribution of the view-weight coefficient vector. Additionally, two penalty parameters are introduced to regulate the optimization process.
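The transformation relies on Ky Fan's theorem: for a symmetric matrix L and F ∈ ℝ^{n×c} with FᵀF = I, the minimum of Tr(FᵀLF) equals the sum of the c smallest eigenvalues of L and is attained by the corresponding eigenvectors. CLR-style methods use this to replace the rank constraint with a trace penalty on F. The NumPy check below, on an assumed random dense graph, compares the eigenvector solution with random orthonormal matrices; the helper names are hypothetical.

```python
import numpy as np


def laplacian(S):
    """Laplacian of the symmetrized graph (S + S^T) / 2."""
    W = (S + S.T) / 2.0
    return np.diag(W.sum(axis=1)) - W


def ky_fan_minimum(L, c):
    """Sum of the c smallest eigenvalues of symmetric L and the minimizing F."""
    eigvals, eigvecs = np.linalg.eigh(L)          # ascending eigenvalues
    return eigvals[:c].sum(), eigvecs[:, :c]


rng = np.random.default_rng(0)
n, c = 8, 3
L = laplacian(rng.random((n, n)))                 # hypothetical dense graph
best, F_opt = ky_fan_minimum(L, c)

# Trace attained by the eigenvectors versus random orthonormal F (F^T F = I).
attained = np.trace(F_opt.T @ L @ F_opt)
random_traces = []
for _ in range(100):
    Q = np.linalg.qr(rng.standard_normal((n, c)))[0]   # reduced QR: Q^T Q = I
    random_traces.append(np.trace(Q.T @ L @ Q))
```

For a graph that already has c connected components, the minimum is zero, so driving the trace penalty to zero is equivalent to satisfying the rank constraint.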

Challenge and Motivation 1: Although the consensus graph learning model provides an effective multi-view clustering framework, it presents significant challenges under the Generalized Incomplete Multi-View Clustering (GIMC) setting. In GIMC, the core challenge stems from the heterogeneous and unaligned graphs built from available instances. While some techniques aim to recover these missing parts [25], they are often computationally expensive. Moreover, perfect recovery is impractical, and imperfect reconstructions can lead to severe performance degradation.

To overcome these limitations, this paper learns a consensus graph from the available similarity information of the non-missing instances, rather than relying on potentially imperfect recovered graphs. Specifically, we use the previously defined indicator matrix to record the availability of each sample in each view, where an entry of 1 indicates that the j-th view of the i-th sample is present and an entry of 0 indicates that it is missing. Based on this, we formulate the following optimization problem to learn the consensus graph S using only the reliable graph information from the available views:

where the (i, j)-th entry of the v-th view's graph represents the similarity between the i-th and j-th available instances in the v-th view. In our approach, this graph is constructed with the standard k-nearest neighbor algorithm as follows:
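A minimal sketch of building one view's graph from observed instances only. It assumes a per-view availability vector taken from the availability indicators described above (1 = observed), Euclidean distances, and a binary, symmetrized k-NN rule; the exact edge weighting used in the paper is not reproduced here, and `knn_graph_available` is a hypothetical name.

```python
import numpy as np


def knn_graph_available(X_v, avail_v, k):
    """Binary symmetric k-NN graph among the available instances of one view.

    X_v     : (n, d) features of view v (rows of missing samples are ignored)
    avail_v : (n,) 0/1 or boolean vector, 1 if the sample is observed in view v
    Returns (idx, G): indices of the available samples and the (m, m) graph.
    """
    idx = np.flatnonzero(avail_v)
    Xa = X_v[idx]
    m = len(idx)
    k = min(k, m - 1)
    sq = np.sum((Xa[:, None, :] - Xa[None, :, :]) ** 2, axis=-1)
    np.fill_diagonal(sq, np.inf)               # a sample is not its own neighbor
    nn = np.argsort(sq, axis=1)[:, :k]         # k nearest available neighbors
    G = np.zeros((m, m))
    G[np.repeat(np.arange(m), k), nn.ravel()] = 1.0
    return idx, np.maximum(G, G.T)             # edge if either is a k-NN of the other


X = np.array([[0.0], [0.1], [5.0], [5.2], [9.9]])
avail = np.array([1, 1, 0, 1, 1])              # sample 2 is missing in this view
idx, G = knn_graph_available(X, avail, k=1)
```

Missing samples are excluded before any distance is computed, so the returned graph is defined only on the m available instances, and `idx` maps its rows back to the original sample indices.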

Challenge and Motivation 2: For incomplete multi-view data, due to the uncontrollable nature of missing views and noise, the pre-constructed graph may fail to accurately capture the true nearest neighbor relationships between samples. This limitation motivates us to integrate adaptive nearest neighbor graph learning into the consensus graph framework. However, existing solutions often suffer from high computational complexity and optimization difficulties.

Inspired by [14], we propose a strategy based on a spatial proximity assumption. The core hypothesis is that intrinsically adjacent samples should share overlapping neighbor sets, and that the degree of overlap is positively correlated with class consistency. Specifically, we build a binary adjacency matrix (with the diagonal initialized to zero) from the observable data instances and compute pairwise co-neighbor counts by matrix multiplication. We then apply max-normalization to rescale the co-neighbor counts into affinities in [0, 1], yielding a co-neighbor likelihood matrix. This matrix encodes how likely each pair of samples is to share mutual neighbors and serves as structural prior knowledge. We incorporate this topological information into the following optimization model:

where ⊙ denotes element-wise multiplication. This formulation incorporates confidence-based structural information, providing a more robust framework for consensus graph learning in incomplete multi-view clustering. To derive an equivalent formulation of Equation (18), we define two transformed variables; substituting them into the original problem yields the following equivalent optimization problem:
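The co-neighbor prior can be sketched as follows. This sketch assumes that the binary adjacency matrix B is a symmetric k-NN graph on the available instances, that co-neighbor counts are C = BBᵀ, that self-counts on the diagonal of C are discarded, and that max-normalization divides by the largest remaining count. The last lines show the element-wise (⊙) weighting of a placeholder graph `Z`, which is hypothetical.

```python
import numpy as np


def co_neighbor_likelihood(B):
    """Co-neighbor likelihood from a binary adjacency matrix B.

    C[i, j] counts the neighbors shared by samples i and j; dividing by the
    largest count maps the values into [0, 1].
    """
    B = np.asarray(B, dtype=float).copy()
    np.fill_diagonal(B, 0.0)          # diagonal initialized to zero, as in the text
    C = B @ B.T                       # pairwise co-neighbor counts
    np.fill_diagonal(C, 0.0)          # assumption: ignore self co-neighbor counts
    peak = C.max()
    return C / peak if peak > 0 else C


B = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]])
P = co_neighbor_likelihood(B)
Z = np.full((4, 4), 0.25)             # placeholder graph, hypothetical
weighted = P * Z                      # NumPy's * is the element-wise product
```

In the example, samples 2 and 3 are directly connected yet share no neighbors, so their prior affinity is zero; this is the kind of edge the co-neighbor prior is meant to down-weight.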

Challenge and Motivation 3: In multi-view clustering tasks, exploiting the local connectivity of data has been shown to be an effective strategy. The neighbors of a sample are typically defined as the k data points in the dataset closest to it.

Building on the solution for Z introduced earlier, we redefine the formula for computing the nearest-neighbor confidence matrix as follows:

The regularization parameter in this formula prevents a trivial solution. The normalization constraint on the sample-wise affinities eliminates scale ambiguity and keeps affinities comparable across views; this normalization is a standard technique in graph learning and does not restrict the relative similarity structure.

Despite their impressive performance, most existing methods focus on learning common representations or pairwise correlations between views [33, 34]. This approach often fails to capture deeper, higher-order correlations in multi-view data, leading to the loss of crucial semantic information. Furthermore, these methods typically require a separate post-processing step to obtain the final clustering results and cannot account for the interrelationships among the affinity matrices of different views within a unified framework. As a result, their clustering performance is often suboptimal.

To address the aforementioned issues, we adopt a low-rank tensor-based method for multi-view proximity learning. It jointly optimizes the affinity matrices of all views to capture higher-order correlations in multi-view data, thereby improving the final clustering performance. As illustrated in Figure 1, data samples from multiple feature subsets or data sources are first provided as input, and the corresponding view-specific affinity matrices are generated through a multi-view proximity learning strategy. To further explore higher-order correlations between data points across views, we employ a tensor construction mechanism in which the affinity matrices are jointly stacked into a third-order tensor. The mathematical formulation of the method is as follows:

where the scalar coefficient is a regularization parameter, the tensor term imposes a specific constraint on the constructed tensor, and the construction operator merges the multiple affinity matrices into a third-order tensor. Inspired by [20], we employ a weighted t-SVD-based tensor nuclear norm to capture the higher-order correlations embedded in the multi-view affinity matrices. The corresponding formulation is as follows:
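The tensor construction and a t-SVD-based nuclear norm can be sketched in a few lines. The sketch assumes the convention of taking the FFT along the third mode and averaging the nuclear norms of the Fourier-domain frontal slices, and it models the weighting as one non-negative weight per singular value shared by all slices; the exact weights of [20] are not reproduced, and both function names are hypothetical. In the full model the norm would typically be applied to the rotated tensor discussed in Remarks 2 and 3.

```python
import numpy as np


def stack_views(mats):
    """Merge l affinity matrices (each n x n) into an n x n x l tensor."""
    return np.stack(mats, axis=2)


def weighted_tnn(T, weights=None):
    """t-SVD-based tensor nuclear norm, optionally weighted per singular value.

    FFT along the third mode, then (weighted) nuclear norms of every
    Fourier-domain frontal slice, averaged over the n3 slices.
    """
    n1, n2, n3 = T.shape
    T_f = np.fft.fft(T, axis=2)
    w = np.ones(min(n1, n2)) if weights is None else np.asarray(weights, float)
    total = 0.0
    for k in range(n3):
        sv = np.linalg.svd(T_f[:, :, k], compute_uv=False)   # descending order
        total += float(np.dot(w, sv))
    return total / n3


A = np.array([[3.0, 0.0], [0.0, 4.0]])
same_views = stack_views([A, A, A])        # three identical views
```

With identical views, all energy sits in the zero-frequency slice and the norm equals the nuclear norm of a single view.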

To make the objective separable, we apply variable splitting and introduce an auxiliary tensor variable as a substitute for the constructed affinity tensor. Consequently, the model presented in Equation (19) can be restructured into the following optimization problem:
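With the auxiliary tensor in place, ADMM-type solvers commonly update it through the proximal operator of the tensor nuclear norm, known as tensor singular value thresholding (t-SVT): take the FFT along the third mode, soft-threshold the singular values of every Fourier-domain slice, and transform back. This sketch covers the unweighted case under that FFT-along-the-third-mode convention; for a weighted norm, tau would become tau * w[i] for the i-th singular value, which has a closed-form solution when the weights are non-decreasing. Whether the paper's solver uses exactly this step is not stated in this section, and `t_svt` is a hypothetical name.

```python
import numpy as np


def t_svt(Y, tau):
    """Tensor singular value thresholding along the third mode (unweighted)."""
    n1, n2, n3 = Y.shape
    Y_f = np.fft.fft(Y, axis=2)
    out_f = np.empty_like(Y_f)
    for k in range(n3):
        U, s, Vh = np.linalg.svd(Y_f[:, :, k], full_matrices=False)
        s_shrunk = np.maximum(s - tau, 0.0)          # soft-threshold singular values
        out_f[:, :, k] = (U * s_shrunk) @ Vh         # scale columns of U, recombine
    # For real input the result is real up to round-off; keep the real part.
    return np.real(np.fft.ifft(out_f, axis=2))


rng = np.random.default_rng(0)
Y = rng.standard_normal((4, 3, 5))
shrunk = t_svt(Y, tau=1.0)
```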

This work integrates two complementary methodologies. The objective of consensus graph learning is to derive a unified graph that reflects cross-view relationships and the inherent clustering structure. Conversely, low-rank tensorization employs tensors to model higher-order correlations and complex interdependencies between views. Although demonstrably effective individually, these two strategies operate in isolation, limiting the exploitation of their complementary strengths. To overcome this limitation, we propose a unified framework integrating Consensus Graph Learning with Low-rank Tensorization. By jointly optimizing graph construction and tensor decomposition, our approach simultaneously captures local connectivity patterns and global higher-order correlations, providing a more robust and comprehensive solution for multi-view clustering. The details of the framework are as follows:

Remark 2. The proposed objective function incorporates a tensor rank regularization term to capture multi-dimensional interactions across heterogeneous data views. Specifically, the stacked representation is reorganized through tensor rotation. As illustrated in Figure 1, the rotation yields a tensor whose i-th frontal slice encapsulates cross-view associations among the n instances. An optimized graph structure should keep inter-sample relationships consistent across these heterogeneous feature spaces. To address the inherent structural divergence between view-specific clusters, we impose a multi-rank minimization constraint on the rotated third-order tensor. This formulation induces low-rank structure in each rotated slice, enabling effective extraction of complementary information from different views.
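The rotation amounts to a single axis permutation. This sketch assumes that the stacked tensor has shape n × n × l, with the v-th frontal slice equal to the v-th affinity matrix, and that the rotation maps it to shape n × l × n; this index convention is an assumption. The i-th frontal slice of the rotated tensor then collects the i-th column of every view's affinity matrix, and when all views agree those columns coincide, so the slice has rank 1.

```python
import numpy as np


def rotate(T):
    """Rotate an n x n x l tensor to n x l x n, so that R[:, v, i] = T[:, i, v]."""
    return np.transpose(T, (0, 2, 1))


def unrotate(R):
    """Undo the rotation; swapping the last two axes is its own inverse."""
    return np.transpose(R, (0, 2, 1))


rng = np.random.default_rng(1)
n, l = 5, 3
views = [rng.random((n, n)) for _ in range(l)]     # hypothetical affinity matrices
T = np.stack(views, axis=2)
R = rotate(T)

# Frontal slice i of R holds column i of every view: sample i's affinities across views.
slice_2 = R[:, :, 2]

# If all views agree, every rotated frontal slice has identical columns, hence rank 1.
consistent = rotate(np.stack([views[0]] * l, axis=2))
slice_ranks = [np.linalg.matrix_rank(consistent[:, :, i]) for i in range(n)]
```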

The adoption of third-order tensor representation fundamentally extends conventional matrix-based approaches by modeling triadic relationships rather than pairwise interactions. While matrix factorization techniques can only recover dyadic correlations, the proposed tensor rank minimization framework inherently preserves multi-way dependency patterns. This characteristic makes the tensor regularization term particularly suitable for revealing latent synergistic interactions and multi-dimensional correlations in multi-view learning scenarios.

Remark 3. The rotation of the tensor is introduced to make the model compatible with the t-SVD framework proposed by Kilmer and Martin [23]. In this framework, the t-product and the associated tensor nuclear norm operate along the third mode: a tensor is represented by its block-circulant matrix, which the discrete Fourier transform along the third mode block-diagonalizes, so that circular convolution along that mode becomes independent matrix products on the Fourier-domain frontal slices. The rotation therefore determines which dimension is transformed and which dimensions form the slices whose ranks are penalized. After rotation, each frontal slice of the transformed tensor corresponds to a lateral slice of the original tensor, i.e., it collects the affinities of one sample across all views. Intuitively, the rotation does not change the underlying information but reorganizes the tensor so that correlations across views are captured in a way that aligns with the algebra of the t-product. This lets the model exploit multi-view dependencies within a mathematically well-established framework, rather than relying on an arbitrary permutation of tensor dimensions.
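The Fourier-domain view of the t-product can be verified numerically. The sketch implements the definition as a circular convolution of frontal slices along the third mode (equivalent to multiplying the block-circulant matrix of A by the unfolded B) and compares it with slice-wise matrix products after an FFT; the function names are illustrative.

```python
import numpy as np


def t_product_direct(A, B):
    """t-product via circular convolution of frontal slices (the definition)."""
    n1, _, n3 = A.shape
    _, n4, _ = B.shape
    C = np.zeros((n1, n4, n3))
    for k in range(n3):
        for j in range(n3):
            C[:, :, k] += A[:, :, (k - j) % n3] @ B[:, :, j]
    return C


def t_product_fft(A, B):
    """Same product computed slice-wise in the Fourier domain."""
    A_f = np.fft.fft(A, axis=2)
    B_f = np.fft.fft(B, axis=2)
    C_f = np.einsum('ijk,jlk->ilk', A_f, B_f)        # one matrix product per slice
    return np.real(np.fft.ifft(C_f, axis=2))


rng = np.random.default_rng(2)
A = rng.standard_normal((3, 4, 5))
B = rng.standard_normal((4, 2, 5))
C_direct = t_product_direct(A, B)
C_fft = t_product_fft(A, B)
```

The FFT route replaces n3² slice products with n3 independent ones, which is why t-SVD-based norms and their proximal steps are computed slice by slice in the Fourier domain.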

Remark 4. The proposed tensor formulation offers a different perspective from conventional weighted matrix approaches. While weighted combinations of matrices are effective for modeling pairwise relationships, they mainly characterize dyadic correlations between views. In contrast, representing multi-view affinities as a third-order tensor provides a unified structure in which all views are considered jointly. The low-rank tensor constraint encourages a shared low-dimensional representation, integrating information from all views at once. This approach can capture higher-order interactions, for instance cluster patterns shaped by three or more views, which pairwise combinations may not adequately represent.