Artificial Intelligence Chatbots and Temporomandibular Disorders: A Comparative Content Analysis over One Year

Abstract

1. Introduction

2. Materials and Methods

2.1. Models of AI Tested, Settings, Testing Time, and Duration

2.2. Quality and Reliability Assessment

2.3. Content Assessment

2.4. Readability Assessment

2.5. Statistical and Data Analysis

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| TMD | Temporomandibular Disorders |
| GQS | Global Quality Score |
| PEMAT | Patient Education Materials Assessment Tool |
| CLEAR | Completeness, Lack of false information, Evidence, Appropriateness, Relevance |
| FRE | Flesch Reading Ease |
| FKGL | Flesch–Kincaid Grade Level |
| LLM | Large Language Model |
| TMJ | Temporomandibular Joint |
| DC/TMD | Diagnostic Criteria for Temporomandibular Disorders |
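FRE and FKGL are the classic readability formulas of Flesch (1948) and Kincaid et al. The Python sketch below evaluates both from word, sentence, and syllable counts. It is a minimal illustration, not the software used in this study: the function names are hypothetical, and the counts are assumed to come from an external tokenizer and syllable counter, because syllable counting is where readability calculators differ most.

```python
def _ratios(words: int, sentences: int, syllables: int) -> tuple[float, float]:
    """Return (words per sentence, syllables per word); all counts must be positive."""
    if words <= 0 or sentences <= 0 or syllables <= 0:
        raise ValueError("counts must be positive")
    return words / sentences, syllables / words


def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Flesch Reading Ease: higher scores indicate easier text (roughly 0-100)."""
    wps, spw = _ratios(words, sentences, syllables)
    return 206.835 - 1.015 * wps - 84.6 * spw


def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Flesch-Kincaid Grade Level: approximate US school grade needed to read the text."""
    wps, spw = _ratios(words, sentences, syllables)
    return 0.39 * wps + 11.8 * spw - 15.59


if __name__ == "__main__":
    # Hypothetical chatbot answer: 100 words, 5 sentences, 140 syllables.
    print(round(flesch_reading_ease(100, 5, 140), 1))   # 68.1
    print(round(flesch_kincaid_grade(100, 5, 140), 1))  # 8.7
```

Both formulas depend only on sentence length and word length, so a lower FKGL and a higher FRE both indicate text that is easier to read; an FKGL above 12 corresponds to text beyond the US high-school level.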

References

- Okeson, J.; De Kanter, R. Temporomandibular disorders in the medical practice. J. Fam. Pract. 1996, 43, 347–356.

- Schiffman, E.; Ohrbach, R.; Truelove, E.; Look, J.; Anderson, G.; Goulet, J.-P.; List, T.; Svensson, P.; Gonzalez, Y.; Lobbezoo, F.; et al. Diagnostic Criteria for Temporomandibular Disorders (DC/TMD) for Clinical and Research Applications: Recommendations of the International RDC/TMD Consortium Network and Orofacial Pain Special Interest Group. J. Oral Facial Pain Headache 2014, 28, 6–27.

- List, T.; Jensen, R.H. Temporomandibular disorders: Old ideas and new concepts. Cephalalgia 2017, 37, 692–704.

- Valesan, L.F.; Da-Cas, C.D.; Réus, J.C.; Denardin, A.C.S.; Garanhani, R.R.; Bonotto, D.; Januzzi, E.; Mendes de Souza, B.D. Prevalence of temporomandibular joint disorders: A systematic review and meta-analysis. Clin. Oral Investig. 2021, 25, 441–453.

- Yao, L.; Sadeghirad, B.; Li, M.; Li, J.; Wang, Q.; Crandon, H.N.; Martin, G.; Morgan, R.; Florez, I.D.; Hunskaar, B.S.; et al. Management of chronic pain secondary to temporomandibular disorders: A systematic review and network meta-analysis of randomised trials. BMJ 2023, 383, e076226.

- Bundorf, M.K.; Wagner, T.H.; Singer, S.J.; Baker, L.C. Who searches the internet for health information? Health Serv. Res. 2006, 41, 819–836.

- Marton, C.; Choo, C.W. A review of theoretical models of health information seeking on the web. J. Doc. 2012, 68, 330–352.

- Doyle, D.J.; Ruskin, K.J.; Engel, T.P. The Internet and medicine: Past, present, and future. Yale J. Biol. Med. 1996, 69, 429–437.

- Jia, X.; Pang, Y.; Liu, L.S. Online Health Information Seeking Behavior: A Systematic Review. Healthcare 2021, 9, 1740.

- Tan, S.S.L.; Goonawardene, N. Internet Health Information Seeking and the Patient-Physician Relationship: A Systematic Review. J. Med. Internet Res. 2017, 19, e9.

- Aldhafeeri, L.; Aljumah, F.; Thabyan, F.; Alabbad, M.; AlShahrani, S.; Alanazi, F.; Al-Nafjan, A. Generative AI Chatbots Across Domains: A Systematic Review. Appl. Sci. 2025, 15, 11220.

- Sallam, M. ChatGPT Utility in Healthcare Education, Research, and Practice: Systematic Review on the Promising Perspectives and Valid Concerns. Healthcare 2023, 11, 887.

- Lee, P.; Bubeck, S.; Petro, J. Benefits, Limits, and Risks of GPT-4 as an AI Chatbot for Medicine. N. Engl. J. Med. 2023, 388, 1233–1239.

- Verma, S.; Sharma, R.; Deb, S.; Maitra, D. Artificial intelligence in marketing: Systematic review and future research direction. Int. J. Inf. Manag. Data Insights 2021, 1, 100002.

- Chen, L.; Zaharia, M.; Zou, J. How is ChatGPT’s behavior changing over time? arXiv 2023, arXiv:2307.09009.

- Gupta, M.; Virostko, J.; Kaufmann, C. Large language models in radiology: Fluctuating performance and decreasing discordance over time. Eur. J. Radiol. 2025, 182, 111842.

- Akan, B.; Dindaroğlu, F.Ç. Content and Quality Analysis of Websites as a Patient Resource for Temporomandibular Disorders. Turk. J. Orthod. 2020, 33, 203–209.

- Bronda, S.; Ostrovsky, M.G.; Jain, S.; Malacarne, A. The role of social media for patients with temporomandibular disorders: A content analysis of Reddit. J. Oral Rehabil. 2022, 49, 1–9.

- Cannatà, D.; Galdi, M.; Russo, A.; Scelza, C.; Michelotti, A.; Martina, S. Reliability and Educational Suitability of TikTok Videos as a Source of Information on Sleep and Awake Bruxism: A Cross-Sectional Analysis. J. Oral Rehabil. 2025, 52, 434–442.

- Camargo, E.S.; Quadras, I.C.C.; Garanhani, R.R.; de Araujo, C.M.; Stuginski-Barbosa, J. A Comparative Analysis of Three Large Language Models on Bruxism Knowledge. J. Oral Rehabil. 2025, 52, 896–903.

- Hassan, M.G.; Abdelaziz, A.A.; Abdelrahman, H.H.; Mohamed, M.M.Y.; Ellabban, M.T. Performance of AI-Chatbots to Common Temporomandibular Joint Disorders (TMDs) Patient Queries: Accuracy, Completeness, Reliability and Readability. Orthod. Craniofac. Res. 2025, ahead of print.

- Kula, B.; Kula, A.; Bagcier, F.; Alyanak, B. Artificial intelligence solutions for temporomandibular joint disorders: Contributions and future potential of ChatGPT. Korean J. Orthod. 2025, 55, 131–141.

- Sallam, M.; Barakat, M.; Sallam, M. A Preliminary Checklist (METRICS) to Standardize the Design and Reporting of Studies on Generative Artificial Intelligence–Based Models in Health Care Education and Practice: Development Study Involving a Literature Review. Interact. J. Med. Res. 2024, 13, e54704.

- Bernard, A.; Langille, M.; Hughes, S.; Rose, C.; Leddin, D.; Veldhuyzen Van Zanten, S. A systematic review of patient inflammatory bowel disease information resources on the World Wide Web. Am. J. Gastroenterol. 2007, 102, 2070–2077.

- Shoemaker, S.J.; Wolf, M.S.; Brach, C. Development of the Patient Education Materials Assessment Tool (PEMAT): A new measure of understandability and actionability for print and audiovisual patient information. Patient Educ. Couns. 2014, 96, 395–403.

- Charnock, D.; Shepperd, S.; Needham, G.; Gann, R. DISCERN: An instrument for judging the quality of written consumer health information on treatment choices. J. Epidemiol. Community Health 1999, 53, 105–111.

- Sallam, M.; Barakat, M.; Sallam, M. Pilot Testing of a Tool to Standardize the Assessment of the Quality of Health Information Generated by Artificial Intelligence-Based Models. Cureus 2023, 15, e49373.

- Flesch, R. A new readability yardstick. J. Appl. Psychol. 1948, 32, 221–233.

- Kincaid, J.; Fishburne, R.; Rogers, R.; Chissom, B. Derivation of New Readability Formulas (Automated Readability Index, Fog Count and Flesch Reading Ease Formula) for Navy Enlisted Personnel, Institute for Simulation and Training. Available online: https://stars.library.ucf.edu/istlibrary/56 (accessed on 1 April 2025).

- Bellinger, J.R.; De La Chapa, J.S.; Kwak, M.W.; Ramos, G.A.; Morrison, D.; Kesser, B.W. BPPV Information on Google Versus AI (ChatGPT). Otolaryngol. Head Neck Surg. 2024, 170, 1504–1511.

- Incerti Parenti, S.; Bartolucci, M.L.; Biondi, E.; Maglioni, A.; Corazza, G.; Gracco, A.; Alessandri-Bonetti, G. Online Patient Education in Obstructive Sleep Apnea: ChatGPT versus Google Search. Healthcare 2024, 12, 1781.

- Makrygiannakis, M.A.; Giannakopoulos, K.; Kaklamanos, E.G. Evidence-based potential of generative artificial intelligence large language models in orthodontics: A comparative study of ChatGPT, Google Bard, and Microsoft Bing. Eur. J. Orthod. 2024, 46, cjae017.

- Nakano, R.; Hilton, J.; Balaji, S.; Wu, J.; Ouyang, L.; Kim, C.; Hesse, C.; Jain, S.; Kosaraju, V.; Saunders, W.; et al. WebGPT: Browser-assisted question-answering with human feedback. arXiv 2021, arXiv:2112.09332.

- Santamato, V.; Tricase, C.; Faccilongo, N.; Iacoviello, M.; Marengo, A. Exploring the Impact of Artificial Intelligence on Healthcare Management: A Combined Systematic Review and Machine-Learning Approach. Appl. Sci. 2024, 14, 10144.

- Bai, Y.; Kadavath, S.; Kundu, S.; Askell, A.; Kernion, J.; Jones, A.; Chen, A.; Goldie, A.; Mirhoseini, A.; McKinnon, C.; et al. Constitutional AI: Harmlessness from AI Feedback. arXiv 2022, arXiv:2212.08073.

- Rodhen, R.M.; de Holanda, T.A.; Barbon, F.J.; de Oliveira da Rosa, W.L.; Boscato, N. Invasive surgical procedures for the management of internal derangement of the temporomandibular joint: A systematic review and meta-analysis regarding the effects on pain and jaw mobility. Clin. Oral Investig. 2022, 26, 3429–3446.

- Durham, J.; Wassell, R.W. Recent advancements in temporomandibular disorders (TMDs). Rev. Pain 2011, 5, 18–25.

- Ghurye, S.; McMillan, R. Orofacial pain—An update on diagnosis and management. Br. Dent. J. 2017, 223, 639–647.

- Manfredini, D.; Lombardo, L.; Siciliani, G. Temporomandibular disorders and dental occlusion. A systematic review of association studies: End of an era? J. Oral Rehabil. 2017, 44, 86–87.

- Roganović, J.; Radenković, M.; Miličić, B. Responsible Use of Artificial Intelligence in Dentistry: Survey on Dentists’ and Final-Year Undergraduates’ Perspectives. Healthcare 2023, 11, 1480.

- Van Grootel, R.J.; Buchner, R.; Wismeijer, D.; van der Glas, H.W. Towards an optimal therapy strategy for myogenous TMD, physiotherapy compared with occlusal splint therapy in an RCT with therapy-and-patient-specific treatment duration. BMC Musculoskelet. Disord. 2017, 18, 76.

- Ji, Z.; Lee, N.; Frieske, R.; Yu, T.; Su, D.; Xu, Y.; Ishii, E.; Bang, Y.; Chen, D.; Dai, W.; et al. Survey of Hallucination in Natural Language Generation. ACM Comput. Surv. 2023, 55, 3571730.

- Borges do Nascimento, I.J.; Pizarro, A.B.; Almeida, J.M.; Azzopardi-Muscat, N.; Gonçalves, M.A.; Björklund, M.; Novillo-Ortiz, D. Infodemics and health misinformation: A systematic review of reviews. Bull. World Health Organ. 2022, 100, 544–561.

- World Health Organization. Ethics and governance of artificial intelligence for health: Large multi-modal models. In WHO Guidance; World Health Organization: Geneva, Switzerland, 2025; ISBN 978-92-4-008475-9.

- Williamson, S.M.; Prybutok, V. Balancing Privacy and Progress: A Review of Privacy Challenges, Systemic Oversight, and Patient Perceptions in AI-Driven Healthcare. Appl. Sci. 2024, 14, 675.

- Hager, P.; Jungmann, F.; Holland, R.; Bhagat, K.; Hubrecht, I.; Knauer, M.; Vielhauer, J.; Makowski, M.; Braren, R.; Kaissis, G.; et al. Evaluation and mitigation of the limitations of large language models in clinical decision-making. Nat. Med. 2024, 30, 2613–2622.

- Shumailov, I.; Shumaylov, Z.; Zhao, Y.; Papernot, N.; Anderson, R.; Gal, Y. AI models collapse when trained on recursively generated data. Nature 2024, 631, 755–759.

- Rao, K.D.; Peters, D.H.; Bandeen-Roche, K. Towards patient-centered health services in India—A scale to measure patient perceptions of quality. Int. J. Qual. Health Care 2006, 18, 414–421.

- Snyder, C.F.; Wu, A.W.; Miller, R.S.; Jensen, R.E.; Bantug, E.T.; Wolff, A.C. The role of informatics in promoting patient-centered care. Cancer J. 2011, 17, 211.

- Howell, M.D. Generative artificial intelligence, patient safety and healthcare quality: A review. BMJ Qual. Saf. 2024, 33, 748–759.

- Abd-Alrazaq, A.; AlSaad, R.; Alhuwail, D.; Ahmed, A.; Healy, P.M.; Latifi, S.; Aziz, S.; Damseh, R.; Alrazak, S.A.; Sheikh, J. Large Language Models in Medical Education: Opportunities, Challenges, and Future Directions. JMIR Med. Educ. 2023, 9, e48291.

- Bernal, J.; Mazo, C. Transparency of Artificial Intelligence in Healthcare: Insights from Professionals in Computing and Healthcare Worldwide. Appl. Sci. 2022, 12, 10228.

| No. | Question |
|---|---|
| 1 | What are the symptoms of temporomandibular disorders? |
| 2 | How is temporomandibular disorder diagnosed? |
| 3 | What causes temporomandibular disorders? |
| 4 | Are there effective home remedies for TMD relief? |
| 5 | What are the available treatments for temporomandibular disorders? |
| 6 | Can stress and anxiety contribute to temporomandibular disorders? |
| 7 | What exercises can help with temporomandibular disorders? |
| 8 | Are there specific foods to avoid with temporomandibular disorders? |
| 9 | How long does it take to recover from temporomandibular disorders? |
| 10 | When should I see a doctor for temporomandibular disorders? |

| Assessment Variables | ChatGPT Mean (95% CI) | Google Gemini Mean (95% CI) | Microsoft Copilot Mean (95% CI) | p-Value |
|---|---|---|---|---|
| PEMAT Understandability | 60.3 (53.7–66.8) | 73.2 (68.5–77.9) | 61.0 (51.7–70.3) | 0.070 |
| PEMAT Actionability | 48.0 (38.0–58.0) | 50.0 (36.1–63.9) | 42.0 (31.4–52.6) | 0.392 |
| DISCERN Reliability | 23.2 (21.7–24.7) | 25.2 (24.3–26.1) | 26.6 (23.1–30.1) | 0.694 |
| DISCERN Treatment choice | 15.2 (9.1–21.3) | 15.8 (10.3–21.3) | 13.6 (11.3–15.9) | 0.958 |
| DISCERN Total | 32.0 (24.4–39.6) | 34.4 (27.1–41.7) | 34.3 (28.7–39.9) | 0.850 |
| Flesch Reading Ease Score (FRE) | 28.5 (16.3–40.8) | 41.8 (32.8–50.8) | 33.5 (23.9–43.2) | 0.210 |
| Flesch–Kincaid Grade Level Score (FKGL) | 15.7 (12.6–18.8) | 11.9 (10.5–13.4) | 14.7 (12.6–16.8) | 0.159 |
| Word count | 320.8 (273.8–367.8) | 282.7 (251.0–314.4) | 258.6 (194.9–322.3) | 0.210 |
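Each cell in the results tables reports a mean over the ten questions with a 95% confidence interval (CI). The interval method is not described in this section, so the Python sketch below assumes a t-based interval for n = 10 (two-sided critical value of about 2.262 for 9 degrees of freedom). Because several upper limits on the 1–5 rating scales stop exactly at 5.0, the sketch also optionally clips the interval to the scale bounds; the function name, the t-based method, and the clipping step are all illustrative assumptions.

```python
import math
import statistics

# Assumed two-sided 95% critical value of Student's t with 9 degrees of freedom (n = 10).
T_CRIT_DF9 = 2.262


def mean_ci(scores, t_crit=T_CRIT_DF9, lower=None, upper=None):
    """Return (mean, ci_low, ci_high) for per-question scores.

    Assumes a t-based interval; t_crit must match len(scores) - 1 degrees
    of freedom. Optional lower/upper bounds clip the interval to the scale.
    """
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two scores")
    m = statistics.mean(scores)
    half_width = t_crit * statistics.stdev(scores) / math.sqrt(n)
    lo, hi = m - half_width, m + half_width
    if lower is not None:
        lo = max(lo, lower)
    if upper is not None:
        hi = min(hi, upper)
    return m, lo, hi


if __name__ == "__main__":
    # Hypothetical 1-5 ratings for ten chatbot answers.
    ratings = [5, 5, 5, 5, 5, 5, 5, 4, 4, 5]
    m, lo, hi = mean_ci(ratings, lower=1, upper=5)
    print(f"{m:.1f} ({lo:.1f}-{hi:.1f})")  # 4.8 (4.5-5.0)
```

Under this assumed method, an entry such as 4.8 (4.5–5.0) arises naturally when the unclipped upper limit (here about 5.10) would exceed the top of the rating scale.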

| Assessment Variables | ChatGPT Mean (95% CI) | Google Gemini Mean (95% CI) | Microsoft Copilot Mean (95% CI) | p-Value |

|---|---|---|---|---|

| Global Quality Scale | 4.3 (3.8–4.8) | 4.2 (3.7–4.7) | 4.1 (3.7–4.5) | 0.879 |

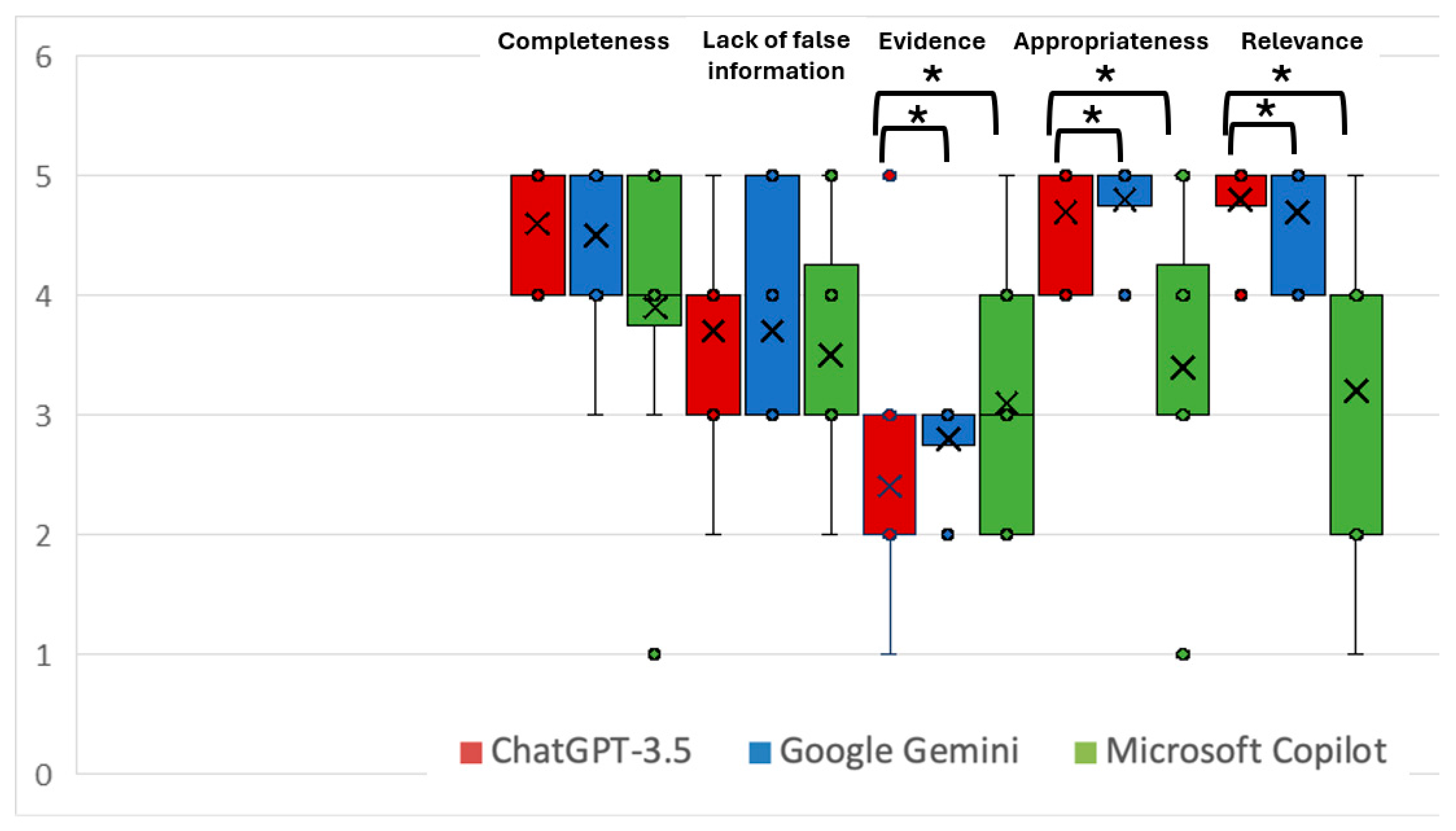

| CLEAR Completeness | 4.6 (4.2–5.0) | 4.3 (3.8–4.8) | 4.1 (3.7–4.5) | 0.174 |

| CLEAR Lack of false information | 3.9 (3.4–4.4) | 4.0 (3.5–4.5) | 4.2 (3.6–4.8) | 0.631 |

| CLEAR Evidence | 3.5 (2.6–4.4) | 3.0 (2.2–3.8) | 3.4 (2.6–4.2) | 0.677 |

| CLEAR Appropriateness | 4.9 (4.8–5.0) | 4.7 (4.4–5.0) | 4.8 (4.5–5.0) | 0.197 |

| CLEAR Relevance | 4.6 (4.1–5.0) | 4.2 (3.5–4.9) | 4.6 (4.2–5.0) | 0.501 |

| CLEAR Total Score | 21.6 (19.8–23.4) | 20.2 (18.5–21.9) | 21.1 (19.8–22.4) | 0.879 |

| PEMAT Understandability | 68.3 (61.0–75.7) | 63.3 (57.6–69.1) | 60.8 (53.9–67.7) | 0.879 |

| PEMAT Actionability | 26.0 (8.1–43.9) | 36.0 (19.8–52.2) | 38.0 (22.3–53.7) | 0.460 |

| DISCERN Reliability | 25.1 (22.3–27.9) | 24.1 (22.1–26.1) | 25.4 (22.8–28.0) | 0.835 |

| DISCERN Treatment choice | 16.2 (9.7–22.7) | 19.2 (14.0–24.4) | 15.0 (10.3–19.7) | 0.361 |

| DISCERN Total | 34.7 (26.2–43.2) | 35.4 (26.6–44.2) | 34.0 (27.8–40.2) | 0.898 |

| Flesch Reading Ease Score (FRE) | 46.8 (37.5–56.0) | 53.8 (42.4–65.2) | 45.9 (34.0–57.7) | 0.292 |

| Flesch–Kincaid Grade Level Score (FKGL) | 9.9 (8.1–11.6) | 9.0 (7.1–11.0) | 10.5 (8.4–12.5) | 0.336 |

| Word count | 276.9 (181.4–372.4) | 234.2 (160.6–307.8) | 180.3 (120.6–240.0) | 0.168 |
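In the table above, each CLEAR Total Score equals the sum of its five component means to within rounding (for Google Gemini, 4.3 + 4.0 + 3.0 + 4.7 + 4.2 = 20.2), while PEMAT understandability and actionability are reported as percentages. The Python sketch below computes both composites for a single answer. It assumes each CLEAR item is rated on a 1–5 scale, as the component means suggest, and follows the usual PEMAT convention of scoring each item Agree = 1 or Disagree = 0, excluding items rated not applicable, and expressing the result as a percentage of applicable items. The class and function names are hypothetical.

```python
from dataclasses import astuple, dataclass, fields
from typing import Optional, Sequence


@dataclass(frozen=True)
class ClearRating:
    """CLEAR item scores for one chatbot answer (each item assumed to be 1-5)."""

    completeness: int
    lack_of_false_information: int
    evidence: int
    appropriateness: int
    relevance: int

    def __post_init__(self) -> None:
        # Reject values outside the assumed 1-5 rating scale.
        for f in fields(self):
            value = getattr(self, f.name)
            if not 1 <= value <= 5:
                raise ValueError(f"{f.name} must be between 1 and 5, got {value}")

    @property
    def total(self) -> int:
        """CLEAR total as the plain sum of the five items (range 5-25)."""
        return sum(astuple(self))


def pemat_percent(items: Sequence[Optional[int]]) -> float:
    """PEMAT score: percentage of applicable items rated Agree (1).

    Items rated Not Applicable are passed as None and excluded from the denominator.
    """
    applicable = [item for item in items if item is not None]
    if not applicable:
        raise ValueError("no applicable items")
    if any(item not in (0, 1) for item in applicable):
        raise ValueError("items must be 0 (Disagree), 1 (Agree) or None (N/A)")
    return 100 * sum(applicable) / len(applicable)


if __name__ == "__main__":
    print(ClearRating(5, 4, 3, 5, 5).total)      # 22
    print(pemat_percent([1, 1, 0, None, 1]))     # 75.0
```

Averaging such per-answer values over the ten questions yields table entries of the kind shown above.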

| Assessment Variables | ChatGPT T1 Mean (95% CI) | ChatGPT T2 Mean (95% CI) | p-Value | Google Gemini T1 Mean (95% CI) | Google Gemini T2 Mean (95% CI) | p-Value | Microsoft Copilot T1 Mean (95% CI) | Microsoft Copilot T2 Mean (95% CI) | p-Value |
|---|---|---|---|---|---|---|---|---|---|
| Global Quality Scale | 4.2 (3.9–4.5) | 4.3 (3.8–4.8) | 0.705 | 4.4 (3.9–4.9) | 4.2 (3.7–4.7) | 0.960 | 3.0 (2.3–3.7) | 4.1 (3.7–4.5) | 0.030 * |
| CLEAR Completeness | 4.6 (4.2–5.0) | 4.6 (4.2–5.0) | 1.00 | 4.5 (4.0–5.0) | 4.3 (3.8–4.8) | 0.634 | 3.9 (3.0–4.8) | 4.1 (3.7–4.5) | 0.739 |
| CLEAR Lack of false information | 3.7 (3.1–4.3) | 3.9 (3.4–4.4) | 0.414 | 3.7 (3.0–4.4) | 4.0 (3.5–4.5) | 0.720 | 3.5 (2.8–4.2) | 4.2 (3.6–4.8) | 0.152 |
| CLEAR Evidence | 2.4 (1.6–3.2) | 3.5 (2.6–4.4) | 0.172 | 2.8 (2.5–3.1) | 3.0 (2.2–3.8) | 0.720 | 3.1 (2.3–3.9) | 3.4 (2.6–4.2) | 1.00 |
| CLEAR Appropriateness | 4.7 (4.4–5.0) | 4.9 (4.8–5.0) | 0.249 | 4.8 (4.5–5.0) | 4.7 (4.4–5.0) | 0.634 | 3.4 (2.6–4.2) | 4.8 (4.5–5.0) | 0.042 * |
| CLEAR Relevance | 4.8 (4.5–5.0) | 4.6 (4.1–5.0) | 0.828 | 4.7 (4.4–5.0) | 4.2 (3.5–4.9) | 0.393 | 3.2 (2.3–4.1) | 4.6 (4.2–5.0) | 0.042 * |
| CLEAR Total Score | 20.2 (18.6–21.8) | 21.6 (19.8–23.4) | 0.342 | 20.5 (19.1–21.9) | 20.2 (18.5–21.9) | 1.00 | 17.1 (14.3–19.9) | 21.1 (19.8–22.4) | 0.024 * |
| PEMAT Understandability | 60.3 (53.7–66.8) | 68.3 (61.0–75.7) | 0.342 | 73.2 (68.5–77.9) | 63.3 (57.6–69.1) | 0.033 * | 61.0 (51.7–70.3) | 60.8 (53.9–67.7) | 1.00 |
| PEMAT Actionability | 48.0 (38.0–58.0) | 26.0 (8.1–43.9) | 0.035 * | 50.0 (36.1–63.9) | 36.0 (19.8–52.2) | 0.038 * | 42.0 (31.4–52.6) | 38.0 (22.3–53.7) | 0.671 |
| DISCERN Reliability | 23.2 (21.7–24.7) | 25.1 (22.3–27.9) | 0.411 | 25.2 (24.3–26.1) | 24.1 (22.1–26.1) | 0.720 | 26.6 (23.1–30.1) | 25.4 (22.8–28.0) | 0.918 |
| DISCERN Treatment choice | 15.2 (9.1–21.3) | 16.2 (9.7–22.7) | 0.408 | 15.8 (10.3–21.3) | 19.2 (14.0–24.4) | 0.054 | 13.6 (11.3–15.9) | 15.0 (10.3–19.7) | 0.279 |
| DISCERN Total | 32.0 (24.4–39.6) | 34.7 (26.2–43.2) | 0.411 | 34.4 (27.1–41.7) | 35.4 (26.6–44.2) | 0.720 | 34.3 (28.7–39.9) | 34.0 (27.8–40.2) | 1.00 |
| Flesch Reading Ease Score (FRE) | 28.5 (16.3–40.8) | 46.8 (37.5–56.0) | 0.027 * | 41.8 (32.8–50.8) | 53.8 (42.4–65.2) | 0.056 | 33.6 (23.9–43.2) | 45.9 (34.0–57.7) | 0.026 * |
| Flesch–Kincaid Grade Level Score (FKGL) | 15.7 (12.6–18.8) | 9.9 (8.1–11.6) | 0.027 * | 11.9 (10.5–13.4) | 9.0 (7.1–11.0) | 0.051 | 14.7 (12.6–16.8) | 10.5 (8.4–12.5) | 0.021 * |
| Word count | 320.8 (273.8–367.8) | 276.9 (181.4–372.4) | 0.241 | 282.7 (251.0–314.4) | 234.2 (160.6–307.8) | 0.139 | 258.6 (194.9–322.3) | 180.3 (120.6–240.0) | 0.074 |
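The comparison table above contrasts each chatbot's scores for the same ten questions at T1 and T2. This section does not state which test produced the p-values, so the Python sketch below only illustrates one common option for paired, ordinal ratings: an exact two-sided Wilcoxon signed-rank test that drops zero differences, assigns tied absolute differences their average rank, and enumerates all 2^n sign patterns (cheap for n ≤ 10). The function names are hypothetical, and statistical packages may handle zeros and ties differently.

```python
from itertools import product
from typing import Sequence


def signed_ranks(before: Sequence[float], after: Sequence[float]):
    """Return the non-zero paired differences and their average ranks by magnitude."""
    if len(before) != len(after):
        raise ValueError("samples must be paired")
    diffs = [b - a for a, b in zip(before, after) if b != a]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        # Extend j over a run of equal absolute differences (ties).
        j = i
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return diffs, ranks


def wilcoxon_exact(before, after):
    """Exact two-sided Wilcoxon signed-rank test; returns (W+, p-value)."""
    diffs, ranks = signed_ranks(before, after)
    w_plus = sum(r for d, r in zip(diffs, ranks) if d > 0)
    centre = sum(ranks) / 2  # expected W+ under the null hypothesis
    observed = abs(w_plus - centre)
    extreme = total = 0
    # Under H0 every rank is equally likely to carry a positive or negative sign.
    for signs in product((False, True), repeat=len(ranks)):
        w = sum(r for positive, r in zip(signs, ranks) if positive)
        total += 1
        if abs(w - centre) >= observed - 1e-9:
            extreme += 1
    return w_plus, extreme / total


if __name__ == "__main__":
    # Hypothetical 1-5 ratings for the same ten questions at two time points.
    t1 = [3, 2, 4, 3, 3, 2, 4, 3, 3, 4]
    t2 = [4, 4, 5, 4, 4, 3, 5, 4, 4, 4]
    w, p = wilcoxon_exact(t1, t2)
    print(w, round(p, 4))  # 45.0 0.0039
```

Enumerating sign patterns instead of using a normal approximation keeps the p-value exact, which matters most for small samples such as ten questions per chatbot.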

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Incerti Parenti, S.; Maglioni, A.; Evangelisti, E.; Gracco, A.L.T.; Badiali, G.; Alessandri-Bonetti, G.; Bartolucci, M.L. Artificial Intelligence Chatbots and Temporomandibular Disorders: A Comparative Content Analysis over One Year. Appl. Sci. 2025, 15, 12441. https://doi.org/10.3390/app152312441

Incerti Parenti S, Maglioni A, Evangelisti E, Gracco ALT, Badiali G, Alessandri-Bonetti G, Bartolucci ML. Artificial Intelligence Chatbots and Temporomandibular Disorders: A Comparative Content Analysis over One Year. Applied Sciences. 2025; 15(23):12441. https://doi.org/10.3390/app152312441

Chicago/Turabian Style: Incerti Parenti, Serena, Alessandro Maglioni, Elia Evangelisti, Antonio Luigi Tiberio Gracco, Giovanni Badiali, Giulio Alessandri-Bonetti, and Maria Lavinia Bartolucci. 2025. "Artificial Intelligence Chatbots and Temporomandibular Disorders: A Comparative Content Analysis over One Year" Applied Sciences 15, no. 23: 12441. https://doi.org/10.3390/app152312441

APA Style: Incerti Parenti, S., Maglioni, A., Evangelisti, E., Gracco, A. L. T., Badiali, G., Alessandri-Bonetti, G., & Bartolucci, M. L. (2025). Artificial Intelligence Chatbots and Temporomandibular Disorders: A Comparative Content Analysis over One Year. Applied Sciences, 15(23), 12441. https://doi.org/10.3390/app152312441