FASwinNet: Frequency-Aware Swin Transformer for Remote Sensing Image Super-Resolution via Enhanced High-Similarity-Pass Attention and Octave Residual Blocks

Abstract

1. Introduction

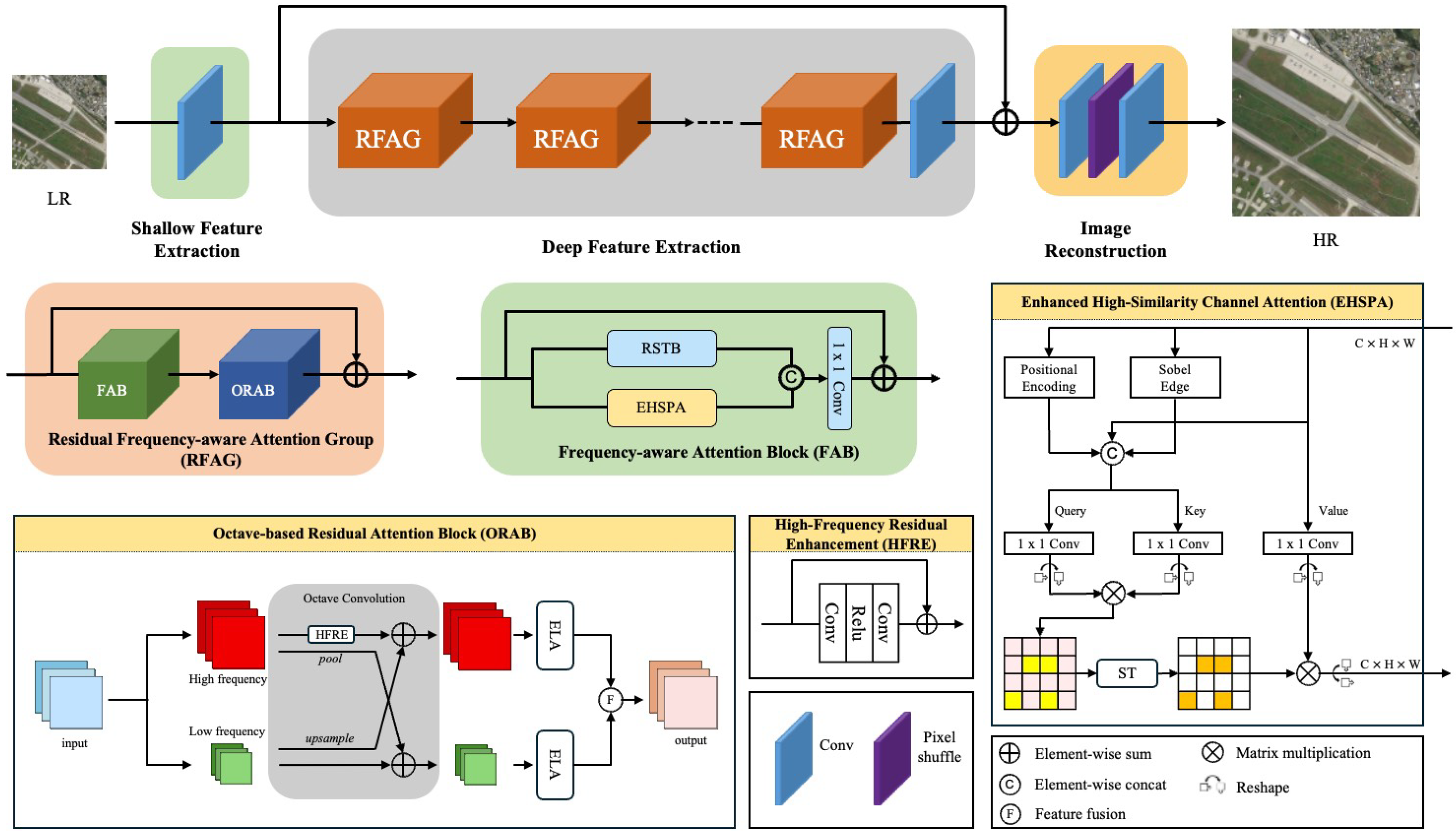

- A frequency-aware super-resolution framework, FASwinNet, is proposed for remote sensing images, integrating frequency-domain modeling and attention mechanisms to enhance reconstruction quality.

- An Enhanced High-Similarity-Pass Attention (EHSPA) module is designed to guide the network toward structurally significant high-frequency regions, thereby enhancing the restoration of fine details in reconstructed images.

- An Octave-based Residual Attention Block (ORAB) is proposed to perform frequency-separated processing and fusion, effectively enhancing the network’s feature representation capability.
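The EHSPA bullet describes attention that lets structurally significant, high-similarity regions "pass". The operator itself is not given in this section, so the sketch below is only a hedged, dependency-free illustration of the general idea. It replaces softmax with sparsemax (Martins and Astudillo, 2016), a known normalizer that assigns exactly zero weight to keys whose scaled dot-product score falls below a data-dependent threshold. The helpers `softmax`, `sparsemax`, and `sparse_attention` are hypothetical names and are not taken from FASwinNet, HSPA, or EHSPA.

```python
import math

# Hypothetical illustration of sparse ("high-similarity-pass") attention;
# this is NOT the EHSPA operator from the paper.


def softmax(scores):
    """Standard softmax: every key receives a strictly positive weight."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sparsemax(scores):
    """Euclidean projection of the scores onto the probability simplex.

    Scores below the data-dependent threshold tau get exactly zero weight,
    so only high-similarity keys contribute to the output.
    """
    z = sorted(scores, reverse=True)
    cumsum = 0.0
    k_star, cumsum_star = 1, z[0]
    for k, z_k in enumerate(z, start=1):
        cumsum += z_k
        # The support is the largest k with 1 + k * z_(k) > sum of the top-k scores.
        if 1 + k * z_k > cumsum:
            k_star, cumsum_star = k, cumsum
    tau = (cumsum_star - 1.0) / k_star
    return [max(s - tau, 0.0) for s in scores]


def sparse_attention(queries, keys, values, normalize=sparsemax):
    """Scaled dot-product attention with a pluggable score normalizer."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = normalize(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


if __name__ == "__main__":
    scores = [2.0, 1.0, 0.1]
    print(softmax(scores))    # all three weights are non-zero
    print(sparsemax(scores))  # [1.0, 0.0, 0.0]
```

Passing `normalize=softmax` to `sparse_attention` restores ordinary dense attention, which makes it easy to compare both weightings on the same similarity scores.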

2. Related Work

2.1. Remote Sensing Image Super-Resolution

2.2. Image Restoration Using Swin Transformer

3. Methods

3.1. Network Architecture

3.2. Frequency-Aware Attention Block (FAB)

3.3. Octave-Based Residual Attention Block (ORAB)

3.4. Learning Strategy

4. Experiments

4.1. Datasets and Implementation

4.2. Ablation Study

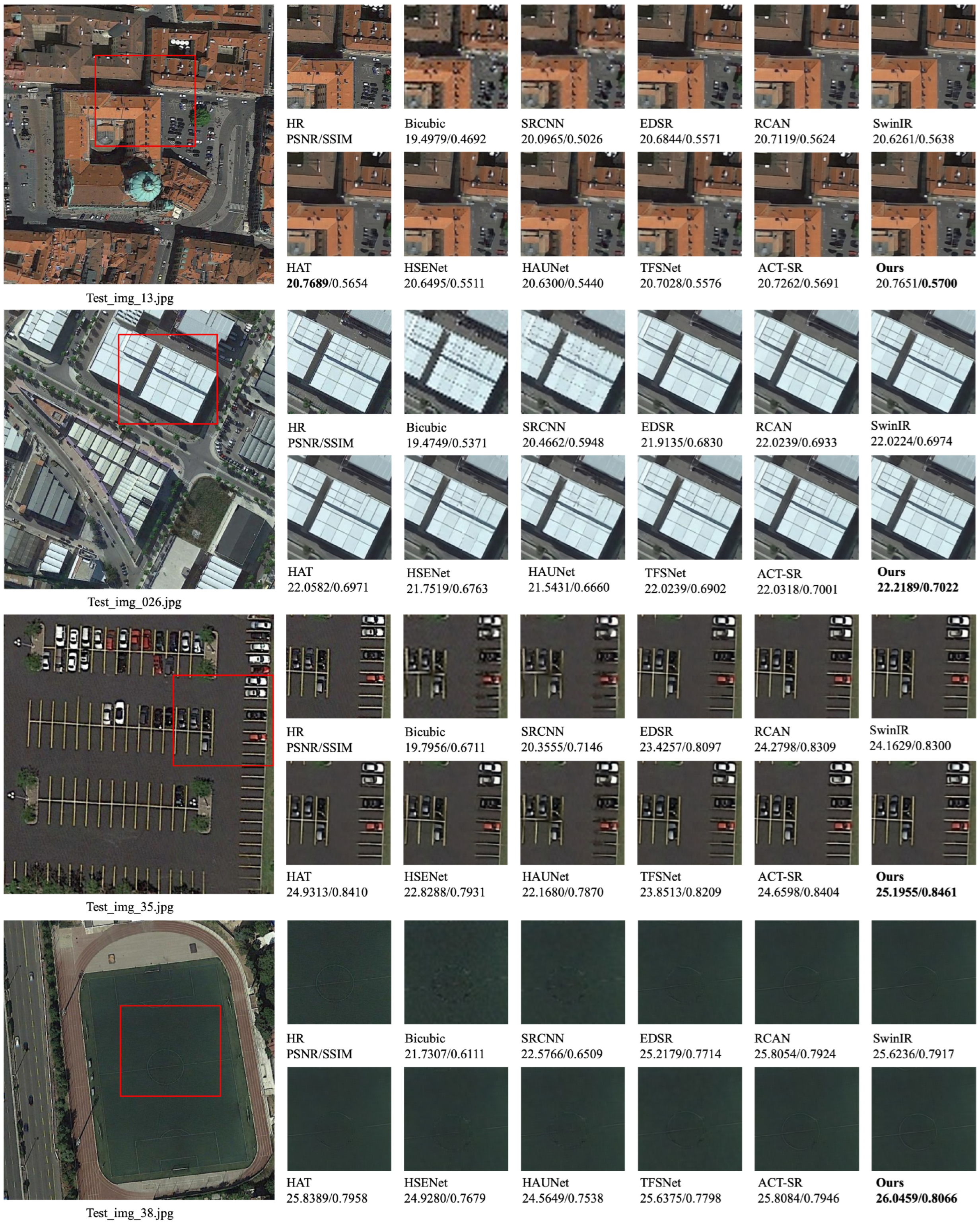

4.3. Comparisons with State-of-the-Art Methods

4.4. Limitations

5. Conclusions

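- FASwinNet improves super-resolution on the AID dataset (×4 scale), achieving the highest PSNR (26.94 dB) and SSIM (0.7380) and the lowest MSE (194.55) among the compared methods, while effectively restoring both low- and high-frequency details.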

- EHSPA enhances structural sharpness (+0.07 dB PSNR over the baseline in the ablation study), suppressing high-frequency degradation and alleviating the over-smoothing common in Transformer-based SR models.

- ORAB introduces explicit frequency separation, better modeling low-frequency semantics and high-frequency textures and improving the clarity of boundaries, rooftop details, farmland patterns, and other key structures.

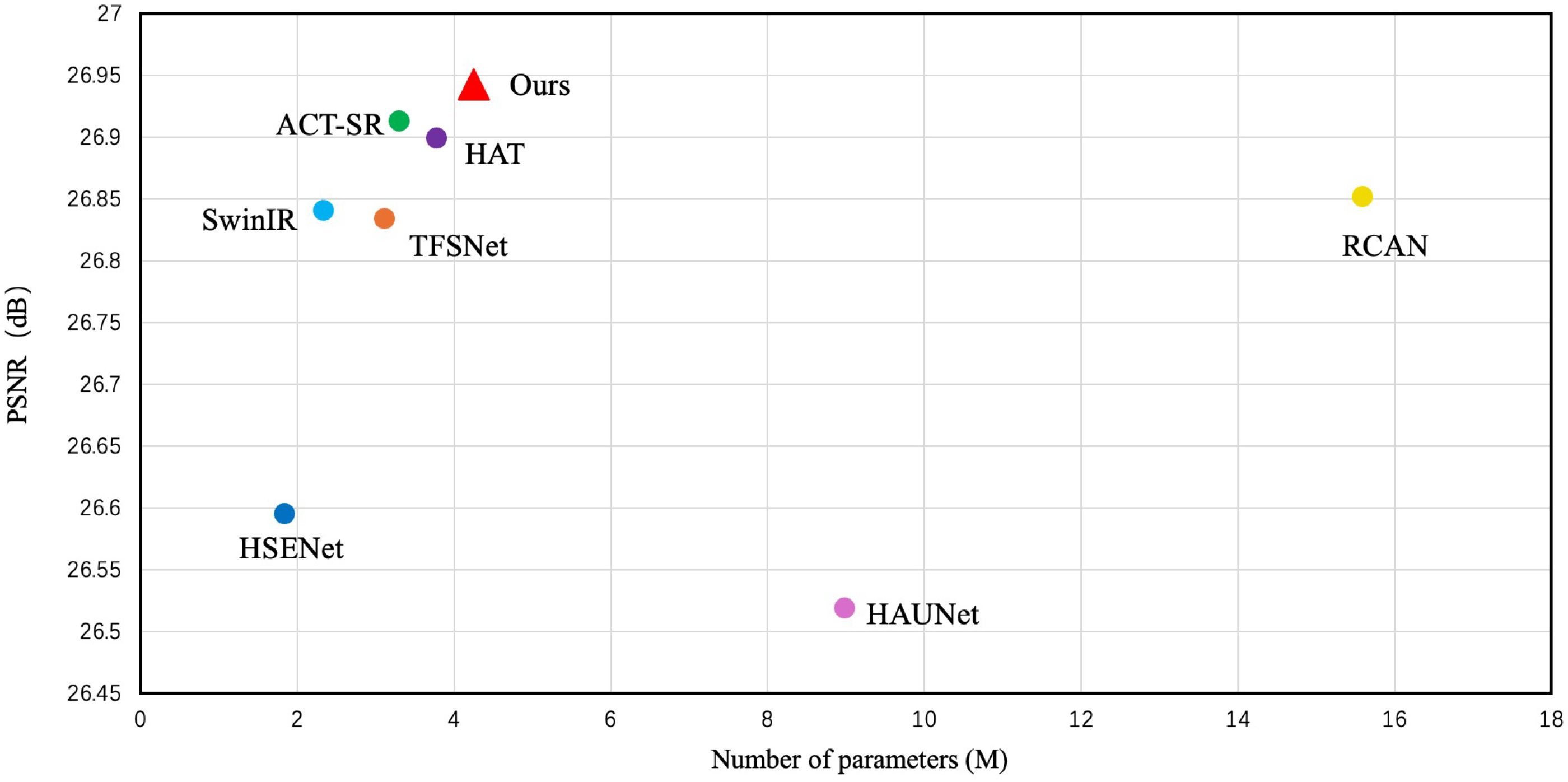

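- The model is computationally efficient: with 4.26 M parameters, its FLOPs (13.92) and inference time (66.80 ms) are roughly one-tenth of those of ACT-SR (131.5 and 631.06 ms), balancing efficiency and performance for practical deployment.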

- FASwinNet exhibits strong cross-dataset generalization; when trained on AID and tested on UCMerced without fine-tuning, it outperforms all compared methods on every reported metric (PSNR, SSIM, MSE, and LPIPS), showing robustness to scene variations.
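ORAB's explicit frequency separation builds on octave convolution (Chen et al., "Drop an octave", in the references), which stores a fraction α of the channels at half spatial resolution as a low-frequency group. The sketch below is a hypothetical, dependency-free illustration of that layout, plus a one-level Laplacian-pyramid-style split (2×2 average pooling, nearest-neighbour upsampling, and a residual) that separates a single map into low- and high-frequency parts and reconstructs it up to floating-point rounding. It omits the learned intra- and inter-frequency convolutions and is not ORAB's implementation; the function names are assumptions.

```python
# Hypothetical illustration of frequency separation; NOT the ORAB implementation.


def avg_pool2(x):
    """2x2 average pooling with stride 2; x is a list of rows with even height and width."""
    return [[(x[i][j] + x[i][j + 1] + x[i + 1][j] + x[i + 1][j + 1]) / 4.0
             for j in range(0, len(x[0]), 2)]
            for i in range(0, len(x), 2)]


def upsample2(x):
    """Nearest-neighbour upsampling by a factor of 2 in each dimension."""
    out = []
    for row in x:
        wide = [v for v in row for _ in range(2)]
        out.extend([wide, list(wide)])
    return out


def laplacian_split(x):
    """One-level split: a half-resolution low-frequency map and a full-resolution residual."""
    low = avg_pool2(x)
    up = upsample2(low)
    high = [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(x, up)]
    return low, high


def merge(low, high):
    """Inverse of laplacian_split: upsample the low band and add the residual back."""
    up = upsample2(low)
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(up, high)]


def octave_split(channels, alpha=0.5):
    """OctConv-style layout: a fraction alpha of the channels is kept at half resolution."""
    n_low = int(alpha * len(channels))
    n_high = len(channels) - n_low
    high = channels[:n_high]                          # full-resolution (high-frequency) group
    low = [avg_pool2(c) for c in channels[n_high:]]   # half-resolution (low-frequency) group
    return high, low


if __name__ == "__main__":
    print(laplacian_split([[1.0, 3.0], [5.0, 7.0]]))  # ([[4.0]], [[-3.0, -1.0], [1.0, 3.0]])
```

A constant region yields an all-zero high-frequency residual, which is why such a split concentrates edges and textures in the high-frequency branch.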

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Yang, D.; Li, Z.; Xia, Y.; Chen, Z. Remote sensing image super-resolution: Challenges and approaches. In Proceedings of the 2015 IEEE International Conference on Digital Signal Processing (DSP), Singapore, 21–24 July 2015; pp. 196–200.

- Wang, X.; Yu, K.; Wu, S.; Gu, J.; Liu, Y.; Dong, C.; Qiao, Y.; Loy, C.C. ESRGAN: Enhanced super-resolution generative adversarial networks. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops 2018, Munich, Germany, 8–14 September 2018.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 295–307.

- Dong, C.; Loy, C.C.; Tang, X. Accelerating the super-resolution convolutional neural network. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part II; pp. 391–407.

- Kong, X.; Zhao, H.; Qiao, Y.; Dong, C. ClassSR: A general framework to accelerate super-resolution networks by data characteristic. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2021, Nashville, TN, USA, 19–25 June 2021; pp. 12016–12025.

- Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image super-resolution using very deep residual channel attention networks. In Proceedings of the European Conference on Computer Vision (ECCV) 2018, Munich, Germany, 8–14 September 2018; pp. 286–301.

- Li, Z.; Liu, Y.; Chen, X.; Cai, H.; Gu, J.; Qiao, Y.; Dong, C. Blueprint separable residual network for efficient image super-resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2022, New Orleans, LA, USA, 18–24 June 2022; pp. 833–843.

- Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 136–144.

- Zhang, Y.; Tian, Y.; Kong, Y.; Zhong, B.; Fu, Y. Residual dense network for image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2018, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2472–2481.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16 × 16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision 2021, Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

- Raghu, M.; Unterthiner, T.; Kornblith, S.; Zhang, C.; Dosovitskiy, A. Do vision transformers see like convolutional neural networks? Adv. Neural Inf. Process. Syst. 2021, 34, 12116–12128.

- Chen, H.; Wang, Y.; Guo, T.; Xu, C.; Deng, Y.; Liu, Z.; Ma, S.; Xu, C.; Xu, C.; Gao, W. Pre-trained image processing transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2021, Nashville, TN, USA, 19–25 June 2021; pp. 12299–12310.

- Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H. Restormer: Efficient transformer for high-resolution image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2022, New Orleans, LA, USA, 18–24 June 2022; pp. 5728–5739.

- Wang, Z.; Cun, X.; Bao, J.; Zhou, W.; Liu, J.; Li, H. Uformer: A general U-shaped transformer for image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2022, New Orleans, LA, USA, 18–24 June 2022; pp. 17683–17693.

- Li, W.; Lu, X.; Qian, S.; Lu, J.; Zhang, X.; Jia, J. On efficient transformer-based image pre-training for low-level vision. arXiv 2021, arXiv:2112.10175.

- Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Van Gool, L.; Timofte, R. SwinIR: Image restoration using Swin transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision 2021, Montreal, QC, Canada, 11–17 October 2021; pp. 1833–1844.

- Chen, X.; Wang, X.; Zhou, J.; Qiao, Y.; Dong, C. Activating more pixels in image super-resolution transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2023, Vancouver, BC, Canada, 17–24 June 2023; pp. 22367–22377.

- Lei, S.; Shi, Z. Hybrid-scale self-similarity exploitation for remote sensing image super-resolution. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5401410.

- Peng, G.; Xie, M.; Fang, L. Context-aware lightweight remote-sensing image super-resolution network. Front. Neurorobot. 2023, 17, 1220166.

- Chen, Y.; Fan, H.; Xu, B.; Yan, Z.; Kalantidis, Y.; Rohrbach, M.; Yan, S.; Feng, J. Drop an octave: Reducing spatial redundancy in convolutional neural networks with octave convolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision 2019, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3435–3444.

- Xu, W.; Wan, Y. ELA: Efficient Local Attention for Deep Convolutional Neural Networks. arXiv 2024, arXiv:2403.01123.

- Yuan, K.; Guo, S.; Liu, Z.; Zhou, A.; Yu, F.; Wu, W. Incorporating convolution designs into visual transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision 2021, Montreal, QC, Canada, 11–17 October 2021; pp. 579–588.

- Chu, X.; Tian, Z.; Wang, Y.; Zhang, B.; Ren, H.; Wei, X.; Xia, H.; Shen, C. Twins: Revisiting the design of spatial attention in vision transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 9355–9366.

- Wu, S.; Wu, T.; Tan, H.; Guo, G. Pale transformer: A general vision transformer backbone with pale-shaped attention. In Proceedings of the AAAI Conference on Artificial Intelligence 2022, Online, 22 February–1 March 2022; Volume 36, pp. 2731–2739.

- Dong, X.; Bao, J.; Chen, D.; Zhang, W.; Yu, N.; Yuan, L.; Chen, D.; Guo, B. CSWin transformer: A general vision transformer backbone with cross-shaped windows. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2022, New Orleans, LA, USA, 18–24 June 2022; pp. 12124–12134.

- Wu, H.; Xiao, B.; Codella, N.; Liu, M.; Dai, X.; Yuan, L.; Zhang, L. CvT: Introducing convolutions to vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision 2021, Montreal, QC, Canada, 11–17 October 2021; pp. 22–31.

- Huang, Z.; Ben, Y.; Luo, G.; Cheng, P.; Yu, G.; Fu, B. Shuffle transformer: Rethinking spatial shuffle for vision transformer. arXiv 2021, arXiv:2106.03650.

- Wang, W.; Xie, E.; Li, X.; Fan, D.P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision 2021, Montreal, QC, Canada, 11–17 October 2021; pp. 568–578.

- Li, K.; Wang, Y.; Zhang, J.; Gao, P.; Song, G.; Liu, Y.; Li, H.; Qiao, Y. UniFormer: Unifying convolution and self-attention for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 12581–12600.

- Li, Y.; Zhang, K.; Cao, J.; Timofte, R.; Van Gool, L. LocalViT: Bringing locality to vision transformers. arXiv 2021, arXiv:2104.05707.

- Patel, K.; Bur, A.M.; Li, F.; Wang, G. Aggregating global features into local vision transformer. In Proceedings of the 2022 26th International Conference on Pattern Recognition (ICPR), Montreal, QC, Canada, 21–25 August 2022; pp. 1141–1147.

- Yuan, Q.; Shen, H.; Li, T.; Li, Z.; Li, S.; Jiang, Y.; Xu, H.; Tan, W.; Yang, Q.; Wang, J.; et al. Deep learning in environmental remote sensing: Achievements and challenges. Remote Sens. Environ. 2020, 241, 111716.

- Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 1874–1883.

- Su, J.N.; Gan, M.; Chen, G.Y.; Guo, W.; Chen, C.P. High-similarity-pass attention for single image super-resolution. IEEE Trans. Image Process. 2024, 33, 610–624.

- Xia, G.S.; Hu, J.; Hu, F.; Shi, B.; Bai, X.; Zhong, Y.; Zhang, L.; Lu, X. AID: A benchmark data set for performance evaluation of aerial scene classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3965–3981.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a deep convolutional network for image super-resolution. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part IV; pp. 184–199.

- Wang, J.; Wang, B.; Wang, X.; Zhao, Y.; Long, T. Hybrid attention-based U-shaped network for remote sensing image super-resolution. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5612515.

- Wang, J.; Lu, Y.; Wang, S.; Wang, B.; Wang, X.; Long, T. Two-stage spatial-frequency joint learning for large-factor remote sensing image super-resolution. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5606813.

- Kang, Y.; Wang, X.; Zhang, X.; Wang, S.; Jin, G. ACT-SR: Aggregation Connection Transformer for Remote Sensing Image Super-Resolution. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 18, 8953–8964.

| Model | PSNR (dB) | SSIM |
|---|---|---|
| Baseline | 26.58 | 0.7177 |
| Baseline + Octave | 26.63 | 0.7181 |
| Baseline + ORAB | 26.70 | 0.7209 |

| Model | PSNR (dB) | SSIM |
|---|---|---|
| Baseline | 26.58 | 0.7177 |
| Baseline + HSPA | 26.63 | 0.7186 |
| Baseline + EHSPA | 26.65 | 0.7196 |

| EHSPA | ORAB | PSNR (dB) | SSIM |
|---|---|---|---|
| ✗ | ✗ | 26.58 | 0.7177 |
| ✓ | ✗ | 26.65 | 0.7193 |
| ✗ | ✓ | 26.69 | 0.7215 |
| ✓ | ✓ | 26.73 | 0.7234 |
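The joint ablation (EHSPA and ORAB toggled together) can be read as gains over the baseline. The arithmetic below uses only the values in that table. For PSNR the combined gain is slightly smaller than the sum of the individual gains, while for SSIM the combined gain (0.0057) slightly exceeds their sum (0.0016 + 0.0038 = 0.0054).

```latex
\begin{aligned}
\Delta_{\text{EHSPA}} &= 26.65 - 26.58 = 0.07\ \text{dB},\\
\Delta_{\text{ORAB}} &= 26.69 - 26.58 = 0.11\ \text{dB},\\
\Delta_{\text{EHSPA+ORAB}} &= 26.73 - 26.58 = 0.15\ \text{dB}
  \;<\; \Delta_{\text{EHSPA}} + \Delta_{\text{ORAB}} = 0.18\ \text{dB}.
\end{aligned}
```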

| Method | Parameters | FLOPs | Inference Time | PSNR (dB) | SSIM | MSE | LPIPS |
|---|---|---|---|---|---|---|---|
| Bicubic | – | – | – | 25.34 | 0.6752 | 287.31 | 0.4893 |
| SRCNN | 0.02 M | 0.57 | 2.74 ms | 25.89 | 0.6929 | 249.14 | 0.3859 |
| ESRGAN | 16.69 M | 645.25 | 3096.51 ms | 25.48 | 0.6708 | 264.75 | 0.3359 |
| EDSR | 1.52 M | 16.25 | 77.98 ms | 26.71 | 0.7282 | 205.26 | 0.3145 |
| RCAN | 15.59 M | 106.28 | 510.03 ms | 26.85 | 0.7340 | 198.70 | 0.3056 |
| SwinIR | 2.33 M | 3.48 | 16.70 ms | 26.84 | 0.7346 | 199.31 | 0.3025 |
| HAT | 3.78 M | 4.78 | 22.94 ms | 26.89 | 0.7360 | 195.79 | 0.3038 |
| CTNet | 0.53 M | 0.57 | 2.74 ms | 26.39 | 0.7157 | 221.38 | 0.3424 |
| HSENet | 1.84 M | 38.44 | 184.47 ms | 26.59 | 0.7250 | 210.22 | 0.3170 |
| HAUNet | 8.99 M | 37.79 | 181.35 ms | 26.51 | 0.7212 | 215.64 | 0.3242 |
| TFSNet | 3.13 M | 111.17 | 533.50 ms | 26.83 | 0.7318 | 199.21 | 0.3168 |
| ACT-SR | 3.30 M | 131.5 | 631.06 ms | 26.91 | 0.7376 | 195.95 | 0.2984 |
| Ours | 4.26 M | 13.92 | 66.80 ms | 26.94 | 0.7380 | 194.55 | 0.3022 |
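The comparison table reports both PSNR and MSE. The sketch below assumes the standard definition PSNR = 10·log10(peak² / MSE) with peak = 255 (8-bit images, an assumption). Converting FASwinNet's tabulated MSE of 194.55 this way gives about 25.24 dB rather than the tabulated 26.94 dB. One consistent explanation, not confirmed here, is that PSNR was averaged per image: because log is concave, the mean of per-image PSNR values is never below the PSNR of the mean MSE (Jensen's inequality). Evaluation on the luminance channel or border cropping could also contribute. The function names are hypothetical.

```python
import math


def psnr_from_mse(mse, peak=255.0):
    """PSNR in dB for a given mean squared error; peak=255 assumes 8-bit images."""
    return 10.0 * math.log10(peak ** 2 / mse)


def mean_per_image_psnr(mses, peak=255.0):
    """Average of per-image PSNR values (a common way SR benchmarks report PSNR)."""
    return sum(psnr_from_mse(m, peak) for m in mses) / len(mses)


def psnr_of_mean_mse(mses, peak=255.0):
    """PSNR computed once from the dataset-average MSE."""
    return psnr_from_mse(sum(mses) / len(mses), peak)


if __name__ == "__main__":
    print(round(psnr_from_mse(194.55), 2))
    mses = [100.0, 400.0]  # hypothetical per-image errors
    # Jensen's inequality: averaging PSNR per image never yields a lower value.
    print(mean_per_image_psnr(mses) >= psnr_of_mean_mse(mses))  # True
```

The two aggregates coincide only when every image has the same MSE, so reported PSNR and MSE columns need not be convertible into each other.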

| Method | PSNR (dB) | SSIM | MSE | LPIPS |
|---|---|---|---|---|
| Bicubic | 24.29 | 0.5956 | 312.15 | 0.5705 |
| SRCNN | 24.37 | 0.6004 | 306.74 | 0.5604 |
| ESRGAN | 24.32 | 0.5963 | 309.85 | 0.5452 |
| EDSR | 24.58 | 0.6067 | 291.94 | 0.5327 |
| RCAN | 24.59 | 0.6129 | 291.47 | 0.5301 |
| SwinIR | 24.58 | 0.6137 | 292.33 | 0.5221 |
| HAT | 24.63 | 0.6141 | 288.85 | 0.5212 |
| CTNet | 24.56 | 0.6055 | 293.35 | 0.5231 |
| HSENet | 24.58 | 0.6121 | 292.14 | 0.5323 |
| HAUNet | 24.58 | 0.6104 | 292.33 | 0.5384 |
| TFSNet | 24.61 | 0.6112 | 290.52 | 0.5312 |
| ACT-SR | 24.63 | 0.6133 | 288.25 | 0.5203 |
| Ours | 24.64 | 0.6156 | 287.99 | 0.5180 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Z.; Liu, S.; Cao, K.; Wang, X. FASwinNet: Frequency-Aware Swin Transformer for Remote Sensing Image Super-Resolution via Enhanced High-Similarity-Pass Attention and Octave Residual Blocks. Appl. Sci. 2025, 15, 12420. https://doi.org/10.3390/app152312420
