A Novel Low-Illumination Image Enhancement Method Based on Convolutional Neural Network with Retinex Theory

Abstract

1. Introduction

2. Materials and Methods

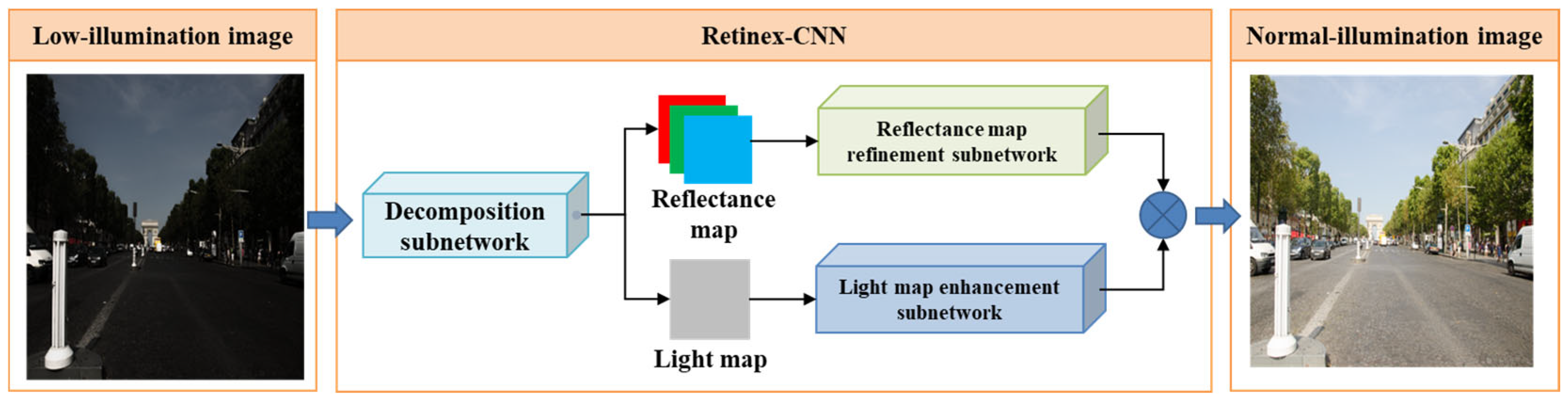

2.1. Retinex-CNN

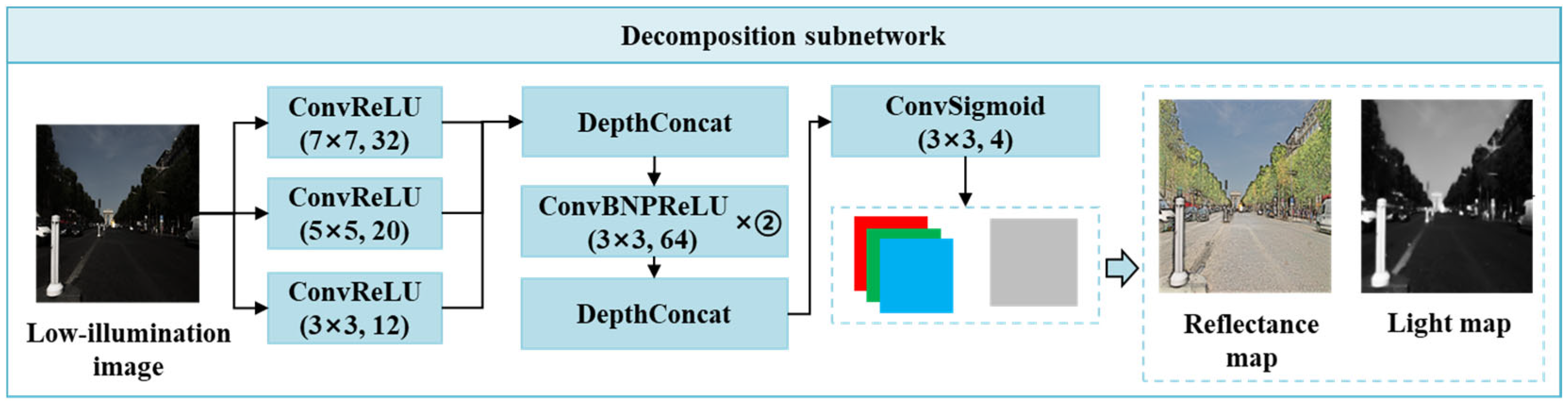
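Retinex theory models an observed image as the pixel-wise product of a reflectance component, which carries the intrinsic color and texture of the scene, and an illumination (light) component. The paper's own equations are not reproduced in this text, so the notation below is generic and assumed. Here $S$ is the observed image, $R$ the reflectance map, $L$ the light map and $\circ$ element-wise multiplication:

$$
S(x) = R(x) \circ L(x), \qquad \log S(x) = \log R(x) + \log L(x)
$$

Read against the subsections that follow, the decomposition subnetwork estimates $R$ and $L$ from a low-illumination input. The refinement and enhancement subnetworks then produce an improved reflectance $\tilde{R}$ and light map $\tilde{L}$, and the output is recombined as $\hat{S} = \tilde{R} \circ \tilde{L}$. This reading is an interpretation of the section structure, not a statement of the authors' exact formulation.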

2.1.1. Decomposition Subnetwork

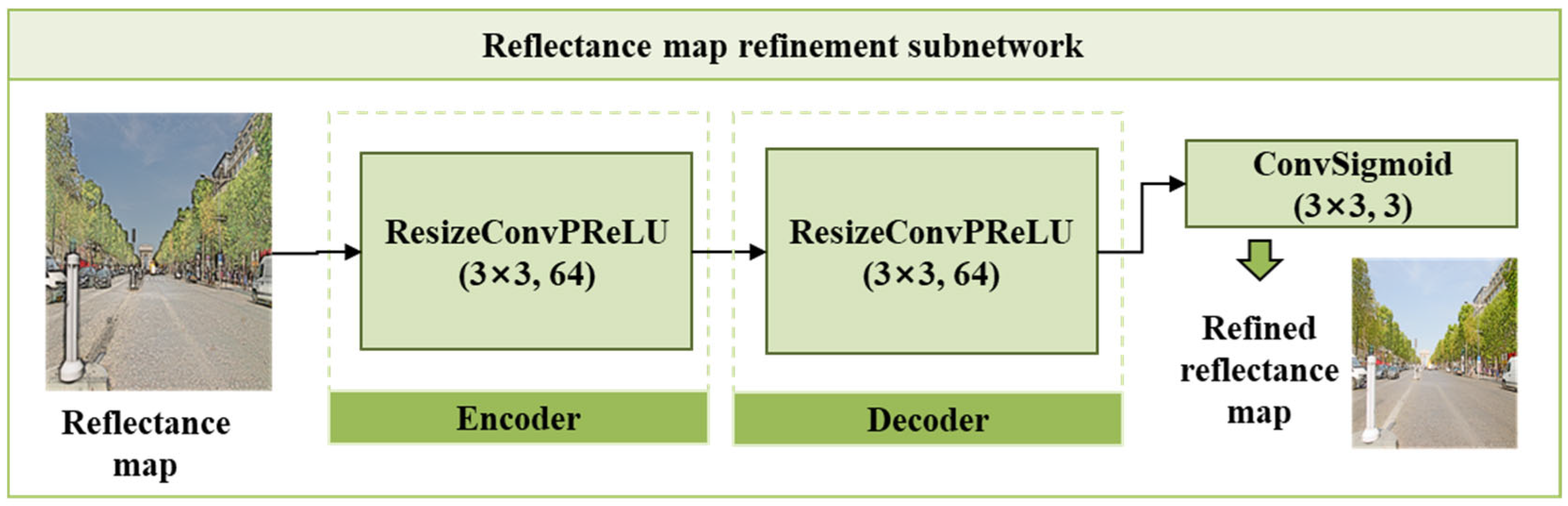
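The decomposition subnetwork is trained to output a reflectance map and a light map. To show what such an output looks like, the sketch below produces both maps with a classical, non-learned estimate instead of the paper's network. It uses the initial illumination estimate from LIME, which is the per-pixel maximum over R, G and B, and then divides the image by that light map. The function names, the `eps` guard and the list-of-tuples image layout are hypothetical choices made so the example needs no dependencies. A practical implementation would use array or tensor libraries.

```python
from typing import List, Tuple

# Hypothetical dependency-free layout: rows of (r, g, b) tuples with values in [0, 1].
Pixel = Tuple[float, float, float]
Image = List[List[Pixel]]
Map = List[List[float]]


def estimate_light_map(img: Image) -> Map:
    """Classical (LIME-style) initial light map: max over the R, G, B channels."""
    return [[max(px) for px in row] for row in img]


def decompose(img: Image, eps: float = 1e-4) -> Tuple[Image, Map]:
    """Split img into reflectance R and light map L so that img is approximately R * L.

    eps is a hypothetical guard against division by zero in black pixels.
    """
    light = estimate_light_map(img)
    reflectance = [
        [tuple(c / (l + eps) for c in px) for px, l in zip(row, lrow)]
        for row, lrow in zip(img, light)
    ]
    return reflectance, light


if __name__ == "__main__":
    dark = [[(0.20, 0.10, 0.05), (0.0, 0.0, 0.0)],
            [(0.50, 0.40, 0.30), (0.10, 0.10, 0.10)]]
    R, L = decompose(dark)
    print(L)  # [[0.2, 0.0], [0.5, 0.1]]
```

With this estimate, the brightest channel of every non-black pixel has a reflectance value close to 1. The learned subnetwork is not bound by that constraint.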

2.1.2. Reflectance Map Refinement Subnetwork

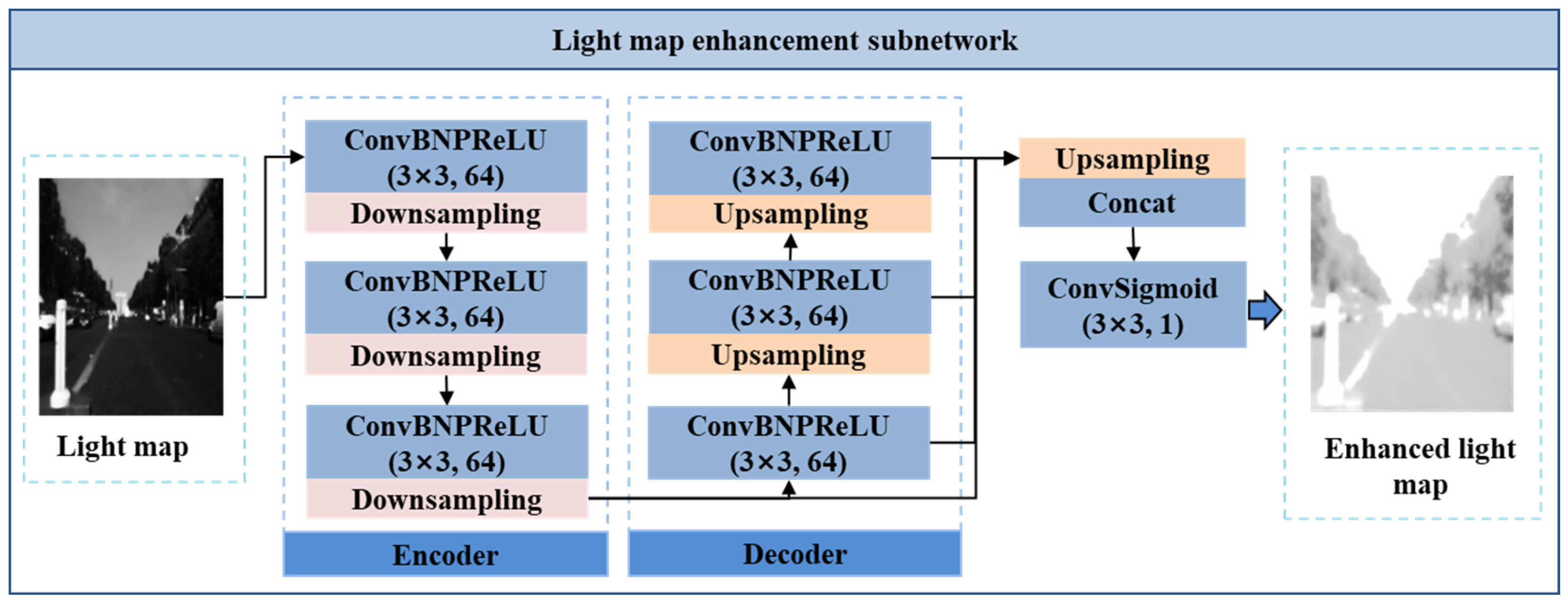

2.1.3. Light Map Enhancement Subnetwork
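The light map enhancement subnetwork is learned. Its role can still be illustrated with a classical stand-in: brighten the light map with a power (gamma) curve, then multiply it back onto the reflectance. The sketch below uses that substitute rather than the paper's network. The default gamma value and the function names are hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # (r, g, b) reflectance values
Map = List[List[float]]             # light map values in [0, 1]


def enhance_light_map(light: Map, gamma: float = 0.45) -> Map:
    """Brighten a light map with L ** gamma; gamma in (0, 1] lifts dark values most."""
    if not 0.0 < gamma <= 1.0:
        raise ValueError("gamma must lie in (0, 1] to brighten the map")
    return [[l ** gamma for l in row] for row in light]


def recompose(reflectance: List[List[Pixel]], light: Map) -> List[List[Pixel]]:
    """Rebuild an RGB image as R * L, clipping every channel to [0, 1]."""
    return [
        [tuple(min(1.0, max(0.0, c * l)) for c in px) for px, l in zip(rrow, lrow)]
        for rrow, lrow in zip(reflectance, light)
    ]


if __name__ == "__main__":
    light = [[0.04, 0.25], [1.0, 0.0]]
    print(enhance_light_map(light, gamma=0.5))  # roughly [[0.2, 0.5], [1.0, 0.0]]
```

For $0 < \gamma \le 1$ and $l \in [0, 1]$, $l^{\gamma} \ge l$. Dark regions therefore gain the most relative brightness while bright regions change little, which is why a curve of this kind is a common baseline for illumination adjustment.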

2.2. Loss Function

2.2.1. Decomposition Subnetwork Loss Function
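The decomposition loss is not reproduced in this text. Retinex-based decomposition networks such as RetinexNet commonly include a reconstruction term, which penalizes the mismatch between the input and the product of the predicted reflectance and light maps. The sketch below computes only that L1 reconstruction term, using a hypothetical list-of-tuples image layout. Any smoothness or consistency terms the authors may use are not modeled, and the function name is hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]


def reconstruction_l1(image: List[List[Pixel]],
                      reflectance: List[List[Pixel]],
                      light: List[List[float]]) -> float:
    """Mean absolute error between the input image and R * L over all pixels and channels."""
    total, count = 0.0, 0
    for irow, rrow, lrow in zip(image, reflectance, light):
        for ipx, rpx, l in zip(irow, rrow, lrow):
            for c, r in zip(ipx, rpx):
                total += abs(r * l - c)  # per-channel reconstruction error
                count += 1
    if count == 0:
        raise ValueError("empty image")
    return total / count
```

In a training framework, the same term would be written with tensors and differentiated automatically. The loop form here only makes the arithmetic explicit.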

2.2.2. Light Map Enhancement Subnetwork Loss Function

2.2.3. Reflectance Map Refinement Subnetwork Loss Function

2.3. Experimental Setup

2.4. Image Quality Evaluation Methods

2.4.1. Evaluation Metrics for Synthesized Image Quality
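The comparison table reports the full-reference metrics MSE, PSNR, SSIM and LOE. The sketch below shows the conventional computation of MSE, PSNR and LOE. SSIM is omitted because a faithful version needs windowed local statistics. The sketch makes three assumptions:

- PSNR uses 8-bit data with a peak value of 255.
- Lightness follows the definition of Wang et al. (2013): the per-pixel maximum over R, G and B.
- LOE is computed on small images. Its cost is quadratic in the pixel count, so it is normally run on a downsampled image.

The paper's exact evaluation settings are not given in this text, and the function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def mse(ref: Sequence[float], out: Sequence[float]) -> float:
    """Mean squared error between two equally sized, flattened images."""
    if len(ref) != len(out) or len(ref) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((a - b) ** 2 for a, b in zip(ref, out)) / len(ref)


def psnr(ref: Sequence[float], out: Sequence[float], peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE)."""
    err = mse(ref, out)
    return math.inf if err == 0 else 10.0 * math.log10(peak ** 2 / err)


def loe(original: Sequence[Tuple[float, float, float]],
        enhanced: Sequence[Tuple[float, float, float]]) -> float:
    """Lightness order error: mean number of pixel pairs whose lightness order flips.

    Lightness is the per-pixel maximum over R, G, B. The double loop is O(n^2)
    in the pixel count, so it is meant for small (downsampled) images.
    """
    if len(original) != len(enhanced) or len(original) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    lo = [max(p) for p in original]
    le = [max(p) for p in enhanced]
    flips = 0
    for x in range(len(lo)):
        for y in range(len(lo)):
            # U(a, b) = 1 if a >= b; count pairs where U differs between the two images
            flips += (lo[x] >= lo[y]) != (le[x] >= le[y])
    return flips / len(lo)


if __name__ == "__main__":
    print(mse([0, 0], [3, 4]))             # 12.5
    print(round(psnr([0, 0], [3, 4]), 2))  # about 37.16 dB
```

Averaging per-image PSNR over a test set is not the same as computing PSNR from the averaged MSE. The MSE and PSNR rows of a results table therefore need not satisfy the formula above exactly.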

2.4.2. Evaluation Metrics for Real Image Quality

3. Experimental Results

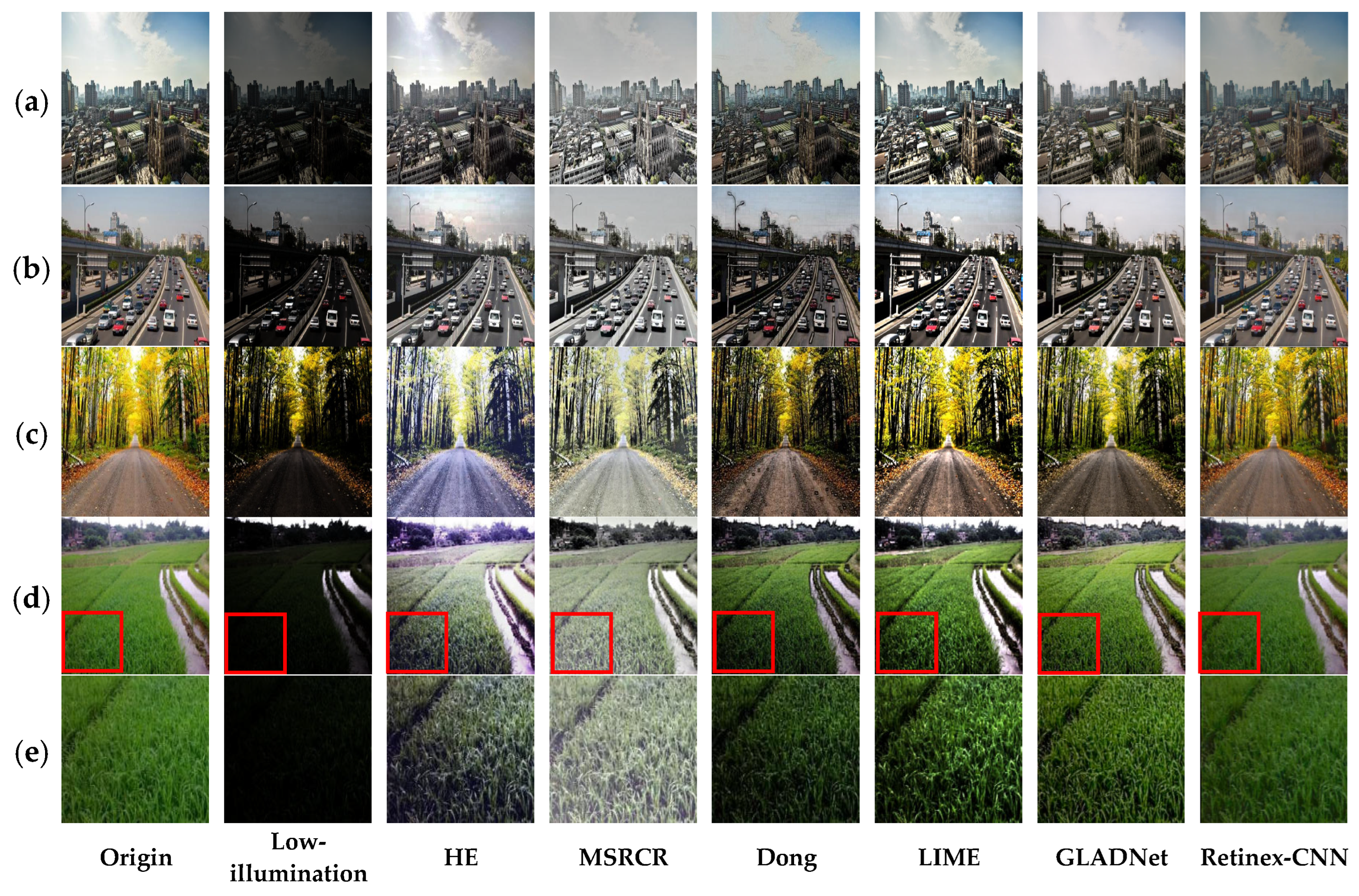

3.1. Enhancement of Synthesized Low-Illumination Images

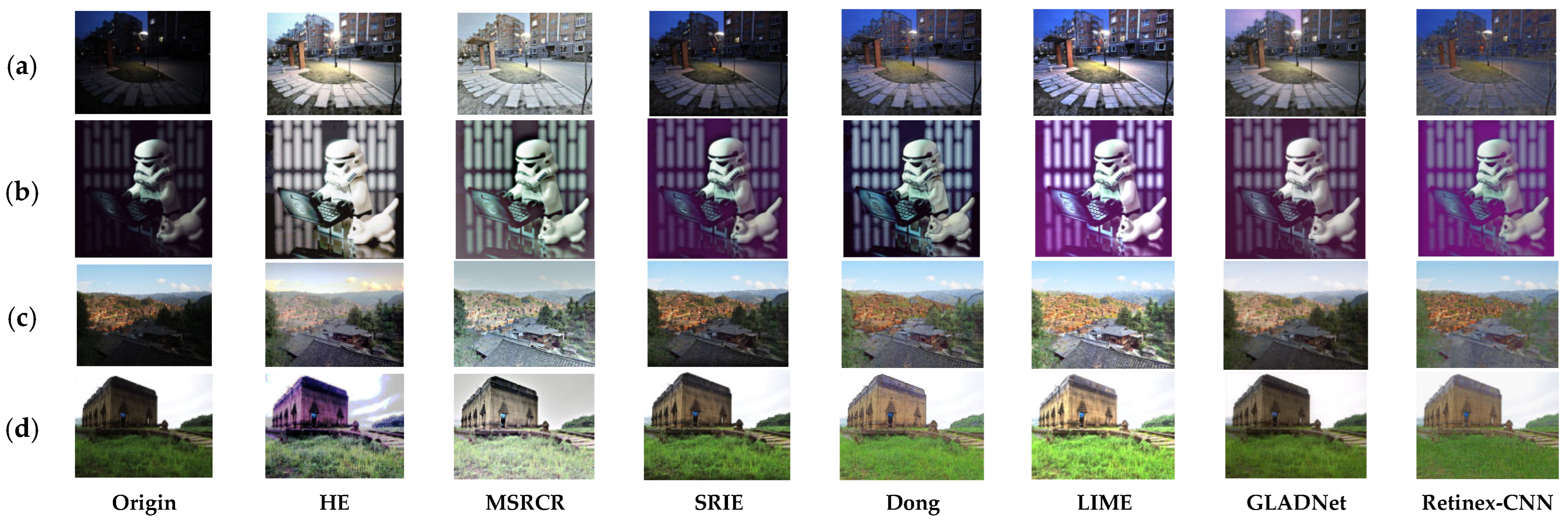

3.2. Enhancement of Real Low-Illumination Images

3.3. Objective Evaluation of Image Quality

4. Analysis and Discussion

4.1. Analysis of Time Consumption

4.2. Strengths and Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Li, C.; Qu, X.; Gnanasambandam, A.; Elgendy, O.A.; Ma, J.; Chan, S.H. Photon-limited object detection using non-local feature matching and knowledge distillation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3976–3987.

- Liu, J.; Xu, D.; Yang, W.; Fan, M.; Huang, H. Benchmarking Low-Light Image Enhancement and Beyond. Int. J. Comput. Vis. 2021, 129, 1153–1184.

- Xu, X.; Wang, S.; Wang, Z.; Zhang, X.; Hu, R. Exploring Image Enhancement for Salient Object Detection in Low Light Images. ACM Trans. Multimed. Comput. Commun. Appl. 2021, 17, 1–19.

- Pizer, S.M.; Amburn, E.P.; Austin, J.D.; Cromartie, R.; Geselowitz, A.; Greer, T.; ter Haar Romeny, B.; Zimmerman, J.B.; Zuiderveld, K. Adaptive histogram equalization and its variations. Comput. Vis. Graph. Image Process. 1987, 39, 355–368.

- Ancuti, C.; Ancuti, C.O.; Haber, T.; Bekaert, P. Enhancing underwater images and videos by fusion. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 81–88.

- Hussain, K.; Rahman, S.; Khaled, S.M.; Abdullah-Al-Wadud, M.; Shoyaib, M. Dark image enhancement by locally transformed histogram. In Proceedings of the 8th International Conference on Software, Knowledge, Information Management and Applications (SKIMA 2014), Dhaka, Bangladesh, 18–20 December 2014; pp. 1–7.

- Zhai, Y.-S.; Liu, X.-M. An improved fog-degraded image enhancement algorithm. In Proceedings of the 2007 International Conference on Wavelet Analysis and Pattern Recognition, Beijing, China, 2–4 November 2007; pp. 522–526.

- Park, S.; Yu, S.; Kim, M.; Park, K.; Paik, J. Dual Autoencoder Network for Retinex-Based Low-Light Image Enhancement. IEEE Access 2018, 6, 22084–22093.

- Wang, W.; Wei, C.; Yang, W.; Liu, J. GLADNet: Low-light enhancement network with global awareness. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi'an, China, 15–19 May 2018; pp. 751–755.

- Xu, W.; Lee, M.; Zhang, Y.; You, J.; Suk, S.; Choi, J.-Y. Deep residual convolutional network for natural image denoising and brightness enhancement. In Proceedings of the 2018 International Conference on Platform Technology and Service (PlatCon), Jeju, Republic of Korea, 29–31 January 2018; pp. 1–6.

- Shen, L.; Yue, Z.; Feng, F.; Chen, Q.; Liu, S.; Ma, J. MSR-net: Low-light image enhancement using deep convolutional network. arXiv 2017, arXiv:1711.02488.

- Lei, C.; Tian, Q. Low-light image enhancement algorithm based on deep learning and Retinex theory. Appl. Sci. 2023, 13, 10336.

- Ying, Z.; Li, G.; Ren, Y.; Wang, R.; Wang, W. A new low-light image enhancement algorithm using camera response model. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 3015–3022.

- Lee, C.; Lee, C.; Kim, C. Contrast enhancement based on layered difference representation. IEEE Trans. Image Process. 2013, 22, 5372–5384.

- Fu, X.; Zeng, D.; Huang, Y.; Liao, Y.; Ding, X.; Paisley, J. A fusion-based enhancing method for weakly illuminated images. Signal Process. 2016, 129, 82–96.

- Ma, K.; Zeng, K.; Wang, Z. Perceptual Quality Assessment for Multi-Exposure Image Fusion. IEEE Trans. Image Process. 2015, 24, 3345–3356.

- Sheikh, H.R.; Sabir, M.F.; Bovik, A.C. A statistical evaluation of recent full reference image quality assessment algorithms. IEEE Trans. Image Process. 2006, 15, 3440–3451.

- Wang, S.; Zheng, J.; Hu, H.M.; Li, B. Naturalness preserved enhancement algorithm for non-uniform illumination images. IEEE Trans. Image Process. 2013, 22, 3538–3548.

- Vonikakis, V.; Kouskouridas, R.; Gasteratos, A. On the evaluation of illumination compensation algorithms. Multimed. Tools Appl. 2017, 77, 9211–9231.

- Fu, X.; Zeng, D.; Huang, Y.; Zhang, X.-P.; Ding, X. A weighted variational model for simultaneous reflectance and illumination estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2782–2790.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “Completely Blind” Image Quality Analyzer. IEEE Signal Process. Lett. 2013, 20, 209–212.

- Ying, Z.; Li, G.; Gao, W. A bio-inspired multi-exposure fusion framework for low-light image enhancement. arXiv 2017, arXiv:1711.00591.

- Dong, X.; Pang, Y.; Wen, J. Fast efficient algorithm for enhancement of low lighting video. In ACM SIGGRAPH 2010 Posters; ACM: New York, NY, USA, 2010; p. 1.

- Guo, X.; Li, Y.; Ling, H. LIME: Low-Light Image Enhancement via Illumination Map Estimation. IEEE Trans. Image Process. 2017, 26, 982–993.

| Metric | HE | MSRCR | Dong | LIME | GLADNet | Retinex-CNN |
|---|---|---|---|---|---|---|
| MSE | 1048.1 | 1559.1 | 1500.3 | 1505.5 | 634.2 | 498.5 |
| PSNR (dB) | 19.5223 | 16.7178 | 17.1631 | 16.5124 | 20.9026 | 22.7643 |
| SSIM | 0.7824 | 0.7732 | 0.6202 | 0.6396 | 0.7637 | 0.8237 |
| LOE | 306.946 | 1316.013 | 205.019 | 1757.513 | 388.024 | 289.019 |

| Dataset | Origin | HE | MSRCR | SRIE | Dong | LIME | GLADNet | Retinex-CNN |
|---|---|---|---|---|---|---|---|---|
| DICM | 3.8608 | 3.6795 | 3.3491 | 3.2894 | 4.0314 | 3.4989 | 3.1146 | 2.8015 |
| Fusion | 4.1425 | 3.6456 | 3.4853 | 3.5362 | 3.5967 | 3.7663 | 3.4281 | 3.3008 |
| MEF | 5.1884 | 4.3768 | 4.0614 | 4.1803 | 4.6952 | 4.4466 | 3.6899 | 3.4246 |
| LIME | 4.4134 | 4.5304 | 3.8752 | 3.8707 | 4.2005 | 4.3064 | 4.2634 | 4.0082 |
| NPE | 5.4441 | 4.5693 | 4.5644 | 4.8364 | 5.0251 | 4.6432 | 4.7601 | 4.7006 |
| VV | 3.5583 | 3.0058 | 2.7718 | 2.8324 | 2.8047 | 2.8556 | 2.7282 | 2.5117 |

| Dataset | HE | MSRCR | SRIE | Dong | LIME | GLADNet | Retinex-CNN |
|---|---|---|---|---|---|---|---|
| DICM | 0.5728 | 0.3670 | 0.6028 | 0.4103 | 0.2620 | 0.6501 | 0.8604 |
| Fusion | 0.8121 | 0.4901 | 0.7165 | 0.5111 | 0.3715 | 0.8527 | 1.0906 |
| MEF | 0.3809 | 0.3069 | 0.5383 | 0.3727 | 0.2482 | 0.5662 | 0.7972 |
| LIME | 0.2822 | 0.2490 | 0.5255 | 0.3289 | 0.2095 | 0.4628 | 0.7574 |
| NPE | 0.4948 | 0.3860 | 0.6013 | 0.4016 | 0.2978 | 0.8103 | 1.0177 |
| VV | 0.8655 | 0.5490 | 0.6991 | 0.5581 | 0.4110 | 0.9328 | 0.9845 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mao, H.; Peng, W.; Tian, Y.; Zhu, X. A Novel Low-Illumination Image Enhancement Method Based on Convolutional Neural Network with Retinex Theory. Appl. Sci. 2025, 15, 12324. https://doi.org/10.3390/app152212324
