1. Introduction

Gender-based violence (GBV), encompassing physical, sexual, and psychological harm directed at individuals due to their gender, represents a widespread social and public health problem. Women and girls are disproportionately affected by GBV, with long-lasting consequences for their physical and mental well-being [1]. Research studies consistently link GBV with adverse psychological outcomes, including anxiety, depression, suicidal ideation, substance use, and, in particular, post-traumatic stress disorder (PTSD) [2,3,4,5,6]. PTSD is often the most prevalent mental health condition among GBV survivors [4,7,8], with symptoms that impair social functioning, daily activities, and overall quality of life.

According to the World Health Organization (WHO) and the United Nations (UN), a GBV victim is a woman subjected to violence that causes or risks causing physical, sexual, or psychological harm, including threats, coercion, or arbitrary restrictions of liberty, regardless of the context [9,10]. Some national legislation, such as Spain’s Organic Law 1/2004 [11], further recognizes children as victims of “vicarious violence” when perpetrators inflict violence on them with the aim of indirectly harming their mothers.

Psychologists and social services professionals typically identify GBV victimization through clinical interviews or self-reported questionnaires, such as the EGS-R for PTSD assessment [12]. However, this process requires victims to disclose past abuse early, which is an obstacle given the stigma, fear, or denial that often surrounds these experiences. Under-reporting remains a serious challenge, leaving many cases unidentified [5,13,14].

In this context, speech-based artificial intelligence (AI) tools offer a promising, non-invasive approach to support mental health screening. These tools can be integrated into virtual assistants, therapy applications, or helplines to unobtrusively assess emotional and mental health states. Speech has already proven useful for detecting depression [15], suicide risk [16,17], and other physical and mental conditions [18,19]. Unlike traditional assessments, speech-based systems may reduce bias by not relying on explicit self-reports, thus improving accessibility and early detection.

Robust security is crucial when deploying such AI-enabled systems in sensitive environments, as devices often handle delicate personal data that must be protected [20,21]. AI plays a key role by allowing real-time detection and decision-making while maintaining privacy and integrity. The architecture in [22] illustrates how an AI-based IoT solution embedded in cyber–physical systems can balance security with real-time detection.

Machine learning (ML) and, more specifically, deep learning (DL) models can extract meaningful paralinguistic features from speech signals, such as tone, pitch, rhythm, and vocal variability, beyond linguistic content [23]. However, these models often encode speaker-specific traits, which can degrade their generalization and raise privacy concerns in clinical contexts. Although personalization may improve performance in tasks such as speech emotion recognition (SER) [24], it may introduce undesirable confounds and ethical issues in mental health applications.

Building on our previous work [25,26], we hypothesize that speaker identity contributes undesirably to GBV Victim Condition (GBVVC) detection models. Therefore, to attain a speaker-agnostic detector that can distinguish between GBV victims and non-victims, we explore the use of domain-adversarial training techniques to disentangle relevant from irrelevant speaker-related information.

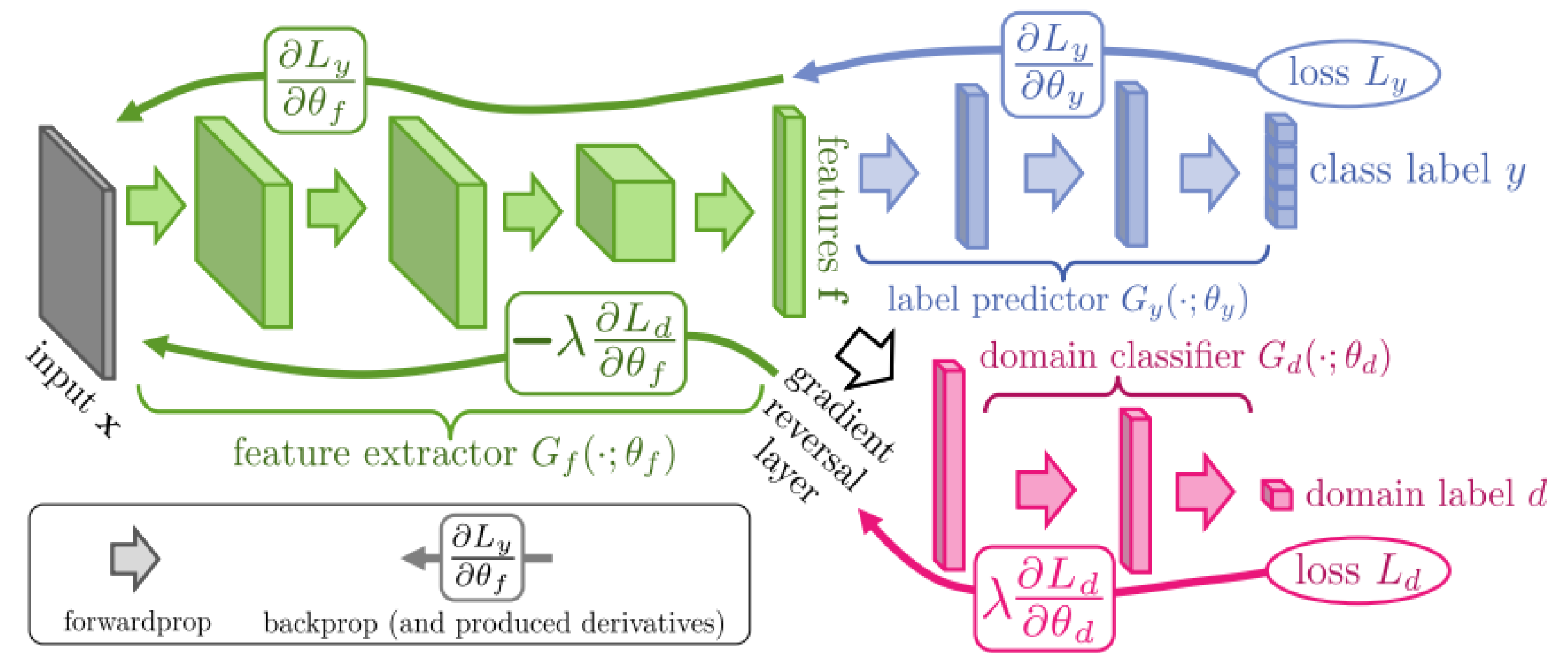

Domain-Adversarial Neural Networks (DANNs) [27] were introduced as an approach to domain adaptation that learns to ignore the domain-sensitive characteristics inherently embedded in the data. These networks aim to actively learn feature representations that exclude information on the data domain. This methodology promotes the development of features that are discriminative for a main task while remaining domain-agnostic.

DANNs, as defined in [27], consist of two classifiers: one for the main classification task and another for domain classification. Figure 1 represents the original DANN architecture scheme. These classifiers share initial layers that shape the data representation. A gradient reversal layer is introduced between the domain classifier and the feature representation layers: it passes the data through unchanged during forward propagation and reverses the gradient signs during backward propagation. In this manner, the network aims to minimize classification error for the main task while maximizing the error of the domain classifier, ensuring an effective and discriminative representation for the primary task, in which domain information is suppressed.
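To make the behavior of the gradient reversal layer concrete, the following is a minimal, framework-free sketch. The class name `GradientReversal`, the parameter `lam`, and the toy vectors are assumptions introduced for illustration only; they are not taken from the implementation used in this work or from any specific library.

```python
class GradientReversal:
    """Identity in the forward pass; sign-flipped, scaled gradient in the backward pass."""

    def __init__(self, lam=1.0):
        # lam is a hypothetical reversal strength (trade-off weight).
        self.lam = lam

    def forward(self, features):
        # Features reach the domain classifier unchanged.
        return list(features)

    def backward(self, grad_from_domain_head):
        # The gradient coming from the domain classifier is multiplied by -lam
        # before it reaches the shared feature layers.
        return [-self.lam * g for g in grad_from_domain_head]


grl = GradientReversal(lam=0.5)
features = [0.2, -1.0, 3.0]             # toy shared-layer activations
forwarded = grl.forward(features)       # identical to the input
reversed_grad = grl.backward([1.0, -2.0, 0.0])
print(forwarded)
print(reversed_grad)
```

In a deep learning framework the same idea would typically be written as a custom differentiable operation, so that the shared layers receive the reversed gradient automatically during backpropagation.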

In our specific context, the main or primary task entails GBVVC classification, while the domain classification refers to speaker identity. We aim to ensure that the feature representation remains discriminative for the primary task while eliminating any traces of speaker-identity information.
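Under the general DANN formulation of [27], this setup can be sketched as a saddle-point problem. The notation below is introduced here for illustration and is an assumption, not the paper's own: $G_f$ is the shared feature extractor with parameters $\theta_f$, $G_y$ the GBVVC classifier with parameters $\theta_y$, $G_s$ the speaker classifier with parameters $\theta_s$, $y_i$ and $s_i$ the condition and speaker labels of sample $x_i$, and $\lambda$ the adversarial trade-off weight.

```latex
E(\theta_f, \theta_y, \theta_s) =
  \frac{1}{N} \sum_{i=1}^{N} \mathcal{L}_y\big(G_y(G_f(x_i; \theta_f); \theta_y),\, y_i\big)
  - \lambda \, \frac{1}{N} \sum_{i=1}^{N} \mathcal{L}_s\big(G_s(G_f(x_i; \theta_f); \theta_s),\, s_i\big)

(\hat{\theta}_f, \hat{\theta}_y) = \arg\min_{\theta_f, \theta_y} E(\theta_f, \theta_y, \hat{\theta}_s),
\qquad
\hat{\theta}_s = \arg\max_{\theta_s} E(\hat{\theta}_f, \hat{\theta}_y, \theta_s)
```

Because the speaker loss enters with a negative sign, maximizing $E$ over $\theta_s$ trains the speaker classifier to identify speakers, while minimizing $E$ over $\theta_f$ pushes the shared features to make that identification harder.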

Speaker-agnostic models offer several advantages. They prevent the identification of individual speakers, thus preserving privacy in mental health applications. Additionally, these models are designed to be generalizable and independent of the speaker’s identity, making them effective for a wide range of users, including those never seen during training. By intentionally not learning speaker-specific information, they dedicate the model’s resources to learning only information relevant to the task at hand.

We evaluate our method on an extended version of the WEMAC database [28], which includes spontaneous speech samples and emotional self-assessments from women in response to audiovisual stimuli. The extended dataset comprises additional speech data from 39 GBV survivors, whose participation was ethically approved but whose data remains unpublished due to privacy concerns. All participants were screened with the EGS-R questionnaire, and only survivors with pre-clinical PTSD symptoms (scores ≤ 20) were included, ensuring the sample represented recovered individuals. For clarity, throughout this manuscript, we use the term GBVVC to denote a condition of prior victimization characterized by residual/pre-clinical PTSD symptomatology as measured by the EGS-R (≤20). The present work focuses on the detection of these subtle, persistent vocal signatures rather than on acute, clinically diagnosed PTSD. Despite this, our system was still able to detect these residual symptoms in the speech signal associated with past victimization. More details on the dataset and feature extraction methodology are presented in Section 4.1.

Our findings suggest that domain-adversarial learning effectively suppresses speaker-specific cues and enhances the models’ ability to focus on paralinguistic indicators of GBVVC. By promoting generalizability and privacy, this work contributes to the development of trustworthy AI tools for sensitive mental health applications.

State-of-the-Art in Speech-Based Mental Health Detection Models

Our study addresses the automatic detection of pre-clinical post-traumatic stress disorder (PTSD) symptoms in self-identified survivors of gender-based violence (GBV) using speech-only data. To our knowledge, apart from our prior work, no existing models have used audio data to identify the GBVVC, making the task itself a novel contribution to the field.

Most prior studies on PTSD detection using machine learning rely on textual data from clinical interviews. However, a growing body of evidence highlights the potential of voice-based biomarkers to identify trauma-related conditions. A systematic review [29] reported that speech-based machine learning approaches for trauma disorders typically achieve AUCs between 0.80 and 0.95, highlighting the diagnostic effectiveness of paralinguistic features.

More specifically, several studies have demonstrated this potential in the task of PTSD detection. One study analysing acoustic markers from PTSD patient interviews (Clinician-Administered PTSD Scale for DSM-5, CAPS-5 [30]) achieved 89.1% accuracy and an AUC of 0.954 using a random-forest classifier [31]. Another study developed a multimodal deep learning model combining acoustic, visual, and linguistic features, reaching an AUC of 0.90 and an F1 score of 0.83 in distinguishing PTSD from major depressive disorder [32]. More recently, a study employing openSMILE features and random forest classification reported near-perfect performance (AUC = 0.97, accuracy = 0.92) in differentiating PTSD patients from controls [33].

Despite these advances, most speech-based datasets have focused on clinical or war veteran populations, leaving a critical gap in understanding pre-clinical and gender-specific manifestations of trauma. In contrast, our work represents the first effort to model GBV-linked psychological outcomes using audio-based methods, achieving an F1-score of 67%, and paving the way for non-invasive, privacy-preserving early digital mental health assessments.

2. Results

2.1. Prior Work

We previously explored the detection of gender-based violence victim condition (GBVVC) from speech signals in our work [25,26]. In the first paper [25], we demonstrated that, using paralinguistic features extracted from speech, it is possible to differentiate between victims and non-victims with an accuracy of 71.53% under a speaker-independent (SI) setting. In our subsequent work [26], we incorporated additional data and investigated the relationship between model performance and self-reported psychological and physical symptoms. The system achieved a user-level SI accuracy of 73.08% via majority voting over individual utterances. However, although both studies employed a Leave-One-Subject-Out (LOSO) strategy, we hypothesized that speaker-related traits remained entangled with the target GBVVC labels. This hypothesis was based on the consistently lower performance observed under the LOSO evaluation scheme compared to other data-splitting strategies, which we believe indicated that the model was inadvertently leveraging speaker-specific characteristics to perform the classification task. This potential confound motivated the present work, where we aim to remove speaker-specific information while preserving task-relevant patterns. As we will further discuss in Section 3, several authors have already warned about the prevalence of this kind of problem in speech-related research for health diagnosis, where data is less abundant than for other speech technologies.

2.2. Baseline Models

Since this is, to the best of the authors’ knowledge, the first study addressing this specific task, we established a set of baseline models to set a reference performance and thereby contextualize the performance of the proposed Domain-Adversarial Model (DAM). Although our previous models [25,26] focused on GBVVC classification, they were not designed with an adversarial structure to explicitly enforce speaker-invariant feature learning. Hence, they are not directly comparable to the DAM approach. The baseline models introduced here serve this role by providing a clear benchmark under similar experimental conditions.

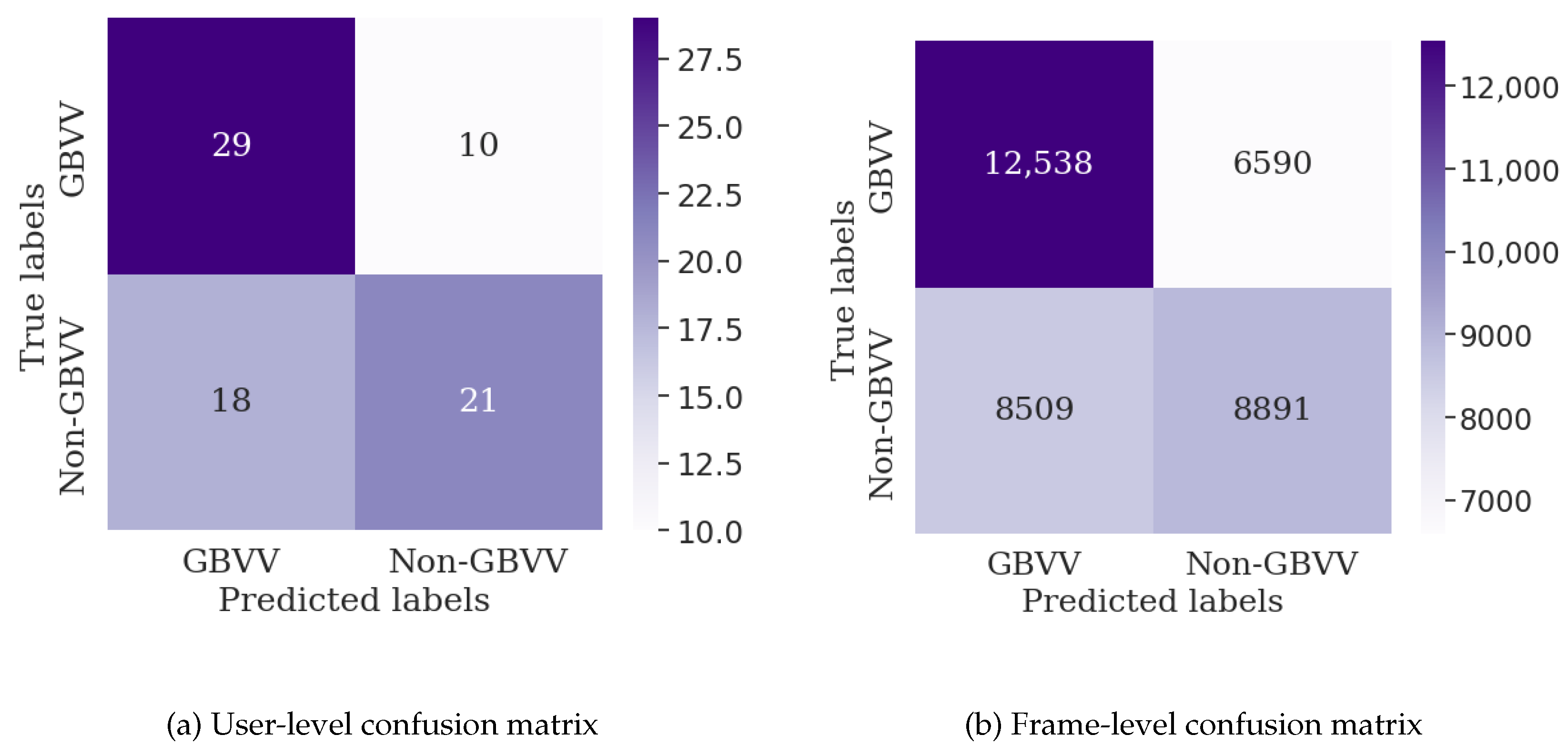

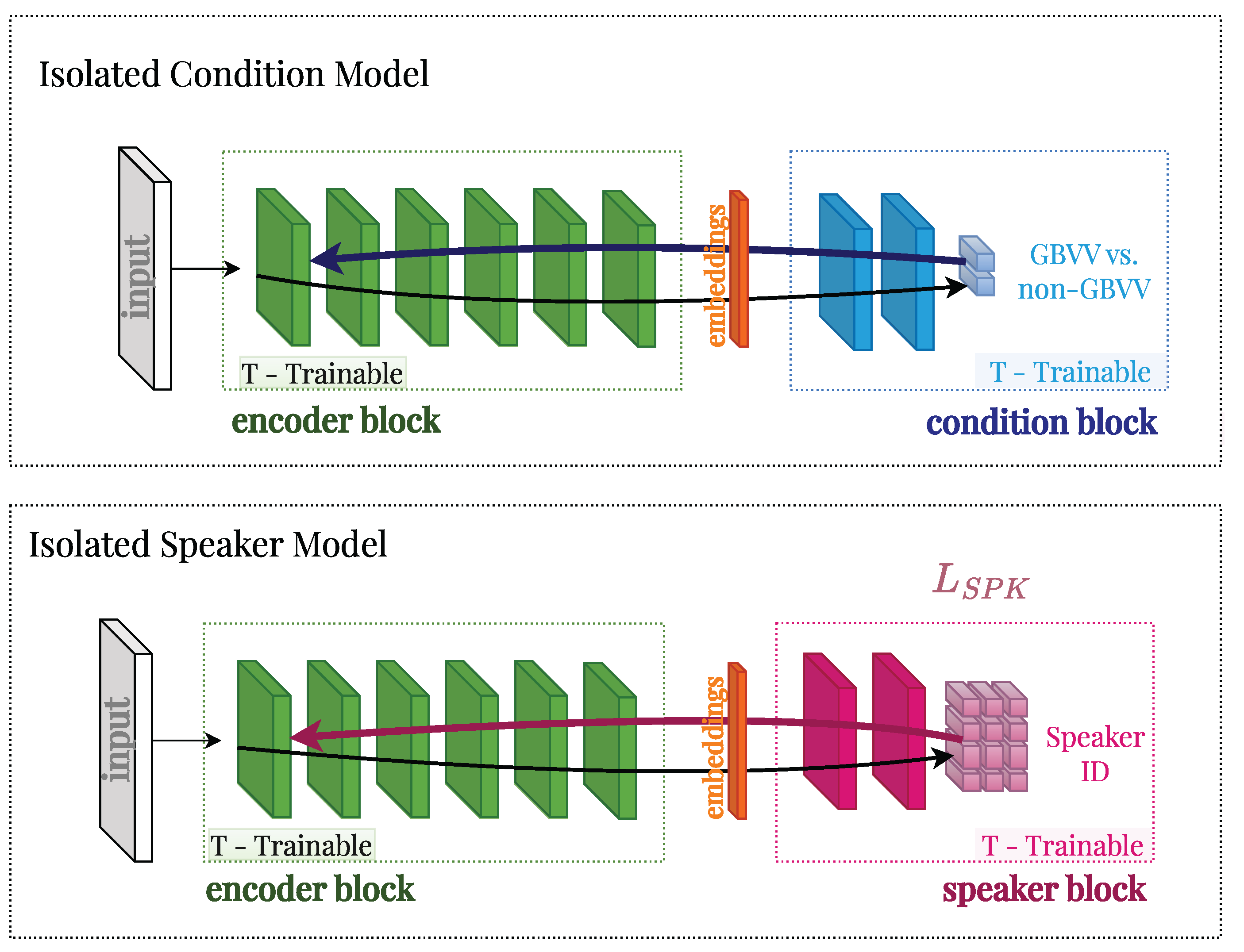

To this end, we first trained two baseline models using speech embeddings: (1) the Isolated Condition Model (ICM), which predicts GBVVC status, and (2) the Isolated Speaker Model (ISM), which identifies speaker identity. These models are trained on frame-level (FL) speech segments of 1 s duration. Majority voting (MV) is then applied at the user level (UL) to obtain final predictions per subject.
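The aggregation from frame-level to user-level predictions can be sketched as follows. The function name `majority_vote`, the tie-breaking rule, and the toy labels are assumptions for illustration; the text does not specify how ties are resolved.

```python
from collections import Counter, defaultdict


def majority_vote(frame_predictions, frame_speakers):
    """Aggregate frame-level labels into a single label per speaker."""
    votes = defaultdict(list)
    for label, speaker in zip(frame_predictions, frame_speakers):
        votes[speaker].append(label)

    user_level = {}
    for speaker, labels in votes.items():
        counts = Counter(labels)
        top = max(counts.values())
        # Assumed tie-break: pick the smallest label among the tied ones.
        user_level[speaker] = min(l for l, c in counts.items() if c == top)
    return user_level


# Toy frame-level outputs (1 = GBVVC, 0 = non-GBVVC) for three hypothetical speakers.
frame_predictions = [1, 1, 0, 0, 0, 1, 1, 0]
frame_speakers = ["s01", "s01", "s01", "s02", "s02", "s02", "s03", "s03"]
user_predictions = majority_vote(frame_predictions, frame_speakers)
print(user_predictions)
```

With an odd number of 1 s frames per speaker, ties cannot occur in the binary case, so the tie-break only matters for speakers with an even frame count.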

The ICM model achieves a frame-level accuracy of

, with a precision of

, recall of

, and F1-score of

. At the user-level, the ICM reaches an accuracy of

, with a precision of

, recall of

, and F1-score of

. These results are presented in the corresponding confusion matrices in Figure 2, which shows both user-level and frame-level matrices for a visual assessment of model behavior across scales.

In contrast, the ISM model, designed to identify speaker identity from speech, achieves a frame-level accuracy of

(Table 1), underscoring the strong presence of speaker-specific information in the embeddings. However, since this model performs a multi-class classification task involving 78 different speakers, a confusion matrix or performance metrics such as precision, recall, or F1-score at the user level are not meaningful or directly comparable. Each prediction corresponds to a specific speaker identity rather than a binary classification problem, thus precluding the application of majority voting or binary confusion-based metrics, as we did with the ICM.

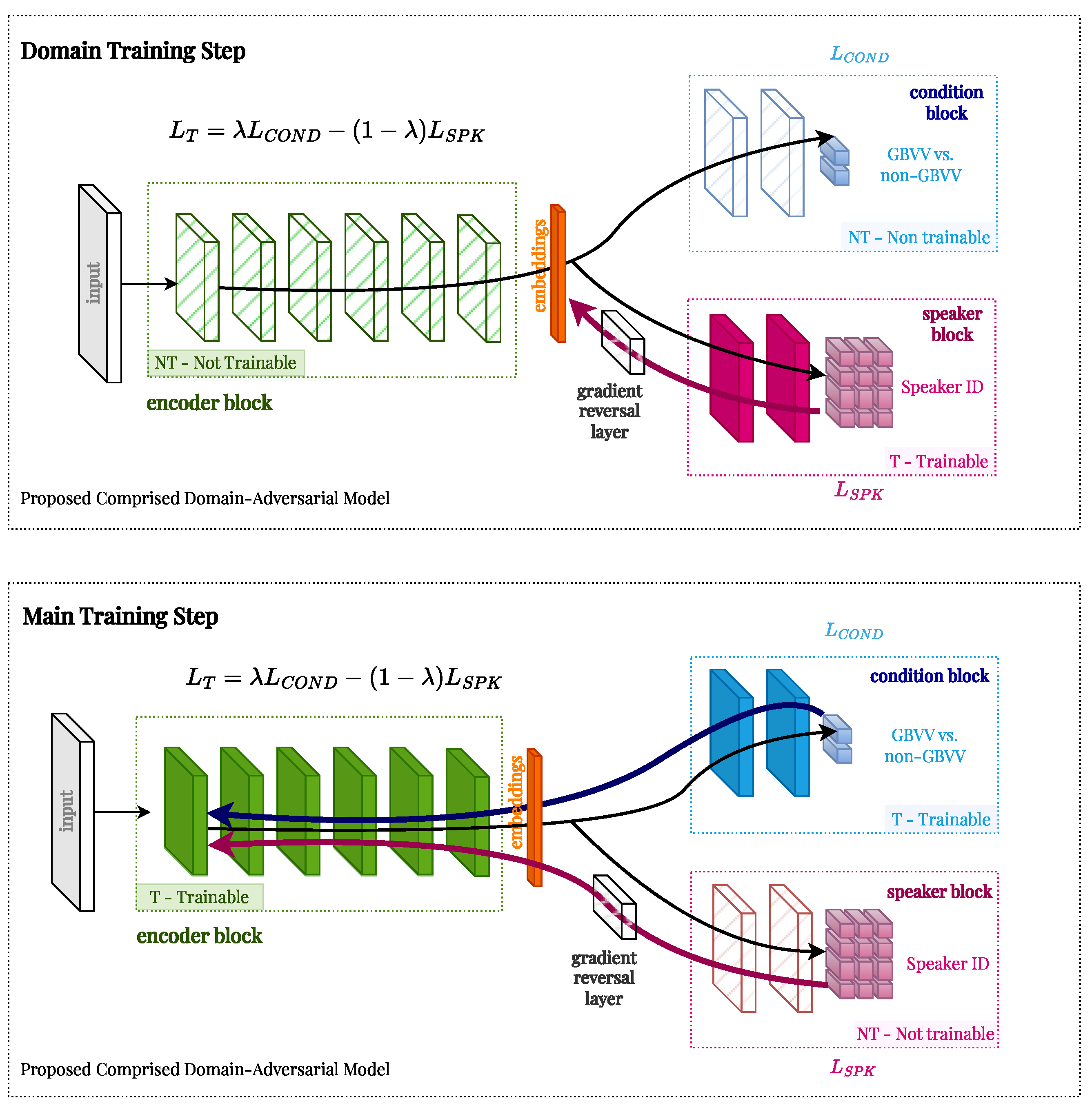

2.3. Speaker-Agnostic GBVVC Detection

In this section, we introduce our Domain-Adversarial Model (DAM), specifically designed to remove speaker-dependent features from the learnt speech embeddings, thereby promoting the emergence of representations that are informative for GBVVC detection but invariant to speaker identity. This is achieved through a gradient reversal layer that enables adversarial training between the main GBVVC classification task and an auxiliary speaker-identification task.

Figure 3 presents the confusion matrices at both user and frame levels for the main task of the DAM. At the user-level, DAM achieves an accuracy of

, precision of

, recall of

, and F1-score of

. At the frame-level, the model yields an accuracy of

, precision of

, recall of

, and F1-score of

. These results reveal a more balanced classification performance compared to the ICM. Specifically, DAM improves upon the ICM by a relative

in frame-level accuracy (from

to

) and 6.37% in user-level accuracy (from

to

). It also outperforms the baseline in F1-score at both levels:

points at frame-level and

points at user-level, indicating improved balance between precision and recall.

Nonetheless, the dataset is balanced perfectly at the subject level and quite evenly at the frame level (

GBVVC vs.

non-GBVVC), which validates the use of accuracy as the primary evaluation metric. The results are reported in Table 1, with mean and standard deviation of the accuracy across 78 LOSO folds, reflecting the real-world variability due to differences in recording quality, vocal traits, and symptom expression. High inter-subject variance is expected in this kind of clinical speech dataset, and the fact that our DAM approach reduces the standard deviation (STD) in the GBVVC classification task while increasing the STD in the speaker classification task highlights its effectiveness in promoting speaker-invariant representations and enhancing the model’s generalization capability.
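As an illustration of how per-fold LOSO accuracies and their mean and standard deviation can be obtained, consider the sketch below. The helper `loso_folds`, the toy speaker IDs, labels, and predictions are hypothetical, and the use of the sample standard deviation is an assumption, since the text does not state which estimator was used.

```python
from statistics import mean, stdev


def loso_folds(speaker_ids):
    """Yield (held_out_speaker, train_indices, test_indices), one fold per speaker."""
    for held_out in sorted(set(speaker_ids)):
        train_idx = [i for i, s in enumerate(speaker_ids) if s != held_out]
        test_idx = [i for i, s in enumerate(speaker_ids) if s == held_out]
        yield held_out, train_idx, test_idx


# Toy data: one entry per frame (hypothetical values).
speaker_ids = ["a", "a", "b", "b", "b", "c"]
labels = [1, 1, 0, 0, 0, 1]
predictions = [1, 0, 0, 0, 1, 1]  # stand-in for the outputs of a trained model

fold_accuracies = []
for held_out, train_idx, test_idx in loso_folds(speaker_ids):
    # A real pipeline would fit the model on train_idx here and predict on test_idx.
    correct = sum(predictions[i] == labels[i] for i in test_idx)
    fold_accuracies.append(correct / len(test_idx))

# Assumption: sample standard deviation across folds.
summary = (mean(fold_accuracies), stdev(fold_accuracies))
print(fold_accuracies, summary)
```

Each fold holds out every frame of exactly one speaker, so the reported spread reflects how much performance varies from one unseen speaker to another.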

Table 1 compares four models across two tasks: GBVVC detection and speaker identification. The first column shows the performance of the ICM, which acts as our non-adversarial baseline. While we previously reported better results [26], those models are not directly comparable with the ones presented here, which are trained in an adversarial fashion. Hence, the ICM serves as a fairer baseline for this analysis. The second column shows the results of the DAM, which, despite the adversarial unlearning of speaker identity, maintains and slightly improves GBVVC classification performance. This suggests that speaker-related information is not essential, and is potentially even detrimental, for detecting the condition.

The third and fourth columns assess the extent to which speaker identity is removed from the learnt representations. The Isolated Speaker Model (ISM), trained directly to identify speakers, achieves

accuracy, confirming that speaker traits are easily learnable from feature embeddings. The fourth column shows the results for the Unlearnt Speaker Model (USM), which uses the same architecture but operates on the frozen embeddings from the DAM (see Figure 4). The USM, however, shows a steep drop in speaker identification accuracy to

, a relative degradation of 26.95%. This demonstrates the success of the adversarial strategy in attenuating speaker-specific information from the learnt feature embeddings.

We emphasize that speaker identification models cannot be evaluated under a true LOSO scheme because, by definition, they cannot classify previously unseen identities. Therefore, we use a subject-dependent evaluation strategy with fewer metrics for ISM and USM, ensuring that no segments from the same audio recording are shared between training and test partitions. This avoids data leakage while preserving speaker identity in the training data. Note that for the multi-class speaker identification task (ISM, USM), user-level metrics (Precision/Recall/F1) are not defined in the same way as for GBVVC (binary) and thus only accuracy is reported for speaker identification.
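One way to build such a subject-dependent partition is sketched below. The function `split_by_recording`, the choice of holding out one whole recording per speaker, and the toy identifiers are assumptions for illustration; the exact partitioning procedure is not detailed in the text.

```python
from collections import defaultdict


def split_by_recording(segments):
    """Split segment indices so that no recording appears in both partitions.

    `segments` is a list of (speaker_id, recording_id) pairs, one per segment.
    Assumed policy: hold out one whole recording per speaker for testing.
    """
    recordings = defaultdict(set)
    for speaker, recording in segments:
        recordings[speaker].add(recording)

    held_out = {}
    for speaker, recs in recordings.items():
        if len(recs) < 2:
            raise ValueError(f"speaker {speaker} needs at least two recordings")
        held_out[speaker] = max(recs)  # arbitrary but deterministic choice

    train_idx, test_idx = [], []
    for i, (speaker, recording) in enumerate(segments):
        (test_idx if recording == held_out[speaker] else train_idx).append(i)
    return train_idx, test_idx


# Toy segments for two hypothetical speakers with two recordings each.
segments = [("s01", "r1"), ("s01", "r1"), ("s01", "r2"),
            ("s02", "r1"), ("s02", "r2"), ("s02", "r2")]
train_idx, test_idx = split_by_recording(segments)
print(train_idx, test_idx)
```

Every speaker remains present in the training partition, which is what a closed-set speaker classifier requires, while segments cut from the same recording never straddle the split.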

This substantial attenuation of speaker information does not harm GBVVC detection. On the contrary, it enhances generalization, yielding a notable improvement in user-level MV accuracy. This supports our hypothesis that speaker traits act as a confounding variable, and that their removal allows the model to better attend to condition-relevant vocal biomarkers. We believe that refining our adversarial strategy to achieve even greater disentanglement of speaker identity could further amplify these gains in future iterations.

In addition, when comparing Precision and Recall in Table 1, we observe a recall value moderately higher than precision (by approximately 5–10%), indicating that the models favor sensitivity over precision. In such cases, the systems effectively identify most relevant instances but tend to generate a moderate proportion of false positives, reflecting a detection strategy oriented toward coverage rather than strict selectivity. Should the requirements of the application change (e.g., becoming time-sensitive or urgent), the models should be adjusted to achieve results better aligned with such needs.
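The relationship between these metrics and the underlying confusion matrix can be made explicit with a short sketch. The function `binary_metrics` and the confusion counts below are hypothetical and chosen only to reproduce the qualitative pattern described (recall above precision); they are not the values reported in Table 1.

```python
def binary_metrics(tp, fp, fn, tn):
    """Compute accuracy, precision, recall, and F1 from binary confusion counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical user-level counts: more false positives than false negatives.
metrics = binary_metrics(tp=30, fp=12, fn=9, tn=27)
print(metrics)
```

Raising the decision threshold would typically trade some recall for precision, which is one way such a system could be adjusted if false positives became more costly.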

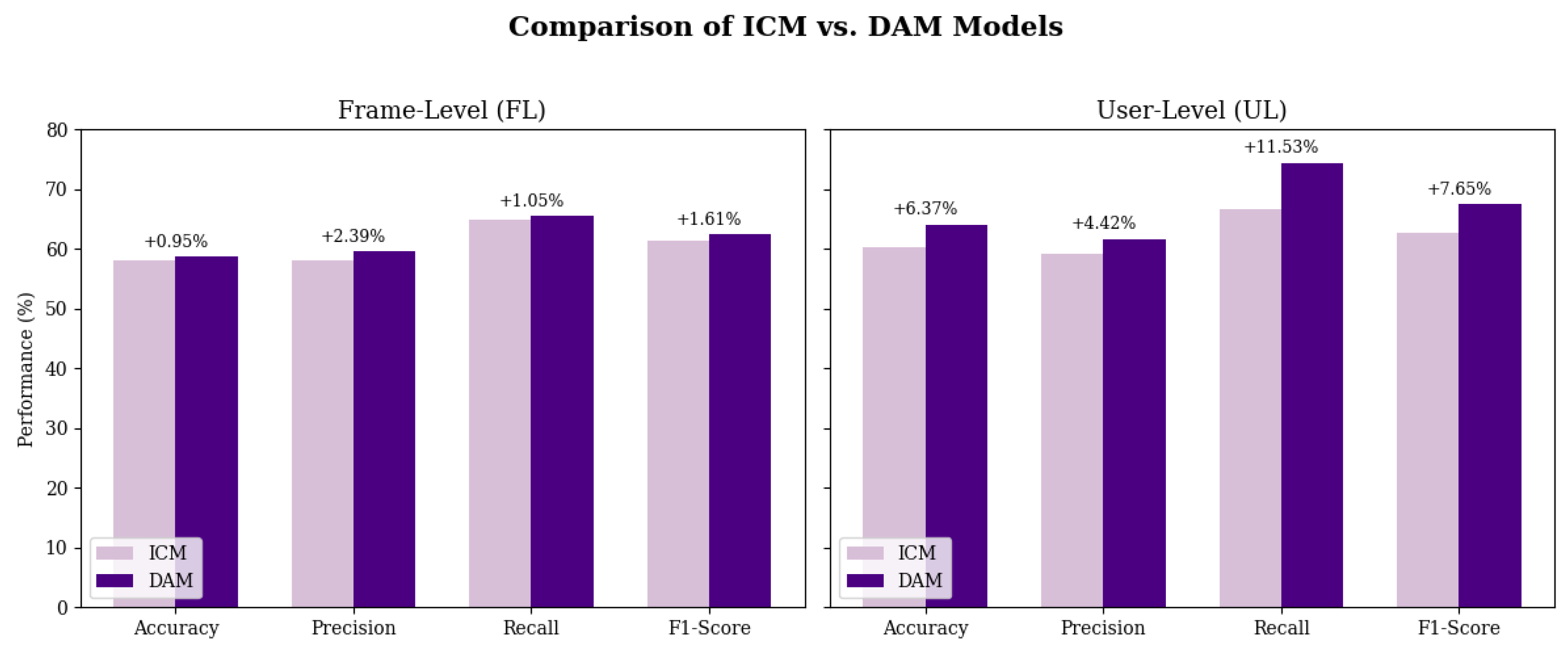

To complement the quantitative comparison in Table 1, Figure 5 provides a visual summary of the performance differences between the non-adversarial (ICM) and domain-adversarial (DAM) models. At the frame level (FL), both models show comparable results, with marginal gains under the adversarial configuration. However, at the user level (UL), the domain-adversarial model yields consistent and more pronounced improvements across all metrics, particularly for Recall (+11.5%) and F1-Score (+7.6%). These results illustrate the advantage of domain-adversarial training in mitigating speaker-related bias and enhancing generalization to unseen speakers.

In summary, adversarial unlearning reduced speaker identification accuracy by 26.95% (ISM → USM) while producing a relative improvement of 6.37% in user-level GBVVC accuracy and an improvement of 7.64% in user-level GBVVC F1-score (ICM → DAM). This co-occurrence strongly supports the claim that suppressing speaker identity facilitates improved focus on condition-relevant vocal biomarkers.
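For reference, a relative change of this kind is computed with respect to the baseline value, as in the sketch below. The function `relative_change` and the input accuracies are hypothetical placeholders, not the accuracies reported in Table 1.

```python
def relative_change(baseline, new):
    """Relative change of `new` with respect to `baseline`, in percent."""
    return 100.0 * (new - baseline) / baseline


# Hypothetical accuracies chosen only to illustrate the arithmetic.
speaker_drop = relative_change(baseline=0.80, new=0.60)    # negative: degradation
condition_gain = relative_change(baseline=0.50, new=0.55)  # positive: improvement
print(round(speaker_drop, 2), round(condition_gain, 2))
```

A relative change differs from an absolute difference in percentage points: in the toy example, a drop from 0.80 to 0.60 is 20 points in absolute terms but 25% relative to the baseline.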

2.4. The Correlation with EGS-R Score

To further understand what cues the model is leveraging to make GBVVC predictions, we explored the relationship between model performance and clinical symptomatology, as measured by the EGS-R score. This scale, ranging from 0 to 20 in our case, quantifies pre-clinical PTSD-related symptoms such as re-experiencing, emotional numbing, and hyperarousal.

In our previous work [26], we found that correctly classified GBVVC subjects tended to have higher EGS-R scores, suggesting that our models implicitly relied on speech markers associated with trauma-related symptomatology. Here, we replicate this analysis for both the ICM and DAM models by comparing the mean EGS-R scores of correctly and incorrectly classified GBVVC subjects.

Table 2 shows a statistically significant gap in symptom severity between correctly and incorrectly classified victims for both models (DAM:

, ICM:

, Student’s t-test). That is, the mean EGS-R score of correctly classified subjects is significantly higher than that of incorrectly classified subjects. This reinforces the notion that our models are not making arbitrary predictions, but instead exploit subtle voice markers correlated with PTSD-like symptoms. Furthermore, the difference in EGS-R scores between correct and incorrect classifications increases under the DAM, although not significantly (

, Student’s t-test), indicating that adversarial training may enhance the model’s focus on trauma-related vocal features once speaker confounds are removed.
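The group comparison underlying this analysis can be sketched with a two-sample t statistic. The pooled-variance (Student) form is assumed here because the text refers to a Student-based test; the function `student_t` and the EGS-R scores below are hypothetical and are not the study data.

```python
from math import sqrt
from statistics import mean, variance


def student_t(sample_a, sample_b):
    """Two-sample Student t statistic with pooled variance, plus degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    pooled_var = ((n_a - 1) * variance(sample_a)
                  + (n_b - 1) * variance(sample_b)) / (n_a + n_b - 2)
    t = (mean(sample_a) - mean(sample_b)) / sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return t, n_a + n_b - 2


# Hypothetical EGS-R scores of correctly vs. incorrectly classified subjects.
correct_scores = [12, 15, 9, 14, 11]
incorrect_scores = [5, 8, 6, 7]
t_stat, dof = student_t(correct_scores, incorrect_scores)
print(round(t_stat, 3), dof)
```

The p-value would then be read from the t distribution with `dof` degrees of freedom; a statistics package is usually used for that step, and Welch's variant may be preferable when the two groups have clearly different variances.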

Taken together, these findings align with our broader hypothesis: the ability to detect GBVVC from speech is closely tied to the manifestation of early trauma symptoms, which can be encoded in paralinguistic cues such as pitch variability, jitter, or prosodic flattening. By eliminating speaker-dependent information, the DAM enables a clearer identification of these subtle cues, contributing to both improved accuracy and better interpretability grounded in clinical theory.

2.5. Feature Importance

To identify which acoustic features contributed most to the model’s predictions, we performed a SHAP (SHapley Additive exPlanations) analysis. The resulting summary plot is shown in Figure 6.

The SHAP analysis was conducted on a random subset of 200 samples from the full dataset. As observed, the mean Zero-Crossing Rate emerges as the most influential feature for the detection of both classes. Additionally, the plot is largely dominated by Mel-Frequency Cepstral Coefficients (MFCCs), highlighting their strong contribution to the classification process. Besides the standard deviation of the spectral roll-off, which also shows a significant impact for both classes, most of the top-ranking features correspond to average values rather than dispersion measures. Overall, this interpretability analysis provides insight into which acoustic descriptors are most discriminative between GBVVs and non-GBVVs, suggesting that the model primarily relies on spectral and cepstral dynamics to identify trauma-related vocal characteristics.
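The quantity that SHAP approximates, the Shapley value of each feature, can be computed exactly for a very small model by enumerating feature orderings. The sketch below does this in plain Python; it does not use the SHAP library and is not the pipeline used in this study. The function `shapley_values`, the toy model, the feature names in the comments, and the all-zero baseline are assumptions for illustration.

```python
from itertools import permutations


def shapley_values(model, sample, baseline):
    """Exact Shapley values by averaging marginal contributions over all orderings."""
    n = len(sample)
    contributions = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous_output = model(current)
        for feature in order:
            # Switch this feature from its baseline value to its actual value.
            current[feature] = sample[feature]
            output = model(current)
            contributions[feature] += output - previous_output
            previous_output = output
    return [c / len(orderings) for c in contributions]


def toy_model(x):
    # Hypothetical score over [zcr_mean, mfcc1_mean, rolloff_std],
    # with an interaction between the first and third features.
    return 2.0 * x[0] + 1.0 * x[1] + 0.5 * x[0] * x[2]


sample = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, sample, baseline)
print(phi)
```

The attributions sum to the difference between the model output on the sample and on the baseline, and the interaction term is shared equally between the two features involved. Exact enumeration grows factorially with the number of features, which is why practical tools rely on sampling or model-specific approximations.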

3. Discussion and Conclusions

The automatic detection of the gender-based violence victim condition (GBVVC) through speech analysis remains a nascent and underexplored research area. Most prior work in this field has focused on detecting GBV from textual data, particularly through the analysis of social media posts using natural language processing (NLP) techniques [34,35,36]. To the best of our knowledge, no previous studies have attempted to identify the condition of GBV victims directly from their speech, apart from our prior contributions [25,26].

In our earlier work, we demonstrated that machine learning (ML) models can distinguish between speech samples from GBV victims and non-victims. However, we identified a potential confounding factor: the models could rely on speaker identity cues, performing speaker identification alongside the detection of speech traits associated with the GBVVC. This risk is widely acknowledged in ML-based clinical diagnostics, especially in speech-related applications, where datasets are typically small and prone to overfitting to speaker-specific features [37,38]. As highlighted in [39], such biases present a significant barrier to the clinical applicability of ML technologies in mental health and diagnostic settings. To address this issue, we pursued a speaker-agnostic approach that mitigates the influence of speaker-specific information in the classification task. This direction aligns with emerging trends in ML such as “Machine Unlearning”, which aims to erase irrelevant or potentially biased information from model representations [40]. In our case, the objective was to remove speaker identity from the learnt embeddings, thereby ensuring that predictions of GBVVC are not driven by user-specific traits and enhancing model generalizability and privacy.

Inspired by domain-adversarial training methods previously used to disentangle speaker traits from emotion in speech emotion recognition (SER) tasks [41,42], we adapted the DANN (Domain-Adversarial Neural Network) framework to our task. By treating each speaker as a domain, we trained an encoder to learn features that are discriminative for GBVVC classification while being invariant to speaker identity. This was accomplished via adversarial training using a gradient reversal layer that discourages the encoder from encoding speaker-related information.

The implementation of this model achieved two main outcomes. First, it reduced the capacity of the model to identify speakers by 26.95%, confirming that speaker-specific information had been effectively attenuated. Second, this reduction coincided with a relative 6.37% improvement in GBVVC classification accuracy, as shown in Table 1. These results support our hypothesis that removing speaker-specific information allows the model to focus on more relevant, potentially clinical, acoustic patterns associated with the GBVVC. In doing so, we alleviate previous concerns about the model’s over-reliance on speaker identity and advance towards robust, speaker-independent speech biomarkers. Beyond improved technical performance, the adversarial unlearning approach we propose has a meaningful ethical benefit: by reducing speaker identity leakage from learned representations, the model reduces the risk of unauthorized re-identification and thereby supports privacy-preserving screening. This aligns with the objectives of Machine Unlearning, namely, removing or attenuating unwanted information from models, and with fairness goals by discouraging the misuse of identity cues. In sensitive deployments (helplines, clinical triage), the reduction of identity information can lower barriers for individuals seeking help and limit the risk of stigmatization or surveillance.

Additionally, the analysis of EGS-R scores (Table 2) offers further insights into the model’s behavior. The data suggest that the model performs better for victims exhibiting higher EGS-R scores, a scale associated with pre-clinical PTSD symptoms. Notably, the gap between correctly and incorrectly classified victims widens when speaker information is removed, suggesting that the model increasingly relies on clinically relevant speech cues, rather than speaker identity, to make predictions. These findings strengthen the hypothesis that pre-clinical trauma manifestations may be encoded in acoustic patterns and can be detected by properly trained models. Furthermore, the SHAP feature importance analysis provides a deeper understanding of the acoustic features that drive the discrimination between GBVVs and non-GBVVs. The results reveal that the model relies primarily on spectral and cepstral descriptors, such as the standard deviation of the spectral roll-off and several MFCCs, but also on the mean zero-crossing rate, to differentiate between conditions. Overall, this interpretability analysis complements the EGS-R score analysis of the model’s behavior by clarifying which specific features contribute most to the decision process, offering a more transparent view of how the model performs GBVVC detection.

A key limitation of the present study is that the participant pool consisted exclusively of Spanish-speaking women residing in Spain, recruited in collaboration with local institutions. This linguistic and regional homogeneity prevents direct assessment of the model’s generalizability across languages, dialects, and cultural contexts. Moreover, in accordance with ethical requirements designed to prevent revictimization, only participants without a formal PTSD diagnosis (EGS-R ≤ 20) were included. Consequently, the full spectrum of clinical variability could not be captured. We hypothesize that the inclusion of individuals with higher symptom severity might enhance the detectability of GBVVC, as more pronounced trauma could yield stronger acoustic markers. Future studies should therefore aim to expand the dataset to multilingual and multicultural populations, encompassing a broader range of symptom severity, age groups, and socioeconomic backgrounds, to rigorously evaluate cross-linguistic robustness and fairness. Another important limitation is the relatively small number of participants in the victims’ group, which constrains both statistical power and the representativeness of the learned acoustic space. In addition, the current approach relies solely on paralinguistic acoustic features, omitting potentially informative physiological or behavioral modalities—such as heart-rate variability, galvanic skin response, linguistic cues, or facial expressions—that could be incorporated in a multimodal framework to enhance classification robustness and interpretability. 
From a modeling perspective, the Domain-Adversarial Model (DAM) architecture was intentionally kept simple to prioritize interpretability and training stability; however, more expressive architectures (such as transformer-based encoders, hierarchical attention mechanisms, or recurrent-convolutional hybrids) could better capture fine-grained temporal dynamics and yield further improvements in both speaker-independence and GBVVC detection accuracy. Finally, the use of fixed-length 1-s frames may discard longer-term dependencies relevant for condition identification; future work could explore variable-length or sequence-to-sequence modeling strategies.

The proposed domain-adversarial scheme also holds potential for broader applications beyond GBVVC. It may prove beneficial in other speech-based diagnostic tasks where speaker identity or other personal traits act as confounding variables, particularly in fairness-sensitive contexts such as disease detection across different demographic groups [43].

As a final note on security, we want to highlight the importance of privacy-preserving and generalizable AI for sensitive detection tasks. Integrating AI-based applications with IoT devices can enable scalable, real-time operation, but could also introduce important security and privacy concerns. By adopting speaker-agnostic approaches, models can provide accurate and fair assessments while safeguarding personal privacy. Ensuring the security of both the AI systems and the IoT infrastructures in which they are deployed is, therefore, critical in the context of trustworthy and ethical mental health applications.

In summary, this work presents the first application of domain-adversarial learning to detect the GBVVC from speech, extending our prior efforts and contributing a novel approach to this under-investigated field. The proposed model achieves both technical and ethical objectives: it enhances classification performance while mitigating identity-related biases. These findings open promising avenues for the development of speech-based tools that are both effective and privacy-preserving, which is particularly valuable in sensitive domains such as GBV detection, mental health support, and non-invasive early diagnosis.