Abstract

This study introduces a Romanian–English bilingual corpus and a fine-tuned cross-lingual embedding framework aimed at improving retrieval performance in Retrieval-Augmented Generation (RAG) systems. The dataset integrates over 130,000 unstructured question–answer pairs derived from SQuAD and 9750 Romanian-generated questions linked to governmental tabular data, subsequently translated bidirectionally to build parallel Romanian–English resources. Multiple state-of-the-art embedding models, including multilingual-e5, Jina-v3, and the Qwen3-Embeddings family, were systematically evaluated on both text and tabular inputs across four language directions (eng-eng, ro-ro, eng-ro, ro-eng). The results show that while multilingual-e5-large achieved the strongest monolingual retrieval performance, Qwen3-Embedding-4B provided the best overall balance across languages and modalities. Fine-tuning this model using Low-Rank Adaptation (LoRA) and InfoNCE loss improved its Mean Reciprocal Rank (MRR) from 0.4496 to 0.4872 (+8.36%), with the largest gains observed in cross-lingual retrieval tasks. The research highlights persistent challenges in structured (tabular) data retrieval due to dataset imbalance and outlines future directions including dataset expansion, translation refinement, and instruction-based fine-tuning. Overall, this work contributes new bilingual analyses and methodological insights for advancing embedding-based retrieval in low-resource and multimodal contexts.

1. Introduction

Large Language Models (LLMs) represent one of the most transformative advances in recent natural language processing (NLP). Their ability to generate coherent, contextually relevant text has enabled applications across domains ranging from digital assistants to scientific discovery. However, despite their impressive general knowledge, LLMs face inherent limitations: their training data is fixed at pretraining time, and they cannot directly access up-to-date or domain-specific private data. To overcome this, Retrieval-Augmented Generation (RAG) systems have emerged as a powerful paradigm, combining the generative abilities of LLMs with external retrieval modules that inject relevant passages from a private knowledge base into the model’s context.

However, the effectiveness of an RAG system strongly depends on the quality of the embedding models used in the retrieval component. Embeddings determine which documents are selected for augmentation, and errors at this stage propagate into the generation process. A strong retrieval backbone ensures that the LLM reasons not only with fluent language but also with accurate, contextually aligned knowledge.

Private datasets typically used in RAG pipelines pose several challenges. First, they are often unstructured and consist of diverse documents, such as reports, manuals, or articles, that lack consistent formatting. Second, in many real-world settings these documents are multilingual, for example, in Romanian and English, reflecting the natural linguistic diversity of the users and sources. Third, private datasets frequently contain tabular data (e.g., CSVs, spreadsheets), where information is structured differently from plain text. These factors complicate the retrieval process: while the end user expects a fluent, accurate answer in their own language, the system must internally search across languages and modalities, integrating evidence regardless of whether it originates from Romanian text, English text, or structured tables.

These realities create a pressing need for cross-lingual embedding models capable of handling both textual and tabular inputs. Such models ensure that retrieval is not biased toward a single language and that relevant content from tables is not overlooked. Without this capability, RAG systems risk providing incomplete or misleading responses, particularly in bilingual or data-rich environments.

The problem is further amplified for Romanian, a low-resource language in the NLP landscape. Compared to English, Romanian has fewer high-quality benchmarks, parallel corpora, and fine-tuned models available. Existing multilingual embeddings often underperform in Romanian or exhibit inconsistencies when bridging Romanian and English. Moreover, most benchmarks focus on textual data only, leaving the challenge of tabular retrieval in cross-lingual contexts underexplored. Similar limitations have been extensively documented for other low-resource languages such as Amharic and Khmer, where even multilingual retrievers like mDPR struggle to achieve stable cross-lingual alignment despite translation-based post-training and fine-tuning efforts [1]. These findings further emphasize the broader challenge of extending retrieval models to languages with limited resources and domain diversity, a context within which Romanian also fits.

This research addresses these gaps by constructing a complete pipeline for training a bilingual Romanian–English embedding model that integrates both narrative (unstructured) and tabular (structured) data sources, combining automatic data collection, machine translation, synthetic data generation, and parameter-efficient fine-tuning. Building on this resource, we evaluate several state-of-the-art multilingual embedding models in monolingual and cross-lingual retrieval tasks and fine-tune the most promising model to improve its performance on Romanian and table-based data. The central hypothesis is that such a pipeline can effectively enhance cross-lingual and cross-modal retrieval by enabling the model to generalize across languages and data types, even when trained on automatically generated and translated data. By explicitly targeting both linguistic diversity and structural heterogeneity, this work aims to strengthen the foundations of embedding-based retrieval in RAG systems and provide practical resources for advancing NLP in low-resource settings.

2. Description of the Romanian–English Bilingual Dataset

Although recent advances in multilingual machine translation have positioned Romanian as a relatively high-resource language within that domain [2], the availability of large-scale datasets for information retrieval and question answering remains limited. Consequently, Romanian continues to be considered a low-resource language in these specific research areas [3].

The bilingual dataset was built from two complementary categories of sources: unstructured textual data and structured tabular data. For the unstructured component, we used the training split of the Stanford Question Answering Dataset (SQuAD) [4], a large-scale resource of 130,319 human-annotated question–answer pairs on Wikipedia articles. This corpus was selected due to its richness, domain diversity, and proven utility in question answering research. In addition, we collected structured data by downloading 39 tabular files from the Romanian governmental open data portal (https://data.gov.ro, accessed on 11 August 2025). These tables span diverse domains including Public Administration, Agriculture, Economy, Governance, Health, Nomenclatures, International Relations, and Population statistics. The files were provided in heterogeneous formats (.xlsx, .xls, .csv) and were chosen with the explicit goal of maximizing thematic coverage and representation of different structural configurations.

The tabular files underwent a preprocessing pipeline to increase variability and applicability for question answering tasks. Each table was automatically split into subtables with randomized row and column counts (minimum of 3 rows, maximum limited by the original structure). Columns were not merged across files, but their order was permuted randomly to enhance diversity. This procedure yielded 975 subtables in total. Inspired by the approach proposed in [5], each subtable was represented as a CSV string by concatenating its headers and cell values into a unified textual format. This ensured compatibility with language model processing, allowing the tables to be treated as natural language inputs rather than structured data.
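The subtable sampling and CSV serialization described above can be sketched as follows. This is a minimal illustration rather than the pipeline actually used: the function names, the minimum column count, the choice of a contiguous row window, and the toy table are all assumptions introduced here.

```python
import csv
import io
import random


def sample_subtable(header, rows, rng, min_rows=3, min_cols=2):
    """Draw one random subtable with a random size and shuffled column order.

    min_cols and the contiguous row window are assumptions of this sketch;
    the source only fixes the minimum of 3 rows.
    """
    n_rows = rng.randint(min(min_rows, len(rows)), len(rows))
    n_cols = rng.randint(min(min_cols, len(header)), len(header))
    # rng.sample returns the chosen column indices in random order,
    # which also permutes the columns.
    col_idx = rng.sample(range(len(header)), n_cols)
    start = rng.randint(0, len(rows) - n_rows)
    sub_header = [header[c] for c in col_idx]
    sub_rows = [[row[c] for c in col_idx] for row in rows[start:start + n_rows]]
    return sub_header, sub_rows


def to_csv_string(header, rows):
    """Serialize headers and cell values into a single CSV-formatted string."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(rows)
    return buf.getvalue()


# Toy table with made-up values, for illustration only.
header = ["regiune", "an", "indicator", "valoare"]
rows = [
    ["regiune_1", 2020, "a", 10],
    ["regiune_2", 2020, "a", 12],
    ["regiune_3", 2021, "b", 7],
    ["regiune_4", 2021, "b", 9],
    ["regiune_5", 2022, "c", 4],
]

rng = random.Random(0)
sub_header, sub_rows = sample_subtable(header, rows, rng)
csv_text = to_csv_string(sub_header, sub_rows)
print(csv_text)
```

Repeating `sample_subtable` several times per source file with different random states would yield multiple distinct subtables from the same table; the resulting string is what an embedding model would receive as its "passage".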

Synthetic questions were generated for the tabular component to create realistic query-table pairs. Using a locally deployed open-source model (Llama-3.3-70B), each subtable was processed through a prompt specifically designed to elicit exactly 10 natural, conversational questions in Romanian. The prompt discouraged direct reference to table headers or indices, focusing instead on realistic analytical queries. The process was run in a 2-shot prompting setup, without fine-tuning or parameter variation, and resulted in 9750 Romanian questions aligned to 975 subtables. This approach aligns with recent findings that synthetic data produced by large language models can significantly enhance embedding and retrieval tasks by introducing greater linguistic diversity and eliminating the dependence on large manually labeled datasets [6], and with the concept of query generation as an effective strategy for augmenting passage representations to improve cross-lingual dense retrieval performance [7].

To construct a parallel bilingual corpus, all questions and contexts/tables were automatically translated between Romanian and English. Google Translate was employed due to its consistent performance on Romanian–English pairs. Prior studies indicate that while Google Translate occasionally struggles with idiomatic or complex constructions in Romanian [8], it remains a robust baseline compared to other systems, particularly in cross-lingual settings. Furthermore, comparative evaluations show that Google Translate generally outperforms ChatGPT (model GPT-3.5) in Romanian–English translation quality, especially for formal texts [9]. Based on these findings, we relied on Google Translate for bulk translation, acknowledging its limitations but leveraging its strengths in coverage and reliability. Recent findings further reinforce this decision, as the results reported in [10] demonstrate that even when ChatGPT (model GPT-3.5) is guided through advanced, task-specific prompting strategies designed to maximize translation performance, its outputs still fall short of Google Translate’s quality and consistency. This evidence strengthens the assumption that Google Translate remains the more reliable choice for large-scale, automatic Romanian–English translation, particularly in research contexts requiring uniformity across diverse data types.

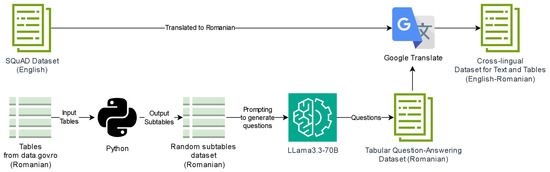

Figure 1 represents the flowchart of the first stage of the proposed pipeline, illustrating the process of dataset construction. In this phase, the Romanian–English bilingual dataset was created by combining unstructured textual data from SQuAD with structured tabular data from the Romanian open data portal. Synthetic questions were generated using Llama 3.3-70B, and all components were automatically translated with Google Translate to form a cross-lingual dataset for both text and tables.

Figure 1.

An overview of the automatic bilingual dataset construction pipeline integrating text and tabular data. The process combines the SQuAD dataset, governmental tables, question generation via Llama 3.3-70B, and machine translation to produce the final English–Romanian corpus.

Given the large-scale nature of the dataset, manual validation was not feasible. Instead, validation consisted of automatic checks on schema integrity, ensuring that no entries contained missing fields and that all translated records preserved alignment across languages. Potential translation errors, such as literal renderings of idioms or morphological inconsistencies documented in previous studies [8], were not systematically corrected, as the dataset aims to reflect realistic cross-lingual noise scenarios relevant to retrieval-augmented generation (RAG) research. We acknowledge that the present study does not include an empirical error analysis of translation-induced noise nor a targeted mitigation step. This choice is aligned with our central hypothesis, which explicitly evaluates whether a fully automatic, low-cost pipeline—combining automatic data collection, machine translation, and parameter-efficient fine-tuning—can deliver meaningful cross-/monolingual retrieval gains despite translation noise.
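An automatic schema check of the kind mentioned above might look like the following sketch. The field names and the record layout are hypothetical, since the paper does not specify the storage schema; the idea is only that every record must carry non-empty Romanian and English sides for both the question and the context.

```python
# Hypothetical schema: each record holds both language versions side by side.
REQUIRED_FIELDS = ("id", "question_ro", "question_en", "context_ro", "context_en")


def validate_records(records):
    """Return a list of (record_id, problem) tuples; an empty list means all checks passed."""
    problems = []
    seen_ids = set()
    for rec in records:
        rec_id = rec.get("id")
        for field in REQUIRED_FIELDS:
            value = rec.get(field)
            # A field counts as missing if it is absent, None, or a blank string.
            if value is None or (isinstance(value, str) and not value.strip()):
                problems.append((rec_id, f"missing field: {field}"))
        # Duplicate ids would break the alignment between language versions.
        if rec_id in seen_ids:
            problems.append((rec_id, "duplicate id"))
        seen_ids.add(rec_id)
    return problems


records = [
    {"id": 1, "question_ro": "Câte rânduri sunt?", "question_en": "How many rows are there?",
     "context_ro": "a,b\n1,2", "context_en": "a,b\n1,2"},
    {"id": 2, "question_ro": "Întrebare", "question_en": "",
     "context_ro": "text", "context_en": "text"},
]
issues = validate_records(records)
print(issues)
```

Checks like this catch structural problems only; they say nothing about translation quality, which matches the limitation acknowledged above.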

From the structured component, 9750 questions were paired with 975 subtables, producing 39,000 aligned bilingual entries after translation. The unstructured component (SQuAD-derived) contributed 130,319 question-context pairs [4], also distributed across Romanian, English, and mixed-language alignments.

The overall dataset is therefore balanced in terms of monolingual and cross-lingual language combinations. This structure enables evaluation of retrieval and embedding models across Romanian, English, and bilingual understanding tasks. While both structured and unstructured sources were incorporated, the resulting corpus is still predominantly composed of unstructured textual data. The structured component, though diverse in domain and format, represents a considerably smaller portion of the dataset, which could limit the model’s exposure to complex tabular patterns.

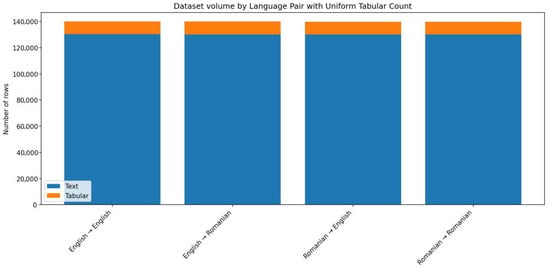

The distribution of the dataset is illustrated in Figure 2, which shows the relative contribution of text and tabular components across the four language pairs (Eng-Eng, Eng-Ro, Ro-Eng, and Ro-Ro). As can be seen, the unstructured textual data represents the majority of the corpus, with roughly 130,000 entries per language pair. The structured tabular component contributes an additional 9750 rows per language pair. Consequently, the dataset provides a balanced evaluation framework for models that must handle both free-text and structured inputs, while also testing their robustness across monolingual and cross-lingual scenarios.

Figure 2.

Distribution of dataset entries by language pair and data type. Bars show aligned question–context pairs for each bilingual direction, with text (blue) and tabular data (orange).

To enable rigorous model evaluation, the dataset was partitioned into distinct subsets. Specifically, 10% of the data was reserved for constructing a held-out test benchmark, which serves as the basis for assessing the performance of different models in a consistent and comparable manner. The remaining 90% of the corpus was further divided into training and validation subsets, with 95% allocated for training and 5% for validation, so that roughly 85.5% of the full corpus is used for training and 4.5% for validation. This two-stage splitting strategy supports both reliable model training and consistent performance assessment, while maintaining sufficient data diversity in each subset. The split was performed randomly, without grouping translated or parallel variants of the same base example. This procedure could allow semantically equivalent text from different language pairs to appear in different subsets. However, given that the dataset includes both direct translations and independently constructed bilingual pairs, and considering that multilingual embedding models process distinct tokenizations across languages, the likelihood of significant overfitting to shared semantics is limited.
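The two-stage random split can be illustrated with a short sketch. The function name, the seed, and the rounding behavior are assumptions made for illustration; only the 10% test fraction and the 95/5 train/validation ratio come from the description above.

```python
import random


def split_indices(n_items, test_frac=0.10, val_frac=0.05, seed=0):
    """Randomly split item indices into train, validation, and test subsets."""
    idx = list(range(n_items))
    random.Random(seed).shuffle(idx)
    # Stage 1: hold out the test benchmark.
    n_test = round(n_items * test_frac)
    test, rest = idx[:n_test], idx[n_test:]
    # Stage 2: split the remainder into validation and training.
    n_val = round(len(rest) * val_frac)
    val, train = rest[:n_val], rest[n_val:]
    return train, val, test


train, val, test = split_indices(1000)
print(len(train), len(val), len(test))  # 855 45 100
```

Because the shuffle operates on individual rows, the four language variants of one base example can land in different subsets, as noted above. A grouped alternative would shuffle base-example identifiers instead of rows, at the cost of slightly less uniform subset sizes.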

3. Methodology

The research process was designed to systematically evaluate the hypothesis that a complete pipeline combining automatic data collection, translation, synthetic data generation, and parameter-efficient fine-tuning can improve the performance of embedding models in bilingual and multi-modal retrieval tasks. To test this hypothesis, a set of state-of-the-art embedding models was assessed for passage retrieval in both monolingual and cross-lingual scenarios. The methodology consisted of four main phases: model selection, benchmark-based evaluation, model ranking and selection for fine-tuning, and error analysis.

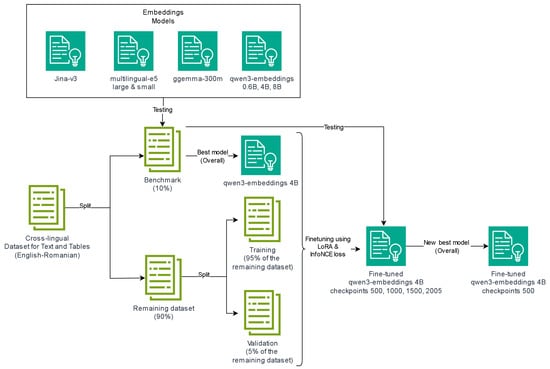

Figure 3 represents the flowchart of the second stage of the proposed pipeline, illustrating the model evaluation and fine-tuning process. In this phase, several embedding models were benchmarked on the bilingual dataset, followed by the selection and fine-tuning of the best-performing model (qwen3-embeddings-4B) using LoRA and the InfoNCE loss. All steps shown in this figure are described in detail in the following paragraphs.

Figure 3.

An overview of the end-to-end methodology pipeline for embedding model evaluation and fine-tuning. The process begins with the cross-lingual dataset, proceeds through benchmarking of multiple candidate models, selection of the best-performing one (qwen3-embeddings-4B), and continues with parameter-efficient fine-tuning using LoRA and InfoNCE loss across several checkpoints.

The first step involved identifying candidate models suitable for embedding-based retrieval. To ensure comparability and relevance, the Hugging Face Hub was queried using the filter “NLP feature extraction”, which returned a curated list of embedding models optimized for semantic similarity and retrieval tasks. After manually reviewing the available models, the following were selected for evaluation: Jina-v3, multilingual-e5-large, multilingual-e5-small, qwen3-embeddings-8b, qwen3-embeddings-4b, qwen3-embeddings-0.6b, ggemma_300m. These models cover a range of architectures, parameter scales, and multilingual capabilities, thus providing a diverse basis for comparison.

The evaluation relied on the benchmark dataset described in Section 2, which was specifically constructed to include both unstructured text and structured tabular data across four language pairings: English–English (eng-eng), Romanian–Romanian (ro-ro), English–Romanian (eng-ro), and Romanian–English (ro-eng).

For each candidate model, passage retrieval was tested by encoding the questions and candidate passages, followed by ranking based on cosine similarity. Although retrieval performance results were reported separately for textual data and tabular data, the evaluation itself was conducted using a mixed dataset containing both types simultaneously. This setup was chosen to better simulate a real-world application scenario, where the nature of the input data is unknown in advance and may include a combination of unstructured text and structured tables. Within this framework, the models were evaluated across all four language pairings, covering both monolingual and cross-lingual retrieval settings. Performance was measured using Mean Reciprocal Rank (MRR), where for each question the reciprocal of the rank assigned to the correct passage was computed, and the final score was obtained by averaging these values across all queries. The choice of MRR as the primary evaluation metric is further supported by previous research. As highlighted in [11], MRR serves as a reliable and widely adopted metric for assessing passage retrieval quality, forming the basis of the MS MARCO benchmark. Moreover, ref. [12] extends this validation to multilingual contexts, demonstrating that MRR remains an effective and consistent measure for evaluating retrieval models across multiple languages. These findings collectively justify its use in our evaluation pipeline as the main indicator of model performance.
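The ranking and scoring procedure described above can be sketched in a few lines. This is a simplified, standard-library illustration with toy two-dimensional vectors standing in for model embeddings; the function names and the tie-handling rule are assumptions, not details taken from the evaluation code.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def mean_reciprocal_rank(query_vecs, passage_vecs, gold):
    """Average of 1/rank of the correct passage over all queries.

    gold[i] is the index of the correct passage for query i.
    """
    total = 0.0
    for q, gold_idx in zip(query_vecs, gold):
        scores = [cosine(q, p) for p in passage_vecs]
        # Rank = 1 + number of passages scoring strictly higher than the gold one.
        rank = 1 + sum(s > scores[gold_idx] for s in scores)
        total += 1.0 / rank
    return total / len(query_vecs)


# Toy embeddings: the passage pool mixes all candidates, as in the mixed-data setup.
passages = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
queries = [[1.0, 0.1], [0.9, 1.0]]
gold = [0, 1]

mrr = mean_reciprocal_rank(queries, passages, gold)
print(mrr)  # 0.75: the first query ranks its passage 1st, the second ranks it 2nd
```

Per-setting scores (for example, eng-ro on tabular data) follow by averaging the reciprocal ranks only over the queries that belong to that setting, while still ranking against the full mixed passage pool.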

Once all models were evaluated, results were consolidated and ranked according to their overall MRR scores, allowing a fair comparison of retrieval performance across language settings and data types. As shown in Table 1, the multilingual-e5-large model achieved the strongest results on monolingual tasks (the eng-eng and ro-ro cases), while its cross-lingual scores were low, likely because E5 was trained on predominantly monolingual data sources such as Reddit, StackExchange, Wikipedia, and Common Crawl [13]. Jina-v3 obtained the highest scores on cross-lingual retrieval (eng-ro and ro-eng). Across all models, performance on tabular data was consistently low, highlighting the greater difficulty of this data type compared to unstructured text. Despite this, the qwen3-embeddings family demonstrated robust and balanced results across settings, with qwen3-embeddings-4b ranking highest overall and providing the best tradeoff between monolingual and cross-lingual performance. For this reason, qwen3-embeddings-4b was selected as the primary candidate for fine-tuning using the dataset introduced in Section 2. The qwen3-embedding series was specifically designed to leverage the multilingual and multitask strengths of large foundation models, combining large-scale weakly supervised pre-training on synthetic data with fine-tuning and model merging to achieve state-of-the-art results across multiple retrieval benchmarks [14]. Its architecture integrates instruction-aware training and flexible embedding dimensions, allowing consistent performance across diverse tasks, including multilingual and cross-lingual retrieval, making it particularly well suited for applications like the one explored in this study [14].

Table 1.

Embedding model results on proprietary benchmark.

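Following the selection of the base model, fine-tuning was conducted using the Low-Rank Adaptation (LoRA) framework, a parameter-efficient technique designed to adapt large transformer models without updating all weights. In this configuration, the pretrained weights of the model were kept frozen, while trainable low-rank matrices were inserted into selected linear layers. The fine-tuning procedure employed the PEFT implementation of LoRA [15], which replaces the full-rank update with a low-rank decomposition. In the standard LoRA formulation, this reads:

$$W = W_0 + \Delta W = W_0 + \frac{\alpha}{r} B A, \qquad B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)$$

where $W_0 \in \mathbb{R}^{d \times k}$ is the frozen pretrained weight matrix, $A$ and $B$ are the trainable low-rank factors, $r$ is the adapter rank, and $\alpha$ is the scaling factor.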

This re-parameterization drastically reduces the number of trainable parameters from millions to only a fraction of a percent of the full model, while maintaining competitive downstream performance. The frozen-weight design ensures that the pre-trained general knowledge of the base model remains intact, allowing the adapter layers to specialize the model for the retrieval and similarity tasks specific to the bilingual dataset.
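The parameter savings of a low-rank update can be made concrete with a small calculation. The sketch below counts trainable parameters for a single adapted weight matrix; the 4096 × 4096 shape is a hypothetical example and not the actual layer shape of the model used here, while the rank of 128 matches the configuration reported for this study.

```python
def lora_param_counts(d_out, d_in, rank):
    """Compare trainable parameters of a full update with a rank-`rank` LoRA update.

    A full update of a d_out x d_in matrix trains d_out * d_in values, whereas
    LoRA trains two factors of shapes d_out x rank and rank x d_in.
    """
    full = d_out * d_in
    lora = rank * (d_out + d_in)
    return full, lora, lora / full


# Hypothetical square projection matrix; rank 128 as in the reported setup.
full, lora, ratio = lora_param_counts(4096, 4096, 128)
print(full, lora, f"{ratio:.2%}")  # 16777216 1048576 6.25%
```

The ratio shown applies to one adapted matrix only. The share of trainable parameters relative to the whole model is smaller, because layers without adapters contribute no trainable weights, and it shrinks further with lower ranks.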

The decision to employ LoRA for fine-tuning was motivated by its proven effectiveness across a range of multilingual and low-resource scenarios. As demonstrated in [16], LoRA achieves performance comparable to full fine-tuning when abundant data is available, while significantly outperforming it in low-data regimes and cross-lingual transfer tasks. This adaptability makes it particularly suitable for our bilingual retrieval setup. Furthermore, ref. [17] identifies LoRA as a leading approach in the field of parameter-efficient fine-tuning, emphasizing its optimal balance between computational efficiency and downstream performance. These findings collectively support our choice of LoRA as the fine-tuning strategy for enhancing the cross-lingual embedding model.

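The optimization objective used during fine-tuning was the InfoNCE loss. This loss encourages embeddings of semantically aligned text pairs to be close in the vector space, while pushing apart unrelated ones within the same batch. Given a batch of $N$ query-passage pairs $(q_i, p_i)$, the cosine similarity $\mathrm{sim}(q_i, p_j)$ between all query-passage combinations is computed and scaled by a temperature $\tau$; the target labels correspond to the diagonal (positive) pairs. In its standard in-batch form, the loss is then defined as [18]:

$$\mathcal{L}_{\mathrm{InfoNCE}} = -\frac{1}{N} \sum_{i=1}^{N} \log \frac{\exp\left(\mathrm{sim}(q_i, p_i)/\tau\right)}{\sum_{j=1}^{N} \exp\left(\mathrm{sim}(q_i, p_j)/\tau\right)}$$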

This formulation, combined with in-batch negatives and optional hard-negative mining, improves the model’s discrimination power and promotes smoother embedding distributions. The architecture was adapted with last-token pooling, which correctly extracts the semantic representation for each sequence, and a lightweight projection head was added during training to enhance stability and generalization.
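The in-batch contrastive objective can be illustrated with a dependency-free sketch that operates directly on a precomputed similarity matrix. The temperature value is a placeholder chosen for illustration, and the function is a simplified stand-in for the training code, which would normally compute the same quantity with a tensor library.

```python
import math


def info_nce(sim, temperature=0.05):
    """In-batch InfoNCE loss.

    sim[i][j] is the cosine similarity between query i and passage j;
    the positive pair for query i sits on the diagonal (j == i).
    The default temperature is an illustrative assumption.
    """
    n = len(sim)
    loss = 0.0
    for i in range(n):
        logits = [s / temperature for s in sim[i]]
        # Numerically stable log-sum-exp over all passages in the batch.
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(x - m) for x in logits))
        # Cross-entropy with the diagonal entry as the target class.
        loss += log_denom - logits[i]
    return loss / n


aligned = [[0.9, 0.1], [0.2, 0.8]]      # positives clearly score highest
misaligned = [[0.1, 0.9], [0.8, 0.2]]   # negatives score highest
print(info_nce(aligned), info_nce(misaligned))
```

A batch whose diagonal similarities dominate yields a loss close to zero, whereas a batch in which negatives outrank the positives is penalized heavily. Lower temperatures sharpen this contrast.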

The selection of InfoNCE as the loss function was guided by its proven success in prior studies addressing text matching and retrieval tasks. As demonstrated in [19], InfoNCE effectively enhances dense retrieval models by maximizing similarity between semantically related pairs while maintaining strong separation among negatives. Similarly, ref. [20] reports consistent performance improvements when applying InfoNCE to transformer-based models for complex matching problems, highlighting its robustness across domains. These findings collectively support our decision to adopt InfoNCE as the primary optimization objective for fine-tuning, ensuring both semantic coherence and discriminative representation learning.

The use of LoRA adapters offered substantial memory savings and prevented catastrophic forgetting, an effect also emphasized in [21]. Their findings show that LoRA updates maintain the base model’s general-domain capabilities far better than full fine-tuning, due to the low-rank constraint acting as a regularizer that limits the deviation from the pretrained weight manifold. Compared to full fine-tuning, this approach requires fewer epochs to converge and consumes significantly less GPU memory, enabling high-rank models to be fine-tuned efficiently on a single GPU. Overall, the combination of the InfoNCE objective with LoRA adaptation enabled targeted alignment of bilingual embeddings while preserving the multilingual robustness and generalization capacity of the base Qwen3 model.

The fine-tuning process was conducted using the AdamW optimizer and the InfoNCE loss objective. Training was performed with an effective batch size of 128, obtained from a batch size of 64 and gradient accumulation of two steps, for a total of two epochs. A warmup ratio of 0.2 was applied, followed by a constant learning rate of . The LoRA adapters were configured with a rank of 128 and a scaling factor , and were applied to the attention projection components of the transformer layers. Gradient checkpointing and bfloat16 precision were used to optimize memory efficiency, enabling fine-tuning on a single NVIDIA GPU with 48 GB of VRAM.
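The batch-size and learning-rate schedule arithmetic can be sketched as below. The linear shape of the warmup, the total step count, and the peak learning rate used in the example are assumptions for illustration only; the source specifies just the warmup ratio of 0.2, the constant phase afterwards, and the batch settings.

```python
def effective_batch_size(per_step_batch, accumulation_steps):
    """Number of examples contributing to each optimizer update."""
    return per_step_batch * accumulation_steps


def lr_at(step, total_steps, peak_lr, warmup_ratio=0.2):
    """Learning rate at a given optimizer step: warmup, then constant.

    The warmup is assumed to be linear; peak_lr is a caller-supplied value.
    """
    warmup_steps = round(total_steps * warmup_ratio)
    if warmup_steps > 0 and step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    return peak_lr


print(effective_batch_size(64, 2))  # 128

# Hypothetical run of 1000 steps with a placeholder peak learning rate of 1.0,
# so the printed values can be read as fractions of the peak.
for step in (0, 99, 199, 500):
    print(step, lr_at(step, total_steps=1000, peak_lr=1.0))
```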

The following checkpoint analyses directly test the effectiveness of the proposed pipeline by evaluating whether fine-tuning on automatically collected, translated, and synthetically generated data improves model performance across language pairs and data modalities.

Table 2 summarizes the Mean Reciprocal Rank (MRR) scores obtained for each model checkpoint, including the original base model and its fine-tuned variants trained for 500, 1000, 1500, and 2005 steps.

Table 2.

Fine-tuning checkpoint results.

Although the fine-tuning process led to a slight decrease in monolingual performance, specifically in the eng-eng and ro-ro configurations, the overall scores improved consistently compared to the base model. The fine-tuned models demonstrated a significant boost in cross-lingual retrieval (Eng-Ro and Ro-Eng), which represents one of the most challenging aspects of multilingual embedding alignment. The experiment was stopped at the 2005-step checkpoint, as a decrease in the overall MRR was observed in consecutive checkpoints. In particular, the average overall scores for the fine-tuned checkpoints surpassed the base qwen3-embeddings-4B model (0.4496 overall), with the best-performing checkpoint (qwen3-4b_ft_500ckp) achieving an overall MRR of 0.4872, a relative improvement of approximately 8.36%. While this improvement may appear modest, the most substantial gains were observed in cross-lingual retrieval tasks. These scenarios, which require effective semantic alignment between Romanian and English representations, are inherently more complex than monolingual retrieval. The consistent boost in Eng-Ro and Ro-Eng configurations therefore highlights the fine-tuning procedure’s main contribution.
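The reported percentage is a relative gain over the base model's overall MRR, which a short calculation makes explicit. The helper name is introduced here for illustration; the two MRR values are the ones reported above.

```python
def relative_gain(baseline, improved):
    """Relative improvement of `improved` over `baseline`, in percent."""
    return (improved - baseline) / baseline * 100.0


gain = relative_gain(0.4496, 0.4872)
print(f"absolute: {0.4872 - 0.4496:.4f}, relative: {gain:.2f}%")
# absolute: 0.0376, relative: 8.36%
```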

A closer inspection of the results indicates that fine-tuning primarily enhanced cross-lingual matching accuracy. Both Eng-Ro and Ro-Eng tasks benefited from the contrastive optimization objective (InfoNCE) used during training, which explicitly encourages alignment between semantically equivalent text pairs across languages. Moreover, some table data scores, though still lower than those on unstructured text, also showed measurable improvements. This suggests that the model learned to better generalize beyond free-text representations, despite the structured format of tabular inputs posing a more complex challenge for embedding-based retrieval.

When examining the progression of checkpoints, it becomes evident that performance gains on cross-lingual data increased steadily during training, while monolingual results gradually declined. This divergence implies that as the model specialized more in cross-lingual alignment, it slightly compromised its same-language representational sharpness. Interestingly, the checkpoint obtained after 500 steps emerged as the best-balanced configuration, achieving the highest overall MRR. Subsequent checkpoints (1000, 1500, and 2005 steps) continued to improve cross-lingual scores marginally but at the cost of further decreases in Eng-Eng and Ro-Ro performance.

The slight decline in monolingual retrieval performance observed after fine-tuning can be attributed to the competitive interaction between cross-lingual and monolingual objectives. During contrastive optimization, the model is encouraged to minimize the distance between semantically equivalent pairs across languages. While this improves alignment between Eng–Ro embeddings, it can also compress language-specific subspaces, leading to reduced separability of fine-grained monolingual distinctions. Furthermore, because the InfoNCE loss is computed over the full similarity matrix of all query–passage combinations within a batch, it is possible that the model simultaneously processes both cross-lingual (Eng–Ro and Ro–Eng) and monolingual examples. In this setup, the objective explicitly pulls together the positive cross-lingual pairs (main diagonal) while implicitly pushing apart all non-matching pairs, including monolingual ones, thereby distorting their relative geometry in the shared embedding space. Even though these monolingual pairs are not treated as explicit negatives, their embeddings can still be indirectly displaced as a side effect of the global optimization process, which may further contribute to the observed degradation in single-language retrieval. This phenomenon reflects a common trade-off in multilingual embedding learning, where enhancing cross-lingual consistency may come at the cost of diminished precision within a single language domain.

Error Analysis

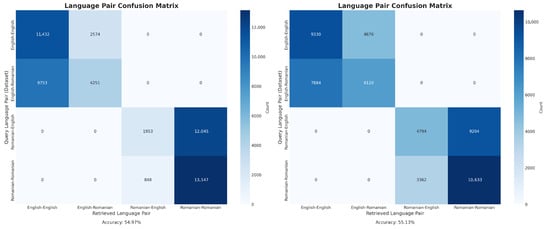

To better evaluate retrieval limitations, particularly in terms of cross-lingual alignment, we conducted an error analysis focused on the language pair of the top-1 retrieved passage for each query, using the benchmark described in Section 2. Specifically, the analysis compared only the language of the retrieved text with the language of the ground-truth passage, without requiring semantic equivalence. Confusion matrices were generated for both the base qwen3-embeddings-4B model and the fine-tuned qwen3-4b_ft_500ckp checkpoint, illustrating the distribution of retrieved language pairs relative to the expected ones and providing insights into the models’ cross-lingual retrieval behavior.

On average, the fine-tuned model achieves slightly higher accuracy in predicting the correct language pair, increasing from 54.97% to 55.13%; this is reflected in the stronger diagonal dominance in Figure 4 (right). However, despite this improvement, the results also reveal a tendency of the model to partially merge monolingual and cross-lingual embedding spaces. As cross-lingual retrieval performance improves, certain monolingual queries begin to be associated with bilingual pairs, indicating an overlap between the previously distinct subspaces. This behavior suggests that fine-tuning enhances cross-lingual alignment but introduces a degree of mixing between language domains.

Figure 4.

A comparison of language pair confusion matrices for the base qwen3-embeddings-4B model (left) and the fine-tuned checkpoint qwen3-4b_ft_500ckp model (right). Each pair reflects only the language of the top-1 retrieved passage relative to the ground-truth passage, without requiring the texts to match semantically. Diagonal cells correspond to correctly matched language pairs, while off-diagonal values indicate cross-lingual retrieval confusions. The fine-tuned model shows variations in language-pair distribution, suggesting adjustments in cross-lingual alignment patterns.
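The language-pair analysis reduces to counting, for each query, the expected pair label against the label of the top-1 retrieved passage. The sketch below shows one way to tabulate this; the helper names and the toy labels are assumptions and do not reproduce the actual benchmark outputs.

```python
from collections import Counter

PAIRS = ("eng-eng", "eng-ro", "ro-eng", "ro-ro")


def confusion_counts(expected, retrieved):
    """Count (expected label, retrieved label) combinations."""
    return Counter(zip(expected, retrieved))


def label_accuracy(expected, retrieved):
    """Share of queries whose top-1 passage carries the expected label."""
    hits = sum(e == r for e, r in zip(expected, retrieved))
    return hits / len(expected)


# Toy labels for five queries (not real benchmark outputs).
expected = ["eng-eng", "eng-ro", "ro-eng", "ro-ro", "eng-ro"]
retrieved = ["eng-eng", "eng-eng", "ro-eng", "ro-ro", "eng-ro"]

counts = confusion_counts(expected, retrieved)
for e in PAIRS:
    # One matrix row per expected pair, one column per retrieved pair.
    print(e, [counts[(e, r)] for r in PAIRS])
print(label_accuracy(expected, retrieved))  # 0.8
```

The same two helpers apply unchanged to a modality check by passing data-type labels such as "Normal" and "Table" instead of language pairs.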

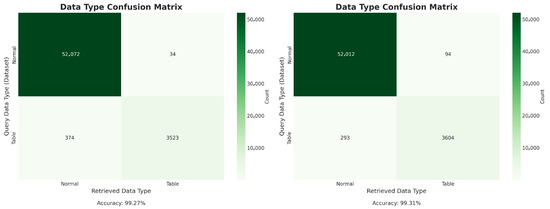
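The language-pair analysis described above can be illustrated with a minimal sketch. It assumes that each query carries an expected language pair and that the language pair of its top-1 retrieved passage has already been determined; the function names and the toy labels are hypothetical and are not taken from the study's code or results.

```python
from collections import Counter

# Language directions used in the benchmark (query language - passage language).
PAIRS = ["eng-eng", "ro-ro", "eng-ro", "ro-eng"]

def language_pair_confusion(expected, retrieved):
    """Count (expected pair, retrieved pair) combinations over top-1 results."""
    counts = Counter(zip(expected, retrieved))
    # Rows follow the expected pair, columns the retrieved pair.
    return [[counts[(e, r)] for r in PAIRS] for e in PAIRS]

def diagonal_accuracy(matrix):
    """Share of queries whose top-1 passage has the expected language pair."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total if total else 0.0

# Toy labels invented purely for illustration (not results from the study).
expected = ["eng-eng", "eng-ro", "ro-ro", "ro-eng", "eng-ro"]
retrieved = ["eng-eng", "eng-eng", "ro-ro", "ro-eng", "eng-ro"]

matrix = language_pair_confusion(expected, retrieved)
accuracy = diagonal_accuracy(matrix)
```

In this toy case one eng-ro query retrieves an English passage, so it lands in the off-diagonal cell (eng-ro, eng-eng), which is the kind of confusion the matrices in Figure 4 visualize.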

Beyond language alignment, a complementary analysis was conducted to evaluate the models’ ability to distinguish between the two data modalities: unstructured text (“Normal”) and structured tables (“Table”). This evaluation does not assess semantic correctness but focuses exclusively on whether the model retrieves the correct type of data corresponding to the query. Such an analysis helps verify that fine-tuning improves cross-lingual behavior without compromising modality awareness, which is crucial for maintaining retrieval consistency in mixed-type datasets.

As shown in Figure 5, the fine-tuned model maintains strong modality separation while exhibiting a slight improvement in accuracy (from 99.27% to 99.31%), confirming that fine-tuning enhanced cross-lingual alignment without degrading the model’s ability to correctly identify data type. This result also highlights the inherent imbalance in the benchmark described in Section 2, where unstructured data dominates the corpus, influencing both retrieval frequency and representation stability.

Figure 5.

A comparison of data-type confusion matrices for the base qwen3-embeddings-4B model (left) and the fine-tuned checkpoint qwen3-4b_ft_500ckp model (right). Each matrix represents how accurately the retrieved passages preserved the expected data modality, either unstructured text (“Normal”) or structured table (“Table”). Diagonal values indicate correct modality retrieval, while off-diagonal counts reflect cases where the model retrieved a passage of the wrong type.

Overall, these findings suggest that the fine-tuning process strengthened cross-lingual representation alignment while preserving the model’s capacity to differentiate between textual and tabular inputs. Despite the data imbalance favoring unstructured content, both the base and fine-tuned models demonstrate a strong awareness of data modality boundaries, indicating that retrieval decisions remain structurally coherent even when language representations shift.

Based on the error analysis results, the fine-tuned model performs effectively in identifying the correct data type of the retrieved passage, achieving a recall of 99.82% for unstructured text and 92.50% for tables. These results confirm that modality classification remains highly reliable after fine-tuning, with only minor deviations for structured inputs. Consequently, the main source of retrieval uncertainty is not the data modality but rather the cross-lingual alignment between language pairs, which continues to represent the primary challenge for further optimization.
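To make the relation between overall modality accuracy and per-class recall concrete, the following sketch computes both from a 2 × 2 confusion matrix. The counts are invented to mimic an imbalanced benchmark and are not the values behind Figure 5; the helper name is hypothetical.

```python
def per_class_recall(matrix, labels):
    """Recall per expected class: diagonal count divided by the row total."""
    recalls = {}
    for i, label in enumerate(labels):
        row_total = sum(matrix[i])
        recalls[label] = matrix[i][i] / row_total if row_total else 0.0
    return recalls

# Rows are the expected modality, columns the retrieved modality.
# Toy counts for illustration only (not the paper's data).
labels = ["Normal", "Table"]
toy_matrix = [
    [990, 10],  # expected "Normal"
    [3, 37],    # expected "Table"
]

recalls = per_class_recall(toy_matrix, labels)
overall = (toy_matrix[0][0] + toy_matrix[1][1]) / sum(map(sum, toy_matrix))
```

With these toy counts the overall accuracy stays close to the recall of the majority class even though the recall for tables is noticeably lower, which is why reporting recall per modality is informative when unstructured text dominates the corpus.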

4. Challenges and Future Work

One of the major challenges encountered during this research was the generation of questions for the tabular dataset. Unlike the unstructured text component, where question–answer pairs were readily available from established sources, the tabular data required local generation using a 70B-parameter large language model. This process demanded substantial computational power and time, limiting the volume of generated examples. Consequently, the resulting dataset became imbalanced, with a much larger proportion of unstructured textual data than tabular data. This imbalance may have contributed to the relatively smaller performance gains observed in tabular retrieval tasks after fine-tuning, as the model had fewer examples from which to learn robust representations for structured information.

Another limitation of this study was the restricted scope of the fine-tuning evaluation. Due to computational constraints, each configuration was trained using a single random seed, without multi-seed replications or variance estimates. Moreover, the evaluation relied solely on the Mean Reciprocal Rank (MRR), without complementary retrieval metrics such as Recall@k or NDCG to validate consistency across measures. Future work will address these aspects by performing repeated fine-tuning runs with multiple seeds, reporting variance-based confidence intervals to assess the stability of the observed gains, and expanding the evaluation to include additional retrieval metrics for a more comprehensive robustness assessment.
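As an illustration of the complementary metrics mentioned above, the sketch below computes MRR, Recall@k, and NDCG@k from the rank of the ground-truth passage for each query, and summarizes a metric across seeds. It assumes exactly one relevant passage per query (so the ideal DCG equals 1); the ranks and per-seed scores are placeholders, not measurements from this study.

```python
import math
import statistics

def mrr(ranks):
    """Mean Reciprocal Rank; ranks are 1-based, None means not retrieved."""
    return sum(1.0 / r for r in ranks if r is not None) / len(ranks)

def recall_at_k(ranks, k):
    """Fraction of queries whose ground-truth passage appears in the top k."""
    return sum(1 for r in ranks if r is not None and r <= k) / len(ranks)

def ndcg_at_k(ranks, k):
    """NDCG@k with a single relevant passage per query.

    The ideal DCG is 1 in that setting, so each query contributes
    1 / log2(1 + rank) when the passage is within the top k, else 0.
    """
    gains = (1.0 / math.log2(1 + r) for r in ranks if r is not None and r <= k)
    return sum(gains) / len(ranks)

# Placeholder ranks for four queries (illustrative only).
ranks = [1, 2, None, 4]
metrics = {
    "MRR": mrr(ranks),
    "Recall@3": recall_at_k(ranks, 3),
    "NDCG@3": ndcg_at_k(ranks, 3),
}

# Placeholder MRR values from three hypothetical seeds.
seed_scores = [0.48, 0.49, 0.47]
seed_mean = statistics.mean(seed_scores)
seed_std = statistics.stdev(seed_scores)  # sample standard deviation
```

Reporting the mean together with the sample standard deviation over seeds is one simple way to indicate whether a gain of a few MRR points exceeds run-to-run variation; a confidence interval could be derived from the same quantities.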

To overcome these limitations, future work will focus on expanding and balancing the dataset. This includes collecting additional tabular data and generating new synthetic question–answer pairs, ensuring a more even distribution between textual and tabular examples. A balanced dataset is expected to improve the model’s ability to generalize across both data types, ultimately enhancing retrieval performance in structured contexts. Furthermore, based on the qwen3-embeddings architecture, future experiments will explore the integration of task-specific instructions for table data, inspired by the instruction-finetuning approach proposed in [22]. By embedding natural language prompts that explicitly distinguish between normal text and tabular content, the model may learn to produce task- and domain-aware embeddings, leading to more accurate retrieval across heterogeneous data types.
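One possible form of such instructions is sketched below: a modality-specific prefix is prepended to each passage before it is embedded. The instruction wording, the `build_input` helper, and the newline-separated template are all assumptions made for illustration; they do not reproduce the prompt format of any particular model or the configuration used in this study.

```python
# Hypothetical instruction strings; the wording is an assumption.
INSTRUCTIONS = {
    "Normal": "Represent the text passage for retrieval",
    "Table": "Represent the table, including its column headers, for retrieval",
}

def build_input(content, modality):
    """Prefix a passage with the instruction that matches its modality."""
    if modality not in INSTRUCTIONS:
        raise ValueError(f"unknown modality: {modality}")
    return f"{INSTRUCTIONS[modality]}\n{content}"

# Toy passages for illustration only.
text_input = build_input("Bucharest is the capital of Romania.", "Normal")
table_input = build_input("county,population\nCluj,679141", "Table")
```

Because the modality of a passage is known at indexing time, the prefix can be attached on the document side without any change to how queries are issued.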

Another promising direction involves improving translation quality during dataset construction. Because part of the corpus consists of bilingual Romanian–English data, translation inconsistencies could introduce noise into the embedding space. To mitigate this, future iterations of the dataset will refine the translation pipeline through higher-quality neural translation systems, post-editing, or hybrid pipelines that combine neural systems with selective human validation. These refinements are intended to ensure more accurate alignment between source and target sentences, preserve semantic fidelity, and yield more reliable cross-lingual retrieval results.

As part of future extensions, we plan to incorporate a group-aware data splitting procedure that identifies and binds all parallel or translated variants of the same example into unified families before performing the train/validation/test division. Such grouping, implemented via stable hashing or group k-fold validation, will eliminate possible semantic leakage between subsets and provide a clearer picture of how embedding models handle unseen bilingual data.
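A minimal sketch of the stable-hashing variant is given below. It assumes every example carries a family identifier shared by all of its translated variants; the identifier format, the split fractions, and the `assign_split` helper are hypothetical.

```python
import hashlib

def assign_split(family_id, val_frac=0.1, test_frac=0.1):
    """Map a family identifier to a split deterministically via SHA-256."""
    digest = hashlib.sha256(family_id.encode("utf-8")).hexdigest()
    # Use the first 32 bits of the digest as a value in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    if bucket < test_frac:
        return "test"
    if bucket < test_frac + val_frac:
        return "validation"
    return "train"

# Toy examples: (family identifier, language pair). Illustrative only.
examples = [
    ("squad-0001", "eng-eng"),
    ("squad-0001", "ro-ro"),
    ("squad-0001", "eng-ro"),
    ("table-0042", "ro-ro"),
    ("table-0042", "ro-eng"),
]

# Every variant of a family receives the split of its family identifier.
splits_by_family = {}
for family_id, _pair in examples:
    splits_by_family.setdefault(family_id, set()).add(assign_split(family_id))
```

Since the split depends only on the family identifier, all parallel variants of an example fall into the same subset, and the assignment stays stable when new examples are added later. Group k-fold validation, for instance scikit-learn's `GroupKFold`, would be an alternative when cross-validation is preferred over a fixed split.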

Finally, future work will aim to address the observed trade-off between cross-lingual improvement and monolingual degradation identified during fine-tuning. Several strategies can be explored to mitigate this effect, such as multi-objective optimization that jointly balances monolingual and cross-lingual contrastive losses, ensuring that within-language coherence is preserved while enhancing inter-language alignment. Adaptive fine-tuning schedules that alternate between monolingual and bilingual batches may further maintain representational diversity and prevent over-specialization toward cross-lingual pairs.
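The multi-objective idea can be sketched as a weighted sum of two InfoNCE terms, one computed on monolingual batches and one on cross-lingual batches. The weighting factor `alpha`, the temperature value, and the toy similarity scores are assumptions for illustration; this is not the training objective used in the present study.

```python
import math

def info_nce(similarities, positive_index, temperature=0.05):
    """InfoNCE for one query: negative log-softmax of the positive's score."""
    logits = [s / temperature for s in similarities]
    peak = max(logits)  # subtract the maximum for numerical stability
    log_sum = peak + math.log(sum(math.exp(l - peak) for l in logits))
    return log_sum - logits[positive_index]

def joint_loss(mono_batch, cross_batch, alpha=0.5):
    """Weighted sum of the mean monolingual and mean cross-lingual losses."""
    mono = sum(info_nce(s, p) for s, p in mono_batch) / len(mono_batch)
    cross = sum(info_nce(s, p) for s, p in cross_batch) / len(cross_batch)
    return alpha * mono + (1.0 - alpha) * cross

# Each item: (query-to-candidate similarities, index of the positive).
# Toy cosine similarities, invented for illustration.
mono_batch = [([0.9, 0.2, 0.1], 0), ([0.3, 0.8, 0.2], 1)]
cross_batch = [([0.6, 0.5, 0.4], 0), ([0.4, 0.3, 0.7], 2)]

loss = joint_loss(mono_batch, cross_batch, alpha=0.5)
```

Setting `alpha` closer to 1 emphasizes within-language coherence, while values closer to 0 emphasize inter-language alignment; alternating monolingual and bilingual batches, as described above, is a scheduling counterpart of the same trade-off.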

5. Conclusions

Across experiments, the results support the central hypothesis that an automated pipeline combining data collection, translation, synthetic augmentation, and parameter-efficient fine-tuning can substantially improve cross-lingual and cross-modal retrieval. By combining over 130,000 text-based and 9750 tabular question–answer pairs translated in both language directions, the study addressed a key gap for Romanian, a low-resource language. Evaluation across multiple state-of-the-art models showed that while multilingual-e5-large performed best monolingually, the qwen3-embeddings family, particularly qwen3-embedding-4B, offered the best balance between monolingual, cross-lingual, and cross-modal tasks. Fine-tuning this model with Low-Rank Adaptation (LoRA) and InfoNCE loss improved the overall Mean Reciprocal Rank (MRR) from 0.4496 to 0.4872 (+8.36%), with the most notable gains in cross-lingual retrieval, demonstrating the practical viability of this low-cost, scalable approach for enhancing multilingual embeddings in low-resource environments.

Despite these advances, performance on tabular data remained below that of unstructured text, largely due to dataset imbalance and the inherent difficulty of representing structured information. Future improvements should focus on expanding tabular coverage, refining translations, and employing instruction-based fine-tuning to differentiate table and text embeddings. Overall, the dataset and fine-tuned models presented here provide valuable resources for advancing bilingual retrieval and demonstrate that efficient fine-tuning and well-designed data can meaningfully enhance embedding performance for low-resource and multimodal applications.

Author Contributions

Conceptualization, B.M.G. and N.P.; methodology, B.M.G. and N.P.; software, B.M.G.; validation, B.M.G. and N.P.; formal analysis, B.M.G. and N.P.; investigation, B.M.G.; resources, B.M.G. and N.P.; data curation, B.M.G.; writing—original draft preparation, B.M.G. and N.P.; writing—review and editing, B.M.G.; visualization, B.M.G.; supervision, B.M.G. and N.P.; project administration, B.M.G. and N.P.; funding acquisition, N.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The datasets presented in this article are not readily available because the data are part of an ongoing study.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| RAG | Retrieval-Augmented Generation |
| LLM | Large Language Model |
| NLP | Natural Language Processing |
| LoRA | Low-Rank Adaptation |
| MRR | Mean Reciprocal Rank |
| InfoNCE | Information Noise-Contrastive Estimation |
| PEFT | Parameter-Efficient Fine-Tuning |
| GPU | Graphics Processing Unit |
| CSV | Comma-Separated Values |

References

1. Wu, J.; Ren, Z.; Verberne, S. What are the limits of cross-lingual dense passage retrieval for low-resource languages? arXiv 2024, arXiv:2408.11942.

2. NLLB Team; Costa-jussà, M.R.; Cross, J.; Çelebi, O.; Elbayad, M.; Heafield, K.; Heffernan, K.; Kalbassi, E.; Lam, J.; Licht, D.; et al. No Language Left Behind: Scaling Human-Centered Machine Translation. arXiv 2022, arXiv:2207.04672.

3. Nitu, M.; Dascalu, M. Natural Language Processing Tools for Romanian—Going Beyond a Low-Resource Language. Interact. Des. Archit. 2024, 60, 7–26.

4. Rajpurkar, P.; Zhang, J.; Lopyrev, K.; Liang, P. SQuAD: 100,000+ Questions for Machine Comprehension of Text. arXiv 2016, arXiv:1606.05250.

5. Berenguer, A.; Mazón, J.-N.; Tomás, D. Word embeddings for retrieving tabular data from research publications. Mach. Learn. 2024, 113, 2227–2248.

6. Wang, L.; Yang, N.; Huang, X.; Yang, L.; Majumder, R.; Wei, F. Improving Text Embeddings with Large Language Models. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Bangkok, Thailand, 11–16 August 2024.

7. Zhuang, S.; Shou, L.; Zuccon, G. Augmenting Passage Representations with Query Generation for Enhanced Cross-Lingual Dense Retrieval. In Proceedings of the 46th International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR’23), Taipei, Taiwan, 23–27 July 2023; p. 6.

8. Pungă, L.; Manda, I.; Chitez, M. How much Romanian does Google Translate know? A corpus-informed genre-specific error analysis of English-into-Romanian translations. Stud. Lang./Kalbų Stud. 2023, 43, 77–92.

9. Jiao, W.; Wang, W.; Huang, J.-T.; Wang, X.; Tu, Z. Is ChatGPT a Good Translator? A Preliminary Study. arXiv 2023, arXiv:2301.08745.

10. Peng, K.; Ding, L.; Zhong, Q.; Shen, L.; Liu, X.; Zhang, M.; Ouyang, Y.; Tao, D. Towards Making the Most of ChatGPT for Machine Translation. arXiv 2023, arXiv:2303.13780.

11. Craswell, N.; Mitra, B.; Yilmaz, E.; Campos, D.; Lin, J. MS MARCO: Benchmarking Ranking Models in the Large-Data Regime. arXiv 2021, arXiv:2105.04021.

12. Bonifacio, L.; Jeronymo, V.; Abonizio, H.Q.; Campiotti, I.; Fadaee, M.; Lotufo, R.; Nogueira, R. mMARCO: A Multilingual Version of the MS MARCO Passage Ranking Dataset. arXiv 2022, arXiv:2108.13897.

13. Wang, L.; Yang, N.; Huang, X.; Jiao, B.; Yang, L.; Jiang, D.; Majumder, R.; Wei, F. Text Embeddings by Weakly-Supervised Contrastive Pre-training. arXiv 2024, arXiv:2212.03533.

14. Zhang, Y.; Li, M.; Long, D.; Zhang, X.; Lin, H.; Yang, B.; Xie, P.; Yang, A.; Liu, D.; Lin, J.; et al. Qwen3 Embedding: Advancing Text Embedding and Reranking Through Foundation Models. arXiv 2025, arXiv:2506.05176.

15. Hu, E.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. arXiv 2021, arXiv:2106.09685.

16. Whitehouse, C.; Huot, F.; Bastings, J.; Dehghani, M.; Lin, C.-C.; Lapata, M. Low-Rank Adaptation for Multilingual Summarization: An Empirical Study. arXiv 2024, arXiv:2311.08572.

17. Huan, M.; Shun, J. Fine-Tuning Transformers Efficiently: A Survey on LoRA and Its Impact. Preprints 2025.

18. van den Oord, A.; Li, Y.; Vinyals, O. Representation Learning with Contrastive Predictive Coding. arXiv 2018, arXiv:1807.03748.

19. Izacard, G.; Caron, M.; Hosseini, L.; Riedel, S.; Bojanowski, P.; Joulin, A.; Grave, E. Unsupervised Dense Information Retrieval with Contrastive Learning. arXiv 2022, arXiv:2112.09118.

20. Long, Y.; Gu, D.; Li, X.; Lu, P.; Cao, J. Enhancing Educational Content Matching Using Transformer Models and InfoNCE Loss. In Proceedings of the 2024 IEEE 7th International Conference on Information Systems and Computer Aided Education (ICISCAE), Dalian, China, 27–29 September 2024; pp. 11–15.

21. Biderman, D.; Portes, J.; Gonzalez Ortiz, J.J.; Paul, M.; Greengard, P.; Jennings, C.; King, D.; Havens, S.; Chiley, V.; Frankle, J.; et al. LoRA Learns Less and Forgets Less. Trans. Mach. Learn. Res. 2024.

22. Su, H.; Shi, W.; Kasai, J.; Wang, Y.; Hu, Y.; Ostendorf, M.; Yih, W.-T.; Smith, N.A.; Zettlemoyer, L.; Yu, T. One Embedder, Any Task: Instruction-Finetuned Text Embeddings. In Findings of the Association for Computational Linguistics: ACL 2023; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 1102–1121.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).