1. Introduction

The integration of artificial intelligence in educational ecosystems has revolutionized traditional pedagogical approaches and institutional management strategies. Educational predictive analytics, an interdisciplinary domain leveraging machine learning (ML) and data mining techniques, has emerged as a transformative paradigm for enhancing academic outcomes through data-driven decision support. The global significance of this research area stems from pressing societal challenges in education systems worldwide, including student attrition rates, suboptimal resource allocation, and achievement gaps across diverse demographic populations. With higher education institutions facing unprecedented pressure to demonstrate efficacy and accountability, predictive modeling offers a scientifically rigorous methodology for identifying at-risk students, personalizing learning interventions, and optimizing institutional performance [

1,

2]. The conceptual evolution of educational data mining (EDM) has paralleled advancements in computational intelligence, transitioning from traditional statistical analysis to sophisticated ML algorithms capable of modeling complex, nonlinear relationships in educational data. Early approaches primarily relied on regression techniques and descriptive analytics to identify correlational patterns between student characteristics and academic outcomes. However, the emergence of robust ML frameworks has enabled the development of predictive models that assimilate heterogeneous data sources, including demographic attributes, behavioral patterns, institutional factors, and socio-economic indicators, to generate accurate forecasts of student performance [

1,

3]. This predictive capability carries profound implications for educational equity and institutional effectiveness, enabling early intervention strategies that can potentially mitigate dropout rates and enhance learning outcomes across diverse student populations. Contemporary educational infrastructures generate vast datasets through Learning Management Systems (LMSs), student information systems, and digital learning platforms, creating unprecedented opportunities for analytical modeling. Research by Waheed et al. demonstrated that student clickstream activities within LMS environments contain predictive signals correlated with academic performance, though the relationship between engagement metrics and outcomes requires careful model specification [

4]. The scientific import of educational predictive analytics extends beyond mere technical achievement, embodying a fundamental shift toward evidence-based educational management that aligns institutional practices with empirical insights rather than relying solely on tradition or intuition.

The methodological progression of predictive modeling in education reflects broader trends in computational science, marked by a gradual transition from traditional statistical models to ensemble ML approaches and, most recently, to optimized hybrid architectures. Seminal work in this domain primarily employed logistic regression, discriminant analysis, and decision trees to identify factors correlated with academic success. While these methods established important foundational relationships, particularly between prior academic performance and future outcomes, they often struggled with the complex, high-dimensional datasets characteristic of educational environments [

2]. The advent of ML introduced more sophisticated algorithms to educational prediction tasks, with studies systematically comparing the efficacy of various approaches [

2,

5,

6]. A comprehensive systematic review evaluated ML algorithms, including Support Vector Machines (SVMs), Artificial Neural Networks (ANNs), and Decision Trees, against traditional statistical models, concluding that ML approaches consistently outperformed their conventional counterparts owing to their superior capacity to handle large, nonlinear datasets and to continuously enhance predictive accuracy. In particular, ensemble methods such as Random Forests and Gradient Boosting Machines demonstrated remarkable performance in predicting student outcomes by mitigating the limitations of individual base learners [7]. These ensemble techniques operate on the principle that a collection of learners yields greater overall accuracy than any individual learner, effectively addressing the bias-variance tradeoff that constrains single-model approaches [8].

Recent research has focused on enhancing predictive performance through hybrid architectures that integrate multiple modeling paradigms [

9,

10]. These studies represent the current state of the art in educational prediction, yet they reveal persistent challenges in model optimization and generalization that necessitate further methodological innovation. Ensemble learning has emerged as a particularly promising approach within educational predictive analytics, with demonstrated efficacy across diverse prediction tasks including performance forecasting, dropout identification, and at-risk student classification. The theoretical underpinnings of ensemble methodology rest on the statistical principle that combining multiple diverse models can produce more robust and accurate predictions than a single constituent model [

8]. The literature broadly categorizes ensemble methods into parallel approaches (bagging), sequential approaches (boosting), and heterogeneous integration (stacking), each with distinct mechanistic characteristics and performance attributes [

7]. Bagging (Bootstrap Aggregating) operates by training multiple base learners on random subsets of the original training data, then aggregating their predictions through averaging or majority voting. Bagging primarily reduces model variance and mitigates overfitting, particularly beneficial with high-dimensional educational data containing complex interaction effects [

7,

8]. In contrast, boosting algorithms such as AdaBoost, Gradient Boosting, and XGBoost employ a sequential strategy where each subsequent model focuses on correcting errors made by previous models. This approach systematically reduces bias while maintaining low variance, often achieving superior predictive accuracy compared to bagging methods. The pursuit of optimal ensemble performance has motivated the integration of metaheuristic optimization algorithms with machine learning methodologies, creating hybrid architectures that systematically navigate the complex parameter spaces inherent in ensemble configuration. Metaheuristic algorithms, inspired by natural phenomena and biological systems, offer powerful global search capabilities that effectively address the combinatorial challenges of feature selection, hyperparameter tuning, and model weighting in ensemble construction [

11,

12]. This integration represents a significant advancement beyond manual parameter configuration or grid search approaches, which often prove computationally prohibitive and prone to suboptimal local convergence.

Nature-inspired optimization algorithms have demonstrated remarkable efficacy in refining predictive models across diverse domains. The Grey Wolf Optimizer (GWO), in particular, has emerged as a prominent metaheuristic based on the social hierarchy and hunting behavior of grey wolves, characterized by balanced exploration–exploitation dynamics and relatively few control parameters [13, 14]. The mechanistic basis for metaheuristic–ensemble integration lies in the multidimensional optimization landscape defined by ensemble parameters. Each base model contributes multiple hyperparameters that collectively define a complex, non-differentiable search space often characterized by numerous local optima. Metaheuristic algorithms excel in such environments due to their population-based stochastic search strategies that simultaneously evaluate multiple candidate solutions, effectively exploring the parameter space while resisting premature convergence. This capability is particularly valuable for weighted ensemble approaches where determining optimal model contributions presents a non-trivial optimization challenge directly impacting predictive accuracy [13, 14].
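For orientation, the position update of the canonical GWO, as it is commonly stated in the optimization literature, can be sketched as follows. The notation is an assumption introduced here for illustration and is not taken from this manuscript: $\vec{X}$ is a wolf position (for example, a vector of ensemble weights), $\vec{X}_{\alpha}$, $\vec{X}_{\beta}$, and $\vec{X}_{\delta}$ are the three best solutions found so far, $\vec{r}_1$ and $\vec{r}_2$ are uniform random vectors in $[0, 1]$, $t$ is the current iteration, and $T$ is the iteration budget.

```latex
\begin{aligned}
a &= 2\left(1 - \frac{t}{T}\right), \qquad
\vec{A} = 2a\,\vec{r}_1 - a, \qquad
\vec{C} = 2\,\vec{r}_2, \\
\vec{D}_{k} &= \left|\vec{C}_{k} \odot \vec{X}_{k}(t) - \vec{X}(t)\right|, \qquad
\vec{X}'_{k} = \vec{X}_{k}(t) - \vec{A}_{k} \odot \vec{D}_{k}, \qquad
k \in \{\alpha, \beta, \delta\}, \\
\vec{X}(t+1) &= \frac{\vec{X}'_{\alpha} + \vec{X}'_{\beta} + \vec{X}'_{\delta}}{3}.
\end{aligned}
```

Because $a$ decays from 2 to 0, large values of $|\vec{A}|$ early in the run push wolves away from the leaders (exploration), while small values late in the run pull them toward the leaders (exploitation). The only quantities a user must choose are the population size and the iteration budget, which is the sense in which the algorithm has few control parameters.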

Despite considerable methodological advances in educational predictive analytics, several significant limitations persist in current approaches, constraining their practical efficacy and generalizability. Through critical synthesis of the literature, we identify three principal research gaps. First, existing ensemble approaches often lack systematic optimization frameworks for determining optimal model weights and configurations. While studies have implemented weighted voting ensembles, their weighting schemes typically derive from static performance metrics rather than dynamic optimization processes. This is a substantial limitation because suboptimal model weighting can keep ensemble performance below what the constituent models could jointly achieve. The potential of metaheuristic algorithms to address this challenge remains underexplored in educational contexts. Second, there is a notable interpretability and transparency deficit in complex ensemble architectures. As observed by Guevara-Reyes et al. [10], existing approaches lack interpretability and do not provide actionable insights for decision-making, creating a significant barrier to adoption in educational settings where pedagogical decisions require justification. While techniques such as SHAP and LIME (Local Interpretable Model-agnostic Explanations) have been applied to individual educational prediction models [9], their integration with metaheuristic-optimized ensembles remains limited. Third, there is insufficient exploration of computational efficiency in complex ensemble optimization [13, 14], particularly concerning real-world educational applications with resource constraints. Metaheuristic optimization introduces nontrivial computational overhead, yet few studies in educational predictive analytics have systematically addressed trade-offs between predictive accuracy and computational feasibility.

In response to the identified research gaps, this study proposes a novel optimized ensemble framework that integrates the GWO with heterogeneous machine learning models for educational predictive analytics. The primary research objective is to design, implement, and empirically validate a GWO-optimized ensemble architecture that significantly enhances predictive accuracy for student academic performance while maintaining practical interpretability and computational feasibility. This central objective is operationalized through four specific research aims: (1) to develop a weighted heterogeneous ensemble framework that strategically combines the complementary strengths of the CatBoost, Extra Trees, and Random Forest algorithms for student performance prediction, explicitly addressing the limitations of single-model approaches documented in prior research; (2) to implement the GWO for automated determination of optimal model weights and hyperparameters within the ensemble architecture, overcoming the suboptimal configuration limitations observed in current educational prediction models; (3) to quantitatively evaluate the predictive performance of the proposed GWO-optimized ensemble against its constituent base models and conventional ensemble approaches, using multiple metrics and establishing statistical significance through appropriate hypothesis-testing procedures; and (4) to enhance model interpretability through integrated SHAP analysis, identifying feature importance rankings and partial dependency relationships that provide actionable insights for educational intervention strategies, thereby addressing the black-box criticism frequently leveled against complex ensemble models. This research makes significant contributions to the field of educational predictive analytics. Methodologically, it introduces a novel integration of metaheuristic optimization with heterogeneous ensemble learning specifically tailored to the characteristics of educational data. Empirically, it provides rigorous validation across multiple performance metrics and comparative benchmarks against established ML models. Practically, it delivers an interpretable predictive framework that supports data-driven educational decision-making with a transparent rationale for predictions. Theoretically, it advances understanding of optimal ensemble construction for educational prediction tasks and demonstrates the efficacy of nature-inspired optimization in this context.

The manuscript is structured as follows: Section 2 (Literature Review) provides a comprehensive analysis of foundational and contemporary research on educational data mining, ensemble learning methods, and metaheuristic optimization. Section 3 (Methodology) details the proposed GWO-optimized ensemble architecture, including data acquisition and preprocessing procedures, GWO implementation details, and evaluation metrics. Section 4 (Experimental Results and Discussion) presents empirical findings from comparative performance evaluations. Finally, Section 5 (Conclusion) synthesizes key findings, reiterates primary contributions, and considers broader impacts for educational theory and practice.

2. Literature Review

Recent advances in educational predictive analytics have increasingly leveraged machine learning ensembles to forecast student performance, yet the optimal strategy for integrating heterogeneous models remains an open challenge. While individual tree-based algorithms such as Random Forests, Extra Trees, and CatBoost have demonstrated strong predictive capabilities, their standalone use often suffers from suboptimal generalization and sensitivity to hyperparameter configuration. In response, this research explores metaheuristic optimization as a means to enhance ensemble design, though systematic investigations of dynamic weight allocation via algorithms such as the GWO in educational contexts remain scarce. The following review critically examines key contributions over the past decade that intersect ensemble learning, metaheuristic optimization, and student outcome prediction, highlighting both progress and persistent gaps that motivate the present work.

Alsumaidaie et al. [

15] evaluated the efficacy of several supervised learning algorithms for forecasting student outcomes, with a specific focus on comparing tree-based models. Their experimental design used Random Forests, Extra Trees, and K-Nearest Neighbors on an educational dataset, rigorously assessing their predictive performance. The Extra Trees classifier emerged as the top performer, thereby underscoring the potential of ensemble tree methods in this domain. Fan et al. [

16] introduced a novel multi-layer architecture built upon the CatBoost algorithm to predict student grades in periodic examinations. Their approach incorporated a feature-importance mechanism to iteratively refine the model’s focus on the most predictive attributes at successive layers. This tailored CatBoost framework demonstrated superior performance over standard implementations. Chen et al. [

17] developed a strategy for student performance prediction that centered on optimizing tree-based ensemble models. Their methodology sought to fine-tune the hyperparameters of these models to maximize predictive accuracy. The optimized framework yielded an average accuracy of 93.11%. Wang [

18] introduced a hybrid model combining DistilBERT with LSTM and optimized it using the Spotted Hyena Optimizer (SHO) for predicting student achievement. Their approach focused on dynamic parameter tuning to efficiently handle large-scale educational datasets. The model demonstrated exceptional performance. Ahmed et al. [

9] conducted a comprehensive comparison of ten regression models for academic performance prediction. Their study utilized two datasets with distinct features to evaluate model generalizability. The ensemble approach achieved remarkable performance, demonstrating robustness through weighted averaging of top-performing base models. Khan et al. [

19] developed a novel hybrid architecture integrating Convolutional Neural Networks (CNNs) with Random Forests and used XGBoost as a meta-learner for predicting student achievement. Their approach leveraged institutional records from 24,005 students to capture complex feature interactions. The model outperformed the models it was compared to. Guevara-Reyes et al. [

10] deployed optimized machine learning models, specifically XGBoost and Random Forest, to analyze geographic, institutional, and socioeconomic factors affecting student performance. By incorporating SHAP-based interpretability techniques, the research provided transparent insights into feature importance. XGBoost achieved superior performance. Wang and Yu [

20] developed a logistic regression model enhanced with Taylor expansion for predicting student performance in online learning environments. Their methodology constructed eleven learning behavioral indicators from online learning processes and selected the most correlated features for model training. The approach demonstrated significant dependencies between learning initiative and duration, offering insights into behavioral factors affecting academic outcomes. Gul et al. [

21] studied eight machine learning models including Decision Trees, Random Forest, and CatBoost to predict student performance based on demographic and academic features. Their evaluation used multiple error metrics to assess model robustness across different student populations. CatBoost achieved the highest accuracy at 87.46%, outperforming other algorithms and demonstrating the effectiveness of gradient boosting methods for educational classification tasks. Ma [

22] integrated a Random Forest Classifier with meta-heuristic optimization algorithms, specifically Electric Charged Particles Optimization (ECPO) and Artificial Rabbits Optimization (ARO), to predict student performance, demonstrating superior predictive capacity.

The reviewed studies collectively reveal that while metaheuristic optimization has proven effective across various engineering and prediction domains, three persistent gaps remain. First, most ensemble-learning frameworks rely on static or heuristic weighting, leaving dynamic and data-driven optimization strategies underexplored. Second, few metaheuristic studies integrate interpretability mechanisms such as SHAP or LIME, limiting their practical value for decision-makers in educational settings. Third, computational trade-offs between accuracy, interpretability, and scalability are rarely quantified, leading to uncertainty regarding real-world feasibility.

4. Experiment and Discussion Section

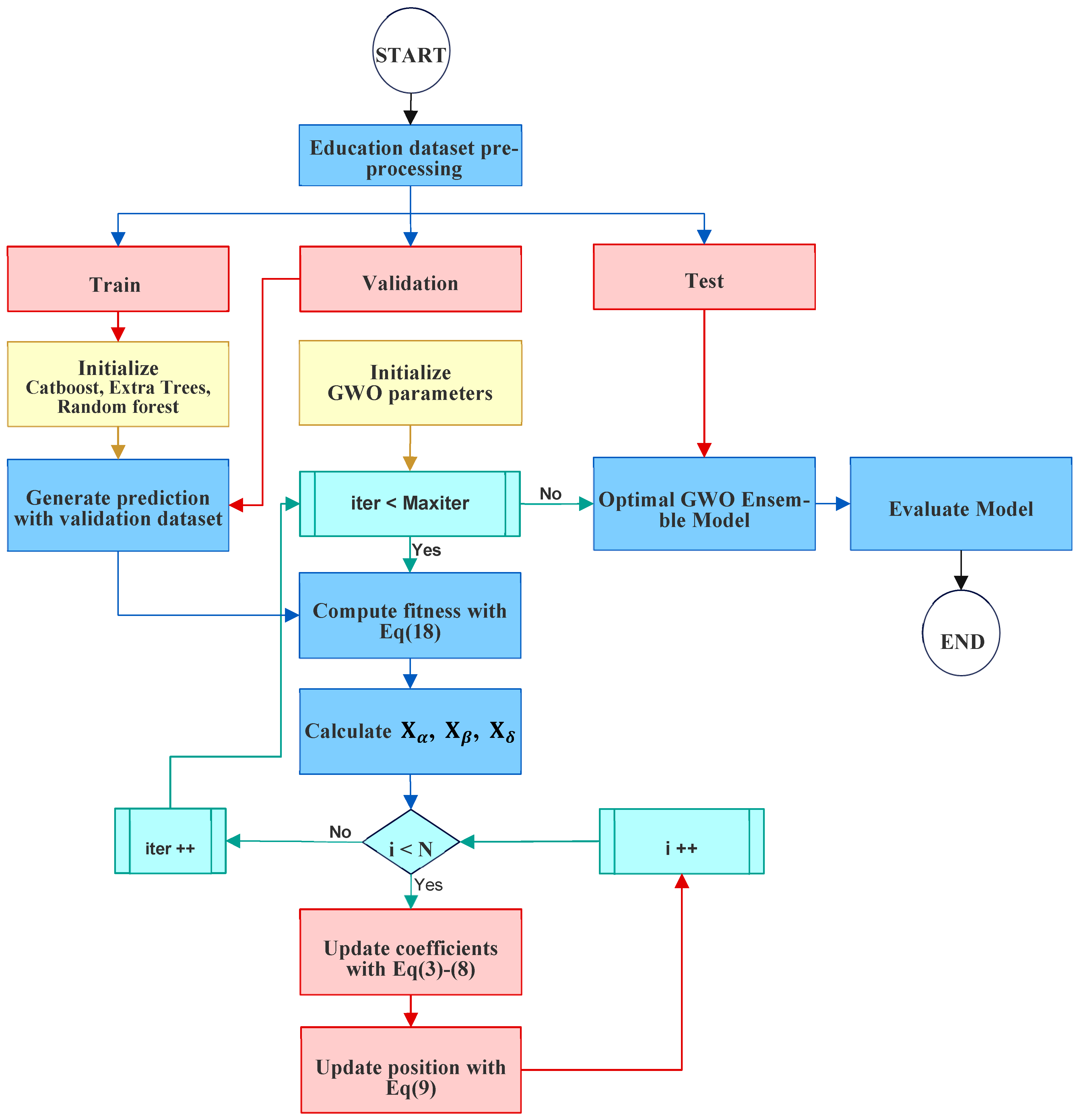

In this experimental study, the ML models were trained with the following hyperparameter settings to establish a consistent, unbiased baseline for comparison. Specifically, the baseline configurations were: Random Forest and Extra Trees with 200 estimators and a maximum depth of 15; Gradient Boosting with 200 estimators, a learning rate of 0.05, and a maximum depth of 8; SVR with an RBF kernel, , and ; AdaBoost with 200 estimators; KNN with 10 neighbors; Decision Tree with a maximum depth of 10; and CatBoost with 500 iterations. The parameters were chosen based on the established literature. The dataset was partitioned such that 80% was allocated to training, and the remainder was split further into validation and test sets at a ratio, thereby ensuring rigorous model evaluation. In the GWO optimization process, the search space for the ensemble weights was defined within the interval, with the number of search agents (population size) set to 30 and a maximum of 1000 iterations for convergence. This parameterization was intended to balance exploration and exploitation during the optimization, thereby determining a combination of model weights that minimized the validation MSE.
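The weight search described above can be sketched in a few lines of dependency-free Python. This is a minimal illustration under stated assumptions, not the implementation used in this study: the function names (`gwo_weights`, `normalize`, `blend`, `mse`) are hypothetical, the weights are assumed to live in [0, 1] and to be rescaled to sum to 1 (the search interval is not recoverable from the text), and the update rule is the basic GWO scheme with the three best solutions retained across iterations. The defaults of 30 agents and 1000 iterations follow the setup stated above.

```python
import random


def mse(y_true, y_pred):
    """Mean squared error between two equal-length sequences."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def normalize(position):
    """Clip a wolf position to [0, 1] and rescale it to sum to 1 (assumed constraint)."""
    clipped = [min(max(x, 0.0), 1.0) for x in position]
    total = sum(clipped)
    if total == 0.0:
        return [1.0 / len(clipped)] * len(clipped)
    return [x / total for x in clipped]


def blend(weights, preds):
    """Weighted sum of per-model prediction lists (one list per base model)."""
    return [sum(w * p[i] for w, p in zip(weights, preds)) for i in range(len(preds[0]))]


def gwo_weights(preds, y_val, n_agents=30, n_iter=1000, seed=0):
    """Search for ensemble weights that minimize validation MSE with a basic GWO."""
    rng = random.Random(seed)
    dim = len(preds)

    def fitness(position):
        return mse(y_val, blend(normalize(position), preds))

    # Random initial pack; each wolf is one candidate weight vector.
    wolves = [[rng.random() for _ in range(dim)] for _ in range(n_agents)]
    leaders = []  # best (score, position) pairs seen so far: alpha, beta, delta
    for t in range(n_iter):
        scored = [(fitness(w), list(w)) for w in wolves]
        leaders = sorted(leaders + scored, key=lambda s: s[0])[:3]
        a = 2.0 * (1.0 - t / n_iter)  # control parameter decays linearly from 2 to 0
        for i, wolf in enumerate(wolves):
            new_position = []
            for d in range(dim):
                pulled = 0.0
                for _, leader in leaders:
                    A = 2.0 * a * rng.random() - a
                    C = 2.0 * rng.random()
                    pulled += leader[d] - A * abs(C * leader[d] - wolf[d])
                # Average the leader-guided moves and keep the weight inside [0, 1].
                new_position.append(min(max(pulled / len(leaders), 0.0), 1.0))
            wolves[i] = new_position
    final = [(fitness(w), list(w)) for w in wolves]
    best_score, best_position = min(leaders + final, key=lambda s: s[0])
    return normalize(best_position), best_score
```

Calling `gwo_weights([p_cat, p_et, p_rf], y_val)` with three lists of validation predictions returns a weight vector and the validation MSE it attains. Retaining the three best solutions across iterations means the returned score can never be worse than that of any candidate evaluated during the run, including the initial random pack.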

The empirical evaluation presented in Table 2 and Table 3 provides a comprehensive benchmark of the predictive performance of individual regression models relative to the proposed GWO-based ensemble framework across both the validation and test phases. The results substantiate several key insights regarding model behavior, ensemble efficacy, and the role of metaheuristic optimization in enhancing predictive accuracy and generalization. First, among the individual learners, the tree-based ensemble methods, specifically CatBoost, Extra Trees, and Random Forest, consistently outperform other algorithms across all evaluation metrics. This observation aligns with the established literature highlighting the robustness of ensemble tree methods in handling nonlinear relationships, feature interactions, and high-dimensional data without extensive preprocessing. CatBoost emerges as the strongest individual model, achieving a validation MSE of 34.34 and an $R^2$ of 0.8713, closely trailed by Extra Trees ($R^2$ of 0.8709) and Random Forest ($R^2$ of 0.8698). On the test set, CatBoost further demonstrates superior generalization with an MSE of 23.56 and an $R^2$ of 0.9269, reinforcing its capacity to minimize both bias and variance in unseen data.

In contrast, the non-ensemble and distance-based models, namely SVR, KNN, and the single Decision Tree, exhibit markedly inferior performance. SVR and KNN yield substantially higher MSE values (62.77 and 87.13 on validation, respectively) and lower $R^2$ scores (0.7647 and 0.6735), indicative of limited adaptability to the underlying data structure and sensitivity to feature scaling or neighborhood selection. The Decision Tree's poor performance further underscores the limitations of single-tree models, including high variance and susceptibility to overfitting, despite their interpretability. Notably, the GWO-optimized ensemble model surpasses all individual regressors on both validation and test sets, achieving the lowest MSE (32.64 for validation and 22.56 for test), RMSE (5.71 for validation and 4.75 for test), and MAE (4.59 for validation and 3.79 for test), alongside the highest $R^2$ scores (0.8777 for validation and 0.9300 for test). The consistent improvement across metrics, particularly the roughly 4% reduction in test MSE relative to CatBoost, demonstrates that the ensemble does not merely average predictions but strategically combines base learners using GWO-derived weights that minimize global error while maximizing explained variance.
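The relationships among the reported figures can be checked with a short calculation. The sketch below uses only the rounded MSE values quoted in the text, so the derived quantities are approximate; the variable names are illustrative.

```python
import math

# Rounded values as reported in the text.
ensemble_val_mse = 32.64
ensemble_test_mse = 22.56
catboost_test_mse = 23.56  # strongest single model on the test set

# RMSE is the square root of MSE.
val_rmse = math.sqrt(ensemble_val_mse)
test_rmse = math.sqrt(ensemble_test_mse)

# Relative reduction in test MSE of the ensemble versus CatBoost, in percent.
relative_reduction = (catboost_test_mse - ensemble_test_mse) / catboost_test_mse * 100

print(round(val_rmse, 2), round(test_rmse, 2), round(relative_reduction, 1))
# prints: 5.71 4.75 4.2
```

The RMSE values reproduce those reported, and the relative gain over CatBoost computed from the rounded values is a little over 4%, which corresponds to an absolute MSE difference of 1.00 on the test set.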

The gains achieved by the GWO ensemble are particularly important in the context of student exam score prediction, where diminishing returns are common among high-performing models. The success of the GWO ensemble can be attributed to its ability to harness the complementary strengths of heterogeneous base models. For instance, while CatBoost excels in gradient-boosted sequential learning, Random Forest and Extra Trees offer decorrelated bagging-based variance reduction. By assigning data-driven weights via a metaheuristic search that navigates the complex, non-convex landscape of ensemble weight space, GWO effectively balances model-specific biases and variances. This optimization process yields a synergistic predictor that mitigates individual model weaknesses, such as SVR's rigidity or KNN's sensitivity to local noise, while amplifying collective strengths. Furthermore, the ensemble's superior test performance relative to validation suggests enhanced generalization rather than overfitting, a critical attribute in real-world applications where model robustness to distributional shifts is paramount. This contrasts with some boosting methods (AdaBoost and Gradient Boosting), which show larger performance gaps between validation and test phases, potentially indicating over-optimization during training. In conclusion, the GWO ensemble not only achieves the best performance among the models evaluated on this dataset but also exemplifies a principled approach to model fusion, one that goes beyond simple averaging or stacking by embedding optimization directly into the ensemble design.

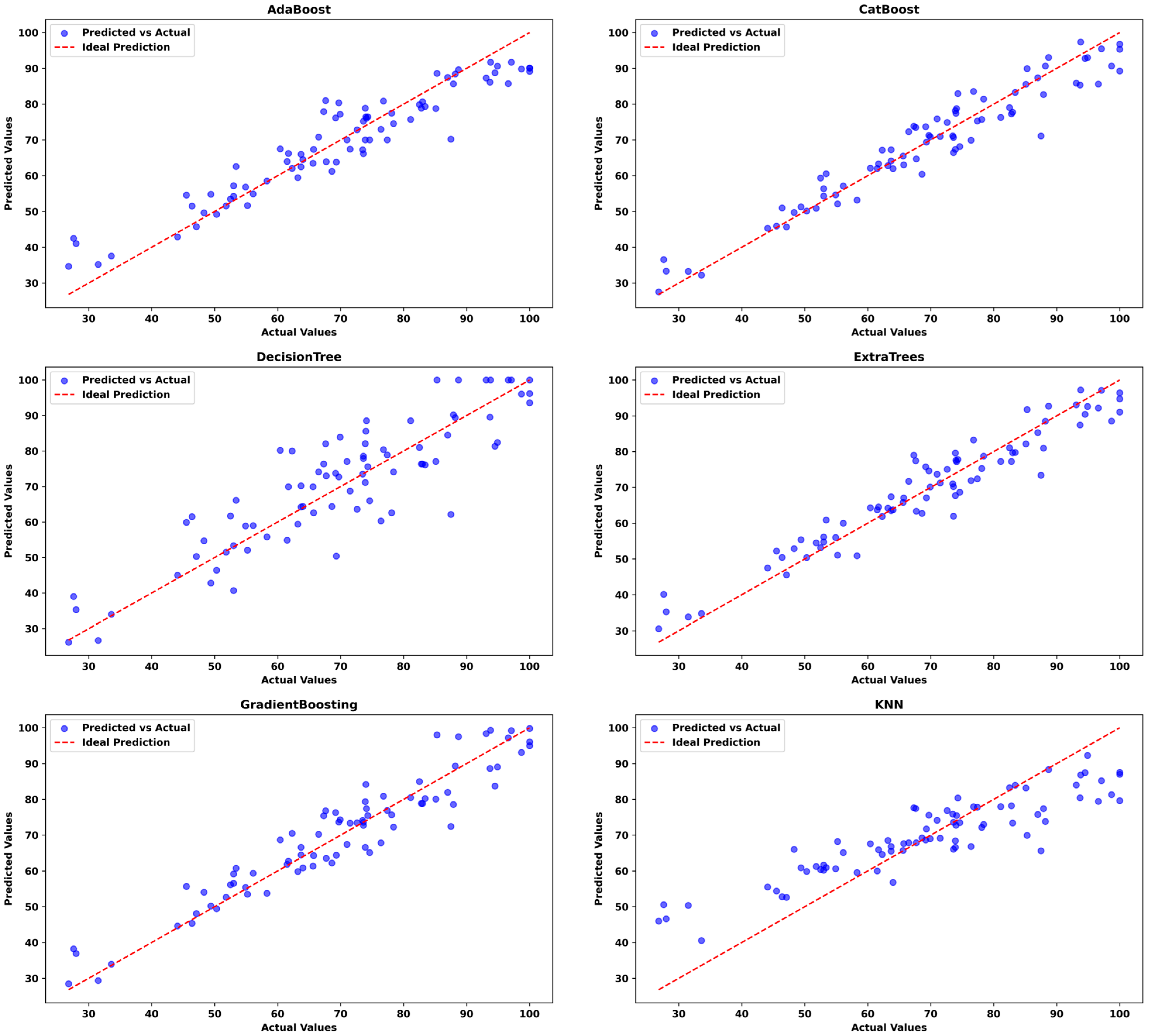

The comparison of predicted versus actual value plots across all models for the test data in Figure 2 demonstrates the superior predictive alignment of the GWO ensemble. Specifically, in the GWO ensemble plot, most predictions closely follow the ideal prediction line, indicating minimal deviation between observed and predicted outcomes. This concentration of data points along the diagonal, compared with the visible scatter and outliers in individual models such as KNN and SVR, indicates both precision and consistency in the ensemble's performance. Models like CatBoost, Extra Trees, and Random Forest individually show robust correspondence to the ideal line, yet still exhibit more instances of under- and over-prediction relative to the GWO ensemble. The GWO ensemble plot, by contrast, reveals a tighter clustering and diminished spread around the diagonal across almost the entire response range. Furthermore, the lower point dispersion and fewer noticeable outliers highlight the ensemble's robustness and reduced susceptibility to systematic bias or large prediction errors. In contrast, models like KNN, SVR, and Decision Tree are characterized by larger variance and several points falling significantly away from the ideal prediction line, signaling reduced accuracy and less reliable generalization. Even among competent regressors such as Gradient Boosting or AdaBoost, a moderate spread is observed, which is effectively minimized in the GWO ensemble. Collectively, these plots corroborate the numerical metrics, demonstrating that the GWO-optimized ensemble leverages the complementary strengths and mitigates the individual weaknesses of its component models, resulting in enhanced overall predictive accuracy and model stability, as evidenced by reduced error variance and closer alignment with the true target values.

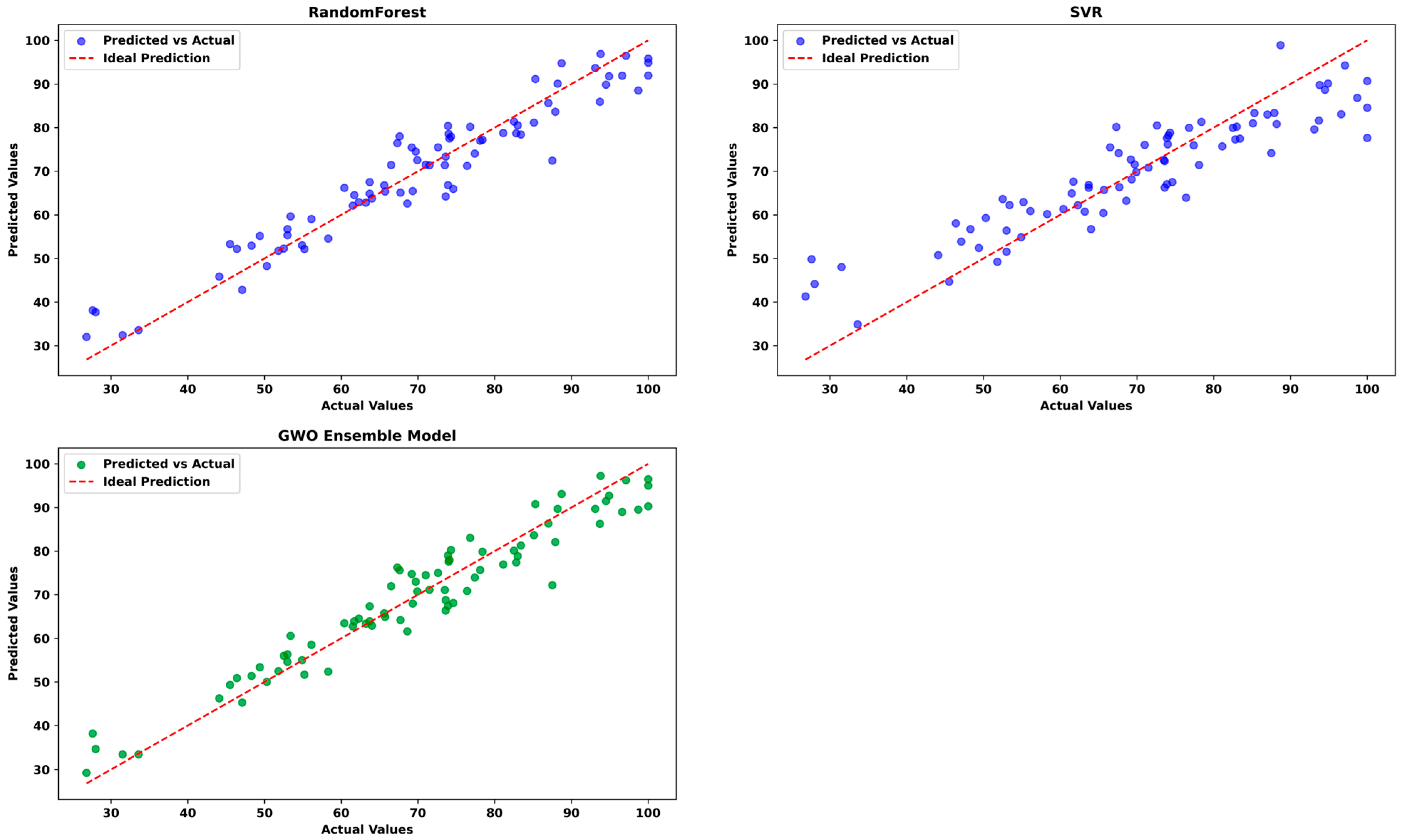

The ensemble model weights bar chart in Figure 3 shows the outcome of the GWO-based optimization for the regression task. The CatBoost model had the highest relative importance in the ensemble, as reflected by a weight of approximately 0.4781. Extra Trees followed with a substantial weight of 0.4086, while Random Forest contributed less, with a weight of 0.1133. This distribution of ensemble weights indicates that the CatBoost and Extra Trees models provided more informative and reliable predictions for the underlying data and were therefore prioritized in the ensemble aggregation. The allocation of weights determined by the GWO is guided by the minimization of prediction error on the validation set. As a result, models that demonstrated comparatively higher accuracy and robustness on this dataset received greater weights. The relatively lower allocation for Random Forest suggests that, within the context of the ensemble, its predictions were less aligned with the optimal estimation of the target variable, relative to its counterparts. Such an outcome highlights the adaptive capability of metaheuristic ensemble techniques, which tailor the contribution of each base model to maximize overall predictive performance. By assigning dynamic, data-driven weights rather than relying on uniform or expert-defined combinations, the ensemble achieves a nuanced balance, incorporating models that best characterize the underlying patterns of the data while diminishing the influence of less effective models. This methodology not only enhances model accuracy but also improves generalizability, underscoring the value of optimizer-based ensemble design in complex prediction tasks.
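To make the aggregation step concrete, the following sketch applies the reported weights to one set of base-model outputs. The three predicted scores are hypothetical values chosen for illustration, and the function name `ensemble_predict` is likewise an assumption rather than part of the study's code.

```python
# Weights reported for the GWO-optimized ensemble.
weights = {"catboost": 0.4781, "extra_trees": 0.4086, "random_forest": 0.1133}


def ensemble_predict(model_preds, model_weights):
    """Return the weighted sum of the base-model predictions for one student."""
    return sum(model_weights[name] * model_preds[name] for name in model_weights)


# Hypothetical exam-score predictions from the three base models.
example = {"catboost": 72.0, "extra_trees": 70.0, "random_forest": 75.0}
combined = ensemble_predict(example, weights)
print(round(combined, 2))  # prints: 71.52
```

Because the reported weights sum to 1, the combined prediction is a convex combination of the base predictions and therefore always lies between the smallest and largest of them. In this example the result sits closer to the CatBoost and Extra Trees outputs than to the Random Forest output, which mirrors the weight distribution.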

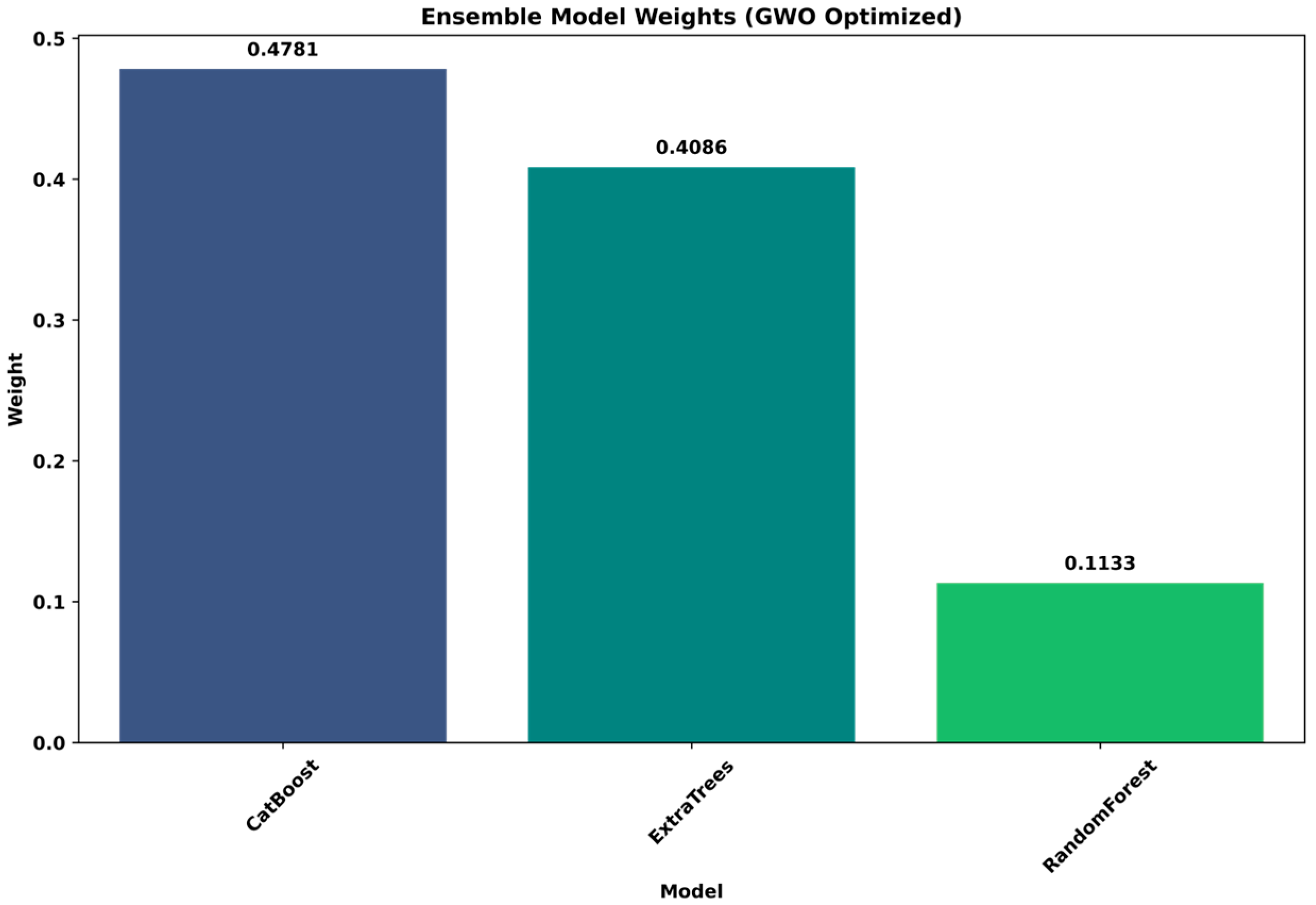

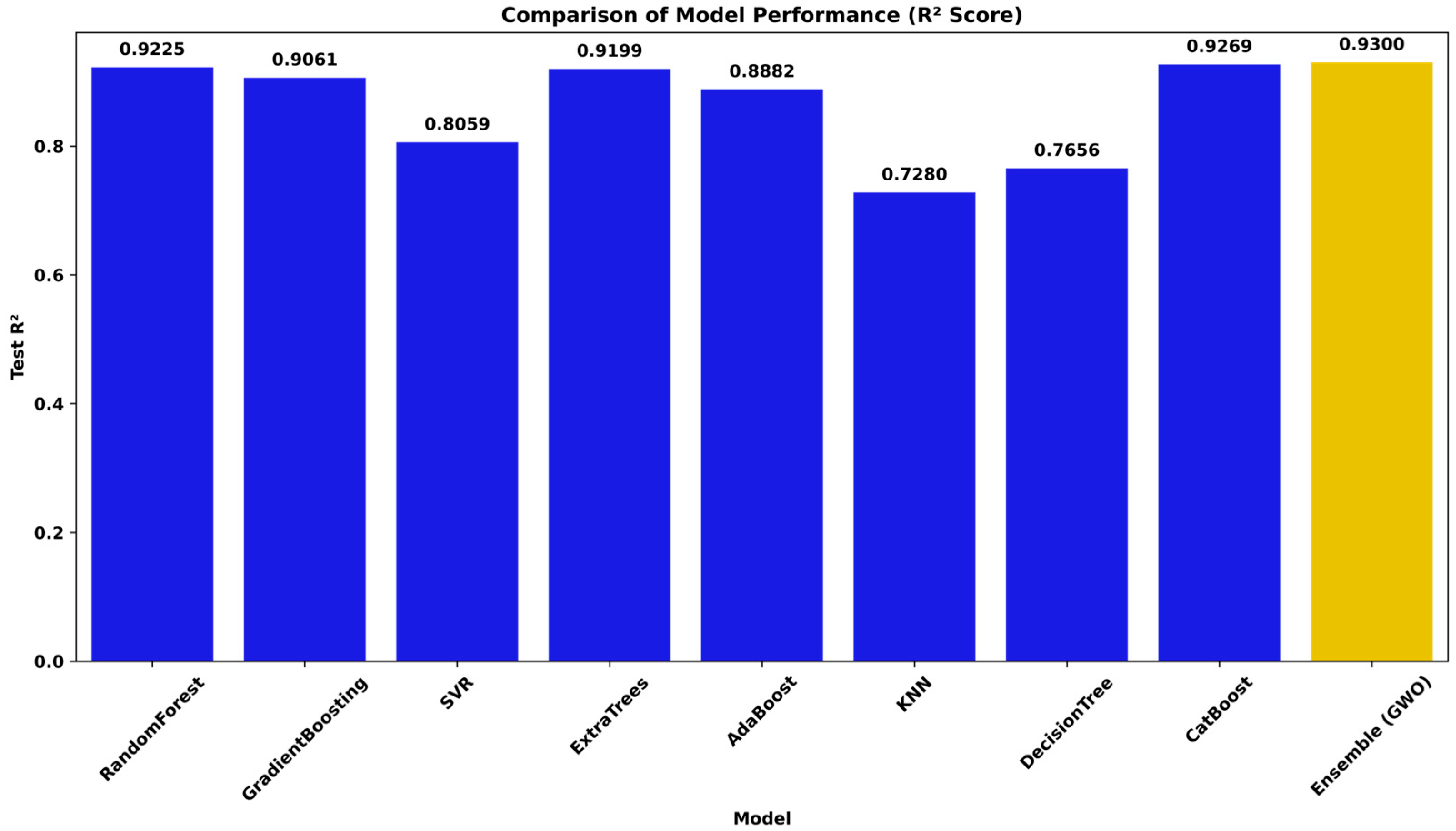

The bar chart in Figure 4 presents a comparative analysis of the test $R^2$ scores for the evaluated regression models, including Random Forest, Gradient Boosting, SVR, Extra Trees, AdaBoost, KNN, Decision Tree, CatBoost, and the GWO-optimized ensemble. It is evident from the plot that the GWO ensemble model achieves the highest coefficient of determination, with an $R^2$ value of 0.9300. CatBoost (0.9269), Random Forest (0.9225), and Extra Trees (0.9199) also demonstrate strong test performance, whereas models such as SVR (0.8059), KNN (0.7280), and Decision Tree (0.7656) display notably lower predictive capacity. The improvement in $R^2$ observed for the ensemble model over the best individual algorithms illustrates the value of optimized model combination, achieved through metaheuristic weighting, in enhancing overall predictive accuracy and robustness. This reflects the advantage of the ensemble strategy in extracting richer information and reducing model-specific bias, ultimately leading to better generalization on unseen data.

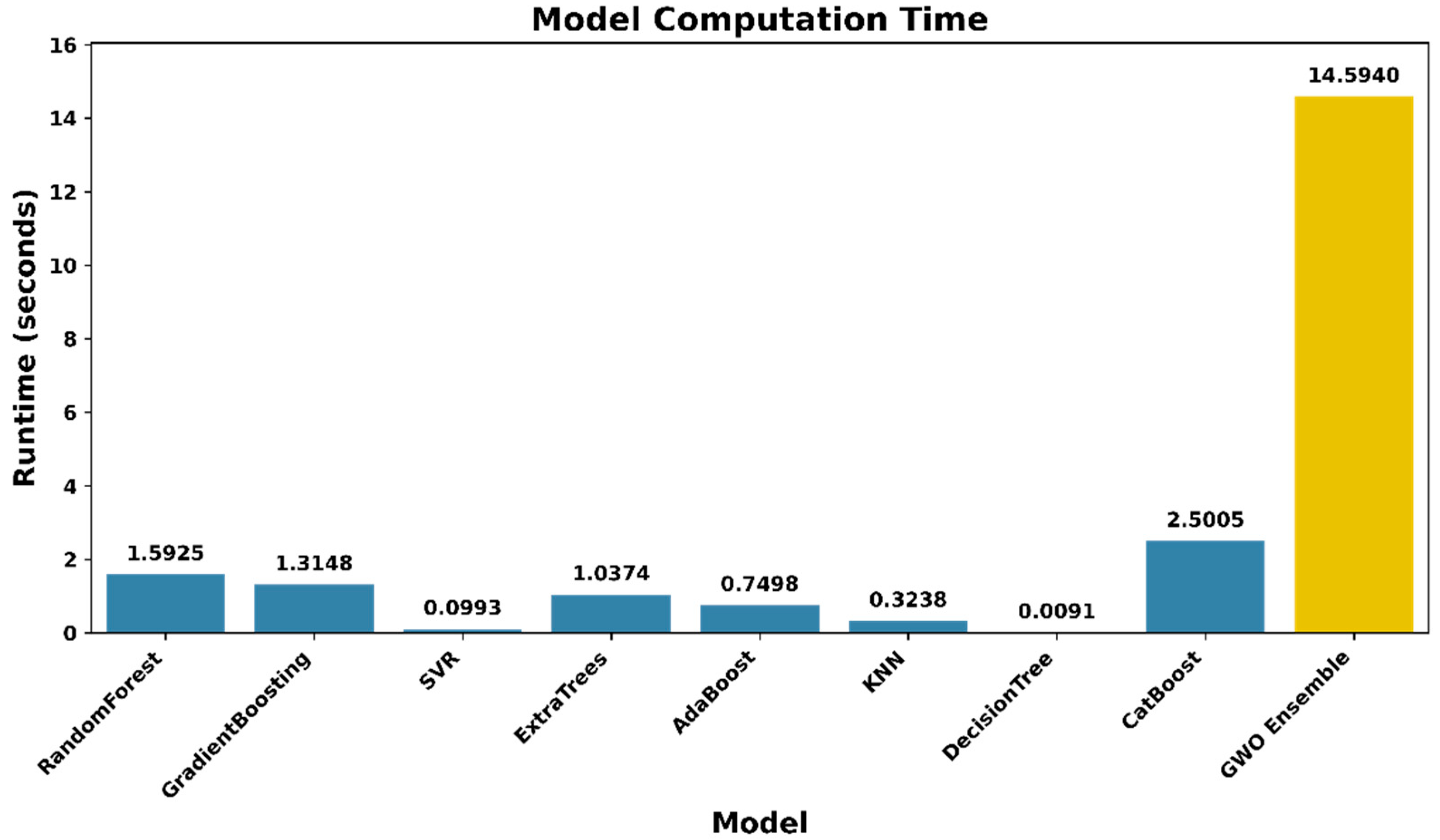

Figure 5 presents the training and prediction runtime (in seconds) for eight individual regressors and the proposed GWO-optimized ensemble. Lightweight models such as Decision Tree (0.009 s) and SVR (0.099 s) exhibit the lowest computational overhead, while ensemble methods, including Random Forest (1.59 s), Gradient Boosting (1.31 s), and CatBoost (2.50 s) require slightly more time due to their iterative tree-building processes. The GWO ensemble, which optimizes combination weights over 1000 iterations with a population size of 20, incurs the highest runtime (14.59 s). This reflects the inherent cost of meta-heuristic optimization but is justified when improvements in predictive performance are critical. The results highlight a clear trade-off between computational efficiency and model sophistication, informing practical deployment choices under resource constraints.

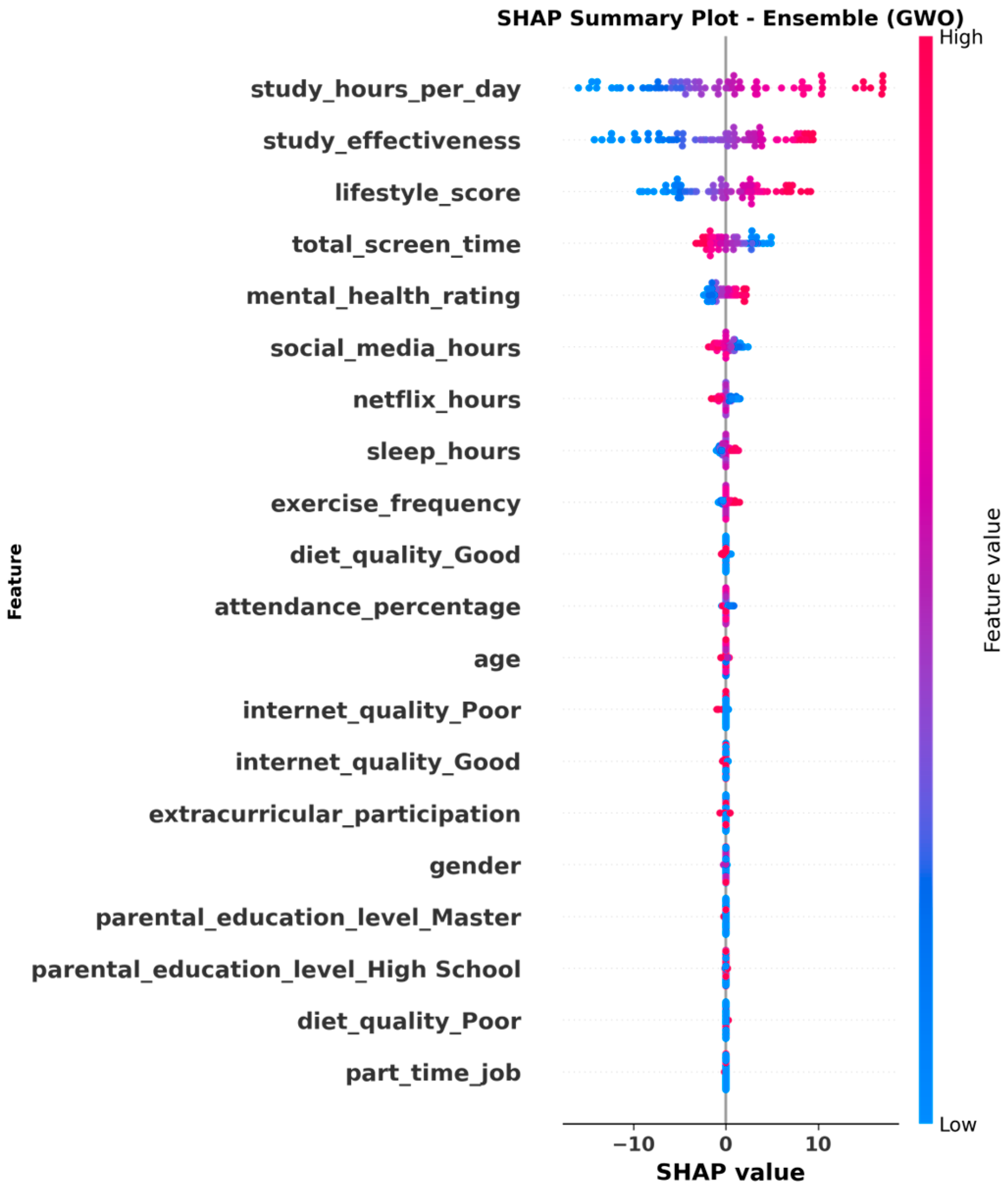

The SHAP summary plot in

Figure 6 for the GWO-optimized ensemble model provides a comprehensive explanation of the relative importance and directionality of each input feature in shaping the model’s predictions. The plot ranks features by their mean absolute SHAP value, with features at the top exerting a stronger impact on the output. In this case, study hours per day, and study effectiveness emerged as the most influential predictors, with higher values of these features consistently contributing to an increase in predicted exam scores. Lifestyle score, total screen time, and mental health rating also show considerable importance, highlighting the multifaceted nature of academic performance, which encompasses both study behaviors and well-being metrics. The color gradient, with blue representing low feature values and pink representing high values, clarifies the magnitude and direction of the effect. For example, a higher study hours per day is associated with positive SHAP values, indicating that increasing study hours typically raises predicted exam scores. Similarly, higher lifestyle score and mental health rating are associated with favorable outcomes, indicating that holistic well-being correlates with academic success. Features such as social media hours, Netflix hours, and total screen time demonstrate a more nuanced effect, with high values yielding both positive and negative SHAP contributions, reflecting the complex relationship between screen time habits and performance. Several categorical and demographic features, such as gender, extracurricular participation, and various levels of parental education, appear lower in the ranking, indicating relatively minor direct influence under the ensemble framework. Furthermore, the compact spread of SHAP values for less impactful features, such as part time job or diet quality poor, suggests limited variability in how these aspects affect the model’s output. 
Meanwhile, features associated with academic habits, attendance, and select well-being attributes display a wider spread, underscoring their greater explanatory relevance. The SHAP analysis affirms that the ensemble model’s predictions are predominantly shaped by factors explicitly linked to study behavior, personal effectiveness, and lifestyle, while secondary features contribute modestly. This provides transparency into the inner workings of the optimized ensemble and robust interpretability for stakeholders seeking to understand drivers of academic performance in complex predictive modeling contexts.
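The ranking used in a SHAP summary plot, ordering features by mean absolute SHAP value, can be sketched as follows. The SHAP values below are made-up numbers for three of the features named above and are not results from this study; in practice the matrix would be produced by a SHAP explainer applied to the fitted ensemble. The function name `rank_by_mean_abs_shap` is hypothetical.

```python
def rank_by_mean_abs_shap(shap_rows, feature_names):
    """Rank features by the mean absolute SHAP value across samples."""
    n_samples = len(shap_rows)
    scores = {
        name: sum(abs(row[j]) for row in shap_rows) / n_samples
        for j, name in enumerate(feature_names)
    }
    # Largest mean |SHAP| first, matching the top-to-bottom order of a summary plot.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical SHAP values: one row per student, one column per feature.
features = ["study_hours_per_day", "mental_health_rating", "part_time_job"]
shap_values = [
    [6.0, -1.5, 0.1],
    [-4.0, 2.5, -0.1],
    [5.0, -2.0, 0.1],
]

ranking = rank_by_mean_abs_shap(shap_values, features)
for name, score in ranking:
    print(name, round(score, 2))
```

Taking the absolute value before averaging matters: a feature whose contributions are large but change sign across students would otherwise average out to near zero and appear unimportant. The sign information is what the color gradient and horizontal position in the summary plot convey separately.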

Beyond confirming expected influences such as study hours and study effectiveness, the SHAP analysis revealed several less obvious relationships. Notably, total screen time showed a bidirectional effect: moderate engagement was associated with higher predicted performance, whereas excessive usage produced sharply negative SHAP values, which may reflect cognitive overload once usage exceeds a distraction threshold. Similarly, the interaction between mental health rating and lifestyle score was nonlinear: students maintaining a moderate lifestyle balance achieved higher predicted scores than those at either extreme of the wellness scale, suggesting that overly structured routines may reduce cognitive flexibility. These dependencies provide educators with actionable insights. For instance, academic advisors can use SHAP-derived thresholds to identify students at risk of digital overuse, while institutional wellness programs can direct counseling resources toward maintaining behavioral balance rather than simply increasing study time. Such interpretability links predictive analytics with evidence-based educational interventions, reinforcing the model’s utility beyond predictive accuracy.
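One way such a SHAP-derived threshold could be estimated is sketched below. The sketch assumes a threshold is defined as the feature value at which a feature's SHAP contribution changes from non-negative to negative, located by linear interpolation between neighboring samples. This definition, the function name `shap_sign_threshold`, and the screen-time values are illustrative assumptions rather than the procedure used in this study.

```python
def shap_sign_threshold(feature_values, shap_values):
    """Estimate where SHAP contributions turn from non-negative to negative.

    Samples are sorted by feature value; the last crossing from a
    non-negative to a negative SHAP value is located by linear
    interpolation. Returns None when no such crossing exists.
    """
    pairs = sorted(zip(feature_values, shap_values))
    threshold = None
    for (x0, s0), (x1, s1) in zip(pairs, pairs[1:]):
        if s0 >= 0 > s1:
            # Zero crossing of the line through (x0, s0) and (x1, s1).
            threshold = x0 + (x1 - x0) * s0 / (s0 - s1)
    return threshold


# Hypothetical daily screen time (hours) and SHAP values for that feature.
screen_hours = [1, 2, 3, 4, 5, 6, 7, 8]
screen_shap = [0.2, 0.6, 0.9, 0.7, 0.3, -0.3, -1.2, -2.0]

threshold = shap_sign_threshold(screen_hours, screen_shap)
print(f"SHAP contribution turns negative near {threshold:.1f} hours/day")
```

Real SHAP dependence data are noisy and may cross zero many times, so in practice the values would likely need to be binned or smoothed before a threshold of this kind is read off.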

5. Conclusions

This study systematically investigated the efficacy of a GWO-based ensemble model for predicting academic performance, combining three advanced machine learning regressors: CatBoost, Extra Trees, and Random Forest. Empirical results demonstrated that the proposed metaheuristically weighted ensemble consistently outperformed all individual base learners on both validation and test data across the reported evaluation metrics. The findings support the hypothesis that an optimizer-driven ensemble can capitalize on the complementary strengths and distinct error profiles of its components, thereby delivering enhanced accuracy, generalizability, and robustness. The optimized weighting gave greater influence to models with higher predictive reliability while retaining diversity in the ensemble to reduce the risk of overfitting. Notwithstanding these promising results, several limitations should be acknowledged. First, the optimization was conducted on a single dataset, which may leave the model susceptible to data-specific bias and limit generalizability under different data distributions. Second, the current framework relies primarily on a weighted aggregation of base model predictions; potentially richer ensemble strategies were not explored. Third, population-based optimization increases computational cost, especially with large datasets or more complex models, which could limit scalability in practical applications.
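For readers unfamiliar with the weighting mechanism summarized above, the following is a minimal sketch of Grey Wolf Optimizer weight search over three base-model predictions. It is a sketch under stated assumptions, not the configuration used in this study: weights are constrained to [0, 1] and normalized to sum to one, mean squared error is the fitness function, and the population size, iteration count, target values, and prediction vectors are all hypothetical.

```python
import random


def normalize(raw):
    """Scale non-negative raw weights to sum to 1 (uniform if all are zero)."""
    total = sum(raw)
    if total <= 0:
        return [1.0 / len(raw)] * len(raw)
    return [v / total for v in raw]


def ensemble_mse(raw, preds, y):
    """Mean squared error of the weighted average of the base predictions."""
    w = normalize(raw)
    total = 0.0
    for i, target in enumerate(y):
        blended = sum(w[k] * preds[k][i] for k in range(len(w)))
        total += (blended - target) ** 2
    return total / len(y)


def gwo_weights(preds, y, n_wolves=12, n_iter=60, seed=0):
    """Search ensemble weights with a basic Grey Wolf Optimizer."""
    rng = random.Random(seed)
    dim = len(preds)
    wolves = [[rng.random() for _ in range(dim)] for _ in range(n_wolves)]
    best, best_fit = None, float("inf")
    for t in range(n_iter):
        ranked = sorted(wolves, key=lambda w: ensemble_mse(w, preds, y))
        top_fit = ensemble_mse(ranked[0], preds, y)
        if top_fit < best_fit:
            best, best_fit = list(ranked[0]), top_fit
        # Alpha, beta, and delta wolves: the three best current positions.
        leaders = [list(w) for w in ranked[:3]]
        a = 2.0 - 2.0 * t / n_iter  # decreases linearly from 2 toward 0
        for w in wolves:
            for j in range(dim):
                pulls = []
                for leader in leaders:
                    A = 2.0 * a * rng.random() - a
                    C = 2.0 * rng.random()
                    D = abs(C * leader[j] - w[j])
                    pulls.append(leader[j] - A * D)
                # Average the three pulls and clip to the [0, 1] bounds.
                w[j] = min(1.0, max(0.0, sum(pulls) / 3.0))
    final = min(wolves, key=lambda w: ensemble_mse(w, preds, y))
    if ensemble_mse(final, preds, y) < best_fit:
        best = list(final)
    return normalize(best)


# Synthetic exam scores and three hypothetical base-model prediction vectors.
y = [62.0, 75.0, 88.0, 54.0, 91.0, 70.0]
preds = [
    [60.0, 77.0, 85.0, 57.0, 93.0, 68.0],
    [65.0, 72.0, 90.0, 52.0, 88.0, 73.0],
    [63.0, 76.0, 86.0, 50.0, 95.0, 71.0],
]

weights = gwo_weights(preds, y)
print("weights:", [round(w, 3) for w in weights])
print("ensemble MSE:", round(ensemble_mse(weights, preds, y), 3))
```

Each iteration evaluates the fitness of every wolf, so the cost grows with the population size, the number of iterations, and the size of the validation set, which is the scalability concern noted among the limitations.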

Future research should address these limitations by incorporating multiple optimization methods to further enhance robustness, evaluating hybrid metaheuristic algorithms to improve optimization performance, and investigating nonlinear stacking or blending architectures. Additionally, extending the analysis to broader, more heterogeneous educational datasets and exploring model explainability tailored to domain experts would strengthen both the scientific foundation and the real-world impact of ensemble-based predictive analytics in educational settings. Taken together, the results position GWO-optimized ensembles as a compelling methodological option for regression tasks involving complex, multifactorial input data. By integrating metaheuristic optimization with state-of-the-art machine learning, this study contributes both methodological innovation and empirical evidence to the expanding literature at the intersection of artificial intelligence and educational data science.