Prediction of TBM Performance Under Imbalanced Geological Conditions Using Resampling and Data Synthesis Techniques

Abstract

1. Introduction

2. Related Work

2.1. TBM Performance Prediction Methods

2.1.1. Theoretical Method

2.1.2. Empirical Method

2.1.3. Time-Series Prediction Method

2.2. Classification on Imbalanced Datasets

2.2.1. Misclassification Cost Method

2.2.2. Resampling Method

2.2.3. One-Class Classification Method

2.2.4. Active Learning Method

3. Proposed Method

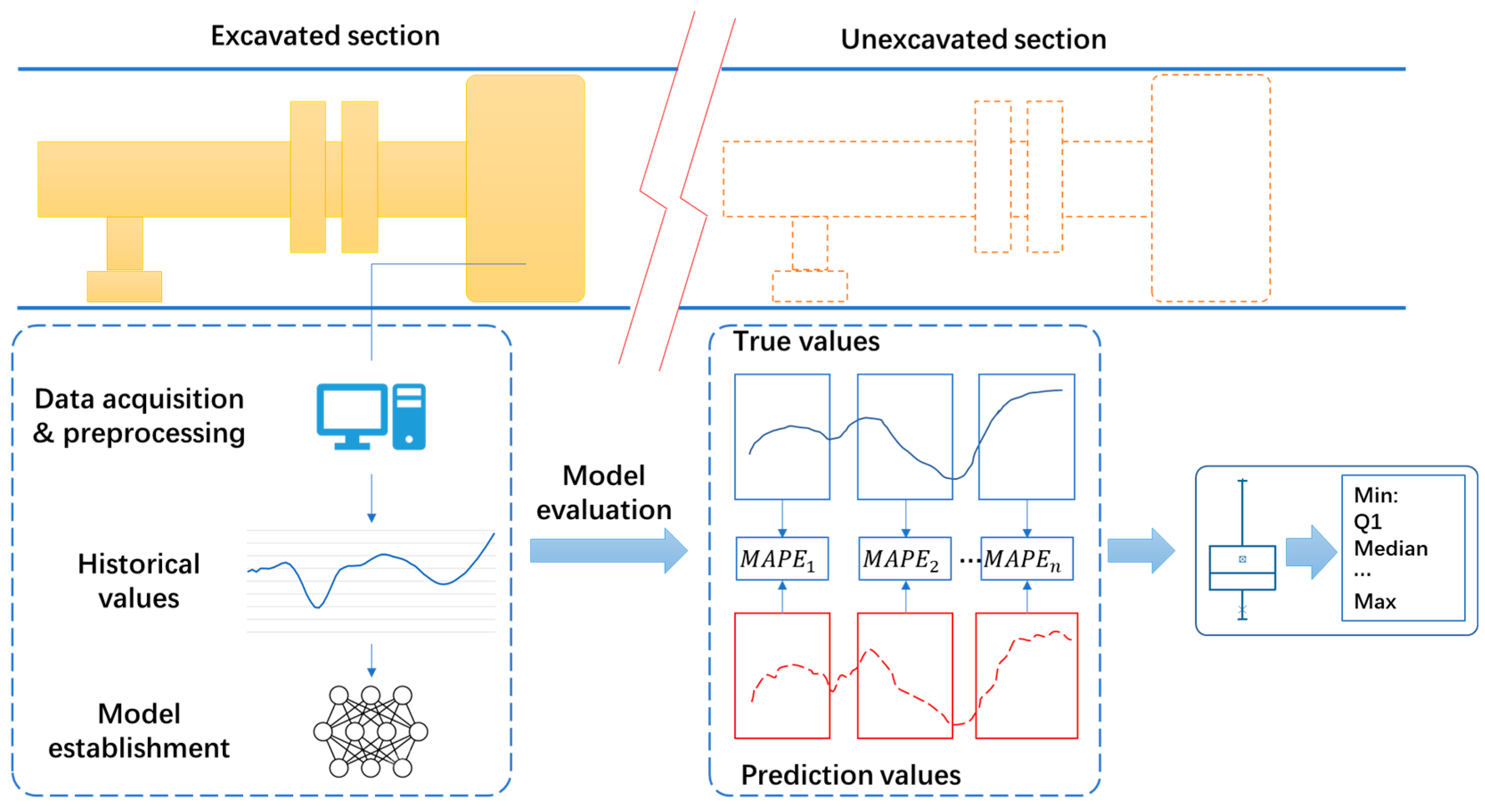

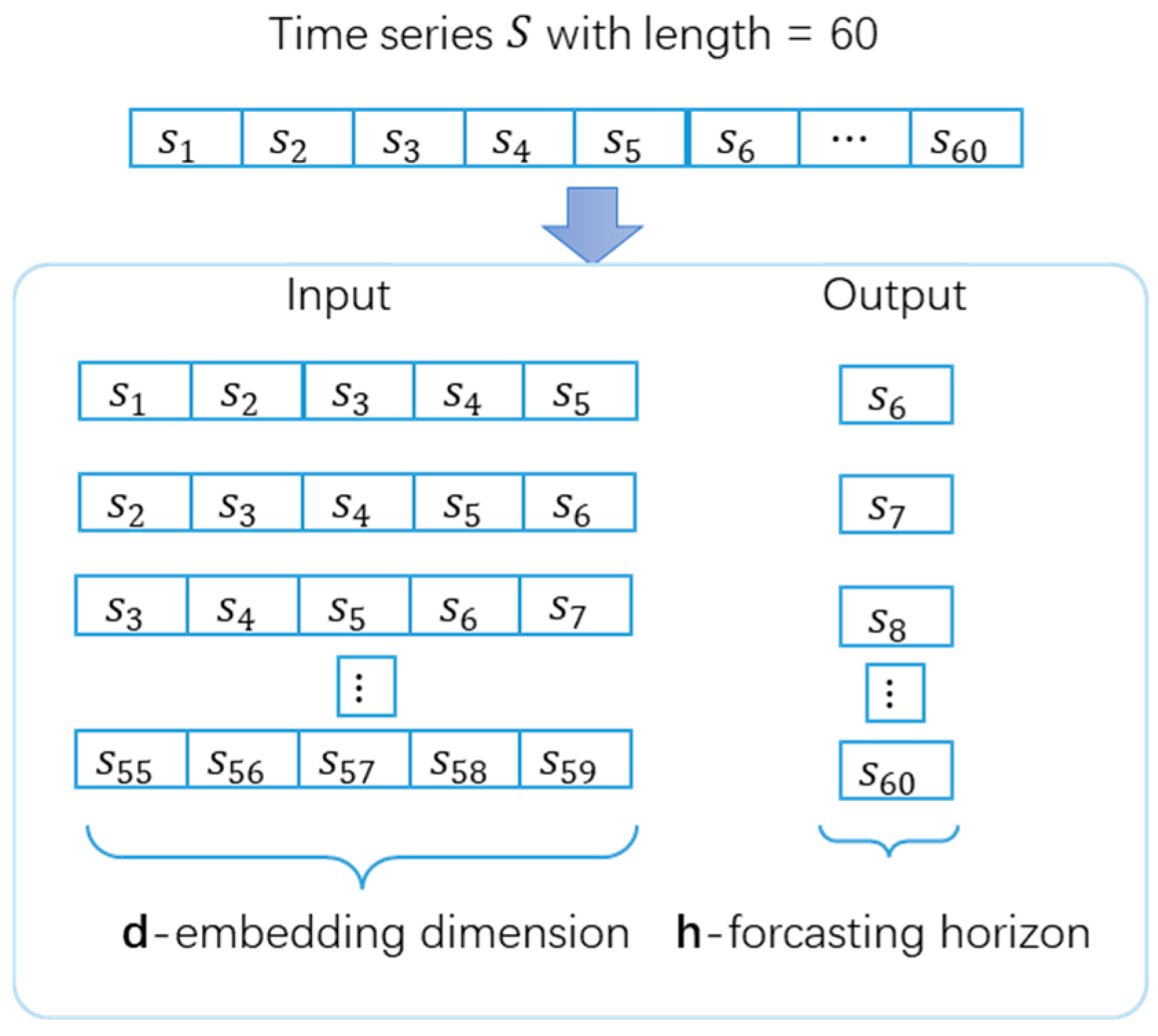

3.1. Dataset Preparation and Model Establishment

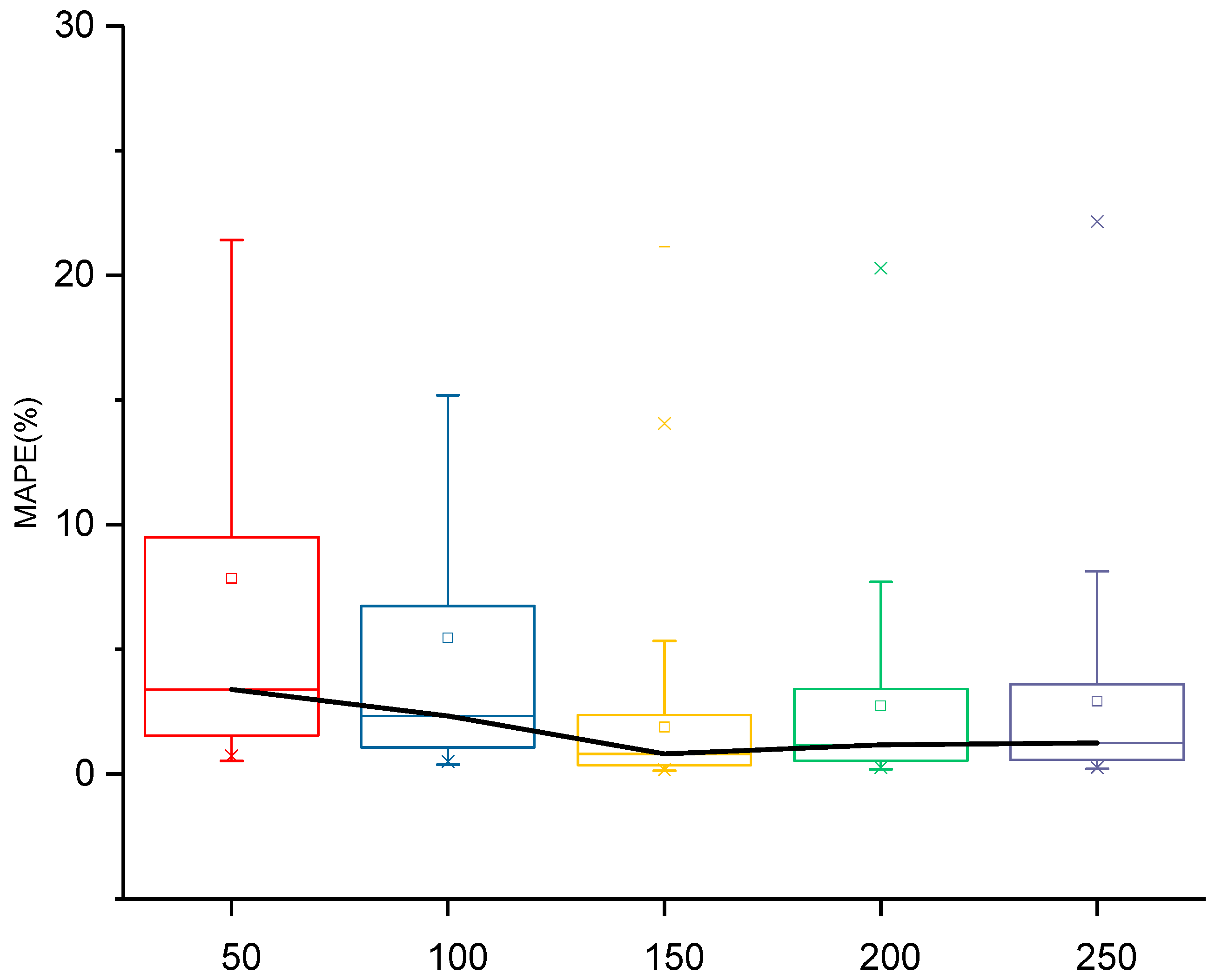

3.2. Influence of the Number of Training Subseries on Prediction Results

3.3. Influence of Dataset Imbalance on Prediction Results of TBM Performance

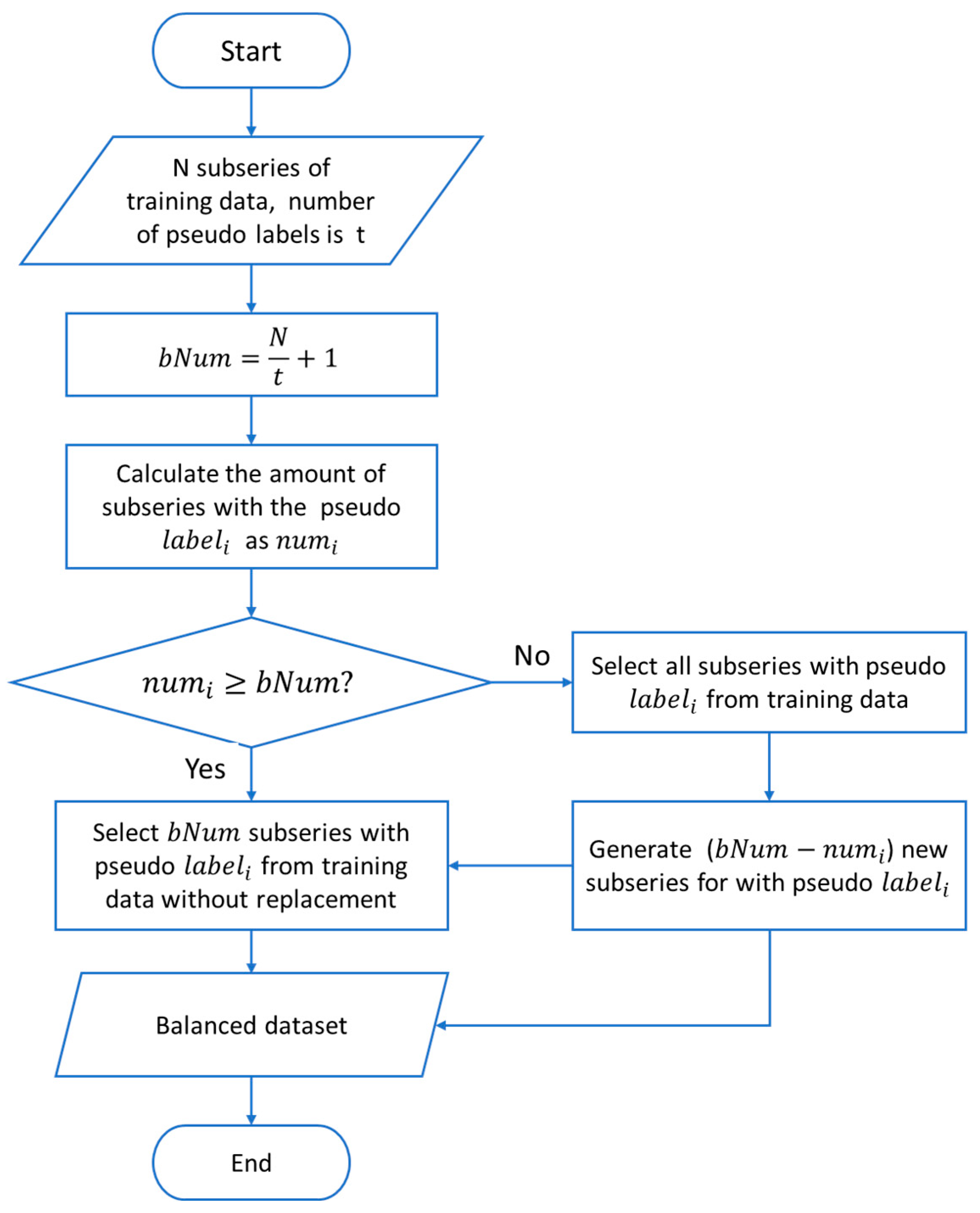

3.4. Creating Balanced Training Datasets for the TBM Performance Prediction Model

4. Results and Discussion

4.1. An Overall Comparison of Prediction Results

4.2. A Detailed Comparison of Prediction Results Using Pseudo Labels

4.3. Comparison with Traditional Methods

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

| Hyperparameter | Value |
|---|---|
| Input sequence length | 6 |
| Output sequence length | 1 |
| Hidden layers | 3 |
| Neurons per hidden layer | 64 |
| Activation function | ReLU |
| Learning rate | 0.001 |
| Epochs | 400 |
| Mini-batch size | 64 |
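The hyperparameter table specifies an input length of 6 and an output length of 1, which is consistent with cutting the TBM operating series into overlapping training subseries. The NumPy sketch below illustrates that windowing step under stated assumptions: a stride of one time step and a (time, feature) array layout. The function name `make_subseries` and the `HYPERPARAMS` dictionary are hypothetical, and the network type itself is not specified in this section.

```python
from typing import Tuple

import numpy as np

# Values transcribed from the hyperparameter table; the network type is not stated there.
HYPERPARAMS = {
    "input_len": 6,
    "output_len": 1,
    "hidden_layers": 3,
    "neurons_per_layer": 64,
    "activation": "relu",
    "learning_rate": 1e-3,
    "epochs": 400,
    "batch_size": 64,
}


def make_subseries(series, input_len: int = 6, output_len: int = 1) -> Tuple[np.ndarray, np.ndarray]:
    """Cut a (T, F) series into overlapping (input, target) subseries with stride 1 (assumed).

    Returns X of shape (N, input_len, F) and y of shape (N, output_len, F),
    where N = T - input_len - output_len + 1.
    """
    data = np.asarray(series, dtype=float)
    if data.ndim == 1:
        data = data[:, None]  # treat a 1-D series as a single feature
    n = data.shape[0] - input_len - output_len + 1
    if n <= 0:
        raise ValueError("series is too short for the requested window lengths")
    X = np.stack([data[i:i + input_len] for i in range(n)])
    y = np.stack([data[i + input_len:i + input_len + output_len] for i in range(n)])
    return X, y


# Example: 10 time steps give 10 - 6 - 1 + 1 = 4 training subseries.
X, y = make_subseries(np.arange(10), HYPERPARAMS["input_len"], HYPERPARAMS["output_len"])
n_batches = -(-len(X) // HYPERPARAMS["batch_size"])  # ceil division: mini-batches per epoch
```

With a stride of one, a series of T steps yields T − 6 − 1 + 1 = T − 6 subseries, so the number of training subseries grows linearly with the length of the recorded series.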

| Dataset | Mean | Min | 1st Quartile (Q1) | Median | 3rd Quartile (Q3) | Max | IQR (Q3−Q1) |
|---|---|---|---|---|---|---|---|
| Imbalanced | 1.893 | 0.138 | 0.372 | 0.816 | 2.364 | 21.160 | 1.992 |
| Balanced | 1.338 | 0.208 | 0.623 | 0.914 | 1.542 | 20.343 | 0.919 |
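Each row of the table above reports the same seven descriptive statistics. The sketch below computes them for a one-dimensional array of per-sample values. The values are presumably prediction errors, although the metric is not named in this section. The helper name `summary_stats` is hypothetical, and NumPy's default linear interpolation for the quartiles is an assumption that may differ slightly from the method behind the published figures.

```python
import numpy as np


def summary_stats(values) -> dict:
    """Mean, min, Q1, median, Q3, max and IQR of a 1-D array of values."""
    v = np.asarray(values, dtype=float).ravel()
    # np.percentile defaults to linear interpolation between order statistics.
    q1, median, q3 = np.percentile(v, [25, 50, 75])
    return {
        "mean": float(v.mean()),
        "min": float(v.min()),
        "q1": float(q1),
        "median": float(median),
        "q3": float(q3),
        "max": float(v.max()),
        "iqr": float(q3 - q1),
    }


# Sanity check on the published rows: the IQR column should equal Q3 - Q1 up to rounding.
reported = {"Imbalanced": (0.372, 2.364, 1.992), "Balanced": (0.623, 1.542, 0.919)}
for name, (q1, q3, iqr) in reported.items():
    assert abs((q3 - q1) - iqr) < 1e-3, name
```

Because the IQR depends only on the middle half of the data, it is insensitive to the extreme maxima in the Max column. This makes it a useful complement to the mean when the two datasets are compared.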

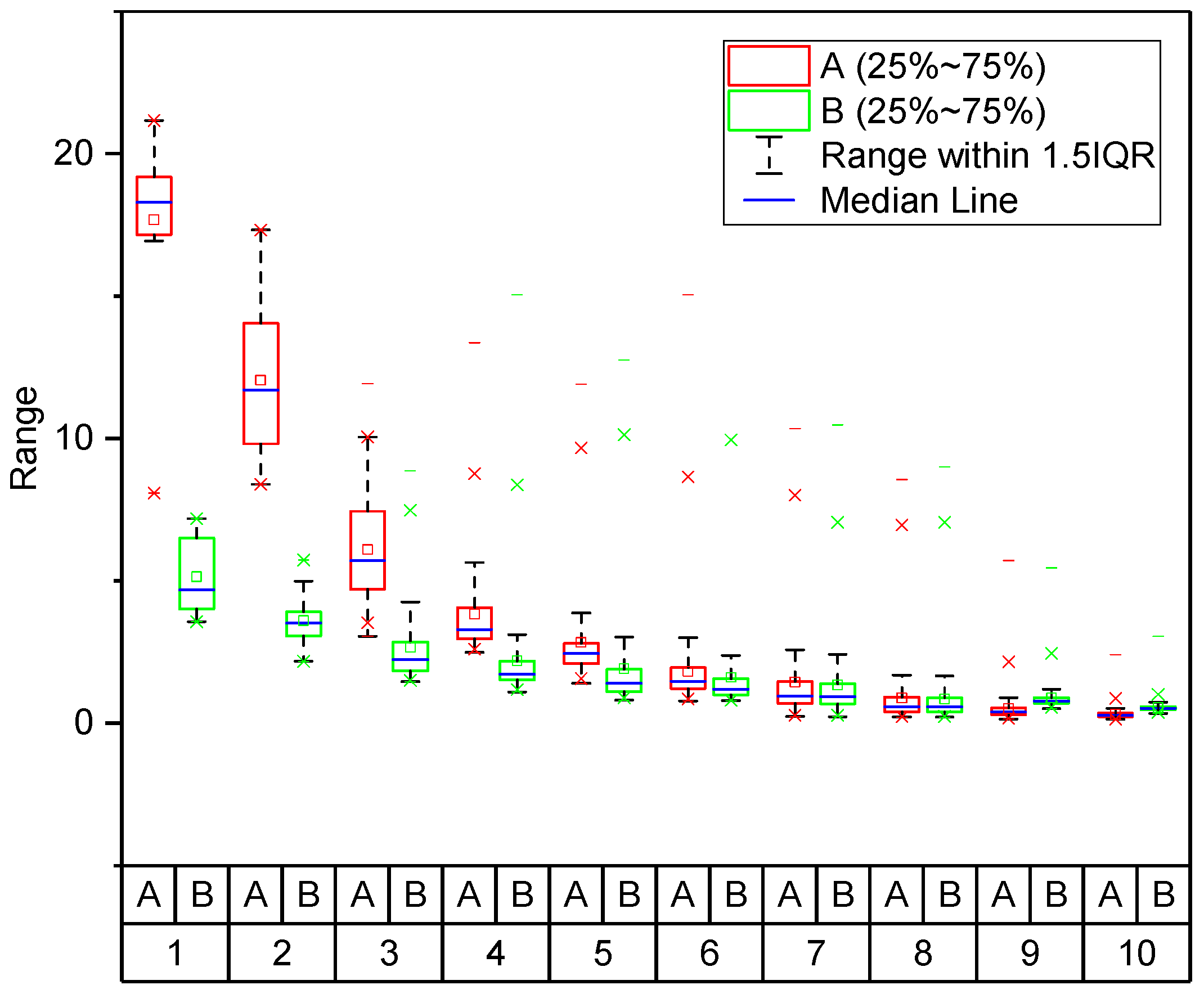

| Sampling Method | Pseudo Label | Dataset | Mean | Min | Q1 | Median | Q3 | Max | IQR |
|---|---|---|---|---|---|---|---|---|---|
| Oversampling | 1 | A | 17.674 | 8.085 | 17.156 | 18.286 | 19.179 | 21.160 | 2.023 |
| Oversampling | 1 | B | 5.144 | 3.567 | 4.008 | 4.684 | 6.500 | 7.182 | 2.492 |
| Oversampling | 2 | A | 12.042 | 8.386 | 9.817 | 11.701 | 14.045 | 17.319 | 4.228 |
| Oversampling | 2 | B | 3.596 | 2.175 | 3.062 | 3.512 | 3.912 | 5.733 | 0.851 |
| Oversampling | 3 | A | 6.092 | 3.056 | 4.705 | 5.715 | 7.432 | 11.927 | 2.727 |
| Oversampling | 3 | B | 2.664 | 1.463 | 1.835 | 2.235 | 2.853 | 8.866 | 1.018 |
| Oversampling | 4 | A | 3.825 | 2.484 | 2.962 | 3.296 | 4.057 | 13.362 | 1.095 |
| Oversampling | 4 | B | 2.194 | 1.095 | 1.524 | 1.724 | 2.181 | 15.034 | 0.658 |
| Oversampling | 6 | A | 1.816 | 0.781 | 1.217 | 1.468 | 1.955 | 15.032 | 0.738 |
| Oversampling | 6 | B | 1.609 | 0.788 | 1.004 | 1.195 | 1.565 | 20.343 | 0.561 |
| Oversampling | 7 | A | 1.442 | 0.241 | 0.687 | 0.954 | 1.472 | 10.337 | 0.786 |
| Oversampling | 7 | B | 1.340 | 0.233 | 0.675 | 0.926 | 1.385 | 10.478 | 0.710 |
| Undersampling | 5 | A | 2.838 | 1.403 | 2.094 | 2.454 | 2.806 | 11.913 | 0.712 |
| Undersampling | 5 | B | 1.921 | 0.805 | 1.100 | 1.398 | 1.897 | 12.750 | 0.797 |
| Undersampling | 8 | A | 0.883 | 0.241 | 0.687 | 0.954 | 1.472 | 10.337 | 0.786 |
| Undersampling | 8 | B | 0.852 | 0.208 | 0.393 | 0.568 | 0.901 | 9.000 | 0.508 |
| Undersampling | 9 | A | 0.507 | 0.143 | 0.295 | 0.394 | 0.544 | 5.717 | 0.248 |
| Undersampling | 9 | B | 0.880 | 0.512 | 0.697 | 0.782 | 0.898 | 5.448 | 0.202 |
| Undersampling | 10 | A | 0.322 | 0.138 | 0.233 | 0.283 | 0.356 | 2.413 | 0.123 |
| Undersampling | 10 | B | 0.557 | 0.345 | 0.477 | 0.530 | 0.588 | 3.050 | 0.111 |
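The table above groups results by pseudo label and marks each group as either oversampled or undersampled. The sketch below shows one simple way to implement that kind of group-wise balancing. It assumes the pseudo labels are already available as integer codes and resamples every group to a common target size: small groups are drawn with replacement, and large groups are drawn without replacement. The function name, the median group size as default target, and the fixed random seed are illustrative assumptions, not details taken from the article.

```python
import numpy as np


def balance_by_pseudo_label(X, labels, target=None, seed=0):
    """Resample every pseudo-label group to `target` samples.

    Groups smaller than `target` are randomly oversampled (drawn with replacement);
    groups at or above `target` are reduced by drawing without replacement.
    The default target, the median group size, is an illustrative assumption.
    """
    rng = np.random.default_rng(seed)
    X = np.asarray(X)
    labels = np.asarray(labels)
    groups, counts = np.unique(labels, return_counts=True)
    if target is None:
        target = int(np.median(counts))
    chosen = []
    for group, count in zip(groups, counts):
        idx = np.flatnonzero(labels == group)
        # Sampling with replacement is only needed when the group is too small.
        chosen.append(rng.choice(idx, size=target, replace=bool(count < target)))
    chosen = np.concatenate(chosen)
    return X[chosen], labels[chosen]


# Toy example: three pseudo-label groups of sizes 2, 5 and 20.
labels = np.array([0] * 2 + [1] * 5 + [2] * 20)
X = np.arange(labels.size).reshape(-1, 1)
Xb, lb = balance_by_pseudo_label(X, labels)  # every group now holds 5 samples
```

Drawing with replacement only repeats existing minority samples. It adds no new information, which is why synthetic methods such as SMOTE are often compared against it.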

| Method | Mean | Min | Q1 | Median | Q3 | Max | IQR |
|---|---|---|---|---|---|---|---|
| Imbalanced dataset | 1.893 | 0.138 | 0.372 | 0.816 | 2.364 | 21.160 | 1.992 |
| Random over-sampling | 1.512 | 0.261 | 0.558 | 0.951 | 1.732 | 26.268 | 1.174 |
| SMOTE | 1.576 | 0.245 | 0.593 | 1.014 | 1.656 | 33.317 | 1.063 |
| Random under-sampling | 3.945 | 0.361 | 1.169 | 2.090 | 3.856 | 87.560 | 2.687 |
| Prototype generation | 3.837 | 0.263 | 1.049 | 1.939 | 3.766 | 84.332 | 2.717 |
| Proposed method | 1.338 | 0.208 | 0.623 | 0.914 | 1.542 | 20.343 | 0.919 |
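SMOTE, one of the baselines in the comparison above, creates synthetic minority samples by interpolating between a minority sample and one of its k nearest minority-class neighbours. The self-contained NumPy sketch below implements that standard procedure on plain feature vectors. This section does not describe how SMOTE was adapted to TBM time-series windows, so this part is an assumption. The function name `smote` is hypothetical. A tested library implementation is available as `imblearn.over_sampling.SMOTE` in imbalanced-learn.

```python
import numpy as np


def smote(X_min, n_new, k=5, seed=0):
    """Generate n_new synthetic samples from minority-class rows X_min (shape (n, d)).

    Each synthetic point lies on the segment between a randomly chosen minority
    sample and one of its k nearest minority neighbours (Euclidean distance).
    """
    rng = np.random.default_rng(seed)
    X_min = np.asarray(X_min, dtype=float)
    n = X_min.shape[0]
    if n < 2:
        raise ValueError("SMOTE needs at least two minority samples")
    k = min(k, n - 1)
    # Pairwise squared distances; a sample is never its own neighbour.
    d2 = ((X_min[:, None, :] - X_min[None, :, :]) ** 2).sum(axis=-1)
    np.fill_diagonal(d2, np.inf)
    neighbours = np.argsort(d2, axis=1)[:, :k]
    base = rng.integers(0, n, size=n_new)                       # seed samples
    nb = neighbours[base, rng.integers(0, k, size=n_new)]       # one neighbour each
    gap = rng.random((n_new, 1))                                # interpolation factor in [0, 1)
    return X_min[base] + gap * (X_min[nb] - X_min[base])


# Toy example: four minority points at the corners of the unit square.
X_min = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
synthetic = smote(X_min, n_new=50, k=2)  # with k=2, points fall on the square's edges
```

Because each synthetic point is a convex combination of two real minority samples, SMOTE never extrapolates beyond the range of the observed minority data. It can, however, place points between samples that belong to physically distinct conditions.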

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Q.; Li, S.; Ling, J.; Liu, Z. Prediction of TBM Performance Under Imbalanced Geological Conditions Using Resampling and Data Synthesis Techniques. Appl. Sci. 2025, 15, 12065. https://doi.org/10.3390/app152212065
