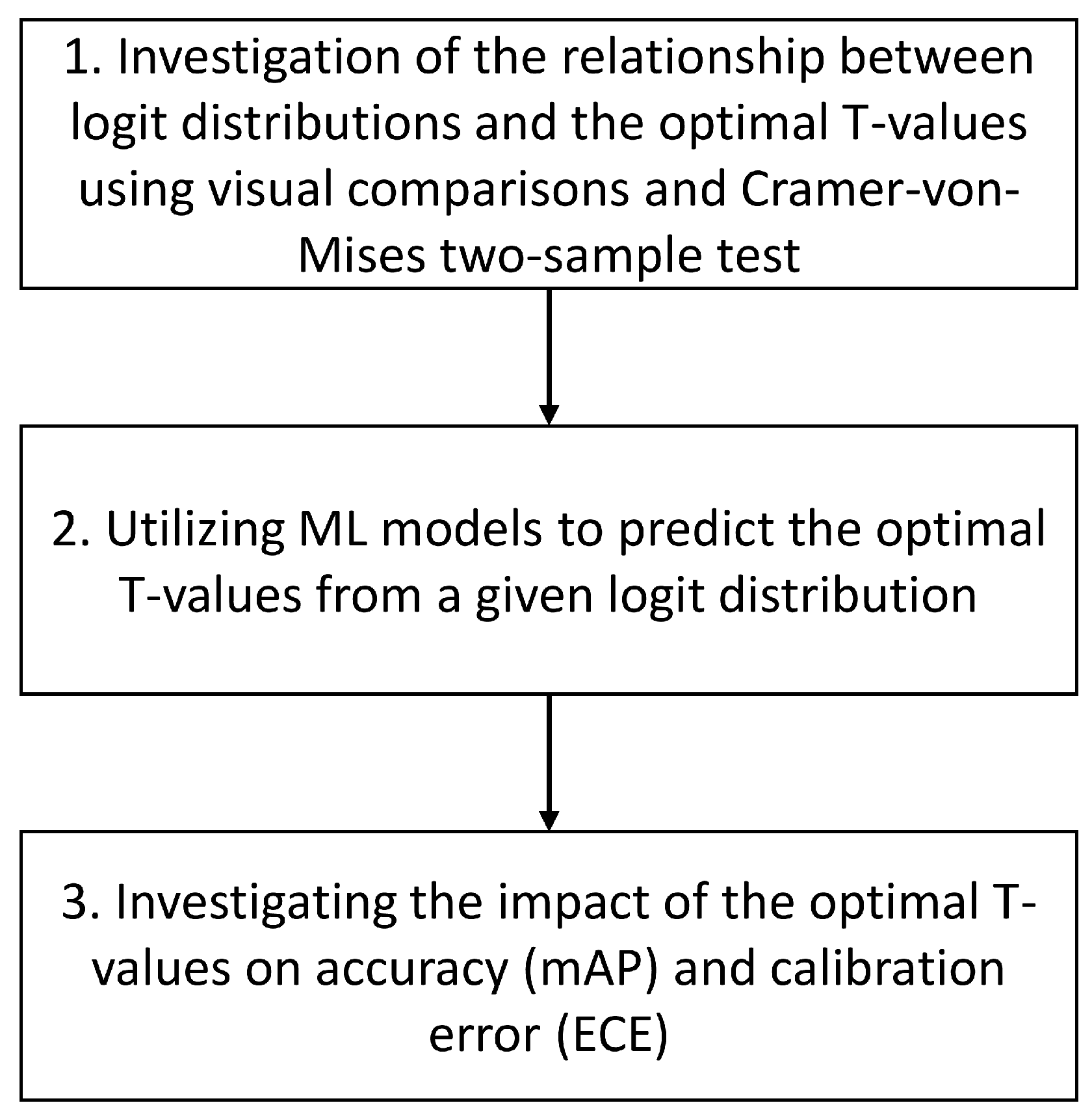

3.1. Overall Approach

This study aims to determine whether there is a correlation between the logit distributions and the optimal T-values of different datasets in order to achieve the best possible calibration using the Temperature Scaling calibration method. Furthermore, the extent to which modeling is possible is investigated, i.e., whether the optimal T-values can be predicted based on a given logit distribution. Finally, the influence of the method on the accuracy (mAP) and calibration error (ECE) of the predictions is determined.

For all investigations carried out within the scope of this study, the model YOLO-World-V2.1-L (resolution: 1280) [15] is used, which detects objects using bounding boxes. A maximum of 300 predictions per image is accepted (default setting). The reasons for using this model are listed in Section 1. The literature source [16] explains how false positives (FP) and true positives (TP) are determined.

Figure 1 provides a summary of the methodology. In the first step, the relationship between the logit distributions and the optimal T-values is investigated. To do this, the optimal T-values, which differ from dataset to dataset, must first be determined. In total, the optimal T-values are derived from 7 different datasets (LVIS minival [12], Open Images V7 [22,23], Pascal VOC [24], COCO [25], EgoObjects [26], Objects365v1 [14] and a self-created dataset (see Section 3.2)) with different variants (see Section 4.1 for further explanations).

The ECE, which can be calculated according to Equation (6), is used as the metric to be minimized. This means that a T-value is optimal if the ECE is minimal. Based on [16], two IoU (intersection over union) threshold values (0.5 and 0.75) are considered for the calculation of the ECE, i.e., there are also two corresponding optimal T-values, one for the minimum ECE at an IoU threshold of 0.5 and one for the minimum ECE at an IoU threshold of 0.75

. The temperature values are set to

in accordance with [16,19]. The set contains

as the minimum and

as the maximum value. For each T-value in the set, the ECE at an IoU threshold of 0.5 and the ECE at an IoU threshold of 0.75 are calculated, and the T-value with the minimum ECE is selected for each threshold. For the calculation of the ECE,

applies to all investigations.
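The search for the optimal T-value can be sketched as a simple grid search. This is a minimal illustration under stated assumptions, not the implementation used in the study: the ECE is computed as a standard binned calibration error (the exact form of Equation (6) may differ), confidences are assumed to be sigmoid outputs, and the bin count `n_bins=10` and the grid bounds in `t_grid` are hypothetical placeholders. Only the step size of 0.05 is taken from the text.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def ece(confidences, is_tp, n_bins=10):
    """Binned calibration error: weighted |mean confidence - precision| per bin."""
    bins = [[] for _ in range(n_bins)]
    for conf, hit in zip(confidences, is_tp):
        idx = min(int(conf * n_bins), n_bins - 1)
        bins[idx].append((conf, hit))
    total = len(confidences)
    error = 0.0
    for members in bins:
        if not members:
            continue
        mean_conf = sum(c for c, _ in members) / len(members)
        precision = sum(h for _, h in members) / len(members)
        error += len(members) / total * abs(mean_conf - precision)
    return error

def best_temperature(logits, is_tp, t_grid, n_bins=10):
    """Return the (T, ECE) pair with the smallest ECE over the grid."""
    best_t, best_ece = None, float("inf")
    for t in t_grid:
        confidences = [sigmoid(z / t) for z in logits]
        current = ece(confidences, is_tp, n_bins)
        if current < best_ece:
            best_t, best_ece = t, current
    return best_t, best_ece

# Hypothetical grid in steps of 0.05; the bounds are placeholders.
t_grid = [round(0.05 * k, 2) for k in range(1, 61)]
```

In practice, the function would be called once per IoU threshold, since the TP/FP labels in `is_tp` depend on the threshold.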

After the optimal T-values have been determined for each dataset, the logit distribution, which contains the logits of all predictions of a dataset, is extracted and then filtered. YOLO-World uses an internal confidence threshold of 0.001 for all predictions, i.e., it rejects all predictions whose confidence is less than or equal to 0.001. With a sigmoid confidence and $T = 1$, it follows from Equations (2) and (3) that

$$\frac{1}{1 + e^{-z}} > 0.001$$

and, rearranged for the logit $z$, this results in

$$z > \ln\left(\frac{0.001}{1 - 0.001}\right) \approx -6.907 \qquad (8)$$

This means that only the logit values that satisfy the condition from Equation (8) are retained, which greatly reduces the number of logits; the discarded logits would not be considered anyway. The logit distribution is always determined at a T-value of $T = 1$, which corresponds to the prediction of YOLO-World without temperature scaling.
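The filtering step can be illustrated with a short sketch. It assumes that the confidence is the sigmoid of the logit at $T = 1$; the function names `logit_threshold` and `filter_logits` are hypothetical helpers introduced here for illustration.

```python
import math

def logit_threshold(conf_threshold=0.001):
    """Logit at which the sigmoid confidence equals the threshold (T = 1)."""
    return math.log(conf_threshold / (1.0 - conf_threshold))

def filter_logits(logits, conf_threshold=0.001):
    """Keep only logits whose sigmoid confidence exceeds the threshold."""
    z_min = logit_threshold(conf_threshold)
    return [z for z in logits if z > z_min]

print(round(logit_threshold(), 4))  # -6.9068
```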

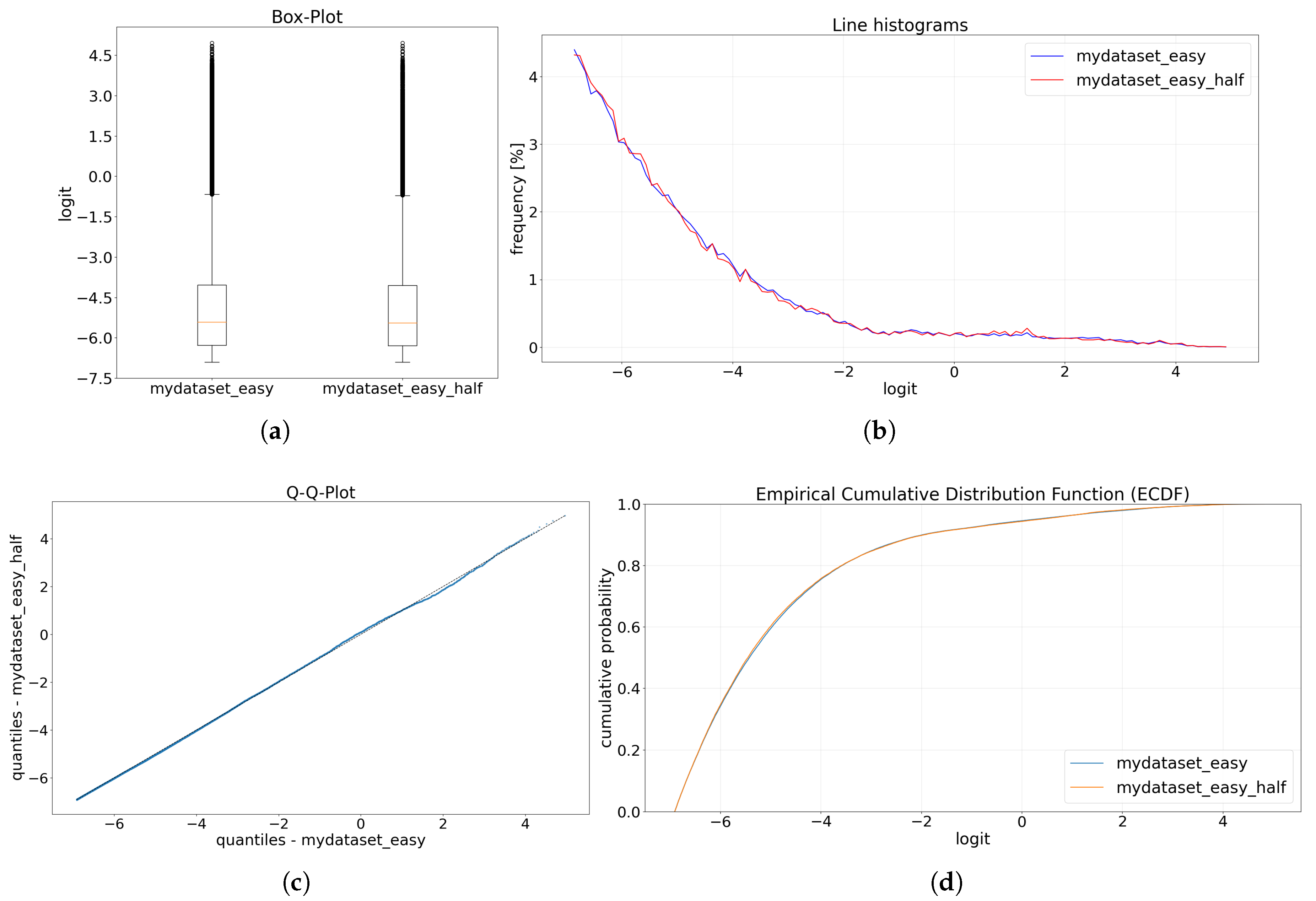

To check the relationship between the optimal T-values and the logit distributions, selected distributions are first compared visually to obtain an initial assessment of whether equal optimal T-values are based on similar distributions and whether unequal T-values are based on dissimilar distributions. For the visual comparison, four diagrams are used: box plot, line histogram, Q-Q plot (quantile-quantile plot) and ECDF (empirical cumulative distribution function) plot. The box plot shows the first quartile, median and third quartile by default; following Tukey [27], the whiskers extend up to 1.5 times the interquartile range (IQR) beyond the quartiles. Outliers (data points outside the whiskers) are marked as points [28]. For the Q-Q plot, a total of 10,000 quantiles are calculated per logit distribution and plotted against each other for two distributions. The bin size of the histograms is calculated using the Freedman-Diaconis estimator [29], whereby a common bin size is calculated from both logit distributions to be compared. A line histogram is then derived from the bin histogram by replacing each bin with a point at its center and connecting these points to form a line. In addition, the frequency on the vertical axis is given as a percentage.
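The construction of the line histograms and the Q-Q points can be sketched as follows. This is an illustrative sketch only: it assumes that "calculated together" means the Freedman-Diaconis width is computed on the pooled logits of both distributions, and the quantile interpolation (`method="inclusive"`) is one of several possible choices. All function names are hypothetical.

```python
import math
import statistics

def fd_bin_width(values):
    """Freedman-Diaconis bin width: 2 * IQR / n^(1/3)."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return 2.0 * (q3 - q1) / len(values) ** (1.0 / 3.0)

def line_histogram(values, lo, width, n_bins):
    """Bin centers and relative frequencies in percent."""
    counts = [0] * n_bins
    for v in values:
        idx = min(int((v - lo) / width), n_bins - 1)
        counts[idx] += 1
    centers = [lo + (i + 0.5) * width for i in range(n_bins)]
    percents = [100.0 * c / len(values) for c in counts]
    return centers, percents

def joint_line_histograms(a, b):
    """Line histograms of a and b on a shared binning from the pooled data."""
    pooled = list(a) + list(b)
    width = fd_bin_width(pooled)  # assumes a non-zero IQR
    lo, hi = min(pooled), max(pooled)
    n_bins = max(1, math.ceil((hi - lo) / width))
    return line_histogram(a, lo, width, n_bins), line_histogram(b, lo, width, n_bins)

def qq_points(a, b, n_quantiles=10_000):
    """Pairs of matching quantiles of a and b for a Q-Q plot."""
    qa = statistics.quantiles(a, n=n_quantiles + 1, method="inclusive")
    qb = statistics.quantiles(b, n=n_quantiles + 1, method="inclusive")
    return list(zip(qa, qb))
```

Points of `qq_points` that lie on the diagonal indicate matching quantiles; a constant offset from the diagonal indicates a shifted distribution.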

After the visual examination, the logit distributions of selected datasets are compared with each other using a statistical test. This is another way to show that similar distributions result in the same optimal T-values and that different distributions also have different T-values. The Cramér-von Mises two-sample test [30] is used for this investigation. The null hypothesis of this test states that two samples come from the same distribution. It is not rejected if the p-value satisfies $p > \alpha$, where the standard value $\alpha = 0.05$ is assumed in this study. In addition to the statistical test, the

Earth Mover’s Distance (EMD) [31,32] and the non-parametric effect size Cliff’s Delta [33] are calculated in order to draw further conclusions regarding a correlation between the optimal T-values and the logit distributions. If two logit distributions match exactly, both EMD and Cliff’s Delta are 0. Accordingly, if two distributions have the same optimal T-values, the EMD and the absolute value of Cliff’s Delta should be very small.
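The two distance measures can be illustrated with a small, dependency-free sketch for one-dimensional samples. The function names are hypothetical, and the code is meant only to make the definitions concrete. For real analyses, SciPy provides `scipy.stats.wasserstein_distance` for the 1-D EMD and `scipy.stats.cramervonmises_2samp` for the two-sample Cramér-von Mises test.

```python
from bisect import bisect_left, bisect_right

def emd_1d(a, b):
    """1-D Earth Mover's Distance: area between the two empirical CDFs."""
    a, b = sorted(a), sorted(b)
    points = sorted(a + b)
    total = 0.0
    for left, right in zip(points, points[1:]):
        cdf_a = bisect_right(a, left) / len(a)
        cdf_b = bisect_right(b, left) / len(b)
        total += abs(cdf_a - cdf_b) * (right - left)
    return total

def cliffs_delta(a, b):
    """Cliff's Delta: (#pairs with a > b minus #pairs with a < b) / (n * m)."""
    b_sorted = sorted(b)
    greater = sum(bisect_left(b_sorted, x) for x in a)
    less = sum(len(b_sorted) - bisect_right(b_sorted, x) for x in a)
    return (greater - less) / (len(a) * len(b))
```

For identical samples both functions return 0; for two samples shifted by a constant, `emd_1d` returns the size of the shift.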

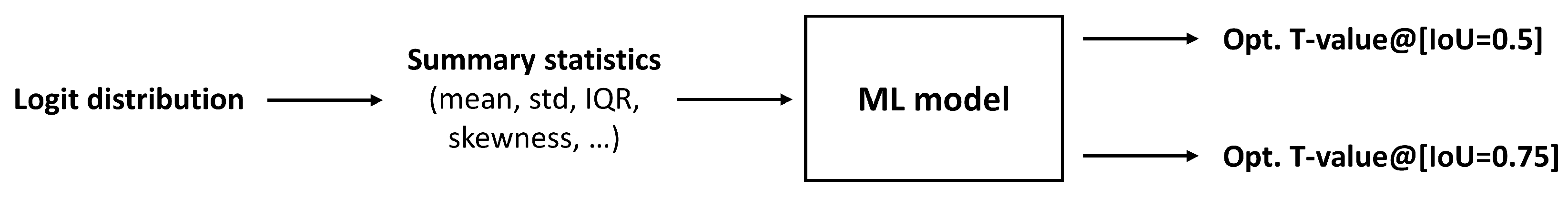

After the relationship between the logit distributions and the optimal T-values has been investigated, this relationship is modeled in the second step using machine learning (ML) methods. The general scheme is illustrated in Figure 2. Since the number of logits depends on the number of classes and the number of images, the distributions have different sizes. Furthermore, a logit distribution sometimes consists of several million logits, which means it is not reasonable to use them directly as input for the ML model. Instead, the distribution is represented by summary statistics, as suggested in [34], which then serve as input for the ML model. As output/prediction, the optimal T-values for the IoU thresholds 0.5 and 0.75 are obtained, which minimize the respective ECE, i.e., achieve the best calibration.
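The mapping from a variable-length logit distribution to a fixed-length feature vector can be sketched as follows. Which summary statistics are actually used is not stated in this section, so the selection below (moments and a few quantiles) is purely an assumption for illustration, as is the function name `summary_features`.

```python
import statistics

def summary_features(logits):
    """Fixed-length description of a logit distribution (illustrative choice)."""
    n = len(logits)
    mean = statistics.fmean(logits)
    std = statistics.pstdev(logits)
    # 19 cut points at 5%, 10%, ..., 95%
    q = statistics.quantiles(logits, n=20, method="inclusive")
    skewness = sum(((z - mean) / std) ** 3 for z in logits) / n if std > 0 else 0.0
    return {
        "mean": mean,
        "std": std,
        "min": min(logits),
        "max": max(logits),
        "q05": q[0],
        "q25": q[4],
        "median": q[9],
        "q75": q[14],
        "q95": q[18],
        "skewness": skewness,
    }
```

The resulting dictionary has the same keys for every dataset, regardless of whether the distribution contains thousands or millions of logits, and can therefore serve as input to a regression model.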

To quantify the prediction errors, three error metrics are calculated, as suggested in [35]: the Mean Absolute Error (MAE), the Root Mean Square Error (RMSE) and the coefficient of determination ($R^2$). The bootstrap resampling method [36,37] is used to measure the uncertainty of these errors, where

S corresponds to the number of bootstrap runs (

). The error metrics can be interpreted and calculated as follows, based on [35,38]. The MAE indicates the average absolute deviation between the predicted T-values $\hat{T}_i$ and the true optimal T-values $T_i$, i.e., it is the average prediction error of the ML models. Here, $N$ represents the number of true optimal T-values (or predicted T-values) for each bootstrap run $s$ per IoU threshold $\tau$. In addition, $\tau \in \{0.5, 0.75\}$ applies. The RMSE is also calculated from the mean of the differences between $\hat{T}_i$ and $T_i$; however, each difference is squared, which means that large deviations are weighted more heavily. Afterwards, the square root of the result is taken. $R^2$ describes how well the model can explain the variance of the dependent variable ($T_i$) based on the input data (summary statistics of the logit distribution) in comparison to the mean $\bar{T}$. In general, the smaller the MAE and the RMSE are, the better the prediction of the model is, while an $R^2$ value approaching 1 means that the variance is explained almost perfectly. The MAE, RMSE, and $R^2$ can be calculated as a function of the IoU threshold $\tau$ and of the bootstrap run $s$ as follows:

$$\mathrm{MAE}_{\tau,s} = \frac{1}{N} \sum_{i=1}^{N} \left| T_i - \hat{T}_i \right|$$

$$\mathrm{RMSE}_{\tau,s} = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left( T_i - \hat{T}_i \right)^2}$$

$$R^2_{\tau,s} = 1 - \frac{\sum_{i=1}^{N} \left( T_i - \hat{T}_i \right)^2}{\sum_{i=1}^{N} \left( T_i - \bar{T} \right)^2}$$

where,

$$\bar{T} = \frac{1}{N} \sum_{i=1}^{N} T_i$$

In addition, all predicted T-values $\hat{T}_i$ are rounded to the nearest multiple of 0.05 before the errors are calculated, since the true T-values $T_i$ are also only available in steps of 0.05. Subsequently, the median and the 95% percentile bootstrap confidence intervals [39] are determined from the S bootstrap runs of the 3 error metrics. Therefore, the errors MAE, RMSE and $R^2$

are always given as the median and 95% confidence interval (

).
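The evaluation procedure described above (rounding, error metrics, bootstrap median and percentile interval) can be sketched as follows. This is a hedged illustration: it assumes that each bootstrap run resamples pairs of true and predicted T-values with replacement, which may differ from the resampling scheme actually used, and the default `n_runs=1000` is a hypothetical placeholder for the number of runs S.

```python
import math
import random
import statistics

def round_to_step(t, step=0.05):
    """Round a predicted T-value to the nearest multiple of `step`."""
    return round(round(t / step) * step, 2)

def mae(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def r2(y_true, y_pred):
    mean_true = statistics.fmean(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    # Undefined if all true values in the sample are identical.
    return 1.0 - ss_res / ss_tot if ss_tot > 0 else float("nan")

def bootstrap_summary(y_true, y_pred, metric, n_runs=1000, seed=0):
    """Median and 95% percentile interval of `metric` over bootstrap runs."""
    rng = random.Random(seed)
    n = len(y_true)
    values = []
    for _ in range(n_runs):
        idx = [rng.randrange(n) for _ in range(n)]
        value = metric([y_true[i] for i in idx], [y_pred[i] for i in idx])
        if math.isfinite(value):  # skip runs where the metric is undefined
            values.append(value)
    cuts = statistics.quantiles(values, n=40, method="inclusive")
    return statistics.median(values), (cuts[0], cuts[-1])  # 2.5% and 97.5%
```

A typical call would first apply `round_to_step` to every prediction and then evaluate `bootstrap_summary` once per metric and IoU threshold.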

In the third and final step, the calibration error (ECE) and the accuracy (mAP) are discussed. On the one hand, it is investigated how much the ECE can be improved by the temperature scaling calibration procedure on the respective datasets, from which the effectiveness of the procedure is derived. On the other hand, the mAP is examined before and after the calibration, as it must not become significantly worse despite an improvement in the calibration error. Finally, the influence of temperature scaling on the metrics Brier Score [40,41] and Sigmoid Cross-Entropy [42,43] is briefly discussed. Temperature scaling only affects the confidence values and does not influence thresholding or post-processing.

3.2. Self-Created Object Detection Dataset

For these and further investigations, a separate dataset consisting of 10 different object classes was created. For simplicity, this will be referred to as mydataset. The dataset contains the object classes dustpan, light switch, trowel, USB flash drive, and drawing compass, which are not included in the datasets Objects365v1 [14] (one of the pre-training datasets of YOLO-World) and COCO [25], and the object classes cup, scissors, bottle, power outlet/socket, and computer keyboard, which appear in Objects365v1 [14], LVIS [13] and Open Images V7 [22,23].

Two different cameras were used to take the pictures: the 48-megapixel camera of the Galaxy A41 smartphone with an image resolution of 3000 × 4000 and a 5 MP Raspberry Pi camera with a 160° wide angle, whereby the resolution of the images is 640 × 480.

mydataset consists of three different complexity levels: easy, medium, and hard. Half of the images in each level were taken with the Galaxy camera and the other half with the Raspberry Pi camera. Each object class occurs equally often in total and per complexity level. A summary of the most important attributes of all complexity levels can be found in Table 1.

The complexity level easy consists of a total of 800 images, i.e., 400 images were taken with the Galaxy camera and 400 with the Raspberry Pi camera. Each image contains exactly one object, i.e., each of the 10 object classes appears a total of 80 times. The images of the easy level were taken according to additional criteria. The background must be simple and must not contain any distractions from the object. The objects are centered in the image and not occluded. Different variants and angles of the object are allowed, as are slightly changing distances and slightly different lighting conditions, as these are practically unavoidable.

The second level of complexity (medium) consists of two parts with different requirements. In the first part, there is again exactly one object class per image, with each class appearing a total of 50 times, but this time the distance to the object varies more strongly. This means that larger distances to the object are possible when taking the picture. In addition, the backgrounds are more complex (i.e., slightly less contrast between the object and the background) and contain additional objects, but none of the 10 object classes listed here. As in the easy complexity level, the lighting conditions are slightly variable and occlusion of objects is not allowed. The second part may contain several object classes per image, whereby the same criteria apply as in the first part. Here, each object class again appears 50 times, and each image contains a random number of between 2 and 4 of the desired objects. In total, each object class appears 100 times in this complexity level, which contains 670 images.

In the last level (hard), partial occlusions of objects are allowed, the backgrounds become more complex, i.e., the object is no longer in focus, distances become even larger and perspectives more extreme. As in the other levels, the lighting conditions differ slightly, as this cannot be avoided when taking the pictures. Each object class appears a total of 40 times, where one image contains between 4 and 8 classes. A total of 72 images are included. The complexity level hard also has an extension hard_aug, which includes all images from hard and augmented versions of all images from medium. To augment the medium images, the following operations are applied: salt-and-pepper noise, where a random 2% of the pixels are changed to black or white pixels (50% each); blurring using an 11 × 11 Gaussian kernel; and a change in brightness by ±35%. This means that four augmented images are generated from each image; however, the non-augmented images of the medium level are not part of hard_aug. Thus, hard_aug comprises 72 + 4 × 670 = 2752 images.
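Two of the augmentations described above can be sketched for a grayscale image stored as a nested list of 8-bit values. This is a simplified illustration under several assumptions: the brightness change is interpreted as multiplication by 1.35 or 0.65, the Gaussian blur is omitted because its parameters beyond the kernel size are not given here, and the function names are hypothetical.

```python
import random

def salt_and_pepper(image, fraction=0.02, seed=0):
    """Set a random `fraction` of pixels to black (0) or white (255), half each."""
    rng = random.Random(seed)
    height, width = len(image), len(image[0])
    out = [row[:] for row in image]  # copy, the input stays unchanged
    n_noisy = round(fraction * height * width)
    positions = rng.sample(range(height * width), n_noisy)
    for k, pos in enumerate(positions):
        out[pos // width][pos % width] = 0 if k < n_noisy // 2 else 255
    return out

def change_brightness(image, factor):
    """Scale all pixel values by `factor` and clip to the range 0..255."""
    return [[max(0, min(255, round(p * factor))) for p in row] for row in image]
```

Applied to a 100 × 100 image, `salt_and_pepper` changes 200 pixels, 100 to black and 100 to white.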

An example image for each complexity level, each of which includes, among others, the object class bottle, can be found in Figure 3. From these 4 complexity levels, two further variants can be derived. The variant all includes the 1542 images from easy, medium, and hard, whereas all_aug contains the 4222 images from easy, medium, and hard_aug. The images were annotated with bounding boxes using the software Roboflow [44]. The images and annotations are available at [45].
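The image counts of the derived variants follow directly from the counts per complexity level. The short check below reproduces the arithmetic; the variable names are chosen for illustration only.

```python
# Image counts per complexity level as stated in the text.
counts = {"easy": 800, "medium": 670, "hard": 72}

# Each medium image yields four augmented images (noise, blur, brighter, darker).
augmented_per_image = 4
counts["hard_aug"] = counts["hard"] + augmented_per_image * counts["medium"]

all_count = counts["easy"] + counts["medium"] + counts["hard"]
all_aug_count = counts["easy"] + counts["medium"] + counts["hard_aug"]

print(counts["hard_aug"], all_count, all_aug_count)  # 2752 1542 4222
```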

For this study, the following generative AI models were used for the following purposes: